I must start this chapter with a story. While creating slides for two secure software papers at the November 2001 Microsoft Professional Developer’s Conference, a friend told me that I would soon be out of a job because once managed code and the .NET Framework shipped, all security issues would go away. This made me convert the SQL injection demonstration code from C++ to C# to make the point that he was wrong.

Managed code certainly takes some of the security burden off the developer, especially if you have a C or C++ background, but you cannot disengage your brain, regardless of the programming language you use. We trust you will take the design and coding issues in this chapter to heart as you create your first .NET applications. I say this because we are at the cusp of high adoption of Microsoft .NET, and the sooner we can raise awareness and the more we can help developers build secure software from the outset, the better everyone will be. This chapter covers some of the security mistakes that you can avoid, as well as some best practices to follow when writing code using the .NET common language runtime (CLR), Web services, and XML.

Be aware that many of the lessons in the rest of this book apply to managed code. Examples include the following:

Don’t store secrets in code or web.config files.

Don’t create your own encryption; rather, use the classes in the System.Security.Cryptography namespace.

Don’t trust input until you have validated its correctness.

Managed code, provided by the .NET common language runtime, helps mitigate a number of common security vulnerabilities, such as buffer overruns, and some of the issues associated with fully trusted mobile code, such as ActiveX controls. Traditional security in Microsoft Windows considers only the principal’s identity when performing security checks. In other words, if the user is trusted, the code runs with that person’s identity and therefore is trusted and has the same privileges as the user. Technology based on restricted tokens in Windows 2000 and later helps mitigate some of these issues. Refer to Chapter 7 for more information regarding restricted tokens. However, security in .NET goes to the next level by providing code with different levels of trust based not only on the user’s capabilities but also on system policy and evidence about the code. Evidence consists of properties of code, such as a digital signature or site of its origin, that security policy uses to grant permissions to the code.

This is important because especially in the Internet-connected world, users often want to run code with an unknown author and no guarantee whether it was written securely. By trusting the code less than the user (just one case of the user-trust versus code-trust combination), highly trusted users can safely run code without undue risk. The most common case where this happens today is script running on a Web page: the script can come from any Web site safely (assuming the browser implementation is secure) because what the script can do is severely restricted. .NET security generalizes the notion of code trust, allowing much more powerful trade-offs between security and functionality, with trust based on evidence rather than a rigid, predetermined model as with Web script.

Note

In my opinion, the best and most secure applications will be those that take advantage of the best of security in the operating system and the best of security in .NET, because each brings a unique perspective to solving security problems. Neither approach is a panacea, and it’s important that you understand which technology is the best to use when building applications. You can determine which technologies are the most appropriate based on the threat model.

However, do not let that lull you into a false sense of security. Although the .NET architecture and managed code offer ways to reduce the chance of certain attacks from occurring, no cure-all exists.

Important

The CLR offers defenses against certain types of security bugs, but that does not mean you can be a lazy programmer. The best security features won’t help you if you don’t follow core security principles.

Before I get started on best practices, let’s take a short detour through the world of .NET code access security (CAS).

This section is a brief outline of the core elements of code access security in the .NET CLR. It is somewhat high-level and is no replacement for a full, in-depth explanation, such as that available in .NET Framework Security (details in the bibliography), but it should give you an idea of how CAS works, as well as introduce you to some of the terms used in this chapter.

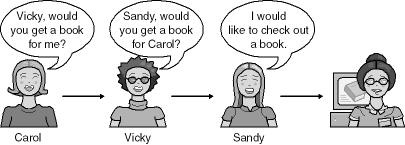

Rather than going into detail, I thought I would use diagrams to outline a CAS-like scenario: checking out a book from a library. In this example, Carol wants to borrow a book from a library, but she is not a member of the library, so she asks her friends, Vicky and Sandy, to get the book for her. Take a look at Figure 18-1.

Life is not quite as simple as that; after all, if the library gave books to anyone who walked in off the street, it would lose books to unscrupulous people. Therefore, the books must be protected by some security policy—only those with library cards can borrow books. Unfortunately, as shown in Figure 18-2, Carol does not have a library card.

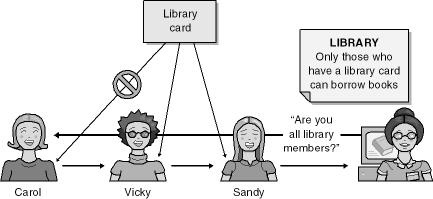

Figure 18-2. The library’s policy is enforced and Carol has no library card, so the book cannot be loaned.

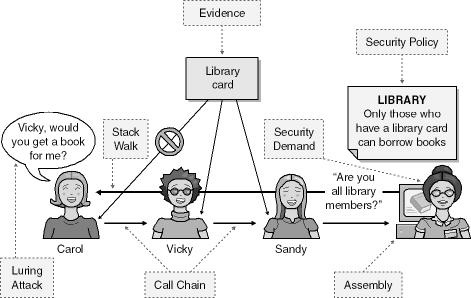

Unbelievably, you just learned the basics of CAS! Now let’s take this same scenario and map CAS nomenclature onto it, beginning with Figure 18-3.

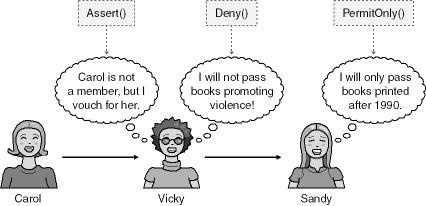

Finally, in the real world, there may be ways to relax the system to allow Carol to borrow the book, but only if certain conditions, required by Vicky and Sandy, are met. Let’s look at the scenario in Figure 18-4, but add some modifiers, as well as what these modifiers are in CAS.

As I mentioned, this whirlwind tour of CAS is intended only to give you a taste for how it works, but it should provide you with enough context for the rest of this chapter.

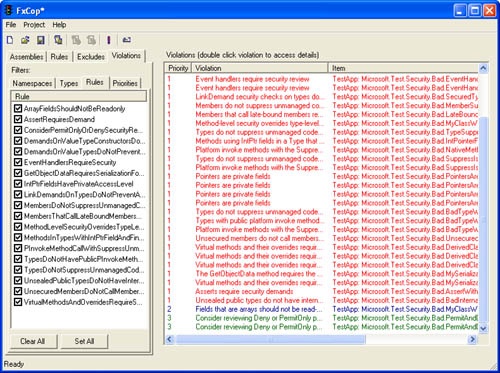

Before I start outlining secure coding issues and best practices, you should be aware of a useful tool named FxCop available from http://www.gotdotnet.com. FxCop is a code analysis tool that checks your .NET assemblies for conformance to the .NET Framework Design Guidelines at http://msdn.microsoft.com/library/en-us/cpgenref/html/cpconnetframeworkdesignguidelines.asp. You should run this tool over every assembly you create and then rectify appropriate errors. Like all tools, if this tool flags no security vulnerabilities, it does not mean you are secure, but it’s a good minimum start bar. Figure 18-5 shows the result of running the tool on a test assembly.

Note

FxCop can produce an XML file that lists any design guidelines violations in your assembly. However, if you want a more readable report, you can add <?xml-stylesheet href="C:\Program Files\Microsoft FxCop\Xml\violationsreport.xsl" type="text/xsl"?> after the first line, <?xml version="1.0"?>.

Two common errors, among many, flagged by FxCop are the lack of a strong name on the assembly and the failure of an assembly to specify permission requests. Let’s look at both in detail.

Names are a weak form of authentication or evidence. If someone you didn’t know gave you a file on a CD containing a file named excel.exe, would you blindly run it? You probably would if you needed a spreadsheet, because you’d think the file would be Microsoft Excel. But how do you really know it is Microsoft Excel? .NET solves this spoofing problem by providing strong names that consist of the file’s simple text name, version number, and culture information—plus a public key and a digital signature.

To create a strong name, you must create a strong name key pair by using the sn.exe tool. The syntax for creating a key pair is SN -k keypair.snk. The resulting file contains a private and public key used to sign and verify signed assemblies. (I’m not going to explain asymmetric encryption; refer to the cryptography books in this book’s bibliography for more information.) If this key pair is more than an experimental key, you should protect it like any other private/public key pair—jealously.

Note that the strong name is based on the key pair itself, not on a certificate as with Authenticode. As a developer, you can create the key pair that defines your own private namespace; others cannot use your namespace because they don’t know the private key. You can additionally get a certificate for this key pair if you like, but the strong-name identity does not use certificates. This means that the signature cannot be identified with the name of the publisher, although you do know that the same publisher is controlling all strong names of a certain key pair, assuming the private key is kept private.

In addition to using strong names, you may want to Authenticode-sign an assembly in order to identify the publisher. To do so, you must first strong-name-sign the assembly and then Authenticode-sign over that. You cannot use Authenticode first because the strong-name signature will appear as "tampering" to the Authenticode signature check.

Important

Unlike certificates, strong-name private keys cannot be revoked, so you must take precautions to protect the keys. Consider declaring one trusted individual to be the "keymaster," the person who keeps the private key on a floppy in her safe.

Note

Presently, strong names use 1024-bit RSA keys.

You should next extract the public-key portion of the key pair with SN -p keypair.snk public.snk. You’ll see why this is important in a moment. The signing process happens only when the code is compiled and the binary is created, and you reference the key information by using an [assembly: AssemblyKeyFile(filename)] directive. In the case of a default Visual Studio .NET application, this directive is in the AssemblyInfo.cs or AssemblyInfo.vb file, and it looks like this in a Visual Basic .NET application:

Imports System.Reflection

<Assembly: AssemblyKeyFileAttribute("c:\keys\keypair.snk")>

You should realize that such an operation could potentially leave the private key vulnerable to information disclosure from a bad developer. To mitigate this risk, you can use delay-signing, which uses only the public key and not the private/public key pair. Now the developers do not have access to the private key, and the full signing process occurs prior to shipping the code by using the SN -R assemblyname.dll keypair.snk command. However, your development computers must bypass signature verification by using the SN -Vr assemblyname.dll command because the delay-signed assembly does not yet carry a valid strong-name signature.

Important

Keep in mind that strong-named assemblies can reference only other strong-named assemblies.

Enforcing delay-signing requires that you add the following line to the assembly in Visual Basic .NET:

<Assembly: AssemblyDelaySignAttribute(true)>

Or in C#, this line:

[assembly: AssemblyDelaySign(true)]

Note that in C# you can drop the Attribute portion of the name.

Tip

Developers performing day-to-day development on the assembly should always delay-sign it with the public key.

Strong-named assemblies used for business logic in Web applications must be stored in the server’s global assembly cache (GAC) by using the .NET Configuration tool (Mscorcfg.msc) or gacutil.exe. This is because of the way that ASP.NET loads signed code.

Now let’s look at permissions and permission request best practices.

Requesting permissions is how you let the .NET common language runtime know what your code needs to do to get its job done. Although requesting permissions is optional and is not required for your code to compile, there are important execution reasons for requesting appropriate permissions within your code. When your code demands permissions by using the Demand method, the CLR verifies that all code calling your code has the appropriate permissions. Without these permissions, the request fails. Verification of permissions is determined by performing a stack-walk. It’s important from a usability and security perspective that your code receives the minimum permissions required to run, and from a security perspective that it receives no more permissions than it requires to run.

Requesting permissions increases the likelihood that your code will run properly if it’s allowed to execute. If you do not identify the minimum set of permissions your code requires to run, your code will require extra error-handling code to gracefully handle the situations in which it’s not granted one or more permissions. Requesting permissions helps ensure that your code is granted only the permissions it needs. You should request only those permissions that your code requires, and no more.

If your code does not access protected resources or perform security-sensitive operations, it’s not necessary to request any permissions. For example, if your application requires only FileIOPermission to read one file, and nothing more, add this line to the code:

[assembly: FileIOPermission(SecurityAction.RequestMinimum, Read = @"c:\files\inventory.xml")]

Note

All parameters to a declarative permission must be known at compile time.

You should use RequestMinimum to define the minimum must-have grant set. If the runtime cannot grant the minimal set to your application, it will raise a PolicyException exception and your application will not run.

In the interests of least privilege, you should simply reject permissions you don’t need, even if they might be granted by the runtime. For example, if your application should never perform file operations or access environment variables, include the following in your code:

[assembly: FileIOPermission(SecurityAction.RequestRefuse, Unrestricted = true)]
[assembly: EnvironmentPermission(SecurityAction.RequestRefuse, Unrestricted = true)]

If your application is a suspect in a file-based attack and the attack requires file access, you have evidence (no pun intended) that it cannot be your code, because your code refuses all file access.

The CLR security system gives your code the option to request permissions that it could use but does not need to function properly. If you use this type of request, you must enable your code to catch any exceptions that will be thrown if your code is not granted the optional permission. An example includes an Internet-based game that allows the user to save games locally to the file system, which requires FileIOPermission. If the application is not granted the permission, it is functional but the user cannot save the game. The following code snippet demonstrates how to do this:

[assembly: FileIOPermission(SecurityAction.RequestOptional, Unrestricted = true)]
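The request above only declares that file access is optional; the code still has to cope when the permission is not granted. Here is a minimal sketch of the saved-game case, assuming the runtime signals the missing permission with a SecurityException. The GameSaver class and TrySave method are hypothetical names of my own, and File.WriteAllText assumes .NET Framework 2.0 or later:

```csharp
using System;
using System.IO;
using System.Security;

// Hypothetical helper for the saved-game scenario; not part of the .NET Framework.
public static class GameSaver
{
    // Tries to save the game state; returns false if file access is refused.
    public static bool TrySave(string path, string state)
    {
        try
        {
            File.WriteAllText(path, state);
            return true;
        }
        catch (SecurityException)
        {
            // The optional FileIOPermission was not granted:
            // the game keeps running, but saving is unavailable.
            return false;
        }
    }
}
```

A caller can use the return value to disable the Save menu item rather than failing outright.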

If you do not request optional permissions in your code, all permissions that could be granted by policy are granted minus permissions refused by the application. You can opt to not use any optional permissions by using this construct:

[assembly: PermissionSet(SecurityAction.RequestOptional, Unrestricted = false)]

The net of this is an assembly granted the following permissions by the runtime:

(PermMaximum ∩ (PermMinimum ∪ PermOptional)) - PermRefused

This translates to: minimum and optional permissions available in the list of maximum permissions, minus any refused permissions.
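To make the set arithmetic concrete, here is a toy model that treats permissions as plain strings. It is only a sketch of the formula, not how the runtime represents permissions, and the GrantSetModel name is my own. It also assumes every set is stated explicitly, so it does not model the default case in which no optional request is made:

```csharp
using System.Collections.Generic;

// Toy model: permissions are plain strings, not real CLR permission objects.
public static class GrantSetModel
{
    // Computes (maximum ∩ (minimum ∪ optional)) - refused.
    public static HashSet<string> Compute(
        IEnumerable<string> maximum,
        IEnumerable<string> minimum,
        IEnumerable<string> optional,
        IEnumerable<string> refused)
    {
        // PermMinimum ∪ PermOptional: everything the assembly asked for.
        var requested = new HashSet<string>(minimum);
        requested.UnionWith(optional);

        // Intersect with PermMaximum: only what policy is willing to grant.
        var granted = new HashSet<string>(maximum);
        granted.IntersectWith(requested);

        // Subtract PermRefused: the assembly never wants these.
        granted.ExceptWith(refused);
        return granted;
    }
}
```

For instance, if policy allows FileIO, UI, and Environment, the assembly requires FileIO, optionally asks for UI and Socket, and refuses UI, the model yields just FileIO.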

More Information

You can determine an assembly’s permission requests by using caspol -a -resolveperm myassembly.exe, which shows what kind of permissions an assembly would get if it were to load, or by using permview in the .NET Framework SDK, which shows an assembly’s requests, that is, its input to the policy, which may or may not be honored.

The .NET CLR offers a method, named Assert, that allows your code, and downstream callers, to perform actions that your code has permission to do but its callers might not have permission to do. In essence, Assert means, "I know what I’m doing; trust me." What follows in the code is some benign task that would normally require the caller to have permission to perform.

Important

Do not confuse the .NET common language runtime security CodeAccessPermission.Assert method with the classic C and C++ assert function or the .NET Framework Debug.Assert method. The latter evaluate an expression and display a diagnostic message if the expression is false.

For example, your application might read a configuration or lookup file, but code calling your code might not have permission to perform any file I/O. If you know that your code’s use of this file is benign, you can assert that you’ll use the file safely.

That said, there are instances when asserting is safe, and others when it isn’t. Assert is usually used in scenarios in which a highly trusted library is used by lower trusted code and stopping the stack-walk is required. For example, imagine you implement a class to access files over a universal serial bus (USB) interface and that the class is named UsbFileStream and is derived from FileStream. The new code accesses files by calling USB Win32 APIs, but it does not want to require all its callers to have permission to call unmanaged code, only FileIOPermission. Therefore, the UsbFileStream code asserts UnmanagedCode (to use the Win32 API) and demands FileIOPermission to verify that its callers are allowed to do the file I/O.

However, any code that takes a filename from an untrusted source, such as a user, and then opens it for truncate is not operating safely. What if the user sends a request like ../../boot.ini to your program? Will the code delete the boot.ini file? Potentially yes, especially if the access control list (ACL) on this file is weak, the user account under which the application executes is an administrator, or the file exists on a FAT partition.
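One way to defend against this kind of request is to canonicalize the name and confirm that it still falls under the directory you intended before opening anything. The following is a minimal sketch rather than a complete defense; SafePath and Resolve are hypothetical names, and the case-insensitive comparison assumes a Windows-style file system:

```csharp
using System;
using System.IO;

// Hypothetical helper; not part of the .NET Framework.
public static class SafePath
{
    // Returns the full path of fileName under baseDir, or throws if the
    // resolved path escapes baseDir (for example, "../../boot.ini").
    public static string Resolve(string baseDir, string fileName)
    {
        // Canonical form of the base directory, with a trailing separator
        // so that "c:\files2" cannot pass as a child of "c:\files".
        string root = Path.GetFullPath(baseDir);
        if (!root.EndsWith(Path.DirectorySeparatorChar.ToString()))
            root += Path.DirectorySeparatorChar;

        // GetFullPath collapses "." and ".." segments.
        string candidate = Path.GetFullPath(Path.Combine(root, fileName));

        if (!candidate.StartsWith(root, StringComparison.OrdinalIgnoreCase))
            throw new UnauthorizedAccessException(
                "Path escapes the base directory.");
        return candidate;
    }
}
```

Only after a check like this succeeds should code that asserts FileIOPermission go on to open the file.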

When performing security code reviews, look for all security asserts and double-check that the intentions are indeed benign, especially if you have a lone Assert with no Demand or an Assert and a Demand for a weak permission. For example, be suspicious of code that asserts permission to call unmanaged code but demands only permission to read an environment variable.

Note

To assert a permission requires that your code has the permission in the first place.

Important

Be especially careful if your code asserts permission to call unmanaged code by asserting SecurityPermissionFlag.UnmanagedCode; an error in your code might lead to unmanaged code being called inadvertently.

You should follow some simple guidelines when building applications requiring the Demand and Assert methods. Your code should assert one or more permissions when it performs a privileged yet safe operation and you don’t require callers to have that permission. Note that your code must have the permission being asserted and SecurityPermissionFlag.Assertion, which is the right to assert.

For example, if you assert FileIOPermission, your code must be granted FileIOPermission but any code calling you does not require the permission. If you assert FileIOPermission and your code has not been granted the permission, an exception is raised once a stack-walk is performed.

As mentioned, your code should use the Demand method to demand one or more permissions when you require that callers have the permission. For example, say your application uses e-mail to send notifications to others, and your code has defined a custom permission named EmailAlertPermission. When your code is called, you can demand the permission of all your callers. If any caller does not have EmailAlertPermission, the request fails.

Important

A demand does not check the permissions of the code doing the Demand, only its callers. If your Main function has limited permissions, a full-trust demand made there will still succeed because Main has no callers. To check the code’s permissions, either call into a function and initiate the Demand there (it will see the caller’s permissions) or use the SecurityManager.IsGranted method to directly see whether a permission is granted to your assembly (and only your assembly; callers may not have the permission). This does not mean you can write malicious code in Main and have it work! As soon as the code calls classes that attempt to perform potentially dangerous tasks, they will incur a stack-walk and permission check.

Important

For performance reasons, do not demand permissions if you call code that also makes the same demands. Doing so will simply cause unneeded stack-walks. For example, there’s no need to demand EnvironmentPermission when calling Environment.GetEnvironmentVariable, because the .NET Framework does this for you.

It is feasible to write code that makes asserts and demands. For example, using the e-mail scenario above, the code that interfaces directly with the e-mail subsystem might demand that all callers have EmailAlertPermission (your custom permission). Then, when it writes the e-mail message to the SMTP port, it might assert SocketPermission. In this scenario, your callers can use your code for sending e-mail, but they do not require the ability to send data to arbitrary ports, which SocketPermission allows.

Finally, you cannot call Permission.Assert twice—it will throw an exception. If you want to assert more than one permission, you must create a permission set, add those permissions to the set, and assert the whole set, like this:

try {
    //Start from an empty set; an unrestricted set would assert every permission.
    PermissionSet ps =
        new PermissionSet(PermissionState.None);
    ps.AddPermission(new FileDialogPermission
        (FileDialogPermissionAccess.Open));
    ps.AddPermission(new FileIOPermission
        (FileIOPermissionAccess.Read,@"c:\files"));
    ps.Assert();
} catch (SecurityException e) {
    // oops!
}

Once you’ve completed the task that required the special asserted permission, you should call CodeAccessPermission.RevertAssert to disable the Assert. This is an example of least privilege; you used the asserted permission only for the duration required, and no more.

The following sample C# code outlines how asserting, demanding, and reverting can be combined to send e-mail alerts. The caller must have permission to send e-mail, and if the user does, she can send e-mail over the SMTP socket, even if she doesn’t have permission to open any socket:

using System;
using System.Net;
using System.Security;
using System.Security.Permissions;

//Code fragment only; no class or namespace included.
static void SendAlert(string alert) {
    //Demand caller can send e-mail.
    new EmailAlertPermission(
        EmailAlertPermission.Send).Demand();

    //Code will open a specific port on a specific SMTP server.
    NetworkAccess na = NetworkAccess.Connect;
    TransportType type = TransportType.Tcp;
    string host = "mail.northwindtraders.com";
    int port = 25;
    new SocketPermission(na, type, host, port).Assert();

    try {
        SendAlertTo(host, port, alert);
    } finally {
        //Always revert, even on failure
        CodeAccessPermission.RevertAssert();
    }
}

When an Assert, Deny, and PermitOnly are all on the same frame, the Deny is honored first, then Assert, and then PermitOnly.

Imagine method A() calls B(), which in turn calls C(), and A() denies the ReflectionPermission permission. C() could still assert ReflectionPermission, assuming the assembly that contains it has the permission granted to it. Why? Because when the runtime hits the assertion, it stops performing a stack-walk and never recognizes the denied permission in A(). The following code sample outlines this without using multiple assemblies:

//Code fragment only; requires System, System.IO, System.Security, and System.Security.Permissions.
private string filename = @"c:\files\fred.txt";

private void A() {
    new FileIOPermission(
        FileIOPermissionAccess.AllAccess,filename).Deny();
    B();
}

private void B() {
    C();
}

private void C() {
    try {
        new FileIOPermission(
            FileIOPermissionAccess.AllAccess,filename).Assert();
        try {
            StreamWriter sw = new StreamWriter(filename);
            sw.Write("Hi!");
            sw.Close();
        } catch (IOException e) {
            Console.Write(e.ToString());
        }
    } finally {
        CodeAccessPermission.RevertAssert();
    }
}

If you remove the Assert from C(), the code raises a SecurityException when the StreamWriter class is instantiated because the code is denied the permission.

I’ve already shown code that demands permissions to execute correctly. Most classes in the .NET Framework already have demands associated with them, so you do not need to make additional demands whenever developers use a class that accesses a protected resource. For example, the System.IO.File class automatically demands FileIOPermission whenever the code opens a file. If you make a demand in your code for FileIOPermission when you use the File class, you’ll cause a redundant and wasteful stack-walk to occur. You should use demands to protect custom resources that require custom permissions.

A link demand causes a security check during just-in-time (JIT) compilation of the calling method and checks only the immediate caller of your code. If the caller does not have sufficient permission to link to your code—for example, your code demands the calling code have IsolatedStorageFilePermission at JIT time—the link is not allowed and the runtime throws an exception when the code is loaded and executed.

Link demands do not perform a full stack-walk, so your code is still susceptible to luring attacks, in which less-trusted code calls highly trusted code and uses it to perform unauthorized actions. The link demand specifies only which permissions direct callers must have to link to your code. It does not specify which permissions all callers must have to run your code. That can be determined only by performing a stack-walk.
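The difference between the two checks is easier to see in a toy model. The sketch below is not how the runtime implements demands: it represents each caller as a Frame holding a set of permission names, and it ignores stack modifiers such as Assert and Deny. Frame and DemandModel are hypothetical names of my own:

```csharp
using System.Collections.Generic;

// Toy model only: each frame records which permissions its assembly holds.
public sealed class Frame
{
    public readonly string Name;
    public readonly HashSet<string> Granted;

    public Frame(string name, params string[] granted)
    {
        Name = name;
        Granted = new HashSet<string>(granted);
    }
}

public static class DemandModel
{
    // In both methods, callers[0] is the immediate caller and later
    // entries are further up the call stack.

    // Full demand: every caller on the stack must hold the permission.
    public static bool FullDemand(IList<Frame> callers, string permission)
    {
        foreach (Frame f in callers)
        {
            if (!f.Granted.Contains(permission)) return false;
        }
        return true;
    }

    // Link demand: only the immediate caller is checked.
    public static bool LinkDemand(IList<Frame> callers, string permission)
    {
        return callers.Count == 0 || callers[0].Granted.Contains(permission);
    }
}
```

With a trusted wrapper as the immediate caller and untrusted code above it, LinkDemand succeeds while FullDemand fails, which is exactly the gap a luring attack exploits.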

Now to the issue. Look at this code:

[PasswordPermission(SecurityAction.LinkDemand, Unrestricted=true)]
[RegistryPermissionAttribute(SecurityAction.PermitOnly,
    Read=@"HKEY_LOCAL_MACHINE\SOFTWARE\AccountingApplication")]
public string returnPassword() {
    return (string)Registry
        .LocalMachine
        .OpenSubKey(@"SOFTWARE\AccountingApplication")
        .GetValue("Password");
}
...
public string returnPasswordWrapper() {
    return returnPassword();
}

Yes, I know, this code is insecure because it transfers secret data around in code, but I want to make a point here. To call returnPassword, the calling code must have a custom permission named PasswordPermission. If the code were to call returnPassword and it did not have the custom permission, the runtime would raise a security exception and the code would not gain access to the password. However, if code called returnPasswordWrapper, the link demand would be made only against returnPasswordWrapper, the immediate caller of returnPassword, and not against the code calling returnPasswordWrapper, because a link demand goes only one level deep. The code calling returnPasswordWrapper now has the password.

Because link demands are performed only at JIT time and they only verify that the caller has the permission, they are faster than full demands, but they are potentially a weaker security mechanism.

The moral of this story is you should never use link demands unless you have carefully reviewed the code. A full stack-walking demand takes a couple of microseconds to execute, so you’ll rarely see much performance gain by replacing demands with link demands. However, if you do have link demands in your code, you should double-check them for security errors, especially if you cannot guarantee that all your callers satisfy your link-time check. Likewise, if you call into code that makes a link demand, does your code perform tasks in a manner that could violate the link demand? Finally, when a link demand exists on a virtual derived element, make sure the same demand exists on the base element.

Important

To prevent misuse of LinkDemand and reflection (the process of obtaining information about assemblies and types, as well as creating, invoking, and accessing type instances at run time), the reflection layer in the runtime does a full stack-walk Demand of the same permissions for all late-bound uses. This mitigates possible access of the protected member through reflection where access would not be allowed via the normal early-bound case. Because performing a full stack walk changes the semantics of the link demand when used via reflection and incurs a performance cost, developers should use full demands instead. This makes both the intent and the actual run-time cost most clearly understood.

Be incredibly careful if you use SuppressUnmanagedCodeSecurityAttribute in your code. Normally, a call into unmanaged code is successful only if all callers have permission to call into unmanaged code. Applying the custom attribute SuppressUnmanagedCodeSecurityAttribute to the method that calls into unmanaged code suppresses the demand. Rather than a full demand being made, the code performs only a link demand for the ability to call unmanaged code. This can be a huge performance boost if you call many native Win32 functions, but it’s dangerous too. The following snippet applies SuppressUnmanagedCodeSecurityAttribute to the MyWin32Function method:

using System.Security;
using System.Runtime.InteropServices;
⋮
public class MyClass {
    ⋮
    [SuppressUnmanagedCodeSecurityAttribute()]
    [DllImport("MyDLL.DLL")]
    private static extern int MyWin32Function(int i);

    public int DoWork() {
        return MyWin32Function(0x42);
    }
}

You should double-check all methods decorated with this attribute for safety.

Important

You may have noticed a common pattern in LinkDemand and SuppressUnmanagedCodeSecurityAttribute—they both offer a trade-off between performance and security. Do not enable these features in an ad hoc manner until you determine whether the potential performance benefit is worth the increased security vulnerability. Do not enable these two features until you have measured the performance gain, if any. Follow these best practices if you choose to enable SuppressUnmanagedCodeSecurity: the native methods should be private or internal, and all arguments to the methods must be validated.
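As a rough sketch of those two practices together, the native method below stays private and the public wrapper validates its argument before crossing into unmanaged code. NativeWorker and the MaxValue limit are hypothetical; MyDLL.DLL and MyWin32Function are the placeholder names from the earlier snippet, and the real valid range depends entirely on the native function you call:

```csharp
using System;
using System.Runtime.InteropServices;
using System.Security;

public class NativeWorker
{
    // Hypothetical upper bound; the real limit depends on the native function.
    private const int MaxValue = 0x100;

    // Private, so only this class can reach the native entry point.
    [SuppressUnmanagedCodeSecurity]
    [DllImport("MyDLL.DLL")]
    private static extern int MyWin32Function(int i);

    public int DoWork(int value)
    {
        // Validate before crossing into unmanaged code.
        if (value < 0 || value > MaxValue)
            throw new ArgumentOutOfRangeException("value");
        return MyWin32Function(value);
    }
}
```

Because untrusted callers can reach the native code only through DoWork, the validation there is the last line of defense once the full demand has been suppressed.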

You should be aware that if your objects are remotable (derived from a MarshalByRefObject) and are accessed remotely across processes or computers, code access security checks such as Demand, LinkDemand, and InheritanceDemand are not enforced. This means, for example, that security checks do not go over SOAP in Web services scenarios. However, code access security checks do work between application domains. It’s also worth noting that remoting is supported in fully trusted environments only. That said, code that’s fully trusted on the client might not be fully trusted in the server context.

It might be unsuitable for arbitrary untrusted code to call some of your methods. For example, the method might provide some restricted information, or for various reasons it might perform minimal error checking. Managed code affords several ways to restrict method access; the simplest way is to limit the scope of the class, assembly, or derived classes. Note that derived classes can be less trustworthy than the class they derive from; after all, you do not know who is deriving from your code. Do not infer trust from the keyword protected, which confers no security context. A protected class member is accessible from within the class in which it is declared and from within any class derived from the class that declared this member, in the same way that protected is used in C++ classes.

You should consider sealing classes. A sealed class (Visual Basic uses NotInheritable) cannot be inherited; it’s an error to use a sealed class as a base class. When you seal a class, no other code can inherit from it. Remember that you cannot trust any code that inherits from your classes. This is simply good object-oriented hygiene.
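Sealing takes a single keyword. In this small sketch, AuditLog is a hypothetical class of my own; the commented-out declaration at the end shows the kind of derivation the compiler rejects:

```csharp
// Hypothetical class for illustration.
public sealed class AuditLog
{
    private readonly System.Text.StringBuilder entries =
        new System.Text.StringBuilder();

    // Adds one entry to the log.
    public void Append(string entry)
    {
        entries.AppendLine(entry);
    }

    // Returns all entries recorded so far.
    public override string ToString()
    {
        return entries.ToString();
    }
}

// The following would not compile, because AuditLog is sealed:
// public class TamperedLog : AuditLog { }
```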

You can also limit the method access to callers having permissions you select. Similarly, declarative security allows you to control inheritance of classes. You can use InheritanceDemand to require that derived classes have a specified identity or permission or to require that derived classes that override specific methods have a specified identity or permission. For example, you might have a class that can be inherited only by code that has the EnvironmentPermission:

    [EnvironmentPermission
        (SecurityAction.InheritanceDemand, Unrestricted=true)]
    public class Carol {
        ⋮
    }

    class Brian : Carol {
        ⋮
    }

In this example, the Brian class, which inherits from Carol, must have EnvironmentPermission.

Inheritance demands go one step further: they can be used to restrict what code can override virtual methods. For example, a custom permission, PrivateKeyPermission, could be demanded of any method that attempts to override the SetKey virtual method:

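    [PrivateKeyPermission
        (SecurityAction.InheritanceDemand, Unrestricted=true)]
    public virtual void SetKey(byte [] key) {
        // Store a copy. Assuming DestroyKey scrubs the caller's buffer,
        // assigning the reference alone would leave m_key pointing at
        // the same, now destroyed, array.
        m_key = (byte[])key.Clone();
        DestroyKey(key);
    }

You can also limit the assembly that can call your code, by using the assembly’s strong name: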

[StrongNameIdentityPermission(SecurityAction.LinkDemand, PublicKey="00240fd981762bd0000...172252f490edf20012b6")]

And you can tie code back to the server where the code originated. This is similar to the ActiveX SiteLock functionality discussed in Chapter 16. The following code shows how to achieve this, but remember: this is no replacement for code access security. Don’t create insecure code with the misguided hope that the code can be instantiated only from a specific Web site and thus malicious users cannot use your code. If you can’t see why, think about cross-site scripting issues!

    private void function(string[] args) {
        try {
            new SiteIdentityPermission(
                @"*.explorationair.com").Demand();
        } catch (SecurityException e) {
            //not from the Exploration Air site
        }
    }

I know I mentioned this at the start of this chapter, but it’s worth commenting on again. Storing data in configuration files, such as web.config, is fine so long as the data is not sensitive. However, passwords, keys, and database connection strings should be stored out of the sight of the attacker. Placing sensitive data in the registry is more secure than placing it in harm’s way. Admittedly, this does violate the xcopy-deployment goal, but life’s like that sometimes.

ASP.NET v1.1 supports optional Data Protection API encryption of secrets stored in a protected registry key. (Refer to Chapter 9 for information about DPAPI.) The configuration sections that can take advantage of this are <processModel>, <identity>, and <sessionState>. When using this feature, the configuration file points to the registry key and value that hold the secret data. ASP.NET provides a small command-line utility named aspnet_setreg to create the protected secrets. Here’s an example configuration file that accesses the username and password used to start the ASP.NET worker process:

    <system.web>
      <processModel
        enable="true"
        userName="registry:HKLM\Software\SomeKey,userName"
        password="registry:HKLM\Software\SomeKey,passWord"
        ... />
    </system.web>

The secrets are protected by CryptProtectData using a machine-level encryption key. Although this does not mitigate all the threats associated with storing secrets—anyone with physical access to the computer can potentially access the data—it does considerably raise the bar over storing secrets in the configuration system itself.

This technique is not used to store arbitrary application data; it is only for usernames and passwords used for ASP.NET process identity and state service connection data.

I well remember the day the decision was made to add the AllowPartiallyTrustedCallersAttribute attribute to .NET. The rationale made perfect sense: most attacks will come from the Internet where code is partially trusted, where code is allowed to perform some tasks and not others. For example, your company might enforce a security policy that allows code originating from the Internet to open a socket connection back to the source server but does not allow it to print documents or to read and write files. So, the decision was made to not allow partially trusted code to access certain assemblies that ship with the CLR and .NET Framework, and that includes, by default, all code produced by third parties, including you. This has the effect of reducing the attack surface of the environment enormously. I remember the day well because this new attribute prevents code from being called by potentially hostile Internet-based code accidentally. Setting this option is a conscious decision made by the developer.

If you develop code that can be called by partially trusted code and you have performed appropriate code reviews and security testing, use the AllowPartiallyTrustedCallersAttribute assembly-level custom attribute to allow invocation from partially trusted code:

[assembly:AllowPartiallyTrustedCallers]

Assemblies that allow partially trusted callers should never expose objects from assemblies that do not allow partially trusted callers.

Important

Be aware that assemblies that are not strong-named can always be called from partially trusted code.

Finally, if your code is not fully trusted, it might not be able to use code that requires full trust callers, such as strong-named assemblies that lack AllowPartiallyTrustedCallersAttribute.

You should also be aware of the following scenario, in which an assembly chooses to refuse permissions:

Strong-named assembly A does not have AllowPartiallyTrustedCallersAttribute.

Strong-named assembly B uses a permission request to refuse permissions, which means it is now partially trusted, because it does not have full trust.

Assembly B can no longer call code in Assembly A, because A does not support partially trusted callers.

Important

The AllowPartiallyTrustedCallersAttribute attribute should be applied only after the developer has carefully reviewed the code, ascertained the security implications, and taken the necessary precautions to defend from attack.

If you call into unmanaged code (and many people do, for the flexibility), you must make sure the code calling into the unmanaged code is well written and safe. If you use SuppressUnmanagedCodeSecurityAttribute, which allows managed code to call into unmanaged code without a stack walk, ask yourself why it’s safe to not require public callers to have permission to access unmanaged code.

Delegates are similar in principle to C/C++ function pointers and are used by the .NET Framework to support events. If your code accepts delegates, you have no idea a priori what the delegate code is, who created it, or what the writer’s intentions are. All you know is the delegate is to be called when your code generates an event. You also do not know what code is registering the delegate. For example, your component, AppA, fires events; AppB registers a delegate with you by calling AddHandler. The delegate could be potentially any code, such as code that suspends or exits the process by using System.Environment.Exit. So, when AppA fires the event, AppA stops running, or worse.

Here’s a mitigating factor—delegates are strongly-typed, so if your code allows a delegate only with a function signature like this

public delegate string Function(int count, string name, DateTime dt);

the code that registers the delegate will fail when it attempts to register System.Environment.Exit because the method signatures are different.
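To make the type check concrete, here is a small C# sketch. A direct assignment such as Function f = Environment.Exit; is rejected by the compiler, so the sketch performs the same binding at run time through reflection. The names DelegateDemo, Describe, and CanBind are hypothetical helpers introduced only for this illustration:

```csharp
using System;
using System.Reflection;

public delegate string Function(int count, string name, DateTime dt);

public static class DelegateDemo {
    // A method whose signature matches the Function delegate.
    public static string Describe(int count, string name, DateTime dt) {
        return name + ":" + count;
    }

    // Tries to bind an arbitrary static method to the Function delegate
    // type at run time and reports whether the binding succeeded.
    public static bool CanBind(MethodInfo method) {
        try {
            Delegate.CreateDelegate(typeof(Function), method);
            return true;
        } catch (ArgumentException) {
            // The method's signature is not compatible with Function.
            return false;
        }
    }

    public static void Main() {
        MethodInfo good = typeof(DelegateDemo).GetMethod("Describe");
        MethodInfo exit = typeof(Environment).GetMethod("Exit");

        Console.WriteLine(CanBind(good)); // matching signature
        Console.WriteLine(CanBind(exit)); // void Exit(int) does not match
    }
}
```

Keep in mind that this is only a mitigating factor: a delegate with a matching signature can still contain arbitrary code.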

Finally, you can limit what the delegate code can do by using PermitOnly or Deny for permissions you require or deny. For example, if you want a delegate to read only a specific environment variable and nothing more, you can use this code snippet prior to firing the event:

new EnvironmentPermission( EnvironmentPermissionAccess.Read,"USERNAME").PermitOnly();

Remember that PermitOnly applies to the delegate code (that is, the code called when your event fires), not to the code that registered the delegate with you. It’s a little confusing at first.

You should give special attention to classes that implement the ISerializable interface if an object based on the class could contain sensitive object information. Can you see the potential vulnerability in the following code?

    public void WriteObject(string file) {
        Password p = new Password();
        Stream stream = File.Open(file, FileMode.Create);
        BinaryFormatter bformatter = new BinaryFormatter();
        bformatter.Serialize(stream, p);
        stream.Close();
    }

    [Serializable()]
    public class Password: ISerializable {
        private String sensitiveStuff;

        public Password() {
            sensitiveStuff=GetRandomKey();
        }

        //Deserialization ctor.
        public Password (SerializationInfo info, StreamingContext context) {
            sensitiveStuff =
                (String)info.GetValue("sensitiveStuff", typeof(string));
        }

        //Serialization function.
        public void GetObjectData
            (SerializationInfo info, StreamingContext context) {
            info.AddValue("sensitiveStuff", sensitiveStuff);
        }
    }

As you can see, the attacker has no direct access to the secret data held in sensitiveStuff, but she can force the application to write the data out to a file (any file, which is always bad!), and that file will contain the secret data. You can restrict the callers to this code by demanding appropriate security permissions:

[SecurityPermissionAttribute(SecurityAction.Demand, SerializationFormatter=true)]

For some scenarios, you should consider using isolated storage rather than classic file I/O. Isolated storage has the advantage that it can isolate data by user and assembly, or by user, domain, and assembly. Typically, in the first scenario, isolated storage stores user data used by multiple applications, such as the user’s name. The following C# snippet shows how to achieve this:

    using System.IO.IsolatedStorage;
    ...
    IsolatedStorageFile isoFile =
        IsolatedStorageFile.GetStore(
            IsolatedStorageScope.User |
            IsolatedStorageScope.Assembly,
            null, null);

The latter scenario—isolation by user, domain, and assembly—ensures that only code in a given assembly can access the isolated data when the following conditions are met: when the application that was running when the assembly created the store is using the assembly, and when the user for whom the store was created is running the application. The following Visual Basic .NET snippet shows how to create such an object:

    Imports System.IO.IsolatedStorage
    ...
    Dim isoStore As IsolatedStorageFile
    isoStore = IsolatedStorageFile.GetStore( _
        IsolatedStorageScope.User Or _
        IsolatedStorageScope.Assembly Or _
        IsolatedStorageScope.Domain, _
        Nothing, Nothing)

Note that isolated storage also supports roaming profiles by simply including the IsolatedStorageScope.Roaming flag. Roaming user profiles are a Microsoft Windows feature (available on Windows NT, Windows 2000, and some updated Windows 98 systems) that enables the user’s data to "follow the user around" as he uses different PCs.

Note

You can also use IsolatedStorageFile.GetUserStoreForAssembly and IsolatedStorageFile.GetUserStoreForDomain to access isolated storage; however, these methods cannot use roaming profiles for the storage.

A major advantage of isolated storage over, say, the FileStream class is that the code does not require FileIOPermission to operate correctly.

Do not use isolated storage to store sensitive data, such as encryption keys and passwords, because isolated storage is not protected from highly trusted code, from unmanaged code, or from trusted users of the computer.

Disabling tracing and debugging before deploying ASP.NET applications sounds obvious, but you’d be surprised how many people don’t do this. It’s bad for two reasons: you can potentially give an attacker too much information, and a negative performance impact results from enabling these options.

You can disable these options in three ways. The first involves removing the DEBUG verb from Internet Information Services (IIS). Figure 18-6 shows where to find this option in the IIS administration tool.

Figure 18-6. You can remove the DEBUG verb from each extension you don’t want to debug—in this case, SOAP files.

You can also disable debugging and tracing within the ASP.NET application itself by adding a Page directive similar to the following one to the appropriate pages:

<%@ Page Language="VB" Trace="False" Debug="False" %>

Finally, you can override debugging and tracing in the application configuration file:

    <trace enabled="false"/>
    <compilation debug="false"/>

By default, the ASP.NET configuration setting <customErrors> is set to RemoteOnly, which gives verbose information locally and nothing remotely. Developers commonly change this on staging servers to facilitate off-the-box debugging and forget to restore the default before deployment. This should be set to either RemoteOnly (the default) or On. Off is inappropriate for production servers.

    <configuration>
      <system.web>
        <customErrors defaultRedirect="error.htm" mode="RemoteOnly">
          <error statusCode="404" redirect="404.htm"/>
        </customErrors>
      </system.web>
    </configuration>

Don’t deserialize data from untrusted sources. This is a .NET-specific version of the "All input is evil until proven otherwise" mantra outlined in many parts of this book. The .NET common language runtime offers classes in the System.Runtime.Serialization namespace to package and unpackage objects by using a process called serializing. (Some people refer to this process as freeze-drying.) However, your application should never deserialize any data from an untrusted source, because the reconstituted object will execute on the local machine with the same trust as the application.

To pull off an attack like this also requires that the code receiving the data have the SerializationFormatter permission, which is a highly privileged permission that should be applied to fully trusted code only.

Note

The security problem caused by deserializing data from untrusted sources is not unique to .NET. The issue exists in other technologies. For example, MFC allows users to serialize and deserialize an object by using CArchive::operator<< and CArchive::operator>>. That said, all code in MFC is unmanaged and hence, by definition, runs as fully trusted code.

The .NET environment offers wonderful debug information when code fails and raises an exception. However, the information could be used by an attacker to determine information about your server-based application, information that could be used to mount an attack. One example is the stack dump displayed by code like this:

    try {
        // Do something.
    } catch (Exception e) {
        Result.WriteLine(e.ToString());
    }

It results in output like the following being sent to the user:

    System.Security.SecurityException: Request for the permission of type
    System.Security.Permissions.FileIOPermission...
       at System.Security.SecurityRuntime.FrameDescHelper(...)
       at System.Security.CodeAccessSecurityEngine.Check(...)
       at System.Security.CodeAccessSecurityEngine.Check(...)
       at System.Security.CodeAccessPermission.Demand()
       at System.IO.FileStream..ctor(...)
       at Perms.ReadConfig.ReadData() in c:\temp\perms\perms\class1.cs:line 18

Note that the line number is not sent other than in a debug build of the application. However, this is a lot of information to tell anyone but the developers or testers working on this code. When an exception is raised, simply write to the Windows event log and send the user a simple message saying that the request failed.

    try {
        // Do something.
    } catch (Exception e) {
    #if(DEBUG)
        // Debug builds: show the full exception to the developer.
        Result.WriteLine(e.ToString());
    #else
        // Release builds: generic message to the user, details to the log.
        Result.WriteLine("An error occurred.");
        new LogException().Write(e.ToString());
    #endif
    }

    public class LogException {
        public void Write(string e) {
            try {
                new EventLogPermission(
                    EventLogPermissionAccess.Instrument,
                    "machinename").Assert();
                EventLog log = new EventLog("Application");
                log.Source="MyApp";
                log.WriteEntry(e, EventLogEntryType.Warning);
            } catch(Exception e2) {
                //Oops! Can’t write to event log.
            }
        }
    }

Depending on your application, you might need to call EventLogPermission(…).Assert, as shown in the code above. Of course, if your application does not have the permission to write to the event log, the code will raise another exception.

The .NET Framework and the CLR offer solutions to numerous security problems. Most notably, the managed environment helps mitigate buffer overruns in user-written applications and provides code access security to help solve the trusted, semitrusted, and untrusted code dilemma. However, this does not mean you can be complacent. Remember that your code will be attacked and you need to code defensively.

Much of the advice given in this book also applies to managed applications: don’t store secrets in Web pages and code, do run your applications with least privilege by requiring only a limited set of permissions, and be careful when making security decisions based on the name of something. Also, you should consider moving all ActiveX controls to managed code, and certainly all new controls should be managed code; simply put, managed code is safer.

Finally, Microsoft has been proactively providing many .NET security-related documents at http://msdn.microsoft.com. You should use "Security Concerns for Visual Basic .NET and Visual C# .NET Programmers" at http://msdn.microsoft.com/library/en-us/dv_vstechart/html/vbtchSecurityConcernsForVisualBasicNETProgrammers.asp as a springboard to some of the most important.