Chapter 2. Confidence as a product

Abstract

System stakeholders require an understanding of the risks and confidence in their systems. System assurance involves making a clear, comprehensive, and defensible case that security safeguards are adequate against the threats to the system. System assurance complements system engineering and risk assessment by focusing on building security arguments to justify the security posture of the system, gathering evidence, and communicating cybersecurity knowledge. This chapter describes the nature of assurance, demonstrates the need for argument in support of complex claims, and explains the difference between positive and negative claims and between process-based and goal-based assurance. Architecture-driven assurance cases are introduced, in which confidence is produced by justifying that every system location was inspected, that all locations in the system were found to be protected from identified threats, and that no location was found to be vulnerable. An assurance case is a knowledge product that provides confidence when consumed by the system stakeholders.

Keywords

assurance, assurance case, assurance argument, security, security posture, confidence, risk, risk assessment, security engineering, system assurance, security safeguard, security countermeasure, architecture, evidence, location of a vulnerability

The words of some men are trusted simply on account of their reputation for caution, judgment, and veracity. But this does not mean that the question of their right to our confidence cannot arise in the case of all their assertions: only that we are confident that any claim they make weightily and seriously will in fact prove to be well-founded, to have a sound case behind it, to deserve—have a right to—our attention on its merits.

—Stephen Toulmin, The Uses Of Argument

2.1. Are you confident that there is no black cat in the dark room?

When assessing the security posture of a system, the concept of location is very important in achieving confidence that the system is secure to operate. In other words, it is confidence that every system location has been visited and evaluated, that all locations in the system are found to be protected from identified threats, and that no location is found to be vulnerable.

The importance of location for achieving confidence is best illustrated through the following example: “How to find a black cat in a dark room?”

As we all know, it is hard to find a black cat in a dark room, especially if we are not sure that the cat is there. Searching through a large space, for example, the size of a skyscraper, can take a long time and may be quite costly. For that reason, many skyscraper managers would decide to wait for the cat to become hungry, at which point it will show up by itself. Security risk assessment is often a lot like that, too.

But what is the process of finding the cat? First, we have to make some assumptions: We are going to look for a live cat, not a stuffed animal cat nor a photo of a cat nor any other form or shape like a cat. Second, we need to clarify the scope: What are the confines of the room in which we are going to look for the cat? The outcome of the exercise of finding something (for example, finding a cat) within the given confines involves determining the location of the object. There are two possible situations that we need to be aware of: 1) there is at least one cat in the room; and 2) there are no cats in the room. So, how do we find a cat? We perform some search activity; for example, we take a net and try to catch the cat. When we have caught something (and identified the location of this object), we need to know that this object is indeed a cat (and not a chair, or a hat, or a rat). The object must feel like a cat, look like a cat, and behave like a cat.

So, it is the essential characteristics of the object that we need to focus on in order to conduct the search. In our example, we will focus on the following four groups of characteristics:

• Characteristics of being a cat: cat has a tail, cat has pointy ears, cat meows, cat runs after mice, cat likes milk, cat has very large eye sockets, cat has a specialized jaw, cat has seven lumbar vertebrae.

• Characteristics of being a living thing: thing breathes, thing has temperature, thing eats food, thing makes sound, thing has smell, thing moves from one location to another.

• Characteristics of being in the room: location of thing is inside room, thing enters room, thing is not at location that is outside room.

• Characteristics of being: it has color, it has weight, it has height, it has length, it can be located, it is at a single location at any given time.

From this perspective, not all facts about cats are equally useful for our search. It is clear that we need to focus on the discernable characteristics, the ones that we can incorporate into our search procedure. For example, the following facts involving cats are not relevant to the search: cats are believed to have been domesticated in ancient Egypt; the word “cat” is derived from the Turkish word “qadi”; cats are valued by humans for companionship; cats have been associated with humans for at least 9,500 years. A discernable characteristic gives an objective and repeatable procedure to say whether some location is occupied by a cat or not.
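The idea of a discernable characteristic as an objective, repeatable test can be sketched in a few lines of Python. This is only an illustration: the `Observation` fields, the 30-degree temperature threshold, and the function names are assumptions made for the example and are not part of the chapter's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """What a searcher can objectively record at one location (hypothetical fields)."""
    inside_room: bool
    temperature_c: float  # measured temperature of the thing at the location
    moves: bool           # the thing moves from one location to another
    meows: bool
    has_tail: bool
    has_pointy_ears: bool
    color: str


def is_living_thing(obs: Observation) -> bool:
    # Assumed threshold: a warm thing that moves by itself counts as alive.
    return obs.temperature_c > 30.0 and obs.moves


def is_cat(obs: Observation) -> bool:
    return is_living_thing(obs) and obs.meows and obs.has_tail and obs.has_pointy_ears


def is_black_cat(obs: Observation) -> bool:
    """Repeatable test: the same observation always yields the same verdict."""
    return obs.inside_room and is_cat(obs) and obs.color == "black"


live_cat = Observation(True, 38.5, True, True, True, True, "black")
stuffed_toy = Observation(True, 20.0, False, False, True, True, "black")
print(is_black_cat(live_cat), is_black_cat(stuffed_toy))  # prints: True False
```

Facts such as the history of domestication have no place in `Observation` because no searcher can measure them at a location.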

The need of finding a black cat in the dark room can be motivated by several subtly different scenarios, each of which determines a different desired outcome of the search. Here are three scenarios:

1. Justify that there are five black cats in the room

2. Justify that there is no black cat in the room

3. Justify that there will be no black cats in the room for the next 12 months

In the first scenario, we located five black cats. In presenting a set of five locations, we make an implicit claim that each location contains a black cat. So each location has to pass the “contains a black cat” test. We assume that cats are not removed from their locations. There could have been ten or more black cats in the room, but we found five and claimed there are five black cats. How can a bystander be confident in the outcome of our search? First of all, we must convince her that there are no false reports (e.g., a dark brown cat). Validating the claim that “a given location contains a black cat” requires systematic application of the set of essential characteristics. A critical bystander may question our way of identifying a black cat and may ask us to produce the evidence for each cat that we claim. But how do we know that there were no more than five black cats in the room? How important is it to know whether there are only five black cats?

In the second scenario, the experience of our search is used to justify the claim “there is no black cat in the room.” This second scenario is different because, at least at first sight, no explicit location is produced; otherwise, the fact of finding a location containing a black cat becomes counterevidence to the claim. The two claims are quite different, too. In the first scenario, we made a positive claim that “there is a location in the room that contains the black cat.” In the second scenario, we make a negative claim that “there is no black cat in the room.” However, let's be clear: this claim is also about locations. This claim says that “No one location in the room contains a black cat.”

Validating the negative claim that “there is no black cat in the room” goes beyond search, because it requires additional evidence gathering activities to justify the claim. This claim raises many critical questions: How thorough was the search? (Did you visit all corners of the room? Did you look under the couch?) What exactly did you see during the search? Did you see any cats at all? And how did you know that what you found was not the cat? Justification of this claim requires more evidence than in the first scenario involving a positive claim.
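The asymmetry between the two kinds of claim can be made concrete with a short Python sketch. The function names and the location labels are hypothetical. The point is only that a positive claim needs one witness, whereas a negative claim needs both full coverage of the locations and the absence of any witness.

```python
def positive_claim_holds(findings: dict[str, bool]) -> bool:
    """'Some location contains a black cat': a single witness location suffices."""
    return any(findings.values())


def negative_claim_holds(all_locations: set[str], findings: dict[str, bool]) -> bool:
    """'No location contains a black cat': every location must have been
    inspected, and no inspected location may contain a cat."""
    fully_covered = all_locations.issubset(findings.keys())
    return fully_covered and not any(findings.values())


room = {"corner", "under the couch", "shelf"}
partial = {"corner": False, "under the couch": False}  # the shelf was never inspected
complete = {"corner": False, "under the couch": False, "shelf": False}

print(negative_claim_holds(room, partial))   # prints: False
print(negative_claim_holds(room, complete))  # prints: True
```

The first call fails not because a cat was found but because the search was not thorough, which is exactly the kind of critical question a reviewer raises.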

There are two ways we can build justification of our claim. The “process-based” assurance produces evidence of compliance to the objectives of the search. This brings confidence that the search team performed its duties according to the statement of work. For example, we agreed that the search team will put a bowl of milk at the entrance of the room and will call the cat by saying “Kitty-kitty-kitty” at least three times. The advantage of the “process-based” assurance is that it always deals with a positive claim, so the evidence is, in most cases, some sort of record of performance. For example, as evidence, the search team brings us a movie, demonstrating that they have indeed performed the required steps and no black cat showed up. The evidence directly supports the claim that the required steps have been performed. The benefit of the “process-based” assurance approach is that it is aligned with the statement of work and that it requires direct evidence of compliance. The statement of work provides the argument framework for the justification and guides the evidence collection, so when the consumer of the assurance believes in the statement of work, he is very likely to be convinced by the corresponding evidence.

On the other hand, the “goal-based” assurance requires an argument to justify the original negative claim, in our case the claim “there are no black cats in the room.” The evidence collected by the search team must support this argument. Goal-based assurance addresses the critical questions head-on.

Design of an argument requires some planning. The concept of “location” introduced earlier turns out to be essential for reasoning about the search, as the evidence collecting procedure associated with the argument deals with locations. Locations with similar characteristics that suggest common search tactics are often arranged into “zones,” or “components.” Zones usually have entry points, where cats and other things may enter and exit. Each zone constrains the behaviors that can occur within that zone. The patterns of locations determine the patterns of behaviors that may take place in these locations (see Figure 1).

Figure 1. The room with black cats

In the world of systems, it is the architecture that provides a language for describing different locations within a system. Each component determines some pattern of behaviors (for example, the movements of cats through the room to their hiding places, or the flow of data packets through the web server). Behavior rules are based on certain continuity of flow: Cats do not simply appear at a given location, and the same principle applies to the flows of control and data through software in cyber systems.

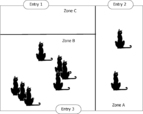

Let's assume that the room has three different zones presented in Figure 2. Zone A has one entry point, and we learned that it is some sort of revolving door not friendly to cats. Also, Zone A does not have any other features; it is one open, empty space, with no hiding places. Zone B has only one entry point that is suitable for cats. Zone B has some additional features inside where cats could hide. Zone C has an entry point and we learned that this entry point is claimed to be unsuitable for cats to enter.

Figure 2. Zones within the room

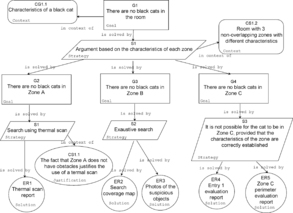

Characteristics of locations determine the structure of the argument. For example, the knowledge of the zones from Figure 2 determines the strategy to justify that a black cat is not in the room. We justify separately that a cat is not in each zone. For Zone C, we need to check that the entry point is indeed unsuitable for cats to enter Zone C. Then we need to check that there are indeed no other entry points to Zone C. We request these two checks and use the reports as evidence to justify the claim that “there are no black cats in Zone C.” To be precise, we justify the first sub-claim, “the entry point to Zone C is not suitable for any cat to enter the zone,” using the first report as evidence. We may further support the claim with indirect evidence of the credibility of the person producing the report. The evidence in support of the second sub-claim, “Zone C has only one entry point,” is provided by the second report. Again, we may want to supplement this evidence with a side argument justifying the credibility and thoroughness of the analysis method. The top claim is justified by the argument that if a zone has a single entry point that is not suitable for any cat, then it is not possible for a cat to enter the zone, and therefore the zone does not contain a cat, and therefore it does not contain any black cat. This claim is justified by the two sub-claims rather than directly by evidence (see Figure 3).

Figure 3. Assurance case for Scenario 2: there is no black cat in the room
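The Zone C argument has a simple tree shape: a top claim, two sub-claims, and one report under each sub-claim. A minimal Python sketch of that structure follows. The `Claim` class, its `unsupported` method, and the report labels are assumptions made for illustration, not a standard assurance-case notation.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    evidence: list[str] = field(default_factory=list)
    subclaims: list["Claim"] = field(default_factory=list)

    def unsupported(self) -> list[str]:
        """Return the claims that have neither evidence nor sub-claims beneath them."""
        if not self.evidence and not self.subclaims:
            return [self.text]
        gaps: list[str] = []
        for sub in self.subclaims:
            gaps.extend(sub.unsupported())
        return gaps


zone_c = Claim(
    "There are no black cats in Zone C",
    subclaims=[
        Claim(
            "The entry point to Zone C is not suitable for any cat to enter the zone",
            evidence=["report 1 (hypothetical label): inspection of the entry point"],
        ),
        Claim(
            "Zone C has only one entry point",
            evidence=["report 2 (hypothetical label): check for other entry points"],
        ),
    ],
)

print(zone_c.unsupported())  # prints: []
```

An empty result only means that every leaf claim cites some evidence. Whether that evidence is credible is still a matter for the reviewer, as the critical questions in the text make clear.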

The same argument is not defensible when applied to Zone A, because the entry point to Zone A may be suitable for a cat. The description “is not friendly to cats” does not imply “not suitable for cats to enter the zone beyond reasonable doubt,” so we need a different strategy. We can still check that there are no other entry points to Zone A; we can check that the revolving door repels cats most of the time, while leaving a remote possibility that a cat may have gotten through. This leaves some uncertainty, so we cannot fully justify the claim. To cover the deficit of assurance, we can make an additional check. For example, based on the assumed characteristics of Zone A, we could use a thermal scanner. If the scan did not report any objects with a temperature higher than 20 degrees Celsius, we can use this as evidence to support the claim that “there are no cats in Zone A.” The consumer of our assurance case may still not be convinced: What if the thermal scanner is malfunctioning? What if there are some places that are hidden from the scanner? We could further request an additional check. We could let a dog into Zone A and observe that the thermal scan was showing the dog, that the dog was moving around Zone A, and that the dog did not bark. These reported facts can be added as further evidence to address the critical questions of the reviewer and justify the claim that “there are no cats in Zone A.”

Zone B does not share the assumptions we made for Zone A, which warranted the use of the thermal scan. We need a different strategy to support the claim that “there are no cats in Zone B.” We could do an exhaustive search of Zone B and produce the testimony and photos as evidence that the search party has indeed visited all locations in Zone B and that no location contained a cat at the time of the search. The argument should also describe the measures that prevented a cat from roaming within Zone B while the search was being performed.

The next step is to present the assurance case explicitly, in a visual format, where all claims and all assumptions are shown as individual icons and all links between claims, sub-claims, and evidence are visible. Visual representation of the assurance case adds to the clarity of the argument and encourages review of the assurance case, which facilitates its consumption.

The knowledge of the architecture of the room helped us design the argument that involves a different search strategy for each zone, taking advantage of the individual characteristics of each zone. The design of the argument determined the evidence gathering during the execution of the search.

Now let's consider the third scenario: “there will be no black cats in the room for the next 12 months.” Here the outcome is a pure claim; for example, “the risk of a cat entering the room within the next 12 months is made as low as reasonably practicable.” Again, this is a claim about all locations: “no location in the room has high risk of a cat entering that location within the next 12 months.” There is a further difference from our second scenario. In the previous scenario, the “no location” claim referred to the past events—one of the implicit assumptions in the “no black cat in the room” scenario is bounded with a certain timeframe: “no location in the room contained the cat at the time of search.” In the “will be no black cats in the room” scenario, we are interested in a future timeframe.

As we extend the timeframe from “here and now” to cover a future period of time, we need to change the justification approach. It does not make sense to justify this claim by the characteristics of a single search. Instead, the justification of the claim comes from the characteristics of the risk mitigation countermeasures that we apply. In particular, we would like to claim that the countermeasures were set up in such a way that no location in the room will contain a cat. The assurance scenario also involves a “no location” claim, and to justify this claim, we must produce the evidence of the countermeasures and their effectiveness. For any complex claim, the connection between the evidence and the claim is no longer trivial, and requires an argument that contains a number of intermediate sub-claims and additional warrants.

Let's see how this approach works for the “will be no black cats” scenario. Again we use a different argument for each zone, taking advantage of the unique characteristics of each zone (see Figure 4). The previous argument for Zone C is applicable to the assurance scenario, because one can reasonably assume that if an entry point is not suitable for any cat to enter Zone C now, it will not be suitable for those cats in the future, so Zone C is sealed, free from “cat encounters.” However, the arguments for Zones A and B are no longer applicable, because they both involved a single search, which was sufficient for justifying claims about the events to date, but not sufficient as a guarantee for future events. In other words, a one-time search is not a sufficient countermeasure, as cats may enter the room after the search is over. This argument leaves too much “assurance deficit.”

Figure 4. Assurance case for Scenario 3: there will be no cat in the room

In order to design the assurance argument, we need to check to see if the system has any features that mitigate the risk, or modify the system and add some countermeasures. For example, we could recommend a security guard at the entry point to Zone B. In the “assurance scenario,” a guard is a countermeasure against cats entering Zone B, as well as the evidence collection mechanism—we can take a testimony from the guard that no cats entered Zone B within the last three hours, which would support the claim that there is no cat in Zone B. Each countermeasure must be associated with an evidence collecting mechanism, because in order to justify the claim, we need to understand that the countermeasure was actually working.

The concept of location is important for the selection of countermeasures, as they are associated with particular locations based on the characteristics of the behaviors around these locations (in our example, near the entry points). In particular, the entry point in Zone C did not require an additional countermeasure; however, the entry points to Zones A and B did. Positioning of the countermeasure is important, since a diligent reviewer of the assurance case may identify the possibility of bypassing the countermeasure. The reviewer may ask the following critical question: Is it possible to behave in such a way that the countermeasure is not effective, leaving the system exposed? If the argument does not address this critical question satisfactorily, it is identified as a weak argument that needs to be revised, and the revision is backed by additional countermeasures identified through risk assessment. This simple idea is called “defense in depth.” We can keep adding countermeasures to eliminate any remaining undesired behaviors [NDIA 2008].
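The pairing of each countermeasure with a location and with an evidence collecting mechanism can be checked mechanically. The following Python sketch is a simplified illustration: the class names, the `assurance_gaps` function, and the sample data are hypothetical, and "passable by cats" stands in for whatever analysis establishes that an entry point is exposed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Countermeasure:
    name: str
    evidence_source: Optional[str]  # None: no way to show that the countermeasure works


@dataclass(frozen=True)
class EntryPoint:
    zone: str
    passable_by_cats: bool
    countermeasure: Optional[Countermeasure] = None


def assurance_gaps(entry_points: list[EntryPoint]) -> list[str]:
    """List the entry points where the argument is weak."""
    gaps = []
    for ep in entry_points:
        if not ep.passable_by_cats:
            continue  # a sealed entry point needs no additional countermeasure
        if ep.countermeasure is None:
            gaps.append(f"Zone {ep.zone}: no countermeasure")
        elif ep.countermeasure.evidence_source is None:
            gaps.append(f"Zone {ep.zone}: no evidence that '{ep.countermeasure.name}' works")
    return gaps


room = [
    EntryPoint("A", passable_by_cats=True),
    EntryPoint("B", passable_by_cats=True,
               countermeasure=Countermeasure("security guard", "guard testimony")),
    EntryPoint("C", passable_by_cats=False),
]
print(assurance_gaps(room))  # prints: ['Zone A: no countermeasure']
```

Each reported gap names a location, which is where an additional countermeasure would have to be placed.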

Assurance is an architectural activity that identifies locations where an assurance case reveals a weak argument and, therefore, identifies the need for additional countermeasures to be implemented in these locations to mitigate the risk. Assurance evidence usually takes the form of a report demonstrating the presence of a countermeasure and the proof of its performance [NDIA 2008], [ISO 15026], [SSE-CMM 2003].

A weak argument leads to a weak assurance case, which leads to a low level of confidence that the system is not vulnerable. From the architecture perspective, it is important to realize that there is a certain location associated with the vulnerability. How do we know where the vulnerability zone is? This zone is determined by the combination of the threat and the system's inadequately addressed exposure, which results from a missing or inadequate countermeasure or from an implemented vulnerability. For example, in the black cat example, the vulnerability area for Zone B is located around the entry point to Zone B where a cat can enter. The exposed area, which is the zone in which an unmitigated threat is possible, is the entire Zone B.

The assurance scenario is more complex than the previous ones because it involves timeframes and the relationships between threats and countermeasures. It raises more critical questions: What are the countermeasures? For each countermeasure, what particular threat behaviors does it mitigate? (Did we consider all possible ways cats can get into the room? Did we consider all possible locations where cats can hide in the room? For each given location, how do we know that there is no cat there?) A critical person may ask: How do we know that the countermeasures are indeed in place? How do we know that they will still be there and effective in the next 12 months?

Traditional detection of vulnerabilities is to some extent similar to the first scenario: A team of experts looks at the system and identifies five problems. They may argue why the identified problems are risky (why we should believe that a situation passes “the cat test”). However, there is a gap between an opportunistic approach to finding vulnerabilities and assembling a defensible assurance argument that all vulnerabilities have been identified. We can also say that scenarios 2 and 3 produce more knowledge than scenario 1. In particular, scenario 1 produces knowledge about five locations in the room where cats were claimed to be found, while scenario 2 provides knowledge about all locations in the room. Scenario 3 provides knowledge about all locations and about the countermeasures, and connects countermeasures to the characteristics of all locations in the room over the desired period of time. We also say that scenarios 2 and 3 produce more confidence than scenario 1 because they provide justification for the claims.

2.2. The nature of assurance

There is a great deal of confusion surrounding the term “assurance,” especially in the area of cybersecurity, as this is a relatively new area. There is a general understanding that some “assurance” has to accompany a system; however, there is no consensus on which of the several disciplines involved in building a system is best positioned to perform “assurance.” As a result, several of these disciplines mention assurance, often in passing, but there does not seem to be a single champion, and the various parts of assurance are fragmented across several handbooks, often using conflicting viewpoints and terminology. None of the disciplines focuses on assurance or is positioned to handle the convergence of assurance of both safety and security properties of cyber systems and their material extensions.

For example, the International Systems Security Engineering Association (ISSEA) promotes the discipline of Security Engineering to address the goals of understanding security risks associated with an enterprise, providing security guidance into other engineering activities, establishing confidence in the correctness of security mechanisms, and “integrating the efforts of all engineering disciplines and specialties into a combined understanding of the trustworthiness of a system.” According to ISSEA, security engineering interfaces with many other disciplines, including Enterprise Engineering, Systems Engineering, Software Engineering, Human Factors Engineering, Communications Engineering, Hardware Engineering, Test Engineering, and System Administration. Security engineering is divided into three basic areas: risk assessment, engineering, and assurance [SSE-CMM 2003]. Such partitioning, however, may lead one to believe that assurance is something specific to security engineering. Yet, assurance has been developed in the context of safety engineering for many years [Wilson 1997], [Kelly 1998], [SafSec 2006].

There is an area of Quality Assurance that is developing its own arsenal of methods and techniques, related to validation, verification, and testing, usually with the emphasis on products.

Finally, the ISO/IEC standard 15026, “System and Software Assurance,” treats assurance as common for safety and security, as this standard “…provides requirements for the life cycle, including development, operation, maintenance, and disposal of systems and software products that are critically required to exhibit and be shown to possess properties related to safety, security, dependability, or other characteristics. It defines an assurance case as the central artifact for planning, monitoring, achieving, and showing the achievement and sustainment of the properties and for related support of other decision making.” [ISO 15026]

A common topic in assurance for safety and security is the emphasis on the analysis of engineering artifacts, rather than their development, and especially a common methodological framework for analyzing design artifacts and implementation artifacts. Of course, the engineering discipline requires analysis to evaluate alternative designs; however, validation and verification activities, including testing, are considered a separate specialty. In the area of software engineering, there is a significant emphasis on engineering new systems and some attention to validation and verification activities, including testing, but very little attention to the analysis and modification of already existing code, also known as maintenance engineering.

So, system assurance deserves the status of a stand-alone engineering discipline in order to focus on these common topics. This discipline can then interface with system security engineering and safety engineering to provide guidance for arguing safety or security.

In order to provide a clear picture, detailed interactions between system engineering, risk analysis, and assurance need to be considered.

2.2.1. Engineering, risk, and assurance

System engineering and system assurance are intimately related. To assure a system means to demonstrate that system engineering principles were correctly followed to meet the security goals. However, “good” system engineering as it is commonly understood does not guarantee that the resulting system will have the necessary level of system assurance. The need for providing additional guidance for system assurance is based on the rapid evolution of threats and changes in the operating environments of systems. The assurance of systems has usually been relegated to various processes as part of a broader systems engineering strategy. For example, discussion and validation of requirements is performed to ensure a consistent program definition and architecture. Testing and evaluation are done to ensure that the developed system meets its specifications and the requirements behind them. Various organizational audits and assessments track the use of systems engineering principles throughout the program's life cycle, to ensure that organizational performance is a function of good practice. Because of the complexity and nature of the evolving threats, however, it is important to plan and coordinate these practices and processes, to keep the threat in mind across the system's life cycle. System assurance cannot be executed as an isolated business process at a specific time in the program's schedule, but must be executed continuously from the very earliest conceptualization of the system to its fielding and eventual disposal.

Today, the risk management process often does not consider assurance issues in an integrated way, resulting in project stakeholders unknowingly accepting risks that can have unintended and severe financial, legal, and national security implications. Addressing assurance issues late in the process or in a nonintegrated manner may result in the system not being used as intended, or in excessive costs or delays in system certification or accreditation. Assurance does not provide any additional security services or safeguards; rather, it refers to confidence in the security of the product or service, that is, confidence that the product or service fulfills the requirements of the situation and satisfies the security requirements. This may appear to be a less than important aspect at first sight, particularly when the cost of providing or obtaining assurance is factored in. However, it should never be forgotten that, while assurance does not provide additional security services or safeguards, it does serve to reduce the uncertainty associated with vulnerabilities, and thus often eliminates the need for additional security services or safeguards. Risk management must consider the broader consequences of a failure (e.g., to the mission, people's lives, property, business services, or reputation), not just the failure of the system to operate as intended. In many cases, layered defenses (e.g., defense-in-depth or engineering-in-depth) may be necessary to provide acceptable risk mitigation.

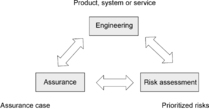

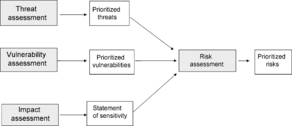

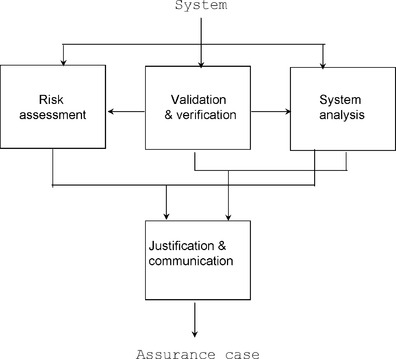

The Systems Security Engineering Capability Maturity Model (SSE-CMM), developed by ISSEA [SSE-CMM 2003], considers risk assessment, engineering, and assurance as key components of the process that delivers security and, although these components are related, it is possible and useful to examine them separately. The risk assessment process identifies and prioritizes threats to the developing product or system, the security engineering process works with other engineering disciplines to determine and implement security solutions, and the assurance process establishes confidence in the security solutions and conveys this confidence to stakeholders (see Figure 5).

Figure 5. Interdependency: risk, engineering, and assurance

The Risk Assessment – A major goal of security engineering is the reduction of risk. Risk assessment is the process of identifying problems that have not yet occurred. Risks are assessed by examining the likelihood of the threat and vulnerability and by considering the potential impact of an unwanted incident. Associated with that likelihood is a factor of uncertainty, which will vary depending upon the particular situation. This means that the likelihood can only be predicted within certain limits. In addition, the impact assessed for a particular risk also has associated uncertainty, as the unwanted incident may not turn out as expected. Because there may be a large amount of uncertainty about the accuracy of the predictions associated with these factors, planning and the justification of security can be very difficult. One way to partially deal with this problem is to implement techniques to detect the occurrence of an unwanted incident.

An unwanted incident is made up of three components: threat, vulnerability, and impact. Vulnerabilities are properties of the asset that may be exploited by a threat and include weaknesses. If either the threat or the vulnerability is not present, there can be no unwanted incident and thus no risk. Risk management is the process of assessing and quantifying risk, and establishing an acceptable level of risk for the organization (see Figure 6). Managing risk is an important part of the management of security.

Figure 6. Incident components: threat, vulnerability, and impact

Risks are mitigated by implementing safeguards, which may address the threat, the vulnerability, the impact, or the risk itself. However, it is not feasible to mitigate all risks, or to mitigate any particular risk completely, in large part because of the cost of risk mitigation and the associated uncertainties. Thus, some residual risk must always be accepted. In the presence of high uncertainty, risk acceptance becomes very problematic because of its inexact nature. One of the few areas under the risk taker's control is the uncertainty associated with the system.

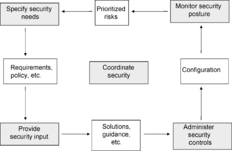

The Engineering – Security engineering is a process that proceeds through concept, design, implementation, test, deployment, operation, maintenance, and decommissioning. Throughout this process, security engineers must work closely with the other system engineering teams. This helps to ensure that security is an integral part of the larger process, and not a separate and distinct activity. Using the information from the risk process described above, together with other information about system requirements, relevant laws, and policies, security engineers work with the stakeholders to identify security needs. Once the needs are identified, security engineers identify and track specific requirements (see Figure 7).

Figure 7. Process of creating a solution to a security problem

The process of creating solutions to security problems generally involves identifying possible alternatives and then evaluating the alternatives to determine which is the most promising. The difficulty in integrating this activity with the rest of the engineering process is that the solutions cannot be selected on security considerations alone. Rather, a wide variety of other considerations, including cost, performance, technical risk, and ease of use must be addressed. Typically, these decisions should be captured to minimize the need to revisit issues. The analyses produced also form a significant basis for assurance efforts.

Later in the life cycle, the security engineer is called on to ensure that products and systems are properly configured in relation to the perceived risks, ensuring that new threats do not make the system unsafe to operate.

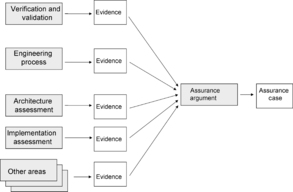

The Assurance – Assurance is an important element of security risk assessment and of the risk management phase, in which it is determined whether additional safeguards are required and, if so, whether engineering has implemented them correctly. Although assurance does not live in isolation, we can speak distinctly of an assurance life cycle, assurance requirements, assurance infrastructure, assurance stakeholders, assurance management, and specialized assurance expertise (see Figure 8). For this reason, there is a need to treat assurance as a stand-alone discipline, and as such it is explored in detail throughout the book.

Figure 8. Types of evidence for an assurance case

2.2.2. Assurance case

The umbrella component in system assurance is the “assurance case,” which is defined as a reasoned, auditable argument, along with supporting evidence, created to support the contention that a defined system will satisfy particular requirements. This structured approach documents all the relevant information that can be analyzed to validate whether the system is meeting its objectives. It lends itself to a repeatable process in which the information can be reviewed later to justify any decisions made on the basis of the assessment results. It also aids the collection of supporting evidence at different points in time, making it possible to analyze trends in both risk and trustworthiness [Toulmin 1984], [Toulmin 2003], [Kelly 1998], [NDIA 2008], [ISO 15026].

The purpose of an assurance case is to convince stakeholders, such as system owners, regulators, or acquirers, of certain properties of the system that are not obvious from system descriptions. Thus, a security assurance case is about the confidentiality, integrity, and availability of information and services provided by systems in cyberspace. In other words, an assurance case is the documented assurance (i.e., argument and supporting evidence) of the achievement and maintenance of a system's security. It is primarily the means by which those who are accountable for the mission, system, or service provision assure themselves that those services are delivering, and will continue to deliver, an acceptable level of security.

The assurance case was developed by the safety community, primarily in the UK, where legislation has mandated it as the means of arguing about the safety of systems [Kelly 1998], [Wilson 1997]. An assurance case is necessary for regulators because the main objective of regulating an activity is to ensure that those who are accountable for security discharge their responsibility properly; the assurance case then serves as an adequate means of obtaining regulatory approval for the service or project concerned.

The development of an assurance case is not an alternative to carrying out security assessment; rather, it is a means of structuring and documenting a summary of the results of such an assessment and other activities (e.g., simulations, surveys, etc.), in a way that a reader can readily follow the logical reasoning as to why a change or ongoing service can be considered safe and secure.

Procurers of critical computer-based systems have to assess the suitability of implementations provided by external contractors. What an assessor requires is a clear, comprehensible, and defensible argument, with supporting evidence that a system will behave acceptably.

The designers of a system will obviously take security requirements into account, but the satisfactory design and implementation of such requirements does not manifest itself clearly in the models and artifacts of the development process; for example, one cannot look at a development process and assume a system's reliability and security.

A contractor may provide the assessor with a large amount of diverse material on which to make their assessment. They may provide a formal specification of the system and a design by refinement. They may produce hundreds of pages of carefully crafted fault tree analysis. They may place great emphasis on the coverage and success of the system testing strategy. Each of these sources may provide a useful line of argument or a key piece of evidence, but individually they are insufficient grounds for an assessor to believe that a system is acceptably secure.

Also, individual analysis models are likely to be based on a number of underlying assumptions that are not easily captured in the model itself. There will always be issues that cannot be captured in any specific analysis model; principal among these is the justification of the model itself.

What an assessor requires is an overall argument bringing together the various strands of reasoning and diverse sources of evidence in the form of an assurance case, which makes clear the underlying assumptions and rationale. An effective assurance case needs to be a clear, comprehensive, and defensible argument that a system will behave acceptably throughout its operations and decommissioning.

There is a need for analysis models and artifacts to derive measures of security, reliability, etc., to make the properties manifest and controllable.

A structured account of these analysis models is usually presented to the appropriate regulatory authority as an assurance case.

An assurance case consists of four principal elements: objectives, argument, evidence, and context. A security case emphasizes security objectives, namely confidentiality, integrity, and availability. To assure security, the system owner has to demonstrate that the security objectives are addressed. The security argument communicates the relationship between the evidence and the objectives and shows that the evidence indicates that the objectives have been achieved. Context identifies the basis of the argument. Argument without evidence is unjustified and therefore unconvincing. Evidence without argument is unexplained: it can be unclear that (or how) security objectives have been satisfied [Eurocontrol 2006].

Requirements are supported by claims. Claims are supported by other claims (sub-claims). Leaf sub-claims are supported by evidence. The structured tree of sub-claims defines the context for the argument.
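The tree described above can be sketched as a small data structure. This is a minimal illustration, not a prescribed representation: the `Claim` type, its field names, and the sample statements and evidence references are all hypothetical. It shows claims holding sub-claims, evidence attaching to leaves, and a traversal that lists the leaf sub-claims.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Claim:
    """A claim supported by sub-claims or, at a leaf, by evidence."""
    statement: str
    subclaims: list["Claim"] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)  # references to facts

    def leaves(self) -> Iterator["Claim"]:
        """Yield the leaf sub-claims, where evidence is expected."""
        if not self.subclaims:
            yield self
        for sub in self.subclaims:
            yield from sub.leaves()

    def outline(self, depth: int = 0) -> list[str]:
        """Render the tree as indented lines, evidence under each claim."""
        lines = ["  " * depth + self.statement]
        for item in self.evidence:
            lines.append("  " * (depth + 1) + "evidence: " + item)
        for sub in self.subclaims:
            lines.extend(sub.outline(depth + 1))
        return lines


# Hypothetical example built around the three security objectives.
top = Claim("The system is acceptably secure", subclaims=[
    Claim("Confidentiality is preserved",
          evidence=["access-control test report"]),
    Claim("Integrity is preserved"),
    Claim("Availability is preserved"),
])

print("\n".join(top.outline()))
print([leaf.statement for leaf in top.leaves() if not leaf.evidence])
```

The last line lists the leaf sub-claims that still lack evidence, which is where evidence gathering would be directed next.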

2.2.2.1. Contents of an assurance case

A good assurance case should include at least the following [Eurocontrol 2006]:

• Claim – what the assurance case is trying to show—this should be directly related to the claim that the subject of the assurance case is acceptably secure.

• Purpose – why is the assurance case being written and for whom.

• Scope – what is, and is not, covered.

• System Description – a description of the system/change and its operational and physical environment, sufficient only to explain what the assurance case addresses and for the reader to understand the remainder of the assurance case.

• Justification – for project assurance cases, the justification for introducing the change (and therefore potentially for incurring some risk).

• Argument – a reasoned and well-structured assurance argument showing how the claim is satisfied.

• Evidence – Concrete, agreed-upon, available facts about the system of interest that can support the claim directly or indirectly.

• Caveats – all assumptions, outstanding security issues, and any limitations or restrictions on the operation of the system.

• Conclusions – a simple statement to the effect that the claim has been satisfied, subject to the stated caveats.
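The list above lends itself to a simple completeness check on a draft assurance case. The sketch below assumes a draft is held as a dictionary of section texts; the section identifiers, the `missing_sections` function, and the sample draft are illustrative and not taken from [Eurocontrol 2006].

```python
# Section names follow the list above; the identifiers themselves are
# illustrative and not part of any standard.
REQUIRED_SECTIONS = (
    "claim", "purpose", "scope", "system_description",
    "argument", "evidence", "caveats", "conclusions",
)


def missing_sections(case: dict[str, str],
                     project_case: bool = False) -> list[str]:
    """Return the names of required sections that are absent or empty."""
    required = list(REQUIRED_SECTIONS)
    if project_case:
        # Justification applies to project assurance cases only.
        required.append("justification")
    return [name for name in required if not case.get(name, "").strip()]


draft = {
    "claim": "The payment service is acceptably secure.",  # hypothetical
    "purpose": "Support the regulator's approval decision.",
    "scope": "   ",  # whitespace only, so it counts as missing
}
print(missing_sections(draft, project_case=True))
```

A check of this kind says nothing about the quality of each section; it only flags sections that have not been written yet.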

2.2.2.1.1. Assurance claims

An assurance case involves one or more Assurance Claims (from here on referred to as “claims”). Each claim is phrased as a proposition: a statement about a desired result, objective, or point of measure that is important to assess and understand, and that is answered as true or false. A typical system assurance claim is a safeguard effectiveness statement, that is, a claim that a particular security control adequately mitigates certain identified risks. The characteristics of claims can be summarized as follows:

• Claims should be bounded.

• Claims should never be open-ended.

• Claims should be provable.

In particular, the claim statement should involve discernible facts, that is, facts that can be recognized with confidence among the available facts about the subject of the assurance case, at least in principle, even if the evidence gathering involves complex analysis that derives additional intermediate facts. When forming claims, the answers to the following questions can be helpful:

• What statements would convince us that the claim is true?

• What are the reasons we can say that the claim is true?

2.2.2.1.2. Assurance arguments

The role of an assurance argument is to explain succinctly the relationship between an assurance claim and the assurance evidence. It is used to describe clearly how a claim is justified:

• How evidence should be collected and interpreted; and

• Ultimately, what evidence supports the claim.

Assurance Arguments (from here on referred to as “arguments”) assist in building up the claim structure and can be viewed as a strategy for substantiating bigger goals or high-level claims by decomposing an assurance claim into a number of smaller sub-claims. While evidence is the set of available, agreed-upon, and undisputed facts about the system of interest, the assurance argument addresses the conceptual distance between the available facts and the claim statements, for example, when the claim involves analysis, or when the available facts need to be accumulated because none of them directly supports the claim.

Decomposition is repeated until no further simplification is needed because the claim can be objectively proven. The end results of this divide-and-conquer strategy are sub-claims that need no further refinement and can be viewed as a contractual agreement on how evidence will be collected and how much evidence is required:

• When is evidence considered missing?

• What level of evidence collection is required?

These sub-claims form the set of measurable goals for collecting evidence.
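One way to make such a goal measurable is to record, for each leaf sub-claim, how much evidence has been agreed upon and how much has been collected so far. The sketch below is only an illustration: the `EvidenceGoal` type is hypothetical, and counting evidence items is an assumed, deliberately crude measure of the "level of evidence collection".

```python
from dataclasses import dataclass, field


@dataclass
class EvidenceGoal:
    """A leaf sub-claim plus the agreed amount of evidence it needs."""
    subclaim: str
    required_items: int                      # agreed minimum, assumed > 0
    collected: list[str] = field(default_factory=list)

    def shortfall(self) -> int:
        """Number of evidence items still to be collected."""
        return max(0, self.required_items - len(self.collected))

    def is_missing_evidence(self) -> bool:
        """Evidence counts as missing while the agreed minimum is unmet."""
        return self.shortfall() > 0


# Hypothetical sub-claim and evidence reference.
goal = EvidenceGoal("All external inputs are validated", required_items=2)
goal.collected.append("static analysis report")
print(goal.shortfall())            # 1
print(goal.is_missing_evidence())  # True
```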

A typical assurance case requires decomposition of the top claims into several levels of sub-claims that are eventually supported by evidence. In some cases, a single assurance argument may be used to support multiple assurance claims.

An assurance argument can be constructed based on evaluation and testing of the product. This approach is often used for security-related products, particularly under the Common Criteria security evaluation scheme, ISO/IEC 15408-1:2005 [ISO 15408], [Merkow 2005]. In this case, the assurance argument is based upon the Protection Profile of the product and the Evaluation Assurance Level achieved.

Assurance arguments can be constructed in many other ways and drawn from many different sources. In the examples given previously, the assurance arguments have been based upon:

• Testing and evaluation of the product or service;

• The reputation of the supplier;

• The professional competence of the engineers performing the work; and

• The maturity of the processes used.

Other sources that could be used include:

• The methods used in the design of the product or service;

• The tools used in the design of the product;

• The tools used in the performance of the service; and

• Many other potential sources.

All of the above can be used to support the assurance claim. Which strategy is used in a particular instance largely depends upon the needs of the assurance recipient and how they will make use of the assurance case associated with the product or service.

2.2.2.1.3. Assurance evidence

Assurance evidence consists of the concrete facts collected in support of a given claim or sub-claim. For each claim, agreed-upon collection techniques are used to capture the evidence.

All assurance components are interdependent: together they demonstrate, in a clear and defensible manner, how the agreed-upon, readily available facts support meaningful claims about the effectiveness of the safeguards of the system of interest and its resulting security posture.

It is important to note that although assurance does not add any additional safeguards to counter risks related to security, it does provide the confidence that the controls that have been implemented will reduce the anticipated risk.

Assurance can also be viewed as the confidence that the safeguards will function as intended. This confidence is derived from the properties of correctness and effectiveness. Correctness is the property that the safeguards, as designed, implement the requirements. Effectiveness is the property that the safeguards provide security adequate to meet the customer's security needs. The strength of the evidentiary support to the claims is determined by the required level of assurance.

2.2.2.2. Structure of the assurance argument

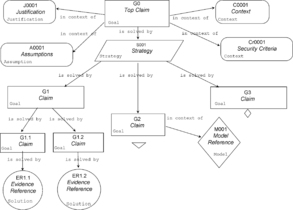

Goal-Structuring Notation (GSN), developed by the University of York in the UK, provides a visual means of setting out hierarchical assurance arguments, with textual annotation and references to supporting evidence [Kelly 1998], [Eurocontrol 2006].

The structured approach of GSN, if correctly applied, brings some rigor into the process of building assurance arguments and provides the means for capturing essential explanatory material, including assumptions, context, and justifications within the argument framework. Figure 9 shows, in an adapted form of GSN, a specimen argument and evidence structure to illustrate the GSN symbology most commonly used in assurance cases (see also Table 1).

Figure 9. Assurance case presented in Goal-Structuring Notation

| Claim – A claim should take the form of a simple predicate, i.e., a statement that can be shown to be only true or false. Goal-Structuring Notation (GSN) provides for the structured, logical decomposition of claims into lower-level claims. For an argument structure to be sufficient, it is essential to ensure that, at each level of decomposition, the set of claims covers everything that is needed to show that the parent claim is true, and that there is no valid (counter) claim that could undermine the parent claim. In Figure 9, for example, if it can be shown that the claim G1 is satisfied by the combination of claims G1.1 and G1.2, then we need to show that G1.1 and G1.2 are true in order to show that G1 is true. If this principle is applied rigorously all the way down, through, and across a GSN structure, then it is necessary to show only that each claim at the very bottom of the structure is satisfied (i.e., shown to be true) in order to assert that the top-level claim has been satisfied. Satisfaction of the lowest-level claims is the purpose of evidence. Unnecessary (or misplaced) claims do not in themselves invalidate an argument structure; however, they can seriously detract from a clear understanding of the essential claims and should be avoided. In GSN, claims are often called goals because the assurance case provides guidance to the evidence gathering and system analysis activities. |

| Evidence – It follows from the information above that, for an argument structure to be considered complete, every branch must terminate in a reference to the item of evidence that supports the claim to which it is attached. Evidence must therefore be: appropriate to, and necessary to support, the related claim (spurious evidence, i.e., information that is not relevant to a claim, must be avoided, since it would serve only to confuse the argument); and sufficient to support the related claim (inadequate evidence undermines the related claim and, consequently, all the connected higher levels of the structure). |

| Argument – Strategies are a useful means of adding “comment” to the structure to explain, for example, how the decomposition will develop. They are not predicates and do not form part of the logical decomposition of the claim; rather, they are there purely for explanation of the decomposition. In GSN, argument is often referred to as strategy, since it explains the decomposition of a claim into sub-claims, and therefore, describes the strategy of assurance. |

| Assumption – An assumption is a statement whose validity has to be relied upon in order to make an argument. Assumptions may also be attached to other GSN elements including strategies and evidence. |

| Context – Context provides information necessary for a claim (or other GSN element) to be understood or amplified. Context may include a statement, which limits the scope of a claim in some way. Criteria are the means by which the satisfaction of a claim can be checked. |

| Justification – A justification is used to give a rationale for the use or satisfaction of a particular claim or strategy. More generally, it can be used to justify the change that is the subject of the safety case. |

| Model – A model is some representation of the system, sub-system, or environment (e.g., simulations, data flow diagrams, circuit layouts, state transition diagrams etc.). |
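The completeness rule stated for claims and evidence (every branch must terminate in evidence, and the top-level claim is satisfied only when every bottom-level claim is) can be checked mechanically. The following is a minimal sketch under simplifying assumptions: only goals and evidence references are modeled, strategies, context, and assumptions are left out because they are not part of the logical decomposition, and the `Goal` type and the evidence identifier `E1` are hypothetical. The goal identifiers G1, G1.1, and G1.2 follow the example discussed for Figure 9.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """A GSN goal (claim) with child goals or evidence references."""
    ident: str
    children: list["Goal"] = field(default_factory=list)
    evidence: list[str] = field(default_factory=list)


def open_branches(goal: Goal) -> list[str]:
    """Return identifiers of bottom-level goals that lack evidence."""
    if goal.children:
        found: list[str] = []
        for child in goal.children:
            found.extend(open_branches(child))
        return found
    return [] if goal.evidence else [goal.ident]


def is_satisfied(goal: Goal) -> bool:
    """A goal is satisfied only when no branch beneath it is open."""
    return not open_branches(goal)


g1 = Goal("G1", children=[
    Goal("G1.1", evidence=["E1"]),  # E1 is a hypothetical evidence reference
    Goal("G1.2"),                   # branch not yet terminated in evidence
])
print(open_branches(g1))  # ['G1.2']
print(is_satisfied(g1))   # False
```

The check confirms only that evidence is attached; whether that evidence is appropriate and sufficient remains a matter of judgment.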

2.3. Overview of the assurance process

An assurance process involves several activities that analyze a system and produce an assurance case for consumption by the system stakeholders. The assurance case is the enabling mechanism that aims to show that the system will meet its prioritized requirements and that it will operate as intended in the operational environment, minimizing the risk of being exploited through weaknesses and vulnerabilities. It is a means of identifying, in a structured way, all the assurance components and their relations. As previously stated, those components are presented as claims, arguments, and evidence, where claims trace through to their supporting arguments and from those arguments to the supporting evidence. System assurance is not something radically new; on the contrary, it provides a repeatable and systematic way to perform risk assessment and system analysis, which are established engineering disciplines [Landoll 2006], [Payne 1993]. System assurance also provides guidance to the validation and verification activities as they are performed against the system, as well as against the processes of the risk assessment. Once the assurance assessment is completed, risk assessment can be performed. This process starts by reviewing the claims found to be in breach of compliance. Evidence from noncompliant claims is used to calculate risk and to identify courses of action to mitigate it. Each stakeholder will have their own risk assessment (e.g., security, liability, performance, and regulatory compliance).

System assurance is the way to communicate the findings of risk analysis, system analysis, validation, and verification in a systematic, rational, clear, and convincing way for consumption by the stakeholders (see Figure 10). The key to system assurance is management of knowledge about the system, its mission, and its environment.

Figure 10. Information flow

In addition, system assurance, through its components, offers a powerful and more formal way of modeling and assessing trustworthiness (the degree of formalism depends on whether narrative arguments or well-structured, formalized sub-claims are used as arguments). It brings significant value in the following areas:

• Providing traceability from high-level objectives and policies to system artifacts;

• Bringing automation, repeatability, and objectivity to the assessment process;

• Providing a cross-domain view that brings together all system components, such as functional, architectural, operational, and process components, unlike traditional assessments that focus on one particular view of the system (e.g., CMMI = process view, QA testing = technical view).

2.3.1. Producing confidence

System assurance provides coordinated guidance for multidisciplinary, cross-domain activities that generate facts about the system and use these facts as evidence to communicate the discovered knowledge and transform it into confidence. The aim is to achieve acceptable measures of system assurance and to manage the risk of exploitable vulnerabilities.

The confidence produced using this formal approach can be viewed as a product because it has the following characteristics:

• Measurable – confidence can be measured with results expressed as achieved confidence level of high, medium, or low. Achieved levels are based on findings from system analysis activities.

• Acceptable – the methods that produced the confidence are clear, objective, and as such, acceptable to consumers.

• Repeatable – every time confidence is produced using the same “acceptable” methods on the same set of system artifacts, it would result in the same “confidence level.”

• Transferable – measured level of confidence produced by acceptable and repeatable methods is transferable to its consumers; and as such, confidence can be packaged together with a system as its attribute.
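The measurable and repeatable characteristics can be illustrated with a deterministic scoring function: given the same findings, it always returns the same level. The sketch below is an assumption made for illustration only. The text does not define how levels are derived, so the `confidence_level` function, its inputs (counts of leaf claims and of those supported by evidence), and the 80% threshold are all hypothetical.

```python
def confidence_level(supported: int, total: int) -> str:
    """Map findings to 'high', 'medium', or 'low' (thresholds assumed)."""
    if total <= 0 or not 0 <= supported <= total:
        raise ValueError("need 0 <= supported <= total and total > 0")
    if supported == total:
        return "high"          # every leaf claim is backed by evidence
    if supported * 5 >= total * 4:
        return "medium"        # at least 80% backed, an assumed cut-off
    return "low"


# The same findings always give the same level.
print(confidence_level(10, 10))  # high
print(confidence_level(8, 10))   # medium
print(confidence_level(5, 10))   # low
```

Integer arithmetic is used for the threshold comparison so that the result does not depend on floating-point rounding.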

2.3.2. Economics of confidence

Today, most of the assessment activities that make up assurance processes are informal, subjective, and manual. Comprehensive tooling and agreed-upon assurance content in machine-readable form are lacking, so assessment approaches resist automation. Less automation means a laborious, unpredictable, lengthy, and costly assurance process.

A good example is the Common Criteria (CC) evaluation process [ISO 15408], [Merkow 2005], the IT system certification process for commercial products that will be deployed in government environments. The CC Evaluation Assurance Level of an IT system (a numerical rating from EAL1 through EAL7) reflects the level at which the system was tested to verify that it meets all of its security requirements. The intent of the higher levels is to provide higher confidence that the system's principal security features are reliably implemented; each level covers development of the product with a given degree of strictness, and only the highest levels (EAL5 through EAL7) involve evaluation of formal system artifacts, leading to a costly and laborious evaluation process.

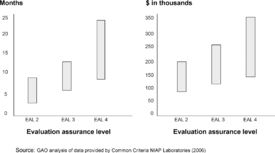

In 2006, the US Government Accountability Office (GAO) published a report on Common Criteria evaluations that gave the range of costs and schedules reported for evaluations performed at levels EAL2 through EAL4 [GAO-06-392 2006]; these are summarized in Figure 11.

Figure 11. Summarized cost of Common Criteria evaluation

While the certification cost for EAL1 through EAL4 is measured in hundreds of thousands of dollars, the cost for EAL5 through EAL7 is measured in millions of dollars. For example, an EAL7 evaluation of an OS separation kernel can cost up to $5M and take up to 2.5 years, which makes it impractical to apply across all systems.

Automation in security assurance would be game changing.

Bibliography

Eurocontrol, Organization for the Safety of Air Navigation, European Air Traffic Management, Safety Case Development Manual, DAP/SSH/091 (2006).

ISO/IEC 15026 Systems and Software Engineering – Systems and Software Assurance, Draft (2009).

ISO/IEC 15408-1:2005 Information Technology – Security Techniques – Evaluation Criteria for IT Security, Part 1: Introduction and General Model (2005).

S.E. Toulmin, An Introduction to Reasoning. (1984) Macmillan, New York, NY.

S.E. Toulmin, The Uses of Argument. (2003) Cambridge University Press, New York, NY.

T.P. Kelly, Arguing Safety – A Systematic Approach to Managing Safety Cases. (1998) University of York; PhD Thesis.

D.J. Landoll, The Security Risk Assessment Handbook. (2006) Auerbach Publications, New York, NY.

M.S. Merkow, J. Breithaupt, Computer Security Assurance Using the Common Criteria. (2005) Thomson Delmar Learning, Clifton Park, NY.

NDIA, Engineering for System Assurance Guidebook (2008).

C.N. Payne, K.N. Froscher, C.E. Landwehr, Toward a Comprehensive Infosec Certification Methodology, Center for High Assurance Computing Systems, Naval Research Laboratory, In: Proc. 16th National Computer Security Conference (1993) NCSC/NIST, Baltimore, MD, pp. 165–172.

ISSEA, SSE-CMM Systems Security Engineering – Capability Maturity Model, 3.0 (2003); http://www.sse-cmm.org/index.html.

S.P. Wilson, T.P. Kelly, J.A. McDermid, Safety Case Development: Current Practice, Future Prospects, In: Proc. Safety of Software Based Systems – Twelfth Annual CSR Workshop (1997); York, England.

SafSec, Integration of Safety & Security Certification (Editors: B. Dobbing, S. Lautieri). (2006) Praxis High Integrity Systems, UK.

U.S. Government Accountability Office, Information Assurance: National Partnership Offers Benefits, but Faces Considerable Challenges, GAO-06-392. (March 2006) Washington, DC.
