Chapter 8. OMG software assurance ecosystem

Abstract

Affordable cost-effective assurance requires a common infrastructure to support discovering system knowledge, exchanging knowledge, integrating knowledge from multiple sources, collating pieces of knowledge, and distributing the accumulated knowledge. This chapter describes the protocols of the OMG Software Assurance Ecosystem, focusing on the use of generic and concrete knowledge and ways to efficiently integrate these units of knowledge within the fact-oriented system model that is utilized for vulnerability analysis. The standard protocol for exchanging system facts defines how concrete system facts can be automatically discovered from various system artifacts by compliant knowledge discovery tools; how cybersecurity facts can be integrated into the system model; and how patterns and checklists can work as queries to the fact repository for the purposes of security posture analysis. Common infrastructure with support for automation is the key to affordable assurance.

Keywords

ecosystem, software assurance ecosystem, information exchange, information exchange protocol, cybersecurity pattern

Hercule Poirot: Remember, Monsieur Fraser, our weapon is our knowledge, but it may be a knowledge we do not know we possess.

—Agatha Christie, The ABC Murders

8.1. Introduction

Affordable cost-effective assurance requires efficiency in discovering system knowledge, exchanging knowledge, integrating knowledge from multiple sources, collating pieces of knowledge, and distributing the accumulated knowledge. Evidence of the effectiveness of the safeguards has to be specific to the system of interest. Therefore, there is a need to discover accurate knowledge about the system of interest and use it together with general units of knowledge such as off-the-shelf vulnerability knowledge, vulnerability patterns, and threat knowledge.

The OMG Assurance Ecosystem involves a rigorous approach to knowledge discovery and knowledge sharing where individual knowledge units are treated as facts. These facts can be described as statements in structured English, using a controlled vocabulary of noun and verb phrases, and can be stored in efficient fact-based repositories or represented in a variety of machine-readable formats, including XML. This fact-oriented approach allows discovery of accurate facts by tools, integration of facts from multiple sources, analysis, and reasoning, including derivation of new facts from the initial facts, collation of facts, and verbalization of facts in the form of English statements. Generic units of knowledge can also be represented as facts and integrated with the concrete facts about the system of interest. This uniform environment industrializes the use of knowledge in system assurance: it allows description of the patterns of facts, sharing of patterns as content, and use of automated tools to search for occurrences of patterns in the fact-based repository. The key to the fact-oriented approach is the conceptual commitment to the common vocabulary, which is the key contract that starts an ecosystem.
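To make the fact-oriented idea more tangible, the following is a minimal sketch in Python, not the OMG representation itself. It assumes that a fact can be approximated as a (noun phrase, verb phrase, noun phrase) triple; the `Fact` type, the verb phrases, and the sample facts are all hypothetical names chosen for illustration. The sketch shows verbalization of facts as English statements and derivation of a new fact from initial facts.

```python
from typing import NamedTuple, Set


class Fact(NamedTuple):
    """One knowledge unit: noun phrase, verb phrase, noun phrase (an assumption)."""
    subject: str
    verb: str
    obj: str


def verbalize(fact: Fact) -> str:
    """Render a fact as a structured-English statement."""
    return f"{fact.subject} {fact.verb} {fact.obj}."


def derive_indirect_reads(facts: Set[Fact]) -> Set[Fact]:
    """Derive new facts: if A calls B and B reads T, then A indirectly reads T."""
    derived = set()
    for call in facts:
        for read in facts:
            if (call.verb == "calls" and read.verb == "reads"
                    and call.obj == read.subject):
                derived.add(Fact(call.subject, "indirectly reads", read.obj))
    return derived


# Hypothetical concrete facts about a system of interest.
repository = {
    Fact("module LoginForm", "calls", "function checkPassword"),
    Fact("function checkPassword", "reads", "table Users"),
}

# Print the initial facts together with the derived ones.
for fact in sorted(repository | derive_indirect_reads(repository)):
    print(verbalize(fact))
```

Because every tool in such a setting would read and write the same triple shape, a derivation rule written once can be applied to facts from any source that commits to the same vocabulary.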

To better explain this industrialized approach to knowledge in the assurance context, let's consider a real-life example that most of us have experienced at one time or another: security screening of bags at airports around the world. Luggage screening is a particular safeguard of the airport security program that utilizes machinery, procedures, and security screeners to check each carry-on bag for anything that could be used as a weapon on board the aircraft. The machinery consists of a conveyor belt for transporting bags to the inspection station, which involves electronic detection and imaging equipment and its operator, the screener. The equipment used is a variation of an X-ray machine that can detect explosives by looking at the density of the items being examined. The screening machine employs Computed Axial Tomography (CAT) technology originally designed for and used in the medical field to generate images (CAT scans) of tissue density in a “slice” through the patient's body. The screening machine uses a library of weapons and explosives patterns and produces a color-coded image to assist screeners with their threat resolution procedures. In other words, general knowledge about weapons and items that could be used as weapons is converted into patterns that these X-ray machines can automatically detect and display when applied to items in the bag. The conceptual commitment of the X-ray machine does not involve a full taxonomy of items in bags, for example, a clothing item, a personal care item, or a personal electronic device. The X-ray machine does not produce a listing of the items in the bag. Instead, the contents of a bag are described as density slices of the material. The density slices of the bag are the concrete knowledge of all items in the bag, and when the bag is scanned by these special X-ray machines, the color-coded images are generated by applying patterns to the density slices. The X-ray machine presents a distilled color-coded image to the screener.

This is an illustration of merging general knowledge and concrete knowledge in a repeatable and systematic automated procedure. It is important to note that all elements of the luggage screening system, including the screening personnel, commit to the same predefined vocabulary of density slices (formed shapes with corresponding density of the item's material), so that patterns can be recognized and properly marked (e.g., metal knife vs. plastic knife, where the metal knife would be colored as a threat, while a plastic knife would be colored as a neutral object according to the agreed-upon color-coding schema). The resulting color-coded images are displayed on the monitor of the security screener, who reviews them and determines a course of action by applying a threat resolution procedure (e.g., bag passed inspection, further manual inspection is needed, bag needs to be removed). Manual screening is limited to situations where the automated images are inconclusive. Manual screening is informal but implies a different conceptualization (the screener looks at the real items around the area where an inconclusive image was produced) and a higher resolution (not just the shape based on the density of material, but the actual shape, color, weight, and so on, of the item).

In terms of system assurance, luggage screening is an operational safeguard that has technical and procedural elements. Understanding the effectiveness of this safeguard contributes to our confidence in the overall airport security posture. However, we use this example to illustrate systematic, repeatable, and fact-oriented inspection methods that involve collaboration based on shared conceptual commitment. The bag under inspection illustrates a system of interest where the concrete items in the bag need to be inspected (what are they?) and assessed (are they dangerous?). There are many ways to conceptualize the bag contents, resulting in concrete facts of the system of interest. Concrete knowledge has to align with the general knowledge that we are using for assurance (such as the concepts of explosives and weapons as particular dangerous items). Our inspection must make repeatable and systematic decisions on whether the bag under inspection contains dangerous items.

Several things must be aligned in order to make this inspection cost effective: machine-readable patterns of dangerous items must be available, machine-readable descriptions of the concrete items in the bag under inspection (the concrete facts) must be available, and automatic pattern recognition must be done. All these components must agree on the same common conceptualization, so that the way security patterns are expressed matches the concrete facts. The contents of the bag are therefore X-rayed and represented as shapes based on the density of the material. The library of dangerous items such as explosives and weapons (e.g., handguns, knives, scissors, etc.) is based on the characteristics of these shapes. At this point, automatic pattern recognition is done, where patterns are used as queries to the normalized representation of the bag's contents. When a match is found between a pattern and facts from the normalized representation, the corresponding color-coded images are generated. The X-ray machine performs concrete knowledge discovery. Both units of knowledge, generic and concrete, are merged using the pattern-matching algorithms of the screening machine. The common conceptualization creates an ecosystem that:

• Integrates CAT technology, X-ray and imaging machines, procedures, and personnel (security screeners), and

• Unifies units of concrete knowledge (density slices of the items in the bag) and generic knowledge (predefined items and patterns in the form of shapes of the corresponding material density) as they are independently merged using certain technology, facilitating their processing to determine if the bag contains items that match given patterns.

Without such an ecosystem, security screeners would need to manually inspect every item in every bag, which would be intrusive to customers, time-consuming, unrepeatable, lengthy, and costly. Manual inspection would require that passengers send bags for inspection days before their scheduled departure.
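The pattern-matching step of the luggage example, where patterns act as queries over a normalized representation, can be sketched as follows. This is an illustration only: the `DensitySlice` and `ThreatPattern` types, the density numbers, and the two-color scheme are assumptions made for the sketch and do not describe how real screening equipment works.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class DensitySlice:
    """Concrete fact about one item in the bag (hypothetical representation)."""
    shape: str
    density: float  # arbitrary illustrative units


@dataclass(frozen=True)
class ThreatPattern:
    """Generic knowledge: a dangerous item expressed in the same vocabulary."""
    name: str
    shape: str
    min_density: float


def color_code(slices: List[DensitySlice],
               patterns: List[ThreatPattern]) -> List[Tuple[DensitySlice, str]]:
    """Use each pattern as a query; mark a slice 'red' if any pattern matches."""
    coded = []
    for item in slices:
        matched = any(p.shape == item.shape and item.density >= p.min_density
                      for p in patterns)
        coded.append((item, "red" if matched else "neutral"))
    return coded


# Library of patterns (generic knowledge), with made-up threshold values.
patterns = [ThreatPattern("metal knife", "blade", 7.0)]

# Scan result for one bag (concrete knowledge), with made-up density values.
bag = [
    DensitySlice("blade", 7.8),     # dense blade: matches the metal-knife pattern
    DensitySlice("blade", 1.2),     # light blade: e.g. a plastic knife
    DensitySlice("cylinder", 1.0),  # no pattern refers to this shape
]

for item, color in color_code(bag, patterns):
    print(item.shape, item.density, color)
```

The match is only possible because the patterns and the scan results share the same two attributes, shape and density, which is the role the common conceptualization plays in the text.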

8.2. OMG assurance ecosystem: toward collaborative cybersecurity

An ecosystem is a community of participants who engage in exchanges of content using an explicit shared body of knowledge. An ecosystem involves a certain communication infrastructure, or “plumbing,” that includes a number of standard protocols implemented by using knowledge-management tools. Each protocol is based on a certain conceptualization that defines the corresponding interchange format. An ecosystem opens up a market where participants exchange tools, services, and content in order to solve significant problems. The essential characteristic of an ecosystem is establishment of knowledge content as an explicit product, separated from producer and consumer tools, and delivered through well-defined protocols, in order to enable economies of scale and rapid accumulation of actionable knowledge. It is important to establish such an ecosystem for assurance in order to provide defenders of cybersystems with systematic and affordable solutions.

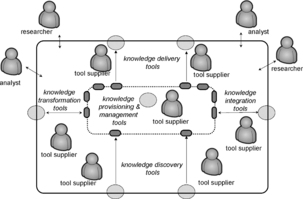

Figure 1 describes the key participants of the Assurance Ecosystem and the knowledge exchanges between them.

Figure 1. Knowledge exchange between assurance participants

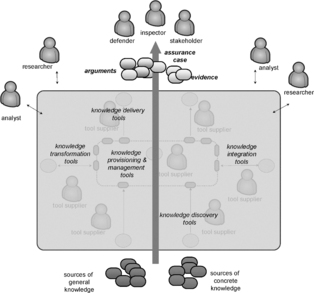

The purpose of the ecosystem is to facilitate the collection and accumulation of assurance knowledge, and to ensure its efficient and affordable delivery to the defenders of cybersystems, as well as to other stakeholders. An important feature of cybersecurity knowledge is separation between the general knowledge (applicable to large families of systems) and concrete knowledge (accurate facts related to the system of interest). To emphasize this separation, a certain area at the top of the ecosystem “marketplace” in the middle of the diagram represents concrete knowledge, while the rest represents general knowledge. Keep in mind that “concrete” facts are specific to an individual system. Thus, the “concrete knowledge” area represents multiple local cyberdefense environments, while the “general knowledge” area is the knowledge owned by the entire cyberdefense community. So the “defender,” “inspector,” and “stakeholder” icons represent people who are interested in providing assurance of one individual system.

Figure 1 also illustrates exchanges in the Assurance Ecosystem and the corresponding protocols. Exchanges are indicated by arrows. Participants in the ecosystem are shown by two kinds of icons. The first icon, the “actor” icon, represents a certain class of participants involved in the given information exchange. A single “actor” icon represents the entire class of real participants that can be involved in multiple concurrent interactions. The second icon—a grey oval—represents a tool. The ecosystem figure shows two clusters of knowledge discovery tools. As is true of participants, each cluster represents multiple real tools. Icons that look like a rectangle with rounded corners represent the units of knowledge either as the sources or as the results of exchanges within the ecosystem. Figure 1 illustrates important classes of information exchanges, differentiated by the classes of the ecosystem participants.

Let's see how the Assurance Ecosystem enables the defense team.

Knowledge delivery protocols. The purpose of the ecosystem, the tools, and the “plumbing,” is to deliver knowledge to the defense team, as is illustrated by Flows #10 and #11. Flow #10 delivers concrete facts about the system and its environment, which constitute the evidence of the assurance case. Flow #11 delivers general knowledge, which is used largely to develop the argument. The standards of the Assurance Ecosystem define the protocols for delivering the argument [ARM] and the evidence [SAEM].

System analysis protocols. Information Flows #9 and #8 illustrate the process of system analysis. This process is guided by the overall assurance argument and works with concrete facts about the system. The analyst starts with the top-level assurance claims and develops the subclaims, guided by analysis of the architecture of the system. The analyst requests a certain kind of analysis to be performed on the system in order to produce evidence to the subclaims. The analyst is also the source of some unique facts, such as the threat model and the value statements for the assets of the system. Usually, there is no machine-readable source of these facts, so the analyst's role is to collect this data. Flow #9 illustrates how these facts are added to the “concrete knowledge” repository.

General knowledge protocol. In the process of building the assurance case, general knowledge is utilized, as illustrated by Flow #7. For example, in order to build the threat model of the system, the analyst needs to know about recent incidents involving similar systems. The threat model is an example of concrete knowledge that is specific to the system of interest, while the database of security incidents searchable by the system features (the attacker's motivation, capability, and the resulting injury) is an example of general cybersecurity knowledge. One of the central concerns addressed by the OMG Assurance Ecosystem is defining efficient mechanisms for utilizing general cybersecurity knowledge by the analyst and the defense team (Flow #7). Building shared conceptual commitment in the form of a common vocabulary is described in Chapter 9.

Concrete knowledge discovery protocol. The majority of concrete cybersecurity facts are provided by automated tools, as indicated by Flow #4. The key protocol of the OMG Assurance Ecosystem is the standard protocol for exchanging system facts, called the Knowledge Discovery Metamodel (KDM), described in Chapter 11. There are several important scenarios here, all illustrated by Flow #3, followed by Flow #4. Each scenario is characterized by a unique source of knowledge and the corresponding class of knowledge discovery tools.

1. Accessing existing repositories that contain facts required for assurance. The role of the knowledge discovery tool in this scenario is to provide an adaptor that will bridge the format of the existing repository to the format of the ecosystem facts. For example, the ITIL repository contains information related to the system's assets; the architecture repository contains operational and system views, as well as the data dictionaries; the human resources database contains information about personnel; business models contain information about the business rules; the problem report tracking system contains information about the vulnerabilities; and the network management system contains facts related to the network configuration of the system and configuration settings, such as the firewall configurations. An efficient assurance process must utilize any available units of data in order to avoid mistakes arising from incorrect interpretation of manually collected data, to access the latest snapshot, and to allow updates during subsequent assessments.

2. Discovering network configuration. The role of the “knowledge discovery tool” in this scenario is to scan the network and map the hosts, the network topology, and the open ports. The source of concrete knowledge is the network itself.

3. Discovering information from system artifacts, such as code, database schemas, and deployment configuration files. The role of the knowledge discovery tool is to perform application mining. This activity includes mining for basic behavior and architecture facts, as well as the automated detection of vulnerabilities, determined by known vulnerability patterns, also known as static code analysis. Some classes of application mining tools work with source code, while others work with machine code. The database structure can be discovered from accessing the data definition file (source code) or from querying the database itself.

4. Discovering facts from informal documents, such as requirements, policy definitions, and manuals. The role of a knowledge discovery tool in this scenario is to assist in linguistic analysis of the document and to extract the facts relevant to security assurance of the system.
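The adaptor role described in the first scenario can be illustrated with a small sketch. It assumes a hypothetical asset inventory exported as CSV with the columns `asset`, `host`, and `owner`, and it assumes facts are simple (subject, verb phrase, object) triples; neither the column names nor the triple format comes from the KDM standard.

```python
import csv
import io
from typing import List, Tuple

FactTriple = Tuple[str, str, str]


def assets_to_facts(csv_text: str) -> List[FactTriple]:
    """Bridge rows of a (hypothetical) asset inventory into fact triples."""
    facts = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Each column of the source record becomes one statement about the asset.
        facts.append((row["asset"], "is hosted on", row["host"]))
        facts.append((row["asset"], "is owned by", row["owner"]))
    return facts


# Made-up export from an existing repository.
sample_inventory = "asset,host,owner\npayroll-db,srv-01,finance\n"

for subject, verb, obj in assets_to_facts(sample_inventory):
    print(subject, verb, obj)
```

An adaptor of this kind can be rerun whenever the source repository changes, which is what makes it possible to work from the latest snapshot rather than from manually transcribed data.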

Content import protocol. Knowledge discovery, especially the process of detecting vulnerabilities, is often driven by general knowledge (indicated as Flow #6). For example, a NIST SCAP vulnerability scanner tool is driven by vulnerability descriptions from the National Vulnerability Database (NVD). An antivirus tool is driven by virus patterns.

Integration protocol. Flow #5 represents the integration scenario, which is also quite central to the purpose of the Assurance Ecosystem. As you can see, the facts related to the security of the system can come from various sources, where each source describes a partial view of the system. Integrating these pieces of knowledge into a single coherent picture facilitates system analysis. The integration process is illustrated by Flow #5 followed by Flow #4 back into the repository. The role of the tool in this scenario is to identify common entities and to collate the facts from multiple sources. This is a form of knowledge discovery because integration produces new facts, describing the links between different views. Knowledge integration does not use any external sources of knowledge (Flow #3 is not utilized).
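As a rough sketch of integration, suppose one source is a network scan and another is an asset inventory, both already expressed as (subject, verb phrase, object) triples. The verb phrases, host names, and addresses below are hypothetical. The common entity is the host name, and the integration step produces a new linking fact that neither source contained on its own.

```python
from typing import Set, Tuple

FactTriple = Tuple[str, str, str]


def integrate(scan_facts: Set[FactTriple],
              inventory_facts: Set[FactTriple]) -> Set[FactTriple]:
    """Collate two partial views and add facts that link them."""
    # Identify common entities: host names known to the scan, keyed to addresses.
    hostname_to_address = {obj: subj for (subj, verb, obj) in scan_facts
                           if verb == "has hostname"}
    merged = set(scan_facts) | set(inventory_facts)
    for asset, verb, host in inventory_facts:
        if verb == "is hosted on" and host in hostname_to_address:
            # New fact describing the link between the two views.
            merged.add((asset, "is reachable at", hostname_to_address[host]))
    return merged


# Made-up facts from two independent sources.
scan = {
    ("10.0.0.5", "has hostname", "srv-01"),
    ("10.0.0.5", "has open port", "5432"),
}
inventory = {("payroll-db", "is hosted on", "srv-01")}

for fact in sorted(integrate(scan, inventory)):
    print(fact)
```

Note that the function takes only facts already in the repository as input, which mirrors the point that integration does not draw on external sources.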

Knowledge refinery protocol. A similar process, also described by Flow #5 that is followed by Flow #4 back into the repository, is called knowledge refinery—the process of deriving new facts from existing facts. This process often involves general facts (Flow #6) as well as concrete facts (Flow #5) as inputs. The knowledge refinery process by which additional concrete facts are produced (Flow #4) from the primitive facts (Flow #5) and general patterns (Flow #6) by a knowledge refinery tool is one possible implementation of Flow #7.
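The refinery step, in which general patterns and primitive concrete facts together yield additional concrete facts, can be sketched as below. The pattern format, the identifier `EXAMPLE-VULN-1`, and the software names are invented for illustration and do not correspond to entries in any real vulnerability database.

```python
from typing import Dict, List, Set, Tuple

FactTriple = Tuple[str, str, str]


def refine(concrete_facts: Set[FactTriple],
           vulnerability_patterns: List[Dict[str, str]]) -> Set[FactTriple]:
    """Derive new concrete facts by applying general patterns to primitive facts."""
    derived = set()
    for host, verb, software in concrete_facts:
        if verb != "runs":
            continue  # only 'runs' facts are relevant to these patterns
        for pattern in vulnerability_patterns:
            if pattern["affects"] == software:
                derived.add((host, "is exposed to", pattern["id"]))
    return derived


# Primitive concrete facts (Flow #5), made up for the example.
concrete = {
    ("srv-01", "runs", "exampled 1.0"),
    ("srv-02", "runs", "exampled 2.0"),
}

# General knowledge (Flow #6): a hypothetical vulnerability description.
general = [{"id": "EXAMPLE-VULN-1", "affects": "exampled 1.0"}]

# Derived concrete facts that would flow back into the repository (Flow #4).
for fact in sorted(refine(concrete, general)):
    print(fact)
```

The derived facts are specific to the system of interest even though the pattern that produced them is generic, which is why this process can serve as one way of putting general knowledge to use for the analyst.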

Knowledge sharing protocol. The last but definitely not the least important information flow on the right side of the diagram is Flow #2, which describes how the Assurance Ecosystem influences tool suppliers to develop better and more efficient knowledge discovery tools. The direct benefit to the defense team comes from the use of efficient knowledge discovery tools, so the loop by which the general cybersecurity knowledge flows to the suppliers of the knowledge discovery tools is very important to the purpose of the Assurance Ecosystem.

Knowledge creation protocol. Moving to the left side of the Assurance Ecosystem diagram, the information Flow #1 describes the primary source of cybersecurity knowledge. All general knowledge is produced by security researchers through analysis of systems, security incidents, malware, experience reports, and so on. The role of the Assurance Ecosystem is to define efficient interfaces in support of Flow #1 and to enable the accumulation of content and its delivery to the consumers. The OMG Assurance Ecosystem builds upon the existing best practices of information exchanges within the cybersecurity community and brings modern technology to define the next generation of open-standards-based collaborative cybersecurity.

Flows #12, 13, 14, and 15 conclude the picture.

Bridging protocol. Existing sources of general cybersecurity knowledge include any existing repositories that do not comply with the “plumbing” standards of the Assurance Ecosystem. This includes the current NVD [NVD], US-CERT [US-CERT], the Open Source Vulnerability Database [OSVDB], the Bugtraq list, proprietary repositories of the intrusion detection tools, risk management systems and their proprietary repositories, and the like. Information Flow #13, followed by #14, illustrates how this content is bridged into the Assurance Ecosystem. The role of the knowledge discovery tool in this scenario is to provide an adaptor between the formats.

Knowledge-sharing protocol. Flow #12 is the counterpart of Flow #2 and illustrates how the knowledge accumulated and distributed within the Assurance Ecosystem can influence tool suppliers, resulting in additional and more efficient data feeds into the Ecosystem.

Bridging protocol. Flow #15 concludes the picture. This flow illustrates how information from the Assurance Ecosystem can be distributed to outside communities, including tools that use proprietary formats and ecosystems built upon different information exchange standards.

Protocols of the Assurance Ecosystem are contracts that ensure interoperability between participants, including tools and people. An implementation of the Assurance Ecosystem provides the “plumbing” for the information exchanges and facilitates collaborations, leading to rapid accumulation of common knowledge. There is ample evidence that standards-based ecosystems jump-start vibrant communities and remove barriers to entry into the market of tools, services, and content, which attracts new talent. As a result, the information content is created and shared, while the quality of the content rapidly improves through repeated use by tools and by competing peers. Exactly this kind of environment for cybersecurity is needed to close the knowledge gap between attackers and defenders. So, what is represented as a cluster of knowledge discovery tools in the Assurance Ecosystem diagram is a hub-and-spoke knowledge-driven integration architecture that defines plug-and-play interfaces for tools. The interfaces are determined by the information exchange protocols (see Figure 2).

Figure 2. Knowledge-driven integration

In a physical sense, the tools in the Assurance Ecosystem provide interfaces to people who work with tools. The Assurance Ecosystem defines the logical interfaces that are provisioned by the compliant tools (see Figure 3).

Figure 3. Logical interfaces provisioned by the compliant tools

The purpose of the collaborations is to provide an integrated end-to-end solution to performing assessments of systems and delivering assurance to system stakeholders (see Figure 4).

Figure 4. End-to-end solution delivering assurance to system stakeholders

Bibliography

[ARM] Object Management Group, Argumentation Metamodel (ARM), 2010.

[NVD] National Vulnerability Database (NVD), http://nvd.nist.gov/home.cfm.

[OSVDB] OSVDB, The Open Source Vulnerability Database, http://osvdb.org.

[SAEM] Object Management Group, Software Assurance Evidence Metamodel (SAEM), 2010.

[US-CERT] US-CERT, United States Computer Emergency Readiness Team, http://www.us-cert.gov.
