Enterprise Data Management

Abstract

Data are the means to coordinate activity, record actions and responsibilities, solve problems, develop plans, and measure performance. Every business activity has supporting data that, in context, are information about the business. This chapter describes a data management architecture that is appropriate for the agile enterprise, to support the integration of the building blocks, recognize relevant events and trends, capture and share knowledge, and evaluate performance and value delivery. The architecture includes management of master data, business metadata, and other key enterprise data services.

Keywords

Data management architecture; Master data; Data modeling; Business metadata; Data services; Data ownership; Legal records; Data residency; Analytics; Knowledge management; Enterprise logical data model

Data are the lifeblood of the enterprise. Without data there can be no coordinated activity, no record of accomplishments, no ability to solve problems, and no plans for the future. Management of data is a critical responsibility and becomes increasingly critical as enterprises become international and require greater agility.

Data are the elemental form of enterprise information and knowledge. When presented in a useful form and context, data become information. When that useful form provides insights on experience, behavior, and solutions, it becomes knowledge. In this chapter, we are concerned with the management of data, involving capture, validation, transformation, storage, communication, and coordination of operations to provide information and knowledge in the pursuit of enterprise objectives. While data exist in many forms—on paper, in conversations, as measurements—here we are concerned with data in electronic form, communicated, stored, and computed electronically.

In this chapter, we will focus primarily on the data management architecture (DMA) for the agile enterprise. We will then discuss metadata—data about data—that is important to the structure, meaning, and credibility of data. Finally, we will consider a general approach to enterprise transformation to implement the DMA.

Data Management Architecture

The DMA defines the components and relationships that participate in the creation, storage, communication, and presentation of data in an agile enterprise. In the agile enterprise, data are stored in distributed databases that are contained and maintained by associated service units. All accesses to a database are performed by its service unit. A service unit is the master source of some of the data in its database; it may replicate some of its data from other service units, and it may manage some data for its internal operations. Data are shared primarily through the exchange of messages over the Intranet.

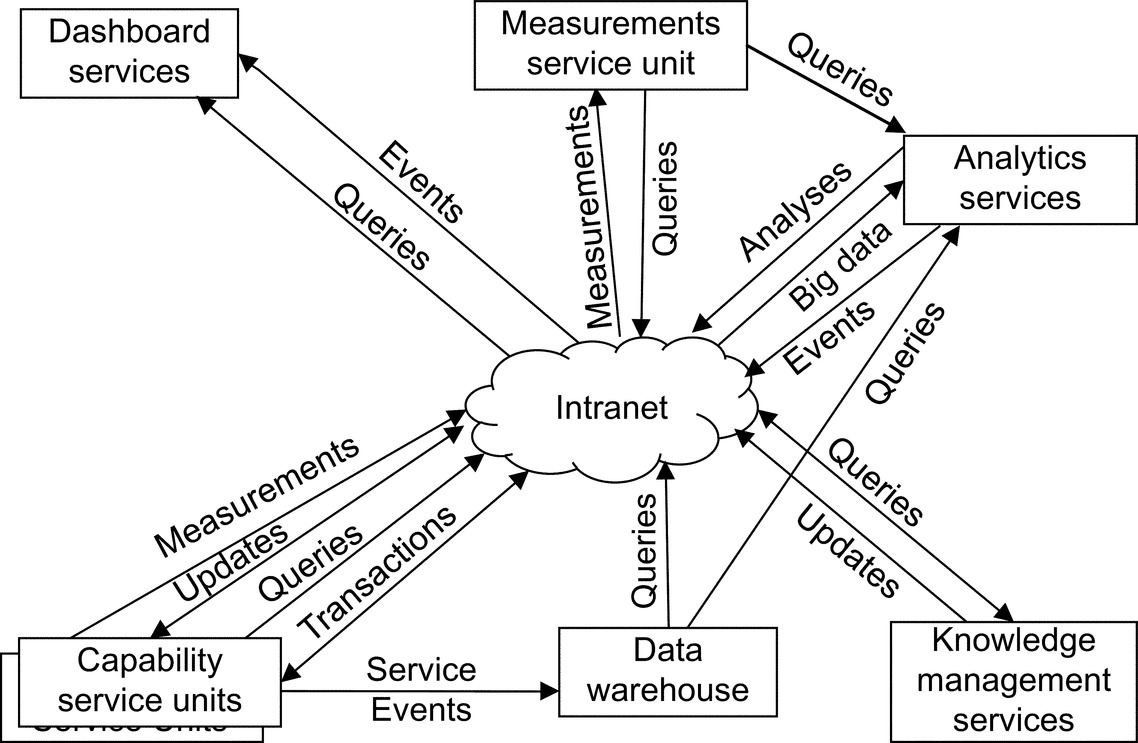

An overview of the architecture is depicted in Fig. 6.1. All of the rectangles represent service units. The underlying technical infrastructure is defined in Chapter 3, and details of notification requirements are discussed in Chapter 8. In the following sections, we will begin with a discussion of the general patterns and principles associated with this architecture, followed by discussions of each of the components depicted in Fig. 6.1.

General Patterns and Principles

There are several general patterns and principles that are key to the operation of the agile data architecture:

• Data ownership

• Data encapsulation

• Service unit groups

• Data sharing

• Access locking

• Legacy systems

• Outsourcing

• Legal records

• Data compatibility

• Export regulation

These are discussed in the sections that follow.

Data Ownership

Each database is managed by a service unit that has primary responsibility for the security, integrity, recoverability, and residency (geographical location) of the data. The database contains data for which the service unit is the master source, along with data obtained from other service units and data created locally to support the service unit's operation.

The agile enterprise will have many service units and thus many databases. The larger number of databases and the greater frequency of shared data exchanges are made practical by Internet technology, advances in middleware technology, and increased communications bandwidth.

The master data of a service unit may be data on a specific subject, or it may be a subset of data of a particular subject. For example, order management may be performed by different implementations of an order management service unit that serves different countries. Each service unit is then the master source for orders originated in its assigned geographical area.

Data Encapsulation

Every database or other form of data storage is accessed only by the service unit that owns it. This hides the particular data storage structure and technology from other users of the data, and it provides the opportunity to control access and the integrity of updates to the data. Every service unit has associated shared data and includes the services necessary for accessing the data and accepting updates.

Data elements for widely referenced business entities, such as customers, suppliers, employees, products, and the organization structure, each may be maintained in one or more independent service units.

Service Unit Groups

Service units may be clustered in a service unit group where they provide similar capabilities for economies of scale in the management of resources and technology. A group may share a database. When a database is shared by multiple service units, each of the supported service units has ownership responsibility and control for a portion of the database.

The service units of a service unit group must be managed by a common parent organization, which is responsible for the sharing of data between the service units. Grouping may improve performance by allowing the service units to share data without replication, which would require coordination of updates. Nevertheless, the group should consist of service units with similar capabilities that together represent a more general capability.

Data Sharing

A service unit will respond to authorized queries against its master data, and it will honor requests for events to be generated when selected master data elements change. These change notices may be generated by actions of the service unit, but they can be managed more effectively if they are initiated by database triggers.

Master data may be requested from a service unit as needed, but for operating efficiency, a receiving service unit may store and maintain a replica of selected data obtained from the master source. Notices of change will keep the replicated data current (see notification service discussion in Chapter 8). The use of replication of data will depend on the frequency at which a receiving service unit must access the data, the frequency of updates to the data, and the volume of data that meet the recipient requirements.
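The replication pattern can be sketched in a few lines of Python. This is a minimal, in-process illustration under stated assumptions: the class names `MasterServiceUnit` and `ReplicaServiceUnit` are hypothetical, and direct callbacks stand in for the notification service of Chapter 8. Only the master applies updates; it sends a versioned change notice to each subscriber that asked about that record, and the receiving service unit keeps just the records it selected.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class ChangeNotice:
    key: str        # identifier of the master record that changed
    value: dict     # new content of the record
    version: int    # increases with every update, so stale copies can be detected


class MasterServiceUnit:
    """Owns the master copy; all updates go through this service unit (hypothetical)."""

    def __init__(self) -> None:
        self._records: Dict[str, dict] = {}
        self._versions: Dict[str, int] = {}
        self._subscriptions: List[Tuple[Set[str], Callable[[ChangeNotice], None]]] = []

    def subscribe(self, keys: Set[str], callback: Callable[[ChangeNotice], None]) -> None:
        """Honor a request for change notices on selected master records."""
        self._subscriptions.append((set(keys), callback))

    def query(self, key: str) -> dict:
        return dict(self._records[key])

    def update(self, key: str, value: dict) -> None:
        self._records[key] = dict(value)
        self._versions[key] = self._versions.get(key, 0) + 1
        notice = ChangeNotice(key, dict(value), self._versions[key])
        for keys, callback in self._subscriptions:
            if key in keys:
                callback(notice)


class ReplicaServiceUnit:
    """Keeps a local replica of selected master data, refreshed by change notices."""

    def __init__(self, master: MasterServiceUnit, keys_of_interest: Set[str]) -> None:
        self.replica: Dict[str, ChangeNotice] = {}
        master.subscribe(keys_of_interest, self.on_change)

    def on_change(self, notice: ChangeNotice) -> None:
        self.replica[notice.key] = notice


master = MasterServiceUnit()
production = ReplicaServiceUnit(master, {"order-17"})
master.update("order-17", {"line_items": 3})
master.update("order-18", {"line_items": 1})  # not selected, so not replicated
```

Whether a receiving unit replicates at all, rather than calling `query` on demand, depends on the access frequency, update frequency, and data volume noted above.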

Note that a service unit may associate local data with a business data entity without necessarily sharing all the data associated with the business entity. For example, the line items of an order might be replicated in the production service unit, while the status and operational detail associated with each line item remain local to the production service unit.

Access Locking

If synchronization is critical, requests to the master data service may require that data be retrieved from the master service unit with a lock (or “check-out” and later “check-in”) that prevents changes to the locked data until the requester has completed the associated operation and releases the lock. A service unit may store data from another service unit without locking to reduce the need for frequent retrievals and locking of stable data elements. However, without locking, there is a risk that the retrieved copy will fall out of synchronization with the master, so that one operation relies on the obsolete copy while another relies on the updated version. Notices of changes provide the opportunity to update the copy, but the recipient may already have relied on obsolete data.
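A check-out/check-in discipline might look like the following sketch. It assumes a single master service unit guarding its records with an in-process mutex; `LockingMaster`, `check_out`, `check_in`, and `LockError` are hypothetical names. A conflicting request is rejected immediately rather than queued, which is one way to limit the lock-out effects discussed next.

```python
import threading
from typing import Dict, Optional


class LockError(Exception):
    """Raised when a request conflicts with a lock held by another requester."""


class LockingMaster:
    def __init__(self) -> None:
        self._data: Dict[str, int] = {}
        self._holders: Dict[str, str] = {}   # record key -> requester holding the lock
        self._mutex = threading.Lock()       # protects _data and _holders

    def _check_free(self, key: str, requester: str) -> None:
        holder = self._holders.get(key)
        if holder is not None and holder != requester:
            raise LockError(f"{key} is checked out by {holder}")

    def update(self, key: str, requester: str, value: int) -> None:
        """Ordinary update; refused while another requester holds the lock."""
        with self._mutex:
            self._check_free(key, requester)
            self._data[key] = value

    def check_out(self, key: str, requester: str) -> int:
        """Retrieve the current value and lock it against changes by others."""
        with self._mutex:
            self._check_free(key, requester)
            self._holders[key] = requester
            return self._data[key]

    def check_in(self, key: str, requester: str, new_value: Optional[int] = None) -> None:
        """Optionally write a new value, then release the lock."""
        with self._mutex:
            if self._holders.get(key) != requester:
                raise LockError(f"{requester} does not hold the lock on {key}")
            if new_value is not None:
                self._data[key] = new_value
            del self._holders[key]
```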

Locks should be avoided if at all possible due to the risk of performance consequences to other services that would be locked out and the risk that interactions of multiple lock requests could result in a deadlock.

For example, suppose A obtains a lock on X and B obtains a lock on Y. B then requests a lock on X, which is suspended pending release by A, and A requests a lock on Y, which is suspended pending release by B. Both A and B are now suspended, each waiting for the other to act, and neither can proceed.
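A lock manager can detect the situation just described by maintaining a wait-for graph, in which an edge from A to B means that A is waiting for a lock held by B; a deadlock is a cycle in that graph. The sketch below is illustrative, and the function names are hypothetical. A common alternative is prevention: if every requester acquires locks in one agreed order (for example, X before Y), the cycle in the example cannot form.

```python
from typing import Dict, List, Set


def wait_for_graph(holders: Dict[str, str], pending: Dict[str, str]) -> Dict[str, Set[str]]:
    """Build requester -> {holders it waits on} from lock holders and pending requests.

    holders maps a resource to the requester that holds its lock;
    pending maps a suspended requester to the resource it is waiting for.
    """
    graph: Dict[str, Set[str]] = {}
    for requester, resource in pending.items():
        holder = holders.get(resource)
        if holder is not None and holder != requester:
            graph.setdefault(requester, set()).add(holder)
    return graph


def find_deadlock(graph: Dict[str, Set[str]]) -> List[str]:
    """Return one cycle of requesters (first == last), or [] if there is none."""
    on_path: List[str] = []   # current depth-first search path
    finished: Set[str] = set()

    def visit(node: str) -> List[str]:
        on_path.append(node)
        for nxt in sorted(graph.get(node, ())):
            if nxt in on_path:                      # back edge: cycle found
                return on_path[on_path.index(nxt):] + [nxt]
            if nxt not in finished:
                cycle = visit(nxt)
                if cycle:
                    return cycle
        on_path.pop()
        finished.add(node)
        return []

    for start in sorted(graph):
        if start not in finished:
            cycle = visit(start)
            if cycle:
                return cycle
    return []


# The scenario above: A holds X, B holds Y; B waits for X and A waits for Y.
graph = wait_for_graph(holders={"X": "A", "Y": "B"}, pending={"B": "X", "A": "Y"})
print(find_deadlock(graph))  # ['A', 'B', 'A']
```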

Legacy Systems

Some service units may be implemented as interfaces to legacy systems or purchased software that has not been designed for compatibility with the enterprise architecture. Data from these different sources must be accessible and combinable to provide a consistent view of enterprise operations. The service unit must provide an interface appropriate to its services and the data that are associated with it as a master data owner. The service unit(s) must ensure that the legacy system respects the ownership of master data of other service units. Compatibility is achieved through message transformation, discussed in Chapter 3.

Outsourcing

Services may be outsourced at a variety of levels of granularity. For example, a service could be as simple as a stock market query about a current stock price, or it may be outsourcing of a complex set of services that provide a major portion of the human resource management business function. Where the outsourcing represents a substantial business function, it includes management of enterprise master data, such as employee records in the case of human resource management outsourcing, and it may involve one or more external service units. Those service units, for the most part, will be capability service units, delivering business services, as discussed later.

The objectives for outsourced services should be the same as for corresponding internal service units. Services should have well-defined interfaces that are independent of whether the services are internal or outsourced. If the outsourcing is a substantial business function, it conceptually includes a number of service units characterized as service unit groups.

If the outsourcing provider implements best practices, the service interfaces should be consistent with service interfaces that its competitors either have or will have in the future. The outsourcing provider must provide access to its service units for sharing data that are needed or provided by other users within the client enterprise. For example, in the case of HR outsourcing, the client will need access to employee records along with a variety of services related to employment, benefits, compensation, and so forth, depending on the scope of the outsourcing.

Implementation of business capabilities as services should minimize the changes necessary for implementation of outsourcing. However, management of master data by the outsourced services must be reconciled with the internal service units that share that data. The internal organization responsible for management of the outsourcing relationship must have ownership responsibility for the outsourced master data.

The interfaces of the outsourced service unit(s) must continue to be supported for access to the outsourced capabilities and data. It may be necessary for the interface implementation to generate events and manage locks for changes to shared master data, and process updates for data from other sources.

Legal Records

The enterprise will receive and create electronic records that have legal effect, including internal records that involve authorization of transfers of assets or expenditures. These records should be represented in XML with electronic signatures. Some will also be encrypted for confidentiality. XML is discussed further later in connection with message exchange. See the discussion of encryption and electronic signatures in Chapter 7.

These records must be preserved in their original form for the signatures and encryptions to remain valid. It is the responsibility of the service unit that creates the record or receives the record from an external source to preserve the original form along with a decrypted copy for internal sharing and processing. Some records will be cumulative, with cumulative signatures. Each extended record must be viewed as a new version of the record.
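The preservation rule can be sketched as an append-only store. This is a minimal sketch under stated assumptions: `LegalRecordStore` and its methods are hypothetical; the original signed XML is kept as the exact bytes received and never re-serialized, since reformatting could invalidate the signature; a decrypted working copy is kept alongside it; and each cumulative extension is appended as a new version. The SHA-256 digest only detects accidental alteration of the stored bytes; it does not replace verification of the electronic signatures discussed in Chapter 7.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RecordVersion:
    version: int
    original: bytes       # exact bytes as signed/encrypted; never re-serialized
    working_copy: bytes   # decrypted copy for internal sharing and processing
    digest: str           # SHA-256 of the original bytes, computed at capture


class LegalRecordStore:
    """Append-only store: an extended record becomes a new version, never an edit."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[RecordVersion]] = {}

    def preserve(self, record_id: str, original: bytes, working_copy: bytes) -> RecordVersion:
        history = self._versions.setdefault(record_id, [])
        entry = RecordVersion(
            version=len(history) + 1,
            original=original,
            working_copy=working_copy,
            digest=hashlib.sha256(original).hexdigest(),
        )
        history.append(entry)
        return entry

    def history(self, record_id: str) -> List[RecordVersion]:
        return list(self._versions.get(record_id, []))

    def is_intact(self, record_id: str, version: int) -> bool:
        entry = self._versions[record_id][version - 1]
        return hashlib.sha256(entry.original).hexdigest() == entry.digest
```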

Data Compatibility

Throughout the enterprise, shared data must be compliant with a shared enterprise logical data model (ELDM), discussed further in the later section on metadata. The content of exchanged messages must be consistent with the ELDM, but differences in message format can be reconciled by the message exchange middleware discussed in Chapter 3. A service unit may use different internal formats for shared data and manage additional data elements and structures that are not shared.

However, shared data must not only have consistent elements and semantics (the meanings) but also represent a consistent point or period in time. Conventional enterprise applications control the relationships between the data they manage so that, within the scope of the enterprise application, data retrieved for a particular view are consistent. In contrast, service units are loosely coupled and must ensure that their database elements, particularly measurements, are consistent in time.

For example, some measurements may be accumulated weekly while others are accumulated monthly. Because weeks do not align with month boundaries, these measurements cannot be converted from one interval to the other.
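A quick check with Python's standard `datetime` module shows the misalignment. The helper below (a hypothetical name) counts how many days of a seven-day week fall in each calendar month. The week beginning Monday, January 29, 2024 has three days in January and four in February, so a weekly total for that week cannot be assigned to either month. Splitting it 3/7 and 4/7 would assume activity was uniform across the week, which is an estimate rather than a conversion; reconciling the two series would require the underlying daily data.

```python
from datetime import date, timedelta
from typing import Dict, Tuple


def month_share_of_week(week_start: date) -> Dict[Tuple[int, int], int]:
    """Count the days of the 7-day week starting at week_start in each (year, month)."""
    counts: Dict[Tuple[int, int], int] = {}
    for offset in range(7):
        day = week_start + timedelta(days=offset)
        counts[(day.year, day.month)] = counts.get((day.year, day.month), 0) + 1
    return counts


print(month_share_of_week(date(2024, 1, 29)))  # {(2024, 1): 3, (2024, 2): 4}
```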

Export Regulation

Enterprises often have operations in multiple countries. Many countries have laws or regulations that restrict the export of certain categories of data across their borders. This is particularly true for data related to personal privacy. The development of cloud computing has added the risk that such data could be deployed to a cloud computing node in a different country for workload balancing or to compensate for failure of another node.

Standards for control of data residency are currently under consideration in the OMG (Object Management Group). One approach could be to tag potentially sensitive data so that restrictions can be applied by the message exchange service based on the regulatory requirements affecting communication from the sender location to the receiver location. An attempt to send a message in violation of an export regulation should be detected at the sender. Of course, this also requires that the message exchange facility know the current geographic locations of the sender and the receiver.
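The tagging approach might be sketched as follows. Everything here is an assumption for illustration: the tag names, the node-to-country mapping, and the rule table are hypothetical and do not describe any country's actual regulations or any pending OMG standard. The check runs at the sender, before delivery, and resolves each node's country at send time, so a workload moved to a cloud node in another country is evaluated by where it is now.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Set, Tuple

# Hypothetical rules: (data tag, origin country) -> countries allowed to receive it.
# Tags without a rule for the origin country are unrestricted in this sketch.
RESIDENCY_RULES: Dict[Tuple[str, str], Set[str]] = {
    ("personal", "DE"): {"DE", "FR", "NL"},
}


class ExportViolation(Exception):
    """Raised at the sender when a message would breach a residency rule."""


@dataclass(frozen=True)
class Message:
    sender_node: str
    receiver_node: str
    tags: FrozenSet[str]   # sensitivity tags attached to the data in the message
    body: str


def send(message: Message,
         node_locations: Dict[str, str],
         rules: Dict[Tuple[str, str], Set[str]],
         deliver: Callable[[Message], None]) -> None:
    # Look up current locations at send time; nodes may have moved.
    origin = node_locations[message.sender_node]
    destination = node_locations[message.receiver_node]
    for tag in message.tags:
        allowed = rules.get((tag, origin))
        if allowed is not None and destination not in allowed:
            raise ExportViolation(f"'{tag}' data may not go from {origin} to {destination}")
    deliver(message)
```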

Capability Service Units

A capability service unit implements business capabilities and contributes to value streams.

Life-Cycle Ownership

Typically, a service receives a business request to produce a product or service for an external or internal customer. The request is pending until either it fails or the desired result is delivered. The service owns the request for the life-cycle of the request. This supports updates of the associated master data.

Consequently, a service retains responsibility for a request until all work is completed. Instead of transferring responsibility, the service unit delegates to service units that contribute to fulfillment of the request. The service unit that receives a request is the service unit that delivers the result.

In traditional, silo-based business systems, a request will be accepted by an organization and associated system, and when that organization's work is completed, it will transfer responsibility for continued work to the next organization. So each of these organizations has responsibility for a segment in the request life-cycle and thus responsibility for maintaining the associated master data.

Under certain circumstances, a request will be received as an asynchronous message that initiates another value stream, typically to deal with exceptions or support services. These have the form of a transfer of control. The service unit that accepts that transfer of control is then responsible for the life-cycle of that request for action. See the example in Bottom-Up Analysis in Chapter 3.

The service unit that receives a request is responsible for the master data records for that request. Note, however, that each service unit engaged to contribute to the work receives its own request and has life-cycle responsibility for resolving it. As a result, a query for the status of a primary transaction is directed to the primary service unit. Unless the delegated services send updates to the primary service's master data, the primary service unit may, in turn, propagate the query through its active delegations to provide more detail about the current status. So the whole truth about a particular order, person, or product design may require propagation of a query to multiple service unit databases.

The identifier of the original request should be part of the request to each of the delegated services. This allows for data about a particular stage of processing to be requested directly from the service unit that performed that work.
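Life-cycle ownership and delegation can be sketched as below. The class and method names are hypothetical, and direct method calls stand in for message exchange. Each delegated request carries the identifier of the original request, so a status query to the primary service unit can be propagated through its delegations, and a contributing service unit can also be asked directly about its stage of the work.

```python
from typing import Dict, List


class ServiceUnit:
    def __init__(self, name: str) -> None:
        self.name = name
        # Master data for the requests this unit has accepted and owns to completion.
        self.requests: Dict[str, dict] = {}

    def accept(self, request_id: str, original_id: str) -> None:
        self.requests[request_id] = {
            "original_id": original_id,   # identifier of the originating request
            "status": "in progress",
            "delegations": [],            # (service unit, its request id) pairs
        }

    def delegate(self, request_id: str, contributor: "ServiceUnit", sub_request_id: str) -> None:
        """Engage a contributor; this unit keeps responsibility for request_id."""
        original_id = self.requests[request_id]["original_id"]
        contributor.accept(sub_request_id, original_id)
        self.requests[request_id]["delegations"].append((contributor, sub_request_id))

    def set_status(self, request_id: str, status: str) -> None:
        self.requests[request_id]["status"] = status

    def status(self, request_id: str) -> dict:
        """Report own status, propagating the query through delegations."""
        record = self.requests[request_id]
        return {
            "unit": self.name,
            "status": record["status"],
            "delegations": [unit.status(rid) for unit, rid in record["delegations"]],
        }

    def requests_for(self, original_id: str) -> List[str]:
        """Direct query: which of this unit's requests serve a given original request."""
        return [rid for rid, rec in self.requests.items() if rec["original_id"] == original_id]
```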

Data Warehouse Support

Data on selected service events are sent to the data warehouse. These will be used for analysis of trends in performance and product or resource demand. The specific events and the scope of the event notices delivered to the data warehouse will depend on the value streams and business requirements for the data warehouse to support data analyses.

Measurement Reporting

Capability service units are the sources of value contributions. Measurements of value contributions may be taken at appropriate points in a service unit to correspond to collaborations and activities in the enterprise value delivery model. The level of granularity may vary depending on the level of concern and the ability of the process management services to capture appropriate measurements.

Potentially, detailed measurement reporting would be turned on when a particular value stream or capability is of particular interest and turned off when only high-level performance reporting is needed.

Measurements

The value measurements service unit captures individual value measurements and may report the latest measurement from a particular source, or it may develop statistical measurements for a period of time. If only a current measurement is reported, it may be appropriate to also report the variance for the last “n” reported measurements.
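The reporting option just described, the latest measurement plus the variance of the last n measurements, can be sketched with a bounded window. `MeasurementWindow` is a hypothetical name, and the use of the sample variance (`statistics.variance`) rather than the population variance (`statistics.pvariance`) is an assumption; either could be justified.

```python
from collections import deque
from statistics import mean, variance
from typing import Deque, Optional


class MeasurementWindow:
    """Keeps the last n value measurements from one source."""

    def __init__(self, n: int) -> None:
        if n < 2:
            raise ValueError("a variance needs a window of at least two measurements")
        self._values: Deque[float] = deque(maxlen=n)   # oldest values drop off automatically

    def record(self, value: float) -> None:
        self._values.append(value)

    def report(self) -> dict:
        if not self._values:
            raise ValueError("no measurements recorded yet")
        values = list(self._values)
        var: Optional[float] = variance(values) if len(values) >= 2 else None
        return {
            "latest": values[-1],
            "mean": mean(values),
            "variance": var,          # sample variance of the last n values
            "count": len(values),
        }
```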

Note that in the long term, value measurements should be reported for value streams beyond the mainstream production operations so that various support services may also be monitored.

The measurements are in the context of the current value delivery model. This supports queries and reports on current and statistical measurements of performance of activities, capability methods, and value stream value propositions in the context of the current business design.

Note that this places a data capture requirement on the processes or activities being measured. A process that is engaged as a shared service must be measured in each of the contexts in which it is engaged, and the measurements will differ depending on the requests. At the top-level process, some measurements of a subprocess can be taken from the results of the activity that engages it, provided those results can be, and are, measured at that level. If there are several levels of delegation with significant variance in performance from one unit of production to the next, performance may be difficult, if not impossible, to understand without the detail of the lower-level processes.

There should be a direct mapping between the VDM model capability methods and the operational business processes. The value types to be measured should be determined by stakeholder priorities.

Measurements should be captured for different scenarios, so there may be different measurements depending on product mix, production rate, and other relevant differences in circumstances. The modeling environment should enable business leaders to examine the differences between scenarios and monitor greater detail in areas of particular interest.

The value measurements service should also support tracking particular measurements for trends, variances, exceptional occurrences, and status related to objectives.

Separate value delivery models can represent to-be business configurations with measurement objectives for business transformation.

Data Warehouse

A data warehouse supports analysis of current and historical service events to identify trends, events, and correlations for insights on business operations and market patterns (based on customer orders). Current technology should support more timely and relevant data analyses.

Traditional Mode of Operation

Under established business intelligence services, a data warehouse receives inputs of events from business services as well as other sources and updates its database for an integrated view of the data. Inputs are typically taken as periodic batch extracts from production systems. The general assumption is that the cross-enterprise reporting is not time critical, so periodic updates provide data that are sufficiently current.

The process for feeding a data warehouse is often called extract, transform, and load (ETL) because the relevant data must be extracted from the production systems, transformed to a common format, and loaded in an appropriate manner to be reconciled with data from other sources. Transformation may include “cleansing” the data and merging related data from different sources. The OMG Common Warehouse Metamodel (CWM, http://www.omg.org/technology/documents/modeling_spec_catalog.htm#CWM) specification provides a standard for modeling the transformation of data from different sources and different format technologies.
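The three ETL steps can be sketched in plain Python. The two source layouts, field names, and cleansing rule below are hypothetical, and the sketch does not use the CWM, which is a standard for modeling such transformations rather than an implementation of them. Keying loaded rows by order identifier makes a repeated batch load idempotent.

```python
from decimal import Decimal
from typing import Dict, Iterable, List

Warehouse = Dict[str, Dict[str, Decimal]]   # customer -> {order id -> amount}


def extract(source: Iterable[dict]) -> List[dict]:
    """Stand-in for a periodic batch extract from a production system."""
    return list(source)


def transform_us(row: dict) -> dict:
    # Hypothetical US system: amount as text with thousands separators, e.g. "1,200.50".
    return {"order_id": row["OrderNo"],
            "customer": row["Cust"].strip().upper(),
            "amount": Decimal(row["Amt"].replace(",", ""))}


def transform_eu(row: dict) -> dict:
    # Hypothetical EU system: amount as an integer number of cents.
    return {"order_id": row["ref"],
            "customer": row["customer_code"].strip().upper(),
            "amount": Decimal(row["amount_cents"]) / 100}


def cleanse(rows: Iterable[dict]) -> List[dict]:
    """Drop rows that cannot be reconciled (here: no customer identifier)."""
    return [r for r in rows if r["customer"]]


def load(warehouse: Warehouse, rows: Iterable[dict]) -> None:
    for r in rows:
        warehouse.setdefault(r["customer"], {})[r["order_id"]] = r["amount"]


def run_etl(us_rows: Iterable[dict], eu_rows: Iterable[dict], warehouse: Warehouse) -> None:
    transformed = [transform_us(r) for r in extract(us_rows)]
    transformed += [transform_eu(r) for r in extract(eu_rows)]
    load(warehouse, cleanse(transformed))
```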

Agile Enterprise Data Warehouse

The agile enterprise supports more timely data warehouse analysis by accepting events as they occur. Unlike traditional data warehouse implementations, the agile enterprise data warehouse is limited to capturing internal events provided by capability service units; data from other sources are received by the analytics service unit. Possible events include initiation of a process, completion of a process, or completion of an activity within a process. Not all service events at all levels are of interest, so output of event notices should be turned on only for events of interest.
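Selective event output might be sketched as a per-service-unit switch table. `EventPublisher` and the event kinds are hypothetical names, and a list stands in for the data warehouse service unit. Only (event kind, process) pairs that have been switched on produce notices, so notices can be enabled for a value stream of interest and disabled again later.

```python
from dataclasses import dataclass, field
from typing import Callable, Set, Tuple


@dataclass
class ServiceEvent:
    service_unit: str
    kind: str          # e.g. "process_started", "process_completed", "activity_completed"
    process: str
    data: dict = field(default_factory=dict)


class EventPublisher:
    def __init__(self, service_unit: str, sink: Callable[[ServiceEvent], None]) -> None:
        self.service_unit = service_unit
        self._sink = sink
        self._enabled: Set[Tuple[str, str]] = set()   # (kind, process) pairs switched on

    def enable(self, kind: str, process: str) -> None:
        self._enabled.add((kind, process))

    def disable(self, kind: str, process: str) -> None:
        self._enabled.discard((kind, process))

    def emit(self, kind: str, process: str, data: dict) -> None:
        """Called at each service event; forwards only events of interest."""
        if (kind, process) in self._enabled:
            self._sink(ServiceEvent(self.service_unit, kind, process, dict(data)))
```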

The data warehouse provides these data for analysis by the analytics service unit. We include traditional business intelligence services under the heading of analytics. The measurements service unit provides another perspective for support of analytics.

Business Metadata

The integration of data from multiple sources involves data of differing quality and reliability. Within a single service unit, the quality and reliability of that service unit's data may be well understood, but as the users of data become more remote from the sources, the users may not understand the inaccuracies that can occur in the data they are using for analysis, planning, and decision making. Business metadata, such as the sources and credibility of data, should be captured with the data records being reported. Metadata is discussed further in a later section.

Analytics

Analytics refers to new mathematical and computational techniques for identification of important insights and opportunities from analysis of large volumes of data. Traditionally, data analysis has required substantial time investment in data collection and analysis, and it has been limited by availability of relevant data. More recently, large volumes of data have become available from many sources through the Internet and wireless communications. This includes data from tracking web activity, sensors, social media, and market activity. The Internet of Things will make large volumes of data available from a multitude of devices connected to the Internet.

Distributed computing technology has made it possible to rapidly analyze large volumes of data including unstructured text. Hadoop (http://hadoop.apache.org/) is an open source software product based on technology developed by Google for processing very large data sets in distributed computing clusters. The result is massively parallel computing that produces results in a fraction of the time required for conventional systems.

Various analytical techniques are built on top of Hadoop to analyze all forms of data: structured, semistructured (such as XML), and unstructured.
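The MapReduce programming model that Hadoop implements can be illustrated with a word count over unstructured text, the customary introductory example. This single-process sketch shows only the shape of the computation (map, shuffle by key, reduce); it is not Hadoop's API. In a Hadoop cluster, the map and reduce tasks run in parallel on many nodes, typically close to the data they read.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, List, Tuple


def map_phase(document: str) -> List[Tuple[str, int]]:
    """Emit (word, 1) for every word; each document could be mapped on a different node."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all emitted values by key, as the framework does between the phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine the values for one key; different keys could be reduced in parallel."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())


print(word_count(["late shipment", "Late delivery was late"]))
# {'late': 3, 'shipment': 1, 'delivery': 1, 'was': 1}
```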

Analytics associated with “big data” has become an important aspect of strategic planning. However, it does require an investment, so the analyses should be focused on insights that available data can support and on issues that have value to the enterprise. The technology as well as the availability of useful big data are still evolving.

The agile DMA brings together three perspectives for analytics:

1. Big data provide access to specific, detailed events or actions both inside and outside the enterprise. The relevant events will depend on the industry and the particular enterprise.

2. The data warehouse service unit captures data from capability service events to support analyses of operational, customer, and supplier behavior.

3. The measurements service unit reflects operational performance that can be captured and analyzed at a range of levels of detail, for values including costs, timeliness, and quality measurements.

Business leaders should focus attention on aspects of the business that would have a significant impact on the business if business-as-usual assumptions were no longer valid. Assumptions may be related to customer demand, current technology, economic conditions, customer satisfaction, changing cost factors, changes in competition, and so on. Some analyses need only be performed periodically to discover and confirm patterns. Other concerns may call for continuous monitoring. Business leaders should work closely with analytics experts to identify meaningful analyses that may have a significant business impact.

In the final analysis, analytics is about mitigation of risk—risk of delayed response to a disruptive change, or risk of a missed opportunity that could have been revealed through a deeper understanding of an evolving business. Analytics does not provide business solutions, but it provides the basis for innovations and better decisions.

Dashboard Services

The agile enterprise architecture drives centralization of many planning and decision-making activities to ensure effective integration of services, optimize performance from an enterprise perspective, maintain or improve enterprise agility, and support governance. This strengthening of enterprise management and governance requires that enterprise leaders at multiple levels have access to a wealth of data from many sources and the ability to combine data from those different sources to gain appropriate insights and ensure accountability and control.

The dashboard concept arose from the vision of business leaders having real-time readings on what is happening in the business and the ecosystem so that the business can be driven with greater insight and agility in response to disruptions and emerging risks and opportunities. The dashboard service unit provides readings on business performance and opportunities that require management attention along with access to data for optimization and transformation of the business.

The dashboard must not just display raw data but must display data in context. The enterprise VDM model is a primary context for internal operating data. Additional models are needed for the context of market data, business transformation management, financial data, regulatory compliance indicators, and data on human resource management.

Dashboards must be tailored to the needs of individual business leaders. A dashboard should be initially configured by a technical support person, but it should provide controls that enable the user to refine the configuration as required to tap into and display events and measurements from selected services. The business leader should be able to identify certain measurements, trends, or events for continuous tracking; set alarms triggered by variance beyond expected or allowed limits; and look deeper into the models, ad hoc, to explore relevant factors.
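The tracking and alarm behavior can be sketched as follows. `Dashboard`, `watch`, and the measurement names are hypothetical, and a callback stands in for whatever alert channel a real dashboard would use. Each watch records an expected value and an allowed deviation chosen by the business leader; a measurement outside that band raises an alarm.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Watch:
    expected: float
    tolerance: float   # allowed absolute deviation from the expected value


class Dashboard:
    def __init__(self, alert: Callable[[str], None]) -> None:
        self._alert = alert
        self._watches: Dict[str, Watch] = {}
        self.latest: Dict[str, float] = {}   # most recent reading per measurement

    def watch(self, measurement: str, expected: float, tolerance: float) -> None:
        """User-configurable: start tracking a measurement and set its alarm limits."""
        self._watches[measurement] = Watch(expected, tolerance)

    def on_measurement(self, measurement: str, value: float) -> None:
        """Called for each measurement the dashboard is subscribed to."""
        self.latest[measurement] = value
        limits = self._watches.get(measurement)
        if limits is not None and abs(value - limits.expected) > limits.tolerance:
            self._alert(f"{measurement} = {value} is outside {limits.expected} ± {limits.tolerance}")
```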

Knowledge Management Services

Enterprise agility requires access to shared knowledge about how the enterprise works as well as knowledge that provides the basis for achieving optimization of operations and competitive advantage. Knowledge management involves the identification, retention, and retrieval of relevant insights and experiences. Much of this enterprise knowledge is in the heads of the enterprise's employees. Some of it has been or can be captured in textual documents. Still less has been codified in business rules and models. Knowledge management is critical to enterprise agility because it provides insights for determining what changes are needed and how to make them.

The primary challenge of knowledge management is to support knowledge capture and access and to engage the right people to contribute needed knowledge.

Traditionally, relevant documents and subject matter experts were managed within a departmental silo, often at a single office location. With a capability-based architecture, these functional capabilities are divided into more granular, shared services. The services may be geographically distributed, and some employees may work from home, so there may be little informal, face-to-face contact. In addition, in support of adaptation initiatives, it is necessary to share knowledge more widely so that many factors can be considered to optimize from an enterprise perspective.

Knowledge management services, identified in Fig. 6.1 and discussed later, represent key aspects of the broader concept of knowledge sharing. Knowledge is an asset of the enterprise, but much of it is in the heads of its employees and will continue to be. Some of it can be captured for more effective sharing and to mitigate losses that result from employee turnover. Some knowledge can be encoded in computer applications as business rules (discussed in Chapter 5). Business models are another form of structured knowledge. We have discussed business models in Chapters 2 and 4, and we focus on business models again in Chapters 9 and 11. Some individuals with expertise related to business capabilities can be identified by their current roles in VDM and BCM models and their contributions to knowledge management conversations and documents.

Here we focus on knowledge captured in electronic conversations and textual documents using existing practices and technologies. Both of these involve knowledge in text that is subject to web-search technology and analytics.

Knowledge Conversations

There are two popular forms of electronic conversations: email and group discussions. Both of these are based on communications among members of an interest group. An interest group has a web page that identifies the area of interest, the list of members, any access restrictions, and a form to apply for membership in the interest group. The web page should provide access to the email threads and discussions described below as well as the knowledge documents discussed later.

The interest group membership should be limited to enterprise employees. Members of an interest group should be self-selecting except that participation in some groups must be authorized if the interest group deals with restricted subject matter.

Email Threads

Email threads occur frequently between two or more participants with a common interest. Participants often share significant insights. This knowledge sharing should be captured and cataloged by the enterprise for future reference.

Each interest group, discussed above, should have a group email distribution list used to deliver relevant emails. An interest group member may have an insight to share and send a message to the interest group. More often, a member will have a question or an issue to resolve and will send an email for suggestions or opinions. In either case, the subject line should identify the topic to be discussed, and it becomes the identifier of the thread. The interest group web page should track all active threads by subject line. A member should be able to select an active thread to join.

Participants may come and go as the thread evolves. An email contributor may add a potentially interested nonmember to a thread to join the discussion. Another participant may determine that the topic is not of interest to him or her and opt out of the thread. All messages are captured and accessible from the interest group web page by all employees unless the interest group is restricted.

A participant in a thread may create a branch thread by changing the subject to a new topic. Members of the interest group should be notified of initiation of the new thread and opt in if they are interested. The original thread and the branch may both continue with the same participants, but each should be true to the topic in its subject line.
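The thread rules above (the subject line identifies a thread, a changed subject creates a branch, members are notified of new threads and opt in) can be sketched as a small tracker. This is a minimal illustration, not a prescribed design; the class names, the notice list, and the choice that a branch starts with the original thread's participants are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Thread:
    """An email thread; its subject line is its identifier."""
    subject: str
    participants: set[str] = field(default_factory=set)
    messages: list[tuple[str, str]] = field(default_factory=list)  # (sender, body)
    parent: str | None = None  # subject of the thread this one branched from


class InterestGroup:
    """Hypothetical tracker for the active threads of one interest group."""

    def __init__(self, name: str, members: set[str]) -> None:
        self.name = name
        self.members = set(members)
        self.threads: dict[str, Thread] = {}
        self.notices: list[tuple[str, str]] = []  # (member notified, new subject)

    def _announce(self, subject: str, sender: str) -> None:
        # Every other member learns that a new thread exists and may opt in.
        for member in sorted(self.members - {sender}):
            self.notices.append((member, subject))

    def post(self, sender: str, subject: str, body: str) -> Thread:
        """Send a message to the group; an unseen subject starts a new thread."""
        thread = self.threads.get(subject)
        if thread is None:
            thread = self.threads[subject] = Thread(subject)
            self._announce(subject, sender)
        thread.participants.add(sender)
        thread.messages.append((sender, body))
        return thread

    def branch(self, sender: str, old_subject: str, new_subject: str, body: str) -> Thread:
        """Changing the subject line spawns a branch; both threads stay open."""
        original = self.threads[old_subject]
        thread = self.threads[new_subject] = Thread(
            new_subject, participants=set(original.participants), parent=old_subject
        )
        self._announce(new_subject, sender)
        thread.participants.add(sender)
        thread.messages.append((sender, body))
        return thread

    def join(self, person: str, subject: str) -> None:
        # Members opt in; a contributor may also add an interested nonmember.
        self.threads[subject].participants.add(person)

    def leave(self, person: str, subject: str) -> None:
        self.threads[subject].participants.discard(person)

    def active_subjects(self) -> list[str]:
        return sorted(self.threads)


g = InterestGroup("supply-chain", {"ana", "ben", "chen"})
g.post("ana", "Supplier lead times", "How are others estimating lead times?")
g.join("ben", "Supplier lead times")
g.branch("ben", "Supplier lead times", "Lead times for castings", "Castings differ.")
print(g.active_subjects())  # ['Lead times for castings', 'Supplier lead times']
```

Keying threads by subject keeps the web page's list of active threads trivial to produce; a production service would more likely assign a message-ID-based identifier and treat the subject as a display label.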

Group Discussions

Members of an interest group should also have the option of participating in a discussion where their comments on a particular topic are captured in sequence on a web page. This service is patterned after LinkedIn and similar services.

A member can initiate discussion on a topic that will be announced to other members in the interest group. Any member who contributes a comment to the topic is optionally notified of subsequent comments by email. A discussion is closed if there are no new comments for a defined period such as a month (to allow for vacations or other distractions).

A branch discussion thread can be initiated to explore a new, related topic. The new topic is opened in a new web page and is announced to the interest group, so members can opt in. The contributors of the old topic continue to receive notices of comments to both the original and branch topics.
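The closing and notification rules for group discussions can be sketched as follows. The 30-day window stands in for "a month," and the field names are hypothetical; a branch simply records its parent so that the parent's contributors also receive notices of comments on the branch.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumed inactivity window; the text suggests roughly a month.
INACTIVITY_LIMIT = timedelta(days=30)


@dataclass
class Discussion:
    topic: str
    opened: datetime
    comments: list[tuple[datetime, str, str]] = field(default_factory=list)  # (when, who, text)
    subscribers: set[str] = field(default_factory=set)  # contributors who opted in to email
    parent: Discussion | None = None  # set when this topic branches from another

    def last_activity(self) -> datetime:
        return self.comments[-1][0] if self.comments else self.opened

    def is_closed(self, now: datetime) -> bool:
        # Closed once the inactivity window has elapsed with no new comment.
        return now - self.last_activity() > INACTIVITY_LIMIT

    def comment(self, when: datetime, who: str, text: str, notify: bool = True) -> list[str]:
        """Add a comment and return the people to email about it."""
        if self.is_closed(when):
            raise ValueError(f"discussion {self.topic!r} is closed")
        # Contributors to this topic and, for a branch, to the original topic.
        recipients = set(self.subscribers)
        if self.parent is not None:
            recipients |= self.parent.subscribers
        self.comments.append((when, who, text))
        if notify:
            self.subscribers.add(who)
        return sorted(recipients - {who})


t0 = datetime(2024, 1, 1)
d = Discussion("Forecasting demand", opened=t0)
d.comment(t0 + timedelta(days=1), "ana", "Seasonality matters.")
b = Discussion("Forecasting spare parts", opened=t0 + timedelta(days=2), parent=d)
print(b.comment(t0 + timedelta(days=3), "ben", "Demand is intermittent."))  # ['ana']
print(d.is_closed(t0 + timedelta(days=40)))  # True: last comment was on day 1
```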

Knowledge Documents

Knowledge documents include two basic forms: authored documents and encyclopedia entries.

Authored Documents

An authored document is a natural-language document and/or a presentation file in final form, prepared by humans for human understanding. Authored documents address particular topics to support proposals, presentations, results of analyses, understanding of mechanisms, explanation of specifications, and capture of other knowledge of value for sharing and future reference. These data are just as important to the operation of the business as are structured data, but they often receive little attention from an IT perspective because they are unstructured.

Each document is given a unique identifier and a formatted header that includes the document type (from defined alternatives), tags for web search, an abstract, the author(s), the date created, a statement of the context (the associated project, initiative or other reason for development), links to previous and/or subsequent editions, and identification of relevant interest groups, the same as those discussed for conversations above. Each document is posted in a directory for the year in which it was created. Once an authored document is posted, it cannot be changed, but it may be linked to a newer edition.
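The header fields and posting rules above can be sketched as an immutable record plus an append-only repository. The document types, the `/knowledge/documents` root, and the identifier format are assumptions for illustration. Because a posted document cannot change, the link to a newer edition is derived from the newer edition's `previous_edition` field rather than written back into the older header.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from pathlib import PurePosixPath

DOCUMENT_TYPES = {"proposal", "presentation", "analysis", "specification"}  # assumed list


@dataclass(frozen=True)  # frozen: a posted document's header cannot be changed
class DocumentHeader:
    doc_id: str
    doc_type: str
    tags: tuple[str, ...]             # for web search
    abstract: str
    authors: tuple[str, ...]
    created: date
    context: str                      # associated project, initiative, or reason
    interest_groups: tuple[str, ...]
    previous_edition: str | None = None

    def __post_init__(self) -> None:
        if self.doc_type not in DOCUMENT_TYPES:
            raise ValueError(f"unknown document type: {self.doc_type}")

    def path(self, root: str = "/knowledge/documents") -> PurePosixPath:
        # Documents are filed under the year in which they were created.
        return PurePosixPath(root, str(self.created.year), self.doc_id)


class DocumentRepository:
    """Hypothetical store in which posting is append-only."""

    def __init__(self) -> None:
        self._docs: dict[str, DocumentHeader] = {}

    def post(self, header: DocumentHeader) -> PurePosixPath:
        if header.doc_id in self._docs:
            raise ValueError(f"{header.doc_id} is already posted and cannot change")
        prev = header.previous_edition
        if prev is not None and prev not in self._docs:
            raise ValueError(f"unknown previous edition {prev}")
        self._docs[header.doc_id] = header
        return header.path()

    def next_edition(self, doc_id: str) -> str | None:
        # Forward links are derived, so the earlier edition itself never changes.
        for h in self._docs.values():
            if h.previous_edition == doc_id:
                return h.doc_id
        return None


repo = DocumentRepository()
v1 = DocumentHeader("KD-0042", "analysis", ("pricing",), "Price elasticity study.",
                    ("ana",), date(2023, 5, 2), "Pricing initiative", ("pricing",))
v2 = DocumentHeader("KD-0107", "analysis", ("pricing",), "Revised elasticity study.",
                    ("ana",), date(2024, 2, 9), "Pricing initiative", ("pricing",),
                    previous_edition="KD-0042")
repo.post(v1)
repo.post(v2)
print(v1.path())                     # /knowledge/documents/2023/KD-0042
print(repo.next_edition("KD-0042"))  # KD-0107
```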

Encyclopedia Entries

The encyclopedia is a wiki containing discussions of distinct topics. An employee may create a new topic with an associated discussion, a list of related, existing topics, and the identity of one or more of the relevant interest groups as defined for conversations above. The interest group members are notified of each new topic.

Persons interested in a topic may request notice of subsequent changes to the content. Anyone can add to or revise the topic content. The changes made by individual contributors are logged. Conflicting views should be included, identified, and subject to email discussion and potential resolution by an associated interest group.
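The encyclopedia rules (anyone may revise, every change is logged by contributor, and watchers are notified) can be sketched as a topic with a revision log. The class and field names are hypothetical; a real wiki engine would also store diffs and support discussion of conflicting views.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Revision:
    author: str
    when: datetime
    content: str


@dataclass
class EncyclopediaTopic:
    title: str
    interest_groups: list[str]
    related: list[str] = field(default_factory=list)       # related, existing topics
    revisions: list[Revision] = field(default_factory=list)  # the change log
    watchers: set[str] = field(default_factory=set)         # want notice of changes

    @property
    def content(self) -> str:
        # The current content is the most recent revision.
        return self.revisions[-1].content if self.revisions else ""

    def watch(self, person: str) -> None:
        self.watchers.add(person)

    def revise(self, author: str, when: datetime, content: str) -> list[str]:
        """Anyone may revise; log the change and return who to notify."""
        self.revisions.append(Revision(author, when, content))
        return sorted(self.watchers - {author})

    def contributors(self) -> list[str]:
        return sorted({r.author for r in self.revisions})


topic = EncyclopediaTopic("Vendor-managed inventory", ["supply-chain"])
topic.revise("ana", datetime(2024, 3, 1), "VMI shifts replenishment to the supplier.")
topic.watch("ben")
print(topic.revise("chen", datetime(2024, 3, 5),
                   "VMI shifts replenishment to the supplier. "
                   "Views differ on who bears the inventory cost."))  # ['ben']
print(topic.contributors())  # ['ana', 'chen']
```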

Metadata

Metadata are data about data. Metadata are important for design of systems as well as for accountability and consideration of credibility. Metadata define the context of data and thus are essential to understanding the meaning of data. Consequently, metadata are an essential part of presenting data to users.

Metadata are a normal part of the technical design and operation of information systems. However, it is important to recognize that there are often additional business requirements for metadata. Metadata that specify the meaning and relationships of data elements are often called technical metadata because they are used for the design and implementation of systems. Business metadata refers to metadata that define the business context and quality of the data, such as the timeliness, source, and reliability. These metadata are important to business people because they can affect their reliance, interpretations, and resulting decisions.

For example, a customer order form provides metadata that describe the data fields. Data about the source, reliability, and timeliness of a record are metadata. Data about the structure of rules, neural networks, and business models are metadata. Computer programs are metadata although they are not usually described that way. So computers not only capture, store, and communicate data that represent things about the enterprise, but they also capture, store, and communicate data that specify what the data mean and what computers do with them.

In this section, we will discuss six forms of business metadata:

• Embedded metadata

• Data dictionaries

• Data directory

• Data virtualization

• VDML metadata

• Enterprise logical data model

Embedded Metadata

Individual records often contain some data that are about the data in the record. The most common is a time stamp, indicating when the record was created or changed. A record often contains the identifier for the person or device that created, changed, or approved the record. When a file, such as a document or a model, is created as a unit, then the file will have associated metadata. When a file or database contains records from different sources, it is often important to identify the source in the data for accountability, particularly if data from the different sources differ in quality.

Messages and other documents expressed in XML contain metadata. XML embeds the name of every data element as a tag, and it can also carry data for encryption and digital signatures.
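To illustrate both kinds of embedded metadata, the sketch below serializes a hypothetical order record to XML with the standard library's ElementTree: each value is wrapped in an element named for its data element, and record-level metadata (a time stamp, who made the change, and the source) travels as attributes. The field names are assumptions, and encryption and signatures, which would follow the W3C XML Encryption and XML Signature specifications, are not shown.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

# Hypothetical order record and the metadata embedded with it.
record = {
    "OrderNo": "SO-1001",
    "CustomerAccountNo": "C-77",
    "Total": "1250.00",
}
metadata = {
    "modified": datetime(2024, 4, 2, 14, 30, tzinfo=timezone.utc).isoformat(),
    "modifiedBy": "user:ana",   # person or device that changed the record
    "source": "web-store",      # source system, for accountability
}

# Each value is tagged with the name of its data element; the
# record-level metadata is carried as attributes of the root element.
order = ET.Element("Order", metadata)
for name, value in record.items():
    ET.SubElement(order, name).text = value

xml_text = ET.tostring(order, encoding="unicode")
print(xml_text)
```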

Data Dictionaries

Each database will have a data dictionary that defines the data elements, structures, and constraints of the database. In the DMA, there will be a separate database and associated data dictionaries for the database of each service unit or group of service units. Separate databases and data dictionaries are consistent with the hiding of service unit implementation and support the autonomy of a service unit, so changes can be made without reconciling the database design with other organizations.

Data Directory

Here, we define a data directory in the context of the DMA. The data directory contains the metadata needed to understand and manage the distributed databases and files of the DMA.

The data directory provides an overview of the data managed by each service unit, including the master data for which the service unit is responsible and the data it replicates from the master data of other service units. It references the enterprise logical data model (ELDM, discussed below) to identify the business data entities. For each business data entity of the master data, it identifies the service unit responsible for that entity and the other service units that maintain replicas of it.

The data directory may include identification of the organization unit responsible for each service unit, and the geographical location of the service unit.

The data directory should include other business metadata that is not embedded in the actual data such as information about the quality and timeliness of the data. The data directory should also include data security requirements and information on data recoverability.
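A data directory along these lines can be sketched as a registry keyed by ELDM entity. Everything here, including the metadata fields and the service unit names, is hypothetical; the point is that the directory answers questions such as "which service unit masters Customer, and where is it?" and "what does this unit hold as master versus replica?"

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DirectoryEntry:
    entity: str                     # business entity name from the ELDM
    master_unit: str                # service unit responsible for the master data
    replica_units: set[str] = field(default_factory=set)
    quality: str = "unrated"        # business metadata not embedded in the data
    timeliness: str = "unknown"
    security: str = "internal"
    recoverability: str = "unspecified"


@dataclass
class ServiceUnitInfo:
    name: str
    owner_org: str                  # responsible organization unit
    location: str                   # geographical location


class DataDirectory:
    def __init__(self) -> None:
        self.entries: dict[str, DirectoryEntry] = {}
        self.units: dict[str, ServiceUnitInfo] = {}

    def register_unit(self, info: ServiceUnitInfo) -> None:
        self.units[info.name] = info

    def register_master(self, entry: DirectoryEntry) -> None:
        # Each entity has exactly one service unit responsible for its master data.
        if entry.entity in self.entries:
            raise ValueError(f"{entry.entity} already has a master")
        self.entries[entry.entity] = entry

    def add_replica(self, entity: str, unit: str) -> None:
        self.entries[entity].replica_units.add(unit)

    def master_of(self, entity: str) -> ServiceUnitInfo:
        return self.units[self.entries[entity].master_unit]

    def data_held_by(self, unit: str) -> dict[str, str]:
        """Entities a unit holds, labeled 'master' or 'replica'."""
        held: dict[str, str] = {}
        for e in self.entries.values():
            if e.master_unit == unit:
                held[e.entity] = "master"
            elif unit in e.replica_units:
                held[e.entity] = "replica"
        return held


d = DataDirectory()
d.register_unit(ServiceUnitInfo("customer-mgmt", "Sales", "New York"))
d.register_unit(ServiceUnitInfo("order-fulfillment", "Operations", "Paris"))
d.register_master(DirectoryEntry("Customer", "customer-mgmt",
                                 quality="verified", timeliness="real time"))
d.add_replica("Customer", "order-fulfillment")
print(d.master_of("Customer").location)     # New York
print(d.data_held_by("order-fulfillment"))  # {'Customer': 'replica'}
```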

Data Virtualization

Data virtualization is a software product capability that supports unified access to heterogeneous data sources in a form consistent with a unified data model such as the ELDM, discussed below. In the DMA, it will be desirable to perform queries that integrate data from multiple service units.

The data virtualization service requires that each service unit provide a mapping of its master data, and of any additional data that may be subject to queries, to the associated elements of the enterprise master data model. The mapping may include additional metadata of interest to a requestor. The mapping specification may be based on the OMG CWM.

Note that the virtualization facility circumvents the rule that a database is hidden within its service unit and all accesses must go through the service unit. Updates through virtualization must be prohibited since that would undermine the control of the service unit to ensure data integrity. Queries must still be subject to access authorization, but access controls associated with the database may be sufficient. Queries might also be “depersonalized,” so queries are unable to access data that could identify individual entities.
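The mechanism can be sketched with two in-memory SQLite databases standing in for two service units, each with its own local schema and a mapping from ELDM attribute names to its local columns. The virtual query issues only SELECT statements, joins the results on the customer account number, and can drop identifying attributes to depersonalize the result. The table names, mappings, and the simple attribute-dropping form of depersonalization are all assumptions; real depersonalization usually needs more than removing names and keys.

```python
from __future__ import annotations

import sqlite3

# Two hypothetical service-unit databases with different local schemas.
sales = sqlite3.connect(":memory:")
sales.execute("CREATE TABLE cust (acct TEXT, nm TEXT, region TEXT)")
sales.executemany("INSERT INTO cust VALUES (?, ?, ?)",
                  [("C-1", "Acme", "EU"), ("C-2", "Birch", "US")])

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE account (account_no TEXT, balance REAL)")
billing.executemany("INSERT INTO account VALUES (?, ?)", [("C-1", 120.0), ("C-2", 0.0)])

# Each unit maps ELDM attributes to its local table and columns.
MAPPINGS = {
    "sales": (sales, "cust", {"Customer.accountNo": "acct",
                              "Customer.name": "nm",
                              "Customer.region": "region"}),
    "billing": (billing, "account", {"Customer.accountNo": "account_no",
                                     "Customer.balance": "balance"}),
}
IDENTIFYING = {"Customer.accountNo", "Customer.name"}  # dropped when depersonalizing


def query_customers(depersonalize: bool = False) -> list[dict]:
    """Read-only virtual view of Customer, joined on Customer.accountNo."""
    merged: dict[str, dict] = {}
    for conn, table, cols in MAPPINGS.values():
        attrs = list(cols)
        # Only SELECT is issued: updates must go through the owning service unit.
        # Table and column names come from trusted mapping configuration.
        sql = f"SELECT {', '.join(cols[a] for a in attrs)} FROM {table}"
        for row in conn.execute(sql):
            rec = dict(zip(attrs, row))
            merged.setdefault(rec["Customer.accountNo"], {}).update(rec)
    rows = list(merged.values())
    if depersonalize:
        rows = [{k: v for k, v in r.items() if k not in IDENTIFYING} for r in rows]
    return rows


for row in query_customers(depersonalize=True):
    print(row)
```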

VDML Metadata

VDML represents two kinds of metadata: measure specifications and business items.

Measure Specifications

VDML captures value measurements. A Measure Library defines the measures, the Value Library defines the use of the measurements, and the VDML model defines the context in which these measurements occur. Various other VDML elements, such as participants, resources, and activities, may have associated measurements that describe their characteristics or behavior. For example, a store may record the number of business items waiting for an activity, or a deliverable flow may have a specified transit time.

The VDML model as context is important for reporting measurements of the Measurements Service Unit. Measurements received from business operations will be recorded on appropriate model elements. Users will look at the model to find relevant measurements.

Measurements may be captured in different contexts (eg, product mix or production rate) and associated with different VDML scenarios. The scenario defines the particular business context of the measurements.

These measurements may be reports of individual measures of occurrence (eg, one execution of an activity), or they may be statistical values with a mean and standard deviation representing the measurements during a period of time. Additional metadata may be associated with the VDML context element such as the source of the measurement. The association of measurements with activities, capabilities, and organization units provides identification of responsibility for performance measurements.
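A statistical measurement report of this kind can be sketched as a summary record that carries its VDML context (the element it is attached to and the scenario) and its source as metadata. The field names and example values are hypothetical; the sample standard deviation is used because the period's observations are treated as a sample.

```python
from __future__ import annotations

import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasurementSummary:
    measure: str          # measure definition, e.g., from a Measure Library
    context_element: str  # VDML element the measurement is attached to
    scenario: str         # business context of the measurement
    period: str
    count: int
    mean: float
    stdev: float
    source: str           # additional metadata about the measurement


def summarize(measure: str, element: str, scenario: str, period: str,
              source: str, samples: list[float]) -> MeasurementSummary:
    # statistics.stdev needs at least two samples.
    return MeasurementSummary(measure, element, scenario, period, len(samples),
                              statistics.mean(samples), statistics.stdev(samples),
                              source)


s = summarize("cycle time (h)", "Activity: Assemble order", "current product mix",
              "2024-W14", "MES feed", [4.0, 5.0, 6.0])
print(s.mean, s.stdev)  # 5.0 1.0
```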

Business Items

Business item elements represent things that flow through activity networks and between collaborations. These may be records, orders, resources, products, parts, and so on. From a data modeling perspective, these are views based on a particular business entity. For example, a business item may be a customer order including order items, item detail, such as descriptions and prices, and a reference to the customer. The order is the key entity, identified by an order number, and the business item is represented as a nested data structure.
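The customer order business item described above can be sketched as a nested data structure keyed on the order. The attribute names are assumptions; note that the customer appears only as a reference (an account number), not as an embedded copy of the Customer entity.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field
from decimal import Decimal


@dataclass
class ItemDetail:
    description: str
    unit_price: Decimal


@dataclass
class OrderItem:
    product_code: str
    quantity: int
    detail: ItemDetail


@dataclass
class CustomerOrder:
    """Business item view built around the Order entity."""
    order_no: str        # identifies the key entity
    customer_ref: str    # reference to the Customer, not a copy of it
    items: list[OrderItem] = field(default_factory=list)

    def total(self) -> Decimal:
        return sum((i.quantity * i.detail.unit_price for i in self.items), Decimal("0"))


order = CustomerOrder("SO-1001", customer_ref="C-77", items=[
    OrderItem("P-10", 2, ItemDetail("Bracket", Decimal("12.50"))),
    OrderItem("P-22", 1, ItemDetail("Hinge", Decimal("4.00"))),
])
print(order.total())                                      # 29.00
print(asdict(order)["items"][0]["detail"]["description"])  # Bracket
```

`asdict` flattens the nested objects into plain dictionaries, which is close to the form such a business item would take when serialized into a message.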

The VDML model does not include individual business items unless it has been extended to support simulation. The business item elements are described in the Business Item Library, and the data structure can be described to the extent it is relevant to the VDM analysis. The detail of the Business Item structure can be found in the ELDM. Additional metadata, such as data quality and range, may be included in the Business Item Definition and the data directory.

Enterprise Logical Data Model

The ELDM specifies the meaning, format, and relationships of all of the structured data of the enterprise that are exchanged between service units or are accessible through queries. It is an abstract model, independent of the actual sources and storage of the data. Physical data models are used by technical people to define how the data are actually stored in databases as well as the data structures that may be exchanged by services and used within applications.

There must be an enterprise-wide understanding of the form and meaning of data exchanged, particularly since service user/provider relationships may be many to one or many to many and may change over time. The ELDM provides the common understanding for the exchange of data between service units and for management coordination, recordkeeping, planning, and decision-making activities.

Note that it is not essential that the business terms used to describe the data elements are the same throughout the enterprise, but the business concepts and the meaning of the data must be consistent. So data may be presented in a report or display in New York with English captions and annotations, and the same data may be presented in a report or display in Paris with French captions and annotations, and there need be no misunderstanding.
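One way to picture this separation is to key data by a shared concept identifier and keep the captions in per-locale tables. The identifiers and captions below are hypothetical; locale-specific number and currency formatting is deliberately left out.

```python
from __future__ import annotations

# Hypothetical ELDM concept identifiers with locale-specific captions.
CAPTIONS = {
    "en": {"Order.orderNo": "Order number", "Order.total": "Order total"},
    "fr": {"Order.orderNo": "Numéro de commande", "Order.total": "Montant de la commande"},
}


def render(record: dict[str, object], locale: str) -> list[str]:
    # The data and their meaning are shared; only the captions change.
    labels = CAPTIONS[locale]
    return [f"{labels[concept]}: {value}" for concept, value in record.items()]


record = {"Order.orderNo": "SO-1001", "Order.total": "29.00"}
for line in render(record, "fr"):
    print(line)
```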

Development of an ELDM is a major undertaking, but some of the work has already been done. The enterprise would not function today if there were not some common understanding of the data in reports and records and the data communicated between systems. Sometimes the inconsistencies are not in the data per se but in what the data are called in different departments. The current ability to exchange data requires that some degree of common understanding was developed in the past, although that understanding may not have been captured in a shared data model.

Industry Standards

From an industry perspective, understanding the data exchanged between enterprise applications was a major challenge of enterprise application integration. Suppliers of enterprise systems realized that the integration of their systems would be less costly and disruptive if they could exchange data consistent with a common data model, so they promoted the development of standards for data exchange. Many such standards were developed by the Open Applications Group, Inc. (OAGi).

Common data models are evident in industry standards such as those developed by the United Nations Centre for Trade Facilitation and Electronic Business (UN/CEFACT), the eXtensible Business Reporting Language (XBRL), and HR-XML (Human Resources XML). In the telecommunications industry, the TeleManagement Forum has developed a substantial ELDM called the Shared Information/Data (SID) model.

As the scope of electronic integration of the enterprise expands to include relationships with customers and suppliers, the need for data consistency expands as well. When orders were exchanged and coordination was accomplished through human-to-human communication, the people involved were able to compensate for inconsistencies, translating the terminology and the data formats as required. Computers are not so forgiving. Thus, there is a need for a shared specification for data exchange.

As a result of these forces, the world of information systems is converging toward a common LDM. Although new concepts emerge, we can expect this industry convergence to continue so that most differences will be only where innovative business solutions provide an enterprise with competitive advantage. For the most part, even significantly different business processes and product designs will use accepted interchange data models.

To the individual enterprise, industry convergence on a common data model reduces the cost of developing data models and database designs; reduces the cost of developing, maintaining, and executing data transformations; and improves the ability to measure and analyze operating performance and key indicators from an enterprise perspective. Consequently, it is in the interest of every enterprise to align as closely as possible with the emerging global logical data model.

Commercial logical data models, which should reflect the emerging common model, are available as products for purchase or as a basis for consulting and integration services. Work on an ELDM should not start from scratch.

Data Modeling

In the meantime, each enterprise must establish and maintain an ELDM as the basis for exchange, storage, and retrieval of shared data. The primary form of enterprise data modeling is the class model, generally associated with object-oriented programming and the Unified Modeling Language (UML, http://www.omg.org/spec/UML/2.4.1). Class models provide a relatively robust way of representing business entities, their attributes, and their relationships. Computer applications are usually implemented with relational databases, so entity-relationship modeling is more suitable for technical database design, but we do not go into that level of detail here. We focus on class models, the generally accepted form of logical data models.

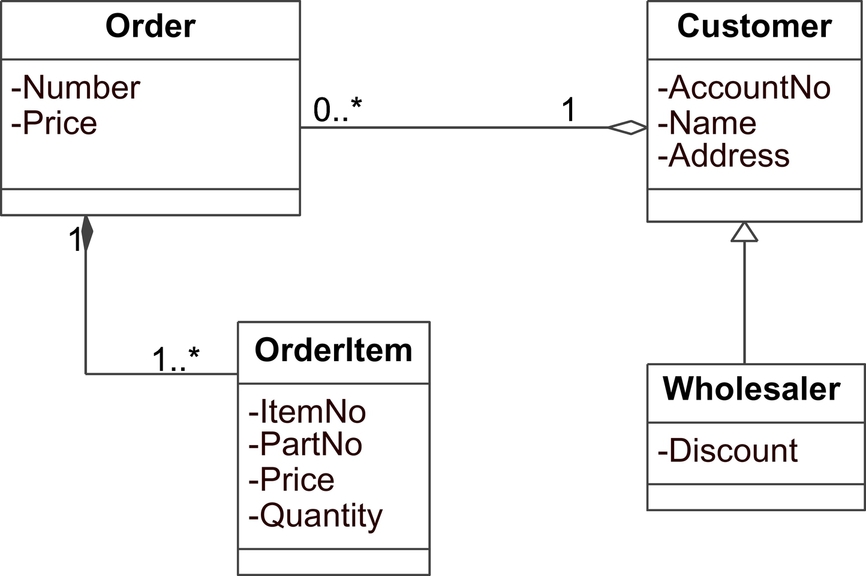

A class defines the representation of similar persons, places, or things in the business domain. Class models rely on a concept called inheritance, whereby a class may inherit characteristics defined for a more general class. Fig. 6.2 illustrates a class diagram for a customer-order example. A class may represent a generalization of similar things, such as Customer, or it may be specialized to represent a more specific type of things, such as Retail Customer or Wholesaler. The more specialized class is said to inherit from the more general class, so characteristics of Customer are inherited by Wholesaler, as indicated by the open-headed arrow from Wholesaler to Customer in the diagram. This is a UML class diagram. UML is the generally accepted standard developed by OMG for modeling a number of aspects of an object-oriented application.

Inheritance provides a way to describe similarities between classes. Several different classes may inherit common characteristics from a shared parent class, or a specialized class may be defined as adding characteristics to a more general class. In the class diagram in Fig. 6.2, the Order, Customer, and Order Item classes show attributes of the classes within the boxes. The Wholesaler class is a specialization of Customer. Wholesaler inherits Account No, Name, and Address from Customer and has an additional attribute, a discount specification, that presumably is not meaningful for most customers. Wholesaler implicitly has all the other attributes and relationships of Customer, so a Wholesaler “is” a customer with an additional attribute. A Wholesaler can occur anywhere in a model or database that a Customer can occur. The numbers on the relationships indicate the cardinality; for example, an order must have one customer, but a customer can have zero or more orders (0..*).
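The inheritance and cardinality in Fig. 6.2 can be mirrored in code as a rough sketch. Customer's attributes follow the text; the Order and Order Item attributes and the numeric form of the discount are assumptions, since the text does not list them. A Wholesaler is accepted anywhere a Customer is expected, and each Order holds exactly one customer while a customer holds zero or more orders.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Customer:
    account_no: str
    name: str
    address: str
    # 0..* orders; excluded from repr/eq to avoid recursing through Order.customer.
    orders: list[Order] = field(default_factory=list, repr=False, compare=False)


@dataclass
class Wholesaler(Customer):
    # Inherits account_no, name, address, and orders; adds a discount.
    discount: float = 0.0  # assumed representation of the discount specification


@dataclass
class OrderItem:
    product_code: str  # assumed attribute
    quantity: int      # assumed attribute


@dataclass
class Order:
    order_no: str
    customer: Customer  # exactly one customer (a Wholesaler is also acceptable)
    items: list[OrderItem] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Keep both ends of the Customer-Order association consistent.
        self.customer.orders.append(self)


w = Wholesaler("W-9", "Birch Supply", "12 Dock St", discount=0.15)
o = Order("SO-1002", customer=w, items=[OrderItem("P-10", 100)])
print(isinstance(o.customer, Customer), len(w.orders))  # True 1
```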

The ELDM is represented with a class model, but the data may be expressed in different forms for storage and exchange. Generally, data exchanged between services are expressed in XML. Data stored in databases are most often in relational tables. Data warehouses have specialized data structures to support retrieval and analysis. Transformations between these and other representations can be modeled with tools based on the OMG CWM.

An ELDM must represent everything that may be shared through message exchanges, event notices, or queries. It may also include the local data of service units, since those data are related to the master data of the service unit and to the data it replicates from other service units. This may require thousands of classes, but not all these classes are implemented in a single database. A database stores the subset of the data that are of interest to the associated service unit or group of service units.

For example, in a manufacturing enterprise, incoming orders are captured by the order management service unit along with related sales and customer data. The order requirements are included in the delegation to the order fulfillment service unit, but without the sales and customer detail. The order fulfillment service unit will add data related to scheduling the order and may translate sales order specifications to production specifications before submitting the production request. During production, the production service unit may add data regarding the specific configuration, component serial numbers, or batches applicable to each order. So, as the order proceeds through fulfillment and closure of the sale, each of the service units will receive the data required to do their work and add data relevant to their work. Some data will ultimately be added to the completed master order in the sales organization and other data will be held as local, working data in the participating service units.

To get a comprehensive report on a customer order, it might be necessary to query several databases that hold data related to that order. When such reporting, analysis, or archiving occurs, the ELDM ensures that the data from different sources can be combined to produce a consistent representation and the meaning will be generally understood.
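Assuming each service unit's contribution has already been translated into ELDM attribute names, assembling the comprehensive report can be sketched as a merge keyed on the order number that rejects conflicting values and remembers which unit supplied each attribute. The unit names and attributes are hypothetical.

```python
from __future__ import annotations

# Hypothetical fragments of one order held by different service units,
# already expressed in ELDM attribute names.
fragments = {
    "order-management": {"Order.orderNo": "SO-1001", "Order.customer": "C-77",
                         "Order.salesRep": "ana"},
    "order-fulfillment": {"Order.orderNo": "SO-1001", "Order.scheduledShip": "2024-05-03"},
    "production": {"Order.orderNo": "SO-1001", "Order.batch": "B-553"},
}


def combine(order_no: str, sources: dict[str, dict[str, str]]) -> dict[str, str]:
    """Merge every source's data for one order into a single record."""
    combined: dict[str, str] = {}
    provenance: dict[str, str] = {}  # attribute -> service unit that supplied it
    for unit, data in sources.items():
        if data.get("Order.orderNo") != order_no:
            continue
        for attr, value in data.items():
            # A shared model makes conflicts detectable rather than silent.
            if attr in combined and combined[attr] != value:
                raise ValueError(f"{attr}: {unit} disagrees with {provenance[attr]}")
            combined[attr] = value
            provenance.setdefault(attr, unit)
    return combined


report = combine("SO-1001", fragments)
for attr, value in report.items():
    print(attr, value)
```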

Enterprise Transition

The DMA suggests a major, technical restructuring associated with transformation of the enterprise. Here we take a brief look at key aspects of that transformation from a DMA perspective. The topics below are presented in approximate chronological order. See also the value delivery maturity model in Appendix A.

Messaging Infrastructure

The agile enterprise messaging infrastructure has been discussed in Chapter 3. It is fundamental to the integration of services. The infrastructure must include a notification broker to direct event notices to subscribers. Here we are concerned with communicating changes to data in databases. These change notices may be needed to keep copies of data consistent with the master data, they may be needed to monitor status or measurements as they change, or they may be needed to initiate some action. More about the events and the notification broker is discussed in Chapter 8.

Requests for data transfers, the data transfers, and notices of updates should have a standard message format that is independent of the particular database technology.
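A technology-neutral change notice might look like the following sketch: a small record expressed in ELDM terms and serialized as JSON, so that neither the publisher's nor the subscriber's database technology shows through. The schema and field names are assumptions, not a standard format.

```python
from __future__ import annotations

import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

OPERATIONS = {"create", "update", "delete"}


@dataclass(frozen=True)
class ChangeNotice:
    """Technology-neutral notice that master data changed (hypothetical schema)."""
    entity: str                 # ELDM entity, e.g., "Customer"
    key: str                    # identifier of the changed instance
    operation: str              # one of OPERATIONS
    changed: dict[str, object]  # ELDM attribute -> new value
    source_unit: str            # service unit that owns the master data
    occurred: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    notice_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def __post_init__(self) -> None:
        if self.operation not in OPERATIONS:
            raise ValueError(f"unknown operation: {self.operation}")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @staticmethod
    def from_json(text: str) -> ChangeNotice:
        return ChangeNotice(**json.loads(text))


n = ChangeNotice("Customer", "C-77", "update", {"Customer.address": "9 Elm St"},
                 "customer-mgmt")
print(n.to_json())
```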

Knowledge Management Service Unit

Work can start early to define interest groups, support conversations, capture documents, and support wiki authoring. There is minimal investment required in a supporting infrastructure, and the focus can be on providing incentives to contribute and web pages for collaboration.

Enterprise Logical Data Model

The ELDM need not represent all of the data of the enterprise. The most important data are those exchanged in messages, including event notices, data queries, and updates. The basic format of these messages must be defined. Data that are created and used within a service unit are less important, but individual service units must extend the ELDM to integrate their local data with the shared data. Within the shared-data subset, the initial focus should be on the data used by shared services. Consideration also must be given to business metadata.

The starting point should be industry standards, where available. Purchase of an enterprise data model should be considered since it will provide a major step forward.

Preferred Database Management System

A database management system that supports triggers is an important component. Implementation hiding of service units includes the database and its management system, but trigger support for event generation will add significant value. In some cases, it will be worthwhile to consider conversion of the database of a legacy system to support triggers, particularly if that legacy system is to be “wrapped” in a capability service unit interface.
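As a concrete illustration of trigger-based event generation, the sketch below uses SQLite (through Python's standard `sqlite3` module) to record every insert and update of a hypothetical customer table in a change-event table. This is the pattern often called a transactional outbox: a separate publisher, not shown, would read the events and forward them to the notification broker. A delete trigger would follow the same form.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (
    account_no TEXT PRIMARY KEY,
    name       TEXT NOT NULL,
    address    TEXT
);

-- Outbox of change events; a separate publisher would forward these
-- to the notification broker.
CREATE TABLE change_event (
    seq        INTEGER PRIMARY KEY AUTOINCREMENT,
    entity     TEXT NOT NULL,
    entity_key TEXT NOT NULL,
    operation  TEXT NOT NULL,
    logged_at  TEXT NOT NULL DEFAULT (datetime('now'))
);

CREATE TRIGGER customer_insert AFTER INSERT ON customer
BEGIN
    INSERT INTO change_event (entity, entity_key, operation)
    VALUES ('Customer', NEW.account_no, 'create');
END;

CREATE TRIGGER customer_update AFTER UPDATE ON customer
BEGIN
    INSERT INTO change_event (entity, entity_key, operation)
    VALUES ('Customer', NEW.account_no, 'update');
END;
""")

conn.execute("INSERT INTO customer VALUES ('C-77', 'Acme', '1 Main St')")
conn.execute("UPDATE customer SET address = '9 Elm St' WHERE account_no = 'C-77'")
events = conn.execute(
    "SELECT entity, entity_key, operation FROM change_event ORDER BY seq").fetchall()
print(events)  # [('Customer', 'C-77', 'create'), ('Customer', 'C-77', 'update')]
```

Because the event row is written in the same transaction as the data change, a notice cannot be lost or emitted for a change that was rolled back.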

Opportunistic Service Unit Development

Service units should be developed based on opportunities for return on investment or competitive advantage. Some of these may be implemented by wrapping legacy systems with service unit interfaces; large applications may be configured as a virtual cluster of service units with a shared database. Each service unit will include supporting services for reporting status, responding to queries, requesting change notices, and so on. Consistent protocols should be defined for these supporting services for economies of development and integration of service units (see Chapter 9 regarding business transformation management). These supporting services may be implemented in phases depending upon the needs of services they support.

Data Warehouse Upgrade

If the enterprise has a data warehouse capability, it should be upgraded to accept service event notices as they occur rather than in batch. Priorities should be defined for tracking the events that provide the greatest potential business value. Existing systems as well as service units can be updated to generate event notices based on the priorities.

Analytics Service Unit

An initial analytics service unit organization should be established to provide analytics expertise, set priorities, and identify available sources of data. Initiatives should start small to implement some tools and develop working relationships, management appreciation of analytics, and insights on potential strategic opportunities. Development of an analytics capability is both a technical and a cultural challenge.

Data Directory

As service units are established, there will be an increasing need for information about the master data each of them supports and the service units that replicate those master data. Additional metadata will also become increasingly important. A data directory service unit should be developed as the need emerges.

Value Delivery Model

Development of an enterprise VDM model requires a substantial investment. Value delivery modeling should start with a segment of a single line of business where there is a recognized need for improvement with measurable business value. The scope of the VDM model should evolve over time, driven by business value opportunities. The long-term goal is to develop and maintain a VDM model that represents the current state of the business and is the basis for modeling potential future states of the business.

Value Measurements

Output of value measurements should be part of the development of shared services. Priority measurements should be driven by customer value priorities. Measurement should focus on the results of business processes aligned with VDM model capability methods. Activity-level measurements may be developed later.

Although the current business structure may not be ideal, the VDM model collaborations should align with the current business process hierarchy so that VDM model measurements will reflect the actual performance of business operations. It is important for the VDM model to represent the value streams being measured so that the model provides the context for the measurement data.

Model-Based Dashboards

Management dashboards should be developed to provide managers with access to up-to-date measurements and events. Measurements should be presented in the context of the VDM model and other models. The VDM model should provide support for viewing the broader business design context and related capabilities and measurements.

Investment in dashboard capabilities should proceed when there is a critical mass of measurements and events that are of interest to business leaders. Individual business leaders should not be introduced to dashboards unless the dashboards provide data of interest to them that are more timely, accurate, and complete than the information provided by their supporting staff.

Moving Forward

Security is a fundamental concern for data management, and appropriate security mechanisms must be part of the data management infrastructure. Security is the focus of the next chapter.