Chapter 5. Integer Security

1. Douglas A. Gwyn is retired from the U.S. Army and is an Emeritus Member of INCITS PL22.11. David Keaton is a senior member of the technical staff in the CERT Program of Carnegie Mellon’s Software Engineering Institute (SEI) and chair of INCITS PL22.11. David Svoboda is a member of the technical staff for the SEI’s CERT.

Everything good is the transmutation of something evil: every god has a devil for a father.

—Friedrich Nietzsche, Sämtliche Werke: Kritische Studienausgabe

5.1. Introduction to Integer Security

The integers are formed by the natural numbers including 0 (0, 1, 2, 3, . . .) together with the negatives of the nonzero natural numbers (–1, –2, –3, . . .). Viewed as a subset of the real numbers, they are numbers that can be written without a fractional or decimal component and fall within the set {. . . –2, –1, 0, 1, 2, . . .}. For example, 65, 7, and –756 are integers; 1.6 and 1½ are not integers.

Integers represent a growing and underestimated source of vulnerabilities in C programs, primarily because boundary conditions for integers, unlike other boundary conditions in software engineering, have been intentionally ignored. Most programmers emerging from colleges and universities understand that integers have fixed limits. However, because these limits were deemed sufficient or because testing the results of each arithmetic operation was considered prohibitively expensive, violations of integer boundary conditions have gone unchecked for the most part in commercial software.

When developing secure systems, we cannot assume that a program will operate normally, given a range of expected inputs, because attackers are looking for input values that produce an abnormal effect. Digital integer representations are, of course, imperfect. A software vulnerability may result when a program evaluates an integer to an unexpected value (that is, a value other than the one obtained with pencil and paper) and then uses the value as an array index, size, or loop counter.

Because integer range checking has not been systematically applied in the development of most C software systems, security flaws involving integers will definitely exist, and some of them will likely cause vulnerabilities.

5.2. Integer Data Types

An integer type provides a model of a finite subset of the mathematical set of integers. The value of an object having integer type is the mathematical value attached to the object. The representation of a value for an object having integer type is the particular encoding of the value in the bit pattern contained in the storage allocated for the object.

C provides a variety of standard integer types (with keyword-specified names) and allows implementations to define other extended integer types (with non-keyword reserved identifier names); either can be included in type definitions in standard headers.

The standard integer types include all the well-known integer types that have existed from the early days of Kernighan and Ritchie C (K&R C). These integer types allow a close correspondence with the underlying machine architecture. Extended integer types are defined in the C Standard to specify integer types with fixed constraints.

Each integer-type object in C requires a fixed number of bytes of storage. The constant expression CHAR_BIT from the <limits.h> header gives the number of bits in a byte, which must be at least 8 but might be greater depending on the specific implementation. With the exception of the unsigned char type, not all of the bits are necessarily available to represent the value; unused bits are called padding. Padding is allowed so that implementations can accommodate hardware quirks, such as skipping over a sign bit in the middle of a multiple-word representation.

The number of nonpadding bits used to represent a value of a given type is called the width of that type, which we denote by w(type) or sometimes just N. The precision of an integer type is the number of bits it uses to represent values, excluding any sign and padding bits.

For example, on architectures such as x86-32 where no padding bits are used, the precision of signed types is w(type) – 1, while, for unsigned types, the precision equals w(type).

There are other ways to represent integers, such as arbitrary-precision or bignum arithmetic. Those methods dynamically allocate storage as required to accommodate the widths necessary to correctly represent the values. However, the C Standard does not specify any such scheme, and, unlike C++, built-in operators such as + and / cannot be overloaded and used in expressions containing such abstract data types. Applications such as public-key encryption generally use such a scheme to get around the limitations of C’s fixed sizes.

The standard integer types consist of a set of signed integer types and corresponding unsigned integer types.

Unsigned Integer Types

C requires that unsigned integer types represent values using a pure binary system with no offset. This means that the value of the binary number is ![]() . The rightmost bit has the weight 20, the next bit to the left has the weight 21, and so forth. The value of the binary number is the sum of all the set bits. This means that all-zero value bits always represent the value 0, and the value 1 is represented by all zeros except for a single 1 bit, which is the least significant bit. Unsigned integer types represent values from 0 through an upper limit of 2w(type) − 1.

. The rightmost bit has the weight 20, the next bit to the left has the weight 21, and so forth. The value of the binary number is the sum of all the set bits. This means that all-zero value bits always represent the value 0, and the value 1 is represented by all zeros except for a single 1 bit, which is the least significant bit. Unsigned integer types represent values from 0 through an upper limit of 2w(type) − 1.

All bitwise operators (|, &, ^, ~) treat the bits as pure binary, as shown in Example 5.1.

Example 5.1. Bitwise Operators: 13 ^ 6 = 11

1 1 0 1 = 13

^ 0 1 1 0 = 6

--------------

1 0 1 1 = 11

Unsigned integers are the natural choice for counting things. The standard unsigned integer types (in nondecreasing length order) are

1. unsigned char

2. unsigned short int

4. unsigned long int

5. unsigned long long int

The keyword int can be omitted unless it is the only integer-type keyword present.

Nondecreasing length order means that, for example, unsigned char cannot be longer than unsigned long long int (but can be the same size). The many different widths reflect existing hardware; as time progressed, registers also became larger, so longer and longer types were introduced as needed.

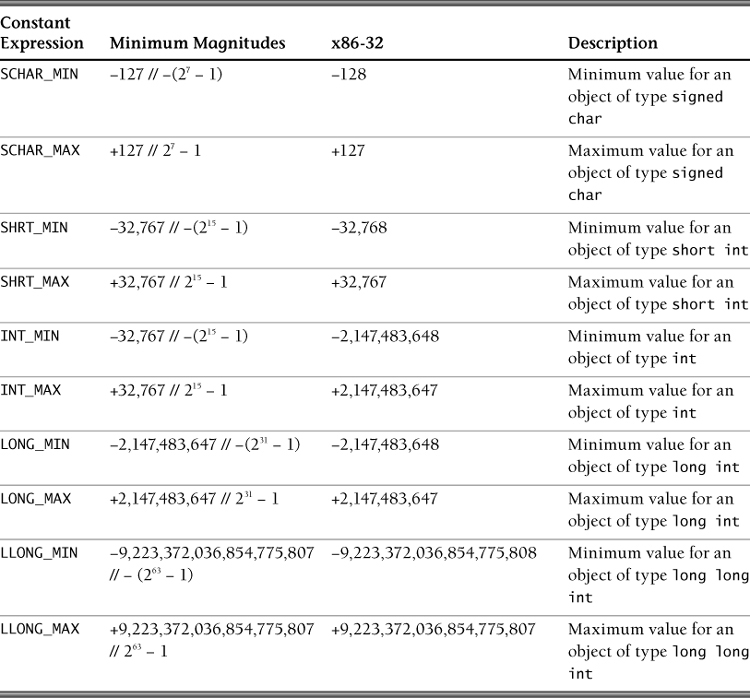

Compiler- and platform-specific integral limits are documented in the <limits.h> header file. Familiarize yourself with these limits, but remember that these values are platform specific. For portability, use the named constants and not the actual values in your code. The “Minimum Magnitudes” column in Table 5.1 identifies the guaranteed portable range for each unsigned integer type, that is, the smallest maximum value allowed by an implementation. These magnitudes are replaced by implementation-defined magnitudes with the same sign, such as those shown for the x86-32 architecture.

Because these are unsigned values, the minimum magnitude is always 0, and no constants are defined for it.

Minimum widths for the standard unsigned types are unsigned char (8), unsigned short (16), unsigned int (16), unsigned long (32), and unsigned long long (64).

C added a first-class Boolean type. An object declared type _Bool is large enough to store the values 0 and 1 and acts as unsigned. When any scalar value is converted to _Bool, the result is 0 if the value compares equal to 0; otherwise, the result is 1.

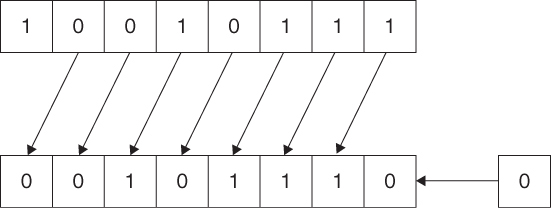

Wraparound

A computation involving unsigned operands can never overflow, because a result that cannot be represented by the resulting unsigned integer type is reduced modulo the number that is 1 greater than the largest value that can be represented by the resulting type. For addition and multiplication, this is the same as pretending that there are additional high-order (most significant) bits appended to make sufficient room for the representation and then discarding these bits.

You can visualize wraparound using the 4-bit unsigned integers wheel shown in Figure 5.1.

Incrementing a value on the wheel produces the value immediately clockwise from it. Note that incrementing an unsigned integer at its maximum value (15) results in the minimum value for that type (0). This is an example of wraparound, shown in Example 5.2.

1 unsigned int ui;

2 ui = UINT_MAX; // e.g., 4,294,967,295 on x86-32

3 ui++;

4 printf("ui = %u

", ui); // ui = 0

5 ui = 0;

6 ui--;

7 printf("ui = %u

", ui); // ui = 4,294,967,295 on x86-32

An unsigned integer expression can never evaluate to less than zero because of wraparound. Therefore, it is possible to code tests in C that are always true or always false. In Example 5.3, i can never take on a negative value, so this loop will never terminate.

Example 5.3. Unsigned Integer Expression and Wraparound

for (unsigned i = n; --i >= 0; ) // will never terminate

Such tests are probably coding errors, but this wraparound-induced infinite loop is not considered to be an error according to the language definition. Whether it is an error from the intended algorithm’s point of view depends on the algorithm; for counting things (++n), it is certainly an error. If you have counted 32,768 events, you probably do not expect the code to act as if no events occurred after the next event is registered.

This type of software failure occurred on Saturday, December 25, 2004, when Comair halted all operations and grounded 1,100 flights after a crash of its flight-crew-scheduling software. The software failure was the result of a 16-bit counter that limits the number of changes to 32,768 in any given month. Storms earlier in the month caused many crew reassignments, and the 16-bit value was exceeded.

To avoid such unplanned behavior, it is important to check for wraparound either before performing the operation that might cause it or (sometimes) afterward. The limits from <limits.h> are helpful, but naïve use of them does not work. Example 5.4 shows a check for wraparound.

Example 5.4. Testing for Wraparound

1 unsigned int i, j, sum;

2 if (sum + i > UINT_MAX) // cannot happen, because sum + i wraps

3 too_big();

4 else

5 sum += i;

You must implement your test in a manner that eliminates the possibility of wraparound:

1 if (i > UINT_MAX - sum) // much better!

2 too_big();

3 else

4 sum += i;

The same problem exists when checking against the minimum unsigned value 0:

1 if (sum - j < 0) // cannot happen, because sum – j wraps

2 negative();

3 else

4 sum -= j;

The proper test is as follows:

1 if (j > sum) // correct

2 negative();

3 else

4 sum -= j;

Unless the exact-width types such as uint32_t from <stdint.h> are used (discussed in the section “Other Integer Types”), the width used in a wraparound depends on the implementation, which means different results on different platforms. Unless the programmer takes this into account, a portability error will likely occur.

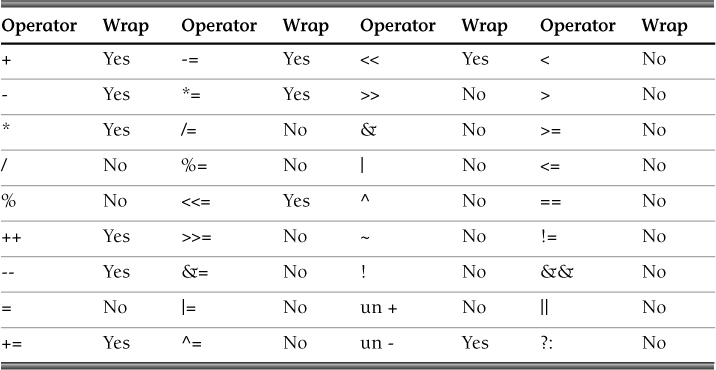

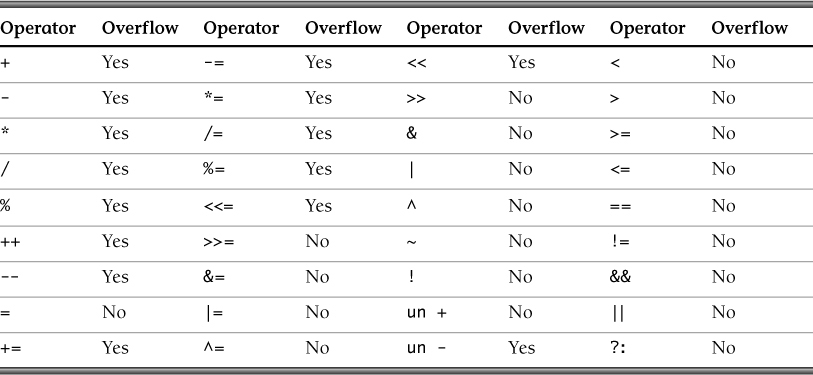

Table 5.2 indicates which operators can result in wrapping.

Signed Integer Types

Signed integers are used to represent positive and negative values, the range of which depends on the number of bits allocated to the type and the representation.

In C, each unsigned integer type, excluding the type _Bool, has a corresponding signed integer type that occupies the same amount of storage.

Standard signed integer types include the following types, in nondecreasing length order (for example, long long int cannot be shorter than long int):

1. signed char

2. short int

3. int

4. long int

5. long long int

Except for char, signed can be omitted (unadorned char acts like either unsigned char or signed char, depending on the implementation and, for historical reasons, is considered a separate type). int can be omitted unless it is the only keyword present.

Furthermore, all sufficiently small nonnegative values have the same representation in corresponding signed and unsigned types. One bit, which is called the sign bit and is treated as the highest-order bit, indicates whether the represented value is negative. The C Standard permits negative values to be represented as sign and magnitude, one’s complement, or two’s complement.

Sign and Magnitude

The sign bit represents whether the value is negative (sign bit set to 1) or positive (bit set to 0), and the other value (nonpadding) bits represent the magnitude of the value in pure binary notation (same as for unsigned). To negate a sign and magnitude value, just toggle the sign bit.

For example, 0000101011 equals 43 in pure binary notation or sign and magnitude. To negate this value, simply set the sign bit: 1000101011 = –43.

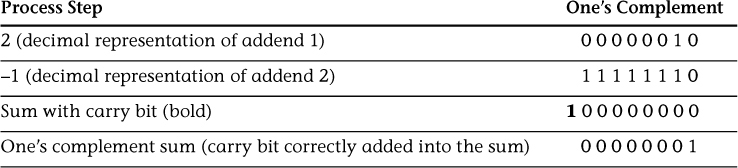

One’s Complement

In one’s complement representation, the sign bit is given the weight –(2N–1 – 1), and the other value bits have the same weights as for unsigned. For example, 1111010100 equals –43 in one’s complement representation. Given a width of 10 bits, the sign bit is given the weight –(29 – 1) or –511. The remaining bits equal 468, so 468 – 511 = –43.

To negate a one’s complement value, toggle each bit (including the sign bit).

Two’s Complement

In two’s complement representation, the sign bit is given the weight –(2N–1), and the other value bits have the same weights as for unsigned. For example, 1111010101 equals –43 in two’s complement representation. Given a width of 10 bits, the sign bit is given the weight –(29) or –512. The remaining bits equal 469, so 469 – 512 = –43.

All three methods are in use on various platforms. However, on desktop systems, two’s complement is most prevalent.

To negate a two’s complement value, first form the one’s complement negation and then add 1 (with carries as required).

Table 5.3 shows the binary and decimal representations for illustrative values of an 8-bit two’s complement (signed) integer type with no padding (that is, N = 8). Starting from the rightmost bit int, the binary representation and increment i from 0 to N, the weight of each bit is 2i, except for the leftmost bit, whose weight is –2i.

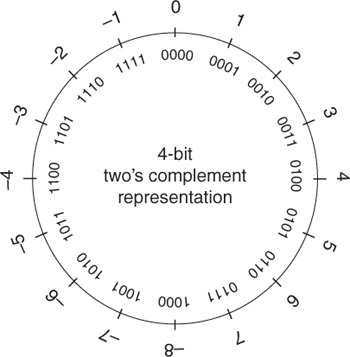

Figure 5.2 shows the two’s complement representation for 4-bit signed integers.

Note that incrementing a 4-bit two’s complement signed integer at its maximum value (7) results in the minimum value for that type (–8).

Integer Representations Compared

Table 5.4 shows the sign and magnitude, one’s complement, and two’s complement representations for some interesting values assuming a width of 10 and ignoring padding.

Sign and magnitude and one’s complement have two representations for the mathematical value 0: normal zero and negative zero. The negative zero representation might be produced by logical operations but is not allowed for the result of any arithmetic operation unless one of the operands had a negative zero representation.

On a computer using two’s complement arithmetic, a signed integer ranges from –2N–1 through 2N–1 – 1. When one’s complement or signed-magnitude representations are used, the lower bound is –2N–1 + 1, and the upper bound remains the same.

Signed Integer Ranges

The “Minimum Magnitudes” column in Table 5.5 identifies the guaranteed portable range for each standard signed integer type. They are replaced by implementation-defined magnitudes with the same sign, for example, those shown for the x86-32 architecture.

C-Standard–mandated minimum widths for the standard signed types are signed char (8), short (16), int (16), long (32), and long long (64).

Actual widths for a given implementation may be inferred from the maximum representable values defined in <limits.h>. The sizes (number of storage bytes) of these types of objects can be determined by sizeof(typename); the size includes padding (if any).

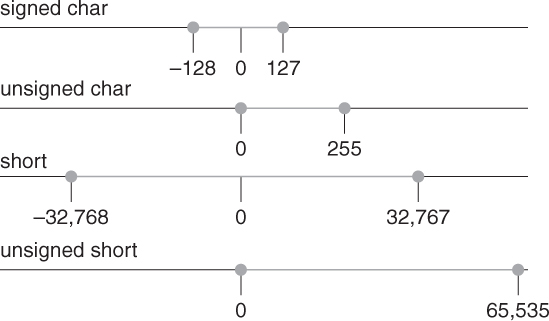

The minimum and maximum values for an integer type depend on the type’s representation, signedness, and width. Figure 5.3 shows the ranges of integer types for x86-32.

Integer Overflow

Overflow occurs when a signed integer operation results in a value that cannot be represented in the resulting type. Signed integer overflow is undefined behavior in C, allowing implementations to silently wrap (the most common behavior), trap, or both. Because signed integer overflow produces a silent wraparound in most existing C compilers, some programmers assume that this is a well-defined behavior.

(Source: From xkcd.com, available under a Creative Commons Attribution-Noncommercial license)

The following code shows the consequences of signed integer overflows on platforms that silently wrap. The signed integer i is assigned its maximum value of 2,147,483,647 and then incremented. This operation results in an integer overflow, and i is assigned the value –2,147,483,648 (the minimum value for an int). The result of the operation (2,147,483,647 + 1 = –2,147,483,648) clearly differs from the mathematical result.

1 int i;

2 i = INT_MAX; // 2,147,483,647

3 i++;

4 printf("i = %d

", i); /* i = -2,147,483,648 */

Integer overflows also occur when a signed integer already at its minimum value is decremented:

1 i = INT_MIN; // -2,147,483,648;

2 i--;

3 printf("i = %d

", i); /* i = 2,147,483,647 */

Conforming C compilers can deal with undefined behavior in many ways, such as ignoring the situation completely (with unpredictable results), translating or executing the program in a documented manner characteristic of the environment (with or without the issuance of a diagnostic message), or terminating a translation or execution (with the issuance of a diagnostic message). Because compilers are not obligated to generate code for undefined behaviors, those behaviors are candidates for optimization. By assuming that undefined behaviors will not occur, compilers can generate code with better performance characteristics. For example, GCC version 4.1.1 optimizes out integer expressions that depend on undefined behavior for all optimization levels.

The negative of two’s complement most negative value for a given type cannot be represented in that type because two’s complement representation is asymmetrical, with the value 0 being represented as a “positive” number. This asymmetrical representation has caused errors such as the following:

// undefined or wrong for the most negative value

#define abs(n) ((n) < 0 ? -(n) : (n))

A similar error occurs, for example, when converting a character string to an integer using the following code:

01 int my_atoi(const unsigned char *s) {

02 _Bool neg;

03 int val = 0;

04 if (neg = *s == '-')

05 ++s;

06 while (isdigit(*s)) {

07 if (val > INT_MAX/10) // this check is correct

08 err: report_error("atoi overflow"); // assumed to not return

09 else

10 val *= 10;

11 int i = *s++ - '0'; // C Standard requires *s - '0' to work

12 if (i > INT_MAX - val) // this check is correct

13 goto err;

14 val += i;

15 }

16 return neg ? -val : val;

17 }

The problem with this solution is that for a two’s complement implementation, a valid negative value (INT_MIN, for example, –32,768) incorrectly reports an overflow. A correct solution must take into account that the positive range and the negative range of int may be different.

Table 5.6 indicates which operators can result in overflow.

Character Types

The CERT C Secure Coding Standard [Seacord 2008], “INT07-C. Use only explicitly signed or unsigned char type for numeric values,” recommends using only signed char and unsigned char types for the storage and use of small numeric values (that is, values between the range of SCHAR_MIN and SCHAR_MAX, or 0 and UCHAR_MAX, respectively) because that is the only portable way to guarantee the signedness of the character types. Plain char should never be used to store numeric values because compilers have the latitude to define char to have the same range, representation, and behavior as either signed char or unsigned char.

In the following example, the char type variable c may be signed or unsigned:

1 char c = 200;

2 int i = 1000;

3 printf("i/c = %d

", i/c);

The value of the initializer 200 (which has type signed int) is not representable in the (signed) char type (which is undefined behavior). The compiler should diagnose this (but is not required to). Many compilers will, with or without a warning message, convert the 200 to –56 by the standard modulo-word-size rule for converting unsigned to signed. Assuming 8-bit two’s complement character types, this code may either print out i/c = 5 (unsigned) or i/c = -17 (signed). In the signed case, the value 200 exceeds SCHAR_MAX, which is +127 in this instance.

In addition, the bit patterns of 8-bit unsigned 200 and 8-bit two’s complement (signed) –56 are the same; however, using one’s complement, the rule would still produce the value –56, but the bit pattern would be different.

It is much more difficult to reason about the correctness of a program when you do not know whether the integers are signed or unsigned. Declaring the variable c unsigned char makes the subsequent division operation independent of the signedness of char, and it consequently has a predictable result:

1 unsigned char c = 200;

2 int i = 1000;

3 printf("i/c = %d

", i/c);

Data Models

A data model defines the sizes assigned to standard data types for a given compiler. These data models are typically named using an XXXn pattern, where each X refers to a C type and n refers to a size (typically 32 or 64):

• ILP64: int, long, and pointer types are 64 bits wide.

• LP32: long and pointer are 32 bits wide.

• LLP64: long long and pointer are 64 bits wide.

The data model for x86-32, for example, is ILP32, as shown in Table 5.7.

Other Integer Types

C also defines additional integer types in the <stdint.h>, <inttypes.h>, and <stddef.h> standard headers. These types include the extended integer types, which are optional, implementation-defined, fully supported extensions that, along with the standard integer types, make up the general class of integer types. Identifiers such as whatever_t defined in the standard headers are all typedefs, that is, synonyms for existing types—not new types. (Despite the name, typedefs never define a new type.)

size_t

size_t is the unsigned integer type of the result of the sizeof operator and is defined in the standard header <stddef.h>. Variables of type size_t are guaranteed to be of sufficient precision to represent the size of an object. The limit of size_t is specified by the SIZE_MAX macro.

K&R C (the early dialect of C described by Brian Kernighan and Dennis Ritchie in The C Programming Language) did not provide size_t. The C standards committee introduced size_t to eliminate a portability problem because, in some cases, unsigned int is too small to represent the size of the address space and sometimes unsigned long long is too large (and consequently inefficient).

The portable and efficient way to declare a variable that contains a size is

size_t n = sizeof(thing);

Similarly, the portable and efficient way to define a function foo that takes a size argument is

void foo(size_t thing);

Functions with parameters of type size_t often have local variables that count up to or down from that size and index into arrays, and size_t is often a good type for those variables. Similarly, variables that represent the count of elements in an array should be declared as size_t, particularly for character arrays in which the element count can be as large as the largest object that can be allocated in the system.

ptrdiff_t

ptrdiff_t is the signed integer type of the result of subtracting two pointers and is defined in the standard header <stddef.h>.

When two pointers are subtracted, the result is the difference of the subscripts of the two array elements. The size of the result is implementation defined, and its type (a signed integer type) is ptrdiff_t. For example, given the following declarations:

T *p, *q;

for any nonvoid type T, you can write expressions such as

d = p - q;

If you declare d as

ptrdiff_t d;

the preceding assignment should behave properly when compiled with any standard C compiler. If you declare d as some other integer type, such as

int d;

the assignment might provoke a warning from the compiler, or worse, silently truncate the assigned value at runtime, for example, if ptrdiff_t were an alias for long long int for that implementation.

The lower and upper limits of ptrdiff_t are defined by PTRDIFF_MIN and PTRDIFF_MAX respectively. The minimum acceptable limits defined by the C Standard are

PTRDIFF_MIN –65535

PTRDIFF_MAX +65535

These limits require that any 16-bit implementation must have at least a 17-bit ptrdiff_t to represent all possible differences of 16-bit pointers.

Although the standard does not explicitly guarantee that sizeof(ptrdiff_t) equals sizeof(size_t), it typically is the case on 32-bit or 64-bit implementations. This may seem somewhat surprising because a signed integer type may not be able to represent the difference between two pointers on such a system.

For example, on a system that supports objects up to 232 – 1 bytes, the sizeof operator can yield values only from 0 to 232 – 1, so 32 bits are sufficient. However, pointer subtraction for pointers to elements of an array of 232 – 1 bytes could yield values from –(232 – 1) to +(232 – 1). Consequently, ptrdiff_t would have to be at least 33 bits to represent all possible differences. However, C allows an implementation in which ptrdiff_t is 32 bits by making it undefined behavior if the result of subtracting two pointers is not representable in an object of type ptrdiff_t. In most cases, a ptrdiff_t overflow on a two’s complement machine with quiet wraparound on overflow does not affect the result of the operation. To program securely, however, you should carefully consider the consequences of your operation when the subtraction of two pointers may result in such large values.

intmax_t and uintmax_t

intmax_t and uintmax_t are integer types with the greatest width and can represent any value representable by any other integer types of the same signedness. Among other applications, intmax_t and uintmax_t can be used for formatted I/O on programmer-defined integer types. For example, given an implementation that supports 128-bit unsigned integers and provides a uint_fast128_t type, a programmer may define the following type:

typedef uint_fast128_t mytypedef_t;

This creates a problem with using these types with formatted output functions, such as printf(), and formatted input functions, such as scanf(). For example, the following code prints the value of x as an unsigned long long, even though the value is of a programmer-defined integer type:

mytypedef_t x;

printf("%llu", (unsigned long long) x);

There is no guarantee that this code prints the correct value of x because x may be too large to represent as an unsigned long long.

The intmax_t and uintmax_t types are capable of representing any value representable by any other integer types of the same signedness, allowing conversion between programmer-defined integer types (of the same signedness) and intmax_t and uintmax_t:

01 mytypedef_t x;

02 uintmax_t temp;

03 /* ... */

04 temp = x; /* always secure*/

05

06 /* ... change the value of temp ... */

07

08 if (temp <= MYTYPEDEF_MAX) {

09 x = temp;

10 }

Formatted I/O functions can be used to input and output greatest-width integer typed values. The j length modifier in a format string indicates that the following d, i, o, u, x, X, or n conversion specifier will apply to an argument with type intmax_t or uintmax_t. The following code guarantees that the correct value of x is printed, regardless of its length, provided that mytypedef_t is an unsigned type:

mytypedef_t x;

printf("%ju", (uintmax_t) x);

In addition to programmer-defined types, there is no requirement that an implementation provide format length modifiers for implementation-defined integer types. For example, a machine with an implementation-defined 48-bit integer type may not provide format length modifiers for the type. Such a machine would still have to have a 64-bit long long, with intmax_t being at least that large. The CERT C Secure Coding Standard [Seacord 2008], “INT15-C. Use intmax_t or uintmax_t for formatted IO on programmer-defined integer types,” provides further examples of this use of the intmax_t and uintmax_t types.

intptr_t and uintptr_t

The C Standard does not guarantee that an integer type exists that is big enough to hold a pointer to an object. However, if such a type does exist, its signed version is called intptr_t, and its unsigned version is called uintptr_t.

Arithmetic on those types is not guaranteed to produce a useful value. For example, a pointer might be a “capability” (which is basically a nonsequential hash value or magic cookie), or it might be a segment descriptor and an offset within the segment. These are also reasons there might not even be an integer big enough to hold a pointer.

Therefore, you can do nothing portable with those types. The X/Open System Interface (XSI) flavor of POSIX requires that they exist but does not require their arithmetic to do anything meaningful in relation to pointers. They are in the C Standard to allow nonportable code, such as in device drivers, to be written.

Platform-Independent Integer Types for Controlling Width

C introduced integer types in <stdint.h> and <inttypes.h>, which provide typedefs to give programmers better control over width. These integer types are implementation defined and include the following types:

• int#_t, uint#_t, where # represents an exact width: for example, int8_t, uint24_t

• int_least#_t, uint_least#_t, where # represents a width of at least that value: for example, int_least32_t, uint_least16_t

• int_fast#_t, uint_fast#_t, where # represents a width of at least that value for fastest integer types: for example, int_fast16_t, uint_fast64_t

The <stdint.h> header also defines constant macros for the corresponding maximum (and for signed types, minimum) representable values for the extended types.

Platform-Specific Integer Types

In addition to the integer types defined in the C Standard types, vendors often define platform-specific integer types. For example, the Microsoft Windows API defines a large number of integer types, including __int8, __int16, __int32, __int64, ATOM, BOOLEAN, BOOL, BYTE, CHAR, DWORD, DWORDLONG, DWORD32, DWORD64, WORD, INT, INT32, INT64, LONG, LONGLONG, LONG32, LONG64, and so forth.

If you are a Windows programmer, you will frequently come across these types. You should use these types where appropriate but understand how they are defined, particularly when combined in operations with differently typed integers.

5.3. Integer Conversions

Converting Integers

Conversion is a change in the underlying type used to represent the value resulting from assignment, type casting, or computation.

Conversion from a type with one width to a type with a wider width generally preserves the mathematical value. However, conversion in the opposite direction can easily cause loss of high-order bits (or worse, when signed integer types are involved) unless the magnitude of the value is kept small enough to be represented correctly.

Conversions occur explicitly as the result of a cast or implicitly as required by an operation. For example, implicit conversions occur when operations are performed on mixed types or when a value needs to be converted to an appropriate argument type.

For example, most C programmers would not think twice before adding an unsigned char to a signed char and storing the result in a short int. In this case, the C compiler makes the necessary conversions so that the operation “just works” from the programmer’s point of view. While implicit conversions simplify programming, they can also lead to lost or misinterpreted data. This section describes how and when conversions are performed and identifies their pitfalls.

The C Standard rules define how C compilers handle conversions. These rules, which are described in the following sections, include integer promotions, integer conversion rank, and usual arithmetic conversions.

Integer Conversion Rank

Every integer type has an integer conversion rank that determines how conversions are performed.

The following rules for determining integer conversion rank are defined by the C Standard:

• No two different signed integer types have the same rank, even if they have the same representation.

• The rank of a signed integer type is greater than the rank of any signed integer type with less precision.

• The rank of long long int is greater than the rank of long int, which is greater than the rank of int, which is greater than the rank of short int, which is greater than the rank of signed char.

• The rank of any unsigned integer type is equal to the rank of the corresponding signed integer type, if any.

• The rank of any standard integer type is greater than the rank of any extended integer type with the same width.

• The rank of _Bool shall be less than the rank of all other standard integer types.

• The rank of char is equal to the rank of signed char and unsigned char.

• The rank of any extended signed integer type relative to another extended signed integer type with the same precision is implementation defined but still subject to the other rules for determining the integer conversion rank.

• For all integer types T1, T2, and T3, if T1 has greater rank than T2 and T2 has greater rank than T3, then T1 has greater rank than T3.

The C Standard recommends that the types used for size_t and ptrdiff_t should not have an integer conversion rank greater than that of signed long int, unless the implementation supports objects large enough to make this necessary.

Integer conversion rank provides a standard rank ordering of integer types that is used to determine a common type for computations.

Integer Promotions

An object or expression with an integer type whose integer conversion rank is less than or equal to the rank of int and unsigned int is promoted when used in an expression where an int or unsigned int is required. The integer promotions are applied as part of the usual arithmetic conversions.

The integer promotions preserve value, including sign. If all values of the original, smaller type can be represented as an int:

• The value of the original, smaller type is converted to int.

• Otherwise, it is converted to unsigned int.

The following code fragment illustrates the use of integer promotions:

1 int sum;

2 char c1, c2;

3 sum = c1 + c2;

The integer promotions rule requires the promotion of the values of each variable in this example (c1 and c2) to type int. The two values of type int are added, yielding a value of type int, and the result is stored in the integer-typed variable sum.

Integer promotions are performed to avoid arithmetic errors resulting from the overflow of intermediate values and to perform operations in a natural size for the architecture. In the following code segment, the value of c1 is multiplied by the value of c2, and the product is divided by the value of c3 according to operator precedence rules. The compiler has license to reorder the evaluation of these subexpressions in an actual implementation.

1 signed char cresult, c1, c2, c3;

2 c1 = 100;

3 c2 = 3;

4 c3 = 4;

5 cresult = c1 * c2 / c3;

If the expression is evaluated in this order, without integer promotions, the multiplication of c1 and c2 results in an overflow of the signed char type on platforms where signed char is represented by an 8-bit two’s complement value because the result of the operation exceeds the maximum value of signed char (+127) on these platforms. Because of integer promotions, however, c1, c2, and c3 are converted to int (which has a minimum range of –32,767 to +32,767), and the overall expression is evaluated successfully. The resulting value is then truncated and stored in cresult. Because the result is in the range of the signed char type, the truncation does not result in lost or misinterpreted data.

Another example is applying the bitwise complement operator ~ to a value of type unsigned char:

unsigned char uc = UCHAR_MAX; // 0xFF

int i = ~uc;

On the x86-32architecture, for example, uc is assigned the value 0xFF. When uc is used as the operand to the complement operator ~, it is promoted to signed int by zero-extending it to 32 bits:

0x000000FF

The complement of this value is

0xFFFFFF00

Consequently, this operation always results in a negative value of type signed int on this platform.

Usual Arithmetic Conversions

The usual arithmetic conversions are a set of rules that provides a mechanism to yield a common type (technically called a common real type) when

• Both operands of a binary operator are balanced to a common type

• The second and third arguments of the conditional operator (?:) are balanced to a common type

Balancing conversions involves two operands of different types. One or both operands may be converted.

Many operators that accept integer operands perform conversions using the usual arithmetic conversions, including *, /, %, +, -, <, >, <=, >=, ==, !=, &, ^, |, and the conditional operator ?:. After integer promotions are performed on both operands, the following rules are applied to the promoted operands:

1. If both operands have the same type, no further conversion is needed.

2. If both operands have signed integer types or both have unsigned integer types, the operand with the type of lesser integer conversion rank is converted to the type of the operand with greater rank. For example, if a signed int operand is balanced with a signed long operand, the signed int operand is converted to signed long.

3. If the operand that has unsigned integer type has rank greater than or equal to the rank of the other operand’s type, the operand with signed integer type is converted to the type of the operand with unsigned integer type. For example, if a signed int operand is balanced with an unsigned int operand, the signed int operand is converted to unsigned int.

4. If the type of the operand with signed integer type can represent all of the values of the type of the operand with unsigned integer type, the operand with unsigned integer type is converted to the type of the operand with signed integer type. For example, if a 64-bit two’s complement signed long operand is balanced with a 32-bit unsigned int operand, the unsigned int operand is converted to signed long.

5. Otherwise, both operands are converted to the unsigned integer type corresponding to the type of the operand with signed integer type.

Conversions from Unsigned Integer Types

Conversions of smaller unsigned integer types to larger unsigned integer types are always safe and are accomplished by zero-extending the value.

When expressions contain unsigned integer operands of different widths, the C Standard requires the result of each operation to have the type (and representation range) of the wider of its operands. Provided that the corresponding mathematical operation produces a result within the representable range of the result type, the resulting represented value will be that of the mathematical value.

What happens when the mathematical result value cannot be represented in the result type?

Unsigned, Loss of Precision

For unsigned integer types only, C specifies that the value is reduced modulo 2w(type), which is the number that is 1 greater than the largest value that can be represented by the resulting type.

Assuming the following declarations are compiled for an implementation where w(unsigned char) is 8:

unsigned int ui = 300;

unsigned char uc = ui;

the value 300 is reduced modulo 28, or 300 – 256 = 44, when uc is assigned the value stored in ui.

Conversion of a value in an unsigned integer type to a narrower width is well defined as being modulo the narrower width. This is accomplished by truncating the larger value and preserving the low-order bits. Data is lost if the value cannot be represented in the new type. If the programmer does not anticipate this possibility, a programming error or vulnerability could occur.

Conversions that occur between signed and unsigned integer types of any size can result in lost or misinterpreted data when a value cannot be represented in the new type.

Unsigned to Signed

When converting a large unsigned value to a signed type of the same width, the C Standard states that when the starting value is not representable in the new (signed) type:

• The result is implementation defined, or

• An implementation-defined signal is raised.

Minimally, dependence on implementation-defined behavior is a porting issue and, if unanticipated by the programmer, a likely source of errors.

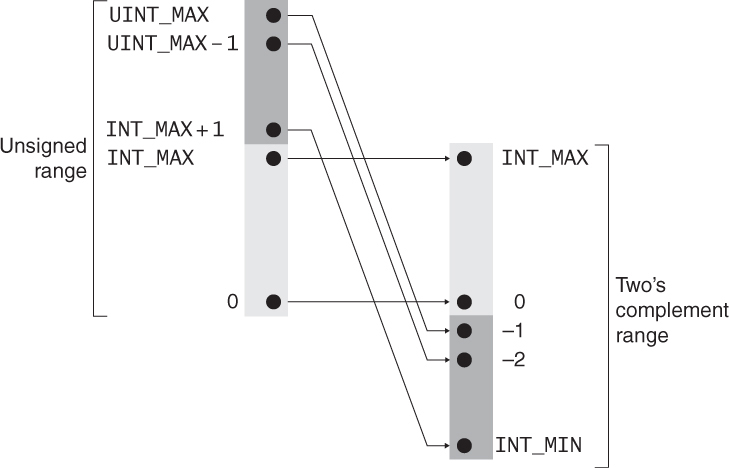

A common implementation is to not raise a signal but preserve the bit pattern, so no data is lost. In this case, the high-order bit becomes the sign bit. If the sign bit is set, both the sign and magnitude of the value change. For example, if the unsigned int value is UINT_MAX –1, as shown in Figure 5.4, the corresponding signed value is –2.

Type range errors, including loss of data (truncation) and loss of sign (sign errors), can occur when converting from an unsigned type to a signed type. When a large unsigned integer is converted to a smaller signed integer type, the value is truncated and the high-order bit becomes the sign bit. The resulting value may be negative or positive depending on the value of the high-order bit following truncation. Data will be lost (or misinterpreted) if the value cannot be represented in the new type. If the programmer does not anticipate this possibility, a programming error or vulnerability could occur.

The following example results in a truncation error on most implementations:

1 unsigned long int ul = ULONG_MAX;

2 signed char sc;

3 sc = (signed char)ul; /* cast eliminates warning */

Ranges should be validated when converting from an unsigned type to a signed type. The following code, for example, can be used when converting from unsigned long int to a signed char:

1 unsigned long int ul = ULONG_MAX;

2 signed char sc;

3 if (ul <= SCHAR_MAX) {

4 sc = (signed char)ul; /* use cast to eliminate warning */

5 }

6 else {

7 /* handle error condition */

8 }

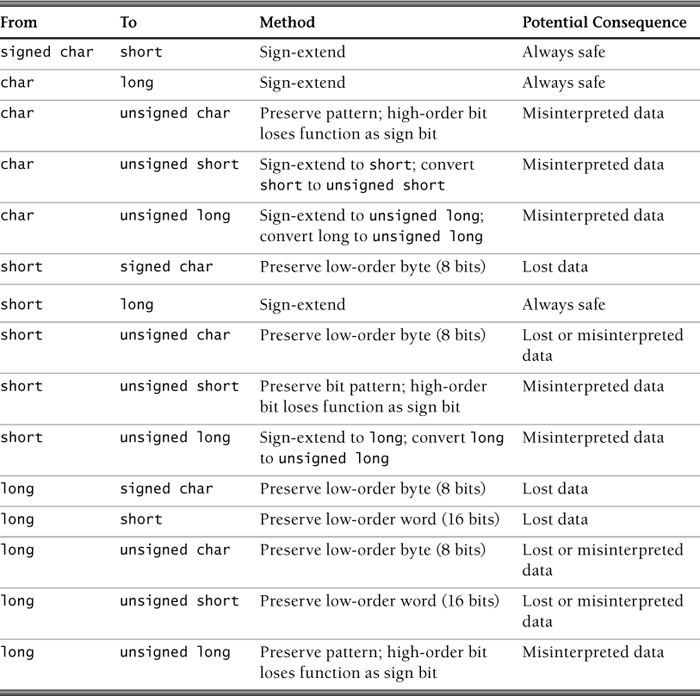

Table 5.8 summarizes conversions from unsigned integer types for the x86-32 architecture.

This table does not include signed/unsigned int (which is the same size as long on x86-32) and signed/unsigned long long.

Conversions from Signed Integer Types

Conversions of smaller signed integer types to larger signed integer types are always safe and are accomplished in two’s complement representation by sign-extending the value.

Signed, Loss of Precision

Conversion of a value in a signed integer type to a narrower width result is implementation defined or may raise an implementation-defined signal. A common implementation is to truncate to the smaller size. In this case, the resulting value may be negative or positive depending on the value of the high-order bit following truncation. Data will be lost (or misinterpreted) if the value cannot be represented in the new type. If the programmer does not anticipate this possibility, a programming error or vulnerability could occur.

For example, for an implementation in which the width of int is greater than the width of short, the following code has implementation-defined behavior or may raise an implementation-defined signal:

signed long int sl = LONG_MAX;

signed char sc = (signed char)sl; /* cast eliminates warning */

For a typical implementation in which sc is truncated, sc = -1.

Ranges should be validated when converting from a signed type to a signed type with less precision. The following code can be used, for example, to convert from a signed int to a signed char:

1 signed long int sl = LONG_MAX;

2 signed char sc;

3 if ( (sl < SCHAR_MIN) || (sl > SCHAR_MAX) ) {

4 /* handle error condition */

5 }

6 else {

7 sc = (signed char)sl; /* use cast to eliminate warning */

8 }

Conversions from signed types with greater precision to signed types with lesser precision require both the upper and lower bounds to be checked.

Signed to Unsigned

Where unsigned and signed integer types are operated on, the usual arithmetic conversions determine the common type, which will have width at least that of the widest type involved. C requires that if the mathematical result is representable in that width, that value is produced. When a signed integer type is converted to an unsigned integer type, the width (2N) of the new type is repeatedly added or subtracted to bring the result into the representable range.

When a signed integer value is converted to an unsigned integer value of equal or greater width and the value of the signed integer is not negative, the value is unchanged. When using two’s complement, for instance, the conversion to a greater width integer type is made by sign-extending the signed value.

For example, the following code compares the value of c (a signed integer) to the value of ui (an unsigned integer of greater size on x86-32):

1 unsigned int ui = ULONG_MAX;

2 signed char c = -1;

3 if (c == ui) {

4 puts("Why is -1 = 4,294,967,295???");

5 }

Because of integer promotions, c is converted to an unsigned int with a value of 0xFFFFFFFF, or 4,294,967,295. This is a good example of the inherent problem with comparing a negative and an unsigned value.

Type range errors, including loss of data (truncation) and loss of sign (sign errors), can occur when converting from a signed type to an unsigned type. The following code results in a loss of sign:

signed int si = INT_MIN;

unsigned int ui = (unsigned int)si; /* cast eliminates warning */

When a signed integer type is converted to an unsigned integer type of equal width, no data is lost because the bit pattern is preserved. However, the high-order bit loses its function as a sign bit. If the value of the signed integer is not negative, the value is unchanged. If the value is negative, the resulting unsigned value is evaluated as a large signed integer. For example, if the signed value is –2, as shown in Figure 5.5, the corresponding unsigned int value is UINT_MAX - 1.

Ranges should be validated when converting from a signed type to an unsigned type. For example, the following code can be used when converting from signed int to unsigned int:

1 signed int si = INT_MIN;

2 unsigned int ui;

3 if (si < 0) {

4 /* handle error condition */

5 }

6 else {

7 ui = (unsigned int)si; /* cast eliminates warning */

8 }

Table 5.9 summarizes conversions from signed integer types on the x86-32 platform. Conversions from signed int are omitted from the table because both int and long have 32-bit precision on this architecture.

Conversion Implications

Implicit conversions simplify C language programming. However, conversions have the potential for lost or misinterpreted data. Avoid conversions that result in

• Loss of value: conversion to a type where the magnitude of the value cannot be represented

• Loss of sign: conversion from a signed type to an unsigned type resulting in loss of sign

The only integer type conversion guaranteed to be safe for all data values and all conforming implementations is to a wider type of the same signedness.

5.4. Integer Operations

Integer operations can result in exceptional condition errors such as overflow, wrapping, and truncation. Exceptional conditions occur when the product of an operation cannot be represented in the type resulting from the operation. Table 5.10 indicates which exceptional conditions are possible when performing operations on integral values. Not included are errors caused by the usual arithmetic conversions that are applied when balancing operands to a common type.

As you can see from this table, most integer operations cause exceptional conditions, even in cases where no type coercion takes place. Of particular importance to security are operations on integer values that originate from untrusted sources and are used in any of the following ways:

• As an array index

• In any pointer arithmetic

• As a length or size of an object

• As the bound of an array (for example, a loop counter)

• As an argument to a memory allocation function

• In security-critical code

In the following sections we examine integer operations, discuss the possible resulting exceptional conditions, and review effective mitigation strategies. The high-level semantics of these integer operations (as defined by the C Standard), as well as the specific implementation of these operations on x86-32, are described. Precondition and postcondition tests for signed overflow and unsigned wrapping are described as appropriate.

Assignment

In simple assignment (=), the value of the right operand is converted to the type of the assignment expression and replaces the value stored in the object designated by the left operand. These conversions occur implicitly and can often be a source of subtle errors.

In the following program fragment, the int value returned by the function f() can be truncated when stored in the char and then converted back to int width before the comparison:

1 int f(void);

2 char c;

3 /* ... */

4 if ((c = f()) == -1)

5 /* ... */

In an implementation in which “plain” char has the same range of values as unsigned char (and char is narrower than int), the result of the conversion cannot be negative, so the operands of the comparison can never compare equal. As a result, for full portability, the variable c should be declared as int.

In the following program fragment, the value of i is converted to the type of the assignment expression c = i, that is, char type:

1 char c;

2 int i;

3 long l;

4 l = (c = i);

The value of the expression enclosed in parentheses is then converted to the type of the outer assignment expression, that is, long int type. The comparison l == i will not be true after this series of assignments if the value of i is not in the range of char.

An assignment from an unsigned integer to a signed integer or from a signed integer to an unsigned integer of equal width can cause the resulting value to be misinterpreted. In the following program fragment, a negative signed value is converted to an unsigned type of equal width:

1 int si = -3;

2 unsigned int ui = si;

3 printf("ui = %u

", ui); /* ui = 65533 */

Because the new type is unsigned, the value is converted by repeatedly adding or subtracting 1 more than the maximum value that can be represented in the new type until the value is in the range of the new type. The resulting value will be misinterpreted as a large, positive value if accessed as an unsigned value.

On most implementations, the original value can be trivially recovered by reversing the operation:

si = ui;

printf("si = %d

", si); /* si = -3 */

However, because the resulting value cannot be represented as a signed int, the result is implementation defined or an implementation-defined signal is raised. In most cases, this code would not result in an error. But instead of concentrating on the details of platform-specific conversions, you should focus on choosing appropriate width and signedness for each intended purpose, along with ways to ensure that the type limits are not exceeded. Then you have no need to worry about implementation-defined behaviors.

Truncation occurs as the result of assignment or casting from a type with greater width to a type with lesser width. Data may be lost if the value cannot be represented in the resulting type. For example, adding c1 and c2 in the following program fragment produces a value outside the range of unsigned char for an implementation where unsigned char is represented using 8 bits (28 – 1, or 255):

1 unsigned char sum, c1, c2;

2 c1 = 200;

3 c2 = 90;

4 sum = c1 + c2;

Assuming that all values of type unsigned char can be represented as int in this implementation, the value is converted to an int. The addition succeeds without wrapping, but the assignment to sum causes truncation because the unsigned char type has a lesser width than the value resulting from the addition. Because the conversion is to an unsigned type, the result of this operation is well defined but can be an error if the programmer expects the mathematical result.

Assuming that signed int has a width of 32 bits, signed char has a width of 8 bits, and two’s complement representation is shown in the following program fragment:

signed int si = SCHAR_MAX + 1;

signed char sc = si;

si is initialized to 128, which is represented in memory as

00000000 00000000 00000000 10000000

When this value is assigned to sc, it is truncated to

10000000

If interpreted in 8-bit two’s complement representation, the result now has a negative value (SCHAR_MIN, or –128) because the high-order bit is set. In this case, the data cannot be recovered simply by reversing the operation:

si = sc;

because this assignment results in sign-extending sc:

11111111 11111111 11111111 10000000

The sign extension in this case preserves the value (SCHAR_MIN, or –128).

The data can be recovered using the appropriate cast:

si = (unsigned char) sc;

But this assumes that the programmer intentionally truncated to an integer size that was too small to represent this value. Doing so is not a recommended practice and is presumably an error.

Addition

Addition can be used to add two arithmetic operands or a pointer and an integer. If both operands are of arithmetic type, the usual arithmetic conversions are performed on them. The result of the binary + operator is the sum of the operands. Incrementing is equivalent to adding 1. When an expression that has integer type is added to a pointer, the result is a pointer. This is called pointer arithmetic and is not covered in this chapter.

The result of adding two integers can always be represented using 1 more bit than the width of the larger of the two operands. For example, assuming an 8-bit two’s complement signed char type, the minimum value of SCHAR_MIN is –128. For this implementation, SCHAR_MIN + SCHAR_MIN = –256, which can be represented as a 9-bit two’s complement value. Consequently, the result of any integer operation can be represented in any type with a width greater than the width of the larger addend.

Integer addition can cause an overflow or wraparound if the resulting value cannot be represented in the number of bits allocated to the integer’s representation.

The x86-32 instruction set includes an add instruction that takes the form add destination,source. This instruction adds the first (destination) operand to the second (source) operand and stores the result in the destination operand. The destination operand can be a register or memory location, and the source operand can be an immediate, register, or memory location. For example, add ax,bx adds the 16-bit bx register to the 16-bit ax register and leaves the sum in the ax register [Intel 2010].

Signed and unsigned overflow conditions resulting from an addition operation are detected and reported on x86-32. x86-32 instructions, including the add instruction, set flags in the flags register, as shown in Figure 5.6.

The two’s complement system has the advantage of not requiring that the addition and subtraction circuitry examine the signs of the operands to determine whether to add or subtract. This means that, for two’s complement architectures such as x86-32, a single instruction serves to add and subtract both signed and unsigned values. Consequently, the add instruction evaluates the result for both signed and unsigned integer operands and sets the OF and CF to indicate an overflow or carry in the signed or unsigned result respectively. Although each addition operation sets both the OF and CF, the OF has no meaning after an unsigned addition, and the CF has no meaning after a signed addition.

The Microsoft Visual C++ compiler, for example, generates the following instructions for the addition of the two signed int values si1 and si2:

1 si1 + si2

2 mov eax, dword ptr [si1]

3 add eax, dword ptr [si2]

and exactly the same instructions for the addition of the two unsigned int values ui1 and ui2:

1 ui1 + ui2

2 mov eax, dword ptr [ui1]

3 add eax, dword ptr [ui2]

In each case, the first addend is moved into the 32-bit eax register and then added to the second addend.

The addition of 64-bit signed long long and unsigned long long requires two separate addition instructions on x86-32:

01 sll = sll1 + sll2;

02 mov eax, dword ptr [sll1]

03 add eax, dword ptr [sll2]

04 mov ecx, dword ptr [ebp-8]

05 adc ecx, dword ptr [ebp-18h]

06 ull = ull1 + ull2;

07 mov eax, dword ptr [ull1]

08 add eax, dword ptr [ull2]

09 mov ecx, dword ptr [ebp-28h]

10 adc ecx, dword ptr [ebp-38h]

The add instruction adds the low-order 32 bits. If this addition wraps, the extra carry bit is stored in CF. The adc instruction then adds the high-order 32 bits, along with the value of the carry bit, to produce the correct 64-bit sum.

Avoiding or Detecting Signed Overflow Resulting from Addition

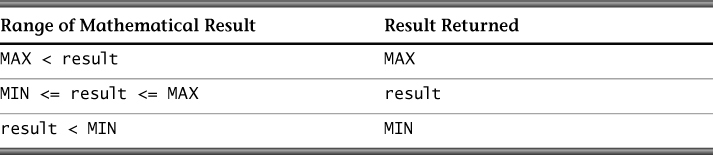

Signed integer overflow is undefined behavior in C, allowing implementations to silently wrap (the most common behavior), trap, saturate (stick at the maximum/minimum value), or perform any other behavior that the implementation chooses.

Postcondition Test Using Status Flags

At the x86-32 assembly level, signed overflows can be detected using either the jo instruction (jump if overflow) or the jno instruction (jump if not overflow) after execution of the add instruction (32-bit case) or adc instruction (64-bit case).

This allows for the creation of a library of arithmetic functions that check the status of the overflow flag and return a status code to indicate when overflow has occurred. The following function would allow the addition of signed int values in such a library:

01 _Bool add_int(int lhs, int rhs, int *sum) {

02 __asm {

03 mov eax, dword ptr [lhs]

04 add eax, dword ptr [rhs]

05 mov ecx, dword ptr [sum]

06 mov dword ptr [ecx], eax

07 jo short j1

08 mov al, 1 // 1 is success

09 jmp short j2

10 j1:

11 xor al, al // 0 is failure

12 j2:

13 };

14 }

Although it works, the solution has a number of problems. First, the function implementation depends on compiler-specific extensions (in this case Microsoft’s) to incorporate assembly language instructions in a C program. Second, this code relies on the x86-32 instruction set and is consequently nonportable. Third, this approach has been reported to have poor performance in optimized code because of the inability of the compiler to optimize the assembly language instructions. Finally, this approach is hard to use because it prevents the use of standard inline arithmetic. For example, the following code:

1 int a = /* ... */;

2 int b = /* ... */;

3

4 int sum = a + b + 3;

would need to be implemented as follows:

1 int a = /* ... */;

2 int b = /* ... */;

3

4 if ( add_int(a, b, &sum) && add_int(sum, 3, &sum) ) {

5 /* ok */

6 }

7 else {

8 /* overflow */

9 }

Precondition Test, Two’s Complement

Another approach to eliminating integer exceptional conditions is to test the values of the operands before an operation to prevent overflow. This testing is especially important for signed integer overflow, which is undefined behavior that can result in a trap on some architectures (for example, a division error on x86-32). The complexity of these tests varies significantly.

The following code performs a precondition test of the addition’s operands to ensure that no overflow occurs. It employs the principle that an addition overflow has occurred when the two operands have the same sign as each other and the result has the opposite sign. It also takes advantage of the fact that unsigned integer operations wrap in C, so they can be used to detect signed overflow without any danger of causing a trap.

01 signed int si1, si2, sum;

02

03 /* Initialize si1 and si2 */

04

05 unsigned int usum = (unsigned int)si1 + si2;

06

07 if ((usum ^ si1) & (usum ^ si2) & INT_MIN) {

08 /* handle error condition */

09 } else {

10 sum = si1 + si2;

11 }

Exclusive-or can be thought of as a “not-equal” operator on each individual bit. We are concerned only with the sign position, so we mask with INT_MIN, which has only the sign bit set.

This solution works only on architectures that use two’s complement representation. Although most modern platforms use that type of representation, it is best not to introduce unnecessary platform dependencies. (See The CERT C Secure Coding Standard [Seacord 2008], “MSC14-C. Do not introduce unnecessary platform dependencies.”) This solution can also be more expensive than a postcondition test, especially on RISC CPUs.

Precondition Test, General

The following code tests the suspect addition operation to ensure that no overflow occurs regardless of the representation used:

01 signed int si1, si2, sum;

02

03 /* Initialize si1 and si2 */

04

05 if ((si2 > 0 && si1 > INT_MAX - si2) ||

06 (si2 < 0 && si1 < INT_MIN - si2)) {

07 /* handle error condition */

08 }

09 else {

10 sum = si1 + si2;

11 }

This solution is more readable and more portable but may be less efficient than the solution that is specific to two’s complement representation.

Downcast from a Larger Type

The true sum of any two signed integer values of width w can always be represented in w+1 bits, as shown in Figure 5.7.

As a result, performing the addition in a type with greater width will always succeed. The resulting value can be range-checked before downcasting to the original type.

Generally, this solution is implementation dependent in C because the standard does not guarantee that any one standard integer type is larger than another.

Avoiding or Detecting Wraparound Resulting from Addition

Wraparound can occur when adding two unsigned values if the sum of the operands is larger than the maximum value that can be stored in the resulting type. Although unsigned integer wrapping is well defined by the C Standard as having modulo behavior, unexpected wrapping has led to numerous software vulnerabilities.

Postcondition Test Using Status Flags

At the x86-32 assembly level, unsigned overflows can be detected using either the jc instruction (jump if carry) or the jnc instruction (jump if not carry). These conditional jump instructions are placed after the add instruction in the 32-bit case or after the adc instruction in the 64-bit case. The following function, for example, can be used to add two values of type size_t:

01 _Bool add_size_t(size_t lhs, size_t rhs, size_t *sum) {

02 __asm {

03 mov eax, dword ptr [lhs]

04 add eax, dword ptr [rhs]

05 mov ecx, dword ptr [sum]

06 mov dword ptr [ecx], eax

07 jc short j1

08 mov al, 1 // 1 is success

09 jmp short j2

10 j1:

11 xor al, al // 0 is failure

12 j2:

13 };

14 }

Testing for wraparound by testing status flags suffers from all the same problems as checking status flags for signed overflow.

Precondition Test

The following code performs a precondition test of the addition’s operands to guarantee that there is no possibility of wraparound:

01 unsigned int ui1, ui2, usum;

02

03 /* Initialize ui1 and ui2 */

04

05 if (UINT_MAX - ui1 < ui2) {

06 /* handle error condition */

07 }

08 else {

09 usum = ui1 + ui2;

10 }

Postcondition Test

Postcondition testing occurs after the operation is performed and then tests the resulting value to determine if it is within valid limits. This approach is ineffective if an exceptional condition can result in an apparently valid value; however, unsigned addition can always be tested for wrapping.

The following code performs a postcondition test to ensure that sum usum resulting from the addition of two addends of unsigned int type is not less than the first operand, which would indicate that wraparound has occurred:

1 unsigned int ui1, ui2, usum;

2

3 /* Initialize ui1 and ui2 */

4

5 usum = ui1 + ui2;

6 if (usum < ui1) {

7 /* handle error condition */

8 }

Subtraction

Like addition, subtraction is an additive operation. For subtraction, both operands must have arithmetic type or be pointers to compatible object types. It is also possible to subtract an integer from a pointer. Decrementing is equivalent to subtracting 1. In this section we are concerned only with the subtraction of two integral values.

Postcondition Test Using Status Flags

The x86-32 instruction set includes sub (subtract) and sbb (subtract with borrow). The sub instruction subtracts the source operand from the destination operand and stores the result in the destination operand. The destination operand can be a register or memory location, and the source operand can be an immediate, register, or memory location. However, the destination operand and source operand cannot both be memory locations.

The sbb instruction is usually executed as part of a multibyte or multiword subtraction in which a sub instruction is followed by an sbb instruction. The sbb instruction adds the source operand (second operand) and the carry flag and subtracts the result from the destination operand. The result of the subtraction is stored in the destination operand. The carry flag represents a borrow from a previous subtraction.

The sub and sbb instructions set the overflow and carry flags to indicate an overflow in the signed and unsigned result respectively.

Subtraction for the x86-32 architecture is similar to addition. The Microsoft Visual C++ compiler, for example, generates the following instructions for the subtraction of two values of type signed long long:

1 sll1 - sll2

2

3 mov eax, dword ptr [sll1]

4 sub eax, dword ptr [sll2]

5 mov ecx, dword ptr [ebp-0E0h]

6 sbb ecx, dword ptr [ebp-0F0h]

The sub instruction subtracts the low-order 32 bits. If this subtraction wraps, the extra carry bit is stored in CF. The sbb instruction adds the source operand (representing the high-order 32 bits) and the carry [CF] flag and subtracts the result from the destination operand (representing the high-order 32 bits) to produce the 64-bit difference.

Avoiding or Detecting Signed Overflow Resulting from Subtraction

At the x86-32 assembly level, signed overflows resulting from subtraction can be detected using either the jo instruction (jump if overflow) or the jno instruction (jump if not overflow) after execution of the sub instruction (32-bit case) or sbb instruction (64-bit case).

Precondition Test

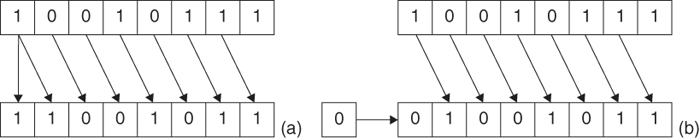

Overflow cannot occur when subtracting two positive values or two negative values. Assuming two’s complement representation, the following code tests the operands of the subtraction to guarantee that there is no possibility of signed overflow using bit operations. It makes use of the principle that a subtraction overflow has occurred if the operands have opposite signs and the result has the opposite sign of the first operand. It also takes advantage of the wrapping behavior of unsigned operations in C.

01 signed int si1, si2, result;

02

03 /* Initialize si1 and si2 */

04

05 if ((si1 ^ si2) & (((unsigned int)si1 - si2) ^ si1) & INT_MIN) {

06 /* handle error condition */

07 }

08 else {

09 result = si1 - si2;

10 }

Exclusive-or is used as a bitwise “not-equal” operator. To test the sign position, the expression is masked with INT_MIN, which has only the sign bit set.

This solution works only on architectures that use two’s complement representation. Although most modern platforms use that type of representation, it is best not to introduce unnecessary platform dependencies. (See The CERT C Secure Coding Standard [Seacord 2008], “MSC14-C. Do not introduce unnecessary platform dependencies.”)

It is also possible to implement a portable precondition test for overflow resulting from subtraction. If the second operand is positive, check that the first operand is less than the minimum value for the type plus the second operand. For operands of signed int type, for example, test that op1 < INT_MIN + op2. If the second operand is negative, check that the first operand is greater than the maximum value for the type plus the second operand.

Avoiding or Detecting Wraparound Resulting from Subtraction

Wraparound can occur when subtracting two unsigned values if the difference between the two operands is negative.

Postcondition Test Using Status Flags

At the x86-32 assembly level, unsigned wraparound can be detected using either the jc instruction (jump if carry) or the jnc instruction (jump if not carry). These conditional jump instructions are placed after the sub instruction in the 32-bit case or sbb instruction in the 64-bit case.

Precondition Test

The following program fragment performs a precondition test of the subtraction operation’s unsigned operands to guarantee there is no possibility of unsigned wrap:

01 unsigned int ui1, ui2, udiff;

02

03 /* Initialize ui1 and ui2 */

04

05 if (ui1 < ui2){

06 /* handle error condition */

07 }

08 else {

09 udiff = ui1 - ui2;

10 }

Postcondition Test

The following program fragment performs a postcondition test that the result of the unsigned subtraction operation udiff is not greater than the first operand:

1 unsigned int ui1, ui2, udiff ;

2

3 /* Initialize ui1 and ui2 */

4

5 udiff = ui1 - ui2;

6 if (udiff > ui1) {

7 /* handle error condition */

8 }

Multiplication

Multiplication in C can be accomplished using the binary * operator that results in the product of the operands. Each operand of the binary * operator has arithmetic type. The usual arithmetic conversions are performed on the operands. Multiplication is prone to overflow errors because relatively small operands, when multiplied, can overflow a given integer type.

In general, the product of two integer operands can always be represented using twice the number of bits used by the larger of the two operands. For example, the unsigned range of an integer with width N is 0 to 2N – 1. The result of squaring the maximum unsigned value for a given width is represented by the following formula:

0 ≤ x * y ≤ (2N – 1)2 = 22N – 2N+1 + 1

The product in this case can require up to 2N bits to represent.

The signed two’s complement range of a type with width N is –2N–1 to 2N–1 – 1. The minimum two’s complement results from multiplying the minimum and maximum values:

0 ≤ x * y ≤ (–2N–1) (2N–1 – 1) = –22 N –2 + 2 N –1

In this case, the product requires up to 2N – 2 bits plus 1 bit for the sign bit equals 2N – 1 bits.

The maximum two’s complement value results from squaring the signed two’s complement minimum value:

x * y ≤ (–2 N –1)2 = 22 N –2

In this case, the product requires up to 2N bits including the sign bit.

This means that, for example, the product of two 8-bit operands can always be represented by 16 bits, and the product of two 16-bit operands can always be represented by 32 bits.

Because doubling the number of bits provides 1 more bit than is needed to hold the result of signed multiplication, room is left for an extra addition or subtraction before it becomes necessary to check for overflow. Because a multiplication followed by an addition is common, an overflow check can be skipped fairly often.

Postcondition Test Using Status Flags

The x86-32 instruction set includes both a mul (unsigned multiply) and imul (signed multiply) instruction. The mul instruction performs an unsigned multiplication of the destination operand and the source operand and stores the result in the destination operand.

The mul instruction is shown here using C-style pseudocode:

01 if (OperandSize == 8) {

02 AX = AL * SRC;

03 else {

04 if (OperandSize == 16) {

05 DX:AX = AX * SRC;

06 }

07 else { // OperandSize == 32

08 EDX:EAX = EAX * SRC;

09 }

10 }

The mul instruction accepts 8-, 16-, and 32-bit operands and stores the results in 16-, 32-, and 64-bit destination registers respectively. This is referred to as a widening-multiplication instruction because twice the number of bits allocated for the product is twice the size of the operands. If the high-order bits are required to represent the product of the two operands, the carry and overflow flags are set. If the high-order bits are not required (that is, they are equal to 0), the carry and overflow flags are cleared.

The x86-32 instruction set also includes imul, a signed form of the mul instruction with one-, two-, and three-operand forms [Intel 2004]. The carry and overflow flags are set when significant bits (including the sign bit) are carried into the upper half of the result and cleared when they are not.

The imul instruction is similar to the mul instruction in that the length of the product is calculated as twice the length of the operands.

The principal difference between the mul (unsigned multiply) instruction and the imul (signed multiply) instruction is in the handling of the flags. If the flags are not tested and the most significant half of the result is not used, it makes little difference which instruction is used. Consequently, the Microsoft Visual Studio compiler uses the imul instruction for both signed and unsigned multiplication:

01 int si1 = /* some value */;

02 int si2 = /* some value */;

03 int si_product = si1 * si2;

04 mov eax, dword ptr [si1]

05 imul eax, dword ptr [si2]

06 mov dword ptr [ui_product], eax

07

08 unsigned int ui1 = /* some value */;

09 unsigned int ui2 = /* some value */;

10 unsigned int ui_product = ui1 * ui2;

11 mov eax, dword ptr [ui1]

12 imul eax, dword ptr [ui2]

13 mov dword ptr [ui_product], eax

To test for signed overflow or unsigned wrapping following multiplication, it is first necessary to determine if the operation is a signed or unsigned operation and use the appropriate multiplication instruction. For the mul instruction, the overflow and carry flags are set to 0 if the upper half of the result is 0; otherwise, they are set to 1. For the imul instruction, the carry and overflow flags are set when significant bits (including the sign bit) are carried into the upper half of the result and cleared when the result (including the sign bit) fits exactly in the lower half of the result.

Downcast from a Larger Type

A similar solution for detecting signed overflow or unsigned wrapping following multiplication can be implemented in the C language without resorting to assembly language programming. This solution is to cast both operands to an integer type that is at least twice the width of the larger of the two operands and then multiply them. As already shown, this is guaranteed to work because in all cases the product can be stored in 2N bits.

In the case of unsigned multiplication, if the high-order bits are required to represent the product of the two operands, the result has wrapped.

01 unsigned int ui1 = /* some value */;

02 unsigned int ui2 = /* some value */;

03 unsigned int product;

04

05 /* Initialize ui1 and ui2 */

06

07 static_assert(

08 sizeof(unsigned long long) >= 2 * sizeof(unsigned int),

09 "Unable to detect wrapping after multiplication"

10 );

11

12 unsigned long long tmp = (unsigned long long)ui1 *

13 (unsigned long long)ui2;

14 if (tmp > UINT_MAX) {

15 /* handle unsigned wrapping */

16 }

17 else {

18 product = (unsigned int)tmp;

19 }

For signed integers, all 0s or all 1s in the upper half of the result and the sign bit in the lower half of the result indicate no overflow.

The following solution guarantees that there is no possibility of signed overflow on systems where the width of long long is at least twice the width of int:

01 /* Initialize si1 and si2 */

02

03 static_assert(

04 sizeof(long long) >= 2 * sizeof(int),

05 "Unable to detect overflow after multiplication"

06 );

07

08 long long tmp = (long long)si1 * (long long)si2;

09

10 if ( (tmp > INT_MAX) || (tmp < INT_MIN) ) {

11 /* handle signed overflow */

12 }

13 else {

14 result = (int)tmp;

15 }

Both solutions use static assertion to ensure that the tests for unsigned wrapping and signed overflow succeed. See The CERT C Secure Coding Standard [Seacord 2008], “DCL03-C. Use a static assertion to test the value of a constant expression,” to find out more about static assertions.