Chapter 15. Models

In this chapter, you learn how to use 3D models in your applications. You integrate a third-party library for importing myriad file formats, and you author a set of classes to represent models at run-time. Along the way, you get some more hands-on experience creating DirectX applications.

Motivation

Now that you’ve authored a couple of applications and manually specified vertices and indices, you’re probably thinking about a better way to do this. Clearly, you cannot hard-code the vertices for every object you’ll render. Instead, you’ll get your objects from an artist who uses a 3D modeling package such as Autodesk Maya or 3D Studio Max. Your job is to read these assets at runtime and extract the data necessary to render them to the screen. This is a bit easier said than done. The next few sections detail an approach for using 3D models.

Model File Formats

Many file formats exist for storing 3D models, and they can be broadly categorized into three groups: authoring formats, game/graphics engine formats, and interchange formats. An authoring format is specific to a modeling application. For example, Autodesk Maya has two native file formats, Maya ASCII (.ma) and Maya Binary (.mb), and 3D Studio Max saves its scenes as .max files. Such formats typically contain authoring-related data that’s not applicable within the game engine. For example, Autodesk Maya files store a creation history that details the steps used to produce the final model; your rendering engine just needs the final result.

A game/graphics engine file format stores distilled versions of assets that don’t contain authoring-related data. Furthermore, these formats are commonly optimized for loading into a particular engine. If a game/graphics engine is intended to be used outside the game studio (for example, for a game that’s intended to be modified, or modded), the format will be publicly documented or otherwise supported so that user-created assets can be stored correctly. For instance, Blizzard’s Starcraft II format MDX3 (.m3) has been publicly released and has a 3D Studio Max importer/exporter plug-in.

The third category, interchange formats, contains a set of file formats that are independent of an authoring tool or game/graphics engine. These formats are intended to allow the exchange of assets between software applications—sort of a one-format-to-rule-them-all idea. Perhaps the chief interchange format is COLLADA (Collaborative Design Activity), which a variety of authoring tools and game engines support.

Regardless of category, a file can be stored as text (for example, the XML-based COLLADA format) or binary. Text formats require more disk space to store the same asset, but any text editor can read and edit them. However, just because a file format can be viewed as text doesn’t mean that it’s really human readable. You can find some hairy text formats out there that you’ll corrupt just as easily as a binary file if you’re not careful. It’s usually a good idea to let the authoring tool save the file and to leave hand-editing as a last resort.

Something else to consider is that not all formats are capable of storing the data your application might require. For example, the Wavefront OBJ (.obj) format is a simple and widely supported file format, but it does not store animation data.

In practice, you want to settle on a specific authoring format. Such standardization makes debugging your assets simpler. (Yes, you will find problems in your assets that can cause a runtime crash or otherwise produce incorrect rendering.) If you are unable to standardize, you’ll have to wrangle multiple formats into a consistent representation that your rendering engine can access.

The Content Pipeline

Asset wrangling is the job of the content pipeline. In the graphics pipeline, primitives are fed in and pixels come out. For the content pipeline, an artist provides assets in one format, and game-ready assets are output. For example, you might convert an .obj model file into a format your game engine can use. But unlike the Direct3D graphics pipeline, no preconstructed content pipeline can help manipulate your assets. You have to build any such system or adopt a third-party utility.

Typically, assets are processed through the content pipeline at build time and saved into a game engine format that you’ve created (or adopted). At runtime, you load these assets and use their data. You could perform any content manipulation at runtime, but you would incur the corresponding overhead. It’s not uncommon for a game engine to support both build-time and runtime asset conditioning, with runtime use removed from a release version of the game. In this chapter, you convert your assets at runtime, but the system you develop can be easily applied to a build-time content pipeline.

A content pipeline has many aspects, from the workflow for converting assets (which might include a GUI or a revision-control system) to the myriad types of content needed for your application (such as 3D models, textures, or audio files). But the underpinning of a content pipeline is serialization and deserialization—writing and reading files. At its core, the content pipeline revolves around parsing one or more input formats to produce a uniform output that the game engine can consume. You might be able to standardize on a single authoring format, but this book makes no such assumption and opts to support as many input formats as possible. However, writing parsers for many file formats is outside the scope of this text. Instead, we adopt an open source package called the Open Asset Import Library, which is available at http://assimp.sourceforge.net.
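The serialization idea at the core of a content pipeline can be sketched in a few lines. The following is only an illustration, not the format this book adopts: the SimpleMesh structure, the WriteMesh(), ReadMesh(), and RoundTrip() functions, and the binary layout (a 32-bit count followed by raw elements) are all assumptions made for the example.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

// Hypothetical, minimal mesh: positions stored as x, y, z triples, plus indices.
struct SimpleMesh
{
    std::vector<float> positions;
    std::vector<std::uint32_t> indices;
};

// Writes a vector as a 32-bit element count followed by the raw elements.
template <typename T>
void WriteVector(std::ostream& out, const std::vector<T>& values)
{
    const std::uint32_t count = static_cast<std::uint32_t>(values.size());
    out.write(reinterpret_cast<const char*>(&count), sizeof(count));
    if (count > 0)
    {
        out.write(reinterpret_cast<const char*>(values.data()),
                  static_cast<std::streamsize>(sizeof(T) * count));
    }
}

// Reads a vector written by WriteVector(); returns false if the stream fails.
template <typename T>
bool ReadVector(std::istream& in, std::vector<T>& values)
{
    std::uint32_t count = 0;
    if (!in.read(reinterpret_cast<char*>(&count), sizeof(count)))
    {
        return false;
    }
    values.resize(count);
    if (count > 0)
    {
        in.read(reinterpret_cast<char*>(values.data()),
                static_cast<std::streamsize>(sizeof(T) * count));
    }
    return static_cast<bool>(in);
}

void WriteMesh(std::ostream& out, const SimpleMesh& mesh)
{
    assert(mesh.positions.size() % 3 == 0); // positions come in x, y, z triples
    WriteVector(out, mesh.positions);
    WriteVector(out, mesh.indices);
}

bool ReadMesh(std::istream& in, SimpleMesh& mesh)
{
    return ReadVector(in, mesh.positions) && ReadVector(in, mesh.indices);
}

// Round-trips a mesh through an in-memory stream; a real pipeline would
// write the file at build time and read it back at runtime.
SimpleMesh RoundTrip(const SimpleMesh& mesh)
{
    std::stringstream buffer(std::ios::in | std::ios::out | std::ios::binary);
    WriteMesh(buffer, mesh);
    SimpleMesh result;
    const bool succeeded = ReadMesh(buffer, result);
    assert(succeeded);
    (void)succeeded;
    return result;
}
```

A layout this simple ignores versioning and byte order, both of which a production format would likely need to address.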

The Open Asset Import Library

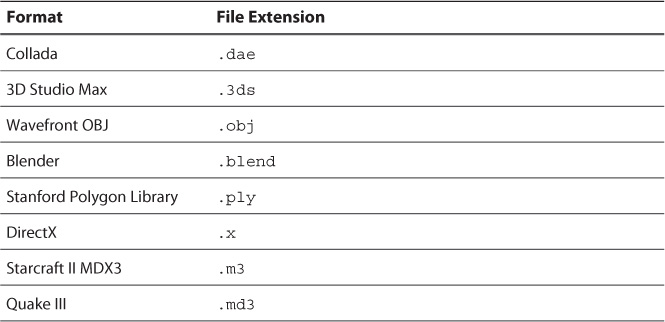

The Open Asset Import Library is a C++ API that imports a large variety of 3D model formats and represents them in a consistent fashion. Table 15.1 lists just a few of the supported formats. Visit the Open Asset Import Library website for a complete list.

To use the library, first extract the package to the external directory and add Include Directory and Library Directory search paths to your Library project. Then add a reference to the assimp.lib file in your Game or Library projects (or to an entirely separate project to create a build-time content pipeline). The Open Asset Import Library is distributed as a dynamic link library (.dll), and assimp.lib is an import library. The DLL contains the actual implementation of the API. Therefore, you must copy the DLL to your output directory (where your executable resides) before launching your application. A post-build step can handle this nicely. If you have trouble with these steps, you can find complete projects on the book’s companion website.

Although you use the Open Asset Import Library to read various model formats, you’ll create your own classes to represent 3D models. In this way, you remove any explicit runtime dependency on this library.

What’s in a Model?

So what exactly does a 3D model contain? This question has no single answer, but models are commonly described as a set of meshes. A mesh is a group of vertices, edges, and faces that describe the shape of a 3D object. By describing a model as a set of meshes, you allow for multiple “subobjects” to be treated both separately and as a whole. For example, a car might be described by separate meshes for the chassis, two doors, and four wheels. The doors could be transformed independently from the wheels and the chassis, but all the objects might have a shared position in world space. Furthermore, you could apply a different shader to each mesh. For instance, the chassis and doors might use an environment mapping effect, whereas the wheels use a diffuse shader.

A model can also contain inputs to a shader, or what could be called model materials. If a material represents an instance of an effect with values for shader variables, a model material is where (at least some of) the values of those variables originate. For example, a model material would refer to the name of the shader to use for rendering and a list of name/value pairs matching initial values for shader constants. A model material could also include a list of texture references (a texture reference is a filename that refers to a texture) or embedded textures (textures whose actual data is stored inside the model file). However, embedded textures are less common than texture references because duplicate embedded textures waste memory.

Using this definition of a model, Listing 15.1 presents the declaration of the Model class.

Listing 15.1 The Model.h Header File

#pragma once

#include "Common.h"

namespace Library

{

class Game;

class Mesh;

class ModelMaterial;

class Model

{

public:

Model(Game& game, const std::string& filename, bool flipUVs =

false);

~Model();

Game& GetGame();

bool HasMeshes() const;

bool HasMaterials() const;

const std::vector<Mesh*>& Meshes() const;

const std::vector<ModelMaterial*>& Materials() const;

private:

Model(const Model& rhs);

Model& operator=(const Model& rhs);

Game& mGame;

std::vector<Mesh*> mMeshes;

std::vector<ModelMaterial*> mMaterials;

};

}

As you can see, the Model class contains collections for Mesh and ModelMaterial objects and carries around a Game class reference. Note that model materials are contained within the model, but they describe the properties of a mesh. Multiple meshes can thus refer to the same model material.

The Model constructor accepts a filename to read and a Boolean to flip a model’s vertical texture coordinates (for loading OpenGL-style models). Note that this declaration has no dependency on the Open Asset Import Library. The Model class implementation hides the specifics of asset importing from anything consuming the Model interface. We defer a full discussion of the Model class implementation until after we present the associated Mesh and ModelMaterial classes.
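Flipping the vertical texture coordinate amounts to replacing each v with 1 - v. In this chapter, the Open Asset Import Library performs that step for you, so the following is only a sketch of the arithmetic; the TexCoord structure and the FlipVertical() function are hypothetical names introduced for the illustration.

```cpp
#include <vector>

// Hypothetical two-component texture coordinate.
struct TexCoord
{
    float u;
    float v;
};

// Mirrors every coordinate about the horizontal center line of the texture,
// converting between a bottom-left and a top-left texture origin.
void FlipVertical(std::vector<TexCoord>& coordinates)
{
    for (TexCoord& coordinate : coordinates)
    {
        coordinate.v = 1.0f - coordinate.v;
    }
}
```

Applying the function twice restores the original coordinates, which is why the flip can be done once at build time instead of on every load.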

Meshes

A mesh can be minimally described by a set of vertices, but it can also include indices, normals, tangents, binormals, texture coordinates, and vertex colors. A mesh can also reference a model material. Listing 15.2 presents the declaration of the Mesh class.

Listing 15.2 The Mesh.h Header File

#pragma once

#include "Common.h"

struct aiMesh;

namespace Library

{

class Material;

class Model;

class ModelMaterial;

class Mesh

{

friend class Model;

public:

Mesh(Model& model, ModelMaterial* material);

~Mesh();

Model& GetModel();

ModelMaterial* GetMaterial();

const std::string& Name() const;

const std::vector<XMFLOAT3>& Vertices() const;

const std::vector<XMFLOAT3>& Normals() const;

const std::vector<XMFLOAT3>& Tangents() const;

const std::vector<XMFLOAT3>& BiNormals() const;

const std::vector<std::vector<XMFLOAT3>*>& TextureCoordinates()

const;

const std::vector<std::vector<XMFLOAT4>*>& VertexColors()

const;

UINT FaceCount() const;

const std::vector<UINT>& Indices() const;

void CreateIndexBuffer(ID3D11Buffer** indexBuffer);

private:

Mesh(Model& model, aiMesh& mesh);

Mesh(const Mesh& rhs);

Mesh& operator=(const Mesh& rhs);

Model& mModel;

ModelMaterial* mMaterial;

std::string mName;

std::vector<XMFLOAT3> mVertices;

std::vector<XMFLOAT3> mNormals;

std::vector<XMFLOAT3> mTangents;

std::vector<XMFLOAT3> mBiNormals;

std::vector<std::vector<XMFLOAT3>*> mTextureCoordinates;

std::vector<std::vector<XMFLOAT4>*> mVertexColors;

UINT mFaceCount;

std::vector<UINT> mIndices;

};

}

Note the forward declaration of the aiMesh structure and its use within a private constructor of the Mesh class. This is the mesh representation from the Open Asset Import Library. It’s included here because the implementation of the Model class uses the Open Asset Import Library to convert assets at runtime. Making it private within the Mesh class keeps the interface free of this dependency and implies that you could excise the Open Asset Import Library without affecting the rest of your application. You would do exactly that if you made a build-time content pipeline.

Also note the Mesh::CreateIndexBuffer() method. If a mesh contains indices, it has all the information required to populate an index buffer. This isn’t the case for creating vertex buffers because the definition of a vertex buffer depends on the shader used to render the mesh.

Model Materials

The final type in the 3D model system is the ModelMaterial class. As with models and meshes, you can use a variety of representations to describe a model’s shader associations and inputs. The ModelMaterial class stores only a name and a list of textures. The name should match the effect to use when rendering a mesh. For example, the model material’s name would be BasicEffect to use the BasicEffect.fx file. The texture list stores filenames, and each element in the collection is associated with the texture’s intended usage. For example, a texture could be identified as the diffuse color texture or a specular map. The TextureType enumeration defines the supported texture types.
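The idea of a texture list keyed by intended usage can be sketched independently of the ModelMaterial class. This is a minimal illustration, assuming a reduced TextureUsage enumeration, a TextureMap alias, and a FirstTexture() helper; all three are hypothetical stand-ins and not part of the chapter's code.

```cpp
#include <map>
#include <string>
#include <vector>

// Reduced, hypothetical stand-in for an enumeration of texture usages.
enum class TextureUsage
{
    Diffuse,
    SpecularMap,
    NormalMap
};

// Each usage maps to the list of texture filenames registered for it.
using TextureMap = std::map<TextureUsage, std::vector<std::wstring>>;

// Returns the first filename registered for the given usage,
// or nullptr if the material has no texture of that kind.
const std::wstring* FirstTexture(const TextureMap& textures, TextureUsage usage)
{
    const auto it = textures.find(usage);
    if (it == textures.end() || it->second.empty())
    {
        return nullptr;
    }
    return &it->second.front();
}
```

Using find() instead of operator[] keeps the lookup read-only; operator[] would silently insert an empty list for a usage the material never specified.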

Listing 15.3 presents the declarations of the ModelMaterial class and the TextureType enumeration.

Listing 15.3 The ModelMaterial.h Header File

#pragma once

#include "Common.h"

struct aiMaterial;

namespace Library

{

class Model;

enum TextureType

{

TextureTypeDifffuse = 0,

TextureTypeSpecularMap,

TextureTypeAmbient,

TextureTypeEmissive,

TextureTypeHeightmap,

TextureTypeNormalMap,

TextureTypeSpecularPowerMap,

TextureTypeDisplacementMap,

TextureTypeLightMap,

TextureTypeEnd

};

class ModelMaterial

{

friend class Model;

public:

ModelMaterial(Model& model);

~ModelMaterial();

Model& GetModel();

const std::string& Name() const;

const std::map<TextureType, std::vector<std::wstring>*>

Textures() const;

private:

static void InitializeTextureTypeMappings();

static std::map<TextureType, UINT> sTextureTypeMappings;

ModelMaterial(Model& model, aiMaterial* material);

ModelMaterial(const ModelMaterial& rhs);

ModelMaterial& operator=(const ModelMaterial& rhs);

Model& mModel;

std::string mName;

std::map<TextureType, std::vector<std::wstring>*> mTextures;

};

}

Asset Loading

With the declarations of the Model, Mesh, and ModelMaterial classes in hand, we can now discuss loading assets into these types. This is where the Open Asset Import Library comes in.

Loading Models

Just as the Model class is the root type of your custom model system, the aiScene type is the root structure for data imported with the Open Asset Import Library. An aiScene object is populated with a call to Importer::ReadFile() and exposes members for a model’s meshes and materials. All asset loading originates in the Model constructor, which loads meshes and model materials by instantiating their respective classes. Listing 15.4 presents the implementation of the Model class.

Listing 15.4 The Model.cpp File

#include "Model.h"

#include "Game.h"

#include "GameException.h"

#include "Mesh.h"

#include "ModelMaterial.h"

#include <assimp/Importer.hpp>

#include <assimp/scene.h>

#include <assimp/postprocess.h>

namespace Library

{

Model::Model(Game& game, const std::string& filename, bool flipUVs)

: mGame(game), mMeshes(), mMaterials()

{

Assimp::Importer importer;

UINT flags = aiProcess_Triangulate | aiProcess_JoinIdenticalVertices |

aiProcess_SortByPType | aiProcess_FlipWindingOrder;

if (flipUVs)

{

flags |= aiProcess_FlipUVs;

}

const aiScene* scene = importer.ReadFile(filename, flags);

if (scene == nullptr)

{

throw GameException(importer.GetErrorString());

}

if (scene->HasMaterials())

{

for (UINT i = 0; i < scene->mNumMaterials; i++)

{

mMaterials.push_back(new ModelMaterial(*this,

scene->mMaterials[i]));

}

}

if (scene->HasMeshes())

{

for (UINT i = 0; i < scene->mNumMeshes; i++)

{

Mesh* mesh = new Mesh(*this, *(scene->mMeshes[i]));

mMeshes.push_back(mesh);

}

}

}

Model::~Model()

{

for (Mesh* mesh : mMeshes)

{

delete mesh;

}

for (ModelMaterial* material : mMaterials)

{

delete material;

}

}

Game& Model::GetGame()

{

return mGame;

}

bool Model::HasMeshes() const

{

return (mMeshes.size() > 0);

}

bool Model::HasMaterials() const

{

return (mMaterials.size() > 0);

}

const std::vector<Mesh*>& Model::Meshes() const

{

return mMeshes;

}

const std::vector<ModelMaterial*>& Model::Materials() const

{

return mMaterials;

}

}

If you were authoring a build-time content pipeline, the work performed in the Model constructor would be saved to an intermediate file format. Then you would add a second constructor/method that deserialized these intermediary files and could (optionally) remove the Open Asset Import Library functionality from your runtime Library project. Furthermore, any flipping of the vertical texture coordinates would be performed in a build-time content pipeline and would be removed from runtime model loading.

Loading Meshes

Loading a mesh entails reading the actual vertices, indices, normals, and so on, so it involves more code than for the Model class. Still, the patterns are the same. The Open Asset Import Library aiMesh structure exposes the necessary data, and you simply transfer it to your custom Mesh class. Listing 15.5 presents an abbreviated implementation of the Mesh class. The omitted methods are single-line accessors to expose the private data members. You can find a full implementation on the book’s companion website.

Listing 15.5 The Mesh.cpp File (Abbreviated)

#include "Mesh.h"

#include "Model.h"

#include "Game.h"

#include "GameException.h"

#include <assimp/scene.h>

namespace Library

{

Mesh::Mesh(Model& model, aiMesh& mesh)

: mModel(model), mMaterial(nullptr), mName(mesh.mName.C_Str()),

mVertices(), mNormals(), mTangents(), mBiNormals(),

mTextureCoordinates(), mVertexColors(), mFaceCount(0),

mIndices()

{

mMaterial = mModel.Materials().at(mesh.mMaterialIndex);

// Vertices

mVertices.reserve(mesh.mNumVertices);

for (UINT i = 0; i < mesh.mNumVertices; i++)

{

mVertices.push_back(XMFLOAT3(reinterpret_cast<const

float*>(&mesh.mVertices[i])));

}

// Normals

if (mesh.HasNormals())

{

mNormals.reserve(mesh.mNumVertices);

for (UINT i = 0; i < mesh.mNumVertices; i++)

{

mNormals.push_back(XMFLOAT3(reinterpret_cast<const

float*>(&mesh.mNormals[i])));

}

}

// Tangents and Binormals

if (mesh.HasTangentsAndBitangents())

{

mTangents.reserve(mesh.mNumVertices);

mBiNormals.reserve(mesh.mNumVertices);

for (UINT i = 0; i < mesh.mNumVertices; i++)

{

mTangents.push_back(XMFLOAT3(reinterpret_cast<const

float*>(&mesh.mTangents[i])));

mBiNormals.push_back(XMFLOAT3(reinterpret_cast<const

float*>(&mesh.mBitangents[i])));

}

}

// Texture Coordinates

UINT uvChannelCount = mesh.GetNumUVChannels();

for (UINT i = 0; i < uvChannelCount; i++)

{

std::vector<XMFLOAT3>* textureCoordinates = new std::vector<XMFLOAT3>();

textureCoordinates->reserve(mesh.mNumVertices);

mTextureCoordinates.push_back(textureCoordinates);

aiVector3D* aiTextureCoordinates = mesh.mTextureCoords[i];

for (UINT j = 0; j < mesh.mNumVertices; j++)

{

textureCoordinates->push_back(XMFLOAT3(

reinterpret_cast<const float*>(&aiTextureCoordinates[j])));

}

}

// Vertex Colors

UINT colorChannelCount = mesh.GetNumColorChannels();

for (UINT i = 0; i < colorChannelCount; i++)

{

std::vector<XMFLOAT4>* vertexColors = new

std::vector<XMFLOAT4>();

vertexColors->reserve(mesh.mNumVertices);

mVertexColors.push_back(vertexColors);

aiColor4D* aiVertexColors = mesh.mColors[i];

for (UINT j = 0; j < mesh.mNumVertices; j++)

{

vertexColors->push_back(XMFLOAT4(reinterpret_cast

<const float*>(&aiVertexColors[j])));

}

}

// Faces (note: could pre-reserve if we limit primitive types)

if (mesh.HasFaces())

{

mFaceCount = mesh.mNumFaces;

for (UINT i = 0; i < mFaceCount; i++)

{

aiFace* face = &mesh.mFaces[i];

for (UINT j = 0; j < face->mNumIndices; j++)

{

mIndices.push_back(face->mIndices[j]);

}

}

}

}

Mesh::~Mesh()

{

for (std::vector<XMFLOAT3>* textureCoordinates :

mTextureCoordinates)

{

delete textureCoordinates;

}

for (std::vector<XMFLOAT4>* vertexColors : mVertexColors)

{

delete vertexColors;

}

}

void Mesh::CreateIndexBuffer(ID3D11Buffer** indexBuffer)

{

assert(indexBuffer != nullptr);

D3D11_BUFFER_DESC indexBufferDesc;

ZeroMemory(&indexBufferDesc, sizeof(indexBufferDesc));

indexBufferDesc.ByteWidth = sizeof(UINT) * mIndices.size();

indexBufferDesc.Usage = D3D11_USAGE_IMMUTABLE;

indexBufferDesc.BindFlags = D3D11_BIND_INDEX_BUFFER;

D3D11_SUBRESOURCE_DATA indexSubResourceData;

ZeroMemory(&indexSubResourceData, sizeof

(indexSubResourceData));

indexSubResourceData.pSysMem = &mIndices[0];

if (FAILED(mModel.GetGame().Direct3DDevice()->

CreateBuffer(&indexBufferDesc, &indexSubResourceData, indexBuffer)))

{

throw GameException("ID3D11Device::CreateBuffer() failed.");

}

}

}

The specific syntax of the Mesh constructor might require close inspection to fully understand, but the broad strokes are pretty simple. You just iterate through the various aiMesh structure members and copy their data to Mesh class members. Visit the Open Asset Import Library website for more details on the aiMesh type.

Note that the Mesh::mTextureCoordinates and Mesh::mVertexColors class members are vectors of vectors. This means that a mesh can have multiple sets of coordinates and colors, which would apply to separate textures or rendering techniques. Also note the Mesh::mMaterial class member. This is a pointer back to one of the ModelMaterial objects stored in the Model class.

Finally, take a look at the Mesh::CreateIndexBuffer() method. On examination, this code should look familiar: This is the same code you wrote to create an index buffer for the cube demo (in the last chapter). It’s merely been added to the Mesh class for reusability.
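The face loop at the end of the Mesh constructor flattens per-face index lists into the single array that Mesh::CreateIndexBuffer() later uploads. The step can be sketched in isolation as follows, assuming a hypothetical Face structure as a stand-in for the importer's face type and a hypothetical FlattenFaces() function.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an imported face: a short list of vertex indices.
struct Face
{
    std::vector<std::uint32_t> indices;
};

// Concatenates the indices of every face, in order, into one flat list.
std::vector<std::uint32_t> FlattenFaces(const std::vector<Face>& faces)
{
    std::vector<std::uint32_t> result;
    // The reservation is exact when every face is a triangle; for other
    // primitive types it is only a starting guess.
    result.reserve(faces.size() * 3);
    for (const Face& face : faces)
    {
        result.insert(result.end(), face.indices.begin(), face.indices.end());
    }
    return result;
}
```

For a mesh made only of triangles, the flattened list has three entries per face, which is the layout a triangle-list index buffer expects.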

Loading Model Materials

Listing 15.6 presents the implementation for the ModelMaterial class. This is the last piece of the model system before you can exercise your code with a demo. The ModelMaterial constructor pulls data from the aiMaterial structure of the Open Asset Import Library. The only odd bit of implementation here is the mapping between the Open Asset Import Library texture types and your custom TextureType enumeration. Again, this is done to hide the Open Asset Import Library from the rest of your application. The static mapping is initialized within the ModelMaterial::InitializeTextureTypeMappings() method.

Listing 15.6 The ModelMaterial.cpp File

#include "ModelMaterial.h"

#include "GameException.h"

#include "Utility.h"

#include <assimp/scene.h>

namespace Library

{

std::map<TextureType, UINT> ModelMaterial::sTextureTypeMappings;

ModelMaterial::ModelMaterial(Model& model)

: mModel(model), mTextures()

{

InitializeTextureTypeMappings();

}

ModelMaterial::ModelMaterial(Model& model, aiMaterial* material)

: mModel(model), mTextures()

{

InitializeTextureTypeMappings();

aiString name;

material->Get(AI_MATKEY_NAME, name);

mName = name.C_Str();

for (TextureType textureType = (TextureType)0; textureType < TextureTypeEnd; textureType = (TextureType)(textureType + 1))

{

aiTextureType mappedTextureType =

(aiTextureType)sTextureTypeMappings[textureType];

UINT textureCount = material->GetTextureCount

(mappedTextureType);

if (textureCount > 0)

{

std::vector<std::wstring>* textures = new std::vector

<std::wstring>();

mTextures.insert(std::pair<TextureType, std::vector<std

::wstring>*>(textureType, textures));

textures->reserve(textureCount);

for (UINT textureIndex = 0; textureIndex <

textureCount; textureIndex++)

{

aiString path;

if (material->GetTexture(mappedTextureType,

textureIndex, &path) == AI_SUCCESS)

{

std::wstring wPath;

Utility::ToWideString(path.C_Str(), wPath);

textures->push_back(wPath);

}

}

}

}

}

ModelMaterial::~ModelMaterial()

{

for (std::pair<TextureType, std::vector<std::wstring>*>

textures : mTextures)

{

DeleteObject(textures.second);

}

}

Model& ModelMaterial::GetModel()

{

return mModel;

}

const std::string& ModelMaterial::Name() const

{

return mName;

}

const std::map<TextureType, std::vector<std::wstring>*> ModelMaterial::Textures() const

{

return mTextures;

}

void ModelMaterial::InitializeTextureTypeMappings()

{

if (sTextureTypeMappings.size() != TextureTypeEnd)

{

sTextureTypeMappings[TextureTypeDifffuse] =

aiTextureType_DIFFUSE;

sTextureTypeMappings[TextureTypeSpecularMap] =

aiTextureType_SPECULAR;

sTextureTypeMappings[TextureTypeAmbient] =

aiTextureType_AMBIENT;

sTextureTypeMappings[TextureTypeEmissive] =

aiTextureType_EMISSIVE;

sTextureTypeMappings[TextureTypeHeightmap] =

aiTextureType_HEIGHT;

sTextureTypeMappings[TextureTypeNormalMap] =

aiTextureType_NORMALS;

sTextureTypeMappings[TextureTypeSpecularPowerMap] =

aiTextureType_SHININESS;

sTextureTypeMappings[TextureTypeDisplacementMap] =

aiTextureType_DISPLACEMENT;

sTextureTypeMappings[TextureTypeLightMap] =

aiTextureType_LIGHTMAP;

}

}

}

Some functionality is missing from this implementation of a model material—specifically, a set of name/value pairs matching shader variables. We have intentionally left this out at this stage because we haven’t introduced a full-fledged material system yet. You’ll develop a material system in the next chapter.
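The translation between your own texture types and an importer's texture types can also be expressed as a lookup table that is built once and never modified. The sketch below illustrates that alternative; the EngineTexture and ImporterTexture enumerations (including their numeric values) and the ToImporterTexture() function are hypothetical stand-ins, not the Open Asset Import Library types.

```cpp
#include <map>

// Hypothetical engine-side texture types.
enum class EngineTexture
{
    Diffuse,
    Specular,
    Normal,
    Emissive
};

// Hypothetical importer-side texture types; the values are placeholders.
enum class ImporterTexture
{
    None = 0,
    Diffuse = 1,
    Specular = 2,
    Normals = 3
};

// Translates an engine texture type to the importer's type.
// Types without an entry fall back to ImporterTexture::None.
ImporterTexture ToImporterTexture(EngineTexture type)
{
    // Built on first use; later calls reuse the same table.
    static const std::map<EngineTexture, ImporterTexture> mappings =
    {
        { EngineTexture::Diffuse, ImporterTexture::Diffuse },
        { EngineTexture::Specular, ImporterTexture::Specular },
        { EngineTexture::Normal, ImporterTexture::Normals }
    };

    const auto it = mappings.find(type);
    return (it != mappings.end()) ? it->second : ImporterTexture::None;
}
```

Because the table is const and queried with find(), a missing entry shows up as an explicit fallback value. With operator[] on a mutable map, the same lookup would quietly insert a zero-valued entry.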

A Model Rendering Demo

It’s time to put the model system to work. For this demo, you’ll render a sphere model that contains just a single mesh. The sphere model (Sphere.obj) is stored in Wavefront OBJ format, and you can find it on the companion website. You’ll use the BasicEffect.fx file for rendering (which doesn’t use a texture; just interpolated vertex colors).

Start by adding a ModelDemo class whose declaration is identical to the CubeDemo type of the last chapter. Add two new class members: an unsigned integer for storing the number of indices in the model (ModelDemo::mIndexCount) and a CreateVertexBuffer() method with the following prototype:

void CreateVertexBuffer(ID3D11Device* device, const Mesh& mesh,

ID3D11Buffer** vertexBuffer) const;

The chief difference between the demos is how the vertex and index buffers are initialized. This happens within the ModelDemo::Initialize() method. In the cube demo, you manually constructed the vertex and index buffers. In the model demo, you load the Sphere.obj file and build the buffers from the single mesh within the model. Listing 15.7 presents an abbreviated ModelDemo::Initialize() method and the newly added ModelDemo::CreateVertexBuffer() method. The code omitted from the ModelDemo::Initialize() method comes directly from the cube demo, specifically the code for compiling a shader; creating an effect object; looking up the technique, pass, and WorldViewProjection variable; and creating the input layout.

void ModelDemo::Initialize()

{

// ... Previously presented code omitted for brevity ...

// Load the model

std::unique_ptr<Model> model(new Model(*mGame,

"Content\\Models\\Sphere.obj", true));

// Create the vertex and index buffers

Mesh* mesh = model->Meshes().at(0);

CreateVertexBuffer(mGame->Direct3DDevice(), *mesh, &mVertexBuffer);

mesh->CreateIndexBuffer(&mIndexBuffer);

mIndexCount = mesh->Indices().size();

}

void ModelDemo::CreateVertexBuffer(ID3D11Device* device, const Mesh&

mesh, ID3D11Buffer** vertexBuffer) const

{

const std::vector<XMFLOAT3>& sourceVertices = mesh.Vertices();

std::vector<BasicEffectVertex> vertices;

vertices.reserve(sourceVertices.size());

if (mesh.VertexColors().size() > 0)

{

std::vector<XMFLOAT4>* vertexColors = mesh.VertexColors().

at(0);

assert(vertexColors->size() == sourceVertices.size());

for (UINT i = 0; i < sourceVertices.size(); i++)

{

XMFLOAT3 position = sourceVertices.at(i);

XMFLOAT4 color = vertexColors->at(i);

vertices.push_back(BasicEffectVertex(XMFLOAT4(position.x, position.y, position.z, 1.0f), color));

}

}

else

{

for (UINT i = 0; i < sourceVertices.size(); i++)

{

XMFLOAT3 position = sourceVertices.at(i);

XMFLOAT4 color = ColorHelper::RandomColor();

vertices.push_back(BasicEffectVertex(XMFLOAT4(position.x, position.y, position.z, 1.0f), color));

}

}

D3D11_BUFFER_DESC vertexBufferDesc;

ZeroMemory(&vertexBufferDesc, sizeof(vertexBufferDesc));

vertexBufferDesc.ByteWidth = sizeof(BasicEffectVertex) * vertices.

size();

vertexBufferDesc.Usage = D3D11_USAGE_IMMUTABLE;

vertexBufferDesc.BindFlags = D3D11_BIND_VERTEX_BUFFER;

D3D11_SUBRESOURCE_DATA vertexSubResourceData;

ZeroMemory(&vertexSubResourceData, sizeof(vertexSubResourceData));

vertexSubResourceData.pSysMem = &vertices[0];

if (FAILED(device->CreateBuffer(&vertexBufferDesc,

&vertexSubResourceData, vertexBuffer)))

{

throw GameException("ID3D11Device::CreateBuffer() failed.");

}

}

The ModelDemo::Initialize() method loads the Sphere.obj file by instantiating a Model object. If loading succeeds, there is exactly one mesh for this particular object, which is retrieved through the Model::Meshes() accessor. This mesh is used to initialize the vertex and index buffers.

You’ve already examined the Mesh::CreateIndexBuffer() method, but new in Listing 15.7 is the ModelDemo::CreateVertexBuffer() method. The last few lines of this method build the vertex buffer and are the same as for the manually constructed vertices of the cube demo. But in this method, you build your BasicEffectVertex array dynamically from the data stored in the mesh. Note the test for vertex colors: If the mesh contains vertex colors, they are used. Otherwise, a random color is assigned to each vertex (assign a solid color, if you prefer).
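The vertex-assembly logic can be examined without any Direct3D types. The following is a minimal sketch under stated assumptions: Float3, Float4, and ColoredVertex are hypothetical stand-ins for XMFLOAT3, XMFLOAT4, and BasicEffectVertex, and BuildVertices() takes a single fallback color instead of generating a random color per vertex.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for the DirectXMath and demo vertex types.
struct Float3 { float x, y, z; };
struct Float4 { float x, y, z, w; };
struct ColoredVertex { Float4 position; Float4 color; };

// Builds one vertex per source position. If the mesh supplies colors
// (colors != nullptr), they are used; otherwise every vertex receives
// the fallback color.
std::vector<ColoredVertex> BuildVertices(const std::vector<Float3>& positions,
                                         const std::vector<Float4>* colors,
                                         const Float4& fallbackColor)
{
    assert(colors == nullptr || colors->size() == positions.size());

    std::vector<ColoredVertex> vertices;
    vertices.reserve(positions.size());
    for (std::size_t i = 0; i < positions.size(); i++)
    {
        const Float3& p = positions[i];
        const Float4& color = (colors != nullptr) ? (*colors)[i] : fallbackColor;

        // Positions are promoted to homogeneous coordinates with w = 1.
        const ColoredVertex vertex = { { p.x, p.y, p.z, 1.0f }, color };
        vertices.push_back(vertex);
    }
    return vertices;
}
```

The resulting array is what the buffer description and subresource data at the end of the method would refer to.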

The ModelDemo::Draw() method is identical to the cube demo, except that you specify mIndexCount as the first argument to the ID3D11DeviceContext::DrawIndexed() method.

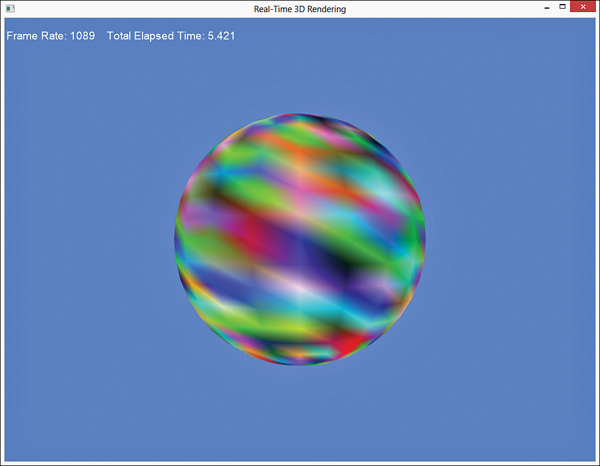

Integrate this demo into your RenderingGame class, and you’ll see output similar to Figure 15.1.

Texture Mapping

Let’s extend the work in the model demo to apply a texture. Start by creating a new class called TexturedModelDemo that begins as a copy of the ModelDemo class. Next, add the following class members:

ID3D11ShaderResourceView* mTextureShaderResourceView;

ID3DX11EffectShaderResourceVariable* mColorTextureVariable;

The ID3D11ShaderResourceView interface specifies a resource (for example, a texture) that a shader can use. The ID3DX11EffectShaderResourceVariable interface is the Effects 11 type that represents the shader variable that will accept a shader resource (for example, the HLSL Texture2D type).

For this demo, you’ll use the TextureMapping.fx effect that you authored back in Chapter 5, “Texture Mapping.” To refresh your memory, this effect has a WorldViewProjection matrix and a ColorTexture as shader constants, and the vertex shader accepts the object position and texture coordinates for each vertex. You also want to rename the main10 technique to main11 and declare it with the technique11 keyword.

Because this effect has a different input signature, you need a different vertex structure. Instead of the BasicEffectVertex type, declare the TextureMappingVertex structure as follows:

typedef struct _TextureMappingVertex

{

XMFLOAT4 Position;

XMFLOAT2 TextureCoordinates;

_TextureMappingVertex() { }

_TextureMappingVertex(XMFLOAT4 position, XMFLOAT2

textureCoordinates)

: Position(position), TextureCoordinates(textureCoordinates) {

}

} TextureMappingVertex;
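The memory layout of a vertex structure determines the byte offsets and stride you supply when describing the vertex to Direct3D. The following sketch checks those numbers at compile time; Float4, Float2, and TexturedVertex are hypothetical stand-ins that mirror XMFLOAT4, XMFLOAT2, and the structure above, and the constant names are assumptions made for the example.

```cpp
#include <cstddef>

// Hypothetical stand-ins: plain structures of four and two floats.
struct Float4 { float x, y, z, w; };
struct Float2 { float x, y; };

struct TexturedVertex
{
    Float4 position;
    Float2 textureCoordinates;
};

// Byte offset of each element from the start of the vertex.
constexpr std::size_t PositionOffset = offsetof(TexturedVertex, position);
constexpr std::size_t TextureCoordinateOffset =
    offsetof(TexturedVertex, textureCoordinates);

// Distance in bytes from one vertex to the next within a vertex buffer.
constexpr std::size_t VertexStride = sizeof(TexturedVertex);

static_assert(PositionOffset == 0, "Position starts the vertex.");
static_assert(TextureCoordinateOffset == 16, "Four floats precede the texture coordinates.");
static_assert(VertexStride == 24, "Six floats per vertex, with no padding.");
```

These are the kinds of values that the AlignedByteOffset member of D3D11_INPUT_ELEMENT_DESC and the stride passed when binding a vertex buffer need to agree with.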

The remaining changes lie within the TexturedModelDemo class implementation. Listing 15.8 presents the TexturedModelDemo::Initialize(), TexturedModelDemo::Draw(), and TexturedModelDemo::CreateVertexBuffer() methods. Visit the companion website for a complete class implementation.

Listing 15.8 The TexturedModelDemo Class Implementation (Abbreviated)

void TexturedModelDemo::Initialize()

{

SetCurrentDirectory(Utility::ExecutableDirectory().c_str());

// Compile the shader

UINT shaderFlags = 0;

#if defined( DEBUG ) || defined( _DEBUG )

shaderFlags |= D3DCOMPILE_DEBUG;

shaderFlags |= D3DCOMPILE_SKIP_OPTIMIZATION;

#endif

ID3D10Blob* compiledShader = nullptr;

ID3D10Blob* errorMessages = nullptr;

HRESULT hr = D3DCompileFromFile(L"Content\\Effects\\TextureMapping.fx",

nullptr, nullptr, nullptr, "fx_5_0", shaderFlags, 0, &compiledShader, &errorMessages);

if (errorMessages != nullptr)

{

GameException ex((char*)errorMessages->GetBufferPointer(), hr);

ReleaseObject(errorMessages);

throw ex;

}

if (FAILED(hr))

{

throw GameException("D3DCompileFromFile() failed.", hr);

}

// Create an effect object from the compiled shader

hr = D3DX11CreateEffectFromMemory(compiledShader->

GetBufferPointer(), compiledShader->GetBufferSize(), 0, mGame->

Direct3DDevice(), &mEffect);

if (FAILED(hr))

{

throw GameException("D3DX11CreateEffectFromMemory() failed.", hr);

}

ReleaseObject(compiledShader);

// Look up the technique, pass, and WVP variable from the effect

mTechnique = mEffect->GetTechniqueByName("main11");

if (mTechnique == nullptr)

{

throw GameException("ID3DX11Effect::GetTechniqueByName() could not find the specified technique.", hr);

}

mPass = mTechnique->GetPassByName("p0");

if (mPass == nullptr)

{

throw GameException("ID3DX11EffectTechnique::GetPassByName() could not find the specified pass.", hr);

}

ID3DX11EffectVariable* variable = mEffect->GetVariableByName

("WorldViewProjection");

if (variable == nullptr)

{

throw GameException("ID3DX11Effect::GetVariableByName() could not find the specified variable.", hr);

}

mWvpVariable = variable->AsMatrix();

if (mWvpVariable->IsValid() == false)

{

throw GameException("Invalid effect variable cast.");

}

variable = mEffect->GetVariableByName("ColorTexture");

if (variable == nullptr)

{

throw GameException("ID3DX11Effect::GetVariableByName() could not find the specified variable.", hr);

}

mColorTextureVariable = variable->AsShaderResource();

if (mColorTextureVariable->IsValid() == false)

{

throw GameException("Invalid effect variable cast.");

}

// Create the input layout

D3DX11_PASS_DESC passDesc;

mPass->GetDesc(&passDesc);

D3D11_INPUT_ELEMENT_DESC inputElementDescriptions[] =

{

{ "POSITION", 0, DXGI_FORMAT_R32G32B32A32_FLOAT, 0, 0, D3D11_INPUT_PER_VERTEX_DATA, 0 },

{ "TEXCOORD", 0, DXGI_FORMAT_R32G32_FLOAT, 0, D3D11_APPEND_ALIGNED_ELEMENT, D3D11_INPUT_PER_VERTEX_DATA, 0 }

};

if (FAILED(hr = mGame->Direct3DDevice()->CreateInputLayout

(inputElementDescriptions, ARRAYSIZE(inputElementDescriptions),

passDesc.pIAInputSignature, passDesc.IAInputSignatureSize,

&mInputLayout)))

{

throw GameException("ID3D11Device::CreateInputLayout() failed.", hr);

}

// Load the model

std::unique_ptr<Model> model(new Model(*mGame, "Content\\Models\\Sphere.obj", true));

// Create the vertex and index buffers

Mesh* mesh = model->Meshes().at(0);

CreateVertexBuffer(mGame->Direct3DDevice(), *mesh, &mVertexBuffer);

mesh->CreateIndexBuffer(&mIndexBuffer);

mIndexCount = mesh->Indices().size();

// Load the texture

std::wstring textureName = L"Content\\Textures\\EarthComposite.jpg";

if (FAILED(hr = DirectX::CreateWICTextureFromFile(mGame->

Direct3DDevice(), mGame->Direct3DDeviceContext(), textureName.c_str(),

nullptr, &mTextureShaderResourceView)))

{

throw GameException("CreateWICTextureFromFile() failed.", hr);

}

}

void TexturedModelDemo::Draw(const GameTime& gameTime)

{

ID3D11DeviceContext* direct3DDeviceContext =

mGame->Direct3DDeviceContext();

direct3DDeviceContext->IASetPrimitiveTopology(D3D11_PRIMITIVE_TOPOLOGY_TRIANGLELIST);

direct3DDeviceContext->IASetInputLayout(mInputLayout);

UINT stride = sizeof(TextureMappingVertex);

UINT offset = 0;

direct3DDeviceContext->IASetVertexBuffers(0, 1, &mVertexBuffer,

&stride, &offset);

direct3DDeviceContext->IASetIndexBuffer(mIndexBuffer, DXGI_FORMAT_R32_UINT, 0);

XMMATRIX worldMatrix = XMLoadFloat4x4(&mWorldMatrix);

XMMATRIX wvp = worldMatrix * mCamera->ViewMatrix() *

mCamera->ProjectionMatrix();

mWvpVariable->SetMatrix(reinterpret_cast<const float*>(&wvp));

mColorTextureVariable->SetResource(mTextureShaderResourceView);

mPass->Apply(0, direct3DDeviceContext);

direct3DDeviceContext->DrawIndexed(mIndexCount, 0, 0);

}

void TexturedModelDemo::CreateVertexBuffer(ID3D11Device* device, const Mesh& mesh, ID3D11Buffer** vertexBuffer) const

{

const std::vector<XMFLOAT3>& sourceVertices = mesh.Vertices();

std::vector<TextureMappingVertex> vertices;

vertices.reserve(sourceVertices.size());

std::vector<XMFLOAT3>* textureCoordinates = mesh.

TextureCoordinates().at(0);

assert(textureCoordinates->size() == sourceVertices.size());

for (UINT i = 0; i < sourceVertices.size(); i++)

{

XMFLOAT3 position = sourceVertices.at(i);

XMFLOAT3 uv = textureCoordinates->at(i);

vertices.push_back(TextureMappingVertex(XMFLOAT4(position.x,

position.y, position.z, 1.0f), XMFLOAT2(uv.x, uv.y)));

}

D3D11_BUFFER_DESC vertexBufferDesc;

ZeroMemory(&vertexBufferDesc, sizeof(vertexBufferDesc));

vertexBufferDesc.ByteWidth = sizeof(TextureMappingVertex) *

vertices.size();

vertexBufferDesc.Usage = D3D11_USAGE_IMMUTABLE;

vertexBufferDesc.BindFlags = D3D11_BIND_VERTEX_BUFFER;

D3D11_SUBRESOURCE_DATA vertexSubResourceData;

ZeroMemory(&vertexSubResourceData, sizeof(vertexSubResourceData));

vertexSubResourceData.pSysMem = &vertices[0];

if (FAILED(device->CreateBuffer(&vertexBufferDesc,

&vertexSubResourceData, vertexBuffer)))

{

throw GameException("ID3D11Device::CreateBuffer() failed.");

}

}

In the TexturedModelDemo::Initialize() method, the TextureMapping.fx file is compiled, the effect object is created, and the main11 technique and p0 pass are located. Then the WorldViewProjection and ColorTexture variables are queried. Next, the input layout is created. Note the updated D3D11_INPUT_ELEMENT_DESC array that specifies the POSITION and TEXCOORD semantics for the two shader inputs.

Next, the Sphere.obj model is loaded and the vertex and index buffers are created. Note that the TexturedModelDemo::CreateVertexBuffer() method now builds TextureMappingVertex objects with positions and texture coordinates read from the model. No vertex colors are used for this shader.
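The conversion step inside CreateVertexBuffer() can be isolated from the Direct3D buffer creation. The following is a minimal sketch of just that step, under the assumption that positions and texture coordinates arrive as parallel arrays of three-component values, as they do in the listing. Float2, Float3, Float4, and BuildVertices() are hypothetical stand-ins introduced for illustration; they are not part of the demo or of DirectXMath.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for XMFLOAT2, XMFLOAT3, and XMFLOAT4.
struct Float2 { float x, y; };
struct Float3 { float x, y, z; };
struct Float4 { float x, y, z, w; };

struct TextureMappingVertex
{
    Float4 Position;
    Float2 TextureCoordinates;
};

// Interleaves parallel position and texture-coordinate arrays into one
// vertex array. Positions are promoted to homogeneous coordinates (w = 1),
// and the third texture-coordinate component is dropped.
std::vector<TextureMappingVertex> BuildVertices(
    const std::vector<Float3>& positions,
    const std::vector<Float3>& textureCoordinates)
{
    // Every vertex needs exactly one texture coordinate.
    assert(positions.size() == textureCoordinates.size());

    std::vector<TextureMappingVertex> vertices;
    vertices.reserve(positions.size());

    for (std::size_t i = 0; i < positions.size(); ++i)
    {
        const Float3& position = positions[i];
        const Float3& uv = textureCoordinates[i];

        vertices.push_back(TextureMappingVertex{
            Float4{ position.x, position.y, position.z, 1.0f },
            Float2{ uv.x, uv.y } });
    }

    return vertices;
}
```

Separating the conversion from the buffer creation in this way is only one possible design; it makes the interleaving logic easy to check without a Direct3D device.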

Finally, the EarthComposite.jpg file is loaded into the mTextureShaderResourceView member using the CreateWICTextureFromFile() function. This function resides in the DirectX namespace and is declared in the WICTextureLoader.h header file. This header file comes from the DirectXTK library, which Chapter 3, “Tools of the Trade,” introduced. Be sure you’ve set up your projects to correctly reference this library. You can find the EarthComposite.jpg file on the companion website.

The TexturedModelDemo::Draw() method is similar to the original model demo. It now uses the size of the TextureMappingVertex struct when calling the ID3D11DeviceContext::IASetVertexBuffers() method and calls the SetResource() method of the mColorTextureVariable member to update the texture.

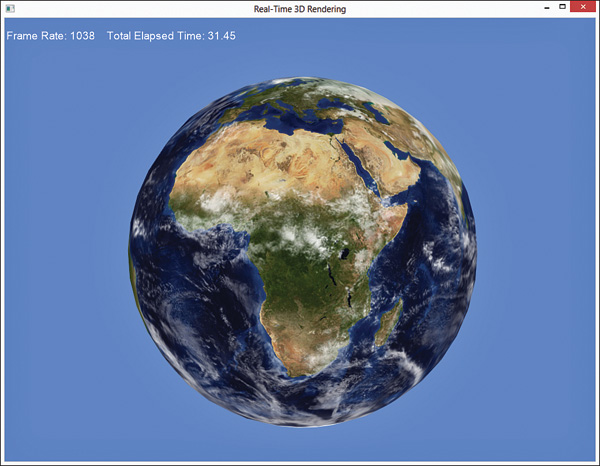

Figure 15.2 shows the output of the textured model demo.

Figure 15.2 The output of the textured model demo. (Original texture from Reto Stöckli, NASA Earth Observatory. Additional texturing by Nick Zuccarello, Florida Interactive Entertainment Academy.)

Summary

In this chapter, you learned how to use 3D models in your applications. You employed the Open Asset Import Library to read various model file formats, and you authored a set of classes to represent models at runtime. Then you wrote two demos to exercise this system, and you learned how to apply 2D textures to your models.

In the next chapter, you author a material system to support the myriad shaders you’ve authored and facilitate code reuse.

Exercises

1. Create a build-time content pipeline from the code you’ve written in this chapter. This can be as simple as a command-line application that accepts a single input file and produces a single output, or your application can operate on a batch of file inputs. Ideally, your content pipeline will be presented through a graphical user interface.

2. Experiment with loading various model formats. For example, write demos to load .x and .3ds files (DirectX and 3D Studio Max formats). If your models use .dds texture files, investigate the CreateDDSTextureFromFile() function from the DirectXTK library.
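As a possible starting point for the second exercise, the following sketch picks a texture loader from the file extension. It is an assumption about how you might structure the choice, not something the demo does: the TextureLoader enumeration and the ChooseTextureLoader() function are hypothetical, and the actual calls to CreateDDSTextureFromFile() and CreateWICTextureFromFile() are left to you.

```cpp
#include <algorithm>
#include <cassert>
#include <cwctype>
#include <string>

// Hypothetical tag for the two DirectXTK loaders mentioned in this chapter.
enum class TextureLoader
{
    Dds, // Use CreateDDSTextureFromFile()
    Wic  // Use CreateWICTextureFromFile()
};

// Chooses a loader from the file extension, ignoring case. Files with no
// extension, or with any extension other than .dds, fall back to WIC.
TextureLoader ChooseTextureLoader(const std::wstring& fileName)
{
    const std::size_t dot = fileName.find_last_of(L'.');
    if (dot == std::wstring::npos)
    {
        return TextureLoader::Wic;
    }

    // Lowercase the extension so that ".DDS" and ".dds" compare equal.
    std::wstring extension = fileName.substr(dot);
    std::transform(extension.begin(), extension.end(), extension.begin(),
        [](wchar_t c) { return static_cast<wchar_t>(std::towlower(c)); });

    return (extension == L".dds") ? TextureLoader::Dds : TextureLoader::Wic;
}
```

You could call ChooseTextureLoader(textureName) just before loading the texture in Initialize() and branch on the result.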