Chapter 1. The Network Management World Must Change: Why Should You Care?

This chapter covers

The latest trends in network management

Why you should care about these trends

Why network engineers need new skills for the future

Why existing technologies such as CLI, SNMP, NetFlow/IPFIX, syslog, and so on are not sufficient for network management and automation

Why working with multiple protocols and data models is a pain. Why mapping data models is a source of troubles.

Why automation, automation, automation?

By the end of this chapter, you will understand why the typical ways of managing networks are not sufficient in today’s world, why all the trends in the networking industry these days converge into more programmability, and why the move toward more automation is a compulsory transformation. If you are a network engineer, you will recognize the limitations of existing practices, such as using the command-line interface (CLI), Simple Network Management Protocol (SNMP), NetFlow/IP Flow Information eXport (IPFIX), syslog, and so on. Also, as a network operator, you will understand the challenges of needing to adapt, both your knowledge and your way of working, to be more efficient in managing the services in a network.

Introduction

The Internet changed all aspects of life for virtually everyone. Most of it is taken it for granted these days. When you hear in your house, “Hey, there is no Internet!”, you know you must treat this like a top-priority case in any respectable technical assistance center in the world—assuming you want to keep some level of peace in your household. Granted, the Internet is more important today than ever before, whether for business, education, social networking, banking, or simply leisure. Expressed differently, the Internet continues to get more and more vital.

Behind the Internet infrastructure, network operators work hard to design, deploy, maintain, and monitor networks. What are those network operators actually doing? Trying to categorize the network operator job into different tasks (starting from the old but still sometimes relevant FCAPS model, which stands for Fault, Configuration, Accounting, Performance, and Security Management), the FCAPS model is an international standard, defined by the International Telecommunications Union (ITU),1 that describes the various network management areas.

Table 1-1, the ITU-T FCAPS Model Reference ITU-T M.3400 and ISO / IEC 7498-4 (Open Systems Interconnection—Basic Reference Model, Part 4: Management Framework), describes the main objectives of each functional area in the FCAPS model.

Table 1-1 ITU-T FCAPS Model

Management Functional Areas (MFAs) |

Management Function Set Groups |

|---|---|

Fault |

Alarm Surveillance; Fault Localization & Correlation; Testing; Trouble Administration; Network Recovery |

Configuration |

Network Planning & Engineering, Installation; Service Planning & Negotiation; Discovery; Provisioning; Status & Control |

Accounting |

Usage Measurement, Collection, Aggregation, Mediation; Tariffing & Pricing |

Performance |

Performance Monitoring & Control; Performance Analysis & Trending; Quality Assurance |

Security |

Access Control & Policy; Customer Profiling; Attack Detection, Prevention, Containment and Recovery; Security Administration |

Note

Every respectable network management book contains a reference to FCAPS, so we’ll quickly cover this topic and move on.

This model offers the benefit of segmenting the administration work into more independent and understandable aspects. Fault management is compulsory to analyze the fault situations and manage networks in a proactive way. Configuration management is essential, not only to instantiate new services, but also to improve services and fix mistakes. Accounting management helps with the traffic use measurements, for billing or simply collection. Performance management deals with the on-demand or continuous monitoring of services, in order to proactively detect network faults or degradations.

The one entry in FCAPS that may be more independent is security: With the growing pressure to secure network devices (wireless access points, switches, and routers) and all network-attached devices (PCs, tablets, smartphones, and so on), security engineer became a job all its own.

Note that, a couple of years ago, a new term started to appear next to FCAPS: FCAPS + E (Energy Management). All these devices consume a lot of energy, including core routers from the Internet, data centers full of servers, and also the devices connected to the Internet. For example, an IP phone consumes as little as 2.3 W (when idle) but up to 14.9 W, depending on the Power over Ethernet (PoE, Standard 802.3AF) classification and the enabled functionality.

Today’s and tomorrow’s customers care about services. The only time they care about the network is when it is not working. Providers who deliver services need to deliver them on demand and provide a great end-user customer experience. When a service is not operating to customer expectations, the provider needs to fix the service as quickly as possible. Existing methods of configuring, monitoring, and troubleshooting service-impacting network issues do not meet customer needs for on-demand, high-quality, instant services. Automating all aspects of service delivery is required—from the application server through the network connection to the end consumer. Automated monitoring of service quality, issue detection, and automated recovery are required to deliver a high-quality service experience. Programmatic automation is the key to large-scale and high-quality service delivery.

Network engineers who embrace programmatic automation will be key to the next generation of service delivery. By extending their skills to implement model-driven programmatic automation, they will be valuable contributors, helping their customers deliver the next generation of services.

The five (or six if you count Energy) FCAPS management categories require more automation and more programmability. The time for disconnected network management systems based on these categories is over. The network needs to be managed as a single entity. Obviously, automation of a single category is a step in the right direction. However, automating network configuration without integrating fault management, without looking at the performance of the network and services, without thinking about the security aspects, and without collecting the accounting information, does not provide a full automation picture. In that sense, the FCAPS categories are being blurred these days. Engineers coming from different FCAPS backgrounds will need to integrate their domain-specific knowledge into a company-wide, FCAPS-wide, common automation framework, basically working toward a DevOps model, which is addressed later in this chapter.

Network operators can clearly envision the services model of the future: All network elements are part of a single, programmable fabric. Services can be assembled from a wide range of virtualized devices on the fly, each automatically advertising their programmability capabilities. They can be designed at a high level, independent of the complexities and device dependencies of the heterogeneous underlying infrastructure.

As an example, network service chaining, also known as service function chaining (SFC),2 is a feature that uses software-defined networking (SDN) capabilities to create a service chain of connected network services (such as traffic shaper, intrusion prevention system, intrusion detection system, content filtering, WAN acceleration, firewall, and so on) and connect them in a virtual chain. Network operators can automate SFC end-to-end and provision in order to create or even re-order the services within minutes.

This model is now achievable through the power of SDN and network function virtualization (NFV). Implementing it, however, requires a fully programmable network, whose server resources can scale as the demand grows. Virtualized network functions (VNFs) must be remotely programmable by an SDN controller, and configurations and changes must be entirely automated, without requiring manual intervention by human network operators. To make this possible, equipment vendors, VNF vendors, and server vendors across the industry must evolve their virtualized devices to be programmable in SDN environments. To do it, a standard configuration management protocol is needed to provide secured, reliable transport and support network-wide transactions in the creation, modification, and deletion of configuration information in VNFs.

Going one step further in the services model of the future, the framework should include service assurance. When a service is degraded, an event automatically triggers the network to reconfigure itself. Opening the door for intent-based networking, network operators must specify the service’s characteristics (connectivity, bandwidth, performance metrics such as max delay/loss/jitter, and so on) as opposed to the network configuration. From there, the network will optimize its configuration to support all the services.

The industry zeroed in on NETCONF as a configuration protocol, along with the YANG data modeling language. Originally designed as a management protocol, the NETCONF/YANG combination provides a simple, standardized way to enable a programmable interface to any device or service. Using YANG as the data modeling language in the networking industry initiates a transformational change in network operation: data model–driven management. Throughout the book, you will discover the NETCONF/YANG combination’s clear benefits to programmability, including the following:

Faster service deployment enabled by automation

Fewer service deployment errors enabled by programmatic validation of configuration changes

Improved serviceability (that is, faster time to diagnose and repair issues)

Reduced operating costs by reducing legacy network engineering expenses

As network engineer, you should invest in yourself and become the network engineering of the future using programmatic automation to improve your productivity and the customer experience. This book is a key step in your transformation.

The following sections examine industry trends, some initiated years ago. Most if not all of these trends point to transformation change and network programmability with YANG.

The Industry Has Changed: What Are the Trends?

This section analyzes some trends in the last few years in the networking industry. Analyzing all these trends helps you understand why some operators are embracing more automation and, if you’re not convinced yet, why all operators must adopt data model–driven management now.

Reduced Deployment Time

Operators test router images extensively before going into production. This is obviously a required procedure. In the not-too-distant past, it was not unusual to test new router software for three to six months before effectively deploying a new service in production. This included a mix of manual configuration and network management testing.

More automation in routers and switches was already a goal at that time, but the traditional development lifecycle for equipment vendors was historically (too) long. Let’s take a typical example from an SNMP management system. In this example, the equipment is missing Management Information Base (MIB) modules or some objects within an MIB module (more on SNMP and MIB modules in the section “SNMP: For Monitoring But Not for Configuration”). The expected lifecycle for this missing automation piece is as follows: reporting this feature request to the equipment vendor product management, “battling” for the relative position in the roadmap compared to all other requests, waiting for the implementation, validating a test image, validating the official image once available, and, finally, upgrading the production routers. Needless to say, a long time passed between the feature request and the new production service.

Deploying new services is the only way for operators to grow their revenue, and time to market is key. Spending months to validate a new network element image and a new service is not viable any longer. The rate of deploying new services must improve all the time. Today, operators want to “smoke test” (the preliminary testing to reveal simple failures severe enough to reject a prospective software release) their images, services, or even entire points of presence (PoPs) on virtual devices—before the real deployment. In cloud environments, new services are expected to be up and running in seconds.

Automation can help reduce the deployment time. Thanks to programmability, new features are validated, new services are deployed, and routers are upgraded in no time. This requires consistent and complete instrumentation application programming interfaces (APIs) in network devices with the end goal that everything that can be automated in networking vendors is automated. As a consequence, operators reduce the service deployment time and offer differentiated services compared to the competition. Adapting the management software is typically faster than waiting for the traditional development lifecycle for equipment vendors.

CLI Is No Longer the Norm (If a Feature Cannot Be Automated, It Does Not Exist)

While it may be enjoyable the first couple of times to configure networks manually for learning and testing, the CLI is not a scalable way to introduce new features in production networks. There have been countless “network down” situations due to manual misconfiguration, sometimes called “fat-finger typing.” A typical example is with access list management: Some, if not most, network engineers have inadvertently locked themselves out from the router configuration while updating an access list at least once in their career. It is so easy to mistype an IP address. (You are probably smiling right now, remembering some similar experience in the past.)

The CLI is an interface for configuring and monitoring network elements, designed for consumption by users who will think through an extra space or an added comma, or even a submenu. Although the CLI is not an API, you unfortunately had to treat it as one because that is all you had for so long. However, using the CLI for automation is neither reliable nor cost-effective.

First off, many service-related configuration changes involve more than one device, such as the point-to-point L3VPN example, which requires the configuration of four different devices, or a fully meshed L3VPN, which could involve many more devices. Indeed, every new addition to the fully meshed L3VPN network can entail updating all L3VPN endpoints, updating an access list, introducing an IP service level agreement (SLA) type of probe, and so on. In these examples, only networking devices are discussed. Many modern services these days encompass more than traditional physical networking devices; they include virtual machines, containers, virtual network functions, and so on. The fact is that configuration changes are becoming more and more complex.

Note

An IP SLA is an active method of monitoring and reliably reporting on network performance. “Active” (as opposed to “passive”) monitoring refers to the fact that an IP SLA continuously generates its own traffic across the network and reports on it in real time. Typically, IP SLA probes monitor performance metrics such as delay, packet loss, and jitter.

Second, using highly trained network experts (for example, CCIEs) to insert new commands on network elements is not very cost-effective, unless the goal is obviously to troubleshoot a problem. As the frequency of changes is rapidly increasing, the idea here is to get the human out of the loop for service configuration and monitoring. As a matter of fact, due to the frequency of changes in the network, it became humanly impossible deal with the CLI, as mentioned by Sam Aldrin, Google architect, in his “Data-Driven Ops at Scale: Sensors, Telemetry and Analytics in Large Data Center Networks” talk at the MPLS+SDN+NFVVOLRD conference in 2016.

Third, and most important, although the CLI is human-friendly, it is not suitable for automation. Consider the following:

The CLI is not standardized. While the networking device configuration CLI is similar, it is not consistent from a syntax and semantic point of view across vendors or across a specific vendor’s set of operating systems.

There are dependency issues when configuring devices via the CLI. In some cases, a CLI command for configuring an interface must be entered before configuring a VLAN. If those steps aren’t executed in the proper order, the configuration fails—or worse, it’s only partially completed.

In addition to those dependencies, the CLI provides limited error reporting—at least not error reporting in a format easily consumable by scripts.

The CLI does not produce any structured output. Therefore, the only way to extract information from a show command is via “screen scraping” (or extracting data from output using regular expression pattern matching). Finally, the “show commands” change frequently, to display more features, more counters, and so on. This issue is that even the smallest change to a show command, such adding a space to the output, might break an Expect script that extracts a specific value.

Can you at least use some CLI-based tools? Looking back at the example of deploying those L3VPNs in the previous section, the NMS involved at that time used Expect3 scripts for device configuration and monitoring, with all the known limitations. Expect is an extension to the Tcl scripting language,4 to automate interactions with text-based interfaces for protocols such as Telnet, Secure Shell (SSH), and so on. Typically, you open a terminal session to a network element, introduce a command, and analyze the answer. For example, when you’re logging on to a router, the script codes that the next anticipated word is “login,” and you insert the login username string; then the next anticipated word is “password,” to which the script responds with the password, and so on. This language works fine until the device provides an unexpected answer. A typical example is a customized prompt, changed from “login:” to “Welcome, please enter your credentials:”. At this point, the script, which expected “login:”, receives an unexpected answer and it fails. Another typical example from the technical assistance center (TAC) is the Authentication, Authorization, and Accounting (AAA) configuration that changes the “login:” and “password:” prompts to “username:” and “password:”. The Expect scripts must be adapted for those new prompts. The Expect script customizations, which rely on the CLI for all the different use cases, cost a lot of time, money, and unplanned outages.

It is not impossible to use the CLI for management, but it is expensive and difficult, as the industry has noted well over the last three decades. In fact, this was identified as one of the main reasons why networking did not evolve as fast as the rest of the IT industry by the Stanford Clean Slate project,5 the foundation of the SDN movement (see “The Future of Networking, and the Past of Protocols,”6 by Scott Shenker et al.). On the tooling front, there is an abundance of libraries and tools to interact with the CLI. Next to Expect, tools such as Paramiko7 in Python, Ansible,8 Puppet,9 Chef,10 and so on exist, but when it comes to the CLI, there is no machine-consumable abstraction to make the work easy and more scalable. This results in spending time chasing CLI changes rather than focusing on the goals of automation. On top of that, CLI management has some unforeseen operational consequences. For example, some operators are afraid of upgrading their devices, fearing that the automation scripts will break, up to the point where even required security patches are delayed or not applied. As a side note, this fear of upgrading also explains why the adoption of data model”“driven automation was slow: Starting from a clean state is impossible for operators, who have to rely on existing CLI-based automation scripts for legacy devices. Considering the time between a new protocol specification and its deployment in networks, it is interesting to observe a clear difference between the lifecycle of network management protocols and other types of protocols. A routing protocol might take a couple of years from specification to deployment, whereas network management protocols usually take a decade. The reasons are practical: You need to upgrade the devices and controller/NMS to benefit from the new management protocol, while also updating the CLI-based scripts as backup plan, and at the same time still managing legacy devices that cannot be upgraded.

In conclusion, some operators rightly assert nowadays that if a feature cannot be automated, it does not exist. (This assertion actually comes from a Google engineer during a presentation for a new feature without programmability.) That leads to the development of APIs to monitor and configure the network elements directly...and the full automation story.

Hardware Commoditization and Disaggregation

Another trend in the industry is hardware commoditization, mainly Linux based, as opposed to more exotic purpose-built hardware, along with software disaggregation from the hardware.

In the data center, it is a well-accepted practice to order servers from one hardware company and to buy, independently, the operating system best suited for your specific needs: Windows, Linux, another Unix variant, and so on. These days, you can even easily support multiple operating systems with virtualization.

In the network world, equipment vendors have historically provided their own hardware with their own software, running on proprietary Application-Specific Integrated Circuits (ASICs), with the consequence that customers had to buy a “package” of a specific software tied to a hardware. Although this hardware equipment is optimized for performance in the core, at the edge, in the data center...you name it, the challenge of managing a complete network increases. Indeed, the costs of validating, managing, and maintaining features/services across the network explode when different device types have different operating systems, different feature sets, different CLIs, different management, and different licenses—even sometimes from the same vendors!

There is a growing tendency in the industry to disaggregate the software from the hardware, starting with the data center operation. Off-the-shelf components, with common chips, make up the hardware part of a solution. These are often referred to as white boxes. Historically, the massively scalable data centers, also known as hyperscalers, were the first ones that automated at large scale (for example, with top-of-rack switches). For this automation, they requested an SDK (software development kit) and a related set of APIs. Automation at scale when using the same switch type, from the same vendor, with the same software version is not that difficult. However, the hyperscalers were also the first ones that wanted to go one step further in the disaggregation, with the ability to order or develop their own networking software, mainly from open source projects.

The end goal is to be able to assemble white boxes as unified hardware, with Linux as the operating system, and specific applications for the different networking functions—potentially buying the BGP function from one vendor, the interior gateway protocol (IGP) from another one, and the RADIUS and management functions from a third one. The future is that vendors compete on an application basis: features, robustness, quality, support, upgrade, and so on.

For operators following this trend, the advantages are clear:

Thanks to Linux on networking devices, network and server engineers now speak the same language as their respective devices. They use the same tools, thus blurring the boundary between server and network management, and reducing the support costs (see the next section, “The DevOps Time”). Not only “routers” and “switches” run on Linux, but the Linux environment offers a plethora of tools and applications, including management operations.

Using Linux implies a broader common knowledge: people are better trained, starting directly in school. With this hardware commoditization, networking is perceived as not being that hard any longer because the vendor CLI is not unique. In other words, different equipment vendors are no longer competing on the CLI front. Instead of hiring people who know the Cisco or Juniper CLI, operators can hire candidates with Linux and scripting skills. So senior network engineers should focus less on vendor specifics and more on the broader network architecture and technology underpinnings. On the vendor front, certifications such as CCIE, (partly) based on the CLI knowledge, should be re-centered on programming the network independent of the CLI and on the operational aspects.

Having the same hardware all over in the network, as opposed to more exotic, purpose-built hardware, reduces the network complexity (with the disadvantage that a hardware bug would hit all platforms). Specific software and applications, on the same generic hardware, might optimize some devices. It is a fact that networks become more and more complex, with an increased operational cost, consequently, so any simplification is welcome. Disaggregation specifically means that vendors need a more open and modular platform. Note that disaggregation does not prevent traditional vendors from competing, using proprietary ASICs with their software to gain advantage. For example, Cisco is joining the trend of disaggregation in data center networks, saying it now allows data center customers to run its Nexus operating system (NX-OS) on third-party switches and to use any network operating system on its Nexus switches, thus meeting the demands of hyperscalers and large service providers. The network administrator has some freedom to select the best software either on generic hardware to reduce the cost or on the best hardware (for some extra performance optimization). Expressed this way, the disaggregation might be an opportunity for traditional equipment vendors to provide their software on generic platforms.

Decoupling the software and hardware implies the advantage of having two independent lifecycle managements, one for the software and one for the hardware. While dealing with two lifecycle managements adds a little to the complexity (traditionally the vendor assembled the software), disaggregation gives the added flexibility to pick the best of breed. In certain cases, that flexibility outweighs the complexity. A software upgrade is as simple as upgrading a Linux package. Replacing the software from one vendor with another, or even a different OS train from the same vendor, is easy because the software is just another package. As a Cisco example, moving from an IOS-XE train to an IOS-XR one should not require any hardware change. In the end, the requirement is to enable hardware innovation and software innovation to grow independently.

Network and server engineers can now focus on more business-oriented tasks as opposed to just network operation and maintenance. More and more, the network is becoming a business enabler, and the link between the business and the network is precisely the software. Quickly adapting the software to the business needs becomes key—for example, to add an application for performance probing, to supplement the network with virtual load balancers, to inject specific routes on top of an IGP for particular applications...you name it. With the time gained from automation (coming from disaggregation in this case, but not only from disaggregation), engineers will be the key enablers of networking innovations to serve the business needs.

In summary, hardware commoditization and disaggregation allow for and require more automation. Programmatic management based on YANG, discussed throughout the rest of the book, is a way to manage these new networks.

The DevOps Time

Networks engineers need to adapt. The only constant in this networking industry is change. So how does one adapt? In other words, how does one stay relevant in a couple of years? Interestingly, pretty much all network engineer job descriptions these days require some sort of scripting skills. Reflecting on this, you should learn scripting as a way to automate network management tasks, and not just server tasks with shell scripts. If you were to ask around which programming language to learn, the answer will consistently be “start with Python.”

Python is a modern programming language that is easy to read and write and yet is powerful enough to be used as a convenient tool for daily parsing tasks, performance management, and configuration. Many SDKs are provided in Python, and there is a vast number of available libraries. Useful scripts can be created in no time. Interacting with the Python command line adds to the fun of learning Python. As an example, a course such as “An Introduction to Interactive Programming in Python”11 from Coursera12 provides the required basics with weekly videos, quizzes, and mini-projects that master Python. With a few professionals teaching something interesting, a community around the world wanting to learn and ready to share its knowledge, you easily get a final project that looks like this YouTube video13 [Asteroids game in Python (coursera.org)].

Will all network engineers have to become full-time programmers in the future? No. The trend toward automation, SDN, cloud, virtualization, everything-as-a-service, and so on does not imply that network engineers will disappear. When a (young) application designer complains that his call to the API “get-me-a-new-service-over-the-network (bandwidth = infinite, packetloss = 0, one-way-delay = 0, jitter = 0, cost = 0)” doesn’t work as expected, you will still need a network engineer to troubleshoot. The best (that is, the most efficient) network engineers will be the ones with the ability to script their automated network-related tasks, whether for configuration or service assurance, and can modify their scripts on the fly. Instead of repeating a manual task, you must automate it!

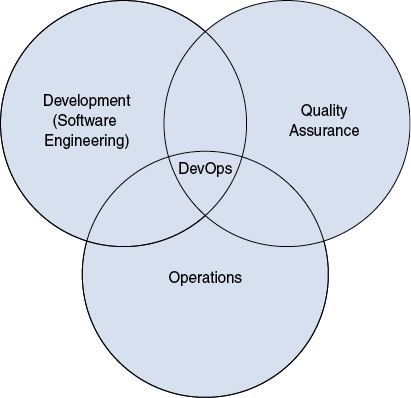

So, what is DevOps? The combination of Development and Operations, DevOps is a software engineering practice that aims at unifying the software development and software operations.

Why? Because software engineers do not necessarily understand networking, and networking engineers do not necessarily understand software, so combining forces to get the best out of both worlds makes sense.

DevOps advocates automation and monitoring at all steps of software construction, from integration, testing, and releasing to deployment and infrastructure management. Expressed differently, DevOps is the intersection of development, operations, and quality assurance, as it emphasizes the interdependence of applications (or services), development, and IT operations, as shown in Figure 1-1.

Figure 1-1 DevOps

This new DevOps mentality is about blending development and operations to provide quicker service deployment and iteration. As a practical example, it means that two historically distinct groups, the network support team and the application/server support team, now work as a single team, thus avoiding the ping-pong game of “this is a networking issue” versus “this is a server issue.” The networking nirvana is being able to manage network elements the same way you manage servers.

Note

From now on, we’ll stop making a distinction between network engineers and compute engineers (dealing with the servers), because in the future this distinction will completely disappear. Indeed, the networking and application worlds will come together. Unless we’re making a specific point, we’ll use the term “operation engineers” or “DevOps engineers” instead.

Software-Defined Networking

The new paradigm for many years now has been software-defined networking (SDN). Granted, although SDN is not a new term, it is an overloaded term—a buzzword that means many different things to many different people.

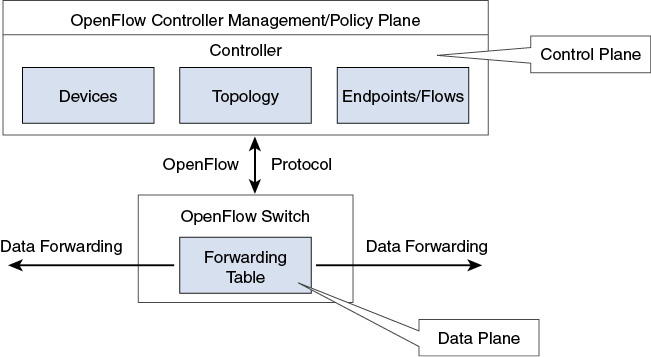

The first SDN discussions introduced the concept of the separation of the control and data plane entities, with a focus on OpenFlow (now part of the Open Networking Foundation15) as the open standard to configure the data plane—Forwarding Information Base (FIB), or Media Access Control (MAC) table.

Here are some of the use cases for configuring the control plane independent of an Interior Gateway Protocol (IGP) such as OSPF or IS-IS (Intermediate System to Intermediate System):

Research in terms of programmable data planes: traffic engineering, service insertion, tunneling, and so on

The ability to run control software on general-purpose hardware (commodity servers)

A centralized controller taking care of all forwarding aspects for the entire network

Typically, an OpenFlow controller configures the data plane, via the API, the CLI, or a GUI, in an OpenFlow switch, as depicted in Figure 1-2. This OpenFlow controller manages the control plane, for which it requires the full topology view and the endpoints/flows.

Figure 1-2 OpenFlow

Through the years, the notion of SDN has been evolving. OpenFlow, as a fundamental packet-forwarding paradigm, was actually an enabler—an enabler for more and easier network programmability. For example, the Open vSwitch Database Management Protocol (OVSDB, RFC 7047) is an open source effort to configure virtual switches in virtualized server environments. The OpenDaylight16 project, an open source controller project, did a great job of adding multiple configuration protocols as southbound interfaces, next to OpenFlow:

PCEP, the Path Computation Element communication Protocol (RFC 5440), is a communication protocol between a Path Computation Client (PCC) and a Path Computation Element (PCE), or between two PCEs, which includes path computation requests and path computation replies as well as notifications of specific states related to the use of a PCE in the context of Multiprotocol Label Switching (MPLS) and Generalized MPLS (GMPLS) traffic engineering.

Forces, which stands for FORwarding and Control Element Separation (RFC 5810), aims to define a framework and associated protocol(s) to standardize information exchange between the control and forwarding plane.

BGP-LS, which stands for BGP Link State Distribution (RFC 7752), is a mechanism by which link-state and traffic engineering information is collected from networks and shared with external components using the BGP routing protocol.

NETCONF/YANG provides a simple, standardized way to enable a programmable interface to any device or service (covered extensively in this book).

Therefore, the term SDN evolved to mean a variety of things: network virtualization in the cloud, dynamic service chains for service provider subscribers, dynamic traffic engineering, dynamic network configuration, network function virtualization, open and programmable interfaces, and so on. What is for sure is that SDN is much more than OpenFlow and simply splitting the control and data planes.

A pragmatic definition of SDN comes from David Ward’s foreword in the book SDN: Software Defined Networks,17 by Ken Gray and Tom Nadeau: “SDN functionally enables the network to be accessed by operators programmatically, allowing for automated management and orchestration techniques; application of configuration policy across multiple routers, switches, and servers; and the decoupling of the application that performs these operations from the network device’s operating system.”

Some might say this is actually a definition of DevOps, not SDN. The bottom line is that SDN, as a control plane separation paradigm, enabled more network programmability requirements, optimized speed and flexibility, and made a move from configuration time to software time.

In the end, SDN as a control plane separation paradigm (configuring the Routing Information Base [RIB] or the FIB) and DevOps (which some call SDN) are complementary: They use the same concepts and the same tools. As a practical example, a network operator might be configuring an IGP for distributed forwarding with tools based on NETCONF and YANG and inject specific policies on top of the IGP, directly in the RIB.

Network Function Virtualization

Another trend in the industry, again explaining why some operators are embracing more automation these days, is network function virtualization (NFV). NFV offers more flexibility to customers in terms of service deployments and greatly reduces the complexity and time-to-market associated with new services. For example, virtual functions such as firewall, virtual intrusion detection system, deep packet inspection, and so on, are now added via the click of a button thanks to lifecycle service orchestration. Previously, those functions were integrated into dedicated hardware appliances, which added to the overall cost—not only of the installation, the connectivity, and the physical space, but also the ongoing maintenance.

As mentioned in the whitepaper “Trends in NFV Management,”18 network operators have sound business reasons for embracing NFV. When network elements are virtualized instances rather than physical appliances, they can be provisioned and changed much more quickly. They scale dynamically with demand, allowing operators to spin up (or down) network resources for their customers as needed, in minutes or even seconds, rather than waiting weeks or months to deploy new hardware. They are automated and driven by machine logic rather than manual processes, thus driving down operational costs and complexity as well as reducing the risk of human error. And they radically shorten the time needed to move from receiving an order to generating revenue from a fully functional service. NFVs also allow operators more freedom to choose best-of-breed VNFs from a variety of vendors, and to easily move from one VNF to another due to superior pricing or features. The operator can take one VNF (say, a firewall) from one vendor and replace it with a similar VNF from another vendor—and accomplish this much more quickly and easily than replacing physical appliances.

Much of the discussion of VNF management among providers today focuses on onboarding—getting their VNFs booted and spun up in their initial configured state, placed in the right location, connected to the right physical and virtual networks, and ensuring that they have the appropriate resources and licenses. All of this “Day 1” and “Day 0” setup is important, and operators should be (and are) working to make it simpler. But often lost in the discussion is their responsibility for what happens “Day 1” and beyond, once VNFs are up and running. How do network operators link virtualized elements with service orchestration and other Operations Support System (OSS) and Business Support System (BSS) systems to allow for ongoing configuration changes? How do they automate the day-to-day management of multivendor VNFs in dynamic, large-scale networks?

Too many operators still view virtualization as a function of taking existing operating systems, booting them up in a virtual machine (VM), providing the same interfaces used when the product was a physical device, and that’s it. Performing some runtime configuration management has been done for a long time for all devices, the thinking goes, and it has worked just fine, so there is no reason to do anything different. However, virtualized environments bring a new set of challenges that the tried-and-true mechanisms used for conventional physical appliances simply cannot account for.

Ultimately, it will be time to move away from the concept of “configuring” network appliances, as we begin to view virtualized functions as software to be programmed. This book discusses how to use NETCONF and YANG to introduce a more standardized API into network elements and unlock the value of full NFV automation.

Elastic Cloud: Pay As You Grow

Historically, new companies that wanted to enter a new business, relying on networking for that purpose, had to buy a series of networking equipment and operate it even before the first customer purchase order. CapEx (capital expenditure) is the money spent on buying, maintaining, and improving the fixed assets, while OpEx (operating expenditure) is the ongoing cost for operating the product or the business. For new companies, the CapEx is the cost of the network itself, while the OpEx is the IT employees’ salaries (or the consulting fees) for operating the network. Not only does this CapEx require some nonnegligible amount of money to be invested by the young company, but ordering, installing, and operating this networking implies a delay before satisfying the first customer order. On top of that, sizing this network correctly might prove challenging for a new company: If it’s sized too small, this might impact the future services and company growth, and if it’s sized too big, this would be a waste of money.

These days, new businesses are not keen on investing such a huge amount of CapEx/OpEx on Day 1 for the networking aspects. Instead, having a linear cost as the business grows is the ideal solution: the more customers, the more revenue, and as a consequence the more investment in the infrastructure. This is the elastic concept, also known as “pay as you grow,” with an ideal CapEx linear investment starting at an amount close to zero. When the elastic concept involves networking, it refers to the dynamic adaption of the network based on load. The cloud is the typical way to offer some compute, storage, and networking features, in an elastic way, thanks to virtualization—hence the name “elastic cloud.”

Here is a typical example: A yangcatalog.org19 website (a repository of YANG tools and the metadata around YANG models with the purpose of driving collaboration between authors and adoption by consumers) was staged by having a virtual computer on which to run the web service: an Amazon Web Service (AWS) EC2. The following is from the Amazon documentation: “Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides secure, resizable compute capacity in the cloud. It is designed to make web-scale cloud computing easier for developers. [...] Amazon EC2 reduces the time required to obtain and boot new server instances to minutes, allowing you to quickly scale capacity, both up and down, as your computing requirements change. Amazon EC2 changes the economics of computing by allowing you to pay only for capacity that you actually use.”

Initially, a free AWS instance did the trick for the yangcatalog.org19 website. However, as more services were added to the website, it required more capacity (memory, CPU, and storage). Based on the website’s success, it was an investment in a real plan, paying for more resources and paying by the second for active servers (that is, “elastic cloud”). Although this example mentions AWS, you should note that multiple similar solutions exist in the industry, such as Microsoft Azure, Google Cloud Platform, and OVH Cloud, to name a few.

More complex examples than the YANG catalog include VNFs, such as virtual firewalls, virtual intrusion detection systems, load balancers, WAN accelerators, and so on, as mentioned in the previous section, but the elastic cloud principles remain.

In terms of cloud management, you need to distinguish two different types: on one side you have system-level management, and on the other side you have service management or NFV management inside the container or virtual machine.

System-level management consists of tasks such as the following:

Preparing the environment with Linux packages installation

Upgrading the OS and applying patches

Setting up containers or virtual machines

Scaling up, which means growing the infrastructure (compute, storage, and networking), to accommodate more/better services

Scaling out, which means replicating the infrastructure

This system-level management, which is more of a procedural type of management (a workflow driven by action), might benefit from a Business Process Modeling Language (BPML) such as Topology or Orchestration Specification for Cloud Applications (TOSCA20), a template based mechanism that describes the topology of cloud-based services, their components, and their relationships.

The service management inside the container or virtual machine consists of tasks such as these:

Starting up and stopping the service.

More importantly, maintaining or upgrading the service. For example, in the case of updating an access list entry, you cannot stop the virtual firewall.

This management benefits more from a schema-like language such as YANG, which provides the API and semantic-based actions. This is the model-driven management paradigm, which is explored in the next section.

Data Model–Driven Management

Scripts are relatively easy to create and, to some extent, fun to write. The hard part is the maintainability. Try to look at one of your troubleshooting scripts one year after creation and, unless the script contains a well-known convention (such as PEP8,21 a style guide for Python code), a clean structure, and some documentation, chances are it will look unfamiliar. Improving this script would require quite some time, and mostly likely the famous “If it ain’t broke, don’t fix it” principle would prevail.

Good scripts are based on good APIs (sets of functions and procedures that enable the creation of applications that access the features or data of an operating system, application, or other service), which fundamentally should provide the following benefits:

Abstraction: A programmable API should abstract away the complexities of the underlying implementation. The DevOps engineers should not need to know unnecessary details, such as a specific order of configurations in a network element or specific steps to take if something fails. If this isn’t intuitive for humans, the sequencing of commands becomes even more complex for configuration engines. Configurations should function more like filling in a high-level checklist (these are the settings you need; now the system can go figure out how to properly group and order them).

Data specification: The key thing an API does—whether it is a software or network API—is provide a specification for the data. First, it answers the question of what the data is—an integer, string, or other type of value? Next, it specifies how that data is organized. In traditional programing, this is called the data structure, though in the world of network programmability and databases, the more common term is schema, also known as data models. Since the network is basically being treated as a (distributed) database, the term (database) schema is sometimes used.

Means of accessing the data: Finally, the API provides a standardized framework for how to read and manipulate the device’s data.

Data model-driven management builds on the idea of specifying in models the semantics, the syntax, the structure, and constraints of management objects. From there, scripts use APIs rendered from those models via tooling. The advantage is that, as long as the models are updated in a backward-compatible way, the previous set of APIs is still valid.

An important advantage of data model–driven management is the separation of the models from the protocols and encodings, which means that it is easier to add protocols and encodings. Data model–driven management was initially built on NETCONF and XML, but other protocols/encodings have since seen the light: RESTCONF with JavaScript Object Notation (JSON), gRPC Network Management Interface (gNMI) with protobuf, and so on. Note that XML and JSON are text-file formats used to store structured data for embedded and web applications. Chapter 2, “Data Model–Driven Management,” covers the different protocols and encodings.

As you will see in Chapter 7, “Automation Is as Good as the Data Models, Their Related Metadata, and the Tools: For the Network Architect and Operator,” once the models are well specified and the full toolchain is in place, then the automation is simplified and the OPEX reduced. While the data model–driven management concepts are not new, it is one viable type of management in today’s networks. So data model–driven management is a special trend in this book, as this book is entirely dedicated to all aspects of this transition in the industry.

When applying an API to a complex environment, the key is that vendors implement it in a standards-based way. There should be a common way to define and access data across different devices and vendors—not separate, proprietary interfaces that operators must learn for every different device and function in their network.

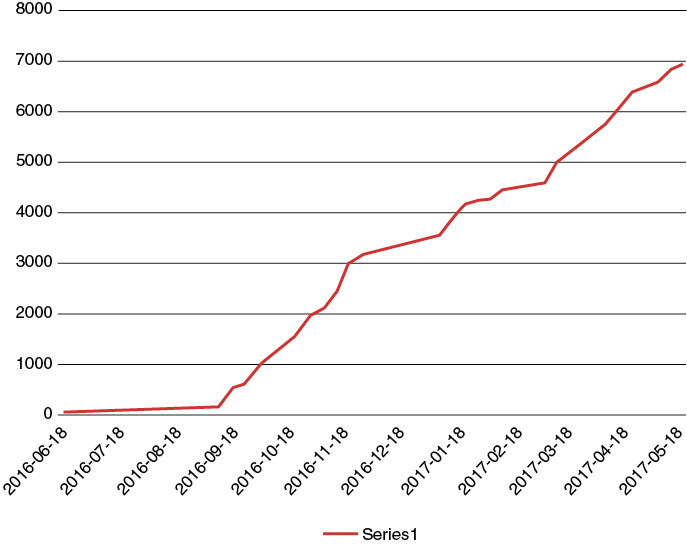

Figure 1-3 illustrates how changing from management via static CLI scripts to a model-driven approach impacts the network operations. It shows a service provider in the Americas who was migrating its services from one set of older devices to a newer set. Notice that the transition was initially rather slow, and took some time to complete at the initial pace. Then a data model–driven automation tool was introduced in September 2016 (in this case, a Cisco product called NSO, Network Services Orchestrator) that fundamentally changed the way the network was transitioning.

Figure 1-3 Number of Circuits Moved by Date, Initially Based on CLI Scripts, Then with Data–Model Driven Automation Software

(Data Model–Driven) Telemetry

Telemetry is a big buzzword in the networking industry these days. Like any buzzword, telemetry means different things to different people—exactly like SDN a few years ago. In different discussions with people from different backgrounds, telemetry meant the following:

The science and technology of automatic measurement and transmission of measurement data.

The mechanism to push any monitoring information to a collector (in that sense, NetFlow is a telemetry mechanism). Since it is about streaming data on regular basis, it is also known as “streaming telemetry.”

The data model–driven push of information, streaming YANG objects.

The hardware-based telemetry, pushing packet-related information directly from ASICs.

Device-level telemetry, such as the pushing of information about hardware and software inventory, configuration, the enabled/licensed features, and so on, with the intention to automate diagnostics, understand overall usage, and provide install base management.

In discussions, it is important to justify the reason why data model-driven telemetry is the most useful type of telemetry that requires automation. First off, why is telemetry necessary? You’ve heard all types of reasons: because SNMP is boring, because SNMP is slow, because SNMP is not precise in terms of polling time—you name it. You may have even heard “because SNMP is not secure.” Well, SNMPv3 provides security with authentication and privacy! Anyway, there are bigger reasons to focus on data model–driven telemetry:

SNMP does not work for configuration, although it is suitable for monitoring (see RFC 3535 for a justification).

Network configuration is based on YANG data models, with protocol/encoding such as NETCONF/XML, RESTCONF/JSON, and gNMI/protobuf.

Knowing that a configuration is applied does not imply that the service is running; you must monitor the service operational data on top.

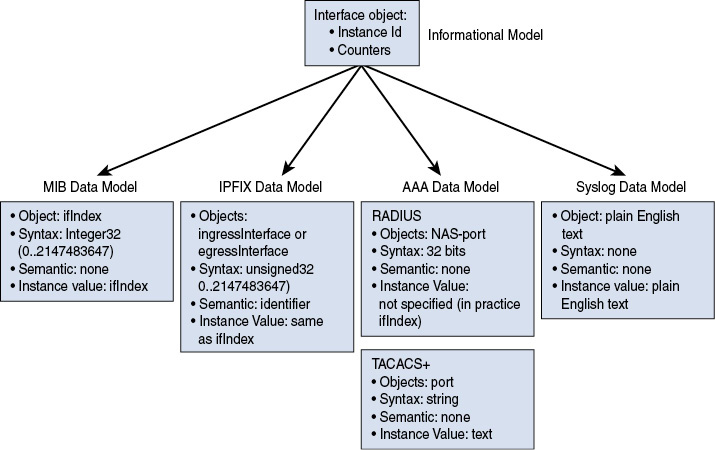

There is not much correlation between the MIB modules, typically used for network monitoring, and YANG modules used for configuration, except maybe a few indices such as the ifIndex in “The Interfaces Group MIB” (RFC 2863) or the interface key name in “A YANG Data Model for Interface Management” (RFC 7223, obsoleted by RFC 8343).

Any intent-based mechanism requires a quality assurance feedback loop, which is nothing more than the telemetry mechanism, as explained in the next section.

Therefore, since the configuration is “YANG data model driven,” so must the telemetry.

The only exception to data model–driven telemetry might be one from hardware-based telemetry: pushing a lot of telemetry directly from ASICs (for a subset of the traffic, at line rate), which might not leave room on the network element for a YANG-related encoding without a penalty in terms of the telemetry export rate. However, it is still possible to describe the exported data with a YANG data model with most any encoding type.

Operations engineers need to manage the network as a whole, independently of the use cases or the management protocols. Here is the issue: with different protocols come different data models, and different ways to model the related type of information. In such a case, the network management must perform the difficult and time-consuming job of mapping data models: the one from configuration with the one from monitoring. Network management was a difficult task with the CLI, MIB modules, YANG models, IPFIX information elements, syslog plain text, TACACS+, RADIUS, and so on. Therefore, protocol design decisions that do not simplify this data model mapping issue are frowned upon. Ideally, the network should present data arriving over different protocols that use the same concepts, structures, and names so that the data can be merged into a single consistent view. In other words, a single data model is needed.

Intent-Based Networking

The services are becoming more and more complex, with a mix of networking functions at the edge, the core, and the data center, as well as the combination of networking, compute, and storage in the cloud. With this increased complexity today and, at the same time, an increased frequency of changes, it is important to know to get the humans out of the loop, to focus on automation. Data model–driven management simplifies the automation and specifies that the telemetry must be data model driven. Now, does the network behave as expected? Are the new services operational? Are the SLAs respected? You could check that the network device, the virtual machine, or the container is reachable and check that the services or the VNFs are correctly configured. However, validating the configuration and reachability of individual components does not imply that the services are running optimally or meet the SLAs.

The cost of service failure is going up significantly these days. Imagine the monetary implications of one hour of downtime for Facebook services, whose business model depends solely on advertisements. Imagine the monetary implications of one hour of downtime for AWS, whose business model depends on web service utilization.

This is where the notion of intent-based networking comes to play, with networks constantly learning and adapting. Historically, managing in a prescriptive mode by focusing on detailing the necessary network configuration steps was common. Contrary to the prescriptive approach, the intent-based approach focuses on the higher-level business policies and on what is expected from the network. In other words, the prescriptive approach focuses on how, while the intent-based approach focuses on what. For example, the prescriptive way of configuring an L3VPN service involves following a series of tasks, expressing the how. For example, you must configure a VRF called “customer1” on provider edge router1 under the interface “eth0,” a default gateway pointing to router1 on the customer edge router, the MPLS-VPN connectivity between the provider edge router1 and router2, and so on.

Conversely, the intent-based way focuses on what is required from the network (for example, a VPN service between the London and Paris sites for your customer).

Where intent-based networking creates the most value is with constant learning, adapting, and optimizing, based on the feedback loop mechanism, as shown in the following steps:

STEP 1. Decomposition of the business intent (the what) to network configuration (the how). This is where the magic happens. For a single task such as “a VPN service between the London and Paris sites for customer C,” you need to understand the corresponding devices in Paris and London, the mapping of the operator topology, the current configuration of the customer devices, the operator core network configuration, the type of topology (such as hub and spoke or fully meshed), the required Quality of Service (QoS), the type of IP traffic (IPv4 and/or IPv6), the IGP configuration between the customer and the operator, and so on. Examine all the possible parameters for an L3VPN service in the specifications of the YANG data model for L3VPN service delivery (RFC 8299).

STEP 2. The automation. This is the easy part, once the what is identified. Based on the data model–driven management and a good set of YANG models, a controller or orchestrator translates the YANG service model (RFC 8299) into a series of network device configurations. Thanks to NETCONF and two-phase commit (more on this later), you are now sure that all devices are correctly configured.

STEP 3. The monitoring with data model–driven telemetry provides a real-time view of the network state. Any fault, configuration change, or even behavior change is directly reported to the controller and orchestrator (refer to the previous section).

STEP 4. Data analytics correlate and analyze the impact of the new network state for service assurance purposes, isolating the root cause issue—sometimes, even before the degradation happens. From there, the next network optimization is deduced, almost in real time, before going back to step 1 to apply the new optimizations.

This constant feedback loop, described in four steps, is the foundation for networks that constantly learn and adapt. It allows moving away from reactive network management where a network fault (or worse, a customer call) triggers the troubleshooting to constant monitoring focused on the SLAs. The combination of predictive analytics and artificial intelligence, combined with continuous learning and adapting, is the main enabler here. From here, the next logical step is not too futuristic: self-healing networks.

Even if this book doesn’t cover the “intent,” per se, it covers steps 2 and 3 in order to realize intent-based networking.

Software Is Eating the World

As Marc Andreessen correctly predicted in the article “Why Software Is Eating The World”23 in the Wall Street Journal in 2011, “software programming tools and Internet-based services make it easy to launch new global software-powered start-ups in many industries—without the need to invest in new infrastructure and train new employees.”

This is a trend in the industry, where consumers are offered all the possibilities: online banking, hotel and flight booking, virtual lobby assistants, booking a taxi via an application, placing calls from their PCs, watching TV or reading a book on a tablet, listening to music on their mobile phones, and connected cars. While those offer more flexibility to the end users, the service provider wants to reduce its own OpEx by using software, thus reducing the human interaction and hence costs.

Coming back to the world of networking, in no time these days you can register a domain name, create multiple email addresses, and host a website. Almost instantaneously, with a few clicks, you can enable IPv6, add a database to your website, enable protection with a firewall, and create an SSL certificate. The on-demand cloud computing platforms, which offer some storage, compute resources, and some basic networking, can add a virtual network and include some virtual networking functions such as firewall, virtual intrusion detection system, or accounting.

The main point is that all these new services are available almost immediately, thanks to software. And this software in the background is composed of automation and sometimes data analytics for customization.

Existing Network Management Practices and Related Limitations

Managing networks is not new. This section looks at “traditional” network management practices. Traditionally, networks have used different management protocols for different FCAPS management aspects, with different management models and different practices—typically, CLI and “screen scraping,” SNMP, NetFlow and IPFIX, and syslog. From there, you can observe the respective protocol limitations, which highlight the issue of the different data models in the next section.

These protocols describe the current practical normality of most of the network operation that happens in the world today. While each section can seem a little disparate and contain a multitude of little details, the picture comes together in the summary at the end of the chapter. The big picture will be clearer once you have seen a snapshot of how each of these environments work to get a glimpse into some of their gory details.

CLI: Is This an API?

Most devices have a built-in command-line interface (CLI) for configuration and troubleshooting purposes. Network access to the CLI has traditionally been through the Telnet protocol, with the addition of the SSH protocol to address security issues associated with Telnet. At some point in time, the CLI was the only way to access devices, for both configuration and troubleshooting. And the CLI grew with any new features to become a huge list of configuration and show commands.

CLI: Explained

CLIs are generally task-oriented, which makes them easier to use for human operators. As the goal is human consumption, the design principle is for the CLI to change to be more readable. As an example, an old IOS command was changed from show mac-address-table to show mac address-table, probably motivated by a developer thinking it was better from an interface point view or maybe to be consistent with a new “show mac” feature. For users, who most of the time use the command autocomplete function while interacting with the device, this change is not be an issue. Indeed, most command-line interfaces provide context-sensitive help that reduces the learning curve. However, a script that sends commands to the device would now fail as a consequence of this CLI change.

On the other side, a saved sequence of textual commands is easily replayed: Typically, the same access list, in the form of a CLI snippet, can be applied to multiple devices. With simple substitutions and arbitrary text-processing tools, operation engineers can apply similar CLI snippets to network elements. Typically, the access-list snippet contains a couple of arguments to be replaced, depending on the managed device characteristics.

CLI: Limitations

The “CLI is No Longer the Norm” section covered already most of the CLI limitations, and this section adds some practical configuration examples, such as the following snippet:

Conf t router ospf x vrf xxx

Here is another example:

Conf t vrf xxx router ospf x

In other words, it is very well possible that a command on different devices, even from the same vendor, behaves differently. What is the root cause behind those differences? CLIs typically lack a common data model.

These VRF examples lead to the second CLI limitation: The CLI is context-sensitive and order-specific. A question mark helps with the listing of all available options, but entering one command might offer a submenu of commands. While adding commands raises a problem, removing commands offers some challenges, too. Coming back to the VRF example, removing a VRF might remove the entire VRF-related set of commands, again depending on device implementation. Since CLIs are proprietary, in syntax and semantics they cannot be used efficiently to automate processes in an environment with a heterogenous set of devices.

The command-line interface is primarily targeted at human users, who can adapt to minor syntax and format changes easily. Using the CLIs as a programmatic interface is troublesome because of parsing complexities. For example, CLIs don’t report consistent error code in case of failure—a necessary automation property. On top of that, there is no way to discover new CLI changes: CLIs often lack proper version control for the syntax and the semantics. It is therefore time-consuming and error-prone to maintain programs or scripts that interface with different versions of a command-line interface. What’s more, the CLIs keep evolving, as more features are introduced. Sure, those changes are documented in the release notes, at least for the configuration commands, but the automation cannot consume those release notes.

So, is the CLI an API? It was used like one, but it is fragile.

As a quiz, Example 1-1 shows four potential situations operations engineers might encounter dealing with a CLI. Can you find the errors? What is wrong with these outputs?

Example 1-1 CLI Quizzes

Router1#show run Command authorization failed Router1# Router2#show run Unable to read configuration. Try again later Router2# Router3#show run Router3# Router4#show run ... description %Error with interface ... Router4#

The show command on Router1 looks like an AAA (Authorization, Authentication, Accounting) issue, while it might point to an non-volatile random-access memory (NVRAM) issue on Router2. The Router3 output doesn’t look right, unless the configuration is empty. Finally, the Router4 output looks like an error, while actually it’s not! In this particular case, the operations engineer configured the interface description with “%Error with interface” to flag an issue with this specific interface. Again, for a user, this description helps; however, for a CLI-based script, the consequences might be unexpected. In this particular case, this caused a bug in an Expect3 script in automating configuration archival. When the script tried to upload the configuration, it stopped working once it encountered this particular description line, thinking that the script itself was in error. Indeed, the script was based on a regular expression (regex) based on “Error”. This example perfectly illustrates the difficulties of using the CLI for automation. Let’s face it—the CLI is not suited for automation. It is not machine-friendly and lacks a well-defined format. The CLI has been used for years, as it was the only way to configure and collect device information, but it’s very fragile.

SNMP: For Monitoring But Not for Configuration

SNMP, the Simple Network Management Protocol, is a protocol specified by the IETF. Actually, there have been multiple protocol versions. Here is a brief history of the different SNMP versions:

SNMPv1 (historic): The first version of the protocol, specified by RFC 1157. This document replaces the earlier versions that were published as RFC 1067 and RFC 1098. The security is based on SNMP community strings.

SNMPsec (historic): This version of the protocol added strong security to the protocol operations of SNMPv1 and is specified by RFC 1351, RFC 1352, and RFC 1353. Security is based on parties. Few, if any, vendors implemented this version of the protocol, which is now largely forgotten.

SNMPv2p (historic): For this version, much work was done to update the SNMPv1 protocol and Structure of Management Information version 1, and not just security. The result was updated protocol operations, new protocol operations and data types, and party-based security from SNMPsec. This version of the protocol, now called party-based SNMPv2, is defined by RFC 1441, RFC 1445, RFC 1446, RFC 1448, and RFC 1449. (Note that this protocol has also been called SNMPv2 classic, but that name has been confused with community-based SNMPv2. Thus, the term SNMPv2p is preferred.)

SNMPv2c (experimental): This version of the protocol is called community string-based SNMPv2. Specified by RFC 1901, RFC 1905, and RFC 1906, it is an update of the protocol operations and data types of SNMPv2p and uses community-based security from SNMPv1.

SNMPv2u (experimental): This version of the protocol uses the protocol operations and data types of SNMPv2c and security based on users. It is specified by RFC 1905, RFC 1906, RFC 1909, and RFC 1910.

SNMPv3 (standard): This version of the protocol is a combination of user-based security and the protocol operations and data types from SNMPv2p and provides support for proxies. The security is based on that found in SNMPv2u and SNMPv2*, and updated after much review. The documents defining this protocol are multiple:

RFC 3410: Introduction and Applicability Statements for Internet-Standard Management Framework.

RFC 3411: An Architecture for Describing SNMP Management Frameworks. Now updated by RFC 5343: Simple Network Management Protocol (SNMP) Context EngineID Discovery, and by RFC 5590: Transport Subsystem for the Simple Network Management Protocol (SNMP).

RFC 3412: Message Processing and Dispatching for SNMP.

RFC 3413: SNMPv3 Applications.

RFC 3414: User-Based Security Model (USM) for version 3 of SNMPv3.

RFC 3415: View-Based Access Control Model (VACM) for SNMP.

RFC 3584: Coexistence between Version 1, Version 2, and Version 3 of the Internet-standard Network Management Framework.

The goal of this book is not to delve into the SNMP details. There are plenty of valuable references, books, and tutorials dedicated to the technical aspects of SNMP, Structure of Management Information (SMI), and Management Information Base (MIB), so there is no point in covering everything here, especially when one of the key messages from this book, as you will quickly realize, is to move away from SNMP. However, let’s look at just a few concepts that are necessary within this book.

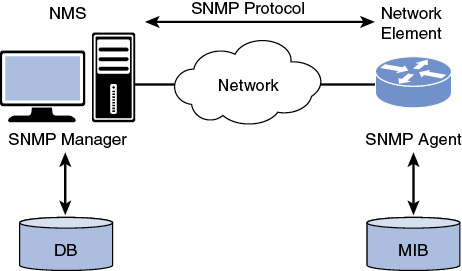

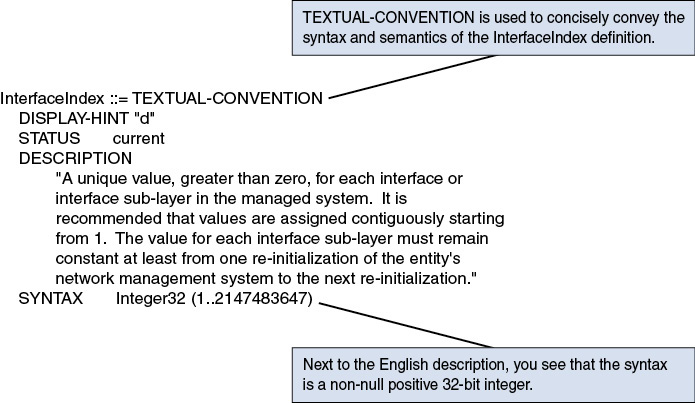

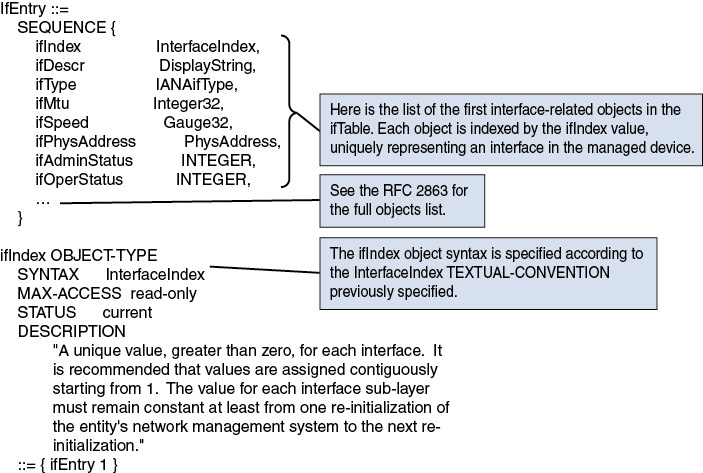

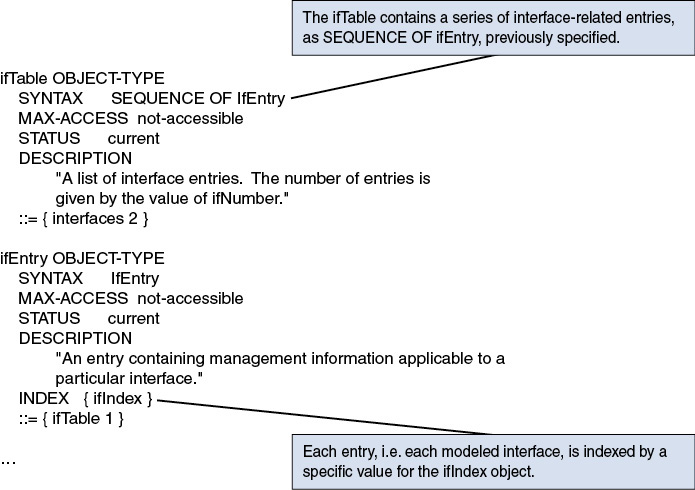

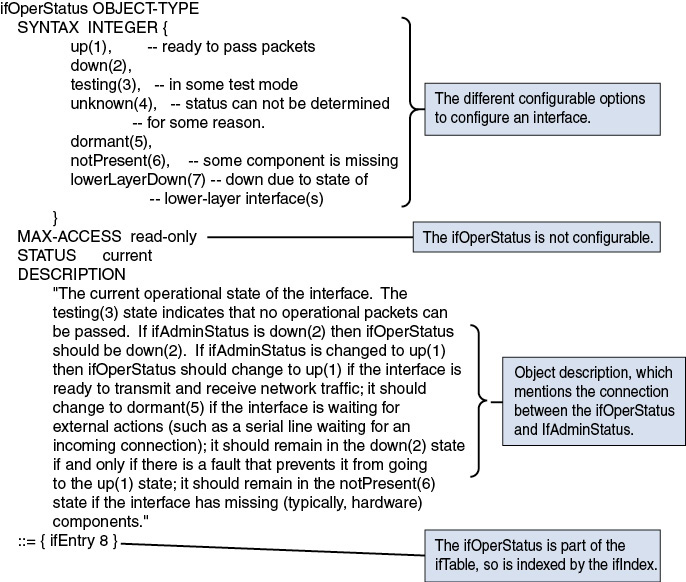

SNMP: Explained

As displayed in Figure 1-4, an SNMP Agent, embedded in a device to be managed (typically a router or a switch in the networking context), responds to requests for information and actions from the SNMP Manager, sitting in a Network Management System (NMS): typical information includes interface counters, system uptime, the routing table, and so on. These data sets are stored in the device memory and are retrieved from a network management application by SNMP polling. The MIB is the collection of those managed objects residing in a virtual information store in the SNMP Agent. A collection of related managed objects is defined in a specific MIB module. For example, interfaces-related objects are specified in the “Interfaces Group MIB” document, detailed by the IETF in the RFC 2863. The SMI defines the rules for describing management information, using the Abstract Syntax Notation One (ASN.1) as an interface description language. In other words, SMI is the data-modeling language used to describe objects to be managed via the SNMP protocol.

Figure 1-4 The SNMP Basic Model

The following types of interactions occur between the SNMP Manager and SNMP Agent:

Read: The ability to read managed objects from the SNMP Agent, characterized by “read-only” objects in MIB modules. A typical example is the polling of interface counters’ statistics, described by the ifInOctets and ifOutOctets managed objects (RFC 2863).

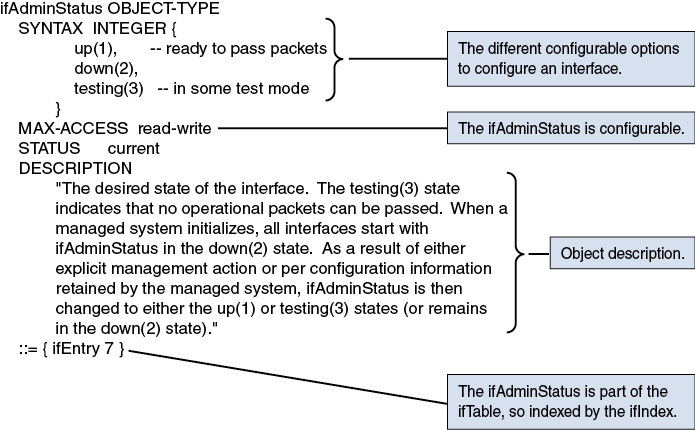

Write: The ability to set managed objects in an SNMP Agent, if the managed objects are specified with a “read-write” status in the MIB module (for example, changing an interface administrative status with the ifAdminStatus managed objects [RFC 2863]).

Notification, also known as trap or inform: This is a push-based mechanism (as opposed to pull, when the SNMP Manager polls information from the SNMP Agent) from the SNMP Agent to the SNMP Manager. A typical example is a linkUp or linkDown notification [RFC 2863], sent to the SNMP Manager when the interface operational status changes.

SNMP: Limitations

The idea behind the SNMP specifications was to develop a generic protocol used for the configuration and monitoring of devices and networks, effectively covering the FCAP aspects, with the S of Security being treated separately. The SNMP notifications covers the Fault aspects, the configuration of read-write MIB objects covers the Configuration aspects, while the myriad of read-only MIB objects could cover some aspects of Accounting and Performance.

SNMPv1 was published in 1990, and SNMPv3 was finished in 2002. So many years of experience went into the third version. While it took some time for SNMPv3, with its added security considerations, to be widely implemented, years later it drew an important conclusion: SNMP has always done a good job in terms of monitoring devices. However, it fails at device configuration.

In 2003, RFC 3535, “Overview of the 2002 IAB Network Management Workshop,” documented the outcomes of a dialogue started between network operators and protocol developers to guide the IETFs’ focus on future work regarding network management. This paper reported a list of strong (+), weak (-), and neutral (o) points related to the SNMP protocol.

Multiple factors prevented SNMP from replacing the device CLI as the primary configuration approach. Some points are briefly addressed in RFC 3535 and complemented with some experience here:

First, a question of money: The SNMP Agent code costs too much to develop, test, and maintain, compared to the CLI code. SNMP seems to work reasonably well for small devices that have a limited number of managed objects and where end-user management applications are shipped by the vendor. For more complex devices, SNMP becomes too expensive and too hard to use [SNMP Set: Can it be saved?].24

Poor performance for bulk data transfers, due to User Datagram Protocol (UDP) characteristics. The typical examples are routing tables, with the polling of the BGP table, which takes a considerable amount of time. SNMP has the same data transfer behavior as Trivial File Transfer Protocol (TFTP), which is UDP-based, so a lot of time is spent waiting. Especially if there is any latency in the network, this becomes problematic. However, the good thing about SNMP being UDP-based is that the traffic can get through in case of congestion. Note that one operator who went from SNMP polling to NETCONF saw a tenfold increase in performance in a real production network. A more typical value is two or three times faster with NETCONF (which is TCP-based, so it’s an FTP-style transfer).

Poor performance on query operations that were not anticipated during the MIB design. A typical example is the following query: Which outgoing interface is being used for a specific destination address?

It is usually not possible to retrieve complete device configurations via SNMP so that they can be compared with previous configurations or checked for consistency across devices. There is usually only incomplete coverage of device features via the SNMP interface, and there is a lack of differentiation between configuration data and operational state data for many features. For example, while SNMP Manager set the interface state with the ifAdminStatus object, it must poll the ifOperStatus object to check the applied operational state. This example is an obvious one for any network operator, but this link between those two objects cannot be discovered in a programmatic way.

MIB modules and their implementations are not available in a timely manner (sometimes MIB modules lag years behind), which forces users to use the CLI. Indeed, as already mentioned, it is a reality that device management has been an afterthought. Once operators are “forced” to use scripts to manage their CLI (for example with Expect scripts), there is not much incentive to use a different mechanism for not much added value.

Lexicographic ordering is sometimes artificial with regard to internal data structures and causes either significant runtime overhead or increases implementation costs or implementation delay, or both. A typical example is the routing table, whose data needs to be rearranged before answering an SNMP request.

Operators view current SNMP programming/scripting interfaces as being too low-level and thus too time-consuming and inconvenient for practical use. Also, device manufacturers find SNMP instrumentations inherently difficult to implement, especially with complex table indexing schemes and table interrelationships. As a practical example, RFC 5815 specifies an MIB module for the monitoring and configuration of IPFIX (IP Flow Information eXport), also known as NetFlow version 10. Created in a very flexible way, this MIB module allows all possible configuration options allowed by the Flexible NetFlow CLI options. Therefore, some table entries require up to four indices, increasing the complexity to a point where it is not recommended implementing this MIB module. For the record, the same exercise was performed with a YANG module and produced RFC 6728: Configuration Data Model for the IPFIX and Packet Sampling (PSAMP) Protocols.

There is a semantic mismatch between the low-level data-oriented abstraction level of MIB modules and the task-oriented abstraction level desired by network operators. Bridging the gap with tools is in principle possible, but in general it is expensive because it requires some serious development and programming efforts.

MIB modules often lack a description of how the various objects can be used to achieve certain management functions. MIB modules are often characterized as a list of ingredients without a recipe.

SNMP lacks a way to find out the version of the SNMP MIB modules implemented on the device, let alone a mechanism to get a copy. Accessing the vendor website or calling customer support is a compulsory step.

The SMI language is hard to deal with and not very practical.

SNMP traps are used to track state changes, but often syslog messages are considered more useful since they usually contain more information to describe the problem. SNMP traps usually require subsequent SNMP GET operations to figure out what the trap really means.

Note that an IETF effort to fix SMI and SNMP, SMIng (SMI Next Generation),25 concluded in 2003 without clear results.

In 2014, the Internet Engineering Steering Group (IESG),26 the IETF group responsible for technical management of IETF activities and the Internet standards process, issued a statement on “Writable MIB modules.”27

If not already clear before from the state of the industry, this statement definitively discourages specifying MIB modules for configuration and sets the new direction by pointing to the NETCONF/YANG solution.

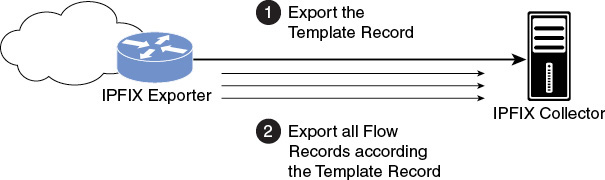

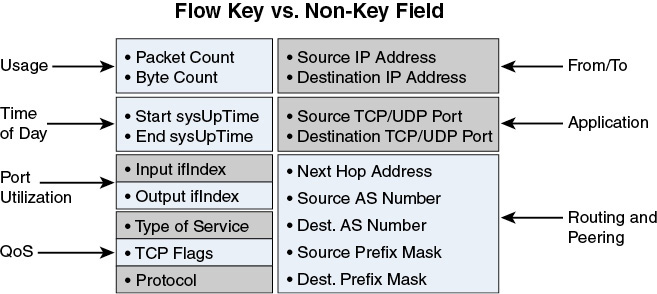

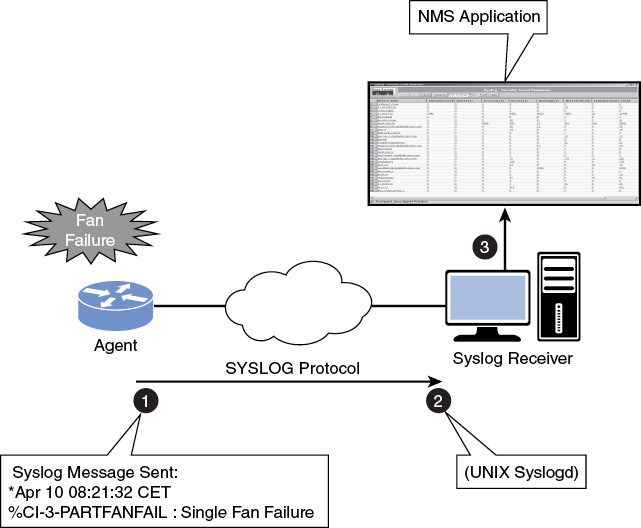

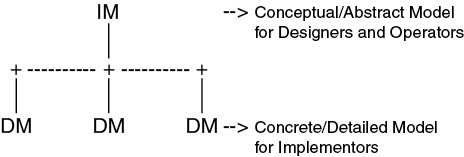

NetFlow and IPFIX: Mainly for Flow Records