3 Embracing Software Development Variance

Engineers have found it difficult to convey the reality of software unpredictability within their organizations. Organizations must accept the reality of software development schedule and content variability to ultimately increase predictability. The business side needs to recognize that Waterfall planning has not achieved, and will never achieve, the level of predictability they seek.

The chapter begins with industry data that demonstrate the software industry's inability to achieve schedule and effort predictability over decades of trying. For many years, the software industry assumed that predictability was just a case of improving software estimation methods. Software engineering pioneers like Barry Boehm and Steve McConnell made significant contributions that helped improve estimation processes. Yet, there is a natural limit to the degree to which software development effort and schedule can be estimated. Steve McConnell referred to it as still being a “black art.” [1] This chapter provides an explanation of how the nature of software development makes it impossible to make accurate predictions for both schedule and content.

The chapter ends with the answer to a puzzling question, “Why can other departments like sales and manufacturing make and meet long‐term commitments?” It provides insight into how engineering leaders can better deal with expectations of long‐term projections.

3.1 The Cone of Uncertainty

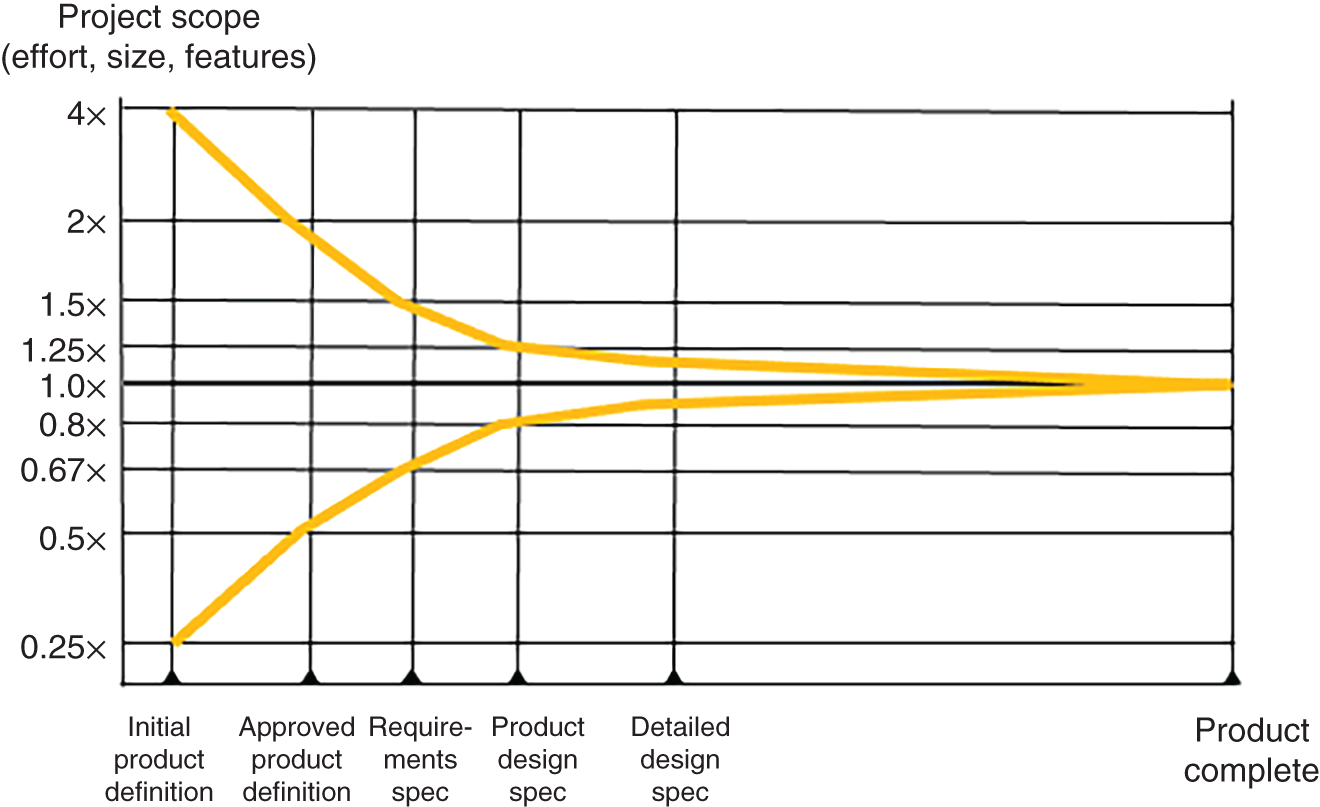

Steve McConnell is well known in the software industry for defining the term “Cone of Uncertainty” to document software estimation error. The cone is based on analysis of hundreds of software projects. We'll review the traditional Waterfall cone and then relate it to Agile development (see Figure 3.1).

Figure 3.1 McConnell Cone of Uncertainty. Used with permission of Steve McConnell.

The vertical axis shows relative estimation error in software effort. Most projects fall within the error bounds of overestimation and underestimation by factors of 4x and 0.25x, respectively, where x is the actual effort when the project is completed. Estimates tend to grow rather than shrink because they're based on what is known at the time of the estimate, and further analysis usually identifies additional requirements. Therefore, the top half of the chart tends to be denser, reflecting that most software projects go over schedule and budget.

A company could narrow the cone through improved estimation techniques and requirements best practices, but doing so didn't change its characteristic shape. Attempts to fix schedule, content, and resources with Waterfall planning typically ended up with missed schedules and/or dropped content. And today, Waterfall planning with Agile expects that estimates for schedule, content, and resources can be made prior to requirements development.

Of course, the business rejects the idea of the cone. They don't get Cones of Uncertainty from sales or manufacturing, and we don't see it presented by hardware engineering. Some departments do make longer‐term commitments that they usually achieve. I've heard some people in the software industry explain the lack of predictability relative to hardware engineering as “software being harder.” Hardware engineering has many uncertainties that software doesn't have to deal with, from device availability to electromagnetic interference, so that's not the reason. Don't try to tell them that software is harder.

3.2 Software Development Estimation Variance Explained

Whether the business likes it or not, software has characteristics that limit the accuracy of effort and schedule estimates. This is because software development requires hierarchical decomposition into smaller components, eventually resulting in “modules” with defined functionality and interfaces. This is the point when the work can be understood by an individual developer and reasonably accurate estimates can be generated. The number of “modules” that can be done in parallel is only known after detailed design, so schedule estimates prior to detailed design have large uncertainty. The problem is that the “detailed design specification” point in the Cone of Uncertainty is well beyond the planning stage, by which time commitments have already been cast in stone. In Waterfall, software development commitments are expected at the requirements stage and even during the conception stage.

I've used a tax preparation analogy to explain the software estimation challenge for people without software development experience. Suppose you were asked to determine how many accountants could be added to prepare an individual's tax return to minimize schedule. The number of tax forms varies for an individual based on the types of income and deductions. The specific tax forms to be completed, and their inputs, are only determined during the tax preparation process. Therefore, the shortest tax preparation schedule could only be estimated with any reasonable degree of accuracy after a “detailed design” step has been completed to determine the number of forms, their inputs, and the approximate effort for each accountant, which would take a significant part of the overall tax preparation schedule.

However, the business simply cannot accept the large opening at the planning stages of the cone. How likely is it that you would get project approval by saying the project will cost somewhere between $250K and $4M, and it will take anywhere from 10 to 16 months? And in today's Agile world, we would want to add that we're not sure which features will actually be delivered. The cone has been viewed by the business as an attempt by engineering to avoid commitment.

So, why is hardware engineering able to make commitments earlier in the development cycle? I've managed hardware development. Hardware typically serves as a foundation for software functionality. The way users interact with the system is mostly determined by the software and must cover an almost infinite combination of cases. This is what drives pages and pages of software requirements. In hardware engineering, the architecture, number of circuit boards, and interfaces can usually be estimated fairly accurately at the system design stage where a hardware block diagram is available. Hardware engineering then estimates with proxies like the number of inputs and outputs and estimated component count. Software estimation uncertainty is analogous to asking hardware engineering to estimate schedule and cost without knowing the number or types of circuit boards to be developed. Would they be predictable?

Estimation uncertainty was supposed to be addressed by Agile. It was supposed to reduce long‐term commitments to be able to deliver in shorter cycles with increased predictability. The product owner sets the priorities for the work, and engineering provides them with progress in the form of a burndown chart. Content delivery can be changed based on actual team velocities. A product owner is dedicated to the team to provide implementation guidance throughout sprint development to make sure the team builds the right thing.

As established in Chapter 2, Waterfall planning continues to be imposed on Agile development. The business response was, “You can call how you develop anything you want, but you're still going to have to make delivery and cost commitments like everybody else.” This leaves an impossible problem for the poor project manager still accountable for schedule, cost, and content.

What about just adding resources when estimates increase or new functionality is added? This fallacy was exposed in the classic book The Mythical Man‐Month [2] by Fred Brooks, where he showed that adding staff to a late project makes it later. It's as true now as it was then. Software development requires specific knowledge about the domain, software base, and development practices that takes months to acquire. New staff must learn it from the current staff, which drains the capacity of the people already doing the work. Organizations need to assume fixed development capacity and make trade‐offs between schedule and content.

3.3 Making and Meeting Feature Commitments

Construx provided methods to meet Waterfall schedules using the same variable content concept adopted by Agile. The Cone of Uncertainty could be used to initially commit to a set of features and make additional commitments as estimation accuracy improved during the development cycle. We will show later how this method can be applied at the Investment level.

The Cone of Uncertainty provides guidance on estimation variability. It shows that estimates vary between 0.5x and 2x at product definition and 0.67–1.5x when requirements are complete. Detailed requirements are not available in Agile development when effort forecasts are required. I recommend an expected variance between 1.5x and 2x depending on the level of epic and user story decomposition.
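The ranges quoted in this chapter can be turned into a quick calculation. The following is a minimal sketch, assuming the cone factors are applied as simple multipliers to a single nominal estimate. The function name, the phase labels, and the $1M nominal figure are illustrative assumptions, not part of McConnell's method.

```python
# Multipliers quoted in this chapter, as (low, high) factors on a nominal estimate.
# The phase labels are illustrative names chosen for this sketch.
CONE_FACTORS = {
    "widest bounds (Section 3.1)": (0.25, 4.0),
    "product definition": (0.5, 2.0),
    "requirements complete": (0.67, 1.5),
}


def estimate_range(nominal, phase):
    """Return the (low, high) range implied by the cone factors for a phase."""
    low_factor, high_factor = CONE_FACTORS[phase]
    return nominal * low_factor, nominal * high_factor


if __name__ == "__main__":
    nominal_cost = 1_000_000  # hypothetical nominal estimate in dollars
    for phase in CONE_FACTORS:
        low, high = estimate_range(nominal_cost, phase)
        print(f"{phase}: ${low:,.0f} to ${high:,.0f}")
```

With a hypothetical $1M nominal estimate, the widest bounds reproduce the $250K to $4M range used in Section 3.2 to illustrate why the business struggles to approve a project on cone-based numbers.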

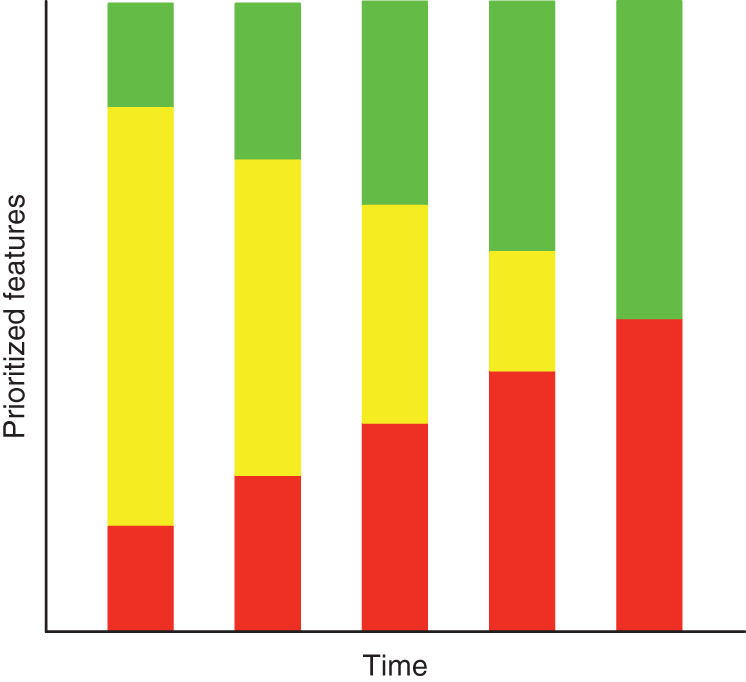

Features are ranked by priority in the feature content forecast chart (see Figure 3.2). The top bar represents committed features. The bottom bar shows features that will not be included in the release. The bar in the middle marks features that are still targets for the release.

Figure 3.2 Feature content forecast chart

We need to account for the potential variability of remaining features using the minimum Cone of Uncertainty factor of 1.5x or more. At each update, the effort of the remaining features is multiplied by 1.5 to account for the worst case. Features likely to be completed within the schedule are those that can be completed based on current capacity. These features are targets. Features with effort that falls outside of the capacity limit are not likely to make the release.

Consider six features expected to be delivered in a release, each with estimated effort of 200 hours shown in the first column in Table 3.1.

Given a capacity of 1200 hours that can be completed within the development interval, it is possible that all can be delivered if the estimates are accurate. Of course, we know that's not likely, so we multiply them by the Cone of Uncertainty factor of 1.5 to estimate worst‐case effort. Features A and B have been completed by this reporting week and the actual hours can be entered in the table. The Forecast column includes the actuals from the features completed and the worst‐case estimates of remaining features. Cumulative effort is shown in the last column.

Table 3.1 Feature content forecast example.

| Feature | Estimate | Worst‐case | Actual | Forecast | Cumulative forecast |
|---|---|---|---|---|---|
| Feature A | 200 | 300 | 175 | 175 | 175 |
| Feature B | 200 | 300 | 230 | 230 | 405 |
| Feature C | 200 | 300 | | 300 | 705 |
| Feature D | 200 | 300 | | 300 | 1005 |
| Feature E | 200 | 300 | | 300 | 1305 |
| Feature F | 200 | 300 | | 300 | 1605 |
| Totals | 1200 | 1800 | | | |

Given a capacity limit of 1200 hours, Features C and D can remain as targets because they can be completed within the projected capacity. Features E and F are unlikely to be delivered. Of course, estimated capacity may also vary and be accounted for in the feature forecasts.
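The arithmetic behind Table 3.1 can be sketched in a few lines. This is a minimal illustration, assuming features are listed in priority order and that a remaining feature stays a target while the cumulative forecast is within capacity. The class, function, and status labels are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass
from typing import Optional

WORST_CASE_FACTOR = 1.5  # cone factor the chapter applies to remaining features


@dataclass
class Feature:
    name: str
    estimate: float                 # original estimate in hours
    actual: Optional[float] = None  # actual hours once the feature is completed


def forecast_features(features, capacity, factor=WORST_CASE_FACTOR):
    """Return (name, forecast, cumulative, status) tuples in priority order."""
    rows = []
    cumulative = 0.0
    for feature in features:
        done = feature.actual is not None
        # Completed features use actual hours; the rest use the worst case.
        forecast = feature.actual if done else feature.estimate * factor
        cumulative += forecast
        if done:
            status = "completed"
        elif cumulative <= capacity:
            status = "target"
        else:
            status = "unlikely"
        rows.append((feature.name, forecast, cumulative, status))
    return rows


if __name__ == "__main__":
    # Values taken from Table 3.1; Features A and B are already complete.
    features = [
        Feature("Feature A", 200, actual=175),
        Feature("Feature B", 200, actual=230),
        Feature("Feature C", 200),
        Feature("Feature D", 200),
        Feature("Feature E", 200),
        Feature("Feature F", 200),
    ]
    for name, forecast, cumulative, status in forecast_features(features, 1200):
        print(f"{name}: forecast {forecast:.0f} h, cumulative {cumulative:.0f} h, {status}")
```

Rerunning the calculation at each reporting update, with new actuals entered, shows how features move between the target and unlikely groups as uncertainty is retired.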

In reality, this method was not adopted by many organizations because of the perception that pressure was the only way to motivate engineering to achieve, or get close to, development targets. Contingency was viewed by project managers and executives as an opportunity for engineering to slack off.

3.4 How Other Departments Meet Commitments

The business expects forecasts from sales, operations, and manufacturing that are also based on risky assumptions, so why is engineering any different? Cries of “software is more complex” won't cut it. I've managed hardware development and have also been exposed to the challenges that manufacturing faces. For example, unforeseen component shortages or loss of a vendor may require a quick redesign or a substitute device approved by hardware engineering. Manufacturing is dependent on deliveries from hundreds of suppliers and must react quickly to changes in their production forecasts.

I'll share my experience on how other departments make and meet commitments. Manufacturing is managing an ongoing process where they build the same thing over and over, which is easier than estimating new development effort from a set of requirements for each project. As discussed above, software development estimation involves sequential decomposition where accurate estimates can only be derived later in the project, and we've already established that hardware development can usually do this decomposition earlier in the project. Nevertheless, manufacturing deserves a lot of credit for excellent processes to rapidly respond to change to avoid production losses.

Sales need to meet revenue forecasts for the company to achieve company financial commitments. They also deal with many variables, like new competition and late products. However, they are, by the nature of their roles, great negotiators. They preserve contingency when they make their forecasts. In fact, they want to set forecasts low so they can exploit sales accelerators that increase commissions with higher sales volume. They've learned to play the game.

So, why doesn't engineering play the game? First of all, they're just too darn analytical. If you ask an engineer for an estimate, they will do their best to provide you with an answer with the precision of two decimal places. It's a problem to solve, not a game to play. The second reason is that they are under intense pressure from project management to commit to shorter schedules and lower development costs, ending up with the “prove you can't deliver it sooner” schedule.

3.5 Agile Development Implications

There is no major release milestone for completion of detailed design with Agile development. The design takes place incrementally during each sprint. This means that Agile estimation uncertainty is, at best, at the product definition phase on the Cone of Uncertainty where estimation errors vary by a factor of two. This is certainly outside the bounds of business expectations at the time of project approval and financial forecasts. There are some good Agile estimation methods that may reduce this uncertainty, but it is not likely to be less than the cone variance factor of 1.5.

The demand for corporate financial predictability perpetuates Waterfall release planning despite its inaccuracies. Agile has not provided product and project managers with an alternative. The solution involves embracing software estimation unpredictability to improve business predictability. This seems like an oxymoron, but it can be achieved by balancing long‐term commitments and development capacity contingency, and by reducing the fraction of development capacity allocated to committed features. We also need to recognize that business financial reporting demands financial predictability, not predictability in terms of feature schedules. Product management creates feature schedules, so changing to a more predictable way to achieve financial targets is within their control.

3.6 Summary

- Software estimation variance must be accepted by organizations to successfully implement Agile.

- The Cone of Uncertainty shows that estimates and schedules set by Waterfall planning were usually not met.

- Software development estimates lack accuracy prior to the design stage because the number of elements to build is not known.

- Hardware is a platform for software functionality and the number of circuit boards and elements can usually be estimated without detailed functional requirements, providing more accurate development effort and schedule estimates earlier in the development cycle.

- The only way to achieve Waterfall schedules is to leave contingency for features that may not be delivered.

- A different approach for achieving financial predictability is within the control of product and project management.

References

- [1] McConnell, S. (2006). Software Estimation: Demystifying the Black Art. Microsoft Press.

- [2] Brooks, F.P. (1974). The Mythical Man‐Month and Other Essays on Software Engineering. Addison Wesley Longman Publishing Co.