11

MODE ERROR IN SUPERVISORY CONTROL

This chapter provides a comprehensive overview of mode error and possible countermeasures (mode error was introduced as a classic pattern behind the label “human error”). Mode errors occur when an operator executes an intention in a way that would be appropriate if the device were in one configuration (one mode) when it is in fact in another configuration. Note that mode errors are not simply just human error or a machine failure. Mode errors are a kind of human-machine system breakdown in that it takes both a user who loses track of the current system configuration, and a system that interprets user-input differently depending on the current mode of operation. The potential for mode error increases as a consequence of a proliferation of modes and interactions across modes without changes to improve the feedback to users about system state and activities.

Several studies have shown how multiple modes can lead to erroneous actions and assessments, and several design techniques have been proposed to reduce the chances for mode errors (for examples of the former see Lewis and Norman (1986); for examples of the latter see Monk, (1986) and Sellen, Kurtenbach, and Buxton (1992)). These studies also illustrate how evaluation methods can and should be able to identify computerized devices which have a high potential for mode errors. To understand the potential for mode error one needs to analyze the computer-based device in terms of what modes and mode transitions are possible, the context of how modes may come into effect in different situations, and how the mode of the device is represented in these different contexts.

Characteristics of the computer medium (e.g., virtuality) and characteristics of and pressures on design make it easy for developers to proliferate modes and to create more complex interactions across modes. The result is new opportunities for mode errors to occur and new kinds of mode-related problems. This chapter provides an overview of the current knowledge and understanding of mode error.

THE CLASSIC CONCEPT OF MODE ERROR

The concept of mode error was originally developed in the context of relatively simple computerized devices, such as word processors, used for self-paced tasks where the device only reacts to user inputs and commands. Mode errors in these contexts occur when an intention is executed in a way appropriate for one mode when, in fact, the system is in a different mode. In this case, mode errors present themselves phenotypically as errors of commission. The mode error that precipitated the chain of events leading to the Strasbourg accident, in part, may have been of this form (Monnier, 1992; Lenorovitz, 1992). The pilot appears to have entered the correct digits for the planned descent given the syntactical input requirements (33 was entered, intended to mean an angle of descent of 3.3 degrees); however, the automation was in a different descent mode which interpreted the entered digits as a different instruction (as meaning a rate of descent of 3,300 feet per minute). Losing track of which mode the system was in, mode awareness, seems to have been another component of mode error in this case.

In one sense a mode error involves a breakdown in going from intention to specific actions. But in another sense a breakdown in situation assessment has occurred – the practitioner has lost track of device mode. One part of this breakdown in situation assessment seems to be that device or system modes tend to change at a different rhythm relative to other user inputs or actions. Mode errors emphasize that the consequences of an action depend on the context in which it is carried out. On the surface, the operator’s intention and the executed action(s) appear to be in correspondence; the problem is that the meaning of action is determined by another variable – the system’s mode status.

Designers should examine closely the mode characteristics of computerized devices and systems for the potential for creating this predictable form of human-computer breakdown. Multiple modes shape practitioner cognitive processing in two ways. First, the use of multiple modes increases memory and knowledge demands – one needs to know or remember the effects of inputs and the meanings of indications in the various modes. Second, it increases demands on situation assessment and awareness. The difficulty of these demands is conditional on how the interface signals device mode (observability) and on characteristics of the distributed set of agents who manage incidents. The difficulty of keeping track of which mode the device is in also varies depending on the task context (time-pressure, interleaved multiple tasks, workload).

Design countermeasures to the classic mode problems are straightforward in principle:

![]() Eliminate unnecessary modes (in effect, recognize that there is a cost in operability associated with adding modes for flexibility, marketing, and other reasons).

Eliminate unnecessary modes (in effect, recognize that there is a cost in operability associated with adding modes for flexibility, marketing, and other reasons).

![]() Look for ways to increase the tolerance of the system to mode error. Look at specific places where mode errors could occur and (since these are errors of commission) be sure that (a) there is a recovery window before negative consequences accrue and (b) that the actions are reversible.

Look for ways to increase the tolerance of the system to mode error. Look at specific places where mode errors could occur and (since these are errors of commission) be sure that (a) there is a recovery window before negative consequences accrue and (b) that the actions are reversible.

![]() Provide better indications of mode status and better feedback about mode changes.

Provide better indications of mode status and better feedback about mode changes.

MODE ERROR AND AUTOMATED SYSTEMS

Human supervisory control of automated resources in event-driven task domains is a quite different type of task environment compared to the applications in the original research on mode error. Automation is often introduced as a resource for the human supervisor, providing him with a large number of modes of operation for carrying out tasks under different circumstances. The human’s role is to select the mode best suited to a particular situation.

However, this flexibility tends to create and proliferate modes of operation which create new cognitive demands on practitioners. Practitioners must know more – both about how the system works in each different mode and about how to manage the new set of options in different operational contexts. New attentional demands are created as the practitioner must keep track of which mode the device is in, both to select the correct inputs when communicating with the automation, and to track what the automation is doing now, why it is doing it, and what it will do next. These new cognitive demands can easily congregate at high-tempo and high-criticality periods of device use thereby adding new workload at precisely those time periods where practitioners are most in need of effective support systems.

These cognitive demands can be much more challenging in the context of highly automated resources. First, the flexibility of technology allows automation designers to develop much more complicated systems of device modes. Designers can provide multiple levels of automation and more than one option for many individual functions. As a result, there can be quite complex interactions across the various modes including “indirect” mode transitions. As the number and complexity of modes increase, it can easily lead to separate fragmented indications of mode status. As a result, practitioners have to examine multiple displays, each containing just a portion of the mode status data, to build a complete assessment of the current mode configuration.

Second, the role and capabilities of the machine agent in human-machine systems have changed considerably. With more advanced systems, each mode itself is an automated function which, once activated, is capable of carrying out long sequences of tasks autonomously in the absence of additional commands from human supervisors. For example, advanced cockpit automation can be programmed to automatically control the aircraft shortly after takeoff through landing. This increased capability of the automated resources themselves creates increased delays between user input and feedback about system behavior. As a result, the difficulty of error or failure detection and recovery goes up and inadvertent mode settings and transitions may go undetected for long periods. This allows for mode errors of omission (i.e., failure to intervene) in addition to mode errors of commission in the context of supervisory control.

Third, modes can change in new ways. Classically, mode changes only occurred as a reaction to direct operator input. In advanced technology systems, mode changes can occur indirectly based on situational and system factors as well as operator input. In the case of highly automated cockpits, for example, a mode transition can occur as an immediate consequence of pilot input. But it can also happen when a preprogrammed intermediate target (e.g., a target altitude) is reached or when the system changes its mode to prevent the pilot from putting the aircraft into an unsafe configuration.

This capability for “indirect” mode changes, independent of direct and immediate instructions from the human supervisor, drives the demand for mode awareness. Mode awareness is the ability of a supervisor to track and to anticipate the behavior of automated systems. Maintaining mode awareness is becoming increasingly important in the context of supervisory control of advanced technology which tends to involve an increasing number of interacting modes at various levels of automation to provide the user with a high degree of flexibility. Human supervisors are challenged to maintain awareness of which mode is active and how each active or armed mode is set up to control the system, the contingent interactions between environmental status and mode behavior, and the contingent interactions across modes. Mode awareness is crucial for any users operating a multi-mode system that interprets user input in different ways depending on its current status.

A cruise ship named the Royal Majesty, sailing from Bermuda to Boston in the Summer of 1995. It had more than 1,000 people onboard. Instead of Boston, the Royal Majesty ended up on a sandbank close to the Massachusetts shore. Without the crew noticing, it had drifted 17 miles off course during a day and a half of sailing. Investigators discovered afterward that the ship’s autopilot had defaulted to DR (Dead Reckoning) mode (from NAV, or Navigation mode) shortly after departure. DR mode does not compensate for the effects of wind and other drift (waves, currents), which NAV mode does. A northeasterly wind pushed the ship steadily off its course, to the side of its intended track. The U.S. National Transportation Safety Board investigation into the accident judged that “despite repeated indications, the crew failed to recognize numerous opportunities to detect that the vessel had drifted off track” (NTSB, 1997, p. 34).

A crew ended up 17 miles off track, after a day and a half of sailing. How could this happen? As said before, hindsight makes it easy to see where people were, versus where they thought they were. In hindsight, it is easy to point to the cues and indications that these people should have picked up in order to update or correct or even form their understanding of the unfolding situation around them. Hindsight has a way of exposing those elements that people missed, and a way of amplifying or exaggerating their importance. The key question is not why people did not see what we now know was important. The key question is how they made sense of the situation the way they did. What must the crew in question at the time have seen? How could they, on the basis of their experiences, construct a story that was coherent and plausible? What were the processes by which they became sure that they were right about their position, and how did automation help with this?

The Royal Majesty departed Bermuda, bound for Boston at 12:00 noon on the 9th of June 1995. The visibility was good, the winds light, and the sea calm. Before departure the navigator checked the navigation and communication equipment. He found it in “perfect operating condition.” About half an hour after departure the harbor pilot disembarked and the course was set toward Boston.

Just before 13:00 there was a cutoff in the signal from the GPS (Global Positioning System) antenna, routed on the fly bridge (the roof of the bridge), to the receiver – leaving the receiver without satellite signals. Post-accident examination showed that the antenna cable had separated from the antenna connection. When it lost satellite reception, the GPS promptly defaulted to dead reckoning (DR) mode. It sounded a brief aural alarm and displayed two codes on its tiny display: DR and SOL. These alarms and codes were not noticed. (DR means that the position is estimated, or deduced, hence “ded,” or now “dead,” reckoning. SOL means that satellite positions cannot be calculated.) The ship’s autopilot would stay in DR mode for the remainder of the journey.

Why was there a DR mode in the GPS in the first place, and why was a default to that mode neither remarkable, nor displayed in a more prominent way on the bridge? When this particular GPS receiver was manufactured (during the 1980s), the GPS satellite system was not as reliable as it is today. The receiver could, when satellite data was unreliable, temporarily use a DR mode in which it estimated positions using an initial position, the gyrocompass for course input and a log for speed input. The GPS thus had two modes, normal and DR. It switched autonomously between the two depending on the accessibility of satellite signals.

By 1995, however, GPS satellite coverage was pretty much complete, and had been working well for years. The crew did not expect anything out of the ordinary. The GPS antenna was moved in February, because parts of the superstructure occasionally would block the incoming signals, which caused temporary and short (a few minutes, according to the captain) periods of DR navigation. This was to a great extent remedied by the antenna move, as the cruise line’s electronics technician testified. People on the bridge had come to rely on GPS position data and considered other systems to be backup systems. The only times the GPS positions could not be counted on for accuracy were during these brief, normal episodes of signal blockage. Thus, the whole bridge crew was aware of the DR-mode option and how it worked, but none of them ever imagined or were prepared for a sustained loss of satellite data caused by a cable break – no previous loss of satellite data had ever been so swift, so absolute, and so long-lasting.

When the GPS switched from normal to DR on this journey in June 1995, an aural alarm sounded and a tiny visual mode annunciation appeared on the display. The aural alarm sounded like that of a digital wristwatch and was less than a second long. The time of the mode change was a busy time (shortly after departure), with multiple tasks and distracters competing for the crew’s attention. A departure involves complex maneuvering, there are several crew members on the bridge, and there is a great deal of communication. When a pilot disembarks, the operation is time constrained and risky. In such situations, the aural signal could easily have been drowned out. No one was expecting a reversion to DR mode, and thus the visual indications were not seen either. From the insider perspective, there was no alarm, as there was not going to be a mode default. There was neither a history, nor an expectation of its occurrence.

Yet even if the initial alarm was missed, the mode indication was continuously available on the little GPS display. None of the bridge crew saw it, according to their testimonies. If they had seen it, they knew what it meant, literally translated – dead reckoning means no satellite fixes. But as we saw before, there is a crucial difference between data that in hindsight can be shown to have been available and data that were observable at the time. The indications on the little display (DR and SOL) were placed between two rows of numbers (representing the ship’s latitude and longitude) and were about one sixth the size of those numbers. There was no difference in the size and character of the position indications after the switch to DR. The size of the display screen was about 7.5 by 9 centimeters, and the receiver was placed at the aft part of the bridge on a chart table, behind a curtain. The location is reasonable, because it places the GPS, which supplies raw position data, next to the chart, which is normally placed on the chart table. Only in combination with a chart do the GPS data make sense, and furthermore the data were forwarded to the integrated bridge system and displayed there (quite a bit more prominently) as well.

For the crew of the Royal Majesty, this meant that they would have to leave the forward console, actively look at the display, and expect to see more than large digits representing the latitude and longitude. Even then, if they had seen the two-letter code and translated it into the expected behavior of the ship, it is not a certainty that the immediate conclusion would have been “this ship is not heading towards Boston anymore,” because temporary DR reversions in the past had never led to such dramatic departures from the planned route. When the officers did leave the forward console to plot a position on the chart, they looked at the display and saw a position, and nothing but a position, because that is what they were expecting to see. It is not a question of them not attending to the indications. They were attending to the indications, the position indications, because plotting the position it is the professional thing to do. For them, the mode change did not exist.

But if the mode change was so nonobservable on the GPS display, why was it not shown more clearly somewhere else? How could one small failure have such an effect – were there no backup systems? The Royal Majesty had a modern integrated bridge system, of which the main component was the navigation and command system (NACOS). The NACOS consisted of two parts, an autopilot part to keep the ship on course and a map construction part, where simple maps could be created and displayed on a radar screen. When the Royal Majesty was being built, the NACOS and the GPS receiver were delivered by different manufacturers, and they, in turn, used different versions of electronic communication standards.

Due to these differing standards and versions, valid position data and invalid DR data sent from the GPS to the NACOS were both labeled with the same code (GP). The installers of the bridge equipment were not told, nor did they expect, that (GP-labeled) position data sent to the NACOS would be anything but valid position data. The designers of the NACOS expected that if invalid data were received, they would have another format. As a result, the GPS used the same data label for valid and invalid data, and thus the autopilot could not distinguish between them. Because the NACOS could not detect that the GPS data was invalid, the ship sailed on an autopilot that was using estimated positions until a few minutes before the grounding.

A principal function of an integrated bridge system is to collect data such as depth, speed, and position from different sensors, which are then shown on a centrally placed display to provide the officer of the watch with an overview of most of the relevant information. The NACOS on the Royal Majesty was placed at the forward part of the bridge, next to the radar screen. Current technological systems commonly have multiple levels of automation with multiple mode indications on many displays. An better design strategy is to collect these in the same place and another solution is to integrate data from many components into the same display surface. This presents an integration problem for shipping in particular, where quite often components are delivered by different manufacturers.

The centrality of the forward console in an integrated bridge system also sends the implicit message to the officer of the watch that navigation may have taken place at the chart table in times past, but the work is now performed at the console. The chart should still be used, to be sure, but only as a backup option and at regular intervals (customarily every half-hour or every hour). The forward console is perceived to be a clearing house for all the information needed to safely navigate the ship.

As mentioned, the NACOS consisted of two main parts. The GPS sent position data (via the radar) to the NACOS in order to keep the ship on track (autopilot part) and to position the maps on the radar screen (map part). The autopilot part had a number of modes that could be manually selected: NAV and COURSE. NAV mode kept the ship within a certain distance of a track, and corrected for drift caused by wind, sea, and current. COURSE mode was similar but the drift was calculated in an alternative way. The NACOS also had a DR mode, in which the position was continuously estimated. This backup calculation was performed in order to compare the NACOS DR with the position received from the GPS. To calculate the NACOS DR position, data from the gyrocompass and Doppler log were used, but the initial position was regularly updated with GPS data. When the Royal Majesty left Bermuda, the navigation officer chose the NAV mode and the input came from the GPS, normally selected by the crew during the 3 years the vessel had been in service.

If the ship had deviated from her course by more than a preset limit, or if the GPS position had differed from the DR position calculated by the autopilot, the NACOS would have sounded an aural and clearly shown a visual alarm at the forward console (the position-fix alarm). There were no alarms because the two DR positions calculated by the NACOS and the GPS were identical. The NACOS DR, which was the perceived backup, was using GPS data, believed to be valid, to refresh its DR position at regular intervals. This is because the GPS was sending DR data, estimated from log and gyro data, but labeled as valid data. Thus, the radar chart and the autopilot were using the same inaccurate position information and there was no display or warning of the fact that DR positions (from the GPS) were used. Nowhere on the integrated display could the officer on watch confirm what mode the GPS was in, and what effect the mode of the GPS was having on the rest of the automated system, not to mention the ship.

In addition to this, there were no immediate and perceivable effects on the ship because the GPS calculated positions using the log and the gyrocompass. It cannot be expected that a crew should become suspicious of the fact that the ship actually is keeping her speed and course. The combination of a busy departure, an unprecedented event (cable break) together with a nonevent (course keeping), and the change of the locus of navigation (including the intrasystem communication difficulties) shows that it made sense, in the situation and at the time, that the crew did not know that a mode change had occurred.

Even if the crew did not know about a mode change immediately after departure, there was still a long voyage at sea ahead. Why did none of the officers check the GPS position against another source, such as the Loran-C receiver that was placed close to the GPS? (Loran-C is a radio navigation system that relies on land-based transmitters.) Until the very last minutes before the grounding, the ship did not act strangely and gave no reason for suspecting that anything was amiss. It was a routine trip, the weather was good and the watches and watch changes uneventful.

Several of the officers actually did check the displays of both Loran and GPS receivers, but only used the GPS data (because those had been more reliable in their experience) to plot positions on the paper chart. It was virtually impossible to actually observe the implications of a difference between Loran and GPS numbers alone. Moreover, there were other kinds of cross-checking. Every hour, the position on the radar map was checked against the position on the paper chart, and cues in the world (e.g., sighting of the first buoy) were matched with GPS data. Another subtle reassurance to officers must have been that the master on a number of occasions spent several minutes checking the position and progress of the ship, and did not make any corrections.

Before the GPS antenna was moved, the short spells of signal degradation that led to DR mode also caused the radar map to jump around on the radar screen (the crew called it “chopping”) because the position would change erratically. The reason chopping was not observed on this particular occasion was that the position did not change erratically, but in a manner consistent with dead reckoning. It is entirely possible that the satellite signal was lost before the autopilot was switched on, thus causing no shift in position. The crew had developed a strategy to deal with this occurrence in the past. When the position-fix alarm sounded, they first changed modes (from NAV to COURSE) on the autopilot and then they acknowledged the alarm. This had the effect of stabilizing the map on the radar screen so that it could be used until the GPS signal returned. It was an unreliable strategy, because the map was being used without knowing the extent of error in its positioning on the screen. It also led to the belief that, as mentioned earlier, the only time the GPS data were unreliable was during chopping. Chopping was more or less alleviated by moving the antenna, which means that by eliminating one problem a new pathway for accidents was created. The strategy of using the position-fix alarm as a safeguard no longer covered all or most of the instances of GPS unreliability.

This locally efficient procedure would almost certainly not be found in any manuals, but gained legitimacy through successful repetition becoming common practice over time. It may have sponsored the belief that a stable map is a good map, with the crew concentrating on the visible signs instead of being wary of the errors hidden below the surface. The chopping problem had been resolved for about four months, and trust in the automation had grown.

Especially toward the end of the journey, there appears to be a larger number of cues that retrospective observers would see as potentially revelatory of the true nature of the situation. The first officer could not positively identify the first buoy that marked the entrance of the Boston sea lanes (Such lanes form a separation scheme delineated on the chart to keep meeting and crossing traffic at a safe distance and to keep ships away from dangerous areas). A position error was still not suspected, even with the vessel close to the shore. The lookouts reported red lights and later blue and white water, but the second officer did not take any action. Smaller ships in the area broadcast warnings on the radio, but nobody on the bridge of the Royal Majesty interpreted those to concern their vessel. The second officer failed to see the second buoy along the sea lanes on the radar, but told the master that it had been sighted.

The first buoy (“BA”) in the Boston traffic lanes was passed at 19:20 on the 10th of June, or so the chief officer thought (the buoy identified by the first officer as the BA later turned out to be the “AR” buoy located about 15 miles to the west-southwest of the BA). To the chief officer, there was a buoy on the radar, and it was where he expected it to be, it was where it should be. It made sense to the first officer to identify it as the correct buoy because the echo on the radar screen coincided with the mark on the radar map that signified the BA. Radar map and radar world matched. We now know that the overlap between radar map and radar return was a mere stochastic fit. The map showed the BA buoy, and the radar showed a buoy return. A fascinating coincidence was the sun glare on the ocean surface that made it impossible to visually identify the BA. But independent cross-checking had already occurred: The first officer probably verified his position by two independent means, the radar map and the buoy.

The officer, however, was not alone in managing the situation, or in making sense of it. An interesting aspect of automated navigation systems in real workplaces is that several people typically use it, in partial overlap and consecutively, like the watch-keeping officers on a ship. At 20:00 the second officer took over the watch from the chief officer. The chief officer must have provided the vessel’s assumed position, as is good watch-keeping practice. The second officer had no reason to doubt that this was a correct position. The chief officer had been at sea for 21 years, spending 30 of the last 36 months onboard the Royal Majesty. Shortly after the takeover, the second officer reduced the radar scale from 12 to 6 nautical miles. This is normal practice when vessels come closer to shore or other restricted waters. By reducing the scale, there is less clutter from the shore, and an increased likelihood of seeing anomalies and dangers.

When the lookouts later reported lights, the second officer had no expectation that there was anything wrong. To him, the vessel was safely in the traffic lane. Moreover, lookouts are liable to report everything indiscriminately; it is always up to the officer of the watch to decide whether to take action. There is also a cultural and hierarchical gradient between the officer and the lookouts; they come from different nationalities and backgrounds. At this time, the master also visited the bridge and, just after he left, there was a radio call. This escalation of work may well have distracted the second officer from considering the lookouts’ report, even if he had wanted to.

After the accident investigation was concluded, it was discovered that two Portuguese fishing vessels had been trying to call the Royal Majesty on the radio to warn her of the imminent danger. The calls were made not long before the grounding, at which time the Royal Majesty was already 16.5 nautical miles from where the crew knew her to be. At 20:42, one of the fishing vessels called, “fishing vessel, fishing vessel call cruise boat,” on channel 16 (an international distress channel for emergencies only). Immediately following this first call in English the two fishing vessels started talking to each other in Portuguese. One of the fishing vessels tried to call again a little later, giving the position of the ship he was calling. Calling on the radio without positively identifying the intended receiver can lead to mix-ups. In this case, if the second officer heard the first English call and the ensuing conversation, he most likely disregarded it since it seemed to be two other vessels talking to each other. Such an interpretation makes sense: If one ship calls without identifying the intended receiver, and another ship responds and consequently engages the first caller in conversation, the communication loop is closed. Also, as the officer was using the 6-mile scale, he could not see the fishing vessels on his radar. If he had heard the second call and checked the position, he might well have decided that the call was not for him, as it appeared that he was far from that position. Whomever the fishing ships were calling, it could not have been him, because he was not there.

At about this time, the second buoy should have been seen and around 21:20 it should have been passed, but was not. The second officer assumed that the radar map was correct when it showed that they were on course. To him the buoy signified a position, a distance traveled in the traffic lane, and reporting that it had been passed may have amounted to the same thing as reporting that they had passed the position it was (supposed to have been) in. The second officer did not, at this time, experience an accumulation of anomalies, warning him that something was going wrong. In his view, this buoy, which was perhaps missing or not picked up by the radar, was the first anomaly, but not perceived as a significant one. The typical Bridge Procedures Guide says that a master should be called when (a) something unexpected happens, (b) when something expected does not happen (e.g., a buoy), and (c) at any other time of uncertainty. This is easier to write than it is to apply in practice, particularly in a case where crew members do not see what they expected to see. The NTSB report, in typical counterfactual style, lists at least five actions that the officer should have taken. He did not take any of these actions, because he was not missing opportunities to avoid the grounding. He was navigating the vessel normally to Boston.

The master visited the bridge just before the radio call, telephoned the bridge about one hour after it, and made a second visit around 22:00. The times at which he chose to visit the bridge were calm and uneventful, and did not prompt the second officer to voice any concerns, nor did they trigger the master’s interest in more closely examining the apparently safe handling of the ship. Five minutes before the grounding, a lookout reported blue and white water. For the second officer, these indications alone were no reason for taking action. They were no warnings of anything about to go amiss, because nothing was going to go amiss. The crew knew where they were. Nothing in their situation suggested to them that they were not doing enough or that they should question the accuracy of their awareness of the situation.

At 22:20 the ship started to veer, which brought the captain to the bridge. The second officer, still certain that they were in the traffic lane, believed that there was something wrong with the steering. This interpretation would be consistent with his experiences of cues and indications during the trip so far. The master, however, came to the bridge and saw the situation differently, but was too late to correct the situation. The Royal Majesty ran aground east of Nantucket at 22:25, at which time she was 17 nautical miles from her planned and presumed course. None of the over 1,000 passengers were injured, but repairs and lost revenues cost the company $7 million.

The complexity of modes, interactions across modes, and indirect mode changes create new paths for errors and failures. No longer are modes only selected and activated through deliberate explicit actions. Rather, modes can change as a side effect of other practitioner actions or inputs depending on the system status at the time. The active mode that results may be inappropriate for the context, but detection and recovery can be very difficult in part due to long time-constant feedback loops.

An example of such an inadvertent mode activation contributed to a major accident in the aviation domain (the Bangalore accident) (Lenorovitz, 1990). In that case, one member of the flight crew put the automation into a mode called OPEN DESCENT during an approach without realizing it. In this mode aircraft speed was being controlled by pitch rather than thrust (controlling by thrust was the desirable mode for this phase of flight, that is, in the SPEED mode). As a consequence, the aircraft could not sustain both the glide path and maintain the pilot-selected target speed at the same time. As a result, the flight director bars commanded the pilot to fly the aircraft well below the required descent profile to try to maintain airspeed. It was not until 10 seconds before impact that the other crew member discovered what had happened, too late for them to recover with engines at idle. How could this happen?

One contributing factor in this accident may have been several different ways of activating the OPEN DESCENT mode (i.e., at least five). The first two options involve the explicit manual selection of the OPEN DESCENT mode. In one of these two cases, the activation of this mode is dependent upon the automation being in a particular state.

The other three methods of activating the OPEN DESCENT mode are indirect in the sense of not requiring any explicit manual mode selection. They are related to the selection of a new target altitude in a specific context or to protections that prevent the aircraft from exceeding a safe airspeed. In this case, for example, the fact that the automation was in the ALTITUDE ACQUISITION mode resulted in the activation of OPEN DESCENT mode when the pilot selected a lower altitude. The pilot may not have been aware of the fact that the aircraft was within 200 feet of the previously entered target altitude (which is the definition of ALTITUDE ACQUISITION mode). Consequently, he may not have expected that the selection of a lower altitude at that point would result in a mode transition. Because he did not expect any mode change, he may not have closely monitored his mode annunciations, and hence missed the transition.

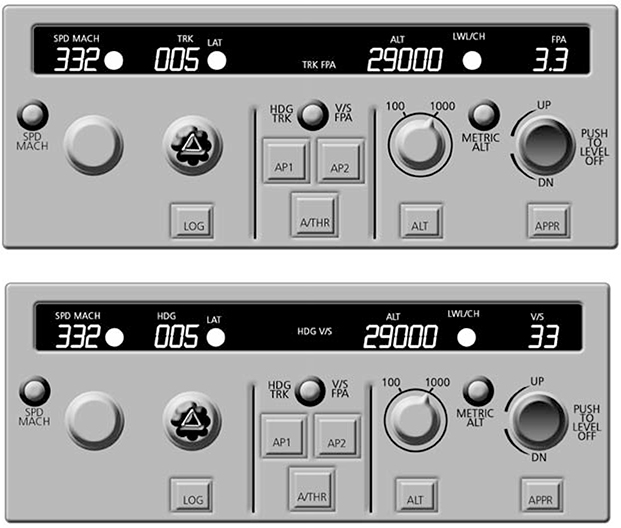

Display of data can play an important role when user-entered values are interpreted differently in different modes. In the following example, it is easy to see how this may result in unintended system behavior. In a current highly automated or “glass cockpit” aircraft, pilots enter a desired vertical speed or a desired flight path angle via the same display. The interpretation of the entered value depends on the active display mode. Although the different targets differ considerably (for example, a vertical speed of 2,500 feet vs. a flight path angle of 2.5 degrees), these two targets on the display look almost the same (see Figure 11.1). The pilot has to know to pay close attention to the labels that indicate mode status. He has to remember the indications associated with different modes, when to check for the currently active setting, and how to interpret the displayed indications. In this case, the problem is further aggravated by the fact that feedback about the consequences of an inappropriate mode transition is limited. The result is a cognitively demanding task; the displays do not support a mentally economical, immediate apprehension of the active mode.

Coordination across multiple team members is another important factor contributing to mode error in advanced systems. Tracking system status and behavior becomes more difficult if it is possible for other users to interact with the system without the need for consent by all operators involved (the indirect mode changes are one human-machine example of this).

This problem is most obvious when two experienced operators have developed different strategies of system use. When they have to cooperate, it is particularly difficult for them to maintain awareness of the history of interaction with the system which may determine the effect of the next system input. In addition, the design of the interface to the automation may suppress important kinds of cues about the activities of other team members.

The demands for mode awareness are critically dependent on the nature of the interface between the human and machine agents (and as pointed out above between human agents as well). If the computerized device also exhibits another of the HCI problems we noted earlier – not providing users with effective feedback about changes in the state of a device, automated system, or monitored process – then losing track of which mode the device is in may be surprisingly easy, at least in higher workload periods.

Some of these factors contributed to a fatal test flight accident that involved one of the most advanced automated aircraft in operation at the time (Aviation Week and Space Technology, April 3, April 10, and April 17, 1995). The test involved checking how the automation could handle a simulated engine failure at low altitude under extreme flight conditions, and it was one of a series of tests being performed one after the other. The flight crew’s task in this test was to set up the automation to fly the aircraft and to stop one engine to simulate an engine failure.

There were a number of contributing factors identified in the accident investigation report. During takeoff, the co-pilot rotated the aircraft rather rapidly which resulted in a slightly higher than planned pitch angle (a little more than 25 degrees) immediately after takeoff. At that point, the autopilot was engaged as planned for this test. Immediately following the autopilot engagement, the captain brought the left engine to idle power and cut off one hydraulic system to simulate an engine failure situation. The automation flew the aircraft into a stall. The flight crew recognized the situation and took appropriate recovery actions, but there was insufficient time (altitude) to recover from the stall before a crash killing everyone aboard the aircraft.

How did this happen? When the autopilot was selected, it immediately engaged in an altitude capture mode because of the high rate of climb and because the pilot selected a rather low level-off altitude of 2,000 ft. As a consequence, the automation continued to try to follow an altitude acquisition path even when it became impossible to achieve it (after the captain had brought the left engine to idle power).

The automation performs protection functions which are intended to prevent or recover from unsafe flight attitudes and configurations. One of these protection functions guards against excessive pitch which results in too low an airspeed and a stall. This protection is provided in all automation configurations except one – the very altitude acquisition mode in which the autopilot was operating.

At the same time, because the pitch angle exceeded 25 degrees at that point, the declutter mode of the Primary Flight Display activated. This means that all indications of the active mode configuration of the automation (including the indication of the altitude capture mode) were hidden from the crew because they had been removed from the display for simplification.

Ultimately, the automation flew the aircraft into a stall, and the crew was not able to recover in time because the incident occurred at low altitude.

Clearly, a combination of factors contributed to this accident and was cited in the report of the accident investigation. Included in the list of factors are the extreme conditions under which the test was planned to be executed, the lack of pitch protection in the altitude acquisition mode, and the inability of the crew to determine that the automation had entered that particular mode or to assess the consequences. The time available for the captain to react (12 seconds) to the abnormal situation was also cited as a factor in this accident.

A more generic contributing factor in this accident was the behavior of the automation which was highly complex and difficult to understand. These characteristics made it hard for the crew to anticipate the outcome of the maneuver. In addition, the observability of the system was practically non-existent when the declutter mode of the Primary Flight Display activated upon reaching a pitch angle of more than 25 degrees up.

The above examples illustrate how a variety of factors can contribute to a lack of mode awareness on the part of practitioners. Gaps or misconceptions in practitioners’ mental models may prevent them from predicting and tracking indirect mode transitions or from understanding the interactions between different modes. The lack of salient feedback on mode status and transitions (low observability) can also make it difficult to maintain awareness of the current and future system configuration. In addition to allocating attention to the different displays of system status and behavior, practitioners have to monitor environmental states and events, remember past instructions to the system, and consider possible inputs to the system by other practitioners. If they manage to monitor, integrate, and interpret all this information, system behavior will appear deterministic and transparent. However, depending on circumstances, missing just one of the above factors can be sufficient to result in an automation surprise and the impression of an animate system that acts independently of operator input and intention.

As illustrated in the above sections, mode error is a form of human-machine system breakdown. As systems of modes become more interconnected and more autonomous, new types of mode-related problems are likely, unless the extent of communication between man and machine changes to keep pace with the new cognitive demands.

THE “GOING SOUR” SCENARIO

Incidents that express the consequences of clumsy use of technology such as mode error follow a unique signature. Minor disturbances, misactions, miscommunications and miscoordinations seem to be managed into hazard despite multiple opportunities to detect that the system is heading towards negative consequences.

For example, this has occurred in several aviation accidents involving highly automated aircraft (Billings, 1996; Sarter, Woods and Billings, 1997). Against a background of the activities, the flight crew misinstructs the automation (e.g., a mode error). The automation accepts the instructions providing limited feedback confirming only the entries themselves. The flight crew believes they have instructed the automation to do one thing when in fact it will carry out a different instruction. The automation proceeds to fly the aircraft according to these instructions even though this takes the aircraft towards hazard (e.g., off course towards a mountain or descending too rapidly short of the runway). The flight crew, busy with other activities, does not see that the automation is flying the aircraft differently than they had expected and does not see that the aircraft is heading towards hazard until very late. At that point it is too late for the crew to take any effective action to avoid the crash.

When things go wrong in this way, we look back and see a process that gradually went “sour” through a series of small problems. For this reason we call them “going sour” scenarios. This term was originally used to describe scenarios in anesthesiology by Cook, Woods and McDonald (1991). A more elaborate treatment for flightcrew-automation breakdowns in aviation accidents can be found in Sarter, Woods and Billings (1997). In the “going sour” class of accidents, an event occurs or a set of circumstances come together that appear to be minor and unproblematic, at least when viewed in isolation or from hindsight. This event triggers an evolving situation that is, in principle, possible to recover from. But through a series of commissions and omissions, misassessments and miscommunications, the human team or the human-machine team manages the situation into a serious and risky incident or even accident. In effect, the situation is managed into hazard.

After-the-fact, going sour incidents look mysterious and dreadful to outsiders who have complete knowledge of the actual state of affairs. Since the system is managed into hazard, in hindsight, it is easy to see opportunities to break the progression towards disaster. The benefits of hindsight allow reviewers to comment:

![]() “How could they have missed X, it was the critical piece of information?”

“How could they have missed X, it was the critical piece of information?”

![]() “How could they have misunderstood Y, it is so logical to us?”

“How could they have misunderstood Y, it is so logical to us?”

![]() “Why didn’t they understand that X would lead to Y, given the inputs, past instructions and internal logic of the system?”

“Why didn’t they understand that X would lead to Y, given the inputs, past instructions and internal logic of the system?”

In fact, one test for whether an incident is a going sour scenario is to ask whether reviewers, with the advantage of hindsight, make comments such as, “All of the necessary data was available, why was no one able to put it all together to see what it meant?” Unfortunately, this question has been asked by a great many of the accident investigation reports of complex system failures regardless of work domain.

Luckily, going sour accidents are relatively rare even in complex systems. The going sour progression is usually blocked because of two factors:

![]() the problems that can erode human expertise and trigger this kind of scenario are significant only when a collection of factors or exceptional circumstances come together;

the problems that can erode human expertise and trigger this kind of scenario are significant only when a collection of factors or exceptional circumstances come together;

![]() the expertise embodied in operational systems and personnel allows practitioners to avoid or stop the incident progression usually.

the expertise embodied in operational systems and personnel allows practitioners to avoid or stop the incident progression usually.

COUNTERMEASURES

Mode error illustrates some of the basic strategies researchers have identified to increase the human contribution to safety:

![]() increase the system’s tolerance to errors,

increase the system’s tolerance to errors,

![]() avoid excess operational complexity,

avoid excess operational complexity,

![]() evaluate changes in technology and training in terms of their potential to create specific genotypes or patterns of failure,

evaluate changes in technology and training in terms of their potential to create specific genotypes or patterns of failure,

![]() increase skill at error detection by improving the observability of state, activities and intentions,

increase skill at error detection by improving the observability of state, activities and intentions,

![]() make intelligent and automated machines team-players,

make intelligent and automated machines team-players,

![]() invest in human expertise.

invest in human expertise.

We will discuss a few of these in the context of mode error and awareness.

AVOID EXCESS OPERATIONAL COMPLEXITY

Designers frequently do not appreciate the cognitive and operational costs of more and more complex modes. Often, there are pressures and other constraints on designers that encourage mode proliferation. However, the apparent benefits of increased functionality may be more than counterbalanced by the costs of learning about all the available functions, the costs of learning how to coordinate these capabilities in context, and the costs of mode errors. Users frequently cope with the complexity of the modes by “re-designing” the system through patterns of use; for example, few users may actually use more than a small subset of the resident options or capabilities.

Avoiding excess operational complexity is a difficult issue because no single developer or organization decides to make systems complex. But in the pursuit of local improvements or in trying to accommodate multiple customers, systems gradually get more and more complex as additional features, modes, and options accumulate. The cost center for this increase in creeping complexity is the user who must try to manage all of these features, modes and options across a diversity of operational circumstances. Failures to manage this complexity are categorized as “human error.” But the source of the problem is not inside the person. The source is the accumulated complexity from an operational point of view. Trying to eliminate “erratic” behavior through remedial training will not change the basic vulnerabilities created by the complexity. Neither will banishing people associated with failures. Instead human error is a symptom of systemic factors. The solutions are system fixes that require change at the blunt end of the system. This coordinated system approach must start with meaningful information about the factors that predictably affect human performance.

Mode simplification in aviation illustrates both the need for change and the difficulties involved. Not all modes are used by all pilots or carriers due to variations in operations and preferences. Still they are all available and can contribute to complexity for operators. Not all modes are taught in transition training; only a set of “basic” modes is taught, and different carriers define different modes as “basic.” It is very difficult to get agreement on which modes represent excess complexity and which are essential for safe and efficient operation.

ERROR DETECTION THROUGH IMPROVED FEEDBACK

Research has shown that systems are effective through effective detection and recovery of developing trouble before negative consequences occur. Error detection is improved by providing better feedback, especially feedback about the future behavior of the underlying system or automated systems. In general, increasing complexity can be balanced with improved feedback. Improving feedback is a critical investment area for improving human performance.

One area of need is improved feedback about the current and future behavior of the automated systems. As technological change increases machines’ autonomy, authority and complexity, there is a concomitant need to increase observability through new forms of feedback emphasizing an integrated dynamic picture of the current situation, agent activities, and how these may evolve in the future. Increasing autonomy and authority of machine agents without an increase in observability leads to automation surprises. As discussed earlier, data on automation surprises has shown that crews generally do not detect their miscommunications with the automation from displays about the automated system’s state, but rather only when system behavior becomes sufficiently abnormal.

This result is symptomatic of low observability where observability is the technical term that refers to the cognitive work needed to extract meaning from available data. This term captures the fundamental relationship among data, observer and context of observation that is fundamental to effective feedback. Observability is distinct from data availability, which refers to the mere presence of data in some form in some location. For human perception, “it is not sufficient to have something in front of your eyes to see it” (O’Regan, 1992, p. 475).

Observability refers to processes involved in extracting useful information (Rasmussen, 1985 first introduced the term referring to control theory). It results from the interplay between a human user knowing when to look for what information at what point in time and a system that structures data to support attentional guidance (Woods, 1995a). The critical test of observability is when the display suite helps practitioners notice more than what they were specifically looking for or expecting (Sarter and Woods, 1997).

One example of displays with very low observability on the current generation of flight decks is the flight-mode annunciations on the primary flight display. These crude indications of automation activities contribute to reported problems with tracking mode transitions. As one pilot commented, “changes can always sneak in unless you stare at it.” Simple injunctions for pilots to look closely at or call out changes in these indications generally are not effective ways to redirect attention in a changing environment.

For new display concepts to enhance observability they will need to be:

![]() transition-oriented – provide better feedback about events and transitions;

transition-oriented – provide better feedback about events and transitions;

![]() future-oriented – the current approach generally captures only the current configuration; the goal is to highlight operationally significant sequences and reveal what will or should happen next and when;

future-oriented – the current approach generally captures only the current configuration; the goal is to highlight operationally significant sequences and reveal what will or should happen next and when;

![]() pattern-based – practitioners should be able to scan at a glance and quickly pick up possible unexpected or abnormal conditions rather than have to read and integrate each individual piece of data to make an overall assessment.

pattern-based – practitioners should be able to scan at a glance and quickly pick up possible unexpected or abnormal conditions rather than have to read and integrate each individual piece of data to make an overall assessment.

MECHANISMS TO MANAGE AUTOMATED RESOURCES

Giving users visibility into the machine agent’s reasoning processes is only one side of the coin in making machine agents into team players. Without also giving the users the ability to direct the machine agent as a resource in their reasoning processes, the users are not in a significantly improved position. They might be able to say what’s wrong with the machine’s solution, but remain powerless to influence it in any way other than through manual takeover. The computational power of machine agents provides a great potential advantage, that is, to free users from much of the mundane legwork involved in working through large problems, thus allowing them to focus on more critical high-level decisions. However, in order to make use of this potential, the users need to be given the authority and capabilities to make those decisions. This means giving them control over the problem solution process.

A commonly proposed remedy for this is, in situations where users determine that the machine agent is not solving a problem adequately, to allow users to interrupt the automated agent and take over the problem in its entirety. Thus, the human is cast into the role of critiquing the machine, and the joint system operates in essentially two modes – fully automatic or fully manual. The system is a joint system only in the sense that either a human agent or a machine agent can be asked to deal with the problem, not in the more productive sense of the human and machine agents cooperating in the process of solving the problem. This method, which is like having the automated agent say “either you do it or I’ll do it,” has many obvious drawbacks. Either the machine does all the job without any benefits of practitioners’ information and knowledge, and despite the brittleness of the machine agents, or the user takes over in the middle of a deteriorating or challenging situation without the support of cognitive tools. Previous work in several domains (space operations, electronic troubleshooting, aviation) and with different types of machine agents (expert systems, cockpit automation, flight-path-planning algorithms) has shown that this is a poor cooperative architecture. Instead, users need to be able to continue to work with the automated agents in a cooperative manner by taking control of the automated agents.

Using the machine agent as a resource may mean various things. As for the case of observability, one of the main challenges is to determine what levels and modes of interaction will be meaningful to users. In some cases the users may want to take very detailed control of some portion of a problem, specifying exactly what decisions are made and in what sequence, while in others the users may want only to make very general, high level corrections to the course of the solution in progress. Accommodating all of these possibilities is difficult and requires very careful iterative analysis of the interactions between user goals, situational factors, and the nature of the machine agent.

ENHANCING HUMAN EXPERTISE

Mode awareness also indicates how technology change interacts with human expertise. It is ironic that many industries seem to be reducing the investment in human expertise, at the very time when they claim that human performance is a dominant contributor to accidents.

One of the myths about the impact of new technology on human performance is that as investment in automation increases less investment is needed in human expertise. In fact, many sources have shown how increased automation creates new and different knowledge and skill requirements.

Investigations of mode issue in aviation showed how the complexity of the automated flight deck creates the need for new knowledge about the functions and interactions of different automated subsystems and modes. Data showed how the complexity of the automated flight deck makes it easy for pilots to develop oversimplified or erroneous mental models of the tangled web of automated modes and transition logics. Training departments struggle within very limited time and resource windows to teach crews how to manage the automated systems as a resource in differing flight situations. Many sources have identified incidents when pilots were having trouble getting a particular mode or level of automation to work successfully. In these cases they persisted too long trying to get a particular mode of automation to carry out their intentions instead of switching to another means or a more direct means to accomplish their flight path management goals. For example, after an incident someone may ask those involved, “Why didn’t you turn it off?” Response: “It didn’t do what it was supposed to, so I tried to get it to do what I had programmed it to do.” The new knowledge and skill demands seem to be most relevant in relatively infrequent situations where different kinds of factors push events beyond the routine.

For training managers and departments the result is a great deal of training demands that must be fitted into a small and shrinking training footprint. The new roles, knowledge and skills for practitioners combine with economic pressures to create a training double bind. Trainers may cope with this double bind in many ways. They may focus limited training resources on a basic subset of features, modes and capabilities leaving the remainder to be learned on the job. Another tactic is to teach recipes. But what if the deferred material is the most complicated or difficult to learn? What if people have misconceptions about those aspects of device function – how will they be corrected? What happens when circumstances force practitioners away from the limited subset of device capabilities with which they are most familiar or most practiced? All of these become problems or conditions that can (and have) contributed to failure.

New developments which promise improved training may not be enough by themselves to cope with the training double bind. Economic pressure means that the benefits of improvements will be taken in productivity (reaching the same goal faster) rather than in quality (more effective training). Trying to squeeze more yield from a shrinking investment in human expertise will not help prevent the kinds of incidents and accidents that we label human error after the fact.