Chapter 3

Secure Software Design

3.1 Introduction

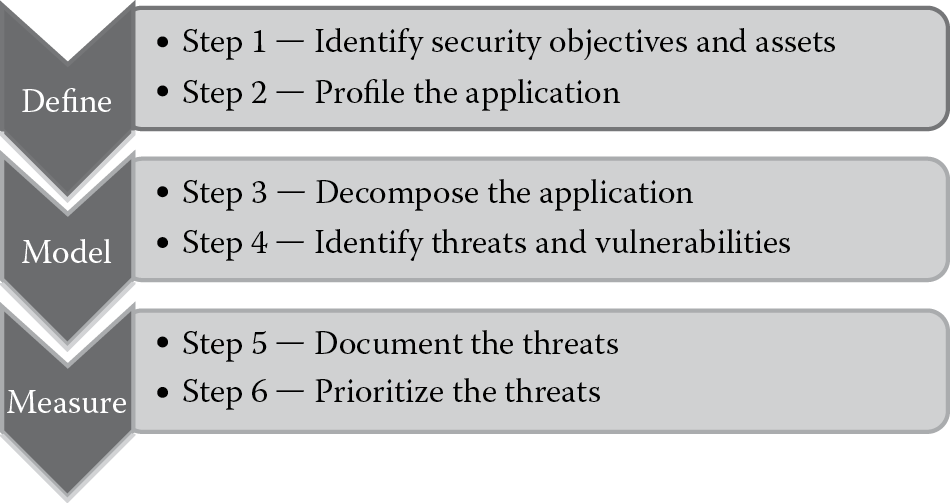

One of the most important phases in the software development life cycle (SDLC) is the design phase. During this phase, software specifications are translated into architectural blueprints that can be coded during the implementation (or coding) phase that follows. When this happens, it is necessary for the translation to be inclusive of secure design principles. It is also important to ensure that the requirements that assure software security are designed into the software in the design phase. Although writing secure code is important for software assurance, the majority of software security issues has been attributed to insecure or incomplete design. Entire classes of vulnerabilities that are not syntactic or code related such as semantic or business logic flaws are related to design issues. Attack surface evaluation using threat models and misuse case modeling (covered in Chapter 2), control identification, and prioritization based on risk to the business are all essential software assurance processes that need to be conducted during the design phase of software development. In this chapter, we will cover secure design principles and processes and learn about different architectures and technologies, which can be leveraged for increasing security in software. We will end this chapter by understanding the need for and the importance of conducting architectural reviews of the software design from a security perspective.

3.2 Objectives

As a CSSLP, you are expected to

- Understand the need for and importance of designing security into the software.

- Be familiar with secure design principles and how they can be incorporated into software design.

- Have a thorough understanding of how to threat model software.

- Be familiar with the different software architectures that exist and the security benefits and drawbacks of each.

- Understand the need to take into account data (type, format), database, interface, and interconnectivity security considerations when designing software.

- Know how the computing environment and chosen technologies can have an impact on design decisions regarding security.

- Know how to conduct design and architecture reviews with a security perspective.

This chapter will cover each of these objectives in detail. It is imperative that you fully understand the objectives and be familiar with how to apply them to the software that your organization builds or procures.

3.3 The Need for Secure Design

Software that is designed correctly improves software quality. In addition to quality aspects of software, there are other requirements that need to be factored into its design. Some are privacy requirements as well as globalization and localization requirements, including security requirements. We learned in earlier chapters that software can meet all quality requirements and still be insecure, warranting the need for explicitly designing the software with security in mind.

IBM Systems Sciences Institute, in its research work on implementing software inspections, determined that it was 100 times more expensive to fix software bugs after the software is in production than when it is being designed. The time that is necessary to fix identified issues is shorter when the software is still in the design phase. The cost savings are substantial because there is minimal to no disruption to business operations. Besides the aforementioned time and cost-saving benefits, there are several other benefits of designing security early in the SDLC. Some of these include the following:

- Resilient and recoverable software: Security designed into software decreases the likelihood of attack or errors, which assures resiliency and recoverability of the software.

- Quality, maintainable software that is less prone to errors: Secure design not only increases the resiliency and recoverability of software, but such software is also less prone to errors (accidental or intentional), and that is directly related to the reliability of the software. This makes the software easily maintainable while improving the quality of the software considerably.

- Minimal redesign and consistency: When software is designed with security in mind, there is a minimal need for redesign. Using standards for architectural design of software also makes the software consistent, regardless of who is developing it.

- Business logic flaws addressed: Business logic flaws are those which are characterized by the software’s functioning as designed, but the design itself makes circumventing the security policy possible. Business logic flaws have been commonly observed in the way password-recovery mechanisms are designed. In the early days, when people needed to recover their passwords, they were asked to answer a predefined set of questions for which they had earlier provided answers that were saved to their profiles on the system. These questions were either guessable or often had a finite set of answers. It is not difficult to guess the favorite color of a person or provide an answer from the finite set of primary colors that exists. The software responds to the user input as designed, and so there is really no issue of reliability. However, because careful thought was not given to the architecture by which password recovery was designed, there existed a possibility of an attacker’s brute-forcing or intelligently bypassing security mechanisms. By designing software with security in mind, business logic flaws and other architectural design issues can be uncovered, which is a main benefit of securely designing software.

Investing the time up front in the SDLC to design security into the software supports the “build-in” virtue of security, as opposed to trying to bolt it on at a later stage. The bolt-on method of implementing security can become very costly, time-consuming, and generate software of low quality characterized by being unreliable, inconsistent, and unmaintainable, as well as by being error prone and hacker prone.

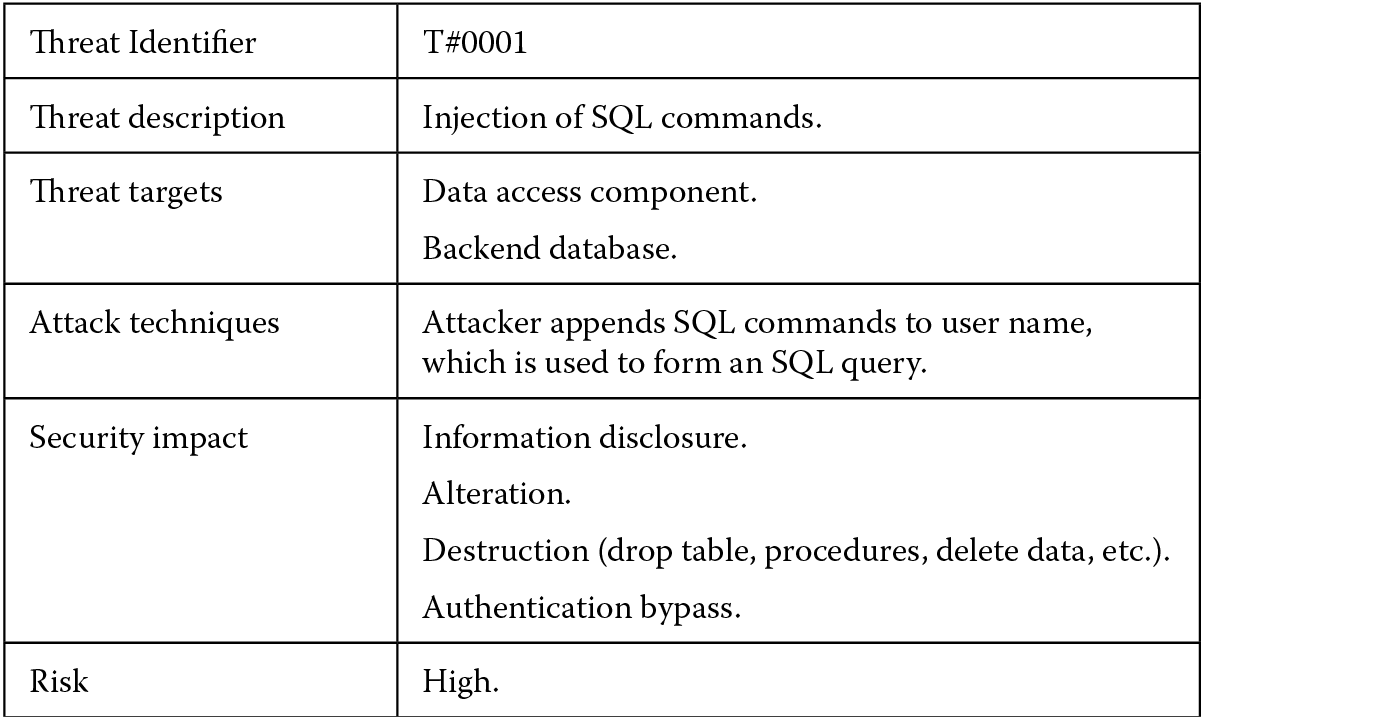

3.4 Flaws versus Bugs

Although it may seem like many security errors are related to insecure programming, the majority of security errors are also architecture based. The line of demarcation between when a software security error is due to improper architecture and when it is due to insecure implementation is not always very distinct, as the error itself may be a result of both architecture and implementation failure. In the design stage, because no code is written, we are primarily concerned with design issues related to software assurance. For the rest of this chapter and book, we will refer to design and architectural defects that can result in errors as “flaws” and to coding/implementation constructs that can cause a breach in security as “bugs.”

It is not quite as important to know which security errors constitute a flaw and which ones a bug, but it is important to understand that both flaws and bugs need to be identified and addressed appropriately. Threat modeling and secure architecture design reviews, which will we cover later in this chapter, are useful in the detection of architecture (flaws) and implementation issues (bugs), although the latter are mostly determined by code reviews and penetration testing exercises after implementation. Business logic flaws that were mentioned earlier are primarily a design issue. They are not easily detectable when reviewing code. Scanners and intrusion detection systems (IDSs) cannot detect them, and application-layer firewalls are futile in their protection against them. The discovery of nonsyntactic design flaws in the logical operations of the software is made possible by security architecture and design reviews. Security architecture and design reviews using outputs from attack surface evaluation, threat modeling, and misuse cases modeling are very useful in ensuring that the software not only functions as it is expected to but that it does not violate any security policy while doing so. Logic flaws are also known as semantic issues. Flaws are broad classes of vulnerabilities that at times can also include syntactic coding bugs. Insufficient input validation and improper error and session management are predominantly architectural defects that manifest themselves as coding bugs.

3.5 Design Considerations

In addition to designing for functionality of software, design for security tenets and principles also must be conducted. In Chapter 2, we learned about various types of security requirements. In the design phase, we will consider how these requirements can be incorporated into the software architecture and makeup. In this section, we will cover how the identified security requirements can be designed and what design decisions are to be made based on the business need. We will start with how to design the software to address the core security elements of confidentiality, integrity, availability, authentication, authorization, and auditing, and then we will look at examples of how to architect the secure design principles covered Chapter 1.

3.5.1 Core Software Security Design Considerations

3.5.1.1 Confidentiality Design

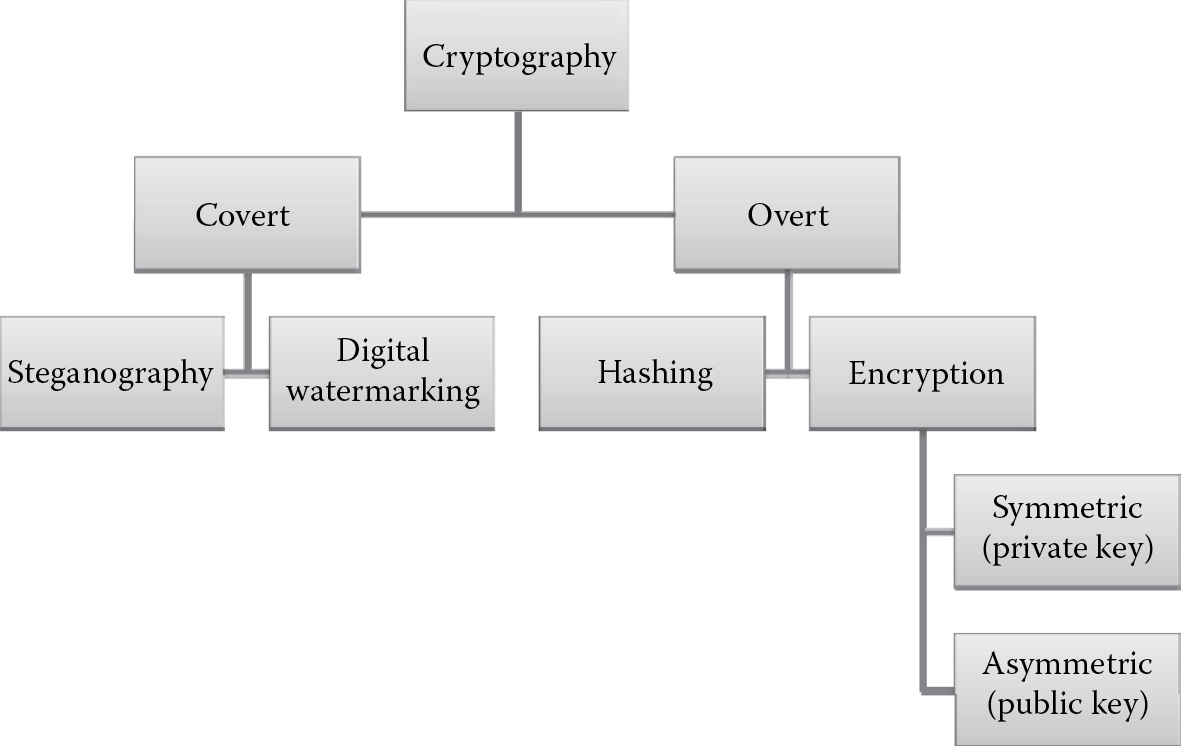

Disclosure protection can be achieved in several ways using cryptographic and masking techniques. Masking, covered in Chapter 2, is useful for disclosure protection when data are displayed on the screen or on printed forms; however, for assurance of confidentiality when the data are transmitted or stored in transactional data stores or offline archives, cryptographic techniques are primarily used. The most predominant cryptographic techniques include overt techniques such as hashing and encryption and covert techniques such as steganography and digital watermarking as depicted in Figure 3.1. These techniques were introduced in Chapter 2 and are covered here in a little more detail with a design perspective.

Cryptanalysis is the science of finding vulnerabilities in cryptographic protection mechanisms. When cryptographic protection techniques are implemented, the primary goal is to ensure that an attacker with resources must make such a large effort to subvert or evade the protection mechanisms that the required effort, itself, serves as a deterrent or makes the subversion or evasion impossible. This effort is referred to as work factor. It is critical to consider the work factor when choosing a technique while designing the software. The work factor against cryptographic protection is exponentially dependent on the key size. A key is a sequence of symbols that controls the encryption and decryption operations of a cryptographic algorithm, according to ISO/IEC 11016:2006. Practically, this is usually a string of bits that is supplied as a parameter into the algorithm for encrypting plaintext to cipher text or for decrypting cipher text to plaintext. It is vital that this key is kept a secret.

The key size, also known as key length, is the length of the key, measured usually in bits or bytes that are used in the algorithm. Given time and computational power, almost all cryptographic algorithms can be broken, except for the one-time pad, which is the only algorithm that is provably unbreakable by exhaustive brute-force attacks. This is, however, only true if the key used in the algorithm is truly random and discarded permanently after use. The key size in a one-time pad is equal to the size of the message itself, and each key bit is used only once and discarded.

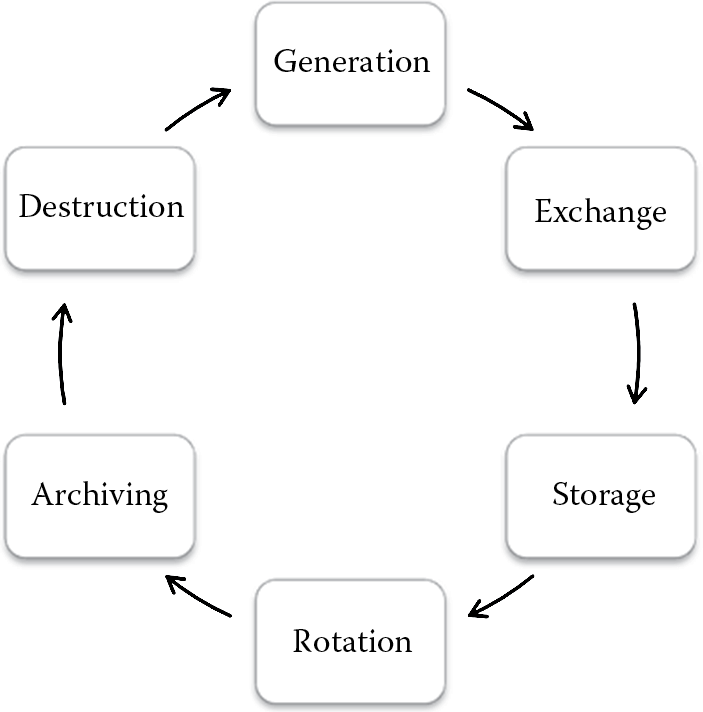

In addition to protecting the secrecy of the key, key management is extremely critical. The key management life cycle includes the generation, exchange, storage, rotation, archiving, and destruction of the key as illustrated in Figure 3.2. From the time that the key is generated to the time that it is completely disposed of (or destroyed), it needs to be protected. The exchange mechanism itself needs to be secure so that the key is not disclosed when the key is shared. When the key is stored in configuration files or in a hardware security module (HSM) such as the Trusted Platform Modules (TPMs) chip for increased security, it needs to be protected using access control mechanisms, TPM security, encryption, and secure startup mechanisms, which we will cover in Chapter 7.

Rotation (swapping) of keys involves the expiration of the current key and the generation, exchange, and storage of a new key. Cryptographic keys need to be swapped periodically to thwart insider threats and immediately upon key disclosure. When the key is rotated as part of a routine security protocol, if the data that are backed up or archived are in an encrypted format, then the key that was used for encrypting the data must also be archived. If the key is destroyed without being archived, the corresponding key to decrypt the data will be unavailable, leading to a denial of service (DoS) should there be a need to retrieve the data for forensics or disaster recovery purposes.

Encryption algorithms are primarily of two types: symmetric and asymmetric.

3.5.1.1.1 Symmetric Algorithms

Symmetric algorithms are characterized by using a single key for encryption and decryption operations that is shared between the sender and the receiver. This is also referred to by other names: private key cryptography, shared key cryptography, or secret key algorithm. The sender and receiver need not be human all the time. In today’s computing business world, the senders and receivers can be applications or software within or external to the organization.

The major benefit of symmetric key cryptography is that it is very fast and efficient in encrypting large volumes of data in a short period. However, this advantage comes with significant challenges that have a direct impact on the design of the software. Some of the challenges with symmetric key cryptography include the following:

- Key exchange and management: Both the originator and the receiver must have a mechanism in place to share the key without compromising its secrecy. This often requires an out-of-band secure mechanism to exchange the key information, which requires more effort and time, besides potentially increasing the attack surface area. The delivery of the key and the data must be mutually exclusive as well.

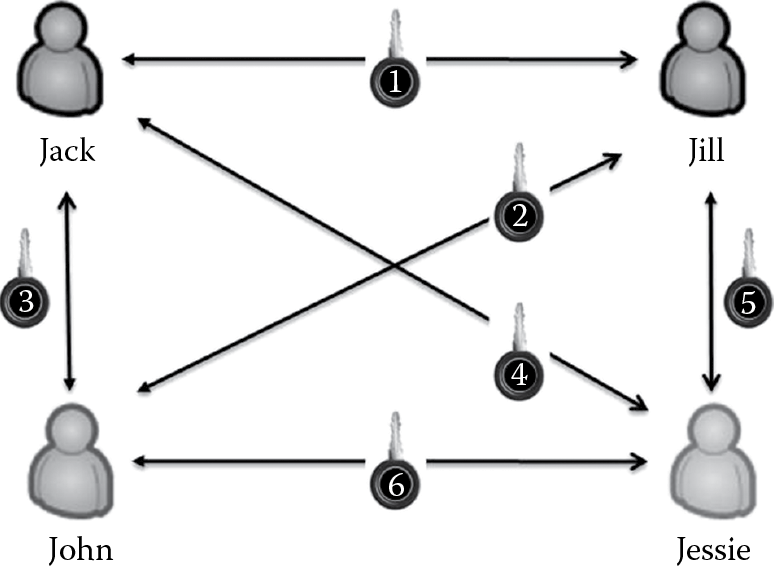

- Scalability: Because a unique key needs to be used between each sender and recipient, the number of keys required for symmetric key cryptographic operations is exponentially dependent on the number of users or parties involved in that secure transaction. For example, if Jack wants to send a message to Jill, then they both must share one key. If Jill wants to send a message to John, then there needs to be a different key that is used for Jill to communicate with John. Now, between Jack and John, there is a need for another key, if they need to communicate. If we add Jessie to the mix, then there is a need to have six keys, one for Jessie to communicate with Jack, one for Jessie to communicate with Jill, and one for Jessie to communicate with John, in addition to the three keys that are necessary as mentioned earlier and depicted in Figure 3.3. The computation of the number of keys can be mathematically represented as

So if there are 10 users/parties involved, then the number of keys required is 45 and if there are 100 users/parties involved, then we need to generate, distribute, and manage 4,950 keys, making symmetric key cryptography not very scalable.

- Nonrepudiation not addressed: Symmetric key simply provides confidentiality protection by encrypting and decrypting the data. It does not provide proof of origin or nonrepudiation.

Some examples of common symmetric key cryptography algorithms along with their strength and supported key size are tabulated in Table 3.1. RC2, RC4, and RC5 are other examples of symmetric algorithms that have varying degrees of strength based on the multiple key sizes they support. For example, the RC2-40 algorithm is considered to be a weak algorithm, whereas the RC2-128 is deemed to be a strong algorithm.

Symmetric Algorithms

|

Algorithm Name |

Strength |

Key Size |

|

DES |

Weak |

56 |

|

Skipjack |

Medium |

80 |

|

IDEA |

Strong |

128 |

|

Blowfish |

Strong |

128 |

|

3DES |

Strong |

168 |

|

Twofish |

Very strong |

256 |

|

RC6 |

Very strong |

256 |

|

AES / Rijndael |

Very strong |

256 |

3.5.1.1.2 Asymmetric Algorithms

In asymmetric key cryptography, instead of using a single key for encryption and decryption operations, two keys that are mathematically related to each other are used. One of the two keys is to be held secret and is referred to as the private key, whereas the other key is disclosed to anyone with whom secure communications and transactions need to occur. The key that is publicly displayed to everyone is known as the public key. It is also important that it should be computationally infeasible to derive the private key from the public key. Though there is a private key and a public key in asymmetric key cryptography, it is commonly known as public key cryptography.

Both the private and the public keys can be used for encryption and decryption. However, if a message is encrypted with a public key, it is only the corresponding private key that can decrypt that message. The same is true when a message is encrypted using a private key. That message can be decrypted only by the corresponding public key. This makes it possible for asymmetric key cryptographic to provide both confidentiality and nonrepudiation assurance.

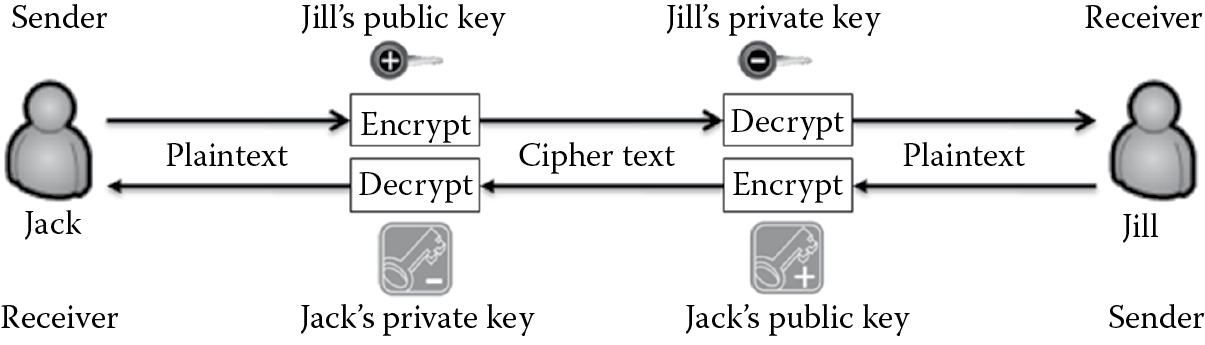

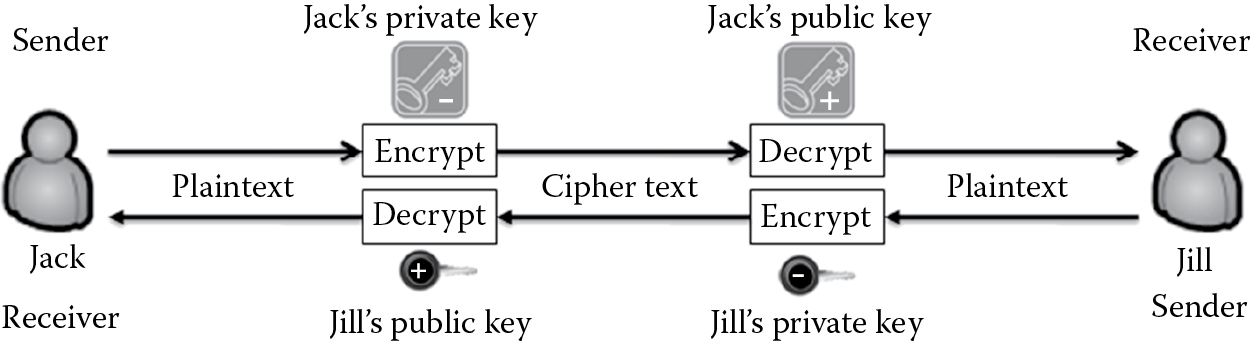

Confidentiality is provided when the sender uses the receiver’s public key to encrypt the message and the receiver uses the corresponding private key to decrypt the message, as illustrated in Figure 3.4. For example, if Jack wants to communicate with Jill, he can encrypt the plaintext message with her public key and send the resulting cipher text to her. Jill can user her private key that is paired with her public key and decrypt the message. Because Jill’s private key should not be known to anyone other than Jill, the message is protected from disclosure to anyone other than Jill, assuring confidentiality. Now, if Jill wants to respond to Jack, she can encrypt the plaintext message she plans to send him with his public key and send the resulting cipher text to him. The cipher text message can then be decrypted to plaintext by Jack using his private key, which again, only he should know.

In addition to confidentiality protection, asymmetric key cryptography also can provide nonrepudiation assurance. Nonrepudiation protection is known also as proof-of-origin assurance. When the sender’s private key is used to encrypt the message and the corresponding key is used by the receiver to decrypt it, as illustrated in Figure 3.5, proof-of-origin assurance is provided. Because the message can be decrypted only by the public key of the sender, the receiver is assured that the message originated from the sender and was encrypted by the corresponding private key of the sender. To demonstrate nonrepudiation or proof of origin, let us consider the following example. Jill has the public key of Jack and receives an encrypted message from Jack. She is able to decrypt that message using Jack’s public key. This assures her that the message was encrypted using the private key of Jack and provides her the confidence that Jack cannot deny sending her the message, because he is the only one who should have knowledge of his private key.

If Jill wants to send Jack a message and he needs to be assured that no one but Jill sent him the message, Jill can encrypt the message with her private key and Jack will use her corresponding public key to decrypt the message. A compromise in the private key of the parties involved can lead to confidentiality and nonrepudiation threats. It is thus critically important to protect the secrecy of the private key.

In addition to confidentiality and nonrepudiation assurance, asymmetric key cryptography also provides access control, authentication, and integrity assurance. Access control is provided because the private key is limited to one person. By virtue of nonrepudiation, the identity of the sender is validated, which supports authentication. Unless the private–public key pair is compromised, the data cannot be decrypted and modified, thereby providing data integrity assurance.

Asymmetric key cryptography has several advantages over symmetric key cryptography. These include the following:

- Key exchange and management: In asymmetric key cryptography, the overhead costs of having to securely exchange and store the key are alleviated. Cryptographic operations using asymmetric keys require a public key infrastructure (PKI) key identification, exchange, and management. PKI uses digital certificates to make key exchange and management automation possible. Digital certificates is covered in the next section.

- Scalability: Unlike symmetric key cryptography, where there is a need to generate and securely distribute one key between each party, in asymmetric key cryptography, there are only two keys needed per user: one that is private and held by the sender and the other that is public and distributed to anyone who wishes to engage in a transaction with the sender. One hundred users will require 200 keys, which is much easier to manage than the 4,950 keys needed for symmetric key cryptography.

- Addresses nonrepudiation: It also addresses nonrepudiation by providing the receiver assurance of proof of origin. The sender cannot deny sending the message when the message has been encrypted using the private key of the sender.

Although asymmetric key cryptography provides many benefits over symmetric key cryptography, there are certain challenges that are prevalent, as well. Public key cryptography is computationally intensive and much slower than symmetric encryption. This is, however, a preferable design choice for Internet environments.

Some common examples of asymmetric key algorithms include Rivest, Shamir, Adelman (RSA), El Gamal, Diffie–Hellman (used only for key exchange and not data encryption), and Elliptic Curve Cryptosystem (ECC), which is ideal for small, hardware devices such as smart cards and mobile devices.

3.5.1.1.3 Digital Certificates

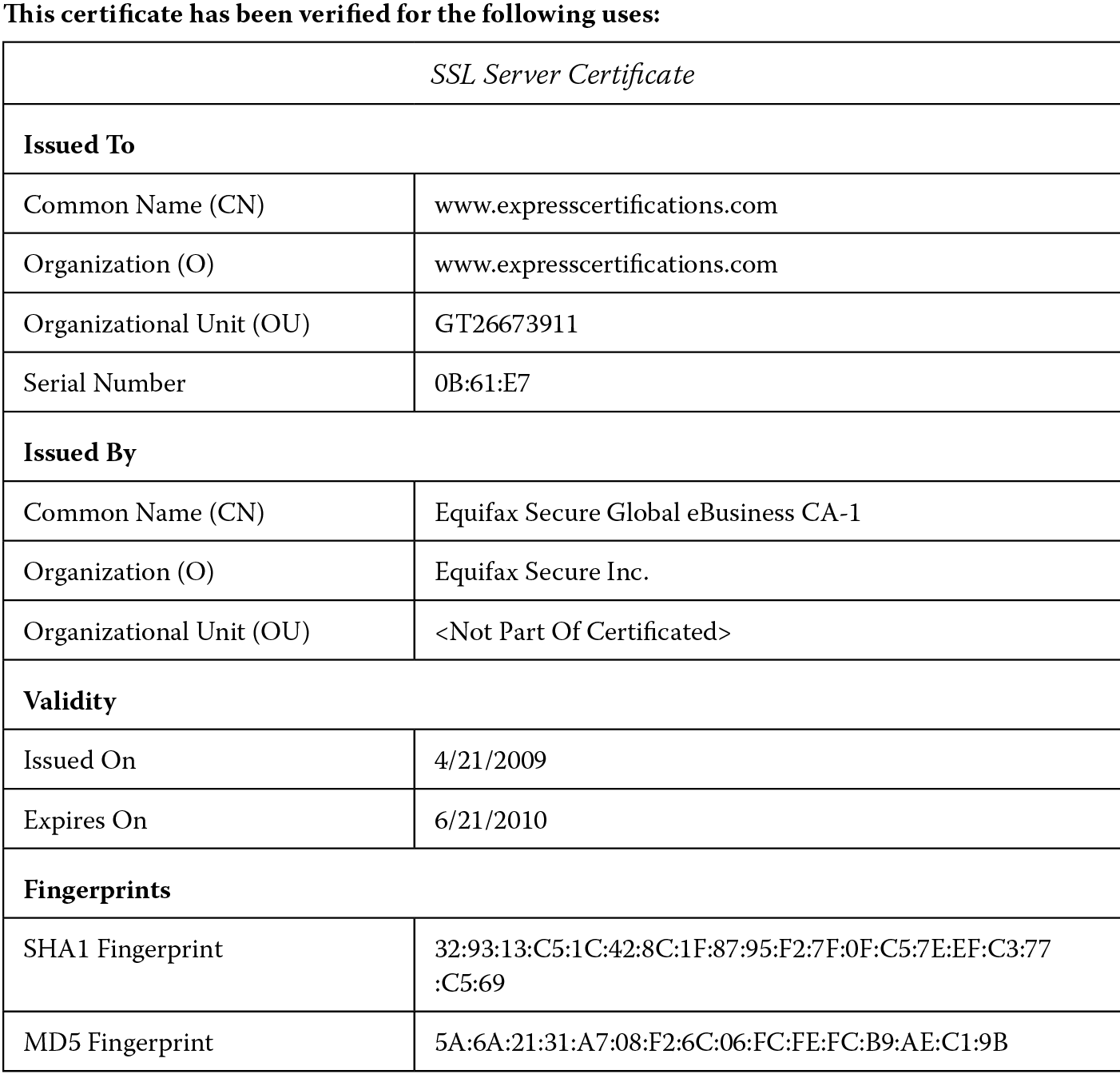

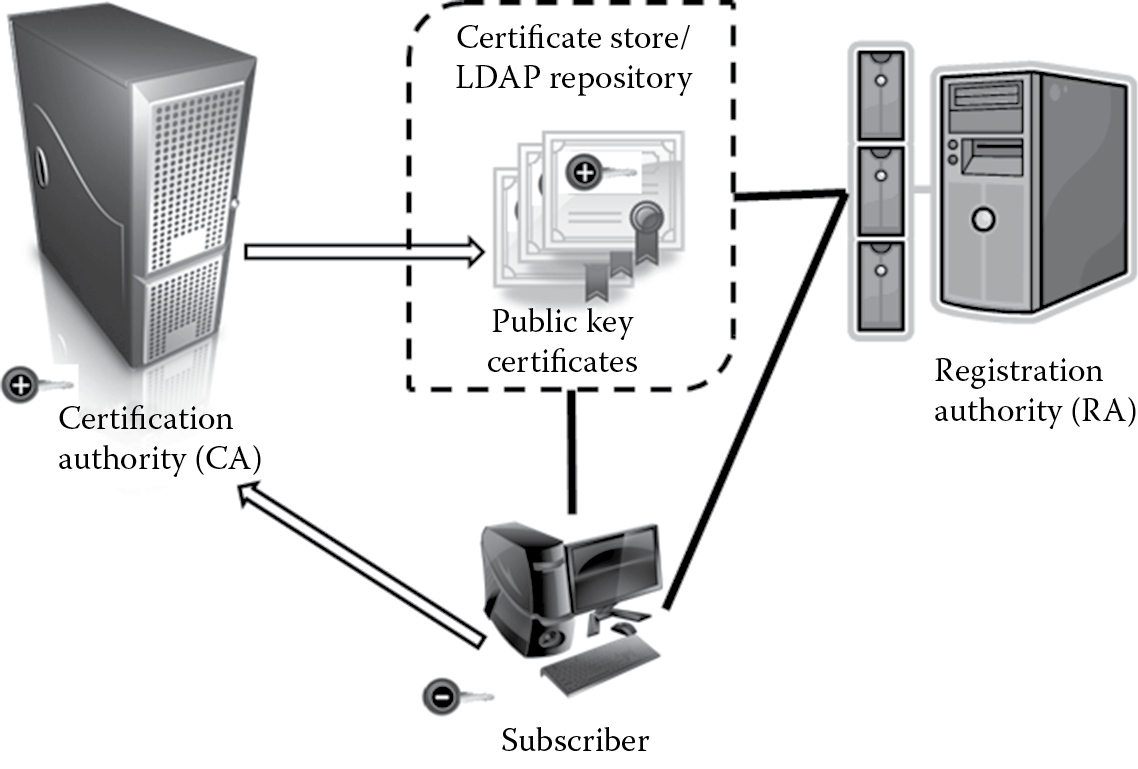

Digital certificates carry in them the public keys, algorithm information, owner and subject data, the digital signature of the certification authority (CA) that issued and verified the subject data, and a validity period (date range) for that certificate. Because a digital certificate contains the digital signature of the CA, it can be used by anyone to verify the authenticity of the certificate itself.

The different types of digital certificates that are predominantly used in Internet settings include the following:

- Personal certificates are used to identify individuals and authenticate them with the server. Secure e-mail using S-Mime uses personal certificates.

- Server certificates are used to identify servers. These are primarily used for verifying server identity with the client and for secure communications and transport layer security (TLS). The Secure Sockets Layer (SSL) protocol uses server certificates for assuring confidentiality when data are transmitted. Figure 3.6 shows an example of a server certificate.

- Software publisher certificates are used to sign software that will be distributed on the Internet. It is important to note that these certificates do not necessarily assure that the signed code is safe for execution but are merely informative in role, informing the software user that the certificate is signed by a trusted, software publisher’s CA.

3.5.1.1.4 Digital Signatures

Certificates hold in them the digital signatures of the CAs that verified and issued the digital certificates. A digital signature is distinct from a digital certificate. It is similar to an individual’s signature in its function, which is to authenticate the identity of the message sender, but in its format it is electronic. Digital signatures not only provide identity verification but also ensure that the data or message have not been tampered with, because the digital signature that is used to sign the message cannot be easily imitated by someone unless it is compromised. It also provides nonrepudiation.

There are several design considerations that need to be taken into account when choosing cryptographic techniques. It is therefore imperative to first understand business requirements pertaining to the protection of sensitive or private information. When these requirements are understood, one can choose an appropriate design that will be used to securely implement the software. If there is a need for secure communications in which no one but the sender and receiver should know of a hidden message, steganography can be considered in the design. If there is a need for copyright and IP protection, then digital watermarking techniques are useful. If data confidentiality in processing, transit, storage, and archives need to be assured, hashing or encryption techniques can be used.

3.5.2 Integrity Design

Integrity in the design assures that there is no unauthorized modification of the software or data. Integrity of software and data can be accomplished by using any one of the following techniques or a combination of the techniques, such as hashing (or hash functions), referential integrity design, resource locking, and code signing. Digital signatures, covered earlier, also provide data or message alteration protection.

3.5.2.1 Hashing (Hash Functions)

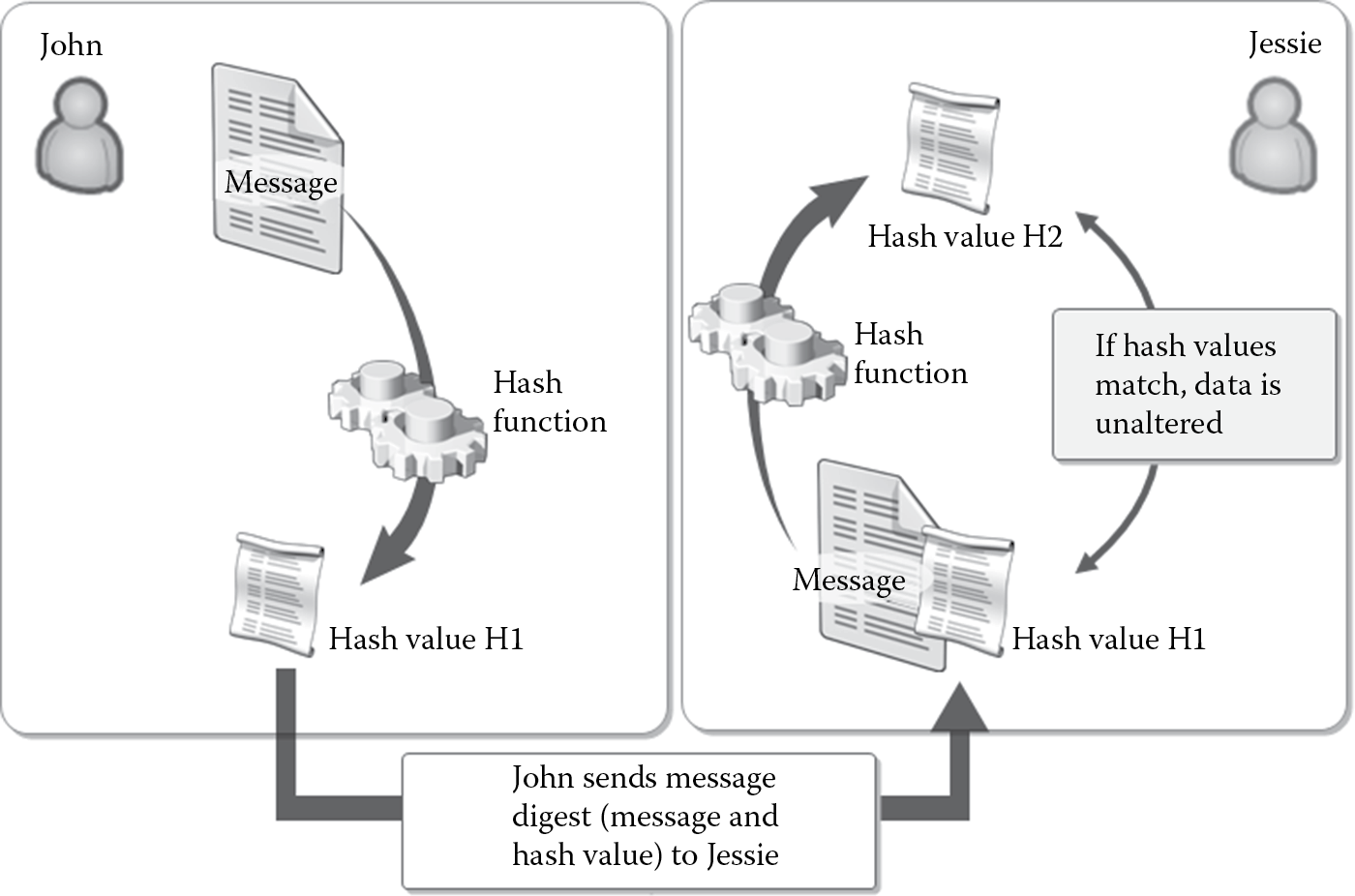

Here is a recap of what was introduced about hashing in Chapter 2: Hash functions are used to condense variable length inputs into an irreversible, fixed-sized output known as a message digest or hash value. When designing software, we must ensure that all integrity requirements that warrant irreversible protection, which is provided by hashing, are factored in. Figure 3.7 describes the steps taken in verifying integrity with hashing. John wants to send a private message to Jessie. He passes the message through a hash function, which generates a hash value, H1. He sends the message digest (original data plus hash value H1) to Jessie. When Jessie receives the message digest, she computes a hash value, H2, using the same hash function that John used to generate H1. At this point, the original hash value (H1) is compared with the new hash value (H2). If the hash values are equal, then the message has not been altered when it was transmitted.

In addition to assuring data integrity, it is also important to ensure that hashing design is collision free. “Collision free” implies that it is computationally infeasible to compute the same hash value on two different inputs. Birthday attacks are often used to find collisions in hash functions. A birthday attack is a type of brute-force attack that gets its name from the probability that two or more people randomly chosen can have the same birthday. Secure hash designs ensure that birthday attacks are not possible, which means that an attacker will not be able to input two messages and generate the same hash value. Salting the hash is a mechanism that assures collision-free hash values. Salting the hash also protects against dictionary attacks, which are another type of brute-force attack. A “dictionary attack” is an attempt to thwart security protection mechanisms by using an exhaustive list (like a list of words from a dictionary).

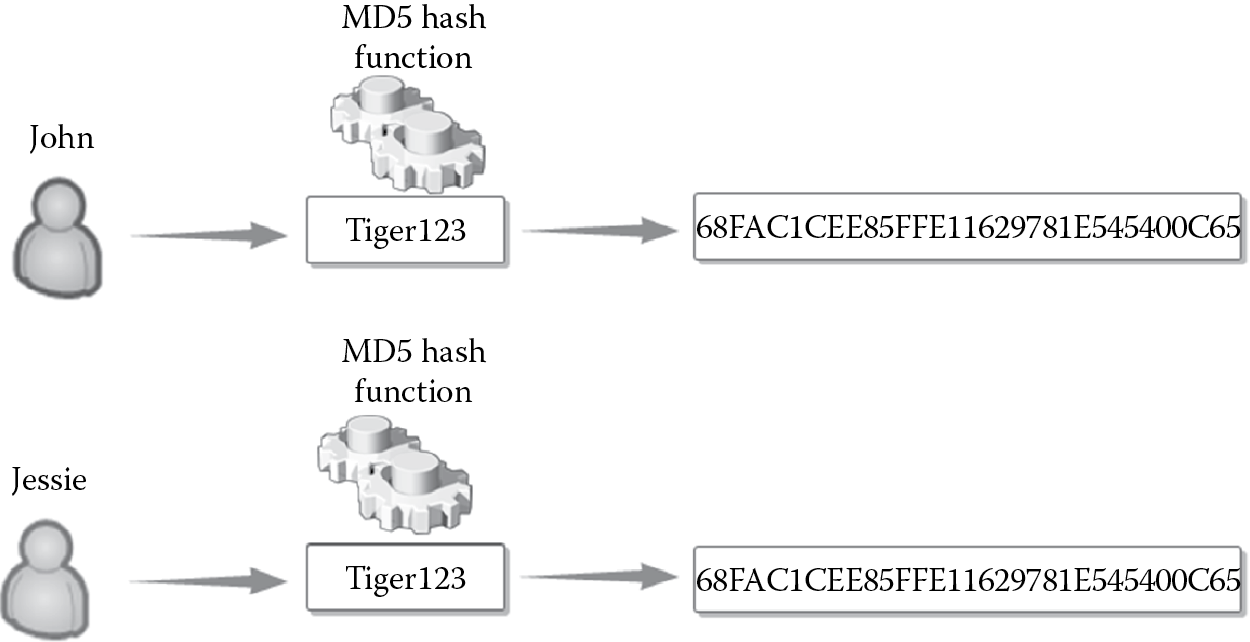

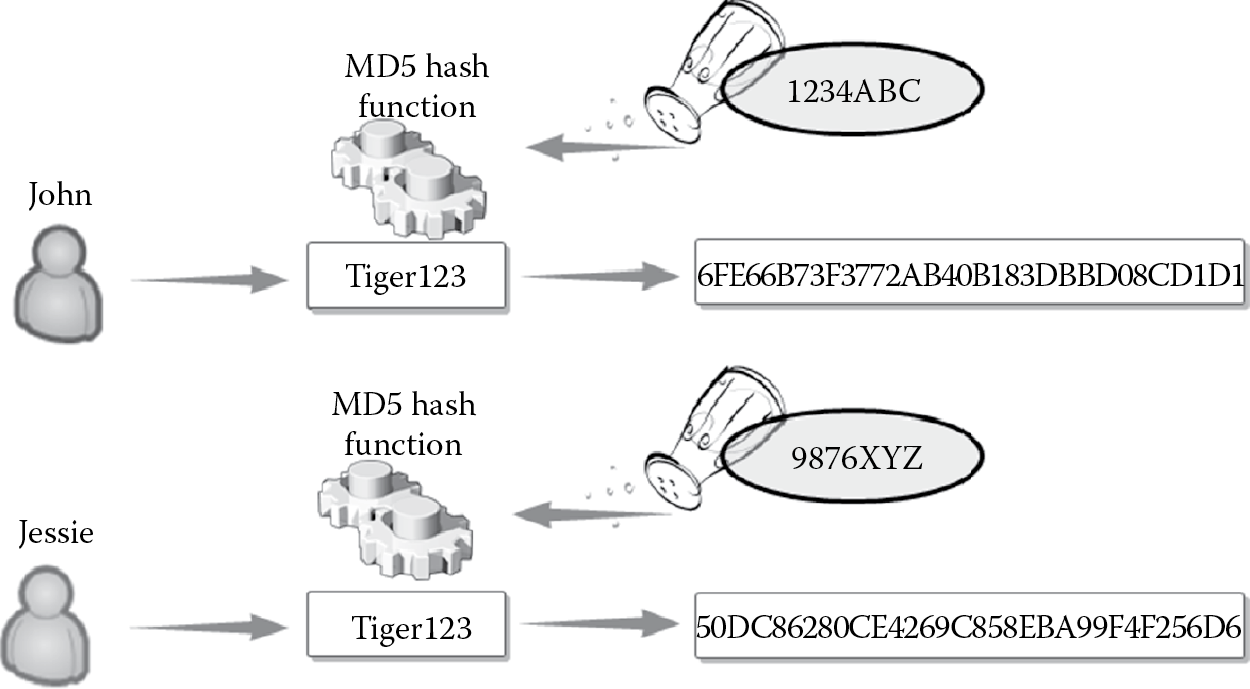

Salt values are random bytes that can be used with the hash function to prevent prebuilt dictionary attacks. Let us consider the following: There is a likelihood that two users within a large organization have the same password. Both John and Jessie have the same password, “tiger123” for logging into their bank account. When the password is hashed using the same hash function, it should produce the same hashed value as depicted in Figure 3.8. The password “tiger123” is hashed using the MD5 hash function to generate a fixed-sized hash value “9E107D9D372BB6826BD81D3542A419D6.”

Even though the user names are different, when the password is hashed, because it generates the same output, it can lead to impersonation attacks, where John can login as Jessie or vice versa. By adding random bytes (salt) to the original plaintext before passing it through the hash function, the output that is generated for the same input is made different. This mitigates the security issues discussed earlier. It is recommended to use a salt value that is unique and random for each user. When the salt value is “1234ABC” for John and is “9876XYZ” for Jessie, the same password, “tiger123” results in different hashed values as depicted in Figure 3.9.

Design considerations should take into account the security aspects related to the generation of the salt, which should be unique to each user and random.

Some of the most common hash functions are the MD2, MD4, and MD5, which were all designed by Ronald Rivest; the Secure Hash Algorithms family (SHA-0, SHA-1, SHA-, and SHA-2) designed by NSA and published by NIST to complement digital signatures and HAVAL. The Ronald Rivest MD series of algorithms generate a fixed, 128-bit size output and has been proven to be not completely collision free. The SHA-0 and SHA-1 family of hash functions generated a fixed, 160-bit sized output. The SHA-2 family of hash functions includes SHA-224 and SHA-256, which generate a 256-bit sized output and SHA-384 and SHA-512 which generate a 512-bit sized output. HAVAL is distinct in being a hash function that can produce hashes in variable lengths (128–256 bits). HAVAL is also flexible to let users indicate the number of rounds (3–5) to be used to generate the hash for increased security. As a general rule of thumb, the greater the bit length of the hash value that is supported, the greater the protection that is provided, making cryptanalysis work factor significantly greater. So when designing the software, it is important to consider the bit length of the hash value that is supported. Table 3.2 tabulates the different hash value lengths that are supported by some common hash functions.

Hash Functions and Supported Hash Value Lengths

|

Hash Function |

Hash Value Length (in bits) |

|

MD2, MD4, MD5 |

128 |

|

SHA |

160 |

|

HAVAL |

Variable lengths (128, 160, 192, 224, 256) |

Another important aspect when choosing the hash function for use within the software is to find out if the hash function has already been broken and deemed unsuitable for use. The MD5 hash function is one such example that the U.S. Computer Emergency Response Team (CERT) of the Department of Homeland Security (DHS) considers as cryptographically broken. DHS promotes moving to the SHA family of hash functions.

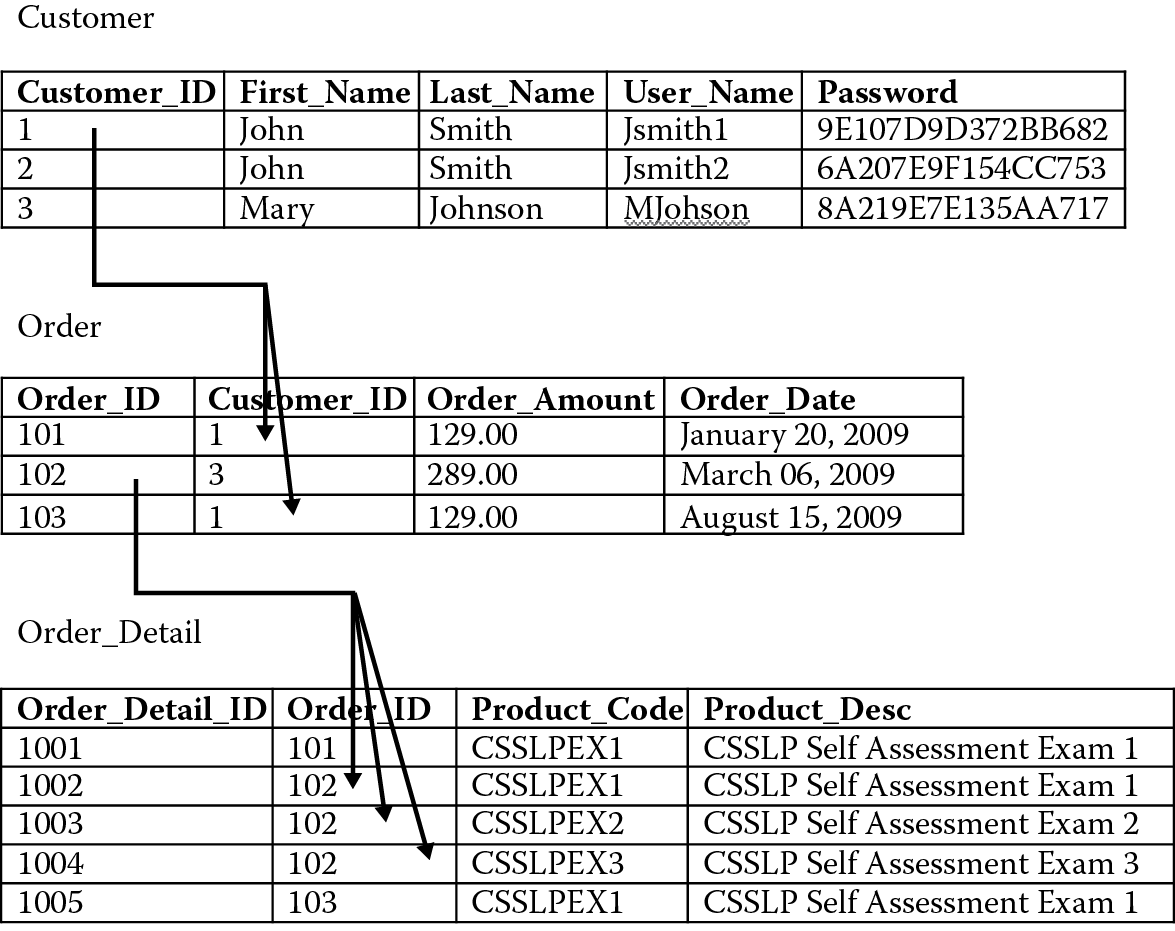

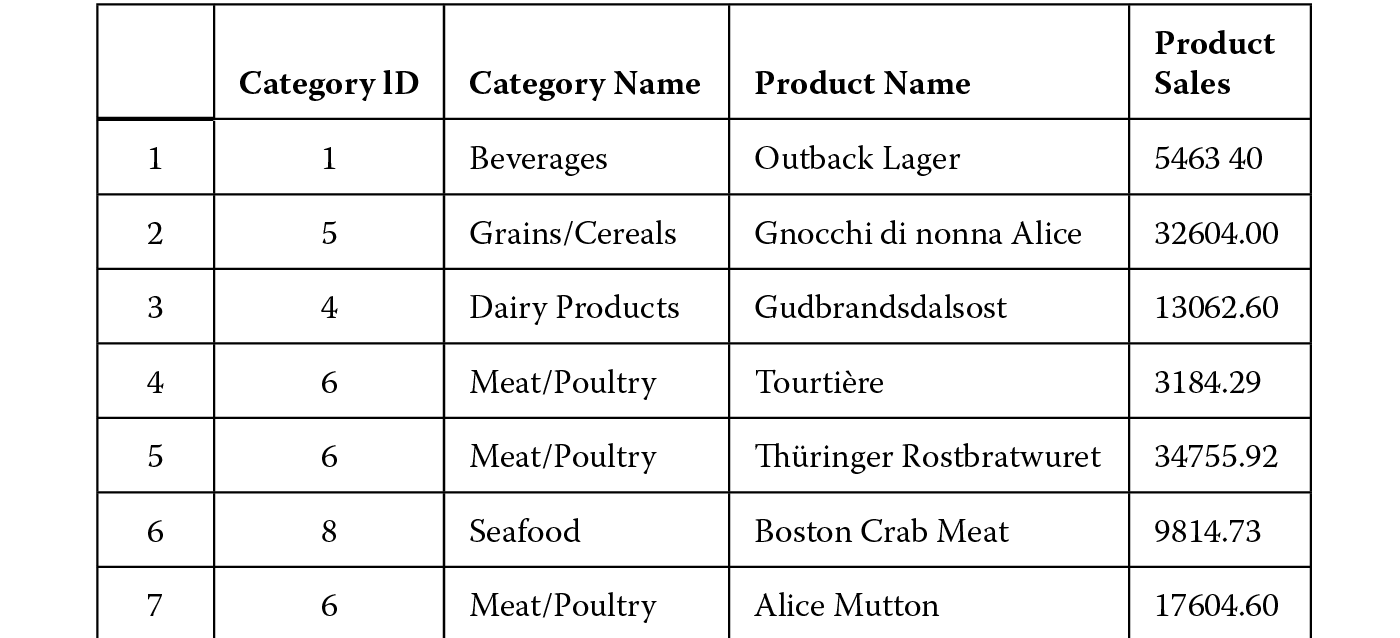

3.5.2.2 Referential Integrity

Integrity assurance of the data, especially in a relational database management system (RDBMS) is made possible by referential integrity, which ensures that data are not left in an orphaned state. Referential integrity protection uses primary keys and related foreign keys in the database to assure data integrity. Primary keys are those columns or combination of columns in a database table, which uniquely identify each row in a table. When the column or columns that are defined as the primary key of a table are linked (referenced) in another table, these column or columns are referred to as foreign keys in the second table. For example, as depicted in Figure 3.10, Customer_ID column in the CUSTOMER table is the primary key because it uniquely identifies a row in the table. Although there are two users with the same first name and last name, “John Smith,” the Customer_ID is unique and can identify the correct row in the database. Customers are also linked to their orders using their Customer_Id, which is the foreign key in the ORDER table. This way, all of the customer information need not be duplicated in the ORDER table. The removal of duplicates in the tables is done by a process called normalization, which is covered later in this chapter. When one needs to query the database to retrieve all orders for customer John Smith whose Customer_ID is 1, then two orders (Order_ID 101 and 103) are returned. In this case, the parent table is the CUSTOMER table and the child table is the ORDER table. The Order_ID is the primary key in the ORDER table, which, in turn, is established as the foreign key in the ORDER_DETAIL table. In order to find out the details of the order placed by customer Mary Johnson whose Customer_ID is 3, we can retrieve the three products that she ordered by referencing the primary key and foreign key relationships. In this case, in addition to the CUSTOMER table being the parent of the ORDER table, the ORDER table, itself, is parent to the ORDER_DETAIL child table.

Referential integrity ensures that data are not left in an orphaned state. This means that if the customer Mary Johnson is deleted from the CUSTOMER table in the database, all of her corresponding order and order details are deleted, as well, from the ORDER and ORDER_DETAIL tables, respectively. This is referred to as cascading deletes. Failure to do so will result in records being present in ORDER and ORDER_DETAILS tables as orphans with a reference to a customer who no longer exists in the parent CUSTOMER table. When referential integrity is designed, it can be set up to either delete all child records when the parent record is deleted or to disallow the delete operation of a customer (parent record) who has orders (child records), unless all of the child order records are deleted first. The same is true in the case of updates. If for some business need, Mary Johnson’s Customer_ID in the parent table (CUSTOMER) is changed, then all subsequent records in the child table (ORDER) should also be updated to reflect the change, as well. This is referred to as cascading updates.

Decisions to normalize data into atomic (nonduplicate) values and establish primary keys and foreign keys and their relationships, cascading updates and deletes, in order to assure referential integrity are important design considerations that ensure the integrity of data or information.

3.5.2.3 Resource Locking

In addition to hashing and referential integrity, resource locking can be used to assure data or information integrity. When two concurrent operations are not allowed on the same object (say a record in the database), because one of the operations locks that record from allowing any changes to it, until it completes its operation, it is referred to as resource locking. Although this provides integrity assurance, it is critical to understand that if resource locking protection is not properly designed, it can lead to potential deadlocks and subsequent DoS. Deadlock is a condition that exists when two operations are racing against each other to change the state of a shared object and each is waiting for the other to release the shared object that is locked.

When designing software, there is a need to consider the protection mechanisms that assure that data or information has not been altered in an unauthorized manner or by an unauthorized person or process, and the mechanisms need to be incorporated into the overall makeup of the software.

3.5.2.4 Code Signing

Code signing is the process of digitally signing the code (executables, scripts, etc.) with the digital signature of the code author. In most cases, code signing is implemented using private and public key systems and digital signatures. Each time code is built, it can be signed or code can be signed just before deployment. Developers can generate their own key or use a key that is issued by a trusted CA for signing their code. When developers do not have access to the key for signing their code, they can sign it at a later phase of the development life cycle, just before deployment, and this is referred to as delayed signing. Delayed signing allows development to continue. When code is signed using the code author’s digital signature, a cryptographic hash of that code is generated. This hash is published along with the software when it is distributed. Any alteration of the code will result in a hash value that will no longer match the hash value that was published. This is how code signing assures integrity and antitampering.

Code signing is particularly important when it comes to mobile code. Mobile code is code that is downloaded from a remote location. Examples of mobile code include Java applets, ActiveX components, browser scripts, Adobe Flash, and other Web controls. The source of the mobile code may not be obvious. In such situations, code signing can be used to assure the proof of origin or its authenticity. Signing mobile code also gives the runtime (not the code itself) permission to access system resources and ensures the safety of the code by sandboxing. Additionally, code signing can be used to ensure that there are no namespace conflicts and to provide versioning information when the software is deployed.

3.5.3 Availability Design

When software requirements mandate the need for continued business operations, the software should be carefully designed. The output from the business impact analysis can be used to determine how to design the software. Special considerations need to be given to software and data replication so that the MTD and the RTO are both within acceptable levels. Destruction and DoS protection can be achieved by proper coding/implementation of the software. Although no code is written in the design phase, in the software design, coding and configuration requirements such as connection pooling, memory management, database cursors, and loop constructions can be looked at. Connection pooling is a database access efficiency mechanism. A connection pool is the number of connections that are cached by the database for reuse. When your software needs to support a large number of users, the appropriate number of connection pools should be configured. If the number of connection pools is low in a highly transactional environment, then the database will be under heavy workload, experiencing performance issues that can possibly lead to DoS. Once a connection is opened, it can be placed in the pool so that other users can reuse that connection, instead of opening and closing a connection for each user. This will increase performance, but security considerations should be taken into account and this is why designing the software from a security (availability) perspective is necessary. Memory leaks can occur if the default query processing, thread limits are not optimized, or because of bad programming; so pertinent considerations of how memory will be managed need to be accounted for in design. Once the processes are terminated, allocated memory resources must be released. This is known as garbage collection and is another important design consideration. Coding constructs that use incorrect cursors and infinite loops can lead to deadlocks and DoS. When these constructs are properly designed, availability assurance is increased.

3.5.4 Authentication Design

When designing for authentication, it is important to consider multifactor authentication and single sign-on (SSO), in addition to determining the type of authentication required as specified in the requirements documentation. Multifactor or the use of more than one factor to authenticate a principal (user or resource) provides heightened security and is recommended. For example, validating and verifying one’s fingerprint (something you are) in conjunction with a token (something you have) and pin code (something you know) before granting access provides more defense in depth than merely using a username and password (something you know). Additionally, if there is a need to implement SSO, wherein the principal’s asserted identity is verified once and the verified credentials are passed on to other systems or applications, usually using tokens, then it is crucial to factor into the design of the software both the performance impact and its security. Although SSO simplifies credential management and improves user experience and performance because the principal’s credential is verified only once, improper design of SSO can result in security breaches that have colossal consequences. A breach at any point in the application flow can lead to total compromise, akin to losing the keys to the kingdom. SSO is covered in more detail in the technology section of this chapter.

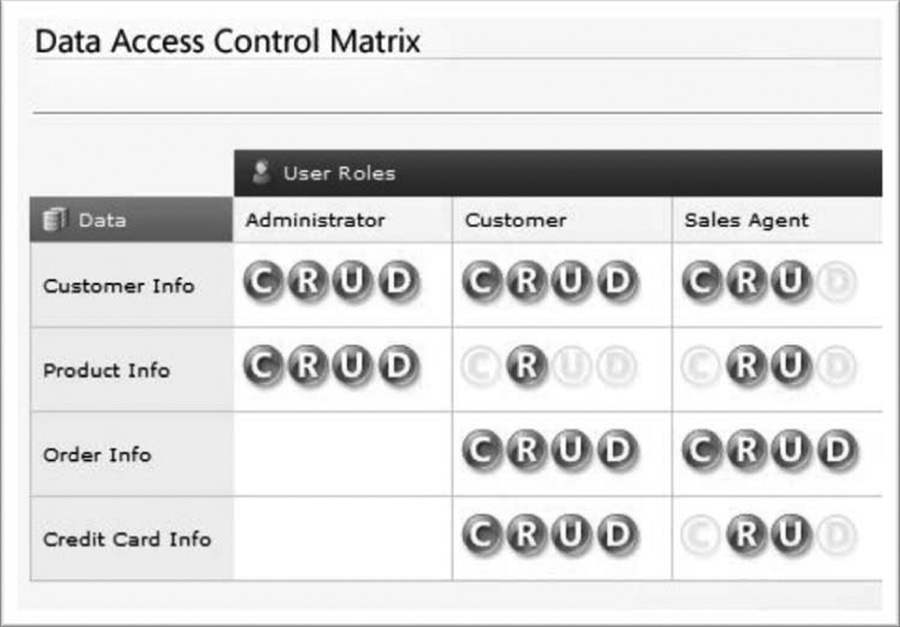

3.5.5 Authorization Design

When designing for authorization, give special attention to the impact on performance and to the principles of separation of duties and least privilege. The type of authorization to be implemented as per the requirements must be determined as well. Are you going to use roles or will we need to use a resource-based authorization, such as a trusted subsystem with impersonation and delegation model, to manage the granting of access rights? Checking for access rights each and every time, as per the principle of complete mediation, can lead to performance degradation and decreased user experience. On the contrary, a design that calls for caching of verified credentials that are used for access decisions can become the Achilles’ heel from a security perspective. When dealing with performance versus security trade-off decisions, it is recommended to err on the side of caution, allowing for security over performance. However, this decision is one that needs to be discussed and approved by the business.

When roles are used for authorization, design should ensure that there are no conflicting roles that circumvent the separation of duties principle. For example, a user cannot be in a teller role and also in an auditor role for a financial transaction. Additionally, design decisions are to ensure that only the minimum set of rights is granted explicitly to the user or resource, thereby supporting least privilege. For example, users in the “Guest” or “Everyone” group account should be allowed only read rights and any other operation should be disallowed.

3.5.6 Auditing/Logging Design

Although it is often overlooked, design for auditing has been proven to be extremely important in the event of a breach, primarily for forensic purposes, and so it should be factored into the software design from the very beginning. Log data should include the who, what, where, and when aspects of software operations. As part of the “who,” it is important not to forget the nonhuman actors such as batch processes and services or daemons.

It is advisable to log by default and to leverage the existing logging functionality within the software, especially if it is commercial off-the-shelf (COTS) software. Because it is a best practice to append to logs and not overwrite them, capacity constraints and requirements are important design considerations. Design decisions to retain, archive, and dispose logs should not contradict external regulatory or internal retention requirements.

Sensitive data should never be logged in plaintext form. Say that the requirements call for logging failed authentication attempts. Then it is important to verify with the business if there is a need to log the password that is supplied when authentication fails. If requirements explicitly call for logging the password upon failed authentication, then it is important to design the software so that the password is not logged in plaintext. Users often mistype their passwords and logging this information can lead to potential confidentiality violation and account compromise. For example, if the software is designed to log the password in plaintext and user Scott whose password is “tiger” mistypes it as “tigwr,” someone who has access to the logs can easily guess the password of the user.

Design should also factor in protection mechanisms of the log itself; and maintaining the chain of custody of the logs will ensure that the logs are admissible in court. Validating the integrity of the logs can be accomplished by hashing the before and after images of the logs and checking their hash values. Auditing in conjunction with other security controls such as authentication can provide nonrepudiation. It is preferable to design the software to automatically log the authenticated principal and system timestamp and not let it be user-defined to avoid potential integrity issues. For example, using the Request.ServerVariables[LOGON_USER] in an IIS Web application or the T-SQL in-built getDate() system function in SQL Server is preferred over passing a user-defined principal name or timestamp.

We have learned about how to design software incorporating core security elements of confidentiality, integrity, availability, authentication, authorization, and auditing.

3.6 Information Technology Security Principles and Secure Design

Special Publication 800-27 of the NIST, which is entitled, “Engineering principles for information technology security (a baseline for achieving security),” provides various IT security principles as listed below. Some of these principles are people oriented, whereas others are tied to the process for designing security in IT systems.

- Establish a sound security policy as the “foundation” for design.

- Treat security as an integral part of the overall system design.

- Clearly delineate the physical and logical security boundaries governed by associated security policies.

- Reduce risk to an acceptable level.

- Assume that external systems are insecure.

- Identify potential trade-offs between reducing risk and increased costs and decreases in other aspects of operational effectiveness.

- Implement layered security. (Ensure no single point of vulnerability.)

- Implement tailored, system security measures to meet organizational security goals.

- Strive for simplicity.

- Design and operate an IT system to limit vulnerability and to be resilient in response.

- Minimize the system elements to be trusted.

- Implement security through a combination of measures distributed physically and logically.

- Provide assurance that the system is, and continues to be, resilient in the face of expected threats.

- Limit or contain vulnerabilities.

- Formulate security measures to address multiple, overlapping, information domains.

- Isolate public access systems from mission critical resources (e.g., data, processes, etc.).

- Use boundary mechanisms to separate computing systems and network infrastructures.

- Where possible, base security on open standards for portability and interoperability.

- Use common language in developing security requirements.

- Design and implement audit mechanisms to detect unauthorized use and to support incident investigations.

- Design security to allow for regular adoption of new technology, including a secure and logical technology upgrade process.

- Authenticate users and processes to ensure appropriate, access control decisions both within and across domains.

- Use unique identities to ensure accountability.

- Implement least privilege.

- Do not implement unnecessary security mechanisms.

- Protect information while it is processed, in transit, and in storage.

- Strive for operational ease of use.

- Develop and exercise contingency or disaster recovery procedures to ensure appropriate availability.

- Consider custom products to achieve adequate security.

- Ensure proper security in the shutdown or disposal of a system.

- Protect against all likely classes of attacks.

- Identify and prevent common errors and vulnerabilities.

- Ensure that developers are trained in how to develop secure software.

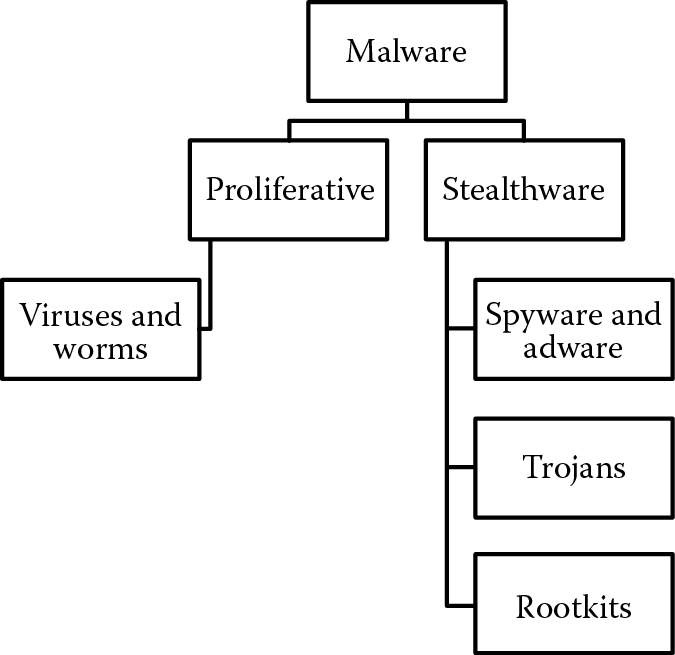

Some of the common, insecure design issues observed in software are the following:

- Not following coding standards

- Improper implementation of least privilege

- Software fails insecurely

- Authentication mechanisms easily bypassed

- Security through obscurity

- Improper error handling

- Weak input validation

3.7 Designing Secure Design Principles

In the following section we will look at some of the IT engineering security principles that are pertinent to software design. The following principles were introduced and defined in Chapter 1. It is revisited here as a refresher and discussed in more depth with examples.

3.7.1 Least Privilege

Although the principle of least privilege is more applicable to administering a system where the number of users with access to critical functionality and controls is restricted, least privilege can be implemented within software design. When software is said to be operating with least privilege, it means that only the necessary and minimum level of access rights (privileges) has been given explicitly to it for a minimum amount of time in order for it to complete its operation. The main objective of least privilege is containment of the damage that can result from a security breach that occurs accidentally or intentionally. Some of the examples of least privilege include the military security rule of “need-to-know” clearance level classification, modular programming, and nonadministrative accounts.

The military security rule of need-to-know limits the disclosure of sensitive information to only those who have been authorized to receive such information, thereby aiding in confidentiality assurance. Those who have been authorized can be determined from the clearance level classifications they hold, such as Top Secret, Secret, Sensitive but Unclassified, etc. Best practice also suggests that it is preferable to have many administrators with limited access to security resources instead of one user with “super user” rights.

Modular programming is a software design technique in which the entire program is broken down into smaller subunits or modules. Each module is discrete with unitary functionality and is said to be therefore cohesive, meaning each module is designed to perform one and only one logical operation. The degree of how cohesive a module is indicates the strength at which various responsibilities of a software module are related. The discreetness of the module increases its maintainability and the ease of determining and fixing software defects. Because each unit of code (class, method, etc.) has a single purpose and the operations that can be performed by the code is limited to only that which it is designed to do, modular programming is also referred to as the Single Responsibility Principle of software engineering. For example, the function CalcDiscount() should have the single responsibility to calculate the discount for a product, while the CalcSH() function should be exclusively used to calculate shipping and handling rates. When code is not designed modularly, not only does it increase the attack surface, but it also makes the code difficult to read and troubleshoot. If there is a requirement to restrict the calculation of discounts to a sales manager, not separating this functionality into its own function, such as CalcDiscount(), can lead potentially to a nonsales manager’s running code that is privileged to a sales manager. An aspect related to cohesion is coupling. Coupling is a reflection of the degree of dependencies between modules, i.e., how dependent one module is to another. The more dependent one module is to another, the higher its degree of coupling, and “loosely coupled modules” is the condition where the interconnections among modules are not rigid or hardcoded.

Good software engineering practices ensure that the software modules are highly cohesive and loosely coupled at the same time. This means that the dependencies between modules will be weak (loosely coupled) and each module will be responsible to perform a discrete function (highly cohesive).

Modular programming thereby helps to implement least privilege, in addition to making the code more readable, reusable, maintainable, and easy to troubleshoot.

The use of accounts with nonadministrative abilities also helps implement least privilege. Instead of using the “sa” or “sysadmin” account to access and execute database commands, using a “datareader” or “datawriter” account is an example of least privilege implementation.

3.7.2 Separation of Duties

When design compartmentalizes software functionality into two or more conditions, all of which need to be satisfied before an operation can be completed, it is referred to as separation of duties. The use of split keys for cryptographic functionality is an example of separation of duties in software. Keys are needed for encryption and decryption operations. Instead of storing a key in a single location, splitting a key and storing the parts in different locations, with one part in the system’s registry and the other in a configuration file, provides more security. Software design should factor in the locations to store keys, as well as the mechanisms to protect them.

Another example of separation of duties in software development is related to the roles that people play during its development and the environment in which the software is deployed. The programmer should not be allowed to review his own code nor should a programmer have access to deploy code to the production environment. We will cover in more detail the separation of duties based on the environment in the configuration section of Chapter 7.

When architected correctly, separation of duties reduces the extent of damage that can be caused by one person or resource. When implemented in conjunction with auditing, it can also discourage insider fraud, as it will require collusion between parties to conduct fraud.

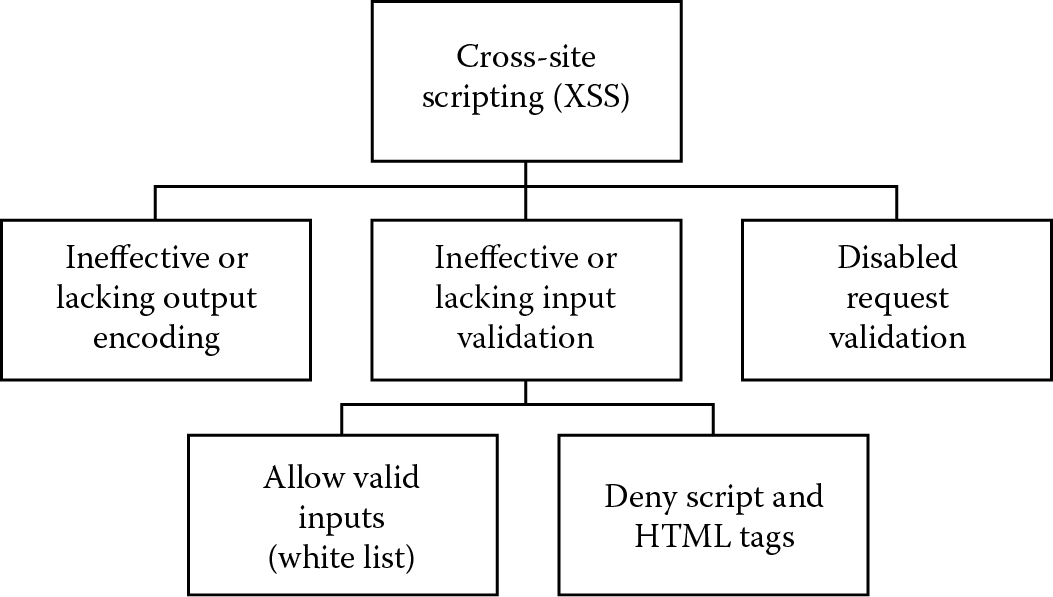

3.7.3 Defense in Depth

Layering security controls and risk mitigation safeguards into software design incorporates the principle of defense in depth. This is also referred to as layered defense. The reasons behind this principle are twofold, the first of which is that the breach of a single vulnerability in the software does not result in complete or total compromise. In other words, defense in depth is akin to not putting all the eggs in one basket. Second, incorporating the defense of depth in software can be used as a deterrent for the curious and nondetermined attackers when they are confronted with one defensive measure over another.

Some examples of defense in depth measures are

- Use of input validation along with prepared statements or stored procedures, disallowing dynamic query constructions using user input to defend against injection attacks

- Disallowing Cross-Site Scripting active scripting in conjunction with output encoding and input or request validation to defend against (XSS)

- The use of security zones, which separates the different levels of access according to the zone that the software or person is authorized to access

3.7.4 Fail Secure

Fail secure is the security principle that ensures that the software reliably functions when attacked and is rapidly recoverable into a normal business and secure state in the event of design or implementation failure. It aims at maintaining the resiliency (confidentiality, integrity, and availability) of software by defaulting to a secure state. Fail secure is primarily an availability design consideration, although it provides confidentiality and integrity protection as well. It supports the design and default aspects of the SD3 initiative, which implies that the software or system is secure by design, secure by default, and secure by deployment. In the context of software security, “fail secure” can be used interchangeably with “fail safe,” which is commonly observed in physical security.

Some examples of fail secure design in software include the following:

- The user is denied access by default and the account is locked out after the maximum number (clipping level) of access attempts is tried.

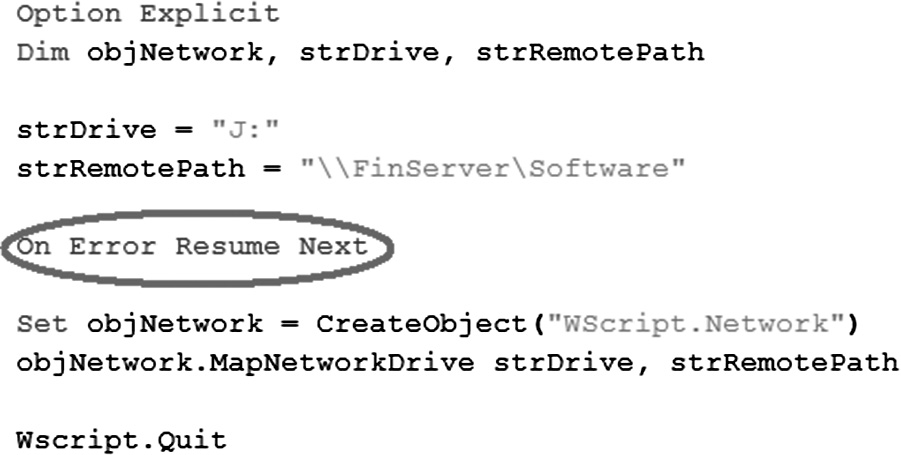

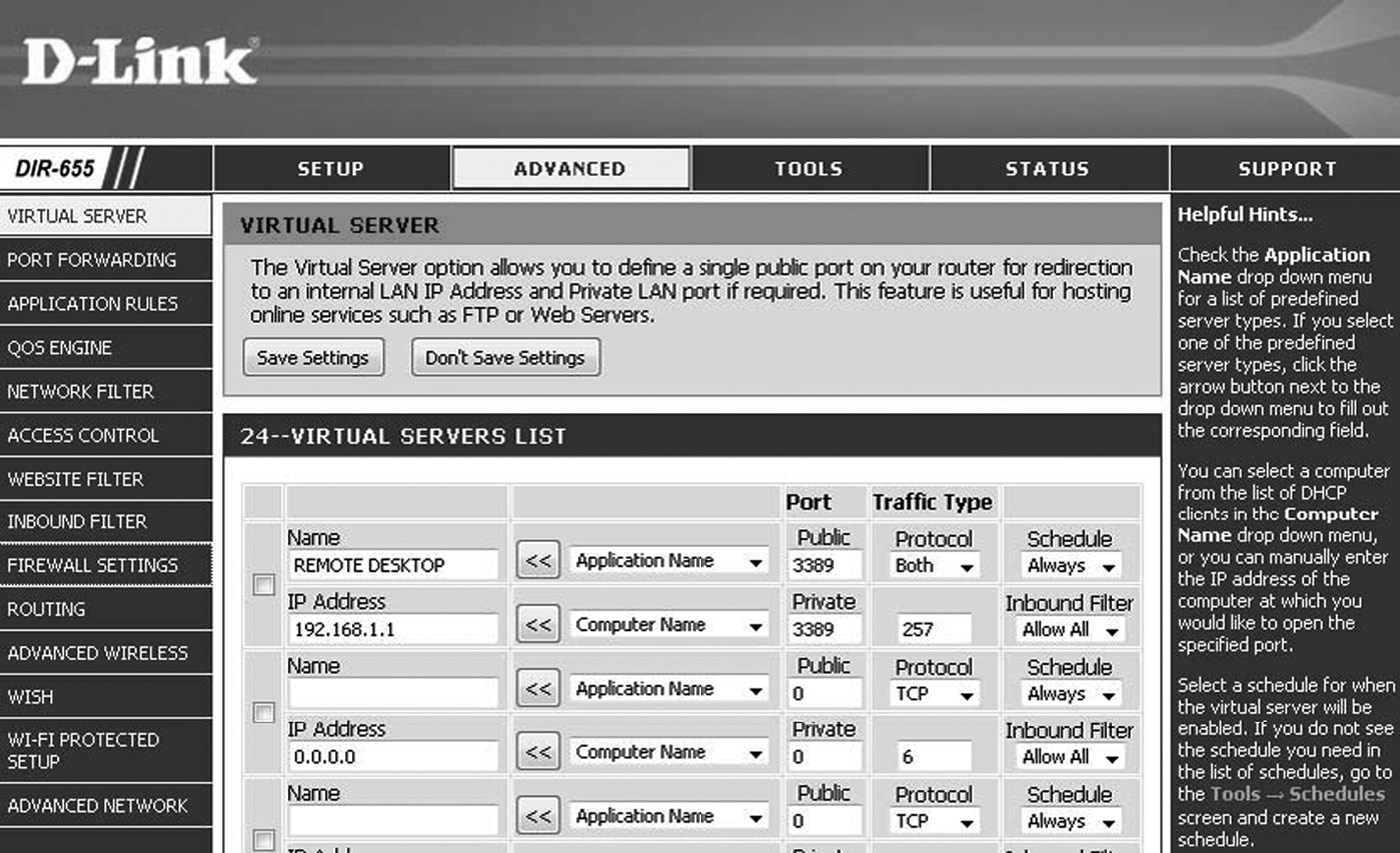

- Not designing the software to ignore the error and resume next operation. The On Error Resume Next functionality in scripting languages such as VBScript as depicted in Figure 3.11.

- Errors and exceptions are explicitly handled and the error messages are nonverbose in nature. This ensures that system exception information, along with the stack trace, is not bubbled up to the client in raw form, which an attacker can use to determine the internal makeup of the software and launch attacks accordingly to circumvent the security protection mechanisms or take advantage of vulnerabilities in the software. Secure software design will take into account the logging of the error content into a support database and the bubbling up of only a reference value (such as error ID) to the user with instructions to contact the support team for additional support.

3.7.5 Economy of Mechanisms

In Chapter 1, we noted that one of the challenges to the implementation of security is the trade-off that happens between the usability of the software and the security features that need to be designed and built in. With the noble intention of increasing the usability of software, developers often design and code in more functionality than is necessary. This additional functionality is commonly referred to as “bells-and-whistles.” A good indicator of which features in the software are unneeded bells-and-whistles is reviewing the requirements traceability matrix (RTM) that is generated during the requirements gathering phase of the software development project. Bells-and-whistles features will never be part of the RTM. While such added functionality may increase user experience and usability of the software, it increases the attack surface and is contrary to the economy of mechanisms, secure design principle, which states that the more complex the design of the software, the more likely there are vulnerabilities. Simpler design implies easy-to-understand programs, decreased attack surface, and fewer weak links. With a decreased attack surface, there is less opportunity for failure and when failures do occur, the time needed to understand and fix the issues is less, as well. Additional benefits of economy of mechanisms include ease of understanding program logic and data flows and fewer inconsistencies. Economy of mechanism in layman’s terms is also referred to as the KISS (Keep It Simple Stupid) principle and in some instances as the principle of unnecessary complexity. Modular programming not only supports the principle of least privilege but also supports the principle of economy of mechanisms.

Taken into account, the following considerations support the designing of software with the economies of mechanisms principle in mind:

- Unnecessary functionality or unneeded security mechanisms should be avoided. Because patching and configuration of newer software versions has been known to security features that were disabled in previous versions, it is advisable to not even design unnecessary features, instead of designing them and leaving the features in a disabled state.

- Strive for simplicity. Keeping the security mechanisms simple ensures that the implementation is not partial, which could result in compatibility issues. It is also important to model the data to be simple so that the data validation code and routines are not overly complex or incomplete. Supporting complex, regular expressions for data validation can result in algorithmic complexity weaknesses as stated in the Common Weakness Enumeration publication 407 (CWE-407).

- Strive for operational ease of use. SSO is a good example that illustrates the simplification of user authentication so that the software is operationally easy to use.

3.7.6 Complete Mediation

In the early days of Web application programming, it was observed that a change in the value of a QueryString parameter would display the result that was tied to the new value without any additional validation. For example, if Pamela is logged in, and the Uniform Resource Locator (URL) in the browser address bar shows the name value pair, user=pamela, changing the value “pamela” to “reuben” would display Reuben’s information without validating that the logged-on user is indeed Reuben. If Pamela changes the parameter value to user=reuben, she can view Reuben’s information, potentially leading to attacks on confidentiality, wherein Reuben’s sensitive and personal information is disclosed to Pamela.

While this is not as prevalent today as it used to be, similar design issues are still evident in software. Not checking access rights each time a subject requests access to objects violates the principle of complete mediation. Complete mediation is a security principle that states that access requests need to be mediated each time, every time, so that authority is not circumvented in subsequent requests. It enforces a system-wide view of access control. Remembering the results of the authority check, as is done when the authentication credentials are cached, can increase performance; however, the principle of complete mediation requires that results of an authority check be examined skeptically and systematically updated upon change. Caching can therefore lead to an increased security risk of authentication bypass, session hijacking and replay attacks, and man-in-the-middle (MITM) attacks. Therefore, designing the software to rely solely on client-side, cookie-based caching of authentication credentials for access should be avoided, if possible.

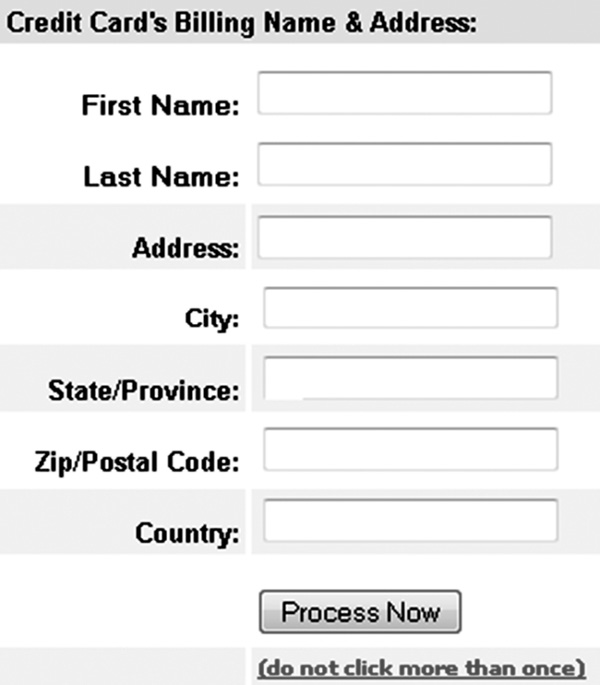

Complete mediation not only protects against authentication threats and confidentiality threats but is also useful in addressing the integrity aspects of software, as well. Not allowing browser postbacks without validation of access rights, or checking that a transaction is currently in a state of processing, can protect against the duplication of data, avoiding data integrity issues. Merely informing the user to not click more than once, as depicted in Figure 3.12, is not foolproof and so design should include the disabling of user controls once a transaction is initiated until the transaction is completed.

The complete mediation design principle also addresses the failure to protect alternate path vulnerability. To properly implement complete mediation in software, it is advisable during the design phase of the SDLC to identify all possible code paths that access privileged and sensitive resources. Once these privileged code paths are identified, then the design must force these code paths to use a single interface that performs access control checks before performing the requested operation. Centralizing input validation by using a single input validation layer with a single, input filtration checklist for all externally controlled inputs is an example of such design. Alternatively, using an external input validation framework that validates all inputs before they are processed by the code may be considered when designing the software.

Complete mediation also augments the protection against the weakest link. Software is only as strong as its weakest component (code, service, interface, or user). It is also important to recognize that any protection that technical safeguards provide can be rendered futile if people fall prey to social engineering attacks or are not aware of how to use the software. The catch 22 is that people who are the first line of defense in software security can also become the weakest link, if they are not made aware, trained, and educated in software security.

3.7.7 Open Design

Dr. Auguste Kerckhoff, who is attributed with giving us the cryptographic Kerckhoff ’s principle, states that all information about the crypto system is public knowledge except the key, and the security of the crypto system against cryptanalysis attacks is dependent on the secrecy of the key. An outcome of Kerckhoff’s principle is the open design principle, which states that the implementation of security safeguards should be independent of the design, itself, so that review of the design does not compromise the protection the safeguards offer. This is particularly applicable in cryptography where the protection mechanisms are decoupled from the keys that are used for cryptographic operations and algorithms used for encryption and decryption are open and available to anyone for review.

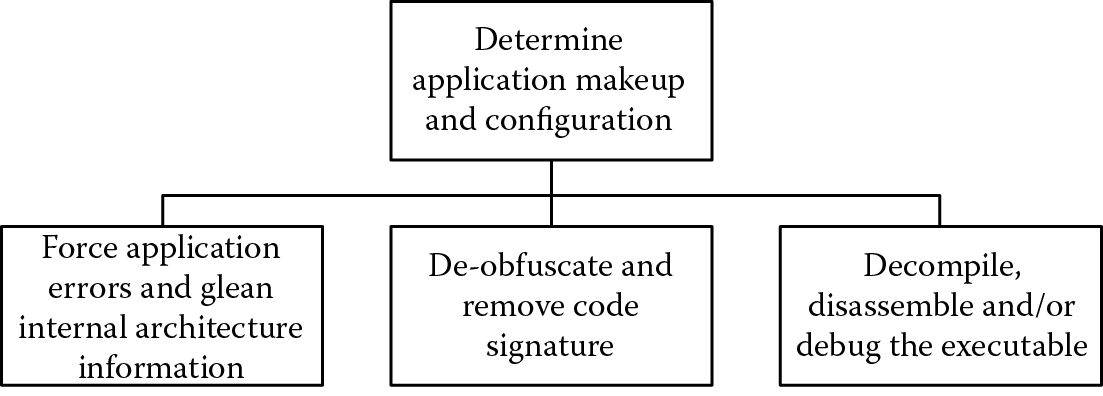

The inverse of the open design principle is security through obscurity, which means that the software employs protection mechanisms whose strength is dependent on the obscurity of the design, so much so that the understanding of the inner workings of the protection mechanisms is all that is necessary to defeat the protection mechanisms. A classic example of security through obscurity, which must be avoided if possible, is the hard coding and storing of sensitive information, such as cryptographic keys, or connection strings information with username and passwords inline code, or executables. Reverse engineering, binary analysis of executables, and runtime analysis of protocols can reveal these secrets. Review of the Diebold voting machines code revealed that passwords were embedded in the source code, cryptographic implementation was incorrect, the design allowed voters to vote an unlimited number of times without being detected, privileges could be escalated, and insiders could change a voter’s ballot choice, all of which could have been avoided if the design was open for review by others. Another example of security through obscurity is the use of hidden form fields in Web applications, which affords little, if any protection against disclosure, as they can be processed using a modified client.

Software design should therefore take into account the need to leave the design open but keep the implementation of the protection mechanisms independent of the design. Additionally, while security through obscurity may increase the work factor needed by an attacker and provide some degree of defense in-depth, it should not be the sole and primary security mechanism in the software. Leveraging publicly vetted, proven, tested industry standards, instead of custom developing one’s own protection mechanism, is recommended. For example, encryption algorithms, such as the Advanced Encryption Standard (AES) and Triple Data Encryption Standard (3DES), are publicly vetted and have undergone elaborate security analysis, testing, and review by the information security community. The inner workings of these algorithms are open to any reviewer, and public review can throw light on any potential weaknesses. The key that is used in the implementation of these proven algorithms is what should be kept secret.

Some of the fundamental aspects of the open design principle are as follows:

- The security of your software should not be dependent on the secrecy of the design.

- Security through obscurity should be avoided.

- The design of protection mechanisms should be open for scrutiny by members of the community, as it is better for an ally to find a security vulnerability or flaw than it is for an attacker.

3.7.8 Least Common Mechanisms

Least common mechanisms is the security principle by which mechanisms common to more than one user or process are designed not to be shared. Because shared mechanisms, especially those involving shared variables, represent a potential information path, mechanisms that are common to more than one user and depended on by all users are to be minimized. Design should compartmentalize or isolate the code (functions) by user roles, because this increases the security of the software by limiting the exposure. For example, instead of having one function or library that is shared between members with supervisor and nonsupervisor roles, it is recommended to have two distinct functions, each serving its respective role.

3.7.9 Psychological Acceptability

One of the primary challenges in getting users to adopt security is that they feel that security is usually very complex. With a rise in attacks on passwords, many organizations resolved to implement strong password rules, such as the need to have mixed-case, alphanumeric passwords that are to be of a particular length. Additionally, these complex passwords are often required to be periodically changed. While this reduced the likelihood of brute-forcing or guessing passwords, it was observed that the users had difficulty remembering complex passwords. Therefore they nullified the effect that the strong password rules brought by jotting down their passwords and sticking them under their desks and, in some cases, even on their computer screens. This is an example of security protection mechanisms that were not psychologically acceptable and hence not effective.

Psychological acceptability is the security principle that states that security mechanisms should be designed to maximize usage, adoption, and automatic application.

A fundamental aspect of designing software with the psychological acceptability principle is that the security protection mechanisms

- Are easy to use

- Do not affect accessibility

- Are transparent to the user

Users should not be additionally burdened as a result of security, and the protection mechanisms must not make the resource more difficult to access than if the security mechanisms were not present. Accessibility and usability should not be impeded by security mechanisms because users will elect to turn off or circumvent the mechanisms, thereby neutralizing or nullifying any protection that is designed.

Examples of incorporating the psychological acceptability principle in software include designing the software to notify the user through explicit error messages and callouts as depicted in Figure 3.13, message box displays and help dialogs, and intuitive user interfaces.

3.7.10 Leveraging Existing Components

Service-oriented architecture (SOA) is prevalent in today’s computing environment, and one of the primary aspects for its popularity is the ability it provides for communication between heterogeneous environments and platforms. Such communication is possible because the SOA protocols are understandable by disparate platforms, and business functionality is abstracted and exposed for consumption as contract-based, application programming interfaces (APIs). For example, instead of each financial institution writing its own currency conversion routine, it can invoke a common, currency conversion, service contract. This is the fundamental premise of the leveraging existing components design principle. Leveraging existing components is the security principle that promotes the reusability of existing components.

A common observance in security code reviews is that developers try to write their own cryptographic algorithms instead of using validated and proven cryptographic standards such as AES. These custom implementations of cryptographic functionality are also determined often to be the weakest link. Leveraging proven and validated cryptographic algorithms and functions is recommended.

Designing the software to scale using tier architecture is advisable from a security standpoint, because the software functionality can be broken down into presentation, business, and data access tiers. The use of a single data access layer (DAL) to mediate all access to the backend data stores not only supports the principle of leveraging existing components but also allows for scaling to support various clients or if the database technology changes. Enterprise application blocks are recommended over custom developing shared libraries and controls that attempt to provide the same functionality as the enterprise application blocks.

Reusing tested and proven, existing libraries and common components has the following security benefits. First, the attack surface is not increased, and second, no newer vulnerabilities are introduced. An ancillary benefit of leveraging existing components is increased productivity because leveraging existing components can significantly reduce development time.

3.8 Balancing Secure Design Principles

It is important to recognize that it may not be possible to design for each of these security principles in totality within your software, and trade-off decisions about the extent to which these principles can be designed may be necessary. For example, although SSO can heighten user experience and increase psychological acceptability, it contradicts the principle of complete mediation and so a business decision is necessary to determine the extent to which SSO is designed into the software or to determine that it is not even an option to consider. SSO design considerations should also take into account the need to ensure that there is no single point of failure and that appropriate, defense in-depth mitigation measures are undertaken. Additionally, implementing complete mediation by checking access rights and privileges, each and every time, can have a serious impact on the performance of the software. So this design aspect needs to be carefully considered and factored in, along with other defense in depth strategies, to mitigate vulnerability while not decreasing user experience or psychological acceptability. The principle of least common mechanism may seem contradictory to the principle of leveraging existing components and so careful design considerations need to be given to balance the two, based on the business needs and requirements, without reducing the security of the software. While psychological acceptability would require that the users be notified of user error, careful design considerations need to be given to ensure that the errors and exceptions are explicitly handled and nonverbose in nature so that internal, system configuration information is not revealed. The principle of least common mechanisms may seem to be diametrically opposed to the principle of leveraging existing components, and one may argue that centralizing functionality in business components that can be reused is analogous to putting all the eggs in one basket, which is true. However, proper defense in depth strategies should be factored into the design when choosing to leverage existing components.

3.9 Other Design Considerations

In addition to the core software security design considerations covered earlier, there are other design considerations that need to be taken into account when building software. These include the following:

- Programming language

- Data type, format, range, and length

- Database security

- Interface

- Interconnectivity

We will cover each of these considerations.

3.9.1 Programming Language

Before writing a single line of code, it is pivotal to determine the programming language that will be used to implement the design, because a programming language can bring with it inherent risks or security benefits. In organizations with an initial level of capability maturity, developers tend to choose the programming language that they are most familiar with or one that is popular and new. It is best advised to ensure that the programming language chosen is one that is part of the organization’s technology or coding standard, so that the software that is produced can be universally supported and maintained.

The two main types of programming languages in today’s world can be classified into unmanaged or managed code languages. Common examples of unmanaged code are C/C++ while Java and all .NET programming languages, which include C# and VB.Net, are examples of managed code programming languages.

Unmanaged code programming languages are those that have the following characteristics:

- The execution of the code is not managed by any runtime execution environment but is directly executed by the operating system. This makes execution relatively faster.

- Is compiled to native code that will execute only on the processor architecture (X86 or X64) against which it is compiled.

- Memory allocation is not managed and pointers in memory addresses can be directly controlled, which makes these programming languages more susceptible to buffer overflows and format string vulnerabilities that can lead to arbitrary code execution by overriding memory pointers.

- Requires developers to write routines to handle memory allocation, check array bounds, handle data type conversions explicitly, force garbage collection, etc., which makes it necessary for the developers to have more programming skills and technical capabilities.

Managed code programming languages, on the other hand, have the following characteristics:

- Execution of the code is not by the operating system directly, but instead, it is by a managed runtime environment. Because the execution is managed by the runtime environment, security and nonsecurity services such as memory management, exception handling, bounds checking, garbage collection, and type safety checking can be leveraged from the runtime environment and security checks can be asserted before the code executes. These additional services can cause the code to execute considerably slower than the same code written in an unmanaged code programming language. The managed runtime environment in the .NET Framework is the Common Language Runtime (CLR).

- Is not directly compiled into native code but is compiled into an Intermediate Language (IL) and an executable is created. When the executable is run, the Just-in-Time (JIT) compiler of the CLR compiles the IL into native code that the computer will understand. This allows for platform independence, as the JIT compiler handles the compilation of the IL into native code that is processor architecture specific. The Common Language Infrastructure (CLI) Standard provides encoding information and description on how the programming languages emit the appropriate encoding when compiled.

- Because memory allocation is managed by the runtime environment, buffer overflows and format string vulnerabilities are mitigated considerably.

- Time to develop software is relatively shorter because most memory management, exception handling, bounds checking, garbage collection, and type safety checking are automatically handled by the runtime environment. Type safety means that the code is not allowed to access memory locations outside its bounds if it is not authorized to do so. This also means that implicit casting of one data type to another, which can lead to errors and exceptions, is not allowed. Type safety plays a critical role in isolating assemblies and security enforcement. It is provided by default to managed code programming languages, making it an important consideration when choosing between an unmanaged or managed code programming language.