Chapter 5. Scale-Free Networks

Zipf’s Law

Zipf’s law describes a relationship between the frequencies and ranks of words in natural languages; see http://en.wikipedia.org/wiki/Zipf%27s_law. The “frequency” of a word is the number of times it appears in a body of work. The “rank” of a word is its position in a list of words sorted by frequency. The most common word has rank 1, the second most common has rank 2, etc.

Specifically, Zipf’s Law predicts that the frequency, f, of the word with rank r is:

where s and c are parameters that depend on the language and the text.

If you take the logarithm of both sides of this equation, you get:

So if you plot ![]() versus

versus ![]() , you should get a straight line with

slope

, you should get a straight line with

slope ![]() and intercept

and intercept ![]() .

.

Write a program that reads a text from a file, counts word

frequencies, and prints one line for each word in descending order of

frequency. You can test it by downloading an out-of-copyright book in

plain text format from http://gutenberg.org. You might want to

remove punctuation first.

If you need some help getting started, you can download http://thinkcomplex.com/Pmf.py, which provides an

object named Hist that maps from

value to frequencies.

Plot the results and check whether they form a straight line. For plotting suggestions, see pyplot. Can you estimate the value of s?

You can download my solution from http://thinkcomplex.com/Zipf.py.

Cumulative Distributions

A distribution is a statistical description of a set of values. For example, if you collect the population of every city and town in the U.S., you would have a set of about 14,000 integers.

The simplest description of this set is a list of numbers, which would be complete but not very informative. A more concise description is a statistical summary like the mean and variation, but that is not a complete description because there are many sets of values with the same summary statistics.

One alternative is a histogram, which divides the range of possible values into “bins” and counts the number of values that fall in each bin. Histograms are common, so they are easy to understand, but it is tricky to get the bin size right. If the bins are too small, the number of values in each bin is also small, so the histogram doesn’t give much insight. If the bins are too large, they lump together a wide range of values, which obscures details that might be important.

A better alternative is a cumulative

distribution function (CDF), which maps from a value,

x, to the fraction of values less than or equal to

x. If you choose a value at random, ![]() is the probability that the value you get is less

than or equal to x.

is the probability that the value you get is less

than or equal to x.

For a list of n values, the simplest way to

compute CDF is to sort the values. Then the CDF of the

ith value (starting from 1) is ![]() .

.

I have written a class called Cdf that provides functions for creating and

manipulating CDFs. You can download it from http://thinkcomplex.com/Cdf.py.

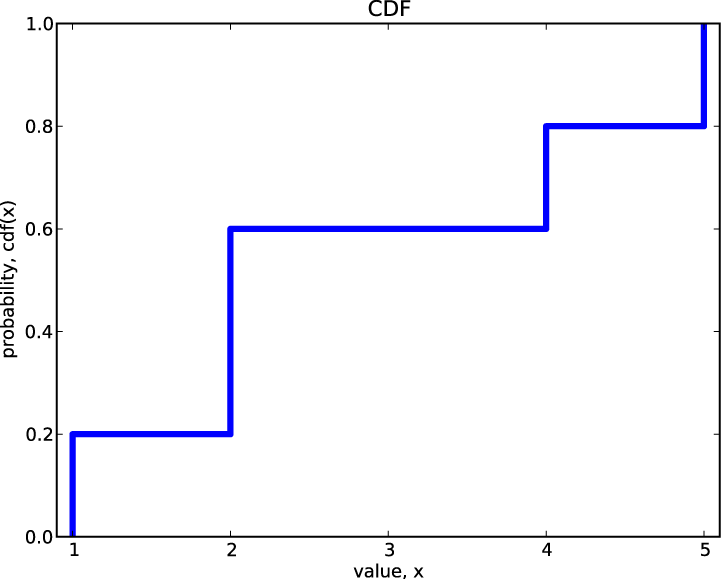

As an example, we’ll compute the CDF for the values {1,2,2,4,5}:

import Cdf cdf = Cdf.MakeCdfFromList([1,2,2,4,5])

MakeCdfFromList can take any

sequence or iterator. Once you have the Cdf, you can find the probability,

![]() , for a given value:

, for a given value:

prob = cdf.Prob(2)

The result is 0.6, because 3/5 of the values are less than or equal to 2. You can also compute the value for a given probability:

value = cdf.Value(0.5)

The value with probability 0.5 is the median, which in this example is 2.

To plot the Cdf, you can use

Render, which returns a list of

value-probability pairs.

xs, ps = cdf.Render()

for x, p in zip(xs, ps):

print x, pThe result is:

1 0.0 1 0.2 2 0.2 2 0.6 4 0.6 4 0.8 5 0.8 5 1.0

Each value appears twice. That way when we plot the CDF, we get a stair-step pattern.

import matplotlib.pyplot as pyplot

xs, ps = cdf.Render()

pyplot.plot(xs, ps, linewidth=3)

pyplot.axis([0.9, 5.1, 0, 1])

pyplot.title('CDF')

pyplot.xlabel('value, x')

pyplot.ylabel('probability, CDF(x)')

pyplot.show()Figure 5-1 shows the CDF for the values {1,2,2,4,5}.

I drew vertical lines at each of the values, which is not mathematically correct. To be more rigorous, I should draw a discontinuous function.

Continuous Distributions

The distributions we have seen so far are sometimes called empirical distributions because they are based on a dataset that comes from some kind of empirical observation.

An alternative is a continuous distribution, which is characterized by a CDF that is a continuous function. Some of these distributions, like the Gaussian or normal distribution, are well known, at least to people who have studied statistics. Many real-world phenomena can be approximated by continuous distributions, which is why they are useful.

For example, if you observe a mass of radioactive material with an instrument that can detect decay events, the distribution of times between events will most likely fit an exponential distribution. The same is true for any series where an event is equally likely at any time.

The CDF of the exponential distribution is:

The parameter, ![]() , determines the mean and variance of the

distribution. This equation can be used to derive a simple visual test

for whether a dataset can be well approximated by an exponential

distribution. All you have to do is plot the complementary distribution on a

log-y scale.

, determines the mean and variance of the

distribution. This equation can be used to derive a simple visual test

for whether a dataset can be well approximated by an exponential

distribution. All you have to do is plot the complementary distribution on a

log-y scale.

The complementary distribution (CCDF) is just ![]() ; if you plot the complementary distribution of a

dataset that you think is exponential, you expect to see a function like

this:

; if you plot the complementary distribution of a

dataset that you think is exponential, you expect to see a function like

this:

If you take the log of both sides of this equation, you get:

So on a log-y scale, the CCDF should look

like a straight line with slope ![]() .

.

Write a function called plot_ccdf that takes a list of values and the

corresponding list of probabilities and plots the CCDF on a

log-y scale.

To test your function, use expovariate from the random module to generate 100 values from an

exponential distribution. Plot the CCDF on a

log-y scale, and see if it falls on a straight

line.

Pareto Distributions

The Pareto distribution is named after the economist Vilfredo Pareto, who used it to describe the distribution of wealth; see http://en.wikipedia.org/wiki/Pareto_distribution. Since then, people have used it to describe phenomena in the natural and social sciences, including sizes of cities and towns, of sand particles and meteorites, and of forest fires and earthquakes.

The Pareto distribution is characterized by a CDF with the following form:

The parameters

xm and

![]() determine the location and shape of the

distribution.

xm is

the minimum possible value.

determine the location and shape of the

distribution.

xm is

the minimum possible value.

Values from a Pareto distribution often have these properties:

- Long tail

Pareto distributions contain many small values and a few very large ones.

- 80/20 rule

The large values in a Pareto distribution are so large that they make up a disproportionate share of the total. In the context of wealth, the 80/20 rule says that 20% of the people own 80% of the wealth.

- Scale free

Short-tailed distributions are centered around a typical size, which is called a “scale.” For example, the great majority of adult humans are between 100 and 200 cm in height, so we could say that the scale of human height is a few hundred centimeters. But for long-tailed distributions, there is no similar range (bounded by a factor of two) that contains the bulk of the distribution. So we say that these distributions are “scale-free.”

To get a sense of the difference between the Pareto and Gaussian distributions, imagine what the world would be like if the distribution of human height were Pareto.

In Pareto World, the shortest person is 100 cm, and the median is 150 cm, so that part of the distribution is not very different from ours. But if you generate 6 billion values from this distribution, the tallest person might be 100 km—that’s what it means to be scale-free!

There is a simple visual test that indicates whether an empirical distribution is well-characterized by a Pareto distribution: on a log-log scale, the CCDF looks like a straight line. The derivation is similar to what we saw in the previous section.

The equation for the CCDF is:

Taking the log of both sides yields:

So if you plot ![]() versus

versus ![]() , it should look like a straight line

with slope

, it should look like a straight line

with slope ![]() and intercept

and intercept ![]() .

.

Write a version of plot_ccdf that plots the complementary CCDF on

a log-log scale.

To test your function, use paretovariate from the random module to generate 100 values from a

Pareto distribution. Plot the CCDF on a log-y

scale and see if it falls on a straight line. What happens to the

curve as you increase the number of values?

The distribution of populations for cities and towns has been proposed as an example of a real-world phenomenon that can be described with a Pareto distribution.

The U.S. Census Bureau publishes data on the population of every incorporated city and town in the United States. I wrote a small program that downloads this data and converts it into a convenient form. You can download it from http://thinkcomplex.com/populations.py.

Read over the program to make sure you know what it does, and then write a program that computes and plots the distribution of populations for the 14,593 cities and towns in the dataset.

Plot the CDF on linear and log-x scales so you can get a sense of the shape of the distribution. Then plot the CCDF on a log-log scale to see if it has the characteristic shape of a Pareto distribution.

What conclusion do you draw about the distribution of sizes for cities and towns?

Barabási and Albert

In 1999, Barabási and Albert published a paper in Science called “Emergence of Scaling in Random Networks,” which characterizes the structure (also called “topology”) of several real-world networks, including graphs that represent the interconnectivity of movie actors, World Wide Web (WWW) pages, and elements in the electrical power grid in the western United States. You can download the paper from http://www.sciencemag.org/content/286/5439/509.

They measure the degree (number of connections) of each node and

compute ![]() , the probability that a vertex has

degree k; then they plot

, the probability that a vertex has

degree k; then they plot

![]() versus k on a

log-log scale. The tail of the plot fits a straight line, so they

conclude that it obeys a power law;

that is, as k gets large,

versus k on a

log-log scale. The tail of the plot fits a straight line, so they

conclude that it obeys a power law;

that is, as k gets large,

![]() is asymptotic to

is asymptotic to ![]() , where

, where ![]() is a parameter that determines the

rate of decay.

is a parameter that determines the

rate of decay.

They also propose a model that generates random graphs with the same property. The essential features of the model that distinguish it from the Erdős-Rényi model and the Watts-Strogatz model are:

- Growth

Instead of starting with a fixed number of vertices, Barabási and Albert start with a small graph and add vertices gradually.

- Preferential attachment

When a new edge is created, it is more likely to connect to a vertex that already has a large number of edges. This “rich get richer” effect is characteristic of the growth patterns of some real-world networks.

Finally, they show that graphs generated by this model have a distribution of degrees that obeys a power law. Graphs that have this property are sometimes called scale-free networks; see http://en.wikipedia.org/wiki/Scale-free_network. That name can be confusing because it is the distribution of degrees that is scale-free, not the network.

In order to maximize confusion, distributions that obey the power

law are sometimes called scaling

distributions because they are invariant under a change of

scale. That means that if you change the units in which the quantities

are expressed, the slope parameter, ![]() , doesn’t change. You can read http://en.wikipedia.org/wiki/Power_law for the details,

but it is not important for what we are doing here.

, doesn’t change. You can read http://en.wikipedia.org/wiki/Power_law for the details,

but it is not important for what we are doing here.

This exercise asks you to make connections between the Watts-Strogatz (WS) and Barabási-Albert (BA) models.

Read Barabási and Albert’s paper, and implement their algorithm for generating graphs. See if you can replicate their Figure 2(A), which shows

versus k

for a graph with 150,000 vertices.

versus k

for a graph with 150,000 vertices.Use the WS model to generate the largest graph you can in a reasonable amount of time. Plot

versus k,

and see if you can characterize the tail behavior.

versus k,

and see if you can characterize the tail behavior.Use the BA model to generate a graph with about 1,000 vertices, and compute the characteristic length and clustering coefficient as defined in the Watts and Strogatz paper. Do scale-free networks have the characteristics of a small world graph?

Zipf, Pareto, and Power Laws

At this point, we have seen three phenomena that yield a straight line on a log-log plot:

The similarity in these plots is not a coincidence; these visual tests are closely related.

Starting with a power law distribution, we have:

If we choose a random node in a scale free network,

![]() is the probability that its degree

equals k.

is the probability that its degree

equals k.

The cumulative distribution function, ![]() , is the probability that the degree is less than or

equal to k, so we can get that by summation:

, is the probability that the degree is less than or

equal to k, so we can get that by summation:

For large values of k, we can approximate the summation with an integral:

To make this a proper CDF, we could normalize it so that it goes to 1 as k goes to infinity, but that’s not necessary because all we need to know is:

This shows that the distribution of k is

asymptotic to a Pareto distribution with ![]() .

.

Similarly, if we start with a straight line on a Zipf plot,[5] we have:

where f is the frequency of the word with rank r. Inverting this relationship yields:

Now subtracting 1 and dividing through by the number of different words, n, we get:

This is only interesting because if r is the

rank of a word, then ![]() is the fraction of words with lower ranks, which is

the fraction of words with higher frequency, which is the CCDF of the

distribution of frequencies:

is the fraction of words with lower ranks, which is

the fraction of words with higher frequency, which is the CCDF of the

distribution of frequencies:

To characterize the asymptotic behavior for large

n, we can ignore c and

![]() , which yields:

, which yields:

This shows that if a set of words obeys Zipf’s law, the

distribution of their frequencies is asymptotic to a Pareto distribution

with ![]() .

.

So the three visual tests are mathematically equivalent; a dataset that passes one test will pass all three. But as a practical matter, the power law plot is noisier than the other two because it is the derivative of the CCDF.

The Zipf and CCDF plots are more robust, but Zipf’s law is only applicable to discrete data (like words), not continuous quantities. CCDF plots work with both.

For these reasons—robustness and generality—I recommend using CCDFs.

The Stanford Large Network Dataset Collection is a repository of datasets from a variety of networks, including social networks, communication and collaboration, Internet, and road networks. See http://snap.stanford.edu/data/index.html.

Download one of these datasets and explore. Is there evidence of small world behavior? Is the network scale-free? What else can you discover?

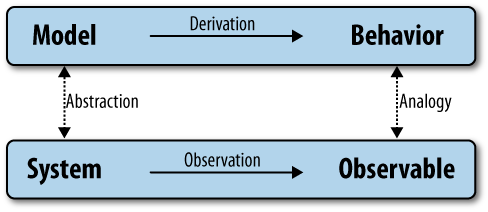

Explanatory Models

We started the discussion of networks with Milgram’s small world experiment, which shows that path lengths in social networks are surprisingly small; hence the phrase “six degrees of separation.”

When we see something surprising, it is natural to ask “why?”, but sometimes it’s not clear what kind of answer we are looking for. One kind of answer is an explanatory model (see Figure 5-2). The logical structure of an explanatory model is:

In a system, S, we see something observable, O, that warrants explanation.

We construct a model, M, that is analogous to the system; that is, there is a correspondence between the elements of the model and the elements of the system.

By simulation or mathematical derivation, we show that the model exhibits a behavior, B, that is analogous to O.

We conclude that S exhibits O because S is similar to M, M exhibits B, and B is similar to O.

At its core, this is an argument by analogy, which says that if two things are similar in some ways, they are likely to be similar in other ways. Argument by analogy can be useful, and explanatory models can be satisfying, but they do not constitute a proof in the mathematical sense of the word.

Remember that all models leave out (or “abstract away”) details that we think are unimportant. For any system, there are many possible models that include or ignore different features. Also, there might be models that exhibit different behaviors—B, B’, and B”—that are similar to O in different ways. In that case, which model explains O?

The small world phenomenon is an example: the Watts-Strogatz (WS) model and the Barabási-Albert (BA) model both exhibit small world behavior, but they offer different explanations:

The WS model suggests that social networks are small because they include both strongly connected clusters and “weak ties” that connect clusters (see http://en.wikipedia.org/wiki/Mark_Granovetter).

The BA model suggests that social networks are small because they include nodes with high degrees that act as hubs, and that hubs grow over time due to preferential attachment.

As is often the case in young areas of science, the problem is not that we have no explanations, but that we have too many.

Are these explanations compatible; that is, can they both be right? Which do you find more satisfying as an explanation, and why?

Is there data you could collect or experiments you could perform that would provide evidence in favor of one model over the other?

Choosing among competing models is the topic of Thomas Kuhn’s essay, “Objectivity, Value Judgment, and Theory Choice.” Kuhn was a historian of science who wrote The Structure of Scientific Revolutions in 1962, and spent the rest of his life explaining what he meant to say.

What criteria does Kuhn propose for choosing among competing models? Do these criteria influence your opinion about the WS and BA models? Are there other criteria you think should be considered?

[5] This derivation follows Adamic, “Zipf, power law and Pareto—a ranking tutorial,” available at http://www.hpl.hp.com/research/idl/papers/ranking/ranking.html.