Now that we’ve run through the standard varieties of remote research, we’d like to show you a few variations of remote research we’ve come up with in the past, which adapt remote techniques to address special testing conditions or to improve on existing research methods. These variations demonstrate how adaptable remote methods are, and we hope you’ll be encouraged to make your own modifications and adjustments to the basic methodological approach we’ve laid out in this book.

Each section in this chapter includes a mini case study of the research project in which we developed the technique, so you’ll not only learn about the technique itself but also get some insight into how we devise new remote research techniques.

In some cases, instead of seeing your users’ computer screens, you need to have them see or take limited control over one of yours. This type of control is useful when you need to show your interface to your users but either can’t or don’t want to give them direct access to it on their own computers. Let’s say you want users to try your prototype software but don’t want them to be able to install it on their computers. When you use reverse screen sharing and give them remote access to one of your computers, they can use the interface without having possession of the interface code or files in any way.

In 2008 we designed an international remote study for a multinational bank. The bank wanted to keep a new Web interface relatively secret until its release and didn’t want its fairly advanced prototype to be floating out in the Internet wilderness. Since the target audiences were scattered across the Pacific Rim (the Philippines, Australia, Hong Kong) and our clients were based in different countries as well, remote testing was definitely the most attractive testing solution.

We needed to come up with a way to keep the Web prototype safe on our computers while still allowing users to interact with it normally. Ordinarily, you might be inclined to make a Web-based mockup that the users could access temporarily via password protection, but if you’re on a tight deadline, that approach won’t always be an option. We needed to test the interface ASAP, so we opted for reverse screen sharing.

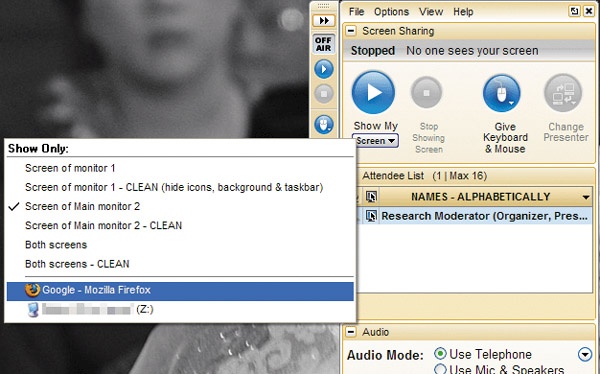

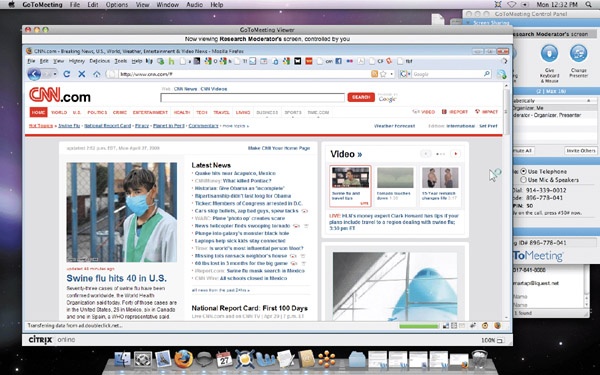

First, we loaded the prototype onto a second Internet-connected computer (which we’ll refer to as the “prototype computer”) and connected it to a monitor of its own, next to the moderator’s computer setup. We used GoToMeeting for screen sharing and hosted the screen sharing session on the prototype computer. The moderator contacted the participants and instructed them to join the GoToMeeting session as usual. Once participants had joined, the moderator used the “Give Keyboard and Mouse Control” function on the prototype computer, specifying that the participants could access only the window with the prototype in it (see Figure 9-1). After that was all set up, participants were able to view and interact with the prototype on their own computer screen, though they were really just remotely viewing and controlling the prototype computer’s desktop (see Figure 9-2).

Another perk of remote access is that if your interface requires any sophisticated software to run properly, you can set it up in advance without requiring users to download, set up, and install it themselves.

On the other hand, this method does take slightly longer to set up, and depending on the strength of the Internet connection, there may be some slight lag (around 1 second), causing the interface to seem sluggish. The sluggishness is more of an issue when testing internationally. Run a pilot session in advance to make sure that the approach is viable, or arrange for your users to be on a fast and reliable Internet connection. (We arranged in advance for our Hong Kong users to be at a location with a solid connection.) This method also slightly restricts the types of interfaces you’re able to test effectively. If you’re testing a video game, for instance, the lag might degrade the experience too much for the session to reflect real gameplay.

Other than those limitations, remote access is a great way to keep your interface airtight, without too much extra hassle or setup. Really, all it takes is an extra computer to serve as the prototype computer.

Final note: any screen sharing solution that allows you to give users remote access to your computer desktop can be used for this method, but be sure that you use a method that lets you easily and permanently disable the remote access once the session has ended.

It doesn’t take a genius to see that mobile computing devices are taking over. Our relationship to technology is ever moving toward the academic portents of ubiquitous computing; at the very least, we’re going to have some super fancy cell phones. The richest and most exciting future for remote research is on mobile devices, but currently the technology to conduct the research isn’t completely there yet. There’s been lots of academic and professional work on how to properly research mobile interfaces, but most of these studies have involved cumbersome apparatuses, structured in-lab usage, expensive ethnographic interviews, or quantitative data gathering.

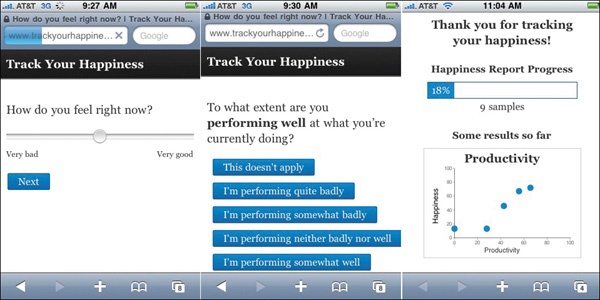

One study we find very promising isn’t a user research study at all, but a study on happiness. Harvard researcher Matt Killingsworth and Visnu Pitiyanuvath’s “Track Your Happiness” study uses iPhones to gather random experience samples from people. Participants are notified by text message over the course of the day; the message directs them to a mobile-friendly Web site that asks them questions about what they were doing and how they felt in the moment just before they received the notification. After each survey, participants are shown some preliminary results, such as how their happiness has correlated with whether they were doing something they wanted to do (see Figure 9-3). This is a fantastic example of time-aware research because it collects data that’s dependent on the native timeline of participants.

This research approach, in which users self-report their circumstances and feelings at random intervals during the day, is known as “experience sampling,” and what makes it so promising is that it plays to the strengths of remote research. By getting on the participants’ time, researchers get a more accurate sense of what participants are thinking and feeling in their everyday lives, rather than in a controlled lab environment. And because the approach simply adapts existing technology (SMS, smartphone browsers) to research purposes, it doesn’t force participants to work around awkward and unrepresentative testing equipment.

Figure 9-3. The “Track Your Happiness” iPhone survey: screenshots of questions and preliminary results.
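To make the “random intervals” idea concrete, here is a minimal Python sketch of how a study might pre-compute one participant’s prompt times for a day. It is not how Track Your Happiness works; the waking-hours window, the number of prompts, and the minimum gap between prompts are hypothetical parameters chosen for illustration, and actually sending the text message is left out.

```python
import random
from datetime import datetime, timedelta


def sample_times(day_start, day_end, n, min_gap_minutes=30, seed=None):
    """Return n random prompt times between day_start and day_end,
    sorted and spaced at least min_gap_minutes apart.

    All parameters are hypothetical study settings, not values taken
    from any real experience-sampling service.
    """
    rng = random.Random(seed)  # seeded so a schedule can be reproduced
    window = int((day_end - day_start).total_seconds() // 60)
    # Minutes left over once the mandatory gaps are reserved.
    slack = window - (n - 1) * min_gap_minutes
    if slack < 0:
        raise ValueError("window too short for n prompts at this spacing")
    # Draw n sorted offsets within the slack, then push the i-th offset
    # forward by i gaps; this keeps every prompt inside the window and
    # every pair of consecutive prompts at least min_gap_minutes apart.
    offsets = sorted(rng.randint(0, slack) for _ in range(n))
    return [
        day_start + timedelta(minutes=offset + i * min_gap_minutes)
        for i, offset in enumerate(offsets)
    ]


if __name__ == "__main__":
    start = datetime(2010, 1, 4, 9, 0)   # hypothetical 9:00 a.m. start
    end = datetime(2010, 1, 4, 21, 0)    # hypothetical 9:00 p.m. end
    for prompt in sample_times(start, end, n=5, seed=42):
        print(prompt.strftime("%H:%M"))
```

Pre-computing the schedule keeps prompts unpredictable to the participant while guaranteeing they never arrive back to back; how many prompts per day is a study-design choice, not something the technique dictates.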

We’re beginning to see some services and Web applications that will make this type of research feasible; in the preceding chapter we introduced the HaveASec app, which allows iPhone developers to push surveys to their mobile app users and receive responses in real time. This capability could easily be applied to mobile live recruiting. You could call up users on their mobile device right when they’re in the middle of using your application, so you can learn about not only what they’re doing with it, but where they are, how their reception and location are affecting their usage, and all those other excellent native environment insights. Mobile screen sharing is also beginning to make inroads. PocketMeeting, Veency, and Screensplitr are emerging examples of mobile screen and interface sharing.

Of course, there will be many challenges for mobile remote research, not least of which is convincing mobile users who are likely busy, on the go, or in a noisy public space to participate in a live study. You’d also need to find a way for the users to both talk on their phone and use the phone app at the same time (a headset would work). Safety would naturally be a consideration, as would battery life, reception, and social factors. The participants may be in a potentially awkward social space (library, dentist chair, restroom).

But as with remote research over the Web, UX research methods for mobile interfaces will improve and become easier as the technology advances. Improved phone video cameras, Web video streaming and video chat, VoIP calling, automated screen-recording and activity monitoring, GPS location tracking, and third-party apps are all likely developments that will prove to be invaluable to understanding how real people use their mobile devices. And accessibility is a factor, too. Right now, only a tiny fraction of the population owns phones capable of this type of research, but as smartphones become cheaper and more practical, we can begin to get a broader picture of how people live.

If you recall from Chapter 1, we suggested that lab testing can be preferable to remote testing when you’re unable to give remote access to your interface for any reason. We designed and conducted a series of such studies in 2007 and 2008 for Electronic Arts’ highly anticipated PC game, Spore. There were a number of reasons why the game couldn’t be tested remotely, chief among them that the game was still in development and couldn’t be released to the general public for confidentiality’s sake.

The most practical alternative was to conduct the testing in our lab, but we decided that it was important to maintain the native environment aspect as much as possible. A video game is a special kind of interface in which preserving a comfortable and relaxed atmosphere is crucial for producing a realistic user experience. We attempted to replicate aspects of a native environment, minimizing distraction from other players, research moderators, and arbitrary tasks unrelated to gameplay. The result was what we call a simulated native environment: a lab setting that resembles a natural usage setting as much as possible (see Figure 9-4).

Figure 9-4. Participants each had their own isolated stations, tricked out with a Dell XPS laptop, mouse, and microphone headset through which they communicated with the moderators.

For each session, we had six agency-recruited participants come to our loft office, where they each sat at their own desk equipped with a laptop, a microphone headset, and a webcam. We instructed them to play the game as if they were at home, with the only difference being that they were to think aloud and say whatever was going through their minds as they played.

Elsewhere, our clients from Electronic Arts (EA) and our moderators were stationed in an observation room, where we projected the players’ game screens, the webcam video, and the survey feedback onto the wall, allowing us to see the users’ facial expressions and their in-game behaviors side by side (see Figure 9-5). Using the microphone headsets and the free game chat app TeamSpeak, we were able to speak with players one on one, occasionally asking them what they were trying to do or prompting them to go more in depth about something they’d said or done in the game. This method allowed us to conduct six one-on-one studies at the same time, which we called “one-to-many moderating.”

Figure 9-5. In the observation room, the moderators sat side-by-side with the observers, monitoring all six players’ expressions, gameplay behaviors, and survey answers at once.

EA was interested in collecting some quantitative data as well. When participants reached certain milestones in the game, they would fill out a touchscreen survey at their side, answering a few questions about their impressions of the game. These surveys were designed by Immersyve, a game research company in Florida, which based its quantitative assessment of fun and enjoyment on a set of validated metrics called Player Experience of Need Satisfaction (PENS).

A great deal of the preparation for this study went into figuring out our incredibly baroque hardware and equipment setup. Each of the six stations needed its webcam, gameplay, and survey video feeds and its TeamSpeak audio feed broadcast live to the observation room; that’s 18 video feeds and 6 audio feeds. Not only did the two (yes, two) moderators have to be able to hear the participants’ comments, but so did the dozen or so EA team members. On top of that, all the streams had to be recorded for later analysis, which required hooking up digital recording units to each station (software recording solutions performed poorly), enabling TeamSpeak recording at each station, and using the webcam software’s built-in recording function.
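When planning a setup like this, it helps to tally the streams before buying cables and recorders. The short Python sketch below counts live feeds from a per-station manifest; the manifest and function names are our own hypothetical planning aid, populated with the four feeds each Spore station carried (three video, one audio).

```python
from collections import Counter

# Hypothetical per-station manifest: feed name -> media type.
STATION_FEEDS = {
    "webcam": "video",
    "gameplay": "video",
    "survey": "video",
    "teamspeak": "audio",
}


def tally_feeds(stations, feeds_per_station=STATION_FEEDS):
    """Count the live video and audio feeds the observation room must handle."""
    per_station = Counter(feeds_per_station.values())
    return {media: count * stations for media, count in per_station.items()}


if __name__ == "__main__":
    print(tally_feeds(6))  # six stations: {'video': 18, 'audio': 6}
```

Since every stream in our study also had to be recorded, the same tally doubles as a rough count of the recording paths you’ll need to set up.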

Your setup doesn’t have to be quite this complicated. Our multiuser approach was only necessary due to the volume of users we had to test, but if possible, take it easy and test one or two users at a time, with a single moderator. Here’s a step-by-step guide on how to set up a simulated native environment lab for a single user.

First, set up the observation room.

You’ll need at least two computers: one for the moderator and another to mirror the participants’ webcam and desktop video. Set up the following on the moderator computer:

Software to voice chat with your participant (Skype, or TeamSpeak if you’re going to be testing more than one participant at the same time)

Any instant messaging client you can use to communicate with moderators and observers

A word processor to take notes with and display your facilitator guide

On the other observation room computer, install VNC, a remote desktop control tool you can use to mirror the participant’s webcam output, and connect a projector if you want to display that output on the wall.

Finally, set up a monitor that will connect to the participants’ computer so you can mirror their computer screen.

Now get the participants’ station ready. Because this is “simulated native environment” research, there’s an aesthetic component to setting up the participants’ station. You don’t need to go as far as to make your testing area look like a “real living room,” whatever that is, but neither should it look too much like an office or a lab. Soft floor lighting and a comfortable chair and desk can go a long way; aim for informal and low-key.

The participants’ computer is going to need a lot of careful preparation. You’ll want to set it up with the following:

Skype (or TeamSpeak, depending on what the moderator is using)

A microphone headset for communicating with the moderator

VNC, to be able to connect with the observation room computer

A Digital Video Recorder (DVR) unit, to record the computer’s audio/video output (we used the Archos TV+)

The interface to be tested

A VGA cable long enough to reach the observation room

You’ll also want to make sure there’s nothing else on the computer desktop that might disrupt the participants’ experience, especially anything that reminds participants that they’re in a research study. Shortcuts to the interface you’re testing should be fine, but otherwise try to stick to the default computer appearance.

Finally, there’s the station computer. Chances are that the participant’s computer won’t be able to run a recording webcam, a screen recorder, VNC screen sharing, voice chat, and the test interface all at the same time without a noticeable drop in performance, so you can use a second computer at the station to take on some of those tasks. On this second computer, you can run the webcam and any other testing materials, such as the surveys we used in our Spore study. You can also run the voice chat program on this computer if it isn’t important to record the sound from your interface. If your webcam has a built-in microphone, enable it; it’s good to have a redundant audio stream in case the TeamSpeak/Skype recording fails. Table 9-1 breaks down what equipment is necessary at each location in a simulated native environment.

Table 9-1. Simulated Native Environment Equipment Setup at a Glance

http://www.flickr.com/photos/rosenfeldmedia/4287138812/

| Zone | Component | Equipment/Software |
|---|---|---|
| Observation room | Moderator computer | TeamSpeak; microphone headset; any word processor for notes; facilitator guide; IM to chat with moderators and observers |
| Observation room | Observation computer | VNC viewer; projector |
| Observation room | Room | Headphones for observers; one monitor per participant |
| Each participant station | Participant computer | TeamSpeak; VNC server; microphone headset; DVR unit (we used the Archos TV+); extra VGA monitor out, with a monitor cable long enough to reach the observation room (if not using VNC to share the desktop); interface to be tested |
| Each participant station | Station computer | Webcam; survey in full-screen mode (optional); touchscreen monitor (optional); VNC server; screen recording software |

Simulated native environments address a lot of the things that personally bug us about standard lab testing. The major benefits of this approach come from the physical absence of moderators and other participants, which reduces the Hawthorne effect and groupthink. In this study, the users mostly weren’t prompted to respond to focus questions or perform specific tasks, but instead just voiced their thoughts aloud unprompted, giving us insight into the things they noticed most about the game, instead of what we assumed were the most important elements.

It’s still worth noting the shortcomings of this approach compared to true remote methods, though. You’re still testing users on a prepared computer, rather than their own home computer; it’s not really feasible to precisely replicate the experience of being at home or in an office because homes and offices obviously vary. The equipment overhead for this kind of testing is also a lot greater than for typical remote testing. At the very least, you’ll need a station for each user, equipped with computer, webcam, Internet access, and microphone headset. And, of course, you’re still restricted by all the physical and geographic predicaments of lab research, which means you can have no-shows, people may show up late, and so on.

There’s still plenty of room to take the native environment approach further. Even with all our efforts to keep the process as natural and unobtrusive as possible, there are still opportunities to bring the experience even closer to players’ typical behaviors. Obviously, we’d love to do game research remotely, allowing participants to play right at home, on their own systems, without even getting up.

Consider, if you will, what a hypothetical in-home game research session might be like: a player logs in to an online video game service like Xbox Live and is greeted with a pop-up inviting him/her to participate in a one-hour user research study to earn 8,000 Xbox Live points. (The pop-up is configured to appear only to players whose accounts are of legal age, to avoid issues of consent with minors.) The player agrees and is automatically connected by voice chat to a research moderator, who is standing by. While the game is being securely delivered and installed to the player’s Xbox, the moderator introduces the player to the study and gets consent to record the session. Once the game has finished installing, the player tests the game for an hour, giving his/her think-aloud feedback the entire time, while the moderator takes notes and records the session. At the end of the session, the game is automatically and completely uninstalled from the player’s Xbox, and the Xbox Live points are instantly awarded to the player’s account.

Remote research on PC games is already feasible, and for games with built-in chat and replay functionality, the logistics should be much easier to manage. Console game research, on the other hand, will likely require a substantial investment by console developers to make this possible, and handheld consoles present even more challenges. Lots of basic infrastructure and social advances would need to happen before our hypothetical scenario becomes viable:

Widespread game console broadband connectivity

Participant access to voice chat equipment

An online live recruiting mechanism for games, preferably integrated into an online gaming framework

Secure digital delivery of prototype or test build content (may be easy with upcoming server-side processing game services like OnLive)

Gameplay screen sharing or mirroring

As game consoles become more and more like fully featured PCs and content delivery platforms, we expect that most of these barriers will fall in good time. We also believe that allowing players to give feedback at home, the most natural environment for gameplay, would yield the most natural feedback, bringing game evaluation and testing further into the domain of good UX research.

One of the major limitations of current remote testing methods is that you’re restricted to testing computer software interfaces. Screen sharing won’t give you the whole picture if you’re trying to test a physical interface, especially a mobile one like a cell phone. On the bright side, technology and Internet access get more portable and mobile every day, and researchers are starting to figure out ways to embed technology into the physical world to make research more flexible and natural. There’s been plenty of mobile device research in the past, but it’s been largely focused on quantitative data gathering, random “experience sampling,” ethnographic interviews, heavily structured lab research, or usability-focused studies. Comparatively little has been done to directly observe and document rich natural user behavior with mobile or location-dependent interfaces, such as in-car GPS systems, mobile devices, and cell phones.

In 2008, we conducted an ethnographic study on behalf of a major auto manufacturer whose R&D team wanted two things: first, to observe how people use different technologies in the car over the course of everyday driving (cell phones, in-car radios, iPods, GPS systems, and anything else that drivers might bring in); and second, to understand what needs these usages fulfill. The R&D team wanted to be involved in the sessions as they were ongoing (as they would in a normal remote study), but we obviously couldn’t have the whole team pile into the car with the driver and moderator. The solution was surprisingly straightforward (see Figure 9-6).

Figure 9-6. The moderator rides along in the back seat, observing and filming the driver. The footage is streamed live to the Web.

The idea was to conduct a standard ride-along automobile ethnography, with a moderator interviewing a participant in situ, but also to use an EVDO Internet connection (go-anywhere wireless Internet access), a laptop, and a webcam to broadcast the sessions and communicate with the clients. (We also had a cameraman accompany us to document the experience in high-definition video, but that was mostly for our own benefit.) To broadcast, we signed up for an account on Stickam, a live Web video streaming service (others include Justin.tv, UStream.tv, and Qik).

We recruited our users in advance from the Los Angeles and San Francisco Bay areas through a third-party recruiting agency, selecting them on the basis of gender (equal balance), age (25–55), and level of personal technology usage (high vs. low).
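Screening for a balanced sample like this is essentially quota filling. The Python sketch below is a hypothetical illustration, not the agency’s actual process: it assumes each candidate’s gender, age, and self-reported technology usage (“high” or “low”) come from a screener questionnaire, and it assumes equal quotas for every gender/technology-usage combination, which is one reasonable reading of “equal balance” plus “high vs. low.”

```python
from collections import Counter
from dataclasses import dataclass

GENDERS = ("female", "male")
TECH_LEVELS = ("high", "low")


@dataclass(frozen=True)
class Candidate:
    name: str
    gender: str      # from a hypothetical screener question
    age: int
    tech_usage: str  # "high" or "low", self-reported on the screener


def select_participants(candidates, per_cell, min_age=25, max_age=55):
    """Accept candidates in order until every gender/tech-usage cell
    holds per_cell people; skip anyone outside the age range."""
    filled = Counter()
    selected = []
    for c in candidates:
        if not (min_age <= c.age <= max_age):
            continue
        if c.gender not in GENDERS or c.tech_usage not in TECH_LEVELS:
            continue
        cell = (c.gender, c.tech_usage)
        if filled[cell] < per_cell:
            filled[cell] += 1
            selected.append(c)
    return selected


if __name__ == "__main__":
    pool = [
        Candidate("A", "female", 31, "high"),
        Candidate("B", "male", 62, "low"),     # too old: skipped
        Candidate("C", "male", 44, "low"),
        Candidate("D", "female", 27, "high"),  # female/high cell already full
        Candidate("E", "female", 50, "low"),
        Candidate("F", "male", 25, "high"),
    ]
    for p in select_participants(pool, per_cell=1):
        print(p.name, p.gender, p.age, p.tech_usage)
```

Filling cells in the order candidates arrive is the simplest policy; a real screener would also track which cells are still open so the recruiter knows whom to keep looking for.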

The setup was very bare bones, but here’s a checklist of our equipment, all of which is handy to bring along:

Sierra Wireless 57E EVDO ExpressCard

Logitech webcam

Web video streaming account (we used Stickam)

A fully charged laptop with extra batteries

A point-and-shoot digital camera to take quick snapshots for later reference

Our methods weren’t actually all that different from a normal moderated study: we had users think aloud as they went about their tasks (while still following all road safety precautions, of course) and also explain to us how and why they used devices in the ways that they did (see Figure 9-7). For the purposes of our study, we found it helpful to focus on a few behavioral areas that were often very revealing of participants’ usages of technology:

At what points did users engage with their devices?

How did device usage affect other device interactions?

How did they combine or modify devices to achieve a particular effect?

What functions did they use the most, and which were they prevented from using?

Who else rode in the car (kids, pets), and how did their presence influence the driver’s capacity and inclination to use personal technologies?

Researching users in a moving environment is different from ethnographies you’d perform in a workspace or home, in the sense that some elements of the users’ “surroundings” are constantly changing, while others remain stable. Even though we had to keep our attention mostly on what the users were doing with their technology and their driving behavior, often we encountered situations when what was happening outside the car affected the users’ behavior. For example, one user who was fiddling with her iPod hit a sudden bump in the road, startling her and putting her attention back to driving.

Which reminds us—accidents do happen. We made sure to have participants read and sign two liability release waivers before the study, to cover our behinds in case the participants got into an accident. One form was an easy-to-understand, straightforward one-pager, and the other was a stronger and more exhaustive legal document. We didn’t want participants blaming us for causing the accident by distracting them from their driving. (Accordingly, this is another good reason why the moderator should stay as unobtrusive as possible.)

The car environment allowed for reasonable laptop usage, but many mobile technologies would make it impractical to use a laptop while keeping up with users, especially when standing or walking. In those situations, you may want to concentrate on recording the session and transcribe it later, though this sort of transcription can make the analysis process a whole lot lengthier. If you can afford it, we recommend using an online transcription service such as CastingWords.

Another issue is the wireless connection: depending on the locations, distances, and speeds traveled during the sessions, EVDO access may become unstable or spotty. The study should be designed with network coverage in mind, whether via a wireless connection, EVDO, or even cell phone tethering (i.e., using your cell phone to connect your laptop to the Internet), which will probably become more popular and accessible in the coming years. If you’ll be driving through a tunnel, getting on a subway, etc., expect connection outages.

As always, keeping the tasks as natural as possible is essential for understanding how users really interact with a device. As with simulated environment testing, we recommend downplaying the moderator’s physical presence or keeping the moderator out of the users’ field of vision entirely; our moderator rode in the back seat. Since the sessions were scheduled in advance, we asked the users to choose a time when they’d be doing something completely typical in their car, such as going to the grocery store, picking up kids, or commuting. This approach reduced how much the moderator had to dictate the tasks and allowed us to focus on natural ones. In our car study, if users changed their minds midsession about where they wanted to go or what they wanted to do in their car, the moderator was to intervene only if the change would have completely derailed the goals of the study, which never happened. Otherwise, you should just accept it as an example of good ol’ real-world behavior.

In our study, the quality of live streaming video to remote observers was limited by bandwidth issues, both because of the coverage problems we just described and because EVDO wasn’t really designed with hardcore high-definition video streaming in mind (see Figure 9-8). We’ll all probably have to wait a few more years before the reliability and quality of mobile livecasts become a nonissue.

And then there’s moderator presence, which is a liability in many ways we’ve already discussed. Not to get too Big Brother-y, but the development of more powerful and portable monitoring tools, such as light, wearable livecasting equipment, could eliminate the moderator’s presence altogether, allowing users to communicate with the moderator remotely through earpieces, videophones, microphone arrays, or whatever other device best suits the study. This would not only resolve the issue of moderator disruption and intrusion, but also open up new domains for mobile user observations, including longer-term ethnographies (during which the researchers can “pop in” at specified times to eavesdrop on natural behavior), as well as studies of situations in which physical accompaniment is difficult or impossible (e.g., on a motorcycle or in a crowd). It’s only a matter of time until talking to users anywhere, doing practically anything, will be as simple as having them wear a little clip-on camera as they go about living their lives.

It’s fitting that the practice of observing how people use new technology requires the researchers themselves to use new technology to its fullest; that’s what remote research methods are about, really. To that end, you (and all user researchers) should always be on the lookout for new tools, services, devices, and trends in mobile technology that you can exploit to help capture user behaviors that are as close as possible to real life.

You can use various aspects of remote research and new technology to adapt to novel testing challenges.

Secure interfaces can be tested with a method called “reverse screen sharing”: allowing your participants to view and take control of one of your computers, so that the code remains secure on your end.

Mobile interface research will be huge and will offer new opportunities for Time-Aware Research; there will be many new considerations for mobile testing.

Even if you conduct research in a lab, you can use webcams and screen sharing to remove the moderator’s physical presence and conduct multiple one-on-one sessions simultaneously.

To test interfaces in moving environments like cars, you can use a wireless EVDO connection and webcasting services to record and stream sessions, and also communicate with clients.