Chapter 6. User Testing for Voice User Interfaces

As with any type of application design, user testing is extremely important when creating voice user interfaces (VUIs). There are many similarities to testing regular mobile apps, but there are differences, as well. This chapter outlines practical ways (from cheap to more expensive) to get your VUI tested, how to interview your subjects, and how to measure success. It will enable VUI designers to immediately execute user testing at even early stages of development. It will focus primarily on VUI-specific methodologies.

Special VUI Considerations

Generally speaking, it is preferable not to reveal to users at the start of testing that the system uses speech recognition (unless they would know this from the app description when downloading it in the real world, or from marketing). One of the important things to determine during testing is whether users understand that they can talk to the system. Do they know how, or when?

I have observed people failing to speak when speech was allowed because it was not at all obvious that they could speak. Some of these users later commented, “I sure wish I could speak there!” Obviously, the design needed some work, but I might not have discovered this had I explicitly informed them that they could always speak to the app from the outset.

A common strategy with GUI testing is asking people to narrate what they’re doing, which obviously won’t work when testing a real VUI system.

If you already have experience in conducting other types of usability testing and want to jump straight into the more VUI-focused portion of this chapter, skip ahead to Things to Look For.

Background Research on Users and Use Cases

As with any type of design, conducting user research in the early stages is highly recommended. Although we will summarize a few basic principles of user research, you can learn more about it from sources dedicated to the subject. O’Reilly Media has an abundance of user research video tutorials available online, which you can access at http://bit.ly/user-research-fundamentals-lp.

Don’t Reinvent the Wheel

One of the first questions to ask during your research phase is whether something similar to what you’re developing has already been done, albeit in a different way. For example, perhaps you are creating a mobile app for a service that already exists as a website. It’s important to examine what already exists to learn what works (or doesn’t). For VUI systems in particular, an interactive voice response (IVR) system version often already exists (sometimes DTMF [touch tone] only, and sometimes voice-enabled). This is a great place to discover what similar features are already out there and how they are handled.

Mobile VUIs should never be an exact replica of their IVR counterparts, but it is still possible to learn a lot from them, such as how concepts are grouped and which ones are used most frequently.

If there is a similar IVR system out there, it often means there is a human agent supporting it, and therefore a call center. Sitting in a call center for an afternoon listening in on calls can provide a wealth of information. Listening to real users call in can reveal things that a study of just the IVR system itself might not identify.

In addition, interviewing call center agents can also provide a huge amount of valuable information. They are the ones who know what users are really calling in about, what the biggest complaints are, and what information can be difficult for callers to find.

But perhaps you’re designing a mobile app that has no IVR component. What problem does your VUI solve? What real-world things exist that might help you gain insights?

Cortana, for example, is a virtual assistant. For inspiration, Microsoft interviewed real-life personal assistants. Designers found that human assistants often needed to ask for help when they did not understand an assigned task. Therefore, Microsoft made sure to have Cortana ask for help if she needs it, just like her human counterparts.[34]

In addition, real personal assistants often carry around a notebook about the person they’re assisting; Cortana maintains a similar notebook.

Always interview people who are in the space you’re trying to serve. As an example, a company I was consulting for had decided to create a VUI app about Parkinson’s disease. The original idea was an app to help people with Parkinson’s, providing more information about the disease, helping with medication management, and other self-care tasks. When conducting user research, I also wanted to speak to those closest to Parkinson’s patients: their caregivers. When I did so, I realized that an equally important problem was helping the caregivers themselves! It modified the direction and feature set we had planned for building the app. I also reached out to support groups for both Parkinson’s patients and their caregivers. This goes without saying, but be respectful and transparent when conducting your user research—people are often willing to talk, and want to help, but be open about what you’re trying to accomplish. Don’t dismiss people’s ideas—you’re not making a promise to implement them, but you need to listen closely to the issues people are dealing with.

Designing a Study with Real Users

Testing with real users, early and often, is crucial. After your app is out there, it is much more difficult to change the user experience (UX) than it is earlier, during design and development. As designers, it’s easy to view your app through your own lens of desires and experiences. Having other people test will open your eyes to things that might never have occurred to you otherwise. As an example, when showing an early version of our mobile app from Sensely, I discovered that many people had no idea that they could scroll down to see more items in a list. We were so used to using the app ourselves, it didn’t occur to us, but we quickly realized we had to modify the design.

Task Definition

Whether doing early-stage testing, trying out a prototype, or conducting a full-blown usability test on a working system, it’s important to carefully define the tasks you’ll request your users to perform. As introduced in the book Voice User Interface Design:

In a typical usability test, the participant is presented with a number of tasks, which are designed to exercise the parts of the system you wish to test. Given that it is seldom possible to test a system exhaustively, the tests are focused on primary dialog paths (e.g., features that are likely to be used frequently), tasks in areas of high risk, and tasks that address the major goals and design criteria identified during requirements definition.

You should write the task definitions carefully to avoid biasing the participant in any way. You should describe the goal of the task without mentioning command words or strategies for completing the task.[35]

Be careful when writing your task: you want to avoid giving away too much and instead provide just the essential information. As Harris says, “the scenario needs only a hint of plot.”[36] Describe tasks in the way users would talk about them—don’t use technical terms, or things that give away key commands.

Harris suggests making the early tasks “relatively simple, even trivial, to put the participants at ease.”[37]

Jennifer Balogh, coauthor of Voice User Interface Design, stresses the importance of task order and recommends randomizing it to avoid order effects:

Order effects can happen. So, if you have everyone in a study go through all the tasks in the same order, one task can influence the behavior in later tasks. I experienced this in one study in which the task was to have the subject ask for an agent. After the subject did this, on subsequent tasks, the subjects were more likely to ask for an agent. This command wouldn’t have occurred to them otherwise (when the agent task was not before other tasks, the subjects did not ask for an agent). The solution (although time consuming) is to rotate the tasks across subjects. One way to do this is with a technique called Latin Square design.

A Latin Square design allows you to present each task in every position and have each task follow every other task without having to go through every permutation. For example, 5 tasks would result in 120 permutations. In a Latin Square design, there would only be five conditions, as presented in Table 6-1.

| | Task 1 | Task 2 | Task 3 | Task 4 | Task 5 |
|---|---|---|---|---|---|
| Subject 1 | A | B | E | C | D |
| Subject 2 | B | C | A | D | E |
| Subject 3 | C | D | B | E | A |
| Subject 4 | D | E | C | A | B |
| Subject 5 | E | A | D | B | C |

You can find more on Latin Square design by searching online.
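As a rough illustration of the idea, the sketch below builds a simple cyclic Latin Square by rotating the task list, and checks that the arrangement in Table 6-1 also satisfies the Latin Square property. The function names are hypothetical, and the cyclic construction is only one easy way to produce such a square; it is not the arrangement used in the table. A cyclic square balances position only: each task is always followed by the same next task, so it does less to control for order effects between tasks.

```python
from math import factorial


def cyclic_latin_square(tasks):
    """Return one task order per subject by rotating the task list one step per row."""
    n = len(tasks)
    return [[tasks[(row + col) % n] for col in range(n)] for row in range(n)]


def is_latin_square(orders):
    """Check that every task appears exactly once in each row and in each position."""
    n = len(orders)
    symbols = set(orders[0])
    rows_ok = all(len(row) == n and set(row) == symbols for row in orders)
    cols_ok = all({row[col] for row in orders} == symbols for col in range(n))
    return len(symbols) == n and rows_ok and cols_ok


tasks = ["A", "B", "C", "D", "E"]
orders = cyclic_latin_square(tasks)

# The arrangement shown in Table 6-1, one row per subject.
table_6_1 = [
    list("ABECD"),
    list("BCADE"),
    list("CDBEA"),
    list("DECAB"),
    list("EADBC"),
]

print(f"All possible orders of {len(tasks)} tasks: {factorial(len(tasks))}")
print(f"Orders needed with a Latin Square: {len(orders)}")
for subject, order in enumerate(orders, start=1):
    print(f"Subject {subject}: {' '.join(order)}")
print(f"Table 6-1 is a Latin Square: {is_latin_square(table_6_1)}")
```

With more than five subjects, one option is to cycle through the rows again so that each order is used about equally often.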

Choosing Participants

As with all user testing, it’s best to sample subjects who are in the demographics of the people for whom you’re actually designing the system. If you are creating a health app for people with chronic heart failure, your test subjects should not be healthy college students. The further away from the true demographic your subjects are, the less reliable the results will be.

How many people should you test? If you get the right demographics, you don’t need that many. Usability expert Jakob Nielsen recommends five users for most types of testing. The reason five is enough? Here’s how he explains it:

The vast majority of your user research should be qualitative; that is, aimed at collecting insights to drive your design, not numbers to impress people in PowerPoint. The main argument for small tests is simply return on investment: testing costs increase with each additional study participant, yet the number of findings quickly reaches the point of diminishing returns. There’s little additional benefit to running more than five people through the same study; ROI drops like a stone with a bigger N.[38]
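The diminishing returns Nielsen describes can be illustrated with the problem-discovery model he published with Tom Landauer, in which the expected share of usability problems found by n users is 1 - (1 - L)^n. The sketch below is not part of the quoted article: the function name is hypothetical, and the per-user discovery rate L = 0.31 is the average Nielsen has reported across projects, so treat it as an assumption that may not hold for your study.

```python
def share_of_problems_found(n_users, per_user_rate=0.31):
    """Expected share of usability problems seen by at least one of n_users.

    per_user_rate is the assumed chance that a single user exposes a given problem.
    """
    return 1 - (1 - per_user_rate) ** n_users


previous = 0.0
for n in (1, 3, 5, 10, 15):
    found = share_of_problems_found(n)
    print(f"{n:>2} users: {found:.1%} found (gain since previous row: {found - previous:.1%})")
    previous = found
```

Under these assumptions, five users already surface roughly 84 percent of the problems, and each additional participant adds less than the one before.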

Recruiting users can be tough. There are companies that can help you find subjects, but their costs are often prohibitive for smaller companies. If your company is large enough to afford a recruiting firm, it’s a very effective way to get users in the right demographic. Recruiting companies will require a “screener,” a document outlining the qualifications you need for your users. Questions might include age range, what type of smartphone they own, their level of expertise with certain apps, their geographic location, or anything else to ensure the right demographics.

Note

For more on screeners, check out the User Testing Blog at https://www.usertesting.com/blog/2015/01/29/screener-questions/.

For some testing, it’s also appropriate to recruit friends and family. That can be a great way to do some quick and dirty testing and get feedback on crucial aspects quickly.

Remote testing widens the net because you can recruit people who aren’t local. For quick and affordable testing, services such as Task Rabbit can identify people to do your study, although you can’t choose the demographics. There are also online services such as UserTesting (https://usertesting.com/) and UserBob (https://userbob.com/), both of which have a pool of users available for testing. There are some technical hurdles, however, because the screen capture software is not always able to capture the app’s audio (e.g., the virtual assistant speaking), the user’s speech, and the user’s screen all at once. You can overcome this by having the user record the session with a webcam, but this will limit the pool of users and can add to the cost of user payment, as well.

Be sure to compensate your subjects. Local subjects who come to you for in-person testing should generally be paid more. Subjects who represent very specific demographic groups will also have a higher rate.

Questions to Ask

The questions you ask are crucial to gaining insights from your user testing. Observational data is important, but the right questions can tease out important pieces of the user’s experience. In addition, subjects tend to skew on the positive side, especially during face-to-face sessions—most people want to be nice and will sometimes hesitate to provide negative feedback, or exaggerate the positive. It’s often unconscious, but a good interviewer can get past these issues.

Beware of priming your subjects when giving instructions. As Harris notes, when it comes to what to say, users take substantial cues from the person conducting the testing.[39]

If possible, ask subjects a few questions after each task, and then a set of questions at the very end. The reason is that subjects might forget things as they move from task to task. The best time to ask about first impressions is right after the very first task is completed.

Avoid leading the subject; let them explain in their own words. If a subject asks, “Could I have spoken to the app during that task?” you should first ask, “Would you want to do that?” If the subject says, “I didn’t like it,” don’t say, “Oh, because it didn’t understand when you said ‘show me my shopping list’?” Instead, say, “Tell me more about that.” Do more listening and less talking. Pauses are OK; sometimes you’ll be typing or writing notes, and the user will add more information on their own.

When you begin testing, remind participants that they’re not being tested, but that they are there to help improve the system, and that you won’t be offended by their feedback. Don’t interrupt when you see them struggling, unless they become too frustrated to continue.

For quantitative questions, the Likert scale is commonly used. Here are some good guidelines about using Likert scales, from Crocker, L., and Algina, J.:[40]

Put statements or questions in the present tense.

Do not use statements that are factual or capable of being interpreted as factual.

Avoid statements that can have more than one interpretation.

Avoid statements that are likely to be endorsed by almost everyone or almost no one.

Try to have an almost equal number of statements expressing positive and negative feelings.

Statements should be short, rarely exceeding 20 words.

Each statement should be a proper grammatical sentence.

Statements containing universals such as “all,” “always,” “none,” and “never” often introduce ambiguity and you should avoid them.

Avoid the use of indefinite qualifiers such as “only,” “just,” “merely,” “many,” “few,” or “seldom.”

Whenever possible, statements should be in simple sentences rather than complex or compound sentences. Avoid statements that contain “if” or “because” clauses.

Use vocabulary that the respondents can easily understand.

Avoid the use of negatives (e.g., “not,” “none,” “never”).

Table 6-2 presents a sample questionnaire, created by Jennifer Balogh at Intelliphonics, that you can give to subjects at the very end of an in-person user testing session.

| Statement | (1) Strongly Disagree | (2) Disagree | (3) Somewhat Disagree | (4) Neutral | (5) Somewhat Agree | (6) Agree | (7) Strongly Agree |
|---|---|---|---|---|---|---|---|
| The system is easy to use. | | | | | | | |
| I like the flow of the video. | | | | | | | |
| The system understands what I say. | | | | | | | |
| Sometimes the advice seems irrelevant. | | | | | | | |
| It is fun to play around with the system. | | | | | | | |
| The idea of talking to a video strikes me as strange. | | | | | | | |
| The system is confusing. | | | | | | | |
| I find the system entertaining. | | | | | | | |
| The video is too choppy. | | | | | | | |
| The conversation feels very canned to me. | | | | | | | |
| I am happy with the advice offered. | | | | | | | |
| I will try to avoid using this system in the future. | | | | | | | |
| It feels like I am having a conversation with someone. | | | | | | | |
| I like the idea of being able to interact like this. | | | | | | | |
| I would be happy to use the system again and again. | | | | | | | |

The questionnaire consisted of questions that probed seven different dimensions of the application (labeled categories): accuracy, concept, advice offered (content), ease of use, authenticity of the conversation, likability, and video flow. For each category, the questionnaire included both a positively phrased statement such as, “I like the flow of the video,” and a negatively phrased statement such as, “The video is too choppy.” Because likability was of interest in the study, three additional positively phrased statements covering this category were included in the questionnaire.

Open responses (to be asked verbally)

Thinking about the system overall, what did you like the most about it?

How do you think the system could be improved?

Note that negatively phrased statements (“The system is confusing”) are used along with positively phrased statements (“The system is easy to use”).

This is important so as not to bias users. As indicated previously, subjects are often more positive when being interviewed than they might be on their own. In addition, I find that asking negative questions causes people to stop and think a bit more about the answer, because it throws them off slightly and they need to reframe their thinking.

In this example, the questions are grouped into similar categories, with a score calculated for each aspect. To allow for direct comparisons between positive and negative statements, answers to negatively phrased statements are reversed such that a response of 1 is mapped onto a score of 7, 2 is mapped onto 6, and so on.
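The reversal described above, followed by a per-category average, can be sketched as follows. This is a minimal illustration, not the scoring script used in the study: the item-to-category mapping covers only four of the statements and is an assumption, and the responses are made up.

```python
from statistics import mean

SCALE_MAX = 7  # 7-point scale: 1 = strongly disagree, 7 = strongly agree

# Assumed mapping: statement -> (category, is_negatively_phrased).
ITEMS = {
    "The system is easy to use.": ("Ease of Use", False),
    "The system is confusing.": ("Ease of Use", True),
    "I like the flow of the video.": ("Video Flow", False),
    "The video is too choppy.": ("Video Flow", True),
}


def item_score(response, negative):
    """Reverse negatively phrased items (1 -> 7, 2 -> 6, ...) so higher is always better."""
    return SCALE_MAX + 1 - response if negative else response


def category_averages(all_responses):
    """Average the scores per category across all participants."""
    by_category = {}
    for responses in all_responses:
        for statement, response in responses.items():
            category, negative = ITEMS[statement]
            by_category.setdefault(category, []).append(item_score(response, negative))
    return {category: round(mean(scores), 2) for category, scores in by_category.items()}


# Made-up responses from two participants, for illustration only.
responses = [
    {"The system is easy to use.": 6, "The system is confusing.": 2,
     "I like the flow of the video.": 6, "The video is too choppy.": 1},
    {"The system is easy to use.": 5, "The system is confusing.": 3,
     "I like the flow of the video.": 7, "The video is too choppy.": 2},
]
print(category_averages(responses))
```

Keeping the reversal in one small function makes it harder to forget for a single item, which would silently drag a category average toward the middle of the scale.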

An average score for each of the seven categories was calculated. Here is how the categories (and scores) for this particular user test turned out (using similar questions):

| Category | Average score |
|---|---|
| Video Flow | 6.00 |
| Ease of Use | 5.67 |
| Advice Offered (Content) | 4.83 |
| Accuracy | 4.75 |
| Likability | 4.73 |
| Concept | 4.42 |
| Authenticity of Conversation | 3.75 |

These were summarized more qualitatively by Balogh and her assistant (Lalida Sritanyaratana) in the report itself. Here are some of the conclusions:

The highest rated area of the app was the flow of the video. In debriefing, participants had positive comments about the video. Participant 4 said, “I like the way the video flows.” Participant 5 said the “video was smooth.” The other high scoring area was ease of use. Some comments from debriefing corroborated this finding. Participants often said that the app was simple, clear, and easy to use.

The lowest scoring dimension of the application was the authenticity of the conversation. Participants realized that there were planned responses for all the various responses to the app. Participant 3 said that phrases like, “Sorry, I didn’t catch that,” sounded ingenuine to him. Participant 4 said, “It’s OK, but I know the answers are canned.” Similarly, Participant 6 said, “I know that it’s prerecorded.”

For general likability, most participants agreed that they “liked the app.” For this question, Participant 6 was neutral, Participants 3 and 4 somewhat agreed and Participants 1, 2, and 5 agreed. So, the majority of the responses to this question were positive. The average score of 5.33 (where 5 was “somewhat agree”) was the third highest score on any of the questions on the survey. However, some improvement is needed to reach a likability threshold of 5.5.

Things to Look For

While observing your subjects (or looking at videos of remote testing), it’s important to take note of not just what the users did or did not do in the app, but also their facial expressions and body language. Did they laugh in a place that was unexpected? Did they frown when asked a particular question by the app?

Here are some other things to look for when conducting usability testing of a VUI:

Do your users know when they can speak and when they can’t—that is, do they know that speech is allowed, and if so, do they know when they can begin speaking?

If you’re doing early-stage testing, before the speech recognition is in place, what do they say? How realistic is it that the system will be able to capture what they say?

Where are they confused and hesitant?

Task duration: how long do users need to complete the task?

If your user asks “What should I do now?” or “Should I press this button?”, don’t answer directly. Gently encourage the user to do whatever they might try when alone. If the app crashes or the user is becoming too frustrated, step in.

Early-Stage Testing

It’s never too early to begin testing your concepts. In addition to traditional methods used to test mobile devices, UX researchers of VUIs often have some other low-fidelity approaches.

Sample Dialogs

One of the first steps for early-stage testing of VUIs, after the initial concepts have been determined, is to create sample dialogs. As you might remember from Chapter 2, a sample dialog is a conversation between the VUI and the user. It’s not meant to be exhaustive, but it should showcase the most common paths as well as rarer but still important paths such as error recovery.

A sample dialog looks like a movie script, with the system and the user taking turns having a dialog.

Here’s a sample dialog for an app that allows the user (a child) to have a conversation with the big man himself, Santa Claus. This was written by Robbie Pickard while at Volio.

TALK TO SANTA CLAUS

SANTA CLAUS sits in a big red chair, facing the USER. The toy factory is behind him—it’s a busy scene.

SANTA CLAUS

Ho! Ho! Ho! Merry Christmas, little one! Welcome to the North Pole! What’s your name?

USER

Claudia.

SANTA CLAUS

That’s a lovely name. And how old are you?

USER

I’m seven.

SANTA CLAUS

Seven! That’s great. The big day isn’t too far away. Are you excited for Christmas?

USER

Yes!

SANTA CLAUS

So am I!

MRS. CLAUS brings Santa some milk and cookies.

MRS. CLAUS

Here you go, Santa; I brought you some milk and cookies.

SANTA CLAUS

Thank you, dear.

Santa holds up the two cookies for the user to see. One is chocolate chip and one is a sugar cookie with white frosting in the shape of a snowman.

(TO USER)

These look delicious...perhaps you can help me. Which one should I eat, the chocolate chip or the snowman?

USER

Eat both of them!

SANTA CLAUS (CHUCKLING)

My, oh my...that’s a lot of cookies, but as you wish!

Santa takes a big bite of both cookies in quick succession, washing it down with a glass of milk.

SANTA CLAUS (CONTINUES)

Well those were delicious! As you can tell, I love Mrs. Claus’s cookies!

(PATTING HIS BELLY)

You know, the elves and I are hard at work making toys for everyone. I gotta ask you: have you been naughty or nice this year?

USER

I’ve been nice!

SANTA CLAUS

Good for you! What’s one nice thing you did this year?

USER

I helped my Mommy clean my room!

SANTA CLAUS

Well, that is nice of you.

Santa gets out a scroll and begins writing on it.

SANTA CLAUS

I’m going to put you on the Nice List. So now for the big question: what do you want for Christmas?

USER

I want a skateboard and a backpack!

SANTA CLAUS (JOLLY AS EVER)

Well, I think that should fit on my sleigh! Don’t forget to leave me some cookies and milk!

THE END

When you have a few sample dialogs written up, do some “table reads” with other people. One person reads for the VUI, and one for the user. How does it sound? Is it repetitive? Stilted?

Do a table read with your developers, as well. You might have some items in your design (such as handling pronouns or referring to a user’s previous behavior) that will take more complex development, and it’s important to get buy-in from the outset and not surprise them late in the game.

One common finding from table reads is having too many transitions with the same wording; for example, saying “thank you” or “got it” three times in a row.

Mock-ups

As with any type of mobile design, mock-ups are a great way to test the look and feel of your app in the early stages. If you have an avatar, a mock-up is a good first step for getting users’ reactions to how the avatar looks, even if it’s not yet animated, and even if speech recognition is not yet implemented.

Wizard of Oz Testing

Pay no attention to that man behind the curtain!

—THE GREAT AND POWERFUL OZ

Wizard of Oz (WOz) testing occurs when the thing being tested does not yet actually exist, and a human is “behind the curtain” to give the illusion of a fully working system.

WOz testing comes early. According to Harris, WOz testing is a “part of the creative process of speech-system design, not a calibration instrument for an almost-finished model developed at arm’s length from the users.”[41]

To conduct WOz testing, you will need two researchers: the Wizard, and an assistant. The Wizard will generally be focused on listening to the user and enabling the next action, so this same person cannot be the one responsible for interviewing and note taking.

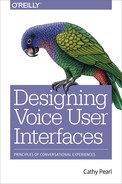

When I was at Nuance Communications, WOz testing was a common and low-cost way to test IVR systems before they were built. Because users were interacting over the phone, it was easy to simulate a real IVR system. We created a web-based tool that had a list of currently available prompts (including error prompts) in each step of the conversation, and the “wizard” would click on the appropriate prompt, which would then play out over the phone line.

We still had to design the flow and record the prompts, but no system code had to be written—just some simple HTML files (see Figure 6-1 for an example).
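To make the idea concrete, here is a minimal sketch of the data such a Wizard tool might hold: for each step of the conversation, the prompts (including an error prompt) the Wizard can choose from. The step names, prompt texts, and function names are all hypothetical, and the sketch returns text where the tool described above played recorded audio over the phone line.

```python
# Hypothetical dialog steps and prompt texts; a real tool would play recorded audio.
PROMPTS = {
    "greeting": {
        "main": "Welcome. What can I help you with?",
        "error": "Sorry, I didn't catch that. What can I help you with?",
    },
    "party_size": {
        "main": "How many people will you be traveling with?",
        "error": "I'm sorry, how many people?",
    },
}


def available_prompts(step):
    """List the prompt labels the Wizard may choose from at this step."""
    return sorted(PROMPTS[step])


def play_prompt(step, label, log):
    """Record the Wizard's choice and return the prompt text to play to the user."""
    text = PROMPTS[step][label]
    log.append((step, label))
    return text


session_log = []
print(available_prompts("party_size"))
print(play_prompt("party_size", "error", session_log))
print(session_log)
```

Logging each choice gives the assistant a record of where error prompts were needed, which can be compared with the notes taken during the session.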

Conducting WOz testing on a mobile app is trickier, because it’s much more difficult to control remotely. Nonetheless, gathering user data before you have a fully working prototype can be valuable. Here are a few ways to do this type of testing before you have a working app:

- Use texting

Although this will not simulate speech recognition, it can give you a picture of overall conversational flow and what types of things users might say. For this method, tell the user that they’ll be texting with your new bot. Unbeknownst to the user, however, a human is on the other end. This could be done very easily by simply texting the user the opening prompt, and going from there.

- Focus on an abbreviated task

In certain cases, you might simply be trying to learn what it is users think they can say in a given situation. For example, if you’re trying to design a virtual assistant, your app can ask the question “What can I help you with?” and collect user responses, even if that’s as far as it goes. No actual speech recognition is needed—just a mock-up of the screen and the prompt.

- Always error-out

Another technique that you can use before your speech recognition is fully in place is to error-out. For example, if your app asks the user, “How many people will you be traveling with?” on any response, go into your back-off behavior, which could be something like, “I’m sorry, how many people?” and provide a GUI option (such as buttons). You’ll still learn some valuable ways in which people interact with the system.

- Test the GUI, even if the VUI is not ready

Because mobile VUI apps often combine voice and GUI elements, you can still test a variety of GUI elements by using tools such as Axure and InVision. These allow you to create a simple working model of a mobile app with just mock-ups, in which the user can swipe and press buttons to cause certain behaviors to occur. Axure also allows your prototype to play audio.
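Of the techniques in the list above, the “always error-out” approach is simple enough to sketch in a few lines. The example below assumes a hypothetical `Turn` structure and made-up button labels; it treats every spoken response as unrecognized, saves what the user said for later analysis, and falls back to the back-off prompt with GUI buttons.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """What the app presents next: a spoken prompt plus optional GUI buttons."""
    prompt: str
    buttons: list = field(default_factory=list)


QUESTION = Turn("How many people will you be traveling with?")
# Hypothetical button labels for the back-off screen.
BACKOFF = Turn("I'm sorry, how many people?", buttons=["1", "2", "3", "4+"])

collected_utterances = []


def handle_spoken_response(utterance):
    """Treat every spoken response as unrecognized, but keep it for later analysis."""
    collected_utterances.append(utterance)
    return BACKOFF


print(QUESTION.prompt)
turn = handle_spoken_response("just me and my two kids")
print(turn.prompt, turn.buttons)
print(collected_utterances)
```

The collected utterances are the real payoff: they show how people naturally phrase their answers before any recognizer has been built to handle them.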

Although one advantage of WOz is the ability to test an early prototype, the other advantage is that it’s much cheaper to make design changes before you’ve written most of the code than later on in the development cycle.

Difference Between WOz and Usability Testing

There is one major difference between WOz and usability testing when working with VUIs, and that is recognition accuracy.

When conducting WOz on a GUI, it’s usually quite clear where the user has clicked the screen, or what part they’ve swiped or tapped. There’s no ambiguity; you know that as soon as that button or list is hooked up to real code, it will do what is expected.

With VUI and natural-language interfaces, the Wizard has to do some on-the-fly interpretation and determine whether that’s something we could realistically recognize. Sometimes it’s easy and what the user said is quite obviously in-grammar. But other times it’s more complex and the Wizard must make a snap judgment call to decide what the user really meant and whether it could be handled.

It’s better to be on the conservative side as a Wizard, but don’t worry too much—the testing will still do a good job capturing early issues.

Usability Testing

Usability testing refers to the stage when you have a working app. The system should have all of the features you want to test in a fully working state. If you need to test anything requiring personal information, such as a user profile or user search history, you will need to create a fake account. If the backend is still not hooked up, you can even test with hardcoded information.

Usability testing is not generally aimed at testing recognition accuracy; it’s meant to test the flow and ease of use. However, recognition issues can cause problems and prevent users from completing tasks. If possible, run a couple of sample subjects first to try to catch any major recognition issues and fix them before running the full study. That being said, recognition issues are still an important lesson to be learned. If there are particular places where your users have problems because they’re not being recognized, that is clearly an issue that needs addressing.

Traditional usability testing takes place in a lab, but that’s not the only way to run a successful usability test.

Remote Testing

Some researchers still frown on remote usability testing, but it can be extremely effective if you do it correctly. Advantages to remote testing include the following:

Easier to find people in your demographic because they do not need to be local

Generally cheaper because people do not need to be paid for traveling to your site

Better for testing “in the wild”—more similar to real-world scenarios

Users are often less self-conscious, because no one is staring at them as they engage in the test

Can be moderated or unmoderated—testing can occur without you being present

Moderated versus unmoderated

As just mentioned, remote testing allows for more flexibility in terms of the tester’s participation. With remote testing, it’s still possible to observe and interview subjects remotely by using video conferencing, or even the phone. Moderated testing means that as you watch participants, you can ask questions based on their actions, and probe for more details when their replies are sparse.

However, remote testing also allows the testing to occur when you are not present, which means testers can be free to perform the tasks at the time that’s best for them. Unmoderated remote testing can still be a very effective way to conduct usability studies.

Video recording

Because you obviously will not be there in person during a remote usability test, you need to come up with a way to record what’s going on with the user. When testing mobile apps with no audio component, using a screen recording app on a phone can do the trick, but it’s more difficult when the app is speaking and the user is speaking to the app.

Some companies offer a service wherein some of their users have their own webcams and are comfortable recording testing sessions. This can become expensive, however, and limit your demographics to people who own and are able to use a webcam or other recording device.

When I was at Volio, we were already recording users’ audio and video with our picture-in-picture feature on the iPad (users could see themselves in the corner, as they do in FaceTime), as shown in Figure 6-2. For remote testing, we simply left the recording on even when it was not the user’s turn to speak, to capture any facial expressions and other audio. (We of course obtained their permission to do so, and kept the data internal.)

This methodology proved to be remarkably effective. In addition to capturing what users were saying to the app during their turn, we were also observing their reactions to the content being presented to them. For example, when testing our stand-up comedian interactive app, while the comedian was telling a joke, we could observe the users’ expressions—were they laughing? Bored? Offended?

After users did several tasks, they completed a survey, which provided us with information about their subjective perceptions of the system. One downside to this type of remote testing is that you cannot follow up on participants’ responses in real time (unless of course you’re testing an IVR system), but the benefits can often outweigh the disadvantages.

Services for remote testing

When conducting usability tests, sometimes you need to get creative, especially if you’re at a small company with no real budget for user testing.

Another method for conducting remote user testing is to find a group of testers by using services such as Amazon’s Mechanical Turk. “Workers” sign up for Mechanical Turk, and “Requesters” create tasks that can be done online via a web page. Workers can choose which tasks they want to do, and requesters pay them. Figure 6-3 presents an example of what a Mechanical Turk worker would see when performing a task.[42]

Ann Thyme-Gobbel, currently VUI design lead at D+M Holdings, has successfully used Mechanical Turk to test comparisons of text-to-speech (TTS) versus recorded prompts. She asked Turkers to listen to various prompts that sounded happy, discouraging, disappointed, and so on, and then asked follow-up questions about trust and likability.

She feels remote testing has a variety of advantages over lab testing, and not just the fact that people are not required to come in to a lab. Remote testing can make it easier to simulate scenarios “in the wild.” An example she gives is asking a user to refill a prescription over the phone. You can ask the remote user to find a real pill bottle in their house and read off the prescription number. Having them walk around the house while on the phone and looking for it will provide a much more realistic example of what it’s like to do this task than to bring them into a lab and hand them a bottle.

When conducting user studies for their voice-enabled cooking app “Yes, Chef,” Conversant Labs made sure to test in people’s kitchens while they made real recipes, to ensure that the app would understand the way people really speak while cooking.

In addition, Thyme-Gobbel says that during remote testing:

Participants share more. If you are sitting in the room with the participant, they’re more likely to say, “You saw what happened,” than to describe what they thought happened in some situation.

Remember to pay your subjects well, even with outsourcing. Mechanical Turk can be a much more affordable method than recruiting people yourself, but that doesn’t mean you should not pay fairly. These methods also mean that your budget can be stretched further, so you can recruit more subjects. The rule of thumb of 5 to 10 usability subjects applies when the subjects are all in the appropriate demographic; if you have less control, you’ll need more subjects.

Lab Testing

Traditional usability testing is often done in a lab, with one-way mirrors and recording equipment. Recruiting subjects to do in-person testing can be very effective. Having recording equipment already set up is a great way to reliably record what’s needed. The pros of lab testing include having a dedicated space to perform user testing (particularly important when you need a quiet place to do VUI testing), and the ability to have permanent recording equipment set up, allowing the user to do a task without the tester sitting right next to them and possibly making them uncomfortable (assuming a one-way mirror).

The cons include the cost, both of having and maintaining the lab and of paying subjects to keep appointments and show up in person. Lab testing can also limit the demographic if the people you need are not available in your area.

There are of course variations in “lab” testing: you do not necessarily need a dedicated space, a particularly difficult thing to come by in a small company. A private quiet room can do just fine. Ideally, you will have a camera showing you what the user is seeing, so you do not need to sit too close to observe.

Guerrilla Testing

When you don’t have a budget for user testing, sometimes you need to simply go out into the world and ask people to try your app. Coffee shops, shopping malls, parks—any of these can be effective places to recruit subjects for on-the-fly testing. Be well armed with your mobile device, task definition, questions, and a reward. Homemade cookies, Starbucks gift cards, even stickers can be enough motivation for people to spend 5 to 15 minutes trying out your app. Remind them they’re helping design a better app and that their feedback will help others.

Performance Measures

Collecting a combination of objective and subjective measurements is recommended. This combination is important because one measurement does not always give the full story. For example, perhaps the user completed the task successfully, but did not like something about it. Or, they might technically not be successful in their task, but not be bothered.

As Balogh explains, “you can find that subjects failed, but they didn’t mind.” I noticed this during Volio usability testing: in some cases, users’ speech would not be correctly recognized, but because the error handling was relatively pain-free, users would not mark the app more negatively because of it. If I had only been marking down error counts, the results would have been different.

For objective measures, remember to tally observations and not just rely on impressions. Balogh says:

Sometimes you will find that one subject made an impression on you, but when you actually count the instances of a behavior, the conclusion is different from what this one person experienced.

She cites a study that found five key measurements for testing VUI systems: accuracy and speed, cognitive effort, transparency/confusion, friendliness, and voice.[43]

Decide beforehand what “task completion” means for each task. If the user gets partway through a task but quits before it’s finished, it could still be considered complete. For example, if the user is looking up their nearest pharmacy, but fails to click on the link to a map, it is still successful—perhaps the user only wanted to know the hours the pharmacy was open. If the user does end the task (voluntarily or not), follow up to ask if they believe they accomplished what was needed and, if not, why not.

Tracking the number and type of errors is very important in a VUI system. A rejection error, for example, is different from a mismatch (in which the user was incorrectly recognized as saying something else). In addition, it’s important to note what happened after the error: did the user recover? How long did it take?
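
One way to keep this kind of tally is a small script over a hand-coded error log. The following is a minimal sketch in Python, not a prescribed tool: the record fields (kind, recovered, seconds_to_recover) and the sample entries are hypothetical and would need to match whatever you actually note down during sessions.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class ErrorEvent:
    """One recognition error noted during a session (hypothetical schema)."""
    participant: str
    kind: str                                   # e.g. "rejection" or "mismatch"
    recovered: bool                             # did the user get back on track?
    seconds_to_recover: Optional[float] = None  # None if the user never recovered


def summarize_errors(events):
    """Count errors by type and report how often and how quickly users recovered."""
    counts = Counter(e.kind for e in events)
    recovered = [e for e in events if e.recovered]
    recovery_rate = len(recovered) / len(events) if events else 0.0
    times = [e.seconds_to_recover for e in recovered
             if e.seconds_to_recover is not None]
    return {
        "counts": dict(counts),
        "recovery_rate": recovery_rate,
        "mean_recovery_seconds": mean(times) if times else None,
    }


# Made-up sample log for illustration only.
events = [
    ErrorEvent("P1", "rejection", True, 4.0),
    ErrorEvent("P1", "mismatch", True, 10.0),
    ErrorEvent("P2", "rejection", False),
    ErrorEvent("P3", "mismatch", True, 7.0),
]
summary = summarize_errors(events)
print(summary)
```

Keeping the error type separate from the recovery outcome makes it possible to see, for instance, whether mismatches are rarer than rejections but harder to recover from.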

Next Steps

After you have run your subjects, tally up their responses to questions, their task completion rates, and number and types of errors.
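
As a rough illustration of this tallying step, the sketch below computes a completion rate and a mean survey rating per task, and lists the cases in which a subject failed a task but still rated it well. The task names, the 1-to-5 rating scale, the threshold of 4, and all of the sample rows are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean

# (participant, task, completed, satisfaction rating 1-5); made-up data.
results = [
    ("P1", "find_pharmacy", True, 4),
    ("P2", "find_pharmacy", False, 4),
    ("P3", "find_pharmacy", True, 2),
    ("P1", "refill_prescription", True, 5),
    ("P2", "refill_prescription", True, 3),
    ("P3", "refill_prescription", False, 1),
]


def summarize_tasks(rows):
    """Return the completion rate and mean satisfaction rating for each task."""
    by_task = defaultdict(list)
    for _participant, task, completed, rating in rows:
        by_task[task].append((completed, rating))
    return {
        task: {
            "completion_rate": sum(c for c, _ in outcomes) / len(outcomes),
            "mean_satisfaction": mean(r for _, r in outcomes),
        }
        for task, outcomes in by_task.items()
    }


def failed_but_satisfied(rows, threshold=4):
    """List (participant, task) pairs where the task failed but the rating was high."""
    return [(p, t) for p, t, completed, rating in rows
            if not completed and rating >= threshold]


task_summary = summarize_tasks(results)
mismatches = failed_but_satisfied(results)
print(task_summary)
print(mismatches)
```

Looking at the objective and subjective numbers side by side is what surfaces the "failed, but didn’t mind" cases that a completion rate alone would hide.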

Identify pain points. Where did the users struggle? Did they know when it was OK to speak? Where did they become lost—or impatient? When things did go wrong, were they able to recover successfully?

Write up your observations, and make a list of recommendations. Rank the issues by severity and share this with the entire team to create a plan on when and how you’ll be able to fix them.
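
If the list of issues is long, even a very simple sort can help when preparing the write-up. The sketch below orders issues by severity and then by how many subjects ran into them. The 1-to-4 severity scale, the field names, and the example issues are hypothetical; use whatever scale your team already agrees on.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    severity: int               # assumed scale: 1 = cosmetic, 4 = blocks the task
    participants_affected: int  # how many subjects hit the issue


def rank_issues(issues):
    """Most severe first; ties broken by the number of subjects affected."""
    return sorted(issues,
                  key=lambda i: (i.severity, i.participants_affected),
                  reverse=True)


# Made-up issues for illustration only.
issues = [
    Issue("Prompt is too long", 2, 6),
    Issue("No clear way to recover after a rejection", 4, 3),
    Issue("Users did not know when they could speak", 4, 5),
    Issue("Pharmacy names are often mismatched", 3, 2),
]

ranked = rank_issues(issues)
for issue in ranked:
    print(issue.severity, issue.participants_affected, issue.description)
```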

Testing VUIs in Cars, Devices, and Robots

When testing a VUI in contexts other than an IVR or mobile app, some differences apply.

Cars

Testing in the car is challenging. Large car companies and some universities have driving simulators, but for smaller companies it can be difficult to fund such equipment. Lower-cost options can include a mock-up of a car, with a monitor displaying a driving simulator, along with a steering wheel and holder for a phone or tablet for the driving app itself.

Lisa Falkson, lead voice UX designer at electric car company NEXTEV, often runs car usability testing in a real car, but while the car is stopped in a parking lot. This allows her to gain valuable information about how the user interacts with the system, their level of distraction, and what they can and cannot accomplish—in a much safer environment than if the user were actually driving.

Karen Kaushansky, director of experience at self-driving car company Zoox, reminds us that you don’t need to have a fully working prototype to do initial experience testing. While at Microsoft, working on the Ford SYNC system (which allowed users to push a button and ask for their commute time, among other features), she used a low-fidelity method to do WOz testing.

The car did not yet have its push-to-talk button, so they attached a foam one to the steering wheel (Figure 6-4). While sitting in the parking lot, the Wizard sat in the backseat with a laptop, playing prompts that would, in the future, come from the car! Subjects were quickly caught up in the experience, allowing the team to do useful initial testing to discover things such as how people would ask for information about traffic.

Devices and Robots

Kaushansky talks about ways to test other devices, as well, not just cars, in the early stages, which she calls “experience prototyping.” Suppose that you’re building a smartwatch, but it will be a while before the first model exists. Even before that happens, you can test what it would be like to have such a device—strap one on your wrist and walk around the mall, talking to it. As Kaushansky says, “be Dick Tracy and put your mouth near your watch and say, ‘What’s the weather tomorrow?’”

As Tom Chi said in his TED talk in 2012 about rapid prototyping Google Glass, “Doing is the best kind of thinking.” In other words, you can think long and hard about how people might interact with your device, but nothing beats having people actually interact with it (even in a crude prototype form).

If you’re testing a device such as an Amazon Echo–type system, you can rig something up to do WOz testing, because all you need to do is play back prompts through the device. Imagine a scenario in which the user is in a room with the device, and the Wizard sits in the other room at the computer, ready to play prompts in response to the user’s queries.

You can even use WOz methodology for testing robots. Ellen Francik, lead UX designer at Mayfield Robotics, needed to test how people reacted to the robot’s responses to user input. Before the robot even had a microphone, she was able to test this scenario with a WOz setup.

The Wizard would use an iPad app, which was connected to the robot via Bluetooth, to control the robot’s movements, sounds, and animations. When the user said a command to the robot or asked a question, the Wizard would play the appropriate sound and move the robot. In this way, Francik could test whether users understood the robot’s (nonverbal) responses. Did the user know when the robot “said” yes? Could the user tell when it indicated a “got it!” response?

Because they were testing in the environment in which the robot would actually be used—in people’s homes—they did not try to hide the Wizard. Francik said this was not an impediment; people were still game to interact with the robot, and the sessions proved highly valuable.

Conclusion

User testing VUI devices has many things in common with any kind of user testing: test through as many stages of development as possible; carefully choose your demographics; design your tasks to exercise the right features; don’t lead the subject; and ask the right questions.

It can be more difficult to test VUI apps because traditional methods (user talking out loud as they test, screen capture software to record their actions) are often not appropriate. However, there are workarounds, such as testing before speech recognition has been implemented and having a Wizard play prompts, or doing a text-only version. Remember that it’s important to test whether users can complete tasks in a satisfactory way, not just whether a cool feature works. Just because you can say “book me a taxi and then make a reservation and then send my mom flowers” doesn’t mean any users actually will say it.

Data collection, as mentioned in Chapter 5, is an essential part of building a successful VUI. Collecting data during the testing phases is a great way to enhance your VUI early on. Learn how users talk and interact with your system via testing, and build your VUI accordingly.

[34] Weinberger, M. (2016). “Why Microsoft Doesn’t Want Its Digital Assistant, Cortana, to Sound Too Human.” Retrieved from http://businessinsider.com/.

[35] Cohen, M., Giangola, J., and Balogh, J. Voice User Interface Design. (Boston, MA: Addison-Wesley, 2004), 6, 8, 75, 218, 247-248, 250-251, 259.

[36] Harris, R. Voice Interaction Design: Crafting the New Conversational Speech Systems. (San Francisco, CA: Elsevier, 2005), 489.

[37] Harris, Voice Interaction Design, 474.

[38] Nielsen, J. (2012). “How Many Test Users in a Usability Study?” Retrieved from https://www.nngroup.com/.

[39] Harris, R. Voice Interaction Design: Crafting the New Conversational Speech Systems. (San Francisco, CA: Elsevier, 2005), 489.

[40] Crocker, L. and Algina, J. Introduction to Classical and Modern Test Theory. (Mason, Ohio: Cengage Learning, 2008), 80.

[41] Harris, R. Voice Interaction Design: Crafting the New Conversational Speech Systems. (San Francisco, CA: Elsevier, 2005).

[42] This example is taken from Amazon’s “How to Create a Project” documentation (http://docs.aws.amazon.com/AWSMechTurk/latest/RequesterUI/CreatingaHITTemplate.html).

[43] Larsen, L.B. (2003). “Assessment of spoken dialogue system usability: What are we really measuring?” Eurospeech, Geneva.