Chapter 13

Shadow Mapping

Shadows indicate to the observer where light originates and helps convey the relative locations of objects in a scene. This chapter provides an introduction to the basic shadow mapping algorithm, which is a popular method for modeling dynamic shadows in games and 3D applications. For an introductory book, we focus only on the basic shadow mapping algorithm; more sophisticated shadowing techniques, such as cascading shadow maps [Engel06] which give better quality results, are built by extending the basic shadow mapping algorithm. Before we can discuss the shadow mapping algorithm, we must introduce two techniques the shadow mapping algorithm utilizes: render to texture and projective texturing.

Objectives:

![]() To understand the basic shadow mapping algorithm.

To understand the basic shadow mapping algorithm.

![]() To discover how to render to a texture.

To discover how to render to a texture.

![]() To learn how projective texturing works.

To learn how projective texturing works.

![]() To find out about orthographic projections.

To find out about orthographic projections.

13.1 Render to Texture

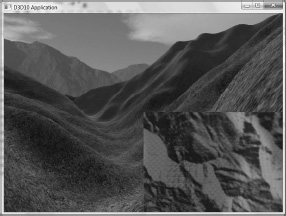

Thus far, we have been rendering to the swap chain’s back buffer surface; that is to say, the back buffer has been the render target. However, the back buffer need not always be the render target; we can render to a different texture. For example, Figure 13.1 shows an application of rendering to texture to implement a radar map. In this section, we show how to update a texture at run time by rendering into it every frame; after we render to this texture, we can bind the texture as a shader input and map it onto geometry.

Figure 13.1: The scene is rendered to a texture from a bird’s-eye view. Then, when we render the scene as normal from the player’s eye, we map the texture onto a quad in the bottom-right corner of the screen to display the radar map (i.e., the scene from the bird’s-eye view).

To facilitate render to texture, we implement a class called DrawableTex2D. This class encapsulates the resource views to two textures: a color map, which we bind as the render target, and a depth map, which we bind as the depth/stencil buffer. We incorporate a depth buffer since, just as we want to do depth buffering when we render the scene to the back buffer, we will want to do depth buffering when we render a scene to a texture. The class definition is given below:

class DrawableTex2D

{

public:

DrawableTex2D();

~DrawableTex2D();

void init(ID3D10Device* device, UINT width, UINT height,

bool hasColorMap, DXGI_FORMAT colorFormat);

ID3D10ShaderResourceView* colorMap();

ID3D10ShaderResourceView* depthMap();

void begin();

void end();

private:

DrawableTex2D(const DrawableTex2D& rhs);

DrawableTex2D& operator=(const DrawableTex2D& rhs);

void buildDepthMap();

void buildColorMap();

private:

UINT mWidth;

UINT mHeight;

DXGI_FORMAT mColorMapFormat;

ID3D10Device* md3dDevice;

ID3D10ShaderResourceView* mColorMapSRV;

ID3D10RenderTargetView* mColorMapRTV;

ID3D10ShaderResourceView* mDepthMapSRV;

ID3D10DepthStencilView* mDepthMapDSV;

D3D10_VIEWPORT mViewport;

};

13.1.1 Constructor/Destructor/Accessors

The following implementations are trivial, but we include them for completeness:

DrawableTex2D::DrawableTex2D()

: mWidth(0), mHeight(0), mColorMapFormat(DXGI_FORMAT_UNKNOWN),

md3dDevice(0), mColorMapSRV(0), mColorMapRTV(0), mDepthMapSRV(0),

mDepthMapDSV(0)

{

ZeroMemory(&mViewport, sizeof(D3D10_VIEWPORT));

}

DrawableTex2D::~DrawableTex2D()

{

ReleaseCOM(mColorMapSRV);

ReleaseCOM(mColorMapRTV);

ReleaseCOM(mDepthMapSRV);

ReleaseCOM(mDepthMapDSV);

}

ID3D10ShaderResourceView* DrawableTex2D::colorMap()

{

return mColorMapSRV;

}

ID3D10ShaderResourceView* DrawableTex2D::depthMap()

{

return mDepthMapSRV;

}

The DrawableTex2D::colorMap and DrawableTex2D::depthMap methods return shader resource views, so that we can bind the respective texture to a shader stage in order to sample the texture in a shader.

13.1.2 DrawableTex2D::init

The DrawableTex2D::init method is implemented like so:

void DrawableTex2D::init(ID3D10Device* device, UINT width, UINT height,

bool hasColorMap, DXGI_FORMAT colorFormat)

{

mWidth = width;

mHeight = height;

mColorMapFormat = colorFormat;

md3dDevice = device;

buildDepthMap();

// shadow maps don't need color maps, for example

if( hasColorMap )

buildColorMap();

mViewport.TopLeftX = 0;

mViewport.TopLeftY = 0;

mViewport.Width = width;

mViewport.Height = height;

mViewport.MinDepth = 0.0f;

mViewport.MaxDepth = 1.0f;

}

The width and height parameters specify the dimensions of the color and depth/stencil buffers. The colorFormat parameter specifies the pixel format of the color buffer. The hasColorMap parameter indicates whether a DrawableTex2D instance has a color map. For example, to implement shadow mapping, we do not need a color map — we only need a depth buffer. The viewport indicates the subrectangle of the render target (and depth/stencil buffer) to draw to. In our implementation, we draw to the entire render target (and depth/stencil buffer) by having the viewport dimensions match the buffer dimensions.

13.1.3 DrawableTex2D::buildDepthMap

The following code builds the depth map. We specify the bind flags

texDesc.BindFlags = D3D10_BIND_DEPTH_STENCIL |

D3D10_BIND_SHADER_RESOURCE;

so that we can bind the texture as a depth/stencil buffer and as a shader input. Note that the texture format is typeless (DXGI_FORMAT_R32_TYPELESS) when we create the texture. The format needs to be typeless because the format depends on the view. The shader resource view uses the format DXGI_FORMAT_R32_FLOAT and the depth/stencil view uses the format DXGI_FORMAT_D32_FLOAT.

void DrawableTex2D::buildDepthMap()

{

ID3D10Texture2D* depthMap = 0;

D3D10_TEXTURE2D_DESC texDesc;

texDesc.Width = mWidth;

texDesc.Height = mHeight;

texDesc.MipLevels = 1;

texDesc.ArraySize = 1;

texDesc.Format = DXGI_FORMAT_R32_TYPELESS;

texDesc.SampleDesc.Count = 1;

texDesc.SampleDesc.Quality = 0;

texDesc.Usage = D3D10_USAGE_DEFAULT;

texDesc.BindFlags = D3D10_BIND_DEPTH_STENCIL |

D3D10_BIND_SHADER_RESOURCE;

texDesc.CPUAccessFlags = 0;

texDesc.MiscFlags = 0;

HR(md3dDevice->CreateTexture2D(&texDesc, 0, &depthMap));

D3D10_DEPTH_STENCIL_VIEW_DESC dsvDesc;

dsvDesc.Format = DXGI_FORMAT_D32_FLOAT;

dsvDesc.ViewDimension = D3D10_DSV_DIMENSION_TEXTURE2D;

dsvDesc.Texture2D.MipSlice = 0;

HR(md3dDevice->CreateDepthStencilView(depthMap,

&dsvDesc, &mDepthMapDSV));

D3D10_SHADER_RESOURCE_VIEW_DESC srvDesc;

srvDesc.Format = DXGI_FORMAT_R32_FLOAT;

srvDesc.ViewDimension = D3D10_SRV_DIMENSION_TEXTURE2D;

srvDesc.Texture2D.MipLevels = texDesc.MipLevels;

srvDesc.Texture2D.MostDetailedMip = 0;

HR(md3dDevice->CreateShaderResourceView(depthMap,

&srvDesc, &mDepthMapSRV));

// View saves a reference to the texture so we can

// release our reference.

ReleaseCOM(depthMap);

}

You might wonder why the depth buffer should be allowed to be bound to a shader input. It seems the depth buffer would just be needed for the depth buffer algorithm to accurately generate the color buffer, and that we would only want to bind the color buffer as a shader input. In actuality, the depth buffer is precisely the texture we want to project onto geometry to implement the shadow mapping algorithm. Thus, it is useful to also allow the depth buffer to be bound as a shader input.

13.1.4 DrawableTex2D::buildColorMap

The following code builds the color map. It is analogous to the DrawableTex2D::buildDepthMap method. However, one difference is that we specify the flag:

texDesc.MiscFlags = D3D10_RESOURCE_MISC_GENERATE_MIPS;

When this flag is specified, it allows the hardware to generate the lower mipmap levels (see §13.1.6).

void DrawableTex2D::buildColorMap()

{

ID3D10Texture2D* colorMap = 0;

D3D10_TEXTURE2D_DESC

texDesc;

texDesc.Width = mWidth;

texDesc.Height = mHeight;

texDesc.MipLevels = 0;

texDesc.ArraySize = 1;

texDesc.Format = mColorMapFormat;

texDesc.SampleDesc.Count = 1;

texDesc.SampleDesc.Quality = 0;

texDesc.Usage = D3D10_USAGE_DEFAULT;

texDesc.BindFlags = D3D10_BIND_RENDER_TARGET |

D3D10_BIND_SHADER_RESOURCE;

texDesc.CPUAccessFlags = 0;

texDesc.MiscFlags = D3D10_RESOURCE_MISC_GENERATE_MIPS;

HR(md3dDevice->CreateTexture2D(&texDesc, 0, &colorMap));

// Null description means to create a view to all mipmap levels

// using the format the texture was created with.

HR(md3dDevice->CreateRenderTargetView(colorMap, 0, &mColorMapRTV));

HR(md3dDevice->CreateShaderResourceView(colorMap, 0, &mColorMapSRV));

// View saves a reference to the texture so we can

// release our reference.

ReleaseCOM(colorMap);

}

13.1.5 DrawableTex2D::begin

The structure for drawing to a texture is as follows:

DrawableTex2D::begin();

// Draw geometry here to texture

DrawableTex2D::end();

That is, the DrawableTex2D::begin method needs to be called before we can draw to the texture, and the DrawableTex2D::end method should be called when we are done drawing to the texture.

The DrawableTex2D::begin method changes the render target of the output merger stage from the back buffer to the texture. It also sets the new viewport that corresponds to the texture. Finally, it clears the color map (if it exists) and the depth map to default values.

void DrawableTex2D::begin()

{

ID3D10RenderTargetView* renderTargets[1] = {mColorMapRTV};

md3dDevice->OMSetRenderTargets(1, renderTargets, mDepthMapDSV);

md3dDevice->RSSetViewports(1, &mViewport);

// only clear if we actually created a color map.

if( mColorMapRTV )

md3dDevice->ClearRenderTargetView(mColorMapRTV, BLACK);

md3dDevice->ClearDepthStencilView(mDepthMapDSV,

D3D10_CLEAR_DEPTH, 1.0f, 0);

}

If a color map does not exist, then mColorMapRTV will be null, and we are binding a null render target. This is fine, and it disables any pixels from being written since there is no render target for them to be written to. Depth values are still written to the depth buffer, however. It might sound odd to draw to the depth buffer but not the color buffer, but as we will soon see, for shadow mapping we only need to draw to the depth buffer.

Note: When binding a null render target, you do not pass in null for the render target array parameter. Instead you must pass in a valid array of ID3D10RenderTargetView pointers, where the elements are null. That is, note the distinction between:

// Incorrect

md3dDevice->OMSetRenderTargets(1, 0, mDepthMapDSV);

// Correct

ID3D10RenderTargetView* renderTargets[1] = {0};

md3dDevice->OMSetRenderTargets(1, renderTargets, mDepthMapDSV);

13.1.6 DrawableTex2D::end

As mentioned, when we render to texture to generate a color map, we usually want to map the generated texture onto some geometry, as was illustrated in Figure 13.1. However, since we had Direct3D generate the texture at run time, we do not have the lower mipmap levels. We can have Direct3D generate the lower mipmap levels using the ID3D10Device::GenerateMips method. In order to call this method on a shader resource view, the original texture must have been created with the D3D10_RESOURCE_MISC_GENERATE_MIPS flag.

void DrawableTex2D::end()

{

// After we have drawn to the color map, have the hardware generate

// the lower mipmap levels.

if( mColorMapSRV )

md3dDevice->GenerateMips(mColorMapSRV);

}

As mentioned, DrawableTex2D::end should be called when we are done rendering to the texture. This is because we do not want to generate the mipmap levels until after we are done drawing to the texture for the current frame.

13.2 Orthographic Projections

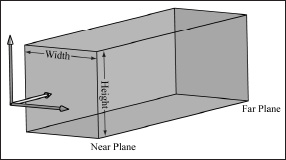

Thus far in this book we have been using a perspective projection. The key property of perspective projection is that objects more distant from the camera are perceived as smaller. This agrees with how we perceive things in real life. Another type of projection is an orthographic projection. Such projections are primarily used in 3D science or engineering applications, where it is desirable to have parallel lines remain parallel after projection. However, orthographic projections will enable us to model shadows that parallel lights generate. With an orthographic projection, the viewing volume is a box axis-aligned with the view space with width w, height h, near plane n, and far plane f that looks down the positive z-axis of view space (see Figure 13.2). These numbers, defined relative to the view space coordinate system, define the box view volume.

Figure 13.2: The orthographic viewing volume is a box that is axis-aligned with the view coordinate system.

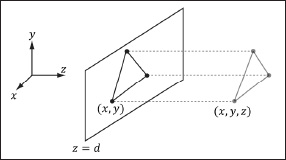

With an orthographic projection, the lines of projection are parallel to the view space z-axis (Figure 13.3). And we see that the 2D projection of a vertex (x, y, z) is just (x, y).

Figure 13.3: The orthographic projection of points onto the projection plane. The lines of projection are parallel to the view space z-axis with an orthographic projection.

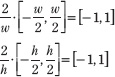

As with perspective projection, we want to maintain relative depth information, and we want normalized device coordinates (NDC). To transform the view volume from view space to NDC space, we need to rescale and shift to map the view space view volume ![]() to the NDC space view volume [–1, 1]×[–1, 1]×[0, 1]. We can determine this mapping by working coordinate-by-coordinate. For the first two coordinates, it is easy to see that the intervals differ only by a scaling factor:

to the NDC space view volume [–1, 1]×[–1, 1]×[0, 1]. We can determine this mapping by working coordinate-by-coordinate. For the first two coordinates, it is easy to see that the intervals differ only by a scaling factor:

For the third coordinate, we need to map [n, f] → [0, 1]. We assume the mapping takes the form g(z) = az + b (i.e., a scaling and translation). We have the conditions g(n) = 0 and g(f) = 1, which allow us to solve for a and b:

an + b = 0

af + b = 1

The first equation implies b = –an. Plugging this into the second equation we get:

And so:

![]()

Thus,

![]()

The reader may wish to graph g(z) over the domain [n, f ] for various n and f such that f > n.

Finally, the orthographic transformation from view space coordinates (x, y, z) to NDC space coordinates (x′, y′, z′) is:

Or in terms of matrices:

The 4 × 4 matrix in the above equation is the orthographic projection matrix.

Recall that with the perspective projection transform, we had to split it into two parts: a linear part described by the projection matrix, and a nonlinear part described by the divide by w. In contrast, the orthographic projection transformation is completely linear — there is no divide by w. Multiplying by the orthographic projection matrix takes us directly into NDC coordinates.

13.3 Projective Texture Coordinates

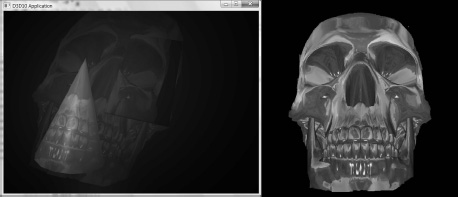

Projective texturing is so-called because it allows us to project a texture onto arbitrary geometry, much like a slide projector. Figure 13.4 shows an example of projective texturing.

Figure 13.4: The skull texture (right) is projected onto the scene geometry (left).

Projective texturing can be useful on its own for modeling slide projectors, but as we will see in §13.4, it is also used as an intermediate step for shadow mapping.

The key to projective texturing is to generate texture coordinates for each pixel in such a way that the applied texture looks like it has been projected onto the geometry. We will call such generated texture coordinates projective texture coordinates.

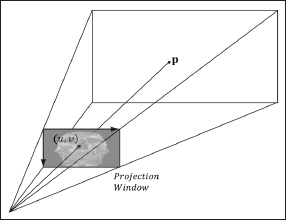

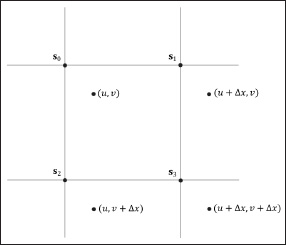

From Figure 13.5, we see that the texture coordinates (u, v) identify the texel that should be projected onto the 3D point p. But the coordinates (u, v) precisely identify the projection of p on the projection window, relative to a texture space coordinate system on the projection window. So the strategy of generating projective texture coordinates is as follows:

1. Project the point p onto the light’s projection window and transform the coordinates to NDC space.

2. Transform the projected coordinates from NDC space to texture space, thereby effectively turning them into texture coordinates.

Step 1 can be implemented by thinking of the light projector as a camera. We define a view matrix V and projection matrix P for the light projector. Together, these matrices essentially define the position, orientation, and frustum of the light projector in the world. The matrix V transforms coordinates from world space to the coordinate system of the light projector. Once the coordinates are relative to the light coordinate system, the projection matrix, along with the homogeneous divide, are used to project the vertices onto the projection plane of the light. Recall from §5.6.3 that after the homogeneous divide, the coordinates are in NDC space.

Step 2 is accomplished by transforming from NDC space to texture space via the following change of coordinate transformation:

u = 0.5x + 0.5

v = –0.5y + 0.5

Here, u, v ∈[0, 1] provided x, y∈[–1, 1]. We scale the y-coordinate by a negative to invert the axis because the positive y-axis in NDC coordinates goes in the direction opposite to the positive v-axis in texture coordinates.

Figure 13.5: The texel identified by the coordinates (u, v) relative to the texture space on the projection window is projected onto the point p by following the line of sight from the light origin to the point p.

13.3.1 Code Implementation

The code for generating projective texture coordinates is shown below:

struct VS_OUT

{

float4 posH : SV_POSITION;

float3 posW : POSITION;

float3 tangentW : TANGENT;

float3 normalW : NORMAL;

float2 texC : TEXCOORD0;

float4 projTexC : TEXCOORD1;

};

VS_OUT VS(VS_IN vIn)

{

VS_OUT vOut;

[…]

// Transform to light's projective space.

vOut.projTexC = mul(float4(vIn.posL, 1.0f), gLightWVP);

[…]

return vOut;

}

float4 PS(VS_OUT pIn) : SV_Target

{

// Complete projection by doing division by w.

projTexC.xyz /= projTexC.w;

// Transform from NDC space to texture space.

projTexC.x = +0.5f*projTexC.x + 0.5f;

projTexC.y = -0.5f*projTexC.y + 0.5f;

// Depth in NDC space.

float depth = projTexC.z;

// Sample the texture using the projective tex-coords.

float4 c = gTextureMap.Sample(gSampler, projTexC.xy);

[…]

}

13.3.2 Points Outside the Frustum

In the rendering pipeline, geometry outside the frustum is clipped. However, when we generate projective texture coordinates by projecting the geometry from the point of view of the light projector, no clipping is done — we simply project vertices. Consequently, geometry outside the projector’s frustum receives projective texture coordinates outside the [0, 1] range. Projective texture coordinates outside the [0, 1] range function just like normal texture coordinates outside the [0, 1] range based on the enabled address mode (see §7.8) used when sampling the texture.

We do not want to projectively texture any geometry outside the projector’s frustum because it does not make sense (such geometry receives no light from the projector). To handle this, we can use conditional statements to check if a projected point is outside the projector’s frustum in NDC coordinates:

if( projTexC.x < -1.0f || projTexC.x > 1.0f ||

projTexC.y < -1.0f || projTexC.y > 1.0f ||

projTexC.z < 0.0f )

// then not in light volume

Another strategy is to associate a spotlight (see §6.10) with the projector so that anything outside the spotlight’s field of view cone is not lit (i.e., the surface receives no projected light). The advantage of using a spotlight is that the light intensity from the projector is strongest at the center of the spotlight cone, and can smoothly fade out as the angle ϕ between –L and d increases (where L is the light vector to the surface point and d is the direction of the spotlight).

13.3.3 Orthographic Projections

So far we have illustrated projective texturing using perspective projections (frustum shaped volumes). However, instead of using a perspective projection for the projection process, we could have used an orthographic projection. In this case, the texture is projected in the direction of the z-axis of the light through a box.

Everything we have talked about with projective texture coordinates also applies when using an orthographic projection, except for a couple of things. First, with an orthographic projection, the spotlight strategy used to handle points outside the projector’s volume does not work. This is because a spotlight cone approximates the volume of a frustum to some degree, but it does not approximate a box. However, we can still use conditional statements to check if a projected point is outside the projector’s volume. This is because an orthographic projection still generates NDC coordinates and a point (x, y, z) is inside the volume if and only if:

–1 ≤ x ≤ 1

–1 ≤ y ≤ 1

0 ≤ z ≤ 1

Second, with an orthographic projection, we do not need to do the divide by w; that is, we do not need the line:

// Complete projection by doing division by w.

projTexC.xyz /= projTexC.w;

This is because after an orthographic projection, the coordinates are already in NDC space. This is faster, because it avoids the per-pixel division required for perspective projection. On the other hand, leaving in the division does not hurt because it divides by 1 (an orthographic projection does not change the w-coordinate, so w will be 1). If we leave the division by w in the shader code, then the shader code works for both perspective and orthographic projections uniformly. The trade-off for this uniformity is that you do a superfluous division with an orthographic projection.

13.4 Shadow Mapping

13.4.1 Algorithm Description

The idea of the shadow mapping algorithm is to render to texture the scene depth from the viewpoint of the light into a depth buffer called a shadow map. After this is done, the shadow map will contain the depth values of all the visible pixels from the perspective of the light. (Pixels occluded by other pixels will not be in the shadow map because they will fail the depth test and either be overwritten or never written.)

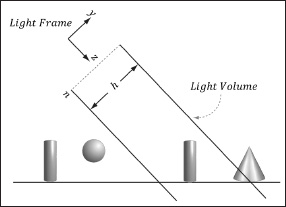

To render the scene from the viewpoint of the light, we need to define a light view matrix that transforms coordinates from world space to the space of the light and a light projection matrix, which describes the volume light emits through in the world. This can be either a frustum volume (perspective projection) or box volume (orthographic projection). A frustum light volume can be used to model spotlights by embedding the spotlight cone inside the frustum. A box light volume can be used to model parallel lights. However, the parallel light is now bounded and only passes through the box volume; therefore, it may only strike a subset of the scene (see Figure 13.6).

Figure 13.6: Parallel light rays travel through the light volume, so only a subset of the scene inside the volume receives light.

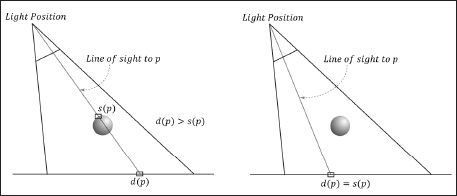

Once we have built the shadow map, we render the scene as normal from the perspective of the camera. For each pixel p rendered, we also compute its depth from the light source, which we denote by d(p). In addition, using projective texturing, we sample the shadow map along the line of sight from the light source to the pixel p to get the depth value s(p); this value is the depth of the pixel closest to the light along the line of sight from the position of the light to p. Then, from Figure 13.7, we see that a pixel p is not in shadow if and only if d(p) = s(p). Due to floating-point inaccuracy, it is problematic to compare two floating-point numbers for equality; therefore, we usually say that a pixel is not in shadow if and only if d(p) ≤ s(p) + ε, where ε > 0 is some small number determined by experimenting.

Figure 13.7: On the left, the depth of the pixel p from the light is d(p). However, the depth of the pixel nearest to the light along the same line of sight has depth s(p), and s(p) < d(p). We conclude, therefore, that p is in shadow. On the right, the depth of the pixel p from the light is d(p) and it also happens to be the pixel nearest to the light along the line of sight (that is, s(p) = d(p)), so we conclude p is not in shadow.

Note: The depth values compared are in NDC coordinates. This is because the shadow map, which is a depth buffer, stores the depth values in NDC coordinates. How this is done exactly will be clear when we look at the code.

13.4.2 Building the Shadow Map

The first step in shadow mapping is building the shadow map. To do this, we create a DrawableTex2D instance. For shadow mapping, we only need the depth values, so we create the texture with no color map:

mShadowMap.init(md3dDevice, 1024, 1024, false, DXGI_FORMAT_UNKNOWN);

We then define a light view matrix and projection matrix, and render the scene into the shadow map:

mShadowMap.begin();

drawSceneToShadowMap();

mShadowMap.end();

The effect file we use for rendering the scene from the perspective of the light is quite simple. For one thing, we only need a position element and texture coordinates element:

cbuffer cbPerFrame

{

float4×4 gLightWVP;

};

// Nonnumeric values cannot be added to a cbuffer.

Texture2D gDiffuseMap;

SamplerState gTriLinearSam

{

Filter = MIN_MAG_MIP_LINEAR;

AddressU = Wrap;

AddressV = Wrap;

};

struct VS_IN

{

float3 posL : POSITION;

float3 tangentL : TANGENT;

float3 normalL : NORMAL;

float2 texC : TEXCOORD;

};

struct VS_OUT

{

float4 posH : SV_POSITION;

float2 texC : TEXCOORD;

};

VS_OUT VS(VS_IN vIn)

{

VS_OUT vOut;

vOut.posH = mul(float4(vIn.posL, 1.0f), gLightWVP);

vOut.texC = vIn.texC;

return vOut;

}

void PS(VS_OUT pIn)

{

float4 diffuse = gDiffuseMap.Sample( gTriLinearSam, pIn.texC );

// Don't write transparent pixels to the shadow map.

clip(diffuse.a - 0.15f);

}

technique10 BuildShadowMapTech

{

pass P0

{

SetVertexShader( CompileShader( vs_4_0, VS() ) );

SetGeometryShader( NULL );

SetPixelShader( CompileShader( ps_4_0, PS() ) );

}

}

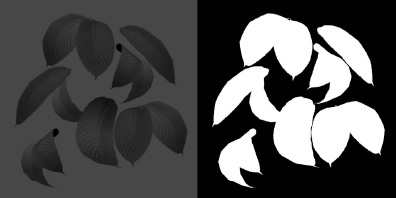

Notice that the pixel shader does not return a value because we only need to output depth values. The pixel shader is solely used to clip pixel fragments with zero or low alpha values, which we assume indicate complete transparency. For example, consider the tree leaf texture in Figure 13.8; here, we only want to draw the pixels with white alpha values to the shadow map.

Figure 13.8: Leaf texture.

13.4.3 Restore Rendering to the Back Buffer

When we render to a texture, we change the render target, depth/stencil buffer, and viewport. Therefore, after rendering to a texture, we need to restore the old values. This is done with the D3DApp::resetOMTargetsAndViewport method. The way it is used and its implementation are given below:

mShadowMap.begin();

drawSceneToShadowMap();

mShadowMap.end();

// restore rendering to back buffer

resetOMTargetsAndViewport();

// Render scene to back buffer

…

void D3DApp::resetOMTargetsAndViewport()

{

md3dDevice->OMSetRenderTargets(1, &mRenderTargetView,

mDepthStencilView);

D3D10_VIEWPORT vp;

vp.TopLeftX = 0;

vp.TopLeftY = 0;

vp.Width = mClientWidth;

vp.Height = mClientHeight;

vp.MinDepth = 0.0f;

vp.MaxDepth = 1.0f;

md3dDevice->RSSetViewports(1, &vp);

}

13.4.4 The Shadow Factor

The shadow factor is a new factor we add to the lighting equation. The shadow factor is a scalar in the range 0 to 1. A value of 0 indicates a point is in shadow, and a value of 1 indicates a point is not in shadow. With filtering (§13.4.6), we will see that a point can also be partially in shadow, in which case the shadow factor will be between 0 and 1. In our model, the shadow factor will be multiplied against the diffuse and specular lighting terms:

litColor += shadowFactor * diffuseFactor * v.diffuse * L.diffuse;

litColor += shadowFactor * specFactor * v.spec * L.spec;

The shadow factor does not affect ambient light since that is indirect light, and it also does not affect reflective light coming from the environment map. Our lighting functions have been updated to take a shadow factor parameter. For example:

float3 ParallelLight(SurfaceInfo v, Light L, float3 eyePos,

float shadowFactor)

{

…

}

13.4.5 The Shadow Map Test

We now show the effect code used to draw the scene from the camera’s viewpoint after the shadow map has been built. The key issue is computing d(p) and s(p) for each pixel p. The value d(p) is found by transforming the point to the NDC space of the light; then the z-coordinate gives the normalized depth value of the point from the light source. The value s(p) is found by projecting the shadow map onto the scene through the light’s view volume using projective texturing. Note that with this setup, both d(p) and s(p) are measured in the NDC space of the light, so they can be compared. The relevant code is in bold.

#include "lighthelper.fx"

static const float SHADOW_EPSILON = 0.001f;

static const float SMAP_SIZE = 1024.0f;

static const float SMAP_DX = 1.0f / SMAP_SIZE;

cbuffer cbPerFrame

{

Light gLight;

float3 gEyePosW;

};

cbuffer cbPerObject

{

float4×4 gLightWVP;

float4×4 gWorld;

float4×4 gWVP;

float4×4 gTexMtx;

float4 gReflectMtrl;

bool gCubeMapEnabled;

};

// Nonnumeric values cannot be added to a cbuffer.

Texture2D gDiffuseMap;

Texture2D gSpecMap;

Texture2D gNormalMap;

Texture2D gShadowMap;

TextureCube gCubeMap;

SamplerState gShadowSam

{

Filter = MIN_MAG_MIP_POINT;

AddressU = Clamp;

AddressV = Clamp;

};

SamplerState gTriLinearSam

{

Filter = MIN_MAG_MIP_LINEAR;

AddressU = Wrap;

AddressV = Wrap;

};

struct VS_IN

{

float3 posL : POSITION;

float3 tangentL : TANGENT;

float3 normalL : NORMAL;

float2 texC : TEXCOORD;

};

struct VS_OUT

{

float4 posH : SV_POSITION;

float3 posW : POSITION;

float3 tangentW : TANGENT;

float3 normalW : NORMAL;

float2 texC : TEXCOORD0;

float4 projTexC : TEXCOORD1;

};

VS_OUT VS(VS_IN vIn)

{

VS_OUT vOut;

// Transform to world space space.

vOut.posW = mul(float4(vIn.posL, 1.0f), gWorld);

vOut.tangentW = mul(float4(vIn.tangentL, 0.0f), gWorld);

vOut.normalW = mul(float4(vIn.normalL, 0.0f), gWorld);

// Transform to homogeneous clip space.

vOut.posH = mul(float4(vIn.posL, 1.0f), gWVP);

// Generate projective tex-coords to project shadow map onto scene.

vOut.projTexC = mul(float4(vIn.posL, 1.0f), gLightWVP);

// Output vertex attributes for interpolation across triangle.

vOut.texC = mul(float4(vIn.texC, 0.0f, 1.0f), gTexMtx);

return vOut;

}

float CalcShadowFactor(float4 projTexC)

{

// Complete projection by doing division by w.

projTexC.xyz /= projTexC.w;

// Points outside the light volume are in shadow.

if( projTexC.x < -1.0f || projTexC.x > 1.0f ||

projTexC.y < -1.0f || projTexC.y > 1.0f ||

projTexC.z < 0.0f )

return 0.0f;

// Transform from NDC space to texture space.

projTexC.x = +0.5f*projTexC.x + 0.5f;

projTexC.y = -0.5f*projTexC.y + 0.5f;

// Depth in NDC space.

float depth = projTexC.z;

// Sample shadow map to get nearest depth to light.

float s0 = gShadowMap.Sample(gShadowSam,

projTexC.xy).r;

float s1 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(SMAP_DX, 0)).r;

float s2 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(0, SMAP_DX)).r;

float s3 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(SMAP_DX, SMAP_DX)).r;

// Is the pixel depth <= shadow map value?

float result0 = depth <= s0 + SHADOW_EPSILON;

float result1 = depth <= s1 + SHADOW_EPSILON;

float result2 = depth <= s2 + SHADOW_EPSILON;

float result3 = depth <= s3 + SHADOW_EPSILON;

// Transform to texel space.

float2 texelPos = SMAP_SIZE*projTexC.xy;

// Determine the interpolation amounts.

float2 t = frac( texelPos );

// Interpolate results.

return lerp(lerp(result0, result1, t.x),

lerp(result2, result3, t.x), t.y);

}

float4 PS(VS_OUT pIn) : SV_Target

{

float4 diffuse = gDiffuseMap.Sample( gTriLinearSam, pIn.texC );

// Kill transparent pixels.

clip(diffuse.a - 0.15f);

float4 spec = gSpecMap.Sample( gTriLinearSam, pIn.texC );

float3 normalT = gNormalMap.Sample( gTriLinearSam, pIn.texC );

// Map [0, 1] --> [0, 256]

spec.a *= 256.0f;

// Uncompress each component from [0, 1] to [-1, 1].

normalT = 2.0f*normalT - 1.0f;

// build orthonormal basis

float3 N = normalize(pIn.normalW);

float3 T = normalize(pIn.tangentW - dot(pIn.tangentW, N)*N);

float3 B = cross(N, T);

float3×3 TBN = float3×3(T, B, N);

// Transform from tangent space to world space.

float3 bumpedNormalW = normalize(mul(normalT, TBN));

float shadowFactor = CalcShadowFactor(pIn.projTexC);

// Compute the lit color for this pixel.

SurfaceInfo v = {pIn.posW, bumpedNormalW, diffuse, spec};

float3 litColor = ParallelLight(v, gLight, gEyePosW, shadowFactor);

[branch]

if( gCubeMapEnabled )

{

float3 incident = pIn.posW - gEyePosW;

float3 refW = reflect(incident, bumpedNormalW);

float4 reflectedColor = gCubeMap.Sample(gTriLinearSam, refW);

litColor += (gReflectMtrl*reflectedColor).rgb;

}

return float4(litColor, diffuse.a);

}

technique10 ShadowTech

{

pass P0

{

SetVertexShader( CompileShader( vs_4_0, VS() ) );

SetGeometryShader( NULL );

SetPixelShader( CompileShader( ps_4_0, PS() ) );

}

}

13.4.6 Filtering and the Shadow Map Test

The projective texture coordinates (u, v) used to sample the shadow map generally will not coincide with a texel in the shadow map. Usually, the coordinates will hit between four texels. With color texturing, this is solved with bilinear interpolation (§7.4). However, [Kilgard01] points out that we should not average depth values, as this can lead to incorrect results about a pixel being flagged in shadow. (For the same reason, we also cannot generate mipmaps for the shadow map.) Instead of interpolating the depth values, we interpolate the results. That is, we use point filtering (MIN_MAG_MIP_POINT) and sample the texture with coordinates (u, v), (u + Δx, v), (u + Δx), (u + Δx, v + Δx), where Δx = 1 / SHADOW_MAP_SIZE. Since we are using point sampling, these four points will hit the nearest four texels s0, s1, s2, and s3, respectively, surrounding (u, v), as shown in Figure 13.9. We then do the shadow map test for each of these sampled depths and bilinearly interpolate the shadow map results:

Figure 13.9: Taking four shadow map samples.

static const float SHADOW_EPSILON = 0.001f;

static const float SMAP_SIZE = 1024.0f;

static const float SMAP_DX = 1.0f / SMAP_SIZE;

…

// Sample shadow map to get nearest depth to light.

float s0 = gShadowMap.Sample(gShadowSam,

projTexC.xy).r;

float s1 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(SMAP_DX, 0)).r;

float s2 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(0, SMAP_DX)).r;

float s3 = gShadowMap.Sample(gShadowSam,

projTexC.xy + float2(SMAP_DX, SMAP_DX)).r;

// Is the pixel depth <= shadow map value?

float result0 = depth <= s0 + SHADOW_EPSILON;

float result1 = depth <= s1 + SHADOW_EPSILON;

float result2 = depth <= s2 + SHADOW_EPSILON;

float result3 = depth <= s3 + SHADOW_EPSILON;

// Transform to texel space.

float2 texelPos = SMAP_SIZE*projTexC.xy;

// Determine the interpolation amounts.

float2 t = frac( texelPos );

// Interpolate results.

return lerp(lerp(result0, result1, t.x),

lerp(result2, result3, t.x), t.y);

In this way, it is not an all-or-nothing situation; a pixel can be partially in shadow. For example, if two of the samples are in shadow and two are not in shadow, then the pixel is 50% in shadow. This creates a smoother transition from shadowed pixels to non-shadowed pixels (see Figure 13.10).

Note: The HLSL frac function returns the fractional part of a floating-point number (i.e., the mantissa). For example, if SMAP_SIZE = 1024 and projTex.xy = (0.23, 0.68), then texelPos = (235.52, 696.32) and frac(texelPos) = (0.52, 0.32). These fractions tell us how much to interpolate between the samples. The HLSL lerp(x, y, s) function is the linear interpolation function and returns x + s(y – x) = (1 – s)x + sy.

Figure 13.10: In the top image, observe the “stairstepping” artifacts on the shadow boundary. On the bottom image, these aliasing artifacts are smoothed out a bit with filtering.

Note: Even with our filtering, the shadows are still very hard and the aliasing artifacts can still be unsatisfactory close up. More aggressive methods can be used; see [Uralsky05], for example. We also note that using a higher-resolution shadow map helps, but can be cost prohibitive.

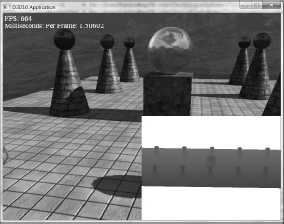

13.4.7 Rendering the Shadow Map

For the Shadow Map demo (available in the download files), we also render the shadow map onto a quad that occupies the lower-left corner of the screen. This allows us to see what the shadow map looks like for each frame. Recall that the shadow map is just a depth buffer texture with the D3D10_BIND_SHADER_RESOURCE flag, so it can also be used to texture a surface. The shadow map is rendered as a grayscale image since it stores a one-dimensional value at each pixel (a depth value). Figure 13.11 shows a screenshot of the demo.

Figure 13.11: Screenshot of the Shadow Map demo.

13.5 Summary

![]() The back buffer need not always be the render target; we can render to a different texture. Rendering to texture provides an efficient way for the GPU to update the contents of a texture at run time. After we have rendered to a texture, we can bind the texture as a shader input and map it onto geometry. Many special effects require render to texture functionality, such as shadow maps, water simulations, and general-purpose GPU programming.

The back buffer need not always be the render target; we can render to a different texture. Rendering to texture provides an efficient way for the GPU to update the contents of a texture at run time. After we have rendered to a texture, we can bind the texture as a shader input and map it onto geometry. Many special effects require render to texture functionality, such as shadow maps, water simulations, and general-purpose GPU programming.

![]() With an orthographic projection, the viewing volume is a box (see Figure 13.2) with width w, height h, near plane n, and far plane f, and the lines of projection are parallel to the view space z-axis. Such projections are primarily used in 3D science or engineering applications, where it is desirable to have parallel lines remain parallel after projection. However, we can use orthographic projections to model shadows generated by parallel lights.

With an orthographic projection, the viewing volume is a box (see Figure 13.2) with width w, height h, near plane n, and far plane f, and the lines of projection are parallel to the view space z-axis. Such projections are primarily used in 3D science or engineering applications, where it is desirable to have parallel lines remain parallel after projection. However, we can use orthographic projections to model shadows generated by parallel lights.

![]() Projective texturing is so-called because it allows us to project a texture onto arbitrary geometry, much like a slide projector. The key to projective texturing is to generate texture coordinates for each pixel in such a way that the applied texture looks like it has been projected onto the geometry. Such texture coordinates are called projective texture coordinates. We obtain the projective texture coordinates for a pixel by projecting it onto the projection plane of the projector, and then mapping it to the texture coordinate system.

Projective texturing is so-called because it allows us to project a texture onto arbitrary geometry, much like a slide projector. The key to projective texturing is to generate texture coordinates for each pixel in such a way that the applied texture looks like it has been projected onto the geometry. Such texture coordinates are called projective texture coordinates. We obtain the projective texture coordinates for a pixel by projecting it onto the projection plane of the projector, and then mapping it to the texture coordinate system.

![]() Shadow mapping is a real-time shadowing technique that shadows arbitrary geometry (it is not limited to planar shadows). The idea of shadow mapping is to render the depth of the scene from the light’s viewpoint into a shadow map, after which the shadow map stores the depth of all pixels visible from the light’s perspective. We then render the scene again from the camera’s perspective, and we project the shadow map onto the scene using projective texturing. Let s(p) be the depth value projected onto a pixel p from the shadow map and let d(p) be the depth of the pixel from the light source. Then p is in shadow if s(p) < d(p); that is, if the projected pixel depth is less than the depth of the pixel, then there must exist a pixel closer to the light which occludes p, thereby casting p in shadow.

Shadow mapping is a real-time shadowing technique that shadows arbitrary geometry (it is not limited to planar shadows). The idea of shadow mapping is to render the depth of the scene from the light’s viewpoint into a shadow map, after which the shadow map stores the depth of all pixels visible from the light’s perspective. We then render the scene again from the camera’s perspective, and we project the shadow map onto the scene using projective texturing. Let s(p) be the depth value projected onto a pixel p from the shadow map and let d(p) be the depth of the pixel from the light source. Then p is in shadow if s(p) < d(p); that is, if the projected pixel depth is less than the depth of the pixel, then there must exist a pixel closer to the light which occludes p, thereby casting p in shadow.

13.6 Exercises

1. Write a program that simulates a slide projector by projecting a texture onto the scene. Experiment with both perspective and orthographic projections.

2. Modify the solution to Exercise 1 by using conditional statements so that points outside the projector’s frustum do not receive light.

3. Modify the solution to Exercise 1 by using a spotlight so that points outside the spotlight cone do not receive any light from the projector.

4. Modify this chapter’s demo application by using a perspective projection. Note that the ε used for bias in the shadow map test that worked for an orthographic projection might not work well for a perspective projection. So you may need to tweak ε. When using a perspective projection, notice that the depth map is heavily biased to white (1.0). Does this make sense considering the graph in Figure 5.27?

5. Experiment with the following shadow map resolutions: 2048 × 2048, 1024 × 1024, 512 × 512, 256 × 256. Be sure to also update the effect constants that depend on the size:

static const float SMAP_SIZE = 1024.0f;

static const float SMAP_DX = 1.0f / SMAP_SIZE;

6. Derive the matrix that maps the box [l, r] × [b, t] × [n, f] → [–1, 1] × [–1, 1] × [0, 1]. This is an “off-center” orthographic view volume (i.e., the box is not centered about the view space origin). See D3DXMatrixOrthoOffCenterLH.