One of the most difficult tasks in a computer game is to convey emotion. While some games have no room for emotional content, many more benefit greatly from it. Modern computers finally have the processing power and graphics capability needed to show a realistic-looking human face. Players have been conditioned since birth to instantly read nuances of human expression, but technology to show them is relatively new. With motion-capture data touched up by a professional animator, a simulated face and body stance can convey nearly anything. Things change when the AI is in charge.

The AI programmer’s first protest is quite clear: “It’s all I can do to get them to decide what to do. You mean I have to get them to decide what to feel?” Having risen to that challenge, the AI programmer meets the next hurdle: “How do I get the AI to show what it is feeling?” Many AI programmers lack professional training as artists, psychologists, and actors; worse yet, many of them are introverts who are outside their comfort zone when dealing with emotionally charged content. Inexperienced AI programmers who lack both those skills and a wide comfort zone could easily conclude that there is no way for them to model and show emotions under the control of game AI. They would be wrong on both counts.

To start with, they are trying to solve the wrong problem. The core problem in games is not how to model and show emotion. Showing emotion is the core problem in realistic simulations such as the virtual Bosnian village described in [Gratch01]; in games, it is a secondary problem. This may sound completely counterintuitive; what could be more emotionally engaging than showing emotion? The real problem to solve in games is evoking an emotional response in the player. How we get there is a secondary problem and a free choice. In simulation, the priorities are reversed: showing emotions is primary, and evoking emotions is secondary, though it is a strong indicator of success. As luck would have it, modeling emotions in games is not a particularly difficult problem. To varying degrees of fidelity, we can make our game AI feel at a level comparable to how well it thinks. We do need to keep foremost in our minds that the feelings that count are the player’s feelings.

Game AI programmers are afforded great liberties with the AI’s “feelings.” Everything from faking it to sophisticated models beyond the scope of this book is perfectly acceptable. Modeling feelings may be unfamiliar, but it is closely analogous to modeling thinking. In games, we are not required to care much about the AI’s feelings. Even if the AI programmer creates a high-fidelity emotion system, the game designer may override the AI’s feelings at any time. We are already familiar with overrides on the behavior side; the designer says, “Make it do this, here, regardless of what the AI thought it should do.” The designer does this for dramatic impact. The designer may also say, “Make it feel angry here, regardless of what it wants to feel.” The AI might have decided to feel depressed, or it might be coldly planning future retribution, but the designer wants anger for the emotional impact.

While we do not particularly care about what the AI is feeling, we care deeply about what the player is feeling. We especially care when the player has feelings about the virtual characters in the game. “I’ve saved them! I’ve saved them all!” the player shouts. On occasion, we can get the player to be affected by the feelings attributed to the virtual characters. “Oh, no! She’s going to be really angry at me!” the player says. These are emotional responses from the player. They do not require emotions to actually be present in the virtual characters. People attach sentimental value to non-feeling objects in real life; they even attribute feelings to inanimate objects. Often, children worry about how lonely a lost toy is going to be more than they worry about how sad they will be if they never get it back.

The game succeeds when players feel compelled to describe their playing experiences to their friends with sentences that start out, “I was so...” and end with words like “pumped,” “scared,” “thrilled,” or even “sad.” Evoked emotions do not always have to be positive to be meaningful. Games are an art form capable of a wide range of expression, for good or ill. We have already seen that the real problem with bad AI is that it frustrates, annoys, or angers the player. We would like to avoid evoking those particular emotions in the player. The AI can be the most frustrating part of a computer game, but with some effort, it can also be one of the parts that evokes the strongest positive emotions.

Showing human faces expressing simulated emotions is a tremendously powerful tool for evoking an emotional response in the player, but it is far from being the only tool. There are other, less direct means—many of which are far cheaper to implement. Novice AI programmers might not want to start with the most complex tool available. We can evoke emotion via music, mood, plot, and even camera control. This is only a partial list; the AI can creep into nearly everything, giving the game better chances at evoking an emotional response from the player. These tend to be design elements, but they have to be controlled somehow, and that somehow is AI.

Before delving into other tools, we should recall our definition of game AI to see if what we will be doing is really AI. Our AI reacts intelligently to changing conditions. Things that will never change have no need of AI. A face that never changes expression, lighting that never changes, and music that never changes require no decisions. These static elements start out purely as art, music, and level design. They need not be static, but once they start changing, they raise the question, “How do we control the changes?” This is the realm of AI, even if artists, musicians, and level designers do not always think that way at first. Like any part of game AI, once it is well understood, it stops being AI to many people. Everyone knows how to go somewhere, but pedestrians, drivers, and pilots have different skills, levels of training, and available tools to get themselves someplace else. The job of the AI programmer is to bring intelligence into any part of the virtual world that would benefit from it. Doing so gives the entire creative team a richer palette of tools with which to craft an emotionally engaging experience. They all know how to “walk,” but the AI programmer provides them a “pilot and plane” when they need one.

Before we get into how we might evoke an emotional response from the player, we will consider what emotions games evoke. After that, the bulk of this chapter will go over tools other than body posture and facial expression that can be used to evoke an emotional response in the player. These more indirect methods affect what the AI programmer needs to know about other team members’ jobs. This chapter concludes with a treatment of modeling emotional states using techniques ranging from simple to sophisticated. The projects for the chapter start out with simple touches, progress to an FSM for emotions, and finish with a relationship model loosely based on The Sims. In prior chapters, we have shown that beginners can learn how to program game AI for behaviors. In this chapter, we will show that modeling emotions can be just as accessible.

You may be thinking something along the lines of, “I’m a programmer, not a psychologist.” But the emotions we are dealing with make a pleasantly short list. Our list, shown in Table 9.1, is taken from XEODesign’s research into why people play games [Lazzaro04]. They observed people playing popular games and studied the players’ responses and what triggered them. Words with a non-English origin are marked. This list is not meant to be exhaustive. For example, relief is not listed; it may be thought of as the removal of fear or feelings of pressure. This list was also aimed at emotions that come from sources other than story.

Table 9.1. Emotions and Their Triggers

| Emotion | Common Themes and Triggers |
|---|---|
| Fear | Threat of harm, an object moving quickly to hit the player, a sudden fall or loss of support, or the possibility of pain. |
| Surprise | Sudden change. The briefest of all emotions; it does not feel good or bad. After the event is interpreted, this emotion merges into fear, relief, etc. |
| Disgust | Rejection, as with food or behavior outside the norm. The strongest triggers are body products such as feces, vomit, urine, mucus, saliva, and blood. |
| Naches/kvell (Yiddish) | Pleasure or pride at the accomplishment of a child or mentee. (Kvell is how it feels to express this pride in one’s child or mentee to others.) |
| Fiero (Italian) | Personal triumph over adversity; the ultimate game emotion. Overcoming difficult obstacles. Players raise their arms over their heads. They do not need to experience anger prior to success, but the accomplishment does require effort. |
| Schadenfreude (German) | Gloating over the misfortune of a rival. Competitive players enjoy beating each other, especially a long-term rival. Boasts are made about player prowess and ranking. |
| Wonder | Overwhelming improbability. Curious items amaze players with their unusualness, unlikelihood, and improbability without breaking out of the realm of possibility. |

The research showed that these emotions are linked to the following four ways of having fun [Lazzaro07]. It should hardly be a surprise that the most popular titles incorporate at least three of the four.

- Hard fun: The fun of succeeding at something difficult
- Easy fun: The fun of undirected play in a sandbox
- Serious fun: “Games as therapy”: fun with a purpose
- People fun: The fun of doing something with others

Game designers must be fluent in these areas, and AI programmers should at least be familiar with them. The AI will be tasked with supporting these kinds of fun and evoking these emotions in the players. When designers ask for some “good AI,” the AI programmer should ask whether they mean “hard to beat,” “fun to play with,” or something altogether different. In order to implement a good AI, AI programmers have to implement the right AI. Let us consider the many ways such an AI can express itself.

Musicians will tell you that half the emotional content of a film is in the musical score. Music is a powerful tool for evoking a full range of responses in the player. Games have incorporated it since shortly after the first PC sound cards became available. Unlike films, games can exhibit a fluid control over their music and change it in response to player actions. Games can do more than pick what music fits a scene. Back in 1990, the game Wing Commander not only made smooth transitions in the music every two to four bars on beat boundaries, but it also transitioned instantly when there was a serious change in the game state, such as a missile chasing the player [Sanger93].

Something has to decide what to play and when. That something is just another form of AI with new outputs. At its simplest, music follows a fixed script with no changes based on player interaction. The designer says, “This is the music I want for this level.” If the designer wants more out of the game, he or she will want player interaction to drive as much of the experience as possible. Interaction is the key differentiator between games and other media. “I need this game to react to what the player does and do something appropriate” are the marching orders for the AI, whether it is doing resource allocation in a strategy game or music selection in a flight simulator.
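
To make “something has to decide what to play and when” concrete, here is a minimal Python sketch of a music controller in the spirit of the Wing Commander behavior described above. It is not that game’s implementation; the class name `MusicDirector`, the cue names, and the two-bar phrase length are illustrative assumptions. Ordinary requests wait for the next phrase boundary, while urgent ones, such as a missile lock, cut over at once.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MusicDirector:
    """Hypothetical music AI: decides which cue plays and when to switch."""
    beats_per_bar: int = 4
    bars_per_phrase: int = 2           # queued changes wait for a 2-bar boundary
    current_cue: str = "explore"
    pending_cue: Optional[str] = None
    beat: int = 0                      # beats elapsed since the music started

    def request(self, cue: str, urgent: bool = False) -> None:
        """Ask for a new cue; urgent requests cut over immediately."""
        if cue == self.current_cue:
            self.pending_cue = None    # already playing; drop any queued change
        elif urgent:
            self.current_cue = cue
            self.pending_cue = None
        else:
            self.pending_cue = cue     # wait for the next phrase boundary

    def on_beat(self) -> str:
        """Called once per beat by the audio system; returns the cue to play."""
        self.beat += 1
        beats_per_phrase = self.beats_per_bar * self.bars_per_phrase
        if self.pending_cue is not None and self.beat % beats_per_phrase == 0:
            self.current_cue = self.pending_cue
            self.pending_cue = None
        return self.current_cue
```

With the defaults, `request("combat")` keeps "explore" playing for seven more beats and switches on the eighth, while `request("missile_lock", urgent=True)` switches immediately. A designer who wants total control can drive `request` from scripts; an AI reacting to game state can call it from its own decision logic without changing the timing rules.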

Music has similar drawbacks to facial expression. Music is complex and dynamic and hard to describe, but people almost innately respond to it. That is to say, critical listeners are far more common than skilled practitioners. Skilled game musicians are rarer still, but they exist, and the industry is growing. Designers will want to place certain demands on music. The AI programmer should be part of the process—and the earlier, the better. Because music is such a powerful tool, the designer may demand total control of all music changes. AI programmers might think that this takes them out of the loop, but they may be the people tasked with translating the designer’s demands into code. As the designer’s vision expands beyond the simplest triggers, the need for code to reason and react will increase. A holistic approach that includes considering “music AI” ensures that even if the music only has the simplest of changes, no creative capability was unintentionally excluded because no one thought about designing it in.

Mood here is a catch-all intended to pick up static elements, usually of a visual nature, that need not be static. Consider the possibilities when AI controls clothing, lighting, and even the very basic texture maps on the objects in the world. These are virtual worlds, after all, and the game studio controls every bit of them. Mood elements easily play to fear, relief, wonder, and occasionally surprise. They provide subtle support to the bold dramatic elements of the game.

Clothing can be under AI control if there are sufficient art assets to support it. Dress for Success was originally published in 1975, but wardrobe designers for theatre have long used costuming as visual shorthand to communicate unspoken information to the audience. People are used to thinking about facial expressions, but wardrobe expression shows at longer ranges and uses less detail. By using a consistent wardrobe palette, especially one that is well understood by the public, the game designer can communicate information to the player in more subtle ways than facial expression, dialog, or music. That information can carry emotional content or evoke an emotional response. Players who are less tuned in can be clued in via dialog. Consider the following exchange:

“Uh, oh, this is going to be bad. He’s wearing his black pin-stripe power suit.”

“What do you mean?”

“He wears that to fire people and when he doesn’t have a choice about ramrodding something down people’s throats. He wears his less-threatening navy-blue suit when he’s selling something that actually needs our cooperation.”

“That doesn’t sound very friendly.”

“If he wants you to relax, he’ll shed the jacket or maybe even loosen the tie. That’s when he asks you if you need to take some time off because your kid is in the hospital or something. He’s not actually friendly unless he’s wearing a golf shirt someplace other than here.”

After that dialog, the game designer has prepped the player for the desired interpretations of the wardrobe choices presented. The designer has seeded potential emotional reactions that can be evoked by the game. Fear and trepidation get matched to the black pin-stripe suit. This is hardly new, but once again we have a venue for AI control. Scripting may provide enough control to manage wardrobe; after all, scripting was once sufficient for the entire AI of a game. Game AI has largely left scripting behind for more dynamic techniques, and those techniques are then applied to the more static elements as designers envision effective ways to exploit them.

Lighting falls into our category of mood as well. Lighting used to be static because computers lacked the processing power to do anything else. These days, dynamic lighting is a fact of life in games; watching your shadow on the floor shorten as a rocket flies toward your retreating backside warns you in a very intuitive way of your impending demise. Just like clothing selection, AI control puts lighting selection in the designer’s bag of tricks.

One day, all designers are going to ask, “Can we get the AI to do the lighting selection?” A shifty character AI knows to turn off lights and meet only in dark places like poorly lit parking garages. A naive community activist AI prefers brightly lit places and broad daylight. If we can teach the AI to tell time and to use a light switch, the AI can do its own lighting selection. If the activist AI likes candlelit dinners, we will have to teach the AI about candles as well. A virtual character who actively lights candles and turns lights off fishes for an emotional response from the player more directly than if the character simply meets with others in dark and spooky parking garages; the action is more noticeable than the ambience. The activist AI could also decide that it is annoyed, turn the lights back on, and blow out the candles. Seeing that is likely to evoke an emotion in the player as well. Even if the AI can’t handle candles, the emotional payoff of turning the lights down and then back up is large compared to the low cost of an AI that knows the difference between business and pleasure, which makes a strong case for AI-controllable lighting. An FSM might be all that is needed to model the emotional state of the AI.
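
Here is one way that FSM might look: a minimal Python sketch, assuming hypothetical mood names, event strings, and light levels for the activist character. Each mood maps to the lighting the character wants, and the character changes the room itself so that the player sees the change happen.

```python
from enum import Enum, auto


class Mood(Enum):
    BUSINESS = auto()  # bright, practical lighting
    PLEASURE = auto()  # lights down, candles lit
    ANNOYED = auto()   # lights snapped back on, candles blown out


# Lighting each mood asks for: (light level from 0.0 to 1.0, candles lit?)
LIGHTING = {
    Mood.BUSINESS: (1.0, False),
    Mood.PLEASURE: (0.3, True),
    Mood.ANNOYED: (1.0, False),
}

# (current mood, event) -> next mood; unlisted pairs leave the mood unchanged.
TRANSITIONS = {
    (Mood.BUSINESS, "dinner_guest_arrives"): Mood.PLEASURE,
    (Mood.PLEASURE, "guest_insults"): Mood.ANNOYED,
    (Mood.PLEASURE, "dinner_over"): Mood.BUSINESS,
    (Mood.ANNOYED, "guest_apologizes"): Mood.PLEASURE,
    (Mood.ANNOYED, "guest_leaves"): Mood.BUSINESS,
}


class ActivistLightingAI:
    """Hypothetical FSM for the activist character's mood and room lighting."""

    def __init__(self) -> None:
        self.mood = Mood.BUSINESS
        self.light_level, self.candles_lit = LIGHTING[self.mood]

    def handle(self, event: str) -> Mood:
        self.mood = TRANSITIONS.get((self.mood, event), self.mood)
        # The character adjusts the room itself, so the player sees it happen.
        self.light_level, self.candles_lit = LIGHTING[self.mood]
        return self.mood
```

Keeping the transitions in a table rather than in code means a designer can add moods or override one ("make it feel annoyed here") without touching the FSM logic.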

The level of AI devoted to lighting control varies from the simple controls described earlier to the control baked into the game in Thief: Deadly Shadows [Spector04]. AI-controlled dynamic lighting shows great promise for a more engaging player experience, especially with non-gamers and casual gamers. The ALVA lighting control system described in [El-Nasr09] improved player performance and lowered player frustration.

Unlike clothing, the additional asset cost for AI-controlled lighting is quite low. Light fixtures and light switches would already be in the level. Compared to outfits, soft lighting is cheap. Lighting is just as visual as clothing selection.

Virtual characters are not the only things in a level that wear “clothing.” As noted by Chris Hecker when he posited that one day there would be a Photoshop of AI, graphics rendering in games is done via texture-mapped triangles [Hecker08]. For those unfamiliar with graphics, a texture is an image that is applied to the geometric skin of an object, and that skin is built using triangles. A flat wooden door and a flat metal door might share the same geometric skin, but they use different textures to make that skin look like wood or metal. The graphics system can switch between those skins easily after an artist has created them both and they are loaded into the system. This takes us down the same rabbit hole into Wonderland that the rest of the chapter falls into. If it can be changed, that change will need control, and why not have the AI manage some of it?

This one really could take us to Wonderland. We start out being able to change the color of the leaves, which happens in the real world, and wind up wherever the crazed minds of artists and designers can take us. Imagine a world in which an unlimited supply of spray paint (including transparent) and wallpaper can be applied instantly to anything and everything, changing every time you blink or close your eyes. Shapes remain constant, but appearances change instantly.

Luckily, we do not have to go all the way into this Wonderland. More subtle and skilled artists could take us partway there, to a world where things change when we are not looking at them. Imagine a game featuring a house shared by a strange old man and his daughter. When he is home, the place is dirty and dingy, complete with the occasional cobweb on the inside and graffiti on the exterior. When she is home, it is clean and shiny; no cobwebs and no graffiti. What kind of emotional reactions are we evoking in the player when they interact with either character? Presume nothing bad ever happens to the player when he or she visits the house or interacts with either character. Given the cumulative effect on the player of exposure to countless horror movies of questionable value, what kind of emotional response is the player going to have every time he or she visits this house?
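
The “change when we are not looking” rule is cheap to express in code. The sketch below is hypothetical: the texture-set names and the `player_can_see_house` flag stand in for whatever asset names and visibility test a real engine provides. The house picks a texture set based on who is home but applies it only while the player cannot see the house.

```python
from dataclasses import dataclass

# Hypothetical texture sets; a real engine would map these names to loaded assets.
SKINS = {
    "old_man": {"walls": "dingy_plaster", "exterior": "graffiti_brick", "cobwebs": True},
    "daughter": {"walls": "clean_plaster", "exterior": "clean_brick", "cobwebs": False},
}


@dataclass
class HouseAppearance:
    occupant: str = "daughter"      # who is home right now
    applied_skin: str = "daughter"  # which texture set is currently on screen

    def set_occupant(self, who: str) -> None:
        self.occupant = who

    def update(self, player_can_see_house: bool) -> dict:
        """Swap texture sets only while the player is not looking."""
        if not player_can_see_house and self.applied_skin != self.occupant:
            self.applied_skin = self.occupant
        return SKINS[self.applied_skin]
```

Deferring the swap until the house is out of view is what keeps the effect uncanny rather than obviously magical; swapping in plain sight would push the game all the way into Wonderland.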

This kind of world inspires new genres of games. Imagine a God game in which the player is responsible for carrying out the orders of a capricious god modeled on some stereotypical gamer. The very walls of the buildings seem happy when the god is happy; you would certainly know who is recently out of favor and who has recently regained favor. This is fertile ground for evoking emotions in the player, limited by the imagination of the game designer, the skills of the artists, and the abilities of the AI programmer to keep it under control. Typical AI tries to simulate thoughts turning into actions. It is only a modest leap to transform this into simulating feelings and turning them into effects. We need only modest fidelity in the simulated feelings as long as the effects evoke the right emotions in the players.

The emotional responses we listed earlier were taken from gameplay outside of story. Plot provides rich opportunities for evoking emotions in the player. Consider a game in which the player runs a fragment of a conflict-torn country. He is joined by an advisor, who comes with supporting forces. The advisor gives sound advice and offers good ideas for the player to consider. Sometime later, the advisor betrays the player and goes over to the other side, helping lead the enemy. A typical emotional response to this is a feeling of betrayal, followed by an urge for revenge that revitalizes the player and prompts him or her to play the game to completion to defeat the traitor. If the betrayal is part of a fixed script, this setup is a plot device and not part of the AI. But what if the game design does not require the betrayal? What if the game decides whether or not to use this device? We are back to AI, possibly some very easy AI to write.

A rich game would use the same betrayal device both ways. A skilled player gets betrayed as described, but a struggling player would be gifted with the other side’s advisor changing sides, bringing with him plans, support, and gratitude. This is just as likely to cause an emotional response in the player as being stabbed in the back. The positive feelings can be reinforced if the advisor occasionally thanks the player and offers up advice or volunteers to do dangerous missions.

The game AI could pick which way the betrayal works, or even if the betrayal happens at all. An experienced game designer will point out that branching scripts are hardly new and might claim that they are not AI. They are, as we saw in Chapter 2, “Simple Hard-Coded AI.” They just do not require an AI programmer to script them. The scripting system itself is probably the work output of an AI programmer. The AI programmer does need to make sure that the scripting system does not get in the way of the designers, but instead frees and inspires them. Their needs might require the techniques of other chapters. The AI programmer may not have training as a playwright, but the AI programmer can ask the designers what tools they need to ensure that they can evoke an emotional response from the player. Someone else may write the script, but AI owns the action. It is possible for the AI to own the script, but this is rare.
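
As an example of how easy that AI can be, here is a minimal Python sketch that picks the betrayal beat from a crude skill estimate. The win-rate measure, the function name, and the 0.7 and 0.4 thresholds are assumptions for illustration; a designer would tune them or replace the skill estimate entirely.

```python
from enum import Enum


class BetrayalBeat(Enum):
    ALLY_BETRAYS = "your advisor defects to the enemy"
    ENEMY_DEFECTS = "the enemy's advisor joins you"
    NONE = "no betrayal this playthrough"


def choose_betrayal(missions_won: int, missions_played: int,
                    strong: float = 0.7, weak: float = 0.4) -> BetrayalBeat:
    """Pick the plot beat from the player's win rate (hypothetical thresholds)."""
    if missions_played == 0:
        return BetrayalBeat.NONE           # not enough evidence yet
    win_rate = missions_won / missions_played
    if win_rate >= strong:
        return BetrayalBeat.ALLY_BETRAYS   # skilled player: raise the stakes
    if win_rate <= weak:
        return BetrayalBeat.ENEMY_DEFECTS  # struggling player: offer a lifeline
    return BetrayalBeat.NONE
```

The scripted content for each beat stays in the designers’ hands; the AI only owns the decision of which beat, if any, to trigger.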

In the game Facade, the AI owns the entire plot sequence. The AI interprets player input as best it can and reacts accordingly. In this one-act interactive drama, the AI has access to 27 plot elements, called beats in dramatic writing theory, and any particular run-through of the game typically encounters about 15 of them [Mateas05]. Facade certainly succeeds as interactive drama, but it is not the first thing people think of when they think about computer games. While it does not fit this chapter’s “on the cheap” emphasis on techniques that are accessible to beginners, it does show just how far AI-controlled plot has already gone. For many designers, there are serious issues beyond abandoning control to the AI; Facade shows at most 25 percent of its total available content in any given run, often less. Unless the experience provides a compelling reason for repeated play, the rest of the assets are effectively wasted.

Somewhere between simple branching scripts and total AI control, designers find their particular sweet spot for AI interaction with the plot. This is the spot that the AI programmer must support. The simplest techniques can be added as necessary, but more complex capabilities must be designed in from the start.

It’s no surprise that computer games are often called video games; this is due in part to their history, but also to the fact that games have such strong visual elements to them. We touched on camera AI in Chapter 2. Camera scripts allow the designer to take control of what the player sees and from what perspective. Camera scripts can be used to make sure that the player can appreciate all the work the art team has put into the game. Between the scripts and the artwork, there should be no problem with evoking emotions in the player. Did the runway survive the combat unscathed? A touch of camera AI at the right point in the game brings relief or dismay to the player without saying a word or showing a face.

There are two kinds of camera AI to consider. The camera AI described earlier is on behalf of the designer, who wants to achieve dramatic impact. Like many of the items in this chapter, the AI is simple to provide, and the real emphasis is on game design. There is also camera AI that is on behalf of the player. Such AI need not exist in first-person games, but when we leave first person for another point of view, we need camera AI. Third-person viewpoints, particularly the chase camera view, present interesting problems to the camera AI. The first problem to consider is when the camera hits a wall.

To fully appreciate the problem, a quick graphics refresher may be in order. Think of the objects in a 3D world as if they were blow-up balloons. Their geometry “blows them up” to their final shape, and we paint textures on the outside surfaces. In order to save CPU time, we don’t paint on the inside surfaces; they might as well not exist. Real balloons are closed, so they hold air, and we want our objects to be closed so that no one can see inside them and spoil the illusion that they are solid objects.

Our objects can be as interesting as the giant balloons seen in the Macy’s Thanksgiving Day Parade or as mundane as a flat sheet of wallboard. We use a mesh of flat triangles instead of curving airtight fabric. It takes a large number of triangles to build a detailed world, particularly when our objects appear curved. The more triangles we consider and the longer we consider them, the slower our system draws.

One easy way to reject a triangle is to check which way the triangle is facing. If the painted outside surface, known as the front face, is facing away from us, we do not need to draw the triangle. The unpainted back face is facing us, and no one is ever supposed to see it. As long as our object is closed (it “holds air”) and we are not inside it, we know that there is a front face somewhere on this object that is closer to the camera than the back face we are considering. This technique is called back-face culling and has been used to speed up rendering since the earliest days of computer graphics. Roughly speaking, back-face culling lets us reject half the triangles of every object in the scene.
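
The facing test itself is a cross product and a dot product. This Python sketch assumes that counter-clockwise vertex order marks the front face, a common convention but not a universal one; graphics APIs let you choose the winding and usually perform this test in hardware.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_back_facing(v0: Vec3, v1: Vec3, v2: Vec3, camera: Vec3) -> bool:
    """True if the camera sees the unpainted back face of triangle (v0, v1, v2).

    Assumes counter-clockwise winding marks the painted front face, so the
    cross product of the two edges points out of the front.
    """
    normal = cross(sub(v1, v0), sub(v2, v0))
    to_camera = sub(camera, v0)
    # Normal pointing away from (or edge-on to) the camera: cull the triangle.
    return dot(normal, to_camera) <= 0.0
```

For the triangle (0, 0, 0), (1, 0, 0), (0, 1, 0), the front face points along +z, so a camera at (0, 0, 5) draws it and a camera at (0, 0, -5) culls it.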

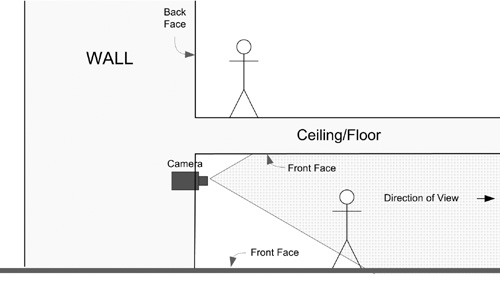

Figure 9.1 shows an over-the-shoulder camera view inside a two-story building. The figure on the first floor is the player. The player’s views above and below are blocked by the front faces of the floor and ceiling. The player is facing away from the thick exterior wall shown. The shaded area indicates what the camera can see.

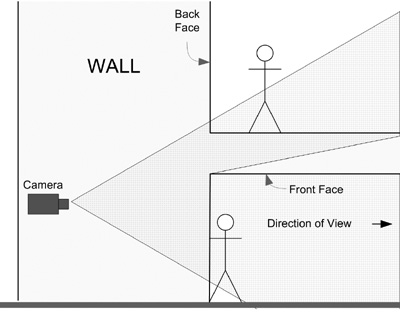

What happens if the player puts his back to the wall? We can either make the camera change viewpoint or let the camera penetrate the wall. At first blush, letting the camera penetrate the wall seems enticing. Back-face culling effectively removes the wall, so we will still see the player exactly as before. Figure 9.2 shows potential problems that happen when we let the camera get “inside” the geometry. We want it to look through the back face of the wall to show us the player, but the camera can also see through the back face of the floor above, showing us the lower half of the figure on the second floor. The field of view has extended past the corner of the ceiling and the wall, giving a partial view of the second floor. The front face of the ceiling still blocks the rest of the second floor. The problematic corner configuration seen in the side view of the wall and ceiling also shows up in top-down views when one wall meets another wall. When walls meet, a camera pushed inside the wall sees sideways past the walls of the current room.

The thick exterior wall makes this point of view possible. Because the first-floor ceiling does not extend into the wall, its front face no longer prevents us from seeing part of the upstairs. Extending the ceiling into the wall has two drawbacks. The first is that it adds triangles that will rarely be seen, making work for our artists and for our graphics pipeline for very little gain. The second is that if we put our back to the other side of the wall, the camera would again go into the wall; we would see the extended ceiling, and there is not supposed to be a ceiling there! Note that on the left side of the wall, the view is open to the sky; this is an exterior wall. This thick wall creates problems!

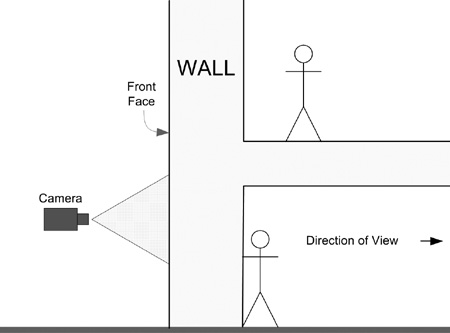

As shown in Figure 9.3, a thin wall presents problems, too. Figure 9.3 makes it clear that we cannot let the camera punch through walls from the inside heading back out, or all we will see is a close-up of the other side of the wall we have our back to. It is clear that something must be done.

We might consider fighting the issues presented by Figure 9.2 and Figure 9.3 with tightly constrained level designs and careful attention to camera placement. A tall room with waist-high furniture gives the camera more space than the player has, keeping it out of trouble. A camera that is close to the player can penetrate walls without punching through the back side or seeing around corners. A narrow field of view keeps the camera from peeking into places other than where the player happens to be. All those limitations go out the window when a designer says, “That’s too constraining, and third-person is too good to give up. Make the camera smart enough to keep itself out of trouble.” Even if the designer allows a constrained level, the camera could conflict with mobile objects such as a taller character walking up behind the player. The view from inside a character is just as jarring as the view from inside a wall, and the camera needs to be smart enough to avoid it. This is camera AI on the player’s behalf. The designer is really saying, “Keep the player’s eyes out of trouble.” It can be done, but great care must be taken to do it well.

Early camera AI of this type had some teething problems. The camera would make sudden and hard-to-anticipate changes in viewpoint to keep it out of trouble with the surrounding walls. Players fought against the camera AI as much as they fought off their enemies. The changing viewpoint changed the player’s aim point, making combat while moving an exercise in frustration. All of this evoked an emotional response in the player, but disgust probably was not what the game designer had in mind.
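
One common way to keep a chase camera out of walls is to cast a ray from the player back toward the ideal camera position and pull the camera in front of the first wall it hits. The Python sketch below works in a 2D top-down view with walls as line segments; the class name, the margin, and the ease-out rate are assumptions, and this is only one approach among several. To avoid the sudden viewpoint jumps described above, it snaps inward immediately (so the player never sees inside a wall) but eases back out gradually.

```python
import math
from typing import List, Optional, Tuple

Vec2 = Tuple[float, float]
Wall = Tuple[Vec2, Vec2]  # a wall is a line segment in the top-down view


def cross2(a: Vec2, b: Vec2) -> float:
    """2D cross product (z component of the 3D cross product)."""
    return a[0] * b[1] - a[1] * b[0]


def first_hit(p: Vec2, c: Vec2, walls: List[Wall]) -> Optional[float]:
    """Smallest t in [0, 1] where segment p->c crosses a wall, or None."""
    d = (c[0] - p[0], c[1] - p[1])
    best: Optional[float] = None
    for a, b in walls:
        e = (b[0] - a[0], b[1] - a[1])
        denom = cross2(d, e)
        if abs(denom) < 1e-12:
            continue  # parallel to the wall: treat as no hit
        ap = (a[0] - p[0], a[1] - p[1])
        t = cross2(ap, e) / denom  # position along the camera ray
        u = cross2(ap, d) / denom  # position along the wall
        if 0.0 <= t <= 1.0 and 0.0 <= u <= 1.0 and (best is None or t < best):
            best = t
    return best


class ChaseCamera:
    """Hypothetical chase camera that stays behind the player and out of walls."""

    def __init__(self, follow_distance: float, margin: float = 0.2,
                 ease_out_rate: float = 2.0) -> None:
        self.follow_distance = follow_distance  # preferred distance behind player
        self.margin = margin                    # stay this far in front of a wall
        self.ease_out_rate = ease_out_rate      # distance units per second
        self.distance = follow_distance         # current distance behind player

    def update(self, player: Vec2, facing: Vec2, walls: List[Wall],
               dt: float) -> Vec2:
        """Return this frame's camera position; facing must be non-zero."""
        length = math.hypot(facing[0], facing[1])
        back = (-facing[0] / length, -facing[1] / length)
        ideal = (player[0] + back[0] * self.follow_distance,
                 player[1] + back[1] * self.follow_distance)
        t = first_hit(player, ideal, walls)
        if t is None:
            allowed = self.follow_distance
        else:
            allowed = max(0.0, t * self.follow_distance - self.margin)
        if allowed < self.distance:
            # Snap inward at once so the player never sees inside a wall.
            self.distance = allowed
        else:
            # Ease back out gradually so the viewpoint does not jump.
            self.distance = min(allowed, self.distance + self.ease_out_rate * dt)
        return (player[0] + back[0] * self.distance,
                player[1] + back[1] * self.distance)
```

The asymmetry is the design choice: moving in fast protects the illusion of solid walls, while moving out slowly keeps the player’s aim point from lurching when the wall is left behind.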

Other games incorporated camera AI from the outset. The system described in [Carlise04] used steering behaviors and scripting to control the camera. They envisioned adding rules to the system for more “film-like” transitions. Effective camera AI adds to the player’s enjoyment. Beyond effective camera AI, there is room for the camera AI to provide emotional impact.

The lesson for camera AI is that it is a powerful tool that needs to be used carefully. Taking control of the player’s eyes should be done sparingly; otherwise, it is easy to frustrate the player. (Of course, preventing the player from coming to harm while the AI is controlling the camera would be considerate.) But with proper care and an integrated approach, full control over the player’s eyes is too powerful a tool to ignore, especially when the game is trying to create an emotional response in the player.

Cross-pollination is not possible without something to cross with. AI programmers do not have to be expert designers, artists, musicians, set designers, writers, or costume designers, but all of those skills are helpful. We could also add a touch of psychology and ergonomics for completeness. If the AI programmer lacks these skills, he or she needs to be able to ask the right questions of the team members who have them. The programmer also needs to be careful to always offer up his or her own special skills—making things think, or possibly making things feel—to the rest of the team. Working together, the team can produce games in which the richness of the interactivity produces a wide range of emotions in the players. To the player, having done something is fine, but having done something and felt good about it is better.

Much as the interactions of software agents give us emergent behaviors, so do the interactions between team members on a game cause a game to emerge. This is why good communication skills are commonly listed on job openings. For AI programmers, having a wide range of knowledge in other areas improves communication with the members of the team who are working in those areas. There is a great deal of synergy, particularly when AI programmers and animators work closely together to solve each other’s problems. AI programmers should exploit the expertise of the sound designer if the team is blessed with one. Recall that emergent behavior depends on the richness of the interactions; if the agents do not react to other agents’ behaviors, then nothing emerges. The first step for the AI programmer is speaking “their” language; the next steps are seeing things their way and offering his or her own abilities as a solution to their problems. If the AI programmer can talk to the rest of the team, one of the problems that he or she can help solve is when a designer says, “I’d like the player to feel....”

Computer games have modeled emotions for a very long time. In 1985, Balance of Power modeled not only integrity, but pugnacity and nastiness as well [Crawford86]. While the first two might be deemed merely an observation of the facts, nastiness is close enough for our purposes to be considered as a modeled emotion. Since then, games have made great strides in modeling emotions.

Most of this chapter talks about evoking an emotional response in the player. Some of the methods imply that the AI itself has emotions to display. How do we model the emotional state of the AI? For many AIs, an FSM is sufficient to model emotional states. The Sims does not directly model emotions, but it does model relationships. More complex systems store emotional state as a small collection of numeric values, each indicating the strength of the feeling. A single emotion might range from 0 to +100. Opposing emotions such as fear and confidence can be interpreted from a single stored value. This might call for a range of -100 to +100. The different emotions that can coexist are each stored as a separate value. Numerical methods can then be applied to the collection to determine how the emotional state should affect actions. We will examine a range of emotional models, starting with a simple addition to an FSM.

An FSM works for emotions as long as we only need a single emotion at a time. Recall from Chapter 3, “Finite State Machines (FSMs),” that FSMs work best when we have short answers to “I am...” Our simple-minded monster was attacking, fleeing, or hiding. The simplest possible way to make our monster feel would be to map a single emotion to each of the existing action states. It could be angry when attacking, afraid when fleeing, and happy when hiding. These are all believable for the conditions, and the amount of effort to implement them is extremely low. We get into trouble when we lack a clear mapping between what our AI is doing and what it is feeling, or when we are not already using an FSM.

The next level of sophistication would be to use a separate FSM for the emotional state of the AI. This frees the AI to use something more sophisticated than an FSM for selecting actions. A sports-coach AI might use a book of moves for action selection but could be augmented with an FSM for feelings. One coach AI may exhibit questionable judgment when it is upset or angry. A different coach AI might be programmed to show brilliance only when under pressure. In both cases, the emotional state of the AI is influencing its actions. If the game foreshadows these traits before using them and telegraphs the AI’s feelings when they are active, the player enjoys a richer experience.

The killer weaknesses of an FSM are that it can only be in one state at a time and that there are no nuances for a given state. For this reason, an FSM should be used only for the very simplest of emotional models. An FSM used this way does not allow the time-honored device of emotions in conflict. When we need to model more than one emotion at a time, and those emotions need a range of intensity, we are forced to use other techniques. Thankfully, those techniques span a range of complexity.

The game The Sims does not directly model emotions at all [Doornbos01]. But the Sims make friends, fall in love, and acquire enemies. How can they do that without emotions? The Sims do not have emotions, but they do have needs, preferences (traits), and relationships. Friends, lovers, and enemies are a function of the relationships between the Sims. The psychological model for the Sims is that a strong positive relationship is created between Sims that meet each other’s needs through shared interests. As a basis for relationships, real-world experience suggests that this one is pretty bulletproof. The Sims all have needs, such as food, comfort, fun, and social interaction. Each individual Sim has a small number of traits selected from a much longer list of possible traits. These traits provide each Sim with individual preferences. Each Sim keeps its own relationship score with every other Sim it has met. Relationship scores need not be mutual. The relationship score runs from -100 to +100.

Positive interactions build the relationship score, and negative interactions reduce it. All Sims act to meet their most pressing need. The interaction that one Sim prefers to use to meet a need might not be an interaction preferred by the other Sim. The preferences color the interaction, changing each Sim’s relationship score with the other. If both Sims like the interaction, they both react positively. Thus, meeting needs through shared preferences builds positive relationships. It is not modeling emotions, but it certainly has proven effective.
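The one-way relationship bookkeeping described above can be sketched in a few lines of VB. This is an illustration, not how The Sims is implemented: the Sim class, its React method, and the idea of passing in an already-computed signed reaction are all assumptions made for the example. Each Sim keeps its own score toward every Sim it has met, clamped to -100..+100, so the two scores in a pair can drift apart.

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical sketch: one-way relationship scores, clamped to -100..+100.
Public Class Sim
    Public ReadOnly Name As String

    ' How this Sim feels about each Sim it has met, keyed by name.
    ' Scores are one-way: Ann's score for Bob is independent of Bob's for Ann.
    Private ReadOnly Relationships As New Dictionary(Of String, Integer)

    Public Sub New(ByVal simName As String)
        Name = simName
    End Sub

    ' Apply a signed reaction to an interaction, keeping the hard limits.
    Public Sub React(ByVal other As Sim, ByVal delta As Integer)
        Dim current As Integer = ScoreToward(other)
        Relationships(other.Name) = Math.Max(-100, Math.Min(100, current + delta))
    End Sub

    ' A Sim that has never been met scores 0.
    Public Function ScoreToward(ByVal other As Sim) As Integer
        Dim current As Integer = 0
        Relationships.TryGetValue(other.Name, current)
        Return current
    End Function
End Class

Module RelationshipDemo
    Sub Main()
        Dim ann As New Sim("Ann")
        Dim bob As New Sim("Bob")
        ' Same interaction, different preferences, different reactions.
        ann.React(bob, 15)
        bob.React(ann, -5)
        Console.WriteLine(ann.ScoreToward(bob))  ' prints 15
        Console.WriteLine(bob.ScoreToward(ann))  ' prints -5
    End Sub
End Module
```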

Instead of modeling needs, other systems directly model emotions. The same -100 to +100 range that The Sims uses for needs is instead used for emotions. Often thought of as “sliders” (vertical scrollbars), each value carries a pair of opposed emotions. One might be joy versus sadness. Other pairs include acceptance versus disgust or fear versus anger. A small number of sliders suffices because the number of combinations grows very rapidly as more sliders are added. The system deals with all of the emotions combined, so before adding a new emotion, check whether a combination of two emotions you already model gives you the one you are thinking of adding.
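A slider pair is small enough to sketch directly. The following hypothetical EmotionSlider class stores one pair of opposed emotions as a single signed value with hard stops at -100 and +100. The dead band of 10 used to report "neutral" is an assumed tuning value, not something the text prescribes.

```vb
Imports System

' Hypothetical sketch: one slider holds a pair of opposed emotions.
' Positive values lean toward the first emotion, negative toward the second.
Public Class EmotionSlider
    Public ReadOnly Positive As String
    Public ReadOnly Negative As String
    Private _value As Integer

    Public Sub New(ByVal positiveName As String, ByVal negativeName As String)
        Positive = positiveName
        Negative = negativeName
    End Sub

    Public ReadOnly Property Value As Integer
        Get
            Return _value
        End Get
    End Property

    ' Move the slider, enforcing the hard stops at -100 and +100.
    Public Sub Nudge(ByVal amount As Integer)
        _value = Math.Max(-100, Math.Min(100, _value + amount))
    End Sub

    ' Name the dominant emotion, or "neutral" inside the assumed dead band.
    Public Function Describe() As String
        If _value > 10 Then Return Positive
        If _value < -10 Then Return Negative
        Return "neutral"
    End Function
End Class

Module SliderDemo
    Sub Main()
        Dim mood As New EmotionSlider("joy", "sadness")
        mood.Nudge(-40)
        mood.Nudge(-90)   ' -130 is clamped to -100
        Console.WriteLine(mood.Value & " " & mood.Describe())  ' prints -100 sadness
    End Sub
End Module
```

A character would carry a few of these, for example joy/sadness, acceptance/disgust, and fear/anger, and the output system would read their combination.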

On this core data, an input and output system is required. It is pointless to model an emotion that the system cannot show. It is equally pointless to model a feeling that is only subtly different from other feelings. If the player cannot tell the difference, there is no difference. Just as in personality modeling, a broad brush is required. A few archetypes suffice. The system can output feelings directly into expression and posture if the animation assets to support them exist. It can output them indirectly through the kind of techniques described earlier in this chapter.

The input system has to make emotional sense of the world. Strong emotions fade over time, but the AI has to react to events in the world around it. The simplest methods concentrate on the impact of what directly happens to the AI. Taking damage causes anger or fear. Winning the game causes joy. Restricting emotions to direct inputs gives rise to a lack of depth in characters. If the AI has more depth, it needs to respond emotionally to events in the world that happened to something else.
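As a rough sketch of such an input system, assume a single fear-versus-anger slider where positive values mean anger. The event names and their effects below are invented for illustration. The point is that direct events (taking damage) and events that happen to someone else (an ally getting hurt) both move the value, while a simple linear decay lets strong feelings fade.

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical input system for one slider: positive = anger, negative = fear.
Module EmotionInput
    ' Assumed event table: how far each event moves the slider.
    Private ReadOnly EventEffects As New Dictionary(Of String, Integer) From {
        {"took damage while healthy", 25},
        {"took damage while wounded", -30},
        {"ally was hurt", 10}
    }

    ' Apply one event (unknown events have no effect), clamped to -100..+100.
    Public Function ApplyEvent(ByVal current As Integer, ByVal eventName As String) As Integer
        Dim delta As Integer = 0
        EventEffects.TryGetValue(eventName, delta)
        Return Math.Max(-100, Math.Min(100, current + delta))
    End Function

    ' Let feelings fade: step toward zero without overshooting it.
    Public Function Decay(ByVal current As Integer, ByVal stepSize As Integer) As Integer
        If current > 0 Then Return Math.Max(0, current - stepSize)
        If current < 0 Then Return Math.Min(0, current + stepSize)
        Return 0
    End Function

    Sub Main()
        Dim feeling As Integer = 0
        feeling = ApplyEvent(feeling, "took damage while healthy")  ' 25
        feeling = ApplyEvent(feeling, "ally was hurt")              ' 35
        feeling = Decay(feeling, 5)                                 ' 30
        Console.WriteLine(feeling)
    End Sub
End Module
```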

More sophisticated AIs, particularly AIs that have plans and goals, can evaluate how events will affect their plans and goals and react with appropriate emotions [Gratch00]. This makes intuitive sense to people. Consider two roommates sharing their first apartment. The first roommate has a car, and the second one occasionally borrows it. What kind of emotional responses do we get when something goes wrong? “What do you mean, it’s no big deal that you got a flat and they will come out and fix it tomorrow? Of course you’re paying for it, but you don’t understand! I was going to drive to my girlfriend’s tonight!” The AI had a plan in place to achieve a goal, and that plan has been ruined. This is a perfect place for a negative emotion on the part of the AI. If the AI had a different plan to achieve the same goal, it would have a much different emotional response. “It’s a good thing she’s picking me up tonight. They better have it fixed by noon when I need to drive to work.” AI that deals in plans and goals is briefly touched upon in the chapter “Topics to Pursue from Here.” As you study planning AIs, keep in the back of your mind how to incorporate emotional modeling into them. Writing an AI that voices its feelings using the spoken lines that illustrate this paragraph remains a very hard problem, even for experts. The point here is not to have an AI that speaks its feelings. The spoken text is used here as a vehicle to convey the emotional response of the AI to events that affect the AI’s plans. As we have seen, modeling emotions is not particularly difficult. Modifying a planning AI to help drive the emotional model is not a task for beginners, but it should present far fewer challenges to an AI programmer experienced with planning AIs.
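The roommate example can be reduced to a toy appraisal function. This is not the method of [Gratch00]; it is a hypothetical simplification that assumes each plan is just a goal plus a list of things it depends on. An event that breaks every plan for a goal produces a strong negative reaction scaled by the goal's importance, while an event that leaves a backup plan intact produces only mild annoyance (the divisor of 5 is an arbitrary choice).

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical plan: a goal plus the things the plan depends on.
Public Class Plan
    Public Goal As String
    Public Steps As New List(Of String)
End Class

Module Appraisal
    ' Emotional change caused by losing "blocked"; negative means upset.
    Public Function Appraise(ByVal blocked As String, ByVal plans As List(Of Plan), _
                             ByVal goal As String, ByVal importance As Integer) As Integer
        Dim hitAny As Boolean = False
        Dim survivors As Integer = 0
        For Each p As Plan In plans
            If p.Goal <> goal Then Continue For
            If p.Steps.Contains(blocked) Then
                hitAny = True
            Else
                survivors += 1
            End If
        Next
        If Not hitAny Then Return 0                    ' event does not touch this goal
        If survivors > 0 Then Return -importance \ 5   ' a backup plan still works
        Return -importance                             ' goal ruined
    End Function

    Sub Main()
        Dim drive As New Plan With {.Goal = "see girlfriend"}
        drive.Steps.Add("car")
        Dim plans As New List(Of Plan) From {drive}
        Console.WriteLine(Appraise("car", plans, "see girlfriend", 60))  ' prints -60

        Dim ride As New Plan With {.Goal = "see girlfriend"}
        ride.Steps.Add("her car")
        plans.Add(ride)
        Console.WriteLine(Appraise("car", plans, "see girlfriend", 60))  ' prints -12
    End Sub
End Module
```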

As we have seen, the core data needed to model emotions is the easy part. The input and output systems carry more complexity. As you might expect, tuning the system as a whole is critical. There are some general guidelines. The first is that a handful of modeled emotions is enough. The range of 0 to +100 is also enough; finer gradations do not improve the system. The hard stop of +100 or -100 is also perfectly acceptable; people can only get so angry, and as long as they do not have a stroke, more bad news will not make them any angrier. Less obvious is that effects, both input and output, need not be linear. The effect of the food need in The Sims is not linear. The difference in happiness between a fed Sim and a very well fed Sim is very small, even if one carries a need of +10 and the other a need of +100. The difference in drive between a hungry Sim (-10) and a starving Sim (-100) is far more than a linear difference. The same idea could be applied to how anger affects good judgment; a small amount of anger creates a small impairment in inhibition, but serious anger drives the AI into actions that provide immediate satisfaction, regardless of their long-term cost. There is a wide array of non-linear curves suitable for modeling emotions. The behavioral mathematics of game AI is the subject of an entire book [Mark09]. Beginning AI programmers should know that simple linear equations will not be enough. Evaluation and selection functions are prime candidates for implementing these emotional effects.
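Two non-linear curves can illustrate the food example. Both are assumptions chosen for their shape, not formulas taken from The Sims or from [Mark09]: drive grows with the square of the deficit, and contentment saturates exponentially as a need is met.

```vb
Imports System

' Hypothetical response curves for a need that runs from -100 to +100.
Module ResponseCurves
    ' Drive to satisfy an unmet need grows with the square of the deficit,
    ' so a starving Sim (-100) is far more driven than a hungry one (-10).
    Public Function Drive(ByVal need As Integer) As Double
        If need >= 0 Then Return 0.0
        Dim deficit As Double = -need / 100.0
        Return deficit * deficit
    End Function

    ' Contentment from a met need saturates, so +10 and +100 feel similar.
    Public Function Contentment(ByVal need As Integer) As Double
        If need <= 0 Then Return 0.0
        Return 1.0 - Math.Exp(-need / 10.0)
    End Function

    Sub Main()
        For Each n As Integer In New Integer() {-100, -10, 10, 100}
            Console.WriteLine("need {0}: drive {1:F2}, contentment {2:F2}", n, Drive(n), Contentment(n))
        Next
    End Sub
End Module
```

With these curves a hungry Sim at -10 has a drive of about 0.01 while a starving Sim at -100 has a drive of 1.0, a hundredfold gap for a tenfold larger deficit. Contentment at +10 (about 0.63) is already most of the way to contentment at +100 (nearly 1.0).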

Concentrating on evoking an emotional response from the player instead of on displaying the emotional states of AI agents gives game designers considerable latitude if they wish to exploit it. A holistic approach leaves no stone unturned in the quest to pack impact into the game. For many of the avenues, the costs are reasonable and the technical risks quite low. When games also demand good modeling of emotional states, they can pick a level of sophistication appropriate to their needs. The net effect of actively managing emotional content is a game to which players will have much stronger reactions than any otherwise equivalent competition.

The fact that emotional content under the control of some form of AI can be packed into nearly all aspects of a game means that doing so will have a schedule impact across the board. A number of low-cost items may not sum to an acceptable overall cost. Paying that cost is a gamble. Not all players will react in the same way to the emotional content of a game. Players who are sensitive and observant will have markedly different reactions from players who are not. There are players who are clueless about clothing, lighting, or even the impact of a dirty environment compared to a clean one. It is a well-understood concept in game AI circles that the AI can be too subtle to be appreciated.

Evoking emotions on the cheap does not free many games from the need to directly model emotions and display them graphically through facial expression and body stance. Games that put emotional content front and center this way may not have the budget to pack emotion into every possible additional avenue available. The designer may decide that having a main character show emotion is all that is required.

Dealing in emotions at all forces a broad swath of people to become aware of the potential to deliver emotional content, like it or not. Even when AI can accurately make the decisions, the rest of the game has to be able to exploit them. It may be the case that the rest of the team is not equipped with the analytical skills needed to judge the emotional impact of their work so that they can create multiple alternatives.

The emotional payload of the more nuanced effects requires a cultural context. The difference between a black pin-stripe suit and a navy-blue one will be lost in cultures where no one wears a suit. Worse than an “I don’t get it” response are cues that confuse or even offend the player. These techniques can easily become the unwitting vehicle of hidden stereotypes and unintentional disrespect. A nuanced world in front of a clueless player is wasted effort. A clueless design in front of a sensitive player has all the makings of a perfect Internet flame storm. The last thing any game company needs is an eloquent player who feels disrespected and yanked around emotionally.

Pac-Man showed us that simply changing the color of the ghosts not only told the player that the ghosts were vulnerable, it helped imply that they were afraid of the player. For our project we will add some color to our FSM monster AI from Chapter 3. That way, we can tell how it is feeling. After we do that, we will give the emotions their own FSM that is separate from the FSM that controls actions. After giving our monster emotions, we will model the relationships between passengers on a cruise ship.

We will model the emotional state of our monster using the same states it uses for thinking. When our monster attacks, it is healthy and angry, so we will use pink as the color for that state. When our monster flees, it is wounded and afraid; we will use light gray for that state. When our monster is calmly hiding, its protective camouflage turns it green. We can do this by adding one line to the Update() routine of each of the three states.

Open the project and edit the AttackState.vb class. Add the following line to the Update() routine:

World.BackColor = Color.Pink

Switch to the FleeState.vb class. Add the following line to the Update() routine:

World.BackColor = Color.LightGray

Switch to the HidingState.vb class. Add the following line to the Update() routine:

World.BackColor = Color.LightGreen

Run the code and watch the background of the form change color with the state of the AI. We have no visual representation of our monster, but we can tell at a glance how it is feeling. Unfortunately, this forces our monster to be happy any time it is hiding, even if it is near death and unwilling to fight. The mapping between our action states and the emotional states is imperfect. It is time to give our monster a more sophisticated set of feelings.

Comment out or completely remove the three lines you just added and run the program to make sure that all of them are inoperative. We are going to add a separate FSM to model the monster’s feelings. We will need three new states, but we can reuse the existing transitions. Once we have created the states, adding them to the AI will be very easy. Add a new class to the project and name it FeelHappy.vb. Just inside the class definition, add the following code:

Inherits BasicState

After you press Enter, VB will populate the required skeletons. Add code until your file looks like the following:

Public Class FeelHappy

Inherits BasicState

Public Sub New()

Dim Txn As BasicTransition

'Get angry if I see intruders while healthy.

Txn = New SeePlayerHighHealthTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelAngry).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

'I react to health - if low, be afraid.

Txn = New LowHealthTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelAfraid).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

End Sub

Public Overrides Sub Entry(ByVal World As Monster)

World.Say("I feel happy now.")

End Sub

Public Overrides Sub ExitFunction(ByVal World As Monster)

End Sub

Public Overrides Sub Update(ByVal World As Monster)

World.BackColor = Color.LightGreen

End Sub

End Class

VB will complain because we have transitions to states that do not exist yet. Add a new class to the project. Name it FeelAfraid.vb and make it inherit from BasicState. Add code until your file looks like the following:

Public Class FeelAfraid

Inherits BasicState

Public Sub New()

Dim Txn As BasicTransition

'Order is important.

'Get angry if I see intruders while healthy.

Txn = New SeePlayerHighHealthTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelAngry).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

'If healthy and no intruder, I am happy.

Txn = New HighHealthTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelHappy).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

End Sub

Public Overrides Sub Entry(ByVal World As Monster)

World.Say("I feel afraid!")

End Sub

Public Overrides Sub ExitFunction(ByVal World As Monster)

End Sub

Public Overrides Sub Update(ByVal World As Monster)

World.BackColor = Color.LightGray

End Sub

End Class

We only need one more state. Add a new class to the project. Name it FeelAngry.vb and make it inherit from BasicState. Add code until your file looks like the following:

Public Class FeelAngry

Inherits BasicState

Public Sub New()

Dim Txn As BasicTransition

'Order is important - react to health first.

'I react to health - if low, be afraid.

Txn = New LowHealthTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelAfraid).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

'If healthy and no intruder, I am happy.

Txn = New NoPlayersTxn()

'Set the next state name of that transition.

Txn.Initialize(GetType(FeelHappy).Name)

'Add it to our list of transitions.

MyTransitions.Add(Txn)

End Sub

Public Overrides Sub Entry(ByVal World As Monster)

World.Say("I feel so angry!")

End Sub

Public Overrides Sub ExitFunction(ByVal World As Monster)

End Sub

Public Overrides Sub Update(ByVal World As Monster)

World.BackColor = Color.Pink

End Sub

End Class

What remains is to put those states into an FSM and wire that FSM to the user interface. We need a new FSM for the feelings. Switch to the code for Monster.vb and locate the declaration for the monster’s Brains. Add code for feelings so that we have two FSMs as follows:

'We need an FSM.

Dim Brains As New FSM

'We need to feel as well as act.

Dim Feelings As New FSM

Now that we have a new FSM for feelings, we need to load it with states. Add the following lines to Monster_Load() below the lines that load states into Brains:

'Load our feelings (make the start state appropriate).

Feelings.LoadState(New FeelHappy)

Feelings.LoadState(New FeelAfraid)

Feelings.LoadState(New FeelAngry)

Now we have to tell our monster to examine its feelings. Locate ThinkButton_Click() and add the following line to make our monster feel each time it thinks:

Feelings.RunAI(Me)

Now run the project. Lower the monster’s hit points first and watch it become afraid while hiding. When we piggy-backed the monster’s feelings onto its actions, we could not make it feel that way while it was hiding. Run it through the rest of the transitions to make sure that its feelings match the conditions. If we need finer-grained control, we could have the feeling FSM react to different levels of hit points than the action FSM uses. Our monster might be in combat, take damage, feel afraid but stay in combat, take more damage, and then flee.

Our monster provides better feelings when the feelings are controlled in their own FSM. We still use the simple display techniques, but we improved the emotional model. Our monster is not very sophisticated, so an FSM fills its emotional needs easily.

Now that we have a separate emotional model, we can use the emotional state to influence the behaviors of the AI. We are attempting to directly express emotions with color, but we can also indirectly express emotions with altered behaviors. Direct expression of emotion is more accessible to the player, but indirect expression may be more accessible to the AI programmer. Good AI practice is to attempt both; if the player sees that an AI character is visibly angry, the player will expect the AI character to act angry as well. (See Exercise 3 at the end of the chapter.)

For our final project, we will model needs and relationships instead of modeling emotions directly. As with The Sims, meeting needs through shared interests will build relationships. Our project purports to be the social director of a cruise ship. The director mixes people into pairs to share activities together. The director uses a mix of intentional and random elements when selecting matchups and activities. The director makes sure that people with strong needs get those needs met. The director randomly picks partners for the people with strong needs. The activities are selected at random from those activities that will meet the need. Over time, the interactions will build relationships. Let us examine the details needed to make this general description clear.

We will model only three needs: exercise, culture, and dining. Everyone starts with random values for each of their three needs. Needs can range from -100 to +100, with negative values implying an unmet need and positive values implying a met need. We will make sure that everyone starts with the sum of all of their needs equal to zero. This is a design decision that is tunable. We also will limit the initial range of any one need to -20 to +20, another tunable design decision. Each turn, every need is reduced in value by 10, for a total drop of 30 across the three needs. Every time a person does an activity, the corresponding need is increased by 30. Since everyone does one activity per turn, that exactly offsets the drop, so the net change per turn is zero and the system stays in balance.
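The needs rules translate directly into code. In this sketch the three needs live in an Integer array, and the zero-sum start comes from rejection sampling: pick two values in -20..+20, set the third to the negative of their sum, and retry if it falls outside the range. The array layout, the use of System.Random in place of the chapter's Dice module, and the clamping at -100 and +100 are assumptions for illustration.

```vb
Imports System

' Sketch of the needs rules, assuming three needs held in an Integer array
' and System.Random standing in for the chapter's Dice module.
Module NeedsSketch
    Private ReadOnly Rng As New Random()

    ' Three starting needs, each in -20..+20, summing to exactly zero.
    Public Function InitialNeeds() As Integer()
        Dim a, b, c As Integer
        Do
            a = Rng.Next(-20, 21)   ' Next's upper bound is exclusive
            b = Rng.Next(-20, 21)
            c = -(a + b)            ' forces the sum to zero
        Loop Until c >= -20 AndAlso c <= 20   ' reject if the third need is out of range
        Return New Integer() {a, b, c}
    End Function

    ' One turn: every need drops by 10, then the need met by the
    ' person's activity rises by 30. Values are clamped to -100..+100.
    Public Sub Turn(ByVal needs As Integer(), ByVal metNeed As Integer)
        For i As Integer = 0 To needs.Length - 1
            needs(i) = Math.Max(-100, needs(i) - 10)
        Next
        needs(metNeed) = Math.Min(100, needs(metNeed) + 30)
    End Sub

    Sub Main()
        Dim needs As Integer() = InitialNeeds()
        Console.WriteLine("start: {0}, {1}, {2}", needs(0), needs(1), needs(2))
        Turn(needs, 0)   ' this person's activity met need 0
        Console.WriteLine("after: {0}, {1}, {2}", needs(0), needs(1), needs(2))
    End Sub
End Module
```

Clamping only matters once a need drifts near a hard stop; until then the sum of a person's needs stays at zero from turn to turn.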

Each need will have three activities that meet that need. Exercise is met by swimming, tennis, and working out. Going to the movies, on a tour, or to a play meets the culture need. The available dining pleasures include French cuisine, Asian cuisine, and pub fare. We will keep these activities in a mini-database.

Each person will have a full set of randomly picked individual preferences, one for every activity available. This differs from The Sims, which has a small number of traits selected from a far larger list. The preferences are used along with needs to judge how a person will react to a given activity. Preferences range from -2 to +2, another tunable design decision. Positive values denote the person liking the activity. The net sum of all preferences is not constrained; the simulation includes people with both positive and negative viewpoints on life as a whole.

Everyone also tracks one-way relationship scores with all the people they have interacted with. This score starts at 0 and has no limit. Relationships are not required to be mutual. The scores are updated every time two people interact. A positive score implies a positive relationship.

There are three terms that influence the relationship score with each shared activity. The first term can be thought of as the “we think alike” term. If both parties share a positive or negative preference for an activity that they do together, it is a positive influence on the relationship. With this term, “We both hated it” builds relationships just as well as “We both loved it.” This term is computed by multiplying the two preference values together. For example, if one person dislikes (-1) pub fare and his partner strongly dislikes (-2) it, this term yields +2 for their relationship every time they get to dislike it together.

In addition to mutual preferences, individual preferences also count, giving us a second term to add into our relationship score. If either of the individuals likes the activity, it adds to their relationship score; if they do not like the activity, it subtracts. This “I like it” term allows our people to take their own opinions into account. Note that this second term need not be the same for both people. Whether the two people think alike or not, each individual still has independent preferences and wants to be catered to.

The third term in the score is need based instead of preference based. As a bonus, if the activity meets an unmet need in both people, it adds to the relationship; regardless of the food, hungry people prefer to dine with other hungry people. This bonus “we needed that” term picks up on any shared needs. All together, positive relationships are built between people who have shared preferences, do things they like to do, and meet shared needs.
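The three terms can be written as one function giving the change in how one person feels about the other after a shared activity. The text does not say how large the shared-need bonus is, so the value of 2 here is an assumption, as is treating the “I like it” term as simply the person's own preference value. Calling the function once for each member of the pair shows why relationships need not be mutual.

```vb
Imports System

' Hypothetical three-term relationship update. Preferences run -2..+2;
' "needed" means the activity's need is currently unmet for that person.
Module RelationshipTerms
    ' Assumed size of the "we needed that" bonus; the text gives none.
    Public Const SharedNeedBonus As Integer = 2

    ' Change in how "self" feels about "partner" after one shared activity.
    Public Function RelationshipDelta(ByVal selfPref As Integer, ByVal partnerPref As Integer, _
                                      ByVal selfNeeded As Boolean, ByVal partnerNeeded As Boolean) As Integer
        Dim thinkAlike As Integer = selfPref * partnerPref   ' "we think alike"
        Dim iLikeIt As Integer = selfPref                    ' "I like it"
        Dim neededThat As Integer = If(selfNeeded AndAlso partnerNeeded, SharedNeedBonus, 0)  ' "we needed that"
        Return thinkAlike + iLikeIt + neededThat
    End Function

    Sub Main()
        ' Pub fare: one person dislikes it (-1), the other strongly (-2); both hungry.
        Console.WriteLine(RelationshipDelta(-1, -2, True, True))  ' prints 3
        Console.WriteLine(RelationshipDelta(-2, -1, True, True))  ' prints 2
    End Sub
End Module
```

For the pub-fare example, the person who merely dislikes the food gains 2 + (-1) + 2 = 3 toward the partner, while the one who strongly dislikes it gains only 2 + (-2) + 2 = 2.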

Unlike The Sims, our people do not get to pick what they do or who they do it with. Each round, our cruise director puts everyone into a pool of candidates. The director selects the person in the pool with the strongest need. The director randomly picks that person’s partner, but the activity picked matches the first person’s strongest need. The activity is also randomly picked. The pair is removed from the pool, and the selection process is repeated until the pool of people is empty. The random pairing and selection drives toward all the people doing all the activities with all the other people. This is not intended as a game mechanic, but as a way to validate the simulation by driving toward good coverage of the interaction space. We know that left to themselves, people would self-select toward their own preferences and established friends.
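One round of the director's loop might look like the following sketch. The Passenger class, the need order in the activity table, and reducing each pairing to a line of text are hypothetical simplifications; a person's strongest need is taken to be their lowest need value. If the pool holds an odd number of people, this version leaves the last person unpaired for the round, a case the description above does not address.

```vb
Imports System
Imports System.Collections.Generic

' Hypothetical passenger: a name plus three need values.
Public Class Passenger
    Public Name As String
    Public Needs As Integer()   ' assumed order: 0 = exercise, 1 = culture, 2 = dining

    ' Index of the most pressing need (the lowest value).
    Public Function StrongestNeed() As Integer
        Dim best As Integer = 0
        For i As Integer = 1 To Needs.Length - 1
            If Needs(i) < Needs(best) Then best = i
        Next
        Return best
    End Function

    ' Value of that most pressing need.
    Public Function WorstValue() As Integer
        Return Needs(StrongestNeed())
    End Function
End Class

Module Director
    Private ReadOnly Rng As New Random()

    ' Activities indexed by need, in the same assumed order as Passenger.Needs.
    Private ReadOnly Activities As String()() = {
        New String() {"swimming", "tennis", "workout"},
        New String() {"movie", "tour", "play"},
        New String() {"French cuisine", "Asian cuisine", "pub fare"}}

    ' Pair everyone once; returns one "A + B: activity" line per pair.
    Public Function PlanRound(ByVal everyone As List(Of Passenger)) As List(Of String)
        Dim pool As New List(Of Passenger)(everyone)
        Dim schedule As New List(Of String)
        Do While pool.Count >= 2
            ' The person with the strongest need goes first.
            Dim first As Passenger = pool(0)
            For Each p As Passenger In pool
                If p.WorstValue() < first.WorstValue() Then first = p
            Next
            pool.Remove(first)
            ' Random partner; random activity that meets the first person's need.
            Dim partner As Passenger = pool(Rng.Next(pool.Count))
            pool.Remove(partner)
            Dim choices As String() = Activities(first.StrongestNeed())
            schedule.Add(first.Name & " + " & partner.Name & ": " & choices(Rng.Next(choices.Length)))
        Loop
        Return schedule
    End Function

    Sub Main()
        Dim people As New List(Of Passenger) From {
            New Passenger With {.Name = "Ann", .Needs = New Integer() {-15, 5, 10}},
            New Passenger With {.Name = "Bob", .Needs = New Integer() {0, 0, 0}},
            New Passenger With {.Name = "Cy", .Needs = New Integer() {8, 2, -10}},
            New Passenger With {.Name = "Di", .Needs = New Integer() {3, -3, 0}}}
        For Each line As String In PlanRound(people)
            Console.WriteLine(line)
        Next
    End Sub
End Module
```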

In addition to the needs, preferences, and relationships, we will compute some other scores to help us make sense of the simulation. Our people have their own views on life, computed as the simple sum of all of their preferences. We expect people with a positive view on life to more easily build positive relationships because they like to do more things. We expect the reverse as well. We will also compute how opinionated each person is by taking the average of the squares of their preferences. Strongly opinionated people might be candidates for strong relationships, positive or negative. We certainly expect people with weaker opinions to build their relationships more slowly. The final derived score we keep is compatibility. Compatibility between two people is computed by multiplying the matching preferences of each person and summing the result. Since the first term of the relationship score is based on multiplying the two values of a single preference, we expect compatibility to help predict strong relationships.
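The three derived scores are simple enough to show directly. This sketch assumes each person's preferences are stored as an Integer array with one entry per activity, in the same activity order for everyone; compatibility is then the dot product of two preference arrays.

```vb
Imports System

' Sketch of the derived scores, assuming one preference per activity,
' stored in the same activity order for every person.
Module DerivedScores
    ' View on life: simple sum of preferences.
    Public Function ViewOnLife(ByVal prefs As Integer()) As Integer
        Dim total As Integer = 0
        For Each p As Integer In prefs
            total += p
        Next
        Return total
    End Function

    ' How opinionated: average of the squared preferences.
    Public Function Opinionated(ByVal prefs As Integer()) As Double
        Dim total As Integer = 0
        For Each p As Integer In prefs
            total += p * p
        Next
        Return total / prefs.Length   ' "/" on Integers yields a Double in VB
    End Function

    ' Compatibility: multiply matching preferences and sum (a dot product).
    Public Function Compatibility(ByVal a As Integer(), ByVal b As Integer()) As Integer
        Dim total As Integer = 0
        For i As Integer = 0 To a.Length - 1
            total += a(i) * b(i)
        Next
        Return total
    End Function

    Sub Main()
        Dim ann As Integer() = {2, -1, 0}
        Dim bob As Integer() = {1, -2, 2}
        Console.WriteLine(ViewOnLife(ann))           ' prints 1
        Console.WriteLine(Opinionated(ann))          ' 5 / 3
        Console.WriteLine(Compatibility(ann, bob))   ' 2 + 2 + 0, prints 4
    End Sub
End Module
```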

Why do we compute the extra numbers? The derived values are to help us predict and tune. Our system mixes determinism with random chance, so trends may be slow to emerge. The preferences and needs for our people will be randomly initialized; these numbers will make it easier for us to see if any particular set of people will make for interesting interactions. Compatibility score is one of our better predictors; without some strong values in the mix, the simulation is boring. This has game design implications; randomly generated characters are fine so long as they are not all boring randomly generated characters. Let us build the simulation and see how they turn out.

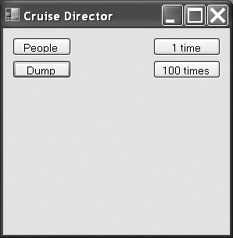

Our user interface will be quite simple, as shown in Figure 9.4.

Launch Visual Basic if it is not already running.

Create a new Windows Forms Application project and name it CruiseDirector.

In the Solution Explorer window, rename Form1.vb to Cruise.vb.

Click the form in the editing pane and change the Text property to Cruise Director.

Add a button to the form. Change the Text property to People and the Name property to PeopleButton.

Add another button to the form. Change the Text property to Dump and the Name property to DumpButton.

Add a third button to the form. Change the Text property to 1 Time and the Name property to Button1Time.

Add a fourth button to the form. Change the Text property to 100 Times and the Name property to Button100Times. You may need to make the button wider to fit the text.

Save the project.

We will begin our design from the core data and work our way outward. To do that, we start our thinking from the outside and work inward. We will need a class for people. We know that each person has needs, preferences, and relationships to store. The values for all three kinds of data are integers, but we would like to tag them with names and pass them around. Our lowest-level chunk of data will be a simple name-value pair object. Our people class will use it, and our mini-database will need it, too. The data is so simple that we will let other code manipulate it directly.

Add a class to the project and name it NVP.vb. When the editor opens, add two lines of code to the file as follows:

Public Class NVP

Public name As String

Public value As Integer

End Class

Needs and preferences will be initialized to random integer values when we create a person. To get those random values, we need a helper function. We will use the concept of rolling an N-sided die to get our integer random values. The code is extremely useful in a variety of games. Add a module to the project and name it Dice.vb. Add code to the file as follows:

Module Dice

'Get one roll on an N-sided die.

Public Function getDx(ByVal dots As Integer) As Integer

Return CInt(Int((Rnd() * dots) + 1))

End Function

End Module
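Because getDx returns values from 1 to N, shifting the roll gives any signed range the project needs, such as -2..+2 for preferences or -20..+20 for starting needs. The RollRange helper below is hypothetical, not part of the chapter's code; it relies on the same Rnd-based roll, so Randomize() should be called once at startup.

```vb
Imports System
Imports Microsoft.VisualBasic

' Sketch: mapping die rolls onto signed ranges.
Module DiceRanges
    ' Same roll as the Dice module: 1..dots inclusive.
    Public Function getDx(ByVal dots As Integer) As Integer
        Return CInt(Int((Rnd() * dots) + 1))
    End Function

    ' Hypothetical helper: uniform integer in low..high inclusive.
    ' A roll of 1 maps to low; a roll of (high - low + 1) maps to high.
    Public Function RollRange(ByVal low As Integer, ByVal high As Integer) As Integer
        Return getDx(high - low + 1) + low - 1
    End Function

    Sub Main()
        Randomize()
        Console.WriteLine(RollRange(-2, 2))     ' a preference
        Console.WriteLine(RollRange(-20, 20))   ' a starting need
    End Sub
End Module
```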

Between the name-value pair helper object and VB’s Collection object, we are ready to make our mini-database. The database stores the available activities along with the need each activity meets. The database also keeps a list of needs met by the activities. We will use the database when we create a person. From the list of needs, it can create a set of randomly initialized needs for a person. Likewise, it can create and initialize a set of randomly initialized preferences from the list of activities. We will also use the database to help the cruise director. When the director gets the strongest need, the director will want a random activity to meet that need. And when we print out the relationship data, we will need a list of available activities. The mini-database simplifies the rest of the code considerably; if it gets initialized cleanly, everything else just works.

Add a class to the project and name it MiniDB.vb. Add initialization code so that it resembles the following:

Public Class MiniDB
    'ToDo is activities grouped by need (a collection of collections).
    Dim ToDo As New Collection
    'Simple list of names of needs.
    Dim Needs As New Collection
    'Simple list of names of activities.
    Dim ActivityNames As New Collection

    Public Sub Add(ByVal Activity As String, ByVal Satisfies As String)
        Dim Category As Collection
        If Not ToDo.Contains(Satisfies) Then
            'Must be a new need.
            Debug.WriteLine("Creating " & Satisfies)
            Needs.Add(Satisfies)
            'Different needs get their own category.
            Category = New Collection
            ToDo.Add(Category, Satisfies)
        Else
            Category = CType(ToDo.Item(Satisfies), Collection)
        End If
        'Now add the activity to the category.
        If Not Category.Contains(Activity) Then
            Debug.WriteLine("Adding " & Activity & " to " & Satisfies)
            Category.Add(Activity, Activity)
        End If
        'Keep a simple list of names of activities.
        If Not ActivityNames.Contains(Activity) Then
            ActivityNames.Add(Activity, Activity)
        End If
    End Sub
End Class

When our main code initializes the database, it will add each activity with its need to the database. The ToDo collection of collections lets us use a need name to get a collection of activities that meet that need. The name of the need serves as the key in ToDo for finding the corresponding collection. Along the way, we keep simple lists of activities and needs so that other code can iterate through them. Most of the remaining code keeps us from adding the same thing twice or performs explicit type conversions.
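If you prefer typed generic collections, the same grouping can be sketched with a Dictionary(Of String, List(Of String)), which removes the CType conversions. This is an alternative layout, not the project's code, and TypedToDo, AddActivity, and ActivitiesFor are hypothetical names. Keys in VB's Collection are matched without regard to case, so the sketch passes StringComparer.OrdinalIgnoreCase to the dictionary to keep that behavior for need names.

```vbnet
Imports System
Imports System.Collections.Generic

Module TypedToDo
    ' Need name -> activities that meet it (hypothetical typed version of ToDo).
    Private ReadOnly toDo As New Dictionary(Of String, List(Of String))(StringComparer.OrdinalIgnoreCase)

    Public Sub AddActivity(ByVal activity As String, ByVal satisfies As String)
        Dim category As List(Of String) = Nothing
        ' Create the category the first time we see this need.
        If Not toDo.TryGetValue(satisfies, category) Then
            category = New List(Of String)
            toDo.Add(satisfies, category)
        End If
        ' Skip duplicate activities within a category.
        If Not category.Contains(activity) Then category.Add(activity)
    End Sub

    ' Returns the activities for a need, or an empty list if the need is unknown.
    Public Function ActivitiesFor(ByVal need As String) As List(Of String)
        Dim category As List(Of String) = Nothing
        If toDo.TryGetValue(need, category) Then Return category
        Return New List(Of String)
    End Function

    Sub Main()
        AddActivity("tennis", "exercise")
        AddActivity("swimming", "exercise")
        AddActivity("movie", "culture")
        AddActivity("tennis", "exercise")   ' duplicate, ignored
        ' Prints "tennis, swimming".
        Console.WriteLine(String.Join(", ", ActivitiesFor("exercise")))
    End Sub
End Module
```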

Since everything depends on the database, we should see if we can initialize it. Switch to the Code view of Cruise.vb and add code as follows:

Public Class Cruise
    Dim Roster As New Collection
    Dim MDB As New MiniDB

    Private Sub Cruise_Load(ByVal sender As System.Object, _
            ByVal e As System.EventArgs) Handles MyBase.Load
        Randomize()
        'Load up the database.
        MDB.Add("tennis", "exercise")
        MDB.Add("swimming", "exercise")
        MDB.Add("workout", "exercise")
        MDB.Add("movie", "culture")
        MDB.Add("tour", "culture")
        MDB.Add("drama", "culture")
        MDB.Add("French cuisine", "dining")
        MDB.Add("Asian cuisine", "dining")
        MDB.Add("pub fare", "dining")
        'Add people using the people button code.
        'Call PeopleButton_Click(Nothing, Nothing)
    End Sub
End Class

Our cruise director needs a database and people, so the first thing we did was to declare variables for them. Upon form load, the Randomize() call reseeds the random number generator so that we get a different run every time. Then the code adds activities to the database. At the end, it will eventually add a new group of people by using the PeopleButton_Click event handler. For now, we leave that call commented out. Run the code in the debugger and check the debugging output in the Immediate window. You should see each activity load in the proper place.
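Reseeding with Randomize() gives a different run every time, which is what we want in play. While tuning, though, it can help to replay exactly the same run. The Visual Basic documentation describes the idiom: call Rnd with a negative argument immediately before calling Randomize with a numeric argument. Calling Randomize with the same seed on its own does not repeat the sequence. In the sketch below, RepeatableRuns and RollsFromSeed are hypothetical names, and the seed 42 is arbitrary. The function draws the same d6 rolls twice.

```vbnet
Imports System
Imports Microsoft.VisualBasic

Module RepeatableRuns
    ' Returns the first few d6 rolls after reseeding VB's generator in a
    ' way that repeats: Rnd(-1) immediately before Randomize(seed).
    Function RollsFromSeed(ByVal seed As Double, ByVal count As Integer) As Integer()
        Rnd(-1)
        Randomize(seed)
        Dim rolls(count - 1) As Integer
        For i As Integer = 0 To count - 1
            rolls(i) = CInt(Int((Rnd() * 6) + 1))
        Next
        Return rolls
    End Function

    Sub Main()
        Dim first As Integer() = RollsFromSeed(42, 5)
        Dim second As Integer() = RollsFromSeed(42, 5)
        ' Each line shows the same roll from both runs.
        For i As Integer = 0 To first.Length - 1
            Console.WriteLine("Roll {0}: {1} and {2}", i + 1, first(i), second(i))
        Next
    End Sub
End Module
```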

Now that our database can be created, we can add the code that accesses the data. Switch back to MiniDB.vb in the editor. We will start with the database code needed to help create a person. People will require needs and preferences; we will add them in that order. Add the following code to the MiniDB class:

'Give some person a set of initialized needs.
Public Function SetOfNeeds() As Collection
    Dim PersonalNeeds As New Collection
    Dim net As Integer = 0
    Dim need As String
    Dim thisNeed As NVP = Nothing
    For Each need In Needs
        thisNeed = New NVP
        thisNeed.name = need
        'These variability parameters are tunable!
        '21 minus a roll of 1 to 41 gives -20 to +20.
        thisNeed.value = 21 - getDx(41)
        PersonalNeeds.Add(thisNeed, need)
        net += thisNeed.value
    Next
    'Everyone has a net of zero; adjust a random need.
    'Net of zero is another tuning parameter!
    If Needs.Count > 0 Then
        'Get the random need.
        thisNeed = CType(PersonalNeeds(getDx(Needs.Count)), NVP)
        'Adjust it to make our net be zero.
        thisNeed.value -= net
    Else
        Debug.WriteLine("cannot create SetOfNeeds: No needs in database.")
    End If
    Return PersonalNeeds
End Function

'Give a person a set of individual preferences.
'Preferences run from -2 to +2.
Public Function SetOfPreferences() As Collection
    Dim PersonalPreferences As New Collection
    Dim Activity As String
    For Each Activity In ActivityNames
        Dim thisPreference As New NVP
        thisPreference.name = Activity
        'The variability here is a tuning parameter.
        'Five minus three gives -2 to +2.
        thisPreference.value = getDx(5) - 3
        'Seven minus four gives -3 to +3.
        'thisPreference.value = getDx(7) - 4
        PersonalPreferences.Add(thisPreference, Activity)
    Next
    Return PersonalPreferences
End Function

Note the comments that call out the tunable parameters. Initial values for needs are restricted to the range -20 to +20, except that one randomly chosen need is then adjusted so that the values sum to zero. Both the range and the net of zero are tunable and will make a difference in the simulation. Recall that we give a bonus to relationships if both parties have an unmet need that the activity in question satisfies. If we move the balance point up from zero, that bonus becomes less likely. If we make the net sum of the need values a negative number, the bonus will become more likely; if everyone is always hungry, they will always enjoy eating together. If we allow extreme ranges in the starting values, some of the people will be quite single-minded for the first part of the simulation, and it will take longer for the true long-term trends to emerge. If we do not allow somewhat extreme values, our people will lack interesting diversity.
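The net-of-zero adjustment has a consequence worth seeing in numbers. Subtracting the net from the chosen need leaves that need equal to minus the sum of the other needs. With three needs, it can therefore land anywhere from -40 to +40, even though the other two stay within -20 to +20. The sketch below repeats the arithmetic of SetOfNeeds with a plain Integer array instead of NVP objects and checks the total over many trials. NetZeroNeeds and MakeNeeds are hypothetical names.

```vbnet
Imports System
Imports Microsoft.VisualBasic

Module NetZeroNeeds
    ' Same die roll as the chapter's Dice module.
    Function getDx(ByVal dots As Integer) As Integer
        Return CInt(Int((Rnd() * dots) + 1))
    End Function

    ' Mirrors SetOfNeeds' arithmetic (a sketch, not the chapter's code).
    Function MakeNeeds(ByVal count As Integer) As Integer()
        Dim values(count - 1) As Integer
        Dim net As Integer = 0
        For i As Integer = 0 To count - 1
            values(i) = 21 - getDx(41)        ' -20 through +20
            net += values(i)
        Next
        Dim k As Integer = getDx(count) - 1   ' zero-based index of the adjusted need
        values(k) -= net                      ' now minus the sum of the others
        Return values
    End Function

    Sub Main()
        Randomize()
        Dim worst As Integer = 0
        For trial As Integer = 1 To 10000
            Dim needs As Integer() = MakeNeeds(3)
            Dim total As Integer = 0
            For Each v As Integer In needs
                total += v
                worst = Math.Max(worst, Math.Abs(v))
            Next
            If total <> 0 Then Throw New InvalidOperationException("Net was not zero")
        Next
        ' With three needs, the adjusted need is bounded by 2 * 20 = 40.
        Console.WriteLine("Largest magnitude seen: " & worst.ToString())
    End Sub
End Module
```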

Preferences are also tunable. Because preference values will be added sometimes and multiplied other times, the range is important. Increasing the range of preference values makes strong preferences more dominant in the relationship score. The code for a range of -3 to +3 is given as comments. Running the simulation with the wider range is left as an exercise, but it is well worth doing. So far, our simulation has three tuning knobs to adjust, and it is a good experience for AI programmers to try their hand at tuning a simulation.
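A quick calculation shows how much the wider range matters. Both die expressions produce every value in their range with equal probability. The average squared preference is the expected value of what LongDump reports as Op. It is 2 for -2 to +2 and 4 for -3 to +3. The largest possible product of two preferences also grows from 4 to 9. The small program below simply averages the squares over each range. PreferenceSpread and MeanSquare are hypothetical names.

```vbnet
Imports System

Module PreferenceSpread
    ' Average of value^2 over a uniform integer range lo..hi.
    Function MeanSquare(ByVal lo As Integer, ByVal hi As Integer) As Double
        Dim total As Integer = 0
        For v As Integer = lo To hi
            total += v * v
        Next
        Return total / (hi - lo + 1)   ' VB's / always yields a Double
    End Function

    Sub Main()
        ' getDx(5) - 3 is uniform on -2..+2: (4+1+0+1+4)/5 = 2.
        Console.WriteLine(MeanSquare(-2, 2))
        ' getDx(7) - 4 is uniform on -3..+3: (9+4+1+0+1+4+9)/7 = 4.
        Console.WriteLine(MeanSquare(-3, 3))
    End Sub
End Module
```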

The cruise director needs the database to pick an activity and to give the list of activities. We will add that code now to finish up the database. Add the following to the MiniDB class:

'Get a random activity for this need.
Public Function ActivityForNeed(ByVal Satisfies As String) As String
    'Find the collection of activities for this need.
    If ToDo.Contains(Satisfies) Then
        'We have a collection for this need; get access to it.
        Dim category As Collection = CType(ToDo.Item(Satisfies), Collection)
        'Pick a random item.
        Dim i As Integer = getDx(category.Count)
        'The lower-level collection holds activity names (strings).
        Return CStr(category(i))
    Else
        Debug.WriteLine("Error: MiniDB unable to meet need " & Satisfies)
        Return ""
    End If
End Function

'What is the master list of activities?
Public Function ActivityList() As Collection
    'Give them a copy of our list instead of our actual list.
    Dim alist As New Collection
    Dim activity As String
    'Copy from our list to theirs.
    For Each activity In ActivityNames
        alist.Add(activity, activity)
    Next
    Return alist
End Function

That completes the code for the mini-database. We can now initialize the needs and preferences of our people, so we should work on people next.

Create a class and call it Person.vb. We start with the data we store and the code that does initialization. Add code to the class as follows:

Public Class Person
    Dim myname As String
    Dim myNeeds As New Collection
    Dim myPreferences As New Collection
    Dim myRelationships As New Collection

    Public Sub New(ByVal name As String, ByVal MDB As MiniDB)
        myname = name
        'Load all of the needs.
        myNeeds = MDB.SetOfNeeds
        'Load my preferences.
        myPreferences = MDB.SetOfPreferences
    End Sub
End Class

All our people require to start is their name and a database from which to get their needs and preferences. People will lack any relationships until they start to interact. The cruise director needs to know each person’s highest need. Because needs grow stronger as their values fall, the highest need is the one with the lowest value. Add the following to the Person class:

'What is my highest need? We need both the name and value.
Public Function HighestNeed() As NVP
    'Default to our first need.
    Dim highNeed As NVP = CType(myNeeds(1), NVP)
    Dim someNeed As NVP
    For Each someNeed In myNeeds
        'A lower value means a stronger need, so use it instead.
        If someNeed.value < highNeed.value Then highNeed = someNeed
    Next
    Return highNeed
End Function

In addition, the simulation will want to call for adjustments to the needs. Needs get stronger over time, and interactions satisfy them. Add the following code to the Person class:

'We interacted to meet some need.
Public Sub EaseNeed(ByVal Need As String)
    Dim someNeed As NVP = CType(myNeeds(Need), NVP)
    '10 points per need balances every need dropping 10 each turn
    '(30 points for our 3 needs).
    someNeed.value += 10 * myNeeds.Count
    'Clip at +100.
    If someNeed.value > 100 Then someNeed.value = 100
End Sub

'Every turn we need more.
Public Sub IncAllNeeds()
    Dim someNeed As NVP
    'All my needs get 10 points worse.
    For Each someNeed In myNeeds
        someNeed.value -= 10
        'Clip at -100.
        If someNeed.value < -100 Then someNeed.value = -100
    Next
End Sub

The code for meeting a need is self-balancing. It knows that all needs get stronger by 10 each turn, so the amount of satisfaction has to be 10 times the number of needs to maintain balance. Keeping balance and having all needs act the same way is a design decision. We start with a balanced and conservative simulation. We can give it wider swings and variability if it proves unsatisfactory. Needs change when people interact, but so do relationships. Add the following code to the Person class:
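To see the balance at work, the sketch below tracks one person's three needs with plain integers. For illustration only, it assumes that each turn first applies IncAllNeeds and then eases the person's current highest need once. The chapter's actual turn loop is not part of this section, and the module name and starting values are hypothetical. As long as no value hits the ±100 clip, the total never changes, because each turn removes 30 points and restores 30 points.

```vbnet
Imports System

Module BalanceDemo
    ' Three needs, as in the cruise example. Lower value = stronger need.
    Dim needs() As Integer = {-5, 12, -7}

    ' Like Person.IncAllNeeds: every need gets 10 points worse, clipped at -100.
    Sub IncAllNeeds()
        For i As Integer = 0 To needs.Length - 1
            needs(i) = Math.Max(needs(i) - 10, -100)
        Next
    End Sub

    ' Like Person.HighestNeed: index of the lowest value (first one wins ties).
    Function HighestNeed() As Integer
        Dim best As Integer = 0
        For i As Integer = 1 To needs.Length - 1
            If needs(i) < needs(best) Then best = i
        Next
        Return best
    End Function

    ' Like Person.EaseNeed: add 10 per need, clipped at +100.
    Sub EaseNeed(ByVal i As Integer)
        needs(i) = Math.Min(needs(i) + 10 * needs.Length, 100)
    End Sub

    Function Total() As Integer
        Dim sum As Integer = 0
        For Each v As Integer In needs
            sum += v
        Next
        Return sum
    End Function

    Sub Main()
        For turn As Integer = 1 To 6
            IncAllNeeds()
            EaseNeed(HighestNeed())
            Console.WriteLine("Turn {0}: {1}, {2}, {3} (total {4})", _
                turn, needs(0), needs(1), needs(2), Total())
        Next
    End Sub
End Module
```

With these starting values, the three needs take turns being the strongest, and the values return to -5, 12, -7 every third turn.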

Public Sub UpdateRelationship(ByVal theirName As String, _
        ByVal howMuch As Integer)
    Dim thisRelation As NVP
    'Have we met?
    If myRelationships.Contains(theirName) Then
        'Update the existing relationship.
        thisRelation = CType(myRelationships(theirName), NVP)
        thisRelation.value += howMuch
    Else
        'Create a new relationship.
        thisRelation = New NVP
        thisRelation.name = theirName
        thisRelation.value = howMuch
        'Store the relationship.
        myRelationships.Add(thisRelation, theirName)
    End If
End Sub

'A simple Boolean when the caller does not care about magnitude.
Public Function NeedsSome(ByVal need As String) As Boolean
    Dim someNeed As NVP = CType(myNeeds(need), NVP)
    Return (someNeed.value <= 0)
End Function

'Return HOW MUCH they like an activity.
Public Function Likes(ByVal activity As String) As Integer
    Dim somePref As NVP = CType(myPreferences(activity), NVP)
    Return somePref.value
End Function

The NeedsSome() function is used when computing the relationship score for an interaction. The third term in the computation was a bonus if both parties had an unmet need satisfied by the activity, so we need to be able to ask a person whether a particular need is unmet; NeedsSome() treats any value of zero or below as unmet. The other parts of the relationship score need a person’s preference value for an activity, which is provided by the Likes() function.

The final code for a person handles the various ways outside code interrogates a person in order to print out results. The simulation will make use of these in debugging statements that let us see our results. Add the following code to the Person class:

Public Function CurrentRelationship(ByVal theirName As String) As Integer
    'If we have met them...
    If myRelationships.Contains(theirName) Then
        Dim rel As NVP = CType(myRelationships(theirName), NVP)
        '...then return the value of our relationship.
        Return rel.value
    End If
    'Return 0 if we haven't met yet.
    Return 0
End Function

'Give back my name and my needs in a compact form.
Public Function ShortDump() As String
    Dim sn As String = myname & "["
    Dim someNeed As NVP
    For Each someNeed In myNeeds
        sn = sn & " " & someNeed.value.ToString
    Next
    sn = sn & " ]"
    Return sn
End Function

Public Function LongDump() As String
    Dim ld As String = ""
    Dim opinionated As Double = 0.0
    Dim view As Integer = 0
    Dim attr As NVP
    For Each attr In myPreferences
        'Strong preferences count more.
        opinionated += attr.value * attr.value
        'Keep the sign when building their total outlook.
        view += attr.value
        'Build the string of preferences.
        ld = ld & " " & attr.name & "=" & attr.value.ToString
    Next
    Return (Me.ShortDump & " View=" & view.ToString & " Op=" & _
        Format(opinionated / myPreferences.Count, "0.00") & "; " & ld)
End Function

Public Function Name() As String
    Return myname
End Function
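The two summary numbers in LongDump measure different things. View adds the preferences with their signs, so likes and dislikes cancel. Op averages their squares, so strong opinions count whichever way they point. The stand-alone sketch below recomputes both numbers for two hypothetical passengers with nine activities each. The module, the functions, and the data are all illustrative. Both passengers have a View of 0, but one is far more opinionated than the other.

```vbnet
Imports System

Module DumpMath
    ' View keeps the sign, so likes and dislikes cancel out.
    Function View(ByVal prefs() As Integer) As Integer
        Dim total As Integer = 0
        For Each p As Integer In prefs
            total += p
        Next
        Return total
    End Function

    ' Op averages the squares, so strong likes and dislikes both count.
    Function Op(ByVal prefs() As Integer) As Double
        Dim total As Double = 0.0
        For Each p As Integer In prefs
            total += p * p
        Next
        Return total / prefs.Length
    End Function

    Sub Main()
        ' Two hypothetical passengers, nine activities each.
        Dim passionate() As Integer = {2, -2, 2, -2, 0, 1, -1, 0, 0}
        Dim easygoing() As Integer = {0, 0, 1, 0, 0, -1, 0, 0, 0}
        ' Both have View = 0; Op is 18/9 = 2 versus 2/9 (about 0.22).
        Console.WriteLine("View={0} Op={1:0.00}", View(passionate), Op(passionate))
        Console.WriteLine("View={0} Op={1:0.00}", View(easygoing), Op(easygoing))
    End Sub
End Module
```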

Now that we can create a database and some people, we are ready for the cruise director to make an appearance.

Switch to the Code view of the Cruise class. Add code as follows:

Private Sub PeopleButton_Click(ByVal sender As System.Object, _
        ByVal e As System.EventArgs) Handles PeopleButton.Click
    Dim people() As String = {"Drackett", "Jones", "Lincoln", _
        "Morrill", "Stradley", "Taylor"}
    Dim surname As String
    Debug.WriteLine("+++++++++ LOADING NEW PEOPLE.")
    'Remove prior people.
    Roster.Clear()
    'Load up the roster with new people.
    For Each surname In people
        Roster.Add(New Person(surname, MDB))
    Next
End Sub

Uncomment the call to PeopleButton_Click near the end of the Cruise_Load event handler. We commented it out earlier because we lacked any people code. Now that the Person class is complete, we can do more testing.