In previous chapters, our crawlers downloaded web pages sequentially, waiting for each download to complete before starting the next one. Sequential downloading is fine for the relatively small example website but quickly becomes impractical for larger crawls. Crawling a large website of 1 million web pages at an average of one web page per second would take over 11 days of nonstop downloading. This time can be reduced significantly by downloading multiple web pages simultaneously.
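As a quick check of that figure: one million pages at one page per second is 1,000,000 seconds, and dividing by 86,400 seconds per day gives roughly 11.6 days. The helper below is a hypothetical sketch (`crawl_days` is not part of the book's code) that also estimates an idealized time with several parallel workers, assuming each worker sustains the same rate independently. Real crawls rarely scale this cleanly because of bandwidth limits and server-side throttling.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def crawl_days(num_pages, pages_per_second=1.0, workers=1):
    """Estimate crawl duration in days.

    Assumes ideal linear scaling: every worker downloads at
    pages_per_second without slowing the others down.
    """
    total_seconds = num_pages / (pages_per_second * workers)
    return total_seconds / SECONDS_PER_DAY


print(round(crawl_days(1000000), 2))              # 11.57 (sequential)
print(round(crawl_days(1000000, workers=10), 2))  # 1.16 (10 workers, idealized)
```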

This chapter will cover downloading web pages with multiple threads and processes, and then compare the performance to sequential downloading.
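To illustrate why concurrency helps before building the real crawler, here is a minimal sketch written for Python 3. It is not the implementation developed in this chapter: it uses the standard library's `concurrent.futures.ThreadPoolExecutor`, and a hypothetical `fake_download` function that only sleeps to stand in for network latency, so it runs offline. The URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url, delay=0.05):
    """Hypothetical stand-in for a download: blocks like a network call."""
    time.sleep(delay)
    return '<html>%s</html>' % url


def timed(func, *args):
    """Run func(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


def sequential(urls):
    # Each download must finish before the next one starts.
    return [fake_download(url) for url in urls]


def threaded(urls, max_workers=10):
    # Downloads run in a pool of threads; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(fake_download, urls))


urls = ['http://example.com/page%d' % i for i in range(20)]
seq_pages, seq_time = timed(sequential, urls)
thr_pages, thr_time = timed(threaded, urls)
print('sequential: %.2fs, threaded: %.2fs' % (seq_time, thr_time))
```

Threads help here because a downloading thread spends most of its time waiting on the network, and other threads can run during that wait. `concurrent.futures.ProcessPoolExecutor` offers the same `map`/`submit` interface using processes instead of threads.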

To test the performance of concurrent downloading, it would be preferable to have a larger target than the example website. For this reason, this chapter uses the Alexa list, which tracks the top 1 million most popular websites according to users who have installed the Alexa Toolbar. Only a small percentage of people use this browser plugin, so the data is not authoritative, but it is fine for our purposes.

These top 1 million websites can be browsed on the Alexa website at http://www.alexa.com/topsites. Additionally, a zipped CSV file of this list is available at http://s3.amazonaws.com/alexa-static/top-1m.csv.zip, so scraping the Alexa website is not necessary.

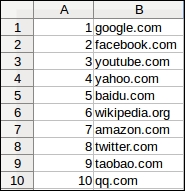

The Alexa list is provided as a CSV file with two columns: the rank and the domain.

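Extracting this data requires a number of steps, as follows:

1. Download the .zip file.
2. Extract the CSV file from the .zip file.
3. Parse the CSV file.
4. Iterate over each row of the CSV file and extract the domain.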

Here is an implementation to achieve this:

```python
import csv
from zipfile import ZipFile
from StringIO import StringIO  # Python 2 module; Python 3 uses io.BytesIO
from downloader import Downloader

D = Downloader()
zipped_data = D('http://s3.amazonaws.com/alexa-static/top-1m.csv.zip')
urls = []  # top 1 million URLs will be stored in this list
with ZipFile(StringIO(zipped_data)) as zf:
    # the archive holds a single CSV file
    csv_filename = zf.namelist()[0]
    # each row is (rank, domain); only the domain is needed
    for _, website in csv.reader(zf.open(csv_filename)):
        urls.append('http://' + website)
```

You may have noticed that the downloaded zipped data is wrapped with the StringIO class before being passed to ZipFile. This is necessary because ZipFile expects a file-like interface rather than a string. Next, the CSV filename is extracted from the list of filenames in the archive. The .zip file contains only a single file, so the first filename is selected. Then, the rows of this CSV file are iterated, and the domain in the second column is added to the URL list. The http:// protocol is prepended to each domain to make it a valid URL.
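The code above targets Python 2. In Python 3 the `StringIO` module no longer exists and downloaded content arrives as bytes, so a port would wrap the data in `io.BytesIO` and decode the CSV with `io.TextIOWrapper`. The sketch below assumes that port; to stay runnable offline it builds a tiny .zip in memory instead of downloading the real file. The helper names `build_sample_zip` and `extract_urls` are hypothetical, and the rows are made-up placeholders, not Alexa data.

```python
import csv
import io
from zipfile import ZipFile


def build_sample_zip():
    """Create an in-memory .zip holding a small CSV in the rank,domain layout."""
    buffer = io.BytesIO()
    with ZipFile(buffer, 'w') as zf:
        zf.writestr('top-1m.csv', '1,example.com\n2,example.org\n3,example.net\n')
    return buffer.getvalue()  # raw bytes, as a downloader would return them


def extract_urls(zipped_data):
    urls = []
    # ZipFile needs a file-like object, so wrap the raw bytes in BytesIO.
    with ZipFile(io.BytesIO(zipped_data)) as zf:
        csv_filename = zf.namelist()[0]
        # zf.open() yields bytes; TextIOWrapper decodes them for csv.reader.
        with io.TextIOWrapper(zf.open(csv_filename), encoding='utf-8', newline='') as f:
            for _, website in csv.reader(f):
                urls.append('http://' + website)
    return urls


print(extract_urls(build_sample_zip()))
# ['http://example.com', 'http://example.org', 'http://example.net']
```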

To reuse this code with the crawlers developed earlier, it needs to be modified to use the scrape_callback interface:

```python
import csv
from zipfile import ZipFile
from StringIO import StringIO


class AlexaCallback:
    def __init__(self, max_urls=1000):
        self.max_urls = max_urls
        self.seed_url = 'http://s3.amazonaws.com/alexa-static/top-1m.csv.zip'

    def __call__(self, url, html):
        # only the seed URL holds the zipped Alexa list
        if url == self.seed_url:
            urls = []
            with ZipFile(StringIO(html)) as zf:
                csv_filename = zf.namelist()[0]
                for _, website in csv.reader(zf.open(csv_filename)):
                    urls.append('http://' + website)
                    # stop once enough URLs have been collected
                    if len(urls) == self.max_urls:
                        break
            return urls
```

A new input argument, max_urls, was added here to set the number of URLs to extract from the Alexa file. By default, this is set to 1000 URLs, because downloading a million web pages takes a long time (as mentioned in the chapter introduction, over 11 days when downloaded sequentially).
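For readers on Python 3, the callback might be ported as in the sketch below. This is an assumption-laden adaptation, not the book's code: it swaps `StringIO` for `io.BytesIO`, decodes the CSV with `io.TextIOWrapper`, and uses `itertools.islice` to stop after `max_urls` rows instead of the explicit counter and `break`. For any other page it returns `None`, as the original does implicitly. The usage at the bottom feeds it a small in-memory .zip with placeholder domains rather than the real download.

```python
import csv
import io
from itertools import islice
from zipfile import ZipFile


class AlexaCallback:
    """Python 3 sketch of the scrape callback that seeds a crawl from the Alexa list."""

    def __init__(self, max_urls=1000):
        self.max_urls = max_urls
        self.seed_url = 'http://s3.amazonaws.com/alexa-static/top-1m.csv.zip'

    def __call__(self, url, html):
        # Only the seed URL contains the zipped list; other pages yield nothing.
        if url != self.seed_url:
            return None
        with ZipFile(io.BytesIO(html)) as zf:
            csv_filename = zf.namelist()[0]
            with io.TextIOWrapper(zf.open(csv_filename), encoding='utf-8', newline='') as f:
                rows = csv.reader(f)
                # islice stops reading after max_urls rows, like the explicit break.
                return ['http://' + website for _, website in islice(rows, self.max_urls)]


# Usage with a hypothetical in-memory archive (placeholder rows, not Alexa data).
buffer = io.BytesIO()
with ZipFile(buffer, 'w') as zf:
    zf.writestr('top-1m.csv', '1,example.com\n2,example.org\n3,example.net\n')

callback = AlexaCallback(max_urls=2)
print(callback(callback.seed_url, buffer.getvalue()))
# ['http://example.com', 'http://example.org']
print(callback('http://example.com', b''))
# None
```

Because `islice` reads lazily from the CSV reader, only the first `max_urls` rows are parsed, which keeps the default of 1000 URLs cheap even though the file lists a million domains.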