Challenges will first be described, and after a detailed analysis, we will formalize the patterns that are most suitable for solving the problems at hand. Not all SOA patterns related to Orchestration will be covered here; we will focus only on the candidates that are most frequently (in our opinion) engaged in the practical implementation of Service Inventory. We have mentioned some of these candidates several times already: a Service Broker together with Intermediate Routing, acting as an agnostic Composition Controller.

Tip

These patterns are related to ESB primarily. The telecom example presented in The telecommunication primer section will demonstrate that these patterns are very important for Orchestration as well. There are some more patterns that are not directly related to Orchestration, but without them, it will be impossible to build a reliable EBF framework. Our intention is not to follow the SOA pattern catalog, but rather demonstrate the practical values of the patterns.

To reuse something, we first need to establish an easily accessible inventory of reusable entities and make sure that these entities are truly reusable. From Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks, you are aware of the eight principles we must follow to achieve the four desirable characteristics. Simply put, the evaluation spreadsheet for any solution contains eight rows and four columns for each component you are planning to evaluate. Surely, you can place your own requirements at the top, but bear in mind the tangibility of your criteria. Obviously, you will have to pay some price for reusability as well. You need to balance your tactical and strategic goals wisely, but what is obvious from the presented telecom example is that strategic targets were sacrificed.

Your first task as a lead architect is to meet the development team and review their design of the new Order Management System service. You must evaluate the possibility of improving the current Orchestration layer in order to reduce operational costs (first of all, by minimizing the number of service installations). Another obvious choice would be to scrap the Order-oriented orchestration entirely and select a commercial product, for instance, Oracle Communications Order and Service Management (OSM).

Operations Support Systems and Business Support Systems (OSS/BSS) form the core of the telecommunication domain, handling customer relationship management (CRM), order management, and procurement, among other functionalities.

TMForum (www.tmforum.org) is the main standardization authority in telecommunication. Its eTOM specification was used for implementing the first release of the Pan-American service layer, suitable for providing a unified order management.

Physical realizations presented by three tightly coupled Orchestrated services are as follows:

Obviously, the realization is on BPEL, as the development was initially carried out on the previous Version 10g and then migrated and refactored for 11g.

Service Request is received as a delimited file, containing one or more ordered items according to the advertised CTU products. The COM module verifies a client's profile and the current products registered for this customer.

Further, in the TOM module, the Service Request is converted into a work order with lines that are sorted according to business logic and enriched with the client's information. For instance, if a client needs TV channels in higher quality over the Internet, the currently provisioned Internet bandwidth must be adjusted to these requirements. Consequently, copper or optic cables must be considered and verified; some newly advertised features cannot be supported by older versions of the Set Top Box (STB).

There are certain interdependencies between core telecom products such as VOIP and Internet, and Order provisioning must adhere to these rules of practical fulfillment. From the field force management perspective, for instance, you cannot send a technician to install the cable modem or STB if the cable requirements are not met. All of this must be carefully analyzed for converting the received commercial order into its technical implementation plan.

Therefore, Order lines are grouped for parallel or sequential processing and are passed to the service provisioning module for actual processing. As you can understand, every single line of commercial order could be potentially converted into multiple lines of technical tasks that are related to certain application endpoints or other compositions. Thus, there are a lot of IF-ELSE controls included in the last module in an attempt to cover all the possible business combinations.

This last module presents a lot of adapters and partner links to endpoint systems, acting as service providers for IP Provisioning, DTV Provisioning, registration/alteration in CRM, and so on. After completing the list of technical tasks that are related to a single Order line, its status must be returned to the caller of the process. The last successfully executed Order line will update the Order status if all the line invocations were successful. A failure in the execution of the group of invocations (or single operation in the group) related to a single Order line leads to complex compensation activities. The complexity could be very high as the number of combinations of technical tasks related to one Order line is massive. Thus, in most cases, developers just park the error order in the error queue for manual recovery. In an attempt to help operational personnel with order recovery, developers decided to log error information with excessive details. Regretfully, due to the complexity of the compositions and the number of telecom subsystems involved, there are also several logs and information related to errors that are not centralized.

To summarize this, Service Request Input is in a delimited file format where delimiters could vary, the number of lines could potentially be unlimited, and line terminators are not always in place. This is the result of some inconsistencies in the legacy system that is responsible for the construction of this file. This fact complicates the implementation of the adapter that is responsible for data format transformation at the receiving endpoint. It appears that this adapter is part of a COM process.

The development team confirmed that an XML object, constructed after the data format transformation by the receiving adapter, is in full compliance with the TMForum data model (Telco CDM Order), implementing all the declared elements. Most of these elements are not in use at the moment, thereby presenting placeholders for further business implementation. Not all elements are presented in an optional way. Therefore, the whole structure is propagated to the ultimate service provider's adapter, passing all the three orchestrated services to it without moving on to the second transformation.

Access to the Customer DB and Service catalog (commercial service term) is implemented using DB adapters, as these resources do not provide public interfaces that are suitable for the new OM system. These resources are also consumed by other applications that perform read-write operations. For a couple of hours every day, Customer DB handles excessive workload caused by some DWH OLTP operations; thus, to address latency during this period, developers increased the timeout for this particular adapter.

The Product catalog (and its DB) is presented as a separate service with a standard contract. The XSD for this service was autogenerated, ensuring that the data model was presented very precisely in the XML schema.

The level of standardization of the presented contract is also ensured by the fact that it was generated from an entity object based on an existing data model, which means that all the basic operations such as GET and SET are in place.

Most importantly, developers confirmed that these three Orchestration services are truly universal and ready to serve multitenant requests. It was achieved by placing complex branching logic into TOM and COM with separate branches for each country/affiliate. For some use cases, which are not formalized yet, separate placeholders are preserved for later implementation. At some places, additional transformation will be implemented in the next phases to make it rapidly available for adapters.

The development team is not concerned about the size of the composition, as they believe that the partial state deferral DB will be capable of handling the process hibernation state. Error-handling routines are covered by throwing SOAP fault errors with maximum details, as we mentioned previously.

Branching logic with dynamic expansion of branches depends on the root conditions that lead to an avalanche-like physical implementation. Starting from obvious business fractions, it would be very hard to stop. At the time of initial analysis, three coupled processes had nearly 20,000 lines of BPEL code (the code was written over several years by a very big consulting company). It not only makes the code unreadable (by other developers), but also unmanageable (by ops, as opening the failed flow in the Enterprise Manager (EM) console could take five minutes). By the way, did we mention that the company has had operations in ten countries, and the BPEL code branches have to accommodate each of these countries' specific logic as well?

We believe that the situation looks pretty familiar to many of us (certainly to telecom architects), and that the strong temptation to turn to Oracle OSM is only restrained by the nagging feeling that the same logic would have to be deployed on another, more secluded tool, adding another silo to the CTU farm and increasing the dependency on the vendor.

The detailed analysis pattern together with Enterprise Inventory and Logic Centralization (see the second figure under the SOA Service Patterns that help to shape a Service Inventory section in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks) shapes the boundaries of our reusable service catalog in a way that is similar to the separation of concerns principle. Applying the "divide and conquer" rule means gradually adapting bulky, silo-like BPEL processes to the idea of dynamic assembly and service consumption. The initial step here is to separate the automated logic (something we can code and run) from manual operations. In general, this is done already, but some calls to frontend GUI services must still be isolated for proper human task implementations. The next step is to identify business cases that are related to the provisioning of core telecom products (TV, Internet, and Voice) and their bundles. Technical cases that support business cases also have to be separated and then arranged into dedicated services (ACS, STB control flows, and so on).

The common parts of all Orchestrated services (also as Orchestrated services) must be extracted and segregated as a result of this analysis. You do not have to implement them physically in the first place; however, as long as we are dealing with BPEL, it would not be a problem to identify the IF-ELSE branches in a copy of the analyzed process and take them out, storing the new flow under a new version and/or name. Eventually, we can drill down through all our processes to the realization that the diversity of branches is mostly related to geographic specifics, represented by the following:

- Different endpoints of similar applications in regional installations

- Different combinations of similar services/applications due to regional business specifications

The two-step approach, based on initial functional decomposition followed by logic abstraction and centralization, is presented in the following block diagram. These patterns must be applied gradually, to the most obvious IF-ELSE branches first, avoiding a big bang implementation.

This segregation leads to the following:

- We have three products, and each has around 20 to 25 different business flows. (For Internet, we have flows to provide high speed, cease high speed, select the bandwidth, and so on. For TV, we have flows to request the relevant TV package, cancel a TV channel, add a channel to a package, and so on.)

- We have ten countries where each country could have its own variation of business flows.

- In each country, we could have two (sometimes more) affiliates, providing individual or aggregated services.

- We have seven telecom-specific applications for all those countries that participate in our OSS/BSS business flows and one or two specific applications for some countries.

- Variations within an individual process for different countries are insignificant. Usually, it's just a sequence of invocations of similar applications. Anyway, it makes processes different.

- A single branch (or decomposed process with concise and formalized functional boundaries) has up to 10 invocations (one application can be called several times).

No wonder the realization of all of these in practically every single BPEL process was problematic; simple math can provide us with this: 3 products * 20 business cases * 10 countries * 2 affiliates = 1200 different combinations of single order provisioning (this is a quite modest number; we could have more combinations).
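The multiplication above is easy to check, and it is worth seeing how quickly the figure grows when one factor changes. The following is a minimal sketch that uses only the figures quoted in the text; the dictionary keys are illustrative labels, not names from the CTU solution.

```python
from math import prod

# Figures quoted in the text; the keys are illustrative labels only.
dimensions = {
    "products": 3,          # TV, Internet, and Voice
    "business_flows": 20,   # lower bound of the 20 to 25 flows per product
    "countries": 10,
    "affiliates": 2,        # per country, sometimes more
}

combinations = prod(dimensions.values())
print(combinations)  # 1200

# The same calculation with the upper bound of 25 flows per product.
upper = prod({**dimensions, "business_flows": 25}.values())
print(upper)  # 1500
```

Taking the upper bound of 25 flows per product already pushes the count to 1,500, which is why the text calls 1,200 a modest number.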

Thus, potentially, we have 1,200 services (task-orchestrated models) to maintain in our Service Inventory, and it's definitely clear that it cannot be implemented as a single Order Management process (sorry, Order Fusion Demo). However, what's wrong with 1,000 BPEL processes? Firstly, it's quite a big number to maintain on the server. It will require quite a powerful server farm and considerable Governance efforts to control versions, DB utilization when a state is dehydrated, and significant memory resources for services running in parallel. It seems that this extreme level of functional decomposition is not good either as so many similar services will complicate Governance and error recovery, plus, again, the hardware resources' consumption will be considerable.

Balance must be maintained during Functional Decomposition (1) and Service Refactoring (2). Not every use case (business process) is equally demanding. You should focus on refactoring 20 percent of the services, leaving the remaining 80 percent to handle the task at hand (yes, common sense again). Do not rush to decompose the hybrid Entity services. Try to separate the agnostic and non-agnostic parts first, keeping in mind Occam's razor (http://en.wikipedia.org/wiki/Occam's_razor).

Quite soon, we will have our core atomic business processes, related to certain use cases of Order provisioning, decomposed and stored separately in Service Inventory. Actually, we just started shaping our Service Inventory and are not really concerned about its taxonomy. All we need to know at this moment is that we will have two distinctive layers:

- Task-orchestrated layers for decomposed and refactored BPEL processes, presenting atomic processing legs

- Entity services (Product, Service, and Customer), which for the time being are also presented as BPEL processes and are primary candidates for rewriting in an appropriate language (staying with Oracle: Java + Spring JPA)

You may think that BPEL processes in the task layer will remain untouched, and that would be a mistake. We will come to this quite soon. The Application of Process Centralization (5) pattern is just a beginning; we will focus on Service Normalization (6) shortly. Now what's interesting is how we will invoke our centralized services.

It is obvious that SPC will now act as a composition initiator, and we are quite close to realization of our Composition Controller (not agnostic yet). This controller will isolate the initiator from service(s)-actors, hide the complexity of the composition, and provide dynamic invocation together with the service locator component. From the previous figure, you can clearly see that this is the description of a classic J2EE Business Delegate, the way it's described in http://www.corej2eepatterns.com and http://www.oracle.com/technetwork/java/businessdelegate-137562.html. Refer to the following points:

- We want to promote Loose Coupling between the initiator and service-worker

- We want to minimize service calls from the composition initiator for fulfilling this complex task

- We would like to shield the initiator from composition or invocation exceptions, thereby providing a clear and understandable error context and completion status

- We want to keep this process dynamic, allowing logic to mutate without affecting the initiator

- We want to maintain this delegation as highly manageable, allowing us to easily change the business rules within the composition
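The goals listed above can be sketched as a small Business Delegate backed by a service locator. This is only an illustration of the pattern, not the chapter's BPEL realization: the class names, the `Outcome` structure, and the use-case keys such as `TV.AddChannel` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

# Hypothetical service signature: takes a payload, returns a result.
Service = Callable[[Any], Any]


class ServiceLocator:
    """Maps a use-case key to a callable service (stand-in for a registry lookup)."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, use_case: str, service: Service) -> None:
        self._services[use_case] = service

    def locate(self, use_case: str) -> Service:
        return self._services[use_case]  # raises KeyError if unknown


@dataclass
class Outcome:
    status: str                   # "COMPLETED" or "FAILED"
    result: Any = None
    error: Optional[str] = None   # readable error context for the initiator


class BusinessDelegate:
    """Single entry point that hides lookup, invocation, and failures."""

    def __init__(self, locator: ServiceLocator) -> None:
        self._locator = locator

    def execute(self, use_case: str, payload: Any) -> Outcome:
        try:
            service = self._locator.locate(use_case)
        except KeyError:
            return Outcome("FAILED", error=f"no service registered for {use_case!r}")
        try:
            return Outcome("COMPLETED", result=service(payload))
        except Exception as exc:  # shield the initiator from invocation errors
            return Outcome("FAILED", error=f"{use_case}: {exc}")


locator = ServiceLocator()
locator.register("TV.AddChannel", lambda order: f"channel added for {order['customer']}")
delegate = BusinessDelegate(locator)

ok = delegate.execute("TV.AddChannel", {"customer": "C-1"})
missing = delegate.execute("Voice.Cease", {})
print(ok.status, missing.status)
```

The initiator makes one call per task and always receives a status, never an exception; the services behind the locator can be re-registered or replaced without the initiator noticing.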

Thus, we place the classic Business Delegate as a part of the solution, where its role in SOA terms is slightly wider, covering service brokering and mediation at the same time. So, potentially, it would be the SCA mediator, acting as a router and message transformer. Potentially, it can detect the use case using a message header provided by the component-initiator (SPC in the earlier figure) and route the message to the decomposed BPEL process. We have already mentioned the drawbacks of this decision:

- A composition could be enormous if we place all the decomposed services in one SCA. Even the addition of certain aspects of atomic BPEL processes will make it rather heavy. Decomposing a big process and then assembling the atomic pieces in SCA using a mediator is a very common approach, but not for the discussed number of services.

- A mediator with a static routing table can be overinflated by the number of conditions and rules. Adding transformation to it will not make it better than the original solution. A rule-based mediation has certain limitations that we mentioned in Chapter 2, An Introduction to Oracle Fusion – a Solid Foundation for Service Inventory, and we will discuss it a bit further.

- Most importantly, quite a few of the decomposed processes are almost identical from the business logic and involved applications' perspective (Canonical Expressions and Canonical Schema are either in place or can be maintained).

The last bullet item means that for some processes, we could continue with the decomposition down to the endpoints' definitions, aiming to apply the dynamic partner links technique as an option or generalize the processes to simple XML configuration files in the form of routing slips, describing the endpoints that should be called. This process is similar to the ultimate DB's structure normalization, where we strive to eliminate redundant constructions and minimize dependencies. This activity is described as the SOA Process Normalization pattern where we eliminate redundant logic and establish the atomic process as a single carrier of business logic. Of course, the primary candidates for normalization are as follows:

- Processes of the same logic for different geographic units (only endpoints are different)

- Processes similar for one type of product or the same service-provisioning group
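For the first kind of candidate, normalization amounts to keeping one process definition and turning the regional differences into data. The following is a minimal sketch under that assumption; the process steps, country codes, and URLs are invented for illustration.

```python
from typing import Dict, List

# One normalized process: an ordered list of logical application names.
# All names and URLs below are invented for illustration.
ACTIVATE_INTERNET: List[str] = ["CRM", "IPProvisioning", "Billing"]

# Regional differences reduced to data: logical name -> physical endpoint.
ENDPOINTS: Dict[str, Dict[str, str]] = {
    "BR": {
        "CRM": "http://br.ctu.example/crm",
        "IPProvisioning": "http://br.ctu.example/ip",
        "Billing": "http://br.ctu.example/billing",
    },
    "MX": {
        "CRM": "http://mx.ctu.example/crm",
        "IPProvisioning": "http://mx.ctu.example/ip",
        "Billing": "http://mx.ctu.example/billing",
    },
}


def build_routing_slip(process: List[str], country: str) -> List[str]:
    """Resolve the logical steps of a normalized process to a country's endpoints."""
    regional = ENDPOINTS[country]
    return [regional[app] for app in process]


print(build_routing_slip(ACTIVATE_INTERNET, "BR"))
print(build_routing_slip(ACTIVATE_INTERNET, "MX"))
```

Adding a country then means adding one entry to the endpoint map rather than another IF-ELSE branch in the process.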

With Process/Service Normalization and Capability Composition applied (assembling capabilities outside the service boundaries in order to fulfill complex business logic; a typical role of task-orchestration services), business logic can really be "dehydrated" to routing slips. The concept of routing slips is quite common and mature; it's one of the core EAI patterns (http://www.enterpriseintegrationpatterns.com/) that is inherited by SOA as well. Products such as Apache Camel and ServiceMix (and the commercial Red Hat version, Fuse ESB) use it for dynamic and static routing. The whole concept is highly related to the WS-Addressing standard notation that we discussed in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks: "The message came from A, then must go to the endpoint B and sequentially to C. The reply must be delivered to service D. In case of error, service E must be notified".

We would like to repeat it here again, just for comparison.

The code for WS-Addressing is as follows:

```xml
<SOAP:Header>
  <wsa:MessageID>uuid:aaaabbbb-cccc-dddd-eeee-ffffffffffff</wsa:MessageID>
  <wsa:ReplyTo>
    <wsa:Address>http://CTU.Order/CRM/Clarify</wsa:Address>
  </wsa:ReplyTo>
  <wsa:To SOAP:mustUnderstand="1">mailto:[email protected]</wsa:To>
  <wsa:Action>http://CTU.Order/Provision/Activate</wsa:Action>
  <wsa:FaultTo>
    <wsa:Address>http://www.w3.org/2005/08/addressing/anonymous</wsa:Address>
    <wsa:ReferenceParameters>
      <ctuns:ParameterA xmlns:ctuns="http://CTU.Order.namespace">FAULT</ctuns:ParameterA>
      <ParameterB>ServiceBroker</ParameterB>
    </wsa:ReferenceParameters>
  </wsa:FaultTo>
</SOAP:Header>
```

The code for Static Routing Slip (ServiceMix) is as follows:

```xml
<eip:static-routing-slip service="ctuOrder:routingSlip" endpoint="endpoint">
  <eip:targets>
    <eip:exchange-target service="ctuOrder:Service1" />
    <eip:exchange-target service="ctuOrder:Service2" />
    <eip:exchange-target service="ctuOrder:Service3" />
  </eip:targets>
</eip:static-routing-slip>
```

Thus, this ultimate implementation of the Normalization SOA pattern leads to the conversion of BPEL artifacts into some primitive XML constructs, fulfilling a part of the WS-Addressing paradigm.

"Stop" you say? Two points must be clarified straightaway:

- Are we reinventing another more lightweight BPEL?

- Intermediate routing within SCA is perfectly covered by a mediator. What's wrong with it, and why can't we employ it instead of routing slips?

Firstly, BPEL 2.0 is a full-fledged language with quite an extensive syntax. It's no longer a simple approach with essential "Assign-Invoke" commands. A complex syntax requires a very smart Interpretation Engine. Look at the static routing slip construct in the previous comparison. You do not need an engine to execute this; any language with a standard XDK can execute it gracefully. Even without any XDK, you can execute it using fewer than a dozen lines of code. Secondly, it's transportable and portable. WS-Addressing is just fine, but it's part of the SOAP header, and it's still a WS-* standard; however, we will not always call web services, and WSIF is not always an option.
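To make the "dozen lines" remark concrete, here is a minimal sketch that walks the static routing slip with nothing more than a standard XML parser. Several things are assumptions: the source listing declares no namespaces, so the `eip` URI is the ServiceMix EIP namespace as we recall it and the `ctuOrder` URI is a placeholder; the `handlers` dictionary stands in for real endpoint invocations.

```python
import xml.etree.ElementTree as ET

# Assumed namespace URIs (the source listing does not declare them).
SLIP = """
<eip:static-routing-slip xmlns:eip="http://servicemix.apache.org/eip/1.0"
                         xmlns:ctuOrder="http://CTU.Order.namespace"
                         service="ctuOrder:routingSlip" endpoint="endpoint">
  <eip:targets>
    <eip:exchange-target service="ctuOrder:Service1" />
    <eip:exchange-target service="ctuOrder:Service2" />
    <eip:exchange-target service="ctuOrder:Service3" />
  </eip:targets>
</eip:static-routing-slip>
"""
EIP = "{http://servicemix.apache.org/eip/1.0}"


def run_slip(slip_xml, handlers, message):
    """Pass the message through every target, in document order."""
    root = ET.fromstring(slip_xml.strip())
    for target in root.iter(EIP + "exchange-target"):
        message = handlers[target.get("service")](message)
    return message


# Hypothetical handlers: each one just records that it was called.
handlers = {
    "ctuOrder:Service1": lambda m: m + ["Service1"],
    "ctuOrder:Service2": lambda m: m + ["Service2"],
    "ctuOrder:Service3": lambda m: m + ["Service3"],
}

trace = run_slip(SLIP, handlers, [])
print(trace)  # ['Service1', 'Service2', 'Service3']
```

The executor itself is five lines. Everything a real controller adds on top (error handling, status tracking, transport) is a matter of what each handler does, not of the slip format.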

Also, you probably noticed that the routing slip is nothing more than a description of Endpoints. Endpoints (API) are one of the key (probably the most crucial) elements of our service infrastructure, and from the Governance standpoint, we must simply register and maintain them in our Service Repository (and Registry as well). The Registry structure will be discussed in a separate chapter, but for now, we can assume that endpoints are already there after the completion of decomposition and logic centralization. It's also not a big deal to extract a consolidated endpoint descriptor in the form of a routing slip from the Repository. We have to stress that we are not setting routing slips against WS-Addressing or against BPEL as an Orchestration language; later, you will see how they gracefully coexist. Now we come to the realization of two new SOA patterns:

- Metadata Centralization (4)

- Inventory Endpoint (7)

Metadata is data about data (probably the shortest description possible, and we love it). The endpoints and types of Service Engines in use, which include runtime roles of services and service models, are data elements that describe the service. They will be in our Inventory eventually (the sooner the better) but accessed through the unified Inventory Endpoint. So far, we have plans to keep the service endpoints and extract them using the WS API, which we described in the development phase.

Returning to the first point of concern, we would like to confirm its validity. Keeping the routing slip reasonably simple is the key; otherwise, we will just reinvent the wheel. Keeping it simple is also much easier than presenting the routing slip as a BPEL 2.0 XML artifact and the routing slip parser as a subclass of the BPEL engine that reacts only to the invoke and transform constructs. The latter would maintain universalism, but we would have to forget about portability.

Thus, we have no plans to reinvent BPEL. Moreover, by balancing the Normalization of different processes, we openly declare that not all processes can (and should) be decomposed down to a simple sequential EP. We deliberately would like to keep complex processes untouched and invoke them from the same EPs. We will even denormalize some processes due to the presence of complex parallel processing or difficulties that arise with error compensation. Another reason for the implementation of the Contract Denormalization pattern is the needless multiplication of the services and the high performance demands associated with it. For sequential processes though, Execution Plans (the structure will be explained in detail in connection with the Enterprise Repository in Chapter 5, Maintaining the Core – the Service Repository, but critical elements will be presented here) can solve this problem more gracefully.

Next, all of the logic related to BPEL is applicable to the Mediator concern as well. The fact is that the Oracle Mediator is not lighter than BPEL, and its engine is also rather heavy. Yes, the syntax and vocabulary is a bit more modest, but Mediator is also a stateful component that is capable enough to perform the processing sequentially and in parallel with the predefined priorities. Thus, its XML metalanguage is not the best candidate for performing a simple sequential EP. However, similar to what we did with BPEL, we are planning to use at least two (initially) mediators: one for dynamically extracting EP or routing to the complex denormalized BPEL and another for routing to the specific endpoint (this decision will be revised soon).

Gradually, we will come to the dynamic extraction of EPs, which can be realized on Rule Engine; now we are moving on to the Rule Centralization pattern (8), enforced by Oracle SCA Decision Service. Here, another concern can be expressed. Would it be more logical to use Rule Engine to identify the next task in the dynamic workflow instead of extracting the execution plans by the same Rule Engine? The answer is already in the question. For a sequential composition, we will need up to 10 Decision Service invocations instead of one (see requirements). Most importantly, for dynamically branching a process, we can invoke RE from EP with no problems, or we can call another SCA that could encapsulate another Decision Service.

Functional analysis would not be complete without defining the message structure that is consumed and propagated between the controller, composition members, service providers, and composition initiator. Obviously, the message payload is the Order in the CDM form according to the TMForum specification. As mentioned in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks, discussing the Standardized Service Contract, the optimization of its structure is extremely important, but we cannot approach it just yet due to compatibility reasons (external applications are used to it) and because all those enormous BPEL processes are our primary concern at the time. However, we will have to address message normalization as soon as possible. Due to this pending task, we have to construct our message in such a way that the alteration of payload would not affect Composition Controller's functionality, that is, make it universal (or process agnostic). It could be possible if we isolate the payload and a dynamic part of it, and expose the payload object's particulars in the message header. The Execution Plan(s) must also be presented in the message, and mandatory routing slip(s) and statuses of object alterations must also be preserved for the controller's convenience.

All these message components are best discussed in conjunction with the Service Repository taxonomy, and we will do that in the coming chapters. We would just like to stress the fact that the implementation of a universal message-container will convert our Composition Controller into a truly agnostic service that is capable of serving not only Order messages, but all types of payloads in a very dynamic and lightweight fashion. This will inevitably lead to the following consequences:

- As you will realize, a universal message container can be designed by the implementation of the `<any>` element in the payload section. (Yes, by `[CDATA]` as well, but we are not talking about this extreme case for obvious reasons.) We mentioned this point in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks, as rather undesirable: security considerations and excessive XML processing at the backend. This could be fairly and successfully mitigated by the following:

  - We are in the EBF framework behind security gateways in DMZ and the conventional ESB. If the enemy is already present, it's too late anyway.

  - Besides, our payload is not really `<any>`; it's still a corporate EBO (Order, Invoice, Client, Device, and Resource) and is strictly compliant with CDM's XSD. If we would like to perform message screening in EBF by means of an XDK, we can do that easily (although, it's rather unusual and ineffective all the same).

  - The message header will be in place despite the presence of any type of message structure. Should we also mention that it will be SBDH-compliant (see Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks)? Thus, all objects' particulars will be in the header anyway, simplifying payload processing. Even more, this approach could make our payload lighter, as we can delegate some common objects' elements to the message header.

- A universal message-container denotes the universal agnostic Composition Controller, and consequently, the promotion of Domain Inventory to Enterprise Inventory. This would be a very positive outcome.
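The idea of a universal message container can be sketched as a structure in which the controller reads only the header and the Execution Plan, while the payload stays opaque. This is an illustrative model, not the SBDH schema or the chapter's actual container; the class names, the header keys, and the task names are assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Task:
    endpoint: str
    status: str = "PENDING"   # hypothetical status values


@dataclass
class MessageContainer:
    header: Dict[str, str]    # object particulars exposed for the controller
    payload: Any              # opaque to the controller (the "any" part)
    execution_plan: List[Task] = field(default_factory=list)


def next_task(msg: MessageContainer) -> Optional[Task]:
    """Pick the next pending task using only the plan, never the payload."""
    for task in msg.execution_plan:
        if task.status == "PENDING":
            return task
    return None


# The same controller logic serves different business objects.
order = MessageContainer(
    header={"object": "Order", "event": "Internet.Activate"},
    payload="<Order>...</Order>",
    execution_plan=[Task("VerifyCustomer", "DONE"), Task("ProvisionIP")],
)
invoice = MessageContainer(
    header={"object": "Invoice", "event": "Issue"},
    payload={"amount": 10},
    execution_plan=[Task("PostInvoice")],
)

print(next_task(order).endpoint, next_task(invoice).endpoint)
```

Because `next_task` never touches `payload`, changing the Order structure (or swapping it for an Invoice) leaves the controller untouched, which is the property the text calls process agnostic.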

Now we can summarize the results of our analysis and draw the functional diagram of the proposed solution. We have an initial understanding of the tools we are going to use, and all of them are in the SOA Suite bundle (both 11g and 12c) and quite well within the EBF framework.

The summary of the results is as mentioned in the following table:

The block diagrams shown previously present the complete solution, which consists of the order management part (non-agnostic task-orchestrated services together with entity services) and encompasses the following generic parts as well:

- Business delegates as service brokers, both synchronous and asynchronous (currently we will focus on asynchronous), fulfilling the role of an agnostic Composition Controller

- A Service Broker facade, responsible for wrapping an inbound Order message into a message container

- An Inventory Endpoint in the form of Execution Plan Lookup Service, as we have only EPs in our Metadata Repository for the time being

- A Task Router that is presented as a mediator for a synchronous service broker

The next step, after you're done with drawing a comprehensible solution block diagram, is presenting detailed sequence diagrams that cover all aspects of the components' runtime behavior. Needless to say, this can only be done in close cooperation with the developers. Here, we would like to present the complete sequence diagram with a focus on the Service Broker's activities, shown as follows:

Service Brokers as a core of Composition Controller

Usually, sequence diagrams should contain all the elements of the solution to visualize the service activities that involve the following:

- An audit service

- Error handlers

- Inbound and outbound adapters

- Common Order-handling components

We will discuss each of them later. In the previous figure, we depicted the part that acts as an agnostic Composition Controller. This part can be created in any environment (again, SOA patterns are vendor-neutral) for any of your projects where an agnostic controller's functionality is required; however, for good reason, you should plan to implement it in BPEL. Now we will drill into some details that are essential for its physical implementation, although we will stay focused on the positive execution scenario first (rollbacks will be discussed later in the chapter).

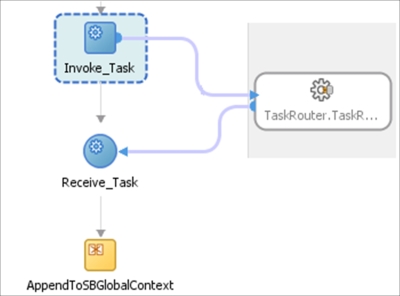

All of the controller's SOA artifacts will be packed into one composite for simplicity. This simplicity is illusory from the very beginning, as we will have to handle synchronous and asynchronous operations in one place. Thus, we will have two pairs of BPEL (as a service broker and business delegate) and Mediator as a task router. However, that's the whole idea: gradually convert a bulky solution into something composable. The four main steps in the realization of this idea are presented in the following SCA diagram:

Composition Controller's realization on SCA

Naturally, two types of invocations could be possible: one from the async (1) initiator and the other from sync (2). Then, Execution Plan will be extracted for every call (3), and every sequential task will be passed to the Adapter framework (4).

Create the asynchronous Service Broker as an asynchronous BPEL, exposing its WSDL as a SOAP service. We are omitting some obvious initial steps only to return to them later. The first important step is to invoke the Inventory Endpoint and extract EP as a routing slip, as shown in the following screenshot:

Obviously, EP must be implanted into the universal message container for further traversing in the loop during the execution/invocation. Universality means that a controller can be nested so that it acts as a subcontroller; therefore, the extraction of an EP is not always necessary. So, we should recognize the presence of an EP provided by a master-controller and invoke the lookup only if it's necessary. Logically, EP can be provided by the Adapter framework, where an individual adapter handles a particular use case. EP's extraction is based on message header elements that are initially known: sender, affiliate, object (Order), and event (requested product and operation). The Message Header is SBDH-compliant as discussed in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks. The final step here is to store the EP tasks array in a global variable.
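The "look up only if necessary" rule can be sketched in a few lines of Python. This is an illustration of the decision logic only, not the BPEL implementation: the container is modeled as a plain dictionary, and the names `ensure_execution_plan`, `processHeader`, `executionPlan`, and the `lookup` callable are assumptions made for this sketch.

```python
from typing import Callable, Dict, List

# Header elements that are known up front and drive the EP lookup.
LOOKUP_KEYS = ("sender", "affiliate", "object", "event")


def ensure_execution_plan(
    container: Dict[str, dict],
    lookup: Callable[[Dict[str, str]], List[dict]],
) -> List[dict]:
    """Return the EP tasks, invoking the lookup only when no EP is present."""
    process_header = container.setdefault("processHeader", {})
    tasks = process_header.get("executionPlan")
    if tasks:
        # A master controller already supplied the EP, so this
        # controller instance acts as a subcontroller and skips the lookup.
        return tasks
    header = container["messageHeader"]
    key = {name: header[name] for name in LOOKUP_KEYS}
    tasks = lookup(key)
    # Store the task array so that the loop can traverse it later.
    process_header["executionPlan"] = tasks
    return tasks
```

A nested controller that receives a container with the plan already populated returns it untouched, so the Inventory Endpoint is called once per composition rather than once per controller.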

We got the list of tasks as an XML array, and now we can invoke them sequentially in the loop shown as follows:

This is the essence of the constructed BPEL process and the core of the execution logic, although later, we will have to add some extra functionalities to keep it agnostic and task-oriented at the same time. As explained during the analysis phase, each order line could dynamically spawn a number of subtasks, which should be logically grouped, where the last task in a group should perform a specific operation on the service context. This task is the last one in the group of EP and the middle lane of the control is responsible for handling it. The right lane is for the standard task; it also updates the task execution history, but only the ones that are related to tasks such as status, isRolbackRequired, and currentTaskcounter, which are essential for traversing and looping. The left lane is responsible for executing the compensation task when the response from the actor is negative; it's possible only if current and compensative tasks are included in EP in relation to the primary task.

Using the While loop is quite easy; in the following code, we have simplified the condition for the sake of demonstration:

<while name="WhileNotFinalTask">

<condition>

(... ($finalizeTask_counter <= $finalizeTask_total )...)

</condition>

<scope name="ExecuteTask" exitOnStandardFault="no">

There are many ways in which you can loop over XML nodes in Oracle BPEL. All of them involve the Assign element, counting the number of XML nodes using the count function in XPath, and XPath's position() function. The node set we loop over in this case was extracted in the previous step, where the execution plan was stored in a global variable that is common to all BPEL scopes. In the ForEachTsk scope, we take the task node from the global variable whose position is equal to the current task counter and assign it to the single EP task element that currently exists in the Process Header part of the universal Message Container. The task counter is incremented after each task is invoked, and we perform this reassignment in every iteration.

This is probably not the fastest technique, but it works; additionally, we have advantages in terms of memory utilization, keeping only one node at a time in the Process Header within the message container.
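The traversal can be illustrated outside BPEL with a short Python sketch. It assumes a simplified EP in which each task carries hypothetical `name`, `compensation`, and `final` attributes, and it stops after a compensation instead of re-evaluating its result; both are simplifications for the sake of the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified execution plan; the real EP schema is richer.
EP_XML = """
<taskList>
  <task name="ReserveNumber" compensation="ReleaseNumber"/>
  <task name="ActivateLine" compensation="DeactivateLine"/>
  <task name="CloseOrder" final="true"/>
</taskList>
"""


def run_plan(ep_xml, invoke):
    """Walk the EP by position, keeping a single current task at a time."""
    tasks = ET.fromstring(ep_xml).findall("task")  # the "global variable"
    history = []
    counter = 1  # XPath positions are 1-based
    while counter <= len(tasks):
        current = tasks[counter - 1]  # the one task held in the Process Header
        name = current.get("name")
        if invoke(name):
            # Middle lane for the last task of a group, right lane otherwise.
            lane = "finalize" if current.get("final") == "true" else "standard"
            history.append((name, lane))
            counter += 1
        else:
            # Left lane: run the compensation; the counter is not increased.
            compensation = current.get("compensation")
            if compensation is not None:
                invoke(compensation)
                history.append((compensation, "compensate"))
            break
    return history
```

Calling `run_plan(EP_XML, lambda name: True)` walks all three tasks in order, while an `invoke` that rejects `ActivateLine` ends the run with the `DeactivateLine` compensation.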

Extend the task types further to make the controller more universal.

With no intention of making our routing slip heavy or close to BPEL, we have to extend its syntax a bit further. It is obvious that different tasks in our task sequence will require different data subsets, even if we use a canonical XSD for our Order (actually, we are not going to use one yet; the TM Forum model is too "all-weather"). This fact will require additional transformations between invocations. We can perform these transformations in the adapter framework layer and relieve the Business Delegate from this functionality; however, some of our service-workers will be other composites, standalone BPEL(s), or other services that are not covered by the ABCS layer yet.

A separate lightweight Servlet can do it gracefully, making the solution truly universal; however, because we have decided to stay in a single composite for now, the standard BPEL functionality will be enough:

ora:processXSLT($TransformationFileName, $TransformationInput)

Here, TransformationFileName is a task parameter, assigned in BPEL as shown in the following code snippet:

<assign name="AssignFileName_and_Input">

<copy>

<from>

string($currentTask/ns15:taskList/ns15:task/ns15:transform/@location)

</from>

<to>$TransformationFileName

</to>

</copy>

We must also supply the task worker (service provider) with additional information about the transported business object. This information resides in the Object Context part of the SBDH message header and is presented as name/value pairs, storing all of the object's supplemental information. Now we are ready to invoke the actual Service Provider.

Agnostic Task Invocation

The only task associated with the designated provider is maintained in the Execution plan, and this task is exactly what we want to execute. Thus, the extraction of this variable is simple, and we pass it to Task Router (SCA Mediator). It is now the Mediator's job to substitute the actual endpoint URI with the variable from the task list.

This operation is similar for synchronous and asynchronous service brokers; only Mediators are different.

That's it! We can extract the XML task array from the metadata inventory; this array has endpoint references, and we can loop through and invoke them synchronously or asynchronously, get the result back, update an object's payload and its contexts, keep track of the execution in a special placeholder of our XML container, and even react to errors with predefined tasks in the same tasks array.

The mentioned endpoint reference term is part of the WS-Addressing vocabulary we briefly discussed in Chapter 1, SOA Ecosystem – Interconnected Principles, Patterns, and Frameworks. Let's look at it a bit closer now. The endpoint reference is the XML construct that allows us to select one of the available services in a WSDL. We can also define the service endpoint at runtime. As we just saw, BPEL is very good at assigning variables at runtime, practically for all XML entities in a composite. With the very first version of the Oracle BPEL manager, we got a brilliant DynamicPartnerLink example, demonstrating the substitution of a dynamic partner-link address; it was turned into an excellent chapter in SOA Best Practices: The BPEL Cookbook, Oracle.

One might ask: since we focus on SCA (and BPEL in particular), why not implement everything in BPEL and use the same old technique (with no Mediator)? Actually, the old example is similar to how Mediator works today. We initially defined a dummy service endpoint and created a WSDL for it with all the predefined real endpoints; after that, we used the wsa:EndpointReference type variable in an assignment to the partnerReference variable, pointing to the real endpoint in WSDL. This approach could be taken even further with the substitution of an actual WSDL with different addresses for the same reference. This approach is not purely dynamic, as we will have to redeploy the new WSDL. What was proved is that we can call any of the services even without addresses registered in WSDL, as long as we know the actual address at runtime. For addresses that are not specified, the default endpoint in WSDL will be used. All of these benefits, though, are already in the SCA Mediator (with lots of extra benefits), and we do not need to modify BPEL. We delegate the handling of endpoint references and partner links to the Mediator, with no alterations to BPEL or WSDL. Here, we are clearly following the separation of concerns principle.

Before getting a bit deeper into Mediator, we would like to check some other features we would like to include into the BPEL Service Broker/Business Delegate component. We already added the transformation and clearly separated it from the task invocation (we can still invoke something that will perform the transformation for us). However, what else can be added?

We assume that all responses (callbacks) will be handled by the adapter framework, and that ABCS will make sure that the response conforms to the XSD. This assumption could be a bit bold, as some internal services could be called directly. A service broker in this case is obliged to validate the output before calling the next service. Therefore, adding a new task type, Validate, in addition to Invoke and Transform is inevitable. It's not a problem yet, as it can be easily supported, but we had better stop here; otherwise, the SB will be no better than the initial monstrous BPEL.

Tip

Select this approach with extra caution. It has every right to exist because BPEL is one of the possible platforms for ABCS and Service Broker with routing capabilities; it is an essential pattern for northbound and southbound adapters. In a non-agnostic controller, the best approach would be to use the XML validation property for inbound and outbound XML messages; just set it to True (in the SOA EM console, SOA Administration | BPEL Properties). Of course, it impacts the performance. For our agnostic controller with a containerized payload as the <any> element, we have limited options, but anyway, it would be more prudent to delegate this function to every individual adapter that is isolating the endpoint.

One more thing. We have already departed from the telecom-orders-specific Composition Controller and strive to achieve a truly agnostic realization, hence the routing slip could potentially contain more than ten invocations (endpoints). In the SCM domain, for instance, we deal with objects such as CargoManifest, BillOfLading, VesselSchedule, and quite often, the same task will be repeated a considerable number of times (even dozens). How can we do that?

The following table explains the method to accomplish the functions in the first column:

| Task | Realization |
|---|---|
| Include the same task n number of times in EP | This option allows us to keep the task parser within the Business Delegate simplified. Only one loop is maintained. Alternatively, if we need to perform nested looping, we will call another service broker's instance with the new EP (extracted first), or another composite. The drawback here could be that the size of the EP can grow considerably depending on the number of task interactions. |
| Delegate the looping to the Adapter framework | At first glance, this would be the best approach, as it looks similar to debatching. With debatching, we send message chunks to the designated endpoint. Unfortunately, debatching here is not pure as we want to send the entire message several times (or perform several invocations with the same payload); most importantly, batching and debatching functionalities are supported for a limited number of adapters: DB, FTP, and File for inbound adapters. Anyway, an adapter is the most logical option from the separation of concerns standpoint. We have to supply an adapter with the number of interactions we want to execute, and that could be done using EP (see the following option with the looping attribute). The previous tip is also valid here. |
| Set an extra attribute for individual tasks, describing the number of iterations for this task | Here, setting the attribute is not a problem: $currentTask/ns15:taskList/ns15:task/@loopOver. However, we should be very careful about further requirements that might follow. Technically, we have implemented a nested loop for a single task, but this task can be part of the compensative actions (the Rollback EP mode) for carrying out the primary operation, and it can compensate the single (last) interaction for the task group or the entire group. In other words, without the support of native adapters, we have to handle all the errors ourselves with this approach, and nested looping must be applied discriminately for positive and negative execution scenarios. You can also follow the second option (adapter); in this case, establishing the attribute will be enough for fulfilling these requirements, and the specific adapter will do it non-agnostically. |
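A minimal Python sketch of the looping-attribute option follows. Only the `loopOver` attribute comes from the text; the `name` attribute, the function names, and the choice to stop at the first negative response are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET


def iterations(task):
    """Read the loopOver attribute; a task without it runs exactly once."""
    count = int(task.get("loopOver", "1"))
    if count < 1:
        raise ValueError("loopOver must be a positive integer")
    return count


def invoke_with_loop(task, invoke):
    """Invoke the same task repeatedly, stopping at the first negative response."""
    results = []
    for attempt in range(1, iterations(task) + 1):
        ok = invoke(task.get("name"), attempt)
        results.append(ok)
        if not ok:
            # Error handling is ours here: the caller decides whether to
            # compensate only this interaction or the whole task group.
            break
    return results
```

For a task declared as `<task name="SendManifest" loopOver="3"/>`, the callable is invoked up to three times with the same task name and the attempt number.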

Using this five-step approach, we have implemented the agnostic Composition Controller using SCA components. This is the first iteration after functional decomposition, and we still have some deficiencies in the design:

- We still have synchronous and asynchronous parts in one composite.

- Focusing mostly on the component's design, we didn't cover the structure of the message container and all its parts. We will do this later in relation to the metadata storage.

Now we will discuss the role of Mediators in our design and in general.

The primary role of Mediators is to implement the Intermediate Routing SOA pattern in SOA Suite SCAs, which are the building blocks of either Orchestration (EBF) or Adapter frameworks. From the SOA Patterns catalog, we know that Intermediate Routing together with the Service Broker belongs to the ESB compound pattern. So, at first glance, it might be a bit outlandish to have them both actively discussed in the chapter that is dedicated to Orchestration. This is the obvious question that arises when someone tries to apply (or avoid applying) a seemingly fitting pattern, instead of understanding the actual problem and the ways of its mitigation (not exactly by the pattern with a fancy name from the catalog).

The message path in complex compositions could be equally complex, sometimes even unpredictable at design time, requiring dynamic (or rule-based) dispatching. Oracle SOA Suite composites (as deployment units) could be really complex, aggregating different components (primarily presented, but not limited, by four predefined types); the negative impact of excessive usage of the heavy if-else logic that is implanted into any of primary components (mainly BPEL) has been clearly explained at the beginning of this chapter.

The BPEL's dynamic endpoints' invocation approach discussed in the DynamicPartnerLink example is ingenious but has certain limitations that are mentioned in this paragraph and the first bulleted list in this chapter. Also, this approach is apparently obsolete with the presence of SOA Suite SCA 11g. We need more than just simple WS invocations in our use case, and Mediator covers them all gracefully.

Configuring Mediator in Agnostic Composition Controller

Do you remember that in the first release of our Service Broker, we had two mediators for synchronous and asynchronous processes? The logic we will cover next is applicable for each of them.

Firstly, by decoupling (isolating) our business delegate BPEL process from the actual service-workers, we have to anticipate the different MEPs involved in service activities. Yes, we have already demonstrated that Mediator can initially be created with either synchronous or asynchronous interfaces (we will discuss one-way and event-based later).

This fact, though, is significant for SCA dehydration routines (Mediator is a stateful component whereas OSB is not) and for the definition of the Port Type; naturally, the sync interface has one Port Type as the response that is returned to the caller, whereas, for async, you can define Callback Port Type for response messages.

For now, it's important to provide all combinations of one- and two-way MEPs.

Mediators' MEPs (green one- and two-way arrows to the left of the service address fields) are based on the service WSDL we are selecting for target service operations. We have already decomposed a considerable number of processes, and we are quite aware of their WSDL. Therefore, any speculation about the possible MEPs and operations will be easy, and it's pretty similar to defining the "generic LoanService" from the DynamicPartnerLink example in SOA Best Practices: The BPEL Cookbook, Oracle.

Here, we just go a little further and define the abstract and generic "Sync CustomService", "Async CustomService", and "OneWay CustomService" for services we would like to invoke directly from our service broker. Also, in the earlier screenshot, you can see some statically defined services such as Audit, EP Lookup Service, and Error Handler, which we will be using anyway. The CRM facade here is just an example of the static business non-agnostic service, which is still not covered by a dynamic composition. Feel free to amend it in your actual implementation.

The generic Adapter endpoint in the list is probably the most important endpoint for cross-framework communication as it represents an OSB adapter, fulfilling the same routing/transformation functions in the EBS framework. That's how we communicate with external standalone services and applications that are not directly invoked by a single SCA.

The sequence of establishing this static routing is quite standard (see the Oracle docs, http://docs.oracle.com/cd/E17904_01/integration.1111/e10224/med_createrr.htm); we will briefly highlight the important step. After clicking on the green plus sign in the upper-right corner, you will be invited to select the target service. Static and dynamic routes cannot be mixed in one mediator instance (a warning is shown in the screenshot); you can consider it as a limitation, but there are several simple ways to mitigate it. Nothing prevents you from calling another mediator of the desired type sequentially.

The next step is to learn how to set content-based routing using an expression builder. The content and structure of our execution plan are completely related to our metadata taxonomy in ESR; we will have to dedicate much more time to it later, although we have already displayed several key fields in the earlier screenshot:

- mep: This is the flag that is used for routing to the appropriate abstract service. In our case, it could be generic sync, async, and one-way flags. You can add your own MEP type according to WSDL 2.0 MEP if you want.

- location: This is the concrete service URI and will be used further for substituting in a generic endpoint. This is necessary for distinguishing endpoint types.

- taskDomain: This is an optional element for routing to the specific business domain (another Mediator or Service Broker in a separate business domain). It could be the CRM, ERP, SCM, and so on. Use your own business landscape, but this element in general contradicts the agnostic nature of our Service Broker.

- serviceEngine type: This was not shown earlier, but it is quite a useful element that you can declare as a service engine that will be responsible for executing tasks such as BPEL, Transformation, and DB. It's especially relevant for transformations, as they can display different behavior in complex compositions. You can route to the specific engine using this element.
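How these fields drive routing can be sketched in Python. The generic service names follow the "Sync CustomService", "Async CustomService", and "OneWay CustomService" definitions mentioned earlier; the literal flag values (`sync`, `async`, `oneway`) and the dictionary layout are assumptions for this illustration, not the Mediator configuration itself.

```python
# Abstract services selectable by the mep flag (flag values are assumed).
GENERIC_SERVICES = {
    "sync": "SyncCustomService",
    "async": "AsyncCustomService",
    "oneway": "OneWayCustomService",
}


def route(task):
    """Pick the abstract service by MEP and carry the concrete URI along."""
    mep = task["mep"]
    if mep not in GENERIC_SERVICES:
        raise ValueError("unsupported MEP flag: " + mep)
    decision = {
        "service": GENERIC_SERVICES[mep],
        # The concrete address later replaces the generic endpoint's URI.
        "endpointURI": task["location"],
    }
    # taskDomain is optional; when present, it narrows the route to a domain.
    if task.get("taskDomain"):
        decision["domain"] = task["taskDomain"]
    return decision
```

An unknown flag fails fast in this sketch, which is one way to keep a misconfigured execution plan from silently reaching the wrong generic endpoint.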

We are still in Mediator's request path. Here, there are three things that we should consider (following the logic of our solution).

- Assign value: This is the field that does all the magic. Using mediators' properties (we have plenty of useful properties; please read about these in the documentation, as we have no place to discuss them here), endpointURI in particular, we can assign our service URI from the execution plan dynamically, resolving any address issues according to the selected MEP.

- Content transformation: This is another highly important feature of Mediator. Yes, we are trying to minimize transformations within a single service domain; however, with the huge legacy application burden, it's not always possible. For an agnostic Composition Controller, it is even more inevitable, as we need to extract (by means of XSLT) the payload from our agnostic message container (CTUMessage) because the endpoint application cannot handle the entire container. Apparently, we would prefer to do this in the ABCS layer as transformations are usually associated with adapters.

- The Validate Semantic operation: This is provided by the Schematron tool or XSD validation, and the most interesting part of this feature is that we could apply the Partial Validation SOA pattern, checking only part of the message, thereby considerably reducing the time to perform quite an expensive XML operation. As you can see in the following screenshot, we do not use this operation in an outbound call. Why is that? It is because of the following reasons:

- It is our outbound flow. We believe that here we can trust ourselves. Still, we have the possibility to employ southbound ABCSes to perform the validation.

- We have already decided that the validation would be part of the execution plan performed on SB. It gives us a lot of flexibility, and we can maintain this operation in a really agnostic way.

Configuring Mediator's endpoint property

You have to consider this option wisely. With an agnostic payload, we can generally validate MessageHeader, ProcessHeader (with EP), and MessageTrackingData. Logically, it should be done on OSB and/or the adapter layer. Although Schematron's functionality is really quick, we can achieve the same kind of performance by other means. We suggest that you perform JIT verifications yourself. For this use case, we will not perform it on Mediator.

Inbound flows are easier (again, for this use case only), but practically, all of the outbound functionalities are applicable for inbound flows as well. You can also perform partial/complete validation (we don't). The transformation of SCA's responses is also inevitable, as we will put the response into a container. We can also assign an endpoint for a callback, even for synchronous calls; however, we don't want to do that, as we will handle all the responses in an agnostic controller. Although there is some other Mediator functionality available, we would like to demonstrate it with regard to inbound flows here.

We want to demonstrate another interesting Mediator feature we used in some inbound flows, although it's not related to any SOA patterns directly at first glance. For some operations within a single service invocation group, we will need to translate the remote response code (errors, messages, and statuses) into generic agnostic controller statuses. Using these statuses, the controller will decide whether to proceed with the next task or execute the compensative action. You can see it in a flows fragment as demonstrated in the earlier screenshot. The task is fairly obvious as external applications, which are not in our Service Inventory, naturally have their own code, statuses, and resolution logic. At best, they will provide you with certain code, reflecting their operational status; however, those situations when some error dumps will be returned are also quite common. Codes and related resolutions should be discussed during the service encapsulation and functional decomposition stage, and we should have at least the initial table with values and code in the form of Domain-Value Map (DVM). You can create DVM for your SCA application/active project (Ctrl + N, then navigate to All Technologies | Domain-Value Map) and store it in your MDS for common use. You can use it in any of your XSLTs as well (you can find plenty of examples on that), but the usage in Mediator is most interesting to us.

Using DVM in Mediator for error code mappings

The expression present in the Type field in the From section, realizing the lookup of the error code via status text using DVM in our MDS, is as follows:

dvm:lookupValue("dvm/StatusText_to_ErrorCode_Mapping_nested.dvm",

"StatusText",

$in.payload/ns7:CTUMessage/ns17:MessageTrackingData/ns17:MessageTrackingRecord/ns17:StatusText,

"ErrorCode", $in.payload/ns7:CTUMessage/ns17:MessageTrackingData/ns17:MessageTrackingRecord/ns17:ErrorCode)

As demonstrated in the earlier screenshot, we perform three lookups for ErrorCode, ErrorMessage, and ErrorDetails, which we store in the MessageTracking section of the message container, and our agnostic controller will use this value for dynamic compensation. As mentioned earlier, every task has a compensation pair, registered as a sibling in the EP. In cases where there is a recognized error (recognized as a Rollback flag even if it's not an actual rollback), this compensative task will be executed instead of the next one in the sequence; the task counter will not be increased and further execution will depend on the results of this compensation (again, provided via Mediator). The final error resolution will be covered by a dedicated Error Handler, which is part of the conventional BPEL compensation flow, and we will discuss it in detail in Chapter 8, Taking Care – Error Handling.

Of course, compensative branching logic in an agnostic controller must be strictly conducted as per the values you put in DVM. DVM can be modified at runtime, changing the business logic brokered by an agnostic controller; however, if you misspell a word, the situation can be unpredictable. (Another question is who would do that directly in production? Sadly, we all know the answer.) Another issue that must be taken into consideration is what if the business task does not (or could not) have a dynamic compensation routine? For simplicity, we didn't show this scenario, but this situation can be anticipated. In most common cases, we can ignore this condition and continue with the next task or park it in Human Workflow to come up with a manual resolution.
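The branching that depends on DVM values can be imitated in Python to show why a misspelled entry matters. The table rows, the code values (`0`, `ROLLBACK`, `UNMAPPED`), and the function names are invented for this sketch; `lookup_value` only mimics the source column, source value, target column, and default arguments used in the expression above and is not the Oracle function.

```python
# Hypothetical DVM content: remote status text mapped to controller codes.
DVM_ROWS = [
    {"StatusText": "OK", "ErrorCode": "0"},
    {"StatusText": "Subscriber not found", "ErrorCode": "ROLLBACK"},
]


def lookup_value(rows, source_column, source_value, target_column, default):
    """Return the target column of the first matching row, else the default."""
    for row in rows:
        if row.get(source_column) == source_value:
            return row[target_column]
    return default


def next_action(status_text, has_compensation):
    """Decide what the controller does after a task response."""
    code = lookup_value(DVM_ROWS, "StatusText", status_text, "ErrorCode", "UNMAPPED")
    if code == "0":
        return "next_task"
    if code == "ROLLBACK" and has_compensation:
        return "compensate"
    # Unmapped (for example, misspelled) statuses and tasks without a
    # compensation pair are parked for manual resolution in this sketch.
    return "manual_resolution"
```

With these rows, a status text that differs from the DVM entry by a single character no longer matches and falls through to manual resolution, which is exactly the kind of silent behavior change a careless runtime edit can cause.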

So, now you can see that Mediator can do a lot as a static router:

- Dynamically substitute the endpoint URI, which is most interesting for us here.

- Substitute the values using DVM, stored in MDS. Here, we can partially implement the Exception Shielding SOA pattern, improving our security landscape (do not rely on that much though).

- Perform all kinds of transformations for invocations and callbacks.

- Perform partial validation, implementing the Partial Validation SOA pattern, thereby improving performance.

Other things we didn't discuss here (yet) are as follows:

- Event propagation, implementing Event Driven Messaging (the Event Aggregation Programming model in AIA terms)

- Using Java callouts in message mediation and process handling

- Rule-based dynamic routing

Actually, now we are going to discuss the implementation of the decision service that is responsible for selecting the appropriate execution plan and then we will continue with Mediator.

In the third step depicted on the consolidated agnostic Composition Controller SCA diagram, we called PlanLookupService to acquire the routing slip (EP). This service is clearly visible among others in the list of target services that are available for invocation by our Service Broker. You already know the basic structure of EP and the MessageHeader elements used as input parameters; therefore, establishing WSDL for this service should not be a problem. Simply put, this is all that we know: the requested product, country/affiliate, business event, and the requested business operation. This information should be sufficient for determining which business process (in the form of EP) must be fired (namely, EP's name extracted and passed for its execution in Business Delegate within a loop). Consequently, these parameters will be the elements of the Business Rule components' inbound and outbound variables, where outbound is just the name of an XML object (file) stored in MDS.

To begin with, we design this SCA Composite in an exceedingly simple way; it should only contain Mediator and the Business Rule component. We will start the configuration from business rules. The configuration steps are obvious; just follow the Oracle documentation:

- The XSD schema will be created with two types, reflecting the inbound and outbound elements (hint: see the transformation part in the following screenshot). Feel free to adapt the types according to your requirements or just simplify it, leaving no more than three elements for the start.

- Drag the rules component from the right palette, and assign the input and output variables from the defined XSD (input is based on the MessageHeader XSD and output just on a string).

- These input and output variables will be our XSD-based rule facts.

- It would be useful to define baskets as List of Values (LOV) for country, affiliate, product, and business operations (action types). Think of baskets as enumerators with fixed values that we will use in our decision table.

Now, we can create our decision table as shown in the following screenshot:

Building the decision table for Agnostic Composition Controller

The earlier table is similar to any table you can create for this purpose (in Excel, for instance). There are two parts. In the first part, using basket LOVs, we define the conditions provided by inbound parameters such as productType: VOIP; businessEvent: UpdateOrderStatus; country: BR (for Brazil); and affiliate: BraTello (for Brazil Telephonic). In the second part, we define actions, which in our case is the filename of the Execution Plan. Needless to say, this file is stored in MDS, and we know the actual path; it's part of the deployment profile and configuration plans. You do not have to describe the whole complexity of business rules and conditions from the start; taking one small step at a time is important, and we would really like you to repeat it. Obviously, the execution plans and their names will emerge gradually (and not exactly slowly) along the way of the functional decomposition process together with logic centralization and service refactoring (all of them are SOA patterns, representing the common sense we mentioned many times).
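The two parts of such a decision table map naturally onto a keyed lookup, shown here as a Python sketch rather than as Oracle Business Rules configuration. The basket values beyond those named above, the `Facts` type, and the EP filename are hypothetical.

```python
from typing import NamedTuple, Optional

# Baskets (LOVs): the fixed values each condition column may take.
BASKETS = {
    "product_type": {"VOIP"},
    "business_event": {"UpdateOrderStatus", "CreateOrder"},
    "country": {"BR"},
    "affiliate": {"BraTello"},
}


class Facts(NamedTuple):
    product_type: str
    business_event: str
    country: str
    affiliate: str


# Conditions on the left, action (the EP filename) on the right.
DECISION_TABLE = {
    Facts("VOIP", "UpdateOrderStatus", "BR", "BraTello"): "VOIP_UpdateOrderStatus_BR.xml",
}


def select_execution_plan(facts: Facts) -> Optional[str]:
    """Validate the facts against the baskets, then look up the EP filename."""
    for field, allowed in BASKETS.items():
        if getattr(facts, field) not in allowed:
            raise ValueError(field + " is outside its basket")
    # None means that no rule row has been defined for this combination yet.
    return DECISION_TABLE.get(facts)
```

Starting with a single row and adding rows as execution plans emerge mirrors the one-small-step-at-a-time advice above.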

Apparently, the ultimate outcome of this practical exercise would be the establishment of a concrete Service Inventory, which is currently maintained on MDS. We are not going to discuss the pros and cons of this realization now; we're just jumping ahead, but we can assure you that we will rebuild it for better association with SOA Patterns and as a result provide better performance, maintainability, and scalability. Anyway, you can rest assured that the presented solution is already solid enough and it works!

We could only have the Business Rule component in our EP lookup composite, but again, we will add the Mediator. Why is that? For its transformation and routing support, of course. Again, following the SOA principles, we want to maintain PlanLookupService in an atomic state, decoupled from the MessageHeaders implementation. The MH XSD can evolve, following our understanding of business requirements and the SBDH standard; also, we definitely do not want to affect our Rule Facts, which are already implanted into the decision service. Another important thing is the flexibility of the Rule Engine itself. We can have more than one ruleset, supporting multiple decision tables or rule functions. Thus, we should not only transform message headers' values from an inbound request into rule function parameters (see the callFunctionStateless transformation in the following screenshot, the Transform Using field), but also route individual requests to the specific decision table (or rule function). Static routing will do perfectly fine here.

The previous screenshot shows how many operations our service can support from the client's perspective. All operations are synchronous, which is logical because async MEPs simply will not work here.

Talking about the Rule Centralization SOA pattern, we have to stress that concentrating all the rules in the form of heavy decision tables in one decision service is not a good idea at all. The rule of thumb here is to centralize all the business delegates' decision tables in one decision service and keep it separate from other business domains (see the corresponding BusinessDomain element in the following mapping):

Acquiring an XML object using Mediator

Handling a synchronous reply is easier, as we have only one element to deal with, that is, the URL of the actual execution plan. Here, too, we use a transformation with one simple concatenation function to combine our oramds: path with the <EP>.xml filename; you can see it in the following screenshot. Looking back at the Mediator routing rules and transformations presented earlier in the chapter, you can now see more clearly how we obtain our Execution Plan as an XML object.
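The concatenation itself is trivial, but it helps to see what it has to guard against. The following Java sketch mirrors the transformation under stated assumptions: the folder value is whatever oramds: path your configuration plan defines, and the class name, method name, and validation rules are hypothetical additions of ours.

```java
/** Hypothetical helper mirroring the concatenation done in the Mediator transformation. */
final class ExecutionPlanUrlSketch {

    private ExecutionPlanUrlSketch() {}

    /**
     * Joins an MDS folder reference and an Execution Plan filename.
     * The folder is supplied by the caller; no fixed product path is assumed here.
     */
    static String toPlanUrl(String mdsFolder, String planFile) {
        if (mdsFolder == null || !mdsFolder.startsWith("oramds:")) {
            throw new IllegalArgumentException("Expected an oramds: reference, got: " + mdsFolder);
        }
        if (planFile == null || planFile.isBlank()) {
            throw new IllegalArgumentException("Execution Plan filename is missing");
        }
        // Add the separator only if the folder does not already end with one.
        String folder = mdsFolder.endsWith("/") ? mdsFolder : mdsFolder + "/";
        return folder + planFile.trim();
    }
}
```

Failing fast on a missing filename is a design choice of this sketch: an empty lookup result is reported where it occurs instead of surfacing later as an unreadable MDS reference.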

The next operation in the row, Assign: Retrieve_ExecutionPlan, simply assigns the whole reply to the EP type in the Messages Process header. (Yes, "retrieve" is probably not the best term here, as it is in fact an inject, but you get the idea.)

Concatenating XML objects in Mediator's transformation

That's it! We're almost done here. One more very convenient feature of Mediator should be mentioned. Although it is not directly related to any SOA message mediation pattern, this feature directly supports Runtime Discoverability, and by enforcing this SOA principle, we can greatly improve the lives of our operational personnel. We all know (as diligent architects who support and control the health of deployed applications) that with a considerable number of business processes, tracing them in the SOA EM dashboard can be hard. The native SCA ConversationID can be ugly, and leaving the Instance name empty is hardly a courtesy towards those who will deal with our products. Regretfully, our dynamic approach, as you have already guessed, does not improve the situation much; on the contrary, it complicates it. We do not have the actual business process name until we call the EP lookup process. We should assign the instance title somehow in the request or, preferably, in the response to make it more searchable and traceable.

Setting the Composite Instance title using Mediator

Here again, we can use Mediator properties to complete the task; see the previous screenshot. Feel free to select any meaningful fields from your message in the XPath function call, as presented in the next code snippet (here, med: stands for Mediator's namespace). Now we can assign the concatenated value to the Mediator property. The Oracle documentation mentions tracking.compositeInstanceTitle, which is obviously invisible. In our example, we use testfwk.testRunName, which works pretty well too:

med:setCompositeInstanceTitle(concat($in.request/mhs:MessageHeader/mhs:Sender/mhs:Instance, '_', $in.request/mhs:MessageHeader/mhs:RequestId));

You can imagine that this is not the only way to set the Instance title.

In a book that is dedicated to practical aspects of SOA patterns, we cannot devote much space to Java, as we are supposed to stay reasonably language-neutral (one of the SOA benefits, you remember). Of course, that is impossible when we talk about concrete implementations on the Oracle platform, where Java is the blood and bones of the entire ecosystem. Thus, some words about it are in order here.

We deliberately omitted some steps at the beginning of our Business Delegate (or Service Broker) process. They are quite typical and are as follows:

- We have to set appropriate audit and tracing levels, identifying them from the message context (the Message Header is involved).

- We want to set the instance title from the very beginning, again using Message Header values. This can be done using embedded Java; see the following screenshot:

You can do a lot using Java callouts in BPEL, but be reasonable and do not reinvent existing functions. Other areas of direct Java application would be Mediator's Java Callouts and BPEL custom sensor actions; you can register custom classes with the SOA Suite.
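As an illustration of the instance-title step listed above, the following plain Java sketch builds the same kind of value as the Mediator XPath expression shown earlier: the sender instance and the request ID joined by an underscore. The class name, the UNKNOWN fallback token, and the decision to substitute a placeholder for missing values are our assumptions, not part of the original solution. Inside an embedded Java activity, the resulting string would be handed to whatever instance-title call your release provides.

```java
/** Hypothetical helper that composes a searchable composite instance title. */
final class InstanceTitleSketch {

    /** Placeholder used when a Message Header value is absent (an assumption of this sketch). */
    static final String MISSING = "UNKNOWN";

    private InstanceTitleSketch() {}

    /** Joins the sender instance and the request ID with an underscore. */
    static String buildTitle(String senderInstance, String requestId) {
        return clean(senderInstance) + "_" + clean(requestId);
    }

    // Trims the value and replaces null or blank input with the placeholder.
    private static String clean(String value) {
        return (value == null || value.isBlank()) ? MISSING : value.trim();
    }
}
```

Unlike a bare concat(), which would leave a lone underscore when both header fields are empty, this variant always yields a title that operations staff can recognize as incomplete.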