6

Managing Environment Security

In a managed service, shared responsibility for securing the resources must be factored in. The provider is responsible for securing the infrastructure, managing the services that run on top of it, and providing security-related capabilities. The consumer is responsible for controlling who has access to the service and for securing data outside of the managed service.

In this chapter, we will cover the following topics, which show how Cloud Build provides security while also integrating with other security-related services:

- Defense in depth

- The principle of least privilege

- Accessing sensitive data and secrets

- Building metadata for container images

- Securing the network perimeter

Defense in depth

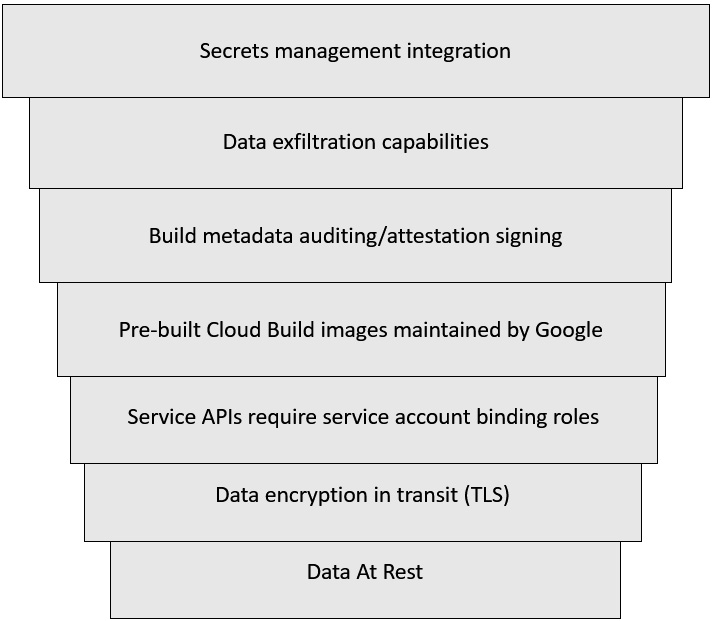

The concept of defense in depth for computing is used by information security to ensure that security constructs are put in place at each layer for protection. Cloud Build ensures that these security constructs are in place; some examples are noted in Figure 6.1:

Figure 6.1 – Defense in depth with Cloud Build

While Cloud Build provides the capabilities mentioned here, some services must be enabled or leveraged in the pipeline. There are other security-related solutions available in the market that may not have direct integration with Cloud Build, but they can still be used for pipeline steps due to Cloud Build’s support for custom container images in each step. For instance, you may be able to retrieve values from HashiCorp Vault if your container image has the correct libraries and tools.

There are also a few other examples to be aware of that may leave pipelines sharing sensitive information:

- Steps that output and log sensitive information

- Steps that use non-secure protocols to communicate with other systems

- Using unknown or vulnerable container images for steps within your pipeline

- Storing sensitive information in the Cloud Build working directory between steps

Defense in depth is a component of the overall security strategy; thus, the shared responsibility model also applies. Cloud Build, as noted before, provides many elements to secure your pipelines; however, certain steps and measures must be taken by the consumer of the service as well.

The principle of least privilege

The principle of least privilege is another security construct for protecting resources. The goal is to grant only the access to resources that is necessary to complete the job. If a pipeline does not need access to data in object storage, then there is no reason to grant that access to the actor invoking the pipeline. In practice, organizations may be less restrictive about security permissions in the development phase but more restrictive in the production phase. While this may make it easier to get started, the inconsistencies may cause trouble as teams progress to higher-level environments, resulting in unnecessary troubleshooting. The cultural movement within organizations of shifting security left, so that it is applied from the onset, may include least-privileged access to resources. This varies from organization to organization, as different regulatory bodies and industries may have different requirements.

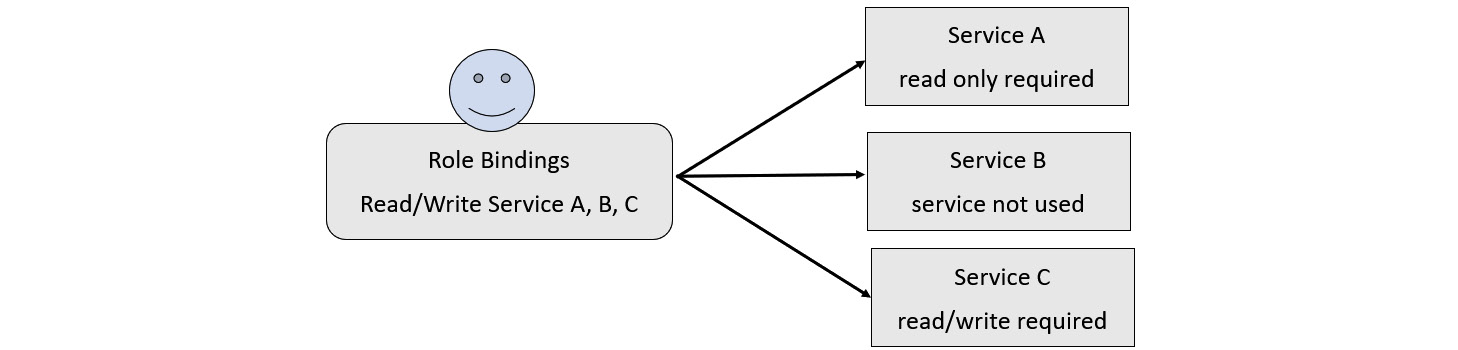

Imagine if a bad actor gains access or someone in the organization unintentionally uses the service account. Figure 6.2 depicts the scenario in visual form: they would be able to write to Service A, affecting the integrity of the data, while also being able to access or write data into Service B when it’s not needed to complete a pipeline:

Figure 6.2 – Additional access granted when not needed

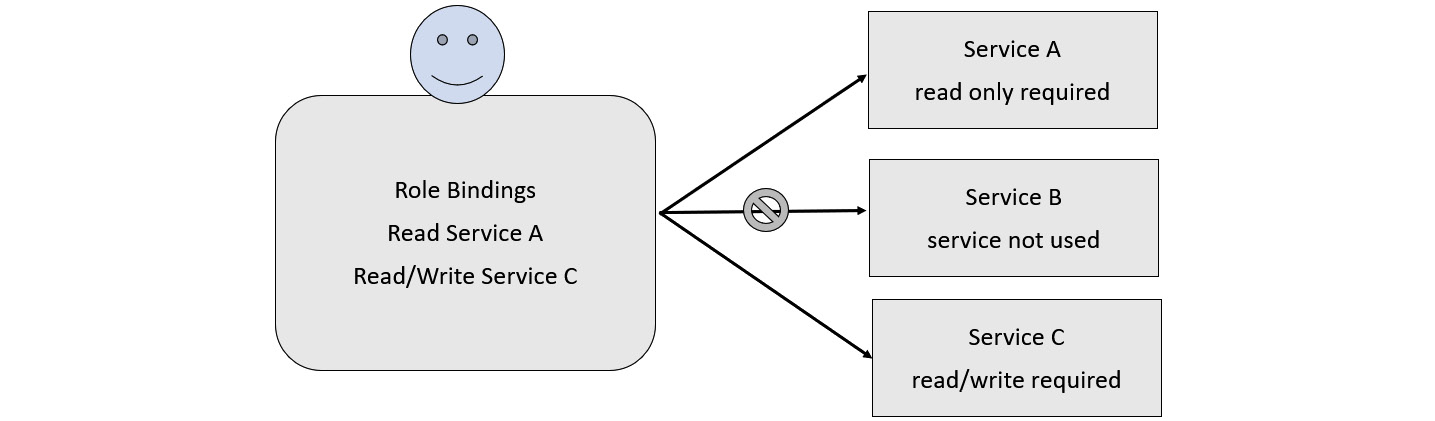

When leveraging the principle of least privilege, access is only granted to services that are necessary for a specific pipeline. Figure 6.3 depicts that though a service exists, access is not granted because it is not needed in the pipeline. While this is in the context of a build pipeline, this can be applied throughout the organization for all services:

Figure 6.3 – Restricting access to a service to only what is needed

Cloud Build leverages service accounts within Google Cloud Platform (GCP) to identify the actor that a pipeline uses to connect to other Google Cloud services. A service account can be assigned to each trigger, which allows the same pipeline configuration, for instance, to be executed by different service accounts depending on the event that triggered the build. As covered in Chapter 5, Triggering Builds, Cloud Build triggers can match builds using regex patterns and different event invocation types. This allows us to use different service accounts for different Git branch, tag, and pull request patterns, as well as for webhooks and manual invocations.

Organizations can create service accounts with different role privileges and fine-grained permissions to define the minimal permissions required to successfully complete the pipeline. Leveraging unique service accounts also facilitates life cycle management, as well as provides administrators with the ability to monitor usage patterns within the defined service accounts.
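As a minimal sketch of running a build under a dedicated, narrowly scoped identity, a build configuration can also name the service account itself through the serviceAccount field. The account name build-sa and the PROJECT_ID and BUCKET_NAME placeholders below are assumptions for illustration and do not come from this chapter. Builds that use a user-specified service account also need an explicit logging choice, shown here as CLOUD_LOGGING_ONLY:

```yaml
# cloudbuild.yaml: sketch only; build-sa, PROJECT_ID, and BUCKET_NAME are hypothetical placeholders.
steps:
# This step runs with the permissions of the service account named below.
- name: 'gcr.io/cloud-builders/gsutil'
  args: ['ls', 'gs://BUCKET_NAME']
# The identity used by every step in this build.
serviceAccount: 'projects/PROJECT_ID/serviceAccounts/build-sa@PROJECT_ID.iam.gserviceaccount.com'
options:
  # Send logs to Cloud Logging rather than a Cloud Storage logs bucket.
  logging: CLOUD_LOGGING_ONLY
```

If build-sa has not been granted a role on the bucket, the listing step fails, which is the intended outcome under least privilege.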

Limiting access to resources through the principle of least privilege can reduce the exposure of an organization while also reducing the accidental manipulation of resources that was not intended.

Accessing sensitive data and secrets

Data is critical between the steps in a build pipeline; sometimes, the data being used in the pipeline may be sensitive as well. In Chapter 5, Triggering Builds, we stored the GitLab private SSH key in Secret Manager. The secret was used in the pipeline to clone the private repository. Sensitive data or secrets can be retrieved from various sources. Cloud Build has integrations with two GCP services for secrets:

- Secret Manager

- Cloud Key Management

It is important to store the secret securely in a location outside of the build configuration. Each of the respective services here also emits audit and data access logs that record when and which principal attempted to access a secret or key. Access to secrets through either of the aforementioned services is also logged in Cloud Build for auditing purposes.

Secret Manager

The Secret Manager integration for Cloud Build is referenced by a stanza in cloudbuild.yaml. An example snippet from the previous chapter is shown here:

    - name: gcr.io/cloud-builders/git
      args:
      - '-c'
      - |
        echo "$$GITLAB_SSH_KEY" > /root/.ssh/id_rsa
        chmod 400 /root/.ssh/id_rsa
        ssh-keyscan gitlab.com > /root/.ssh/known_hosts
      entrypoint: bash
      secretEnv:
      - GITLAB_SSH_KEY
    ...
    availableSecrets:
      secretManager:
      - versionName: projects/282614098327/secrets/GITLAB_SSH_KEY/versions/1
        env: GITLAB_SSH_KEY

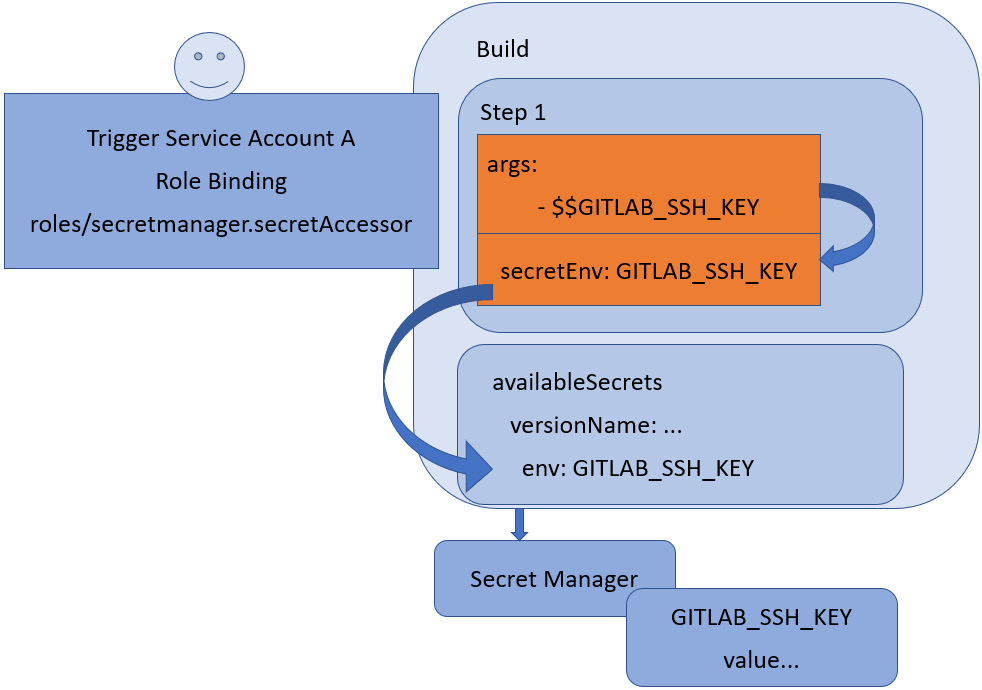

The secretManager key is used to identify that the Secret Manager service is to be utilized. versionName is the unique identifier to the secret and version that is to be retrieved. The value of the secret is then stored in the environment variable, GITLAB_SSH_KEY, which can be used in the build steps of the pipeline. In this build step, notice how the variable named GITLAB_SSH_KEY is associated with the secretEnv key, so it can be leveraged in the args build argument.

Specifying availableSecrets in Cloud Build does not automatically grant access to the secret that is specified. The roles/secretmanager.secretAccessor role must be granted on the specific secret to the principal that runs the build, which in this case is the service account used by the Cloud Build trigger.

Figure 6.4 – How a secret is accessed by a build and build step

Figure 6.4 depicts how a build pipeline is associated with a specific service account with the appropriate role binding. The build can then retrieve the secret from Secret Manager and make it available within the build step using secretEnv.
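The role binding described in this section could be expressed as an IAM policy file along the following lines. This is a sketch: the service account name is a hypothetical placeholder, and a file like this is typically applied with gcloud secrets set-iam-policy, which replaces the existing policy on the secret, so in practice it would need to be merged with any bindings already present:

```yaml
# policy.yaml: illustrative IAM policy for the GITLAB_SSH_KEY secret.
# build-sa@PROJECT_ID.iam.gserviceaccount.com is an assumed name, not taken from this chapter.
bindings:
# Grants read access to secret versions, and nothing else, to the build's service account.
- role: roles/secretmanager.secretAccessor
  members:
  - serviceAccount:build-sa@PROJECT_ID.iam.gserviceaccount.com
```

Binding the role on the individual secret, rather than on the project, keeps the service account from reading other secrets in the same project.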

Cloud Key Management

Cloud Build, as noted, can also use another GCP service, Cloud Key Management, to access sensitive data. The following consists of the example syntax used to access an encryption key from Cloud Key Management to decrypt an encoded secret:

    availableSecrets:
      inline:
      - kmsKeyName: projects/**PROJECT_ID_redacted**/locations/global/keyRings/secret_key_ring/cryptoKeys/secret_key_name
        envMap:
          MY_SECRET: '**ENCRYPTED_SECRET_encoded**'

Rather than using the secretManager reference, as in the previous example, the inline key is used to identify that the Cloud Key Management service is to be used. Notice some of the differences from the example snippet here in comparison to the Secret Manager scenario. In the Cloud Key Management scenario, kmsKeyName references the unique identifier to the encryption key. Cloud Build will use this key to decrypt the text specified as the value of MY_SECRET. The value of the secret is stored in cloudbuild.yaml, but in its encrypted form.

Just as with Secret Manager, Cloud Build also requires permission to use the key through the Cloud Key Management service. In this case, the Cloud Build trigger service account requires the following role: roles/cloudkms.cryptoKeyDecrypter.
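To show how the decrypted value reaches a step, the following sketch pairs the inline stanza with a step that lists MY_SECRET under secretEnv. The ubuntu step image, the PROJECT_ID placeholder, and the BASE64_ENCODED_CIPHERTEXT value are assumptions for illustration; the step only checks that the variable is set and deliberately avoids printing it:

```yaml
# cloudbuild.yaml: sketch only; PROJECT_ID and BASE64_ENCODED_CIPHERTEXT are placeholders.
steps:
- name: 'ubuntu'
  entrypoint: 'bash'
  args:
  - '-c'
  - |
    # $$ escapes Cloud Build substitution so that bash reads the environment variable.
    test -n "$$MY_SECRET" && echo "MY_SECRET is available to this step"
  # Only steps that list the variable here receive the decrypted value.
  secretEnv: ['MY_SECRET']
availableSecrets:
  inline:
  - kmsKeyName: projects/PROJECT_ID/locations/global/keyRings/secret_key_ring/cryptoKeys/secret_key_name
    envMap:
      MY_SECRET: 'BASE64_ENCODED_CIPHERTEXT'
```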

Cloud Build provides integration with multiple GCP services for accessing sensitive secrets and encrypted data. Sensitive data should not be stored in plain text in the build configuration or in source code management (SCM) repositories.

Note

While we have provided instructions for Cloud Key Management, it is recommended to use Secret Manager to store and retrieve sensitive information for Cloud Build.

Build metadata for container images

Cloud Build generates metadata for container images that can be used to identify build details, build steps, attestations, or repository sources. SCM repository commit hashes and build ID hashes can be used to track the repository source and steps for each build. Cloud Build can generate and sign attestations at build time to allow organizations to enforce that only images carrying the built-by-cloud-build attestation can be deployed at runtime. We will cover each concept in the following sections.

Provenance

Cloud Build, in conjunction with Artifact Registry, can associate additional metadata about container images to validate the build details. For the most part, those that have access to the SCM and Cloud Build can use the metadata and logs to validate which SCM repository commit was used to trigger a specific build. As an added layer of auditing available to organizations, build provenance allows for organizations to validate that the metadata identifying the source and build steps of a container image has not been tampered with or manipulated. This metadata is stored in the format defined by the Supply-chain Levels for Software Artifacts (SLSA) framework (https://slsa.dev/provenance). Cloud Build generates this metadata and signs it so that organizations can validate the contents if desired. At the time of writing, Cloud Build can achieve an SLSA level of 2 (L2), which is defined as “tamper resistance of the build service” (https://slsa.dev/spec/v0.1/levels#summary-of-levels) and utilizes Cloud Build triggers with SCM repositories that have built-in integrations.

Note

For Cloud Build to generate the metadata, the following services must be enabled: Artifact Registry and Container Analysis. The cloudbuild.yaml configuration must also include the images field. For private pools to enable build provenance, the requestedVerifyOption option must be set to VERIFIED.
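A build configuration that satisfies the requirements in this note might look like the following sketch. The repository path reuses the image location from this section's example, and $PROJECT_ID is the default Cloud Build substitution; treat the rest as illustrative rather than as the configuration used for the book's build:

```yaml
# cloudbuild.yaml: sketch of a configuration that lets Cloud Build record provenance.
steps:
- name: 'gcr.io/cloud-builders/docker'
  args: ['build', '-t', 'us-central1-docker.pkg.dev/$PROJECT_ID/image-repo/myimage', '.']
# The images field tells Cloud Build which images to push and attach metadata to.
images:
- 'us-central1-docker.pkg.dev/$PROJECT_ID/image-repo/myimage'
options:
  # Requests verified build provenance, as required for private pools.
  requestedVerifyOption: VERIFIED
```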

Artifact Registry provides the API to display the provenance metadata generated by Cloud Build with the --show-provenance switch. See an example command here:

    $ gcloud artifacts docker images describe \
        us-central1-docker.pkg.dev/**PROJECT_ID-redacted**/image-repo/myimage@sha256:e4637b83784b96cb...93349a8864cc9b8be1 \
        --show-provenance

A truncated example output is provided here. Note that the numbers are added for illustration purposes – they are not in the command output:

    image_summary:
      ...
    provenance_summary:
      provenance:
      - build:
          ...
    [1]   materials:
          - uri: https://github.com/**REPO-redacted**/packt-cloudbuild/commit/497985408c6f656944dd8d2f2ffd42160c59334e
          ...

The entry marked [1] is the location of the source materials, in this case the repository and the specific commit.

    [2]   subject:
          - digest:
              sha256: e4637b83784b96cbdcaad40cde0622bd3c0e0bfe4cd4c993349a8864cc9b8be1
            name: https://us-central1-docker.pkg.dev/**PROJECT_ID-redacted**/image-repo/myimage
          ...

The entry marked [2] is the container image SHA and location.

    [3] envelope:
          payload: eyJfdHlwZSI6Imh0dHBzOi...mZkNDIxNjBjNTkzMzRlIn1dfX0=
          payloadType: application/vnd.in-toto+json
          signatures:
          - keyid: projects/verified-builder/locations/global/keyRings/attestor/cryptoKeys/builtByGCB/cryptoKeyVersions/1
            sig: MEUCIQDkyICeKz0Assa6Dgj4ss-rsd0RJ2ZXJWmBI-6lycoV_wIgZnSaPYCRAGRo0BVYGx_3aofMJS0YifWLcNtwXQpmtQ0=
        ...

The envelope marked [3] holds the critical pieces needed to verify that the contents of the metadata have not been tampered with or manipulated: the encoded payload, the signature, and a reference to the public key. They are as follows:

- payload – The JSON format of the build metadata and build steps adhering to the SLSA spec

- keyid – The location of the builtByGCB public key to be used to validate the signature

- sig – The signature generated by Cloud Build for validation of the payload

This may repeat information that is available in the Cloud Build logs, but it provides organizations with another mechanism to audit and ensure that a container image has not been tampered with or manipulated.

Attestations

GCP uses an attestation in the form of a digital document as a way to certify a container image. Attestations can be added to build steps to ensure that the steps were completed by a designated system. Cloud Build can generate an attestation that a build was completed in Cloud Build and this can be verified by the container runtime.

Binary Authorization is the service in GCP that container runtimes such as Google Kubernetes Engine (GKE) and Cloud Run use to determine whether an image can be deployed. For instance, GKE can deny an image for use because it was not built by Cloud Build. Organizations can generate an attestation that the image has passed QA. Using Binary Authorization, an organization can only permit container images that have passed QA to be deployed into the runtime. Additional attestations can be added as build steps defined for Cloud Build.

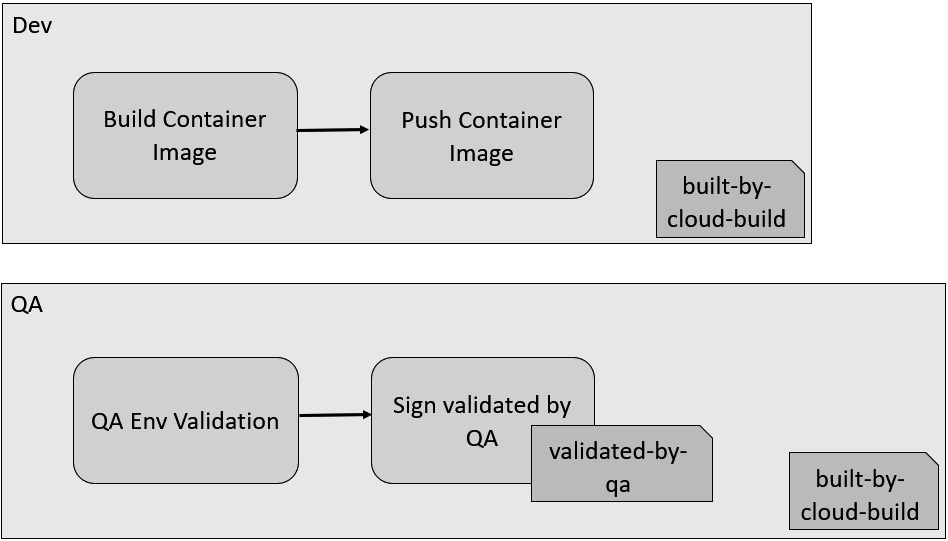

When Binary Authorization is enabled, Cloud Build creates an attestor, which is then used to create the built-by-cloud-build attestation, signed using the builtByGCB crypto keys. The supported container runtimes validate this attestation before the specified container image is deployed. Figure 6.5 shows how Cloud Build can automatically sign images with a built-by-cloud-build attestation, and in the QA pipeline, how a configured build step can also sign a validated-by-qa custom attestation:

Figure 6.5 – Cloud Build signing attestations

In the following example, the enforcementMode for Binary Authorization is set to DRYRUN_AUDIT_LOG_ONLY, which means the runtime will check for attestations, but only log violations. If GKE (as the container runtime) enables Binary Authorization, when a Pod is requested to be created, it won’t be rejected but the following will be logged:

    imagepolicywebhook.image-policy.k8s.io/dry-run: "true"
    imagepolicywebhook.image-policy.k8s.io/overridden-verification-result: "'nginx' : Image nginx denied by attestor projects/**PROJECT_ID-redacted**/attestors/built-by-cloud-build: Expected digest with sha256 scheme, but got tag or malformed digest

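In the preceding example, a generic nginx container image was requested to be deployed but was caught and logged by Binary Authorization. As the message indicates, the image was referenced by a tag rather than by a sha256 digest, so it could not be matched against the built-by-cloud-build attestor. Binary Authorization can be set up to require multiple attestations as an image passes through different parts of the build process, such as QA.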

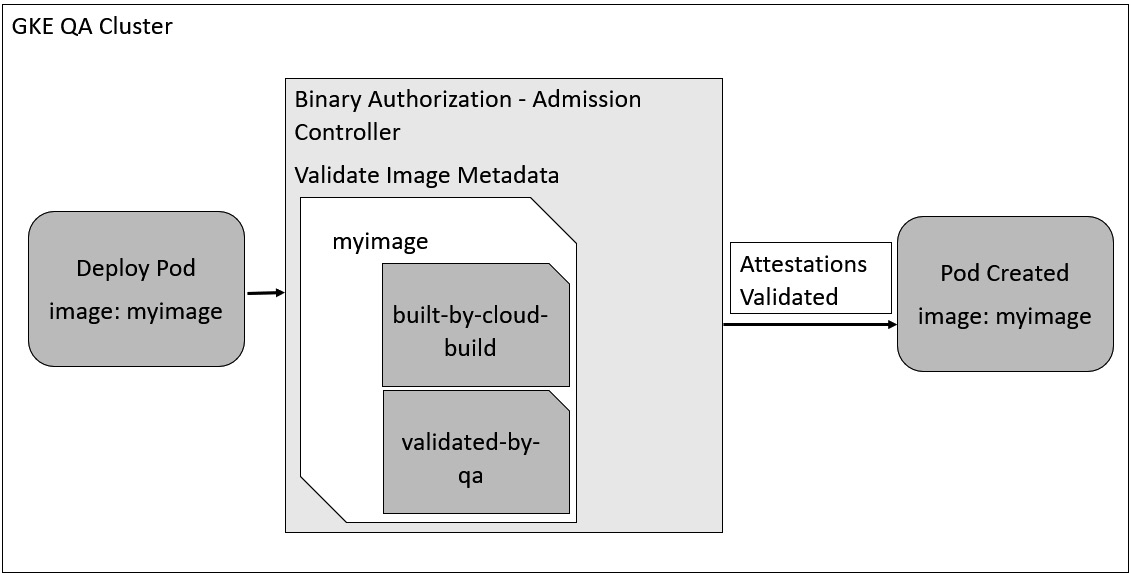

Figure 6.6 shows Binary Authorization checking for attestations in GKE’s admission controller. When enforcementMode is set to ENFORCED_BLOCK_AND_AUDIT_LOG, a container image is permitted into the cluster only if all the required attestations are present. If any required attestation is missing, admission of the container image is blocked and the violation is logged:

Figure 6.6 – A GKE container runtime using Binary Authorization to determine whether an image can be admitted
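A Binary Authorization policy that enforces this behavior could look roughly like the following sketch. The built-by-cloud-build attestor is the one discussed in this section, the validated-by-qa attestor is assumed to have been created separately under the same name used in Figure 6.5, and PROJECT_ID is a placeholder:

```yaml
# policy.yaml: illustrative Binary Authorization policy; PROJECT_ID is a placeholder.
name: projects/PROJECT_ID/policy
# Allows Google-maintained system images to bypass the rule below.
globalPolicyEvaluationMode: ENABLE
defaultAdmissionRule:
  # Every image must carry attestations from all listed attestors.
  evaluationMode: REQUIRE_ATTESTATION
  # Use DRYRUN_AUDIT_LOG_ONLY instead to log violations without blocking.
  enforcementMode: ENFORCED_BLOCK_AND_AUDIT_LOG
  requireAttestationsBy:
  - projects/PROJECT_ID/attestors/built-by-cloud-build
  - projects/PROJECT_ID/attestors/validated-by-qa
```

Starting with the dry run enforcement mode and switching to blocking once the audit logs look clean is one way to introduce such a policy without disrupting existing workloads.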

The combination of both Cloud Build and GCP container runtimes supporting Binary Authorization can help improve the security posture of an organization. Denying container images that have not passed through the security rigor within an organization is an added layer of defense that can be implemented. Binary Authorization can also be configured to allow the deployment of images without the appropriate attestations in the event of an emergency. This mechanism is referred to as breakglass, which will bypass the validation.

The metadata generated by Cloud Build serves multiple purposes for auditing and validation throughout your end-to-end pipeline. Specific GCP services can take advantage of this metadata, such as signatures, to determine whether resources have completed all required steps in the respective environment.

Securing the network perimeter

Cloud Build can leverage a GCP security construct named VPC Service Controls (https://cloud.google.com/vpc-service-controls) to guard against data exfiltration. VPC Service Controls (VPC SC) allows an organization to set policies to define the user information, service accounts, IP addresses, and IP subnetworks required to access a GCP service.

In the context of Cloud Build, only builds using private pools, as discussed in Chapter 2, Configuring Cloud Build Workers, can support VPC SC. Private pool instances can leverage this capability because they are associated with your VPC, even though they are managed by GCP. Organizations can restrict Cloud Build even further by using an organization policy that only allows builds to use private pools. Further restrictions can be applied by only allowing certain private worker pools at various levels of the GCP resource hierarchy:

- Organization

- Folder

- Project

By leveraging this fine-grained hierarchy of rules, we limit the permitted worker pools at each level. This isolates things further by restricting certain teams to using pools based on the required resources. It would also deny the use of default pools, which may be applicable when organizations require builds to run within their private networks.
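As a sketch of what such a rule might look like at the project level, the following organization policy file restricts builds to a single private pool. It assumes the constraints/cloudbuild.allowedWorkerPools constraint and the policy file format accepted by gcloud org-policies set-policy; the project, region, and pool names are hypothetical placeholders:

```yaml
# allowed-pools.yaml: illustrative organization policy; all resource names are placeholders.
# The same rule could be attached to a folder or an organization instead of a project.
name: projects/PROJECT_ID/policies/cloudbuild.allowedWorkerPools
spec:
  rules:
  - values:
      allowedValues:
      # Only this private pool may run builds; the default pool is implicitly denied.
      - projects/POOL_PROJECT_ID/locations/us-central1/workerPools/my-private-pool
```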

When a VPC SC perimeter is configured, only those granted access can trigger a build or call the Cloud Build API, for example. In the earlier examples in The principle of least privilege section, we focused on service account role bindings that prevent access to particular services and data. VPC SC provides another layer of protection at the network layer through its associated policies. To create an initial VPC SC perimeter, we specify Cloud Build as a restricted service within the perimeter. This means that the Cloud Build service is protected and any traffic into or out of the perimeter has to be allowed by an ingress or egress policy.

Note

Organizations just getting started may want to configure VPC SC in dry run mode to audit the impact of defining a perimeter. Enabling enforced mode immediately may cause builds to fail. Any perimeters created in dry run mode can be converted to enforced mode without having to re-write policies.

As an ingress policy, for example, we may want to allow the user-specified service account defined in the trigger to access the Cloud Build API. For inner-loop scenarios where we may want to give specific developers access to trigger a build, we can create an access level that admits developers who are within a specific IP/CIDR range or country, or who are using devices that adhere to specific policies.
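The two cases above can be sketched as ingress rules in the YAML format that VPC SC perimeters accept. The service account name, POLICY_ID, and the trusted_developers access level are hypothetical and would need to exist in your environment before a rule like this could be applied:

```yaml
# ingress.yaml: illustrative VPC SC ingress rules; all identifiers are placeholders.
# Rule 1: let the trigger's service account call the Cloud Build API from any source.
- ingressFrom:
    identities:
    - serviceAccount:build-sa@PROJECT_ID.iam.gserviceaccount.com
    sources:
    - accessLevel: '*'
  ingressTo:
    operations:
    - serviceName: cloudbuild.googleapis.com
      methodSelectors:
      - method: '*'
    resources:
    - '*'
# Rule 2: let human users who satisfy an access level (IP range, region, or device policy) do the same.
- ingressFrom:
    identityType: ANY_USER_ACCOUNT
    sources:
    - accessLevel: accessPolicies/POLICY_ID/accessLevels/trusted_developers
  ingressTo:
    operations:
    - serviceName: cloudbuild.googleapis.com
      methodSelectors:
      - method: '*'
    resources:
    - '*'
```

Narrowing the method selectors and target resources further than the wildcards shown here would tighten the perimeter, at the cost of more maintenance as pipelines change.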

We have limited access at both the Identity and Access Management (IAM) and VPC network layer, by using VPC SC and the defined service perimeter. The service perimeter can be extended to allow communication from other connecting networks for build triggering and service access from on-premises systems or specific developer machines.

VPC SC provides an additional layer of security at the network layer for services on GCP. In this section, we discussed how Cloud Build can leverage this GCP service to protect not only how builds can be triggered but also which resources build configurations can access.

Summary

Cloud Build integrates with various GCP services to provide users with different security capabilities. These capabilities add layers to an organization’s defense-in-depth strategy. Cloud Build makes it easy to implement security within your pipeline, but the shared responsibility model between the GCP services and the organization is critical to ensuring an end-to-end security posture.

In the next chapter, we will start looking into leveraging Cloud Build for the automation of infrastructure resources.