2

Performing Engineering on Projects (Part I)

How do we do engineering on projects? Engineering projects are different from other projects, so learning to be an effective manager of an engineering project starts by understanding how we do engineering on projects. We accomplish this engineering through the engineering life‐cycle. In this chapter, I summarize key aspects of how we do the initial stages of the engineering life‐cycle, which are called “requirements analysis” and “design.” We will complete our overview of how we do engineering on projects in Chapter 3.

2.1 The Systems Method

2.1.1 Motivation and Description

In Chapter 1, we introduced the idea of a project life‐cycle – a series of steps or stages through which a project progresses toward completion.

Each stage can and should be governed by a set of guidelines – which we termed processes – whose level of rigor and detail should be adapted to the needs of each particular project.

A discipline called systems engineering, which is in many ways closely related to engineering project management, has developed what we might call the system method. This is intended to increase the likelihood that a system development effort will be successful, and achieves this increased likelihood of success by placing the focus on the behavior of the system as a whole, rather than exclusively on the parts and components.

Since the objectives are so similar, we can transfer much of this thought process from systems engineering to managing engineering projects. In fact, I tend to think of engineering project management as systems engineering supplemented by a specific set of management and people skills.

As I stated in the introduction to this book, I have come to believe – through long experience – that engineering projects are quite different from other projects (such as construction projects and artistic projects – and I have managed those too). The difference centers in something that later in this book we will call risk; engineering projects, since they are inventing something new and technological, have more risks, more profound risks, and risks that tend to be centered in engineering matters.

All of this has led me to the conclusion that one cannot be an effective manager of an engineering project without employing methods for project management that account for this specific nature of engineering projects. What I have learned is how to tailor and adapt the methods of ordinary project management to the specific needs and challenges of engineering projects, in many cases by drawing upon the methods and insights of systems engineering.

In this chapter (and continuing into the next), therefore, we will summarize how one performs engineering – in particular, systems engineering – on our engineering projects. With that background, in later chapters, we will be able to draw upon that knowledge to create those tailorings, adaptations, and new features that allow us successfully to address the challenges specific to managing engineering projects.

I will start by describing what I consider the systems method.

There is a natural tendency for engineers to focus on the parts and components of a system, such as computers, radios, motors, mechanical structures, and so forth. After all, they are visible, tangible artifacts; they are of a size and complexity that one can readily grasp; and some important portion of the actual system development effort involves the specification, selection, acquisition, and integration of these parts. Focusing on them is conceptually easy and comfortable.

But … it is usually the case that the reason we are building a complex system to accomplish a mission is that we desire or need something more than what is provided directly by these parts; that is, we aspire to create some emergent behavior, some “1 + 1 = 3” effect, wherein the new system will do something more than what is accomplished by the individual parts.

Let's consider an example or two. I am old enough to remember the first mobile telephones; they pretty much just had a numerical dial pad and two buttons marked “call” and “hang up.” They did not store phone numbers, but they were still a breakthrough; you could make a call while you were out and about, without having to depend on finding a phone booth.

But you needed to know the number that you were going to call. At one time, we all had little booklets into which we wrote the telephone numbers of friends and business associates. To use that mobile telephone, I would have to first look up the name of the person that I wanted to call in my little booklet, look next to their name where I had written their phone number, and then punch their phone number into my mobile phone. In the “mobile communications system” of those days (not so long ago!), storing phone numbers – and relating those stored phone numbers to names – was one function; making the actual phone call was another, separate, function.

At some point, this was improved through the introduction of a little electronic device into which I could enter the names and phone numbers of my friends and business associates. This was a big improvement (my handwriting is terrible).

But there were still two separate devices, each of which implemented a separate function.

Then, someone came along and realized that one could put both functions onto a single device. Even with no electronic integration between the two functions, this was an improvement, as I had to carry only a single device, keep only a single battery charged, and so forth.

But then something very different was introduced: Since those two functions (1 = storing phone numbers and relating those stored phone numbers to names; 2 = making the actual phone call) were both on the same device, it was now possible to allow these two functions to interact electronically. I could now just find my friend or business associate by name, and indicate that I wanted to call them. The actual phone number was automatically transferred from the first function to the second. This was a radical improvement in simplicity, ease of use, and reduction of errors!

It is also an example of what we mean by the term “emergent behavior.” The people that did this in essence created a capability that one might call “dial by finding a name on the list.” The capability to do that is not inherent in either of the “parts” (e.g. the list of names and numbers, and the phone itself), but instead “emerges” from the carefully controlled union of those parts. I like to think of such emergent behavior as a “1 + 1 = 3” effect that we are striving to create; or to use an old‐fashioned phrase, the “whole is greater than the sum of its parts.”1

Emergent behavior is critical in systems engineering and engineering projects. Almost every engineering project today is undertaken to achieve such emergent behavior. Once you realize this, then you realize that your focus as either the designer or the project manager needs to shift from being exclusively or primarily on the parts, to instead being on the system as a whole. This is the start of the systems method.

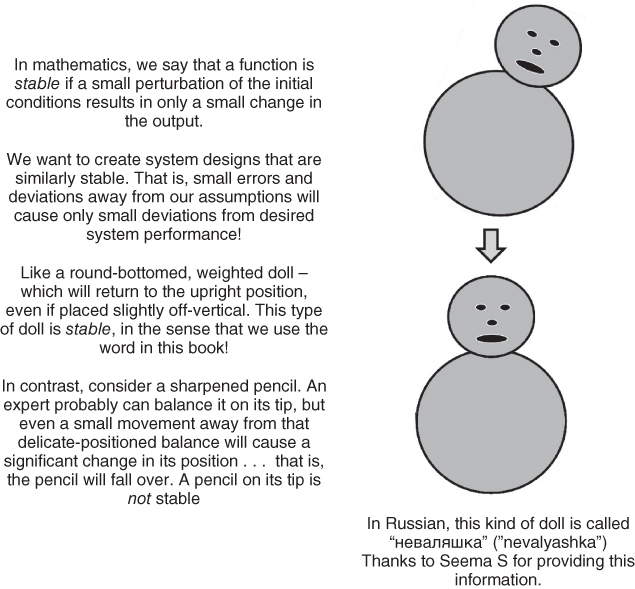

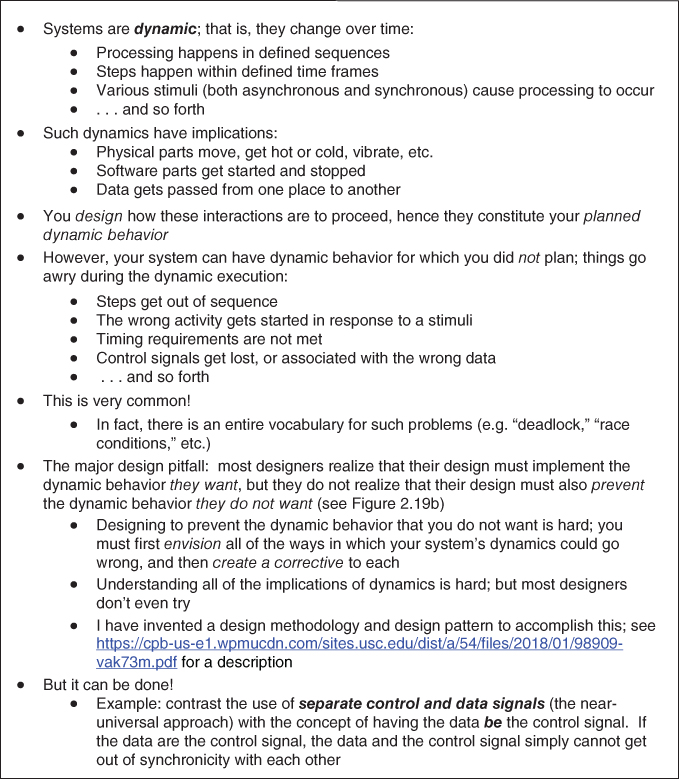

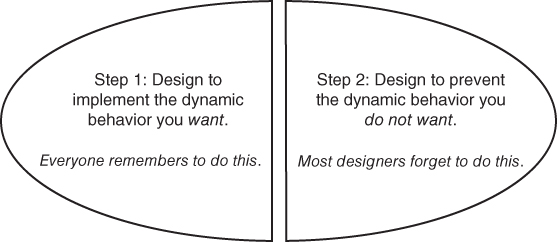

Of course, when we put parts together so as to create the desired emergent behavior, we are highly likely unintentionally to create other, undesired, unplanned emergent behavior. Such unplanned emergent behavior can range in impact from a nuisance to a serious safety hazard. As we will see later in this chapter, good designers therefore not only design their systems to provide the emergent behavior they want, they also design their systems so as to prevent other types of emergent behavior.

There is another motivation for the systems method: parts – even large parts that may look like they are complete systems – may not be usable without the complete system. Imagine having cars, but without roads, without filling stations, without traffic signals, without insurance, without spare parts and repair facilities and repair technicians, and even without traffic police. It would not work. It is the role of the systems method to help us figure out what are all of the parts that we must have; sometimes, the need for a part is obvious; sometimes, however, it is not. I have seen many systems that “forgot” what turned out to be a critical part; perhaps some data set that the computer program needed before it could operate, perhaps some special piece of test equipment, and so forth. The systems method provides a basis for actually determining early on in the project development cycle all of the parts that are needed. It does this through a technique called decomposition, wherein we conceptually break the system into segments, and then break those segments into smaller subsegments, and so forth. We in fact use decomposition throughout the systems method, decomposing different aspects of the system: the requirements, the design, the test program, and many other aspects of the problem are all analyzed through decomposition. We will say a lot more about this later.

There is yet a final motivation for the systems method: not only do we need all of the parts, but those parts must match and be in balance. Cars must be of a size that fits appropriately in the marked road lanes. The weight of the cars must be limited to that which the road can bear. The surface of the road must be reasonably smooth, but there is no reason to make it smoother than is necessary; that would certainly increase cost, and probably decrease reliability. Bumpers on all cars must be at around the same height. When we transitioned from leaded to unleaded fuel we had to ensure different sized nozzles at gas stations, sized so that people could not accidently put leaded fuel into a car that was designed for unleaded fuel.2 We had to figure out for how long we needed to sell both types of fuel, as not everyone could afford to buy a new car that used unleaded fuel right away (and the car companies could not have built that many cars in a single year, anyway). And so forth. Parts must match, and be in balance. We want the car to drive smoothly, and with only a reasonable level of noise. How much of that noise control is to be achieved by the road surface? By the tires? By the suspension? By the shock absorbers? These must not only be in balance in order to achieve the desired effects; we must avoid putting effort into improving a single part if the effect of that improvement at the system level is either not worth its cost, or if other factors prevent there from being any improvement at all!

Here's an example to consider: society will have another very complicated system transition as we start introducing driverless cars. For many years, perhaps for decades, we will have both driverless and human‐driven cars sharing the roads. How will insurance and liability work? Will we, at some point, require driverless cars to be connected to a network, so that we can route such driverless cars so as to even out traffic congestion? Will we at some point ban human‐driven cars? After all, human‐driven cars kill tens of thousands of people every year in the United States alone; once the driverless technology is really mature, such driverless cars are likely to be far safer than human‐driven cars. Will we then abandon lane markings, and let the driverless cars crowd together, so that we can fit many more cars on the same roads? Doing some of these things would seem to be essential in order to achieve the real benefits of driverless cars – many fewer accidents and fatalities, and the ability to fit far more traffic on the same roads with less congestion. Yet there will be significant social resistance to all of these changes. These are all examples of the questions we consider when using the systems method.

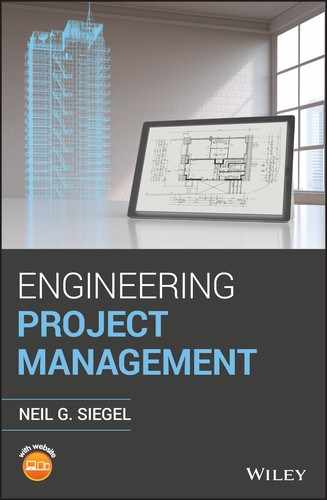

The systems method guides us through all of these issues (see Figure 2.1).

Figure 2.1 Motivations for employing the systems method; key characteristics of the systems method.

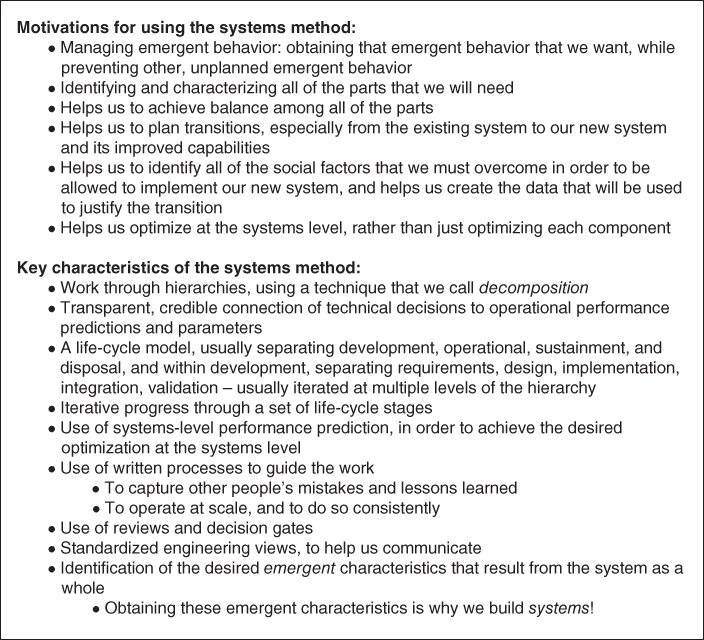

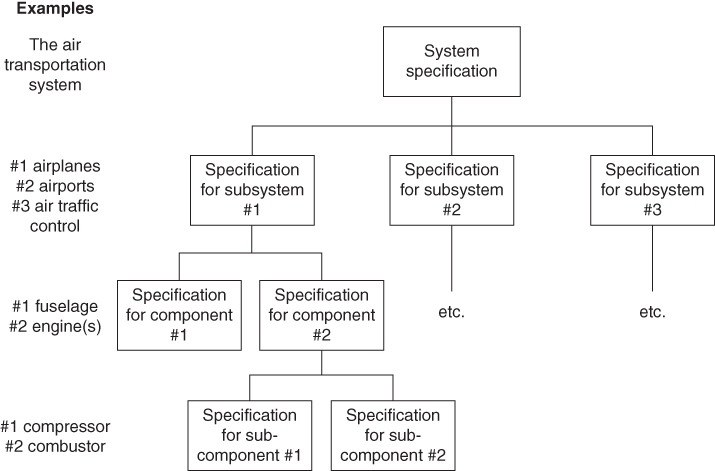

Figure 2.1 points out that one of the key characteristics of the systems method is the use of hierarchies and decomposition. You will see that we use hierarchies and decomposition over and over again in project management (and in this book). We describe the product or system we aspire to create by decomposing it into a hierarchy of parts. For example, in Figure 2.2, we depict and describe the world air transportation system by decomposing it into a set of constituent parts – airports, airplanes, and air traffic control – which form level 2 of our hierarchy. We can then decompose each of these level‐2 components further into smaller parts; in the figure, I have decomposed airplanes into fuselage, engines, avionics, and passenger equipment. The decomposition can then continue to further levels. Such decomposition into a hierarchy helps us understand complex entities.

Figure 2.2 An example of decomposing a system into a hierarchy.

We will discover that we can use such hierarchies and decomposition to represent and analyze many other items, not just the systems and products themselves. In particular, we can use them to represent and analyze our methods and intermediate work products (e.g. requirements, design, approach to testing, and so forth).

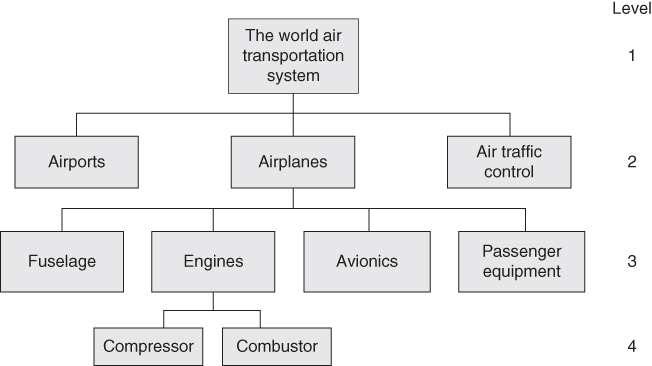

In Chapter 1, we introduced the idea of a project life‐cycle, a series of steps or stages through which the project moves as it progresses. In Figure 2.3, I expand the list that we used in Chapter 1 to form my own version of the stages of the project life‐cycle; other people use slightly different versions of this list.

Figure 2.3 Neil's version of the stages of the project life‐cycle.

Next, I will describe each stage:

- The need and the idea. In this stage, we try to understand the stakeholders, their needs, and their constraints. What is their mission? How do they do it? What is their product? How do they do their mission today? Why is that how they do it? What constrains the possibilities? What do they value? How do they measure that value? We also look at technology, techniques, and capabilities that are available to use in building the system. What can they really accomplish? What are their limits and side‐effects? How mature are they (e.g. reliability, consistency, safety, predictability, manufacturability, etc.)? Can they be used productively in ways other than their original intent? In this stage, we are trying to understand broad needs and potential enablers, but not yet trying to define specific requirements.

- Requirements. The formal statement of what the new system is supposed to do, and how well it is supposed to do it. The what is a qualitative statement of a capability. For example, “The car shall be able to travel in both the forward and reverse directions, and the direction of travel shall be selectable by the driver.” The how well is a quantitative statement. For example, “The car shall average 30 miles per gallon of fuel under the following conditions: X, Y, and Z,” or “The noise level inside of the car shall not exceed 55 dB at speeds below 70 miles per hour, while traveling on road surfaces that meet the following conditions: A, B, and C.” Requirements (of both types) are written down and placed into documents called specifications. Meeting the requirements, as documented in the specifications, is usually mandatory; that is, you may not get paid (or may get paid less) if the system you deliver at the end does not meet every single requirement in the specifications. Requirements – like so much else in systems engineering – are created in a hierarchy: we create requirements for the system as a whole, and then for the major functional elements of the system, and perhaps even a level below that; we do this using the decomposition process.

- Design. The requirements say what the system is supposed to do, and how well it is supposed to do it. The design, on the other hand, says how all of this is supposed to be accomplished; for example, will our car use a gasoline engine, a diesel engine, or a battery with an electric motor. The design will likely be quite technical at times, specifying, for example, particular algorithms, particular materials, particular structural methods, and so forth. The design, like the requirements, is also created in a hierarchy: for the system as a whole, for the major components of the system, and so forth. Note, however, that the hierarchies for the requirements and the design are seldom, if ever, the same: one is a decomposition of what and how well, the other a decomposition of how. The two hierarchies are related, but they are not the same. The top level of the design hierarchy describes how the system as a whole will be implemented, and in particular, will describe how we are to achieve the emergent behavior desired for our system. Lower levels of the design hierarchy describe successively smaller pieces of our system, and how those pieces interact. The lowest level of the design hierarchy describes how each of the smallest pieces are to work internally.

- Implementation. Through the decomposition process embodied in the hierarchy for the design, we arrive at the bottom of our top‐down definition process (e.g. the left‐hand side of the “U”; see Figure 2.4, below): the naming and describing of each of the little pieces into which we have decomposed our system through the design process. We now have requirements specifications and designs for all of these little pieces and, therefore, we are ready to go and build them. Any given such piece might consist entirely of hardware; or entirely of software; or entirely of data; or some combination thereof. We may not need a hierarchy for this stage; we may commission a set of independent teams each to build one or more of those little pieces.

- Integration. When people started building systems, especially systems with lots of software in them, their original concept was to finish all of the implementation, then put all of the pieces together, and then proceed to test the system. It was quickly discovered that this seldom worked in a predictable and consistent fashion. The complete system, with its hundreds of separate parts and lots of software (nowadays, perhaps millions of lines of software code), turns out to be too complex for this sort of put‐it‐together‐all‐at‐once approach to work. So, gradually, the need for a phase between implementation and testing was recognized, which we now term integration. The purpose of the integration phase is to put the parts together, at first in small subsets of the whole, and gradually working one's way through the integration of ever‐larger subassemblies toward having the entire system. At this stage, we are not yet testing the system; instead, we are just trying to make it operate in an approximately correct fashion. Distinguishing integration from testing has been a gigantic boon to systems engineering and engineering project management; many projects today, however, still neglect the integration stage, and usually suffer greatly from that neglect. We will talk much more about the integration stage in the next chapter.

- Testing. Having conducted implementation (which builds all of the pieces of our system) and integration (which assembles all of the pieces of our system, and sorts out enough problems that the system operates in a reasonably correct fashion), we can then turn to the problem of testing our system. For most systems, we will do two different types of testing. First, we have our specifications that contain our requirements: the mandatory statements of what and how well for our system. We have to conduct some type of rigorous process to make sure that our system meets each and every one of these requirements. In this book, I call this first form of testing verification. Such verification, however, is not sufficient; I have seen plenty of systems which meet their requirements but were disliked by their intended users. Therefore, we must also assess a set of more subjective matters, such as “Can this system be used by the intended users, or is it too difficult for people with their education, experience, and training?” Or perhaps our systems is used by people who are in stressful situations (such as power plant operators, police, ambulance dispatchers, doctors, or soldiers); is the system designed in such a way that it can realistically be used by people under such stresses with only a reasonable number of errors? In this book, I call this second form of testing validation.

- Production. Our system development (e.g. all of the life‐cycle stages through testing) yields us one copy of our system. Sometimes – such as for a satellite project – that is the only copy of our system that we will build. But more often, we then make additional copies of our system. This making of additional copies of our system is called production. Production may range in scale from making 10 copies, to 1000 copies, to 1 000 000 copies, or today, even 100 000 000 copies. The techniques used for production will need to vary significantly, depending on the scale of the production required. We must also perform some testing to ensure that our production copies are correct; this testing is usually far less in extent than the testing we perform on the first article, however.

- Deployment. Our system needs to be placed into service. That is often a complicated endeavor on its own. For example, a satellite needs to be launched into space. Or a new billing system probably needs to be operated in parallel with the existing billing system for a while, before we disconnect the existing billing system and switch over to the new one. Or our millions of new mobile phones need to be sent to retailers and sold. This process of placing our new system into service is called deployment.

- Use in actual operation. Once our system is in actual use (e.g. we have completed a successful deployment), it can finally be used by the intended users, and bring them the benefits for which it was designed. But those users need support: someone has to create training materials for the new system, and perhaps even conduct actual training classes. Things break, and someone has to diagnose and fix them. To effect those repairs, we will need replacement parts; we need someone to make those replacement parts. It is likely that we will continue to find errors in the system – even after the test program has completed – and we will need to fix those errors. Most systems are operated for a long time, and our users expect us to design and implement improvements to the system over the course of the time that the system is operated. There are many other, related aspects of supporting our new system in effective operations.

- Phase‐out and disposal. All good things come to an end, and someday – perhaps long after we have retired – our system will reach the end of its usable lifetime and will need to be taken out of service. This can be a very complicated and expensive activity on its own, and in such cases, methods to implement the retirement and disposal of the system should be designed into the system from the very beginning. A satellite might need a special rocket motor to deorbit the satellite, so that it burns up in the Earth's atmosphere. A nuclear power plant needs to be designed so that all of the radioactive materials can be taken out when they are expended, and then properly stored or reprocessed. Even mobile phones (and other consumer devices that contain batteries) need special disposal procedures, so that we do not create inappropriate dangers through pollution caused by old batteries. In other cases, we may have to figure out how to safely retire and dispose of a system where no such preparations were made. The world has many dams, for example; all have finite lifetimes, but methods, materials, and funds to dispose of them are seldom worked out until the dam is ready to be retired.

This is our basic system life‐cycle; every project may have its own small variations and changes in nomenclature, but the general intent is usually very similar to that which I have described.

In each stage of the project life‐cycle, we perform a mixture of activities:

- Technical

- Project management

- Agreements

- Planning and replanning

- Monitoring

- … and so forth.

Each of these activities can and should be governed by a set of written guidelines – which we in this book will call processes – whose level of rigor and detail should be adapted to the needs of each particular project. An engineering process is simply a written description of the steps, guidelines, constraints, inputs, and outputs that we use to perform engineering activities. We will return to the subject of such project processes later on in this book.

2.1.2 Life‐Cycle Shapes

An interesting insight is that engineering project life‐cycles can have shapes. I will describe three of the most common such shapes: “U,” waterfall, and spiral.

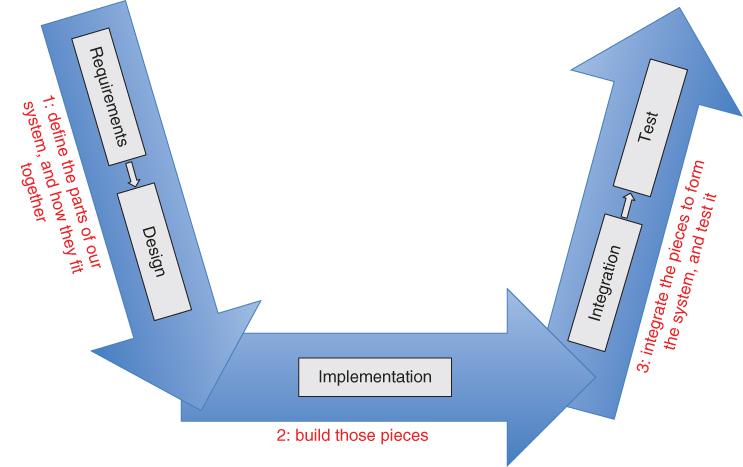

I start with what I call the “U” diagram (Figure 2.4). The concept is clear: we start our project in the upper left, defining our requirements, and then creating our design. As noted above, these stages proceed in a hierarchy; we do requirements at the system level, and then for each smaller segment of our system. We do design at the system level, and then for each smaller segment of our system. That is, we perform the requirements and design stages by starting at the system level and working our way “down” the hierarchy (where “down” in this context signifies from the system level to ever smaller pieces of the system).

Figure 2.4 Neil's “U” diagram.

To make it clear what we mean by such a hierarchy, consider the example that we presented in Figure 2.2:

- The air transportation system can be considered to consist of the following major parts: airplanes, airports, and air traffic control. These items – airplanes, airports, and air traffic control – form the second level of this system's hierarchy.

- An airplane consists of parts too, such as the fuselage and the engine(s). Those parts form a third level of our hierarchy.

- An airplane engine also consists of parts, such as a compressor and a combustor. The compressor and combustor form a fourth level of our hierarchy.

We say that the requirements and design stages are performed top‐down, referring to the direction of motion through the hierarchies and through the “U” diagram.

Having completed our requirements and our design, we are ready to implement all the pieces of our system defined by our design. This portion of the diagram is drawn horizontally, because we may not use a hierarchy for this stage; we could just commission independent teams to implement each of the pieces we have defined.

We are then ready to move upward through the right‐hand side of the diagram. Having implemented all of the pieces, we start putting those pieces together, at first in small subsets of the system, and gradually progressing to larger subsets, until we finally have the entire system interconnected and operating to some initial degree of correctness; as noted above, I call this process integration. We then perform testing: the verification that our system is effective (e.g. satisfies all of its formal requirements) and the validation that our system is suitable (e.g. meets the needs and desires of its intended users). On this side of the diagram, we show the arrow pointing upward, because we progress from small pieces and subassemblies, through larger subassemblies, to the entire system; that is, in this portion of the diagram, we say that we are proceeding bottom‐up.

Many other people use a similar diagram, which they generally call the “V” diagram; it basically has the left and right sides of my “U.” But the “V” version of this diagram either omits the implementation steps, or it places that actual building of the pieces on the right‐hand side. I object to either of those approaches. Obviously, the implementation is important, and ought not to be omitted. Perhaps more subtle is the idea that the purpose of the left‐ and right‐hand sides of the “U” diagram is to show that these activities proceed in a hierarchy. The actual implementation of the pieces that we have defined through the decomposition on the left‐hand side, however, need not proceed in any sort of hierarchy; if we have decomposed our system into 500 little pieces, we might well build the 500 little pieces pretty much independent of each other. We resume working in a hierarchy (bottom‐up, on the right side of the “U”) when we start putting small numbers of those pieces together through the integration process. Hence my preference for the “U” shape over the “V.”

The “U” diagram does not depict the latter stages of the project life‐cycle (e.g. production, deployment, actual use of the system, retirement, and disposal); neither do the related “V” versions of this diagram. Instead, this “shape” concentrates on the stages that occur during the actual development of our system. Indeed, some of those later phases (e.g. use of the system in actual operations) are not actually “projects,” as I defined that term in Chapter 1; instead, they are what I defined as “continuous business operations.”

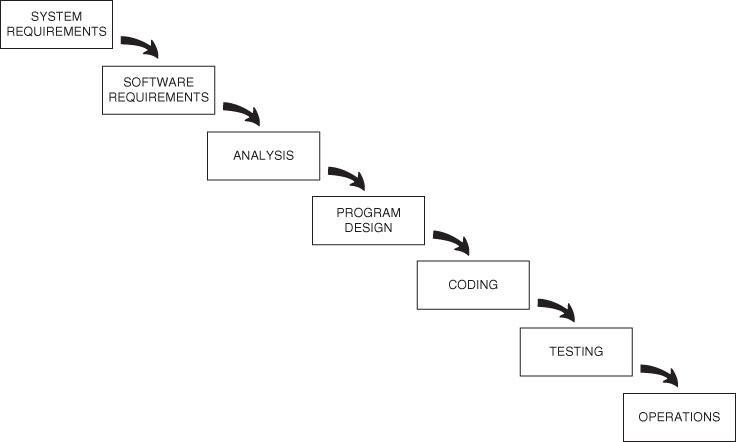

The next shape for a project life‐cycle that I will discuss is called the waterfall (see Figure 2.5). The so‐called waterfall method was introduced by Dr. Winston Royce in 1970.3 Dr. Royce's purpose was to bring some order to what he perceived as the chaos that seemed to him to be a recurring feature of large software development activities. His recommendation was for a series of particular steps to be undertaken in a particular order, while endeavoring to complete one step before beginning the next. He believed that the need to perform all of these steps was not universally recognized – he actually said that some customers believed that doing some analysis, and then doing the coding, was all that would be required to deliver a software product – and therefore, part of his goal was to advocate the use of the complete set of steps. Although his terminology is in some ways specific to software systems, you can see that, in concept, Dr. Royce's list of steps is not very different from the list of stages that I presented above.

Figure 2.5 Dr. Royce's first depiction of the waterfall method.

Source: Used with the permission of Dr. Royce's son, Walker.

It is difficult to overstate how important and influential Dr. Royce's work has been. Large companies, like TRW and IBM, created corporate software development policies that more or less adopted Dr. Royce's approach in toto. So too did the US Department of Defense, and through the Department of Defense's contractual terms that mandated that companies who were building software (and later, systems) for the US Government follow those standards, in fairly short order the entire world was following the waterfall method.

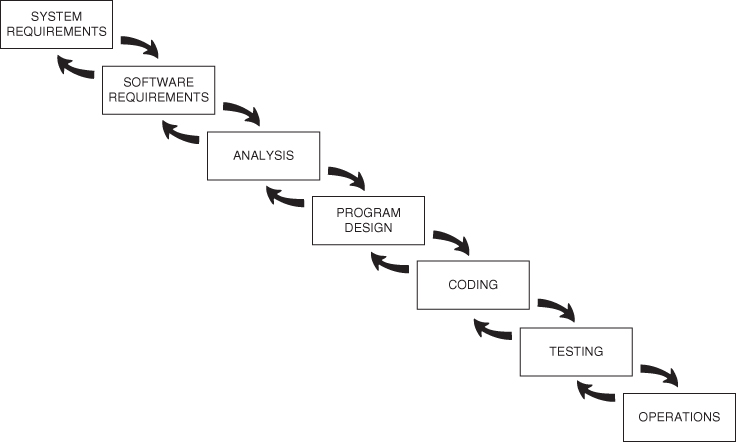

There is an additional insight that Dr. Royce provided: a recognition that things do go wrong, and one might at times have to go backwards (Figure 2.6).

Figure 2.6 Dr. Royce's second depiction of the waterfall method.

Source: Used with the permission of Dr. Royce's son, Walker.

The depiction of Figure 2.6 is often misunderstood. If one just looks at the drawing but does not read Dr. Royce's paper, one might get the impression that one is allowed – even encouraged – to back up as many steps as wished. This is not so! Dr. Royce is very clear that the desired approach is to be thorough enough at each step that one never has to back up more than a single step. His original caption for this figure actual reads “Hopefully, the iterative interaction between the successive phases is confined to successive steps.”

The waterfall method contributed huge value through its “normalization” of the necessity for steps other than analysis and coding, and through its promulgation of the idea that there should in fact be a planned sequence of steps.

In short, the waterfall method was aimed at introducing some organization and structure into what was perceived to be an overly chaotic approach to systems engineering and engineering project management. But the waterfall method also implied a rigorous sequencing of (i) doing all of the requirements, then (ii) doing all of the design, then (iii) doing all of the implementation, and so forth; in fact, many of the corporate and government development policies that were created in the wake of Dr. Royce's paper said explicitly: finish one step before you proceed to the next, and go through the life‐cycle exactly once.

Experience, however, soon showed that this was too constraining to be practical for many projects, especially those of larger scale and complexity. At times, people would achieve success through an incremental approach that involved a sort of successive approximation, by building a well‐defined partial version of the system, operate that version for a while to gain additional insight, then build a second well‐defined partial version of the system, operate that version for a while to gain additional insight, and so forth.

What to do? Live with the perceived inflexibility of the waterfall method, or return to the pre‐waterfall chaos? Neither of those choices seemed very good.

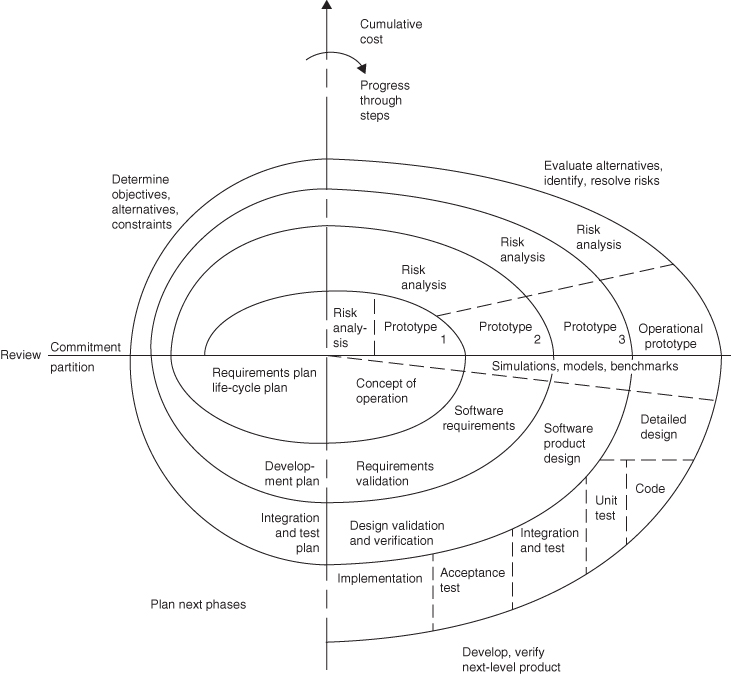

Fortunately, someone came along and proposed a new method – and a new project life‐cycle shape – that solved this dilemma, allowing the continued rigor and organization of the waterfall method, while creating a structured framework for successful development through incremental, successive approximations of the eventual system. The person who first put this forward as a candidate formal method was Dr. Barry Boehm,4 in 1986;5 he termed it the spiral model. The spiral model (Figure 2.7) forms a third shape for a project life‐cycle.

Figure 2.7 Dr. Boehm's spiral method.

Source: Used with the permission of Dr. Boehm.

The point of the spiral method is that we develop a carefully thought out partial version of our system, and then we deliver that partial version to the users (Dr. Boehm calls this a prototype in Figure 2.7, but this partial version, ideally, is actually used to accomplish real work), who then use it for actual mission operations. The development team observes these operations, and thereby gains new insights into what those users actually need and want. The developers then incorporate those new insights into their plans for the next increment – which Dr. Boehm calls a spiral – of the system. This way, features or omissions that might cause the system to be unacceptable to the users get found and fixed along the way. It turns out (we quantify this in the next chapter) that fixing things earlier in the project development life‐cycle costs far less than fixing them later. Fixing them earlier, of course, also increases the user's satisfaction with the system, and their confidence in the development team.

Again, it is difficult to overstate how important and influential Dr. Boehm's work has been. All of the same organizations that in the 1970s and 1980s created policies and directives mandating the use of the waterfall method modified those policies and directives in the 1990s to allow and encourage the use of the spiral method. As a result, some variation of the spiral method is used nearly universally on engineering projects today, especially engineering projects with lots of software (which these days is most of them). Legendary computer scientist Dr. Fredrick P. Brooks says of Dr. Boehm's spiral model, “I strongly believe the way forward is to embrace and develop the Spiral Model.”6

Other shapes are possible, and have been propounded in the literature. But for the purposes of this book, we will limit ourselves to these three project life‐cycle shapes.

2.1.3 Progress Through the Stages

Whatever shape your engineering project employs, you will use some type of orderly method to determine when you are ready to move from one life‐cycle stage to the next (e.g. from requirements to design, and so forth). This method is centered around a review, which is the data‐gathering and data‐analysis exercise that forms the basis for a formal decision process (e.g. are we ready to move to the next life‐cycle stage or not?). If we determine that we are not yet ready, we then determine what are the things that remain for us to accomplish, so that we are ready to move to the next life‐cycle stage. Generally, you want a large segment of the stakeholders for your project (the development team, your company's management, the buying customer, the using customer, the paying customer, and so forth) to participate in these reviews and decisions. We include all of these people, both in order to get a full range of opinions to inform your decisions, but also to build a social consensus about the correctness of that decision.

This process is often referred to as decision gates. We call these reviews gates because we may be allowed to pass through them at this time … or we may not; that is, the gate may be either open or closed. The reviews are intended to determine the adequacy of the system to meet the known requirements (specifications and process guidelines) and constraints. Reviews become progressively more detailed and definitive as one advances through the program life‐cycle.

In toto, reviews provide a periodic assessment of your project's maturity, technical risk, and programmatic/execution risk. Equally importantly, they help one improve/build consensus around the go‐forward plans; this is why you involve so many of your stakeholders in the review process. Reviews provide you – the manager of this engineering project – with the data you need to make the decision about whether to proceed to the next phase, or return to the previous one in order to resolve some issues.

As noted above, an important characteristic of the systems method is that we strive to optimize at the systems level, not at the component level. Even if we make each component of our system the very best possible, they may interact in a fashion that provides less than the best possible performance; since we are striving to make the system as a whole the best possible, we must look at the interactions of the components, in addition to the performance of each individual component.

This may have other benefits. For example, I have frequently discovered that I could make do with a less capable version of some component in a system and still get the system‐level performance and capacity that I needed. It would be a waste of money to have paid for a better version of that particular component; limitations that arise out of the interactions of the components might prevent the improved version of that component from having a positive effect on the system as a whole.

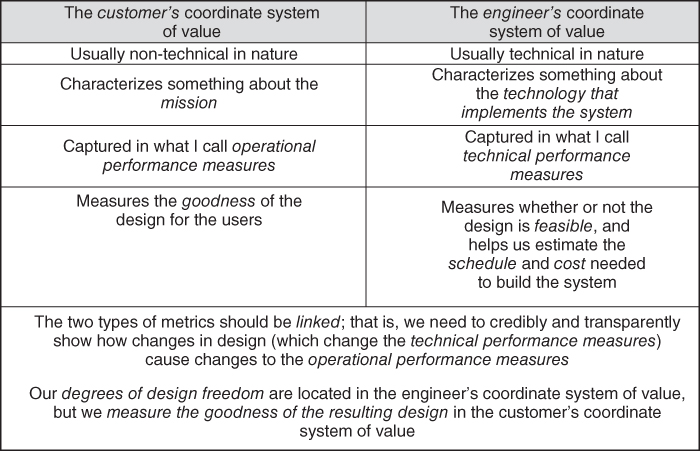

This quest for system‐level optimization can lead to useful insights too. I once had a radio vendor on a military command‐and‐control system come to me and say that they had figured out (for a price!) how to make the signaling rate of their radio become twice as fast as it was at present. In the coordinate system of value for a radio designer, being twice as fast is of high value indeed. The radio signaling rate is an example of what I will later call a technical performance measure; this is an objective measurement within what I like to call the engineer's coordinate system of value. But before making a decision to pay that money for faster radio, I had my system‐level modelers insert a model of that faster radio into our system‐level performance model. We had created a set of metrics that measured the performance of the system not only in technical terms, but also in terms of the operational benefit to the intended users; this is what I will later call an operational performance measure, and it forms an objective measurement within what I like to call the customer's coordinate system of value (Figure 2.8). For example, we had created a metric that predicted the level of casualties on each of the opposing sides in a battle scenario; if our system design was going to add value to the customer (in this case, the US Army), the ratio of the number of enemy casualties to the number of US Army casualties should be higher than for the same battle scenario when our system was not used. We called this the loss‐exchange ratio; a higher loss‐exchange ratio indicated a better design, because that was a metric that the intended users of the system valued.

Figure 2.8 The two coordinate systems of value, and the two types of objective measurements.

When we plugged the radio with the doubled signaling rate (a highly favorable technical performance measurement, in the engineer's coordinate system of value) into our system model, the result was no improvement at all in the system's overall performance, as measured by the loss‐exchange ratio! Therefore, in the customer's coordinate system of value, the faster radio had no value.

But we did not stop there; I asked the radio vendor and the modeling team to work together to figure out why the performance at the system level did not improve. They discovered that there was a subtle bottleneck, and this insight led to an idea for a different improvement that the radio vendor could make: leave the signaling rate the same, but decrease the time it took for the radio to acquire the channel and synchronize the encryption process. This was a far less expensive change to make than doubling the signaling rate, but led to a major improvement in system‐level performance.

My lesson: You should always ask why! And you cannot trust your intuition; the interactions inside of a complex system make it very difficult to intuit the system‐level impact of a change to a component.

We – not the Army – created the loss‐exchange ratio metric. But clearly, this metric would have more value to our design team if the customers and eventual users agreed that this metric actually reflected their coordinate system of value, that is, a better score on this metric would indicate a system that the users would actually find to be better. So, you need to take the effort to socialize your ideas for operational performance metrics with your customers, users, and other stakeholders. I like to achieve what I call the transfer of emotional ownership to the customers. This signifies that in some real sense, the metric has become theirs, rather than mine. For example, after socializing the loss‐exchange ratio metric with the US Army for several months, I learned that the Army modeling organization had started using that metric as the primary output of their own system performance models; our metric was now driving internal Army decision‐making about the future of our system. That is a transfer of emotional ownership! When you achieve that, you are building credibility with your customers, users, and other stakeholders.

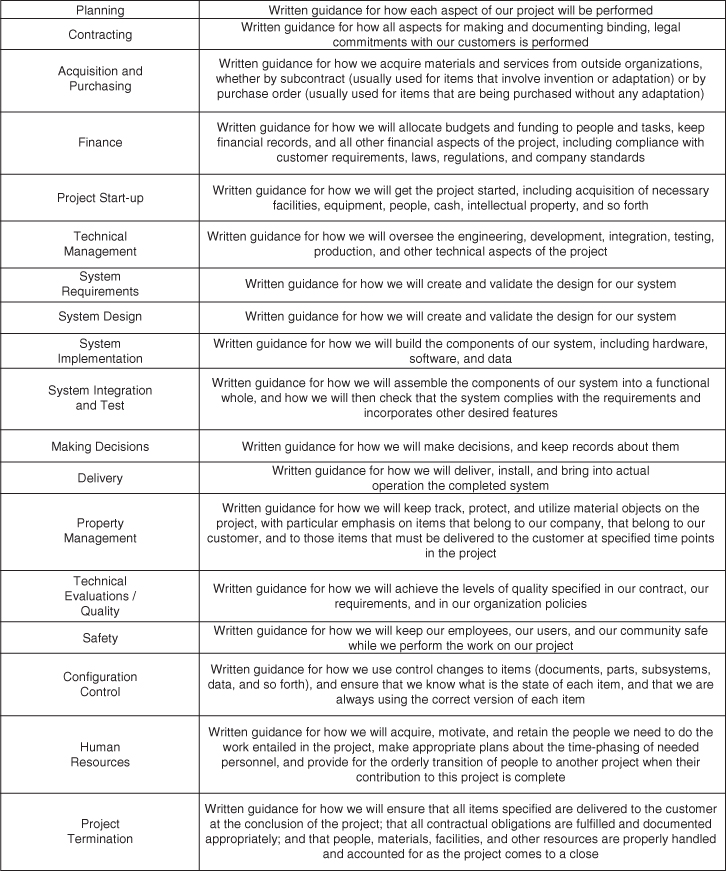

Another aspect of the systems method is that we employ written guidance regarding our methods. We call such guidance processes. We have processes for every aspect of our engineering project: engineering, but also finance, hiring, managing our people, configuration control, contracting, procurement, testing, quality, safety, and many other items (many of which we will cover over the course of this book). Each process will specify in writing what is to be done, when, by whom, what the products will be, what artifacts will be created, how the work is measured and quality ensured, who approves the work and the artifacts, and many other aspects.

Figure 2.9 summarizes the range of processes that we use in an engineering project.

Figure 2.9 Examples of the range of processes that we use on an engineering project.

Why go to the trouble to create and employ such written process? Because engineering a complex system is hard. Dr. Eberhart Rechtin7 said that:

- Success comes from wisdom

- Wisdom comes from experience, and

- Experience comes from mistakes.

When possible, it is best to learn from the mistakes of others, rather than learning only from mistakes that one makes oneself. And that is the role of engineering processes – they allow us to learn from the mistakes of others. They are the “lessons learned” from past activities.

There is a caveat: processes are necessary – they help us be repeatable, and operate at scale. But processes by themselves are not sufficient to ensure a good design! We need both good processes and a good design. Good designs come from good designers, not from good processes.

Some companies have gone through a phase of assuming that good processes are in fact sufficient to ensure good design; the result was a series of expensive project failures.

We discuss how to achieve a good design later in this chapter.

Lastly, the systems method involves a lot of planning. We formulate and write plans about how we are going to perform each of the various aspects of the project, ranging from how we will validate the technical requirements, to how we will acquire the people with all of the specialized skills we need (and at the right time, and in sufficient quantity), to how we will keep our people (and the general public) safe as we perform this work.

2.2 Requirements

One of the first stages in the project life‐cycle is the process of creating and validating the requirements for our system.

Let's start by considering why we think about requirements. It is a fact that people often just do things, without having given a lot of consideration to the detailed nature of the problem, or without having spent a lot of time considering what is the best approach to use. Is that how successful people attack a problem?

I have been told that someone once asked the famous physicist Albert Einstein how he would allocate his time, if he had only an hour in which to solve a problem. His answer?

- 45 minutes to understand the problem

- 5 minutes to formulate a solution

- 10 minutes to implement the solution

(He left off verifying the solution.)

That is, Dr. Einstein would allocate 75% of his time just to the task of understanding the problem, before he started doing any actual work to formulate or create a solution.

On real engineering projects, we generally cannot allocate 75% of the time to this single task, but the point I take away from this (potentially apocryphal) story is that this particular highly successful person8 believed that the path to success entailed allocating a significant portion of time to the question of understanding the problem to be solved.

In systems engineering and in engineering project management, therefore, we try to understand the problem, and then we write down what we have decided.

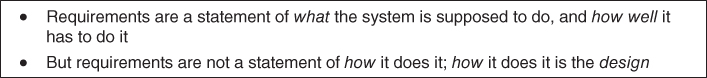

Requirements is the term we use for the formal, written statement of the problem that we are trying to solve by building an engineered system. Requirements are a statement of what the system is supposed to do, and how well it has to do it. But requirements are not a statement of how the system does it; how it does it is the design (which we will discuss next). See Figure 2.10.

Figure 2.10 Definition of the terms requirements and design.

Let us illustrate this definition with an example. What is a car supposed to do? We might say that a project to create a car “shall provide a separate physical entity that is capable of moving under its own power over a paved road from one location to another, under the control of a human being.” That is a statement of what. We might also say that “The car shall be able to reach a speed of at least 50 miles per hour, sustained for 2 hours without needing to stop for refueling or any other purpose.” This is a statement of how well. The question of whether our car uses an internal combustion engine and gasoline, or an electric motor and a battery – or a hamster in a cage – is a question of design, that is, how we accomplish the what and the how well. We choose the design after we have specified the requirements; the what and how well requirements stated above do not tell us what type of engine to use. We might have a how well requirement about limitations on the pollution generated by the operation of our car, but ideally, that requirement does not tell us what type of engine to use either.9 Nor do either of our requirements determine whether the car should have three or four wheels, how large those wheels and tires should be, and other considerations of how; those are design decisions.

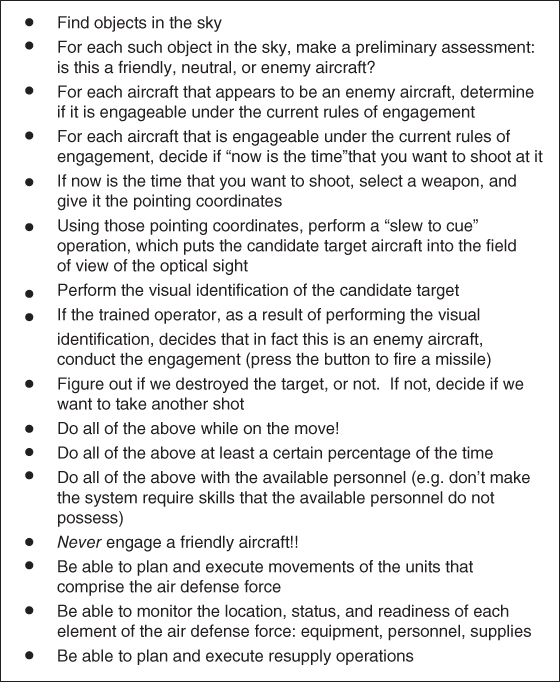

Here's another example. One of the US Army's short‐range air defense weapons – called the Avenger – has a missile operator in a turret on the back of a small truck (see Figure 2.11). There are eight missile tubes located on the top of this turret. A radar located somewhere else sees objects flying in the sky, and a computer makes a preliminary assessment about which ones are friendly aircraft and which ones might be hostile aircraft. Information about both types of aircraft are sent by a data radio to the depicted unit, which receives that information and displays it on a computer screen with a map. The weapons operator may select an aircraft that he thinks he may want to shoot down. But … before he is allowed to shoot, he is required by US Army policy visually to look at the aircraft through a magnifying optic, and make a determination, based on the training that he has received, that in fact this is a hostile aircraft (and not a friendly aircraft). Only after making such a visual identification may he press the button to shoot a missile. All of this, by the way, takes place while the depicted unit is moving, driving either on a road or cross country.

Figure 2.11 Avenger air defense weapon.

But how is the operator to perform this visual identification? When he selects the aircraft that he thinks he may want to shoot down, the turret on the back of the truck turns and elevates so that the magnifying optic is pointing at the correct aircraft, and the turret continues to adjust its position automatically, so as to keep that aircraft in the field of view of the optic. This process is called “slew‐to‐cue,” and there are written requirements defining exactly what the slew‐to‐cue process must accomplish, both what (e.g. “Upon designation of a candidate target aircraft by the operation, the computer shall compute and issue the appropriate commands to the turret's positioning motors so as to adjust the position of the turret in both azimuth and elevation, and to continuously update these positions as both the aircraft and the weapon continue to move”) and how well (e.g. “Upon slew‐to‐cue, 90% of the time, the designated aircraft will be in the narrow field of view of the launcher's optics”). Note that neither of the statements of what and how well says anything about how we will accomplish this; the choice of computer, programming language, algorithms, servo‐motors, rate sensors, and so forth is left to the design activity.

But suppose we were at the beginning of our air defense project. You do not yet have all of the information contained in the previous two paragraphs. What might we have when we started the project? Probably, we have only some statements of objectives and constraints from the customer, such as

So, what do you do next? My approach is to start by gaining an understanding of the customers, especially the eventual users. What is their mission? What are the constraints placed upon them? How do they accomplish their mission today? What do they like about the way they do it today, and what do they think needs improvement? Out of questions like these, you can start to distill a statement of what I call the customer's coordinate system of value (please review Figure 2.8): What do they value? How do they measure it?

Some of this might already be written down (in policy manuals, training manuals, and so forth), but much of it will not be. You have to go and talk to people, listen to people, and equally important, watch them while they work.

In my experience, it is vitally important that this knowledge be acquired by the engineers on the project; it is not sufficient for the project engineers to depend on other people (“domain experts”) for this knowledge. I find that we need to have both the domain knowledge and the engineering skills in a single brain. Only then are we able to create useful new insights.

Let's go back to our air defense example. After you read the manuals, talk to people, go on exercises, and watch air defense personnel train in their mission, and maybe even go to a theater of war and watch them conduct real operations, you can start creating a more detailed list of the key steps, functions, and attributes needed to accomplish the short‐range air defense mission. It might look like Figure 2.12. Are we done? Are those requirements?

Figure 2.12 Key steps, functions, and attributes of accomplishing the short‐range air defense mission.

Unfortunately, no. Let us consider just the very first statement on that list: “Find objects in the sky.” Before we can declare that we have a viable requirement about finding objects in the sky, we have to consider items such as these:

- How small an object do we need to see?

- Are there limits on the speed of the object that we need to be able to see?

- Are there limits on the materials of which the object is made? Can we be satisfied with seeing airplanes made of metal; do we not have to see airplanes made of plastic?

- Do we have to provide 360° azimuthal coverage, or can we be satisfied with looking only in a particular direction?

- Do we need to look at all elevation angles, from the horizon to the zenith, or can we be satisfied with only looking at some lesser set of elevation angles? It might be expensive to build a sensor that can see all the way to the zenith, for example.

- How far out do we need to look (e.g. to what slant range?).

- Is it sufficient if we report only the slant range and azimuth of the objects we find in the sky? Or do we also need to report the elevation angle?

- In what coordinate system do we need to make our reports? In latitude and longitude? In some circular coordinate system centered on our sensor? In some circular coordinate system centered on a weapon?

- Is it sufficient to report the object just once? It is an airplane, and therefore will continue to move; how often do we need to send subsequent reports?

- How accurate do the measurements contained in our reports need to be? How is accuracy specified?

For each functional statement on your list (e.g. “Find objects in the sky”), we need first to figure out all of the detailed questions that the requirements must address, and then we must go and figure out all of the answers to those questions. We then phrase what we learned in a particular fashion: we use the verb “shall” to indicate that a sentence is a mandatory requirement; we use the verbs “is” or “are” to indicate that a sentence is a supplemental description, rather than a mandatory requirement; we place each statement with a “shall” in its own paragraph and with its own paragraph number. When we have done all of that, then we have the actual requirements.

Notice that some of the resulting requirements will contain numbers; these form the how well portion of the requirements.

Requirements are written down in documents called specifications. Therefore, a specification is a document used in acquisition/development, which clearly and accurately describes the essential technical requirements of an entity (system, subsystem, component, etc.).

On most projects, we will have a hierarchy of specifications; that is, we will have a specification that defines the requirements for the system as a whole, but we will also have subordinate specifications that describe in more detail the requirements for individual subsystems and components. On a typical large engineering project, this hierarchy of specifications is likely to be three or four layers deep, moving from (at the top of the hierarchy) defining the requirements for the system as a whole, to defining the requirements for smaller and smaller pieces (Figure 2.13). This hierarchy is usually called the specification tree. We break the requirements into these separate specifications because each subsystem and component is likely to be designed and built by a separate team, and it is convenient, and reduces errors, to have separate requirements specifications for each such team. By separating the subsystem and component requirements from the system‐level requirements, we also gain the benefit of being able to focus separately on the system‐level requirements, where so much of our desired emergent behavior will reside.

Figure 2.13 The specification tree.

Remember what we said about requirements: they define the what and the how well for our system, but not the how.

Specifications contain a little more than just the statement of the requirements; since it would be useless to write a requirement that is for some reason impossible to test, it has become customary to include in the specification an indication of the basic strategy by which each requirement will be tested. We return to this subject in the next chapter.

Specifications usually form a contractually binding commitment; that is, if the system you eventually deliver fails to implement some requirement, your company may be paid less for their services, or there may be some other form of penalty. We will therefore pay a lot of attention to making sure that our design is in fact implementing every single requirement in our specification tree.

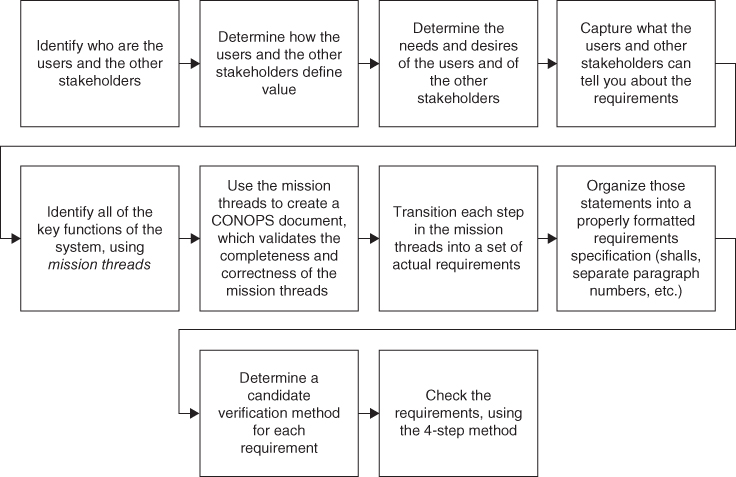

How do we go about creating the requirements? Figure 2.14 defines the steps that I recommend. There are a few new terms in this figure, each of which are explained in the paragraphs that follow.

Figure 2.14 Creating the requirements.

We have already talked about some of these steps, but not all of them:

- Identify who are the users and the other stakeholders. We have already talked about this; we must know all of the users and the other stakeholders for our system. The buying customer is only one of those stakeholders, and it is likely that they are not users of the system at all. As we already discussed, there are likely many other stakeholders besides the users and the buyers.

- Determine how the users and the other stakeholders define value. We already talked about this one too; we must determine how the users and other stakeholders define value, in the context of our system. As noted above, we do this by reading, talking to people, listening, and watching the users perform the mission with their current tools and methods.

- Determine the needs and desires of the users and of the other stakeholders. Having determined how our users and stakeholders determine value, we are ready to determine what they need and want. This involves more reading, talking, listening, and watching. Many texts on requirements tell you only to consider documented needs, as you may not get paid for doing additional things (e.g. desires) that are not in your contract. I strongly disagree; the customers must end up being happy. This always involves figuring out what they want, in addition to what they need. You may well then try to get those items added to the contract (so that you can get paid for them), but you might elect to do some of them anyway, in the interest of establishing good relations and high credibility with the customer. When things go wrong (and things will go wrong at some point), you need the customer “on your side.”

- Capture what the users and other stakeholders can tell you about the requirements. Usually, the buying customer will have some written description of what they want to buy, and of course you start with that. But even if they do not, you must elicit that information from them.

- Identify all of the key functions of the system, using mission threads. Consider Figure 2.10; here, we tried to capture a list of all the major functions of our notional short‐range air defense system. How did we create that list? The method I advocate is to create mission threads, the major operational sequences of our user's mission. What are all of the external stimuli that cause our users to start some sort of a task? What is the sequence of steps (this sequence is what I call a mission thread) that they move through in order to accomplish each task? What are the results of each such sequence of steps? You can read Figure 2.10 and create a list of what were the likely mission threads that I had in mind when I created the figure.

- Use the mission threads to create a concept of operations (CONOPS) document, which not only describes the mission threads, the inputs, and the outputs, but also summarizes how often each mission thread is likely to be exercised during actual operations, identifies any timing constraints (e.g., a particular thread might have to be accomplished in fewer than 10 seconds), identifies other constraints (e.g. the requirement to perform the visual identification step) imposed by outside authorities, and so forth. You also write down what you have learned about why each of these items is done in this particular fashion. The CONOPS document allows you to validate the completeness and correctness of the mission threads.

- Transition each step in the mission threads into a set of actual requirements. Recall that we said items like those in Figure 2.10 do not constitute actual requirements. We discussed how we would turn just one statement from the figure (“find objects in the sky”) into actual requirements. Now, we must complete a similar process for every step along every one of our mission threads.

- Organize those statements into a properly formatted requirements specification. Over time, we have developed standardized formats, lexical conventions, and so forth for requirement specifications. These are often documented in government or company policy manuals, and include things such as the use of particular verbs for distinguishing actual mandatory requirements from explanatory materials (e.g. shall versus will), the use of a separate paragraph number for each individual requirement, and other conventions.

- Determine a candidate verification method for each requirement. Recall that earlier, I stated that in our requirements specification we also include a preliminary idea for how we will verify each requirement. It is possible to write requirements in a fashion that is difficult or impossible to verify, and we strive to avoid that (if for no other reason than we will not get paid until we have verified every single requirement!). In order to avoid such a situation, for each requirement we identify a candidate method (usually the candidate methods are inspection, analysis, demonstration, simulation, and assessment by operation) for verification, and include this verification method right in the specification.

- Check the requirements. We cannot consider something as important as a requirements specification complete until we have performed some type of check that it is complete and correct. I use a four‐step process that I learned from Jim Hines and Scott Jackson in 200910.

- Editorial check (format, grammar, spelling, punctuation, consistent use of terminology).

- Soundness check (no missing items or “to be determined,” quantitative values where required, positive statements [e.g. shall, rather than shall not], no statements of design [e.g. no statements of how, only statements of what and how well], no statements constraining external systems, validation of all assumptions).

- Substance check (complete, consistent, every requirement is necessary, appropriate level of constraints).

- Risk check (technical feasibility, consistent with budget and schedule, identify things that could go wrong, estimate likelihood and consequence of each, assess realism of available approaches to mitigate each risk should it actually occur).

Remember that we said requirements usually become a binding part of the contract. If you let the requirements say more than you intend, you may well have to foot the bill for building that extra capability!

Scott and Jim also have a cute acronym intended to remind us about the desired attributes of requirements, which I have modified slightly so that it now reads:

A requirement must be SMART

(Specific, Measureable, Achievable, Realistic, Testable)

These five attributes are essential, but there are other essential attributes of requirements too. For example, we have stated several times that the requirements are about what and how well, but not about how, because how is the design. The requirements should not unnecessarily constrain the design.

We also noted above that the requirements must be verifiable; and there are other important attributes of requirements too. But I have decided not to tamper with Jim and Scott's SMART acronym!

2.3 Design

2.3.1 The Design and its Process

The next phase in the development process of an engineering project is the design; we aspire to create a design that satisfies the requirements, but also one that is feasible and affordable to build.

We get a lot of “help” with the requirements; after all, our customers and our users understand well what they want the new system to do, and such what constitutes a major portion of the requirements. It is my experience that most systems eventually develop pretty good requirements, although it may take them longer to do so than they originally planned, and cost more money to do so than they planned.

But the design is a completely different matter; many systems simply have bad designs. Why might this be? For one thing, the customer and the users are generally not qualified to provide expert help with the design, in contrast to the way that they are qualified to provide expert help with the requirements.

How do I know that many systems actually have bad designs? More than once I have seen cases of two completed systems that do approximately the same thing, where one runs 100 times faster than the other. Similarly, I have seen cases of two completed systems that do approximately the same thing, where one is 1000 time more reliable than the other. I have then had the opportunity to examine these systems so as to find the root cause for the slower and less reliable performance, and therefore I can state with confidence that this gigantic gap in performance derived from specific (undesirable) features of their designs.

This finding has many interesting implications. First, having a 100× or 1000× range of outcomes for a critical parameter from an engineering project is shocking; mature disciplines do not have such large ranges of outcomes. Consider mid‐sized family sedans offered for sale that meet US emissions control requirements; the variation from best to worst in, for example, gas mileage is no more than 25%, not 100× (10 000%) or 1000× (100 000%). Something is going radically wrong inside the designs of the systems that exhibit such poor performance on such an important metric.

Second, a lot of engineering projects turn out to be problematic, in the sense that they end up far over budget, far behind their delivery schedule, and a shocking number (some studies say more than half of all engineering projects) are canceled before they complete, because of customer and user dissatisfaction with progress. The people who study these problem projects nearly all assign the blame to poor requirements. But I spent many years of my career as a designated fix‐it person for engineering projects that were in trouble, and I will tell you that they all had pretty good requirements. What they all lacked was a sensible, feasible design.

So, in light of the above, I have come to view the design as the critical portion of the engineering project development cycle. It is the stage that will likely make or break your project.

What is a design? In the previous section, we defined the requirements as the statements that tell us what the system is supposed to do, and how well it is supposed to do it; in contrast, the design tells us how the requirements are going to be accomplished. Consider a house: the requirements might tell us that the house needs to have four bedrooms and three bathrooms. The design tells us how we will satisfy those requirements: whether we will use wood or metal for the frame, whether we will use a raised structure or a concrete slab for the foundation, whether we will use casement or sliding windows, whether we will use wooden shakes or concrete tiles for the roofing materials, and so forth. We can build a house that meets the requirements – four bedrooms and three bathrooms – using either wood or metal as the framing material; both probably allow us to satisfy the requirements. But there may be other reasons for choosing one design approach or the other, reasons that have little to do with the requirements (e.g. “four bedrooms”). For example, if our house is going to be in a location with a really severe termite problem, we might not want to select wood for the framing material. But if wood is satisfactory, then using wood is probably a lot less expensive than using metal for the frame. A wood‐framed house can probably be built in less time than a metal‐framed one too. These are examples of alternative designs.

The process that we use to create a design centers around a method that we call the trade study. The trade study process helps us create a set of candidate alternative designs, helps us create a way to measure the “goodness” of each alternative, and finally allows us to select a preferred alternative, while also creating the data and the artifacts that will allow us to explain to our peers and stakeholders why we believe that it is the best possible alternative.

In the design process, there will seldom be a clear winner, in the sense that a particular alternative design is best in every category. That is why we call the process a trade; we make judgments (backed up by data and analyses) about which combination of positive and negative features achieves the best overall solution for our system. We strive not for perfection, but for a reasonable balance.

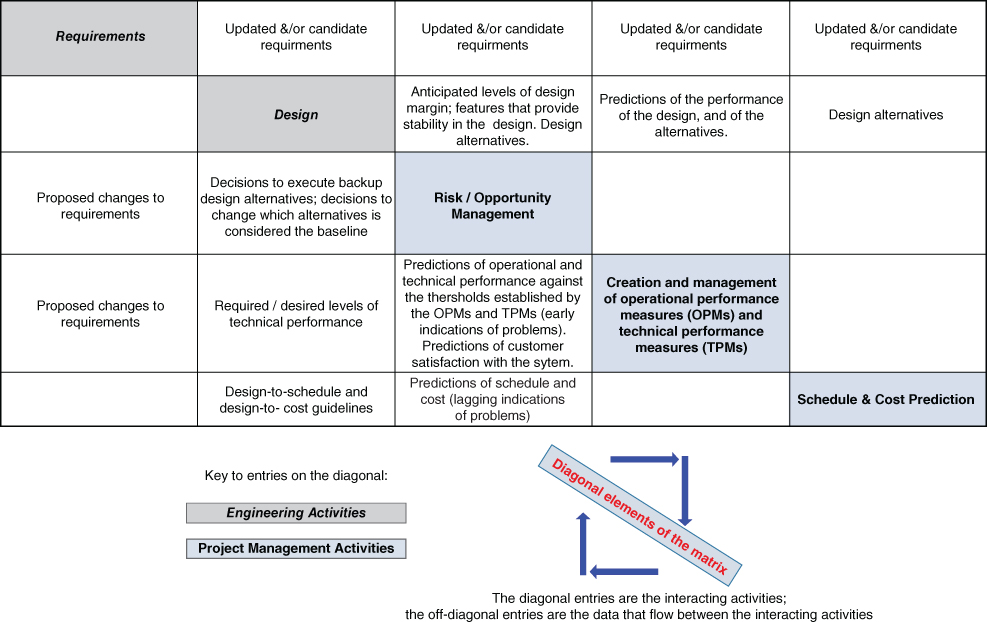

In this book, we are considering engineering projects, and therefore technology and technological concepts are central to the success of those projects. We therefore use engineering methods to guide project management decisions, and that in turn implies that we use data to help make decisions. The data that we use to measure the “goodness” of our candidate designs takes the form of two types of metrics: one that I call operational performance measures (OPMs), and one that I call technical performance measures (TPMs).

Why two types of metrics? Our degrees of freedom in creating alternative designs lie mostly in alternative technical concepts and approaches, and these are best measured using the technical performance measures. But we must also make sure that improved technical performance actually results in improved operational performance, as measured from the coordinate system of value relevant to the users, customers, and other stakeholders. We therefore also need to use the operational performance measures.

At first blush, one might believe that improved technical performance always leads to improved operational performance. That is simply not true. Remember the example a few pages back about the radio vendor who offered me a radio that sent and received data twice as fast as his current radio model? When we plugged twice the data rate into our system‐level model, the operational performance measures did not improve at all! This, then, is a real‐life example where dramatic improvement in a key technical performance measure did not result in any improvement in an operational performance measure.

In my experience, this is in fact a frequent occurrence; as a result, we must separately measure both technical performance measures and operational performance measures. We cannot abandon technical performance measures, as they are at the heart of our technical analyses that allow us to determine whether or not our design will in fact work. But we must somehow relate the effect of the technical performance measures to the operational performance measures. In the radio example cited above, we did that through a system‐level model. In other instances, we have done this through actual benchmark measurements. But however you choose to do it, it must be done.

Furthermore we must convince our stakeholders – most of whom are not engineers – that our predictions about operational performance measures are credible. So, we must be able to explain the connection between the technical performance measures and the operational performance measures in a credible and transparent fashion, even to our non‐technical stakeholders. This might be done by explaining the logic in the system‐level model, and then showing that we have calibrated that model by using it to predict the performance for a set of situations for which we can go out and make actual real‐world measurements. If the stakeholders understand and agree with the logic inside our system‐level model, and see that in a set of real‐world circumstances the system‐level model makes accurate predictions, then they may accept that when we use the model to make predictions about circumstances for which we cannot yet make measurements (e.g. how the new system will perform), the predictions may be believed.

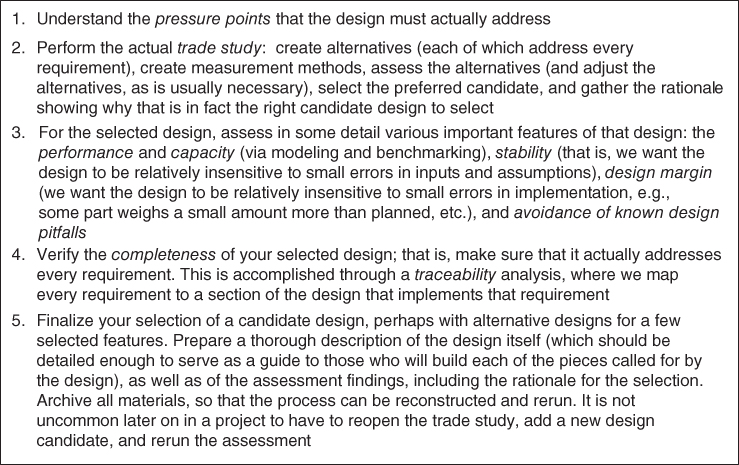

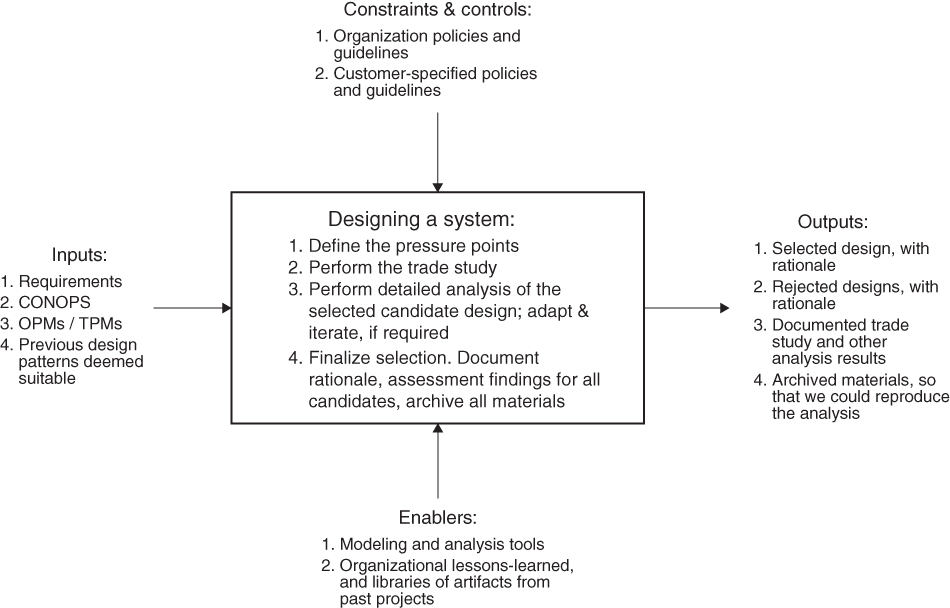

I use the method depicted in Figure 2.15 in order to create a design. This figure introduces a few new terms, which will be described in the following text.

Figure 2.15 Steps to create a design.

Let's discuss each of these.

By a pressure point that the design must actually address, I mean that we must use our operational knowledge of the users and the mission to determine what are the real design drivers for the system. Do not depend on your customers to do this for you! They are not engineers and designers, and while they understand their mission well, they often have an imperfect understanding of how technology interacts with their mission.

Think of the short‐range air defense system that I described earlier. If the Army were to notice that only about one‐third of the missiles they fire at airplanes in the sky actually hit the target airplane, they might well conclude that they need a better missile. While this may sound reasonable, that conclusion may be completely incorrect! When I was actually designing such a system many years ago, we discovered that the Army gunners were actually taking very few shots; they were shooting at a target only about 10% of the time that they could have. Most of the time, the very short nature of the shot opportunity (a high‐speed jet flying very close to the ground passes you by in just a few seconds) meant that they were not even shooting 90% of the time. Instead of building a better missile, we decided that the pressure point in the design was to help the gunner get ready, and to cue him when a shot opportunity was coming up soon, so that he would not miss so many shot opportunities. A few years later, after we had finished an automated system designed to help gunners achieve more shot opportunities, not only were they taking nearly 10 times as many shots per day, but most of those shots were hitting the target airplane. It turned out that by using automation in the system to help the gunner find and take his shot opportunities, we were not only creating more shot opportunities, but also creating better shot opportunities, ones where the target airplane was closer, or otherwise situated so as to make it easier for the missile actually to hit the target airplane. They didn't need a better missile at all!

Think about that: not only did we create a revolutionary improvement in the performance of the system (almost a 10× improvement!), but we did it without making any changes at all to the item that the customers and users initially might have thought was the problem. We had to discover what was the real pressure point in the design. In this case, that pressure point was improving the number of shot opportunities that complied with the rules of engagement (and also improving the quality of those shot opportunities), rather than improving the probability of kill once a missile was launched. This was despite the fact that the major observable of poor performance was that most of the time, the missile missed the intended target – which made it seem like the problem was with the missile itself.

In my experience, this sort of focusing on the wrong aspect of the problem takes place quite frequently. Therefore, my design methodology always starts with the assessment and analysis needed to determine where the actual pressure point is in the design of our new system. There may well be more than one, of course.

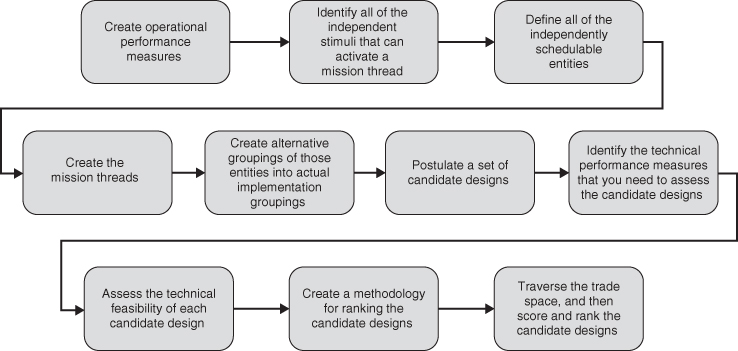

Once we know the pressure points, we can turn to the trade study. I use the following steps to perform a trade study (Figure 2.16):

- Use the knowledge and insight we acquired about the customer in order to create operational performance measures, and then discuss those with the customer. Of course, we actually started this process while we were creating the requirements.

- Use the operational sequences to firm up a list of all the independent stimuli that can activate a mission thread in your system, and also define what is produced as the output of each mission thread. We started this when we prepared the concept of operations document as part of the requirements, but now we need to do it in more detail.

- Use these items – the lists of stimuli and the partitioning of steps that could be in parallel and steps that must be in sequence – to define all of the independently schedulable entities within your system. These are the system activities that can be started in response any sort of asynchronous stimuli, and can therefore operate at the same time as other system activities.

- Use the knowledge and insight we acquired about the mission, together with the requirements, to create operational sequences that describe how they perform this mission, the mission threads. This is a mechanism that helps you to ensure that every requirement is addressed by the design. We started this while we were creating the requirements too. Now we have to do it at a finer level of detail, showing which steps on the mission threads can be performed in parallel, and which must be performed sequentially.

- Use the list of mission threads and the list of independently schedulable entities to create alternative groupings of those entities into actual implementation groupings (e.g. the items that will actually comprise the separately buildable elements of the system).