2

Vexations and Missed Opportunities in Group Work

Four Case Studies

We’ll begin this chapter with four short case studies that have come out of my recent research. Three of them are about companies; the fourth is about a government entity. Two of them concern large organizations; the other two deal with small ones. Two are set within the high-tech sector; two are not. And in three cases a problem has been identified, while in the fourth someone senses a new opportunity.

In short, these four stories are dissimilar. In addition to covering different types of organizations, they also present a wide range of issues: capturing and sharing knowledge, building corporate culture, training new employees in a rapidly growing company, putting people in touch with each other across a large and fragmented enterprise, tapping into the “wisdom of crowds,” and giving people easier, faster, and better access to the information they need to do their jobs well.

So what, if anything, do these stories have in common? They share three characteristics. First, they all focus on group-level work and interactions among knowledge workers. Second, they’re all left unresolved in this chapter. I apologize in advance for leaving you hanging in this way and promise to return to each of these four stories later in the book.

The third common element in these case studies, as you’ve probably guessed, is information technology. As we’ll see later, technology is used to resolve each of the four situations presented in this chapter. The “right” technology may not be obvious in each case—in fact, I hope it’s not—but each organization effectively addresses its challenges and opportunities by deploying and using IT.

What’s more, the technologies deployed in all cases are novel ones. Most of them weren’t available even ten years ago. The story of how they came to be, and why they are ideally suited for business needs like those presented here, is the story of this book. An important aspect of this story is understanding why previous generations of technology designed to facilitate collaboration within organizations were not well suited for the situations described here. I’ll discuss that issue after presenting the case studies.

I’ve arranged these four stories in a deliberate order. The first concerns a relatively small group of people who work together closely. The second focuses on a larger group of people in many locations who all work at the same company, but don’t know each other well. The third is about an even larger population of knowledge workers spread across several organizations who should be sharing information and expertise, but are not doing so. And the fourth and final case study encompasses all the employees of one big company, most of whom will never need to work together.

In each successive example, the strength of the professional relationships between the people involved decreases, moving from close colleagues in the first case to professional strangers in the fourth. I chose this progression for two reasons. First, it illustrates the impressive flexibility and applicability of Enterprise 2.0 tools, which we’ll see are useful not just in one or two of these situations, but in every one of them. Second, this sequence reveals a useful framework for understanding the benefits of Enterprise 2.0 that is based on a great deal of existing research and theory and can be applied to almost any situation. This framework uses the concept of tie strength among knowledge workers, that is, the closeness and depth of their professional relationships. As we’ll see in chapter 3, this concept of tie strength reveals the power of Enterprise 2.0, as well as its breadth. The new tools of collaboration and interaction provide benefits to close colleagues, professional strangers, and every level of tie strength in between. Before describing these benefits, though, let’s look at our four real-world problems, challenges, and missed opportunities.

VistaPrint

By 2008, VistaPrint was becoming a victim of its own success. The company, which was founded in 1995 within the already-crowded direct marketing industry for printed products, initially differentiated itself with a compelling offer to consumers: it gave away business cards free to anyone who wanted them. Visitors to www.vistaprint.com could obtain 250 business cards for the cost of shipping only. Even though these cards were free, they did not look cheap or generic. People could customize them by selecting from a range of templates and logos, and they were printed on high-quality paper. The free cards helped to spread the word about the company, with the tagline “Business cards are free at www.vistaprint.com” printed in small type on the back of each one. By 2008 the company had given away over three billion cards.

VistaPrint used technology extensively to keep costs low while giving away large amounts of free merchandise. Company engineers modified the presses used to print business cards and other materials to make them more flexible. They also wrote programs that “looked at” the stream of incoming orders and determined which ones to print together in order to minimize waste. This combination of hardware and software allowed VistaPrint to receive a large number of small orders online, combine them on the fly into optimal configurations, and print them as cheaply as possible.
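In general terms, deciding which small orders to print together so as to minimize waste is a bin-packing problem. The sketch below is purely illustrative and rests on a simplified model the text does not describe: each order is assumed to need some number of identical "slots" on a press sheet, and each sheet holds a fixed number of slots (the hypothetical `SHEET_CAPACITY`). It uses the standard first-fit decreasing heuristic and is not VistaPrint's actual software, whose details are not given here.

```python
from dataclasses import dataclass, field

# Hypothetical: number of card "slots" that fit on one press sheet.
SHEET_CAPACITY = 24


@dataclass
class Sheet:
    orders: list[str] = field(default_factory=list)
    used: int = 0


def gang_orders(orders: dict[str, int], capacity: int = SHEET_CAPACITY) -> list[Sheet]:
    """Assign orders (order_id -> slots needed) to sheets using first-fit decreasing."""
    sheets: list[Sheet] = []
    # Place the largest orders first, since they are the hardest to fit later.
    for order_id, slots in sorted(orders.items(), key=lambda kv: kv[1], reverse=True):
        if slots > capacity:
            raise ValueError(f"order {order_id} needs {slots} slots; a sheet holds {capacity}")
        # Put the order on the first existing sheet with enough free slots...
        for sheet in sheets:
            if sheet.used + slots <= capacity:
                sheet.orders.append(order_id)
                sheet.used += slots
                break
        else:
            # ...or start a new sheet if none has room.
            sheets.append(Sheet([order_id], slots))
    return sheets


def waste(sheets: list[Sheet], capacity: int = SHEET_CAPACITY) -> int:
    """Total unused slots across all sheets."""
    return sum(capacity - s.used for s in sheets)


if __name__ == "__main__":
    incoming = {"A": 10, "B": 14, "C": 8, "D": 12, "E": 4}
    plan = gang_orders(incoming)
    for i, sheet in enumerate(plan, start=1):
        print(f"sheet {i}: {sheet.orders} ({sheet.used}/{SHEET_CAPACITY} slots)")
    print("unused slots:", waste(plan))
```

First-fit decreasing is fast but not guaranteed to find the fewest possible sheets, and a real print operation would also have to weigh paper stock, colors, and shipping deadlines, all of which this sketch ignores.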

If customers wanted only free merchandise, however, VistaPrint was not going to stay in business for very long. The company became proficient at the practice of up-selling, that is, persuading customers to pay a small amount for a product they perceived to be superior. For business cards, this might mean higher-quality paper, more colors, or greater freedom to choose a logo or arrange the card’s elements. VistaPrint learned what customers were willing to pay for these options and priced its products accordingly. Experience showed that even customers who originally intended to take advantage of free cards might wind up spending $10 or $20 in order to get “better” ones. Because of VistaPrint’s highly efficient operations, such small orders could be produced profitably.

If customers were satisfied with their initial orders, they often returned to the Web site and purchased additional products. Over time VistaPrint expanded into a wide range of additional paper products—presentation folders, letterhead, notepads, postcards, calendars, sticky notes, brochures, and return address labels. The company also began to carry products such as pens, hats, T-shirts, car door magnets, lawn signs, and window decals. After initially targeting individuals, VistaPrint eventually came to market its products and services to “microbusinesses” employing fewer than ten people, offering them design, copywriting, and mailing services.

VistaPrint grew rapidly, and without making any acquisitions. By 2008 the company had revenues of $400 million and employed fourteen hundred people in six locations in North America, the Caribbean, and Europe. The Lexington, Massachusetts, office housed most of the executive team, along with the marketing, human resources, and finance functions and the teams responsible for IT, technology operations, and capabilities development.

The company had to hire many engineers and technology support personnel in Lexington to support its rapid growth. In the summer of 2007, for example, VistaPrint recruited more than twenty new software engineers, most of them recent college graduates. Since the department had employed only about sixty engineers up to this time, managers were concerned about how to integrate the large number of new hires smoothly without burdening the existing workforce too much, and without jeopardizing anything that the company had already built. VistaPrint had a large and complex code base (the interlinked applications that supported the company’s operations), and it would be easy for a new hire to inadvertently harm or destabilize it by not following proper procedures. As Dan Barrett, a senior software engineering manager, joked, “We need to train our new people quickly so they don’t break our software.” 1

Ideally, VistaPrint would have an easily consultable and comprehensive reference work for new employees, but such a resource would be time-consuming to develop. It could also quickly become outdated, since the company’s technology changed so rapidly. VistaPrint had a shared hard drive on which people saved documentation and other reference work, but most people felt that it was disorganized and hard to search. As at most companies, new employees at VistaPrint learned about the organization and their jobs by observing their colleagues and asking lots of questions.

Barrett, however, wondered if it were possible to do better in this area. He was concerned about the challenges VistaPrint faced not only when a new employee entered the company but also when an experienced worker left it. Departing workers typically left little behind, especially in a form that could be easily accessed or searched by others. This absence of records struck him as a shame. Barrett also wondered how the company could do a better job of sharing its accumulated knowledge and the good ideas that were developed at each location. He felt that many of the company’s innovations and insights were not shared widely enough; people at the Netherlands printing facility, for example, often faced problems that had already been confronted and resolved at the sister site in Canada. Engineers at the two locations talked to one another and sent e-mails frequently, of course, but Barrett and others had the impression that people at VistaPrint were still doing a fair amount of redundant work and “reinventing the wheel.”

For VistaPrint, a relatively small company in the printing industry, managing growth and training new employees had become major challenges. How could the company capture its own engineering knowledge and deliver it to all new hires so that they could become productive as quickly as possible? And how could VistaPrint possibly ensure that this body of knowledge would remain current even as the company and its environment continued to change?

Serena Software

In 2007, Serena Software CEO Jeremy Burton felt that his company needed to make two fundamental shifts. First, it had to make a major addition to its product family. Up to that time, Serena had been an enterprise software company that helped its customers manage all of their enterprise software. Large organizations typically have many different pieces of enterprise software from different vendors; each of these applications is configured, and most are modified. In addition, software vendors release bug fixes, upgrades, and new versions over time. As a result, large organizations maintain highly complex software environments with many moving parts.

Serena’s products, which fell into the category of Application Lifecycle Management software, helped its customers keep track of all these moving parts and the linkages and dependencies among them. The company had been founded more than twenty-five years earlier during the mainframe era of corporate computing and still offered mainframe products in 2007. It also developed and sold applications for software change and configuration management and project and portfolio management. Serena supplied over ninety-five of the Fortune 100 companies.

Burton and other executives wanted the company to enter the nascent market for software that helped companies build “mashups,” that is, combinations of two or more existing enterprise systems and their data. Mashups were becoming very popular on the Internet as companies like Google “opened up” their applications, allowing virtually anyone to use and extend popular programs without requiring up-front permission. People did not need deep programming skills for this task; they could mash up Google Maps, for example, by combining its mapping capabilities with other data. One of the most popular early mashups on the Web was Chicago Crime, a mashup of Google Maps with crime data published by the Chicago Police Department; Chicago Crime allowed residents to look at crime patterns in their neighborhoods. 2

In one way mashups were a logical extension of Serena’s enterprise software capabilities, but in another sense they constituted a radical departure from the company’s existing products. Mashups were a stereotypical Web 2.0 technology: they empowered individuals, heightened their ability to act autonomously, and increased information openness, transparency, and sharing. Corporate mashups, in essence, were about letting go of centralized control over enterprise IT. Serena’s other offerings and its entire prior history, in contrast, emphasized maintaining control.

The company’s employees had not grown up in the mashup era; many of them, in fact, had acquired their technical skills and started their careers well before the Web was born. The average age of Serena employees was approximately forty-five, and many were significantly older. These people had deep technical skills, but Burton wondered whether they were immersed enough in Web 2.0 tools and philosophies to develop great software for corporate mashups.

He also worried that whether or not they knew Web 2.0 well enough, they might not know one another well enough. Unlike VistaPrint, Serena had not just grown organically; it had made multiple acquisitions over the years, many of them in other countries. By 2007 the company had over eight hundred employees in eighteen countries. More than 35 percent of these people worked from home, giving them little if any opportunity to interact with their colleagues face to face.

Many at Serena were concerned that if the company continued on its current path, it could lose all sense of community and become little more than a collection of people all over the world who worked on projects together in virtual teams and earned paychecks. As vice president Kyle Arteaga explained it, “There was little sense of a Serena community. People often worked together for more than a decade, yet knew nothing about each other. There was no easy way to learn more about your colleagues because we had no shared space. We had all these home workers, or employees in satellite offices like Melbourne who we only knew by name.” 3

The second fundamental shift Burton was contemplating was a deliberate attempt to increase Serena’s sense of community. But how was he to do so with such a patched-together and globally distributed workforce?

The U.S. Intelligence Community

In the wake of the September 11, 2001, terrorist attacks on the United States, several groups investigated the performance of the country’s intelligence agencies, and did not like much of what they saw. Their conclusions can be summarized using two phrases that became popular during the investigations: even though the system was blinking red before 9/11, no one could connect the dots.

In an interview with the National Commission on Terrorist Attacks Upon the United States, better known as the 9/11 Commission, CIA director George Tenet maintained that “the system was blinking red” in the months before the attacks. In other words, there were ample warnings, delivered at the highest levels of government during the summer of 2001, that Osama bin Laden and his Al Qaeda operatives were planning large-scale attacks, perhaps within the United States. In some cases these warnings were frighteningly accurate: CIA analysts, for example, prepared a section titled “Bin Laden Determined to Strike in US” for the President’s Daily Brief of August 6, 2001. 4

These warnings were accurate and urgent in part because diverse actors scattered throughout the sixteen agencies that made up the U.S. intelligence community (IC) were convinced of the grave threat posed by Al Qaeda and dogged in their pursuit of this enemy. At the CIA, for example, a group called Alec Station, headed by Michael Scheuer, was dedicated to neutralizing bin Laden. At the FBI, counterterrorism chief John O’Neill and his team had gained firsthand experience of Islamic terrorists during investigations of attacks in Saudi Arabia and Yemen, and were determined not to let them strike in the United States again (in 1993 the World Trade Center had been attacked with a truck bomb). Richard Clarke, the chief counterterrorism adviser on the U.S. National Security Council, was the highest-level champion of these efforts, devoting great energy to assessing and communicating the danger posed by bin Laden’s group. 5

In the months preceding 9/11, troubling signs were apparent to intelligence agents and analysts throughout the world. In the United States, agent Ken Williams of the FBI’s Phoenix office wrote a memo in July 2001 to the bureau’s counterterrorism division highlighting an “effort by Osama bin Laden to send students to the US to attend civil aviation universities and colleges” and proposing a nationwide program of monitoring flight schools. 6 On July 5th Clarke assembled a meeting with representatives from many agencies (including the FBI, the Secret Service, the Coast Guard, and the Federal Aviation Administration) and told them, “Something really spectacular is going to happen here, and it’s going to happen soon.” 7 Zacarias Moussaoui was taken into custody by agents from the FBI’s Minneapolis office in August 2001 and immediately recognized as a terrorist threat. The Minneapolis office requested a warrant to search Moussaoui’s laptop and personal effects, citing the crime of “Destruction of aircraft or aircraft facilities.” 8

No one, however, was able to “connect the dots” between all these pieces of evidence and perceive the nature and timing of the coming attacks clearly enough to prevent them. Investigators concluded that a major reason for this failure was the lack of effective information sharing both within and across intelligence agencies. Information flows were often “stovepiped,” that is, reports, cables, and other intelligence products were sent up and down narrow channels within an agency, usually following formal chains of command. If someone within a stovepipe decided that no more analysis or action was appropriate, the issue and the information associated with it typically went no further. There were few natural or easy ways for someone to take information, analysis, conclusions, or concerns outside the stovepipe and share them more broadly within the community.

These documented failures to act on and share information before 9/11 proved devastating. The Moussaoui search warrant request, for example, was not granted until after the attacks had taken place. Throughout 2001 agents at both the CIA and FBI were interested in the activities and whereabouts of several suspected terrorists, including Khalid al-Mihdhar and Nawaf al-Hazmi. CIA investigations revealed that Mihdhar held a U.S. visa, and that Hazmi had traveled to the United States in January 2000. This information was not widely disseminated within the IC at the time it was collected, however, nor was it shared with FBI agents at a June 2001 meeting between representatives of the two agencies held as part of the investigation into the October 2000 bombing of the USS Cole in Yemen. At the time of the meeting, Mihdhar was not on the State Department’s TIPOFF watch list, which was intended to prevent terrorists from entering the United States. He arrived in the United States in July 2001, and both he and Hazmi participated in the September 11 attacks. 9

The volume and gravity of these information-sharing failures led some to conclude that the 9/11 attacks could have been prevented. Because of what the 9/11 Commission called “good instinct” among a small group of collaborators across the FBI and CIA, both Mihdhar and Hazmi were added to the TIPOFF list on August 24. However, efforts then initiated to find them within the United States were not successful. The Commission’s report concluded,

We believe that if more resources had been applied and a significantly different approach taken, Mihdhar and Hazmi might have been found …

Both Hazmi and Mihdhar could have been held for immigration violations or as material witnesses in the Cole bombing case. Investigation or interrogation of them, and investigation of their travel and financial activities, could have yielded evidence of connections to other participants in the 9/11 plot. The simple fact of their detention could have derailed the plan. In any case, the opportunity did not arise. 10

The 9/11 Commission made a number of recommendations for improving the IC. These included, predictably, better information sharing:

We have already stressed the importance of intelligence analysis that can draw on all relevant sources of information. The biggest impediment to all-source analysis—to a greater likelihood of connecting the dots—is the human or systemic resistance to sharing information …

In the 9/11 story, for example, we sometimes see examples of information that could be accessed—like the undistributed NSA information that would have helped identify Nawaf al Hazmi in January 2000. But someone had to ask for it. In that case, no one did. Or … the information is distributed, but in a compartmented channel. Or the information is available, and someone does ask, but it cannot be shared …

We propose that information be shared horizontally, across new networks that transcend individual agencies …

The current system is structured on an old mainframe, or hub-and-spoke, concept. In this older approach, each agency has its own database. Agency users send information to the database and then can retrieve it from the database.

A decentralized network model, the concept behind much of the information revolution, shares data horizontally too …

No one agency can do it alone. Well-meaning agency officials are under tremendous pressure to update their systems. Alone, they may only be able to modernize the stovepipes, not replace them. 11

The 9/11 Commission also recommended the creation of a director of national intelligence with some level of authority over all sixteen federal agencies. This director, it was hoped, could encourage better coordination and lessen the degree of stovepiping within the community. According to the commission’s final report, “[With] a new National Intelligence Director empowered to set common standards for information use throughout the community, and a secretary of homeland security who helps extend the system to public agencies and relevant private-sector databases, a government-wide initiative can succeed.” 12

The Intelligence Reform and Terrorism Prevention Act of 2004 created the office of the Director of National Intelligence (DNI). In an official statement to the U.S. Senate in 2007, J. Michael McConnell, the second director, discussed the need for a deep shift in philosophy and policy within the IC:

“Our success in preventing future attacks depends upon our ability to gather, analyze, and share information and intelligence regarding those who would do us more harm … Most important, the long-standing policy of only allowing officials access to intelligence on a ‘need to know’ basis should be abandoned for a mindset guided by a ‘responsibility to provide’ intelligence to policymakers, warfighters, and analysts, while still ensuring the protection of sources and methods.” 13

Not all observers, however, felt that the DNI would be able to accomplish much, or to effect deep change in the agencies’ strong and entrenched cultures. The federal commission established to investigate the IC’s poor performance in determining whether Iraq possessed weapons of mass destruction was particularly blunt. Its final report stated that “commission after commission has identified some of the same fundamental failings we see in the Intelligence Community, usually to little effect. The Intelligence Community is a closed world, and many insiders admitted to us that it has an almost perfect record of resisting external recommendations” (emphasis in original). 14 The journalist Fred Kaplan, writing in the online magazine Slate, was also pessimistic: “There will be a director of national intelligence. But the post will likely be a figurehead, at best someone like the chairman of the Council of Economic Advisers, at worst a thin new layer of bureaucracy …” 15

Many wondered how a “thin new layer of bureaucracy” could possibly address the IC’s inability to connect the dots, or start to change its deeply entrenched “need to know” culture. Could U.S. intelligence analysts ever learn to share what they knew and collaborate more effectively? What might encourage them to do so?

The IC decided to look within itself for answers to these questions. The DNI took over from the director of central intelligence (the head of the CIA) a novel program, called the Galileo Awards, intended to solicit innovative solutions to challenges facing the IC from community members themselves. The Galileo Awards’ first call for papers went out in 2004.

Google

In June 2004, while on vacation from his job at Google, Bo Cowgill was seized by an idea that would not let go of him. He had gone to work for Google the previous year after finishing his bachelor’s degree in public policy at Stanford. He liked the company, but hoped to move on from his entry-level customer support job.

One of the books he had taken with him on vacation was James Surowiecki’s The Wisdom of Crowds. 16 The book’s theme was that it was often possible to harness the “collective intelligence” of a group of people and thus yield better or more accurate information than any individual within the group possessed. Many readers found this a powerful and novel message. They were accustomed to thinking that groups usually yielded the “lowest common denominator” of their members’ contributions or, even worse, that groups could turn into mobs that actually behaved less intelligently than any of their members.

The Wisdom of Crowds provided many examples of collective intelligence, including one that was familiar to Cowgill. He had learned as an undergraduate about the Iowa Electronic Markets (IEM), an ongoing experiment begun in 1988 at the University of Iowa that sought to test whether the same principles and tools that supported stock markets could be used to predict the results of political elections. The IEM and other similar environments came to be known as prediction markets.

Just like public stock markets, prediction markets are composed of securities, each of which has a price. People use the market to trade with one another by buying and selling these securities. Because traders have differing beliefs about what the securities are worth, and because events occur over time that alter these beliefs, the prices of securities vary over time in all markets.

In a stock market like the New York Stock Exchange, the securities being traded are shares in companies, whose prices reflect beliefs about the value of the companies’ future profits. In a prediction market, in contrast, the securities being traded are tied to future events such as a U.S. presidential election. In that case the market can be designed so that each security is linked to a particular candidate, and its price equals the percentage of the vote that the market’s traders collectively predict the candidate will win.
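To make the pricing idea concrete, here is a minimal sketch of such a vote-share market. It assumes each contract pays $1 multiplied by the candidate’s final share of the vote, so a price of $0.52 reads as a 52 percent prediction. The function names and the normalization step are illustrative assumptions, not the IEM’s actual rules or software; normalization is included only because independently traded prices need not sum to exactly $1.

```python
def implied_vote_shares(prices: dict[str, float]) -> dict[str, float]:
    """Turn last-trade prices of $1 vote-share contracts into predicted vote percentages.

    Assumption: prices are rescaled so the predictions sum to 100 percent,
    because separately traded contracts rarely sum to exactly $1.
    """
    total = sum(prices.values())
    if total <= 0:
        raise ValueError("prices must sum to a positive amount")
    return {candidate: 100 * price / total for candidate, price in prices.items()}


def trader_profit(contracts: int, price_paid: float, actual_share_pct: float) -> float:
    """Profit for a trader holding `contracts` units bought at `price_paid` dollars each.

    Each contract is assumed to pay $1 x the candidate's actual share of the vote.
    """
    payout_per_contract = actual_share_pct / 100
    return contracts * (payout_per_contract - price_paid)


if __name__ == "__main__":
    shares = implied_vote_shares({"Candidate A": 0.52, "Candidate B": 0.49})
    for name, pct in shares.items():
        print(f"{name}: {pct:.2f}% predicted")
    # A trader who bought 100 contracts on Candidate A at $0.52, when A wins 51% of the vote.
    print(f"Profit in dollars: {trader_profit(100, 0.52, 51.0):.2f}")
```

The profit function shows why prices tend to track beliefs: a trader who thinks a candidate’s vote share is underpriced can expect to gain by buying, and that buying pushes the price toward the trader’s estimate.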

Participants in the IEM trade with their own money and can open accounts of $5 to $500. In general, IEM results are quite accurate and compare favorably with other ways of predicting the winners of political contests. Across twelve national elections in five countries, for example, the average margin of error of the last large-scale voter polls taken before each election was 1.93 percent, while the average margin of error of the final IEM market prices was 1.49 percent. 17

The Hollywood Stock Exchange, a Web-based prediction market devoted to movies that does not use real money, provides another example of crowd wisdom. Its traders usually arrive at a highly accurate prediction concerning how much money a Hollywood movie will make during its opening weekend. 18 This accuracy is particularly impressive because movie revenues have proved extremely difficult to forecast using other means; as University of California, Irvine, professor Arthur de Vany summarized, “My research … shows (and every movie fan knows) that motion picture revenues are not forecastable; the forecast error is infinite.” 19 Screenwriter William Goldman famously distilled this conclusion to “nobody knows anything.” The Hollywood Stock Exchange and other prediction markets seem to show, in contrast, that many people can know many things.

In The Wisdom of Crowds, Surowiecki wrote that “… the most mystifying thing about [prediction] markets is how little interest corporate America has shown in them. Corporate strategy is all about collecting information from many different sources, evaluating the probabilities of potential outcomes, and making decisions in the face of an uncertain future. These are tasks for which [prediction] markets are tailor-made. Yet companies have remained, for the most part, indifferent to this source of potentially excellent information, and have been surprisingly unwilling to improve their decision making by tapping into the collective wisdom of their employees.” 20

Cowgill shared Surowiecki’s puzzlement as he read his book, and came to believe that prediction markets were a natural technology for Google. The company’s stated mission, after all, was “to organize the world’s information and make it universally accessible and useful.” Cowgill became intrigued by the idea of starting a prediction market at Google. He knew he’d need colleagues, particularly those with programming expertise, to help build one. 21

Ilya Kirnos was exactly the kind of colleague Cowgill was looking for. He had programming expertise, and he shared Cowgill’s interest in giving people a forum in which to make predictions. For Kirnos, however, this enthusiasm sprang less from an interest in political elections and market mechanisms than from frustrations he had experienced on the job. He had joined the advertising systems group at Google in 2004 after working at Oracle. Earlier in his career he had participated in a project that he and many other engineers knew would not succeed, but that continued to receive support and funding.

Kirnos wondered why it had been so hard to spread the word that this effort was doomed to fail, and he wanted to use technology at least to document the fact that he had accurately predicted its fate. Kirnos built a simple application called “itoldyouso” that allowed people to offer and accept nonmonetary bets and keep track of them over time. Employees could use it, for example, to essentially say to their colleagues, “I’ll bet you this project won’t be finished on time; any takers?” When they won a bet, the system helped them say, “I told you so!” 22

Cowgill and Kirnos, however, did not know each other. Although they were employed by the same company, they worked in very dissimilar functions and would normally have little opportunity to meet and discover their common interests. Moreover, building prediction markets was not part of either of their job descriptions. How could they ever find each other, productively combine their talents, and create a technology to harness collective intelligence? And if a prediction market were ever built within Google, would it work as well as the IEM and Hollywood Stock Exchange did? Or would there be too few traders and trades to let markets work their magic and make accurate predictions?

Poor Tools

Each of the case studies just described is about collaborative work, and IT has a long history of activity in this area. The first workshop on computer-supported cooperative work (CSCW), for example, was held in 1984, and over the years software vendors have offered many products intended to support interdependent teams and groups.

Yet none of the decision makers in the case studies presented here considered using groupware or knowledge management (KM) software, the two classic applications of corporate CSCW. “Groupware” is a catch-all label for software such as Lotus Notes, which allows members of work teams to message each other, share documents and schedules, and build customized applications. Just as its name implies, groupware aims to help people with a common purpose work together by giving them access to a shared pool of information and communication tools. After the first version of Notes was released in 1989, groupware became quite widespread. Many organizations used the messaging and calendaring functionality of their groupware heavily but found the software less well suited to finding and sharing information and knowledge. One review of groupware research concluded that “In practice, Lotus Notes … is merely a glorified e-mail program.” 23

After groupware, KM systems were the second main group-level technology deployed widely in the 1980s and 1990s. KM systems essentially consisted of two components: a database designed to capture human knowledge about a particular topic—the best ways to sell mobile phones, the problems that cropped up among photocopiers at customer sites and how to fix them, tax law changes and how to interpret them—and a front-end application used by people to populate this database. KM systems were intended to receive “brain dumps” from people over time—their experiences, expertise, learnings, insights, and other types of knowledge. In most cases, though, they fell far short of this goal. As knowledge and technology researcher Tom Davenport of Babson College concluded, “The dream … that knowledge itself—typically unstructured, textual knowledge—could easily be captured, shared, and applied to knowledge work … [has not] been fully realized … it’s taken much longer than anyone anticipated.” 24

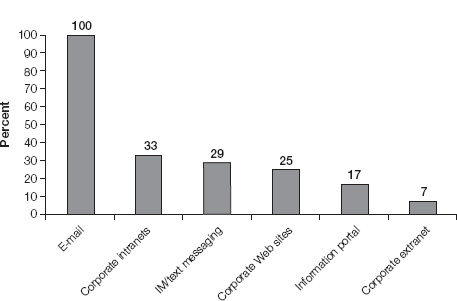

Davenport’s downbeat conclusion was amply supported by a survey he conducted in 2005 to determine what communications technologies people actually used in their work. As the results showed (see figure 2-1), classic groupware and KM systems didn’t even make the list (although to be fair, by 2005 most groupware contained both e-mail and instant messaging functionality).

So by the middle of this century’s first decade, corporate managers had reason to be skeptical that IT could ever be used effectively to knit together groups of people, letting them both create and share knowledge as a community. The technologies popular at that time for producing information (according to Davenport’s research)—e-mail and instant messaging (IM)—were used for one-to-one or one-to-many communications; they aided personal productivity but didn’t enhance group functionality. On the other hand, the most popular technologies for consuming information, intranets and public Web sites, were created, updated, and maintained by only a few people, not by all members of a workgroup.

FIGURE 2-1

Percentage of knowledge workers using each medium weekly

Source: Thomas H. Davenport, Thinking for a Living: How to Get Better Performance and Results from Knowledge Workers (Boston: Harvard Business School Press, 2005).

To understand why groupware, KM, and other “classic” CSCW applications are so unpopular and why they were not seriously considered in any of the case studies described above, we need to go back in time a short way and focus our attention not on organizations, but instead on the World Wide Web. In the early and middle years of the millennium’s first decade a set of new tools and new communities began to appear on the Web. As we’ll see, they are quite powerful. They overcome the limitations of earlier tools for CSCW and assist greatly in resolving the problems in each of this chapter’s case studies.