It is a common requirement for enterprises to provide and leverage Internet services such as DNS, e-mail, and file transfer. How these services are used and integrated into the enterprise network infrastructure remains a constant challenge, in addition to securing them. The latest malware threats use these common services to redirect internal hosts to Internet destinations under the control of the malware writers. In a network with a correctly implemented architecture, this scenario would mostly be a moot point, and with additional security mechanisms, a rare occurrence.

The Domain Name System (DNS) is in my opinion one of the greatest inventions, saving all of us from memorizing 32-bit, and soon 128-bit, IP addresses to browse to our favorite Internet websites. DNS maps a fully qualified domain name to an IP address. For example, www.google.com maps to 173.194.75.106, one of the many web servers that serve the website. It is much easier to memorize a name than a string of numeric characters. A system can be directed anywhere on the Internet with DNS, so the authenticity of the source of this information is critical.

This is where DNS Security Extensions (DNSSEC) come into play. DNSSEC is a set of security extensions for DNS that provide authenticity for DNS resolver data. In other words, the source of DNS data can be verified, so attacks like DNS poisoning are mitigated. In a DNS poisoning attack, erroneous data is injected into DNS and propagated, pointing hosts to the wrong system on the Internet. This is a common method used by malware writers and in phishing attacks.

Another area of security in regards to DNS implementation is the DNS zone transfer, which exposes the records that the DNS server maintains for the domains it is responsible for. A lot can be learned from the records on the DNS server, including hidden domains used for purposes other than general Internet use and access. The extremely insecure practice of storing information in TXT records on DNS servers can be detrimental if a record contains sensitive information, such as system passwords. We will take a more detailed look into securely implementing DNS using some ideas from our trust models.

DNS resolution can make for easy exploitation of victim hosts if there is no control on where the internal hosts are getting this mapping information from on the Internet. This has been the main method used by the Zeus botnet. Hosts are pointed to maliciously controlled Internet servers by manipulating DNS information. With complete control of how a host resolves web addresses, this ensures that the victim hosts only go where the malicious hackers want them to venture. The method also relies on compromised or specifically built DNS servers on the Internet, allowing malware writers to make up their own, unique and sometimes inconspicuous domain names that, at a glance, do not spark the interest of the IT security team and can remain undetected.

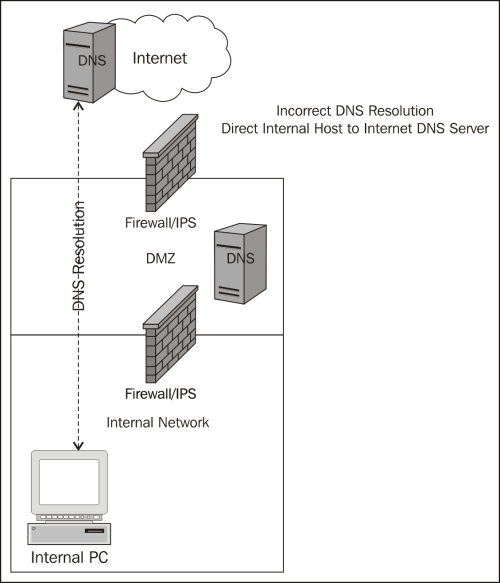

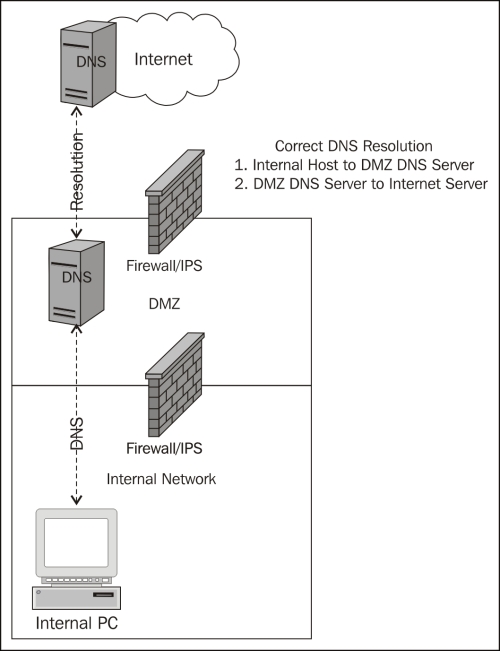

Here is an example of an incorrect DNS resolver implementation. This highlights that internal hosts are able to resolve DNS names from anywhere on the Internet.

DNS should be configured and tightly controlled to ensure that DNS resolution information is sourced from an enterprise maintained DNS infrastructure for internal hosts. In this implementation, the internal hosts rely on a trusted DNS server owned and maintained by the enterprise. The enterprise DNS infrastructure is configured in such a way that resolution of domain names is tightly controlled and will leverage only trusted Internet sources.
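As a minimal sketch of how this control might be audited on a host, the Python below checks the resolver entries in resolv.conf-style text against an allow-list. The resolver addresses and the function name are assumptions made for illustration. A check like this would complement, not replace, firewall rules that block outbound DNS from every host other than the enterprise DNS servers.

```python
import ipaddress

# Hypothetical enterprise resolver addresses; replace with the real ones.
TRUSTED_RESOLVERS = {"10.0.0.53", "10.0.1.53"}


def untrusted_resolvers(resolv_conf_text, trusted=TRUSTED_RESOLVERS):
    """Return nameserver entries that are not on the trusted list."""
    trusted_ips = {ipaddress.ip_address(t) for t in trusted}
    found = []
    for line in resolv_conf_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            try:
                addr = ipaddress.ip_address(parts[1])
            except ValueError:
                found.append(parts[1])  # malformed entries are reported too
                continue
            if addr not in trusted_ips:
                found.append(parts[1])
    return found


sample = """# example resolv.conf content
nameserver 10.0.0.53
nameserver 8.8.8.8
"""
print(untrusted_resolvers(sample))  # ['8.8.8.8']
```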

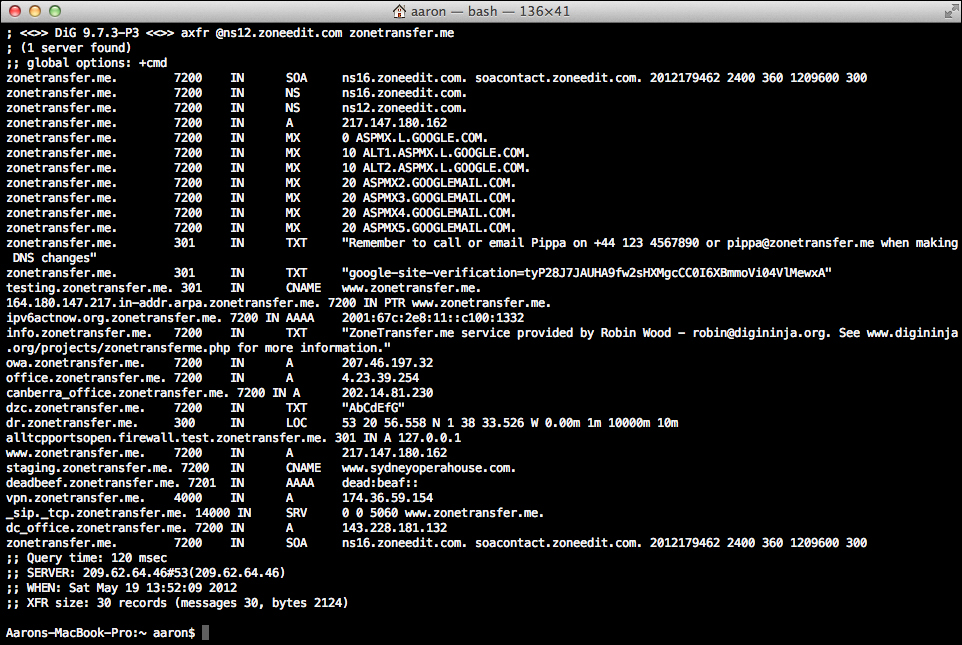

A DNS zone transfer is the mechanism used in DNS to provide other DNS servers with what domains the DNS server is responsible for and all the details available for each record in the zone. A DNS zone transfer should be limited to only trusted partners and limited to only zones that need to be transferred. The next screenshot provides information that is gathered with a DNS zone transfer using the Domain Information Groper (DIG) tool.

The command used to get this information is:

dig axfr @ns12.zoneedit.com zonetransfer.me

Typing this command at the command prompt will produce the following output:

This is a specially created DNS instance configured by DigiNinja as the ZoneTransfer.me project to show the dangers of allowing zone transfers to any anonymous system on the Internet. The project along with an excellent write-up can be found at the project site: http://www.digininja.org/projects/zonetransferme.php.

With a quick glance, you are able to determine the DNS structure of this server and the possible avenues of attack if you were a penetration tester or malicious hacker. An enterprise may have several domain names for various services they provide to business partners and employees that are not "known" by the general public. While the fact remains that if the service is available on the Internet, it can be found, a simple zone transfer reduces the discovery process significantly. This specific example shows office-specific records, a VPN URL, SIP address for VoIP, and it looks like there might be a staging system with potentially poor security. That is a lot of information about the enterprise services available on the Internet.

Depending on the DNS structure within the enterprise, there may be internal and external DNS implementations with records specific to the areas of the network they service. For instance, the internal DNS server may have records for all internal hosts and services, while a DNS server in the DMZ may only have records for DMZ services. In this type of implementation, there will be some dependencies across the DNS infrastructure, but it will be critical to keep the records uncontaminated from other zones. If the DMZ server somehow receives the internal zone information, then any system that can initiate a zone transfer with the DMZ server will also get the internal network DNS information. This could be a very big problem if anonymous users on the Internet could study the internal DNS records.
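The restriction described above, transfers limited to trusted partners and to specific zones, can be sketched as a small policy check. This is an illustration only, assuming a hypothetical policy table and example zone names. Real DNS servers enforce this through their own configuration rather than application code.

```python
import ipaddress

# Hypothetical policy: zone name -> networks allowed to request a transfer.
TRANSFER_POLICY = {
    "dmz.example.com.": ["192.0.2.10/32", "192.0.2.11/32"],
    "internal.example.com.": ["10.0.0.0/24"],
}


def transfer_allowed(zone, client_ip, policy=TRANSFER_POLICY):
    """Allow a transfer only for listed zones and listed client networks."""
    networks = policy.get(zone.lower(), [])  # unlisted zones are never transferred
    client = ipaddress.ip_address(client_ip)
    return any(client in ipaddress.ip_network(net) for net in networks)


print(transfer_allowed("dmz.example.com.", "192.0.2.10"))       # True
print(transfer_allowed("internal.example.com.", "192.0.2.10"))  # False
print(transfer_allowed("dmz.example.com.", "203.0.113.5"))      # False
```

Keeping the policy per zone means that a partner trusted for the DMZ zone does not automatically gain the ability to pull the internal zone.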

We will talk more about the TXT records discovered after the zone transfer in the next section.

DNS records are the mapping of IP addresses to fully qualified domain names of systems, services, and web applications available either internal or external to a network. The Request for Comments (RFC) 1035 for DNS indicates the resource types that a record specifies. The standard resource records are A, NS, SOA, CNAME, and PTR. We will not cover DNS in detail as it is out of scope for this book. To learn more about DNS, start with the RFC: http://www.ietf.org/rfc/rfc1035.txt.

Using DNS is a great way to provide access to a service using an easy to remember name. If the IP address mapped to a name changes, it is no big deal; it is a simple DNS change. In most cases, this would be an invisible change to the user of the system or service. Records can be created for services such as VPN, web e-mail, and special sites for specific users. In the ZoneTransfer.me example, we not only see these types of services, but also a record for a staging system and FQDNs that would probably never be guessed and would remain unknown to those not seeking them.

With a closer examination of the resource record types from ZoneTransfer.me, there are a few records that stick out: the TXT records.

zonetransfer.me. 301 IN TXT "Remember to call or e-mail Pippa on +44 123 4567890 or [email protected] when making DNS changes"

zonetransfer.me. 301 IN TXT "google-site-verification=tyP28J7JAUHA9fw2sHXMgcCC0I6XBmmoVi04VlMewxA"

info.zonetransfer.me. 7200 IN TXT "ZoneTransfer.me service provided by Robin Wood - [email protected]. See www.digininja.org/projects/zonetransferme.php for more information."

dzc.zonetransfer.me. 7200 IN TXT "AbCdEfG"

As you can see from the output, there is specific information contained in these TXT records that probably should not be public. It looks like the owner of DNS is Pippa, and we can also see all the contact information needed to ask Pippa for changes to DNS. This could easily be used maliciously if it was real data. Initially, the assumption by the DNS administrator is that no one will see this information and that this is a relatively safe location to put it.

The important thing to note about the usage of TXT records is that the data leakage may give too much information that can be used in a malicious manner against the enterprise. Information provided in this example not only provides system administrator information but also information for Google site verification and could lead to exploitation by creative means.

The DNS server administrator was probably not thinking a zone transfer would give up so much information that could be used to attack the organization. DNS records should only contain information needed to resolve IP addresses to domain names.
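One way an administrator might review a zone for this kind of leakage is to scan the TXT records for patterns that look like contact details or credentials. The sketch below is a hedged illustration: the patterns are deliberately simple, the sample records are invented, and the input format is assumed to match one-record-per-line output similar to what dig prints.

```python
import re

# Illustrative patterns only; a real review would use a broader, tuned set.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone number": re.compile(r"\+?\d[\d\s-]{7,}\d"),
    "credential keyword": re.compile(r"passw(?:or)?d|secret|api[_-]?key", re.I),
}

# Matches lines such as: name TTL IN TXT "value"
TXT_LINE = re.compile(r'^(\S+)\s+\d+\s+IN\s+TXT\s+"(.*)"\s*$')


def flag_txt_records(zone_text):
    """Return (record name, reasons) for TXT records that look sensitive."""
    findings = []
    for line in zone_text.splitlines():
        match = TXT_LINE.match(line.strip())
        if not match:
            continue  # not a TXT record
        name, value = match.groups()
        reasons = [label for label, pattern in SENSITIVE_PATTERNS.items()
                   if pattern.search(value)]
        if reasons:
            findings.append((name, reasons))
    return findings


# Invented sample data for illustration.
zone_sample = '''example.com. 300 IN TXT "Call Alex on +44 123 4567890 or alex@example.com for DNS changes"
example.com. 300 IN TXT "v=spf1 mx -all"
www.example.com. 300 IN A 192.0.2.80'''

for name, reasons in flag_txt_records(zone_sample):
    print(name, reasons)
```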

Security extensions added to the DNS protocol make up the Internet Engineering Task Force (IETF) DNS Security (DNSSEC) specification. The purpose of DNSSEC is to provide security for specific information components of the DNS protocol in an effort to provide authenticity to the DNS information. This is important because some of the most well-known hacks of recent times were actually DNS attacks in which systems were never compromised; clients were simply redirected to another website. However, when clients enter a domain name and are directed to an attacker's site, in their minds they have reached a compromised website. This is DNS poisoning, where the DNS information on the Internet is poisoned with false information, allowing attackers to direct clients to whatever IP address on the Internet they desire.

The importance of DNSSEC is that it is intended to give the recipient DNS server confidence in the source of the DNS records or resolver data that it receives. Another benefit is that organizations that publish DNS records have some protection that ensures their information is not altered or poisoned, so users intending to access their systems will get to the correct Internet destination.

An essential method of communication for the enterprise today is e-mail. The requirement to send and receive e-mails, with the expectation of a response as immediate as instant messaging, makes this service a critical business function. With the increased growth and acceptance of cloud-based services, e-mail is amongst the first to be leveraged. Some enterprises are taking advantage of the low-cost option to move their e-mail implementation to the cloud. This, however, introduces some challenges, not only for the control of the e-mail and the data within it, but also for filtering unwanted e-mails. While some cloud services have impressive SPAM detection and mitigation, the enterprise may want to augment this with a solution they have some control over. The next sections will cover common e-mail threats and present methods to secure e-mail from SPAM and SPAM relaying.

E-mail is one of the most popular methods to spread malware or lead users to malware hosted on the Internet. Most often, this is the single intent of unwanted e-mails in the form of SPAM. Threats from e-mail SPAM range from annoying and unsolicited ads to well-crafted, socially engineered e-mails that steal credentials and install malware.

Methods to protect the enterprise from SPAM include cloud-based and local SPAM filtering at the network layer and host-based solutions at the client. A combination of these methods can prove to be most effective at reducing the risk and annoyance of SPAM. Receiving SPAM and becoming the source of SPAM while being used as a relay are two sides of the same coin, and this is the challenge that e-mail administrators and IT security focus on most.

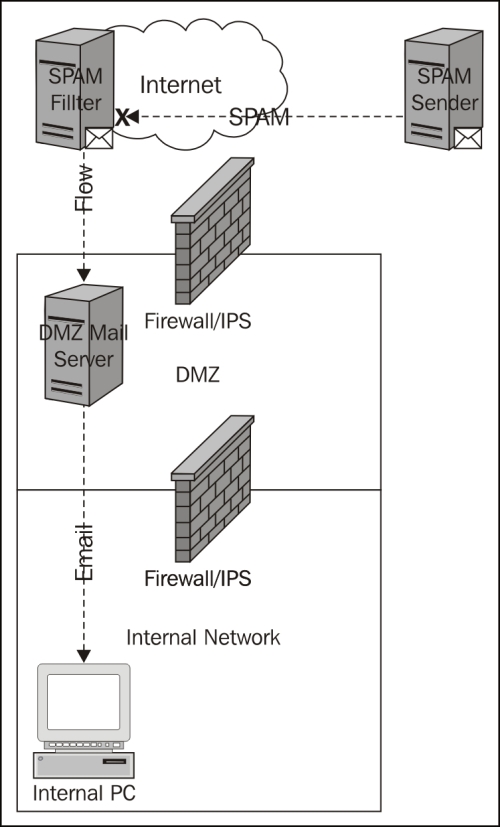

Cloud-based solutions offer an attractive option for protecting the enterprise from this nuisance. The service works by configuring the DNS mail exchanger (MX) record to identify the service provider's e-mail servers. This configuration forces all e-mails destined to e-mail addresses owned by the enterprise through the SPAM solution's filtering systems before they are forwarded to the final enterprise servers and user mailboxes. Outbound mail from the enterprise would take the normal path to the destination as configured, using DNS to find the IP address of the destination domain's e-mail server.
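Because the MX record is what steers inbound mail through the filtering service, it can be worth confirming that every published MX host belongs to the provider. The sketch below assumes the MX records have already been looked up and are supplied as (preference, hostname) pairs; the provider domain is hypothetical. An MX entry that still points directly at an enterprise server would give senders a path around the filter.

```python
# Hypothetical filtering provider domain; not a real service.
PROVIDER_SUFFIX = ".mailfilter.example.net"


def mx_bypasses(mx_records, provider_suffix=PROVIDER_SUFFIX):
    """Return MX hosts that do not belong to the filtering provider.

    mx_records is a list of (preference, hostname) tuples, for example as
    gathered from a DNS lookup of the enterprise domain.
    """
    bypasses = []
    for _preference, host in mx_records:
        normalized = host.rstrip(".").lower()
        if not normalized.endswith(provider_suffix):
            bypasses.append(host)
    return bypasses


records = [
    (10, "mx1.mailfilter.example.net."),
    (20, "mail.example.com."),  # leftover record pointing at the enterprise
]
print(mx_bypasses(records))  # ['mail.example.com.']
```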

With this solution, there can be a significant cost depending on how the service fee structure is designed. The benefits include little-to-no administration of the solution, reduced SPAM e-mail traffic, and a reduction in malware and other threats propagated through e-mails. Some caveats include a lack of visibility into which filters are implemented and which e-mails have been blocked, the possibility that a service failure means no e-mail or an undesirable delay, and the potential cost associated with the service.

Here is an example of what an email SPAM filtering solution looks like in the cloud:

The following questions should be answered when making a decision to select a cloud-based SPAM service:

- Is there a cost benefit of using the solution? (Consider capital and operating expense including people and processes that must be in place.)

- How will the enterprise be informed if an e-mail is blocked? Will there be a list of e-mails to ensure there are no false positives?

- What happens if the service fails? Is there an internal SLA on the e-mail service?

- Are there contract restrictions, forcing the use of the service for a defined period of time?

- What is the reputation of the provider? Is there a low false-positive rate?

The enterprise may decide to perform SPAM filtering locally to maintain complete control over this critical communication method. There are several solutions that are able to provide SPAM filtering and e-mail encryption in one appliance. This may play a role in the enterprise data loss prevention and secure file transfer strategies, providing more than just SPAM filtering. However, if the enterprise opts to leverage a cloud-based e-mail solution, local e-mail security mechanisms may have no place in the new e-mail communication flow.

In the case of web-based e-mail hosting, the SSL connection would be from the user's browser or e-mail client to the hosted e-mail servers. SSL decryption could be possible, but the overhead and privacy implications should be weighed carefully to ensure that additional cost is not incurred with little benefit to the enterprise. There are differing views on SSL decryption. Technically, decrypting SSL by presenting a false certificate in order to inspect traffic breaks the SSL trust model and is considered a man-in-the-middle attack. The position taken by each enterprise must be based on the risk it has determined from its users, data, and access levels.

Local SPAM filtering leverages an appliance that receives e-mails from Internet sources, analyzes incoming messages and attachments for violations of configured policies, and forwards e-mails to local e-mail servers if they are assessed as safe. In order to maintain the best protection against emerging SPAM threats, the vendor will continuously update the appliance with new block lists and signatures. An additional benefit of having the service locally is the ability to also own the DNS infrastructure that tells other e-mail systems where to send e-mail. In the event of appliance failure, e-mails can be routed around the failure using DNS to maintain the e-mail service.

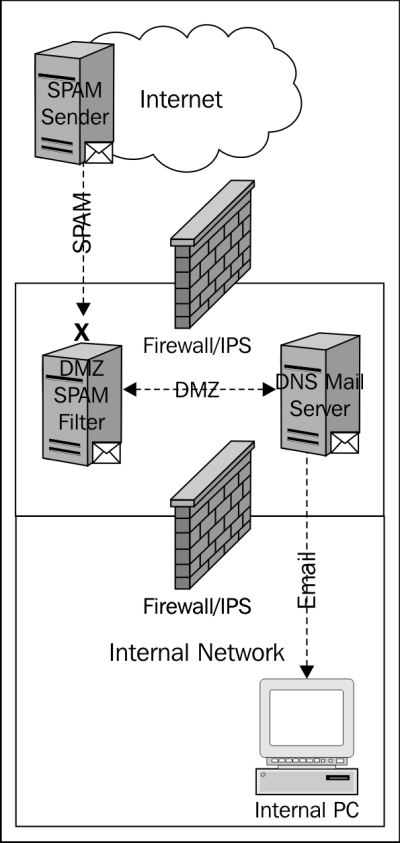

The following diagram is a depiction of what local SPAM filtering may look like for an enterprise:

The following questions should be answered when making a decision on local SPAM protection:

- Can the solution provide a user-controlled quarantine? (Users can remove erroneously blocked e-mails.)

- Does the solution have a bypass feature?

- How often is the appliance updated?

- How easy is it to view blocked or quarantined e-mails?

- Are there additional email security features available such as encryption?

Misconfiguration of the enterprise mail servers may lead to exploitation in the form of using the servers as a SPAM relay. This method exploits the server's lack of sender authentication and its willingness to send e-mails from domains for which it has no authority. Unfortunately, this misconfiguration is common for Internet-facing e-mail systems, where the only sender that needs to be authenticated is the internal mail relay. It is also common for internal servers to be misconfigured because non-human processes, such as the alerting mechanism on a security system, must be able to send e-mails.

Instead of creating accounts for every possible system that would need this authorization, it is easier to allow anyone to send e-mails through the mail server. The internal server should still have restrictions on sending domains, to avoid the system being misused to send SPAM or other spoofed e-mails.

Tip

To test the enterprise mail servers for relaying capability, use telnet and connect to the mail server on port 25. Once connected, use the available commands and attempt to send an e-mail from an e-mail domain that the enterprise does not have authority to send e-mail from; for example [email protected]. If this is allowed, the server is misconfigured and relaying is possible. Once this server is identified on the Internet, it is likely to be exploited for this capability. The enterprise may be at risk of becoming the source of SPAM and can therefore be blocked by services such as SPAMHAUS. To check for blocked status, visit the SPAMHAUS Block List page at http://www.spamhaus.org/lookup/.
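The same manual test can be scripted. The following is a minimal sketch using Python's standard smtplib module, under the assumption that the server speaks plain SMTP on port 25; the function names and example addresses are illustrative. It stops after the recipient is accepted or rejected, so no message is actually sent, and it should only be run against servers you are authorized to test.

```python
import smtplib


def relay_accepted(smtp, sender, recipient):
    """Return True if the server accepts a foreign sender and recipient.

    smtp is a connected smtplib.SMTP object (or any object offering the
    same helo/mail/rcpt/rset methods). No message body is ever sent.
    """
    smtp.helo()
    mail_code, _ = smtp.mail(sender)
    if mail_code != 250:
        return False
    rcpt_code, _ = smtp.rcpt(recipient)
    smtp.rset()  # abandon the transaction without sending data
    return rcpt_code in (250, 251)


def check_server(host, sender, recipient, port=25):
    """Connect to a mail server and run the relay check (not called here)."""
    with smtplib.SMTP(host, port, timeout=10) as smtp:
        return relay_accepted(smtp, sender, recipient)
```

A result of True for a sender and recipient that both lie outside the enterprise's domains suggests that the server is willing to relay.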

Another method to reduce the enterprise's likelihood of ending up on a SPAM block list is to ensure that only the enterprise e-mail servers are permitted to send e-mails via TCP port 25 outbound from the enterprise network. Some malware is specifically designed to blast e-mail SPAM from the infected system, thus getting the enterprise blocked by services such as SPAMHAUS.
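The intent of such a firewall policy can be sketched as an ordered rule list in which the first matching rule wins. The addresses and rule format below are assumptions for illustration and do not reflect any particular firewall's syntax.

```python
import ipaddress

# Hypothetical rule set, evaluated top-down; the first match wins.
RULES = [
    {"action": "allow", "src": "10.0.5.25/32", "dst_port": 25},  # enterprise mail server
    {"action": "deny", "src": "10.0.0.0/8", "dst_port": 25},     # every other internal host
]


def evaluate(src_ip, dst_port, rules=RULES, default="deny"):
    """Return the action for an outbound connection from src_ip to dst_port."""
    src = ipaddress.ip_address(src_ip)
    for rule in rules:
        if dst_port == rule["dst_port"] and src in ipaddress.ip_network(rule["src"]):
            return rule["action"]
    return default  # anything not explicitly matched is denied in this sketch


print(evaluate("10.0.5.25", 25))  # allow
print(evaluate("10.0.9.77", 25))  # deny
```

With rules in this order, an infected workstation at 10.0.9.77 cannot deliver mail directly to the Internet, while the mail server can.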

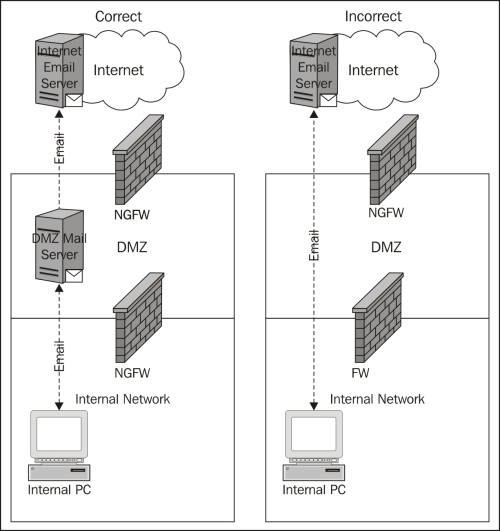

The following diagram presents the correct and incorrect methods to implement e-mail controls at the firewall to ensure that only the internal mail servers are able to directly send e-mails to the Internet. This method reduces the potential impact of end system malware, designed to send SPAM from inside the network, potentially getting the enterprise blocked on SPAM lists.

Getting data to and from the enterprise network is not only a convenience, but is often a necessity to facilitate business operations. There are many viable protocols and methods: FTP, SFTP, FTPS, SSH, and SSL, to name a few standard protocols, with many more proprietary options available.

The migration to secure protocols has been driven primarily by security standards such as PCI DSS, ISO 27001, and NIST, though adoption has been dismal for enterprises that lack a third-party audit requirement. One of the challenges with file transfer is secure implementation. Not only should the protocols be secure, but the design must also adhere to network and security architecture, to ensure that any compromise is limited and to enforce control over file transfer.

Imagine what security challenges may be inherent with the ability to use uncontrolled encrypted file transfer from a user's desktop to any Internet destination. Essentially, an implementation, as described, would circumvent most network-based security controls.

A method to ensure secure communication and the ability to control what is transferred and to whom is to implement an intermediary transfer host. The ideal location of the host would be the DMZ to enforce architecture and limit the scope of compromise. The solution should also require authentication to be used and the user list audited regularly, for both voluntarily and involuntarily terminated employees.

Not all enterprises that have implemented secure protocols use a secure file transfer. In some cases, this is by design, as with credit card authorizers, where the risk has been accepted due to the overhead and complexity of managing secure communications for a high number of clients. In other cases, design has nothing to do with the lack of secure communication capabilities. Secure transfer may simply not be a priority because the enterprise is not required to perform any type of risk analysis on the business process, or because it remains unaware of the risk associated with insecure file transfer of business-critical data.

It can be challenging to implement a secure transfer solution, especially if not using an SSL implementation where encryption can be managed by certificates, which are both inexpensive and easy to implement. Not to mention, almost everyone knows how to use a browser to upload a file. In instances where SSH or SFTP is used, this can be more complicated to provide authentication and encryption.

For SSH, SFTP, and other such protocols, there are two methods of authentication: user credentials and keys. For user authentication, the enterprise must configure the users that can access the service either locally or through a directory service, such as Windows Active Directory. Both have security implications. For local accounts, the fact that credentials are stored on the server may leave them vulnerable to compromise, even if hashed in the Linux /etc/shadow file or the SAM on Windows. The system administrator will also have to manually manage user credentials on every system configured.

For systems that rely on a central user directory, the implementation must be thought out to ensure that any compromise of the system does not lead to a compromise of the internal user directory. Some enterprises implement a unique user directory to be used for services in the DMZ to enforce segregation of directories, with the ability to limit the scope of a compromise while centralizing administration for multiple systems and services.

An alternate method for authentication is to use Simple Public Key Infrastructure (SPKI). This method is used with PGP. The design of the SPKI is based on trust determined by the owner, and if the owner chooses to trust another, then the other will be given a public key that is mathematically related to the owner's private key. This private-public key combination can be used for authenticating systems, applications, and users.

In our example, a user is issued a key that provides user authentication. When the SSH session is started, the receiving system checks to see if there is a trusted key for authentication being presented. If there is a match, the authentication is successful. The keys must be protected on the system that generated the private key. The benefit is that the key can be used in an application and referenced in a script where using credentials in this case should be avoided.
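The "is there a trusted key" step can be illustrated with a short sketch. It assumes trusted public keys are stored one per line in the common "type base64-blob comment" layout and compares OpenSSH-style SHA256 fingerprints. The key material below is placeholder data, not real keys. A real SSH server additionally requires the client to prove possession of the matching private key, which this sketch does not attempt.

```python
import base64
import hashlib


def fingerprint(public_key_line):
    """Compute an OpenSSH-style SHA256 fingerprint from a public key line."""
    key_blob = base64.b64decode(public_key_line.split()[1])
    digest = hashlib.sha256(key_blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def is_trusted(presented_key_line, authorized_keys_text):
    """Check whether the presented public key appears in the trusted list."""
    trusted = set()
    for line in authorized_keys_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            trusted.add(fingerprint(line))
    return fingerprint(presented_key_line) in trusted


# Placeholder key blobs for illustration; these are not valid SSH keys.
alice_key = ("ssh-ed25519 "
             + base64.b64encode(b"placeholder-key-alice").decode("ascii")
             + " alice@example")
mallory_key = ("ssh-ed25519 "
               + base64.b64encode(b"placeholder-key-mallory").decode("ascii")
               + " mallory@example")
authorized = "# trusted keys\n" + alice_key + "\n"

print(is_trusted(alice_key, authorized))    # True
print(is_trusted(mallory_key, authorized))  # False
```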

Internal user access to the Internet is probably deemed a much more critical service than even e-mails. Calls to the helpdesk will happen faster if access to Facebook is down versus a critical business application. With so much focus on the use of this technology, it must be secured and monitored to provide a safe use of the Wild West Internet, reducing the risk this threat vector presents.

In order to provide some level of security and monitoring, the use of Internet proxy technology is required. A proxy accepts a connection from a client, such as an internal host, and makes the connection to the final destination on behalf of the client. This allows the true identity of the client to be protected and provides the ability to filter Internet traffic for inappropriate sites, for example.

There are standalone proxy solutions and the aforementioned NGFWs have this feature, which allows for URL filtering based on category and known malicious destinations. Depending on the implementation, various features can be utilized with the NGFW that are not an option with some standalone proxy solutions; for example, features such as advanced application inspection for Internet threats and malware. If an NGFW is implemented, providing Internet proxy at this control point may be desirable to reduce complexity and gain features including user authentication.

Standalone proxies typically have the option to perform user authentication, but lack more advanced security features. It should be noted that proxy solutions without the ability to inspect Internet traffic for advanced threats typically have a mechanism to send traffic to a third-party system for inspection prior to sending the traffic to the Internet destination.

Note

There are cases that require a purpose built proxy solution to provide advanced proxy capabilities, such as Proxy Auto-Configuration (PAC) files that control which connections use the proxy and which are routed directly. This is a feature that is lacking today in most NGFW solutions. To make the best selection, understand the requirements for Internet access, protection, and monitoring to ensure that the solutions considered are capable of meeting the defined requirements.
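Real PAC files are written in JavaScript and expose a FindProxyForURL function. To stay with one language for the examples in this section, the sketch below renders the same kind of decision logic in Python. The proxy address and internal name patterns are hypothetical.

```python
from fnmatch import fnmatchcase

PROXY = "PROXY proxy.example.internal:8080"            # hypothetical proxy address
DIRECT_PATTERNS = ["localhost", "*.example.internal"]  # hypothetical internal names


def find_proxy_for_host(host):
    """Decide whether a connection goes direct or through the proxy."""
    host = host.lower()
    if "." not in host:  # unqualified host names are treated as internal
        return "DIRECT"
    if any(fnmatchcase(host, pattern) for pattern in DIRECT_PATTERNS):
        return "DIRECT"
    return PROXY


print(find_proxy_for_host("intranet"))               # DIRECT
print(find_proxy_for_host("wiki.example.internal"))  # DIRECT
print(find_proxy_for_host("www.example.com"))        # PROXY proxy.example.internal:8080
```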

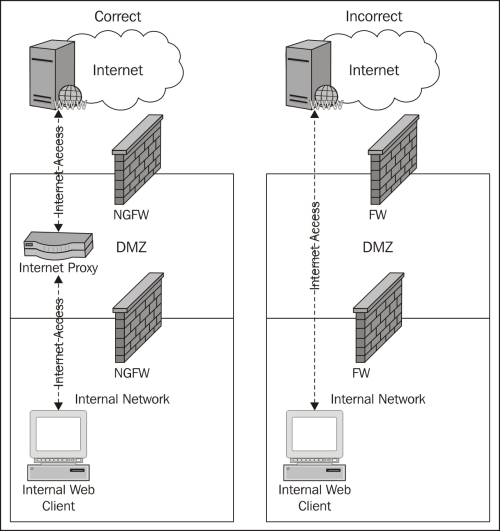

The following diagram presents the incorrect and correct method to providing Internet access to internal systems:

Some enterprise networks do not have a proxy solution, and Internet access is facilitated directly from the client to the Internet destination. These implementations are typically where most malware is successful, purely due to the lack of security and monitoring. Using SPAM and leveraging social engineering techniques, internal users can be directed to websites that automatically install malware, some of which can go undetected by anti-virus installed on end systems.

At a minimum, an Internet proxy is recommended to monitor and block access to known malicious sites and sites that may introduce varying levels of risk to the enterprise. It is recommended to have a layered approach to Internet access, where data is inspected not only for malicious content but critical enterprise data that may indicate a compromise.

Internet accessible websites are the most targeted asset on the Internet due to common web application security issues, such as SQL injection, cross-site scripting, and cross-site request forgery. There are several approaches to securing websites, but it is truly a layered security approach requiring a combination of secure coding, web application firewalls, and other security mechanisms that will provide the most benefit. We'll take a look at methods to secure Internet accessible websites.

The website would not exist without the web application. Technically, a website does not constitute a web application, but even the simplest one has a contact form, or search function. These are examples of web applications that perform a function to collect and store visitor data when the contact form is completed and submitted, and the search function searches the web content stored in a database, such as MySQL, Oracle, or MSSQL. The web application is the link between the user and the data where it is stored, and any mistake or security oversight in the development of the web application can have grave consequences.

Secure coding is not a natural tendency for developers as they are mainly tasked with developing applications with business requested functions and features. Without a security check during the development phase, critical security vulnerabilities may be coded in the final product. Utilizing a secure software development lifecycle (S-SDLC) is the best method to ensure that secure coding practices are being followed. Essentially, the life cycle provides a framework for how the coding process is to be completed with testing and validation of the code. This process is iterative for each new instance of code or modified portions of code.

During the iterative SDLC, developers can and should test their code for security vulnerabilities either in the development or quality assurance phase to ensure that no vulnerabilities or at least only low risk vulnerabilities make it into the code release.

There are several open source and commercial products available for testing not only web applications via web scanning, but source code analysis as well. The tools typically are designed for developer use, but it is helpful to have IT security personnel who understand development and common web application programming languages. IT security involvement can help remediation efforts if the development team is unclear of the tool's output and how to remediate vulnerabilities.

When using tools to test web application code for vulnerabilities, the reporting capabilities should be tested to determine which product meets the requirements of the enterprise by first understanding how the data is to be shared and with whom. Ownership of the tool should technically reside with the development team but with oversight from the IT security team to ensure that the SDLC process is being followed and to independently assess the effectiveness of the SDLC. Ultimately, vulnerabilities identified should be documented and tracked through remediation within a centralized vulnerability or defect management solution.

Secure coding must be the focal point of the security strategy for securing web applications; if not, there must be a shift to this model to have a truly layered defense against cyber attacks. There is a limit to the protection of using only third-party solutions versus securing the code. Any misconfiguration of these other tools exposes the vulnerable application where the vulnerability should have been fixed.
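As a concrete illustration of why the fix belongs in the code, the sketch below contrasts a query built by string concatenation with a parameterized query, using Python's built-in sqlite3 module and an in-memory database. The table, data, and function names are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 'alice@example.com')")
conn.execute("INSERT INTO users VALUES ('bob', 'bob@example.com')")


def find_user_unsafe(name):
    # Vulnerable: user input is concatenated into the SQL text.
    query = "SELECT name, email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2: every row is returned
print(len(find_user_safe(payload)))    # 0: no user has that literal name
```

The injected input changes the meaning of the first query, while the second treats it as an ordinary value.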

We have already covered the next generation firewall (NGFW) earlier in the chapter, but this section warrants further discussion, as it pertains to leveraging the NGFW to protect Internet-facing enterprise websites and applications. Threats within seemingly benign connection attempts to the web servers can be detected and mitigated with the application aware firewall. By enforcing strict access to applications necessary to provide the service to Internet users, risk is greatly reduced for other applications running on the server and the underlying operating system. A benefit of using a next generation firewall is that access can be provisioned by applications, such as web browsing, and is not restricted by TCP port. This requires more intelligence and a deeper inspection of communication.

Filtering all inbound traffic at the firewall can also alleviate system resource consumption from bursting traffic loads on the web servers, by inspecting and mitigating all illegitimate traffic, such as denial of service attacks, before it reaches the web servers. This implementation, in addition to secure coding, will provide additional protection for Internet-facing web servers and applications. It should be noted that an NGFW alone is not sufficient protection for web applications; it is one layer in the security protection mechanism. It lacks in-depth application awareness and detection beyond basic attack types and offers no protection for database interaction.

Intrusion prevention may also be implemented at the network perimeter to mitigate known attack patterns for web applications and the underlying operating system. Typically, IPS detection and mitigation engines have limited intelligence apart from the typical patterns found in the malicious payload of SQL injection attacks and other popular attacks. The lack of in-depth knowledge of web application behavior and accepted communications can lead to a high number of false positives. However, IPS can provide excellent denial of service protection and block exploit callbacks.

Note

With the capabilities found in NGFW, a standalone IPS may not be a worthwhile investment for protecting the web infrastructure if the capability is enabled in the NGFW. There are various schools of thought on standalone or integrated IPS and there are logical reasons for each. This book purposely does not attempt to endorse one method over the other.

Web application firewalls are designed to specifically mitigate attacks against web applications through pattern and behavioral analysis. The primary detection and mitigation capabilities include attacks against known web application vulnerabilities, such as SQL injection, cross-site scripting, command injection, and misconfigurations. More advanced web application firewalls use another component at the database tier of the web applications, which is either installed on the database server or proxies inbound connections. This is important for a couple of reasons.

First, it is hard to determine if a detected threat warrants further investigation, if it is unknown whether the threat was able to interact with the database. The database is key as it serves as the data repository for the web application and may house sensitive information such as customer information, credit card numbers, and intellectual property, for example, product information. All this important data must be accessible to the web application for the service being provided.

Second, attacks that do get past the first layer of the web application firewall can be mitigated at the database tier of the network architecture. The database team may not detect a successful database attack through the web application if the team is not trained to identify a successful exploit. Some web application firewall solutions are able to leverage both tiers of protection to control the alerting of attacks, reducing false positive alerts and the amount of meaningless data the security team must analyze manually.

The most significant benefit of database protection using a web application firewall is the ability to enforce security controls for database access initiated not only by the web application but also by database administrators. This allows strict control of the queries sent to the database whether from the application or a database administration tool. Any deviation from the expected can be blocked, therefore mitigating common database attacks through incorrectly written and configured web applications or misuse. A commercial product leader in this space is Imperva (http://www.imperva.com). Their solutions provide comprehensive web attack mitigation and database security through database access and activity management capabilities.
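The idea of blocking "any deviation from the expected" at the database tier can be sketched as an allow-list of query shapes. This is a simplified illustration and not how any particular product works: literals are replaced with placeholders so that only the structure of a statement is compared, and the expected shapes are hypothetical.

```python
import re

# Hypothetical query shapes learned from normal application behaviour.
EXPECTED_SHAPES = {
    "select name, email from users where name = ?",
    "insert into contact_messages (sender, body) values (?, ?)",
}


def query_shape(sql):
    """Reduce a statement to its shape by replacing literals with '?'."""
    shape = re.sub(r"'(?:[^']|'')*'", "?", sql)        # quoted string literals
    shape = re.sub(r"\b\d+(?:\.\d+)?\b", "?", shape)   # numeric literals
    return re.sub(r"\s+", " ", shape).strip().rstrip(";").lower()


def is_expected(sql):
    """Return True only when the statement matches a known shape."""
    return query_shape(sql) in EXPECTED_SHAPES


print(is_expected("SELECT name, email FROM users WHERE name = 'alice'"))             # True
print(is_expected("SELECT name, email FROM users WHERE name = 'x' OR '1'='1'"))      # False
```

The injected statement has a different shape from anything the application normally sends, so it would be rejected even though each individual fragment looks harmless.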

There may be confusion with the difference in protection capabilities of NGFW and a specialized web application firewall. An NGFW will perform very basic mitigation of common web application attacks at the perimeter; there is no database protection. Additionally, the NGFW typically is not capable of advanced customization and configuration specific to the environment where it is deployed. This is where the web application firewall exhibits the benefit of a specialized solution designed for web application and database protection.

Web application firewalls should be considered as an important layer in the defense strategy to mitigate web application threats and provide the much needed database security in the enterprise.