Chapter 20. Ethernet Performance

“Performance” is an umbrella term that can mean different things to different people. To a network designer, the performance of an Ethernet system can range from the performance of individual Ethernet channels, to the performance of Ethernet switches, to the performance and capabilities of the entire network system.

For the users of a network, on the other hand, performance usually refers to how quickly the applications that they are using over the network respond to their commands. In this case, the performance of the Ethernet system that connects to a user’s computer is only one component in a whole set of entities that must work together to provide a good user experience.

Because this is a book about Ethernet local area networks, we will focus on the performance of the Ethernet channel and the network system. Along the way, we will also show how the performance of the network is affected by a complex set of elements that includes local servers, filesystems, cloud servers, the Internet, and the local Ethernet system, all working to provide application services for users.

The first part of this chapter discusses the performance of the Ethernet channel itself. We will examine some of the theoretical and experimental analytical techniques that have been used to determine the performance of a single Ethernet channel. Later, we discuss what reasonable traffic levels on a real-world Ethernet can look like. We also describe what kinds of traffic measurements you can make, and how to make them.

In the last part of the chapter, we show that various kinds of traffic have different response time requirements. We also show that response time performance for the user is the complex sum of the response times of the entire set of elements used to deliver application services between computers. Finally, we provide some guidelines for designing a network to achieve the best performance.

Performance of an Ethernet Channel

The performance of an individual Ethernet channel was a major topic when all Ethernet systems operated in half-duplex mode, using the CSMA/CD media access control mechanism. On a half-duplex system, all stations used the CSMA/CD algorithm to share access to a single Ethernet channel, and the performance of CSMA/CD under load was of considerable interest.

Those days are gone, and now virtually all Ethernet channels are automatically configured by the Auto-Negotiation protocol to operate in full-duplex mode, between a station and a switch port. In full-duplex mode a particular station “owns” the channel, because the Ethernet link is dedicated to supporting a single station’s connection to a switch port. There are two signal paths, one for each end of the segment, so the station and the switch port can send data whenever they like, without having to wait.

Both the station and the switch port can send data at the same time, which means that a full-duplex segment can provide twice the rated bandwidth at maximum load. In other words, a 1 Gb/s full-duplex link can provide 2 Gb/s of bandwidth, assuming that both the station and the switch port are sending the full rate of traffic in both directions simultaneously.

Performance of Half-Duplex Ethernet Channels

Ethernet systems operated as half-duplex systems for many years, and a number of simulations and analyses of those systems were published. This activity resulted in a number of myths and misconceptions about the performance of older Ethernet systems, which are discussed next. Keep in mind that we are discussing the old half-duplex model of Ethernet operation, which is rarely used anymore.

When calculating the performance of a half-duplex Ethernet channel, researchers often used simulations and analytic models based on a deliberately overloaded system. This was done to see what the limits of the channel were, and how well the channel could hold up to extreme loads.

Over time, the simulations and analytic models used in these studies became increasingly sophisticated in their ability to model actual half-duplex Ethernet channel behavior. Early simulations frequently made a variety of simplifying assumptions to make the analysis easier, and ended up analyzing systems whose behavior had nothing much to do with the way a real half-duplex Ethernet functioned. This produced some odd results, and led some people to deduce that Ethernets would saturate at low utilization levels.

Persistent Myths About Half-Duplex Ethernet Performance

Due to the incorrect results coming from simplified models, there arose some persistent myths about half-duplex Ethernet performance, chief of which was that the Ethernet channel saturated at 37% utilization. We’ll begin with a look at where this figure comes from, and why it had nothing to do with real-world Ethernets.

The 37% figure was first reported by Bob Metcalfe and David Boggs in their 1976 paper that described the development and operation of the very first Ethernet.[67] This was known as the “experimental Ethernet,” which operated at about 3 Mb/s. The experimental Ethernet frame had 8-bit address fields, a 1-bit preamble, and a 16-bit CRC field.

In this paper, Metcalfe and Boggs presented a “simple model” of performance. Their model used the smallest frame size and assumed a constantly transmitting set of 256 stations, which was the maximum supported on experimental Ethernet. Using this simple model, the system reached saturation at about 36.8% channel utilization. The authors warned that this was a simplified model of a constantly overloaded shared channel, and did not bear any relationship to normally functioning networks. However, this and subsequent studies based on the simplified model as applied to 10 Mb/s Ethernet led to a persistent myth that “Ethernet saturates at 37% load.”
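The 36.8% figure can be reproduced with a few lines of arithmetic. The sketch below assumes one common statement of the simple model: each of Q always-ready stations transmits in a given contention slot with probability 1/Q, and the smallest frame occupies the channel for exactly one slot time. The function names and the `frame_slots` parameter are illustrative and are not taken from the paper.

```python
import math


def acquisition_probability(stations: int) -> float:
    """Probability that exactly one station transmits in a contention slot,
    assuming each of `stations` transmits independently with probability 1/stations."""
    q = stations
    return (1 - 1 / q) ** (q - 1)


def simple_model_efficiency(stations: int, frame_slots: float = 1.0) -> float:
    """Fraction of channel time spent carrying frames.

    `frame_slots` is the frame duration measured in slot times (assumed 1.0
    for the smallest frame). The mean number of contention slots wasted
    before a successful acquisition is (1 - A) / A.
    """
    a = acquisition_probability(stations)
    wasted_slots = (1 - a) / a
    return frame_slots / (frame_slots + wasted_slots)


if __name__ == "__main__":
    for q in (2, 16, 256):
        print(q, round(simple_model_efficiency(q), 4))
    print("limit 1/e:", round(1 / math.e, 4))
```

Under these assumptions the efficiency for 256 stations sits just above 1/e, which is where the "37%" number comes from. Raising `frame_slots` shows how quickly the figure climbs once frames are longer than a single slot time.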

This myth about Ethernet performance persisted for years, probably because no one understood that it was merely a rough measure of what could happen if one used a very simplified model of Ethernet operation and absolute worst-case traffic load assumptions. Another possible reason for the persistence of this myth was that this low figure of performance was used by salespeople in an attempt to convince customers to buy competing brands of network technology and not Ethernet.

In any event, after years of hearing people repeat the 37% figure, David Boggs and two other researchers published a paper in 1988, entitled “Measured Capacity of an Ethernet: Myths and Reality.”[68] The objective of this paper was to provide measurements of a real Ethernet system that was being pushed very hard, which would serve as a corrective for the data generated by theoretical analysis that had been published in the past.

The three authors of the paper, Boggs, Mogul, and Kent, noted that these experiments did not demonstrate how an Ethernet normally functions. Ethernet, in common with many other LAN technologies, was initially designed to support “bursty” traffic instead of a constant high traffic load. In normal operation, many half-duplex Ethernet channels operated at fairly low loads, averaged over a five-minute period during the business day, interrupted by peak traffic bursts as stations happened to send traffic at approximately the same time.

In the Boggs, Mogul, and Kent paper, a population of 24 workstations was programmed to constantly flood a 10 Mb/s Ethernet channel in several experimental trials, each using a different frame size, and some using mixed frame sizes. The results showed that the Ethernet channel was capable of delivering data at very high rates of channel utilization even when the 24 stations were constantly contending for access to the channel. For small frames sent between a few stations, channel utilization was as high as 9 Mb/s, and for large frames utilization was close to the maximum of 10 Mb/s (100% utilization).

With 24 stations running full blast, there was no arbitrary saturation point of 37% utilization (confirming what LAN managers who had been using Ethernet for years already knew). Nor did the system collapse with a number of stations offering a high constant load, which had been another popular myth. Instead, the experiments demonstrated that a half-duplex Ethernet channel could transport high loads of traffic among this set of stations in a stable fashion and without major problems.

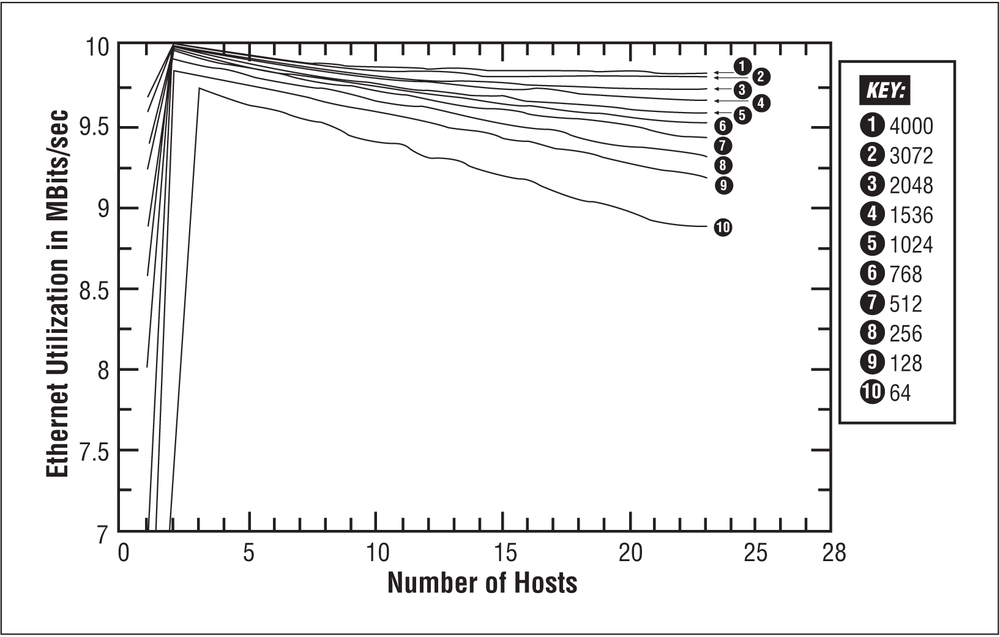

Figure 20-1 shows a graph of Ethernet utilization from the Boggs, Mogul, and Kent paper[69] that illustrates the maximum channel utilization achieved when up to 24 stations were sending frames continuously, using a variety of frame sizes. The frame sizes for each graph are numbered from 1 through 10, and range from 64 bytes (graph number 10) on up to 4,000 bytes (graph number 1). Any frame larger than 1,518 bytes exceeds the maximum allowed in the Ethernet specification, but the larger frame sizes were included in this test to see what would happen when the channel was stress-tested in this way. The graph shows that even when 24 stations were constantly contending for access to the channel, and all stations were sending small (64-byte) frames, channel utilization stayed quite high, at around 9 Mb/s.

The Boggs, Mogul, and Kent paper also provides some guidelines for network design based on their analysis. Two points they made are worth restating here:

- Don’t put too many stations on a single half-duplex channel (and therefore in a single collision domain). For best performance, use switches and routers to segment the network into multiple Ethernet segments.

- Avoid mixing heavy use of real-time applications with bulk-data applications. High traffic loads on the network caused by bulk-data applications produce higher transmission delays, which will negatively affect the performance of real-time applications. (We will discuss this issue in more detail later in this chapter.)

Simulations of Half-Duplex Ethernet Channel Performance

The Boggs, Mogul, and Kent paper noted that some of the theoretical studies that had been made of Ethernet performance were based on simulations that did not appear to accurately model Ethernet behavior. Building an accurate simulator of Ethernet behavior is difficult, because transmissions on an Ethernet are not centrally controlled in any way; instead, they happen more or less randomly, as do collisions.

In 1992, Speros Armyros published a paper showing the results of a new simulator for Ethernet that could accurately duplicate the real-world results reported in the Boggs, Mogul, and Kent paper.[70] This simulator made it possible to try out some more stress tests of the Ethernet system.

These new tests replicated the results of the Boggs, Mogul and Kent paper for 24 stations. They also showed that under worst-case overload conditions, a single Ethernet channel with over 200 stations continually sending data would behave rather poorly, and access times would rapidly increase. Access time is the time it takes for a station to transmit a packet onto the channel, including any delays caused by collisions and by multiple packets backing up in the station’s buffers due to congestion of the channel.

Further analysis of an Ethernet channel using the improved simulator was published by Mart Molle in 1994.[71] Molle’s analysis showed that the Ethernet binary exponential backoff (BEB) algorithm was stable under conditions of constant overload on Ethernet channels with station populations under 200. However, once the set of stations increased much beyond 200, the BEB algorithm began to respond poorly. In this situation (under conditions of constant overload), the access time delays encountered when sending packets can become unpredictable, with some packets encountering rather large delays.
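For readers who have not seen the backoff calculation written out, the following is a minimal sketch of the truncated binary exponential backoff used by half-duplex Ethernet. It is an illustration only, not a model of Molle's simulator: the constant and function names are assumptions made for this example, and the sketch covers just the choice of delay, not carrier sense or collision detection.

```python
import random

BACKOFF_LIMIT = 10   # the exponent stops growing after 10 collisions
ATTEMPT_LIMIT = 16   # the frame is discarded after 16 failed attempts


def backoff_slots(collision_count, rng=random):
    """Return the number of slot times to wait after the nth collision.

    After collision n, the station picks a uniformly random integer r with
    0 <= r < 2**k, where k = min(n, BACKOFF_LIMIT).
    """
    if not 1 <= collision_count <= ATTEMPT_LIMIT:
        raise ValueError("collision_count must be between 1 and 16")
    exponent = min(collision_count, BACKOFF_LIMIT)
    return rng.randrange(2 ** exponent)


if __name__ == "__main__":
    rng = random.Random(1)
    for n in (1, 2, 3, 10, 16):
        print(f"collision {n:2d}: wait {backoff_slots(n, rng)} slot times")
```

Because the range doubles with each collision, a station that has just succeeded competes with a small range while its neighbors are drawing from much larger ones, which is the root of the capture effect discussed next.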

Molle also noted that the capture effect, described in Appendix B, can actually improve the performance of an Ethernet channel for short bursts of small packets. However, the capture effect also leads to widely varying response times when trains of long packets briefly capture the channel. Finally, Molle’s paper described a new backoff algorithm that he created to resolve these and other problems, called the Binary Logarithmic Arbitration Method (BLAM). BLAM was never formally adopted by the Ethernet standard, for the reasons explained in Appendix B.

Molle noted that constantly overloaded channels are not a realistic model of real-world usage. What the network users are interested in is response time, which includes the typical delay encountered when transmitting packets. Highly congested channels exhibit very poor response times, which users find unacceptable.

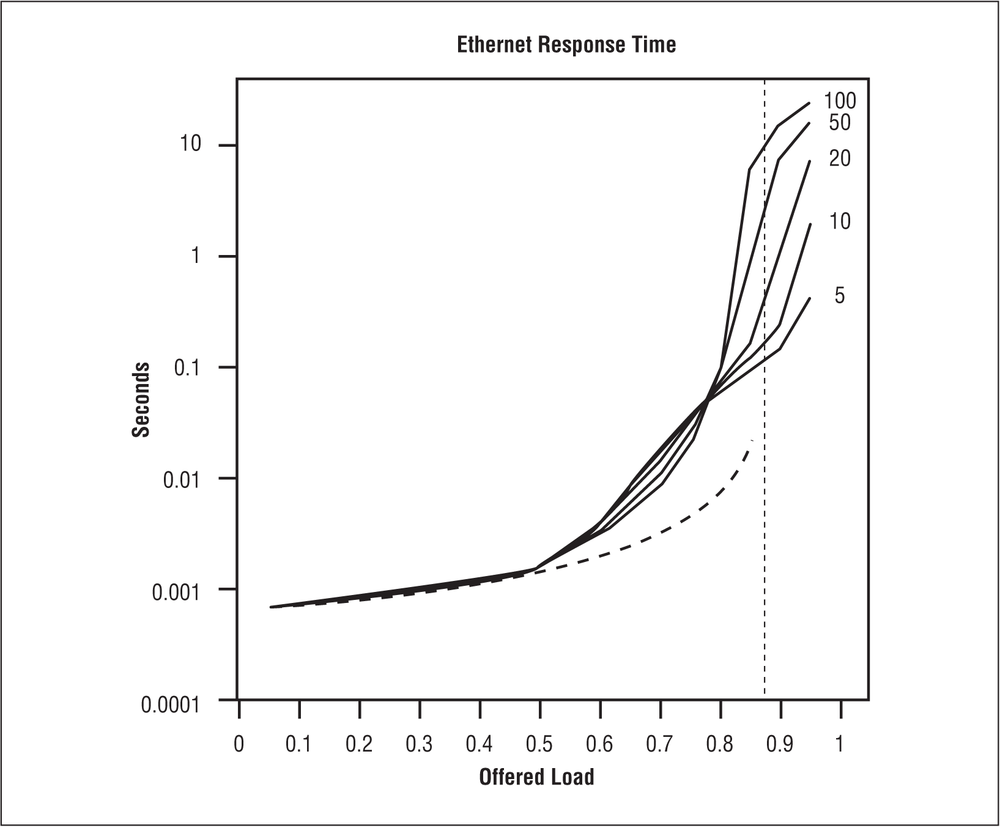

Figure 20-2 shows a graph from Molle’s paper, displaying the effects that channel load and the number of stations (hosts) have on response time.[72] The chart shows that the average channel response time is good until the channel is seeing a constant load of more than 50%. The region from 50% constant load to about 80% constant load shows increased delays, and above 80% constant load the delays increase rapidly.

Another element is the variation in channel access times, known as jitter. For example, real-time traffic carrying audio information will work best on an uncongested channel that provides rapid response times. Heavily loaded channels can result in excessive delay and jitter, making the audio bursty and difficult to understand. Therefore, excessive jitter will be unacceptable to the users of real-time applications.

The Molle study shows that there are three major operating regimes for an Ethernet half-duplex channel:

- Light load

  Up to 50% average utilization measured over a one-second sample time. At this level of utilization, the network responds rapidly. Stations can send packets with very low access delays of about 0.001 seconds or less on a 10 Mb/s channel. The response of real-time applications will be acceptable.

- Moderate to heavy load

  From 50 to 80% average utilization measured over a one-second sample period. At this point, the channel begins to show larger delays in the range of 0.01 to 0.1 seconds on a 10 Mb/s channel.

  This kind of transmission delay will not be noticeable to applications such as web browsers, or when accessing file servers or databases. However, transmission delays may be large enough for some packets that real-time applications could experience negative effects from variable delay. Short-term traffic bursts into this region should not be a problem, but longer-term load averages at this rate of utilization are not recommended for best performance.

- Very high load

  From 80 to 100% average utilization measured over a one-second sample period. At this rate, the transmission delays can get quite high, and the amount of jitter can get very large. Access delays of up to a second are possible on 10 Mb/s channels, while even longer delays have been predicted in simulations. Short-term traffic bursts into this region should not be a problem, but long-term average loads at this rate would indicate a seriously overloaded channel.
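The three regimes can be expressed as a small classifier for one-second utilization samples from a half-duplex channel. This is a sketch under stated assumptions: the function name is hypothetical, and assigning the boundary values of exactly 50% and 80% to the lower regime is a choice made here, because the text does not say which side they belong to.

```python
def classify_half_duplex_load(utilization_pct: float) -> str:
    """Map a one-second average utilization (percent) to an operating regime."""
    if not 0 <= utilization_pct <= 100:
        raise ValueError("utilization must be between 0 and 100 percent")
    if utilization_pct <= 50:
        return "light"
    if utilization_pct <= 80:
        return "moderate to heavy"
    return "very high"


if __name__ == "__main__":
    for sample in (12.0, 50.0, 65.5, 93.0):
        print(sample, "->", classify_half_duplex_load(sample))
```

A single sample in the upper regimes is not a concern by itself; what matters is how long the channel stays there.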

The lessons learned in these studies make it clear that users trying to get work done on a constantly overloaded channel will perceive unacceptable delays in their network service. Although constant high network loads may feel like a network “collapse” to the users, the network system itself is in fact still working as designed; it’s just that the load is too high to rapidly accommodate all of the people who wish to use the network.

The delays caused by congestion in communications channels are somewhat like the delays caused by congested highways during commute times. Despite the fact that you are forced to endure long delays due to a traffic overload of commuters, the highway system isn’t broken; it’s just too busy. An overloaded Ethernet channel has the same problem.

Measuring Ethernet Performance

Now that we’ve seen the analysis of half-duplex Ethernet channels, let’s look more closely at how to monitor a normally operating modern Ethernet system, where virtually all channels are operating in full-duplex mode. Full-duplex channels provide dedicated signal paths for the devices at each end of the channel, allowing them to send data whenever they like. As a result, the channel can be loaded to 100% without affecting the channel access time for a station.

Monitoring the total amount of traffic on a given Ethernet link requires a device that operates in promiscuous receive mode, reading in every frame seen on the channel. Looking at every frame with a general-purpose computer requires a network interface and computer system that can keep up with high frame rates.

In the older Ethernet systems based on coaxial cables and shared channels, you could attach a monitor to the cable and see all of the traffic from all stations on that channel. These days it’s considerably harder to monitor an Ethernet system, because Ethernets are built using individual Ethernet segments connected to switch ports.

Therefore, you need to monitor the switch itself, or use a monitoring device connected to a packet mirror or SPAN port on the switch. Higher-cost switches also have built-in management, which allows you to monitor the utilization and other statistics on each port as well as for the entire device. One useful method for collecting utilization and other statistics is provided by the Simple Network Management Protocol (SNMP). Using SNMP-based management software is discussed in more detail in Chapter 21.

Measurement Time Scale

Before you set out to measure the network load on a real network, you need to determine what time scale to use. Many network analyzers are set by default to look at the load on an Ethernet averaged over a period of one second. One second may not sound like a long period of time, but a 10 Mb/s Ethernet channel operating flat out can theoretically transmit 14,880 frames in that one second. A Gigabit Ethernet system can send a hundred times that amount, or 1,488,000 frames per second, and a 100 Gigabit system can send 148,800,000 frames per second.

By looking at the load on a network in one-second increments, you can generate a set of data points that can be used to draw a graph of the one-second average loads over time. This graph will rise and fall, depending on the average network traffic seen during the one-second sample period.

The one-second sample time can be useful when looking at the performance of a network port in real time. However, most network systems consist of more than one network segment, and most network managers have better things to do than spend all day watching the traffic loads on Ethernet ports. Management software exists that will automatically create reports of network utilization and store them in a database. These reports are used to create charts that show traffic over the busy hours of a workday, or the entire day, week, or month.

Because the traffic rate on a LAN can vary significantly over time, you really need to look at the average utilization over several time periods to get an idea of the general loads seen on the network. The traffic on most Ethernet ports tends to be bursty, with large, short-lived peaks. Peak network loads can easily go to 80, 90, or 100% measured over a one-second interval without causing any problems for a typical mix of applications.
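The effect of the time scale is easy to demonstrate with a made-up trace. In the sketch below, the trace values and the helper name `window_averages` are hypothetical: 300 one-second samples that sit at 2% utilization except for a ten-second burst at full load.

```python
def window_averages(samples, window):
    """Average consecutive, non-overlapping groups of `window` samples."""
    averages = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        averages.append(sum(chunk) / len(chunk))
    return averages


# Hypothetical trace: five minutes of one-second utilization samples (percent).
trace = [2.0] * 300
trace[100:110] = [100.0] * 10   # a ten-second burst at full load

one_second_peak = max(trace)
one_minute = window_averages(trace, 60)
five_minute = window_averages(trace, 300)

if __name__ == "__main__":
    print("one-second peak:", one_second_peak)
    print("one-minute averages:", [round(v, 1) for v in one_minute])
    print("five-minute average:", round(five_minute[0], 1))
```

The same traffic reads as 100% at the one-second scale and as a little over 5% when averaged across the full five minutes, which is why a single time scale never tells the whole story.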

For an Ethernet system, the minimal set of things you might consider keeping track of includes:

- The utilization rate of the network ports over a series of time scales.

- The rate of broadcasts and multicasts. Excessive rates of broadcasts can affect station performance because every station must read in every broadcast frame and decide what to do with it.

- Basic error statistics, including cyclic redundancy check (CRC) errors, oversize frames, and so on.

The time scales you choose for generating utilization figures are a matter of debate, because no two networks are alike and every site has a different mix of applications and users. Network managers often choose to create baselines of traffic that extend over several time scales. With the baselines stored, they can then compare new daily reports against previous reports to make sure that the Ethernet ports are not staying at high loads during the times that are important to the users.

Most networks are designed in a hierarchy, with access switches connected to core switches using uplink ports. Monitoring those uplink ports is critical, because they are the bottlenecks for your network system. Very high loads on those uplinks that last for significant periods of time during the workday could cause unacceptable delays.

For example, filesystem backups can take a fair amount of time to perform and often place a heavy demand on your network. To ensure that your users have priority access to the network, it’s recommended that you perform backups at night, when the likelihood of user traffic on the network is minimal.

Constant monitoring also provides evidence of overload that can be useful when responding to complaints about network performance. Given the wide variability in application mix, number of users, and so on, it is quite difficult to provide any rules of thumb when it comes to network load. Some network managers report that they regard network traffic as approaching excessive load levels when:

- Uplink utilization averaged over the eight-hour workday exceeds 20%.

- Average utilization during the busiest hour of the day exceeds 30%.

- Fifteen-minute averages exceed 50% at any time during the workday.

Notice that these recommendations are not based on the three operating regimes derived from Molle’s paper. The three operating regimes that Molle studied are based on one-second average loads on half-duplex shared channels. In the traffic load levels just shown, an eight-hour average utilization that reaches 20% is a heavily smoothed graph, which does not show the short-term peaks. During the business day, we can assume that transient peaks went much higher than 20%. More importantly, we can assume that when the long-term average gets that high, the peak traffic loads may have been lasting for long periods, producing unacceptable response times for the users.
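The three rules of thumb can be checked mechanically against a day of stored samples. The following is a sketch only: it assumes you already have one-minute average utilization values (in percent) for an eight-hour workday, and the function names and dictionary keys are invented for this example. The thresholds are the ones reported above, not universal limits.

```python
def max_sliding_average(samples, window):
    """Highest average over any run of `window` consecutive samples."""
    if window > len(samples):
        raise ValueError("window is longer than the sample list")
    total = sum(samples[:window])
    best = total
    for i in range(window, len(samples)):
        total += samples[i] - samples[i - window]   # slide the window by one
        best = max(best, total)
    return best / window


def excessive_load_flags(minute_samples):
    """Apply the three rule-of-thumb thresholds to one-minute averages."""
    workday_average = sum(minute_samples) / len(minute_samples)
    return {
        "workday_average_over_20": workday_average > 20,
        "busiest_hour_over_30": max_sliding_average(minute_samples, 60) > 30,
        "fifteen_minutes_over_50": max_sliding_average(minute_samples, 15) > 50,
    }


if __name__ == "__main__":
    # Hypothetical workday: 480 minutes at 10%, with a 20-minute spell at 90%.
    day = [10.0] * 480
    day[200:220] = [90.0] * 20
    print(excessive_load_flags(day))
```

In the hypothetical day shown, the eight-hour average stays well under 20% even though the busiest hour and the worst fifteen minutes both cross their thresholds, which illustrates how much a long average can hide.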

There are many ways to generate graphs and reports of network utilization. Table 20-1 displays some raw data collected with an SNMP-based management program. These samples were collected every 30 minutes for the total number of packets, octets, broadcasts, and multicasts seen on an uplink port.

| Timestamp | Packets | Octets | Broadcast | Multicast | Utilization (%) |
|---|---|---|---|---|---|
| 09:42:10 | 138243 | 41326186 | 882 | 383 | 2 |
| 10:12:10 | 161295 | 51701901 | 828 | 397 | 2 |
| 10:42:10 | 168389 | 58580988 | 868 | 391 | 3 |
| 11:12:10 | 2775468 | 559286267 | 1283 | 280 | 25 |
| 11:42:10 | 604774 | 111504337 | 1231 | 275 | 5 |
| 12:12:10 | 836423 | 126693664 | 1218 | 415 | 6 |
| 12:42:10 | 164848 | 59062247 | 1117 | 500 | 3 |
| 13:12:10 | 221535 | 94692849 | 1343 | 980 | 4 |

The average utilization on the channel over the 30-minute period is also collected. Notice that during the 30-minute period from 10:42 to the next sample at 11:12, the average utilization was 25%. This average is high for such a long period of time in the middle of the workday, and network users may have complained about poor response time during this period. A shorter sample time would very likely have shown much higher peak loads lasting for significant periods of time, which could cause poor response times and generate complaints about network performance.
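An average utilization figure of this kind can be derived from the octet count alone. The sketch below treats each row's octet count as the total for its 30-minute interval and assumes a 10 Mb/s link. The link speed is not stated in the text; it is an assumption that happens to reproduce the rounded Utilization column. The calculation ignores preamble and interframe gap, and the function name is hypothetical.

```python
def average_utilization_pct(octets, interval_s=1800, link_bps=10_000_000):
    """Percent of link capacity used by `octets` bytes sent in `interval_s` seconds."""
    bits_sent = octets * 8
    capacity_bits = interval_s * link_bps
    return 100 * bits_sent / capacity_bits


# Octet counts copied from three rows of Table 20-1.
octets_by_sample = {
    "09:42:10": 41326186,
    "11:12:10": 559286267,
    "12:12:10": 126693664,
}

if __name__ == "__main__":
    for timestamp, octets in octets_by_sample.items():
        print(timestamp, round(average_utilization_pct(octets)), "%")
```

If your management software reports cumulative counters rather than per-interval totals, subtract the previous sample from the current one before applying the formula.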

When collecting utilization information, it’s up to you to determine what load levels are acceptable to your users, given the application mix at your site. Note that short-term averages may reach 100% load for a few seconds without generating complaints. Short-term peaks such as this can happen when large file transfers cause high loads for a brief period. For many applications, the users may never notice the short-term high loads. However, if the network is being used for real-time applications, then even relatively short-term loads could cause problems.

When the reports for an Ethernet begin to show a number of high utilization periods, a LAN manager might decide to keep a closer eye on the network. You want to see whether the traffic rates are stable, or if the loads are increasing to the point where they may affect the operation of the network applications being used. High utilization can be relieved by increasing the speed of the Ethernet links, especially the uplinks between switches.

Data Throughput Versus Bandwidth

The analytical studies we’ve seen so far were interested in measuring total channel utilization, which includes all application data being sent as well as the framing bits and other overhead it takes to send the data. This is useful if you’re looking at the theoretical bandwidth of an Ethernet channel. On the other hand, most users want to know how much data they can get through the system. This is sometimes referred to as throughput. Note that bandwidth and throughput are different things.

Bandwidth is a measure of the capacity of a link, typically provided in bits per second (bps). The bandwidth of Ethernet channels is rated at 10 million bits per second (10 Mb/s), 100 million bits per second (100 Mb/s), 1 billion bits per second (1 Gb/s), and so on. Throughput is the rate at which usable data can be sent over the channel. While an Ethernet channel may operate at 10 Mb/s, the throughput in terms of usable data will be less due to the number of bits required for framing and other channel overhead.

On an Ethernet channel, it takes a certain number of bits, organized as an Ethernet frame, to carry data from one computer to the other. The Ethernet system also requires an interframe gap between frames, and a frame preamble at the front of each frame. The framing bits, interframe gap, and preamble constitute the necessary overhead required to move data over an Ethernet channel. As you might expect, the smaller the amount of data carried in the frame, the higher the percentage of overhead. Another way of saying this is that frames carrying large amounts of data are the most efficient way to transport data over the Ethernet channel.

Maximum data rates on Ethernet

We can determine the maximum data rate that a single station can achieve by using the sizes of the smallest and largest frames to compute the maximum throughput of the system. Our frame examples include the widely used type field, because frames with a type field are easiest to describe. The IEEE 802.3 frame format with 802.2 logical link control (LLC) fields will have slightly lower performance, due to the use of a few bytes of data in the data field that are required to carry the LLC information. The numbers we come up with for the 10 Mb/s channel can simply be multiplied by 10 for a 100 Mb/s Fast Ethernet system, by 100 for Gigabit Ethernet, and so on. Keep in mind that a link operating in full-duplex mode can support twice the data rates shown here, because the devices on both ends of the link can transmit simultaneously.

The first column in Table 20-2 shows the data size (in bytes) being carried in each frame, with the total frame size in parentheses. The frame size counts the address, type, data, and FCS fields, but not the preamble. The non-data fields include 64 bits of preamble, 96 bits of source and destination address, 16 bits of type field, and 32 bits for the frame check sequence (FCS) field, which carries the CRC.

| Data field size in bytes (frame size) | Maximum frames/sec | Maximum data rate (bits/sec) |
|---|---|---|
| 46 (64) | 14,880 | 5,475,840 |
| 64 (82) | 12,254 | 6,274,048 |
| 128 (146) | 7,530 | 7,710,720 |
| 256 (274) | 4,251 | 8,706,048 |
| 512 (530) | 2,272 | 9,306,112 |
| 1,024 (1,042) | 1,177 | 9,641,984 |
| 1,500 (1,518) | 812 | 9,744,000 |

The interframe gap on a 10 Mb/s system is 9.6 microseconds, which is equivalent to 96 bit times. Total it all up, and we get 304 bit times of overhead required for each frame transmission. With that in mind, we can now calculate, theoretically, the number of frames that could be sent for a range of data field sizes—beginning with the minimum data size of 46 bytes, and ending with the maximum of 1,500 bytes. The results are shown in the second column of the table.

The calculations provided in Table 20-2 are made using some simplifying assumptions, as they say in the simulation and analysis trade. These assumptions are that one station sends back-to-back frames endlessly at these data sizes, and that another station receives them. This is obviously not a real-world situation, but it helps us provide the theoretical maximum data throughput that can be expected of a single 10 Mb/s Ethernet channel.

At 14,880 frames per second, the Ethernet channel is at 100% load. However, Table 20-2 shows that, while operating at 100% load, a 10 Mb/s channel moving frames with only 46 bytes of data in them can deliver a maximum of 5,475,840 bits per second of data throughput in one direction, and twice that if both directions are operating at the maximum rate on a full-duplex link. This is only about 54.7% efficiency in terms of data delivery.

If 1,500 bytes of data are sent in each frame, then an Ethernet channel operating at 100% constant load could deliver 9,744,000 bits per second of usable data for applications. This is over 97% efficiency in channel utilization. These figures demonstrate that, while the bandwidth of an Ethernet channel may be 10 Mb/s, the throughput in terms of usable data sent over that channel can vary quite a bit. It all depends on the size of the data field in the frames, and the number of frames per second.
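The arithmetic behind these figures is short enough to write out. The sketch below uses the overhead values given in the text (64 + 96 + 16 + 32 bits of framing plus a 96-bit interframe gap) and truncates the frame rate to whole frames per second. The function names and the `link_bps` parameter are illustrative assumptions.

```python
PREAMBLE_BITS = 64
ADDRESS_BITS = 96          # source plus destination address
TYPE_BITS = 16
FCS_BITS = 32
INTERFRAME_GAP_BITS = 96
OVERHEAD_BITS = (PREAMBLE_BITS + ADDRESS_BITS + TYPE_BITS
                 + FCS_BITS + INTERFRAME_GAP_BITS)   # 304 bit times


def max_frame_rate(data_bytes, link_bps=10_000_000):
    """Whole frames per second when one station sends back-to-back frames."""
    if not 46 <= data_bytes <= 1500:
        raise ValueError("data field must be 46 to 1,500 bytes")
    return link_bps // (data_bytes * 8 + OVERHEAD_BITS)


def max_data_rate(data_bytes, link_bps=10_000_000):
    """Usable data bits per second in one direction."""
    return max_frame_rate(data_bytes, link_bps) * data_bytes * 8


def efficiency_pct(data_bytes, link_bps=10_000_000):
    """Usable data rate as a percentage of the link bandwidth."""
    return 100 * max_data_rate(data_bytes, link_bps) / link_bps


if __name__ == "__main__":
    for size in (46, 64, 128, 256, 512, 1024, 1500):
        print(size, max_frame_rate(size), max_data_rate(size),
              round(efficiency_pct(size), 1))
```

Passing a different `link_bps` scales the same calculation to faster links. The results will differ slightly from a simple multiplication of the 10 Mb/s figures because the truncation to whole frames happens at the higher rate.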

Network performance for the user

Of course, frame size and data throughput are not the entire picture either. As far as the user is concerned, network throughput and response time are affected by the whole set of elements in the path between computers that communicate with one another. All of the following can impact the user’s perception of network performance:

- The performance of the high-level network protocol software running on the user’s computer.

- The overhead required by the fields in high-level protocol packets that are carried in the Ethernet frames.

- The performance of the application software being used. File-sharing performance in the face of occasional dropped packets can fall drastically depending on the amount of time required by application-level timeouts and retransmissions.

- The performance of the user’s computer, in terms of CPU speed, amount of random access memory (RAM), backplane bus speed, and disk I/O speed. The performance of a bulk-data-transfer operation such as file transfer is often limited by the speed of the user’s disk drive. Another limit is the speed at which the computer can move data from the network interface onto the disk drive.

- The performance of the network interface installed in the user’s computer. This is affected by the amount of buffer memory that the interface is equipped with, as well as the speed of the interface driver software.

As you can see, there are many elements at play. The question most network managers want answered is: “What traffic levels can the network operate at and still provide adequate performance for the users?” However, it’s quite clear that this is not an easy question to answer.

Some applications require very rapid response times, while others are not that delay-sensitive. The size of the packets sent by the applications makes a big difference in the throughput that they can achieve over the network channel. Further, mixing delay-sensitive and bulk-data applications may or may not work, depending on how heavily loaded the channel is.

Performance for the network manager

So how does the network manager decide what to do? There’s still no substitute for common sense, familiarity with your network system, and some basic monitoring tools. There’s not much point in waiting for someone to develop a magic program that understands all possible variables that affect network behavior. Such a program would have to know all the details about the computers you are using, and how well they perform. Perhaps it would also automatically analyze your application mix and load profile and call you on the telephone to report problems.

Until the magic program arrives, you can do some basic monitoring yourself. For example, you could develop some baselines for your daily traffic so that you know how things are running today. Then you can compare future reports to the baselines to see how well things are working on a day-to-day basis.
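A baseline comparison of this kind can be sketched in a few lines. This is a minimal illustration, not a monitoring tool: it assumes you have already collected utilization samples (as percentages) by some means, and the function names and the three-standard-deviation tolerance are arbitrary choices made for the example.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize historical utilization samples as (mean, standard deviation)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to build a baseline")
    return mean(samples), stdev(samples)


def exceeds_baseline(value, baseline, tolerance=3.0):
    """Return True if a new reading is well above the normal range.

    `tolerance` is the number of standard deviations above the mean
    that is still treated as normal (an assumed, adjustable threshold).
    """
    average, spread = baseline
    return value > average + tolerance * spread


if __name__ == "__main__":
    # Hypothetical daily peak utilization readings, in percent.
    history = [10, 12, 11, 13, 9]
    baseline = build_baseline(history)
    for reading in (14, 20):
        print(reading, exceeds_baseline(reading, baseline))
```

In practice you would probably keep separate baselines for different times of day or days of the week, because normal traffic during a nightly backup looks very different from normal traffic at noon.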

Of course, Ethernets can run without being watched very closely, and small Ethernets may not justify monitoring at all. On a small home network supporting a few stations, you probably don’t care what the load is as long as things are working. The same is probably true for many small office networks. Even large Ethernet systems—spanning an entire building or set of buildings—may have to run without analysis.

If you have no budget or staff for monitoring, then you may have very little choice except to wait for user complaints and then wade in with some analysis equipment to try to figure out what is going on. Of course, this will have a severe impact on the reliability and performance of your network, but you get what you pay for.

The amount of time, money, and effort you spend on monitoring your network is entirely up to you. Small networks won’t require much monitoring, beyond keeping an eye on the error stats or load lights of your network equipment. Some switches can provide management information by way of a management interface, as described in Chapter 21. This makes it easier to monitor the error counts on the switch without investing very much money in management software. Larger sites that depend on their networks for their business operations could reasonably justify the expenditure of a fair amount of resources on monitoring. There are also companies that will monitor your network devices for you, for a fee.

Network Design for Best Performance

Many network designers would like to know ahead of time exactly how much bandwidth they will need to provide, but as we’ve shown in this chapter, it’s not that easy. Network performance is a complex subject with many variables, and it’s a distinctly nontrivial task to model a network system sufficiently well that you can predict what the traffic loads will be like.

Instead, most network managers take the same approach that highway designers take, which is to provide excess capacity for use during peak times and to accommodate some amount of future growth in the number of stations being supported. The cost of Ethernet equipment is low enough that this is fairly easy to do.

Providing extra bandwidth helps to ensure that a user can move a bulk file quickly when she needs to. Extra bandwidth also helps ensure that delay-sensitive applications will work acceptably well. In addition, once a network is installed, it attracts more computers and applications like ants to a picnic, so extra bandwidth always comes in handy.

Switches and Network Bandwidth

Switches provide you with multiple Ethernet channels, and make it possible to upgrade those channels to higher speeds of operation. Each port on a switch can operate at a different speed, as needed, and is capable of delivering the full bandwidth of the channel. Examples of switch configurations are provided in Chapter 19. For example, you can link stacks of switches together to create larger Ethernet systems.

Growth of Network Bandwidth

Today, all computers in the workplace are connected to networks, and everyone in the workplace requires a computer and a network connection. Huge numbers of network applications are in use, ranging from applications that send short text messages to high-definition video. This proliferation of network applications is leading to a constantly increasing appetite for more bandwidth.

The incredibly rapid growth of the Internet has had a major impact on the traffic flow through local networks. In the past, many computing resources were local to a given site. When major resources were local to a workgroup or to a building, network managers could depend on the 80/20 rule of thumb, which stated that 80% of traffic on a given network system would stay local, and 20% would leave the local area for access to remote resources.

With the growth of Internet-based applications, the 80/20 rule has been inverted. As a result of the Internet and the development of corporate intranets, a large amount of traffic is being exchanged with remote resources. This can place major loads on backbone network systems, as traffic that used to stay local is now being sent over the backbone system to reach intranet servers, Internet resources, and cloud-based services.
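To see why inverting the 80/20 rule matters for the backbone, consider a rough calculation. The sketch below is only illustrative: the station count and per-station traffic figure are invented, and the model assumes that every bit of nonlocal traffic crosses the backbone exactly once.

```python
def backbone_load_mbps(stations: int,
                       avg_mbps_per_station: float,
                       local_fraction: float) -> float:
    """Estimate the traffic offered to the backbone by a group of stations.

    `local_fraction` is the share of traffic that stays on the local
    network (0.8 under the old 80/20 rule of thumb).
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return stations * avg_mbps_per_station * (1.0 - local_fraction)


if __name__ == "__main__":
    # Hypothetical workgroup: 100 stations averaging 2 Mb/s each.
    for local in (0.8, 0.2):
        load = backbone_load_mbps(100, 2.0, local)
        print(f"{local:.0%} local: about {load:.0f} Mb/s on the backbone")
```

With the same stations and the same total traffic, moving from 80 percent local to 20 percent local quadruples the load offered to the backbone in this simple model.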

Changes in Application Requirements

Not only is traffic increasing, but multimedia applications that deliver streaming audio and video to the user are in common use. These applications place serious demands on network response time. For example, excessive delay and jitter can cause problems with real-time multimedia applications, leading to breakups in the audio and to jerky response on video displays.

Multimedia applications are currently undergoing rapid evolution. Fortunately, modern multimedia applications are designed for delivery over the Web. These applications typically expect to encounter network congestion and packet loss on the Internet. Therefore, they use sophisticated data compression and buffering techniques and other approaches to reduce the amount of bandwidth they require, and to continue working in the presence of slow response times and high rates of jitter. Because of this design, these types of multimedia applications will very likely perform quite well even on heavily loaded campus Ethernets.

Designing for the Future

About the best advice anyone can give to a network designer is to assume that you will need more bandwidth, and probably sooner than you expect. Network designers should:

- Plan for future growth and upgrades. The computer business in general, and networking in particular, is always undergoing rapid evolution. Assume that you are going to need more bandwidth when you buy equipment, and buy the best that you can afford today. Expect to upgrade your equipment in the future. While no one likes spending money on upgrades, it is a necessity when technology is evolving rapidly.

- Buy equipment with an eye to the future. Hardware evolution has become quite rapid, and hardware life cycles are becoming shorter. Beware of products that are at the end of their product life cycle. Try to buy products that are modular and expandable. Investigate a vendor’s track record when it comes to upgrades and replacing equipment. Look for “investment protection” plans that provide a trade-in discount when upgrading.

- Be proactive. Keep an eye on your network utilization, and regularly store data samples to provide the information you need for trend analysis and planning. Upgrade your network equipment before the network reaches saturation. A business plan, complete with utilization graphs showing the upward trend in traffic, will go a long way toward convincing management at your site of the need for new equipment.
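The trend analysis suggested in the last item can be as simple as fitting a straight line to stored utilization samples and seeing where it crosses a planning threshold. The following is a minimal sketch under that assumption. Real traffic growth is rarely linear, and the sample data, function names, and 40 percent threshold are all invented for illustration.

```python
def fit_trend(samples):
    """Least-squares line through (day, utilization_percent) samples.

    Returns (slope, intercept), where slope is percent per day.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one day")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def day_reaching(threshold, samples):
    """Day number at which the fitted line reaches `threshold`.

    Returns None if utilization is flat or falling.
    """
    slope, intercept = fit_trend(samples)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


if __name__ == "__main__":
    # Hypothetical monthly readings: (day number, average busy-hour utilization %).
    history = [(0, 10.0), (30, 13.0), (60, 16.0), (90, 19.0)]
    print(day_reaching(40.0, history))
```

A projection like this is only a planning aid, but a graph of the stored samples with the fitted line drawn through them is the kind of evidence that helps make the case for an upgrade.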

[67] Robert M. Metcalfe and David R. Boggs, “Ethernet: Distributed Packet Switching for Local Computer Networks,” Communications of the ACM 19:5 (July 1976): 395–404.

[68] David R. Boggs, Jeffrey C. Mogul, and Christopher A. Kent, “Measured Capacity of an Ethernet: Myths and Reality,” Proceedings of the SIGCOMM ’88 Symposium on Communications Architectures and Protocols (August 1988): 222–234.

[69] Boggs, Mogul, and Kent, “Measured Capacity of an Ethernet,” Figure I-1, p. 24, used by permission.

[70] Speros Armyros, “On the Behavior of Ethernet: Are Existing Analytic Models Accurate?” Technical Report CSRI-259, February 1992, Computer Systems Research Institute, University of Toronto, Toronto, Canada.

[71] Mart M. Molle, “A New Binary Logarithmic Arbitration Method for Ethernet,” Technical Report CSRI-298, April 1994 (revised July 1994), Computer Systems Research Institute, University of Toronto, Toronto, Canada.

[72] Molle, “A New Binary Logarithmic Arbitration Method for Ethernet,” Figure 5, p. 13, used by permission.