Chapter 14. Red Hat Testing

Visual Regression in Action

PhantomCSS has been my go-to tool for the past few years because it provides component-based comparison, with a headless browser and scripting library that can be integrated with my current build tools. So let me walk you through the setup of PhantomCSS and how we are currently using it at Red Hat.

The Testing Tools

PhantomCSS is a powerful combination of three different tools:

- PhantomJS is a headless WebKit browser that allows you to quickly render web pages, and most importantly, take screenshots of them.

- CasperJS is a navigation and scripting tool that allows you to interact with the page rendered by PhantomJS. We are able to move the mouse, perform clicks, enter text into fields, and even perform JavaScript functions directly in the DOM.

- ResembleJS is a comparison engine that can compare two images and determine if there are any pixel differences between them.

We also wanted to automate the entire process, so we pulled PhantomCSS into Grunt and set up a few custom Grunt commands to test all, or just part, of our test suite.

Setting Up Grunt

Now before you run off and download the first Grunt PhantomCSS you find on Google, I’ll have to warn you that it is awfully stale. Sadly, someone grabbed the prime namespace and then just totally disappeared. This has led to a few people taking it upon themselves to continue on with the codebase, merging in existing pull requests and keeping things current. One of the better ones is maintained by Anselm Hannemann. Here’s how you install it:

npm i --save-dev git://github.com/anselmh/grunt-phantomcss.git

With that installed, we need to do the typical Grunt things like loading the task in the Gruntfile.js:

grunt.loadNpmTasks('grunt-phantomcss');

Then set a few options for PhantomCSS, also in the Gruntfile.js. Most of these are just default:

phantomcss: {
  options: {
    mismatchTolerance: 0.05,
    screenshots: 'baselines',
    results: 'results',
    viewportSize: [1280, 800],
  },
  src: ['phantomcss.js']
},

- mismatchTolerance: We can set a threshold for finding visual differences. This helps account for antialiasing or other minor, noncritical differences.

- screenshots: Choose a folder to place baseline images in.

- results: After we run comparison tests, the results will be placed in this folder.

- viewportSize: We can always adjust the viewport size with Casper.js.

- src: This is just a path to our test file, relative to our gruntfile.
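To make the mismatchTolerance option more concrete, here is a minimal sketch of how a tolerance check could work. It is not the ResembleJS algorithm, which is more sophisticated. The function names mismatchPercentage and withinTolerance are hypothetical, and the sketch assumes that both images are flat RGBA arrays of the same size and that the tolerance is a percentage of differing pixels.

```javascript
// Hypothetical helper: percentage of pixels that differ between two
// same-sized images stored as flat RGBA arrays (4 values per pixel).
function mismatchPercentage(baseline, current) {
  if (baseline.length !== current.length) {
    throw new Error('Images must have the same dimensions');
  }
  const totalPixels = baseline.length / 4;
  if (totalPixels === 0) return 0;
  let differing = 0;
  for (let i = 0; i < baseline.length; i += 4) {
    const same =
      baseline[i] === current[i] &&
      baseline[i + 1] === current[i + 1] &&
      baseline[i + 2] === current[i + 2] &&
      baseline[i + 3] === current[i + 3];
    if (!same) differing += 1;
  }
  return (differing / totalPixels) * 100;
}

// Assumption: the tolerance is a percentage, so 0.05 means 0.05%.
function withinTolerance(baseline, current, tolerance) {
  return mismatchPercentage(baseline, current) <= tolerance;
}

// Example: a 2x2 image where one of the four pixels changed.
const white = [255, 255, 255, 255];
const black = [0, 0, 0, 255];
const baselineImage = [...white, ...white, ...white, ...white];
const currentImage = [...white, ...white, ...white, ...black];

console.log(mismatchPercentage(baselineImage, currentImage)); // 25
console.log(withinTolerance(baselineImage, currentImage, 0.05)); // false
```

A small tolerance like this lets a stray antialiased pixel through on a large screenshot while still catching any real change to the component.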

Our Test File

Next comes the phantomcss.js file, which is where Casper.js kicks in. PhantomCSS is going to spin up a PhantomJS web browser, but it is up to Casper.js to navigate to a web page and perform all of the various actions needed. We decided the best place to test our components would be inside of our style guide. It shared the same CSS with our live site, and it was a consistent target that we could count on not to change from day to day. So we start off by having Casper navigate us to that URL:

casper.start('http://localhost:9001/cta-link.html')
  .then(function () {
    phantomcss.screenshot('.cta-link', 'cta-link');
  })
  .then(function () {
    this.mouse.move('.cta-button');
    phantomcss.screenshot('.cta-link', 'cta-link-hover');
  });

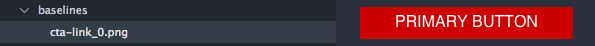

After starting up Casper at the correct page, we use JavaScript method chaining to string together a list of all the screenshots we need to take. First, we target the .cta-link and take a screenshot. We aptly call it cta-link. That will be its base filename in the baselines folder.

Figure 14-1. The baseline image for our cta-link

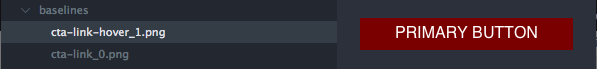

Next, we need to test our button to make sure it behaves like we’d expect when we hover over it. We can use CasperJS to actually move the cursor inside of PhantomJS so that when we take our next screenshot, named cta-link-hover, the button will be in its hovered state. Figure 14-2 shows the result.

Figure 14-2. The baseline image for our cta-link in hovered state

Making a Comparison

With those baselines in place, we are now able to run the test over and over again. If nothing has changed, images created by the subsequent tests will be identical to the baseline images and everything will pass. But if something were to change:

.cta-link {
  text-transform: lowercase;
}

The next time we ran our comparison tests, we’d see the results shown in Figure 14-3.

Figure 14-3. An example of a failing test

As expected, the change from uppercase to lowercase created a failure. Not only was the text different, but the button ended up being smaller. The third “fail” image shows us in pink which pixels were different between the two images.
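The pink "fail" image can be thought of as a third image built from the other two. The following is a rough sketch of that idea, not how ResembleJS is actually implemented. The image shape ({ width, height, data }), the PINK constant, and the pixelAt and buildDiff helpers are all assumptions made for illustration. Because a change can also resize the component, pixels that exist in only one of the two images are treated as different.

```javascript
// Hypothetical image shape: { width, height, data }, where data is a
// flat RGBA array. Illustration only, not the ResembleJS implementation.
const PINK = [255, 0, 255, 255];

// Returns the RGBA values at (x, y), or null when outside the image.
function pixelAt(image, x, y) {
  if (x >= image.width || y >= image.height) return null;
  const i = (y * image.width + x) * 4;
  return image.data.slice(i, i + 4);
}

function buildDiff(baseline, current) {
  const width = Math.max(baseline.width, current.width);
  const height = Math.max(baseline.height, current.height);
  const data = [];
  let changed = 0;
  for (let y = 0; y < height; y += 1) {
    for (let x = 0; x < width; x += 1) {
      const a = pixelAt(baseline, x, y);
      const b = pixelAt(current, x, y);
      const same = a !== null && b !== null && a.every((v, k) => v === b[k]);
      if (!same) changed += 1;
      // Unchanged pixels are copied through; changed ones are painted pink.
      data.push(...(same ? a : PINK));
    }
  }
  return { width, height, data, changed };
}

// A 2x1 baseline compared with a narrower 1x1 result.
const before = { width: 2, height: 1, data: [0, 0, 0, 255, 9, 9, 9, 255] };
const after = { width: 1, height: 1, data: [0, 0, 0, 255] };
const diff = buildDiff(before, after);

console.log(diff.changed); // 1
console.log(diff.data.slice(4)); // [ 255, 0, 255, 255 ]
```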

Running the Entire Suite

After doing this for each component (or feature) we want to test, we can run $ grunt phantomcss and it will do the following:

- Spin up a PhantomJS browser.

- Use CasperJS to navigate to a page in our style guide.

- Take a screenshot of a single component on that page.

- Interact with the page: click the mobile nav, hover over links, fill out a form, submit the form, and so on.

- Take screenshots of every one of those states.

- Compare all of those screenshots with baseline images we captured and committed when the component was created (see Figure 14-4).

- Report if all images are the same (PASS!) or if there is an image that has changed (FAIL!).

- Repeat this process for every component and layout in our library.

Figure 14-4. All of the baseline images created by our visual regression test

What Do We Do with Failing Tests?

Obviously, if you are tasked with changing the appearance of a component you are going to get failing tests, and that’s fine (see Figure 14-5). The point is that you should only be getting failing tests on the component you are working on. If you are trying to update the cta-link and you get failing tests on the cta-link and your pagination component, one of two things happened:

- You changed something you shouldn’t have. Maybe your changes were too global, or you fat-fingered some keystrokes in the wrong file. Either way, find out what changed on the pagination component, and fix it.

- On the other hand, you might determine that the changes you made to the cta-link should have affected the pagination too. Perhaps they share the same button mixin, and for brand consistency they should be using the same button styles. At this point, you’d need to head back to the story owner/designer/person-who-makes-decisions-about-these-things and ask if the changes were meant to apply to both components, and act accordingly.

Figure 14-5. Seeing failures reported on subsequent runs

Moving from Failing to Passing

Regardless of what happens with the pagination, you will still be left with a “failing” cta-link test because the old baseline is no longer correct. In this case, you would delete the old baselines and commit the new ones (see Figure 14-6). If this new look is the brand-approved one, then these new baselines need to be committed with your feature branch code so that once your code is merged in, it doesn’t cause failures when others run the test.

Figure 14-6. Commit new baseline images when your code changes the component appearance

The magic of this approach is that at any given time, every single component in your entire system has a “gold standard” image that the current state can be compared to. Your test suite can also be run at any time, in any branch, and it should always pass without a single failure, which is a solid foundation to build upon.
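The cycle of passing, failing, and rebaselining can be sketched in a few lines. This is a simplified model and not PhantomCSS code: the baselines folder is replaced by an in-memory Map, screenshots are plain strings instead of images, and checkScreenshot and rebaseline are hypothetical names. It assumes that a run with no existing baseline records a new one.

```javascript
// In-memory stand-in for the baselines folder (hypothetical; the real
// baselines are image files committed to the repository).
const baselines = new Map();

// Returns 'NEW' when no baseline exists yet, otherwise 'PASS' or 'FAIL'.
function checkScreenshot(name, screenshot) {
  if (!baselines.has(name)) {
    baselines.set(name, screenshot); // first run records the baseline
    return 'NEW';
  }
  return baselines.get(name) === screenshot ? 'PASS' : 'FAIL';
}

// Accepting an intentional change: drop the old baseline so the next run
// records a fresh one, which is then committed with the feature branch.
function rebaseline(name) {
  baselines.delete(name);
}

console.log(checkScreenshot('cta-link', 'UPPERCASE BUTTON')); // NEW
console.log(checkScreenshot('cta-link', 'UPPERCASE BUTTON')); // PASS
console.log(checkScreenshot('cta-link', 'lowercase button')); // FAIL
rebaseline('cta-link');
console.log(checkScreenshot('cta-link', 'lowercase button')); // NEW
console.log(checkScreenshot('cta-link', 'lowercase button')); // PASS
```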

Making It Our Own

I started to work with Anselm’s code at the beginning of the Red Hat project and found that it met 90% of our needs, but it was that last 10% that I really needed to make our workflow come together. So as any self-respecting developer does, I forked it and started in on some modifications to make it fit our specific implementations. Let me walk you through some of those changes that I made to create the node module @micahgodbolt/grunt-phantomcss:

// Gruntfile.js
phantomcss: {
  webrh: {
    options: {
      mismatchTolerance: 0.05,
      screenshots: 'baselines',
      results: 'results',
      viewportSize: [1280, 800],
    },
    src: [
      // select all files ending in -tests.js
      'src/library/**/*-tests.js'
    ]
  },
},

Place Baselines in Component Folder

Good encapsulation was important to us. We put everything in the component folder, and I mean everything! For example:

- The Twig template with the component’s canonical markup

- The JSON schema describing valid data for the template

- The documentation file, which fed into our Hologram style guide explaining component features, options, and implementation details

- Sass files for typographic and layout styles

- The test file with tests written for every variation of the component

Because we already had all of this in one place, we wanted our baseline images to live inside of the component folder as well. This would make it easier to find the baselines for each component, and when a merge request contained new baselines, the images would be in the same folder as the code change that made them necessary.

The default behavior of PhantomCSS was to place all of the baselines into the same folder regardless of the test file location. With dozens of different components in our system, each with several tests, this process just didn’t scale. So one of the key changes I made was to put baseline images into a folder called baseline right next to each test file (see Figure 14-7).

Figure 14-7. A typical component folder
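The idea of keeping baselines beside each test file comes down to deriving the baseline folder from the test file's own path. Here is a minimal sketch using Node's path module. The helpers baselineDirFor and baselinePathFor, the example file path, and the .png extension are assumptions for illustration, not code from the fork itself.

```javascript
const path = require('path');

// Hypothetical helper: the baseline folder sits beside the test file.
// path.posix keeps the output the same on every operating system.
function baselineDirFor(testFile) {
  return path.posix.join(path.posix.dirname(testFile), 'baseline');
}

// Hypothetical helper: full path of one baseline image (assumes .png).
function baselinePathFor(testFile, screenshotName) {
  return path.posix.join(baselineDirFor(testFile), screenshotName + '.png');
}

console.log(baselineDirFor('src/library/cta/cta-tests.js'));
// src/library/cta/baseline
console.log(baselinePathFor('src/library/cta/cta-tests.js', 'cta-link-hover'));
// src/library/cta/baseline/cta-link-hover.png
```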

Run Each Component Test Suite Individually

In addition to changing the location of the baseline images, I changed the test behavior to test each component individually, instead of all together. So, instead of running all 100+ tests and telling me if the build passed or failed, I now get a pass/fail for each component, as shown in Figures 14-8 and 14-9.

Figure 14-8. Everything passes!

Figure 14-9. Failing tests
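Per-component reporting amounts to grouping the individual screenshot comparisons by component before deciding pass or fail. The sketch below shows one way that grouping could look. The record shape and the summarize function are hypothetical and are not taken from the fork.

```javascript
// Hypothetical result records: one per screenshot comparison.
const results = [
  { component: 'cta', screenshot: 'cta-link', passed: true },
  { component: 'cta', screenshot: 'cta-link-hover', passed: false },
  { component: 'quote', screenshot: 'quote-600', passed: true },
  { component: 'quote', screenshot: 'quote-350', passed: true },
];

// Group comparisons by component. A component passes only if every one
// of its screenshots passed.
function summarize(records) {
  const summary = {};
  for (const { component, screenshot, passed } of records) {
    if (!summary[component]) {
      summary[component] = { status: 'PASS', failures: [] };
    }
    if (!passed) {
      summary[component].status = 'FAIL';
      summary[component].failures.push(screenshot);
    }
  }
  return summary;
}

const summary = summarize(results);
for (const [component, { status, failures }] of Object.entries(summary)) {
  const detail = failures.length ? ' (' + failures.join(', ') + ')' : '';
  console.log(component + ': ' + status + detail);
}
// cta: FAIL (cta-link-hover)
// quote: PASS
```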

Test Portability

The last change I made was to make my tests more portable. Instead of a single test file, we had broken our tests up into dozens of different test files that Grunt pulled in when it ran the test.

The original implementation required that the first test file start with casper.start('http://mysite.com/page1') and all subsequent files start with casper.thenOpen('http://mysite.com/page2'). This became problematic because Grunt ran these files in alphabetical order. So as soon as I added a test file whose name sorted earlier in the alphabet than my current starting test, my test suite broke!

The fix involved calling casper.start as soon as Grunt initiates the task, and then all of the tests can start with casper.thenOpen:

// cta.tests.js
casper.thenOpen('http://localhost:9001/cta.html')
  .then(function () {
    this.viewport(600, 1000);
    phantomcss.screenshot('.rh-cta-link', 'cta-link');
  })
  .then(function () {
    this.mouse.move('.rh-cta-link');
    phantomcss.screenshot('.rh-cta-link', 'cta-link-hover');
  });

// quote.tests.js
casper.thenOpen('http://localhost:9001/quote')
  .then(function () {
    this.viewport(600, 1000);
    phantomcss.screenshot('.rh-quote', 'quote-600');
  })
  .then(function () {
    this.viewport(350, 1000);
    phantomcss.screenshot('.rh-quote', 'quote-350');
  });
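To see why this ordering fix works, it can help to model it without a browser. The sketch below uses a tiny stand-in object, not the real CasperJS API: it only borrows the names start and thenOpen and enforces the rule that start must come first. The createFakeCasper and runSuite functions and the accordion test file are hypothetical.

```javascript
// Minimal stand-in for the casper object. It only records calls and
// enforces one rule: start must run before any thenOpen.
function createFakeCasper() {
  const calls = [];
  let started = false;
  return {
    calls,
    start(url) {
      started = true;
      calls.push('start ' + (url || '(no url)'));
      return this;
    },
    thenOpen(url) {
      if (!started) throw new Error('thenOpen before start: ' + url);
      calls.push('thenOpen ' + url);
      return this;
    },
  };
}

// Every test file begins with thenOpen, so none of them has to be first.
const testFiles = {
  'quote.tests.js': (casper) => casper.thenOpen('http://localhost:9001/quote'),
  'cta.tests.js': (casper) => casper.thenOpen('http://localhost:9001/cta.html'),
  // Hypothetical new file that sorts ahead of the others.
  'accordion.tests.js': (casper) =>
    casper.thenOpen('http://localhost:9001/accordion.html'),
};

// The task calls start once, then loads the files in alphabetical order.
function runSuite(files) {
  const casper = createFakeCasper();
  casper.start();
  Object.keys(files).sort().forEach((name) => files[name](casper));
  return casper.calls;
}

console.log(runSuite(testFiles));
```

Because the runner owns the single start call, adding a file that sorts first changes only the order of the recorded thenOpen calls and never breaks the run.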

Conclusion

After getting these tests in place, we were able to confidently grow our design system with new components, layouts, and patterns. With every addition of code, we had a suite of tests that ensured our previous work had not been compromised. Our coverage not only included the basic appearance, but every possible variation or interaction as well. As new features were added, new tests could be written. If a bug slipped through, we could fix it and then write a test to make sure it never happened again.

Instead of our design system getting more difficult to manage with every new addition, we found it getting easier and easier. We could now consistently utilize and adapt current components, or create new ones with little fear of breaking others.