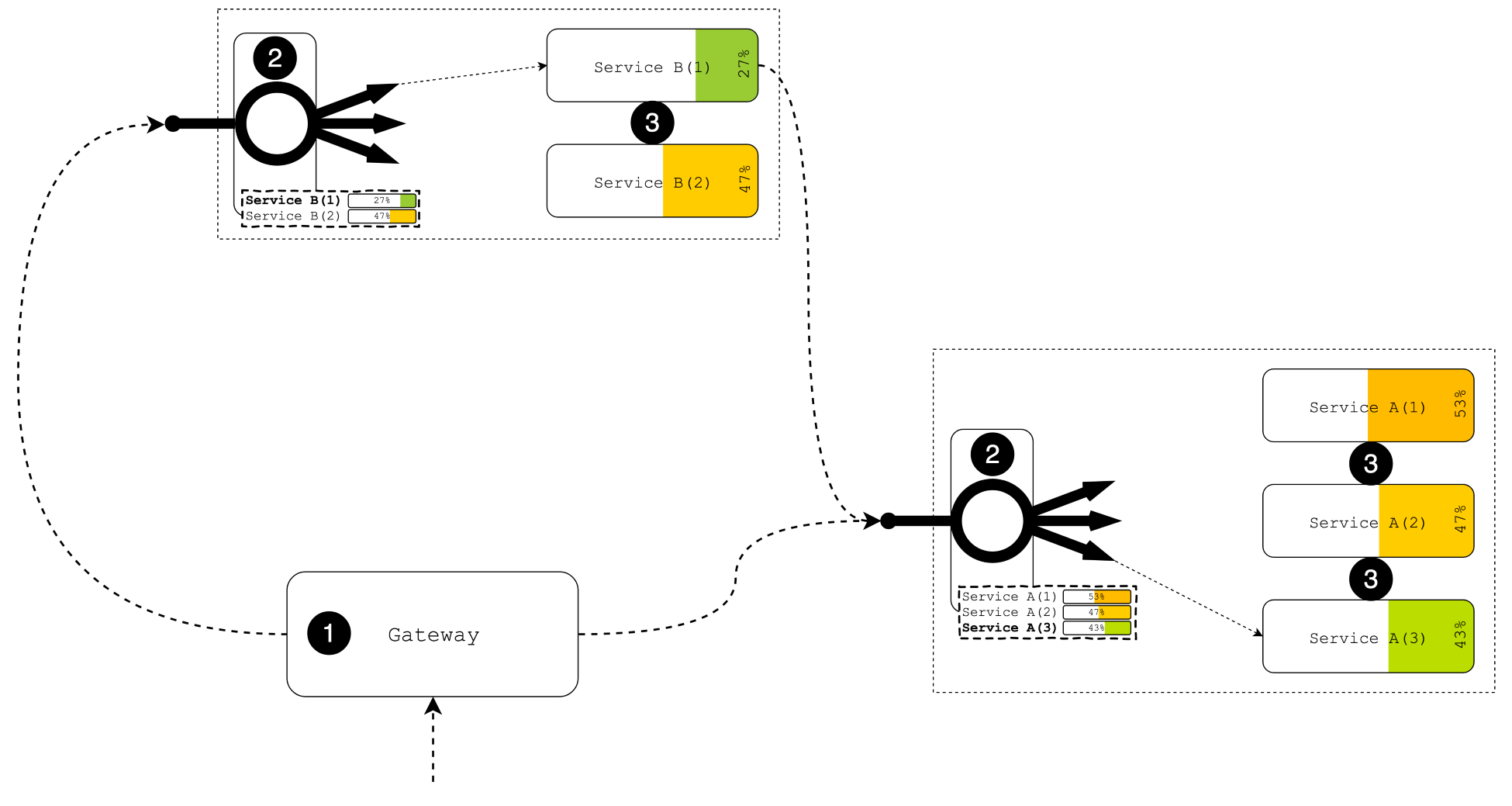

In the earliest stages of distributed system development, one of the ways to achieve location transparency and elasticity was to use external load balancers, such as HAProxy/Nginx, as an entry point on top of the group of replicas or as a central load balancer for the whole system. Consider the following diagram:

Each section of the numbered diagram is explained as follows:

1. Here, we have a service that plays the role of the gateway and orchestrates all user requests. As we can see, the gateway makes two calls, one to Service A and one to Service B. Suppose that Service A plays the role of access control: the first call verifies that the given access token is correct, or checks that the caller holds valid authorization to access the gateway. Once access is confirmed, the second call is performed. It may execute business logic on Service B, which may in turn require an additional permission check and therefore call access control again.

2. This is the schematic representation of the load balancer. To enable auto-scaling, the load balancer may collect metrics such as the number of open connections, which indicates the overall load on that service. Alternatively, the load balancer may collect response latencies and infer the service's health status from them. Combining this information with periodic health checks, the load balancer may invoke a third-party mechanism to allocate additional resources when the load spikes or to de-allocate redundant nodes when the load decreases.

3. This shows the particular service instances grouped under a dedicated load balancer (2). Here, each service instance may work independently on a separate node or machine.
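The metric-driven scaling decision described for the load balancer in the list above can be sketched as follows. This is a minimal illustration only and does not reflect how HAProxy or Nginx work internally: the `InstanceMetrics` class, the `scaling_decision` function, and every threshold value are hypothetical and chosen purely for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class InstanceMetrics:
    """Metrics a load balancer might track per instance (hypothetical)."""
    open_connections: int
    avg_latency_ms: float
    healthy: bool  # result of the last periodic health check


def scaling_decision(metrics, max_conn=100, max_latency_ms=250.0, min_conn=20):
    """Return 'scale_up', 'scale_down', or 'hold' for a group of instances.

    All thresholds are illustrative assumptions, not recommended values.
    """
    healthy = [m for m in metrics if m.healthy]
    if not healthy:
        return "scale_up"  # no healthy instance is left to serve traffic

    avg_conn = mean(m.open_connections for m in healthy)
    avg_latency = mean(m.avg_latency_ms for m in healthy)

    # A spike in connections or latency suggests the group is overloaded.
    if avg_conn > max_conn or avg_latency > max_latency_ms:
        return "scale_up"
    # A lightly loaded group with more than one instance has redundancy.
    if len(healthy) > 1 and avg_conn < min_conn:
        return "scale_down"
    return "hold"
```

In a real deployment, the returned decision would be handed to whatever third-party mechanism allocates or releases nodes; the sketch stops at the decision itself.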

As we can see from the diagram, the load balancer plays the role of a registry of available instances. For each group of services, there is a dedicated load balancer that distributes the load among all instances in the group. The load balancer may also initiate a scaling process based on the overall group load and the available metrics. For example, when there is a spike in user activity, new instances may be added to the group dynamically so that the increased load is handled. Conversely, when the load decreases, the load balancer (as the metrics holder) may send a notification stating that there are redundant instances in the group.
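To make the registry role concrete, here is a small sketch of a load balancer that keeps the list of available instances and distributes calls among them. The `LoadBalancer` class, its method names, and the round-robin policy are assumptions made for illustration; real load balancers offer several balancing strategies and far more robust bookkeeping.

```python
from itertools import count


class LoadBalancer:
    """Toy round-robin balancer that doubles as an instance registry."""

    def __init__(self, instances):
        self.instances = list(instances)  # registry of available instances
        self._counter = count()           # monotonically increasing request counter

    def register(self, address):
        """Add a newly started instance to the group."""
        self.instances.append(address)

    def deregister(self, address):
        """Remove a redundant or failed instance from the group."""
        self.instances.remove(address)

    def route(self):
        """Pick the next instance in round-robin order."""
        if not self.instances:
            raise RuntimeError("no instances available")
        return self.instances[next(self._counter) % len(self.instances)]
```

Callers only ever need to know the load balancer, not the individual instances, which is where the location transparency comes from: instances can be registered or deregistered without any change on the caller's side.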

However, there are a few known issues with this solution. First of all, under high load, the load balancer might become a hotspot in the system. As Amdahl's Law reminds us, the load balancer becomes a point of contention, and the underlying group of services cannot handle more requests than the load balancer itself can. In addition, the cost of operating the load balancer might be high, since each dedicated load balancer requires a separate powerful machine or virtual machine. Moreover, it might require an additional backup machine with the load balancer installed. Finally, the load balancer must also be managed and monitored, which may result in extra expenses for infrastructure administration.
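The contention point can be illustrated with a back-of-the-envelope calculation. Note that this is a simple bottleneck model rather than Amdahl's formula itself: it assumes every request passes through the load balancer and ignores queueing effects. The function name and all capacity figures are hypothetical.

```python
def group_throughput(lb_capacity_rps, instance_capacity_rps, instances):
    """Upper bound on requests per second for a group behind one load balancer.

    Every request passes through the load balancer, so the group can never
    serve more than the load balancer itself can forward.
    """
    return min(lb_capacity_rps, instance_capacity_rps * instances)


# Assumed figures: the balancer forwards 10,000 rps, each instance serves 1,500 rps.
print(group_throughput(10_000, 1_500, 4))  # 6000: the instances are the limit
print(group_throughput(10_000, 1_500, 8))  # 10000: the load balancer is the limit
```

Under these assumed numbers, growing the group from four to eight instances adds only 4,000 rps rather than 6,000, and any further instances add nothing until the load balancer itself is scaled.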