Configuring and operating the IBM TS7700

This chapter provides information about how to configure and operate the IBM TS7700 by using the Management Interface (MI).

This chapter includes the following topics:

For general guidance regarding TS3500 or TS4500 tape libraries, see the following IBM Redbooks publications:

•IBM TS3500 Tape Library with System z Attachment: A Practical Guide to Enterprise Tape Drives and TS3500 Tape Automation, SG24-6789

•IBM TS4500 R5 Tape Library Guide, SG24-8235

9.1 User interfaces

To successfully operate the TS7700, you must understand its concepts and components. This chapter combines the components and functions of the TS7700 into two groups:

•The logical view

•The physical view

Each component and each function belong to only one view.

The logical view is named the host view. From the host allocation point of view, there is only one library, called the composite library. The logical view includes virtual volumes and virtual tape drives.

With Release R4.2, a composite library can have up to 3968 virtual addresses for tape mounts, considering an eight-cluster grid with support for 496 virtual devices in each cluster (available with FC5275 and z/OS APAR OA44351). For more information, see Chapter 2, “Architecture, components, and functional characteristics” on page 15.
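The address count follows directly from the per-cluster device limit. The following minimal sketch shows the arithmetic; the constant and function names are illustrative only and are not IBM identifiers.

```python
# Illustrative arithmetic only; names are hypothetical, not IBM identifiers.
MAX_CLUSTERS_PER_GRID = 8          # eight-cluster grid, as stated in the text
VIRTUAL_DEVICES_PER_CLUSTER = 496  # with FC5275 and z/OS APAR OA44351


def max_virtual_addresses(clusters: int = MAX_CLUSTERS_PER_GRID,
                          devices_per_cluster: int = VIRTUAL_DEVICES_PER_CLUSTER) -> int:
    """Return the total number of virtual device addresses in the composite library."""
    if clusters < 1 or devices_per_cluster < 0:
        raise ValueError("clusters must be >= 1 and devices_per_cluster >= 0")
    return clusters * devices_per_cluster


print(max_virtual_addresses())  # 8 * 496 = 3968
```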

The host is only aware of the existence of the underlying physical libraries because they are defined through Interactive Storage Management Facility (ISMF) in a z/OS environment. The term distributed library is used to denote the physical libraries and TS7700 components that are part of one cluster of the multi-cluster grid configuration.

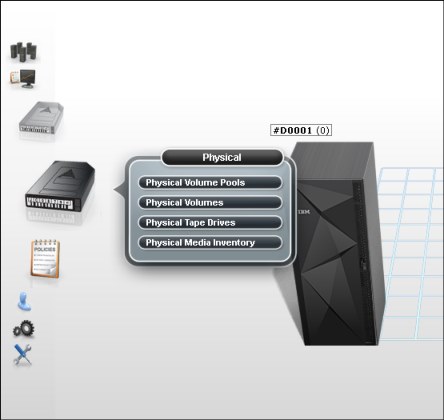

The physical view shows the hardware components of a stand-alone cluster or a multi-cluster grid configuration. In a TS7700 tape-attached model, it includes the currently configured physical tape library and tape drives:

•The TS4500 tape library with supported tape drives TS1140 (3592 EH7) and TS1150 (3592 EH8) models

•The TS3500 tape library, which supports the 3592 J1A, TS1120, TS1130, TS1140, or TS1150 tape drive models

|

Note: TS7760-VEC tape attach configurations must use Cisco switches (16 Gbps).

|

With Release R4.2, the TS7700 supports Tier to Cloud functions, which use Transparent Cloud Tiering (TCT) to offload data to a public or private cloud. Both physical tape and the cloud tier are policy-managed options. In R4.2, cloud and tape are mutually exclusive options for a TS7700 cluster.

Release 4.0 introduced support for the TS4500 tape library when attached to models TS7740-V07, TS7720T-VEB, and TS7760. The TS3500 tape library can still be attached to all TS7700 tape attach models.

Release 3.3 introduced support for TS1150 along with heterogeneous support for two different tape drive models at the same time, as described in 7.1.5, “TS7700 tape library attachments, drives, and media” on page 266.

The following operator interfaces for providing information about the TS7700 are available:

•Object access method (OAM) commands are available at the host operator console. These commands provide information about the TS7700 in stand-alone and grid environments. This information represents the host view of the components within the TS7700. Other z/OS commands can be used against the virtual addresses. This interface is described in Chapter 10, “Host Console operations” on page 585.

•Web-based management functions are available through web-based user interfaces (UIs). The following browsers can be used to access the web interfaces:

– Firefox ESR 31.x, 38.x, 45.x

– Microsoft Internet Explorer Version 9.x, 10.x, and 11

– Chrome 39.x and 42.x

– Microsoft Edge 25.x

Enable cookies and disable the browser’s pop-up blocker for MI usage. Unsupported web browser versions might cause some MI windows to display incorrectly.

Considering the overall TS7700 implementation, two different web-based functions are available:

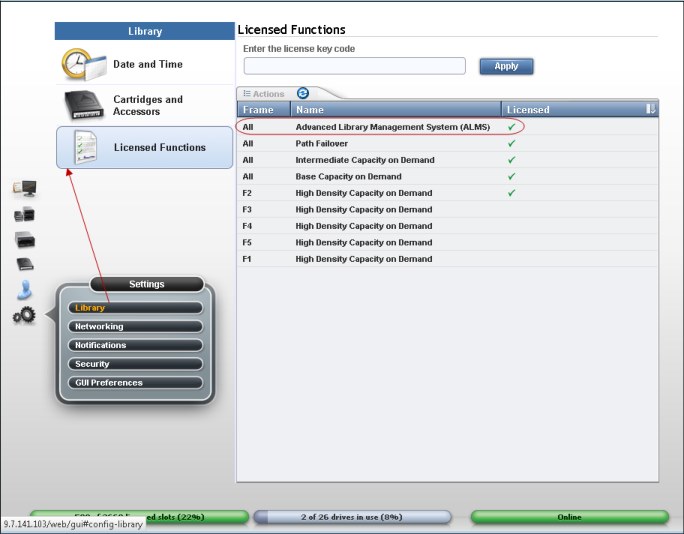

– The tape library GUI, which enables management, configuration, and monitoring of the configured tape library in tape attach configurations. The TS4500 and TS3500 are the supported tape libraries for the TS7700 implementation.

– The TS7700 MI, which is used to run all TS7700 configuration, setup, and monitoring actions.

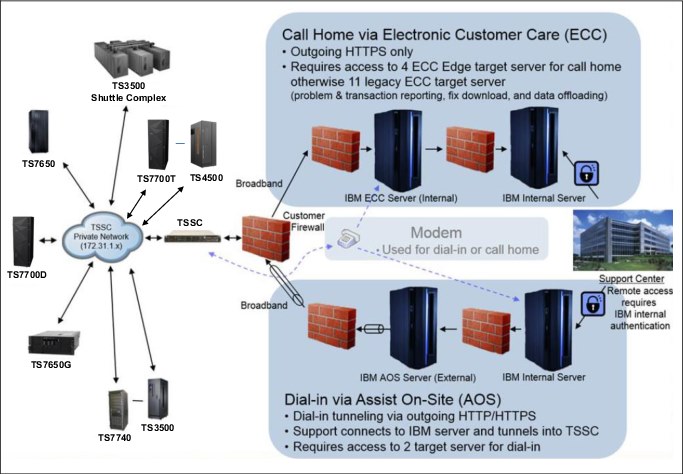

•Call Home Interface: This interface is activated on the TS3000 System Console (TSSC) and provides helpful information to IBM Service, Support Center, and Development personnel. It also provides a method to connect IBM storage systems with IBM remote support, also known as Electronic Customer Care (ECC). No user data or content is included in the call home information.

9.2 The tape library management GUI

The tape library management GUI web interface enables the user to monitor and configure most of the library functions from the web. The tape library GUI can be started from the tape library expanded page on TS7700 MI by clicking the tape library image. Starting with R4.0, the tape attach TS7700 can be configured with the TS3500 and TS4500 tape libraries.

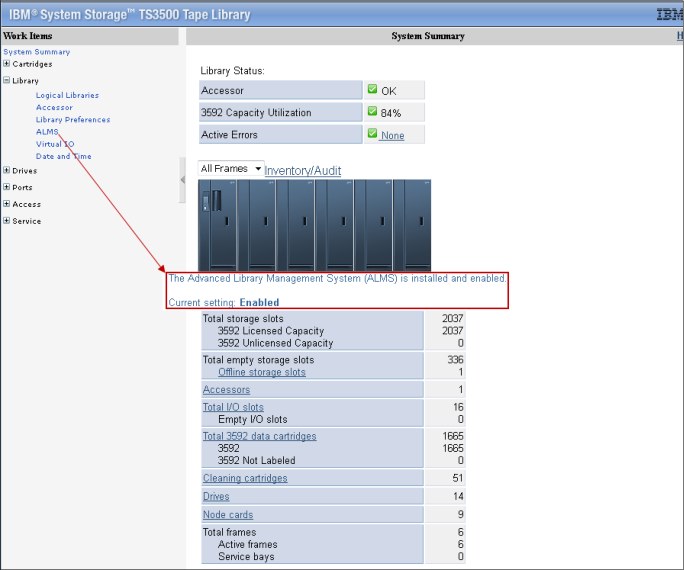

Figure 9-1 shows the TS3500 tape library GUI initial window with the System Summary.

Figure 9-1 TS3500 tape library GUI initial window

Figure 9-2 shows the TS4500 Management GUI initial Summary window. Notice that the general appearance of the GUI and its presentation of warning messages are similar to the MIs of the TS7700 and other IBM storage products.

Figure 9-2 The TS4500 tape library management GUI

The tape library management GUI windows are used during the hardware installation phase of the TS7700 tape attach models. For more information about installation tasks, see 9.5.1, “The tape library with the TS7700T cluster” on page 524.

9.3 TS7700 Management Interface

The TS7700 MI is the primary interface to monitor and manage the TS7700. The TS7700 GUI is accessed through TCP/IP, by entering the TS7700 IP address in your web browser. The following web browsers are currently supported:

•Mozilla Firefox ESR 31.x, 38.x, 45.x

•Microsoft Internet Explorer 9, 10, and 11

•Google Chrome 39.x and 42.x

•Microsoft Edge 25.x

The current TS7700 graphical user interface (GUI) implementation has an appearance and feel similar to the MIs of other IBM Storage products.

9.3.1 Connecting to the Management Interface

To connect to the TS7700 MI, complete the following steps:

1. Verify that the TS7700 is installed, configured, and online.

2. In the address field of a supported web browser, enter http://x.x.x.x (where x.x.x.x is the virtual IP address that was assigned during installation). Press Enter or click Go in the web browser.

3. The virtual IP is one of three IP addresses that are provided during installation. To access a specific cluster, enter the cluster IP address as shown in Example 9-1, where Cluster 0 is accessed directly.

Example 9-1 IP address to connect to Cluster 0 in a grid

http://x.x.x.x/0/Console

4. If a local name server is used, where names are associated with the virtual IP address, then the cluster name rather than the hardcoded address can be used for reaching the MI.

5. The login window for the MI displays as shown in Figure 9-3. Enter the default login name as admin and the default password as admin.

Figure 9-3 TS7700 MI login
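The address patterns in the preceding steps can be sketched as a small helper. This is a minimal illustration and not part of any IBM tooling: the function name `mi_url` is hypothetical, the cluster ID range of 0 - 7 is an assumption that is based on the eight-cluster grid limit, and 192.0.2.10 is a documentation-only address.

```python
from typing import Optional

MAX_CLUSTER_ID = 7  # assumption: IDs 0-7 in a grid of up to eight clusters


def mi_url(host: str, cluster_id: Optional[int] = None) -> str:
    """Build the MI address for the virtual IP or for one specific cluster.

    host       -- virtual IP address, or a name that resolves to it
    cluster_id -- when given, address that cluster directly (Example 9-1 pattern)
    """
    host = host.strip()
    if not host:
        raise ValueError("host must not be empty")
    if cluster_id is None:
        return f"http://{host}"
    if not 0 <= cluster_id <= MAX_CLUSTER_ID:
        raise ValueError(f"cluster_id must be in the range 0-{MAX_CLUSTER_ID}")
    return f"http://{host}/{cluster_id}/Console"


print(mi_url("192.0.2.10"))     # http://192.0.2.10
print(mi_url("192.0.2.10", 0))  # http://192.0.2.10/0/Console
```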

After logging in, the user is presented with the Grid Summary page, as shown in Figure 9-4.

After security policies are implemented locally at the TS7700 cluster or by using centralized role-based access control (RBAC), a unique user identifier and password can be assigned by the administrator. The user profile can be modified to provide only the functions that apply to the role of the user. Not all users have access to the same functions or views through the MI.

For more information, see 9.3.9, “The Access icon” on page 465.

Figure 9-4 shows an example of the Grid Summary window of a TS7700 grid. It shows a four-cluster grid, its components, and the health status of those components. The composite library is depicted as a data center, with all members of the grid on the raised floor. Notice that the TS7760 (two clusters on the left) has a distinct visual appearance when compared to the TS7740 and TS7720 to the right of the picture.

Figure 9-4 MI Grid summary

Each cluster is represented by an image of the TS7700 frame, displaying the cluster’s nickname and ID, and the composite library name and Library ID.

The health of the system is checked and updated automatically at times that are determined by the TS7700. Data that is displayed in the Grid Summary window is not updated in real time. The Last Refresh field, in the upper-right corner, reports the date and time that the displayed data was retrieved from the TS7700. To populate the summary with an updated health status, click the Refresh icon near the Last Refresh field in the upper-right corner of Figure 9-4.

The health status of each cluster is indicated by a status sign next to its icon. The legend explains the meaning of each status sign. To see more information about a specific cluster, click that component’s icon. In the example that is shown in Figure 9-4 on page 348, the TS7720T has a Warning or Degraded indication on it.

9.3.2 Using the TS7700 management interface

This section describes how to use the TS7700 management interface (MI).

Login window

Each cluster in a grid uses its own login window, which is the first window that opens when the cluster URL is entered in the browser address field. The login window shows the name and number of the cluster to be accessed. After logging in to a cluster, other clusters in the same grid can be accessed from the same web browser window.

Navigating between windows

To navigate between MI windows, click active links on a window or on the banner, or select a menu option or icon.

Banner

The banner is common to all windows of the MI. The banner elements can be used to navigate to other clusters in the grid, run some user tasks, and locate additional information about the MI. The banner is located across the top of the MI web page, and allows a secondary navigation scheme for the user.

Figure 9-5 shows an example of the TS7700 MI banner element.

Figure 9-5 Management Interface Banner

The left field on the banner shows the breadcrumb trail, which is the sequence of selections that the user made in the TS7700 MI website hierarchy. The user can navigate directly to a different page by hovering the mouse over that field and clicking to select a different page. The field at the right of the banner (showing admin in Figure 9-5) shows the user that is currently logged in to the MI. Hovering the mouse over it gives the choices of logging out, changing the user password, and turning low graph mode on or off.

The last field to the right of the banner (question mark symbol) provides information about the current MI window. In addition, you can start learning and tutorials, open the knowledge center, and check the level of the installed knowledge center by hovering the mouse over it and clicking the wanted option.

Status and event indicators

Status and alert indicators appear at the bottom of each MI window. These indicators provide a quick status check for important cluster and grid properties. Grid indicators provide information for the entire grid. These indicators are displayed on the left and right corners of the window footer, and include tasks and events.

Figure 9-6 shows some examples of status and events that can be displayed from the Grid Summary window.

Figure 9-6 Status and Events indicators in the Grid Summary pane

All cluster indicators provide information for the accessing cluster only, and are displayed only on MI windows that have a cluster scope. MI also provides ways to filter, sort, and change the presentation of different tables in the MI. For example, the user can hide or display a specific column, modify its size, sort the table results, or download the table row data in a comma-separated value (CSV) file to a local directory.

For a complete description of tasks, the behavior of health and status icons, and a description of how to optimize the table presentations, see the Using the Management Interface topic in TS7700 R4.1 IBM Knowledge Center:

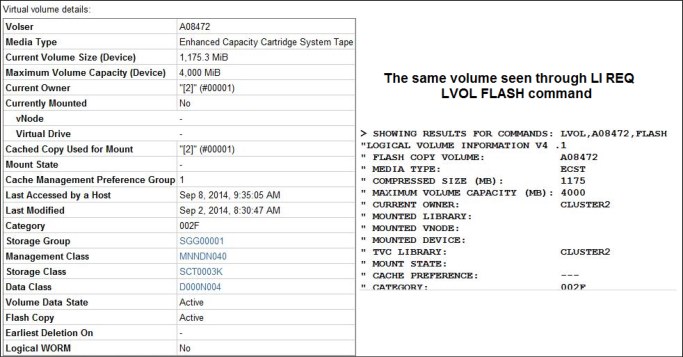

Library Request Command window

The LI REQ command pane in the MI expands the interaction of the system administrator with the TS7700 subsystem. By using the LI REQ panel, a standard LI REQ command can be run by the Storage Administrator directly from the MI to a grid (also known as Composite Library), or to a specific Cluster (also known as Distributed Library), with no need to be logged in to the z/OS host system.

The LI REQ panel is minimized and docked at the bottom of the MI window. To open the LI REQ command pane, click the panel (at the lower right). Figure 9-7 shows the new LI REQ command panel and operation.

Figure 9-7 LI REQ Command window and usage

By default, the only user role that is allowed to run LI REQ commands is the Administrator. LI REQ commands are logged in the Tasks list.
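As a rough illustration of what a standard LI REQ command looks like in its z/OS host console form, the following sketch composes the command text from a library name and keywords. It is a hedged sketch, not an IBM utility: the function name `li_req` and the library name `GRIDLIB1` are hypothetical, the limits of four keywords and eight characters per token are assumptions, and `STATUS,GRID` is shown only as a sample keyword pair. Verify all of these against the Host Command Line Request User’s Guide before use.

```python
from typing import Sequence

MAX_KEYWORDS = 4      # assumption: up to four keywords per request
MAX_TOKEN_LENGTH = 8  # assumption: library names and keywords are 1-8 characters


def li_req(library: str, keywords: Sequence[str]) -> str:
    """Compose host-console style LI REQ text for a composite or distributed library."""
    if not 1 <= len(keywords) <= MAX_KEYWORDS:
        raise ValueError(f"expected 1-{MAX_KEYWORDS} keywords")
    tokens = [library, *keywords]
    for token in tokens:
        if not 1 <= len(token) <= MAX_TOKEN_LENGTH:
            raise ValueError(f"{token!r} must be 1-{MAX_TOKEN_LENGTH} characters")
        if "," in token or any(ch.isspace() for ch in token):
            raise ValueError(f"{token!r} must not contain commas or spaces")
    return "LI REQ," + ",".join(token.upper() for token in tokens)


# GRIDLIB1 is a hypothetical composite library name.
print(li_req("GRIDLIB1", ["STATUS", "GRID"]))  # LI REQ,GRIDLIB1,STATUS,GRID
```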

|

Remember: The LI REQ option shows only in the bottom of the MI windows for users with the Administrator role, and is not displayed on the host console.

|

Figure 9-8 shows an example of a library request command reported in the Tasks list, and shows how to get more information about the command by selecting Properties and clicking See details in the MI window.

Figure 9-8 LI REQ command log and information

|

Important: LI REQ commands that are issued from this window are not presented in the host console logs.

|

For a complete list of available LI REQ commands, their usage, and respective responses, see the current IBM TS7700 Series z/OS Host Command Line Request User’s Guide, WP101091:

Standard navigation elements

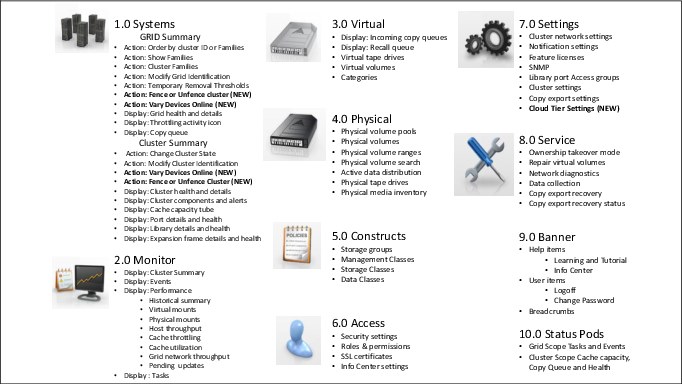

This section of the TS7700 MI provides functions to manage and monitor the health of the TS7700. Listed next are the expandable interface windows that are shown on the left side of the MI Summary window. The exception is the Systems window, which is displayed only when the cluster is part of a grid.

More items might also show, depending on the actual cluster configuration:

Systems icon This window shows the cluster members of the grid and grid-related functions.

Monitor icon This window gathers the events, tasks, and performance information about one cluster.

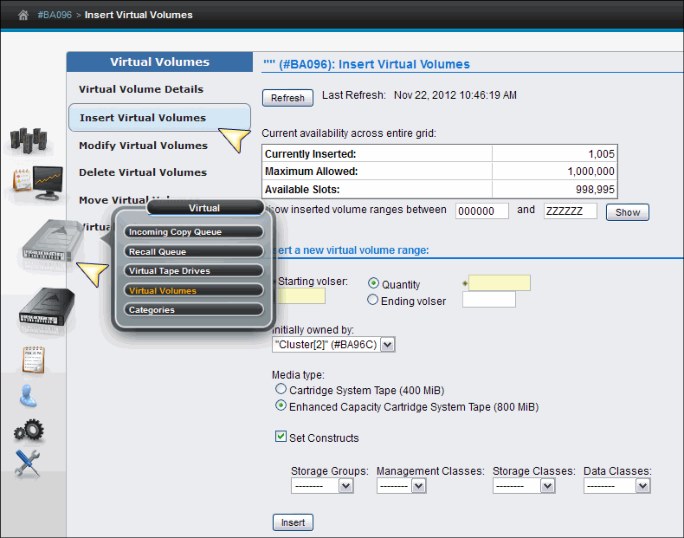

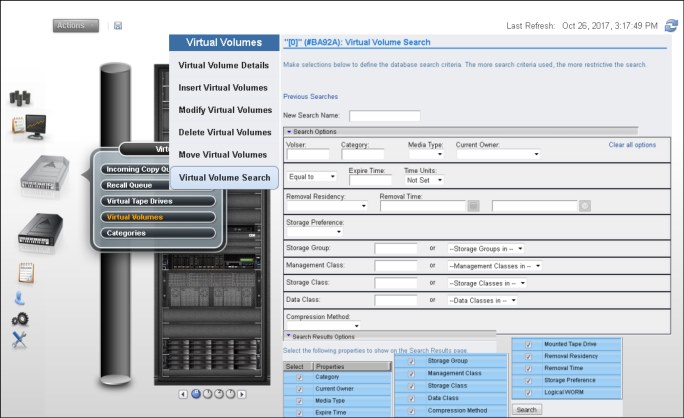

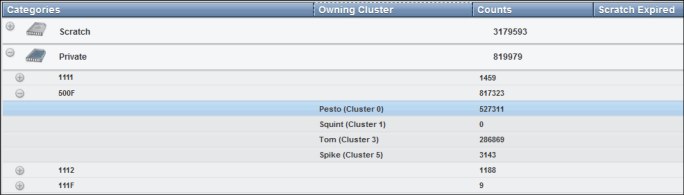

Light cartridge icon Information that is related to virtual volumes is available here.

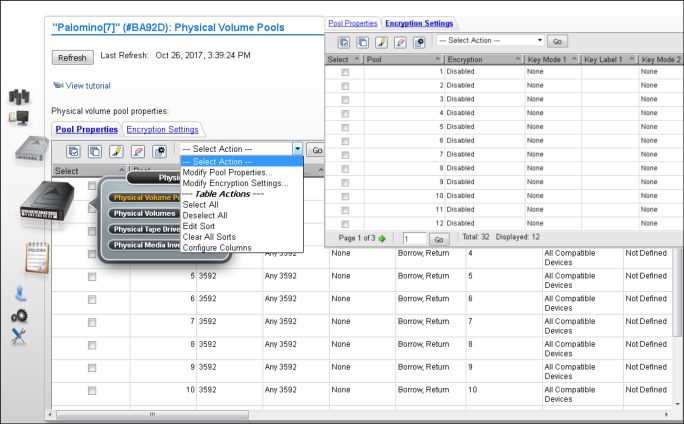

Dark cartridge icon Information that is related to physical cartridges and the associated tape library is under this window.

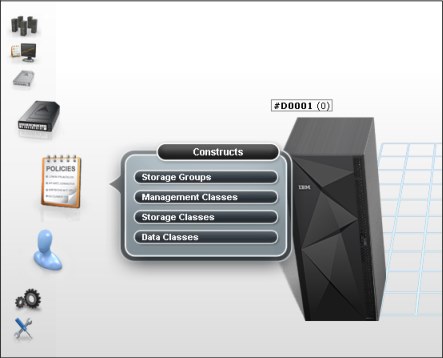

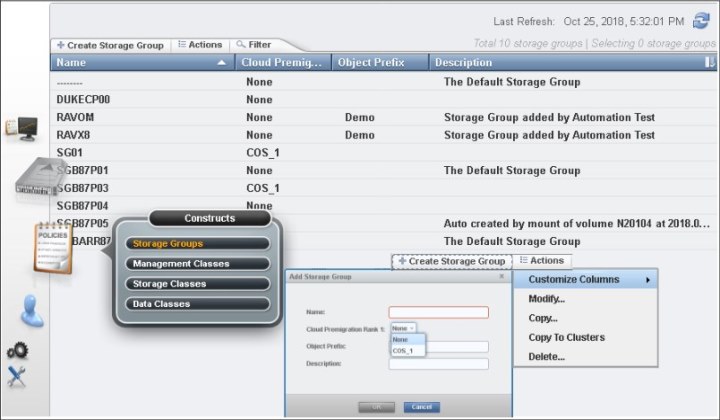

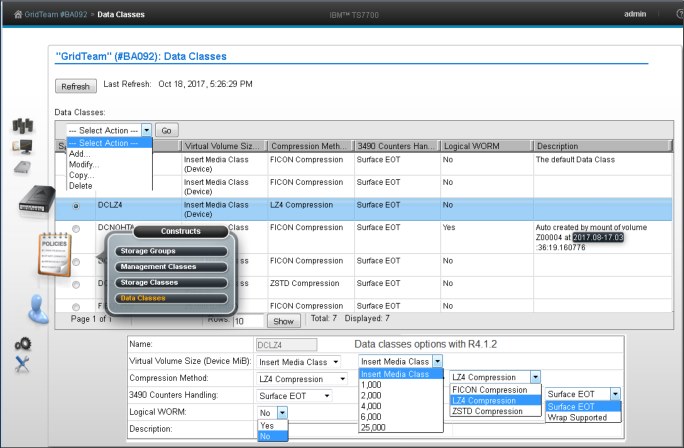

Notepad icon This window contains the constructs settings.

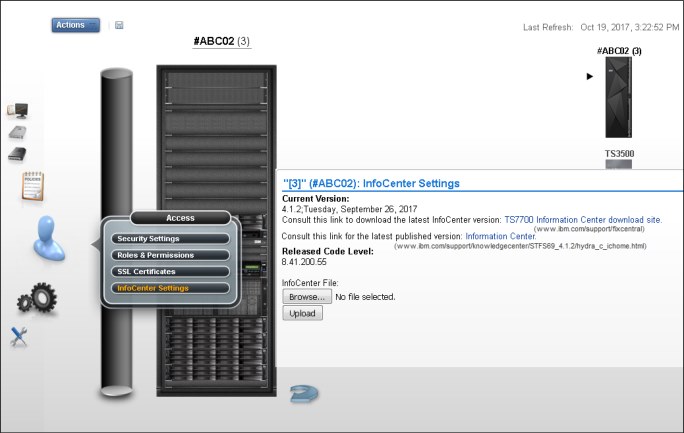

Blue man icon Under the Access icon, all security-related settings are grouped.

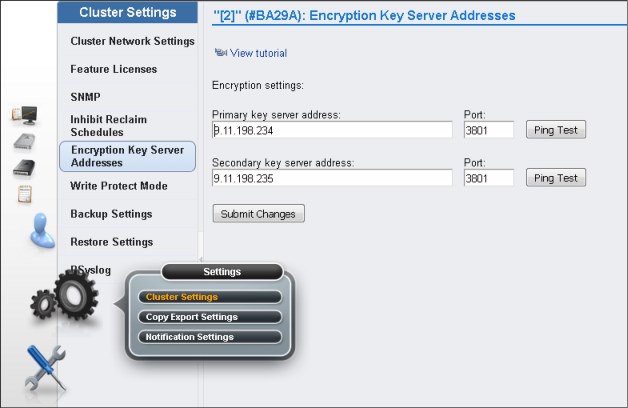

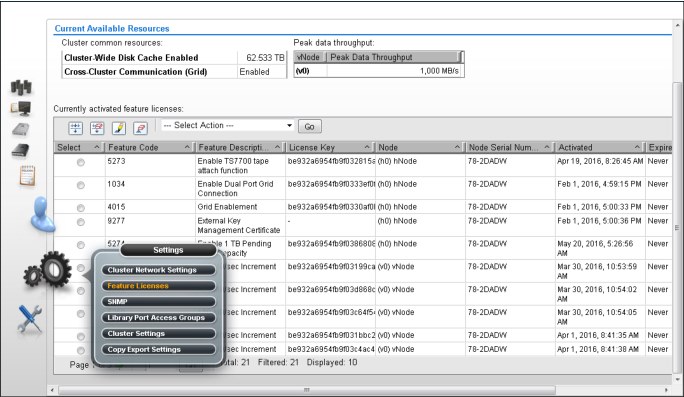

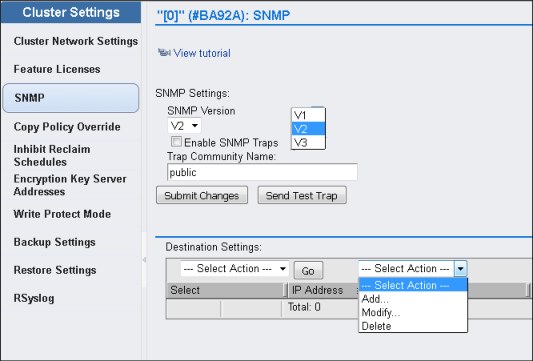

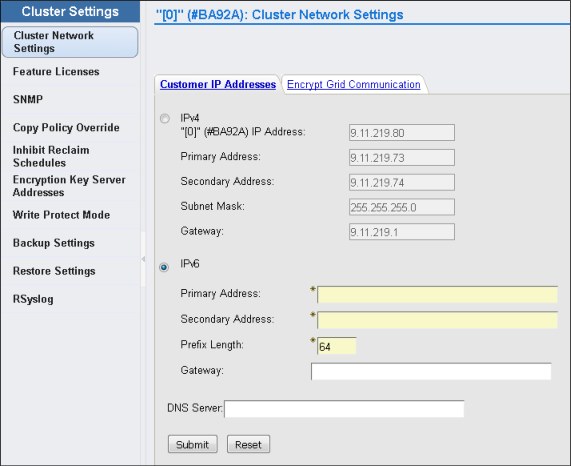

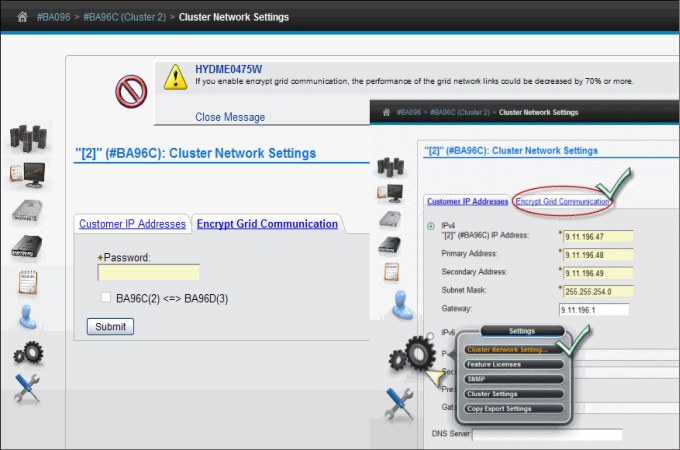

Gear icon Cluster general settings, feature licenses, overrides, SNMP, write protect mode, and backup and restore settings are under the Gear icon.

Tool icon Ownership takeover mode, network diagnostics, data collection, and other repair/recovery-related activities are under this icon.

MI Navigation

Use this window (see Figure 9-9) for a visual summary of the TS7700 MI Navigation.

Figure 9-9 TS7700 MI Navigation

9.3.3 The Systems icon

The TS7700 MI windows that are gathered under the Systems icon can help to quickly identify cluster or grid properties, and assess the cluster or grid “health” at a glance.

|

Tip: The Systems icon is only visible when the accessed TS7700 Cluster is part of a grid.

|

Grid Summary window

The Grid Summary window is the first window that opens in the web interface when the TS7700 is online, and the cluster that is currently being accessed by MI is part of a grid. This window can be used to quickly assess the health of all clusters in the grid, and as a starting point to investigate cluster or network issues.

|

Note: If the accessing cluster is a stand-alone cluster, the Cluster Summary window is shown upon login instead.

|

This window shows a summary view of the health of all clusters in the grid, including family associations, host throughput, and any incoming copy queue. Figure 9-10 shows an example of a Grid Summary window, including the pop-up windows.

The Grid Summary window includes the following information:

•Cluster throttling

•Host throughput rate (sampled before compression by host adapters within cluster)

•Copy queue size and type

•Running tasks and events

Figure 9-10 Grid Summary and pop-up windows

There is a diskette icon on the right of the Actions button. Clicking the icon saves a CSV-formatted file with a summary of the grid components information.

Actions menu on the Grid Summary page

Use this menu to change the appearance of clusters on the Grid Summary window or the grid identification details. When the grid includes a disk-only cluster, this menu can also be used to change the removal threshold settings for that cluster, or for the resident partition (CP0) of a TS7700T (tape attach) or TS7760C (cloud attach) cluster. The Actions menu is shown in Figure 9-11.

Figure 9-11 Grid Summary window and Actions list

This menu features the following tasks:

•Order by Cluster ID

Select this option to group clusters according to their cluster ID number. Ordered clusters are shown first from left to right, then front to back. Only one ordering option can be selected at a time.

|

Note: The number that is shown in parentheses in breadcrumb navigation and cluster labels is always the cluster ID.

|

•Order by Families

Select this option to group clusters according to their family association.

•Show Families

Select this option to show the defined families on the grid summary window. Cluster families are used to group clusters in the grid according to a common purpose.

•Cluster Families

Select this option to add, modify, or delete cluster families used in the grid.

•Modify Grid Identification

Use this option to change grid nickname or description.

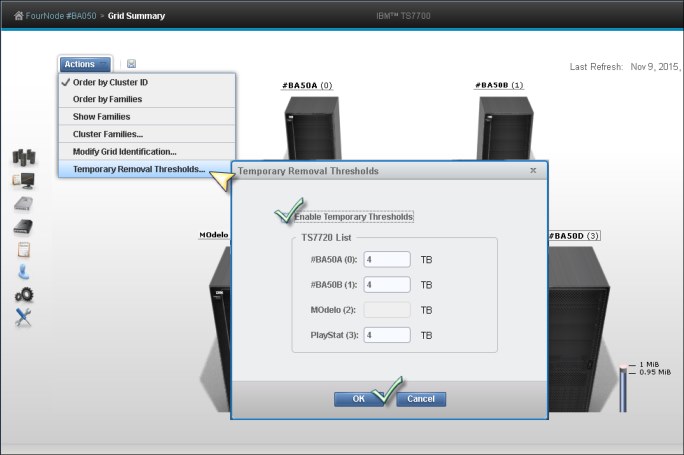

•Temporary Removal Thresholds

This option is used to change temporarily the removal thresholds of the disk-only clusters in the grid.

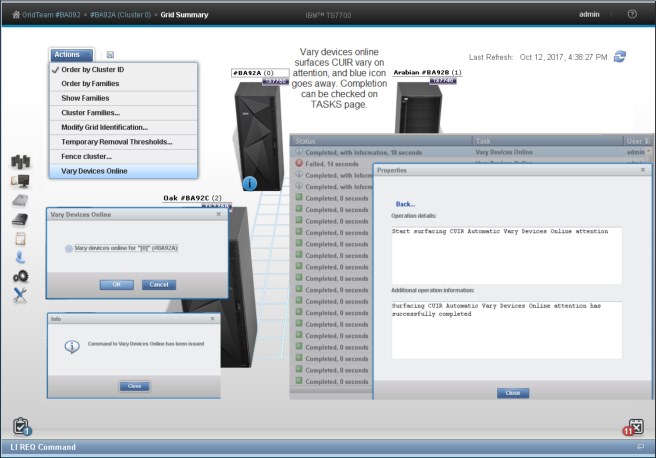

•Vary Devices Online

Select this option to vary devices online for the selected cluster. A blue informational icon is shown on the lower left corner of the cluster image if logical devices of that cluster need to be varied online. Figure 9-12 shows an example of cluster vary online devices. Notice the information icon on the cluster that is reporting devices offline.

Figure 9-12 Vary cluster devices online

This menu option is available only if control unit initiated reconfiguration (CUIR) is enabled by a LI REQ command and the Automatic Vary Online (AONLINE) notification is disabled. For more information about the use of the LI REQ commands, see Chapter 10, “Host Console operations” on page 585.

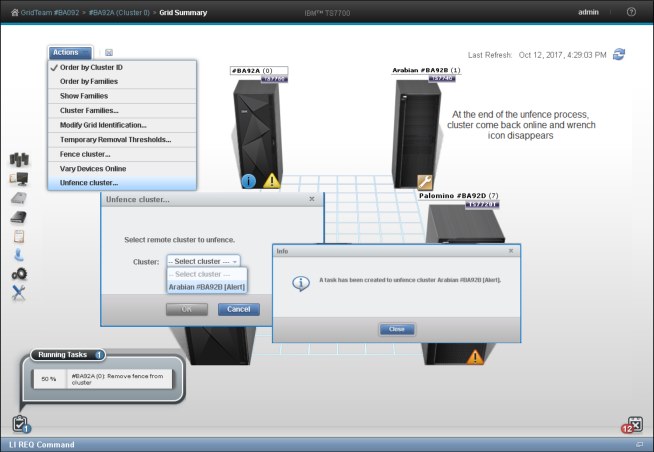

•Fence Cluster, Unfence Cluster

Select Fence Cluster to place a selected cluster in a fence state. If a cluster is already in a fence state, the option Unfence Cluster will show instead.

Select Unfence Cluster to unfence a selected cluster that is currently in a fence state.

These functions are part of the grid resilience improvements package. The Fence Cluster option in the Actions menu allows a user with the Administrator role (the default) to manually remove (fence) from the grid a cluster that was determined to be sick or not functioning properly. Fencing a cluster isolates it from the rest of the grid. The administrator can fence the local cluster (the one being accessed by the MI) or a remote cluster in the grid from this window.

|

Note: Remote cluster fence is enabled only when all clusters in a grid are at R4.1.2 (or later) code level.

|

The user can decide what action will be taken by the sick cluster after the fence cluster action is selected:

– Options for the local cluster:

• Forced offline

• Reboot

• Reboot and stay offline

– Options for a remote cluster (from any other cluster in the grid besides the cluster under suspicion):

• Send an alert

• Force cluster offline

• Reboot

• Reboot and stay offline or isolate from the grid
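The fence options and the code-level rule for remote fencing can be summarized in a short sketch. It is illustrative only: the function names are hypothetical, and the code-level strings (such as `R4.1.2`) are an assumed format and not the output of any TS7700 interface.

```python
import re
from typing import Iterable, Tuple

LOCAL_FENCE_ACTIONS = ("Forced offline", "Reboot", "Reboot and stay offline")
REMOTE_FENCE_ACTIONS = (
    "Send an alert",
    "Force cluster offline",
    "Reboot",
    "Reboot and stay offline or isolate from the grid",
)
MIN_REMOTE_FENCE_LEVEL = (4, 1, 2)  # R4.1.2, per the note in the text


def parse_level(level: str) -> Tuple[int, ...]:
    """Turn a string such as 'R4.1.2' or '4.2' into a comparable tuple."""
    match = re.fullmatch(r"R?(\d+(?:\.\d+)*)", level.strip(), flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognized code level: {level!r}")
    parts = tuple(int(p) for p in match.group(1).split("."))
    return parts + (0,) * (3 - len(parts))  # pad so that 4.2 compares as 4.2.0


def remote_fence_enabled(levels: Iterable[str]) -> bool:
    """Remote fence requires every cluster in the grid at R4.1.2 or later."""
    parsed = [parse_level(level) for level in levels]
    return bool(parsed) and all(p >= MIN_REMOTE_FENCE_LEVEL for p in parsed)


def fence_actions(target_is_local: bool, levels: Iterable[str]) -> Tuple[str, ...]:
    """Return the fence actions that apply to a local or remote target cluster."""
    if target_is_local:
        return LOCAL_FENCE_ACTIONS
    return REMOTE_FENCE_ACTIONS if remote_fence_enabled(levels) else ()


print(fence_actions(False, ["R4.1.2", "R4.2"]))  # remote actions are offered
print(fence_actions(False, ["R4.1.1", "R4.2"]))  # () because one cluster is below R4.1.2
```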

Figure 9-13 shows the TS7700 MI sequence to manually fence a cluster.

Figure 9-13 Fence a cluster operation.

For more information about cluster fence function and proper usage, see 2.3.37, “Grid resiliency functions” on page 99.

Figure 9-14 shows how to manually unfence a cluster using the TS7700 MI.

Figure 9-14 Unfence cluster sequence

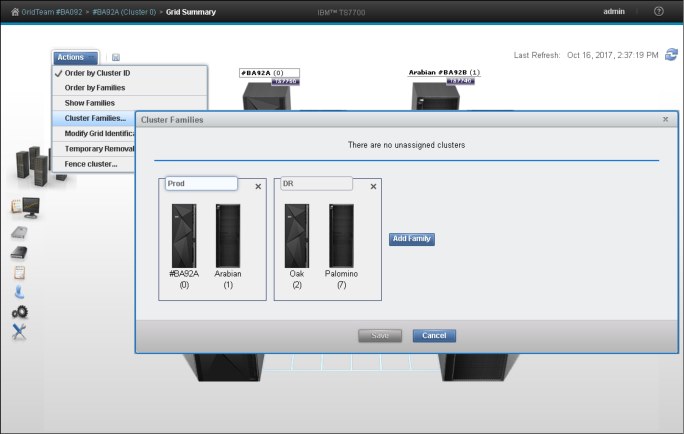

Cluster Families window

To view information and run actions that are related to TS7700 cluster families, use the window that is shown in Figure 9-15.

Figure 9-15 MI Add Cluster Families: Assigning a cluster to a family

To view or modify cluster family settings, first verify that these permissions are granted to the assigned user role. If the current user role includes cluster family permissions, select Modify to run the following actions:

•Add a family: Click Add to create a new cluster family. A new cluster family placeholder is created to the right of any existing cluster families. Enter the name of the new cluster family in the active Name text box. Cluster family names must be 1 - 8 characters in length and composed of Unicode characters. Each family name must be unique. Clusters are added to the new cluster family by relocating a cluster from the Unassigned Clusters area by using the method that is described in the Move a cluster function, described next.

•Move a cluster: One or more clusters can be moved by dragging, between existing cluster families, to a new cluster family from the Unassigned Clusters area, or to the Unassigned Clusters area from an existing cluster family:

– Select a cluster: A selected cluster is identified by its highlighted border. Select a cluster from its resident cluster family or the Unassigned Clusters area by using one of these methods:

• Clicking the cluster with the mouse.

• Using the Spacebar key on the keyboard.

• Pressing and holding the Shift key while selecting clusters to select multiple clusters at one time.

• Pressing the Tab key on the keyboard to switch between clusters before selecting one.

– Move the selected cluster or clusters:

• Click and hold the mouse on the cluster, and drag the selected cluster to the destination cluster family or the Unassigned Clusters area.

• Using the arrow keys on the keyboard to move the selected cluster or clusters right or left.

|

Consideration: An existing cluster family cannot be moved within the Cluster Families window.

|

•Delete a family: To delete an existing cluster family, click the X in the upper-right corner of the cluster family to delete it. If the cluster family to be deleted contains any clusters, a warning message is displayed. Click OK to delete the cluster family and return its clusters to the Unassigned Clusters area. Click Cancel to abandon the delete action and retain the selected cluster family.

•Save changes: Click Save to save any changes that are made to the Cluster Families window and return it to read-only mode.

|

Remember: Each cluster family must contain at least one cluster. An attempt to save a cluster family that does not contain any clusters results in an error message. No changes are made, and the Cluster Families window remains in edit mode.

|
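The naming and membership rules for cluster families can be expressed as a simple pre-check. This is a minimal sketch under stated assumptions: the function name `family_errors` is hypothetical, name length is counted in Unicode code points, and the rule that a cluster belongs to at most one family is inferred from the drag-and-drop model rather than stated explicitly.

```python
from typing import Iterable, List, Sequence, Tuple

Family = Tuple[str, Sequence[int]]  # (family name, cluster IDs in that family)


def family_errors(families: Iterable[Family]) -> List[str]:
    """Return a list of rule violations; an empty list means the layout can be saved."""
    errors: List[str] = []
    seen_names = set()
    seen_clusters = set()
    for name, clusters in families:
        if not 1 <= len(name) <= 8:
            errors.append(f"{name!r}: name must be 1-8 characters")
        if name in seen_names:
            errors.append(f"{name!r}: name is not unique")
        seen_names.add(name)
        if not clusters:
            errors.append(f"{name!r}: family contains no clusters")
        for cluster_id in clusters:
            if cluster_id in seen_clusters:  # assumption: one family per cluster
                errors.append(f"cluster {cluster_id}: assigned to more than one family")
            seen_clusters.add(cluster_id)
    return errors


print(family_errors([("PROD", [0, 1]), ("DR", [2])]))  # []
print(family_errors([("EMPTY", [])]))                  # one error: no clusters
```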

Grid Identification properties window

To view and alter identification properties for the TS7700 grid, use this option. In a multigrid environment, use this window to identify clearly a particular composite library, making it easier to distinguish, operate, and manage this TS7700 grid (avoiding operational mistakes due to ambiguous identification).

To change the grid identification properties, edit the available fields and click Modify. The following fields are available:

•Grid nickname: The grid nickname must be 1 - 8 characters in length and composed of alphanumeric characters with no spaces. The characters at (@), period (.), dash (-), and plus sign (+) are also allowed.

•Grid description: A short description of the grid. Up to 63 characters can be used.
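The field rules above lend themselves to a quick validation check before values are entered. The following sketch assumes that "alphanumeric" means ASCII letters and digits; the function names are hypothetical.

```python
import re

# 1-8 characters: ASCII letters and digits (assumption), plus @ . - +
NICKNAME_PATTERN = re.compile(r"[A-Za-z0-9@.\-+]{1,8}")
MAX_DESCRIPTION_LENGTH = 63


def valid_grid_nickname(nickname: str) -> bool:
    """True when the whole nickname matches the allowed characters and length."""
    return NICKNAME_PATTERN.fullmatch(nickname) is not None


def valid_grid_description(description: str) -> bool:
    """True when the description is at most 63 characters long."""
    return len(description) <= MAX_DESCRIPTION_LENGTH


print(valid_grid_nickname("GRID-01"))  # True
print(valid_grid_nickname("MY GRID"))  # False, spaces are not allowed
```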

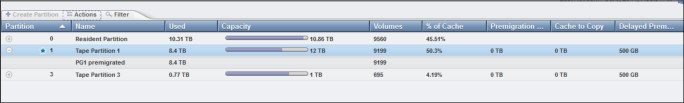

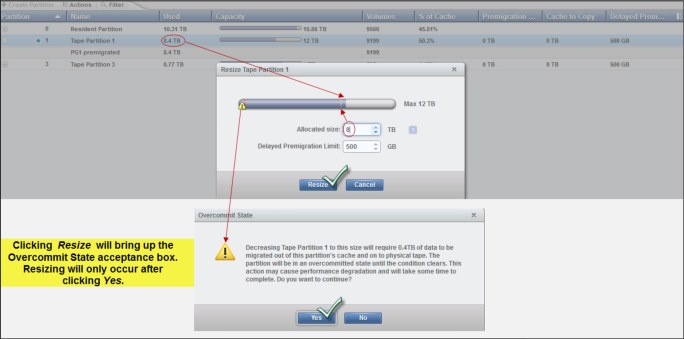

Lower removal threshold

Select Actions → Temporary Removal Threshold in the Grid Summary view to lower the removal threshold for any disk-only cluster, or for the cache resident partition of a cloud attach or tape attach cluster, in a grid that possesses a physical tape library.

For more information about removal policies, see 4.2.6, “TS7720 and TS7760 cache thresholds and removal policies” on page 164.

Figure 9-16 shows the Temporary Removal Threshold window.

Figure 9-16 Setting the Temporary Removal Threshold

Grid health and details

In the Grid Summary view, a cluster is in a normal (healthy) state when no warning or degradation icon is displayed at the lower left side of the cluster’s representation in the MI. Hovering the mouse pointer over the lower right corner of the cluster’s picture in the Grid Summary window shows a message stating The health state of the [cluster number] [cluster name] is Normal, confirming that this cluster is in a normal state.

Exceptions in the cluster state are represented in the Grid Summary window by a little icon at the lower right side of the cluster’s picture. Additional information about the status can be viewed by hovering your cursor over the icon. See Figure 9-6 on page 351 for a visual reference of the icons and how they show up on the Grid Summary page.

Figure 9-17 shows the appearance of the degraded icon, and the possible reasons for degradation to happen.

Figure 9-17 Warning or Degraded Icon

Figure 9-18 shows the icons for other possible statuses for a cluster that can be viewed on the TS7700 Cluster or Grid Summary windows.

Figure 9-18 Other cluster status icons

For a complete list of icons and meanings, see IBM Knowledge Center:

In the Grid Summary window, an alert icon indicates throttling activity on a cluster within the grid. Throttling can severely affect the overall performance of a cluster, and might result in job execution delays and affect the operation schedule. See Figure 9-19 for an example.

Figure 9-19 Clusters throttling in a two-cluster grid

For practical considerations about this topic, what it means, and what can be done to avoid it, see Chapter 11, “Performance and monitoring” on page 623.

For more information about throttling in a TS7700 grid, see the IBM TS7700 Series Best Practices - Understanding, Monitoring, and Tuning the TS7700 Performance, WP101465:

Cluster Summary window

By clicking the icon of an individual cluster in the grid, or by selecting a specific cluster in the cluster navigation element in the banner, the Cluster Summary window can be accessed. In a stand-alone configuration, this is the first icon that is available in the MI.

Figure 9-20 shows an example of the Cluster Summary window.

Figure 9-20 Cluster Summary window with TS7760T and TS4500 tape library

The Cluster Information can be displayed by hovering the cursor over the components, as shown in Figure 9-20. In the resulting box, the following information is available:

•Cluster components health status

•Cluster Name

•Family to which this cluster is assigned

•Cluster model

•Licensed Internal Code (LIC) level for this cluster

•Description for this cluster

•Disk encryption status

•Cache size and occupancy (Cache Tube)

There is a diskette icon to the right of the Actions button. Clicking that icon downloads a CSV-formatted file with the meaningful information about that cluster.

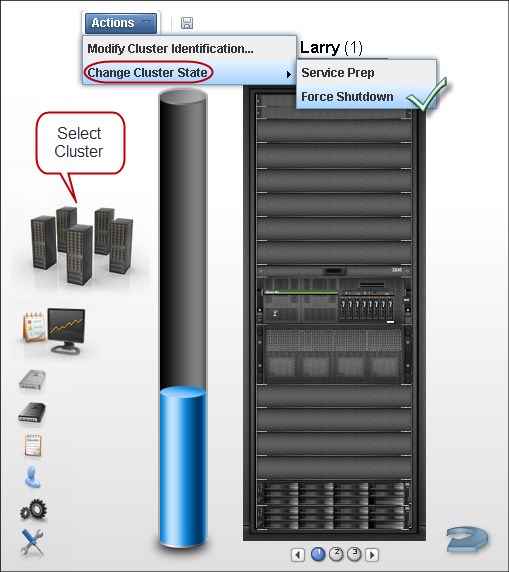

Cluster Actions menu

By using the options under this menu, the user can change the state or settings of a cluster. Also, when the selected cluster is a tape attach TS7700 (a tape library is present), this menu can be used to change the Copy Export settings.

From the Actions menu, the cluster state can be changed to perform a specific task, such as preparing for a maintenance window, performing a disaster recovery drill, or moving machines to a different IT center. Depending on the current cluster state, different options display.

Table 9-1 describes options available to change the state of a cluster.

Table 9-1 Options to change the cluster state

| If the current state is | Selection | Restrictions and notes |
|---|---|---|
| Online | Service Prep | All following conditions must be met first: •The cluster is online. •No other clusters in the grid are in service prep mode. •At least one other cluster must remain online. Caution: If only one other cluster remains online, a single point of failure exists when this cluster state becomes service prep mode. Select Service Prep to confirm this change. |
| | Force Shutdown | Select Force Shutdown to confirm this change. Important: After a shutdown operation is initiated, it cannot be canceled. |
| Service Pending | Force Service | Use this option when an operation stalls and is preventing the cluster from entering Service Prep. Select Force Service to confirm this change. All but one cluster in a grid can be placed into service mode, but it is advised that only one cluster be in service mode at a time. If more than one cluster is in service mode, and service mode is canceled on one of them, that cluster does not return to normal operation until service mode is canceled on all clusters in the grid. |
| | Return to Normal | Select this option to cancel a previous service prep change and return the cluster to the normal online state. Select Return to Normal to confirm this change. |
| | Force Shutdown | Select Force Shutdown to confirm this change. Important: After a shutdown operation is initiated, it cannot be canceled. |
| Shutdown (offline) | User interface not available | After an offline cluster is powered on, it attempts to return to normal. If no other clusters in the grid are available, skip hot token reconciliation can be tried. |
| Online-Pending or Shutdown Pending | Menu disabled | No options to change state are available when a cluster is in a pending state. |
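The state-dependent selections in Table 9-1 can be captured as a lookup, together with the three Service Prep preconditions. This is a sketch only: the names are hypothetical, and it assumes that a cluster reported as Service Pending counts as being in service prep mode.

```python
from typing import Dict, Tuple

# Selections offered by the Actions menu for each current state (from Table 9-1).
CLUSTER_STATE_OPTIONS: Dict[str, Tuple[str, ...]] = {
    "Online": ("Service Prep", "Force Shutdown"),
    "Service Pending": ("Force Service", "Return to Normal", "Force Shutdown"),
    "Shutdown (offline)": (),  # user interface not available
    "Online-Pending": (),      # menu disabled
    "Shutdown Pending": (),    # menu disabled
}


def service_prep_allowed(states: Dict[int, str], target: int) -> bool:
    """Check the Service Prep preconditions for one cluster.

    states maps each cluster ID in the grid to its current state string.
    Assumption: 'Service Pending' is treated as being in service prep mode.
    """
    others = [state for cluster_id, state in states.items() if cluster_id != target]
    return (
        states.get(target) == "Online"         # the cluster is online
        and "Service Pending" not in others    # no other cluster is in service prep
        and "Online" in others                 # at least one other cluster stays online
    )


print(service_prep_allowed({0: "Online", 1: "Online"}, 0))  # True
print(service_prep_allowed({0: "Online"}, 0))               # False, no peer stays online
```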

Going offline and coming online considerations

Whenever a member cluster of a grid goes offline or comes back online, it needs to exchange information with other peer members regarding the status of the logical volumes that are controlled by the grid. Each logical volume is represented by a token, which contains all of the pertinent information regarding that volume, such as creation date, which cluster owns it, which cluster is supposed to have a copy of it, and what kind of copy it should be.

Each cluster in the grid keeps its own copy of the collection of tokens, representing all of the logical volumes that exist in the grid, and those copies are kept updated at the same level by the grid mechanism. When coming back online, a cluster needs to reconcile its own collection of tokens with the peer members of the grid, making sure that it represents the status of the grid inventory. This reconcile operation is also referred to as token merge.

Here are some items to consider when going offline and coming online:

•Pending token merge

A cluster in a grid configuration attempts to merge its token information with all of the other clusters in the grid as it goes online. When no other clusters are available for this merge operation, the cluster attempting to go online remains in the going online, or blocked, state indefinitely as it waits for the other clusters to become available for the merge operation. If a pending merge operation is preventing the cluster from coming online, there is an option to skip the merge step.

Click Skip Step to skip the merge operation. This button is only available if the cluster is in a blocked state while waiting to share pending updates with one or more unavailable clusters. When you click Skip Step, pending updates against the local cluster might remain undetected until the unavailable clusters become available.

•Ownership takeover

If ownership takeover was set at any of the peers, the possibility exists that old data can surface to the host if the cluster is forced online. Therefore, before attempting to force this cluster online, it is important to know whether any peer clusters have ever enabled ownership takeover mode against this cluster while it was unavailable. In addition, if this cluster is in service, automatic ownership takeover from unavailable peers is also likely and must be considered before attempting to force this cluster online.

If multiple clusters were offline and must be forced back online, force them back online in the reverse of the order in which they went down (for example, the last cluster down is the first cluster up). This process ensures that the most current cluster is available first to educate the rest of the clusters that are forced online.

•Autonomic Ownership Takeover Manager (AOTM)

If AOTM is installed and configured, it attempts to determine whether all unavailable peer clusters are in a failed state. If it determines that an unavailable cluster is not in a failed state, it blocks an attempt to force the cluster online. If the unavailable cluster is not in a failed state, the forced online cluster might take ownership of volumes that it should not take ownership of. If AOTM discovers that all unavailable peers failed and network issues are not to blame, this cluster is then forced into an online state.

After it is online, AOTM can further enable ownership takeover against the unavailable clusters if the AOTM option is enabled. Additionally, manual ownership takeover can be enabled, if necessary.

•Shutdown restrictions

To shut down a cluster, it is necessary to be logged in to this system. To shut down another cluster, log out of the current cluster and log in to the cluster to shut down. For more information, see “Cluster Shutdown window” on page 371.
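The reverse-order rule for forcing multiple clusters back online, which is described under Ownership takeover, can be sketched as a simple sort. The cluster names and timestamps are invented for illustration, and the function is not part of any TS7700 interface.

```python
from datetime import datetime
from typing import Dict, List


def force_online_order(offline_times: Dict[str, datetime]) -> List[str]:
    """Order clusters so that the last cluster down is the first cluster up."""
    return sorted(offline_times, key=lambda name: offline_times[name], reverse=True)


# Hypothetical times at which each cluster went offline.
offline_times = {
    "cluster0": datetime(2024, 1, 10, 8, 0),
    "cluster1": datetime(2024, 1, 10, 9, 30),
    "cluster2": datetime(2024, 1, 10, 8, 45),
}
order = force_online_order(offline_times)
```

Here cluster1 went down last, so it is forced online first, followed by cluster2 and then cluster0.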

|

Note: After a shutdown or force shutdown action, the targeted cluster (and associated cache) are powered off. A manual intervention is required on site where the cluster is physically located to power it up again.

|

A cluster shutdown operation that is started from the TS7700 MI also shuts down the cache. The cache must be restarted before any attempt is made to restart the TS7700 cluster.

Service mode window

Use the window that is shown in Figure 9-21 to put a TS7700 cluster into service mode, whenever required by a service action or any disruptive activity on a cluster that is a member of a grid. See Chapter 2, “Architecture, components, and functional characteristics” on page 15 for more information.

|

Remember: Service mode is only possible for clusters that are members of a grid.

|

Figure 9-21 Cluster Summary: Preparing for service

Service mode enables the subject cluster to leave the grid gracefully, surrendering the ownership of its logical volumes as required by the peer clusters in the grid to attend to the tasks being performed by the client. The user continues operating from the other members of the grid automatically, provided that consistent copies of the volumes that reside in this cluster also exist elsewhere in the grid and the host has access to those clusters.

Before changing a cluster state to Service, the user needs to vary offline all logical devices that are associated with this cluster on the host side. No host access is available in a cluster that is in service mode.

|

Note: Before setting a cluster in Service mode, vary logical drives online on the remaining clusters of the grid from the host side to ensure that enough mount points are available for the system to continue operation.

|

The R4.1.2 level of code begins implementing CUIR. CUIR helps to reduce client involvement and simplifies the process that is necessary to start service preparation in a grid member.

Before code level R4.1.2, the user needed to vary offline all logical drives that are associated with the cluster going into service before changing the cluster state to Service. This process had to be done across all LPARs and system plexes attached to the cluster. Any long-running jobs that use these pending offline devices continue to run to completion. Therefore, the user should issue SWAP commands for these jobs, causing them to move to a different logical drive in a different cluster of the grid. After the cluster maintenance was completed and the IBM CSR canceled service for the cluster, the user needed to vary online all the devices again across all LPARs and system plexes.

CUIR can automate this entire process when fully implemented. For more information about CUIR, see 10.9, “CUIR for tape” on page 616.

|

Important: Forcing Service Mode causes jobs that are currently mounted or that use resources that are provided by the targeted cluster to fail.

|

Whenever a cluster state is changed to Service, the cluster first enters service preparation mode and then, when the preparation stage finishes, automatically goes into service mode.

During the service preparation stage, the cluster monitors the status of current host mounts and of sync copy mounts that target the local Tape Volume Cache (TVC), monitors and completes the copies that are currently running, and makes sure that no remote mounts target the local TVC. When all running tasks have ended and no more pending activities are detected, the cluster finishes the service preparation stage and enters Service mode.

In a TS7700 grid, service preparation can occur on only one cluster at any one time. If service prep is attempted on a second cluster before the first cluster has entered Service mode, the attempt fails. After service prep has completed for one cluster, and that cluster has entered service mode, another cluster can be placed in service prep. A cluster in service prep automatically cancels service prep if its peer in the grid experiences an unexpected outage while the service prep process is still active. Although all clusters except one can be in Service mode at the same time within a grid, the preferred approach is to have only one cluster in service mode at a time.

Be aware that when multiple clusters are in service mode simultaneously, they need to be brought back to Normal mode at the same time. Otherwise, a cluster that leaves service mode does not reach the ONLINE state, and waits until the remaining clusters also leave service mode. Only then do those clusters merge their tokens and rejoin the grid as ONLINE members.

|

Remember: If more than one cluster is in Service mode, and service is canceled on one of them, that cluster does not return to an online state until Service mode is canceled on all other clusters in this grid.

|

For a disk-only TS7700 cluster or CP0 partition in a grid, click Lower Threshold to lower the required threshold at which logical volumes are removed from cache in advance. See “Temporary removal threshold” on page 167 for more information about the Temporary Removal Threshold. The following items are available when viewing the current operational mode of a cluster.

Cluster State can be any of the following states:

•Normal: The cluster is in a normal operation state. Service prep can be initiated on this cluster.

•Service Prep: The cluster is preparing to go into service mode. The cluster is completing operations (that is, copies owed to other clusters, ownership transfers, and lengthy tasks, such as inserts and token reconciliation) that require all clusters to be synchronized.

•Service: The cluster is in service mode. The cluster is normally taken offline in this mode for service actions or to activate new code levels.

Depending on the mode that the cluster is in, a different action is presented by the button under the Cluster State display. This button can be used to place the TS7700 into service mode or back into normal mode:

•Prepare for Service Mode: This option puts the cluster into service prep mode and enables the cluster to finish all current operations. If allowed to finish service prep, the cluster enters Service mode. This option is only available when the cluster is in normal mode. To cancel service prep mode, click Return to Normal Mode.

•Return to Normal Mode: Returns the cluster to normal mode. This option is available if the cluster is in service prep or service mode. A cluster in service prep mode or Service mode returns to normal mode if Return to Normal Mode is selected.

A window opens to confirm the decision to change the Cluster State. Click Service Prep or Normal Mode to change to the new Cluster State, or click Cancel to abandon the change operation.
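The Cluster State values and the two buttons that are described in this section behave like a small state machine. The following sketch models them under stated assumptions: the `Grid` class and its method names are hypothetical and do not correspond to any TS7700 API. It enforces only the rule that one cluster at a time can be in service prep. It does not model the automatic cancellation of service prep or the requirement that clusters in Service mode rejoin the grid together.

```python
from enum import Enum
from typing import Dict, Iterable


class State(Enum):
    NORMAL = "Normal"
    SERVICE_PREP = "Service Prep"
    SERVICE = "Service"


class Grid:
    """Hypothetical model of the Cluster State values of the grid members."""

    def __init__(self, clusters: Iterable[str]) -> None:
        self.states: Dict[str, State] = {name: State.NORMAL for name in clusters}

    def prepare_for_service(self, name: str) -> None:
        """Model the Prepare for Service Mode button."""
        if self.states[name] is not State.NORMAL:
            raise ValueError(f"{name} is not in normal mode")
        if State.SERVICE_PREP in self.states.values():
            raise RuntimeError("another cluster is still in service prep")
        self.states[name] = State.SERVICE_PREP

    def finish_service_prep(self, name: str) -> None:
        """Model the automatic move to Service when service prep completes."""
        if self.states[name] is not State.SERVICE_PREP:
            raise ValueError(f"{name} is not in service prep")
        self.states[name] = State.SERVICE

    def return_to_normal(self, name: str) -> None:
        """Model the Return to Normal Mode button."""
        if self.states[name] is State.NORMAL:
            raise ValueError(f"{name} is already in normal mode")
        self.states[name] = State.NORMAL


grid = Grid(["cluster0", "cluster1", "cluster2"])
grid.prepare_for_service("cluster0")
try:
    grid.prepare_for_service("cluster1")  # rejected: cluster0 is still in service prep
    second_prep_allowed = True
except RuntimeError:
    second_prep_allowed = False
grid.finish_service_prep("cluster0")
```

After cluster0 reaches Service mode, a call to `grid.prepare_for_service("cluster1")` succeeds, which mirrors the sequence that the text describes.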

Cluster Shutdown window

Use the window that is shown in Figure 9-22 to remotely shut down a TS7700 cluster for a planned power outage or in an emergency.

Figure 9-22 MI Cluster: Forcing a cluster to shutdown

This window is visible from the TS7700 MI whether the TS7700 is online or in service. If the cluster is offline, MI is not available, and the error HYDME0504E The cluster you selected is unavailable is presented.

|

Note: After a shutdown or force shutdown action, the targeted cluster (and associated cache) are powered off. A manual intervention is required on the site where the cluster is physically located to power it up again.

|

Only the cluster where a connection is established can be shut down by the user. To shut down another cluster, drop the current cluster connection and log in to the cluster that must be shut down.

Before the TS7700 can be shut down, decide whether the circumstances provide adequate time to perform a clean shutdown. A clean shutdown is not mandatory, but it is suggested for members of a TS7700 grid configuration. A clean shutdown requires putting the cluster in Service mode first. Make sure that no jobs or copies are targeting or being sourced from this cluster during shutdown.

Jobs that use this specific cluster are affected, and copies are also canceled. Eligible data that has not yet been copied to the remaining clusters cannot be processed during service and downtime. If the cluster cannot be placed in Service mode, use the force shutdown option.

|

Attention: A forced shutdown can result in lost access to data and job failure.

|

A cluster shutdown operation that is started from the TS7700 MI also shuts down the cache. The cache must be restarted before any attempt is made to restart the TS7700.

If the Shutdown option is selected from the action menu for a cluster that is still online, as shown at the top of Figure 9-22 on page 371, a message alerts the user to put the cluster in service mode first before shutting down, as shown in Figure 9-23.

|

Note: In normal situations, setting the cluster to service mode before shutdown is always recommended.

|

Figure 9-23 Warning message and Cluster Status during forced shutdown

If needed, it is still possible to force a shutdown without going into service by entering the password and clicking the Force Shutdown button. An example is a DR test that simulates a cluster failure, in which case placing the cluster in service does not apply.

In Figure 9-23, the Online State and Service State fields in the message show the operational status of the TS7700 and appear over the button that is used to force its shutdown. The lower-right corner of the picture shows the cluster status that is reported by the message.

The following options are available:

•Cluster State. The following values are possible:

– Normal. The cluster is in an online, operational state and is part of a TS7700 grid.

– Service. The cluster is in service mode or is a stand-alone system.

– Offline. The cluster is offline. It might be shutting down in preparation for service mode.

•Shutdown. This button initiates a shutdown operation:

– Clicking Shutdown in Normal mode.

If Shutdown is selected while in normal mode, a warning message suggesting that you set the cluster to Service mode before proceeding opens, as shown in Figure 9-23 on page 372.

To place the cluster in service mode, select Modify Service Mode. To continue with the force shutdown operation, enter the password and click Force Shutdown. To abandon the shutdown operation, click Cancel.

– Clicking Shutdown in Service mode.

When Shutdown is selected while in Service mode, you are prompted for a confirmation. Click Shutdown to continue, or click Cancel to abandon the shutdown operation.

|

Important: After a shutdown operation is initiated, it cannot be canceled.

|

When a shutdown operation is in progress, the Shutdown button is disabled and the status of the operation is displayed in an information message. The shutdown sequence features the following steps:

1. Going offline.

2. Shutting down.

3. Powering off.

4. Shutdown completes.

Verify that power to the TS7700 and to the cache is shut down before attempting to restart the system.

A cluster shutdown operation that is started from the TS7700 MI also shuts down the cache. The cache must be restarted first and allowed to achieve an operational state before any attempt is made to restart the TS7700.
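The shutdown sequence and the restart order (cache first, then the TS7700) can be summarized in a short sketch. The function names are hypothetical and the code only restates the steps that are listed in this section. It does not query or control a real cluster.

```python
from typing import List, Optional

# Status values reported while a shutdown is in progress, in order.
SHUTDOWN_STEPS = ("Going offline", "Shutting down", "Powering off", "Shutdown completes")


def next_shutdown_step(current: str) -> Optional[str]:
    """Return the step that follows the current one, or None after the last step."""
    index = SHUTDOWN_STEPS.index(current)
    if index + 1 < len(SHUTDOWN_STEPS):
        return SHUTDOWN_STEPS[index + 1]
    return None


def restart_plan(cache_operational: bool) -> List[str]:
    """List the restart actions in the order that this section requires."""
    plan = []
    if not cache_operational:
        plan.append("Restart the cache and wait until it is operational")
    plan.append("Restart the TS7700")
    return plan
```

For example, `restart_plan(False)` lists the cache restart before the TS7700 restart, which reflects the requirement that the cache reaches an operational state first.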

Cluster Identification Properties window

Select this option to view and alter cluster identification properties for the TS7700.

The following information that is related to cluster identification is displayed. To change the cluster identification properties, edit the available fields and click Modify. The following fields are available:

•Cluster nickname: The cluster nickname must be 1 - 8 characters in length and composed of alphanumeric characters. Blank spaces and the characters at (@), period (.), dash (-), and plus sign (+) are also allowed. Blank spaces cannot be used in the first or last character position.

•Cluster description: A short description of the cluster. Up to 63 characters can be used.
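The field rules above can be checked before the values are typed into the MI. The following sketch is an illustration only. It assumes that "alphanumeric" means the ASCII letters A-Z, a-z, and the digits 0-9, which the text does not state, and the function names are hypothetical.

```python
import re

# Characters allowed in the first and last positions (no blank space).
_EDGE = r"[A-Za-z0-9@.+-]"
# Characters allowed in the middle positions (blank space included).
_INNER = r"[A-Za-z0-9@.+ -]"

# One edge character, optionally followed by up to six inner characters
# and a closing edge character, for a total length of 1 - 8.
NICKNAME_RE = re.compile(rf"{_EDGE}(?:{_INNER}{{0,6}}{_EDGE})?")


def is_valid_nickname(nickname: str) -> bool:
    """Check the cluster nickname rules for length, characters, and blanks."""
    return NICKNAME_RE.fullmatch(nickname) is not None


def is_valid_description(description: str) -> bool:
    """Check that the cluster description is no longer than 63 characters."""
    return len(description) <= 63
```

With these rules, `is_valid_nickname("A B")` is accepted because the blank is in the middle, and `is_valid_nickname(" CL0")` is rejected because the blank is in the first position.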

|

Note: Copy and paste might bring in invalid characters. Manual input is preferred.

|

Cluster health and detail

The TS7700 checks and updates the health of the system automatically at intervals. The status information on this window is not shown in real time; it reflects the result of the last health check. To repopulate the summary window with the updated health status, click the Refresh icon. This operation takes a few minutes to complete. If this cluster is operating in Write Protect Mode, a lock icon is shown in the middle right part of the cluster image.

Figure 9-20 on page 365, Cluster Summary page, depicts a TS7760T with a TS4500 tape library attached. In the cluster front view, the cluster badge (at the top of the picture) gives a general description of the cluster, such as model, name, family, Licensed Internal Code level, cluster description, and cache encryption status. Hovering the cursor over the locations within the picture of the frame shows the health status of different components, such as the network gear (at the top), TVC controller and expansion enclosures (bottom and halfway up), and the engine server along with the internal 3957-Vxx disks (the middle section). The summary of cluster health is shown in the lower-right status bar and in the badge health status (over the frame).

Figure 9-24 shows the back view of the cluster summary window and health details. The components that are depicted in the back view are the Ethernet ports and host Fibre Channel connection (FICON) adapters for this cluster. Under the Ethernet tab, the user can see the ports that are dedicated to the internal network (the TSSC network) and those that are dedicated to the external (client) network. The assigned IP addresses are displayed. Details about the ports are shown (IPv4, IPv6, and the health). In the grid Ethernet ports, information about links to the other clusters, data rates, and cyclic redundancy check (CRC) errors are displayed for each port in addition to the assigned IP address and Media Access Control (MAC) address.

The host FICON adapter information is displayed under the Fibre tab for a selected cluster, as shown in Figure 9-24. The available information includes the adapter position and general health for each port.

Figure 9-24 Back view of the cluster summary with health details

To display the different area health details, hover the cursor over the component in the picture.

Cache expansion frame

The expansion frame view displays details and health for a cache expansion frame that is attached to the TS7720 cluster. To open the expansion frame view, click the small image corresponding to a specific expansion frame below the Actions button.

|

Tip: The expansion frame icon is displayed only if the accessed cluster includes an expansion frame.

|

Figure 9-25 shows the Cache Expansion frame details and health view through the MI.

Figure 9-25 Cache expansion frame details and health

Physical library and tape drive health

Click the physical tape library icon, which is shown on a TS7700 tape-attached Cluster Summary window, to check the health of the tape library and tape drives. Figure 9-26 shows a TS4500 tape library that is attached to a TS7760 cluster.

Figure 9-26 TS4500 tape library expanded page and links

|

Consideration: If the cluster is not a tape-attached model, the tape library icon does not display on the TS7700 MI.

|

The library details and health are displayed as listed in Table 9-2.

Table 9-2 Library health details

| Detail | Definition |
|---|---|
| Physical library type - virtual library name | The type of physical library (type is always TS3500) accompanied by the name of the virtual library established on the physical library. |
| Tape Library Health, Fibre Switch Health, Tape Drive Health | The health states of the library and its main components. The following values are possible: Normal, Degraded, Failed, Unknown. |
| State | Whether the library is online or offline to the TS7700. |
| Operational Mode | The library operational mode. The following values are possible: Auto, Paused. |
| Frame Door | Whether a frame door is open or closed. |
| Virtual I/O Slots | Status of the I/O station that is used to move cartridges into and out of the library. The following values are possible: Occupied, Full, Empty. |
| Physical Cartridges | The number of physical cartridges assigned to the identified virtual library. |
| Tape Drives | The number of physical tape drives available, as a fraction of the total. Click this detail to open the Physical Tape Drives window. |

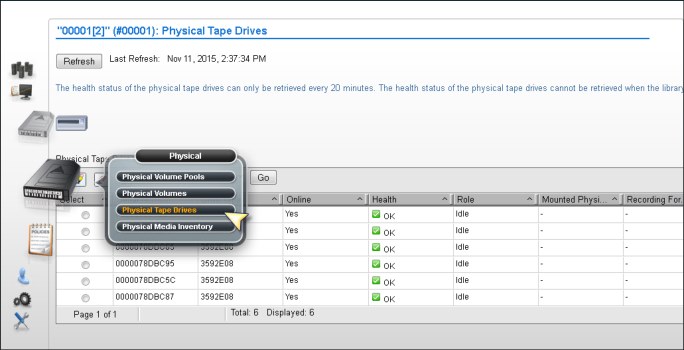

The Physical Tape Drives window shows the specific details about a physical tape drive, such as its serial number, drive type, whether a cartridge is mounted on the drive, and the purpose for which it is mounted. To see more information about a tape drive, such as drive encryption and tape library location, select the drive and click Select Action → Details.

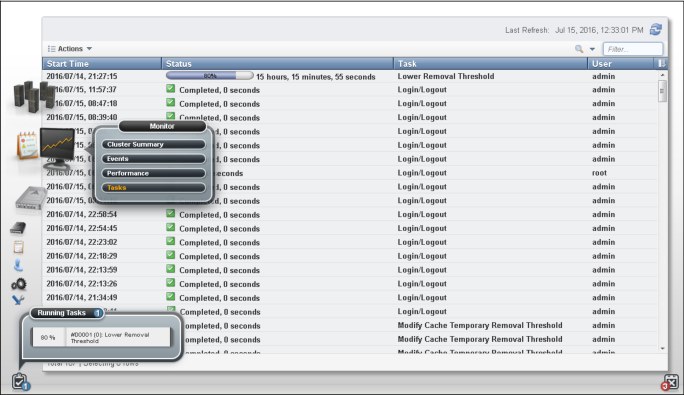

9.3.4 The Monitor icon

The collection of items under the Monitor icon in the MI provides means to monitor tasks, events, and performance statistics within the TS7700. Figure 9-27 shows the Monitor icon in the TS7700 MI.

Figure 9-27 The Monitor icon

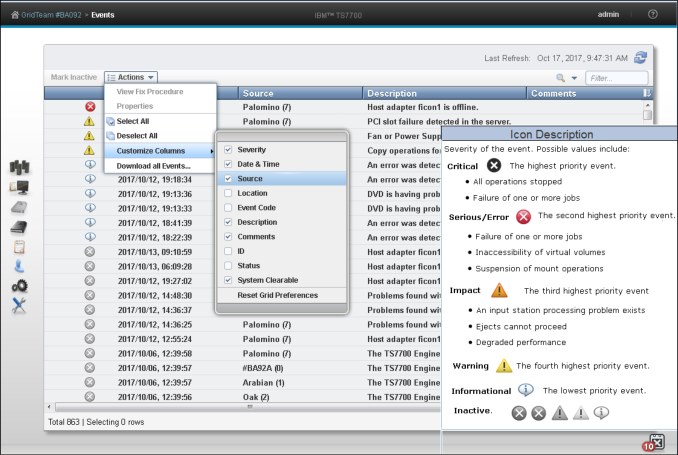

Events

Use the window that is shown in Figure 9-28 on page 378 to view all meaningful events that occurred within the grid or a stand-alone TS7700 cluster. Events encompass every significant occurrence within the TS7700 grid or cluster, such as a malfunction alert, an operator intervention, a parameter change, a warning message, or a user-initiated action.

The R4.1.2 level of code improves the presentation and handling of the cluster state and alerting mechanisms, providing new capabilities to the user.

The TS7700 MI Event panel has been remodeled and now separates Operator Intervention Required, Tasks, and Events messages. Also, host notification messages are logged to the Event window, giving the user a historical perspective.

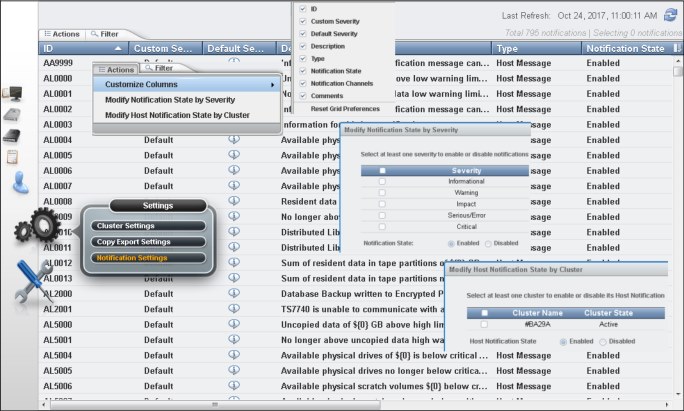

The user can now customize each of the CBR3750I messages by adding custom text to it. This capability is provided through the new TS7700 MI Notification Settings window that is implemented by the R4.1.2 level of code.

Information is displayed on the Events table for 30 days after the operation stops or the event becomes inactive.

Figure 9-28 TS7700 MI Events window

|

Note: The Date & Time column shows the time of each event relative to the local time on the computer where the MI was started. If the DATE/TIME in the TS7700 was changed from Coordinated Universal Time during installation, the event times are offset by the same difference in the Events display on the MI. Use Coordinated Universal Time in all TS7700 clusters whenever possible.

|

The Event window can be customized to meet your needs. Select which columns should show in the Events window. Figure 9-29 shows an example and the meaning of the icons used on the Events page.

Figure 9-29 Customizing the Events page and Icons

For more information about the Events page, see IBM Knowledge Center for TS7700, available locally by clicking the question mark symbol at the right of the banner on the TS7700 MI, or at this web page:

Table 9-3 lists the column names and descriptions of the fields, as shown in the Event window.

Table 9-3 Field name and description for the Events window

| Column name | Description |
|---|---|
| Date & Time | Date and time the event occurred. |
| Source | Cluster where the event occurred. |
| Location | Specific location on the cluster where the event occurred. |
| Description | Description of the event. |
| ID | The unique number that identifies the instance of the event. This number consists of a locally generated ID (for example, 923) and the type of event: E (event) or T (task). An event ID based on these examples appears as 923E. |
| Status | The status of an alert or task. If the event is an alert, this value is a fix procedure to be performed or the status of a call home operation. If the event is a task, this value is its progress or one of these final status categories: Canceled; Canceling; Completed; Completed, with information; Completed, with warning; Failed. |
| System Clearable | Whether the event can be cleared automatically by the system. The following values are possible: Yes (the event is cleared automatically by the system when the condition that is causing the event is resolved) or No (the event requires user intervention to clear, and needs to be cleared or deactivated manually after resolving the condition that is causing the event). |
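The ID format that is described in Table 9-3 (a locally generated number followed by E or T) is easy to split when event lists are processed outside the MI. The following sketch is a hypothetical helper, not part of the TS7700 software, and it assumes that the ID contains nothing besides the digits and the single type letter.

```python
import re
from typing import Tuple

EVENT_ID_RE = re.compile(r"(\d+)([ET])")
EVENT_TYPES = {"E": "event", "T": "task"}


def parse_event_id(event_id: str) -> Tuple[int, str]:
    """Split an ID such as 923E into its local number and its type."""
    match = EVENT_ID_RE.fullmatch(event_id)
    if match is None:
        raise ValueError(f"not a valid event ID: {event_id!r}")
    return int(match.group(1)), EVENT_TYPES[match.group(2)]
```

For the example in the table, `parse_event_id("923E")` returns the number 923 and the type `"event"`.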

Table 9-4 lists the actions that can be run on the Events table.

Table 9-4 Actions that can be run on the Events table

| To run this task | Action |
|---|---|
| Deactivate or clear one or more alerts | 1. Select at least one but no more than 10 events. 2. Click Mark Inactive. If a selected event is normally cleared by the system, confirm the selection. Other selected events are cleared immediately. A running task can be cleared, but if the task later fails, it is displayed again as an active event. |
| Enable or disable host notification for alerts | Select Actions → [Enable/Disable] Host Notification. This change affects only the accessing cluster. Tasks are not sent to the host. |
| View a fix procedure for an alert | Select Actions → View Fix Procedure. A fix procedure can be shown for only one alert at a time. No fix procedures are shown for tasks. |
| Download a comma-separated value (CSV) file of the events list | Select Actions → Download all Events. |
| View more details for a selected event | 1. Select an event. 2. Select Actions → Properties. |
| Hide or show columns on the table | 1. Right-click the table header. 2. Click the check box next to a column heading to hide or show that column in the table. Column headings that are checked display on the table. |
| Filter the table data | To filter by using a string of text: 1. Click in the Filter field. 2. Enter a search string. 3. Press Enter. To filter by column heading: 1. Click the down arrow next to the Filter field. 2. Select the column heading to filter by. 3. Refine the selection. |
| Reset the table to its default view | 1. Right-click the table header. 2. Click Reset Table Preferences. |

9.3.5 Performance

This section presents information for viewing IBM TS7700 Grid and Cluster performance and statistics.

All graphical views, except the Historical Summary, are from the last 15 minutes. The Historical Summary presents a customized graphical view of the different aspects of the cluster operation, in a 24-hour time frame. This 24-hour window can be slid back up to 90 days, which covers three months of operations.

For more information about the steps that you can take to achieve peak performance, see IBM Virtualization Engine TS7700 Series Best Practices - Understanding, Monitoring and Tuning the TS7700 Performance, WP101465:

Historical Summary

Figure 9-30 shows the Throughput View for the Historical Summary in Monitor → Performance MI operation in a tape-attached cluster. The performance data for a specific TS7700 cluster can be viewed on this page.

Figure 9-30 Performance window operation, throughput view

The MI is enhanced to accommodate the functions that are introduced by the code. Figure 9-31 shows the Performance Historical Summary and related chart selections that are available for this item.

Figure 9-31 Performance options and chart selections

Table 9-5 lists the chart elements that can be viewed or changed on this page.

Table 9-5 Elements of the Historical Summary chart

|

Element

|

Description

|

|

Y axes

|

The left vertical axis measures either throughput (MiBps) or copies (MiB), depending on the selected data sets. The right vertical axis measures the number of mounted virtual drives, reclaimed physical volumes, and data set size to copy. Possible measurements include MiB, GiBs, milliseconds, seconds, hours, and percentage.

|

|

X axis

|

The horizontal axis measures in hours the time period for the data sets shown. Measurements are shown in 15-minute increments by default. Click the time span (located at the top-center of the chart) to change the display increments. The following are the possible values:

•1 day

•12 hours

•1 hour

•30 minutes

•15 minutes

•custom

|

|

Last 24 hours

|

Click the icon in the top right corner of the page to reset the time span that is shown on the chart to the past 24-hour period. A change to the time span does not alter the configuration of data sets displayed in the main area of the chart.

|

|

Data sets

|

Data sets displayed in the main area of the chart are shown as lines or stacked columns.

Data sets related to throughput and copy queues can be grouped to better show relationships between these sets. See Table 9-6 on page 385 for descriptions of all data sets.

|

|

Legend

|

The area below the X axis lists all data sets selected to display on the chart, along with their identifying colors or patterns. The legend displays a maximum of 10 data sets. Click any data set shown in this area to toggle its appearance on the chart.

Note: To remove a data set from the legend, you must clear it using the Select metrics option.

|

|

Time span

|

The time period from which displayed data is drawn. This range is shown at the top of the page.

Note: Dates and times that are displayed reflect the time zone in which your browser is located. If your local time is not available, these values are shown in Coordinated Universal Time (UTC).

Click the displayed time span to modify its start or end values. Time can be selected in 15-minute increments.

•Start date and time: The default start value is 24 hours before the present date and time. You can select any start date and time within the 90 days that precede the present date and time.

•End date and time: The default end value is 24 hours after the start date or the last valid date and time within a 24-hour period. The end date and time cannot be later than the current date and time. You can select any end date and time that is between 15 minutes and 24 hours later than the start value.

|

|

Presets

|

Click one of the Preset buttons at the top of the vertical toolbar to populate the chart using one of three common configurations:

Throughput: Data sets in this configuration include the following:

•Remote Read

•Remote Write

•Recall from Tape

•Write to Tape

•Link Copy Out

•Link Copy In

•Primary Cache Device Read

Throttling: Data sets in this configuration include the following:

•Average Host Write Throttle

•Average Copy Throttle

•Average Deferred Copy Throttle

Copy queue: Data sets in this configuration include the following:

•Copy Queue Size

The established time span is not changed when a preset configuration is applied.

Note: The preset options that are available depend on the configuration of the accessing cluster. See Table 9-6 on page 385 for existing restrictions.

|

|

Select metrics

|

Click the Select metrics button on the vertical toolbar to add or remove data sets displayed on the Historical Summary chart. See Table 9-6 on page 385 for descriptions of all data sets.

|

|

Download spreadsheet

|

Click the Download spreadsheet button on the vertical toolbar to download a comma-separated (.csv) file to your web browser for the period shown on the graph. In the .csv file, time is shown in 15-minute intervals.

Note: The time reported in the CSV file is shown in UTC. You might find time differences if the system that you are using to access the Management Interface is configured for a different time zone.

|

|

Chart settings

|

Click the Chart settings button on the vertical toolbar to enable the low graphics mode for improved performance when many data points are displayed. Low graphics mode disables hover-over tool tips and improves chart performance in older browsers. If cookies are enabled on your browser, this setting is retained when you exit the browser.

Note: Low graphics mode is enabled by default when the browser is Internet Explorer, version 8 or earlier.

|
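The time span rules that are listed in Table 9-5 (a start within the preceding 90 days, an end that is no later than the current time, and a span of 15 minutes to 24 hours) can be expressed as a small check. This sketch is an illustration only. The function name is hypothetical, and the 15-minute selection increment is not checked because the text does not say how the increments are aligned.

```python
from datetime import datetime, timedelta
from typing import List

MAX_LOOKBACK = timedelta(days=90)   # earliest selectable start, relative to now
MIN_SPAN = timedelta(minutes=15)    # shortest allowed time span
MAX_SPAN = timedelta(hours=24)      # longest allowed time span


def validate_time_span(start: datetime, end: datetime, now: datetime) -> List[str]:
    """Return a list of rule violations; an empty list means the span is valid."""
    problems = []
    if start < now - MAX_LOOKBACK:
        problems.append("start is more than 90 days before the current time")
    if end > now:
        problems.append("end is later than the current time")
    span = end - start
    if span < MIN_SPAN or span > MAX_SPAN:
        problems.append("end must be 15 minutes to 24 hours after start")
    return problems
```

The default view, which starts 24 hours before the present time and ends at the present time, passes all three checks.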

Click the Select metrics button to open the Select metrics window to add or remove data sets displayed on the Historical Summary chart.

The Select metrics window organizes data sets by sections and categories.

The user can select up to 10 data sets, as listed in Table 9-6, to display on the Historical Summary chart.

Table 9-6 Data set descriptions

|

Metrics section

|

Metrics category

|

Data set

|

Description

|

|

Throughput

|

I/O

|

Channel R/W MiB/s

|

Transfer rate (MiBps) of host data on the FICON channel, which includes this information:

•Host raw read: Rate that is read between the HBA and host.

•Host raw write: Rate that is written to the virtual drive from the host.

|

|

Throughput

|

I/O

|

Primary Cache Read

|

Data transfer rate (MiBps) read between the virtual drive and HBA for the primary cache repository.

|

|

Throughput

|

I/O

|

Primary Cache Write

|

Data transfer rate (MiBps) written to the primary cache repository from the host through the HBA.

|

|

Throughput

|

I/O

|

Remote Read

|

Data transfer rate (MiBps) to the cache of the accessing cluster from the cache of a remote cluster as part of a remote write operation.

This data set is only visible when the accessing cluster is part of a grid.

|

|

Throughput

|

I/O

|

Remote Write

|

Data transfer rate (MiBps) to the cache of a remote cluster from the cache of the accessing cluster as part of a remote write operation.

This data set is only visible if the accessing cluster is part of a grid.

|

|

Throughput

|

Copies

|

Link Copy Out

|

Data transfer rate (MiBps) for operations that copy data from the accessing cluster to one or more remote clusters. This is data transferred between legacy TS7700 Grid links.

This data set is only visible if the accessing cluster is part of a grid.

|

|

Throughput

|

Copies

|

Link Copy In

|

Data transfer rate (MiBps) for operations that copy data from one or more remote clusters to the accessing cluster. This is data transferred between legacy TS7700 Grid links. This data set is only visible if the accessing cluster is part of a grid.

|

|

Throughput

|

Copies

|

Copy Queue Size

|

The maximum size of the incoming copy queue for the accessing cluster, which is shown in MiBs, GiBs, or TiBs. Incoming copy queue options include the following:

•Immediate

•Synchronous-deferred

•Immediate-deferred

•Deferred

•Family deferred

•Copy refresh

•Time delayed

•Total

This data set is only visible if the accessing cluster is part of a grid.

|

|

Throughput

|

Copies

|

Average Copy Life Span

|

The average age of virtual volumes to be copied to the distributed library for the accessing cluster. The following are the available options:

•Immediate Mode Copy

•Time Delayed Copy

•All other deferred type copies

This data set is only visible if the accessing cluster is part of a grid.

|

|

Storage

|

Cache

|

Cache to Copy

|

The number of GiBs that reside in the incoming copy queue of a remote cluster, but are destined for the accessing cluster. This value is the amount of data that is being held in cache until a copy can be made.

This data set is only visible if the accessing cluster is part of a grid.

|

|

Storage

|

Cache

|

Cache Hit

|

The number of completed mount requests where data is resident in the TVC.

If two distributed library access points are used to satisfy a mount with synchronous mode copy enabled, this count is advanced only when the data is resident in the TVC for both access points. For this reason, this data set is visible only if the accessing cluster is a TS7700 Tape Attach, or is part of a grid that contains a TS7700 Tape Attach.

|

|

Storage

|

Cache

|

Cache Miss

|

The number of completed mount requests where data is recalled from a physical stacked volume.

If two distributed library access points are used to satisfy a mount with synchronous mode copy enabled, this count is advanced when the data is not resident in the TVC for at least one of the two access points. For this reason, this data set is visible only if the accessing cluster is a TS7700 Tape Attach, or is part of a grid that contains a TS7700 Tape Attach.

|

|

Storage

|

Cache

|

Cache Hit Mount Time

|

The average time (ms) to complete Cache Hit mounts. This data set is visible only if the accessing cluster is attached to a tape library. If the cache is partitioned, this value is displayed according to partition.

|

|

Storage

|

Cache

|

Cache Miss Mount Time

|

The average time (ms) to complete Cache Miss mounts.

This data set is visible only if the accessing cluster is attached to a tape library. If the cache is partitioned, this value is displayed according to partition.

|

|

Storage

|

Cache

|

Partitions

Primary Used

|

If the accessing cluster is a TS7720 or TS7760 attached to a tape library, a numbered tab exists for each active partition. Each tab displays check boxes for these categories:

•Cache Hit

•Cache Miss

•Mount Time Hit

•Mount Time Miss

•Data in Cache

The amount of used cache in a partitioned, primary cache, according to partition.

This data set is only visible if the selected cluster is a TS7700T.

|

|

Storage

|

Cache

|

Data Waiting for Premigration

|

The amount of data in cache assigned to volumes waiting for premigration.

This data set is only visible if the selected cluster is a TS7700T.

|

|

Storage

|

Cache

|

Data Migrated

|

The amount of data in cache that has been migrated.

This data set is only visible if the selected cluster is a TS7700T.

|

|

Storage

|

Cache

|

Data Waiting for Delayed Premigration

|

The amount of data in cache assigned to volumes waiting for delayed premigration.

This data set is only visible if the selected cluster is a TS7700T.

|

|

Storage

|

Virtual Tape

|

Maximum Virtual Drives Mounted

|

The greatest number of mounted virtual drives. This value is a mount count.

|

|

Storage

|

Physical Tape

|

Write to Tape

|

Data transfer rate (MiBps) written to physical media from cache. This value typically represents premigration to tape.

This data set is not visible when the selected cluster is not attached to a library.

|

|

Storage

|

Physical Tape

|

Recall from Tape

|

Data transfer rate (MiBps) read from physical media to cache. This value is recalled data.

This data set is not visible when the selected cluster is not attached to a library.

|

|

Storage

|

Physical Tape

|

Reclaim Mounts

|

Number of physical mounts that are completed by the library for the physical volume reclaim operation. This value is a mount count.

This data set is not visible when the selected cluster is not attached to a library.

|

|

Storage

|

Physical Tape

|

Recall Mounts

|

Number of physical mounts that are completed by the library for the physical volume recall operation.

This data set is not visible when the selected cluster is not attached to a library.

|

|

Storage

|

Physical Tape

|

Premigration Mounts

|

Number of physical mount requests that are completed by the library to satisfy premigration mounts.

This data set is not visible when the selected cluster is not attached to a library.

|

|

Storage

|

Physical Tape

|

Physical Drives Mounted

|

The maximum, minimum, or average number of physical devices of all device types concurrently mounted. The average number displays only when you hover over a data point.

This data set is only visible when the selected cluster attaches to a library.

|

|

Storage

|

Physical Tape

|

Physical Mount Times

|

The maximum, minimum, or average number of seconds required to complete the execution of a mount request for a physical device. The average number displays only when you hover over a data point.

This data set is only visible when the selected cluster attaches to a library.

|

|

System

|

Throttling

|

Average Copy Throttle

|

The average time delay as a result of copy throttling, which is measured in milliseconds. This data set contains the averages of nonzero throttling values where copying is the predominant reason for throttling.

This data set is only visible if the selected cluster is part of a grid.

|

|

System

|

Throttling

|

Average Deferred Copy Throttle

|

The average time delay as a result of deferred copy throttling, which is measured in milliseconds. This data set contains the averages of 30-second intervals of the deferred copy throttle value.

This data set is only visible if the selected cluster is part of a grid.

|

|

System

|

Throttling

|

Average Host Write Throttle for Tape Attached Partitions

|

The average write overrun throttle delay for the tape attached partitions. This data set is the average of the non-zero throttling values where write overrun was the predominant reason for throttling.

This data set is only visible if the selected cluster is a TS7720 or TS7760 attached to a tape library.

|

|

System

|

Throttling

|

Average Copy Throttle for Tape Attached Partitions

|

The average copy throttle delay for the tape attached partitions. The value presented is the average of the non-zero throttling values where copy was the predominant reason for throttling.

This data set is only visible if the selected cluster is a TS7720 or TS7760 attached to a tape library.

|

|

System

|

Throttling

|

Average Deferred Copy Throttle for Tape Attached Partitions

|

The average deferred copy throttle delay for the tape attached partitions. This value is the average of 30-second intervals of the deferred copy throttle value during the historical record.

This data set is only visible if the selected cluster is part of a grid and is a TS7720 or TS7760 attached to a tape library.

|

|

System

|

Utilization

|

Maximum CPU Primary Server

|

The maximum percentage of processor use for the primary TS7700 server.

|

|

System

|

Utilization

|

Maximum Disk I/O Usage Primary Server

|

The maximum percentage of disk cache I/O usage as reported by the primary server in a TS7700.

|
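Two of the counting rules that are described in Table 9-6 can be made concrete with a short Python sketch: the Cache Hit and Cache Miss rule for a mount that uses two access points with synchronous mode copy, and the averaging of nonzero throttling values. The function names and sample values are hypothetical, and returning 0.0 when no nonzero sample exists is an assumption. The sketch illustrates the rules as the table describes them; it is not the TS7700 implementation.

```python
from statistics import mean


def classify_sync_mount(resident_at_first: bool, resident_at_second: bool) -> str:
    """Classify a mount that uses two access points with synchronous mode copy.

    The mount counts as a hit only when the data is resident in the TVC at
    both access points; if either one lacks the data, it counts as a miss.
    """
    return "hit" if resident_at_first and resident_at_second else "miss"


def average_nonzero(delays_ms: list[float]) -> float:
    """Average only the nonzero throttle delays (assumed 0.0 when none exist)."""
    nonzero = [value for value in delays_ms if value != 0]
    return mean(nonzero) if nonzero else 0.0


if __name__ == "__main__":
    print(classify_sync_mount(True, True))    # both access points resident
    print(classify_sync_mount(True, False))   # one access point needs a recall
    print(average_nonzero([0, 40, 0, 20]))    # zero samples are ignored
```

Ignoring the zero samples means that a throttle value on the chart reflects how severe throttling was while it was active, not how often it occurred during the interval.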

For more information about the values and what to expect in the resulting graphs, see Chapter 11, “Performance and monitoring” on page 623.

For more information about the window and available settings, see TS7700 R4.2 IBM Knowledge Center. TS7700 R4.2 IBM Knowledge Center is available locally on the TS7700 MI (by clicking the question mark icon at the upper right corner of the window) and at the following website:

For more information, see IBM Virtualization Engine TS7700 Series Best Practices - Understanding, Monitoring, and Tuning the TS7700 Performance, WP101465:

IBM Virtualization Engine TS7700 Series Best Practices - Understanding, Monitoring, and Tuning the TS7700 Performance is an in-depth study of the inner workings of the TS7700, and the factors that can affect the overall performance of a stand-alone cluster or a TS7700 grid. In addition, it explains throttling mechanisms and available tuning options for the subsystem to achieve peak performance.

Virtual mounts

This page is used to view the virtual mount statistics for the TS7700 Grid. Virtual mount statistics are displayed for activity on each cluster during the previous 15 minutes. These statistics are presented in bar graphs and tables and are organized according to number of virtual mounts and average mount times.

•Number of virtual mounts: This section provides statistics for the number of virtual mounts on a given cluster during the most recent 15-minute snapshot. Snapshots are taken at 15-minute intervals. Each numeric value represents the sum of values for all active partitions in the cluster. Information displayed includes the following:

– Cluster: The cluster name.

– Fast-Ready: The number of virtual mounts that were completed using the Fast-Ready method.

– Cache Hits: The number of virtual mounts that were completed from cache.

– Cache Misses: The number of mount requests that could not be fulfilled from cache.

|

Note: This field is visible only if the selected cluster possesses a physical library.

|

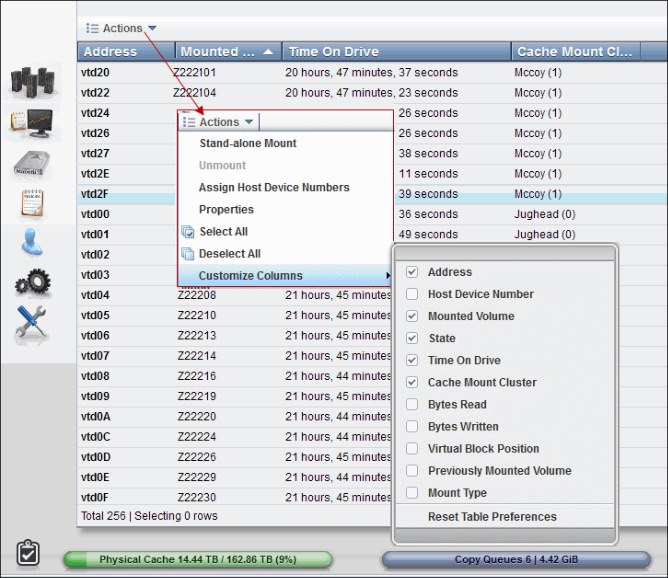

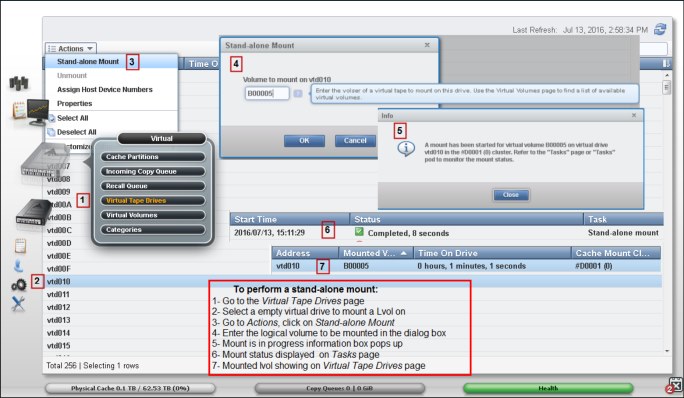

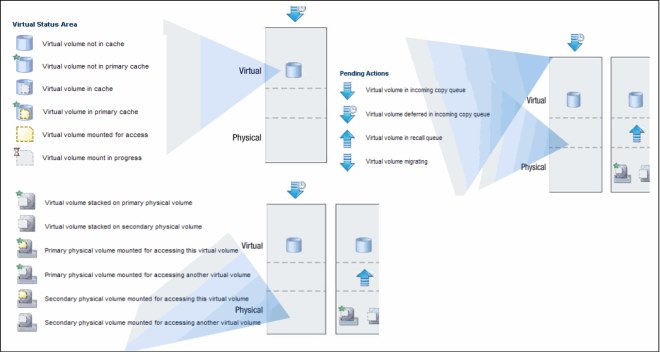

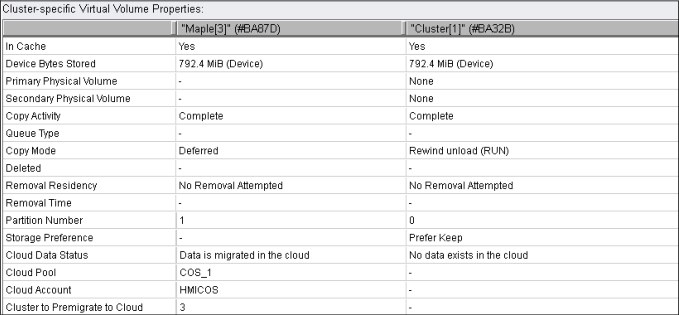

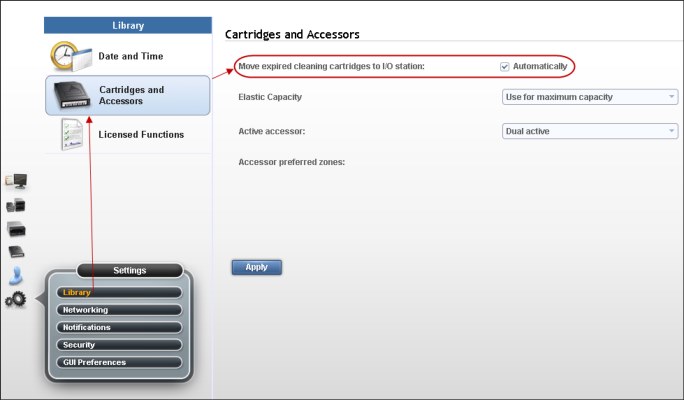

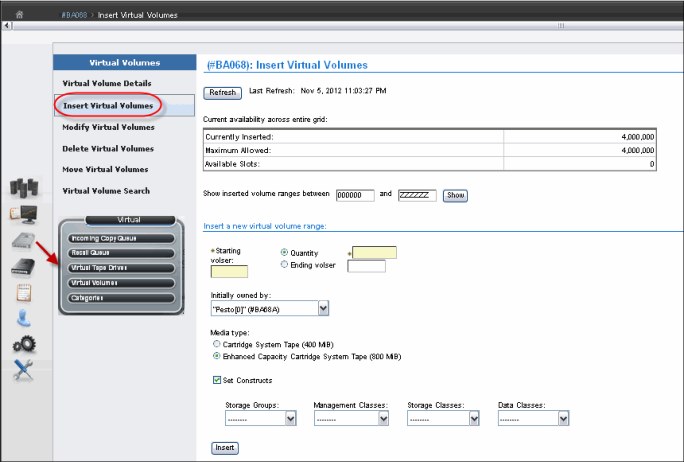

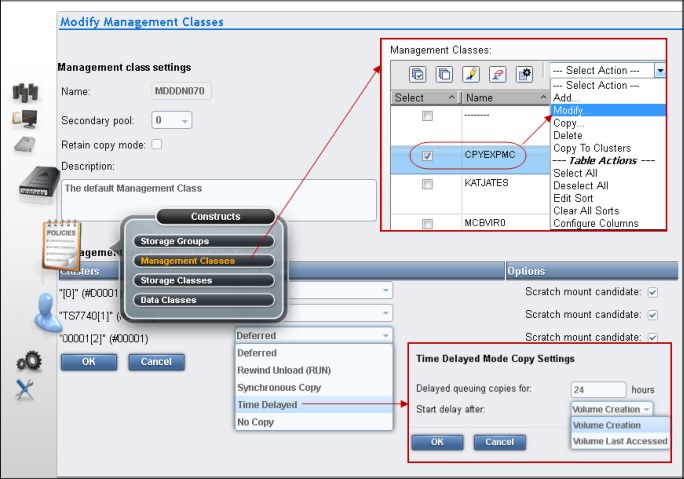

– Total: Total number of virtual mounts.