Chapter 3

Identifying Project Scope Risk

“Well begun is half done.”

—ARISTOTLE

Although beginning well will never actually complete half of a project, beginning poorly will lead to disappointment, rework, stress, and potential failure. A great deal of project risk can be discovered at the earliest stages of project work, when defining the scope of the project.

For risks associated with the elements of the project management triple constraint (scope, schedule, and resources), scope risk generally will be considered first. Of the three types of projects that will fail—those that are beyond your capabilities, those that are overconstrained, and those that are ineffectively executed—the first type is the most significant, because this type of project is literally impossible. Identification of scope risks will reveal either that your project is feasible or that it lies beyond the state of your art. Early decisions to shift the scope or abandon the project are essential on projects with significant scope risks.

There is little consensus in project management circles on a precise definition of “scope.” Broad definitions use scope to refer to everything in the project, and narrow definitions limit project scope to include only project deliverables. For the purposes of this chapter, project scope is defined to be consistent with A Guide to the Project Management Body of Knowledge (PMBOK® Guide). The type of scope risk considered here relates primarily to the project deliverable(s). Other types of project risk are covered in later chapters.

Sources of Scope Risk

Scope risks are most numerous in the Project Experience Risk Information Library (PERIL) database, representing more than one-third of the data. Even more important, risks related to scope accounted for nearly half of the total schedule impact. The two broad categories of scope risk in PERIL relate to changes and defects. By far the most damage was due to poorly managed change (two-thirds of the overall scope impact and almost a third of all the impact in the entire database), but all the scope risks represented significant exposure for these projects. While some of the risk situations, particularly in the category of defects, were legitimately “unknown” risks, quite a few of the problems could have been identified in advance and managed as risks. The two major root-cause categories for scope risk are separated into more detailed subcategories.

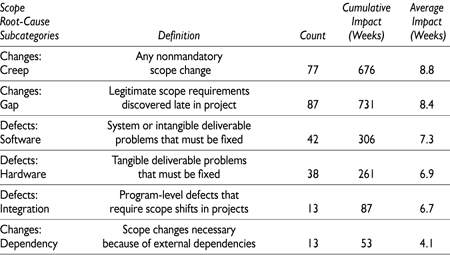

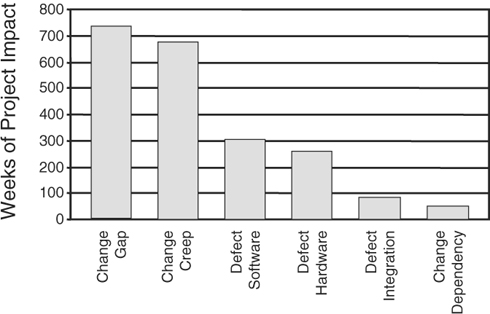

Scope changes due to gaps were the most frequent, but scope creep changes were the most damaging on average. A Pareto chart of overall impact by type of risk is summarized in Figure 3-1, and a more detailed analysis follows.
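A Pareto chart orders categories from largest to smallest total impact and tracks the cumulative share of the whole. The Python sketch below shows only that arithmetic. The function name `pareto` and the numbers in `example` are hypothetical placeholders chosen to keep the calculation easy to follow; they are not figures from the PERIL database.

```python
from typing import Dict, List, Tuple


def pareto(impacts: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Order categories by impact (largest first) and attach the cumulative
    percentage of total impact reached at each category."""
    total = sum(impacts.values())
    if total <= 0:
        raise ValueError("total impact must be positive")
    rows: List[Tuple[str, float, float]] = []
    running = 0.0
    for name, value in sorted(impacts.items(), key=lambda kv: kv[1], reverse=True):
        running += value
        rows.append((name, value, 100.0 * running / total))
    return rows


# Placeholder weeks of slip for each subcategory: NOT PERIL data.
example = {
    "scope gaps": 30.0,
    "scope creep": 25.0,
    "software": 15.0,
    "hardware": 14.0,
    "dependencies": 10.0,
    "integration": 6.0,
}

for name, weeks, cumulative in pareto(example):
    print(f"{name:<14}{weeks:6.1f}{cumulative:7.1f}%")
```

The bars of the chart come from the second column; the cumulative line comes from the third.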

Change Risks

Change happens. Few if any projects end with the original scope intact. Managing scope risk related to change relies on minimizing the loose ends of requirements at project initiation and having (and using) a robust process for controlling changes throughout a project. In the PERIL database, there are three categories of scope change risks: scope creep, scope gaps, and dependencies.

Figure 3-1. Total project impact by scope root-cause subcategories.

Scope creep was the most damaging type of change risk, resulting in an average schedule slip of nearly nine weeks. Scope gaps were only slightly less damaging, averaging well over eight weeks of slippage, but they were more common and had greater total impact. Each of these subcategories individually represented about one-sixth of all the problems in the PERIL database.

Scope gaps are the result of committing to a project before the project requirements are complete. When legitimate needs are uncovered later in the project, change is unavoidable. Some of the overlooked requirements were a consequence of the novelty of the project, and some were because of customers, managers, team members, or other project stakeholders who were not available at the project start. Although some of the scope gaps are probably unavoidable, in most of the cases these gaps were due to incomplete or rushed analysis. A more thorough scope definition and project work breakdown would have revealed the missing or poorly defined portions of the project scope.

Scope creep plagues all projects, especially technical projects. New opportunities, interesting ideas, undiscovered alternatives, and a wealth of other information emerge as the project progresses, providing a perpetual temptation to redefine the project and to make it “better.” Some project change of this sort may be justified through clear-eyed business analysis, but too many of these nonmandatory changes sneak into projects because their consequences are either never analyzed or drastically underestimated. To make matters worse, the purported benefits of the change are usually overestimated. In retrospect, much scope creep delivers little or no benefit. In some particularly severe cases, the changes in scope delay the project so much that the ultimate deliverable has no value; the need is no longer pressing or has been met by other means. Scope creep represents unanticipated additional investment of time and money, because of both newly required effort and the need to redo work already completed. Scope creep is most damaging when entirely new requirements are piled on as the project runs. Such additions not only make projects more costly and more difficult to manage but also can significantly delay delivery of the originally expected benefits. Managing scope creep requires an initial requirements definition process that thoroughly considers potential alternatives, as well as an effective process for managing specification changes throughout a project.

Scope creep can come from any direction, but one of the most insidious is from inside the project. Every day a project progresses you learn something new, so it’s inevitable that you will see things that were not apparent earlier. This can lead to well-intentioned proposals by someone on the project team to “improve” the deliverable. Sometimes, scope creep of this sort happens with no warning or visibility until too late, within a portion of the project where the shift seems harmless. Only after the change is made do the real, and sometimes catastrophic, unintended consequences emerge. Particularly on larger, more complicated projects, all changes deserve a thorough analysis and public discussion, with a particularly skeptical analysis of all alleged benefits. Both scope creep and scope gaps are universal and pervasive issues for technical projects.

Scope dependencies are due to external factors that affect the project and are the third category of change risk. (Dependency risks that are primarily due to timing rather than requirements issues are characterized as schedule risks in the database.) Though less frequent in the PERIL database, compared with other scope change risks, scope dependencies did represent an average slippage of over a month. Admittedly, some of the cases in the database involved situations that no amount of realistic analysis would have uncovered in advance. Other examples, though, were a result of factors that should not have come as complete surprises. Although legal and regulatory changes do sometimes happen without notice, a little research will generally provide advance warning. Projects also depend on infrastructure stability, and periodic review of installation and maintenance schedules will reveal plans for new versions of application software, databases, telecommunications, hardware upgrades, or other changes that the project may need to anticipate and accommodate.

Defect Risks

Technical projects rely on many complicated things to work as expected. Unfortunately, new things do not always operate as promised or as required. Even normally reliable things may break down or fail to perform as desired in a novel application. Defects represent about a third of the scope risks and about one-seventh of all the risks in the PERIL database. The three categories of defect risks are software, hardware, and integration.

Software problems and hardware failures were the most common types of defect risk in the PERIL database, approximately equal in frequency. They were also roughly equal in impact, with software defects slightly exceeding seven weeks of delay on average and hardware problems a bit less than seven weeks. In several cases, the root cause was new, untried technology that lacked needed functionality or reliability. In other cases, a component created by the project (such as a custom integrated circuit, a board, or a software module) did not work initially and had to be fixed. In still other cases, critical purchased components delivered to the project failed and had to be replaced. Nearly all of these risks are visible, at least as possibilities, through adequate analysis and planning.

Some hardware and software functional failures related to quality or performance standards. Hardware may be too slow, require too much power, or emit excessive electromagnetic interference. Software may be too difficult to operate, have inadequate throughput, or fail to work in unusual circumstances. As with other defects, the definition, planning, and analysis of project work will help in anticipating many of these potential quality risks.

Integration defects were the third type of defect risk in the PERIL database. These defects related to system problems above the component level. Although they were not as common in the database, they were quite damaging, causing an average of nearly seven weeks of project slip. For large programs, work is typically decomposed into smaller, related subprojects that can progress in parallel. Successful integration of the deliverables from each of the subprojects into a single system deliverable requires not only that each of the components delivered operates as specified but also that the combination of all these parts functions as a system. All computer users are familiar with this failure mode. Whenever all the software in use fails to play nicely together, our systems lock up, crash, or report some exotic “illegal operation.” Integration risks are particularly problematic because they generally occur near the project deadline and are never easy to diagnose and correct. Again, thorough analysis relying on disciplines such as software architecture and systems engineering is essential to timely identification and management of possible integration risks.

Black Swans

Based on schedule impact, the worst 20 percent of the risks from each category in the PERIL database—defined as “black swans”—deserve more detailed attention. We’ll explore these “large-impact, hard-to-predict, rare events” in this section. Each of the black swan risks represented at least three months of schedule slip, so each certainly qualifies as large impact. Black swan risks are rare; the PERIL database has an intentional bias in favor of the most serious risks, which are (or at least we hope are) not risks we expect to see frequently. The purpose of this section and the discussions in Chapters 4 and 5 is to make some of these black swans easier to predict.

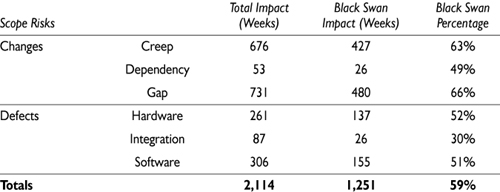

Of the most damaging 127 risks in the PERIL database, 64—just over half—were scope risks. In the database as a whole, the black swans accounted for slightly more than half of the total risk impact. The top scope risks exceeded this with nearly 60 percent of the aggregate scope risk impact. The details are presented in the following table.

As the table shows, the black swan scope risks were dominated by change risk, with about three-quarters of these risks in terms of both quantity and impact. When major change risks occur, their effects are painful. Black swan defect risks were less common as well as somewhat less damaging overall, because recovery from these risks is generally more straightforward.
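The black swan selection described above (the worst 20 percent of each category, ranked by schedule impact) and the share of total impact those risks represent can be sketched as follows. This is an illustrative sketch, not the procedure used to build the PERIL database: the `Risk` record, the function names, the rule of rounding up when 20 percent of a category is not a whole number, and the sample risks are all assumptions.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Risk(NamedTuple):
    category: str      # e.g. "scope", "schedule", "resource"
    description: str
    slip_weeks: float  # schedule impact


def black_swans(risks: List[Risk], top_percent: int = 20) -> List[Risk]:
    """Return the worst `top_percent` of risks in each category by schedule slip."""
    by_category: Dict[str, List[Risk]] = defaultdict(list)
    for risk in risks:
        by_category[risk.category].append(risk)
    worst: List[Risk] = []
    for group in by_category.values():
        group.sort(key=lambda r: r.slip_weeks, reverse=True)
        # Integer ceiling of len * percent / 100 (rounding up is an assumption).
        count = (len(group) * top_percent + 99) // 100
        worst.extend(group[:count])
    return worst


def impact_share(subset: List[Risk], everything: List[Risk]) -> float:
    """Fraction of the total schedule impact accounted for by `subset`."""
    return sum(r.slip_weeks for r in subset) / sum(r.slip_weeks for r in everything)


# Hypothetical sample risks, invented for illustration.
risks = [
    Risk("scope", "late requirement gap", 12),
    Risk("scope", "feature creep", 4),
    Risk("scope", "missed regulation", 2),
    Risk("scope", "board defect", 1),
    Risk("scope", "module defect", 1),
    Risk("schedule", "supplier slip", 10),
    Risk("schedule", "late approval", 3),
    Risk("schedule", "test lab delay", 3),
    Risk("schedule", "holiday overlap", 2),
    Risk("schedule", "late shipment", 2),
]

swans = black_swans(risks)
print([r.description for r in swans])
print(f"{impact_share(swans, risks):.0%} of total impact")
```

Selecting per category, rather than across the whole list, keeps one dominant category from crowding the others out of the black swan set.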

There were forty-seven black swan scope risks associated with change, dominated by scope gaps (with a total of twenty-five). Examples of the scope gap risks were:

• Project manager expected the solution to be one item, but it proved to be four.

• New technology required unanticipated changes to function.

• Development plans failed to include all of the 23 required applications.

• End users were too little involved in defining the new system.

• Requirements were understood differently by key stakeholders.

• Scope initially proposed for the project did not receive upper management sign-off.

• Some countries involved provided incomplete initial requirements.

• Fit/gap analysis was poor.

• The architect determined late that the new design plan would be considerably more complex than expected.

• Lack of consensus on the specifications resulted in late project adjustments.

• When a survey required for the project was assigned to several people in different countries, each assumed someone else would do it but no one did.

• A midproject review turned up numerous additional regulations.

• Manufacturing problems were not seen in the original analysis.

Most of the rest of the change risk black swans were attributable to scope creep. Among these twenty-one risks were:

• Scoping for the project increased substantially after the award was won.

• New technology was introduced late in the project.

• The project team agreed to new requirements, some of which proved to be impossible.

• Late changes were poorly managed.

• The contract required state-of-the-art materials, which changed significantly over the project’s two-year duration.

• Volume requirements increased late in the project, requiring extensive rework.

• Midproject feature revisions had a major impact on effort and schedule, making the product late to market.

• A merger occurred during a companywide software refresh of all desktops and laptops, adding more systems and hugely complicating the project.

• A system for expense analysis expanded into redesign of most major internal systems.

• One partner on a Web design project expanded scope without communicating or getting approval from others.

• Late change required new hardware and a second phase.

• Application changed midproject to appeal to a prospective Chinese customer (who never bought).

• Project specifications changed, requiring material imported from overseas.

There was a single black swan change risk caused by an external dependency (in a pharmaceutical project, a significant study was unexpectedly mandated).

There were fewer black swans in the scope defect categories (seventeen total). Software and hardware defects each caused eight, and one was a consequence of poor integration. Examples of scope defect risks included:

• Redesign was required in a printer development project that failed to meet print quality goals.

• The system being developed had twenty major defects and eighty additional problems that had to be fixed.

• In user acceptance testing, a fatal flaw sent the deliverable back to development.

• During unit testing, performance issues arose with volume loads.

• Contamination of an entire batch of petri plates meant redoing them all.

• Server crashed with four months of information, none of it backed up, requiring everything to be reentered.

• Hardware failed near the end of a three-month final test, necessitating refabrication and retest.

• Purchased component failed, and continuing the project depended on a brute-force, difficult-to-support workaround.

• System tool could not be scaled to a huge Web application.

• A software virus destroyed interfaces in two required languages, requiring rework.

• A purchased learning management system had unanticipated modular complexity.

Identifying scope risks similar to the examples given here can expose many potential problems. Reviewing these examples and the additional scope risks from the PERIL database listed in the Appendix can be a good starting point for uncovering possible scope-related problems on your next project.

Defining Deliverables

Scope gaps were the top category of risk in the PERIL database. Defining deliverables thoroughly is a powerful tool for uncovering these potential project risks. The process for specifying deliverables for a project varies greatly depending on the type and the scale of the project.

For small projects, informal methods can work well, but for most projects, good project risk management begins with a more rigorous approach, and defining the deliverables is the initial opportunity for the project leader and team to begin uncovering risks. Whatever the process, the goal of deliverable definition is developing specific, written requirements that are clear, unambiguous, and agreed to by all project stakeholders and contributors.

A good, thorough process for defining project deliverables begins with identifying the people who should participate, including everyone who needs to agree. Project scope risk increases when key project contributors are not involved in the project early enough. Many scope gaps only become visible late in the project when these people do finally join the project team. Whenever it is not possible to work with the specific people who will later be part of the project team, locate and work with people who are available and who can represent all the needed perspectives and functional areas. If you need to, call in favors, beg, plead, or do whatever you need to do to get the right people involved.

Deliverable definition includes all of your core project team, but it rarely ends there. You will also need others from outside your team, from other functions such as marketing, finance, sales, and support. You are also likely to need input from outside your organization, from customers, users, other related project teams, and potential subcontractors. Consider the project over its entire development life cycle. Think about who will be involved with all stages of design, development, manufacturing or assembly, testing, documentation, marketing, sales, installation, distribution, support, and other aspects of the work.

Even when the right people are available and involved early in the initial project definition activities, it is difficult to be thorough. The answers for many questions may not yet be available, and some of your data may be ranges or even guesses. Specifics concerning new methods or technologies add more uncertainty. Three useful techniques for uncovering scope risk are using a documented definition process, developing a straw-man definition document, and adopting a rigorous evolutionary methodology.

Deliverable Definition Process

Processes for defining deliverables vary depending on the nature of the project. For product development projects, the following guidelines are a typical starting point. By reviewing such a list and documenting both what you know and don’t know, you set the foundation for project scope and begin to identify activities for your project plan necessary to fill in the gaps.

Topics for a typical deliverable definition process are:

• Alignment with business strategy (How does this project contribute to stated high-level business objectives?)

• User and customer needs (Has the project team captured the ultimate end-user requirements that must be met by the deliverable?)

• Compliance (Has the team identified all relevant regulatory, environmental, and manufacturing requirements, as well as any relevant industry standards?)

• Competition (Has the team identified both current and projected alternatives to the proposed deliverable, including not undertaking the project?)

• Positioning (Is there a clear and compelling benefit-oriented project objective that supports the business case for the project?)

• Decision criteria (Does this project team have an agreed-upon hierarchy of measurable priorities for cost, time, and scope?)

• Delivery (Are logistical requirements understood and manageable? These include, but are not limited to, sales, distribution, installation, sign-off, and support.)

• Sponsorship (Does the management hierarchy collectively support the project and will they provide timely decisions and ongoing resources?)

• Resources (Does the project have, and will it continue to have, the staffing and funding needed to meet the project goals within the allotted time?)

• Technical risk (Has the team assessed the overall level of risk it is taking? Are technical and other exposures well documented?)

(This list is based on the 1972 SAPPHO Project at the University of Sussex, England.)

Although this list is hardly exhaustive, examining each criterion and documenting the information you already have provides the initial data for scoping and reveals what is missing. Determining the degree to which you understand each element (on a scale ranging from “Clueless” on one extreme to “Omniscient” on the other) reveals the biggest gaps. Although some level of uncertainty is inevitable, this analysis clarifies where the exposures are and helps you and the project sponsor decide whether the level of risk is inappropriately high. The last item on the list, technical risk, is most central to scope risk identification. High-level project risk assessment techniques are discussed in detail later in this chapter.
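The text names only the endpoints of the understanding scale. The following sketch assumes a hypothetical 1-to-5 numeric scale (1 for “Clueless,” 5 for “Omniscient”) and an arbitrary threshold for flagging the least-understood topics from the list above. The function name `biggest_gaps` and the ratings are invented for illustration.

```python
from typing import Dict, List

# Assumed numeric scale; the text names only the endpoints.
CLUELESS, OMNISCIENT = 1, 5


def biggest_gaps(ratings: Dict[str, int], threshold: int = 2) -> List[str]:
    """Return topics rated at or below `threshold`, least understood first."""
    for topic, score in ratings.items():
        if not CLUELESS <= score <= OMNISCIENT:
            raise ValueError(f"{topic}: {score} is outside {CLUELESS}-{OMNISCIENT}")
    weak = [topic for topic, score in ratings.items() if score <= threshold]
    return sorted(weak, key=lambda topic: ratings[topic])  # stable for ties


# Invented ratings for one hypothetical project.
ratings = {
    "Alignment with business strategy": 4,
    "User and customer needs": 2,
    "Compliance": 3,
    "Competition": 3,
    "Positioning": 4,
    "Decision criteria": 2,
    "Delivery": 3,
    "Sponsorship": 5,
    "Resources": 3,
    "Technical risk": 1,
}
print(biggest_gaps(ratings))
```

Each flagged topic is a candidate for an investigation activity in the project plan.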

Straw-Man Definition Document

Most books on project management prattle on about identifying and documenting all the known project requirements. This is much easier said than done in the real world; it is hard to get users and stakeholders of technical projects to cooperate with this strategy. When too little about a project is clear, many people see only two options: accept the risks associated with incomplete definition (including inevitable scope creep), or abandon the project. Between these, however, lies a third option. By constructing a straw-man definition, instead of simply accepting the lack of data, the project team defines the specific requirements. These requirements can come from earlier projects, assumptions, or guesses, or they can come from your team’s understanding of the problem that the project is supposed to solve. Any definition constructed this way is certain to be inaccurate and incomplete, but formalizing requirements leads to one of two beneficial results.

The first possibility is that these made-up requirements will be accepted and approved, giving you a solid basis for planning. Once sign-off has occurred, anything that is not quite right or deemed incomplete can still be changed, but only through a formal project change management process. Some contracting firms get rich using this technique. They win business by quoting fixed fees that are below the cost of delivering all the stated requirements, knowing full well that there will be changes. They then make their profits by charging for the inevitable changes that occur, generating large incremental project billings. Even for projects where the sponsors and project team are in the same organization, the sign-off process gives the project team a great deal of leverage when changes are proposed later in the project. (This whole process brings to mind the old riddle: How do you make a statue of an elephant? Answer: You get an enormous chunk of marble and chip off anything that does not look like an elephant.)

The second possible outcome is a flood of criticism, corrections, edits, and “improvements.” Whereas most people are intimidated by a blank piece of paper or an open-ended question, everyone seems to be a critic when handed a draft. Once a straw-man requirements document is created, the project leader can circulate it far and wide as “pretty close, but not exactly right yet.” Using such a document to gather comments (and providing big, red pens to get things rolling) is effective for the project, though it can be humbling to the original authors. In any case, it is always better to identify scoping issues early than to find you missed something during acceptance testing.

Evolutionary Methodologies

When the scope gaps are extremely large, a third approach to scope definition may be more productive. Evolutionary (or cyclic) methodologies are sometimes used for software development, where the end deliverable is truly novel and cannot be specified with much certainty. Rather than defining a system as a whole, these more organic approaches set out a general overall objective and then describe incremental stages, each producing a functional deliverable. Development projects have employed these step-by-step techniques since the 1980s, and they are still widely applied for innovative software development by small project teams that have ready access to their end users. The system built at the end of each development cycle adds more functionality, and each release brings the project closer to its destination. As the work continues, specific scope is defined for the next cycle or two using user feedback from testing of the previous cycle’s deliverables. Cycles vary from about two to six weeks, depending on the specific methodology, and the deliverables for later cycles are defined only in general terms. The scoping will evolve as the project proceeds using user evaluations and other data collected along the way for course corrections.

Although this approach can be an effective technique for managing revolutionary projects where definition is not initially possible, it does carry the risk of institutionalizing scope creep. It can also result in “gold plating,” or delivering additional functionality because it’s possible, not because it’s necessary.

Historically, evolutionary methodologies have carried higher costs than other project approaches. Compared with projects that are able to define project deliverables with good precision early using a more traditional “waterfall” life cycle, evolutionary development is both slower and more expensive. Because traditionally run projects avoid a meandering definition process and do not need to deliver to users every cycle and then evaluate their feedback, their costs may be as little as a third of those of evolutionary projects, and their timelines may be half as long. From a risk standpoint, evolutionary methodologies focus primarily on scope risk, starting the project with no certain end date or budget. Without careful management, such projects might never end.

Risk management of these multicycle projects requires frequent reevaluation of the current risks as well as extremely disciplined scope management. To manage overall risk using evolutionary methodologies, set limits for both time and money, not only for the project as a whole but also for checkpoints no more than a few months apart.
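One way to make those limits concrete is to record a cumulative time and budget ceiling for each checkpoint and test actual progress against it. In this minimal sketch, the `Checkpoint` fields, the function names, the 13-week default standing in for “a few months” between checkpoints, and the sample plan are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Checkpoint:
    name: str
    week_limit: float    # cumulative elapsed weeks allowed at this checkpoint
    budget_limit: float  # cumulative spending allowed at this checkpoint


def spacing_ok(plan: List[Checkpoint], max_gap_weeks: float = 13) -> bool:
    """True if no two consecutive checkpoints (starting from week 0) are
    more than `max_gap_weeks` apart."""
    previous = 0.0
    for cp in plan:
        if cp.week_limit - previous > max_gap_weeks:
            return False
        previous = cp.week_limit
    return True


def breaches(cp: Checkpoint, elapsed_weeks: float, spent: float) -> List[str]:
    """List every limit exceeded at this checkpoint (an empty list means on track)."""
    problems = []
    if elapsed_weeks > cp.week_limit:
        problems.append(f"{cp.name}: {elapsed_weeks} weeks exceeds {cp.week_limit}")
    if spent > cp.budget_limit:
        problems.append(f"{cp.name}: spent {spent} exceeds {cp.budget_limit}")
    return problems


# Hypothetical plan: cumulative limits every 12 weeks.
plan = [
    Checkpoint("checkpoint 1", 12, 60_000),
    Checkpoint("checkpoint 2", 24, 120_000),
    Checkpoint("checkpoint 3", 36, 180_000),
]
print(spacing_ok(plan))                # checkpoints are at most 12 weeks apart
print(breaches(plan[1], 26, 110_000))  # over on time, within budget
```

A nonempty result from `breaches` is the signal to stop and reevaluate, rather than to start another cycle automatically.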

Current thinking on evolutionary software development includes a number of methodologies described as agile, adaptive, or lightweight. These methods adopt more robust scope control and incorporate project management practices intended to avoid the “license to hack” nature of some of the earlier evolutionary development models. “Extreme programming” (XP) is a good example of this. XP is intended for use on relatively small software development projects by project teams collocated with their users. It adopts effective project management principles for estimating, managing scope, setting acceptance criteria, planning, and communicating. XP puts pressure on the users to determine the overall scope initially, and based on this the project team determines the effort required for the work. Development cycles of a few weeks are used to implement the scope incrementally, as prioritized by the users, but the amount of scope (which is carved up into “stories”) delivered in each cycle is determined exclusively by the programmers. XP allows revision of scope as the project runs, but only as a zero-sum game—any additions cause something to be bumped out or deferred until later. XP also rigorously avoids scope creep in the current cycle.
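The zero-sum rule can be sketched in a few lines: users set priority, programmers set estimates, and adding a story to a fixed-capacity plan pushes the lowest-priority work out. This is a simplified, hypothetical model, not a description of any XP tool. The names `Story` and `add_story` are invented, it fills capacity greedily in priority order, and it models release-level scope only; it does not model the current cycle, which XP keeps frozen.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Story:
    title: str
    priority: int  # set by users; lower number = more important (assumption)
    points: int    # effort estimate, set by the programmers


def add_story(scope: List[Story], new: Story, capacity: int) -> Tuple[List[Story], List[Story]]:
    """Zero-sum scope change: return (kept, bumped) so that total points in
    `kept` never exceed `capacity`. Stories are considered in priority order
    and greedily kept while they fit; the rest are deferred."""
    if new.points > capacity:
        raise ValueError(f"{new.title} alone exceeds capacity")
    kept: List[Story] = []
    bumped: List[Story] = []
    used = 0
    for story in sorted(scope + [new], key=lambda s: s.priority):
        if used + story.points <= capacity:
            kept.append(story)
            used += story.points
        else:
            bumped.append(story)
    return kept, bumped


# Hypothetical release plan that already fills its 18-point capacity.
scope = [Story("login", 1, 5), Story("search", 2, 8), Story("reports", 3, 5)]
kept, bumped = add_story(scope, Story("export", 2, 3), capacity=18)
print([s.title for s in kept])    # export displaces lower-priority work
print([s.title for s in bumped])
```

A greedy fill can keep a small low-priority story while deferring a larger, higher-priority one; in practice the team would settle such cases with the users.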

Scope Documentation

However you go about defining scope, once it’s defined you need to write it down. Managing scope risk requires a scope statement that clearly defines both what you will deliver and what you will not deliver. One problematic type of scope definition characterizes project requirements as “musts” and “wants.” Although it may be fine to have some flexibility during the earliest project stages, carrying uncertainty into development work exacerbates scope risk. Retaining a list of “want to have” features remains common on many high-tech projects; it makes planning chaotic and estimates inexact, and it ultimately results in late (often expensive) scope changes. From a risk management standpoint, the “is/is not” technique is far superior to “musts and wants.” The “is” list is equivalent to the “musts,” but the “is not” list serves to limit scope. Determining what is not in the project specification is never easy, but if you fail to do it early, many scope risks will remain hidden behind a moving target. An “is not” list does not cover every possible thing the project might include. It is generally a list of completely plausible, desirable features that could be included, and in fact might well be in scope for some future project, just not this one.
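An “is/is not” list also gives a simple triage rule for proposed features. In the sketch below, the class name, method, and feature names are hypothetical. Anything on the “is” list is in scope, anything on the “is not” list is out of scope for this project, and anything on neither list is treated as undecided and routed through change control, on the assumption that the project has a formal change process.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ScopeStatement:
    is_list: FrozenSet[str]      # what the project will deliver
    is_not_list: FrozenSet[str]  # plausible features explicitly excluded

    def __post_init__(self) -> None:
        # A feature cannot be both promised and excluded.
        overlap = self.is_list & self.is_not_list
        if overlap:
            raise ValueError(f"on both lists: {sorted(overlap)}")

    def classify(self, feature: str) -> str:
        if feature in self.is_list:
            return "in scope"
        if feature in self.is_not_list:
            return "out of scope for this project"
        return "undecided: route through change control"


# Hypothetical scope statement for an order-entry system.
scope = ScopeStatement(
    is_list=frozenset({"web order entry", "CSV import"}),
    is_not_list=frozenset({"mobile app", "multi-currency pricing"}),
)
for feature in ("CSV import", "mobile app", "dark mode"):
    print(f"{feature}: {scope.classify(feature)}")
```

The third outcome is the point of the exercise: a request that matches neither list is visible as a scope decision instead of slipping in unnoticed.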

The “is/is not” technique is particularly important for projects that have a fixed deadline and limited resources, because it defines a boundary for scope consistent with the timing and budget limits. It is nearly always better to deliver the minimum requirements early than either to set aggressive scoping objectives that result in being late or to meet the deadline only by dropping promised features near the end of the project. As you document your project scope, establish limits that define what the project will not include, to minimize scope creep.

There are dozens of formats for a document that defines scope. In product development, it may be a reference specification or a product data sheet. In a custom solution project (and for many other types of projects), it may be a key portion of the project proposal. For information technology projects, it may be part of the project charter document. In other types of projects, it may be included in a statement of work or a plan of record. For agile software methodologies, it may be a brief summary on a Web page and a collection of index cards tacked to a wall or forms taped to a whiteboard. Whatever it may be called or be a part of, an effective definition for project deliverables must be in writing. Specific information typically includes:

• A description of the project (What are you doing?)

• Project purpose (Why are you doing it?)

• Measurable acceptance and completion criteria

• Planned project start

• Intended customer(s) or users

• What the project will and will not include (“is/is not”)

• Dependencies (both internal and external)

• Staffing requirements (in terms of skills and experience)

• High-level risks

• Cost (at least a rough order-of-magnitude)

• Hardware, software, and other infrastructure required

• Detailed requirements, outlining functionality, usability, reliability, performance, supportability, and any other significant issues

• Other data customary and appropriate to your project

The third item on the list, acceptance criteria, is particularly important for identifying defect risks. When the requirements to be used at the end of the project are unclear or not defined, there is little chance that you will avoid problems, rework, and late project delay. The key for identifying scope risk is to capture what you know and, even more important, to recognize what you still need to find out.
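Recognizing what you still need to find out can start with checking a draft scope document against the list above. This sketch uses hypothetical keys that paraphrase the list items (the open-ended “other data” item is omitted) and treats a blank entry the same as a missing one; the function name and the draft contents are invented for illustration.

```python
from typing import Dict, List

# Hypothetical keys paraphrasing the scope document list in the text.
REQUIRED_ITEMS = [
    "description",
    "purpose",
    "acceptance_criteria",
    "planned_start",
    "customers_or_users",
    "is_is_not",
    "dependencies",
    "staffing",
    "high_level_risks",
    "cost_estimate",
    "infrastructure",
    "detailed_requirements",
]


def still_to_find_out(scope_doc: Dict[str, str]) -> List[str]:
    """Return required items that are missing or blank, in checklist order."""
    return [item for item in REQUIRED_ITEMS if not scope_doc.get(item, "").strip()]


# A hypothetical early draft: acceptance criteria left blank.
draft = {
    "description": "Replace the order-entry system",
    "purpose": "Shorten order processing time",
    "acceptance_criteria": "   ",
    "planned_start": "Third quarter",
}
print(still_to_find_out(draft))
```

Each item the function returns is a gap to schedule work against, with acceptance criteria deserving early attention because of their link to defect risk.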

High-Level Risk Assessment Tools

Technical project risk assessment is part of the earliest phase of project work, as mentioned in the discussion of the deliverable definition process earlier in this chapter. Even though there is usually little concrete information for initial project risk assessment, there are several techniques that provide useful insight into project risk even in the beginning stages. These tools are:

• Risk framework

• Risk complexity index

• Risk assessment grid

The first two are useful in any project that creates a tangible, physical deliverable through technical development processes. The third is appropriate for projects that have less tangible results, such as software modules, new processes, commercial applications, network architectures, or Internet service offerings. These tools all start by answering the same question: How much experience do you have with the work the project requires? How the tools use this information differs, and each builds on the assessment of technical risk in different directions. These tools are not mutually exclusive; depending on the type of project, more than one of them may be useful in characterizing risk.

Although any of these tools may be used at the start of a project to get an indication of project risk, none of the three is precise or foolproof. The purpose of each is to gauge the relative risk of a new project. Each technique is quick, though, and the results provide as good a basis as you are likely to have for deciding whether to go beyond initial investigation into further project work. (You may also use these three tools to reassess project risk later in the project. Chapter 9 discusses reusing these three tools, as well as several additional project risk assessment methods that rely on planning details to refine project risk assessment.)

Risk Framework

This is the simplest of the three high-level techniques. To assess risk, consider the following three project factors:

1. Technology (the work)

2. Marketing (the user)

3. Manufacturing (the production and delivery)

For each of these factors, assess the amount of change required by the project. For technology, does the project use only well-understood methods and skills, or will new skills be required (or developed)? For marketing, will the deliverable be used by someone (or by a class of users) you know well, or does this project address a need for someone unknown to you? For manufacturing, consider what is required to provide the intended end user with your project deliverable: are there any unresolved or changing manufacturing or delivery channel issues?

For each factor, the assessment is binary: change is either trivial (small) or significant (large). Assess conservatively; if the change required seems somewhere between these choices, treat it as significant.

Nearly all projects will require significant change to at least one of these three factors. Projects representing no (or little) change may not even be worth doing. Some projects, however, may require large changes in two or even all three factors. For technical projects, changes correlate with risk. The more change inherent in a project, and the more different types of change, the higher the risk.

In general, if your project has significant changes in only one factor, it probably has an acceptable, manageable level of risk. Evolutionary-type projects, where existing products or solutions are upgraded, leveraged, or improved, often fall into this category. If your project changes two factors simultaneously, it has higher relative risk, and the management decision to proceed, even into further investigation and planning, ought to reflect this. Projects that develop new platforms intended as the foundation of future project work frequently depend upon new methods for both technical development and manufacturing. For projects in this category, balance the higher risks against the potential benefits.

If your project requires large shifts in all three categories, the risks are greatest of all. Many, if not most, projects in this risk category are unsuccessful. Projects representing this much change are revolutionary and are justified by the substantial financial or other benefits that will result from successful completion. Often the risks seem so great—or so unknowable—that a truly revolutionary project requires the backing of a high-level sponsor with a vision.
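The framework reduces to counting how many of the three factors require significant change. This Python sketch shows that logic under two assumptions made for this sketch. First, factor names are plain strings. Second, any factor left unassessed counts as significant, following the advice to assess conservatively. The returned labels paraphrase the discussion above and are not formal categories.

```python
FACTORS = ("technology", "marketing", "manufacturing")


def framework_risk(significant_change):
    """Classify relative risk using the three-factor risk framework.

    significant_change maps each factor name to True (significant change)
    or False (trivial change). A missing factor is treated as significant,
    in keeping with the advice to assess conservatively.
    """
    count = sum(bool(significant_change.get(f, True)) for f in FACTORS)
    if count == 0:
        return "little change: the project may not be worth doing"
    if count == 1:
        return "evolutionary: acceptable, manageable risk"
    if count == 2:
        return "higher risk: weigh the risks against the potential benefits"
    return "revolutionary: greatest risk; needs large benefits and a sponsor with a vision"


# The HP-35 calculator changed all three factors.
print(framework_risk({"technology": True, "marketing": True, "manufacturing": True}))
```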

A commonly heard story around Hewlett-Packard from the early 1970s involved a proposed project pitched to Bill Hewlett, the more technical of the two HP founders. The team brought a mock-up of a hand-held device capable of scientific calculations with ten significant digits of accuracy. The model was made out of wood, but it had all the buttons labeled and was weighted to feel like the completed device. Bill Hewlett examined the functions and display, lifted the device, slipped it in his shirt pocket, and smiled. The HP-35 calculator represented massive change in all three factors; the market was unknown, manufacturing for it was unlike anything HP had done before, and it was debatable whether the electronics could even be developed on the small number of chips that would fit in the tiny device. The HP-35 was developed primarily because Bill Hewlett wanted one. It was also a hugely successful product, selling more units in a month than had been forecasted for the entire year, and yielding a spectacular profit. The HP-35 also changed the direction of the calculator market completely, and it destroyed the market for slide rules and mechanical computing devices forever.

This story is known because the project was successful. Similar stories surround many other revolutionary products, like the Apple Macintosh, the Yahoo (and then Google) search engine, and home video cassette recorders. Stories around the risky projects that fail (or fall far short of their objectives) are harder to uncover; most people and companies would prefer to forget them. The percentage of revolutionary ideas that “crash and burn,” based on the rate of Silicon Valley start-up company failures, is at least 90 percent. The higher risks of such projects should always be justified by substantial benefits and a strong, clear vision.

Risk Complexity Index

The risk complexity index is the second technique for assessing risk on technical projects. As in the risk framework tool, technology is the starting point. This tool looks more deeply at the technology being employed, separating it into three parts and assigning to each an assessment of difficulty. In addition to the technical complexity, the index looks at another source of project risk: the risk arising from larger project teams, or scale. The following formula combines these four factors:

Index = (Technology + Architecture + System) × Scale

For this index, Technology is defined as new, custom development unique to this project. Architecture refers to the high-level functional components and any external interfaces, and System is the internal software and hardware that will be used in the product. Assess each of these three against your experience and capabilities, assigning each a value from 0 to 5:

0—Only existing technology required

1—Minor extensions to existing technology needed in a few areas

2—Significant extensions to existing technology needed in a few areas

3—Almost certainly possible, but innovation needed in some areas

4—Probably feasible, but innovation required in many areas

5—Completely new, technological feasibility in doubt

The three technology factors will generally correlate, but some variation is common. Add the three factors to get a sum between 0 and 15.

For Scale, assign a value based on the number of people (including all full-time contributors, both internal and external) expected on the project:

0.8—Up to 12 people

2.4—13 to 40 people

4.3—41 to 100 people

6.6—More than 100 people

The calculation for the index yields a result between 0 and 99. Projects with an index below 20 are generally low-risk projects with durations of well under a year. Projects assessed between 20 and 40 are medium risk. These projects are more likely to get into trouble, and often take a year or longer. Most projects with an index above 40 are high risk, finishing long past their stated deadline, if they complete at all.
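The index is simple arithmetic, so it is easy to script. This Python sketch applies the formula, the 0 to 5 ratings, the Scale multipliers, and the 20 and 40 thresholds described above. The function names are hypothetical. Treating an index of exactly 40 as medium is one reading of the “between 20 and 40” band.

```python
def scale_factor(team_size):
    """Scale multiplier for the expected number of full-time contributors."""
    if team_size <= 12:
        return 0.8
    if team_size <= 40:
        return 2.4
    if team_size <= 100:
        return 4.3
    return 6.6


def risk_complexity_index(technology, architecture, system, team_size):
    """Return (index, risk level) for the risk complexity index.

    technology, architecture, and system are each rated 0-5 against your
    experience; team_size counts internal and external full-time people.
    """
    for name, score in (("technology", technology),
                        ("architecture", architecture),
                        ("system", system)):
        if not 0 <= score <= 5:
            raise ValueError(f"{name} must be between 0 and 5, got {score}")
    index = (technology + architecture + system) * scale_factor(team_size)
    if index < 20:
        level = "low"
    elif index <= 40:
        level = "medium"
    else:
        level = "high"
    return index, level


print(risk_complexity_index(2, 3, 2, team_size=25))
```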

Risk Assessment Grid

The first two high-level risk tools are appropriate for hardware deliverables. Technical projects with intangible deliverables may not easily fit these models, so the risk assessment grid can be a better approach for early risk assessment.

As in the risk framework, this technique examines three project factors, each with only two possible ratings, and technology is again the first. The other two factors differ, however, and here the three factors carry different weights. In order of priority, they are Technology, Structure, and Size.

The highest weight factor, Technology, is based on required change, and it is rated either low or high, depending on whether the project team has experience using the required technology and whether it is well established in uses similar to the current project.

The second factor, Structure, is also rated either low or high, based on factors such as solid formal specifications, project sponsorship, and organizational practices appropriate to the project. Structure is rated low when there are significant unknowns in staffing, responsibilities, infrastructure issues, objectives, or decision processes. Good up-front definition indicates high structure.

The third factor, Size, is similar to the Scale factor in the risk complexity index. A project is rated either large or small. For this tool, size is not an absolute assessment; it is measured relative to the size of teams that the project leader has successfully led in the past. Teams that are only 20 percent larger than those a project leader has successfully led should be considered large. Other considerations in assessing size are the expected length of the project, the overall budget for the project, and the number of separate locations where project work will be performed.

Figure 3-2. Risk assessment grid.

After you have assessed each of the three factors, the project will fall into one of the sections of the grid, A through H (see Figure 3-2). Projects in the right column are most risky; those to the left are more easily managed.
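Figure 3-2 is not reproduced here, so this sketch does not attempt the A through H lettering. It shows one way to turn the weighted priority (Technology, then Structure, then Size) into a relative risk score. The sketch assumes power-of-two weights, so that a higher-priority factor always outweighs the lower ones combined. The weights, the 20 percent size test, and all names are assumptions for illustration. The other size considerations (duration, budget, and number of locations) are ignored.

```python
# Hypothetical weights: powers of two, so each factor outweighs all
# lower-priority factors combined (Technology > Structure > Size).
WEIGHTS = {"technology": 4, "structure": 2, "size": 1}


def is_large(team_size, largest_team_led):
    """Treat a team as large once it is 20% bigger than teams the leader has led."""
    # Integer comparison avoids floating-point surprises at the threshold.
    return team_size * 10 >= largest_team_led * 12


def grid_risk_score(technology_high, structure_low, size_large):
    """Relative risk from 0 (least) to 7 (most) for the three grid factors."""
    return (WEIGHTS["technology"] * bool(technology_high)
            + WEIGHTS["structure"] * bool(structure_low)
            + WEIGHTS["size"] * bool(size_large))


# New technology, well-defined structure, a team of 30 for a leader who has led 20.
print(grid_risk_score(True, False, is_large(30, 20)))
```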

Beyond risk assessment, these tools may also guide early project risk management, indicating ways to lower project risk by using alternative technologies, making changes to reduce staffing, decomposing longer projects into a sequence of shorter projects with less aggressive goals, or improving the proposed structure. Use of these and other tools to manage project risk is the topic of Chapter 10.

Setting Limits

Although many scope risks come from specifics of the deliverable and the overall technology, scope risk also arises from failure to establish firm, early limits for the project.

In workshops on risk management, I demonstrate another aspect of scope risk using an exercise that begins with a single U.S. one-dollar bill. I show it to the group, setting two rules:

• The dollar bill will go to the highest bidder, who will pay the amount bid. All bids must be for a real amount—no fractional cents. The first bid must be at least a penny, and each succeeding bid must be higher than earlier bids. (This is the same as with any auction.)

• The second-highest bidder also pays the amount he or she bid (the bid just prior to the winning bid), but gets nothing in return. (This is unlike a normal auction.)

As the auctioneer, I start by asking if anyone wants to buy the dollar for one cent. Following the first bid, I solicit a second low bid, “Does anyone think the dollar is worth five cents?” After two low bids are made, the auction is off and running. The bidding is allowed to proceed to (and nearly always past) $1.00, until it ends. If $1.00 is bid and things slow down, a reminder to the person who has the next highest bid that he or she will spend almost one dollar to buy nothing usually gets things moving again. The auction ends when no new bids are made. The two final bids nearly always total well over $2.00.

By now everything is quite exciting. Someone has bought a dollar for more than a dollar. A second person has bought nothing but paid almost as much. To calm things down, I put the dollar away, explain that this is a lesson in risk management (not a scam), and apologize to people who seem upset.

So, what does the dollar auction have to do with risk management? This game’s outcome is similar to what happens when a project hits its deadline (or budget), creeps past it, and just keeps going. “But we are so close. It’s almost done; we can’t stop now. . . .” The auction effectively models any case where people have, or think they have, too much invested in an undertaking to quit.

Dollar auction losses can be minimized by anticipating the possibility of an uncompensated investment, setting limits in advance, and then enforcing them. Rationally, the dollar auction has an expected return of half a dollar (the total return, one dollar, spread between the two active participants). If each participant set a bid limit of fifty cents, the auctioneer would always lose. For projects, clearly defining limits and then monitoring intermediate results will provide an early indication that you may be in trouble. Project metrics such as earned value (described in Chapter 9) are useful in minimizing unproductive investments by detecting project overrun early enough to abort or modify unjustified projects. Defining project scope with sufficient detail and limits is essential for risk management.
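A small simulation shows why preset limits matter. In this Python sketch, amounts are in cents and the two bidders are mechanical. Each bidder simply tops the other by a fixed increment until its own preset limit would be exceeded. The opening bid, the increment, and the function name are assumptions. Real bidders escalate for less predictable reasons.

```python
def dollar_auction(limits, prize=100, opening=1, increment=5):
    """Simulate the two-bidder dollar auction, with all amounts in cents.

    limits holds the maximum bid each bidder committed to in advance.
    Bidder 0 opens; the bidders then alternate, each topping the current
    high bid by `increment`, until the trailing bidder would exceed its limit.
    Returns (winning_bid, second_bid, auctioneer_net).
    """
    bids = [opening, 0]
    turn = 1  # bidder 1 responds to the opening bid
    while True:
        new_bid = bids[1 - turn] + increment
        if new_bid > limits[turn]:
            break  # the trailing bidder enforces its limit and stops
        bids[turn] = new_bid
        turn = 1 - turn
    winning, second = max(bids), min(bids)
    # Both top bidders pay; the auctioneer gives away only the prize.
    return winning, second, winning + second - prize


print(dollar_auction((50, 50)))    # → (46, 41, -13)
print(dollar_auction((150, 150)))  # → (146, 141, 187)
```

With fifty-cent limits, the auctioneer loses 13 cents. With limits of $1.50, the two final bids total $2.87, much like the workshop results.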

Work Breakdown Structure (WBS)

Scope definition reveals some risks, but scope planning digs deeper into the project and uncovers even more. Product definition documents, scope statements, and other written materials provide the basis for decomposing project work into increasingly fine detail, so it can be understood, delegated, estimated, and tracked. The process used to do this—to create the project work breakdown structure (WBS)—reveals potential defect risks.

One common approach to developing a WBS starts at the scope or objective statement and proceeds to carve the project into smaller parts, working “top down” from the whole project concept. Decomposition of work that is well understood is straightforward and quickly done. Whenever it is confusing or difficult to decompose project work into smaller, more manageable pieces, there is scope risk. If any part of the project resists this kind of breakdown, that portion of the project is not well understood, and it is inherently risky.

Work Packages

The ultimate goal of the WBS process is to describe the entire project in much smaller pieces, often called “work packages.” Each work package should be deliverable-oriented and have a clearly defined output. General guidelines for sizing the work packages at the lowest level of a WBS are usually expressed in terms of duration (between two and twenty workdays) or effort (roughly eighty person-hours or fewer). When breakdown to this level of detail is difficult, it is generally because of gaps in project understanding. These gaps either need to be resolved as part of project scoping or captured as scope risks. (These granularity guidelines foreshadow the discussion of estimating risks in Chapters 4 and 5.)
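Once estimates exist, the size guidelines lend themselves to a quick automated check. This Python sketch uses hypothetical class and field names. It flags lowest-level work that has no estimate at all, or that exceeds twenty workdays or eighty person-hours. It checks only the upper bounds, because the risk described here comes from work that cannot be broken down far enough.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorkPackage:
    name: str
    duration_days: Optional[float] = None   # estimated workdays
    effort_hours: Optional[float] = None    # estimated person-hours


def granularity_risks(packages: List[WorkPackage],
                      max_days: float = 20,
                      max_hours: float = 80) -> List[str]:
    """Flag lowest-level work that is unestimated or larger than the guidelines."""
    risks = []
    for wp in packages:
        if wp.duration_days is None and wp.effort_hours is None:
            risks.append(f"{wp.name}: no estimate, so the work is not yet understood")
            continue
        if wp.duration_days is not None and wp.duration_days > max_days:
            risks.append(f"{wp.name}: {wp.duration_days} workdays exceeds {max_days}")
        if wp.effort_hours is not None and wp.effort_hours > max_hours:
            risks.append(f"{wp.name}: {wp.effort_hours} person-hours exceeds {max_hours}")
    return risks


# Hypothetical plan fragment.
plan = [
    WorkPackage("Design order screens", duration_days=10, effort_hours=60),
    WorkPackage("Convert customer database", duration_days=45),
    WorkPackage("Write operator guide"),
]
for risk in granularity_risks(plan):
    print(risk)
```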

Work defined at the lowest level of a WBS may also be called “activities” or “tasks,” but what really matters is that the effort be defined well enough that you understand how to complete it. If you cannot decompose the work into pieces within the guidelines, note it as a risk.

Aggregation

A WBS is a hierarchy and a useful method for detecting missing work. The principle of “aggregation” for a WBS ensures that the defined work at each level plausibly includes everything needed at the summary level above it. If the listed items under a higher-level work package do not represent its complete “to do” list, your WBS is incomplete. Either complete it by adding the missing work to the WBS, or note the WBS gaps as project scope risks. Any work in the WBS that you cannot adequately describe contributes to your growing accumulation of identified risks.
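Aggregation is ultimately a judgment about completeness, but a rough mechanical version can catch obvious gaps. This Python sketch assumes that every WBS element records the outputs it is responsible for. That is a hypothetical convention, not a standard WBS format. The sketch reports any summary element whose children do not, between them, account for all of its outputs.

```python
def aggregation_gaps(node, path=()):
    """Find summary elements whose children do not cover all their outputs.

    Each node is a dict with a "name", a set of "outputs", and an optional
    list of "children". Returns (path, missing_outputs) pairs.
    """
    gaps = []
    here = path + (node["name"],)
    children = node.get("children", [])
    if children:
        # Everything the next level down, taken together, will produce.
        covered = set().union(*(c.get("outputs", set()) for c in children))
        missing = set(node.get("outputs", set())) - covered
        if missing:
            gaps.append((" > ".join(here), sorted(missing)))
        for child in children:
            gaps.extend(aggregation_gaps(child, here))
    return gaps


# Hypothetical WBS fragment with no work planned for the user guide.
release = {
    "name": "Release 2.0",
    "outputs": {"code", "tests", "user guide"},
    "children": [
        {"name": "Build", "outputs": {"code", "tests"}, "children": [
            {"name": "Implement", "outputs": {"code"}},
            {"name": "Verify", "outputs": {"tests"}},
        ]},
        {"name": "Package", "outputs": {"installer"}},
    ],
}
print(aggregation_gaps(release))  # → [('Release 2.0', ['user guide'])]
```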

Parts of a project WBS that resist easy decomposition are rarely visible until you systematically seek them out. The WBS development process provides a tool for separating the parts of the project that you understand from those that you do not. Before proceeding into a project with significant unknowns, you also must identify these risks and determine whether the associated costs and other consequences are justified.

Ownership

There are many reasons why some project work is difficult to break into smaller parts, but the root cause is often a lack of experience with the work required. This is a common sort of risk discovered in developing a WBS, and it relates to delegation and ownership. A key objective in completing the project WBS is the delegation of each lowest-level work package (or whatever you may choose to call it) to someone who will own that part of the project. Delegation and ownership are well established in management theory as motivators, and they also contribute to team development and broader project understanding.

Delegation is most effective when it’s voluntary. It is fairly common on projects to allow people to assume ownership of project activities in the WBS by signing up for them, at least on the first pass. Although there is generally some conflict over activities that more than one person wants, sorting this out by balancing the workload, selecting the more experienced person, or using some other logical decision process usually works. But when the opposite occurs—when no one wants to be the owner—there are project risks to be identified. Activities without volunteers are risky, but you will need to investigate to find out why. There are a number of common root causes, including the one discussed before: no one understands the work well. Perhaps no one currently on the project has developed key skills that the work requires, or the work is technically so uncertain that no one believes it can be done at all. Or the work may be feasible, but no one believes that it can be completed in the “roughly two weeks” expected for activities defined at the lowest level of the WBS. In other cases, the description of deliverables may be so fuzzy that no one wants to be involved.

There are many other possible reasons, and these are also risks. Of these, availability is usually the most common. If everyone on the project is already working beyond full capacity on other work and other projects, no one will volunteer. Another possible cause might be that the activity requires working with people whom no one wants to work with. If the required working relationships are likely to be difficult or unpleasant, no one will volunteer, and successful completion of the work is uncertain. Some activities may depend on outside support or require external inputs that the project team is skeptical about. Few people willingly assume responsibility for work that is likely to fail because of issues beyond their control.

In addition, the work itself might be the problem. Even easy work can be risky, if people see it as thankless or unnecessary. All projects have at least some required work that no one likes to do. It may involve documentation or some other dull, routine part of the work. If done successfully, no one notices; this is simply expected. If something goes wrong, though, there is a lot of attention. The activity owner has managed to turn an easy part of the project into a disaster, and he or she will at least get yelled at. Most people avoid these activities.

Another situation is the “unnecessary” activity. Projects are full of these too, at least from the perspective of the team. Life-cycle, phase gate, and project methodologies place requirements on projects that seem to be (and in many cases, may actually be) unnecessary overhead. Other project work may be scheduled primarily because it is part of a planning template or because “That’s the way we always do it.” If the work is actually not needed, good project managers work to eliminate it.

To the project risk list, add clear descriptions of each risk identified while developing the WBS, including your best understanding of the root cause for each. These risks may emerge from difficulties in developing the WBS to an appropriate level of detail or in finding willing owners for the lowest-level activities. A typical risk listed might be: “The project requires conversion of an existing database from Sybase to Oracle, and no one on the current project staff has the needed experience.”
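Sign-up sheets make unowned work easy to spot. This Python sketch, with hypothetical names and data, turns each lowest-level activity that has no volunteer into a draft risk-list entry. It does not determine the root cause. It only lists the common causes discussed above as prompts for the follow-up conversation.

```python
# Common reasons no one volunteers, as discussed in the text.
ROOT_CAUSES_TO_CHECK = (
    "no one understands the work",
    "missing skills",
    "doubtful feasibility",
    "will not fit the expected duration",
    "fuzzy deliverables",
    "no one is available",
    "difficult working relationships",
    "doubtful outside support or inputs",
    "thankless or seemingly unnecessary work",
)


def unowned_work_risks(owners):
    """Turn lowest-level activities without a volunteer owner into risk entries.

    owners maps each activity name to its owner, or to None/"" when no one
    has signed up. The root cause still has to be investigated by asking.
    """
    return [
        {"risk": f"No volunteer owner for '{activity}'",
         "root_cause": "to be determined",
         "causes_to_check": ROOT_CAUSES_TO_CHECK}
        for activity, owner in owners.items()
        if not owner
    ]


signups = {
    "Design order screens": "Ana",
    "Convert Sybase database to Oracle": None,
    "Write operator guide": "",
}
for entry in unowned_work_risks(signups):
    print(entry["risk"])
```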

WBS Size

Project risk correlates with size; when projects get too large, risk becomes overwhelming. Scope risk rises with complexity, and one measure of complexity is the size of the WBS. Once you have decomposed the project work, count the number of items at the lowest level. When the number exceeds about 200, project risk is high.
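If the WBS is stored as a tree, counting the lowest-level items is straightforward. In this Python sketch, the nested-dictionary representation and the function names are assumptions. The default threshold of 200 reflects the approximate figure above rather than a hard cutoff.

```python
def count_work_packages(node):
    """Count lowest-level items (nodes with no children) in a nested WBS."""
    children = node.get("children", [])
    if not children:
        return 1
    return sum(count_work_packages(child) for child in children)


def wbs_size_risk(root, threshold=200):
    """Return (lowest-level item count, True if the count signals high risk)."""
    count = count_work_packages(root)
    return count, count > threshold


# Hypothetical WBS: three subsystems of seventy tasks each.
big = {"name": "Platform", "children": [
    {"name": f"Subsystem {s}", "children": [
        {"name": f"Task {s}.{t}"} for t in range(70)
    ]}
    for s in range(3)
]}
print(wbs_size_risk(big))  # → (210, True)
```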

The more separate bits of work that a single project leader is responsible for, the more likely it becomes that something crucial to the project will be missed. As the volume of work and project complexity expand, the tools and practices of basic project management become more and more inadequate.

At high levels of complexity, the overall effort is best managed in one of two ways: as a series of shorter projects in sequence delivering what is required in stages, or as a program made up of a collection of smaller projects. In both cases, the process of decomposing the total project into sequential or parallel parts is done using a decomposition very like a WBS. In the case of sequential execution, the process is essentially similar to the evolutionary methodologies discussed previously in this chapter. For programs, the resulting decomposition creates a number of projects, each of which will be managed by a separate project leader using project management principles, and the overall effort will be the responsibility of a program manager. Project risk is managed by the project leaders, and overall program risk is the responsibility of the program leader. The relationship between managing project and program risk is discussed in Chapter 13.

When excessively lengthy or complex projects are left as the responsibility of a single project leader to plan, manage risk, and execute, the probability of successful completion is low.

Other Scope-Related Risks

Not all scope risks are strictly within the practice of project management. Examples are market risk and confidentiality risk. These risks are related, and although they may not show up in all projects, they are fairly common. Ignoring these risks is inappropriate and dangerous.

A business balance sheet has two sides: assets and liabilities. Project management primarily focuses on “liabilities,” the expense and execution side, using measures related to the triple constraint of “scope/schedule/resources.” Market and confidentiality risks tend to be on the asset, or value, side of the business ledger, where project techniques and teams are involved indirectly, if at all. Project management is primarily about delivering what you have been asked to deliver, and this does not always equate to “success” in the marketplace. Although it is obvious that “on time, on budget, within scope” will not necessarily make a project an unqualified success, managing these aspects alone is a big job and is really about all that a project leader should reasonably be held responsible for. The primary owners for market and confidentiality risks may not even be active project contributors, although many kinds of technical projects now engage cross-functional business teams—making these risks more central to the project. In any case, the risks are real, and they relate to scope. Unless identified and managed, they too contribute to project failure.

Market Risk

This first type of risk is about getting the definition wrong. Market risk can relate to features, timing, cost, or almost any facet of the deliverable. Various scenarios can trigger problems. When long development efforts are involved, the problem to be solved may change, go away, or be better addressed by an emerging new technology. A satisfactory deliverable may be brought to market a week after an essentially identical offering from a competitor. Even when a project produces exactly what was requested by a sponsor or economic buyer, it may be rejected by the intended end user. Sometimes the people responsible for promoting and selling a good product do not (or cannot) follow through. Many paths can lead to a result that meets the specifications and is delivered on time and on budget, yet is never deployed or fails to achieve expectations.

The longer and the more complicated the project is, the greater the market risk will tend to be. Project leaders contribute to the management of these risks through active, continuing participation in any market research and customer interaction, and by frequently communicating with (ideally, without annoying) all the people surrounding the project who will be involved with deployment of the deliverable.

Some of the techniques already discussed can help in managing this. A thorough process for deliverable definition probes for many of the sources of market risk, and the high-level risk tools outlined previously also provide opportunities to understand the environment surrounding the project.

In addition, ongoing contact with the intended users, through interviews, surveys, market research, and other techniques, will help to uncover problems and shifts in the assumptions the project is based upon. Agile methodologies employ ongoing user involvement in the definition of short, sequential project cycles, greatly reducing the risk of a “wrong” deliverable for small project teams colocated with their users.

If the project is developing a product that will compete with similar offerings from competitors, ongoing competitive analysis to predict what others are planning can be useful (but, of course, competitors will not make this straightforward or easy—confidentiality risks are addressed next). Responsibility for doing this may be fully within the project, but if it is not, the project team should still review what is learned, and if necessary, encourage the marketing staff (or other stakeholders) to keep the information up to date.

The project team should always probe beyond the specific requirements (the stated need) to understand where the specifications come from (the real need). Understanding what is actually needed is generally much more important than simply understanding what was requested, and it is a key part of opportunity management. Early use of models, prototypes, mock-ups, and other simulations of the deliverable will help you find out whether the requested specifications are in fact likely to provide what is needed. Short cycles of development with periodic releases of meaningful functionality (and value) throughout the project also minimize this category of risk. Standards, testing requirements, and acceptance criteria need to be established in clear, specific terms, and periodically reviewed with those who will certify the deliverable.

Confidentiality Risk

A second type of risk that is generally not exclusively in the hands of the project team relates to secrecy. Although some projects are done in an open and relatively unconstrained environment, confidentiality is crucial to many high-tech projects, particularly long ones. If information about the project is made public, its value could decrease or even vanish. Better-funded competitors with more staff might learn of what you are working on and build it first, making your work irrelevant. Of course, managing this risk well will potentially increase the market risk, as you will be less free to gather information from end users. The use of prototypes, models, mock-ups, or even detailed descriptions can provide data to competitors that you want to keep in the dark. On some technical projects, the need for secrecy may also be a specific contractual obligation, as with government projects. Even if the deliverable is not a secret, you may be using techniques or methodologies that are proprietary competitive advantages, and loss of this sort of intellectual property also represents a confidentiality risk.

Within the project team, several techniques may help. Some projects work on a “need to know” basis and provide to team members only the information required to do their current work. Although this will usually hurt teamwork and motivation, and may even lead to substandard results (people will optimize only for what they know, not for the overall project), it is one way to protect confidential information.

Emphasizing the importance of confidentiality also helps. Periodically reinforce the need for confidentiality with all team members, and especially with contractors and other outsiders. Be specific about the requirements for confidentiality in contract terms when you bring in outside help, and make sure all nondisclosure terms are clearly understood. Any external market research or customer contact also requires effective nondisclosure agreements, again with enough discussion to make the need for secrecy clear.

In addition to all of this, project documents and other communication must be appropriately marked “confidential” (or according to the requirements set by your organization). Restrict distribution of project information, particularly electronic versions, to people who need it and who understand, and agree with, the reasons for secrecy. Protect information stored on computer networks or the Internet with passwords that are changed often enough to limit inappropriate access. Use legal protections such as copyrights and patents as appropriate to establish ownership of intellectual property. (Timing of patents can be tricky. On the one hand, they protect your work. On the other hand, they are public and may reveal to competitors what you are working on.)

Although the confidentiality risks are partially the responsibility of the project team, many lapses are well out of their control. Managers, sponsors, marketing staffs, and favorite customers are the sources for many leaks. Project management tools principally address execution of the work, not secrecy. Effective project management relies heavily on good, frequent communication, so projects with heavy confidentiality requirements can be difficult and even frustrating to lead. Managing confidentiality risk requires discipline, frequent reminders of the need for secrecy to all involved (especially those involved indirectly), limiting the number of people involved, and more than a little luck.

Documenting the Risks

As the requirements, scope definition documents, WBS, and other project data start to take shape, you can begin to develop a list of specific issues, concerns, and risks related to the scope and deliverables of the project. When the definitions are completed, review the risk list and inspect it for missing or incomplete information. If some portion of the project scope seems likely to change, note this as well. Typical scope risks involve performance, reliability, untested methods or technology, or combinations of deliverable requirements that are beyond your experience base. Make clear why each item listed is an exposure for the project. In each risk description, cite any relevant specifications and measures that exceed what you have successfully achieved in the past, using explicitly quantified criteria. An example might be: “The system delivered must execute at double the fastest speed achieved in the prior generation.”

Sources of specific scope risks include:

• Requirements that seem likely to change

• Mandatory use of new technology

• Requirements to invent or discover new capabilities

• Unfamiliar or untried development tools or methods

• Extreme reliability or quality requirements

• External sourcing for a key subcomponent or tool

• Incomplete or poorly defined acceptance tests or criteria

• Technical complexity

• Conflicting or inconsistent specifications

• Incomplete product definition

• Large WBS

Using the processes for scope planning and definition will reveal many specific technical and other potential risks. List these risks for your project, with information about causes and consequences. The list of risks will expand throughout the project planning process and will serve as your foundation for project risk analysis and management.

Panama Canal: Setting the Objective (1905–1906)

One of the principal differences between the earlier unsuccessful attempt to build the Panama Canal and the later project was the application of good project management practices. However, the second project had a shaky beginning. It was conceived as a military project and funded by the U.S. government, so the scope and objectives for the revived Panama Canal project should have been clear, even at the start. They were not.

The initial manager for the project when work commenced in 1904 was John Findlay Wallace, formerly the general manager of the Illinois Central Railroad. Wallace was visionary; he did a lot of investigating and experimenting, but he accomplished little in Panama. His background included no similar project experience. In addition to his other difficulties, he could do almost nothing without the consent of a seven-man commission set up back in the United States, a commission that rarely agreed on anything. Also, nearly every decision, regardless of size, required massive amounts of paperwork. A year later, in 1905, US$128 million had been spent but still there was no final plan, and most of the workers were still waiting for something to do. The project had in most ways picked up just where the earlier French project had left off, problems and all. Even after a year, it was still not clear whether the canal would be at sea level or constructed with locks and dams. In 1905, mired in red tape, Wallace announced the canal was a mistake, and he resigned.

John Wallace was promptly replaced by John Stevens. Stevens was also from the railroad business, but his experience was on the building side, not the operating side. He built a reputation as one of the best engineers in the United States by constructing railroads throughout the Pacific frontier. Before appointing Stevens, Theodore Roosevelt eliminated the problematic seven-man commission, and he significantly reduced the red tape, complication, and delay. As chief engineer, Stevens, unlike Wallace, effectively had full control of the work. Arriving in Panama, Stevens took stock and immediately stopped all work on the canal, stating, “I was determined to prepare well before construction, regardless of clamor of criticism. I am confident that if this policy is adhered to, the future will show its wisdom.” And so it did.

With the arrival of John Stevens, managing project scope became the highest priority. He directed all his initial efforts at preparation for the work. He built dormitories for workers to live in, dining halls to feed them, warehouses for equipment and materials, and other infrastructure for the project. The doctor responsible for the health of the workers on the project, William Crawford Gorgas, had been trying for over a year to gain support from John Wallace for measures needed to deal with the mosquitoes, by then known to spread both yellow fever and malaria. Stevens quickly gave this work his full support, and Dr. Gorgas set out to eliminate these diseases. Yellow fever was conquered in Panama just six months after Dr. Gorgas received Stevens’s support, and Gorgas made good progress against malaria as well.

Under the guidance of Stevens, all the work was defined and planned using well-established, modern project management principles. He said, “Intelligent management must be based on exact knowledge of facts. Guess work will not do.” He did not talk much, but he asked lots of questions. People commented, “He turned me inside out and shook out the last drop of information.” His meticulous documentation served as the basis for work throughout the project.

Stevens also determined exactly how the canal should be built, to the smallest detail. The objective for the project was ultimately set in 1907 according to his recommendations: The United States would build an eighty-kilometer (fifty-mile) lock-and-dam canal at Panama connecting the Atlantic and Pacific oceans, with a budget of US$375 million, to open in 1915. With the scope defined, the path forward became clear.