11

Eye-Based Cursor Control and Eye Coding Using Hog Algorithm and Neural Network

S. Sivaramakrishnan1*, Vasuprada G.2, V. R. Harika2, Vishnupriya P.2 and Supriya Castelino2

1Department of Information Science and Engineering, New Horizon College of Engineering, Bangalore, India

2Department of Electronics and Communication, Dayananda Sagar University, Bangalore, India

Abstract

For physically challenged people, cursor control using eye movement plays a major role in helping them perform necessary tasks. Non-intrusive gaze estimation can support communication between humans and computers, and eye gaze is a convenient channel between a physically challenged person and the computer. Pupil movement patterns, also called Eye Accessing Cues (EAC), are related to the cognitive processes of the human brain. This method is particularly beneficial to people with disabilities such as amyotrophic lateral sclerosis (ALS). In this chapter, a technique is proposed to control the mouse cursor in real time using pupil movements. By applying image processing and graphical user interface (GUI) automation, cursor control is realized using blink detection and gaze estimation. The HOG algorithm helps in identifying eye blinks and pupil movements. Based on the detection, cursor control is carried out by tracking the pupil movement with the help of a camera connected to the system. In this chapter, the gaze direction is determined using the HOG algorithm and a machine learning algorithm. Finally, cursor control is implemented through GUI automation. The same technique can be extended to help physically challenged people write content and also write Python code.

Keywords: Eye gaze detection, HOG algorithm, cursor control, GUI automation

11.1 Introduction

Technology keeps evolving to meet the challenges of the modern world, and it has certainly helped human beings to a great extent. This chapter proposes the use of current technology to help people with severe disabilities communicate with the real world through a computer by tracking their eye movement. The proposed technique tracks the pupil movement with the help of a web camera, and it is efficient and simple. Communicating with the help of eye movement is called eye writing, and it helps physically challenged people, especially those with amyotrophic lateral sclerosis (ALS), to express themselves and convey messages to other people. The HOG algorithm is implemented in Python, and the result is an effective method that lets people communicate through eye detection and eye gazing and, more importantly, lets them do so without the help of others.

In image processing, the HOG algorithm is used to extract features of a human being, and it finds application in face detection. The region of interest for this application is the area around the eye, and the pupil movement can be obtained using the facial landmarks and their geometric relationships. The data gathered in the region of interest is used to detect eye blinks, and the location of the pupil drives the movement of the cursor, as explained in the subsequent sections. The pupil can move left, right, up, and down, and the necessary control action is carried out based on this movement. The control action results in the movement of the cursor. Cursor control therefore depends on two factors, namely blink detection and gaze determination. This technique also helps physically challenged people write code in Python and compile it. Eye-based control can also be combined with emerging technologies such as augmented reality (AR), since the tracking technique used in eye gazing adds value to AR. The two techniques used for classifying the direction of the eye movement are the HOG-based technique and the machine learning technique, which are elaborated in the following sections.

11.2 Related Work

In the article “Hands-Free PC Control” a simple matching technique is proposed [1]. A support vector machine (SVM) learning algorithm is used for appropriate window positioning. The technique offers real-time cursor control with high speed and accuracy. The programming is done in Java because of its low computational cost and its platform independence.

In the article “Enhanced Cursor Control Using Eye Mouse” face detection is carried out using the Viola-Jones algorithm [2]. A spherical eyeball model and the Hough circle detection algorithm are used for locating the iris. The gaze position is determined with the help of calibration points. This method provides better performance and accurate tracking of the eye movement.

In the article “A Face as a Mouse for Disabled Person” facial features such as the eyes, the nose, and the distance between the two eyes are detected using a clustering algorithm [3]. The cursor movement in this method is directly proportional to the eye movement. The method offers precise eye tracking and is also cost effective.

In the article “Sleepiness Detection System Based on Facial Expressions” the dlib toolkit is used along with a camera for identifying mouth and eye movements [4]. This method detects blinks with an accuracy of around 90% and drowsiness with an accuracy of about 91.7%.

In the article “Communication Through Real-Time Video Oculography Using Face Landmark Detection” the proposed technique assists a person in communicating with the real world [5]. Combinations of facial expressions are used to convey different pieces of information. The image processing tools OpenCV and dlib are used for building the model, and dlib detects eye blinks using the facial landmarks. The various eye patterns trigger the corresponding visual or audio communication. This method also differentiates voluntary from involuntary blinks more efficiently.

In the article “Eye Blink Detection Using Facial Landmarks” an algorithm is proposed that operates in real time to identify blinks in a video sequence [6]. The model is trained on facial landmark data, which helps in detecting eye blinks. The technique also estimates the level of eye opening and calculates the eye aspect ratio effectively. A Markov model is used to measure eye closure so as to determine a blink. For accurate classification, the SVM machine learning algorithm is used in the model.

In the article “Eye-Gaze Determination Using Convolutional Neural Network” a real-time framework is used for the detection of eye gaze direction and Eye Accessing Cues (EAC) [7]. The Viola-Jones algorithm is used for the detection of facial features, and a convolutional neural network (CNN) is used for estimating the gaze direction. The advantage of this method is that it offers a computation time of 42 ms and also works well when the face is rotated. With the CNN, this method provides better human-computer interaction.

In the article “Precise Eye-Gaze Detection and Tracking System” the authors use a real-time system that provides contact-free eye tracking [8]. The technique detects the center of the pupil accurately and can thereby follow the movement of the head. The pupil-glint vector is used to determine the line of gaze, and the glints are validated using the iris outline. This method processes 25 frames per second and reduces the tracking error caused by head movement and slow blinking.

In the article “Facial and Eye Gaze Detection” the SVM algorithm is used, and computer vision helps in locating the facial features [9]. The system is trained with 3D data, and a neural network then helps in estimating the gaze position accurately. One disadvantage of this method is the high processing time needed to determine gaze movement involving 3D rotations. In addition, the mean square error is not satisfactory.

In the article “Eye Writer” a technique is proposed to distinguish the dark and the bright pupils of the eyes, which facilitates eye tracking [10]. A PS3 camera is used along with computer vision for capturing high-quality images. IR illumination is used to improve the quality of the image, and a MOSFET included in the model is used to vary the resistance. This model has the advantage of low cost, as the technique uses open-source tools.

11.3 Methodology

Eye-based cursor control is achieved by combining gaze detection, blink detection, and GUI automation. Figure 11.1 shows the eye-based cursor control. The region of interest is captured through the webcam; eye blink detection and eye gaze detection are then performed using the HOG algorithm, followed by GUI automation; finally, mouse control and program coding are carried out.

Figure 11.1 Eye-based cursor and coding control.

11.3.1 Eye Blink Detection

As mentioned, the HOG algorithm is used to detect eye blinks in the region of interest. OpenCV and the dlib toolkit are used to detect the face of a person, and blink detection is then carried out within that region of interest [10, 11]. The next step is to locate the left and the right eye, which is accomplished with the help of the facial landmarks. The horizontal and the vertical length of each eye are then measured, and from them the ratio of horizontal to vertical length is estimated. This calculation is carried out for both eyes. A threshold is then fixed: when the eye closes, its vertical length shrinks, and if the measured vertical length falls below the threshold, a blink is said to be detected. On detection of a blink, the necessary control action, such as a left click, a right click, or a scrolling event, can be carried out. Figure 11.2 shows the identification of the facial features with the help of facial points. The figure is obtained using the HOG algorithm, and the points represent the facial landmarks.
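The ratio test described above can be sketched in a few lines of Python. This is a minimal illustration, not the chapter's implementation: it assumes each eye is given as six (x, y) landmark points in the order used by the dlib 68-point model (corner, two upper-lid points, corner, two lower-lid points), and the function names and the limit of 5.0 are hypothetical values that would need tuning per user and camera. A shrinking vertical length is expressed here as the horizontal-to-vertical ratio rising above a limit.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def midpoint(p, q):
    """Point halfway between two (x, y) landmarks."""
    return ((p[0] + q[0]) / 2.0, (p[1] + q[1]) / 2.0)


def eye_ratio(eye):
    """Horizontal-to-vertical length ratio of one eye.

    `eye` holds six points: corner, upper lid (2), corner, lower lid (2).
    """
    horizontal = distance(eye[0], eye[3])
    vertical = distance(midpoint(eye[1], eye[2]), midpoint(eye[5], eye[4]))
    if vertical == 0:
        return float("inf")  # lids coincide: treat as fully closed
    return horizontal / vertical


def closed_eyes(left_eye, right_eye, ratio_limit=5.0):
    """Return (left_closed, right_closed); ratio_limit is an assumed value."""
    return eye_ratio(left_eye) > ratio_limit, eye_ratio(right_eye) > ratio_limit
```

Working with the ratio rather than the raw vertical length makes the test less dependent on how large the eye appears in the frame.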

Figure 11.2 Facial landmarks.

11.3.2 Hog Algorithm

Histogram of Oriented Gradients, HOG for short, is a simple, efficient, and powerful feature descriptor that detects objects using the distribution of local intensity gradients. The HOG algorithm finds application not only in face detection but also in general object detection. HOG is a two-stage algorithm. In stage one, a histogram is computed for each small part of the image, and in stage two all the histograms are combined to form a single one. Figure 11.3 shows how the HOG algorithm processes an input image.

As shown in Figure 11.3, histograms are obtained for small parts of the image in stage one, and the overall histogram is obtained in stage two. This allows the algorithm to locate facial features with high accuracy. In addition, a unique feature vector is obtained for each input image.
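The two stages can be illustrated with a small pure-Python sketch. It is a simplified version written for this explanation: the image is a plain list of rows of gray values, the cell size of 8 pixels and the 9 orientation bins are assumed defaults, and the block normalization used by full HOG implementations (such as the one inside dlib) is left out.

```python
import math


def gradient(image, y, x):
    """Central-difference gradient (gx, gy) with clamped image borders."""
    h, w = len(image), len(image[0])
    gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
    gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
    return gx, gy


def cell_histogram(image, top, left, cell_size, bins):
    """Stage one: orientation histogram of a single cell."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(top, top + cell_size):
        for x in range(left, left + cell_size):
            gx, gy = gradient(image, y, x)
            magnitude = math.hypot(gx, gy)
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


def hog_descriptor(image, cell_size=8, bins=9):
    """Stage two: concatenate all cell histograms into one feature vector."""
    h, w = len(image), len(image[0])
    features = []
    for top in range(0, h - cell_size + 1, cell_size):
        for left in range(0, w - cell_size + 1, cell_size):
            features.extend(cell_histogram(image, top, left, cell_size, bins))
    return features
```

For a 16 x 16 image this yields four cells and therefore a feature vector of 36 values; an image containing only a vertical edge puts all of its gradient magnitude into the first orientation bin of each cell.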

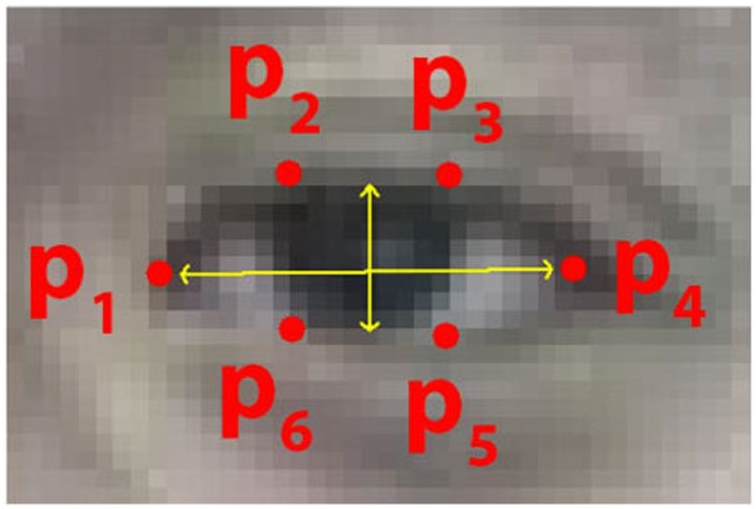

Figure 11.4 shows the landmark points placed over a human eye using the HOG algorithm.

Figure 11.3 Two-stage processing of HOG algorithm.

Figure 11.4 Landmark on human eye.

11.3.3 Eye Gaze Detection

Eye gaze detection can be implemented using either of these two techniques:

- Deep learning and CNN

- Gaze determination using HOG algorithm

11.3.3.1 Deep Learning and CNN

Deep learning is a subset of machine learning, itself a branch of artificial intelligence, that is concerned with emulating the way human beings learn in order to accomplish a task. A CNN is a special type of neural network model that works with 2D images. The model is first trained with a large number of images, and with the knowledge gained it can predict the category of a new image. Just as the human brain is made up of connected neurons, a CNN has mathematically modeled neurons that try to replicate what a biological neuron does. A CNN consists of multiple layers of neurons. In the convolution and pooling layers each neuron is connected only to a small local region of the previous layer, whereas in the fully connected (dense) layers each neuron is connected to all the neurons in the next layer. Fully connected networks are sometimes vulnerable to overfitting, and to overcome this, regularization techniques that constrain the neuron weights are applied. The CNN has the advantage of lower processing time compared with other algorithms used for images. The CNN therefore learns quickly from the training data and can accurately classify previously unseen images. The objective here is to detect the gaze direction using a CNN, and there are five categories in total, namely:

- Right

- Left

- Centre

- Up

- Down

For each of the above categories, the CNN is trained with a set of data called the training data set, and a separate set of data is used for validation to check the performance of the model. Since the model classifies an image into one of five categories, the categorical class mode is used while coding the gaze determination in Python. The libraries to be imported are OpenCV, NumPy, and Matplotlib, and the framework used is TensorFlow. The RMSprop (root mean square propagation) optimizer is used, which adapts the learning rate automatically during training [13]. The data can be labeled with the help of the ImageDataGenerator utility available in Keras. In the CNN, max pooling followed by dense layers is used to build the model, with the ReLU activation function applied in the dense layers. ReLU stands for rectified linear unit: its output is the input itself if the input is positive, and zero otherwise. Most CNNs and artificial neural networks (ANNs) use ReLU as the default activation function [14]. The other activation function used is softmax, which converts the raw outputs of the last layer into a probability distribution over the classes; the class with the highest probability is taken as the prediction [14, 15]. It is generally advisable to start with the ReLU activation function before trying other activation functions. To determine the loss during training, categorical cross entropy is used, as is usual in multi-class classification.
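The activation functions and the loss named above are easy to state directly. The sketch below is a plain-Python illustration of the formulas, not the TensorFlow code used in the chapter; the class order in `CLASSES` and the function names are assumptions made for the example.

```python
import math

# Assumed class order for the five gaze categories.
CLASSES = ["right", "left", "centre", "up", "down"]


def relu(x):
    """Rectified linear unit: the input if positive, otherwise zero."""
    return x if x > 0 else 0.0


def softmax(scores):
    """Turn raw scores into probabilities that sum to one."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


def predict(scores):
    """Return the gaze class with the highest softmax probability."""
    probs = softmax(scores)
    return CLASSES[probs.index(max(probs))]
```

For an untrained model that assigns equal probability to all five classes, the categorical cross entropy equals ln 5, which is a useful reference point when reading the first values of a training log.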

11.3.3.2 Hog Algorithm for Gaze Determination

The Histogram of Oriented Gradients (HOG) is applied over the region of interest, which for eye gazing is the human face. It is a descriptor algorithm that performs the necessary computation over a grid of cells in the identified region of interest. The facial landmarks obtained are shown in Figure 11.4, and these points are used to determine the gaze ratio for the five categories, namely up, down, left, right, and center. The gaze ratio is defined as the ratio of the number of white pixels in the left part of the eye to the number of white pixels in the right part of the eye. The gaze ratio is then used to determine the direction of the eye gaze.
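The gaze ratio defined above can be computed as follows. This is a hedged sketch: it assumes the eye region has already been cropped and thresholded into a mask in which the white of the eye is non-zero and the pupil is zero, and the limits `low` and `high` are hypothetical values. Only the horizontal decision is shown, and "left" and "right" refer to image coordinates, so the mapping to the user's own direction depends on whether the camera frame is mirrored.

```python
def gaze_ratio(eye_mask):
    """Ratio of white pixels in the left half to those in the right half.

    `eye_mask` is a list of rows holding 0 (dark) or non-zero (white) values.
    """
    half = len(eye_mask[0]) // 2
    left_white = sum(1 for row in eye_mask for v in row[:half] if v > 0)
    right_white = sum(1 for row in eye_mask for v in row[half:] if v > 0)
    if right_white == 0:
        # Avoid division by zero; an all-dark mask counts as balanced.
        return float("inf") if left_white > 0 else 1.0
    return left_white / right_white


def horizontal_gaze(ratio, low=0.7, high=1.5):
    """Classify the pupil position; low and high are assumed limits."""
    if ratio <= low:
        return "left"   # little white on the left: pupil sits on the left
    if ratio >= high:
        return "right"  # little white on the right: pupil sits on the right
    return "centre"
```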

11.3.4 GUI Automation

The library used for graphical user interface automation is ‘pyautogui’, which is available for Python. With the ‘pyautogui’ library, the keyboard and the mouse can be controlled programmatically, so that the interaction with the computer is automated. Automatic mouse clicks, keystrokes, cursor navigation, cursor positioning, and the display of messages are achieved in this way. GUI automation is driven by the detection of eye blinks and eye gaze. For example, if a left-eye blink is detected, a left mouse click is issued, and if a right-eye blink is detected, a right click is issued. One important point is that the eye blink period must be defined in advance so that involuntary blinks are not taken into account. By closing the eye for a certain duration, the person is able to select the key to be pressed for eye-based coding. Code compilation is triggered using the hotkey feature available in the ‘pyautogui’ library. The key set consists of the keyboard keys and frequently used keywords, which makes it easy for the person to perform eye-based control.
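A minimal sketch of this mapping is given below. It uses the `click` and `hotkey` functions of pyautogui, but the action names, the `RecordingGui` stand-in, and the Ctrl+B key combination for compiling are assumptions made for illustration, since the shortcut depends on the editor in use. The real library is imported only when no stand-in is passed, so the logic can be tried without moving the actual mouse.

```python
def blink_action(left_closed, right_closed):
    """Map a voluntary blink to an action name, following the text."""
    if left_closed and not right_closed:
        return "left_click"
    if right_closed and not left_closed:
        return "right_click"
    return None  # both or neither eye closed: no action in this sketch


def perform(action, gui=None):
    """Carry out an action through pyautogui or a compatible stand-in."""
    if action is None:
        return
    if gui is None:
        import pyautogui as gui  # imported lazily; needs a display
    if action == "left_click":
        gui.click(button="left")
    elif action == "right_click":
        gui.click(button="right")
    elif action == "compile":
        gui.hotkey("ctrl", "b")  # assumed editor shortcut


class RecordingGui:
    """Hypothetical stand-in that records calls instead of executing them."""

    def __init__(self):
        self.calls = []

    def click(self, button="left"):
        self.calls.append(("click", button))

    def hotkey(self, *keys):
        self.calls.append(("hotkey", keys))
```

Calling `perform(blink_action(True, False), gui=RecordingGui())` records a left click without touching the real pointer, which is convenient while the blink thresholds are still being tuned.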

11.4 Experimental Analysis

As mentioned earlier, two types of operation can be performed, namely eye-based cursor control and eye coding. The hardware and software requirements are as follows. The built-in webcam of a laptop or an external webcam with a minimum resolution of 0.922 megapixels is required. However, the algorithms used in this chapter, namely the HOG algorithm and the deep learning model, can perform well even if the resolution is around 0.6 megapixels. Python is used for programming, and it has the advantage of offering many libraries, as mentioned earlier in the chapter, that support image processing techniques.

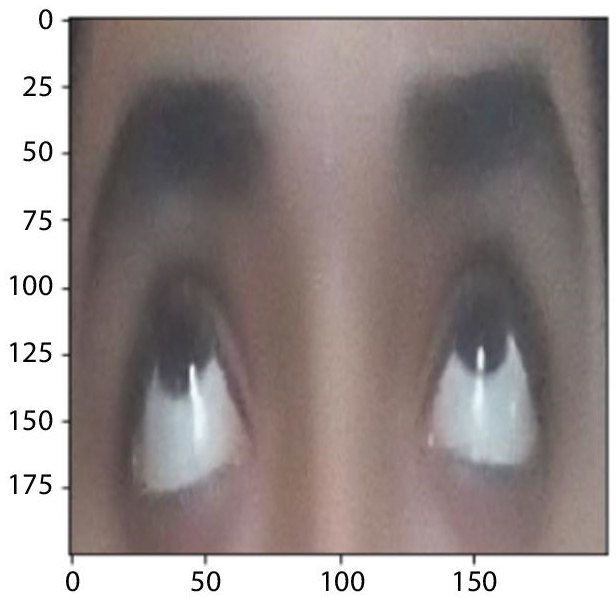

11.4.1 Eye-Based Cursor Control

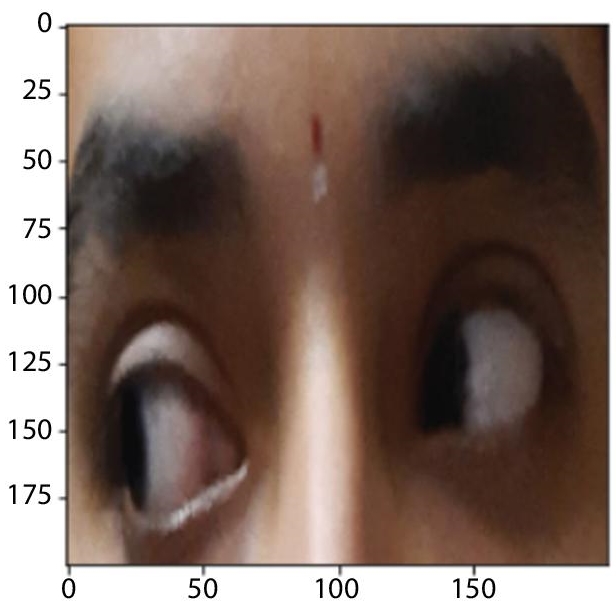

The combined action of gaze detection and eye blink detection facilitates cursor control. Whenever a gaze is detected, the cursor is programmed to move in the corresponding direction by a specified number of pixels. Figure 11.5 shows the image used as input to the HOG algorithm. This input image is monitored for blinks and gaze, and based on the detection a left click, a right click, or a scroll can be performed.
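The step-wise cursor movement can be sketched as a small function that turns a detected gaze direction into the next cursor position. The step of 20 pixels and the function name are hypothetical; screen coordinates are assumed to start at the top-left corner with y growing downwards, which is the convention pyautogui uses, so the result could be passed to `pyautogui.moveTo(x, y)`.

```python
STEP = 20  # pixels moved per detected gaze; an assumed value

# Offsets in screen coordinates (origin top-left, y grows downwards).
OFFSETS = {
    "left": (-1, 0),
    "right": (1, 0),
    "up": (0, -1),
    "down": (0, 1),
    "centre": (0, 0),
}


def next_cursor_position(position, gaze, screen_size, step=STEP):
    """Move `position` one step in the gaze direction, kept on screen."""
    dx, dy = OFFSETS[gaze]
    width, height = screen_size
    x = min(max(position[0] + dx * step, 0), width - 1)
    y = min(max(position[1] + dy * step, 0), height - 1)
    return (x, y)
```

Clamping the result to the screen bounds keeps the cursor visible when the user keeps looking in one direction.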

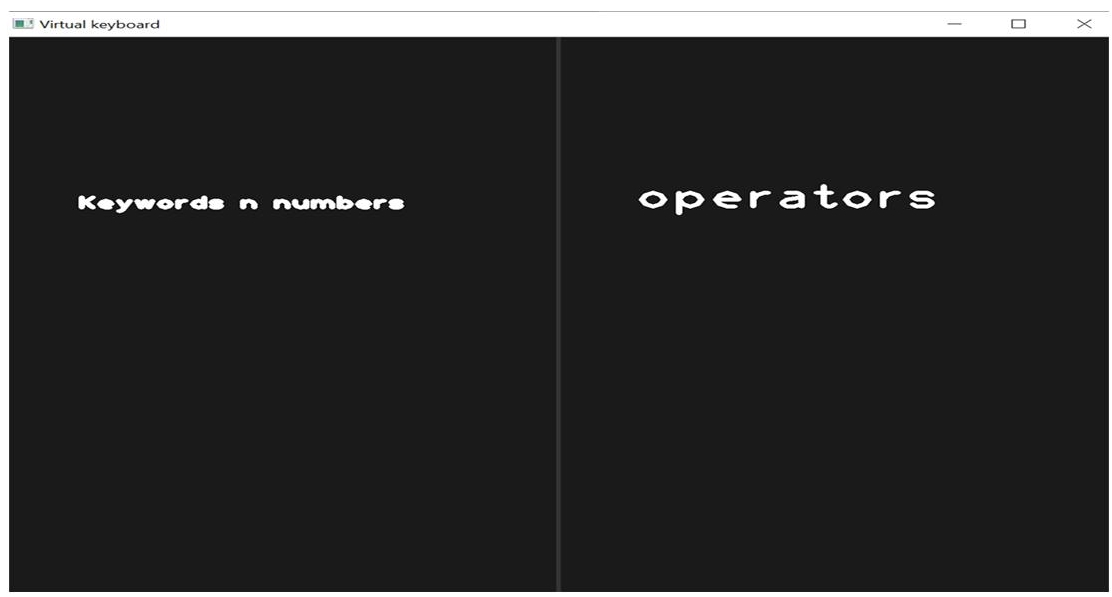

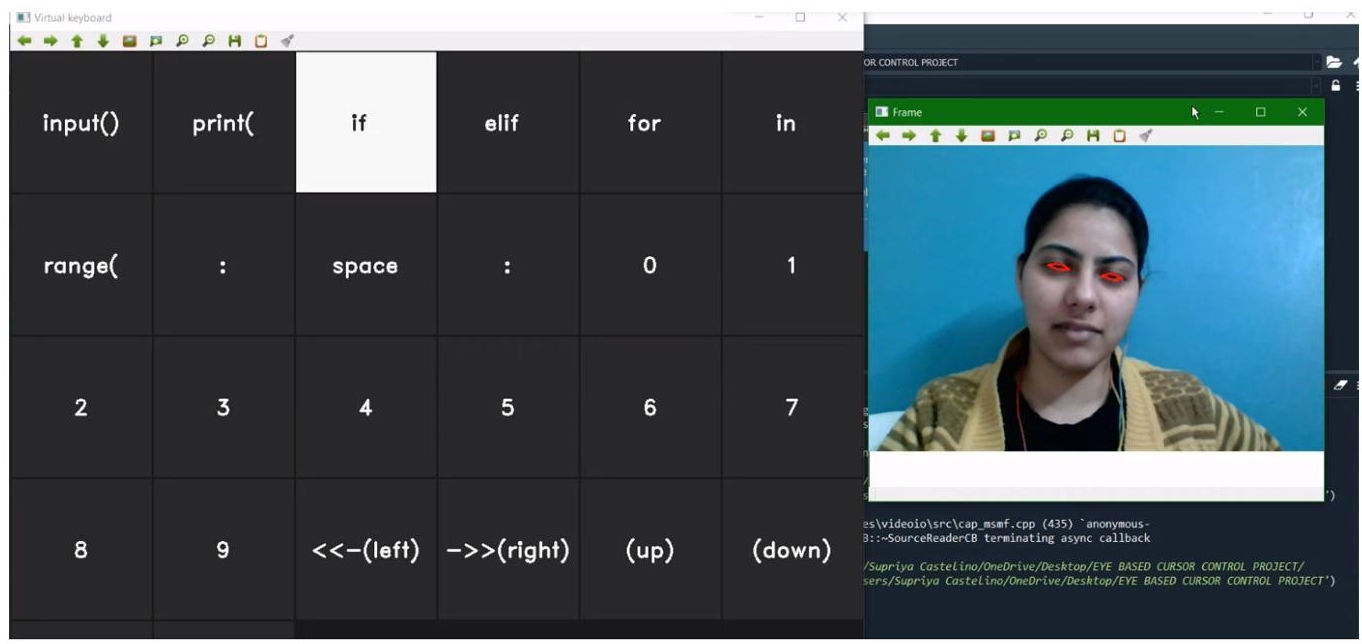

11.4.2 Eye Coding

The technique of writing content with the movement of the eye is called eye writing [16–18]. It can be achieved with OpenCV and Python, where the keyboard is controlled by gaze movement. For this process a virtual keypad is created, as shown in Figure 11.6. The virtual keypad is divided into rows and columns. Each cell has a unique value, and when a cell is highlighted the desired key is pressed by closing the eye for a duration of 2 seconds. The duration of 2 seconds is chosen so as to rule out any action caused by an involuntary blink.
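The 2-second rule can be expressed as a small state machine that is updated once per video frame. This is an illustrative sketch with hypothetical names; the timestamp is passed in explicitly (in practice it could come from `time.monotonic()`), and one key press is reported per closure so that holding the eye shut does not repeat the key.

```python
class DwellSelector:
    """Reports a key press once the eye has stayed closed long enough."""

    def __init__(self, hold_seconds=2.0):
        self.hold_seconds = hold_seconds
        self.closed_since = None  # time at which the current closure began
        self.fired = False        # whether this closure already pressed a key

    def update(self, eye_closed, now):
        """Feed one frame; return True exactly once per long closure."""
        if not eye_closed:
            self.closed_since = None
            self.fired = False
            return False
        if self.closed_since is None:
            self.closed_since = now
        if not self.fired and now - self.closed_since >= self.hold_seconds:
            self.fired = True
            return True
        return False
```

A short involuntary blink resets the timer as soon as the eye opens again, so it never reaches the hold duration.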

Figure 11.5 Image captured for processing.

Figure 11.6 Virtual keypad.

Figure 11.7 shows the virtual keyboard, and Figures 11.8, 11.9, and 11.10 show the keypads for the left, right, and bottom gaze, respectively. Figure 11.7 is the image that appears once the Python program is run. The three gaze directions are used to access the keypads. To make the approach user friendly, frequently used words are included in the keypads, which saves the user the time of typing them letter by letter. To correct a mistyped letter, the user can use the backspace key to delete it.

The keypads in Figures 11.8, 11.9, and 11.10 are created by dividing a NumPy image into a grid of cells. The letter shown in each cell is the letter that is typed out for communication.
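Dividing the keypad image into cells amounts to simple grid arithmetic, sketched below. The function names and the "<-" label for backspace are assumptions for this example; each rectangle could be drawn on a NumPy image with OpenCV functions such as `cv2.rectangle` and `cv2.putText`, which is left out here.

```python
BACKSPACE = "<-"  # assumed label for the backspace key


def keypad_cells(width, height, keys, columns):
    """Split a width x height image into a grid, one cell per key.

    Returns a list of (key, (x0, y0, x1, y1)) in row-major order.
    """
    rows = -(-len(keys) // columns)  # ceiling division
    cell_w = width // columns
    cell_h = height // rows
    cells = []
    for index, key in enumerate(keys):
        row, col = divmod(index, columns)
        x0, y0 = col * cell_w, row * cell_h
        cells.append((key, (x0, y0, x0 + cell_w, y0 + cell_h)))
    return cells


def apply_key(text, key):
    """Append the selected key to the text, or delete on backspace."""
    if key == BACKSPACE:
        return text[:-1]
    return text + key
```

Because a key is just a string, whole keywords such as `print` can occupy a single cell, in line with the frequently used words mentioned above.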

Figure 11.7 Virtual keyboard.

Figure 11.8 Keypad for left gaze.

Figure 11.9 Keypad for the right gaze.

Figure 11.10 Keypad for the bottom gaze.

11.5 Observation and Results

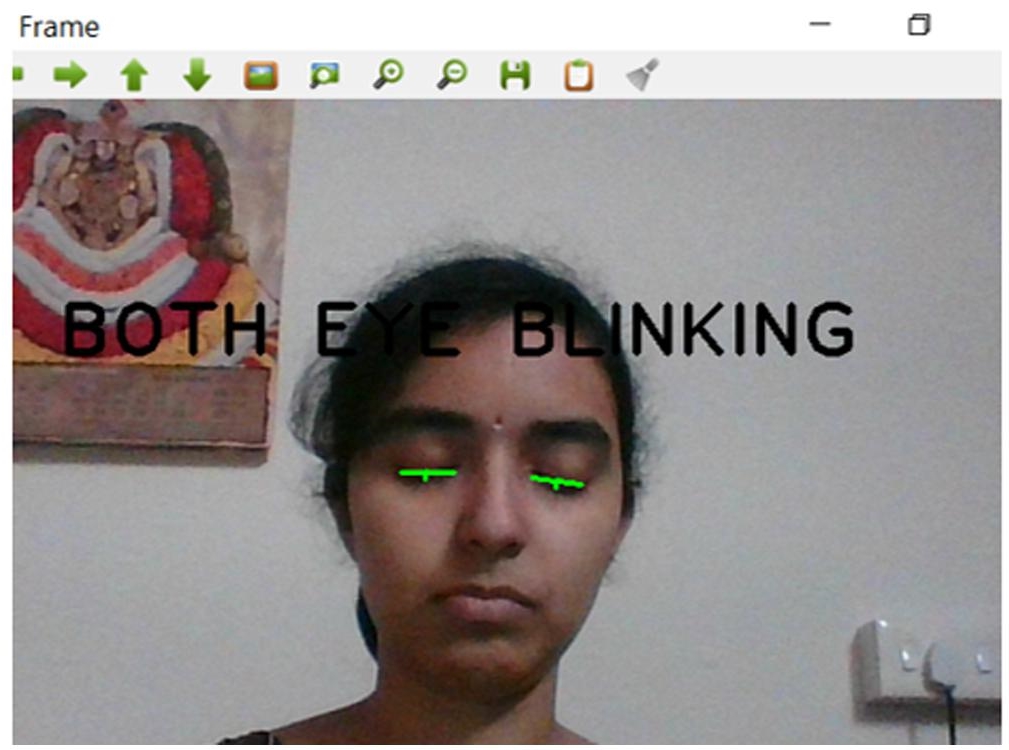

Figures 11.11, 11.12, and 11.13 show the left-eye blink, the right-eye blink, and the blink of both eyes. As the output indicates, the HOG algorithm performs well in detecting the blinks and the facial features. It can also be observed that the result is not sensitive to the distance between the face and the camera. Errors are likely to arise only from poor illumination.

Figure 11.11 Left eye blink.

Figure 11.12 Right eye blink.

Figure 11.13 Both eye blink detection.

Cursor control is automated by combining blink detection and gaze detection. Figures 11.14 to 11.17 show the various gaze directions detected.

Figure 11.18 shows the simulation of the eye coding.

Figure 11.14 Right gaze.

Figure 11.17 Down gaze.

Figure 11.18 Simulation of eye coding.

11.6 Conclusion

This chapter discussed a solution that lets people with severe disabilities use their laptop, personal computer, or smartphone to communicate with the real world. The HOG algorithm or a machine learning model helps them type or even write code with a low-cost system. With real-time detection of the eye movement, the cursor moves accordingly and helps them convey information to those close to them. No special hardware is needed, since, as mentioned, a normal laptop or even a smartphone is sufficient; the overall cost of the system is therefore very low, and the software platform used is open source. Thus, apart from normal communication, the system can also be used to write and compile programs.

11.7 Future Scope

Eye gaze control can also be combined with the detection of head movements and facial expressions. This chapter concentrates on four gaze directions, which can be extended to the diagonal directions as well. Eye control can also be extended from communicating with other people to controlling other devices. The eye gaze and cursor control technique can further be used in emerging technologies for applications such as augmented reality, virtual reality, navigation, smart homes, smart farming, and smart wearables.

References

- 1. Gupta, A., Rathi, A., Radhika, D.Y., “Hands-free pc control” controlling of mouse cursor using eye movement. Int. J. Sci. Res. Publ., 2, 4, 1–5, 2012.

- 2. Narayan, M.S. and Raghoji, W.P., Enhanced cursor control using eye mouse. IJAECS, 3, 31–35, 2016.

- 3. Dongre, S. and Patil, S., A face as a mouse for disabled person. A Monthly Journal of Computer Science and Information Technology, pp. 156–160, 2015.

- 4. Tsuzuki, Y., Mizusako, M., Yasushi, M., Hashimoto, H., Sleepiness detection system based on facial expressions. IECON 2019-45th Annual Conference of the IEEE Industrial Electronics Society, vol. 1, pp. 6934–6939, 2019.

- 5. Rakshita, R., Communication through real-time video oculography using face landmark detection, in: 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), pp. 1094–1098, 2018.

- 6. Soukupova, T. and Cech, J., Eye blink detection using facial landmarks, in: 21st Computer Vision Winter Workshop, Rimske Toplice, Slovenia, 2016.

- 7. George, A. and Routray, A., Real-time eye gaze direction classification using convolutional neural network, in: 2016 International Conference on Signal Processing and Communications (SPCOM), IEEE, pp. 1–5, 2016.

- 8. Perez, A., Cordoba, M.L., Garcia, A., Mendez, R., Munoz, M.L., Pedraza, J.L., Sanchez, F., A precise eyegaze detection and tracking system, in International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision 2003, vol. 3, February, Plzen, pp. 105–108, 2003.

- 9. Park, K.R., Lee, J.J., Kim, J., Facial and eye gaze detection, in: International Workshop on Biologically Motivated Computer Vision, Springer, pp. 368–376, 2002.

- 10. Valsalan, A., Anoop, T., Harikumar, T., Shan, N., Eye writer. IOSR-JECE, 1, 20–22, 2016.

- 11. Harika, V.R. and Sivaramakrishnan, S., Image overlays on a video frame using HOG algorithm. 2020 IEEE International Conference on Advances and Developments in Electrical and Electronics Engineering (ICADEE), pp. 1–5, 2020.

- 12. Sivaramakrishnan, S. and Vasupradha, Centroid and path determination of planets for deep space autonomous optical navigation. IJARESM, 8, 11, 42–48, 2020.

- 13. Gandhi, R., A look at gradient descent and rms prop optimizers. Towards Data Science, 1, 19–24, Jun 2018.

- 14. Brownlee, J., A gentle introduction to the rectified linear unit (ReLU), Machine learning mastery, 1, 6–12, 2019.

- 15. Goodfellow, I., Bengio, Y., Courville, A., Deep learning (adaptive computation and machine learning series), 2016.

- 16. Cano, S., Write using your eyes-gaze controlled keyboard with python and opencv. ICADACC, 2, 12–16, 2019.

- 17. Khare, V., Krishna, S.G., Sanisetty, S.K., Cursor control using eye ball movement, in: 2019 Fifth International Conference on Science Technology Engineering and Mathematics (ICONSTEM), vol. 1, IEEE, pp. 232–235, 2019.

- 18. Murata, A., Eye-gaze input versus mouse: Cursor control as a function of age. Int. J. Hum. Comput. Interact., 21, 1, 1–14, 2006.

Note

- *Corresponding author: [email protected]