3

Accurate Document Extraction with Amazon Textract

In the previous chapter, you read how businesses can use Amazon Simple Storage Service (Amazon S3) and the Amazon Comprehend custom classifier to collect and categorize documents before accurate document extraction. We will now dive into the details of the extraction stage of the Intelligent Document Processing (IDP) pipeline for the accurate extraction of documents. We will navigate through the following sections in this chapter:

- Understanding the challenges in legacy document extraction

- Using Amazon Textract for accurate extraction of different types of documents

- Using Amazon Textract for the accurate extraction of specialized documents

Technical requirements

For this chapter, you will need access to an Amazon Web Services (AWS) account. Before getting started, we recommend that you create an AWS account by referring to the AWS account setup and Jupyter notebook creation steps, as mentioned in the Technical requirements section of Chapter 2, Document Capture and Categorization. You can find Chapter 3 code samples in GitHub here: https://github.com/PacktPublishing/Intelligent-Document-Processing-with-AWS-AI-ML-/tree/main/chapter-3

Understanding the challenges in legacy document extraction

Many organizations, across industries, irrespective of the size of the business, deal with a large number of documents in everyday transactions. Moreover, we discussed the data diversity, data sources, and various layouts and formats for these documents in Chapter 2, Document Capture and Categorization. The data diversity at scale makes it difficult to extract elements from these documents. For example, think about a back-office task for a company. This is one of the non-mission critical tasks for a company, but at the same time, these tasks need to be fulfilled in a scheduled and timely manner. For example, the back office receives invoices at scale and needs to extract information and put it in a structured way in its enterprise resource planning (ERP) system, such as Systems, Applications, and Products (SAP) for accurate payments. Once we convert the data from unstructured documents to a structured format, a machine can handle the processing. So, without automation, how do we handle the extraction part of document processing?

One of the most common ways to extract documents is through manual processing.

Human beings can manually scan these documents and extract elements and feed them to their data entry system, but manual processing of documents can be error-prone, time-consuming, and expensive. Alternatively, customers can use traditional technologies such as optical character recognition (OCR) to extract all elements from the documents. But OCR can give you a flat bag of words (BoW), which is not an optimal way to extract documents. Additionally, we have seen customers using template- or rule-based document extraction. But template-based extraction can be high maintenance, and to onboard a new document type or new format, you will have to create and manage its corresponding template. Moreover, if you have used your custom model building process to extract elements from your documents, to onboard a new document type, it takes significant time and effort to retrain the technology stack for accurate extraction. Moreover, most of the time, traditional document processing—including manual processing—is not ready to handle document extraction at scale. Is there any other alternative solution for accurate document extraction? The following diagram depicts a sample architecture for document extraction in an IDP pipeline:

Figure 3.1 – Document extraction in IDP pipeline

In the document extraction stage of the IDP pipeline, our goal is to extract all the fields accurately from any type of document. In Figure 3.1, after categorizing the documents, we want to extract elements unique to that document. For example, you might want to get the Social Security number (SSN) from a Social Security card (SSC), but the Bank account number from a bank statement document. We may use different technologies and AWS artificial intelligence (AI) services such as Amazon Textract and Amazon Comprehend for accurate extraction. Before diving deep into the architecture, let’s first learn how we can use Amazon Textract for accurate document extraction.

Using Amazon Textract for the accurate extraction of different types of documents

To build an IDP pipeline, we need an AI service such as Amazon Textract to accurately extract printed as well as handwritten text from any type of document. Now, let’s look into the features of Amazon Textract for accurate document extraction.

Introducing Amazon Textract

Amazon Textract is a fully managed AI/machine learning (ML) service, built with pre-trained models for accurate document extraction. Amazon Textract goes beyond simple OCR and can extract data from any type of document accurately. To leverage Textract, no ML experience is required; you can call into its application programming interfaces (APIs) to leverage the pre-trained ML model behind the scenes.

Amazon Textract provides APIs that you can call through the serverless environment without the need to manage any kind of infrastructure. Once the document content is extracted, you can proceed to the subsequent stages of the IDP pipeline, as required by your business. As with any other ML model, the Textract model learns from the data and gives intelligent results over time, but the main benefit of leveraging a managed service such as Textract is that, with no infrastructure to manage, it can still get accurate results just by calling into its API. Now, let’s see some of the common benefits of accessing managed models through serverless APIs in the following table:

Table 3.2 – ML model versus pre-trained AI services

The preceding table gives you a high-level comparison of the benefits of using a managed ML model for your document extraction. As a business decision maker, you can map it back to your timeline requirement and priority as per business requirements. This will help you define how much ML investment you will do for your team. If you decide to go with managed ML model(s), you can save time and can deliver results to the market faster. You can then leverage the saved time for your additional priorities.

Accurate extraction of unstructured document types

Unstructured documents are dense text types, such as legal contractual documents. For the hands-on lab for this chapter, we will be using the following sample text. A snippet of the dense text document is provided here:

Figure 3.3 – Dense text document example

We will follow the next steps to accurately extract elements using Amazon Textract. You can follow the Notebook for your Document Extraction: https://github.com/PacktPublishing/Intelligent-Document-Processing-with-AWS-AI-ML-/blob/main/chapter-3/Textract-chapter3.ipynb

- Firstly, we will start out by importing the required libraries, such as boto3, as follows:

import boto3

import os

from io import BytesIO

from PIL import Image

from IPython.display import Image, display, JSON, IFrame

from trp import Document

from PIL import Image as PImage, ImageDraw

from IPython.display import IFrame

- Let’s see what our unstructured.png sample document looks like. You can see this here:

# Document

documentName = "unstructured.png"

display(Image(filename=documentName))

- Get the Textract boto3 client by executing the following code:

#Extract dense Text from scanned document

# Amazon Textract client

textract = boto3.client('textract')

- To extract from dense text types of documents, we are using the detect_document_text API of Amazon Textract, as shown in the following code snippet. This Amazon Textract API can accept a local file or an S3 object as the input document. For our code here, we are using a local file as an example. Thus, we are passing raw bytes from the local file to Textract’s API:

# Read document content

with open(documentName, 'rb') as document:

imageBytes = bytearray(document.read())

# Call Amazon Textract

response = textract.detect_document_text(Document={'Bytes': imageBytes})

- Now, let’s print Textract’s response as LINES. The code and sample output are shown in the following snippet:

# Print detected text

for item in response["Blocks"]:

if item["BlockType"] == "LINE":

print (item["Text"])

print(response)

Now, let’s check the result here:

Figure 3.4 – Lines from document

The preceding code walked you through how you will call Textract’s API directly using Python code for a dense text type of document extraction. Let’s now check another way to extract text from documents, leveraging the amazon textract textractor library.

The Textractor library helps speed up the code implementation with its inbuilt abstraction logic and response parser. This is available as the pypi library.

Prerequisites to use the Textractor pypi library include the following:

- Python 3

- AWS command-line interface (CLI)

Now, let’s check in the next code samples how we can use the Textractor library to extract accurately from the same dense text type of documents. Proceed as follows:

- Import the required pypi library, like so:

!python -m pip install amazon-textract-caller

!python -m pip install amazon-textract-prettyprinter

import json

from trp import Document

from textractcaller import call_textract, Textract_Features

from textractprettyprinter.t_pretty_print import Pretty_Print_Table_Format, Textract_Pretty_Print, get_string

- Use the call_textract method to extract elements from your document. This method takes the local filename directly as input. It abstracts the creation of "raw bytes" from the local document. The user doesn’t have to get raw bytes and pass them to the Textract API anymore. We can directly pass the local filename [documentName] for accurate extraction, as illustrated in the following code snippet:

textract_json = call_textract(input_document=documentName)

Now, let’s print Textract’s response as LINES. For printing, we are using the get_string() method of the prettyprinter library. prettyprinter formats the JavaScript Object Notation (JSON) output response of Textract for easy reading. In the following code snippet, we are using Textract_Pretty_Print.LINES for our document processing, but additional types such as WORDS and more are supported:

print(get_string(textract_json=textract_json,

output_type=[Textract_Pretty_Print.LINES]))

Sample output is shown here:

Figure 3.5 – Output of Textract

Now, let’s check the JSON API response of Amazon Textract for better parsing.

JSON API response

The document metadata will have reference to the number of pages as pages and blocks. The blocks are organized in a parent-child relationship. For example, a LINE (Amazon Textract) block will have a LINE BlockType, which has a reference to two child blocks of the WORD BlockType: one WORD BlockType for Amazon and another WORD BlockType for Textract. This relationship is represented in the following diagram:

Figure 3.6 – Block relationship for dense text

Now, let’s check how we can use Amazon Textract for the extraction of semi-structured/form types of documents.

Accurate extraction of semi-structured document types

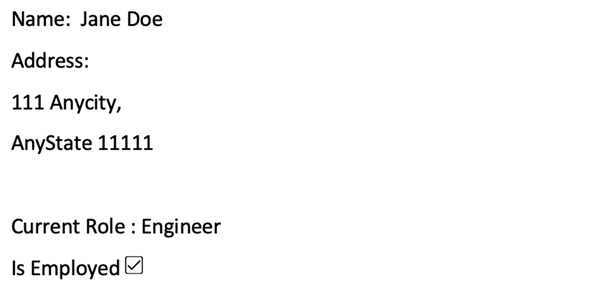

Semi-structured documents are of the form type, such as insurance claims forms. They can have key-value elements along with checkbox/radio button types of elements. For the hands-on lab for this chapter, we will be using the following sample document, which shows a snippet of a semi-structured type of document. You can see this document has key-value pairs. Some key-value pairs are on one line, but some values are on multilines below the key. A key value can be separated by a space, and any delimiter also. This document also has a checkbox type:

Figure 3.7 – Semi-structured form-type document

Now, let’s look into the following sample code for the accurate extraction of this form type of document. Proceed as follows:

- Use the following code to display the semi-structured document:

# Document

documentName = "semi-structured.png"

display(Image(filename=documentName))

This displays the semi-structured sample document, as we can see here:

Figure 3.8 – Sample form-type document

- Use the call_textract method to extract elements from your document. We are passing Textract_Features as FORMS to extract from the semi-structured document. This method takes the local filename directly as input. Then, we call the Textractor prettyprinter library to print the FORMS type. The code for this is shown here:

textract_json = call_textract(input_document=documentName, features=[Textract_Features.FORMS])

print(get_string(textract_json=textract_json,

output_type=[Textract_Pretty_Print.FORMS]))

- In the following output, you can see all the key-value pairs are extracted accurately. Moreover, the checkbox selection is also extracted with a SELECTED value:

Figure 3.9 – Sample Textract output form

Now let’s check the JSON API response of Amazon Textract for better parsing.

JSON API response

For a semi-structured document of the form type, if we have a form type, we get a KEY_VALUE_SET BlockType. In the JSON response, we also have EntityType. For a form type, EntityType values can be of KEY or VALUE. Additionally, if we have a checkbox, you will see a BlockType of SELECTION_ELEMENT with SelectionStatus of SELECTED or NOT_SELECTED. Sample output is depicted in the following diagram:

Figure 3.10 – Block relationship for the form type

Now, let’s check how we can use Amazon Textract for the extraction of structured/table types of documents.

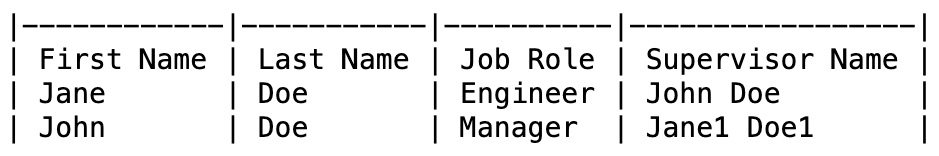

Accurate extraction of structured document types

Structured documents are table types. They can have a table with rows and columns with cells. Textract table extraction also supports merged cell extraction. For the hands-on lab for this chapter, we will be using the following sample structured type of document. Here, you can see this document has a table with rows and columns:

Figure 3.11 – Sample table type

Proceed as follows:

- Use the following code for the display of the semi-structured document:

# Document

documentName = "structured.png"

display (Image(filename=documentName))

And the output looks like this:

Figure 3.12 – Sample output: table

- Use the call_textract method to extract elements from your document. We are passing Textract_Features as TABLES to extract from the structured document. This method takes the local filename directly as input. Then, we call the Textractor prettyprinter library to print the TABLES type. If your document has multiple tables, the following code can extract all the tables from your documents:

textract_json = call_textract(input_document=documentName, features=[Textract_Features.TABLES])

print(get_string(textract_json=textract_json,

output_type=[Textract_Pretty_Print.TABLES]))

In the following sample output, you can see a table with headers, rows, and columns extracted from the document:

Figure 3.13 – Sample output: table result

Now, let’s check the JSON API response of Amazon Textract for better parsing.

JSON API response

For a structured document of the table type, we get additional TABLE and CELL BlockType values. In the JSON response, we also have EntityType. For a TABLE type, EntityType values can be of COLUMN_HEADER for the column header. Sample output is depicted in the following diagram:

Figure 3.14 – Block relationship for table type

Now, let’s check how we can use Amazon Textract for the extraction of specialized documents.

Using Amazon Textract for the accurate extraction of specialized documents

Amazon Textract can support the accurate extraction of specialized document types such as a United States (US) driver’s license, a US passport, invoices, and receipts. Now, we will walk you through the steps to accurately extract elements from these documents using Amazon Textract.

Accurate extraction of ID document (driver’s license)

In this section, we will use Amazon Textract for a US driver’s license document extraction. Now, let’s dive into the code sample, as follows:

- We are using "dl.png" as a sample US driver’s license document. Let’s use the following code to display the document:

# Document

documentName = "dl.png"

display(Image(filename=documentName)

Now, check the result, as follows:

Figure 3.15 – Sample driver’s license

- We are using the analyze_id synchronous API of Amazon Textract to extract elements from our US driver’s license, which is stored locally. To process a local file, we are first extracting the raw bytes from the document and then passing that as an input to the analyze_id API for extraction. The code is illustrated in the following snippet:

with open(documentName, 'rb') as document:

imageBytes = bytearray(document.read())

response = textract.analyze_id(

DocumentPages=[{"Bytes":imageBytes}]

)

print(response)

- Use the following code to print the JSON response from the analyze_id API:

import json

print(json.dumps(response, indent=2))

Now, let’s check the JSON output, as follows:

Figure 3.16 – Sample JSON output

Amazon Textract can also extract elements from additional identifier (ID) types of documents such as US passports.

ID document (US passport) accurate extraction

In this section, we will use Amazon Textract for a US passport document extraction. Now, let’s dive into the code sample, as follows:

- We are using passport.png as a sample US passport document. Let’s use the following code to display the document:

# Document

documentName = "passport.png"

display(Image(filename=documentName))

Now, check the result, as follows:

Figure 3.17 – Sample passport document

- We are using the analyze_id synchronous API of Amazon Textract to extract elements from our US passport, which is stored locally. To process a local file, we are first extracting the raw bytes from the document and then passing that as an input to the analyze_id API for extraction, as illustrated in the following code snippet:

with open(documentName, 'rb') as document:

imageBytes = bytearray(document.read())

response = textract.analyze_id(

DocumentPages=[{"Bytes":imageBytes}]

)

print(response)

- Use the following code to print the JSON response from the analyze_id API:

import json

print(json.dumps(response, indent=2))

Now, let’s check the JSON output, as follows:

Figure 3.18 – Sample output

Let’s next move on to receipt document accurate extraction.

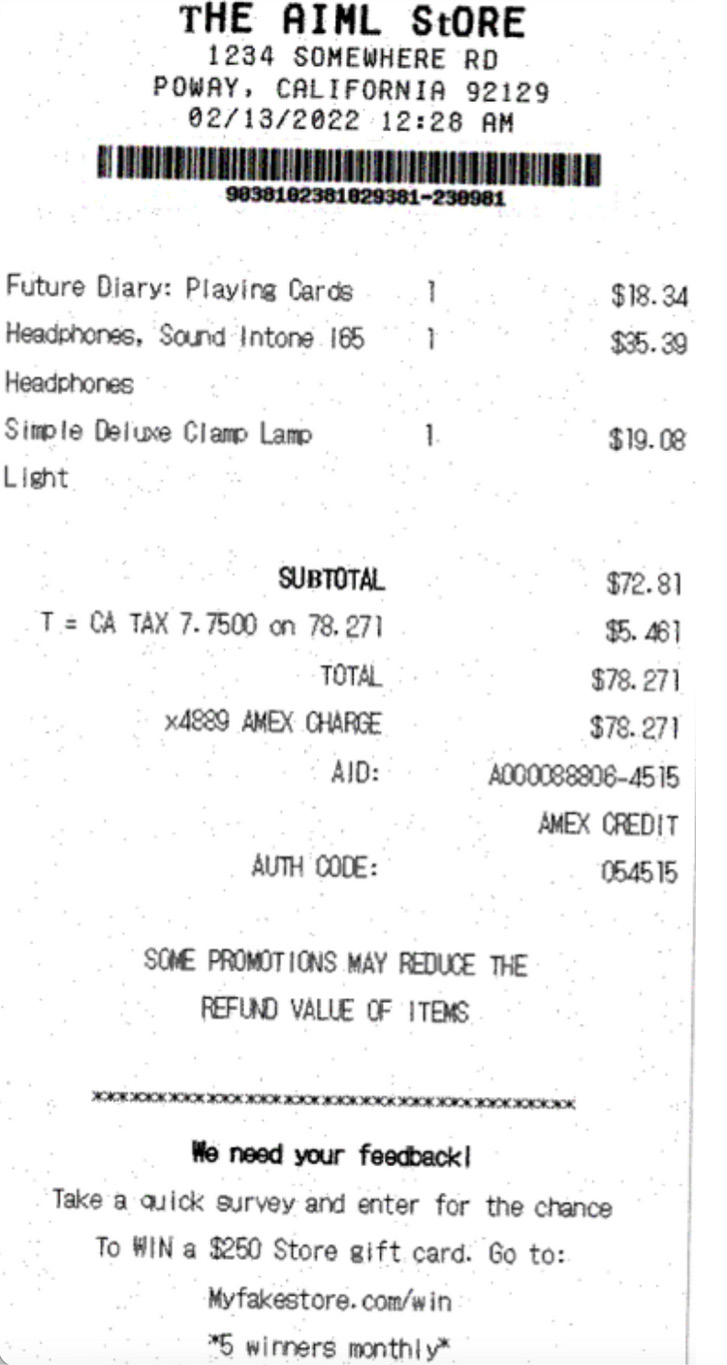

Receipt document accurate extraction

In this section, we will use Amazon Textract for the accurate extraction of a receipt document. Now, let’s dive into the code sample, as follows:

- We are using receipt.png as a sample receipt document. Let’s use the following code to display the document:

# Document

documentName = "receipt.png"

display(Image(filename=documentName))

Now, check the result, as follows:

Figure 3.19 – Sample receipt document

- We are using the analyze_expense synchronous API of Amazon Textract to extract elements from our receipt document, which is stored locally. To process a local file, we are first extracting the raw bytes from the document and then passing that as an input to the analyze_expense API for extraction. The code is illustrated in the following snippet:

# Read document content

with open(documentName, 'rb') as document:

imageBytes = bytearray(document.read())

# Call Amazon Textract

response = textract.analyze_expense(Document={'Bytes': imageBytes})

print(response)

- Use the following code to print the JSON response from the analyze_expense API:

import json

print(json.dumps(response, indent=2))

Now, let’s check the JSON output, as follows:

Figure 3.20 – Sample output

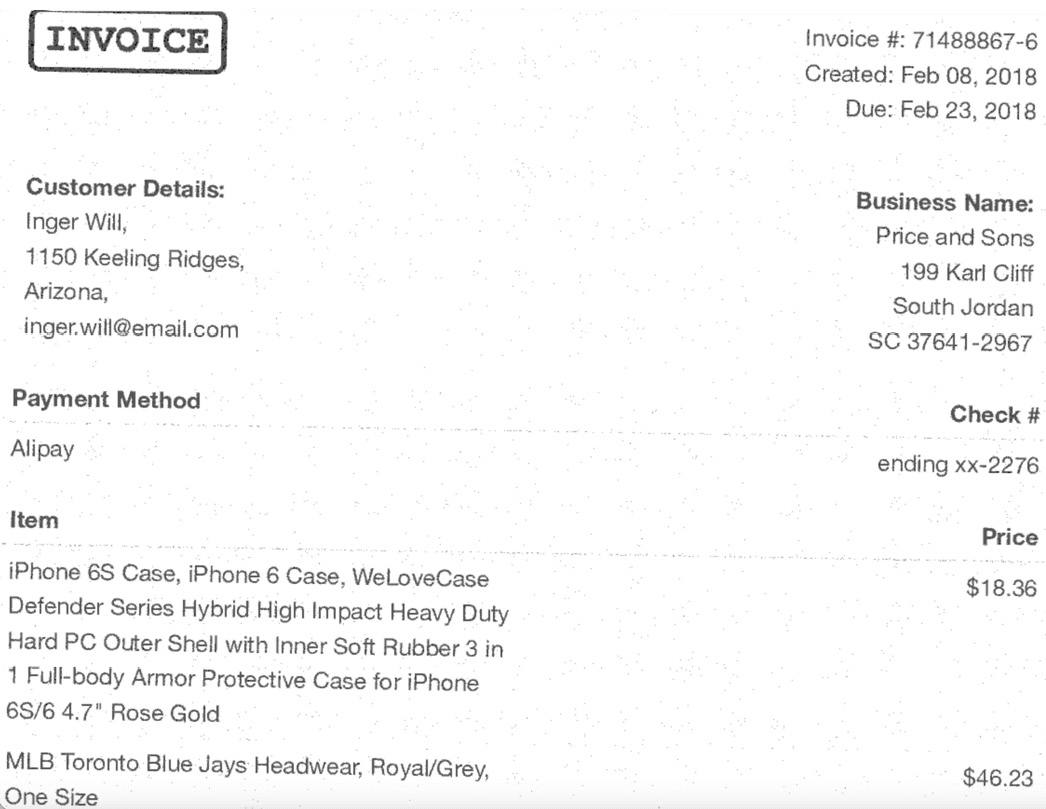

Invoice document accurate extraction

In this section, we will use Amazon Textract for the accurate extraction and normalization of inferred elements from an invoice type of document. Now, let’s dive into the code sample, as follows:

- We are using invoice.png as a sample invoice document. Let’s use the following code to display the document:

# Document

documentName = "invoice.png"

display(Image(filename=documentName))

Now check the result, as follows. I want to quickly highlight that the input document type has skew. This is to show that Amazon Textract can process documents with low resolution as well as documents with skew:

Figure 3.21 – Sample invoice document

- We are using the analyze_expense synchronous API of Amazon Textract to extract elements from our invoice document, which is stored locally. To process a local file, we are first extracting the raw bytes from the document and then passing that as an input to the analyze_expense API for extraction. The code is illustrated in the following snippet:

# Read document content

with open(documentName, 'rb') as document:

imageBytes = bytearray(document.read())

# Call Amazon Textract

response = textract.analyze_expense(Document={'Bytes': imageBytes})

print(response)

- Use the following code to print the JSON response from the analyze_expense API:

import json

print(json.dumps(response, indent=2))

Now, let’s check the JSON output, as follows:

Figure 3.22 – Sample output

We have been through the extraction stage of IDP and seen how we can leverage Amazon Textract for accurate extraction. Most often, there is a requirement to process millions of documents at scale. Going back to our insurance processing use case for enterprise insurance companies, they receive millions of documents as part of claims processing. Can we process a large number of documents at scale? To answer this, let’s look into a large-scale document processing architecture in the next chapter.

Large Scale Document processing

For large-scale document processing, we will use the following Intelligent document processing reference architecture. To design the architecture, we need to take two main things into consideration. One, how many documents you want to process, and second, how fast you want to process them. For this architecture, we want to process tens of thousands of documents per day and our processing is good with not having near real-time processing. For that reason, we are using Amazon Tetxract Asynchronous operation and designing a large-scale architecture.

In the first stage of the IDP (Data Capture) stage, we are storing data in Amazon S3, which kicks in a processor lambda function that can collect a subset of document prefixes. Now you must be thinking how much the subset of document prefix you will define. I would recommend checking Textract Transaction Per Second (TPS) limit per your account, region, and Tetxract API, and setting the subset of document prefix accordingly not to throttle your Tetxract API. Then in an event-driven manner, store these subsets of documents in Amazon SQS for decoupled architecture. SQS triggers Amazon Tetxract API asynchronous API to process these subsets of documents and notifies the completion status with SNS. Note here, that we are not wasting compute cycle by calling Get Textract Result API in the loop. We are just waiting for Tetxract completion notification, and then call Textract Get result API and store the result back into Amazon S3.

Figure 3.23 – Large Scale Document Processing Architecture

Now that we have looked into a large-scale document processing architecture I would recommend, checking the Textract TPS limit in the following reference: https://docs.aws.amazon.com/general/latest/gr/textract.html

Summary

In this chapter, we discussed the extraction stage of an IDP pipeline, and how we can leverage Amazon Textract to accurately extract elements from documents. Documents can be of different types, such as an unstructured dense text type of document, a semi-structured document such as a form, or a structured document such as a table. We walked through the sample code and its API response to accurately extract elements from any type of scanned document.

We then reviewed the need for accurate extraction of elements from specialized document types, such as ID documents such as a US driver’s license, a US passport, or invoice/receipt types of documents. We discussed Amazon Textract’s analyze_id and analyze_expense APIs to accurately extract elements from ID and invoice/receipt types of documents respectively. We walked you through the sample code for your accurate extraction of specialized document types.

In the next chapter, we will extend the extraction stage of the document processing pipeline with Amazon Comprehend. Moreover, we will introduce you to the enrichment stage of IDP and how you can leverage Amazon Comprehend to enrich your documents.