4

Smart Sensors for Activity Recognition

Rehab A. Rayan1*, Imran Zafar2, Aamna Rafique3 and Christos Tsagkaris4

1Department of Epidemiology, High Institute of Public Health, Alexandria University, Alexandria, Egypt

2Department of Bioinformatics and Computational Biology, Virtual University of Pakistan, Punjab, Pakistan

3Department of Biochemistry, Agriculture University Faisalabad, Punjab, Pakistan

4Faculty of Medicine, University of Crete, Heraklion, Greece

Abstract

Nowadays, health informatics is enhancing the efficiency of healthcare through improved collection, storage, and retrieval of vital health-related data. Smart sensors emerged from the rapid growth of information and communication technologies and wireless communications. Today, both smartphones and wearable biosensors are widely used for self-monitoring of health and well-being. Smart sensors could enable healthcare providers to monitor the activities of elderly people digitally and routinely. Smart health has emerged from integrating smart wearable sensors into healthcare, while advances in machine learning (ML) have made it possible to recognize human activity. This chapter describes the applications and limitations of a smart healthcare framework that could digitally and precisely model and record body movements and vital signs during daily-living activities by applying ML techniques to data from smartphones and wearables.

Keywords: Smart health, activity recognition, health monitoring, machine learning, biosensors

4.1 Introduction

Most elderly people suffer from age-related health issues such as cardiovascular disorders, diabetes mellitus, osteoarthritis, dementia, and other chronic conditions. These conditions, together with the gradual decline in mental and physical capabilities, limit their ability to live independently. Recent advances in information and communication technologies (ICTs), together with innovations in ambient smart technologies such as smartphones and biosensors, have enabled smart environments [1]. Smart health could potentially meet the demands of this growing elderly population by delivering smart healthcare services. For example, smart health systems could evaluate and monitor severe conditions of elderly people throughout their everyday lives. Such a design could offer sustained healthcare services while lowering the load on the overall health system. However, adopting a smart health system faces limitations at several phases of development, such as remote monitoring of the living environment, the required techniques, the necessary smart processing systems, and the delivery of context-oriented services. Hence, further studies are needed [2].

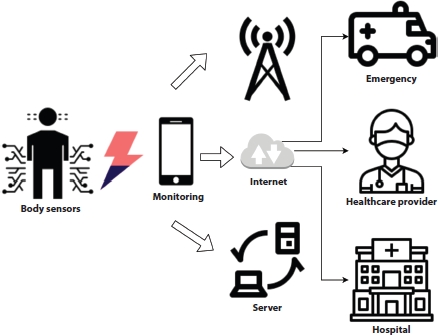

Smart health monitoring systems integrate pervasive computing with ICTs to deliver smart healthcare services that meet individual needs. Several interventions have been designed to address the issues of such systems by providing a smart context in which to monitor and analyze individual health status and deliver real-time smart healthcare services [2]. Figure 4.1 displays an overall structure of these systems.

Figure 4.1 An overall structure of smart health monitoring systems.

ICT innovations have driven the wider deployment of smartphones and body sensors that can connect patients with healthcare providers in a timely manner, allowing health status to be monitored and analyzed pervasively in a smart setting; hence the rise of smart health and mobile health monitoring platforms. This chapter highlights a smart health platform that harnesses the potential of wearables and mobile-based healthcare delivery to monitor individual health and wellbeing and to offer pervasive activity recognition via machine learning (ML) techniques. Smart health is a medical health framework based on smartphones and biosensors, and it deploys technologies such as 4G systems, the Global Positioning System, and Bluetooth [3].

Recent advances in Internet and computational technologies have enabled swift connectivity among many devices [4]. The emerging paradigm of smartphones and biosensors has integrated the Internet into everyday life, linking many devices and increasing productivity in areas such as smart health systems. In smart health applications, smart biosensors include many types of wearables that allow individuals to seek healthcare services anywhere and anytime. They also bring in body sensor networks, one of the most capable technologies in smart health systems, which consist chiefly of lightweight, low-power wireless sensor nodes for activity monitoring and recognition [5]. However, the entire scope of such techniques, which justify and advance multidisciplinary smart health applications, still needs to be established and evaluated [3].

Human-activity recognition (HAR) is a promising research area because of its valuable contributions to enhancing quality of life, safety, transportation, and health in smart cities and villages, and to assisting decision-makers in improving service quality [2]. HAR systems supply data on personal behavior and activity by tracking signals from smartphones and biosensors and interpreting them with ML techniques, which enables ongoing patient monitoring in a range of contexts [6, 7], such as everyday life activities, movements, exercise, and transportation [8]. ML techniques vary widely in the quantity of data they require, their speed, and their learning paradigm (e.g., supervised versus unsupervised algorithms), and the algorithm must be matched to the features of the data. Since data come from multiple sources of different types, it is vital to apply the most efficient algorithms. Furthermore, choosing an appropriate data model is an important step in pattern recognition and in the better exploration of the generated data [4]. This chapter highlights various ML techniques for HAR that use smartphones and biosensors to explore human activity and make smart health more precise.

Novel wireless network techniques have made it possible to gradually adopt wearables for smart monitoring of everyday activities in domains such as emergency situations, cognitive assistance, and safety [9]. HAR can pervasively identify various human activities and gestures from individual motion captured by smartphones and biosensors. For recognizing complex activities, data-driven techniques face challenges in portability, scalability, and interpretability, whereas knowledge-driven techniques are mostly poor at handling complex temporal data [10]. ML can reduce vast quantities of data to compact representations that still reflect the whole dataset [11]. Sensor-based activity recognition is becoming more common than video-based recognition because it better safeguards privacy [12]. Data gathered by wearables can then be processed with ML algorithms.

HAR is a rapidly growing research discipline with broad applications in health, assisted living, home monitoring, personal fitness, and terrorism detection. Likewise, HAR frameworks could be integrated into a smart home healthcare framework to develop and improve patients’ rehabilitation procedures [13]. The aim of proactively assisting elderly people has led healthcare professionals to apply biosensors to monitor and analyze their everyday activities, helping them to live independently [14]. An activity recognition model using various wearables comprises stages for collecting and analyzing data, including feature extraction and identification, classification, and evaluation [15]. However, the ML techniques that recognize human activity most accurately still need to be identified.

4.2 Wearable Biosensors for Activity Recognition

Wearable biosensors (WBS) are rapidly gaining popularity and are poised to become one of the biggest innovations in the technology market. WBS span a broad range of biosensor types suited to healthcare facilities, sports-related applications, security applications, and more. The rapid growth of these devices is driven by benefits such as ease of use, low cost, and real-time awareness. Considerable progress has been made in portable health technology and WBS, bringing them closer to use for clinical purposes. Portable biosensors are of great interest because they can provide continuous, real-time physiological information through non-invasive measurement of biochemical markers in biofluids such as sweat, tears, saliva, and interstitial fluid. Recent developments have focused on electrochemical and optical biosensors, as well as on advances in non-invasive testing of biomarkers including metabolites, bacteria, and hormones.

Systems that combine multiplexed biosensing with microfluidic sampling and transport have been developed, integrated, miniaturized, and combined with lightweight materials to improve portability and ease of use. To fulfil the promise of portable biosensors, however, a better understanding of the relationship between analyte concentrations in blood and in noninvasively sampled biofluids is needed to improve reliability. A wider range of on-body bio-affinity assays and sensing techniques is also required to make more biomarkers available for monitoring. Validation studies on large datasets would also be needed to substantiate the clinical acceptability of WBS. Precise, real-time sensing of physiological information with portable biosensor technology could have a huge impact on our everyday lives.

Portable monitoring devices, or WBS, that allow continuous monitoring of physiological signals are essential for progress in both disease diagnosis and treatment. Portable systems allow physicians to overcome the limitations of conventional technology and meet the need to monitor individuals for weeks or months. Portable biosensors usually rely on wireless sensors embedded in bandages, patches, or other wearable items. The datasets collected with these systems are processed to detect events that predict possible deterioration of the patient’s clinical condition and are analyzed to assess the outcomes of clinical interventions. HAR aims to recognize the actions performed by individuals from a series of measurements captured by sensors. Successful HAR research has focused on recognizing relatively simple behaviors such as sitting or walking, with applications mainly in healthcare tracking, tele-immersion, and other operational settings. Smartphones offer one of the most accessible ways to understand human behavior. Biological sensors, or biosensors for short, are systems comprising a biological element, a sensor, and a biosensor reader. The biological element may be, for example, an enzyme, an inhibitor, an antigen, or a nucleic acid. It is designed to interact with the analyte being measured, and the sensor translates the biological response into an electrical signal. Depending on the application, biosensors are popularly known by names such as immunosensors, optrodes, biochips, glucometers, and biocomputers. Displaying the data is the job of the biosensor reader system, which is known to be the most costly part of the biosensor.

HAR has been studied intensively, and many analyses report findings on all kinds of everyday human behaviors, such as traveling, sleeping, and physical activity. To this end, multiple sensors collect numerous bio-signals. For example, [16] used compact triaxial accelerometers attached to the waist to differentiate between rest (sitting) and active (sit-to-stand, stand-to-sit, and walking) states. In [17], five biaxial accelerometers were used to identify everyday activities such as walking, rising, and folding laundry. In [18], participants were given a smartphone with a built-in accelerometer, and activities such as running, climbing, sitting, standing, and bike riding were categorized. In addition, accelerometers were combined with smartphones to provide a simple auditory-level analysis [19]. The authors of [20] compared the recognition efficiency of five ML classifiers (k-nearest neighbor, feedforward neural network, support vector machine, Naïve Bayes, and decision tree) and assessed the benefits and drawbacks of implementing them for real-time HAR on a laptop computer.
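To make the kind of comparison reported in [20] concrete, the following minimal Python sketch implements two of the five classifiers (k-nearest neighbor and Gaussian Naïve Bayes) from scratch and scores them by accuracy on held-out windows. It is an illustration under stated assumptions, not the setup of [20]: the two features per window (mean and standard deviation of acceleration magnitude, in g), the labels, and all values are hypothetical toy data.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, x, k=3):
    """Label x by majority vote among its k nearest training vectors (Euclidean distance)."""
    nearest = sorted((math.dist(x, tx), ty) for tx, ty in zip(train_x, train_y))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def fit_gaussian_nb(train_x, train_y):
    """Per class: prior, feature means and variances (small constant added for stability)."""
    model, n = {}, len(train_y)
    for label in set(train_y):
        rows = [x for x, y in zip(train_x, train_y) if y == label]
        cols = list(zip(*rows))
        means = [sum(c) / len(rows) for c in cols]
        variances = [sum((v - m) ** 2 for v in c) / len(rows) + 1e-6 for c, m in zip(cols, means)]
        model[label] = (len(rows) / n, means, variances)
    return model

def nb_predict(model, x):
    """Class with the highest log prior plus sum of Gaussian log-likelihoods."""
    def log_posterior(label):
        prior, means, variances = model[label]
        return math.log(prior) + sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances))
    return max(model, key=log_posterior)

def accuracy(predict, test_x, test_y):
    """Fraction of test vectors whose predicted label matches the true label."""
    return sum(predict(x) == y for x, y in zip(test_x, test_y)) / len(test_y)

# Hypothetical window features: (mean acceleration magnitude, std of magnitude), in g.
train_x = [(1.00, 0.02), (1.01, 0.03), (0.99, 0.02), (1.10, 0.30), (1.15, 0.35), (1.05, 0.28)]
train_y = ["sit", "sit", "sit", "walk", "walk", "walk"]
test_x, test_y = [(1.00, 0.04), (1.12, 0.32)], ["sit", "walk"]

nb = fit_gaussian_nb(train_x, train_y)
print("k-NN:", accuracy(lambda x: knn_predict(train_x, train_y, x), test_x, test_y))  # 1.0
print("Naive Bayes:", accuracy(lambda x: nb_predict(nb, x), test_x, test_y))           # 1.0
```

Because `accuracy` only needs a function from feature vector to label, the same helper can score any of the other classifiers, which is what makes side-by-side comparisons straightforward.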

Because of its immense potential for data modeling and personalized drug therapy, advanced care enabled by flexible/portable devices and advanced analytics has gained considerable interest in recent years. However, the limited availability of portable analytical biosensors has impeded further progress toward continuous personal health monitoring. In recent years, significant advances have been made in portable chemical sensors for studying biomarkers in tears, saliva, sweat, blood, and exhaled breath, as well as in their fabrication, applications, and device design. Electromyography (EMG), which records the action of muscles, provides another important bio-signal. It also makes it possible to detect a person’s intention to move before the joint actually moves, which has been used to control orthoses [21]. In addition, several researchers, among them [22] and [23], used electrogoniometers to analyze joint movement. New technologies in WBS innovation include Google’s Smart Lens, the HealthPatch biosensor, wearable odor biosensors for disease detection, the QTM sensor, wearable glucose sensors, portable biosensor tattoos that monitor sweat to track weight, ring sensors, and smart shirts.

4.3 Smartphones for Activity Recognition

Cellphones have become one of the most important tools in our daily lives, and with changing technology they are becoming more capable of fulfilling customer needs and demands every day. To make these devices more functional and effective, designers keep adding new hardware modules. Sensors play a major role in making smartphones more effective and context-aware, so most smartphones come with numerous embedded sensors that allow vast amounts of information to be gathered about the user’s daily life and activities. The accelerometer and the gyroscope are among these sensors.

The accelerometer has become standard hardware for almost every smartphone manufacturer. As its name suggests, an accelerometer measures acceleration, that is, the rate of change of velocity rather than speed itself, so the data collected by the device’s microcontroller can be inspected to track abrupt changes in motion. Another sensor that has become standard smartphone hardware is the gyroscope, which measures angular velocity (the rate of rotation) and can therefore be used to track the device’s orientation. Because the data collected from these sensors differ considerably, several features can be derived from them to determine the activity of the person carrying the device. Early research on classifying mobile usage behavior focused on detecting human behavior from accelerometer signals [24]. Later researchers attempted to identify movement from several wearable gyroscopes and accelerometers [25], and subsequently a convolutional neural network was developed to recognize user movement from the smartphone accelerometer [26]. Kozina et al. used an accelerometer for fall detection [27].
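The sketch below illustrates, under simplifying assumptions, two quantities an accelerometer stream supports: the resultant magnitude, which stays near 1 g when the phone is still because the sensor also senses gravity, and a tilt angle estimated from the direction of gravity. It ends with a toy fall-detection rule (an impact spike followed by stillness). The thresholds (2.5 g, ±0.15 g, 5 samples) and the rule itself are hypothetical and are not the method of Kozina et al. [27].

```python
import math

def magnitude(ax, ay, az):
    """Resultant acceleration (g) of one tri-axial sample; about 1 g when the device is still."""
    return math.sqrt(ax * ax + ay * ay + az * az)

def tilt_degrees(ax, ay, az):
    """Angle between the device z-axis and the measured gravity vector (meaningful only when static)."""
    return math.degrees(math.acos(az / magnitude(ax, ay, az)))

def detect_fall(samples, impact_g=2.5, still_g=0.15, still_len=5):
    """Hypothetical rule: return the index of the first sample whose magnitude exceeds impact_g
    and is followed by still_len samples within still_g of 1 g (lying still), else None."""
    mags = [magnitude(*s) for s in samples]
    for i, m in enumerate(mags):
        after = mags[i + 1:i + 1 + still_len]
        if m > impact_g and len(after) == still_len and all(abs(a - 1.0) < still_g for a in after):
            return i
    return None

walking = [(0.1, 0.2, 1.0), (0.3, -0.2, 1.3), (-0.2, 0.1, 0.8)] * 3
fall = walking + [(1.8, 1.5, 1.6)] + [(0.0, 1.0, 0.05)] * 6  # impact, then lying on one side
print(detect_fall(walking), detect_fall(fall))  # None 9
print(round(tilt_degrees(0.0, 1.0, 0.05)))      # 87: device roughly on its side
```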

Activity analysis has attracted considerable attention in recent decades with the latest developments in artificial intelligence and ML. There has been continuous progress in this area over the past 10 years, from early research on gesture spotting with body-worn inertial sensors [28] to state-of-the-art solutions for recognizing complex human activities with smartphones and wrist-worn motion sensors [29]. In this section, we review several user behavior detection methods.

4.3.1 Early Approaches to Activity Recognition

One of the first studies to recognize user interactions with sensors used body-worn accelerometers and gyroscopes [28] to detect a wide variety of user activities, including pressing a light switch, shaking hands, picking up a phone, turning a phone down, opening a door, drinking, and using a spoon or eating handheld food. For this purpose, sensors were placed on the participant’s arm, one on the forearm and one on the upper arm near the elbow. The collected data were converted into feature vectors describing, for example, the angle at which the hand was held and the hand’s movement. Results were reported using two metrics: recall, the proportion of actual gestures that were correctly spotted (0.87), and precision, the proportion of spotted gestures that were correct (0.62).
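The two metrics can be computed as below. This is a minimal sketch that assumes each gesture is identified by a (label, time slot) pair and counts a detection as correct only on an exact match; real gesture-spotting evaluations usually allow some temporal overlap tolerance. The gesture names and slots are hypothetical.

```python
def precision_recall(true_events, detected_events):
    """Precision = correct detections / all detections; recall = correct detections / all true events.
    Events are hashable (label, time_slot) pairs and must match exactly to count as correct."""
    truth, detected = set(true_events), set(detected_events)
    correct = len(truth & detected)
    precision = correct / len(detected) if detected else 0.0
    recall = correct / len(truth) if truth else 0.0
    return precision, recall

# Hypothetical gesture annotations and detector output.
true_gestures = [("drink", 1), ("open_door", 4), ("handshake", 7), ("drink", 9)]
detected = [("drink", 1), ("open_door", 4), ("handshake", 7), ("drink", 5), ("spoon", 6)]
print(precision_recall(true_gestures, detected))  # (0.6, 0.75)
```

Here two spurious detections lower precision, while the single missed gesture lowers recall.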

4.3.2 Similar Approaches to Activity Recognition

A similar system used a cell phone’s accelerometer to study human activity detection [18]. The authors opted to use only the accelerometer, since it was the only significant motion sensor in most mobile devices at the time; since then, many other sensors have become standard in the vast majority of smartphones. The activities under observation were walking, jogging, sitting, standing, ascending stairs, and descending stairs. The study was divided into three main parts: data collection, feature generation, and experiments.

The first phase, data collection, was carried out with 29 users who kept a mobile device in their trouser pocket while performing everyday activities. Although 20-s segments were also tested, each activity was ultimately divided into 10-s segments, with sensor data read every 50 ms (i.e., 200 readings per segment). Not every user contributed the same amount of data; the number of segments collected differed for each person and for each activity. Because this approach relies on classification algorithms, the main objective of the next step was to transform the data into a form that existing algorithms could accept as input. At this stage, features were computed from each 10-s segment of sensor readings (feature generation), yielding six feature types: average, standard deviation, average absolute difference, average resultant acceleration, time between peaks, and binned distribution. The final step applied three classification techniques, namely decision trees, logistic regression, and multilayer neural networks, to the extracted features. The results showed high recognition rates for walking (92%), jogging (98%), sitting (94%), and standing (91%), averaged over the three classifiers, whereas ascending and descending stairs had comparatively low rates: 49% and 37%, respectively, averaged over the three classifiers, rising to 60% and 50% when logistic regression was excluded. This eventually led to the two stair activities being dropped in favor of a single new climbing-stairs activity, which reached an average accuracy of 77%; this is still lower than for the other four activities, but considerably better than for the two separate stair activities.
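A minimal sketch of this windowing and feature-generation step follows. It assumes (x, y, z) readings sampled every 50 ms, so a 10-s window holds 200 readings, and computes per-axis average, standard deviation, average absolute difference, and a 10-bin distribution, plus the average resultant acceleration; the peak-based feature is omitted for brevity. Function names, the bin count, and the synthetic stream are our own choices, not those of [18].

```python
import math
import statistics

SAMPLE_PERIOD_MS = 50  # one reading every 50 ms
WINDOW_S = 10          # 10-s segments
READINGS_PER_WINDOW = WINDOW_S * 1000 // SAMPLE_PERIOD_MS  # = 200

def segment(samples, size=READINGS_PER_WINDOW):
    """Split a stream of (x, y, z) readings into non-overlapping windows; a trailing partial window is dropped."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

def binned_distribution(values, bins=10):
    """Fraction of values in each of `bins` equal-width bins spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # guard against a constant signal
    counts = [0] * bins
    for v in values:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return [c / len(values) for c in counts]

def window_features(window):
    """40 features: for each axis, mean, std, average absolute difference and a
    10-bin distribution (13 x 3), plus the average resultant acceleration."""
    features = []
    for axis in zip(*window):  # columns: x values, y values, z values
        mean = statistics.fmean(axis)
        features.append(mean)
        features.append(statistics.pstdev(axis))
        features.append(statistics.fmean(abs(v - mean) for v in axis))
        features.extend(binned_distribution(axis))
    features.append(statistics.fmean(math.sqrt(x * x + y * y + z * z) for x, y, z in window))
    return features

# Hypothetical 22.5-s stream (450 readings): yields two full 10-s windows.
stream = [(math.sin(i / 5), math.cos(i / 7), 1.0 + 0.1 * math.sin(i / 3)) for i in range(450)]
windows = segment(stream)
print(len(windows), len(window_features(windows[0])))  # 2 40
```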

4.3.3 Multi-Sensor Approaches to Activity Recognition

In one of the more recent and complex studies, a solution based on both smartphones and wrist-worn motion sensors was proposed [29]. The central idea of this approach is that the way users carry their smartphones (for example, in a trouser pocket) is not ideal for recognizing behaviors that involve hand movements. That is why wrist-worn sensors are used in addition to those of the phone. Both sensor sets included an accelerometer, a gyroscope, and a linear acceleration sensor.

Data were collected from 10 participants for 13 activities, but only 7 of those activities were performed by all participants, and these are exactly the activities the proposed solution attempts to recognize. Each participant performed each activity for 3 min, giving 30 min per activity and a dataset of 390 min in total. All data were collected with two smartphones: instead of a dedicated wrist-worn sensor, a second smartphone was mounted on the participant’s right wrist. Only two features were extracted, namely mean and standard deviation, chosen for their low complexity and the reasonable accuracy they showed for various activities. Results were reported for combinations of sensors: each sensor was evaluated separately and then in combination with the others, and the two device positions were also combined. Using only the accelerometer and gyroscope at the wrist, some activities were confused; the largest confusion was between walking and walking upstairs, with an accuracy of 53%, compared with 83% for the other activities together. Combining the two positions improved the results, raising the overall accuracy to 98%. The main drawback of this solution compared with the earlier one is its reliance on two mobile devices, which is impractical in real life.
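The benefit of combining the two device positions can be illustrated with feature-level fusion: mean and standard deviation are computed per signal channel at each position, and the two vectors are concatenated. In this hypothetical sketch each position has a single channel (a real setup would include accelerometer, gyroscope, and linear acceleration axes), and a nearest-centroid rule stands in for the classifiers used in [29]; the activity names and signal values are invented for illustration.

```python
import statistics

def mean_std_features(channels):
    """Mean and population standard deviation of every signal channel at one body position."""
    feats = []
    for signal in channels:
        feats += [statistics.fmean(signal), statistics.pstdev(signal)]
    return feats

def fuse(pocket_channels, wrist_channels):
    """Feature-level fusion: concatenate the pocket and wrist feature vectors."""
    return mean_std_features(pocket_channels) + mean_std_features(wrist_channels)

def nearest_centroid(train, x):
    """Assign x to the class whose mean training feature vector is closest (squared Euclidean)."""
    centroids = {label: [statistics.fmean(col) for col in zip(*vectors)]
                 for label, vectors in train.items()}
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], x)))

# Hypothetical one-channel signals: the pocket signal is identical for both activities
# (the legs move the same way), so only the wrist features can tell them apart.
pocket = [[0, 2, 0, 2]]
train = {
    "walk": [fuse(pocket, [[1, 1, 1, 1]]), fuse(pocket, [[1, 1, 1, 1.2]])],
    "walk_and_drink": [fuse(pocket, [[0, 4, 0, 4]]), fuse(pocket, [[0, 4, 0, 3.6]])],
}
print(nearest_centroid(train, fuse(pocket, [[0, 4, 0, 4]])))  # walk_and_drink
```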

4.3.4 Fitness Systems in Activity Recognition

Sports, especially fitness and running, are an area where activity recognition has recently gained considerable traction. Numerous applications use HAR to help users track their training sessions. Samsung Health offers such a platform, alongside many others, while other apps concentrate specifically on running and walking, such as Nike+ and Endomondo, or on cycling and swimming, such as Strava. Although these apps recognize a very limited range of activities, they perform extremely well, detecting the type of activity accurately and offering advantages such as auto-pause when the user stops running and tailored training plans. In addition, there has been a drastic increase in the number of smartwatches and fitness bands, such as Fitbit and Apple Watch, that can track the number of steps, sleep cycles, inactive hours, etc. These devices have begun to be used as components of more complex systems that employ several sensors to perform in-depth activity detection in scenarios such as health care [30], healthy aging [31], and persuasive health behavior technology [32].
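Step counting, one of the basic features of such bands, is commonly approached by peak detection on the acceleration magnitude. The sketch below is a hypothetical minimal version, not the algorithm of any named product: it counts local maxima above a threshold (1.2 g) and enforces a minimum gap between counted steps (5 samples) to avoid double counting.

```python
import math

def count_steps(samples, threshold=1.2, min_gap=5):
    """Count local maxima of the acceleration magnitude (g) that exceed `threshold`,
    ignoring peaks closer than `min_gap` samples to the previously counted step."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    steps, last_step = 0, -min_gap
    for i in range(1, len(mags) - 1):
        is_peak = mags[i] > mags[i - 1] and mags[i] >= mags[i + 1]
        if is_peak and mags[i] > threshold and i - last_step >= min_gap:
            steps += 1
            last_step = i
    return steps

# Hypothetical walking signal: one bounce every 8 samples on the vertical axis.
walk = [(0.0, 0.0, 1.0 + 0.4 * math.sin(2 * math.pi * i / 8)) for i in range(40)]
print(count_steps(walk))  # 5
```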

4.3.5 Human–Computer Interaction Processes in Activity Recognition

People’s enjoyment of play never fades, and gaming has undergone many changes. Action recognition has become an integral part of gaming in recent years with the advancement of technologies such as Kinect [33], PlayStation Move [34], and Nintendo Wii [35]. While some of these recognize movement using only computer vision (such as Kinect), others also rely on sensors: the Nintendo Wii controller, for instance, contains a motion sensor that allows the activity to be identified. These systems are used in different ways to play sports and to develop healthy habits. By understanding a user’s reactions and emotions, a computer can respond accordingly, which makes richer human–machine interaction possible.

4.3.6 Healthcare Monitoring in Activity Recognition

Recent research [36] showed that user activities and actions can be used to indicate human health status. Research has led medical experts to believe that there is a clear link between the amount of physical activity and various obesity- and metabolism-related diseases. Given the vast amount of data that can be gathered about an individual’s behavior, the idea of using these data to learn about a person’s health condition has evolved rapidly. Although such monitoring is assumed to be a better option than a time-limited medical appointment, it is not considered a substitute but rather a complementary method.

One of the earliest attempts to tackle this issue used Bluetooth accelerometers worn at three body locations together with RFID modules to identify objects used daily [37]. The paper noted that people are mostly in one of the following states: sitting, standing, lying, walking, and running. In addition, the solution included a wrist-worn sensor that tracked hand movement to improve the precision of human motion detection. This sensor also featured an RFID reader to detect tags placed on certain objects; when it reads a nearby tag, the sensor reports which object is being used, which helps define the accompanying hand movement. For the evaluation, several activities coupled with each body state were investigated, such as drinking while sitting, standing, or walking, and ironing or brushing hair while standing. The results showed a major improvement in accuracy with the use of RFID, raising the overall value from 82% to about 97%. Recognition of the body state alone averaged 94%, with walking being the most challenging state to identify.

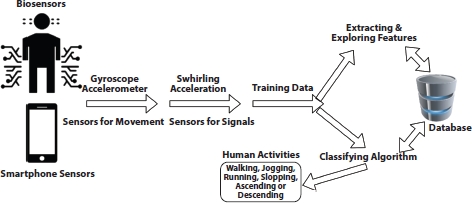

4.4 Machine Learning Techniques

Rapid developments in ICTs and wireless communication networks have led to the widespread use of smart sensors. Smart devices bring health practitioners and patients together in emerging healthcare networks for safe, automated, routine tracking of older people’s behaviors. Smart body sensors and tablets are used continuously for personal wellbeing and wellness monitoring. Wearable sensor technology is one of the key advances in healthcare monitoring systems based on smart sensors. The implementation of intelligent wearable sensors in healthcare has contributed to the advancement of smart applications such as smart health and smart health monitoring systems. Figure 4.2 shows an overall framework of smart health monitoring systems. Today, health information technology is vital for improving healthcare quality by optimizing the collection, storage, and retrieval of essential patient health information. In this context, there is considerable optimism about advancing ML techniques, which play a crucial role in understanding human behavior.

The way data are stored, interpreted, and processed has changed dramatically in the last few decades. Tremendous quantities of data are generated every second and can provide useful insights if they are analyzed effectively. Many data mining methods have been developed to analyze these vast amounts of data, and selecting suitable models is an important part of prediction. In our exercise, we developed models using several ML techniques and compared the accuracy of the different algorithms. HAR is the problem of predicting a person’s actions, often indoors, from sensor data such as smartphone accelerometer readings. Sensor data streams are usually split into subsequences called windows, and each window is associated with a broader activity; this is known as the sliding window approach. Convolutional neural networks and long short-term memory (LSTM) networks, individually or combined, are well suited to learning features from raw sensor data and predicting the associated movement. The ML algorithms are described below.

Figure 4.2 The smart health monitoring framework.
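The sliding window approach described above can be sketched as follows. It is a minimal illustration under our own assumptions: windows of `size` samples start every `step` samples (so `step < size` gives overlapping windows), and each window takes the most frequent activity label inside it, with ties going to the label encountered first.

```python
from collections import Counter

def sliding_windows(samples, labels, size, step):
    """Yield (window, label) pairs for windows of `size` samples starting every `step` samples.
    step < size gives overlapping windows; a trailing partial window is dropped.
    Each window takes its most frequent label (ties go to the label seen first)."""
    for start in range(0, len(samples) - size + 1, step):
        window = samples[start:start + size]
        label = Counter(labels[start:start + size]).most_common(1)[0][0]
        yield window, label

# Hypothetical stream: 6 samples of sitting followed by 4 of walking,
# cut into 4-sample windows with 50% overlap.
samples = list(range(10))
labels = ["sit"] * 6 + ["walk"] * 4
for window, label in sliding_windows(samples, labels, size=4, step=2):
    print(window, label)
```

The third window straddles the transition and is labelled `sit` only because of the tie rule; windows that mix activities are one reason window length and overlap are treated as tuning parameters.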

4.4.1 Decision Tree Algorithms for Activity Recognition

Decision trees are common classification algorithms for problems such as human behavior recognition. Our first model used the C4.5 decision tree algorithm for classification. Decision trees are easy to interpret; however, their accuracy can suffer if the relationship between predictors and outcomes is nonlinear.
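C4.5 chooses splits by gain ratio, that is, information gain normalized by the split information. The sketch below shows only this split criterion for a binary threshold on one numeric feature; full C4.5 also handles categorical attributes, missing values, and pruning, which are omitted. The feature (standard deviation of acceleration magnitude) and its values are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy, in bits, of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())

def gain_ratio(values, labels, threshold):
    """Gain ratio (information gain / split information) of the split value <= threshold."""
    left = [y for v, y in zip(values, labels) if v <= threshold]
    right = [y for v, y in zip(values, labels) if v > threshold]
    if not left or not right:
        return 0.0  # the split does not separate anything
    n = len(labels)
    p_left, p_right = len(left) / n, len(right) / n
    info_gain = entropy(labels) - p_left * entropy(left) - p_right * entropy(right)
    split_info = -(p_left * math.log2(p_left) + p_right * math.log2(p_right))
    return info_gain / split_info

def best_threshold(values, labels):
    """Evaluate midpoints between consecutive distinct values and keep the highest gain ratio."""
    distinct = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]
    return max(candidates, key=lambda t: gain_ratio(values, labels, t))

# Hypothetical feature: standard deviation of acceleration magnitude per window (g).
values = [0.02, 0.03, 0.04, 0.25, 0.30, 0.35]
labels = ["sit", "sit", "sit", "walk", "walk", "walk"]
t = best_threshold(values, labels)
print(round(t, 3), gain_ratio(values, labels, t))  # 0.145 1.0
```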

4.4.2 Adaptive Boosting Algorithms for Activity Recognition

AdaBoost is a boosting strategy that improves classification performance by combining weak learners. The algorithm gives greater weight to the instances misclassified by earlier weak learners, so that subsequent weak learners concentrate on those difficult cases. We used AdaBoost with 10 decision trees to improve on the classification accuracy of a single deep classification tree.
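The reweighting idea can be seen in a minimal discrete AdaBoost over one-feature decision stumps, with labels in {-1, +1}. This is an illustrative sketch, not the configuration used in our exercise (which boosted 10 decision trees): here each weak learner is a single-threshold stump, and the hypothetical toy data form an interval that no single stump can separate (the best stump gets 6 of 8 points right), while three boosting rounds classify all points correctly.

```python
import math

def stump_predict(x, threshold, polarity):
    """A decision stump: +polarity at or above the threshold, -polarity below it."""
    return polarity if x >= threshold else -polarity

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump on 1-D data."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for w, x, y in zip(weights, xs, ys) if stump_predict(x, t, polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best

def adaboost(xs, ys, rounds=10):
    """Discrete AdaBoost for labels in {-1, +1}; returns a list of (alpha, threshold, polarity)."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, t, polarity = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)  # keep log() finite for perfect/useless stumps
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, polarity))
        # Misclassified samples (y * h(x) = -1) are multiplied by e^alpha, correct ones by e^-alpha.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, polarity) for alpha, t, polarity in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical 1-D feature where the positive class occupies the middle interval.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [-1, -1, 1, 1, 1, 1, -1, -1]
model = adaboost(xs, ys, rounds=3)
print([adaboost_predict(model, x) for x in xs])  # [-1, -1, 1, 1, 1, 1, -1, -1]
```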

4.4.3 Random Forest Algorithms for Activity Recognition

Random forest likewise combines weak learners to increase accuracy. It draws bootstrap samples of the training data and grows many decision trees from them, each considering only a random subset of the predictors; bootstrapping and feature sampling together yield less correlated trees. Finally, the trees’ individual predictions are aggregated, by majority vote for classification, to predict the outcome. This algorithm generally provides considerably higher accuracy than a single decision tree.
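The sketch below shows the two sources of randomness behind a random forest, bootstrap sampling of rows and random selection of features, together with majority voting. To stay short it makes simplifying assumptions: each "tree" is a depth-1 stump that sees a single randomly chosen feature, whereas a standard random forest grows deeper trees and samples a feature subset at every split. The features and values are hypothetical.

```python
import random
from collections import Counter

def majority(labels):
    """Most frequent label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def train_stump(rows, labels, feature):
    """Best single threshold split on one feature: (feature, threshold, left_label, right_label)."""
    values = sorted(set(r[feature] for r in rows))
    best, best_err = None, None
    for a, b in zip(values, values[1:]):
        t = (a + b) / 2
        left = [y for r, y in zip(rows, labels) if r[feature] <= t]
        right = [y for r, y in zip(rows, labels) if r[feature] > t]
        left_label, right_label = majority(left), majority(right)
        err = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best_err is None or err < best_err:
            best, best_err = (feature, t, left_label, right_label), err
    if best is None:  # every sampled value identical: predict the majority label everywhere
        label = majority(labels)
        best = (feature, float("inf"), label, label)
    return best

def random_forest(rows, labels, n_trees=15, seed=0):
    """Fit n_trees stumps, each on a bootstrap sample and one randomly chosen feature."""
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: n draws with replacement
        feature = rng.randrange(n_features)         # random feature subset (size 1 here)
        forest.append(train_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest

def forest_predict(forest, row):
    """Majority vote over all stumps."""
    return majority([left if row[f] <= t else right for f, t, left, right in forest])

# Hypothetical features per window: (std of acceleration magnitude, mean magnitude), in g.
rows = [(0.02, 1.00), (0.03, 1.01), (0.04, 0.99), (0.30, 1.20), (0.35, 1.25), (0.28, 1.15)]
labels = ["sit", "sit", "sit", "walk", "walk", "walk"]
forest = random_forest(rows, labels)
print(forest_predict(forest, (0.0, 0.9)), forest_predict(forest, (0.5, 1.4)))
```

An odd number of trees avoids tied votes in this two-class example.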

4.4.4 Support Vector Machine (SVM) Algorithms for Activity Recognition

SVM is a supervised learning algorithm that represents samples as points in space and separates the outcome categories with a boundary (a hyperplane) chosen to leave the widest possible margin between them. New points are classified according to the side of the boundary on which they fall.
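A linear SVM can be sketched by minimizing the regularized hinge loss with full-batch subgradient descent, as below. This is an illustrative version built on assumptions (fixed learning rate, fixed number of epochs, labels in {-1, +1}); practical libraries use dedicated solvers and also support nonlinear kernels. The toy points are hypothetical.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the soft-margin objective
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))), with labels in {-1, +1}."""
    dim, n = len(points[0]), len(points)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regulariser (b is not regularised)
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # hinge term active: point is misclassified or inside the margin
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def svm_predict(w, b, x):
    """+1 or -1 according to the side of the hyperplane w.x + b = 0 on which x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical 2-D feature vectors for two activity classes.
points = [(0, 0), (1, 0), (0, 1), (3, 3), (4, 3), (3, 4)]
labels = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(points, labels)
print([svm_predict(w, b, p) for p in points])
```

Only points on the wrong side of, or inside, the margin contribute to the update, which is why the final boundary is determined by the points closest to it.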

4.5 Other Applications

Smart activity sensors have become increasingly accessible in fields ranging from fashion to IT and medicine. Introducing smart biosensors into oncology clinical trials represents a new trend with many debatable aspects [38]. The need for smart sensors derives from the cost of clinical trials and the need to secure effective data collection. The cost of developing a new anticancer treatment currently ranges from $648 million to $2.7 billion. Only 35% of studies progress from Phase II to Phase III clinical trials, and for those that succeed, the product takes about 8 years to reach the market [39]. Monitoring factors ranging from treatment compliance to vital signs and patients’ quality of life requires on-site visits and involves a considerable burden in terms of resources and workload. It has been estimated that more effective monitoring could allow clinical studies that will not proceed further to be identified earlier, decreasing the cost and time researchers and stakeholders spend on them [40, 41].

Recently, smart activity-detecting biosensors have appeared as a promising alternative. Researchers and stakeholders have long sought to connect clinical trials with eHealth and mHealth modalities [42]. According to a report published by the American Society of Clinical Oncology, novel data collection technologies represent prime opportunities to standardize the data collected across phases and to increase the comparability of results [41]. Such novelties include wearable devices (smart electronic devices worn on the body as implants or accessories, including activity trackers), mobile technology (portable devices operating via wireless cellular services), biosensors, and subsystems that monitor the environment [38].

Multi-institution trials can improve their data collection methods as well as the quantity (sample size) and quality (diversity) of their studies. Activity biosensors can support the collection of more specific health outcome data via wearables and mobile technology. Wearable tracking is categorized as clinical outcome assessment (COA) or non-clinical outcome assessment (non-COA), encompassing a wide variety of quantifiable factors. An individual’s physiology, mental state, or ability to complete an activity can be quantified, provided that the key condition of an activation step is met [41].

For example, many studies have shown the accuracy and representativeness of data collected through active sensors, mostly with sensors designed to collect a single measure [43]. Mobile-enabled blood glucose monitors, wireless pulmonary artery pressure monitors, and balance quality assessment tools are a few of the sensors that have been developed and validated for specific measures. Such sensors capture patients’ vital signs, revealing otherwise unseen or under-monitored incidents related to breathing patterns, cardiac function, and metabolism (glucose levels). Activity sensors placed on medicine storage boxes can monitor patients’ compliance, shedding light on unusual patterns of efficacy, inefficacy, or adverse effects. Overall, smart activity sensors in oncology can help to demonstrate sufficient clinical benefit of a new treatment, which may increase the likelihood of proceeding to a large randomized trial, while clinical studies with poor outcomes can be stopped in time before consuming more time and resources [41].

4.6 Limitations

Introducing smart activity sensors poses several challenges. The learning curve for both researchers and patients will determine how firmly these sensors become established in the field. Cost is also a considerable factor: although funding for clinical trials in oncology is high, smart sensors will establish themselves only if they prove cost-effective. Last but not least, despite their advantages, smart activity sensors represent an intrusion into patients' personal lives.

Although the learning curve for smart technology is small for young and educated people, many of whom take part in clinical trials, the same does not apply to older people. Cancer becomes more prevalent with age, and most clinical trials include older individuals. Collective or personalized training and consultation or help-center services can be helpful. Comparisons across devices have shown that step count, for instance, is relatively accurate for most wearables in 18- to 39-year-olds but more variable in older age groups [41, 44]. This variability appears even though step counting requires minimal interaction between the user and the device, so measures that demand more active user engagement may be affected even more. If a substantial number of participants cannot fully comply with the sensors' use, the collection and validation of data will be at risk. A prudent strategy may be to introduce these devices in studies of populations with a sufficient command of smart technology, while advocating for the wider promotion of digital literacy.
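Device comparisons like those cited above can be expressed as the error of each wearable's step count relative to a reference count, such as manually counted steps. The sketch below shows one common way to do this: it computes the mean absolute percentage error (MAPE) for each age group. The record layout, the `age_group` split, and the input values are illustrative assumptions. The numbers are made up solely to show the calculation and are not results from the cited studies.

```python
from collections import defaultdict

def mape_by_group(records, group_of):
    """Mean absolute percentage error of device step counts, per group.

    `records` is an iterable of (age, device_steps, reference_steps) tuples;
    `group_of` maps an age to a group label. Both are illustrative assumptions.
    """
    errors = defaultdict(list)
    for age, device, reference in records:
        if reference <= 0:
            continue  # percentage error is undefined without reference steps
        errors[group_of(age)].append(abs(device - reference) * 100 / reference)
    return {group: sum(vals) / len(vals) for group, vals in errors.items()}

def age_group(age):
    # Hypothetical split mirroring the 18- to 39-year-old band in the text.
    return "18-39" if 18 <= age <= 39 else "40+"

# Made-up records: (age, wearable steps, manually counted steps).
trial = [(25, 1010, 1000), (33, 980, 1000),
         (68, 850, 1000), (74, 1150, 1000)]
print(mape_by_group(trial, age_group))
```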

Access to data is a second serious concern. This is a rather technical issue that resonates with the previous point. The source and lineage of the data (data provenance) are usually documented through the manufacturer's server. Tracing the specific device from which the data come is quite difficult and can only be resolved if each user is registered with a personal account on the manufacturer's server [43]. Without dedicated software, data from most wearable devices are difficult to collect, let alone standardize. The need for such software increases the cost of these devices and makes most smart devices that patients already own ineligible. Training patients to register themselves and to use unfamiliar devices (rather than a new application installed on their smartphone) is a considerable burden, and the regulatory consequences of such steps must also be taken into account [38].
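The provenance problem described above comes down to tracing every sample back to a device and a registered account. The following is a minimal sketch under that framing. It attaches the lineage to each sample and computes a content hash so that later alterations can be detected. The `ProvenanceRecord` layout, its field names, and the `unregistered` check are hypothetical. They are not taken from any manufacturer's API.

```python
import hashlib
import json
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class ProvenanceRecord:
    """Lineage metadata for one sensor sample (hypothetical layout)."""
    participant_id: str   # pseudonymous study ID, not the manufacturer account
    device_id: str        # serial or hardware ID of the wearable
    account_id: str       # account registered on the manufacturer's server
    firmware: str         # firmware version at collection time
    timestamp_utc: str    # ISO 8601 collection time
    measure: str          # e.g. "steps"
    value: float

    def digest(self) -> str:
        # Hash a canonical JSON form so any later change to the record is detectable.
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def unregistered(records, registry):
    """Return records whose device is not linked to the expected account.

    `registry` maps device_id -> account_id, as a study might record when
    enrolling each participant's device (an assumption for illustration).
    """
    return [r for r in records if registry.get(r.device_id) != r.account_id]

rec = ProvenanceRecord("P001", "WB-123", "acct-9", "1.4.2",
                       "2024-01-01T08:00:00Z", "steps", 5210.0)
print(rec.digest())
print(unregistered([rec], {"WB-123": "acct-9"}))  # prints []
```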

4.6.1 Policy Implications and Recommendations

The regulatory infrastructure for smart activity sensors is complicated by the disparity of legislation around the globe. In some cases, such legislation is not sufficiently developed. In other cases, for instance in Europe, which is often regarded as the birthplace of data protection, personal data regulations may be strict enough to pose serious obstacles to implementing such studies. The 2014 EU Clinical Trials Regulation (Regulation (EU) No 536/2014) has no specific provisions for smart health monitoring [45], while the requirements of smart sensors may conflict with the legislation's provisions for the ethical approval of clinical trials in Europe [46]. Similar concerns are likely to arise on other continents. Although this chapter cannot address every potential scenario, it is reasonable to expect that international or intercontinental multicenter studies relying on smart activity sensors may face considerable obstacles.

4.7 Discussion

Future developments in smartphones and biosensors are expected to expand the wearables market, enabling researchers to enhance wearable frameworks for many applications. Such innovations would, in turn, encourage the use of wearables for health monitoring both clinically and at home. Continuous and accurate remote monitoring of vital medical conditions in older people during everyday activities is an essential task. HAR using wearables to identify abnormal conditions, for example, falls, is a novel trend that could improve health systems. This chapter highlighted a smart HAR system using real-time biosensors to show the usability of such systems in recognizing activities. The system was illustrated through applications in which smartphone and biosensor data could be sent to a remote server at a specific frequency and analyzed in real time. The system demonstrated practicability, precision, and efficacy in recognizing activity. Innovations in biosensor and smartphone technologies could provide critical solutions for improving the quality of healthcare for patients with many disorders. This chapter supports adopting a digital, efficient, and adaptable HAR system that detects serious medical conditions in real time via smartphones and biosensors, applying ML techniques to the gathered and stored data to evaluate outcomes. HAR data recorded by biosensors might show greater precision than those recorded by smartphones.
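The processing described above can be sketched as a sliding-window pipeline. In this pipeline, sensor data arrive at a fixed sampling frequency and are analyzed as they come in. The minimal example below makes several assumptions:

- a 50 Hz triaxial accelerometer stream in units of g;
- two-second, non-overlapping windows;
- simple magnitude features (mean and standard deviation);
- a nearest-centroid classifier for labeling each window.

It also flags a possible fall when a high-impact peak is followed by near-stillness. The sampling rate, window length, thresholds, and centroid values are all illustrative assumptions. They are not parameters of the system discussed in this chapter, and a real deployment would learn the classifier from labeled data.

```python
import math
import statistics

FS_HZ = 50            # assumed sampling frequency
WINDOW = 2 * FS_HZ    # 2-second windows (assumed)

# Hypothetical per-activity centroids of (mean, std) of magnitude in g;
# in practice these would be estimated from labeled training windows.
CENTROIDS = {"resting": (1.0, 0.02), "walking": (1.05, 0.25), "running": (1.2, 0.6)}

def magnitudes(samples):
    """Convert (x, y, z) accelerometer samples to vector magnitudes."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]

def features(window):
    mags = magnitudes(window)
    return statistics.fmean(mags), statistics.pstdev(mags)

def classify(feat):
    # Nearest centroid in Euclidean feature space.
    return min(CENTROIDS, key=lambda label: math.dist(feat, CENTROIDS[label]))

def possible_fall(window, impact_g=2.5, still_std=0.05):
    """Flag a peak above `impact_g` followed by near-stillness (assumed thresholds)."""
    mags = magnitudes(window)
    peak = max(range(len(mags)), key=mags.__getitem__)
    after = mags[peak + 1:]
    return mags[peak] > impact_g and len(after) > 1 and statistics.pstdev(after) < still_std

def process(stream):
    """Yield (activity label, fall flag) for each complete, non-overlapping window."""
    for start in range(0, len(stream) - WINDOW + 1, WINDOW):
        window = stream[start:start + WINDOW]
        yield classify(features(window)), possible_fall(window)

# Usage with synthetic data: a still window, then a spike followed by stillness.
still = [(0.0, 0.0, 1.0)] * WINDOW
fall = [(0.0, 0.0, 1.0)] * 10 + [(0.0, 0.0, 3.0)] + [(0.0, 0.0, 1.0)] * (WINDOW - 11)
results = list(process(still + fall))
for label, fall_flag in results:
    print(label, fall_flag)
```

Keeping fall detection as a separate rule-based check, rather than as another classifier label, is one design option. It lets a safety-critical alert fire even when the coarse activity features are ambiguous.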

4.8 Conclusion

As wearables and smartphones become more widespread, HAR has attracted many scientists seeking to detect human behavior digitally and provide validated data, for instance, in smart home settings, healthcare monitoring applications, emergency services, and transportation services. Recognizing activities from biosensor data remains quite challenging for the various ML techniques; hence, it is vital to explore these techniques thoroughly. It is also important to extend HAR to other activities, such as biking or sleeping, and to discover further features that could capture human-to-human communication and interpersonal interactions. However, despite advances in health information technology, ML, and data mining, human behavior is still often spontaneous, and a person might perform multiple or unrelated activities at once and at varying speeds. Therefore, the next generation of HAR should be developed to identify such concurrent activities and to handle uncertainty, achieving better precision and enhancing healthcare performance, quality, and safety.

References

1. Visvizi, A., Jussila, J., Lytras, M.D., Ijäs, M., Tweeting and mining OECD-related microcontent in the post-truth era: A cloud-based app. Comput. Hum. Behav., 107, 105958, 2020, https://doi.org/10.1016/j.chb.2019.03.022.

2. Mshali, H., Lemlouma, T., Moloney, M., Magoni, D., A survey on health monitoring systems for health smart homes. Int. J. Ind. Ergon., 66, 26–56, 2018, https://doi.org/10.1016/j.ergon.2018.02.002.

3. Subasi, A., Radhwan, M., Kurdi, R., Khateeb, K., IoT based mobile healthcare system for human activity recognition. 2018 15th Learn. Technol. Conf. L&T, pp. 29–34, 2018, https://doi.org/10.1109/LT.2018.8368507.

4. Mahdavinejad, M.S., Rezvan, M., Barekatain, M., Adibi, P., Barnaghi, P., Sheth, A.P., Machine learning for internet of things data analysis: a survey. Digit. Commun. Netw., 4, 161–75, 2018, https://doi.org/10.1016/j.dcan.2017.10.002.

5. Gope, P. and Hwang, T., BSN-Care: A Secure IoT-based Modern Healthcare System Using Body Sensor Network. IEEE Sens. J., 16, 1–1, 2016.

6. Avci, A., Bosch, S., Marin-Perianu, M., Marin-Perianu, R., Havinga, P., Activity Recognition Using Inertial Sensing for Healthcare, Wellbeing and Sports Applications: A Survey. 23rd Int. Conf. Archit. Comput. Syst. 2010, pp. 1–10, 2010.

7. Clarkson, B.P., Life patterns: structure from wearable sensors, Thesis. Massachusetts Institute of Technology, Massachusetts, 2002.

8. Reyes-Ortiz, J.-L., Oneto, L., Samà, A., Parra, X., Anguita, D., Transition-Aware Human Activity Recognition Using Smartphones. Neurocomputing, 171, 754–67, 2016, https://doi.org/10.1016/j.neucom.2015.07.085.

9. Majumder, S., Aghayi, E., Noferesti, M., Memarzadeh-Tehran, H., Mondal, T., Pang, Z. et al., Smart Homes for Elderly Healthcare-Recent Advances and Research Challenges. Sensors, 17, 2496, 2017, https://doi.org/10.3390/s17112496.

10. Liu, L., Peng, Y., Liu, M., Huang, Z., Sensor-based human activity recognition system with a multilayered model using time series shapelets. Knowl.-Based Syst., 90, 138–52, 2015, https://doi.org/10.1016/j.knosys.2015.09.024.

11. Xu, Y., Shen, Z., Zhang, X., Gao, Y., Deng, S., Wang, Y. et al., Learning multilevel features for sensor-based human action recognition. Pervasive Mob. Comput., 40, 324–38, 2017, https://doi.org/10.1016/j.pmcj.2017.07.001.

12. Wang, Y., Fadhil, A., Lange, J.P., Reiterer, H., Towards a holistic approach to designing theory-based mobile health interventions. arXiv preprint arXiv:1712.02548, 2017.

13. Hassan, M.M., Uddin, M.Z., Mohamed, A., Almogren, A., A robust human activity recognition system using smartphone sensors and deep learning. Future Gener. Comput. Syst., 81, 307–13, 2018, https://doi.org/10.1016/j.future.2017.11.029.

14. Liu, Y., Nie, L., Liu, L., Rosenblum, D.S., From action to activity: Sensor-based activity recognition. Neurocomputing, 181, 108–15, 2016, https://doi.org/10.1016/j.neucom.2015.08.096.

15. He, H., Tan, Y., Zhang, W., A wavelet tensor fuzzy clustering scheme for multi-sensor human activity recognition. Eng. Appl. Artif. Intell., 70, 109–22, 2018, https://doi.org/10.1016/j.engappai.2018.01.004.

16. Mathie, M.J., Coster, A.C.F., Lovell, N.H., Celler, B.G., Detection of daily physical activities using a triaxial accelerometer. Med. Biol. Eng. Comput., 41, 296–301, 2003, https://doi.org/10.1007/BF02348434.

17. Bao, L. and Intille, S.S., Activity Recognition from User-Annotated Acceleration Data, in: Pervasive Comput., A. Ferscha and F. Mattern (Eds.), pp. 1–17, https://doi.org/10.1007/978-3-540-24646-6_1, Springer, Berlin, Heidelberg, 2004.

18. Kwapisz, J.R., Weiss, G.M., Moore, S.A., Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. Newsl., 12, 74–82, 2011, https://doi.org/10.1145/1964897.1964918.

19. Lukowicz, P., Ward, J.A., Junker, H., Stäger, M., Tröster, G., Atrash, A. et al., Recognizing Workshop Activity Using Body Worn Microphones and Accelerometers, in: Pervasive Comput., A. Ferscha and F. Mattern (Eds.), pp. 18–32, https://doi.org/10.1007/978-3-540-24646-6_2, Springer, Berlin, Heidelberg, 2004.

20. Leonardis, G.D., Rosati, S., Balestra, G., Agostini, V., Panero, E., Gastaldi, L. et al., Human Activity Recognition by Wearable Sensors: Comparison of different classifiers for real-time applications. 2018 IEEE Int. Symp. Med. Meas. Appl. MeMeA, pp. 1–6, 2018, https://doi.org/10.1109/MeMeA.2018.8438750.

21. Fleischer, C., Reinicke, C., Hommel, G., Predicting the intended motion with EMG signals for an exoskeleton orthosis controller. 2005 IEEE/RSJ Int. Conf. Intell. Robots Syst., 2005, https://doi.org/10.1109/IROS.2005.1545504.

22. Rowe, P.J., Myles, C.M., Walker, C., Nutton, R., Knee joint kinematics in gait and other functional activities measured using flexible electrogoniometry: how much knee motion is sufficient for normal daily life? Gait Posture, 12, 143–55, 2000, https://doi.org/10.1016/s0966-6362(00)00060-6.

23. Sutherland, D.H., The evolution of clinical gait analysis. Part II kinematics. Gait Posture, 16, 159–79, 2002, https://doi.org/10.1016/s0966-6362(02)00004-8.

24. Bayat, A., Pomplun, M., Tran, D.A., A Study on Human Activity Recognition Using Accelerometer Data from Smartphones. Proc. Comput. Sci., 34, 450–7, 2014, https://doi.org/10.1016/j.procs.2014.07.009.

25. Attal, F., Mohammed, S., Dedabrishvili, M., Chamroukhi, F., Oukhellou, L., Amirat, Y., Physical Human Activity Recognition Using Wearable Sensors. Sensors, 15, 31314–38, 2015, https://doi.org/10.3390/s151229858.

26. Ronao, C.A. and Cho, S.-B., Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl., 59, 235–44, 2016, https://doi.org/10.1016/j.eswa.2016.04.032.

27. Kozina, S., Gjoreski, H., Gams, M., Luštrek, M., Efficient Activity Recognition and Fall Detection Using Accelerometers, in: Eval. AAL Syst. Compet. Benchmarking, J.A. Botía, J.A. Álvarez-García, K. Fujinami, P. Barsocchi, T. Riedel (Eds.), pp. 13–23, https://doi.org/10.1007/978-3-642-41043-7_2, Springer, Berlin, Heidelberg, 2013.

28. Junker, H., Amft, O., Lukowicz, P., Tröster, G., Gesture spotting with body-worn inertial sensors to detect user activities. Pattern Recognit., 41, 2010–24, 2008, https://doi.org/10.1016/j.patcog.2007.11.016.

29. Shoaib, M., Bosch, S., Incel, O.D., Scholten, H., Havinga, P.J.M., Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors, 16, 426, 2016, https://doi.org/10.3390/s16040426.

30. De, D., Bharti, P., Das, S.K., Chellappan, S., Multimodal Wearable Sensing for Fine-Grained Activity Recognition in Healthcare. IEEE Internet Comput., 19, 26–35, 2015, https://doi.org/10.1109/MIC.2015.72.

31. Paul, S.S., Tiedemann, A., Hassett, L.M., Ramsay, E., Kirkham, C., Chagpar, S. et al., Validity of the Fitbit activity tracker for measuring steps in community-dwelling older adults. BMJ Open Sport Exerc. Med., 1, e000013, 2015, https://doi.org/10.1136/bmjsem-2015-000013.

32. Fritz, T., Huang, E.M., Murphy, G.C., Zimmermann, T., Persuasive technology in the real world: a study of long-term use of activity sensing devices for fitness. Proc. SIGCHI Conf. Hum. Factors Comput. Syst., Association for Computing Machinery, New York, NY, USA, pp. 487–496, 2014, https://doi.org/10.1145/2556288.2557383.

33. Han, J., Shao, L., Xu, D., Shotton, J., Enhanced Computer Vision With Microsoft Kinect Sensor: A Review. IEEE Trans. Cybern., 43, 1318–34, 2013, https://doi.org/10.1109/TCYB.2013.2265378.

34. Boas, Y.A., Overview of virtual reality technologies. Interactive Multimedia Conference, vol. 2013, p. 4, 2013.

35. Deutsch, J.E., Brettler, A., Smith, C., Welsh, J., John, R., Guarrera-Bowlby, P. et al., Nintendo wii sports and wii fit game analysis, validation, and application to stroke rehabilitation. Top. Stroke Rehabil., 18, 701–19, 2011, https://doi.org/10.1310/tsr1806-701.

36. Coulston, A.M., Boushey, C.J., Ferruzzi, M., Delahanty, L. (Eds.), Nutrition in the Prevention and Treatment of Disease, 4th edition, Academic Press, London; San Diego, CA, 2017.

37. Hong, Y.-J., Kim, I.-J., Ahn, S.C., Kim, H.-G., Mobile health monitoring system based on activity recognition using accelerometer. Simul. Model. Pract. Theory, 18, 446–55, 2010, https://doi.org/10.1016/j.simpat.2009.09.002.

38. Dicker, A.P. and Jim, H.S.L., Intersection of Digital Health and Oncology. JCO Clin. Cancer Inform., 2, 1–4, 2018, https://doi.org/10.1200/CCI.18.00070.

39. Galsky, M.D., Grande, E., Davis, I.D., De Santis, M., Arranz Arija, J.A., Kikuchi, E. et al., IMvigor130: A randomized, phase III study evaluating first-line (1L) atezolizumab (atezo) as monotherapy and in combination with platinum-based chemotherapy (chemo) in patients (pts) with locally advanced or metastatic urothelial carcinoma (mUC). J. Clin. Oncol., 36, TPS4589-TPS4589, 2018, https://doi.org/10.1200/JCO.2018.36.15_suppl.TPS4589.

40. Bai, J., Sun, Y., Schrack, J.A., Crainiceanu, C.M., Wang, M.-C., A two-stage model for wearable device data. Biometrics, 74, 744–52, 2018, https://doi.org/10.1111/biom.12781.

41. Cox, S.M., Lane, A., Volchenboum, S.L., Use of Wearable, Mobile, and Sensor Technology in Cancer Clinical Trials. JCO Clin. Cancer Inform., 2, 1–11, 2018, https://doi.org/10.1200/CCI.17.00147.

42. Estrin, D. and Sim, I., Open mHealth Architecture: An Engine for Health Care Innovation. Science, 330, 759–60, 2010, https://doi.org/10.1126/science.1196187.

43. Case, M.A., Burwick, H.A., Volpp, K.G., Patel, M.S., Accuracy of Smartphone Applications and Wearable Devices for Tracking Physical Activity Data. JAMA, 313, 625, 2015, https://doi.org/10.1001/jama.2014.17841.

44. Miorandi, D., Sicari, S., De Pellegrini, F., Chlamtac, I., Internet of things: Vision, applications and research challenges. Ad Hoc Netw., 10, 1497–516, 2012, https://doi.org/10.1016/j.adhoc.2012.02.016.

45. Petrini, C., Regulation (EU) No 536/2014 on clinical trials on medicinal products for human use: an overview. Ann. Ist. Super. Sanità, 50, 317–21, 2014.

46. Tsimberidou, A.M., Ringborg, U., Schilsky, R.L., Strategies to overcome clinical, regulatory, and financial challenges in the implementation of personalized medicine. Am. Soc. Clin. Oncol. Educ. Book, 33, 118–25, 2013.

* Corresponding author