5

Use of Assistive Techniques for the Visually Impaired People

Anuja Jadhav3*, Hirkani Padwad1, M.B. Chandak2 and Roshani Raut3

1Bajaj Institute of Technology, Wardha, Maharashtra, India

2Ramdeobaba College of Engineering and Management, Nagpur, Maharashtra, India

3Pimpri Chinchwad College of Engineering, Pune, Maharashtra, India

Abstract

Knowledge acquisition is not a simple task for blind people. Apart from sounds and voices, Braille is the most popular information transfer method used by blind people. Numerous types of Braille have been created, including American Literary Braille, British Braille, computer Braille, literary Braille, music Braille, and writing Braille. The conventional tools for writing Braille are the slate and stylus. Other Braille writers and assistive computer technologies have also been developed, such as voice recognition software, special computer keyboards, optical scanners, and radio frequency identification (RFID) based Braille character identification. A range of smart education solutions for visually impaired people have been created, e.g., TripleTalk USB Mini Speech Synthesizer, BrailleNote Apex, TypeAbility Typing Instruction, Virtual Pencil Math Software, GeoSafari Talking Globe, IVEO Hands On Learning System, VoiceOver, BrailleTouch, List Recorder, Audible, Audio Exam Player, and Educational Chatbot, to name a few.

Keywords: Blind, Braille, learning system, visually impaired, AI, machine learning

5.1 Introduction

According to the International Agency for the Prevention of Blindness (IAPB), out of a global population of 7.3 billion people, 253 million (about 3.5%) are visually impaired, with 36 million being blind and 217 million having moderate (20/60) to severe (20/200) vision impairment. Cataract, macular degeneration, glaucoma, and trachoma are among the most common causes of blindness worldwide, and the bulk of these issues can be resolved. The aim of the World Health Organization initiative “Vision 2020” is to decrease blindness worldwide. Over the last century, several efforts have been made to improve the living experience of visually impaired people. Rehabilitation engineering is being used extensively for the assistance and recovery of blind people. The objectives of visual rehabilitation are to enable visually impaired persons to carry out as many daily activities as possible independently or with minimal aid, while maintaining their security and safety both when alone and in social interactions. The major areas in this field include vision restorative technology, assistive technology, and enhancement technology. The emergence of artificial intelligence (AI) and machine learning (ML) has revolutionized the technological advancement towards providing independence to blind people in activities including, but not limited to, routine chores, travel, safety, and education. This chapter provides the reader with an overview of this area in terms of its history, main concepts, major activities, and some of the current research and development projects.

People have been developing instruments to assist visually disabled and blind people for a long time, dating back to the 13th century, when spectacles were first used, followed by the invention of concave lenses. A breakthrough came in 1829, when Louis Braille published the first book of Braille code, a system of cells of six raised or un-raised dots that blind people or people with low vision can read by touch. This was accompanied by several other significant inventions, such as the blind writing machine and the white cane, which is used by blind people as a navigational aid.
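The six-dot cell lends itself to a compact representation. The following is a minimal sketch, assuming uncontracted letter patterns and the Unicode Braille Patterns block, which starts at U+2800 and assigns dots 1 to 6 to the six lowest bits. The function names are illustrative and do not come from any particular Braille software.

```python
# Dots 1-3 run down the left column of a cell, dots 4-6 down the right column.
FIRST_DECADE = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4), "j": (2, 4, 5),
}


def letter_dots(letter):
    """Return the raised dots for one lowercase letter (uncontracted Braille)."""
    if len(letter) != 1 or not ("a" <= letter <= "z"):
        raise ValueError(f"unsupported character: {letter!r}")
    if letter in FIRST_DECADE:
        return FIRST_DECADE[letter]
    if letter in "klmnopqrst":  # second decade: first decade plus dot 3
        base = "abcdefghij"["klmnopqrst".index(letter)]
        return FIRST_DECADE[base] + (3,)
    if letter == "w":  # w is formed from j plus dot 6
        return FIRST_DECADE["j"] + (6,)
    base = "abcde"["uvxyz".index(letter)]  # remaining letters: plus dots 3 and 6
    return FIRST_DECADE[base] + (3, 6)


def to_braille(text):
    """Convert lowercase letters and spaces to Unicode Braille cells."""
    cells = []
    for ch in text:
        if ch == " ":
            cells.append(chr(0x2800))  # blank cell, no dots raised
        else:
            mask = sum(1 << (dot - 1) for dot in letter_dots(ch))
            cells.append(chr(0x2800 + mask))
    return "".join(cells)
```

A refreshable Braille display or an embosser driver could consume the same dot tuples directly; the Unicode mapping is used here only so that the result can be printed.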

The first development of an electronic aid for blind people took place in early 2000, when the first mobile phone for visually impaired people was released. Many electronic aids were invented thereafter, such as alternative input devices and voice recognition software, which allow individuals to use substitutes for the keyboard and mouse. In recent years, the first digital Brailler was developed, which allows people with visual impairments to “see” the digital world. The development of autonomous vehicles is in progress and is going to be a revolutionary step in the independence of blind people. AI and its subfields, along with the Internet of Things (IoT), have unlocked a large number of possibilities for blind people, providing a completely different way of experiencing the world much as sighted people do. Research and development on computer-aided diagnosis and treatment of visual impairment has gained momentum, and there is hope for more advancement in the coming years. Many AI-based applications have been developed for computer-aided diagnostics, and efforts are being made to develop machine learning-based predictive models for better diagnosis of the causes of visual impairment. Some of the solutions are smart televisions, smart radios, smartphones, navigation systems, and control of household devices; computer vision methods such as visual question answering, person and product identification, emotion detection, event detection, surrounding environment detection, and safety alerts; AI-based lens technology; mobility assistance for the visually impaired; speech and text technology for the simplest of tasks, like reading menus at a restaurant; and assistive interfaces.

5.2 Rehabilitation Procedure

This section gives an overview of the different rehabilitation procedures.

Vision rehabilitation is a professional field that is effective and is generally practiced as a multidisciplinary rehabilitation program.

Sensory aids for obstacle detection, such as the laser cane and ultrasonic echolocating devices, and vision assistive devices for people with low vision, such as tele lenses, TV magnifiers, augmented vision field expansion devices, hybrid vision expansion devices, and vision multiplexing devices [1], have been developed [2].

A sensor, a coupling mechanism, and a stimulator are the three components of a sensory substitution system. The sensor captures stimuli and sends them to a coupling device, which interprets them and sends them to a stimulator. Paul Bach-y-Rita’s Tactile Vision Substitution System (TVSS) [3], which transformed the image from a video camera into a tactile image and coupled it to the tactile receptors on the back of a blind person, is the oldest and best-known sensory substitution device. Several new systems have recently been developed that interface the tactile image with tactile receptors on various parts of the body, including the chest, brow, and fingers. “BlindSight,” a sensory substitution technology, uses outputs such as musical tones, vibrations, electrical stimulation of the tongue, and wearable haptic actuators like vibrotactile motors, among others, to assist blind people in navigation.
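The sensor, coupling, and stimulator chain can be illustrated with a small sketch. It assumes that the sensor delivers a grayscale frame as a list of rows with values from 0 to 255, that the coupling stage averages the frame over a coarse grid, and that the stimulator accepts a few discrete drive levels. The grid size, the number of levels, and the function names are hypothetical and are not taken from the TVSS or any other device.

```python
def couple(frame, rows, cols):
    """Coupling stage: average a grayscale frame over a rows x cols grid.

    Returns intensities in the range 0.0 to 1.0, one per tactile element.
    Assumes the frame height and width are multiples of rows and cols.
    """
    height, width = len(frame), len(frame[0])
    block_h, block_w = height // rows, width // cols
    if block_h == 0 or block_w == 0:
        raise ValueError("frame is smaller than the tactile grid")
    grid = []
    for r in range(rows):
        grid_row = []
        for c in range(cols):
            block = [
                frame[y][x]
                for y in range(r * block_h, (r + 1) * block_h)
                for x in range(c * block_w, (c + 1) * block_w)
            ]
            grid_row.append(sum(block) / len(block) / 255.0)
        grid.append(grid_row)
    return grid


def stimulate(grid, levels=4):
    """Stimulator stage: quantize each intensity into a discrete drive level."""
    return [[min(levels - 1, int(value * levels)) for value in row] for row in grid]


# Sensor stage (stand-in): a 4 x 4 frame that is dark on the left, bright on the right.
frame = [[0, 0, 255, 255] for _ in range(4)]
drive = stimulate(couple(frame, rows=2, cols=2))
```

In this example the right-hand tactile elements receive the highest drive level and the left-hand ones receive none, which is the kind of spatial contrast a user learns to interpret.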

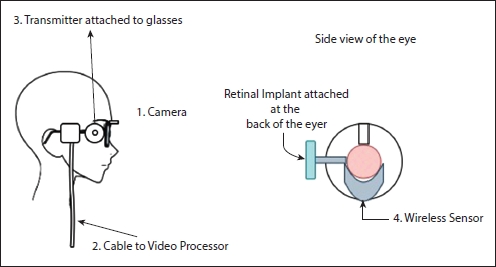

A prosthetic implant is an artificial device that replaces a missing body part and is expected to restore its functionality. The first intracranial visual prosthesis was developed and implanted in 1967 [2]. Restoring vision to the blind long seemed an impossible task, but recent technological advancements have made efforts in this direction possible. Occipital cortex stimulation, retinal implants (microelectrode arrays that stimulate residual retinal cells), subretinal implants (implanted artificial photoreceptors), epiretinal implants (microelectrodes operated by electronic circuitry), and chemical neurotransmission (the retinal network is stimulated chemically instead of electrically) are some of the visual prosthesis projects that have been undertaken [4]. The Bionic Eye is a revolutionary step towards imparting some vision to partially or completely blind persons. It is a visual device in the experimental phase that is intended to restore functional vision. It works by converting images into electrical impulses, which are then transmitted to the brain through a retinal implant connected to a video camera. It is intended for patients facing vision loss due to degeneration of photoreceptors. The use of electrical stimulation to evoke the perception of phosphenes was proposed as far back as 1924 and 1929. Medical researchers have been working to overcome blindness in people with age-related degeneration by using camera-equipped eyeglasses and projecting images directly onto the nerves of the brain’s visual cortex. Among several efforts, only one device has received approval so far, i.e., the Argus II. It is a retinal prosthesis system that comprises external and implanted components. The implanted components, an array of 60 electrodes (6 × 10) and a receiver, are surgically placed in the eye. The external components include a camera mounted on a pair of glasses, a video processing unit (VPU), and a cable.

Figure 5.1 exhibits the working of the Argus II device. The camera captures video images, which the VPU converts into stimulus commands. These commands are sent to the implant through the coil. The resulting electrical signals activate the retinal cells, which deliver the signal through the optic nerve to the brain. While no cases have been identified to date, the implant poses a risk of intraocular or orbital infection. Performance in tasks like pattern recognition improves as the number of phosphenes increases [5].

Figure 5.1 The working of Argus II Retinal Implant.

Issues like lifespan of implanted electrodes, biocompatibility, and electrical safety prevail and are major challenges in vision rehabilitation [2].

Researchers have been working for at least a decade to create artificial digital retinas that, when implanted, can allow blind people to see again. This ongoing research faces many obstacles, one of which is the enormous amount of heat produced by the chips as a result of the large amount of data collected by the camera; researchers are working hard to overcome it. Image processing is being used extensively for imaging of the cornea and lens, which provide non-invasive diagnostic access to important structures like the retina and optic nerve. Imaging has become a routine procedure in medical diagnosis, and as a result large datasets of images are readily available, giving a boost to AI-based research in this field. Machine learning in retinal imaging is mainly applied as a supervised learning task. Three use case scenarios have been identified: classification (e.g., assigning an image to different categories based on disease stage), segmentation (e.g., identifying and indicating the exact position of lesions), and prediction (e.g., predicting future outcomes or the value of other measures like blood sugar and blood pressure). The deep learning approach for image classification uses a convolutional neural network (CNN) model for object recognition [6]. AI has also proved to be useful in providing guidance for therapy. AI can draw knowledge from the large amount of data gathered from thousands of previous cases far more readily than even the most experienced experts. Identification of patterns in datasets through machine learning techniques aids in personalized prognosis.
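The core operation of a CNN layer can be shown in a few lines. The sketch below is a simplified illustration, not the model of [6]: it applies a single hand-picked filter, a ReLU activation, and global max pooling to a tiny grayscale image. In a trained CNN the filter weights are learned from labeled images, and many such layers are stacked before a classifier. The kernel values and function names are assumptions made for illustration.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation, the operation computed by a convolutional layer."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(k_h)
                for b in range(k_w)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, value) for value in row] for row in feature_map]


def global_max(feature_map):
    """Summarize a feature map by its strongest response."""
    return max(max(row) for row in feature_map)


# Hand-picked filter that responds to a bright spot on a darker background.
SPOT_KERNEL = [
    [-1, -1, -1],
    [-1, 8, -1],
    [-1, -1, -1],
]


def spot_score(image):
    """Return a score that is high when the image contains an isolated bright spot."""
    return global_max(relu(conv2d(image, SPOT_KERNEL)))
```

A uniform image yields a score of zero, while an image with one bright pixel yields a high score. A classifier would threshold or further combine such scores.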

Optical coherence tomography (OCT) has transformed ophthalmic care [7]. It is used for diagnosis and in laser-assisted cataract surgery. Modern medicine relies on machines to perform parts of surgery, which has paved the way for automation in ophthalmic surgery. Currently, machines are used to execute corneal incisions, lens fragmentation, etc. Researchers are working to implement robotic surgery in this area. Robotic eye surgery systems include the Da Vinci robotic arm, the Intraocular Robotic Interventional Surgical System (IRISS) [8], and smart instruments that assist surgeons. Glaucoma is one of the causes of irreversible blindness. Goldmann applanation tonometry (GAT) is the standard technique used to measure intraocular pressure (IOP) [7]. This method, however, cannot be used for continuous monitoring of IOP. In recent years, tonometers based on newer technologies, such as wireless devices and implantable sensors made with microelectromechanical technology, have been developed that allow continuous monitoring of IOP. Smartphone-based imaging can be used for remote consultation and is useful for people in rural areas who do not have easy access to a trained ophthalmologist. The smartphone camera can be converted into an ophthalmoscope using specially developed adapters, viz. Ocular CellScope, PeekVision, D-Eye, etc. Similarly, technology has been developed for imaging of the cornea and keratoconus, refractive surgery, vitreoretinal disorders, ocular oncology, ocular drug development, etc.

Microperimetry is a widely used test that creates a retinal sensitivity map of the amount of light perceived on the retina for people who have problems fixating on an object or light source. The test uses scanning laser or retinal camera technology together with eye tracking, which allows repeated testing and is therefore useful for monitoring.

A number of alternative approaches for vision rehabilitation have been developed, and a few, viz. gene therapy and stem cell transplants, are still under investigation.

The following table summarizes the suitable visual rehabilitation procedures for each type of blindness [2].

| Type of blindness | Pre-chiasmatic prosthesis | Cortical prosthesis | Sensory substitution | Visual aids |
| --- | --- | --- | --- | --- |
| Late due to some retinopathy | Yes | Yes | Yes | Yes |
| Late due to peripheral cause | No | Yes | Yes | Yes |
| Early during development | No | Yes | Yes | Yes |
| Early after development | No | No | Yes | Yes |

5.3 Development of Applications for Visually Impaired

The first text-to-speech product for the visually impaired was the Kurzweil Reading Machine, developed in 1976, followed by the release of the first portable device, the Kurzweil-National Federation of the Blind (KNFB) Reader, in 2006. The KNFB Reader is now available as an app. A good number of applications have been developed in recent years to provide a better living experience to blind people. Some of them are listed below.

Google’s app “Lookout” uses computer vision to assist visually impaired and blind people. Lookout operates in five modes for different types of activities, as described below.

1. Food label mode (beta version) identifies packaged food. It can also scan barcodes.

2. Scan document mode reads a whole page of text. The user takes a snapshot of a document; the app scans the text, and the screen reader reads it aloud.

3. Quick read mode skims the text and reads it aloud. It is useful for activities like sorting mail.

4. Currency mode identifies US bank notes.

5. Explore mode (beta version) gives information about objects in the surroundings. It supports a few European languages in addition to English.

Microsoft has developed the AI-based application “Seeing AI” for the iOS platform. It is made for people with visual impairment. It uses the device camera to identify objects and then describes them audibly. “Seeing AI” can describe documents, text, color, people, products, light (it generates an audible tone corresponding to the brightness of the surroundings), and handwriting. The app can describe the age, gender, and emotional state of a person viewed through the camera. It can also scan a barcode to describe a product, and it helps the user bring the barcode into focus. According to a report, the app successfully recognized people in a photograph by name. The app also recognizes currency notes for selected currencies. Some functions are performed offline, whereas a few complex functions, like describing a scene, require an Internet connection.

OrCam is a company that creates a range of personal assistive devices for visually impaired people. OrCam MyEye is a voice-activated device that attaches to glasses. It can read text from a book, a smartphone, or any other surface. It also recognizes faces. It works in real time and offline, and it helps visually impaired people live an independent life. OrCam MyReader is an AI-based device that reads newspapers, books, menus, signs, screens, and product labels. It also responds intuitively to hand gestures. OrCam Read is another product in the range, made for people with mild low vision, reading fatigue, or dyslexia. It is a handheld device that reads text from any printed surface or digital display. It can privately read the text that the user chooses.

Sharing photos on Facebook is a great way for people to communicate. Blind or visually impaired people, however, cannot enjoy the experience of viewing photos in the news feed. Facebook has therefore incorporated AI to improve their experience. To help blind people understand what is happening on the news feed, Facebook has added a feature called Automatic Alternative Text, which uses object recognition technology to generate a description of a photo, such as the items in it and whether it was taken indoors or outdoors.

Drishti (Disambiguating Real Time Insights for Supporting Human with Intelligence) is an AI-powered solution that aims to give visually impaired people a better living experience and to enhance their productivity in the workplace. It was created by Accenture under its “Tech4Good” initiative in collaboration with the National Association for the Blind in India. The app provides smartphone-based assistance using AI technologies like natural language processing and image recognition. It can report the number of people in the surroundings along with their age and gender. It can also read text from a book or any other document, identify currency notes, and identify obstacles like glass doors.

Envision is a popular smartphone app that assists blind or visually impaired people by speaking out information about the surroundings. It can read text, recognize objects, and find things and people nearby, and it helps users take public transport on their own. Recently, the use of its AI-powered software on Google Glass has also been announced, taking the experience for blind and visually impaired people to another level. It is capable of recognizing text in 60 languages and is promoted as offering very fast OCR.

Samsung has developed two solutions, viz. Good Vibes and Relumino, to help deaf-blind people and people with low vision communicate better. The Good Vibes app aids deaf-blind people. It uses Morse code to convert vibrations into text or voice and vice versa. It has two user interfaces: one that works on gestures, taps, and vibrations, and another with a standard UI for the caregiver. The Relumino app helps people with low vision see images more clearly by highlighting the image outline, magnifying and minimizing the image, adjusting color brightness and contrast, etc. The app was developed in association with the National Association for the Blind (Delhi), which helped Samsung test it.
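The conversion between text, Morse code, and vibration can be sketched as follows. This is an illustration only and does not reproduce the Good Vibes implementation: it assumes International Morse code for the letters A to Z and the conventional timing in which a dash lasts three dot units, with gaps of one, three, and seven units between symbols, letters, and words. The 100 ms unit and the function names are hypothetical.

```python
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
}
FROM_MORSE = {code: letter for letter, code in MORSE.items()}


def encode(text):
    """Text to Morse: letters separated by spaces, words by ' / '."""
    words = text.upper().split()
    return " / ".join(" ".join(MORSE[ch] for ch in word) for word in words)


def decode(morse):
    """Morse back to upper-case text."""
    return " ".join(
        "".join(FROM_MORSE[code] for code in word.split())
        for word in morse.split(" / ")
    )


def vibration_pattern(morse, unit_ms=100):
    """Morse to a list of (vibrate_ms, pause_ms) pairs."""
    pattern = []
    for word in morse.split(" / "):
        for letter in word.split():
            for symbol in letter:
                on_ms = unit_ms if symbol == "." else 3 * unit_ms
                pattern.append([on_ms, unit_ms])  # pause between symbols
            pattern[-1][1] = 3 * unit_ms  # longer pause after a letter
        if pattern:
            pattern[-1][1] = 7 * unit_ms  # longest pause after a word
    return [tuple(pair) for pair in pattern]
```

For example, `encode("SOS")` gives `... --- ...`, and the resulting pattern could be passed to a phone's vibration motor. Decoding taps back into text would additionally require classifying tap durations into dots and dashes.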

“VizWiz” is an iPhone app that helps blind people get near-real-time answers to questions about their environment. Blind people can take pictures, ask questions about those pictures, and get answers from workers at remote locations. Machine learning and image recognition now make automated replies possible, i.e., answers to the questions without any human intervention.

To assist visually impaired people in identifying groceries and other household products, Amazon has launched “Show and Tell.” With this feature, Alexa can recognize bottles, cans, and other packaged goods that are difficult to identify by touch alone.

Horus, AIServe, NavCog, Toyota’s BLAID, and eSight are a few other companies and projects that combine visual aids and AI. It is highly desirable to collaborate with blind communities and gain a better understanding of their social needs and desires in order to increase their capabilities [9].

According to a survey on the use of mobile applications by people who are visually impaired [10], visually impaired people frequently use apps specially designed for them to carry out their daily activities; they are satisfied with these apps and would like to see more apps as well as improvements to existing ones. Further refinement and testing of such apps is highly desirable.

5.4 Academic Research and Development for Assisting Visually Impaired

A good amount of research on visual rehabilitation has been undertaken in academia for many decades. Many devices for assisting blind persons have been proposed; however, not all of them reached practical realization, owing to certain limitations. A few of them are mentioned below.

The researchers in [11] suggest a wearable walking guide device for blind or visually impaired people that detects obstacles and reports them through an acoustic signal interface. The system uses a microprocessor and a PDA as controllers. Three ultrasound sensor pairs obtain information about obstacles, and this information is passed to the microprocessor, which generates an acoustic alarm signal. The system also provides the user with a guide voice through the PDA. A prototype of the system was implemented, and experiments were successful, although they showed that certain modifications were required. A similar travel aid for blind people, “NavBelt,” which uses mobile robot obstacle avoidance technology, was proposed, but it was unable to provide guidance for fast walking. “IntelliNavi” [12] is a wearable navigation assistive system for blind and visually impaired people. It uses Microsoft Kinect’s on-board depth sensor, together with Speeded-Up Robust Features (SURF) and a Bag-of-Visual-Words (BOVW) model to extract features. A machine learning model, viz. a support vector machine classifier, is used to classify scene objects and obstacles. Other devices include “GuideCane” [13], a system with electronic, mechanical, and software components that steers around an obstacle when it senses one; the electronic mobility cane (EMC) [14], which constructs a logical map of the surroundings to gather priority information and also provides features like staircase detection and a non-formal distance scaling scheme; and RecognizeCane, Tom Pouce, Minitact, Ultracane, and K-sonar cane. Recently, higher-precision machine learning methods have come into use for object detection and obstacle detection in navigation.
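Ultrasonic obstacle detection of the kind used in such walking guides rests on a simple time-of-flight calculation: the distance to an obstacle is half the round-trip echo time multiplied by the speed of sound. The sketch below is a generic illustration under stated assumptions and is not the design of [11]: the sensor names, the 1.5 m alarm distance, and the function names are hypothetical, and the speed of sound is taken as about 343 m/s in air at room temperature.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 degrees Celsius


def echo_to_distance(echo_time_s):
    """Convert a round-trip echo time in seconds to a one-way distance in meters."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def nearest_obstacle(echo_times, alarm_distance_m=1.5):
    """Return (sensor_name, distance_m) for the closest obstacle within range.

    echo_times maps a sensor name to its round-trip time in seconds, or to
    None when that sensor received no echo. Returns None when nothing is
    closer than alarm_distance_m.
    """
    distances = {
        name: echo_to_distance(t) for name, t in echo_times.items() if t is not None
    }
    close = {name: d for name, d in distances.items() if d <= alarm_distance_m}
    if not close:
        return None
    name = min(close, key=close.get)
    return name, close[name]


# Three sensor pairs, as in a left / center / right arrangement.
reading = {"left": 0.020, "center": 0.005, "right": None}
alarm = nearest_obstacle(reading)
```

With these readings the center sensor reports an obstacle at roughly 0.86 m, so an acoustic alarm for the center direction would be raised, while the left obstacle at about 3.4 m is ignored.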

Slide Rule [15] is an audio-based multi-touch interaction technique that allows blind users to access touchscreen applications. The application uses different types of touch gestures, like a one-finger scan, a two-finger tap, or a flick gesture, to perform a variety of tasks like browsing a list, selecting an item, or flipping through songs. A few works in the literature, like [10], present the needs and expectations of the visually impaired community with regard to today’s technological developments.

In [16], the authors propose a computer program to assist blind people who are struggling with music and music notation. The information acquisition module is responsible for understanding printed music notation and storing the data in the computer’s memory. Efforts to implement optical music recognition using artificial neural networks (ANN) [17] have been under way for a few decades, but practical realization is still in progress. The work in [18] introduces a new CAPTCHA method that can be used by blind people. Predefined patterns are used to create a mathematical problem, which is then converted to speech and can be answered by a blind user.
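The idea of building a spoken CAPTCHA from predefined patterns can be sketched as follows. The patterns, number ranges, and function names below are hypothetical and are not taken from [18]; the text-to-speech step is left out, on the assumption that the generated question would be handed to any speech synthesizer.

```python
import random

# Hypothetical question patterns, each paired with the function that solves it.
PATTERNS = [
    ("What is {a} plus {b}?", lambda a, b: a + b),
    ("What is {a} minus {b}?", lambda a, b: a - b),
    ("What is {a} times {b}?", lambda a, b: a * b),
]


def make_challenge(rng):
    """Return (question_text, expected_answer) built from a random pattern."""
    template, solve = rng.choice(PATTERNS)
    a, b = rng.randint(1, 9), rng.randint(1, 9)
    if a < b:
        a, b = b, a  # keep the result of a subtraction non-negative
    return template.format(a=a, b=b), solve(a, b)


def check_answer(expected, reply):
    """Accept the reply only if it is an integer equal to the expected answer."""
    try:
        return int(reply.strip()) == expected
    except ValueError:
        return False


rng = random.Random()  # pass a seed for reproducible challenges
question, expected = make_challenge(rng)
# The question string would be sent to a speech synthesizer at this point.
```

Because the question is generated as plain text, it can be spoken to the user rather than rendered as a distorted image, which is what makes this style of CAPTCHA usable without sight.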

Electronic Travel Aids (ETA) and Electronic Orientation Aids (EOA) were invented by Rene Farcy et al. to help visually disabled people navigate in unfamiliar environments. Two devices developed by the team, an infrared proximeter and a laser telemeter, have been in use for a few years.

5.5 Conclusion

For blind people, acquiring knowledge is difficult. Apart from sounds and voices, Braille is the most popular information transfer method used by blind people. Numerous types of Braille have been created, including American Literary Braille, British Braille, computer Braille, literary Braille, music Braille, and writing Braille. The conventional tools for writing Braille are the slate and stylus. Speech recognition software, special computer keyboards, optical scanners, and RFID-based Braille character identification are examples of other Braille writers and assistive computer technologies that have been developed. A range of smart education solutions for blind and visually impaired people have been created, e.g., TripleTalk USB Mini Speech Synthesizer, BrailleNote Apex, TypeAbility Typing Instruction, Virtual Pencil Math Software, GeoSafari Talking Globe, IVEO Hands On Learning System, VoiceOver, BrailleTouch, List Recorder, Audible, Audio Exam Player, Educational Chatbot [19], and an RFID- and ontology-based Chinese Braille learning system [20], to name a few.

AI methods such as deep learning, machine learning, and computer vision continue to be adapted and tested, so there is hope that the life of blind people will be far easier in the future.

References

1. Peli, E., Vision Multiplexing: an Engineering Approach to Vision Rehabilitation Device Development. Optom. Vis. Sci., 78, 5, 304–315, May 2001.

2. Veraart, C., Duret, F., Brelén, M., Oozeer, M., Delbeke, J., Vision rehabilitation in the case of blindness. Expert Rev. Med. Devices, 1, 1, 139–153, 2004.

3. Bach-y-Rita, P., Collins, C.C., Saunders, F., White, B., Scadden, Vision Substitution by Tactile Image Projection. Nature, 221, 963–964, https://doi.org/10.1038/221963a0, 1969.

4. Weiland, J.D. and Humayun, M.S., Retinal Prosthesis. IEEE Trans. Biomed. Eng., 61, 5, 1412–1424, May 2014.

5. Brelén, M.E., Duret, F., Gérard, B., Delbeke, J., Veraart, C., Creating a meaningful visual perception in blind volunteers by optic nerve stimulation. J. Neural Eng., 2, 22, 2005.

6. Schmidt-Erfurth, U., Sadeghipour, A., Gerendas, B.S., Waldstein, S.M., Bogunović, H., Artificial intelligence in Retina. Prog. Retin. Eye Res., 67, 1–29, https://doi.org/10.1016/j.preteyeres.2018.07.004, 2018.

7. Ichhpujani, P. (Ed.), Current Advances in Ophthalmic Technology, in: Current Practices in Ophthalmology, pp. 1–4, 69, 84, Springer, https://doi.org/10.1007/978-981-13-9795-0, 2020.

8. Chen, C.W., Lee, Y.H., Gerber, M.J., Cheng, H. et al., Intraocular robotic interventional surgical system (IRISS): semi-automated OCT-guided cataract removal. Int. J. Med. Robot. Comput. Assist. Surg., 14 (6): pp. 1–14, 2018.

9. Morrison, C., Cutrell, E., Dhareshwar, A., Doherty, K., Thieme, A., Taylor, A., Imagining Artificial Intelligence Applications with People with Visual Disabilities using Tactile Ideation, in: Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 81–90, 10.1145/3132525.3132530, 2017.

10. Griffin-Shirley, N., Banda, D.R., Ajuwon, P.M., Cheon, J., Lee, J., Park, H.R., Lyngdoh, S.N., A Survey on the Use of Mobile Applications for People Who Are Visually Impaired. J. Vis. Impair. Blind., 111, 307–323, July-August 2017.

11. Kim, C.-G. et al., Design of a wearable walking-guide system for the Blind. ACM, 2007.

12. Bhowmick, A., Prakash, S., Bhagat, R., Prasad, V., Hazarika, S., IntelliNavi: Navigation for Blind Based on Kinect and Machine Learning, in: Multidisciplinary Trends in Artificial Intelligence, MIWAI ‘14, vol. 8875, pp. 172–183, 10.1007/978-3-319-13365-2_16, 2014.

13. Shoval, S., Ulrich, I., Borenstein, J., Computerized Obstacle Avoidance Systems for the Blind and Visually Impaired. J. Logic Comput. - LOGCOM, ch14, 10.1201/9781420042122, 2001.

14. Bhatlawande, S., Mahadevappa, M., Mukherjee, J., Biswas, M., Das, D., Gupta, S., Design, Development and Clinical Evaluation of the Electronic Mobility Cane for Vision Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng., 22(6), 1148–1159, 10.1109/TNSRE.2014.2324974, 2014.

15. Kane, S., Bigham, J., Wobbrock, J., Slide Rule: Making Mobile Touch Screens Accessible to Blind people using Multi-Touch Interaction Techniques. ASSETS’08: The 10th International ACM SIGACCESS Conference on Computers and Accessibility, pp. 73–80, 10.1145/1414471.1414487, 2008.

16. Homenda, W., Breaking Accessibility Barriers - Computational Intelligence in Music Processing for Blind People, in: Proceedings of the Fourth International Conference on Informatics in Control, Automation and Robotics, vol. 1, ICINCO, pp. 32–39, 2007.

17. Macukow, B. and Homenda, W., Methods of Artificial Intelligence in Blind People Education. International Conference on Artificial Intelligence and Soft Computing, Springer-Verlag Berlin Heidelberg, pp. 1179–1188, 2006.

18. Shirali-Shahreza, M. and Shirali-Shahreza, S., CAPTCHA for Blind People. IEEE International Symposium on Signal Processing and Information Technology, 978-1-4244-1835-0/07, 2007.

19. Naveen Kumar, M., Linga Chandar, P.C., Prasad, V., Android Based Educational Chatbot for Visually Impaired People. IEEE International Conference on Computational Intelligence and Computing Research, 10.1109/ICCIC.2016.7919664, 2016.

20. Tang, J., Using ontology and RFID to develop a new Chinese Braille learning platform for blind students. Expert Syst. Appl., 40, 8, 2817–2827, https://doi.org/10.1016/j.eswa.2012.11.023, 2013.

- * Corresponding author: [email protected]