Making IT Right: Managing Goals, Time, and Costs

3.1 Before You Start: Assessing Value and Risks

Projects create new products, new services, or new capabilities. The first step of a sound management process is to understand whether the new products, services, or capabilities are worth our effort. The relevance of a project depends, in general, upon two main factors:

- The value generated by the project

- The risks associated with the project.

The meaning of value and risk, however, is not absolute and depends on the circumstances and on the project environment. For instance, a project developed for humanitarian reasons measures value in a different way from a project to launch a commercial product. The same holds for risk. As mentioned by Maylor (2010), the first projects related to the Apollo mission, although they carried considerably high risk, were critical to gaining the know-how necessary to send a man to the moon. A typical scenario in the software industry is the make-or-buy decision, namely, choosing between developing a new system and acquiring an existing one with features similar to those needed.

In the rest of this section, we look at factors and techniques to assess the value of a project. These can be used with different purposes:

- To decide whether a project is worth pursuing

- To select which project to start out of a portfolio of possible proposals

- To choose the best project plan, given a project with different plans.

3.1.1 Project Value: Aspects to Consider

Three main factors determine the value generated by a project:

- Direct and indirect value. As mentioned earlier, the value of a project does not refer necessarily and only to the revenues it generates directly and through its outputs. The social and environmental impact, image and publicity, entry into a new market, and know-how acquired are some of the considerations that could add value to or subtract value from a project.

- Sustainability. Many IT projects start without an idea or a strategy to sustain their outputs. Thus, the outputs of a project might not live long after the project and its resources end. Taking into account the operational costs of a project's outputs and the way in which they will survive after the project ends is important to understand whether a project is worth doing.

- Alignment with the strategic objectives of the organization. Ensuring that the project aligns with the goals of an organization is an essential point to consider before a project is worth starting. Alignment with the strategic objectives can determine the priority of a project. As pointed out in Maylor (2010), Toyota is a leader in defining priorities: projects are started only if they directly contribute to one of the strategic objectives of the company, namely, quality, cost, or delivery performance.

3.1.2 Project Risks: Aspects to Consider

Various factors determine the risk profile of a project. Among them are

- Resource availability. Projects require the availability of resources (human, financial, and technical) in specific time frames. Although it might be difficult to secure the required resources in advance, a check of the project's needs is a good way to verify whether a project is worth pursuing.

- Timing. Many projects have specific time windows for the delivery of their outputs. Deliver too early or too late and the outputs of the project might be useless. Consider, for instance, a project to build a rocket to reach another planet. The actual launch can occur only in a specific time frame, to take advantage of the relative position of the planets. Deliver the rocket too early, and docking and maintenance might become an issue. Deliver too late and you might lose the opportunity to launch.

- Technical difficulty or uncertainty. The success of many projects relies on the actual capability of solving various technical challenges. Pointing out what these challenges are, understanding the level of risk associated with such challenges, and possible corrective or alternative courses of action are important in determining the values and risks of a project.

- Project environment and constraints. Projects are influenced by various constraints, both internal and external. Various internal and external stakeholders will have an interest in positively or negatively influencing a project. Regulations and standards can severely limit what can be done on a project.

3.1.3 Techniques to Assess Value and Risks

Different techniques are available to assess the value and risk generated by a project. Some are based on financial considerations, while others are more qualitative. In the following, we present some of the most used techniques.

3.1.3.1 Financial Methods

3.1.3.1.1 Payback

The simplest financial evaluation is the payback method, which measures how long it will take to return a project's investment. When using the payback method, an estimation of the project expenses and incomes determines the profits and losses at the end of each project year. The payback is the year at which the project covers all expenses and starts earning. The shorter the payback, the better.

The payback method favors projects that minimize financial exposure. It has two main issues. The first is that it does not take into account total profit. The second is that it does not measure the efficiency with which the money invested in the project is paid back.

3.1.3.1.2 Return on Investment

To overcome some of the limitations of the payback method, another technique that is often employed is the return on investment (ROI), which measures how much we get back for each dollar invested. ROI is calculated from the annual profit, defined as the average profit per year and computed by dividing the total profit by the duration of the project:

$$\text{Annual profit} = \frac{\text{incomes} - \text{expenses}}{\text{project duration}} \tag{3.1}$$

The ROI is then computed by dividing the annual profit by the total project expenses:

$$\text{ROI} = \frac{\text{annual profit}}{\text{expenses}} \tag{3.2}$$

3.1.3.1.3 Net Present Value and Internal Rate of Return

Payback and ROI do not take into account the effects of inflation, namely, the fact that an amount of money in the future has a lower value than the same amount available now. (In a sense, “better an egg today than a hen tomorrow”). Thus, for longer projects, payback and ROI tend to overestimate profits, which are usually gotten toward the end of a project.

The net present value technique (or NPV for short) overcomes this issue by taking into account the inflation rate. Thus, if a reliable estimation of the inflation rate can be provided, the value of future expenses and incomes for a project can be recomputed in terms of their present value.

In particular, when using NPV, profits and losses are computed using the following formula:

$$\text{Value} = \frac{1}{(1 + r)^{i}} \cdot \text{amount} \tag{3.3}$$

where r is the inflation rate, amount is the net profit at year i, and value is the current value of amount. Note that the first project year is year 0.
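Equation 3.3 translates into a few lines of Python. The following is a minimal sketch, assuming a constant rate and one net amount per project year starting at year 0; the function names and the sample figures are illustrative and do not come from the text.

```python
from typing import Sequence


def present_value(amount: float, rate: float, year: int) -> float:
    """Discount `amount`, obtained at `year`, to its value at year 0 (Equation 3.3)."""
    return amount / (1 + rate) ** year


def net_present_value(cash_flows: Sequence[float], rate: float) -> float:
    """Sum the discounted amounts; cash_flows[i] is the net profit of year i."""
    return sum(present_value(a, rate, i) for i, a in enumerate(cash_flows))


# Hypothetical project: spend 1,000 in year 0, earn 600 in each of years 1 and 2.
flows = [-1000.0, 600.0, 600.0]
print(round(net_present_value(flows, 0.00), 2))  # profit with no discounting
print(round(net_present_value(flows, 0.10), 2))  # same flows at a 10% rate
```

With a rate of zero the result is the plain sum of the amounts; any positive rate shrinks the later incomes, which is why undiscounted methods look more optimistic for long projects.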

An even more complex method is the internal rate of return (IRR for short), which determines the rate r at which the discounted profits and losses of a project sum to zero. The interested reader can consult Burke (2006), which contains a nice discussion about financial methods.
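The text does not say how the IRR is computed. One possible approach, shown here only as a sketch, is a bisection search for the rate at which the discounted amounts sum to zero. The function names, the search interval, and the sample figures are assumptions made for illustration.

```python
def npv(cash_flows, rate):
    """Net present value of cash_flows, where cash_flows[i] is the net amount of year i."""
    return sum(a / (1 + rate) ** i for i, a in enumerate(cash_flows))


def internal_rate_of_return(cash_flows, low=0.0, high=1.0, tolerance=1e-9):
    """Bisection search for the rate in [low, high] at which the NPV is zero.

    Assumes the NPV is non-negative at `low` and non-positive at `high`,
    which holds for projects that spend first and earn later.
    """
    if npv(cash_flows, low) < 0 or npv(cash_flows, high) > 0:
        raise ValueError("the NPV does not change sign in the given interval")
    while high - low > tolerance:
        mid = (low + high) / 2
        if npv(cash_flows, mid) > 0:
            low = mid   # NPV still positive: the zero lies at a higher rate
        else:
            high = mid  # NPV zero or negative: the zero lies at a lower rate
    return (low + high) / 2


# Hypothetical project: spend 1,000 in year 0, earn 1,100 in year 1.
print(round(internal_rate_of_return([-1000.0, 1100.0]), 4))  # 0.1
```

Bisection is slow but robust; it is used here because it needs nothing beyond the NPV formula itself.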

3.1.3.1.4 Applying Financial Methods: An Example

Consider two projects, called “Project A” and “Project B,” for which we have estimated expenses and incomes as described in Table 3.1. In particular, the table shows that project A is not profitable in the first 2 years and then starts earning. Project B has a similar behavior, but both expenses and incomes are higher.

Assessing Two Projects Using Financial Methods

| | Project A | Project B |
|---|---|---|
| Year 0 | −€20,000.00 | −€30,000.00 |
| Year 1 | −€10,000.00 | −€30,000.00 |
| Year 2 | €40,000.00 | €50,000.00 |
| Year 3 | | €100,000.00 |

Suppose we have the resources to start only one of the two projects and we use a financial method to choose which project to activate.

If we use the payback method, we select Project A, since it has a shorter payback period. In fact, year 0 is forecast to end with losses for Project A, and so is year 1. At the end of year 2, however, the financial statement will show earnings of €10,000, which gives a payback of 3 years.

Project B’s payback is 4 years. Years 0 and 1 end with losses. In year 2, project B earns profits of €50,000, but these are not yet sufficient to cover the expenses of the first 2 years. In year 3, however, the project pays back the initial investment.
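The payback reasoning above amounts to a running sum of the yearly figures. The sketch below applies it to the amounts of Table 3.1; the function name is illustrative, and the counting convention (year 0 counts as the first year) is the one implied by the 4-year payback of Project B.

```python
from itertools import accumulate


def payback_years(cash_flows):
    """Number of years (year 0 included) after which the cumulative balance
    is no longer negative, or None if the project never pays back."""
    for year, balance in enumerate(accumulate(cash_flows)):
        if balance >= 0:
            return year + 1
    return None


# Yearly net amounts from Table 3.1, starting at year 0.
project_a = [-20_000, -10_000, 40_000]
project_b = [-30_000, -30_000, 50_000, 100_000]

print(payback_years(project_a))  # 3
print(payback_years(project_b))  # 4
```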

If we use the ROI method, we select Project B, since it has the highest ROI.

Project A, in fact, has an ROI of 11%, computed as follows:

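$$\text{Annual profit} = \frac{\text{€}40{,}000 - (\text{€}20{,}000 + \text{€}10{,}000)}{3} = \text{€}3333 \tag{3.4}$$

$$\text{ROI} = \frac{\text{€}3333}{\text{€}30{,}000} = 11\% \tag{3.5}$$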

The ROI of Project B, whose calculation we leave to the reader, is 38%.
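As a check on both figures, Equations 3.1 and 3.2 can be applied to the totals of Table 3.1. This is a minimal sketch; the function name is illustrative, and the durations (3 years for Project A, 4 for Project B) count year 0 as the first year.

```python
def roi(incomes, expenses, duration_years):
    """Return on investment: average yearly profit divided by total expenses."""
    annual_profit = (incomes - expenses) / duration_years  # Equation 3.1
    return annual_profit / expenses                        # Equation 3.2


# Totals taken from Table 3.1.
roi_a = roi(incomes=40_000, expenses=20_000 + 10_000, duration_years=3)
roi_b = roi(incomes=50_000 + 100_000, expenses=30_000 + 30_000, duration_years=4)

print(f"{roi_a:.1%}")  # 11.1%
print(f"{roi_b:.1%}")  # 37.5%
```

The value obtained for Project B, 37.5%, rounds to the 38% reported above.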

3.1.3.2 Score Matrices

Financial methods help determine the financial viability of a project, but tell nothing about the project characteristics that are not measurable with profits and losses. Therefore, other methods have been proposed to assess a project. One of the simplest is the score matrix, which allows one to measure a project along several dimensions and assign it a value.

A score matrix is a list of project criteria, each of which is assigned a weight that measures the importance the criterion has for us or for the organization we work for. The criteria highlight the desirable and undesirable aspects of a project; the weights of desirable features are positive numbers (e.g., from 1 to 5) and the weights of undesirable features are negative numbers (e.g., from −1 to −5).

When we evaluate a project using a score matrix, we measure how well the project satisfies each criterion we have identified, for instance, by assigning a number from 1 (very low) to 5 (very high). We then multiply the scores with the weights and sum all values. Projects scoring a higher value are more desirable than projects with a lower score. Projects can also be compared side by side; hence, the use of the term “matrix” in the name of the technique.

EXAMPLE 3.1

Table 3.2 shows an example of a score matrix used to evaluate three different projects.

A Score Matrix Example

| Factor | Description | Weight | Project 1 Value | Project 1 Total | Project 2 Value | Project 2 Total | Project 3 Value | Project 3 Total |
|---|---|---|---|---|---|---|---|---|
| Profit >30% | The project will yield a profit >30% if no exceptional events occur | 3 | 4 | 12 | 4 | 12 | 4 | 12 |
| Low-risk profile | The project does not present particular risks. That is, there is no risk with a very high impact | 2 | 2 | 4 | 3 | 6 | 3 | 6 |
| Schedule is not tight | Project delivery does not require activities to be performed in a very tight schedule | 3 | 3 | 9 | 2 | 6 | 2 | 6 |
| Manageable complexity | The complexity is manageable | 2 | 1 | 2 | 1 | 2 | 1 | 2 |
| Consistent with current business | The project is mainstream with the activities of the organization | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Stakeholders | Stakeholders are difficult to manage | −4 | 2 | −8 | 4 | −16 | 3 | −12 |
| Total score | | | | 20 | | 11 | | 15 |

The starting point is a list of criteria and weights, which we imagine have been selected by an evaluation committee. Note that the last criterion is negative and it has been assigned a high relevance. Thus, the selection process will tend to favor projects in which stakeholders are not difficult to manage.

The second step is the evaluation of how well each criterion is met by a project. Table 3.2, for instance, shows the value assigned to each project.

The third and final step is computing the scores, which are shown in the last row of the table. According to the data, “Project 1,” the one with the highest score, is preferred over the others.
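The three steps of the example reduce to a weighted sum per project. The following sketch recomputes the scores from the weights and values of Table 3.2; the variable and function names are illustrative.

```python
# Weights from Table 3.2, in row order; the negative weight marks the
# undesirable criterion (stakeholders difficult to manage).
WEIGHTS = [3, 2, 3, 2, 1, -4]

# Values assigned to each project, in the same row order as the weights.
VALUES = {
    "Project 1": [4, 2, 3, 1, 1, 2],
    "Project 2": [4, 3, 2, 1, 1, 4],
    "Project 3": [4, 3, 2, 1, 1, 3],
}


def total_score(weights, values):
    """Multiply each value by the weight of its criterion and sum the results."""
    if len(weights) != len(values):
        raise ValueError("one value is needed for each criterion")
    return sum(w * v for w, v in zip(weights, values))


scores = {name: total_score(WEIGHTS, vals) for name, vals in VALUES.items()}
best = max(scores, key=scores.get)

print(scores)  # {'Project 1': 20, 'Project 2': 11, 'Project 3': 15}
print(best)    # Project 1
```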

3.1.3.3 SWOT Analysis

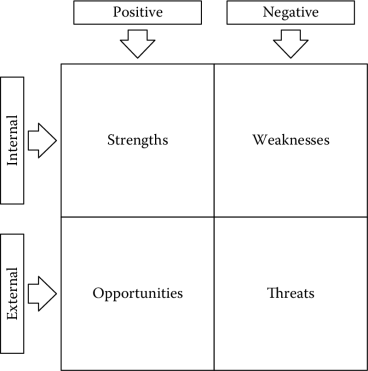

The strengths, weaknesses, opportunities, and threats analysis technique is credited to Albert Humphrey, who used it to determine the competitive advantages of the Fortune 500 companies in the 1970s (Friesner, 2013). The technique can also be used to evaluate the feasibility of a project.

The SWOT analysis is usually performed on a two-by-two matrix, like that shown in Figure 3.1. The analysis proceeds by identifying the strengths, weaknesses, opportunities, and threats related to the project under analysis, and by listing them in the matrix shown in Figure 3.1. (Other formats, of course, are possible.) Once the elements of the SWOT analysis are identified, decision makers use the information to evaluate whether the opportunities are worth the effort and how strengths can overcome weaknesses and threats.

3.1.3.4 Stakeholder Analysis

Stakeholders can exert quite a lot of influence on a project and determine the success or failure of a project. Understanding how stakeholders can influence, positively or negatively, a project is good practice to assess the project’s chances of success and to define a stakeholder management policy.

The stakeholder identification process is informal, as it usually proceeds with a mental sweep of the project environment and of the actors who might be directly or indirectly involved in or affected by the project and its outputs. Once the stakeholders have been identified, the next step consists in understanding what kind of influence each stakeholder can exert on the project. This allows one to cluster the stakeholders and define specific policies for each cluster.

Various ways have been proposed to classify stakeholders. In Maylor (2010), stakeholders are classified in two dimensions:

- The power they can exert in the project

- The interest they have in the project.

This classification allows one to define different policies according to the positioning of the stakeholders in this two-dimensional space.

The extreme case is the one with high-interest and high-power stakeholders. In this case, careful analysis and specific treatment are necessary.

Other situations can use more generic strategies. For instance, it is good practice to keep high-interest and low-power stakeholders informed, while it is safer to keep low-interest and high-power stakeholders satisfied.

Finally, low-interest and low-power stakeholders require minimum effort.
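The four policies can be read as a lookup on the two dimensions of the classification. Here is a minimal sketch, assuming each dimension is reduced to a high/low judgment; the stakeholder names are hypothetical and only serve to show the clustering.

```python
def stakeholder_policy(high_power: bool, high_interest: bool) -> str:
    """Map a position in the power/interest space to a management policy.

    Assumption: the second dimension is the stakeholder's interest, as in the
    two-dimensional classification listed above.
    """
    if high_power and high_interest:
        return "analyze carefully and treat specifically"
    if high_interest:
        return "keep informed"
    if high_power:
        return "keep satisfied"
    return "minimum effort"


# Hypothetical stakeholders: name -> (high power?, high interest?)
stakeholders = {
    "sponsor": (True, True),
    "end users": (False, True),
    "regulator": (True, False),
    "general public": (False, False),
}

# Cluster the stakeholders by the policy that applies to them.
clusters = {}
for name, (power, interest) in stakeholders.items():
    clusters.setdefault(stakeholder_policy(power, interest), []).append(name)

for policy, names in clusters.items():
    print(f"{policy}: {', '.join(names)}")
```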

For more complex stakeholder analyses, Yu et al. (2011) propose the i* model, which was developed for requirements engineering. The model is based on two concepts, actors and goals, and models dependencies between these entities. Using the notation, it is thus possible to identify, for each project goal, the stakeholders who have the most influence, whether this influence is positive or negative, and their motivations. The information can then be used to define adequate management strategies.

3.1.3.5 Assessing Sustainability

Evaluating the operational cost of the project outputs helps assess the long-term benefits of a project and what additional actions a project should include to ensure that its results will live after the project ends. This analysis is relevant in several high-risk or highly constrained projects, such as projects to start new companies, research projects, and projects to foster economic development. Often, in fact, these projects deliver benefits as long as steady financing is available; little or nothing survives when the project ends.

For products and services, the two important pieces of information that constitute the basis for a sustainability analysis are (1) the definition of the business model, a specification of how the operating costs will be paid for, and (2) when applicable, how profit will be generated. At a minimum, a good business model includes a value proposition, which clearly identifies the offering, that is, the product or service being offered and its value for the customers. Additionally, an analysis of the key partners, the key activities, and the key resources allows one to understand which resources are needed. Finally, a financial analysis of costs and revenues helps understand the profitability. This is composed of an analysis of the cost structure, which identifies costs and the most costly resources, and of a revenue stream analysis, which lists the sources of incomes, highlighting the most important sources.

The financial analysis might also include a break-even analysis, which identifies the break-even point, that is, the point at which a product generates neither losses nor gains. If the break-even point is not reached, a product will cause losses; any number of items sold above the break-even point will yield a gain.

The break-even point is computed by looking at three pieces of information: the operational costs, the price of each item, and the number of items sold. The first determines the minimum revenue that has to be reached. The second determines the revenue in terms of items sold. The break-even point is the number of items at which revenue equals the operational costs.
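Under this simplified model, the break-even point is the operational cost divided by the item price, rounded up to a whole item. The sketch below follows that model and deliberately ignores per-item production costs, which a fuller analysis would subtract from the price; the function name and the figures are illustrative.

```python
import math


def break_even_items(operational_costs: float, price_per_item: float) -> int:
    """Smallest number of items whose sales cover the operational costs."""
    if price_per_item <= 0:
        raise ValueError("the item price must be positive")
    return math.ceil(operational_costs / price_per_item)


# Hypothetical product: 50,000 of operational costs, items sold at 40 each.
print(break_even_items(50_000, 40))  # 1250
# When the division is not exact, the result is rounded up.
print(break_even_items(1_000, 30))   # 34
```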

See The Business Model Generation (2013) for a freely available and very compact template, which also includes the identification of distribution channels and customer segments.

3.1.3.6 A Recap of Project Selection Techniques

Table 3.3 recaps the project selection techniques presented so far, highlighting the type of support each technique offers to evaluate the value and risk generating factors of a project.

Recap of Project Selection Techniques

Payback |

ROI |

NPV |

Goal Analysis |

Score Matrix |

SWOT |

Stakeholder Analysis |

|

|---|---|---|---|---|---|---|---|

Direct value |

Fully |

Fully |

Fully |

Partially |

Partially |

Partially |

|

Indirect value |

Partially |

Partially |

Partially |

Partially |

|||

Sustainability |

Partially |

Partially |

Partially |

Partially |

|||

Alignment with strategic objectives |

Fully |

Partially |

Fully |

||||

Resource availability |

Partially |

Fully |

|||||

Timing |

Partially |

Fully |

|||||

Technical difficulty or uncertainty |

Partially |

Fully |

|||||

Environment and constraints |

Fully |

Partially |

Fully |

Partially |

Some techniques provide better coverage of specific characteristics than others. This is qualitatively captured in the table using the terms “Fully” and “Partially,” or by leaving a cell blank when the technique does not offer support. Thus, for instance, the stakeholder analysis helps one to get a better understanding of the project environment. The technique, however, is very specific and does not offer support to measure other criteria.

3.1.4 The Project Feasibility Document

The project feasibility document closes the assessment phase by describing the main characteristics of a project and by analyzing, using the techniques presented above, the value and risks of a project. The document can be used to formally authorize the start of a project.

Thus, a feasibility document contains at least the following information:

- A statement of work, which describes what the project will accomplish

- The business objectives of the project and its outputs (value) and information about the business model, if relevant

- A summary of the project budget, which forecasts expenses and incomes

- A summary of the project milestones, which is a rough schedule of the project identifying the most important events

- An analysis of the stakeholders

- The project risks

- Possible alternatives to the project, such as a make-or-buy decision

- An evaluation of the project and alternatives, using the techniques described above.

3.2 Formalizing the Project Goals

If the analyses performed with the feasibility study are convincing enough, one of the first activities is fixing and having stakeholders agree on the project scope. This, in fact, constitutes the basis for any further management activity, namely, the definition of the work to be performed, schedule and budget, and the skills required by the team that will perform the work. Project goals, together with the schedule and the budget, are also the basis for contractual agreements with the clients.

Defining project scope is one of the most delicate activities in setting up a project, since

- It ensures that the project includes all and only the work necessary to achieve the project goals.

- It establishes a baseline of the work to be performed.

- It defines a reference document for project acceptance.

The first point is obvious. Adding unnecessary work, in fact, would cause a burden that needs to be sustained either by the project team, which will work on features that are not really necessary, or by the client, who pays for unnecessary work, which, in any case, would negatively affect the project, adding risks and increasing costs.

Ensuring that a project will not include any useless work, however, can be more difficult than it looks. Among the reasons, we mention uncertainties about the product to be built. A customer, for instance, might be uncertain about whether a particular feature is needed or not and, in doubt, add it among the ones to be developed, just to play on the safe side.

In a more difficult scenario, an ambiguous description of the project goals causes the stakeholders to form slightly different opinions about the objectives to achieve. This lack of integrity in a project's vision will most likely cause additional work, glitches, and anomalies.

A clear project scope mitigates the risks illustrated above by ensuring that all project stakeholders form a clear view of the project.

The second and third items on the list should also be quite clear. The specification of the project goals determines the characteristics of the products to build. These, in turn, define the work to build the project outputs and the criteria to verify whether the goals have been met.

An additional consideration is that tight timing constraints sometimes tend to compress this activity, shortening the time the manager has to define the goals precisely. The assumption is that the goals are clear and agreed among the stakeholders, even if there is no document describing them in detail, and that the limited time available in the project is therefore better used in more productive activities. The risks of this approach, however, typically become clear too late.

The project scope is fixed in a project scope document. In particular, it is a good idea to include at least the following information in a project scope document:

- Project goals and requirements, which describe what we intend to achieve with the project and the main characteristics of the project and its outputs

- Assumptions and constraints, which describe the conditions which have to be met for the project to succeed

- Project outputs and control points, which describe the outputs of the project and, in some cases, a rough timing of their delivery

- Project roster, which lists the people participating in the project.

The first two items should be written in a way that also defines the project acceptance criteria, namely, the minimum conditions for the client to accept a project. Although my experience (together with that of many other project managers) is that client and stakeholder satisfaction is more important than the syntactic compliance with the acceptance criteria, making these explicit beforehand can contribute significantly to establishing a good relationship with the stakeholders and simplify project closing.

Another important piece of information that might be included in a scope document is the procedure to manage changes, namely, how requests for change will be dealt with. This is described in more detail in Chapter 4.

In the rest of the section, we look in more detail at the main content of the scope document.

3.2.1 Project Goals and Requirements

The project goals and requirements define what is inside and what is outside the scope of a project and the characteristics of the project outputs.

To elaborate a bit, two good practices are making the goals SMART and assigning them priorities using the MoSCoW classification.

SMART stands for simple, measurable, agreed upon, realistic, and time bound. A goal is SMART when it has the qualities specified by the acronym. Thus, a SMART goal is simple in its formulation, so that there are no ambiguities in its interpretation; it has measurable criteria to understand whether it has been achieved or not; it is agreed to by all the stakeholders; it can be reached with the resources available in the project; and finally, it has a date by which it has to be reached.

MoSCoW allows the project manager to assign a priority to the goals. The acronym stands for must have (features that are essential), should have (features that are important but not essential), could have (features that would be nice to have), and won’t have (features that will not be included in the product).

Note that a classification with MoSCoW allows the manager to distinguish between base scope, which is the scope required to meet the business requirement, and value-added scope, which is discretionary but improves the economics of the overall project. This is similar to what Cameron (2005) and Tomczyk (2005) suggest with the identification of the critical success factors.
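A MoSCoW classification can be recorded as a simple tagged list and then split into base and value-added scope. The sketch below assumes that "must have" goals form the base scope and that "should have" and "could have" goals form the value-added scope; this mapping, the names, and the sample goals are illustrative assumptions rather than a rule stated in the text.

```python
from enum import Enum


class Priority(Enum):
    MUST = "must have"
    SHOULD = "should have"
    COULD = "could have"
    WONT = "won't have"


# Hypothetical goals of a software project, each with its MoSCoW priority.
goals = [
    ("online ordering", Priority.MUST),
    ("order history", Priority.SHOULD),
    ("product recommendations", Priority.COULD),
    ("user training", Priority.WONT),
]


def split_scope(goals):
    """Split goals into base scope, value-added scope, and explicit exclusions."""
    base = [name for name, p in goals if p is Priority.MUST]
    value_added = [name for name, p in goals if p in (Priority.SHOULD, Priority.COULD)]
    excluded = [name for name, p in goals if p is Priority.WONT]
    return base, value_added, excluded


base, value_added, excluded = split_scope(goals)
print("base scope:", base)
print("value-added scope:", value_added)
print("out of scope:", excluded)
```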

In many cases, it is also worthwhile to point out goals and work products that will not be delivered by the project, because they fall outside the scope of the work. The list, of course, should focus on elements typically included in similar projects or items some stakeholders might assume as included in the project scope. The goal is to reduce ambiguities and false expectations.

Consider, for instance, a project related to the development of a one-off software system for a specific client. Although not explicitly mentioned in the project scope, some stakeholders might take for granted that user documentation and training will be part of the deal. The work necessary for such items, however, might be beyond the resources available to the team. Making clear that such items will not be included can be a way to better align expectations with actual delivery.

Note that, in general, project requirements differ in two ways from the software requirements we have introduced in Chapter 2. The first is that the project requirements are a superset of the software requirements. Many software development projects, in fact, will include activities that are indirectly related to the software being built, such as, for instance, training of resources, production of user manuals, hardware procurement, setup of an infrastructure to provide user support, and setup of the infrastructure to distribute the system. The second is that project requirements are often at a higher level of abstraction than the software requirements.

A final test that should be performed on the requirements is double checking that the project goals are under the power and control of the project team. If they are not under the control of the project team, in fact, they are, in the best scenario, under the control of some other project stakeholder or, in the worst scenario, completely out of the control of the project. In the first case, it is better to list the constraints and assumptions that make the goals achievable (see Section 3.2.2). In the second case, their achievement will depend on the good luck of the manager.

Once again, things are not so simple. Consider, for instance, a project related to the experimentation of a new technology. One could set the following measurable criteria as a project goal: “the system will be used by 20,000 people during the experimentation.” However, unless people are coerced to use the system, there is no way for the project manager to ensure that the system will be used by 20,000 users. A more realistic wording could highlight that the “experimentation will be set so that at least 20,000 people will be offered the chance to try the system.”

3.2.2 Project Assumptions and Constraints

Project assumptions and constraints define important hypotheses on which the manager bases the achievement of the project goals.

Assumptions are those conditions that are considered to be true, but might not in fact be. Assumptions are not under the control of the project manager, but they might be under the control of some project stakeholders. When this is the case, assumptions can be used to define the duties and obligations of project stakeholders.

Consider a case in which the installation of a new system requires the client to stop operating his or her business for a given period. Stopping operations is clearly not in the power of the project manager, who will list this operation as an essential assumption for project success.

Whether they are under the control of project stakeholders or not, assumptions should be properly addressed in the risk management plan, finding appropriate management strategies in case they cannot be satisfied or turn out to be false (see Section 4.2).

Constraints, by contrast, are known limitations, which shape and define the work we can do. They are used to explain why we set some goals and not others and why we structure the work in some way rather than another.

3.2.3 Project Outputs and Control Points

A deliverable is defined in Project Management Institute (2004) as a unique, measurable, and verifiable work product. Deliverables are the result of work performed in the project and, in many cases, they are also the prerequisites of project activities. For software development projects, examples of deliverables include a software system, a requirements document, and a user manual.

A very common classification distinguishes between internal and external deliverables. The former are functional to the implementation of the plan and are used only by the project team. As such, they can maintain a level of informality, which often simplifies their production and management. The latter are delivered to the customers. They often require additional work to ensure proper quality and formal procedures and, in some cases, a bit of ceremony, like, for example, delivery in hard copy through courier.

Deliverables might contain sensitive information. It is thus good practice to define the dissemination level of each deliverable. In common scenarios, circulation can be public, limited to selected stakeholders, to the project team, or only to selected project members. Such classification is an integral part of the project communication plan and is described in further detail in Section 5.3.

A milestone is defined by the Project Management Institute (2004) as a significant event in the project. Milestones are identified, at a minimum, by a label and a date, and they are typically used to highlight significant control points in the plan. Examples of milestones include a mid-term project review and phase transition milestones, which identify the transition from one project phase to the next.

Deliverables and milestones can be presented as textual lists (like we do in the example below). They are also very often inserted in the graphical representation of plans (e.g., AON or Gantt chart), with the advantage of showing which activity or activities are responsible for the production of any specific deliverable.

According to the formality of the project development process that is being adopted, milestones can be used in different ways:

- To form (and present) a high-level roadmap of the project: milestones represent how the project unfolds in a series of significant events.

- As a verification point in the project: milestones identify control points in the project, which serve as a general “orientation” mechanism to steer the project in one direction or another.

- As a gate in the project: milestones clearly separate different phases of the project; if the goals of the milestone are not achieved, the transition to the next phase is blocked.

There is no mechanical technique to identify the milestones and deliverables of a project. They depend on the project type and their identification can be supported by adopting a development standard, by personal experience, or by discussing and negotiating them with the project stakeholders.

EXAMPLE 3.2

Table 3.4 shows the standards the European Union enforces for the specification of deliverables produced by the research projects it sponsors. In particular, the following information is associated with each deliverable:

- A unique identifier, typically an integer number

- The name of the deliverable

- The nature of the deliverable, which can be one of the following: a report (R), a prototype (P), a demonstrator (D), or other (O)

- The dissemination level, which, simplifying a bit on the EU rules, can be public (PU), restricted to the project team (RE), or restricted to the project stakeholders (CO)

- The delivery date, expressed in the number of months after the start of the project

- The partner (team member) responsible for the delivery of the deliverable.

Table 3.4 An Example of Deliverables

| Del. ID | Deliverable Name | Nature | Dissemination Level | Delivery Date (Project Month) | Responsible Partner |
|---|---|---|---|---|---|
| 1 | Requirements | R | CO | 0 | FBK |
| 2 | Architecture UML diagram | R | CO | 1 | FBK |
| 3 | Software | P | PU | 6 | FBK |
| 4 | User manual | R | PU | 12 | FBK |

Similarly, Table 3.5 shows (a subset of) the milestones of a European research project. Milestones have the following information:

- A unique identifier

- A name

- A date, for instance, expressed in months from the start of the project

- A description of the purpose of the milestone

- Means of verification, which specify how it is possible to verify the achievement of the work associated with the milestone.

Table 3.5 An Example of Milestones

| Milestone Number | Milestone Name | Date (Month from Start) | Means of Verification |
|---|---|---|---|
| M0.1 | Kick-off | 1 | Kick-off meeting done, meeting minutes available, project collaboration tools available. |
| M0.2 | Experimental sites 1 & 2 up and running | 12 | Experimental sites 1 & 2 up and running. |
| M0.3 | Midterm review | 18 | At least 80% of activities starting before M18 have started; at least 80% of activities ending before M18 have ended. |
| M0.4 | Experimental sites 3 & 4 up and running | 24 | Experimental sites 3 & 4 up and running. |
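The means of verification of the midterm review milestone (M0.3) in Table 3.5 is precise enough to be checked mechanically. The following is a minimal sketch of such a check; the `Activity` record, the function names, and the status data are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    """Planned months and actual status of one activity (hypothetical record)."""
    name: str
    planned_start: int  # project month in which the activity should start
    planned_end: int    # project month in which the activity should end
    started: bool
    ended: bool


def _ratio(flags):
    """Fraction of True values; an empty list counts as fully satisfied."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 1.0


def midterm_review_passed(activities, month=18, threshold=0.8):
    """Check the two conditions listed as means of verification for M0.3."""
    started = _ratio(a.started for a in activities if a.planned_start < month)
    ended = _ratio(a.ended for a in activities if a.planned_end < month)
    return started >= threshold and ended >= threshold


# Invented status data, for illustration only.
status = [
    Activity("Requirements", 1, 6, started=True, ended=True),
    Activity("Site 1 setup", 4, 12, started=True, ended=True),
    Activity("Site 2 setup", 6, 12, started=True, ended=False),
    Activity("Evaluation", 13, 24, started=True, ended=False),
    Activity("Site 3 setup", 17, 24, started=False, ended=False),
]
print(midterm_review_passed(status))
```

With these invented data the check fails: four of the five activities due to start before month 18 have started, but only two of the three activities due to end before month 18 have ended.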

3.2.4 Project Roster

The project roster is the list of people participating in the project, together with their role and other information, such as the contact point. The project roster is a simple practice that allows the project manager to identify the project stakeholders and, in the process, simplify the definition of a project communication plan and favor team interaction.

3.3 Deciding the Work

Now that we have properly described the main goals and the boundaries of our project, we can start identifying the activities that we need to carry out in the project. This is very often accomplished with a work breakdown structure (WBS from now on). The notation, developed in the 1960s alongside the program evaluation and review technique (PERT), is defined in Project Management Institute (2004) as “a (deliverable-oriented) hierarchical decomposition of the work to be executed by the project team to accomplish the project objectives and create the required deliverables.”

Today, the technique is widely adopted and many project management and process standards make its use compulsory. For instance, NASA (1994) and NASA (2007) require a WBS to be built for each major program or project and suggest its use in any project, big or small, whenever practical.

A WBS establishes the basis for

- Defining the work to be performed in a project

- Showing how various activities are related to the project objectives

- Establishing a framework for defining, assigning, and monitoring work and costs

- Identifying the organizational elements responsible for accomplishing the work.

See PERT Coordinating Group (1963) for a more detailed description.

In the rest of this section, we are going to describe the main construction techniques for WBSs and some rules of thumb to build and evaluate their quality and soundness.

3.3.1 Building a WBS

A WBS is a tree in which the root node represents a project or its main output. Each level of decomposition shows how a node of the tree can be structured and organized in more elementary work components.

WBSs come in two different notations. The first is graphical: the WBS is shown as a tree unfolding from top to bottom. The second is textual and similar to the table of contents of a book. The nodes of a WBS can be labeled according to their position in the tree: the top level is numbered “1,” its children “1.1,” …, “1.n,” and so on for all the nodes.
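The labeling rule and the textual notation can be illustrated with a short sketch. The `WBSNode` class, the `label_wbs` function, and the sample tree are hypothetical and serve only to show how position-based labels can be derived from the tree structure.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WBSNode:
    """One element of a WBS; children are its more elementary work components."""
    name: str
    children: list[WBSNode] = field(default_factory=list)


def label_wbs(root: WBSNode, label: str = "1") -> list[tuple[str, str]]:
    """Return (label, name) pairs in textual (table-of-contents) order."""
    rows = [(label, root.name)]
    for position, child in enumerate(root.children, start=1):
        rows.extend(label_wbs(child, f"{label}.{position}"))
    return rows


# Hypothetical WBS, invented for illustration only.
wbs = WBSNode("Mobile application", [
    WBSNode("Management"),
    WBSNode("Requirements"),
    WBSNode("Development", [WBSNode("Design"), WBSNode("Coding")]),
])

# Print the textual notation, indenting each node by its depth.
for label, name in label_wbs(wbs):
    print("  " * label.count(".") + f"{label} {name}")
```

The same tree can therefore be stored once and rendered either graphically or as an indented outline.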

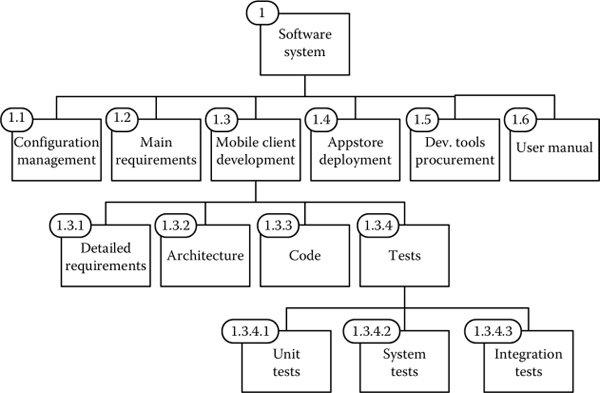

EXAMPLE 3.3

Figure 3.2 shows a graphical representation of a WBS for the development of a software application for mobile phones.

The WBS is structured in four levels.

The first level of decomposition contains the main activities, including the procurement of tools we need for development (1.5) and writing the user manual (1.6). Tests (1.3.4) are organized in three different types of activities: unit tests (1.3.4.1), system tests (1.3.4.2), and integration tests (1.3.4.3).

From the example above, we can infer various important characteristics of a WBS:

- The WBS does not specify the order in which the activities have to be executed, nor does it specify any dependency among activities. For instance, the procurement of development tools has to be completed before coding can start; the WBS, however, does not show this dependency.

- The decomposition follows the 100% and the mutual exclusion rules. That is, each level of decomposition includes all and only the items that are necessary to develop the parent node. Moreover, there are no overlaps between nodes. Each node specifies work different from that of any other node. In this way, the WBS becomes a powerful tool to define what is in the scope of the project and to allocate responsibility for the development of each activity.

- The WBS tree does not need to be balanced. In the diagram, for instance, some activities stop after the first level of decomposition, while others are refined up to the fourth level.

- The WBS can contain support activities, such as “management” and “development tools procurement.”

The level of decomposition and detail of a WBS depends upon its use. For instance, if the WBS is used as the basis for planning, its refinement process stops when we reach a level for which we can reliably estimate duration and effort.

According to NASA (1994), the leaves of the WBS must be such that the “completion of an element is both measurable and verifiable by persons (i.e., quality assurance persons) who are independent of those responsible for the element’s completion.” Thus, the advantage is that the WBS provides a solid basis for planning and monitoring and “no other structure (e.g., code of account, functional organization, budget and reporting, cost element) satisfactorily provides an equally solid basis for incremental project performance assessment.”

3.3.2 WBS Decomposition Styles

Different decomposition styles allow one to build a WBS.

In a product-oriented WBS, the decomposition proceeds by identifying the items that must be developed to build deliverables. A product WBS thus establishes a one-to-one correspondence between project activities and project (sub)products. This simplifies accountability, since the responsibility for a group of activities in a project will correspond to the responsibility of delivering a specific system component. When using a product-oriented WBS, a good rule is to ensure that tightly connected components are not separated in the WBS, since such a decomposition style does not allow one to allocate responsibility for integration.

In a process-oriented WBS, the decomposition proceeds by taking into account the activities that are necessary to carry out the project. One advantage of the process-oriented decomposition is that it can include activities, such as management, which are not directly related to the development of a product, but which are still necessary in a project. Another advantage is that it is simpler to build a WBS by analogy, using a similar project as a reference. This can also be considered a weakness, since the WBS could result in being too generic and uninformative.

To get the best of both worlds, one can use a hybrid WBS. A hybrid WBS contains both process- and product-oriented nodes. This approach is the one suggested, for instance, by NASA (2007), where a part of the WBS is a specification of the components of a product and the remaining parts are the activities necessary to manage the project, integrate the components, and perform quality control.

Other types of WBS highlight the organizational or geographical aspects of work. For these WBSs, the first level of decomposition contains, respectively, the organizational structure or the geographically distributed teams responsible for the development of a group of activities. Starting with the second level, the WBS contains the work to be performed. These decomposition styles can be effective for highly cross-functional projects or for projects in which geographical distribution is significant.

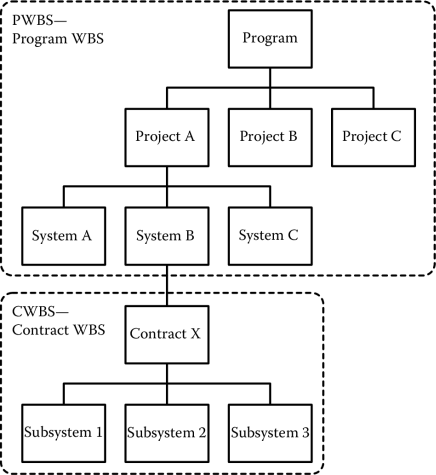

As a specific case of organizational WBS, we mention the one defined in NASA (1994) and the Department of Defense (2011), which distinguish between Project Work Breakdown Structures (PWBSs) and Contract Work Breakdown Structures (CWBSs). In this case, the top levels of the WBS contain a logical structuring of the project or program, while the lower levels represent the WBSs of the contractors responsible for the development of the project. Thus, for instance, NASA (1994) and the Department of Defense (2011) suggest defining PWBSs organized at three levels:

- Level 1 is the entire project or program.

- Level 2 includes the projects or major elements to develop the project.

- Level 3 includes the components necessary to develop the major elements.

Level 3 items constitute the statement of work for contractors and the first level of decomposition of the CWBSs, whose development proceeds according to the standards of the different contractors, as shown in Figure 3.3.

Remark

When defining the work to be performed, the term work package is often used. There are different usages and slightly different definitions of the term, so it might be worthwhile to have a look at them.

According to the Project Management Institute (2004), a work package is an element of the WBS for which estimations of time and costs can be reliably provided. In bigger projects, this can correspond to the leaves of the WBS, rather than the higher level of the WBS. Note, however, that the leaves of a WBS could be the top-level elements of finer-grained work breakdown structures, as we have seen for a contract WBS.

NASA (1994) adopts a different definition. A work package is the unit of work required to complete a specific job, such as a report, a test, a drawing, a piece of hardware, or a service, which is within the responsibility of one operating unit within an organization.

Finally, in common practice, the term work package is often used to denote the first level of decomposition of a WBS. This is, for instance, the notation used for research projects sponsored by the European Union and other funding agencies.

3.3.3 WBS Dictionary

A WBS dictionary helps to annotate each element of the WBS with more detailed and structured information, such as

- Title and item number, to connect the description to an element of the WBS

- Detailed description of the element, including, for instance, quantities, relevant associated work, and contractual items, where applicable.

Additional information can help manage a WBS dictionary over time. Some WBS dictionary templates thus require one to provide references and links to other elements of the project, such as references to the scope document, budget and reporting, contract reference, and information about the history of the element, such as, for instance, revision number, author, and authorization.

See CDC (2013) for a template of a WBS dictionary and Space Division— North American Rockwell (1971) for a good example of a WBS and a WBS dictionary.

3.3.4 WBS Construction Methodologies

WBSs can be built top-down, bottom-up, or by analogy.

In the top-down approach, the construction of the WBS proceeds from the top level down to the leaves. It is usually best suited for projects that are well known or whose structure is clear. One risk is overlooking activities (e.g., achieving a “90% decomposition”).

In the bottom-up approach, the process proceeds in the opposite way. First, the leaves of the WBS are identified and then these are grouped in homogeneous items, thus giving structure to the WBS. It is best suited for projects that are new for a company or for the team responsible for their development, and it works better with brainstorming sessions in which the whole team is involved. One technique that can be used for building the WBS elements is the so-called post-it on a wall. As each element of the WBS is identified, it is written on a post-it and posted on a wall. The post-its can then be physically grouped together to form the WBS structure. The main risks with bottom-up constructions are building WBSs that are too detailed and that violate the mutual exclusion rule.

The third option, construction by analogy, starts from an existing WBS, which is adapted and customized for the project at hand. It can be very effective when an organization standardizes the structure of its projects.

3.4 Estimating

Yogi Berra is reported to have said that “it is difficult to make predictions, especially about the future.” The sentence could not be more appropriate for estimations of the work to be performed in a project. When we consider software projects, some of the characteristics of software, such as intangibility, flexibility, and complexity, make the estimation process even more complex.

In this section, we will look at some of the most common estimation techniques. The starting point is a discussion about what characterizes a project task. We then discuss the nature of estimations and continue with the presentation of the main techniques to estimate.

3.4.1 Effort, Duration, and Resources

Estimation is the process that determines the requirements to carry out an activity.

These are expressed in terms of

- Duration, namely, how long an activity will last

- Effort, namely, the amount of work necessary to complete an activity

- Resources, necessary to complete an activity.

Duration is the amount of time an activity lasts. It is measured in calendar units, such as days, weeks, months, and years. Sometimes the string “calendar-” is prefixed to unambiguously specify the nature of the measure.

Effort is the amount of work required to perform a task, and is measured using man-hours, man-days, man-months, or man-years, meaning, respectively, the amount of work expressed by one worker in an hour, a day, a month, or a year. Thus, for instance, an activity requiring 40 man-hours can be completed by a person working for 40 h.

The main resource needed for software development projects is manpower, which is expressed in terms of units of work, that is, the effort that can be produced per calendar period.

Manpower is further qualified by identifying the type of resource required to carry out the work, namely, by identifying which kind of competences and what kind of personnel is needed. For instance, the development of a simulator for a rocket system might require the work of an expert in aerodynamics.

Finally, manpower is determined by the work calendar and the percentage of availability.

The first determines the maximum number of units of work that can be expressed during a calendar unit. For the service industry, typical values for one resource (one person) are 8 man-hours per calendar-day and 40 man-hours per calendar-week, considering 2 days of rest per week. Other calendars are used. For instance, industries working in shifts have a one-to-one relationship between units of work and calendar time; this is achieved by having three people working 8 h each throughout the day.

The percentage of availability reduces the maximum presence of a resource and it is used when a resource is not available full-time. For instance, if a resource is working part-time for an organization or for a project, the percentage of availability might be 50%.

For many tasks, a simple relationship links effort, duration, and manpower:

$$D = \frac{E}{M} \qquad (3.6)$$

where D is the duration of an activity, E is the effort required by the activity, and M is the manpower required to carry out an activity. Of the three variables, one is usually estimated, another chosen, and the third computed.

The equation holds for reasonable values of D, E, and M. In fact, as M increases, so does the burden of coordinating the work and exchanging information (Brooks, 1995). So, please, never plan to use 1000 people so that you can finish in half a day an activity that requires an effort of 500 man-days, like a famous Dilbert cartoon suggested.

Most activities are estimated either in duration or in effort, according to the nature of the work. For instance, the activity “writing a document” is best estimated by looking at the effort. In this case, for instance, we can estimate the effort, choose the manpower to allocate to the task, and use the equation above to compute the duration of the task.
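As a minimal sketch of this use of the equation, the function below computes the duration of an effort-driven task from the estimated effort and the chosen manpower. The function name and parameters are hypothetical; the default of 8 man-hours per day follows the work calendar discussed above, and the sketch assumes the linear relationship holds for the values supplied.

```python
def duration_working_days(effort_man_hours, people=1,
                          hours_per_day=8.0, availability=1.0):
    """Duration D = E / M, with M expressed in man-hours per working day.

    availability is the fraction of time each person dedicates to the task
    (1.0 = full-time, 0.5 = half-time).
    """
    if people <= 0 or hours_per_day <= 0 or not 0 < availability <= 1:
        raise ValueError("manpower parameters must be positive; availability in (0, 1]")
    manpower = people * hours_per_day * availability
    return effort_man_hours / manpower


# An activity requiring 40 man-hours of effort:
print(duration_working_days(40))                    # one person, full-time
print(duration_working_days(40, people=2))          # two people, full-time
print(duration_working_days(40, availability=0.5))  # one person, half-time
```

The three calls give 5, 2.5, and 10 working days, respectively. The sketch deliberately ignores the coordination overhead mentioned above, so it should not be trusted for large values of manpower.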

The equation does not hold for every type of task, though. For instance, the activity “waiting for the foundations of a home to solidify” requires a fixed duration and is independent of manpower and effort.

Finally, note that some activities might also require one to specify other types of resources, namely, materials and equipment:

- Material is necessary for certain activities and is consumed while work progresses. The measurement unit for material depends on the kind of material used. For instance, in building a house, a given amount of concrete will be used to carry out the construction activities.

- Equipment includes the tools required for carrying out work. In an oil exploration project, for instance, certain activities will require drilling equipment. Equipment is measured by the number of units that are necessary to carry out a given activity. Equipment is not consumed by the execution of the activity; that is, after the activity has been completed, the equipment can be used for another purpose. The availability of equipment introduces constraints in a plan. Thus, the equipment typically specified in a plan includes tools that are available in limited quantities or that are costly to use (and might impact the project's budget). Consider, for instance, the development of a software system to control a robotic surgeon. Certain project activities might require access to a robotic arm. If this requirement is made explicit in the plan, it becomes possible to schedule activities so that no overlaps or conflicts arise in the usage of this limited resource.

3.4.2 The “Quick” Approach to Estimation

The simplest and probably most commonly used approaches to estimation are expert judgment and analogy. The project manager, possibly in collaboration with the team or other experts, provides estimations using his/her experience or by looking at similar projects. The approach has the advantage of being very fast and simple. See the next section, however, for a discussion about some of the limitations.

These estimations can either proceed bottom-up or top-down.

In the bottom-up approach, the manager provides an estimation for each leaf of the WBS. If the estimations are effort-based, these can be easily propagated upward, thus determining the effort required for each node of the WBS. For instance, the effort required by a node A of the WBS whose children are A1, …, An is the sum of the efforts of its children, namely, effort(A1) + ⋯ + effort(An).

In the top-down approach, the process is reversed. The manager provides an estimation for the overall project. If the estimations are effort-driven, the effort of each activity of the WBS is then determined by distributing it to the lower levels. The propagation to the lower levels, however, is constrained by a relationship that is weaker than the one we defined above. If a node A of the WBS, for which we have estimated an effort of EA, has children A1, …, An, then we can only say that effort(A1) + ⋯ + effort(An) = EA, but we cannot tell exactly how much effort has to be allocated to each of A1, …, An, using the structure of the WBS only. Rules of thumb are often used: for instance, the total effort of a project is split into different activities in percentages that are similar to those measured in previous projects.
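The two propagation directions can be sketched as follows. The dictionary-based WBS, the function names, the effort values, and the historical percentages are all hypothetical; the top-down split simply applies the rule of thumb of reusing percentages measured in previous projects.

```python
def roll_up(node):
    """Bottom-up: the effort of a node is the sum of the efforts of its children."""
    children = node.get("children", [])
    if children:
        node["effort"] = sum(roll_up(child) for child in children)
    return node["effort"]


def distribute(total_effort, shares):
    """Top-down: split a parent's effort using percentages from past projects."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return {name: total_effort * share for name, share in shares.items()}


# Bottom-up: leaf estimations (in man-days) are invented for illustration.
wbs = {
    "name": "Project",
    "children": [
        {"name": "Requirements", "effort": 10},
        {"name": "Development", "children": [
            {"name": "Design", "effort": 15},
            {"name": "Coding", "effort": 30},
        ]},
        {"name": "User manual", "effort": 5},
    ],
}
print(roll_up(wbs))

# Top-down: 100 man-days split with invented historical percentages.
print(distribute(100, {"Requirements": 0.2, "Development": 0.5, "Testing": 0.3}))
```

Note the asymmetry: `roll_up` needs only the WBS and the leaf estimations, while `distribute` needs additional information (the shares) that the WBS structure alone cannot provide.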

Whether a top-down or bottom-up approach is more appropriate depends on the project at hand. The rule of thumb is that top-down estimations will tend to underestimate the duration or effort (since they might abstract away details), while bottom-up estimations tend to overestimate the effort or the duration, because too much importance is given to details. In my experience, bottom-up estimations are simpler to come up with. It is, however, a subjective matter and your experience might be different.

If more than one person is involved in the estimation process, various techniques can be used to elicit information. We mention the Delphi method, which is presented in more detail in Section 5.3.3.3.

3.4.3 The Uncertainty of Estimations

(Software) project managers make many implicit and explicit assumptions when estimating the resources necessary for an activity and many estimations are based on a number of “ifs.”

For instance, consider the problem of estimating how long it will take to complete a “requirement definition” activity, which produces a software requirements document. The reasoning could proceed as follows: if the final requirements document will be about 100 pages and if analysts can produce about 2.5 pages per hour, then the work will require about 40 man-hours. If one person will be able to dedicate about 80% of her time, then the actual duration will be about 50 h. The final estimation depends upon all these assumptions. For instance, if the estimation on the number of pages to write is increased by 10% and the productivity is reduced to 2 pages per hour, the duration of the task increases to 68.75 h.
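The chain of assumptions in this example can be made explicit with a few lines of code, which also makes it easy to see how sensitive the result is to each "if". The function name is hypothetical; the numbers are those of the example.

```python
def requirements_duration_hours(pages, pages_per_hour, availability):
    """Elapsed working hours to write a document, given the three assumptions."""
    effort_man_hours = pages / pages_per_hour  # effort implied by size and productivity
    return effort_man_hours / availability     # stretched by part-time availability


baseline = requirements_duration_hours(pages=100, pages_per_hour=2.5, availability=0.8)
revised = requirements_duration_hours(pages=110, pages_per_hour=2.0, availability=0.8)

print(f"baseline: {baseline:.2f} h")
print(f"revised:  {revised:.2f} h")
print(f"increase: {100 * (revised / baseline - 1):.1f}%")
```

A 10% change in size combined with a 20% drop in productivity lengthens the task by 37.5%, which shows how quickly small errors in the assumptions compound.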

On top of the “random” guess of the project manager, work has some implicit variability, which depends on many factors. Weather, for instance, might interfere with the construction of a house. The productivity of workers changes the speed at which progress is achieved.

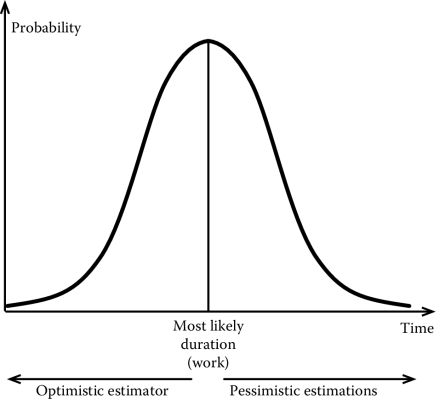

We can look at estimations as random variables characterized by a mean and a variance. Thus, precise scheduling techniques should or could be based on the rules of probabilistic reasoning. This is what certain techniques, like PERT and critical chain management, do. However, the requirement of coming up with precise numbers (e.g., “When does the project end? How much does it cost?”) and the need to keep plans simple (e.g., “The requirements document will be delivered on April 1, 2013” and not “between April 1 and April 13, with a probability of 68%”) favor an approach in which we choose a value and use it as if it were a certain measure.

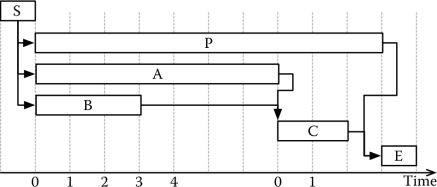

Thus, when we provide an estimation, we give our best guess of a task duration. An important implication, however, comes from considering the error between our estimation and the mean value of the actual duration (work) of a task. In fact, estimations (and estimators) can be optimistic if they are below the mean value or pessimistic, if they are above the mean value. In the first case, the actual plan is more likely to run late; in the second case, the plan will more likely over allocate resources (e.g., time and manpower). This is shown in Figure 3.4, where the area left of the mean corresponds to an optimistic estimation, while the area right of the mean includes the pessimistic estimations.

We tend to be optimistic in our estimations. For this reason, it is often the case that estimators pad their estimations to make sure they end above the most likely duration. If worst comes to worst, the reasoning goes, we will end earlier. Some rules of thumb go up to doubling the value of the estimation. Thus, in the example above, given our initial estimation of 40 h, we would schedule a plan in which the task takes twice as much time, namely, 80 h. This would easily accommodate the variations we mentioned above, but the consequence, in general, is that of building plans that are equally unrealistic.

There is also a subtler risk. As we acknowledge our implicit or explicit padding, we might be tempted to use estimations as a negotiation factor. If our estimations do not satisfy upper management (which is responsible for allocating resources) or our client, we might be tempted to just change the numbers so that they end up as the ones our stakeholders expect. Consider the example above: if 40 h of writing requirements are too long, why not reduce them to 30? After all, it is still relatively close to what we believe to be the most likely duration. The risks of such an attitude are clear, both in the short term and in the long term. In the short term, our plan becomes unreliable, since it is based on numbers chosen to please stakeholders, rather than to measure the work that has to be done. In the long term, it becomes difficult not only to make sense of our past plans, but also to use past experience and know-how to become more reliable in our estimations.

Various techniques have been proposed to tackle or mitigate the problems described above. PERT considers the random nature of estimations. Algorithmic techniques support the estimation process of the software system by relying on mathematical models that take stock of data collected over the years. Agile methodologies, by contrast, take a more radical approach by iterating the estimation process many times till convergence is reached or the project ends.

3.4.4 PERT

PERT was developed in the 1960s as a methodology to define and monitor projects (PERT Coordinating Group, 1963; Hamilton, 1964). The goal of the technique is to assess the probability that a plan finishes by a certain date, given the probabilistic estimations of the activities composing the plan.

To use PERT, three values have to be assigned to each activity: an optimistic value, a most likely value, and a pessimistic value. (The values can be elicited using the techniques described above.) The expected duration of an activity is then computed assuming a beta distribution. According to the distribution, the expected (mean) duration of an activity is

$$t = \frac{a + 4m + b}{6} \qquad (3.7)$$

where a is the optimistic value, b the pessimistic value, and m the most likely value. The variance of t is given by the formula

$$\sigma^2 = \left(\frac{b - a}{6}\right)^2 \qquad (3.8)$$

Standard statistical methods can then be used to sum all the activities of a plan and determine, in such a way, the duration of the overall plan, together with the confidence of the data.
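A minimal sketch of this computation follows. It uses the usual PERT formulas for the expected duration, (a + 4m + b)/6, and for the variance, ((b − a)/6)², and it assumes that the activities are executed in sequence, that their durations are independent, and that the total duration is approximately normally distributed. The function names and the three-point estimations are hypothetical.

```python
import math


def pert_estimate(a, m, b):
    """Expected duration and variance from optimistic, most likely, pessimistic values."""
    mean = (a + 4 * m + b) / 6
    variance = ((b - a) / 6) ** 2
    return mean, variance


def chain_estimate(activities):
    """Sum means and variances of independent activities executed in sequence."""
    estimates = [pert_estimate(a, m, b) for a, m, b in activities]
    return sum(e[0] for e in estimates), sum(e[1] for e in estimates)


def probability_of_finishing_by(deadline, mean, variance):
    """Normal approximation of P(total duration <= deadline)."""
    if variance <= 0:
        raise ValueError("variance must be positive")
    z = (deadline - mean) / math.sqrt(variance)
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


# Invented three-point estimations (a, m, b), in days.
plan = [(2, 4, 6), (3, 5, 13), (1, 2, 3)]
mean, variance = chain_estimate(plan)
print(f"expected duration: {mean:.2f} days, variance: {variance:.2f}")
print(f"P(finish within 14 days): {probability_of_finishing_by(14, mean, variance):.2f}")
```

For a plan with parallel paths, the same sums would be computed along each path; the sketch covers only the simplest case of a single chain of activities.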

3.4.5 Algorithmic Techniques

Algorithmic techniques determine the effort or the duration required for developing a software system, given some of its characteristics. In other words, given a set of measurable features of a system x1, …, xn, an algorithmic technique defines a function f(x1, …, xn), such that

$$f(\bar{x}_1, \ldots, \bar{x}_n) \qquad (3.9)$$

returns the effort, duration, and team size required to develop a system described by $\bar{x}_1, \ldots, \bar{x}_n$. The function f is typically defined by analyzing and interpolating data of sample projects for which both the input values (the measurable characteristics) and the output characteristics (effort, duration, and manpower) are available.

The advantages of the algorithmic techniques are evident, since they potentially provide a reliable, repeatable, and objective way to estimate fundamental parameters of a software development plan. Delivery dates, project budget, and so on can be derived from the measures computed by these algorithms.

Algorithmic techniques also have some limitations. The first is given by the models themselves, since they are derived from a limited (although growing) number of sample projects.

The second is given by the inputs to the models, namely, the values characterizing the system to build. These are not always easy to assess and might require significant analysis work, often performed by people with specific training and certifications.

It must be pointed out, however, that the analysis process required to come up with estimations has a value in itself, independent of the values it produces. In fact, the application of the algorithmic technique helps one to get a better understanding of the system to build.

Two big families of algorithmic techniques exist:

- Function-based estimations measure a system in terms of its functions. The best-known technique in this family is probably the function point (FP) technique. More recent additions include, to mention one, the object point technique.

- Size-based estimations measure a system in terms of its physical size, for instance measured in lines of code. The best-known technique is probably the Constructive Cost Model (COCOMO) family of models.

Finally, some techniques mix both function- and size-based approaches. This is the case, for instance, of web-objects, a technique to estimate web applications.

Several studies have been published on software estimations. One famous historical reference is Boehm (1981). An analysis of the existing literature, based on 304 papers and pointing to many resources, can be found in Jørgensen and Shepperd (2007).

3.4.5.1 Function Points

The FP technique was proposed by Albrecht in the 1970s (see, e.g., Albrecht (1979)). It finds its original application in business systems, but it has since evolved to embrace a wide range of systems and applications. Today, it has a large user base and an organization, the International Function Point Users Group (IFPUG), to support its diffusion, application, and evolution (International function point user group, 2013c).

FP estimations are based on 19 different characteristics of a system, five of which refer to the functional characteristics of a system and 14 of which refer to nonfunctional aspects. These characteristics can typically be provided once the requirements of a system have been defined. The technique can, thus, be applied only after a part of the development process has started.

The five functional measures are

- User inputs: the number of user inputs, or elements in the system which require an input from the user.

- User outputs: the number of user outputs, or elements in the system which produce an output.

- User inquiries: the number of user inputs that generate a software response, such as word count, search result, or software status.

- Internal logical files: the number of files created and used dynamically by the system.

- External interfaces: the number of external files that connect the software with an external system. For instance, if the software communicates with a device, it is counted as one external interface.

The data above are collected and classified according to a three-step complexity scale, which distinguishes among simple, average, and complex elements. Thus, for each of the five characteristics described above, we need to produce a three-dimensional vector: number of simple elements, number of average elements, and number of complex elements.

The technique defines a matrix of weights to take into account the impact that different elements have in determining the complexity of a project. Each pair (functional characteristic, complexity) has a weight. Thus, for instance, simple user inputs have a weight of 3, average user inputs a weight of 4, and complex user inputs a weight of 6.

The functional size of a system S, called by the method unadjusted function points (UFP), is the weighted sum of the characteristics of the system.

It is computed as follows:

$$\mathrm{UFP}(S) = \sum_{i=1}^{5} \left( n_i^S w_i^S + n_i^A w_i^A + n_i^C w_i^C \right)$$

where the index i runs over the five characteristics (user inputs, user outputs, …); the vector $(n_i^S, n_i^A, n_i^C)$ is the number of simple (S), average (A), and complex (C) elements of type i that we forecast in S. Finally, $(w_i^S, w_i^A, w_i^C)$ is the weight assigned by the method to simple (S), average (A), and complex (C) elements of type i.
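The weighted sum can be sketched in a few lines. The weight matrix below uses the values commonly published for the function point method; it is an assumption of this sketch and should be replaced with the official matrix of the version of the method in use. The function name and the element counts are hypothetical.

```python
# Weights per characteristic for (simple, average, complex) elements.
# ASSUMPTION: commonly published values; check them against the official method.
WEIGHTS = {
    "user_inputs": (3, 4, 6),
    "user_outputs": (4, 5, 7),
    "user_inquiries": (3, 4, 6),
    "internal_logical_files": (7, 10, 15),
    "external_interfaces": (5, 7, 10),
}


def unadjusted_function_points(counts, weights=WEIGHTS):
    """Weighted sum of the (simple, average, complex) counts of each characteristic."""
    total = 0
    for characteristic, w in weights.items():
        n = counts.get(characteristic, (0, 0, 0))
        total += sum(n_k * w_k for n_k, w_k in zip(n, w))
    return total


# Invented counts of (simple, average, complex) elements, for illustration only.
counts = {
    "user_inputs": (5, 3, 1),
    "user_outputs": (4, 2, 0),
    "user_inquiries": (2, 1, 0),
    "internal_logical_files": (1, 1, 0),
    "external_interfaces": (0, 1, 0),
}
print(unadjusted_function_points(counts))
```

With these counts the five partial sums are 33, 26, 10, 17, and 7, for a total of 93 UFP.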

UFP provides an estimation of the functional size of the system. Systems with a higher UFP are more complex to develop than systems with a lower UFP. However, UFP does not take into account the complexity deriving from nonfunctional characteristics of a system. For instance, high reliability systems are difficult to implement, requiring more effort. The method therefore introduces 14 questions that list various nonfunctional features of a system, which can positively or negatively affect a project. The questions are summarized in Table 3.6.

Nonfunctional Characteristics of a System (FP Method)

1. Does the system require reliable backup and recovery?
2. Are data communications required?
3. Are there distributed processing functions?
4. Is performance critical?
5. Will the system run in an existing, heavily utilized operational environment?
6. Does the system require online data entry?
7. Does the online data entry require the input transaction to be built over multiple screens or operations?
8. Are the master files updated online?
9. Are the inputs, outputs, files, or inquiries complex?
10. Is the internal processing complex?
11. Is the code to be designed reusable?
12. Are conversion and installation included in the design?
13. Is the system designed for multiple installations in different organizations?
14. Is the application designed to facilitate change and ease of use by the user?

Similar to the functional evaluation, managers answer the 14 questions by providing, for each question, a qualitative answer ranging from 0 (irrelevant) to 5 (very influential).

The answers are summed together and added to the magic number 65, thus yielding a value in the range 65–135, where 135 is obtained when the answer to all the questions is 5, since 65 + 5 * 14 = 135. The value, divided by 100, is called the value adjustment factor, or VAF, and measures the additional (or reduced) effort that is necessary to take care of the implementation of nonfunctional requirements.

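Formally,

$$\mathrm{VAF} = \frac{65 + \sum_{j=1}^{14} a_j}{100}$$

where $a_j$ is the answer given to question $j$.

VAF is then multiplied by UFP to yield the function points (FP):

$$\mathrm{FP} = \mathrm{UFP} \times \mathrm{VAF}$$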

FP measures the complexity of the system to be developed.
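The adjustment step can be sketched in a few lines of Python. The fragment follows the description above (sum of the 14 answers, plus 65, divided by 100, then multiplied by UFP); the function names and the sample values are hypothetical.

```python
from typing import Sequence


def value_adjustment_factor(answers: Sequence[int]) -> float:
    """VAF from the 14 answers, each between 0 (irrelevant) and 5 (very influential)."""
    if len(answers) != 14:
        raise ValueError("the FP method expects exactly 14 answers")
    if any(a < 0 or a > 5 for a in answers):
        raise ValueError("each answer must be in the range 0..5")
    return (65 + sum(answers)) / 100


def function_points(ufp: float, answers: Sequence[int]) -> float:
    """Adjusted function points: UFP multiplied by VAF."""
    return ufp * value_adjustment_factor(answers)


# Hypothetical example: every question rated 3, for a system of 100 UFP.
answers = [3] * 14
vaf = value_adjustment_factor(answers)  # (65 + 42) / 100
fp = function_points(100, answers)
print(vaf, fp)
```

With all answers at 0 the factor is 0.65 and with all answers at 5 it is 1.35, so nonfunctional requirements can move the estimate by at most 35% in either direction.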

Once we have the estimation in function points, we can use it to estimate the effort required for the implementation of the system. There are two main approaches to map function points into effort. The first transforms the function points into a size measure (for which effort estimations can then be provided).

The second, which is preferred by the people advocating the use of function points, uses productivity metrics, such as man-months per function point, to estimate the effort required to develop a system. The actual values of productivity metrics depend on many factors, among which are team experience, organization maturity, and application fields.

Some example values can be found in Longstreet (2008), where the work per function point increases exponentially with a system’s size. It starts at 1.3 h/FP for a system of 50 FP, continues with 12.1 h/FP for a system of about 7000 FP, and ends with 133.6 h/FP for a system of about 15,000 FP. See Longstreet (2008) for the complete set of data.
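To see what these productivity figures imply, the sketch below multiplies size by hours per function point for the three data points quoted above. The function name is hypothetical, and the results are only indicative, since two of the three sizes are approximate.

```python
def effort_hours(function_points: float, hours_per_fp: float) -> float:
    """Effort as size (in FP) multiplied by a productivity metric (in h/FP)."""
    if function_points < 0 or hours_per_fp < 0:
        raise ValueError("size and productivity must be non-negative")
    return function_points * hours_per_fp


# (size in FP, hours per FP) pairs quoted in the text from Longstreet (2008).
quoted_points = [(50, 1.3), (7000, 12.1), (15000, 133.6)]

for size, rate in quoted_points:
    # Roughly 65 h, 84,700 h, and 2,004,000 h respectively.
    print(f"{size:>6} FP at {rate:>6} h/FP -> {effort_hours(size, rate):,.0f} h")
```

A system 300 times larger than the smallest one thus requires roughly 30,000 times the effort, which is why the productivity metric cannot be treated as a constant across project sizes.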

Organizations willing to use the technique, however, should establish their own measurement programs to determine their productivity metrics.

3.4.5.2 COCOMO

Constructive cost model (COCOMO) is a family of estimation techniques first introduced by Barry Boehm in the 1980s and steadily improved over the years. The method was defined in the context of a broader analysis of software economics, which focused on improving the capacity of reasoning about software development costs and benefits, value delivered, and quality (Boehm, 1984; Boehm and Sullivan, 2000). The techniques are very detailed and make assumptions about the development process that is used to build a system. Moreover, different models are used during the development process to increase the accuracy of the estimations.

In this book, we present the basic model, called COCOMO81, abstracting away many details, and only hint at the more recent formulation of the model, called COCOMO II, which was introduced in 2000.

The COCOMO models use a size measure as the starting point for estimations and define a simple relationship between the size of a system and the effort and duration of a project, which is captured by the following formula:

$$\mathrm{OUTPUT} = A \times M \times \mathrm{SIZE}^{B}$$

that is, the OUTPUT of the estimation (effort and duration) depends on a system’s SIZE and three other elements A, M, and B. In general, A and B are organization-dependent constants, while M depends on the project at hand. Note that A and M have a multiplicative effect, while B has an exponential effect over the size. Reference values for A and B are given by the model.

3.4.5.2.1 COCOMO81

COCOMO81 is the first COCOMO model. It was defined by Barry Boehm and his group using a carefully screened sample of 63 projects developed between 1964 and 1979 (Boehm, 1981). The model applies to the software development practices in use at the time, among which the use of the waterfall development process is probably the most relevant assumption.

The method defines three different variants, which can be applied at different stages of the development process as the information about a project increases. The basic model can be used when little information is available; the intermediate model can be used when the requirements are defined, and the advanced model can be used when the architecture is sketched.

In more detail, COCOMO81 computes effort and development time as follows:

$$\mathrm{PM} = A_{PM} \times M \times \mathrm{KSLOC}^{B_{PM}}$$

$$\mathrm{TDEV} = A_{TDEV} \times \mathrm{PM}^{B_{TDEV}}$$

$$\mathrm{TEAM} = \frac{\mathrm{PM}}{\mathrm{TDEV}}$$

where PM is the total effort, TDEV is the ideal duration of the project, TEAM is the team size, and KSLOC is the estimated number of thousands of lines of code. In all three variants, A and B are constants, while M (which is not required in the basic model) is computed by looking at various project and product characteristics.

The actual values of A and B depend on the overall complexity of the project. COCOMO81, in particular, distinguishes between three types of projects, organic, semidetached, and embedded, according to the project characteristics listed in Table 3.7. For each type of project, the model provides values for $A_{PM}$, $A_{TDEV}$, $B_{PM}$, and $B_{TDEV}$, as shown in Table 3.8.

COCOMO Development Modes

| Project Type | Main Characteristics |
|---|---|
| Organic | Simple projects with clear requirements and about which the performing organizations have a thorough understanding and experience. No or few technical and development risks. |
| Semidetached | More complex projects. The performing organization has considerable know-how in the application field and with tools to be used for development. Some technical or development risks. |
| Embedded | Complex system with high variability in requirements. The organization has moderate know-how in the area. Various technical and development risks. |

COCOMO Base Model

| Project Type | $A_{PM}$ | $B_{PM}$ | $A_{TDEV}$ | $B_{TDEV}$ |
|---|---|---|---|---|
| Organic | 2.40 | 1.05 | 2.50 | 0.38 |
| Semidetached | 3.00 | 1.12 | 2.50 | 0.35 |
| Embedded | 3.60 | 1.20 | 2.50 | 0.32 |

Finally, note that the formula to determine the team size is the same as in Equation 3.6.
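The basic model can be sketched in Python using the constants of Table 3.8. The sketch assumes the usual COCOMO81 form, in which effort is A × M × KSLOC^B, duration is a power law of effort, and team size is effort divided by duration. The names `Mode`, `MODES`, and `cocomo81`, as well as the 32 KSLOC example, are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Mode:
    a_pm: float    # multiplicative constant for effort
    b_pm: float    # exponent for effort
    a_tdev: float  # multiplicative constant for duration
    b_tdev: float  # exponent for duration


# Constants as listed in Table 3.8.
MODES = {
    "organic": Mode(2.40, 1.05, 2.50, 0.38),
    "semidetached": Mode(3.00, 1.12, 2.50, 0.35),
    "embedded": Mode(3.60, 1.20, 2.50, 0.32),
}


def cocomo81(ksloc: float, mode: str, m: float = 1.0) -> Tuple[float, float, float]:
    """Return (effort, duration, team size); m = 1.0 corresponds to the basic model."""
    if ksloc <= 0:
        raise ValueError("size must be positive")
    c = MODES[mode]
    pm = c.a_pm * m * ksloc ** c.b_pm   # effort grows more than linearly with size
    tdev = c.a_tdev * pm ** c.b_tdev    # duration grows less than linearly with effort
    team = pm / tdev                    # average team size over the project
    return pm, tdev, team


# Hypothetical 32 KSLOC system evaluated under each development mode.
for name in MODES:
    pm, tdev, team = cocomo81(32, name)
    print(f"{name:<13} effort={pm:6.1f}  duration={tdev:5.1f}  team={team:4.1f}")
```

Running the same size through the three modes shows how strongly the exponent drives the estimate: the embedded mode requires more than twice the effort of the organic mode for the same 32 KSLOC.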

The intermediate model takes into account various project- and product-related characteristics, which can increase or decrease the overall effort and the project schedule. This is reflected by computing M as the product of 15 parameters, obtained by answering 15 questions with a rating from 1 (very low impact) to 6 (extremely high impact). Unlike in the FP method, each rating corresponds to a numerical constant, with values ranging from a minimum of 0.75 to a maximum of 1.56.

Tables 3.9 and 3.10, for instance, show the values assigned to the parameter RELY (required software reliability) and the corresponding assignment criteria. If RELY is evaluated as very low, for instance, the corresponding value to be used for the computation of M is 0.75, yielding a reduction in the effort of 25%.

COCOMO RELY Parameter

| Parameter | Very Low | Low | Nominal | High | Very High | Extremely High |
|---|---|---|---|---|---|---|
| Required Software Reliability (RELY) | 0.75 | 0.88 | 1 | 1.15 | 1.4 | – |

Explanation of the COCOMO RELY Parameter

| Rating | Assignment Criterion |
|---|---|
| Very low | The effect of a software failure is simply the inconvenience incumbent on the developers to fix the fault. |
| Low | The effect of a software failure is a low level, easily recoverable loss to users. |
| Nominal | The effect of a software failure is a moderate loss to users, but a situation for which one can recover without extreme penalty. |
| High | The effect of a software failure can be a major financial loss or a massive human inconvenience. |
| Very high | The effect of a software failure can be the loss of human life. |
| Extremely high | No rating; defaults to very high. |
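The computation of M can be illustrated with the RELY values above. The sketch below is only indicative: the RELY constants come from Table 3.9, while the other 14 parameters are held at 1.0 as an assumption (the text does not list their values), and the function names are hypothetical.

```python
from math import prod
from typing import Iterable

# Constants for RELY as listed in Table 3.9.
RELY = {
    "very low": 0.75,
    "low": 0.88,
    "nominal": 1.00,
    "high": 1.15,
    "very high": 1.40,
}


def rely_multiplier(rating: str) -> float:
    """Look up the RELY constant; 'extremely high' defaults to 'very high' (Table 3.10)."""
    key = rating.strip().lower()
    if key == "extremely high":
        key = "very high"
    return RELY[key]


def adjustment_factor(multipliers: Iterable[float]) -> float:
    """M is the product of the constants chosen for the individual parameters."""
    return prod(multipliers)


# ASSUMPTION: the 14 parameters other than RELY are neutral (1.0).
others = [1.0] * 14

m_low_reliability = adjustment_factor([rely_multiplier("very low")] + others)
m_life_critical = adjustment_factor([rely_multiplier("very high")] + others)
print(m_low_reliability, m_life_critical)
```

Because M is a product, a single parameter rated far from nominal scales the whole estimate: with everything else neutral, a very low RELY reduces the effort by 25%, whereas a very high RELY increases it by 40%.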

The parameters considered for the M factor can be organized in four different classes. Two of them describe the characteristics of the project outputs. They are

- Product attributes, which model aspects related to the software to be developed and include aspects such as expected reliability, database size, and overall product complexity

- Computer attributes, which take into account aspects related to the platform that will be used to run the software and include aspects such as constraints related to performance and stability.

The remaining two classes describe the characteristics of the project itself. They are

- Personnel attributes, which model the influence of the personnel involved in the project and include five parameters related to the capability and experience of the personnel

- Project attributes, which model some aspects related to the project organization and include three parameters describing tool support and automation and schedule constraints.

3.4.5.2.2 COCOMO II

COCOMO II significantly revises and enhances COCOMO81 to take into account several new factors that intervened after the first definition of the model. Among them are new development processes, new development paradigms (e.g., object orientation), and new development techniques (e.g., code reuse). The model also takes advantage of an enlarged set of project data, which is based on 161 projects in place of the 63 used in the definition of COCOMO81 and an improved definition of the term “source lines of code.” Finally, COCOMO II uses the spiral development process as its reference process.

COCOMO II introduces three main changes:

- UFPs are used at an early stage of the development process to determine a system’s size. The UFPs are then transformed into lines of code using translation tables that map UFPs into SLOC. Some example values can be found in Center for Software Engineering (2000) and Quantitative Software Management (2013). This helps solve the “chicken-and-egg” problem with the original definition of the model. In fact, the accuracy of the model depends on the accuracy of the estimation of the system size, which, however, is known only when development ends.

- The computation of lines of code is adjusted to take into account reused code and requirements volatility. The former is computed using a nonlinear model derived by analyzing about 3000 projects from NASA. The latter simply increases the count of SLOC by a percentage that represents the number of requirements that will change. This allows one to use the method with more modern programming practices.

- The parameters are refined or updated. In more detail, the exponent is computed from the sum of five scale factors. The scale factors include aspects related to the development process. They are assessed similarly to the effort adjustment factors; the resulting exponent is always between 0.91 and 1.226. Finally, the other parameters are updated to match analyses conducted on a larger set of data.
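Two of these changes lend themselves to a short sketch. The fragment below assumes the published COCOMO II.2000 form of the exponent, 0.91 plus one hundredth of the sum of the five scale factors, which is consistent with the range quoted above but is not stated in the text. The function names, the scale factor ratings, and the size figures are hypothetical and should be checked against the model definition before use.

```python
from typing import Sequence


def cocomo2_exponent(scale_factors: Sequence[float], base: float = 0.91) -> float:
    """Exponent from five scale factors (ASSUMED form: base + 0.01 * sum)."""
    if len(scale_factors) != 5:
        raise ValueError("COCOMO II uses exactly five scale factors")
    return base + 0.01 * sum(scale_factors)


def adjust_for_volatility(sloc: float, changed_percent: float) -> float:
    """Increase the SLOC count by the percentage of requirements expected to change."""
    if changed_percent < 0:
        raise ValueError("the percentage must be non-negative")
    return sloc * (1 + changed_percent / 100)


# Best case: every scale factor rated 0, so the exponent equals the base.
best = cocomo2_exponent([0.0] * 5)

# Worst case: ASSUMED maximum ratings, which sum to 31.62.
worst = cocomo2_exponent([6.20, 5.07, 7.07, 5.48, 7.80])

# Hypothetical 10,000 SLOC system with 20% of the requirements expected to change.
adjusted_size = adjust_for_volatility(10_000, 20)

print(best, round(worst, 3), adjusted_size)
```

An exponent below 1 models economies of scale and an exponent above 1 models diseconomies of scale, so the scale factors determine whether effort grows more or less than proportionally with size.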

The Center for Software Engineering (2000) and Merlo-Schett et al. (2002) give more information about the application, while the University of Southern California (2013) and NPS (2013) make available an online calculator.

We conclude the section on COCOMO by mentioning that various extensions to the model have been proposed, among which are COQUALMO, to estimate software defects; COCOTS, to estimate the integration of COTS components; and COSYSMO, for systems engineering.

3.4.5.3 Web Objects

Web Objects is a technique that mixes FP analysis and COCOMO models to estimate the effort and schedule of web application development. The main motivations for the definition of (yet another) estimation technique are some fundamental differences between desktop and web application development.

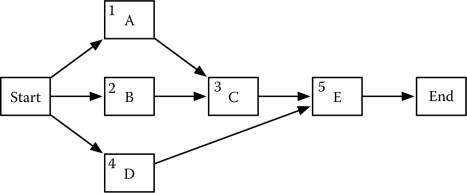

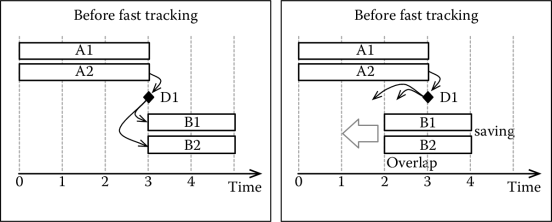

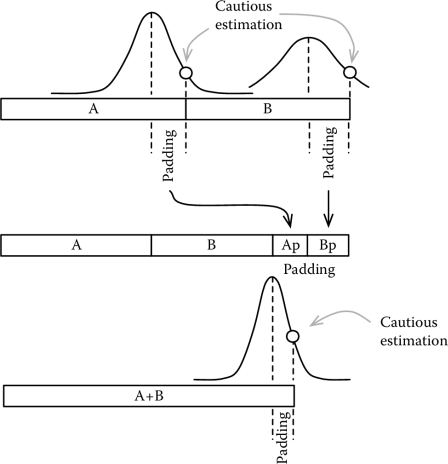

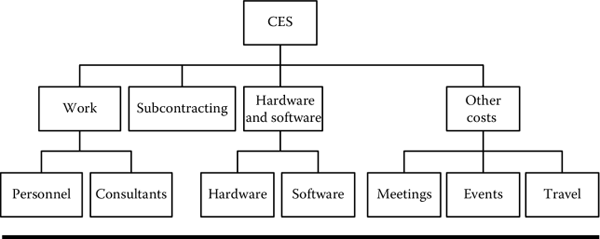

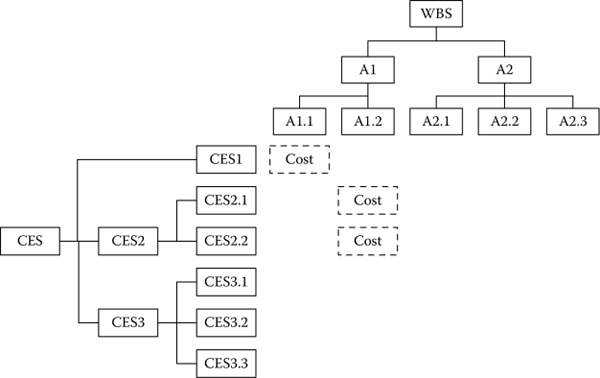

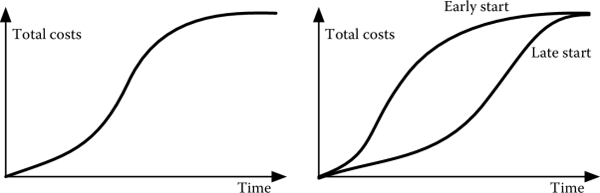

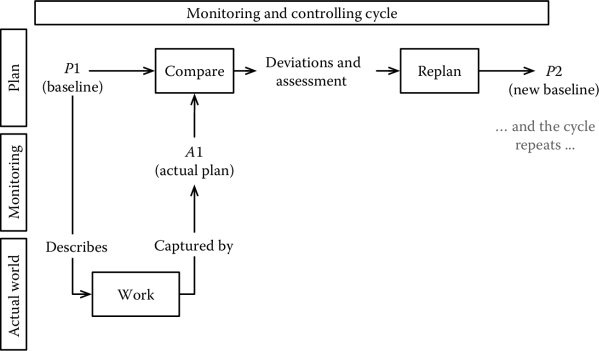

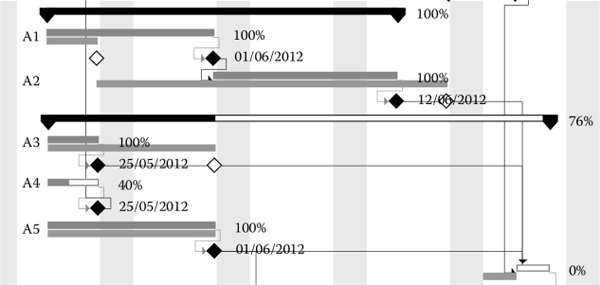

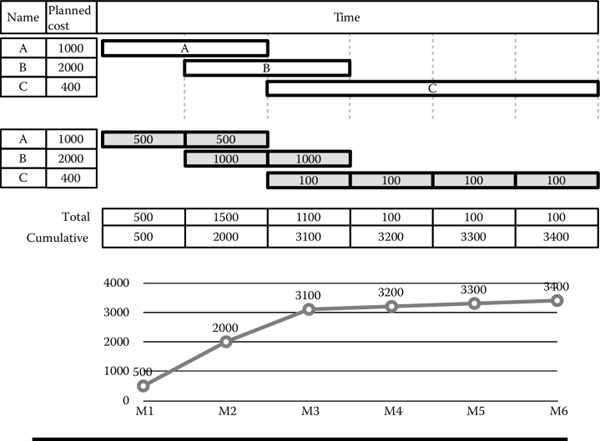

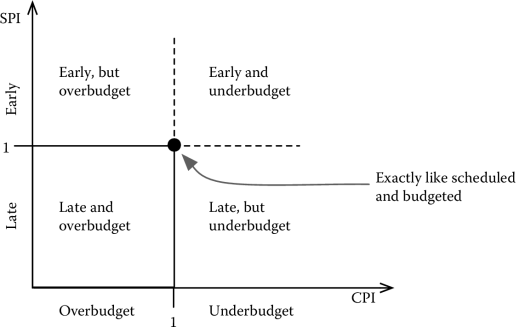

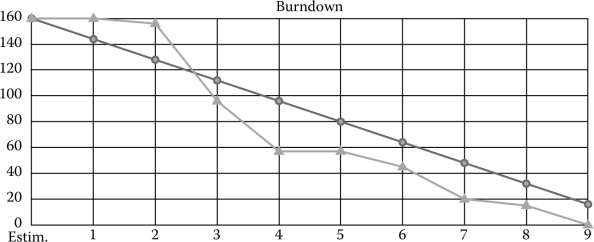

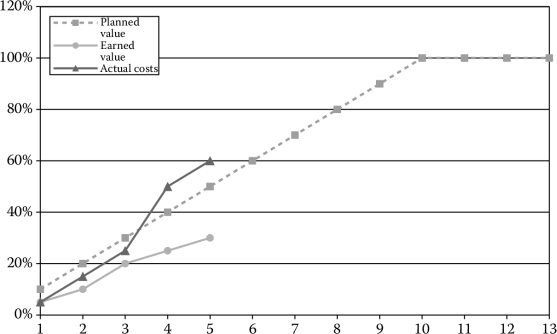

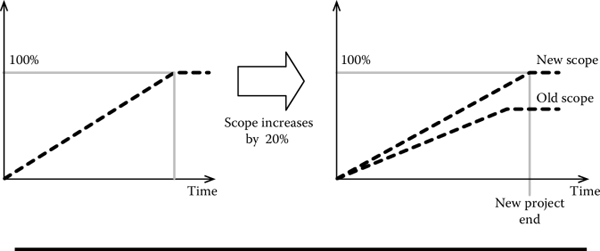

In fact, the development of web applications tends to be driven by time rather than cost; it prefers more informal (and speedy) processes; and it uses smaller teams (3–6 people), often composed of younger and less experienced personnel. According to Reifer, who defined and proposed the model, these differences make the application of other techniques less effective (see, e.g., Reifer, 2000; Ruhe et al., 2003).