3 Istio’s data plane: The Envoy proxy

- Understanding the standalone Envoy proxy and how it contributes to Istio

- Exploring how Envoy’s capabilities are core to a service mesh like Istio

- Configuring Envoy with static configuration

- Using Envoy’s Admin API to introspect and debug it

When we introduced the idea of a service mesh in chapter 1, we established the concept of a service proxy and how this proxy understands application-level constructs (for example, application protocols like HTTP and gRPC) and enhances an application’s business logic with non-differentiating application-networking logic. A service proxy runs collocated and out of process with the application, and the application talks through the service proxy whenever it wants to communicate with other services.

With Istio, the Envoy proxies are deployed collocated with all application instances participating in the service mesh, thus forming the service-mesh data plane. Since Envoy is such a critical component in the data plane and in the overall service-mesh architecture, we spend this chapter getting familiar with it. This should give you a better understanding of Istio and how to debug or troubleshoot your deployments.

3.1 What is the Envoy proxy?

Envoy was developed at Lyft to solve some of the difficult application networking problems that crop up when building distributed systems. It was contributed as an open source project in September 2016, and a year later (September 2017) it joined the Cloud Native Computing Foundation (CNCF). Envoy is written in C++ in an effort to increase performance and, more importantly, to make it more stable and deterministic at higher load echelons.

Envoy was created following two critical principles:

The network should be transparent to applications. When network and application problems do occur it should be easy to determine the source of the problem.

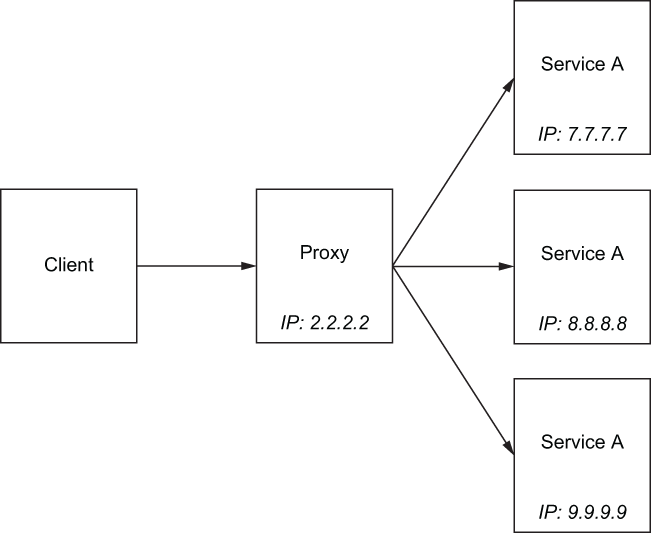

Envoy is a proxy, so before we go any further, we should make very clear what a proxy is. We already mentioned that a proxy is an intermediary component in a network architecture that is positioned in the middle of the communication between a client and a server (see figure 3.1). Being in the middle enables it to provide additional features like security, privacy, and policy.

Figure 3.1 A proxy is an intermediary that adds functionality to the flow of traffic.

Proxies can simplify what a client needs to know when talking to a service. For example, a service may be implemented as a set of identical instances (a cluster), each of which can handle a certain amount of load. How should the client know which instance or IP address to use when making requests to that service? A proxy can stand in the middle with a single identifier or IP address, and clients can use that to talk to the instances of the service. Figure 3.2 shows how the proxy handles load balancing across the instances of the service without the client knowing any details of how things are actually deployed. Another common function of this type of reverse proxy is checking the health of instances in the cluster and routing traffic around failing or misbehaving backend instances. This way, the proxy can protect the client from having to know and understand which backends are overloaded or failing.

Figure 3.2 A proxy can hide backend topology from clients and implement algorithms to fairly distribute traffic (load balancing).
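The load-balancing and health-checking behavior described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Envoy is implemented; the class name, method names, and backend identifiers are hypothetical.

```python
class RoundRobinProxy:
    """Toy reverse proxy front end: one entry point, many backend instances."""

    def __init__(self, backends):
        self.backends = list(backends)      # hypothetical instance identifiers
        self.healthy = set(self.backends)   # instances currently considered healthy
        self._next = 0                      # index of the next candidate to try

    def mark_unhealthy(self, backend):
        """Take an instance out of rotation, e.g. after a failed health check."""
        self.healthy.discard(backend)

    def mark_healthy(self, backend):
        """Put a known instance back into rotation."""
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self):
        """Return the next healthy backend in round-robin order."""
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")
```

The client only ever calls `pick()` on the proxy; which instances exist and which are failing stays hidden behind that single entry point.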

The Envoy proxy is specifically an application-level proxy that we can insert into the request path of our applications to provide things like service discovery, load balancing, and health checking, but Envoy can do more than that. We’ve hinted at some of these enhanced capabilities in earlier chapters, and we’ll cover them more in this chapter. Envoy can understand layer 7 protocols that an application may speak when communicating with other services. For example, out of the box, Envoy understands HTTP 1.1, HTTP 2, gRPC, and other protocols and can add behavior like request-level timeouts, retries, per-retry timeouts, circuit breaking, and other resilience features. Something like this cannot be accomplished with basic connection-level (L3/L4) proxies that only understand connections.

Envoy can be extended to understand protocols in addition to the out-of-the-box defaults. Filters have been written for databases like MongoDB, DynamoDB, and even asynchronous protocols like Advanced Message Queuing Protocol (AMQP). Reliability and the goal of network transparency for applications are worthwhile endeavors, but just as important is the ability to quickly understand what’s happening in a distributed architecture, especially when things are not working as expected. Since Envoy understands application-level protocols and application traffic flows through Envoy, the proxy can collect lots of telemetry about the requests flowing through the system, such as how long they’re taking, how many requests certain services are seeing (throughput), and what error rates the services are experiencing. We will cover Envoy’s telemetry-collection capabilities in chapter 7 and its extensibility in chapter 14.

As a proxy, Envoy is designed to shield developers from networking concerns by running out-of-process from applications. This means any application written in any programming language or with any framework can take advantage of these features. Moreover, although services architectures (SOA, microservices, and so on) are the architecture du jour, Envoy doesn’t care if you’re doing microservices or if you have monoliths and legacy applications written in any language. As long as they speak protocols that Envoy can understand (like HTTP), Envoy can provide benefits.

Envoy is a very versatile proxy and can be used in different roles: as a proxy at the edge of your cluster (as an ingress point), as a shared proxy for a single host or group of services, and even as a per-service proxy as we see with Istio. With Istio, a single Envoy proxy is deployed per service instance to achieve the most flexibility, performance, and control. Just because you use one type of deployment pattern (a sidecar service proxy) doesn’t mean you cannot also have the edge served with Envoy. In fact, having the proxy be the same implementation at the edge as well as located within the application traffic can make your infrastructure easier to operate and reason about. As we’ll see in chapter 4, Envoy can be used at the edge for ingress and to tie into the service mesh to give full control and observe traffic from the point it enters the cluster all the way to the individual services in a call graph for a particular request.

3.1.1 Envoy’s core features

Envoy has many features useful for inter-service communication. To help understand these features and capabilities, you should be familiar with the following Envoy concepts at a high level:

-

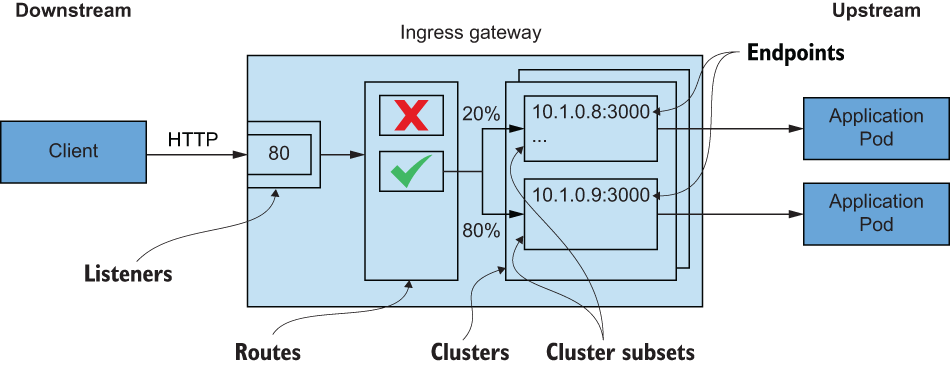

Listeners—Expose a port to the outside world to which applications can connect. For example, a listener on port 80 accepts traffic and applies any configured behavior to that traffic.

-

Routes—Routing rules for how to handle traffic that comes in on listeners. For example, if a request comes in and matches

/catalog, direct that traffic to thecatalogcluster. -

Clusters—Specific upstream services to which Envoy can route traffic. For example,

catalog-v1andcatalog-v2can be separate clusters, and routes can specify rules about how to direct traffic to either v1 or v2 of thecatalogservice.

This is a conceptual understanding of what Envoy does for L7 traffic. We will go into more detail in chapter 14.

Envoy uses terminology similar to that of other proxies when conveying traffic directionality. For example, traffic comes into a listener from a downstream system. This traffic is routed to one of Envoy’s clusters, which is responsible for sending that traffic to an upstream system (as shown in figure 3.3). Traffic flows through Envoy from downstream to upstream. Now, let’s move on to some of Envoy’s features.

Figure 3.3 A request comes in from a downstream system through the listeners, goes through the routing rules, and ends up going to a cluster that sends it to an upstream service.
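To make the listener, route, and cluster relationship concrete, here is a minimal Python sketch of prefix-based route selection in which the first matching route wins. The `Route` type, the `select_cluster` helper, and the `default` cluster name are hypothetical and exist only for illustration; the /catalog prefix and catalog cluster come from the example above.

```python
from dataclasses import dataclass


@dataclass
class Route:
    prefix: str    # path prefix to match, e.g. "/catalog"
    cluster: str   # name of the cluster that receives matching requests


def select_cluster(routes, path):
    """Return the cluster of the first route whose prefix matches, else None."""
    for route in routes:
        if path.startswith(route.prefix):
            return route.cluster
    return None


# Hypothetical routing table attached to a listener on port 80.
ROUTES = [
    Route(prefix="/catalog", cluster="catalog"),
    Route(prefix="/", cluster="default"),
]

if __name__ == "__main__":
    print(select_cluster(ROUTES, "/catalog/items"))
```

Because routes are checked in order, the more specific /catalog rule has to appear before the catch-all / rule.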

Instead of using runtime-specific libraries for client-side service discovery, Envoy can do this automatically for an application. By configuring Envoy to look for service endpoints from a simple discovery API, applications can be agnostic to how service endpoints are found. The discovery API is a simple REST API that can be used to wrap other common service-discovery APIs (like HashiCorp Consul, Apache ZooKeeper, Netflix Eureka, and so on). Istio’s control plane implements this API out of the box.

Envoy is specifically built to rely on eventually consistent updates to the service-discovery catalog. This means in a distributed system we cannot expect to know the exact status of all services with which we can communicate and whether they’re available. The best we can do is use the knowledge at hand, employ active and passive health checking, and expect that those results may not be the most up to date (nor could they be).

Istio abstracts away a lot of this detail by providing a higher-level set of resources that drives the configuration of Envoy’s service-discovery mechanisms. We’ll look more closely at this throughout the book.

Envoy implements a few advanced load-balancing algorithms that applications can take advantage of. One of the more interesting capabilities is locality-aware load balancing. Here, Envoy keeps traffic from crossing locality boundaries unless crossing them meets certain criteria and provides a better balance of traffic. For example, Envoy makes sure that service-to-service traffic is routed to instances in the same locality unless doing so would create a failure situation. Envoy also provides several load-balancing algorithms out of the box, such as the round-robin policy (ROUND_ROBIN) used in this chapter’s configuration examples.

Because Envoy can understand application protocols like HTTP 1.1 and HTTP 2, it can use sophisticated routing rules to direct traffic to specific backend clusters. Envoy can do basic reverse-proxy routing like mapping virtual hosts and context-path routing; it can also do header- and priority-based routing, retries and timeouts for routing, and fault injection.

Traffic shifting and shadowing capabilities

Envoy supports percentage-based (that is, weighted) traffic splitting/shifting. This enables agile teams to use continuous delivery techniques that mitigate risk such as canary releases. Although they mitigate risk to a smaller user pool, canary releases still deal with live user traffic.
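Weighted splitting can be pictured as mapping a random roll onto cumulative weight ranges. The sketch below assumes a 90/10 split between the catalog-v1 and catalog-v2 clusters mentioned earlier; the function names and weights are hypothetical, and this is not Envoy’s actual implementation.

```python
import random


def pick_weighted(weights, roll):
    """Pick a cluster name given {name: weight} and a roll in [0, total weight)."""
    upper = 0
    for name, weight in weights.items():
        upper += weight           # upper bound of this cluster's range
        if roll < upper:
            return name
    raise ValueError("roll must be smaller than the sum of the weights")


def route_request(weights, rng=random):
    """Choose a cluster for one request using a uniformly random roll."""
    total = sum(weights.values())
    return pick_weighted(weights, rng.randrange(total))


if __name__ == "__main__":
    canary = {"catalog-v1": 90, "catalog-v2": 10}   # assumed canary weights
    print(route_request(canary))
```

Shifting more traffic to the new version is then only a matter of changing the weights, with no change to clients.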

Envoy can also make copies of the traffic and shadow that traffic in a fire-and-forget mode to an Envoy cluster. You can think of this shadowing capability as something like traffic splitting, but the requests that the upstream cluster sees are a copy of the live traffic; thus we can route shadowed traffic to a new version of a service without really acting on live production traffic. This is a very powerful capability for testing service changes with production traffic without impacting customers. We’ll see more of this in chapter 5.

Envoy can be used to offload certain classes of resilience problems, but note that it’s the application’s responsibility to fine-tune and configure these parameters. Envoy can automatically do request timeouts as well as request-level retries (with per-retry timeouts). This type of retry behavior is very useful when a request experiences intermittent network instability. On the other hand, retry amplification can lead to cascading failures; Envoy allows you to limit retry behavior. Also note that application-level retries may still be needed and cannot be completely offloaded to Envoy. Additionally, when Envoy calls upstream clusters, it can be configured with bulkheading characteristics like limiting the number of connections or outstanding requests in flight and to fast-fail any that exceed those thresholds (with some jitter on those thresholds). Finally, Envoy can perform outlier detection, which behaves like a circuit breaker, and eject endpoints from the load-balancing pool when they misbehave.
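The outlier-detection idea can be sketched as a counter of consecutive 5xx responses per endpoint. This is a deliberately simplified model under stated assumptions: the class, its methods, and the default threshold are hypothetical, and returning ejected endpoints to the pool after a cool-down period is left out.

```python
class OutlierDetector:
    """Eject an endpoint after too many consecutive 5xx responses (simplified)."""

    def __init__(self, consecutive_5xx=5):
        self.threshold = consecutive_5xx   # assumed threshold; tune per service
        self.failures = {}                 # endpoint -> current run of 5xx responses
        self.ejected = set()               # endpoints removed from the pool

    def record(self, endpoint, status_code):
        """Update the failure run for an endpoint based on one response."""
        if 500 <= status_code <= 599:
            self.failures[endpoint] = self.failures.get(endpoint, 0) + 1
            if self.failures[endpoint] >= self.threshold:
                self.ejected.add(endpoint)
        else:
            self.failures[endpoint] = 0    # any non-5xx response resets the run

    def available(self, endpoints):
        """Return the endpoints that are still in the load-balancing pool."""
        return [e for e in endpoints if e not in self.ejected]
```

A load balancer would consult `available()` before picking an endpoint, so misbehaving instances stop receiving traffic without the application noticing.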

HTTP/2 is a significant improvement to the HTTP protocol that allows multiplexing requests over a single connection, server-push interactions, streaming interactions, and request backpressure. Envoy was built from the beginning to be an HTTP/1.1 and HTTP/2 proxy with proxying capabilities for each protocol both downstream and upstream. This means, for example, that Envoy can accept HTTP/1.1 connections and proxy to HTTP/2—or vice versa—or proxy incoming HTTP/2 to upstream HTTP/2 clusters. gRPC is an RPC protocol using Google Protocol Buffers (Protobuf) that lives on top of HTTP/2 and is also natively supported by Envoy. These are powerful features (and difficult to get correct in an implementation) and differentiate Envoy from other service proxies.

Observability with metrics collection

As we saw in the Envoy announcement from Lyft back in September 2016, one of the goals of Envoy is to help make the network understandable. Envoy collects a large set of metrics to help achieve this goal. It tracks many dimensions around the downstream systems that call it, the server itself, and the upstream clusters to which it sends requests. Envoy’s stats are tracked as counters, gauges, or histograms. Table 3.1 lists some examples of the types of statistics tracked for an upstream cluster.

Table 3.1 Some of the stats that the Envoy proxy collects

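| Statistic | Description |
| --- | --- |
| upstream_cx_overflow | Total number of times that the cluster’s connection circuit breaker overflowed |
| outlier_detection.ejections_detected_consecutive_5xx | Number of detected consecutive 5xx ejections (even if unenforced) |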

Envoy can emit stats using configurable adapters and formats. Out of the box, Envoy supports the following:

Observability with distributed tracing

Envoy can report trace spans to OpenTracing (http://opentracing.io) engines to visualize traffic flow, hops, and latency in a call graph. This means you don’t have to install special OpenTracing libraries. On the other hand, the application is responsible for propagating the necessary Zipkin headers, which can be done with thin wrapper libraries.

Envoy generates an x-request-id header to correlate calls across services and can also generate the initial x-b3* headers when tracing is triggered. The headers that the application is responsible for propagating are as follows:

- x-b3-traceid

- x-b3-spanid

- x-b3-parentspanid

- x-b3-sampled

- x-b3-flags
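Header propagation is usually a small amount of glue code in the application. The following sketch assumes headers are available as a plain dictionary and copies x-request-id plus anything starting with x-b3- from the incoming request onto outgoing calls; the function name is hypothetical.

```python
def tracing_headers(incoming):
    """Return the incoming headers that should be copied to outgoing calls."""
    propagated = {}
    for name, value in incoming.items():
        lowered = name.lower()   # header names are case-insensitive
        if lowered == "x-request-id" or lowered.startswith("x-b3-"):
            propagated[lowered] = value
    return propagated


if __name__ == "__main__":
    request_headers = {"X-Request-Id": "abc", "X-B3-TraceId": "1", "Accept": "*/*"}
    print(tracing_headers(request_headers))
```

In a real service this logic would typically live in a thin wrapper around the HTTP client so that every outgoing call carries the same trace context.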

Automatic TLS termination and origination

Envoy can terminate Transport Layer Security (TLS) traffic destined for a specific service both at the edge of a cluster and deep within a mesh of service proxies. A more interesting capability is that Envoy can be used to originate TLS traffic to an upstream cluster on behalf of an application. For enterprise developers and operators, this means we don’t have to muck with language-specific settings and keystores or truststores. By having Envoy in our request path, we can automatically get TLS and even mutual TLS.

An important aspect of resiliency is the ability to restrict or limit access to resources that are protected. Resources like databases or caches or shared services may be protected for various reasons:

Especially as services are configured for retries, we don’t want to magnify the effect of certain failures in the system. To help throttle requests in these scenarios, we can use a global rate-limiting service. Envoy can integrate with a rate-limiting service at both the network (per connection) and HTTP (per request) levels. We’ll show how to do that in chapter 14.

At its core, Envoy is a byte-processing engine on which protocol (layer 7) codecs (called filters) can be built. Envoy makes building additional filters a first-class use case and an exciting way to extend Envoy for specific use cases. Envoy filters are written in C++ and compiled into the Envoy binary. Additionally, Envoy supports Lua (www.lua.org) scripting and WebAssembly (Wasm) for a less invasive approach to extending Envoy functionality. Extending Envoy is covered in chapter 14.

3.1.2 Comparing Envoy to other proxies

Envoy’s sweet spot is playing the role of application or service proxy, where Envoy facilitates applications talking to each other through the proxy and solves the problems of reliability and observability. Other proxies have evolved from load balancers and web servers into more capable and performant proxies. Some of these communities don’t move all that fast or are closed source and have taken a while to evolve to the point that they can be used in application-to-application scenarios. In particular, Envoy shines with respect to other proxies in these areas:

For a more specific and detailed comparison, see the following:

- Envoy documentation and comparison: http://bit.ly/2U2g7zb

- Turbine Labs’ switch from Nginx to Envoy: http://bit.ly/2nn4tPr

- Cindy Sridharan’s initial take on Envoy: http://bit.ly/2OqbMkR

- Why Ambassador chose Envoy over HAProxy and Nginx: http://bit.ly/2OVbsvz

3.2 Configuring Envoy

Envoy is driven by a configuration file in either JSON or YAML format. The configuration file specifies listeners, routes, and clusters as well as server-specific settings like whether to enable the Admin API, where access logs should go, tracing engine configuration, and so on. If you are already familiar with Envoy or Envoy configuration, you may know that there are different versions of the Envoy config. The initial versions, v1 and v2, have been deprecated in favor of v3. We look only at v3 configuration in this book, as that’s the go-forward version and is what Istio uses.

Envoy’s v3 configuration API is built on gRPC. Envoy and implementers of the v3 API can take advantage of streaming capabilities when calling the API and reduce the time required for Envoy proxies to converge on the correct configuration. In practice, this eliminates the need to poll the API and allows the server to push updates to the Envoys instead of the proxies polling at periodic intervals.

3.2.1 Static configuration

We can specify listeners, route rules, and clusters using Envoy’s configuration file. The following is a very simple Envoy configuration:

    static_resources:
      listeners: ❶
      - name: httpbin-demo
        address:
          socket_address: { address: 0.0.0.0, port_value: 15001 }
        filter_chains:
        - filters:
          - name: envoy.http_connection_manager ❷
            config:
              stat_prefix: egress_http
              route_config: ❸
                name: httpbin_local_route
                virtual_hosts:
                - name: httpbin_local_service
                  domains: ["*"] ❹
                  routes:
                  - match: { prefix: "/" }
                    route:
                      auto_host_rewrite: true
                      cluster: httpbin_service ❺
              http_filters:
              - name: envoy.router
      clusters:
      - name: httpbin_service ❻
        connect_timeout: 5s
        type: LOGICAL_DNS
        # Comment out the following line to test on v6 networks
        dns_lookup_family: V4_ONLY
        lb_policy: ROUND_ROBIN
        hosts: [{ socket_address: { address: httpbin, port_value: 8000 }}]

This simple Envoy configuration file declares a listener that opens a socket on port 15001 and attaches a chain of filters to it. The filters configure the http_connection_manager in Envoy with routing directives. The simple routing directive in this example is to match on the wildcard * for all virtual hosts and route all traffic to the httpbin_service cluster. The last section of the configuration defines the connection properties to the httpbin_service cluster. This example specifies LOGICAL_DNS for endpoint service discovery and ROUND_ROBIN for load balancing when talking to the upstream httpbin service. See Envoy’s documentation (http://mng.bz/xvJY) for more.

This configuration file creates a listener to which incoming traffic can connect and routes all traffic to the httpbin cluster. It also specifies what load-balancing settings to use and what kind of connect timeout to use. If we call this proxy, we expect our request to be routed to an httpbin service.
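Because every route names the cluster it forwards to, a static configuration only makes sense when each referenced cluster is also declared. The Python sketch below checks that relationship on the configuration above, written as a dictionary and reduced to the fields the check reads. The helper name is hypothetical, and this is an illustration of how the pieces reference each other, not Envoy’s own validation logic.

```python
def undefined_clusters(config):
    """Return route targets that are not declared under static_resources.clusters."""
    static = config.get("static_resources", {})
    defined = {cluster["name"] for cluster in static.get("clusters", [])}
    missing = []
    for listener in static.get("listeners", []):
        for chain in listener.get("filter_chains", []):
            for flt in chain.get("filters", []):
                # Filter settings may sit under either key, depending on the config style.
                settings = flt.get("typed_config") or flt.get("config") or {}
                route_config = settings.get("route_config", {})
                for vhost in route_config.get("virtual_hosts", []):
                    for route in vhost.get("routes", []):
                        target = route.get("route", {}).get("cluster")
                        if target and target not in defined:
                            missing.append(target)
    return missing


# The static configuration above, reduced to the fields this check reads.
CONFIG = {
    "static_resources": {
        "listeners": [{
            "name": "httpbin-demo",
            "filter_chains": [{
                "filters": [{
                    "name": "envoy.http_connection_manager",
                    "config": {
                        "route_config": {
                            "virtual_hosts": [{
                                "name": "httpbin_local_service",
                                "domains": ["*"],
                                "routes": [{
                                    "match": {"prefix": "/"},
                                    "route": {"cluster": "httpbin_service"},
                                }],
                            }],
                        },
                    },
                }],
            }],
        }],
        "clusters": [{"name": "httpbin_service"}],
    },
}

if __name__ == "__main__":
    print(undefined_clusters(CONFIG))
```

An empty result means every route points at a declared cluster; renaming the cluster without updating the route would surface here as a missing target.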

Notice that much of the configuration is specified explicitly (what listeners there are, what the routing rules are, what clusters we can route to, and so on). This is an example of a fully static configuration file. In previous sections, we pointed out that Envoy can dynamically configure its various settings. For the hands-on section of Envoy, we’ll use the static configurations, but we’ll first cover the dynamic services and how Envoy uses its xDS APIs for dynamic configuration.

3.2.2 Dynamic configuration

Envoy can use a set of APIs to do inline configuration updates without any downtime or restarts. It just needs a simple bootstrap configuration file that points the configuration to the correct discovery service APIs; the rest is configured dynamically. Envoy uses the following APIs for dynamic configuration:

- Listener discovery service (LDS): An API that allows Envoy to query what listeners should be exposed on this proxy.

- Route discovery service (RDS): Part of the configuration for listeners that specifies which routes to use. This is a subset of LDS for when static and dynamic configuration should be used.

- Cluster discovery service (CDS): An API that allows Envoy to discover what clusters and respective configuration for each cluster this proxy should have.

- Endpoint discovery service (EDS): Part of the configuration for clusters that specifies which endpoints to use for a specific cluster. This is a subset of CDS.

- Secret discovery service (SDS): An API used to distribute certificates.

- Aggregate discovery service (ADS): A serialized stream of all the changes to the rest of the APIs. You can use this single API to get all of the changes in order.

Collectively, these APIs are referred to as the xDS services. A configuration can use one or some combination of them; you don’t have to use them all. Note that Envoy’s xDS APIs are built on a premise of eventual consistency and that correct configurations eventually converge. For instance, Envoy could get an update to RDS with a new route that routes traffic to a cluster foo that has not yet been updated in CDS. The route could introduce routing errors until the CDS is updated. Envoy introduced ADS to account for this ordering race condition. Istio implements ADS for proxy configuration changes.
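The ordering race can be reproduced with a toy model. The sketch below assumes updates are simple tuples and that a safe sequence applies clusters first, then endpoints, then listeners, then routes; the function names and tuple shapes are hypothetical, and a real ADS server is considerably more involved.

```python
# Assumed safe application order: clusters, then endpoints, listeners, routes.
APPLY_ORDER = {"CDS": 0, "EDS": 1, "LDS": 2, "RDS": 3}


def order_updates(updates):
    """Sort (api, payload) pairs into the assumed safe order (stable sort)."""
    return sorted(updates, key=lambda update: APPLY_ORDER[update[0]])


def dangling_routes(updates):
    """Apply updates in the given order; return routes that hit unknown clusters."""
    known_clusters, dangling = set(), []
    for api, payload in updates:
        if api == "CDS":
            known_clusters.add(payload)            # payload: cluster name
        elif api == "RDS":
            route_name, cluster = payload          # payload: (route, target cluster)
            if cluster not in known_clusters:
                dangling.append(route_name)
    return dangling


if __name__ == "__main__":
    # The route to cluster foo arrives before foo itself, as in the example above.
    arrival = [("RDS", ("to-foo", "foo")), ("CDS", "foo")]
    print(dangling_routes(arrival))
    print(dangling_routes(order_updates(arrival)))
```

Delivering all changes over one ordered stream lets the server control this sequencing instead of leaving it to the timing of independent APIs.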

For example, to dynamically discover the listeners for an Envoy proxy, we can use a configuration like the following:

    dynamic_resources:
      lds_config: ❶
        api_config_source:
          api_type: GRPC
          grpc_services:
          - envoy_grpc: ❷
              cluster_name: xds_cluster
    static_resources:
      clusters:
      - name: xds_cluster ❸
        connect_timeout: 0.25s
        type: STATIC
        lb_policy: ROUND_ROBIN
        http2_protocol_options: {}
        hosts: [{ socket_address: { address: 127.0.0.3, port_value: 5678 }}]

❶ Configuration for listeners (LDS)

❷ Go to this cluster for the listener API.

❸ gRPC cluster that implements LDS

With this configuration, we don’t need to explicitly configure each listener in the configuration file. We’re telling Envoy to use the LDS API to discover the correct listener configuration values at run time. We do, however, configure one cluster explicitly: the cluster where the LDS API lives (named xds_cluster in this example).

For a more concrete example, Istio uses a bootstrap configuration for its service proxies, similar to the following:

    bootstrap:
      dynamicResources:
        ldsConfig:
          ads: {} ❶
        cdsConfig:
          ads: {} ❷
        adsConfig:
          apiType: GRPC
          grpcServices:
          - envoyGrpc:
              clusterName: xds-grpc ❸
          refreshDelay: 1.000s
      staticResources:
        clusters:
        - name: xds-grpc ❹
          type: STRICT_DNS
          connectTimeout: 10.000s
          hosts:
          - socketAddress:
              address: istio-pilot.istio-system
              portValue: 15010
          circuitBreakers: ❺
            thresholds:
            - maxConnections: 100000
              maxPendingRequests: 100000
              maxRequests: 100000
            - priority: HIGH
              maxConnections: 100000
              maxPendingRequests: 100000
              maxRequests: 100000
          http2ProtocolOptions: {}

❶ Uses ADS for listeners

❷ Uses ADS for clusters

❸ Uses a cluster named xds-grpc

❹ Defines the xds-grpc cluster

❺ Reliability and circuit-breaking settings

Let’s tinker with a simple static Envoy configuration file to see Envoy in action.

3.3 Envoy in action

Envoy is written in C++ and compiled to a native binary for a specific platform. The best way to get started with Envoy is to run it in a Docker container. We’ve been using Docker Desktop for this book, but any Docker daemon will work for this section. For example, on a Linux machine, you can install Docker directly.

Start by pulling in three Docker images that we’ll use to explore Envoy’s functionality:

    $ docker pull envoyproxy/envoy:v1.19.0
    $ docker pull curlimages/curl
    $ docker pull citizenstig/httpbin

To begin, we’ll create a simple httpbin service. If you’re not familiar with httpbin, you can go to http://httpbin.org and explore the different endpoints available. It basically implements a service that can return headers that were used to call it, delay an HTTP request, or throw an error, depending on which endpoint we call. For example, navigate to http://httpbin.org/headers. Once we start the httpbin service, we’ll start up Envoy and configure it to proxy all traffic to the httpbin service. Then we’ll start up a client app and call the proxy. The simplified architecture of this example is shown in figure 3.4.

Figure 3.4 The example applications we’ll use to exercise some of Envoy’s functionality

Run the following command to set up the httpbin service running in Docker:

    $ docker run -d --name httpbin citizenstig/httpbin
    787b7ec9365ff01841f2525cdd4e74e154e9d345f633a4004027f7ff1926e317

Let’s test that our new httpbin service was correctly deployed by querying the /headers endpoint:

    $ docker run -it --rm --link httpbin curlimages/curl \
      curl -X GET http://httpbin:8000/headers
    {
      "headers": {
        "Accept": "*/*",
        "Host": "httpbin:8000",
        "User-Agent": "curl/7.80.0"
      }
    }

You should see similar output; the response returns with the headers we used to call the /headers endpoint.

Now let’s run our Envoy proxy, pass --help to the command, and explore some of its flags and command-line parameters:

    $ docker run -it --rm envoyproxy/envoy:v1.19.0 envoy --help

Some of the interesting flags are -c for passing in a configuration file, --service-zone for specifying the availability zone into which the proxy is deployed, and --service-node for giving the proxy a unique name. You may also be interested in the --log-level flag, which controls how verbose the logging is from the proxy.

Now let’s try running Envoy without any arguments:

    $ docker run -it --rm envoyproxy/envoy:v1.19.0 envoy
    [2021-11-21 21:28:37.347][1][info][main] [source/server/server.cc:855] exiting
    At least one of --config-path or --config-yaml or Options::configProto() should be non-empty

What happened? We tried to run the proxy, but we did not pass in a valid configuration file. Let’s fix that and pass in a simple configuration file based on the sample configuration we saw earlier. It has this structure:

    static_resources:
      listeners: ❶
      - name: httpbin-demo
        address:
          socket_address:
            address: 0.0.0.0
            port_value: 15001
        filter_chains:
        - filters:
          - name: envoy.filters.network.http_connection_manager
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
              stat_prefix: ingress_http
              http_filters:
              - name: envoy.filters.http.router
              route_config:
                name: httpbin_local_route
                virtual_hosts:
                - name: httpbin_local_service
                  domains: ["*"]
                  routes:
                  - match: { prefix: "/" }
                    route: ❷
                      auto_host_rewrite: true
                      cluster: httpbin_service
      clusters:
      - name: httpbin_service ❸
        connect_timeout: 5s
        type: LOGICAL_DNS
        dns_lookup_family: V4_ONLY
        lb_policy: ROUND_ROBIN
        load_assignment:
          cluster_name: httpbin
          endpoints:
          - lb_endpoints:
            - endpoint:
                address:
                  socket_address:
                    address: httpbin
                    port_value: 8000

Basically, we’re exposing a single listener on port 15001, and we route all traffic to our httpbin cluster. Let’s start up Envoy with this configuration file (ch3/simple.yaml) located at the root of the source code:

    $ docker run -d --name proxy --link httpbin envoyproxy/envoy:v1.19.0 \
      --config-yaml "$(cat ch3/simple.yaml)"
    5d32538c078a6e14ba0d4072d6ff10592a8a439714e7c9ac9c69e1ff71aa54f2
    $ docker logs proxy
    [2018-08-09 22:57:50.769][5][info][config] all dependencies initialized. starting workers
    [2018-08-09 22:57:50.769][5][info][main] starting main dispatch loop

The proxy starts successfully and is listening on port 15001. Let’s use a simple command-line client, curl, to call the proxy:

    $ docker run -it --rm --link proxy curlimages/curl \
      curl -X GET http://proxy:15001/headers
    {
      "headers": {
        "Accept": "*/*",
        "Content-Length": "0",
        "Host": "httpbin",
        "User-Agent": "curl/7.80.0",
        "X-Envoy-Expected-Rq-Timeout-Ms": "15000",
        "X-Request-Id": "45f74d49-7933-4077-b315-c15183d1da90"
      }
    }

The traffic was correctly sent to the httpbin service even though we called the proxy. We also have some new headers:

- X-Request-Id

- X-Envoy-Expected-Rq-Timeout-Ms

It may seem insignificant, but Envoy is already doing a lot for us. It generated a new X-Request-Id, which can be used to correlate requests across a cluster and potentially multiple hops across services to fulfill the request. The second header, X-Envoy-Expected-Rq-Timeout-Ms, is a hint to upstream services that the request is expected to time out after 15,000 ms. Upstream systems, and any other hops the request takes, can use this hint to implement a deadline. A deadline allows us to communicate timeout intentions to upstream systems and lets them cease processing if the deadline has passed. This frees up resources after a timeout has been executed.
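An upstream service could act on this hint roughly as follows. The sketch assumes request headers arrive as a dictionary and that the caller supplies timestamps in seconds (for example from `time.monotonic()`); both helper names are hypothetical.

```python
HEADER = "x-envoy-expected-rq-timeout-ms"


def deadline_from_headers(headers, received_at):
    """Return the absolute deadline in seconds implied by the hint, or None."""
    for name, value in headers.items():
        if name.lower() == HEADER:          # header names are case-insensitive
            return received_at + int(value) / 1000.0
    return None


def should_keep_working(deadline, now):
    """True while there is no deadline or the deadline has not passed yet."""
    return deadline is None or now < deadline


if __name__ == "__main__":
    hint = {"X-Envoy-Expected-Rq-Timeout-Ms": "15000"}
    deadline = deadline_from_headers(hint, received_at=100.0)
    print(should_keep_working(deadline, now=110.0))
```

A long-running handler can check `should_keep_working` between expensive steps and stop early once the caller has already given up.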

Now, let’s alter this configuration a little and try to set the expected request timeout to one second. In our configuration file, we update the route rule:

    - match: { prefix: "/" }
      route:
        auto_host_rewrite: true
        cluster: httpbin_service
        timeout: 1s

For this example, we’ve already updated the configuration file, and it’s available in the Docker image: simple_change_timeout.yaml. We can pass it as an argument to Envoy. Let’s stop our existing proxy and restart it with this new configuration file:

    $ docker rm -f proxy
    proxy
    $ docker run -d --name proxy --link httpbin envoyproxy/envoy:v1.19.0 \
      --config-yaml "$(cat ch3/simple_change_timeout.yaml)"
    26fb84558165ae9f9d9afb67e9dd7f553c4d412989904542795a82cc721f1ce5

Now, let’s call the proxy again:

    $ docker run -it --rm --link proxy curlimages/curl \
      curl -X GET http://proxy:15001/headers
    {
      "headers": {
        "Accept": "*/*",
        "Content-Length": "0",
        "Host": "httpbin",
        "User-Agent": "curl/7.80.0",
        "X-Envoy-Expected-Rq-Timeout-Ms": "1000",
        "X-Request-Id": "c7e9212a-81e0-4ac2-9788-2639b9898772"
      }
    }

The expected request timeout value has changed to 1000. Next, let’s do something a little more exciting than changing the deadline hint headers.

3.3.1 Envoy’s Admin API

To explore more of Envoy’s functionality, let’s first get familiar with Envoy’s Admin API. The Admin API gives us insight into how the proxy is behaving, access to its metrics, and access to its configuration. Let’s start by running curl against http://proxy:15000/stats:

    $ docker run -it --rm --link proxy curlimages/curl \
      curl -X GET http://proxy:15000/stats

The response is a long list of statistics and metrics for the listeners, clusters, and server. We can trim the output using grep and only show those statistics that include the word retry:

    $ docker run -it --rm --link proxy curlimages/curl \
      curl -X GET http://proxy:15000/stats | grep retry
    cluster.httpbin_service.retry_or_shadow_abandoned: 0
    cluster.httpbin_service.upstream_rq_retry: 0
    cluster.httpbin_service.upstream_rq_retry_overflow: 0
    cluster.httpbin_service.upstream_rq_retry_success: 0
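When the same filtering has to happen in a script rather than with grep, the plain-text output is easy to parse. The sketch below assumes the `name: value` line format shown above and keeps only lines whose value is a plain integer; the `parse_stats` helper is hypothetical.

```python
def parse_stats(text, contains=""):
    """Parse 'name: value' lines from /stats output into a dict of integers."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(": ")
        value = value.strip()
        # Skip malformed lines and values that are not plain integers.
        if sep and contains in name and value.isdigit():
            stats[name.strip()] = int(value)
    return stats


if __name__ == "__main__":
    sample = (
        "cluster.httpbin_service.upstream_rq_retry: 0\n"
        "cluster.httpbin_service.upstream_rq_retry_overflow: 0\n"
    )
    print(parse_stats(sample, contains="retry"))
```

The text would come from whatever HTTP client is at hand pointed at the /stats endpoint; fetching it is left out to keep the sketch self-contained.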

If you call the Admin API directly, without the /stats context path, you should see a list of other endpoints you can call. Some endpoints to explore include the following:

3.3.2 Envoy request retries

Let’s cause some failures in our request to httpbin and watch how Envoy can automatically retry a request for us. First we update the configuration file to use a retry_policy:

    - match: { prefix: "/" }
      route:
        auto_host_rewrite: true
        cluster: httpbin_service
        retry_policy:
          retry_on: 5xx ❶
          num_retries: 3 ❷

Just as in the previous example, you don’t have to update the configuration file: an updated version named simple_retry.yaml is available on the Docker image. Let’s pass in the configuration file this time when we start Envoy:

$ docker rm -f proxy
proxy
$ docker run -d --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple_retry.yaml)"
4f99c5e3f7b1eb0ab3e6a97c16d76827c15c2020c143205c1dc2afb7b22553b4

Now we call our proxy with the /status/500 context path. Calling httpbin (which the proxy does) with that context path forces an error:

$ docker run -it --rm --link proxy curlimages/curl curl -v http://proxy:15001/status/500

When the call completes, we shouldn’t see any response. What happened? Let’s ask Envoy’s Admin API:

$ docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats | grep retry
cluster.httpbin_service.retry.upstream_rq_500: 3
cluster.httpbin_service.retry.upstream_rq_5xx: 3
cluster.httpbin_service.retry_or_shadow_abandoned: 0
cluster.httpbin_service.upstream_rq_retry: 3
cluster.httpbin_service.upstream_rq_retry_overflow: 0
cluster.httpbin_service.upstream_rq_retry_success: 0

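Envoy encountered an HTTP 500 response when talking to the upstream cluster httpbin_service. This is as we expected. Envoy also automatically retried the request for us: the stat cluster.httpbin_service.upstream_rq_retry: 3 shows that all three retries allowed by num_retries were used, and upstream_rq_retry_success: 0 shows that none of them succeeded.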
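To make the counters easier to reason about, here is a simplified model of a "retry on 5xx, at most N retries" policy. It is only a sketch under stated assumptions, not Envoy's implementation: it ignores backoff, timeouts, and retry budgets, and the function names send_with_retries, always_500, and make_flaky are hypothetical. The counter names mirror the stats shown above.

```python
def send_with_retries(upstream, num_retries=3):
    """Call upstream once, then retry on 5xx up to num_retries more times.

    Returns the final status code and counters named after the stats
    discussed in the text. Simplified model only (no backoff or timeouts).
    """
    counters = {"upstream_rq_retry": 0, "upstream_rq_retry_success": 0}
    status = upstream()  # the original attempt
    while 500 <= status <= 599 and counters["upstream_rq_retry"] < num_retries:
        counters["upstream_rq_retry"] += 1
        status = upstream()  # a retry attempt
        if status < 500:
            counters["upstream_rq_retry_success"] += 1
    return status, counters


def always_500():
    """Stand-in for an upstream that always fails, like /status/500."""
    return 500


def make_flaky(failures):
    """Build an upstream that returns 500 a fixed number of times, then 200."""
    remaining = [failures]

    def upstream():
        if remaining[0] > 0:
            remaining[0] -= 1
            return 500
        return 200

    return upstream


status, counters = send_with_retries(always_500, num_retries=3)
print(status, counters)

flaky_status, flaky_counters = send_with_retries(make_flaky(2), num_retries=3)
print(flaky_status, flaky_counters)
```

With an upstream that always fails, the model makes four calls in total (one original attempt plus three retries) and still returns 500, which matches the counters we saw. With an upstream that recovers after two failures, the second retry succeeds and the caller sees a 200.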

We just demonstrated some very basic capabilities of Envoy that automatically give us reliability in our application networking. We used static configuration files to reason about and demonstrate these capabilities; but as we saw in the previous section, Istio uses dynamic configuration capabilities. Doing so allows Istio to manage a large fleet of Envoy proxies, each with its own potentially complex configuration. Refer to the Envoy documentation (www.envoyproxy.io) or the “Microservices Patterns with Envoy Sidecar Proxy” series of blog posts (http://bit.ly/2M6Yld3) for more detail about Envoy’s capabilities.

3.4 How Envoy fits with Istio

Envoy provides the bulk of the heavy lifting for most of the Istio features we covered in chapter 2 and throughout this book. As a proxy, Envoy is a great fit for the service-mesh use case; however, to get the most value out of Envoy, it needs supporting infrastructure or components. Istio provides these supporting components, which allow for user configuration, security policies, and runtime settings; together they form the control plane. Envoy also does not do all the work in the data plane and needs support there as well. To learn more about that, see appendix B.

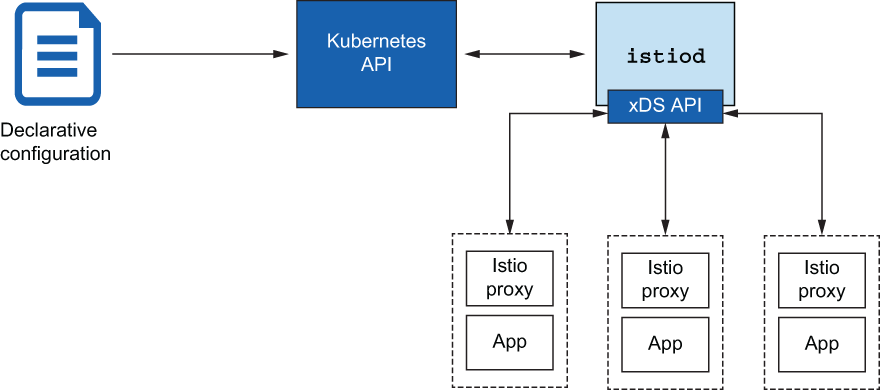

Let’s illustrate the need for supporting components with a few examples. We saw that due to Envoy’s capabilities, we can configure a fleet of service proxies using static configuration files or a set of xDS discovery services for discovering listeners, endpoints, and clusters at run time. Istio implements these xDS APIs in the istiod control-plane component.

Figure 3.5 illustrates how istiod uses the Kubernetes API to read Istio configurations, such as virtual services, and then dynamically configures the service proxies.

Figure 3.5 Istio abstracts away the service registry and provides an implementation of Envoy’s xDS API.

A related example is Envoy’s service discovery, which relies on a service registry of some sort to discover endpoints. istiod implements this API but also abstracts Envoy away from any particular service-registry implementation. When Istio is deployed on Kubernetes, Istio uses Kubernetes’ service registry for service discovery. The Envoy proxy is completely shielded from those implementation details.
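The idea of shielding the proxy from the registry can be sketched in a few lines. This is an illustration only, assuming two hypothetical registry classes (StaticRegistry and FakeKubernetesRegistry) and a hypothetical build_endpoint_update function. The dictionary it produces is a simplification for this example, not Envoy's actual xDS message format, and no real Kubernetes API is called.

```python
class StaticRegistry:
    """Hypothetical registry backed by a fixed, in-memory mapping."""

    def __init__(self, services):
        self._services = services

    def endpoints(self, service):
        return list(self._services.get(service, []))


class FakeKubernetesRegistry:
    """Hypothetical stand-in for a Kubernetes-backed registry (no API calls)."""

    def __init__(self, pods_by_service):
        self._pods = pods_by_service

    def endpoints(self, service):
        # Only pods marked ready are advertised as endpoints.
        pods = self._pods.get(service, [])
        return [(p["ip"], p["port"]) for p in pods if p["ready"]]


def build_endpoint_update(registry, service):
    """Translate registry data into one registry-agnostic structure."""
    return {
        "cluster_name": service,
        "endpoints": [
            {"address": ip, "port": port}
            for ip, port in registry.endpoints(service)
        ],
    }


static = StaticRegistry({"httpbin": [("10.0.0.5", 8000)]})
kube = FakeKubernetesRegistry({
    "httpbin": [
        {"ip": "10.0.0.5", "port": 8000, "ready": True},
        {"ip": "10.0.0.6", "port": 8000, "ready": False},
    ]
})

static_update = build_endpoint_update(static, "httpbin")
kube_update = build_endpoint_update(kube, "httpbin")
print(static_update == kube_update)
```

Both registries yield the same update, so the consumer of that update never needs to know where the endpoints came from. That is the role the text describes for istiod: the registry can change while the proxy-facing API stays the same.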

Here’s another example: Envoy can emit a lot of metrics and telemetry. This telemetry needs to go somewhere, and Envoy must be configured to send it. Istio configures the data plane to integrate with time-series systems like Prometheus. We also saw how Envoy can send distributed-tracing spans to an OpenTracing engine—and Istio can configure Envoy to send its spans to that location (see figure 3.6). For example, Istio integrates with the Jaeger tracing engine (www.jaegertracing.io); Zipkin can be used as well (https://zipkin.io).

Figure 3.6 Istio helps configure and integrate with metrics-collection and distributed-tracing infrastructure.

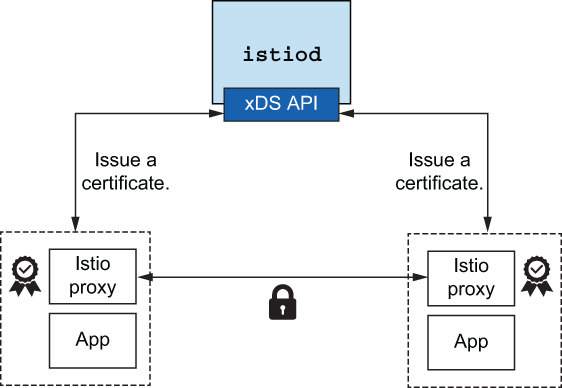

Finally, Envoy can terminate and originate TLS traffic to services in our mesh. To do this, we need supporting infrastructure to create, sign, and rotate certificates. Istio provides this with the istiod component (see figure 3.7).

Figure 3.7 istiod delivers application-specific certificates that can be used to establish mutual TLS to secure traffic between services.

Together, Istio’s components and the Envoy proxies make for a compelling service-mesh implementation. Both have thriving, vibrant communities and are geared toward next-generation services architectures. The rest of the book assumes Envoy as a data plane, so all of your learning from this chapter is transferable to the remaining chapters. From here on, we refer to Envoy as the Istio service proxy, and its capabilities are seen through Istio’s APIs—but understand that many actually come from and are implemented by Envoy.

In the next chapter, we look at how we can begin to get traffic into our service-mesh cluster by going through an edge gateway/proxy that controls traffic. When client applications outside of our cluster wish to communicate with services running inside our cluster, we need to be very clear and explicit about what traffic is and is not allowed in. We’ll look at Istio’s gateway and how it provides the functionality we need to establish a controlled ingress point. All the knowledge from this chapter will apply: Istio’s default gateway is built on the Envoy proxy.

Summary

- Envoy is a proxy that applications can use for application-level behavior.

- Envoy can help solve cloud reliability challenges (network failures, topology changes, elasticity) consistently and correctly.

- Envoy uses a dynamic API for runtime control (which Istio uses).

- Envoy exposes many powerful metrics and information about application usage and proxy internals.