Chapter 6. Feedback and Research

It’s now time to put our MVP to the test. All of our work up to this point has been based on assumptions; now we must begin the validation process. We use lightweight, continuous, and collaborative research techniques to do this.

Research with users is at the heart of most approaches to UX. Too often, teams outsource research work to specialized research teams. And too often, research activities take place only on rare occasions—either at the beginning of a project or at the end. Lean UX solves the problems these tactics create by making research both continuous and collaborative. Let’s dig in to see how to do that.

In this chapter, we cover:

Collaborative research techniques that allow you to build shared understanding with your team

Continuous research techniques that allow you to build small, informal qualitative research studies into every iteration

Which artifacts to test and what results you can expect from each of these tests

How to incorporate the voice of the customer throughout the Lean UX cycle

How to use A/B testing (described later in this chapter) in your research

How to reconcile contradictory feedback from multiple sources

Continuous and Collaborative

Lean UX takes basic UX research techniques and overlays two important ideas. First, Lean UX research is continuous; this means that you build research activities into every sprint. Instead of a costly and disruptive big-bang process, we make research bite-sized so that we can fit it into our ongoing process. Second, Lean UX research is collaborative: you don’t rely on the work of specialized researchers to deliver learning to your team. Instead, research activities and responsibilities are distributed and shared across the entire team. By eliminating the handoff between researchers and team members, we increase the quality of our learning. Our goal in all of this is to create a rich shared understanding across the team. See Figure 6-1.

Collaborative Discovery

Collaborative design (covered in Chapter 4) is one of two main ways to bridge functions within a Lean UX team. Collaborative discovery, working as a team to test ideas in the market, is the other. Collaborative discovery is an approach to research that gets the entire team out of the building (literally and figuratively) to meet with and learn from customers. It gives everyone on the team a chance to see how the hypotheses are holding up in testing and, most importantly, multiplies the number of inputs the team can use to gather customer insight.

It’s essential that you and your team conduct research together; that’s why I call it collaborative discovery. Outsourcing this task dramatically reduces its value: it wastes time, it squanders team-building, and it filters the information through deliverables, handoffs, and interpretation. Don’t do it.

Researchers sometimes object to this approach to research. As trained professionals, they are right to point out that they have special knowledge that is important to the research process. I agree. That’s why you should include a researcher on your team if you can. Just don’t outsource the work to that person. Instead, use the researcher as a coach to help your team plan and execute your activities.

Collaborative discovery in the field

Collaborative discovery is a way to get out into the field with your team. Here’s how you do it:

As a team, review your questions, assumptions, hypotheses, and MVPs. Decide as a team what you need to learn.

Working as a team, decide whom you’ll need to speak to in order to address your learning goals.

Create an interview guide (see the following sidebar) that you can all use to guide your conversations.

Break your team into interview pairs, mixing up the various roles and disciplines within each pair (i.e., try not to have designers paired with designers).

Arm each pair with a version of the MVP.

Send each pair out to meet with customers/users.

Have one team member conduct interviews while the other takes notes.

Start with questions, conversations, and observations.

Demonstrate the MVP later in the session and allow the customer to interact with it.

Collect notes as the customer provides feedback.

When the lead interviewer is done, switch roles to give the note-taker a chance to ask follow-up questions.

At the end of the interview, ask the customer for referrals to other people who might also provide useful feedback.

Collaborative discovery: an example

A team I worked with at PayPal set out with an Axure prototype to conduct a collaborative discovery session. The team was made up of two designers, a UX researcher, four developers, and a product manager; they split into teams of two and three. Each developer was paired with a nondeveloper. Before setting out, the team brainstormed what they’d like to learn from their prototype and used the outcome of that session to write brief interview guides. Their product was targeted at a broad consumer market, so they decided to head out to the shopping malls near their office. Each pair targeted a different mall. They spent two hours in the field stopping strangers, asking them questions, and demonstrating their prototypes. To build up their skills, they switched roles (from lead interviewer to note-taker) an hour into their research.

When they reconvened, each pair read their notes to the rest of the team. Almost immediately, they began to see patterns emerge, validating some of their assumptions and invalidating others. Using this new information, they adjusted the design of their prototype and headed out again later that afternoon. After a full day of field research, it was clear which ideas had legs and which needed pruning. When they began the next sprint the following day, every member of the team was working from the same baseline of clarity, having established a shared understanding by means of collaborative discovery the day before.

Continuous Discovery

A critical best practice in Lean UX is building a regular cadence of customer involvement. Regularly scheduled conversations with customers minimize the time between hypothesis creation, experiment design, and user feedback, allowing you to validate your hypotheses quickly. Knowing that you’re never more than a few days away from customer feedback has a powerful effect on teams: it takes the pressure off your decision making, because any decision can be checked against meaningful data from the market within days.

Continuous discovery in the lab: three users every Thursday

Although you can create a standing schedule of fieldwork based on the techniques described in this chapter, it’s much easier to bring customers into the building—you just need to be a little creative in order to get the whole team involved.

I like to use a weekly rhythm to bring customers into the building to participate in research. I call this “3-12-1,” because it’s based on the following guidelines: three users, by 12 noon, once a week (Figure 6-2).

Here’s how the team’s activities break down:

- Monday: Recruiting and planning

Decide, as a team, what will be tested this week. Decide who you need to recruit for tests and start the recruiting process. Outsource this job if at all possible; it’s very time-consuming.

- Tuesday: Refining the components of the test

Based on the stage your MVP is in, start refining the design, the prototype, or the product to a point that will allow you to tell at least one complete story when your customers see it.

- Wednesday: Continue refining, writing the script, and finalizing recruiting

Put the final touches on your MVP. Write the test script that your moderator will follow with each participant. (Your moderator should be someone on the team, if at all possible.) Finalize the recruiting and schedule for Thursday’s tests.

- Thursday: Testing!

Spend the morning testing your MVP with customers. Spend no more than an hour with each customer. Everyone on the team should take notes. The team should plan to watch from a separate location. Review the findings with the entire project team immediately after the last participant is done.

- Friday: Planning

Use your new insight to decide whether your hypotheses were validated and what you need to do next.

Simplify Your Test Environment

Many firms have established usability labs in-house; it used to be that you needed one. These days, you don’t need a lab: all you need is a quiet place in your office and a computer with a network connection and a webcam. One specialized tool I recommend is desktop recording and broadcasting software such as Morae, Silverback, or GoToMeeting.

The broadcasting software is a key element. It allows you to bring the test sessions to team members and stakeholders who can’t be present. This method has an enormous impact on collaboration because it spreads understanding of your customers deep into your organization. It’s hard to overstate how powerful this approach is.

Who Should Watch?

The short answer is: your whole team. Like almost every other aspect of Lean UX, usability testing should be a group activity. With the entire team watching the tests, absorbing the feedback, and reacting in real time, you’ll need fewer subsequent debriefings. The team will learn firsthand where their efforts are succeeding and failing. Nothing is more humbling (and motivating) than seeing a user struggle with the software you just built.

A Word about Recruiting Participants

Recruiting, scheduling, and confirming participants is time-intensive. Avoid this overhead by offloading the work to a third-party recruiter. The recruiter does the work and gets paid for each participant he or she brings in. In addition, the recruiter takes care of the screening, scheduling, and replacement of no-shows on testing day. These recruiters can cost anywhere from $75 to $150 per participant recruited.

Case Study: Three Users Every Thursday at Meetup

One company that has taken the concept of “three users every Thursday” to a new level is Meetup. Meetup, based in New York, began under the guidance of Andres Glusman, VP of Product, Strategy, and Community, with the goal of testing each and every one of their new features and products.

After pricing some outsourced options, they decided to keep things in-house and take an iterative approach in their search for what they called their “minimally viable process.” Initially, they tried to test with the user, moderator, and team all in the same room. This approach produced some decent results, and they learned a lot from it, including that it could be scaled. But they found that test participants got a bit freaked out with so many people in the room.

Over time, they evolved to having the testing in one room with only the moderator joining the user. The rest of the team would watch the video feed from a separate conference room (Meetup originally used Morae to share the video; today they are using GoToMeeting).

Meetup doesn’t write testing scripts because they’re not sure what will be tested each day. Instead, product managers interact with test moderators, using instant messaging to help guide the conversations with users. The team debriefs immediately after the tests are complete and is able to move forward quickly.

Meetup recruited directly from the Meetup community from day one. For participants outside of their community, the team used a third-party recruiter. Ultimately, they decided to bring this responsibility in-house, assigning the work to the dedicated researcher they’d hired to handle all testing.

The team scaled up from three users once a week to testing every day except Monday. Their core objective was to minimize the time between concept and customer feedback.

Meetup’s practical, minimally viable approach to process also shows in how they test on mobile. As their mobile usage numbers grew, Meetup didn’t want to delay testing on mobile platforms while waiting for fancy mobile testing equipment. Instead, they built their own, for $28 (see Figure 6-3).

As of 2012, Meetup had successfully scaled their minimum viable usability testing process into an impressive program. They were running approximately 600 test sessions per year at a total cost of about $30,000 (not including staffing costs), and that cost included 100 percent video and notes coverage for every session. That is roughly what a single major outsourced usability study costs, which makes the achievement truly remarkable.
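Dividing Meetup’s stated annual program cost by the number of sessions gives a rough per-session figure (staffing excluded, as the text notes):

```latex
\frac{30{,}000\ \text{USD}}{600\ \text{sessions}} = 50\ \text{USD per session}
```

For comparison, that is below the $75 to $150 per participant quoted earlier in this chapter for third-party recruiting alone.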

Making Sense of the Research—A Team Activity

Whether your team does fieldwork or lab work, research generates a lot of raw data. Making sense of this data can be time-consuming and frustrating, so the process is often handed over to specialists who are asked to synthesize research findings. You shouldn’t do things this way. Instead, work as hard as you can to make sense of the data as a team.

As soon as possible after the research sessions are over—preferably the same day, or at least the following day—gather the team together for a review session. When the team has reassembled, ask everyone to read their findings to each other. An efficient way to do this is to transcribe the notes people read out loud onto index cards or sticky notes, then sort the notes into themes. This process of reading, grouping, and discussing gets everyone’s input out on the table and builds the shared understanding that you seek. With themes identified, you and your team can then determine next steps for your MVP.

Confusion, Contradiction, and (Lack of) Clarity

As you and your team collect feedback from various sources and try to synthesize your findings, you will inevitably come across situations in which your data presents you with contradictions. How do you make sense of it all? Here are a few ways to maintain your momentum and ensure that you’re maximizing your learning:

- Look for patterns

As you review the research, keep an eye out for patterns in the data. A pattern, the same opinion or behavior showing up across multiple participants, points to something worth exploring further. If something doesn’t fall into a pattern, it is likely an outlier.

- Park your outliers

As tempting as it is to ignore outliers (or to try to serve them in your solution), don’t do it. Instead, create a parking lot or backlog for them. As your research progresses over time (remember, you’re doing this every week), you may find other instances that match an outlier and turn it into a pattern. Be patient.

- Verify with other sources

If you’re not convinced that the feedback you’re seeing through one channel is valid, look for it in other channels. Are the customer support emails reflecting the same concerns as your usability studies? Is the value of your prototype echoed by customers both inside and outside your office? If not, your sample may have been skewed.
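The pattern-versus-outlier rule above can be made concrete. What follows is a minimal sketch in Python, assuming each sticky note from the team’s review session has been tagged with a participant ID and a theme label. The threshold of two distinct participants and the function names `sort_themes` and `revisit_parking_lot` are illustrative choices, not part of Lean UX itself:

```python
from collections import defaultdict

# Hypothetical note format: (participant_id, theme) pairs from the team's
# sorting session. Theme labels are whatever names the team agreed on.
Note = tuple[str, str]
Themes = dict[str, set[str]]


def sort_themes(notes: list[Note], min_participants: int = 2) -> tuple[Themes, Themes]:
    """Split themes into patterns and parked outliers.

    A theme is treated as a pattern when at least `min_participants`
    distinct participants raised it; everything else goes to the parking lot.
    """
    participants_by_theme: Themes = defaultdict(set)
    for participant, theme in notes:
        # Using a set means repeated notes from one person count only once.
        participants_by_theme[theme].add(participant)

    patterns = {t: ps for t, ps in participants_by_theme.items() if len(ps) >= min_participants}
    parked = {t: ps for t, ps in participants_by_theme.items() if len(ps) < min_participants}
    return patterns, parked


def revisit_parking_lot(parked: Themes, new_notes: list[Note],
                        min_participants: int = 2) -> tuple[Themes, Themes]:
    """Re-sort parked themes together with a later week's notes.

    A parked outlier becomes a pattern once enough new participants echo it.
    """
    carried = [(participant, theme) for theme, ps in parked.items() for participant in ps]
    return sort_themes(carried + new_notes, min_participants)
```

Participant IDs need to stay unique across weeks so the same person is not counted twice. The threshold is a judgment call; the point is that an outlier is held rather than discarded until later sessions either confirm it or let it fade.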

Identifying Patterns over Time

Most UX research programs are structured to get a conclusive answer. Typically, you will plan to do enough research to definitively answer a question or set of questions. Lean UX research puts a priority on being continuous, which means that you’re structuring your research activities very differently. Instead of running big studies, you’ll see a small number of users every week. Therefore, some questions may remain open over a couple of weeks. Another result is that interesting patterns can reveal themselves over time.

For example, our regular test sessions at TheLadders revealed an interesting change in our customers’ attitudes over time. In 2008, when we first started meeting with job seekers on a regular basis, we would discuss various ways to communicate with employers. One of the options we proposed was SMS. Because our audience was typically made up of high-income earners in their late forties and early fifties, their early responses showed a strong disdain for SMS as a legitimate communication method. To them, it was something their kids did (and that perhaps they did with their kids), but it was certainly not a “proper” way to conduct a job search.

By 2011, though, SMS messages had taken off in the United States. As text messaging gained acceptance in business culture, this attitude began to soften. Week after week, as we sat with job seekers, we began to see these opinions change. We saw job seekers become far more likely to use SMS in a mid-career job search than they would have been just a few years before.

We would never have recognized this as an audience-wide trend had we not been speaking with a sample of that audience week in and week out. As part of our regular interaction with customers, we always asked a standard set of level-setting questions to capture the “vital signs” of the job seeker’s search, no matter what other questions, features, or products we were testing. Taken individually, as anecdotes, these findings would not have swayed our beliefs about our target audience. Aggregated over time, they became very powerful and shaped our future product discussions and considerations.
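The value came from asking the same questions every week and looking at the answers in aggregate. Here is a minimal sketch in Python of that aggregation. It assumes that each participant’s answer to one hypothetical yes/no level-setting question (say, “Would you use SMS in your job search?”) is recorded with the session date. The function name and record format are illustrative, not the team’s actual tooling:

```python
from collections import defaultdict
from datetime import date


def share_by_year(responses: list[tuple[date, bool]]) -> dict[int, float]:
    """Share of 'yes' answers to one standing level-setting question, per calendar year.

    `responses` holds one (session_date, answered_yes) pair per participant.
    """
    yes_counts: dict[int, int] = defaultdict(int)
    totals: dict[int, int] = defaultdict(int)
    for session_date, answered_yes in responses:
        totals[session_date.year] += 1
        if answered_yes:
            yes_counts[session_date.year] += 1
    # Sorted by year so the trend reads in chronological order.
    return {year: yes_counts[year] / totals[year] for year in sorted(totals)}
```

Grouping by quarter or month instead of year only requires changing the grouping key. A finer grouping shows a shift sooner, at the cost of fewer answers in each bucket.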

Test Everything

In order to maintain a regular cadence of user testing, your team must adopt a “test everything” policy. Whatever is ready on testing day is what goes in front of the users. This policy liberates your team from rushing toward testing day deadlines. Instead, you’ll find yourself taking advantage of your weekly test sessions to get insight at every stage of design and development. You must, however, set expectations properly for the type of feedback you’ll be able to generate with each type of artifact.

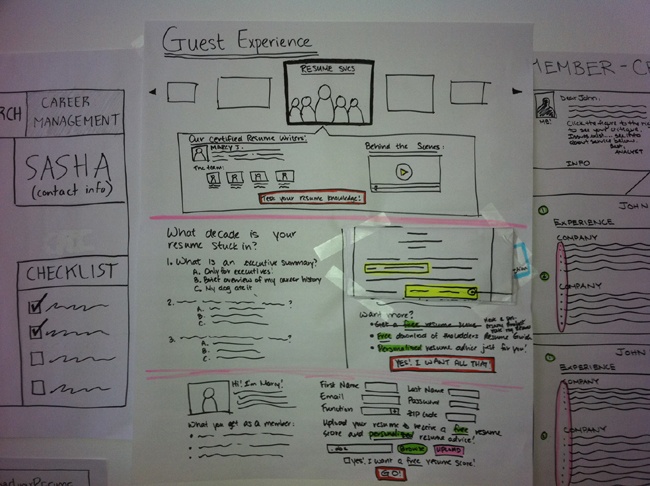

Sketches

Feedback collected on sketches (Figure 6-4) helps you validate the value of your concept. What you won’t get at this level is tactical, step-by-step feedback on the process, insight about design elements, or even meaningful feedback on copy choices. You won’t be able to learn much (if anything) about the usability of your concept.

Static Wireframes

Showing test participants wireframes (Figure 6-5) lets you assess the information hierarchy and layout of your experience. In addition, you’ll get feedback on taxonomy, navigation, and information architecture.

You’ll receive the first trickles of workflow feedback, but at this point your test participants are focused primarily on the words on the page and the selections they’re making. Wireframes provide a good opportunity to start testing copy choices.

High-Fidelity Visual Mockups (Not Clickable)

Moving into high-fidelity visual-design assets, you receive much more tactical feedback. Test participants will be able to respond to branding, aesthetics, and visual hierarchy, as well as aspects of figure/ground relationships, grouping of elements, and the clarity of your calls-to-action. Your test participants will also (almost certainly) weigh in on the effectiveness of your color palette.

Nonclickable mockups still don’t let your customers react naturally to the design or subsequent steps in the experience. Instead of asking your customers how they feel about the outcome of each click, you have to ask them what they would expect and then validate those responses against your planned experience.

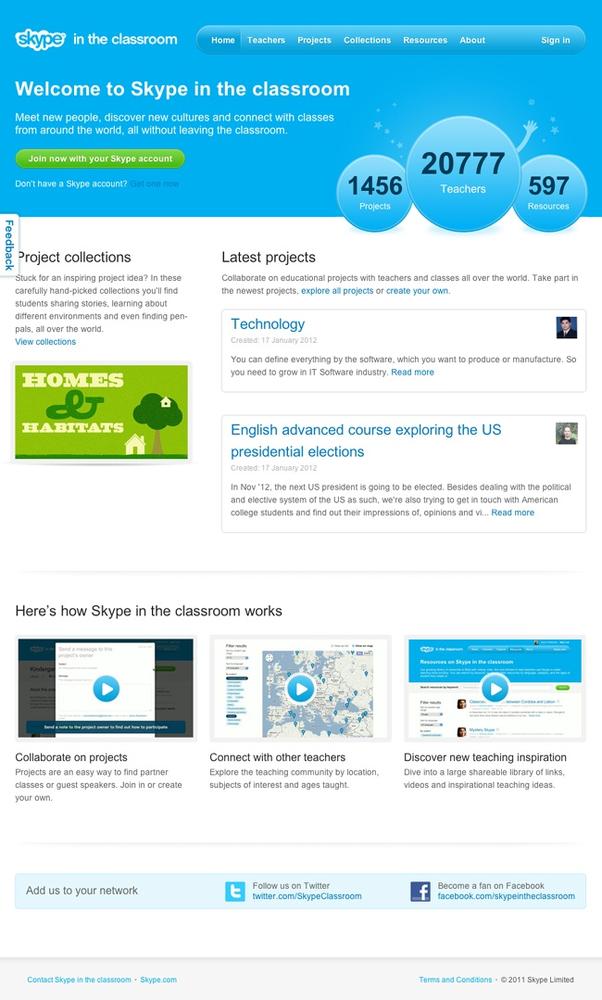

Mockups (Clickable)

A set of clickable mockups—essentially a prototype created in Axure, Fireworks, ProtoShare or any other viable prototyping tool (see Figure 6-6)—avoids the pitfalls of showing screens that don’t link together. Users see the actual results of their clicks. This type of mockup will not allow you to assess interactions with data (so you can’t test search performance, for example), but you can still learn a lot about the structure of your product.

Coded Prototypes

Live code is the best experience you can put in front of your test participants. It replicates the design, behavior, and workflow of your product. The feedback is real, and you can apply it directly to the experience. You may simulate a live-data connection or actually connect to live data. If you design your test experience well, users won’t be able to tell the difference, and their reactions will give you direct insight into the way real data affects the experience (Figure 6-7).

Monitoring Techniques for Continuous, Collaborative Discovery

Earlier in this chapter, I looked at ways to use qualitative research on a regular basis to evaluate your hypotheses. But once you launch your product or feature, your customers will start giving you constant feedback, and not only on your product. They will tell you about themselves, about the market, about the competition. This insight is invaluable, and it comes into your organization from every corner. Seek out these treasure troves of customer intelligence within your organization and harness them to drive your ongoing product design and research.

Customer Service

Customer support agents talk to more customers on a daily basis than you will over the course of an entire project. There are multiple ways to harness their knowledge:

Reach out to them and ask them what they’re hearing from customers about the sections of the product on which you’re working.

Hold regular monthly meetings with them to understand the trends. What do customers love this month? What do they hate?

Tap their deep product knowledge to learn how they would solve the challenges your team is working on. Include them in design sessions and design reviews.

Incorporate your hypotheses into their call scripts; one of the cheapest ways to test your ideas is to suggest them as fixes to customers calling in with a relevant complaint.

In the mid-2000s, I ran the UX team at a medium-sized tech company in Portland, Oregon. One of the ways we prioritized the work was by regularly checking the pulse of the customer base. We did this with a standing monthly meeting with our customer service reps. Each month, they would provide us with the top 10 things customers were complaining about. We used this information to focus our efforts and to subsequently measure the efficacy of our work. If we attempted to solve one of these pain points, this monthly conversation gave us a clear indication of whether our efforts were bearing fruit; if the issue was not receding in the top 10 list, our solution had not worked.

An additional benefit of this effort was that the customer service team realized someone was listening to their insights and began proactively giving us customer feedback outside of our monthly meeting. The dialog that ensued provided us with a continuous feedback loop to use with many of our product hypotheses.

Onsite Feedback Surveys

Set up a feedback mechanism in your product that allows customers to send you their thoughts regularly. A few options include:

Simple email forms

Customer support forums

Third-party community sites

You can repurpose these tools for research by doing things such as:

These inbound customer feedback channels provide feedback from the point of view of your most active and engaged customers. Here are a few tactics for getting other points of view:

- Search logs

Search terms are clear indicators of what customers are seeking on your site. Search patterns indicate what they’re finding and what they’re not finding. Repeated queries with slight variations show that a user is struggling to find certain information.

One way to use search logs for MVP validation is to launch a test page for the feature you’re planning. Following the search logs will tell you whether the test content (or feature) on that page is meeting users’ needs. If users continue to search on variations of that content, your experiment has failed.

- Site usage analytics

Site usage logs and analytics packages, especially funnel analyses, show how customers are using the site, where they’re dropping off, and how they try to manipulate the product to do the things they need or expect it to do. Understanding these reports provides real-world context for the decisions the team needs to make.

In addition, use analytics tools to determine the success of experiments that have launched publicly. How has the experiment shifted usage of the product? Are your efforts achieving the outcome you defined? These tools answer those questions with data on what customers actually did, rather than what they say they did.

- A/B testing

A/B testing is a technique, originally developed by marketers, to gauge which of two (or more) relatively similar concepts achieves a goal more effectively. When applied in the Lean UX framework, A/B testing becomes a powerful tool to determine the validity of your hypotheses. Applying A/B testing is relatively straightforward once your hypotheses evolve into working code.

Here’s how it works: take the proposed experience (your hypothesis) and publish it to your audience. However, instead of letting every customer see it, release it only to a small subset of users. Then, measure your success criteria for that audience and compare the results with those of the other group (your control cohort). Did your new idea move the needle in the right direction, by more than random variation between the two groups would explain? If it did, you’ve got a winning hypothesis. If not, you’ve got an audience of customers to pull from and engage directly to understand why their behavior went unchanged.

There are several companies that offer A/B testing suites, but they all basically work the same way. The name suggests that you can only test two things, but in fact you can test as many permutations of your experience as you’d like (this is called A/B/n testing). The trick is to make sure that the changes you’re making are small enough that any change in behavior can be attributed to them directly. If you change too many things, you can’t attribute a change in behavior to any one hypothesis.

Companies that offer A/B testing tools include Unbounce for landing page testing, Google Content Experiments (formerly Google Website Optimizer), Adobe Test&Target (formerly Omniture Test&Target), and Webtrends Optimize.
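The A/B comparison described above (“Did your new idea move the needle?”) can be sketched in Python. This is a minimal sketch, not how any particular A/B testing suite works internally. It assumes a binary success metric such as conversion, assigns users to cohorts by hashing a user ID, and uses a standard two-proportion z-test to check whether the difference between cohorts is larger than chance would explain. The function names and the 10 percent treatment share are hypothetical:

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.1) -> str:
    """Deterministically assign a user to 'treatment' or 'control'.

    Hashing experiment + user ID means a returning user always sees the same
    variant, and only about `treatment_share` of users see the new idea.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    return "treatment" if bucket < treatment_share else "control"


def two_proportion_z_test(conv_control: int, n_control: int,
                          conv_treatment: int, n_treatment: int) -> tuple[float, float]:
    """Compare two conversion rates; return (z, two-sided p-value).

    Uses the pooled-proportion normal approximation, which is reasonable when
    both cohorts have a decent number of conversions and non-conversions
    (a common rule of thumb is at least 10 of each).
    """
    p_control = conv_control / n_control
    p_treatment = conv_treatment / n_treatment
    pooled = (conv_control + conv_treatment) / (n_control + n_treatment)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot compare cohorts")
    z = (p_treatment - p_control) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

A small p-value (0.05 is a common cutoff) suggests the difference is unlikely to be chance alone. Because only a small share of users sees the treatment, reaching that point can take a while. Deciding on the sample size before looking at results helps you avoid calling a winner too early.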
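The search-log check described earlier in this section (if people keep searching on variations of the content, the experiment has failed) can also be approximated in code. Here is a minimal sketch in Python. It assumes your search log can be exported as a hypothetical mapping from session ID to the ordered list of queries each visitor ran after reaching the test page. The similarity measure (the standard library’s `difflib.SequenceMatcher`) and the 0.6 threshold are illustrative assumptions that you would need to tune against real logs:

```python
from difflib import SequenceMatcher


def reformulation_count(queries: list[str], threshold: float = 0.6) -> int:
    """Count consecutive queries in one session that look like reformulations.

    Two queries count as a reformulation when they are not identical but
    their SequenceMatcher similarity ratio is at least `threshold`.
    """
    normalized = [q.strip().lower() for q in queries]
    count = 0
    for previous, current in zip(normalized, normalized[1:]):
        if previous != current and SequenceMatcher(None, previous, current).ratio() >= threshold:
            count += 1
    return count


def sessions_still_searching(sessions: dict[str, list[str]], threshold: float = 0.6) -> list[str]:
    """Return IDs of sessions that kept reformulating after reaching the test page."""
    return [sid for sid, queries in sessions.items()
            if reformulation_count(queries, threshold) > 0]
```

The share of flagged sessions is only a rough signal. Reading the flagged queries themselves will tell you what people were actually looking for.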

Conclusion

In this chapter I covered many ways to start validating the MVPs and experiments you’ve built around your hypotheses. I looked at continuous discovery and collaborative discovery techniques. I discussed how to build a lean usability-testing process every week and covered what you should test and what to expect from those tests. I also looked at ways to monitor your customer experience in a Lean UX context and touched on the power of A/B testing.

These techniques, used in conjunction with the processes outlined in Chapter 3 through Chapter 5, make up the full Lean UX process loop. Your goal is to cycle through this loop as often as possible, refining your thinking with each iteration.

In the next section, I move away from process and take a look at how Lean UX integrates within your organization. Whether you’re a startup, a large company, or a digital agency, I’ll cover all of the organizational shifts you’ll need to make to support the Lean UX approach. These ideas will work in most existing processes, but in Chapter 7, I’ll specifically cover how to make Lean UX and Agile software development work well together.