A depth camera is a fantastic little device that captures images and estimates the distance of objects from the camera itself. But how does a depth camera retrieve depth information? And is it possible to reproduce the same kind of calculation with a normal camera?

A depth camera, such as the Microsoft Kinect, combines a traditional camera with an infrared sensor that helps it differentiate similar objects and calculate their distance from the camera. However, not everybody has access to a depth camera or a Kinect. Especially when you're just learning OpenCV, you're probably not going to invest in an expensive piece of equipment until your skills are well sharpened and your interest in the subject is confirmed.

Our setup includes only a simple camera, most likely one integrated into our machine or a webcam attached to our computer. So, we need to resort to less fancy means of estimating how far objects are from the camera.

Geometry comes to the rescue here, in particular epipolar geometry, which is the geometry of stereo vision. Stereo vision is a branch of computer vision that extracts three-dimensional information from two different images of the same subject.

How does epipolar geometry work? Conceptually, it traces imaginary lines from the camera to each object in the first image, does the same for the second image, and calculates the distance of each object from the intersection of the lines that correspond to it. Here is a representation of this concept:

Let's see how OpenCV applies epipolar geometry to calculate a so-called disparity map, which is a representation of the different depths detected in the images: for each pixel, it records how far the matching point has shifted between the two views. This will enable us to extract the foreground of a picture and discard the rest.
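To make the link between disparity and depth a little more concrete, here is a minimal sketch of the textbook relation for an idealized, rectified stereo pair: depth equals focal length times baseline divided by disparity. It is not part of the original example, and `focal_px` and `baseline_m` are hypothetical calibration values chosen only for illustration.

```python
import numpy as np


def depth_from_disparity(disparity, focal_px, baseline_m):
    """Convert disparities (in pixels) to depths (in the baseline's unit).

    Assumes an idealized, rectified pinhole stereo pair.
    Non-positive disparities carry no depth information and map to infinity.
    """
    disparity = np.asarray(disparity, dtype=np.float32)
    depth = np.full(disparity.shape, np.inf, dtype=np.float32)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


# Hypothetical calibration: 800 px focal length, cameras 10 cm apart.
example = depth_from_disparity([64.0, 32.0, 0.0], focal_px=800.0, baseline_m=0.1)
```

Note that a larger disparity means a smaller depth, which is why nearer objects end up brighter in a disparity map.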

First, we need two images of the same subject taken from different points of view. Take care that both pictures are taken at an equal distance from the object; otherwise, the calculations will fail and the disparity map will be meaningless.
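To get an intuition for why the two views need to be comparable, here is a toy sketch of block matching on a single image row. For a small patch in the left row, it slides along the right row and keeps the shift with the smallest sum of absolute differences. This is a deliberately naive illustration with a hypothetical function name; it is not what OpenCV does internally, and it only works when the same patch looks alike in both views.

```python
import numpy as np


def match_row(left_row, right_row, x, half_block, max_disp):
    """Return the shift d in [0, max_disp] that best aligns the left patch
    centered at x with the right patch centered at x - d (lowest SAD cost)."""
    left_patch = left_row[x - half_block:x + half_block + 1]
    best_d, best_cost = 0, np.inf
    for d in range(max_disp + 1):
        xr = x - d
        if xr - half_block < 0:
            break  # the right patch would fall off the row
        right_patch = right_row[xr - half_block:xr + half_block + 1]
        cost = np.abs(left_patch - right_patch).sum()
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


# Synthetic pair: the left row is the right row shifted by 5 pixels.
rng = np.random.default_rng(0)
right_row = rng.random(64).astype(np.float32)
true_disp = 5
left_row = np.roll(right_row, true_disp)

estimated = match_row(left_row, right_row, x=30, half_block=3, max_disp=16)
```

On this synthetic pair, the patch matches exactly at a shift of 5, so that is the disparity the search settles on.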

So, moving on to an example:

    import numpy as np
    import cv2


    def update(val=0):
        # Trackbar callback: apply the current slider values to the matcher,
        # then recompute and redraw the disparity map.
        stereo.setBlockSize(cv2.getTrackbarPos('window_size', 'disparity'))
        stereo.setUniquenessRatio(cv2.getTrackbarPos('uniquenessRatio', 'disparity'))
        stereo.setSpeckleWindowSize(cv2.getTrackbarPos('speckleWindowSize', 'disparity'))
        stereo.setSpeckleRange(cv2.getTrackbarPos('speckleRange', 'disparity'))
        stereo.setDisp12MaxDiff(cv2.getTrackbarPos('disp12MaxDiff', 'disparity'))

        print('computing disparity...')
        # compute() returns fixed-point disparities scaled by 16
        disp = stereo.compute(imgL, imgR).astype(np.float32) / 16.0

        cv2.imshow('left', imgL)
        # normalize to roughly [0, 1] so that imshow can display the float image
        cv2.imshow('disparity', (disp - min_disp) / num_disp)


    if __name__ == "__main__":
        window_size = 5
        # disparity range is tuned for 'aloe' image pair
        min_disp = 16
        num_disp = 192 - min_disp
        uniquenessRatio = 1
        speckleRange = 3
        speckleWindowSize = 3
        disp12MaxDiff = 200
        P1 = 600
        P2 = 2400

        imgL = cv2.imread('images/color1_small.jpg')
        imgR = cv2.imread('images/color2_small.jpg')
        if imgL is None or imgR is None:
            raise SystemExit('could not load the input images')

        # create the matcher before the trackbars, so that it already
        # exists whenever the update callback runs
        stereo = cv2.StereoSGBM_create(
            minDisparity=min_disp,
            numDisparities=num_disp,
            blockSize=window_size,
            uniquenessRatio=uniquenessRatio,
            speckleRange=speckleRange,
            speckleWindowSize=speckleWindowSize,
            disp12MaxDiff=disp12MaxDiff,
            P1=P1,
            P2=P2
        )

        cv2.namedWindow('disparity')
        cv2.createTrackbar('speckleRange', 'disparity', speckleRange, 50, update)
        cv2.createTrackbar('window_size', 'disparity', window_size, 21, update)
        cv2.createTrackbar('speckleWindowSize', 'disparity', speckleWindowSize, 200, update)
        cv2.createTrackbar('uniquenessRatio', 'disparity', uniquenessRatio, 50, update)
        cv2.createTrackbar('disp12MaxDiff', 'disparity', disp12MaxDiff, 250, update)

        update()
        cv2.waitKey()
        cv2.destroyAllWindows()

In this example, we take two images of the same subject and calculate a disparity map in which the points closer to the camera are shown in brighter colors. The areas rendered in black are those with the lowest disparity values, which include pixels for which no reliable match was found.
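The display step can be isolated into a small helper to see what the normalization does. The sketch below is an illustration with a hypothetical function name: it converts the fixed-point output to float, rescales it with the same `min_disp` and `num_disp` as the script, and clips the result to the displayable range. The first sample value assumes, as I understand the OpenCV documentation, that unmatched pixels are reported one step below the minimum disparity.

```python
import numpy as np


def disparity_to_display(raw_disp, min_disp, num_disp):
    """Map a fixed-point disparity map (scaled by 16) to the range [0, 1]."""
    disp = raw_disp.astype(np.float32) / 16.0  # undo the fixed-point scaling
    normalized = (disp - min_disp) / num_disp
    return np.clip(normalized, 0.0, 1.0)


# Four sample pixels: just below the minimum (assumed "no match" marker),
# the minimum, the middle, and the top of the disparity range.
raw = np.array([[15 * 16, 16 * 16, 104 * 16, 192 * 16]], dtype=np.int16)
display = disparity_to_display(raw, min_disp=16, num_disp=176)
```

Pixels at or below the minimum disparity come out as 0 (black), and pixels at the top of the range come out as 1 (white).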

First of all, we import numpy and cv2 as usual.

Let's skip the definition of the update function for a second and take a look at the main code. The process is quite simple: load two images, create a StereoSGBM instance (StereoSGBM stands for semiglobal block matching, an algorithm used for computing disparity maps), create a few trackbars to play around with the parameters of the algorithm, and call the update function.

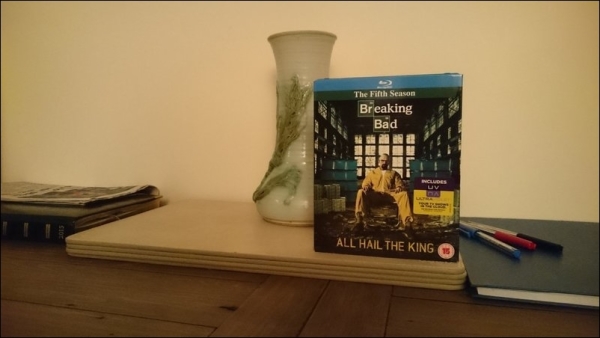

The update function applies the trackbar values to the StereoSGBM instance, and then calls the compute method, which produces a disparity map. All in all, pretty simple! Here is the first image I've used:
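One detail worth keeping in mind: the OpenCV documentation describes the block size as an odd number of at least 1, while a trackbar can return any integer in its range, including 0 and even values. If you want to be strict about it, a small helper along these lines could be applied to the `window_size` trackbar value before passing it to the matcher. The function name is hypothetical and the script above does not use it.

```python
def odd_block_size(value, minimum=1):
    """Round a trackbar value up to the nearest odd integer >= minimum."""
    value = max(int(value), minimum)
    return value if value % 2 == 1 else value + 1


# A few slider positions and the block sizes they would map to.
examples = {pos: odd_block_size(pos) for pos in (0, 4, 5, 21)}
```

Rounding up rather than down is an arbitrary choice here; either keeps the value odd.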

There you go: a nice and quite easy to interpret disparity map.

The parameters used by StereoSGBM are as follows (taken from the OpenCV documentation):
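As a quick reference, the sketch below paraphrases the OpenCV documentation for the parameters used in this script; the comments are my own summary rather than the official wording. It also shows the rule of thumb that the documentation mentions for the two smoothness penalties, wrapped in a hypothetical helper named `sgbm_penalties`.

```python
def sgbm_penalties(channels, block_size):
    """Rule-of-thumb smoothness penalties mentioned in the OpenCV docs."""
    p1 = 8 * channels * block_size ** 2   # penalty for a disparity change of 1
    p2 = 32 * channels * block_size ** 2  # penalty for larger changes; must exceed P1
    return p1, p2


block_size = 5
p1, p2 = sgbm_penalties(channels=3, block_size=block_size)

params = dict(
    minDisparity=16,        # smallest disparity that is searched
    numDisparities=176,     # size of the search range; must be divisible by 16
    blockSize=block_size,   # side of the matched block; an odd number >= 1
    uniquenessRatio=1,      # margin (in percent) by which the best match must win
    speckleWindowSize=3,    # max size of small regions treated as noise; 0 disables
    speckleRange=3,         # max disparity variation within a connected region
    disp12MaxDiff=200,      # max allowed difference in the left-right check
    P1=p1,
    P2=p2,
)
```

For a three-channel image and a block size of 5, this rule of thumb gives exactly the 600 and 2400 used in the script.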

With the script shown earlier, you'll be able to load the images and play around with the parameters until you're happy with the disparity map generated by StereoSGBM.
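Once we have a disparity map we're happy with, the foreground extraction mentioned earlier can be approximated by keeping only the pixels whose disparity exceeds some threshold. The following is a minimal sketch under that assumption; the function names and the threshold value are hypothetical, and a real image pair would need the threshold tuned by hand.

```python
import numpy as np


def foreground_mask(disparity, threshold):
    """Boolean mask of pixels whose disparity exceeds the threshold (nearer points)."""
    return np.asarray(disparity) > threshold


def keep_foreground(image, mask):
    """Return a copy of an H x W x C image with pixels outside the mask set to black."""
    result = np.zeros_like(image)
    result[mask] = image[mask]
    return result


# Tiny synthetic example: two near pixels (high disparity) and two far ones.
disp = np.array([[20.0, 120.0], [150.0, 15.0]], dtype=np.float32)
image = np.full((2, 2, 3), 255, dtype=np.uint8)

mask = foreground_mask(disp, threshold=100.0)
cut = keep_foreground(image, mask)
```

Only the two high-disparity pixels keep their original color; the rest are blacked out.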