CHAPTER FIVE

Vector Subspaces

5.1 COLUMN, ROW, AND NULL SPACES

The essence of mathematics is not to make simple things complicated, but to make complicated things simple.

—S. GUDDEN

Everything should be made as simple as possible, but not simpler.

—ALBERT EINSTEIN (1879–1955)

KISS Principle: Keep It Simple, Stupid!

Introduction

In Example 5 of Section 2.4, we encountered a vector space that was contained in a larger vector space and shared with the larger space its definitions of vector addition and scalar multiplication. This is a common phenomenon, deserving our special study.

DEFINITION

A subset of a vector space is a subspace if it is nonempty and closed under the operations of adding vectors and multiplying vectors by scalars.

A subspace is nonempty by the definition of the term “subspace.” If an element x is in the subspace, then 0 is in the subspace because 0 = 0 · x and the subspace is closed under scalar multiplication. Thus, a subspace must contain the zero element of the larger space. We can state these requirements for a subspace U as follows:

1. If x and y are in U, then x + y is in U. (U is closed under vector addition.)

2. If x is in U and c is a scalar, then cx is in U. (U is closed under scalar multiplication.)

3. U is nonempty.

One sees immediately that under these circumstances, U is a vector space, too: all the axioms listed in Section 2.4 will be true for U. Most of these axioms are true automatically because U is a subset of V, and V obeys the axioms. The closure axioms are different in nature, however: they are not inherited from V and must be checked for U directly. Furthermore, we want to be sure that U is nonempty. The element that must be there is, of course, 0.

We notice that two extreme cases of subspaces occur in any vector space V: namely, the subspace 0 and V itself. Any other subspace, U, must lie between these extremes: 0 ⊆ U ⊆ V. The subspace of V that contains only the zero vector is denoted by 0 or {0}. We summarize all this in a formal statement:

THEOREM 1

Let U be a subset of a vector space V. Suppose that U contains the zero element and is closed under vector addition and multiplication by scalars. Then U is a subspace of V.

THEOREM 2

The span of a nonempty set in a vector space is a subspace of that vector space.

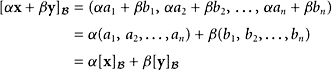

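PROOF Let the vector space be V and let S be a nonempty subset of V. Then Span(S) contains S, so it is nonempty. If x and y are two elements of Span(S), then each is a linear combination of elements of S. Listing all the elements of S that appear in either combination (with zero coefficients where needed), we may write $x = \sum_{i=1}^k a_i s_i$ and $y = \sum_{i=1}^k b_i s_i$ with $s_1, \ldots, s_k \in S$. For any scalars α and β, we then have $\alpha x + \beta y = \sum_{i=1}^k (\alpha a_i + \beta b_i) s_i$, which is again a linear combination of elements of S. This verifies the two closure axioms for Span(S).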

There are many examples of linear subspaces, such as subspaces of polynomials, subspaces of trigonometric polynomials, subspaces of the space $\mathbb{R}^{m\times n}$ of m × n matrices, subspaces of upper or lower triangular matrices, and subspaces that arise as the image or the kernel of linear operators such as differentiation and integration.

EXAMPLE 1

In the vector space $\mathbb{P}$ consisting of all polynomials (of any degree), consider the four monomials p0, p1, p2, and p3, where $p_i(t) = t^i$. What is the span of the set {p0, p1, p2, p3}?

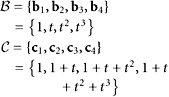

SOLUTION This is the vector space $\mathbb{P}_3$ of all polynomials of degree at most 3, together with the zero polynomial. It is a subspace of $\mathbb{P}$. (The zero polynomial has no degree.)

EXAMPLE 2

Illustrate Theorem 2.

SOLUTION The set of polynomials of the form $t \mapsto at + bt^2 + ct^5 + dt^7$ is a subspace of $\mathbb{P}$, because it is the span of the set {p1, p2, p5, p7}. ($\mathbb{P}$ and the monomials $p_i$ are defined in Example 1.)

EXAMPLE 3

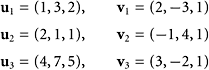

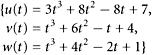

Let s and t be variables that range independently over $\mathbb{R}$. Consider the vectors of the form

|

Is the set of all vectors of that form a subspace of ![]() ?


SOLUTION We have no theorem that directly addresses such a problem. However, we may be able to see that the set in question is the linear span of a set of vectors. If so, Theorem 2 will apply. Observe that

|

|

Hence, the set of vectors considered at the beginning is identical to the span of a pair of vectors:

|

This pair is obviously linearly independent (neither vector is a scalar multiple of the other) and, therefore, spans a two-dimensional subspace of ![]() . (The concept of dimension is taken up in Section 5.2.)

From two subspaces X and Y in a vector space, we can construct two more subspaces, X + Y and X ∩ Y. (These arise in General Exercises 13–14.) The vector sum, X + Y, and the intersection, X ∩ Y, are defined as follows:

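$$X + Y = \{x + y : x \in X \text{ and } y \in Y\}, \qquad X \cap Y = \{z : z \in X \text{ and } z \in Y\}$$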

The union of two subspaces is usually not a subspace! (See General Exercises 15–16.)

THEOREM 3

The vector sum of two subspaces in a vector space is also a subspace.

PROOF Let X and Y be two subspaces of a vector space V. Then X and Y contain the zero element of V. Therefore, X + Y contains the zero element of V, since 0 = 0 + 0. Is X + Y closed under addition? Let v and v′ be two points in X + Y. Then v = x + y and v′ = x′ + y′ for suitable points x, x′ ∈ X and y, y′ ∈ Y. Thus, we obtain $v + v' = (x + x') + (y + y') \in X + Y$, because X and Y are each closed under addition. A similar equation, $cv = cx + cy \in X + Y$ for any scalar c, shows that X + Y is closed under scalar multiplication.

EXAMPLE 4

In $\mathbb{R}^3$, let U be the subspace consisting of all scalar multiples of the vector u = (1, 3, −4). Let V be the subspace consisting of all scalar multiples of the vector v = (5, −1, 2). What is U + V?

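SOLUTION The vector sum U + V consists of all vectors expressible as αu + βv. In other words, this is the span of the pair {u, v}. Because neither u nor v is a scalar multiple of the other, U + V is a plane through the origin in $\mathbb{R}^3$.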
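As a quick check of Example 4, here is a minimal Python sketch using only the standard library. It relies on a standard fact about $\mathbb{R}^3$: the cross product n = u × v is nonzero exactly when u and v are linearly independent, and every combination αu + βv is then orthogonal to n. The helper names `cross` and `dot` are ours, introduced for illustration.

```python
def cross(a, b):
    """Cross product of two vectors in R^3."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Dot product of two vectors of the same length."""
    return sum(p * q for p, q in zip(a, b))


u = (1, 3, -4)
v = (5, -1, 2)

n = cross(u, v)
print(n)  # (2, -22, -16): nonzero, so u and v are linearly independent

# Every vector alpha*u + beta*v lies in the plane n . x = 0.
for alpha, beta in [(1, 0), (0, 1), (2, -3), (-7, 4)]:
    x = tuple(alpha * ui + beta * vi for ui, vi in zip(u, v))
    assert dot(n, x) == 0
```

Dividing n by −2 gives (−1, 11, 8), so U + V can also be described as the plane x1 − 11x2 − 8x3 = 0.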

THEOREM 4

The intersection of two subspaces in a vector space is also a subspace of that vector space.

The proof is left as General Exercise 14.

EXAMPLE 5

Let U be the set of all vectors u = (u1, u2, u3) in $\mathbb{R}^3$ such that u1 + 3u2 − 4u3 = 0. Let V be the set of all vectors v = (v1, v2, v3) such that 2v1 + 3v3 = 0. What is U ∩ V?

SOLUTION U ∩ V is the set of all x such that x1 + 3x2 − 4x3 = 0 and 2x1 + 3x3 = 0. Geometrically, we are considering two planes in $\mathbb{R}^3$ and their intersection. The normal vectors (1, 3, −4) and (2, 0, 3) are not parallel, so the planes are distinct and their intersection is a line through the origin; namely, the set of all scalar multiples of (9, −11, −6).
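The line U ∩ V is orthogonal to both normal vectors, so its direction can be computed as their cross product. This minimal sketch takes V's defining equation as stated in the example, 2v1 + 3v3 = 0; the helper name `cross` is our own.

```python
def cross(a, b):
    """Cross product of two vectors in R^3."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


normal_U = (1, 3, -4)   # U: x1 + 3x2 - 4x3 = 0
normal_V = (2, 0, 3)    # V: 2x1 + 3x3 = 0, as stated in Example 5

direction = cross(normal_U, normal_V)
print(direction)  # (9, -11, -6)

# The direction vector satisfies both defining equations.
assert sum(a * d for a, d in zip(normal_U, direction)) == 0
assert sum(a * d for a, d in zip(normal_V, direction)) == 0
```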

Images and inverse images of subspaces under linear transformations are also subspaces.

DEFINITION

If f is a mapping of a set X to a set Y, then for any subset U of X, f[U] is defined to be the set of images of points in U. In symbols, we have

$$f[U] = \{f(x) : x \in U\}$$

THEOREM 5

If f is a linear transformation from a vector space X to a vector space Y, then for any subspace U in X, f[U] is a subspace of Y.

PROOF Since U is a subspace, it contains 0. Since f is linear, f(0) = 0. Thus, 0 is in f[U]. Now let f(x) and f(y) be any two elements of f[U], with x and y in U, and let α and β be scalars. Since U is a subspace, αx + βy is in U, and hence f(αx + βy) ∈ f[U]. Since f is linear, αf(x) + βf(y) = f(αx + βy) ∈ f[U]. Thus, f[U] is closed under addition and scalar multiplication.

EXAMPLE 6

This example illustrates Theorem 5 with specific vectors and subspaces. Define a linear transformation f from $\mathbb{R}^4$ to $\mathbb{R}^3$ by the equation

$$f(x_1, x_2, x_3, x_4) = (3x_1,\; 2x_2 - x_1,\; x_4)$$

Let W be the span of a set of two vectors in $\mathbb{R}^4$:

|

Thus, f maps $\mathbb{R}^4$ into $\mathbb{R}^3$. Find a simple description of f[W].

SOLUTION Using the formula for f we obtain

|

It follows that

|

DEFINITION

If f is a mapping from one set X to another set Y, then for any subset S of Y, f−1[S] is defined to be the set of all points in X that are mapped by f into S. This set is the inverse image of S in X. In symbols, we have

$$f^{-1}[S] = \{x \in X : f(x) \in S\}$$

See Figure 5.1 for an illustration of f[U] and f−1[S]. The notation f−1[S] involves a set S. Its use here does not imply that f is an invertible mapping.

THEOREM 6

If f is a linear transformation from a vector space X to a vector space Y, then for any subspace V of Y, f−1[V] is a subspace of X.

PROOF Since V is a subspace of Y, the zero vector is in V. Since f is a linear transformation and X is a vector space, 0 ∈ X and f(0) = 0 ∈ V. Hence, we have 0 ∈ f−1[V]. If x and y are in f−1[V], then f(x) and f(y) belong to V. For any scalars α and β, the linearity of f gives

$$f(\alpha x + \beta y) = \alpha f(x) + \beta f(y) \in V,$$

because V is closed under vector addition and scalar multiplication.

This proves that αx + βy ∈ f−1[V] and that f−1[V] is closed under vector addition and scalar multiplication. Hence, f−1[V] is a subspace of X.

FIGURE 5.1 Sketch of (a) f[U] and (b) f−1[V].

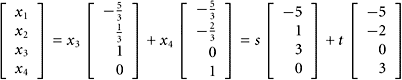

EXAMPLE 7

Continuing the previous example, let V = Span{z, w}, where z = (1, 1, 0) and w = (0, 1, 1). Describe the set f−1[V] in simple terms.

SOLUTION Letting (3x1, 2x2 − x1, x4) = (1, 1, 0), we obtain x1 = 1/3, x2 = 2/3, and x4 = 0, while x3 is arbitrary because f does not depend on it. Hence, we have f(1/3, 2/3, 0, 0) = z. Similarly, letting (3x1, 2x2 − x1, x4) = (0, 1, 1), we have x1 = 0, x2 = 1/2, and x4 = 1 and f(0, 1/2, 0, 1) = w. Consequently, we obtain

|

for any s ∈ $\mathbb{R}$.
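The membership test behind Example 7 can be sketched in Python with exact fractions. The map is f(x1, x2, x3, x4) = (3x1, 2x2 − x1, x4), read off from the formula used in the solution. The observation that a(1, 1, 0) + b(0, 1, 1) = (a, a + b, b), so that V is exactly the set of y with y2 = y1 + y3, is our own short calculation, as are the helper names `f`, `in_V`, and `in_preimage`. Substituting f into that condition gives the single equation 4x1 − 2x2 + x4 = 0, so f−1[V] is a three-dimensional subspace of $\mathbb{R}^4$.

```python
from fractions import Fraction


def f(x):
    """The map of Examples 6 and 7: f(x1, x2, x3, x4) = (3x1, 2x2 - x1, x4)."""
    x1, x2, x3, x4 = x
    return (3 * x1, 2 * x2 - x1, x4)


def in_V(y):
    """Membership test for V = Span{(1, 1, 0), (0, 1, 1)}.

    a(1, 1, 0) + b(0, 1, 1) = (a, a + b, b), so y lies in V
    exactly when y2 = y1 + y3.
    """
    return y[1] == y[0] + y[2]


def in_preimage(x):
    """x is in f^{-1}[V] exactly when f(x) is in V."""
    return in_V(f(x))


# Preimages of z and w found in the solution (x3 may be anything).
p = (Fraction(1, 3), Fraction(2, 3), 0, 0)
q = (0, Fraction(1, 2), 0, 1)
print(f(p) == (1, 1, 0), f(q) == (0, 1, 1))  # True True

# Substituting f into y2 = y1 + y3 gives the single equation 4x1 - 2x2 + x4 = 0.
for x in [(1, 0, 5, 0), (1, 2, 7, 0), (0, 1, 0, 2), (3, 1, -4, 2)]:
    x1, x2, x3, x4 = x
    assert in_preimage(x) == (4 * x1 - 2 * x2 + x4 == 0)
```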

Linear Transformations

Recall the notion of null space (or kernel) of a linear transformation. It consists of all vectors that are mapped into 0 by the transformation.

THEOREM 7

The kernel of a linear transformation is a subspace in the domain of that transformation.

PROOF Let T be a linear transformation from one vector space V to another vector space W. The kernel or null space of T is the set

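$$\operatorname{Ker}(T) = \{x \in V : T(x) = 0\}$$

It is obviously a subset of V. It is a subspace of V because, first, 0 ∈ Ker(T), since T(0) = 0. Second, if x and y belong to the kernel of T, then αx + βy also belongs to the kernel because

$$T(\alpha x + \beta y) = \alpha T(x) + \beta T(y) = \alpha 0 + \beta 0 = 0$$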

EXAMPLE 8

Let (c1, c2, …, cn) be any fixed vector in $\mathbb{R}^n$. Define a linear transformation T: $\mathbb{R}^n \to \mathbb{R}$ by the following equation, in which x = (x1, x2, …, xn):

$$T(x) = c_1x_1 + c_2x_2 + \cdots + c_nx_n$$

Describe the kernel of T.

SOLUTION It is the set of all vectors x that satisfy the equation

$$c_1x_1 + c_2x_2 + \cdots + c_nx_n = 0$$

If n = 3, we can visualize this set as a plane through the origin in $\mathbb{R}^3$. This is true if the vector c is not 0. If c is zero, the subspace here is all of $\mathbb{R}^3$. If n = 2, the set in question is a line through 0 if c ≠ 0. If c = 0, the set is all of $\mathbb{R}^2$.
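When c ≠ 0, an explicit basis for this kernel can be written down: pick an index k with ck ≠ 0; then for each j ≠ k, the vector ej − (cj/ck)ek satisfies T(x) = 0, and these n − 1 vectors are linearly independent. The following Python sketch (our own helper `kernel_basis`, assuming integer or rational entries for c) builds that basis with exact fractions. With c = (3, −2), the map of Example 9 below, it returns a single vector that is a multiple of (2, 3).

```python
from fractions import Fraction


def kernel_basis(c):
    """Basis for Ker(T), where T(x) = c1*x1 + c2*x2 + ... + cn*xn.

    If c = 0, the kernel is all of R^n and the standard basis is returned.
    Otherwise pick the first index k with c[k] != 0.  For every j != k the
    vector e_j - (c[j] / c[k]) e_k satisfies T(x) = 0, and these n - 1
    vectors are linearly independent (each has a 1 where the others have 0).
    """
    n = len(c)
    nonzero = [k for k in range(n) if c[k] != 0]
    if not nonzero:
        return [[Fraction(int(i == j)) for i in range(n)] for j in range(n)]
    k = nonzero[0]
    basis = []
    for j in range(n):
        if j == k:
            continue
        x = [Fraction(0)] * n
        x[j] = Fraction(1)
        x[k] = -Fraction(c[j], c[k])
        basis.append(x)
    return basis


# n = 3: a plane through the origin, spanned by two vectors.
print(kernel_basis((1, 2, 3)))
# Example 9, T(x) = 3x1 - 2x2: a single vector, a multiple of (2, 3).
print(kernel_basis((3, -2)))
```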

EXAMPLE 9

Draw a sketch of the kernel of this linear map:

For x = (x1, x2), define T(x) = 3x1 − 2x2.

SOLUTION The kernel of T consists of all vectors in $\mathbb{R}^2$ for which 3x1 − 2x2 = 0. Thus, we have $x_2 = \tfrac{3}{2}x_1$. The graph of this equation is the straight line through the origin with slope 3/2. The two points (0, 0) and (2, 3) determine this line. See Figure 5.2.

EXAMPLE 10

We can obtain a subspace by this description:

Take all the vectors x = (x1, x2, x3, x4) in $\mathbb{R}^4$ that are linear combinations of (3, 7, 4, 2) and (2, 0, 1, 3), and in addition obey the equation

|

Here we are taking the intersection of two subspaces to get a third subspace.

SOLUTION We have

|

FIGURE 5.2 Kernel of T(x) = 3x1 − 2x2.

We also set

|

Thus, we obtain ![]() . In the first preceding equation, replace b by this multiple of a, and arrive at

|

The solution is that the points described are all the scalar multiples of the vector (11, 35, 18, 4).
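A quick arithmetic check that (11, 35, 18, 4) lies in the span of the two given vectors: writing x = a(3, 7, 4, 2) + b(2, 0, 1, 3), the choice a = 5, b = −2 reproduces it, so along the solution line b = −(2/5)a when a is the coefficient of (3, 7, 4, 2). The extra equation that x must satisfy is not reproduced in this copy of the text, so this sketch (with our own helper `combo`) does not test it.

```python
u = (3, 7, 4, 2)
v = (2, 0, 1, 3)


def combo(a, b):
    """Return a*u + b*v componentwise."""
    return tuple(a * ui + b * vi for ui, vi in zip(u, v))


print(combo(5, -2))    # (11, 35, 18, 4)
print(combo(10, -4))   # (22, 70, 36, 8), twice the vector above
```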

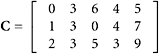

EXAMPLE 11

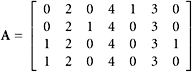

In $\mathbb{R}^4$, let U be the span of the single vector u = (3, 2, 4, 0). Let V be the kernel of the matrix

|

Find a simple description of the subspace U + V in $\mathbb{R}^4$.

SOLUTION To understand the kernel of A, we use the standard row-reduction process to get

|

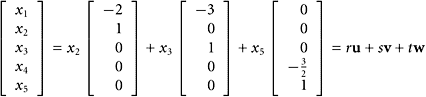

The general solution of the associated homogeneous system is given by

|

As usual, we write this as

|

|

where s and t are free parameters. Thus, the subspace in question is the span of a set of three vectors; namely,

|

THEOREM 8

The range of a linear transformation is a subspace of its co-domain.

PROOF If T is a linear transformation, say from U to V, then its range is

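$$\operatorname{Range}(T) = \{T(u) : u \in U\}$$

The vector 0 of V is in Range(T) because 0 = T(0). Thus, Range(T) is nonempty. If x and y are in Range(T), then x = T(u) and y = T(w), for suitable u and w in U. It follows that

$$\alpha x + \beta y = \alpha T(u) + \beta T(w) = T(\alpha u + \beta w) \in \operatorname{Range}(T)$$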

This shows that Range(T) is closed under vector addition and scalar multiplication. Notice that we used the linearity of T and the hypotheses that U and V are vector spaces.

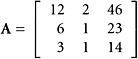

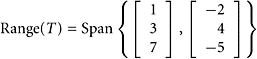

EXAMPLE 12

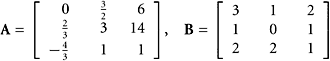

Let  , and define T by the equation T(x) = Ax. Describe the range of T in simpler terms.


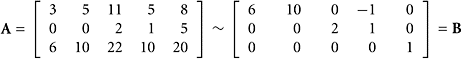

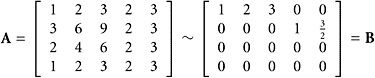

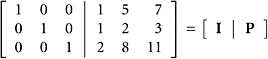

SOLUTION Every point in the range of T is of the form Ax for some x. Thus, the range of T is the span of the columns of A. If the columns of A form a linearly dependent set, then some columns are not needed in describing the range of T. A row reduction shows that

|

|

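Because the systems Ax = 0 and Bx = 0 have the same solutions, the columns of A satisfy exactly the same linear dependence relations as the columns of B. Columns 1 and 2 are the pivot columns of B, and every other column of B is a linear combination of them; the same is then true of the corresponding columns of A. Hence, we can use columns 1 and 2 in A to span the range of T: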

|

|

Notice that we cannot use the columns of B to describe the range of A in this problem. Each of those columns has 0 as its last entry, whereas the range of A obviously contains some vectors that do not have that property.

Revisiting Kernels and Null Spaces

Recall from your study of elementary algebra the important topic of solving equations. For example, you learned how to find the “roots” of an equation such as 7x² + 3x − 15 = 0. In linear algebra, the corresponding topic is finding the solutions of an equation of the form L(x) = v, where L is a linear transformation. Even the simple case, L(x) = 0, requires some study. Several of the preceding chapters have dealt with the matrix version of that topic.

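Recall that the terms null space and kernel are interchangeable. They both refer to

$$\operatorname{Null}(L) = \operatorname{Ker}(L) = \{x : L(x) = 0\}$$

This definition is the more general one, as it does not require a matrix, but only a linear mapping L. We define the null space of a matrix A as the set

$$\operatorname{Null}(A) = \{x : Ax = 0\}$$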

which we can find by solving Ax = 0.

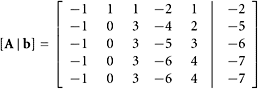

EXAMPLE 13

Let

|

Are the two vectors u = [3, 7, −8, 9]T and v = [7, 1, 4, −5]T in the null space of C? Are the vectors w = [5, −3]T and z = [0, 0]T in the null space of CT (the left null space of C)?

SOLUTION The question is answered by computing Cu = [10, 38]T and Cv = [0, 0]T. Thus, we obtain v ∈ Null(C), but u ∉ Null(C). Also, we find that w is not in the null space of CT, but z is, because CTw ≠ 0 and CTz = 0.

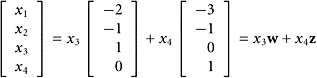

EXAMPLE 14

Use the matrix C in the preceding example, and find simple descriptions of the null spaces of C and CT.

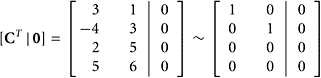

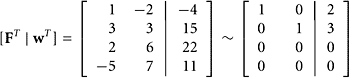

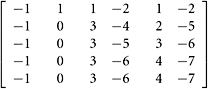

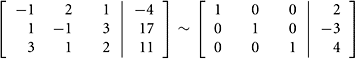

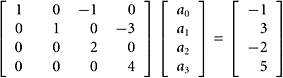

SOLUTION The null space of C consists of all solutions to the equation Cx = 0. One can use row reduction on the augmented matrix:

|

For the associated homogeneous system, the general solution is

|

This can be written as

|

|

where x3 and x4 are free variables. Thus, the null space is the span of a pair of vectors; namely, w = [−2, −1, 1, 0]T and z = [−3, −1, 0, 1]T.
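The step from a reduced matrix to a spanning set for the null space can be sketched in Python: set each free variable to 1 in turn (the other free variables to 0) and read off the pivot variables. The matrix C itself is not reproduced in this copy of the text, so the reduced rows [1, 0, 2, 3] and [0, 1, 1, 1] below are our inference from the stated basis (they encode x1 = −2x3 − 3x4 and x2 = −x3 − x4). The helper `null_space_basis` is hypothetical and assumes its input is already in reduced row echelon form.

```python
from fractions import Fraction


def null_space_basis(R):
    """Basis for {x : Rx = 0}, assuming R is already in reduced row echelon form.

    Each free variable is set to 1 in turn (the other free variables to 0),
    and each pivot variable is then read off from its row of R.
    """
    n = len(R[0])
    pivots = []                       # pivots[i] = pivot column of row i
    for row in R:
        lead = next((j for j, a in enumerate(row) if a != 0), None)
        if lead is not None:
            pivots.append(lead)
    free = [j for j in range(n) if j not in pivots]
    basis = []
    for f in free:
        x = [Fraction(0)] * n
        x[f] = Fraction(1)
        for i, p in enumerate(pivots):
            x[p] = -Fraction(R[i][f])
        basis.append(x)
    return basis


# Reduced form of C inferred from the general solution
# x1 = -2x3 - 3x4, x2 = -x3 - x4 (x3 and x4 free).
R = [[1, 0, 2, 3],
     [0, 1, 1, 1]]
w, z = null_space_basis(R)
print([int(a) for a in w], [int(a) for a in z])  # [-2, -1, 1, 0] [-3, -1, 0, 1]

# v = [7, 1, 4, -5] from Example 13: its free entries x3 = 4, x4 = -5 give
# the coefficients, and 4w - 5z reproduces v.
v = [7, 1, 4, -5]
print([4 * a - 5 * b for a, b in zip(w, z)] == v)  # True
```

The same recipe applied to u = [3, 7, −8, 9]T uses x3 = −8 and x4 = 9, giving −8w + 9z = [−11, −1, −8, 9]T ≠ u, which agrees with u ∉ Null(C).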

With regard to the null space of CT, we obtain

|

|

Thus the null space of CT is {0}.

The Row Space and Column Space of a Matrix

Two important vector subspaces associated with a given matrix are its row space and its column space.

DEFINITION

The row space of a matrix A is the span of the set of rows in A. This is denoted by Row(A).

The column space of A is the span of the set of columns in A. This is denoted by Col(A).

Translating these definitions into alternative terminology, we can say that

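$$\operatorname{Row}(A) = \operatorname{Span}\{r_1, r_2, \ldots, r_m\}, \qquad \operatorname{Col}(A) = \operatorname{Span}\{a_1, a_2, \ldots, a_n\}$$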

Here we have assumed that A is an m × n matrix whose rows are ri and whose columns are aj.

THEOREM 9

If two matrices are row equivalent to each other, then they have the same row space.

PROOF Let A be any matrix. We will prove that any single row operation performed on A to produce a new matrix à has no effect on the row space of A. Once this has been established, it will follow that a sequence of row operations performed on A will also have no effect on the row space. In carrying out this proof, it suffices to consider just the two row operations labeled as Types 1 and 2 in Section 1.1. Consider first the operation of adding to one row a multiple of another (replacement). There is no loss of generality in considering the operation r1 ← r1 + αr2. This notation means replace the first row by row 1 plus α times row 2. Any element in the row space of à has the form

$$c_1(r_1 + \alpha r_2) + c_2 r_2 + c_3 r_3 + \cdots + c_m r_m = c_1 r_1 + (\alpha c_1 + c_2) r_2 + c_3 r_3 + \cdots + c_m r_m$$

This is clearly a linear combination of rows r1, r2, …, rm. Hence, we have Row(Ã) ⊆ Row(A). The reverse inclusion is also true because the row operation in question is reversible by another row operation of the same type (explicitly, use r1 ← r1 − αr2). A similar analysis handles any row operation of Type 2 (scale, multiplying a row by a nonzero scalar).

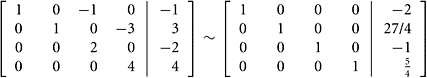

SOLUTION Because row operations generally simplify a matrix and the row space is not affected, we carry out a row reduction, arriving at

|

|

The row space of A is the row space of B, and it is spanned by the first three rows of B. We can write

|

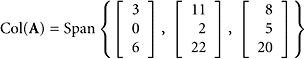

the latter being a more elegant description. The rows of an augmented matrix correspond to equations in a linear system, and each matrix produced during row reduction represents a system with the same solutions. By Theorem 9, every one of these matrices also has the same row space, and the nonzero rows of the final echelon form span it. The column space behaves differently: Col(A) is spanned by the columns of A itself (not of the reduced matrix) that occupy the pivot positions. In this example, we obtain:

|

|

Think about why this is so!

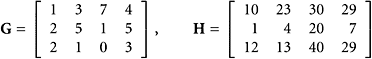

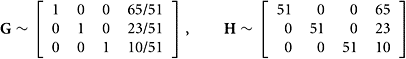

SOLUTION We examine the reduced row echelon form of each matrix.

Calling upon mathematical software and changing some numbers into simple fractions, we arrive at

|

|

The answer is yes! The row spaces are the same, and the three rows of this last matrix form a simple set of three vectors that spans the common row space. The first three columns of G form a linearly independent set that spans Col(G) = $\mathbb{R}^3$. Similarly, the first three columns of H span Col(H) = $\mathbb{R}^3$.

THEOREM 10

The column space of an m × n matrix A is the set $\{Ax : x \in \mathbb{R}^n\}$.

PROOF Recall that the product Ax is defined to be a linear combination of the columns of A, in which the coefficients are the components of the vector x. Thus, with the notation of the preceding paragraphs, we have

$$Ax = x_1a_1 + x_2a_2 + \cdots + x_na_n$$

(We suppose that A has n columns, aj.) Because x runs over all possible vectors in $\mathbb{R}^n$, we get all the linear combinations of columns of A. In other words, we get the span of the set of columns in A.
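The identity used in this proof, Ax = x1a1 + x2a2 + ⋯ + xnan, can be checked directly. This minimal Python sketch computes Ax once row by row and once as a combination of columns; the helper names `mat_vec` and `column_combination` are ours, and the 3 × 2 matrix with columns [3, 6, 2]T and [−4, 1, 5]T is borrowed from the next example in the text.

```python
def mat_vec(A, x):
    """Ax computed row by row: entry i is (row i of A) . x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def column_combination(A, x):
    """x1*a1 + ... + xn*an, where aj is column j of A."""
    m, n = len(A), len(A[0])
    total = [0] * m
    for j in range(n):
        for i in range(m):
            total[i] += x[j] * A[i][j]
    return total


A = [[3, -4],
     [6, 1],
     [2, 5]]
x = [3, 2]
print(mat_vec(A, x))             # [1, 20, 16]
print(column_combination(A, x))  # [1, 20, 16]
```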

SOLUTION We are asking whether some vector x exists for which Ax = b. This question is answered by attempting to solve that equation. The appropriate augmented matrix is

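$$[A \mid b] = \begin{bmatrix} 3 & -4 & 1 \\ 6 & 1 & 20 \\ 2 & 5 & 16 \end{bmatrix} \sim \begin{bmatrix} 1 & 0 & 3 \\ 0 & 1 & 2 \\ 0 & 0 & 0 \end{bmatrix}$$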

Yes, the system is consistent and has the solution x = [3, 2]T. Checking with an independent verification, we have 3[3, 6, 2]T + 2[−4, 1, 5]T = [1, 20, 16]T.
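The row reduction in this example can be reproduced with a short Gauss–Jordan routine in exact rational arithmetic. This is a minimal sketch, not the software used in the text: `rref` and `is_consistent` are our own helpers, and the consistency test is the usual one (an augmented matrix in reduced form is inconsistent exactly when some row reads [0 ⋯ 0 | nonzero]).

```python
from fractions import Fraction


def rref(M):
    """Reduced row echelon form of M (a list of rows), in exact arithmetic."""
    A = [[Fraction(a) for a in row] for row in M]
    rows, cols = len(A), len(A[0])
    r = 0                                     # next pivot row
    for c in range(cols):
        if r == rows:
            break
        p = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if p is None:                         # no pivot in this column
            continue
        A[r], A[p] = A[p], A[r]               # interchange
        lead = A[r][c]
        A[r] = [a / lead for a in A[r]]       # scale pivot to 1
        for i in range(rows):                 # replacement: clear column c
            if i != r and A[i][c] != 0:
                factor = A[i][c]
                A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        r += 1
    return A


def is_consistent(R):
    """An augmented matrix in RREF is inconsistent iff some row is [0 ... 0 | nonzero]."""
    return not any(all(a == 0 for a in row[:-1]) and row[-1] != 0 for row in R)


aug = [[3, -4, 1],
       [6, 1, 20],
       [2, 5, 16]]
R = rref(aug)
print(is_consistent(R))                  # True
print([int(row[-1]) for row in R[:2]])   # [3, 2]
```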

To describe the row space of a matrix in a similar manner, we observe that the row space of an m × n matrix A is the column space of the n × m matrix AT. One may object that vectors in the row space should be row vectors, whereas vectors in the column space should be column vectors. However, these distinctions come into play only when we insist on a vector being treated as a matrix. Then a column vector and a row vector must be interpreted differently. But we can take transposes to get rows from columns, and vice versa. Thus, we have

$$\operatorname{Row}(A) = \{y^T A : y \in \mathbb{R}^m\} = \{(A^T y)^T : y \in \mathbb{R}^m\}$$

THEOREM 11

The row space of a matrix A is the column space of AT.

SOLUTION Because elementary row operations do not change the row space (Theorem 9), we are free to carry out the row-reduction process, obtaining

|

|

The row space is the span of the set consisting of the row vectors in matrix B:

|

The column space is spanned by columns 1, 3, and 5 of matrix A:

|

|

EXAMPLE 19

Let

$$F = \begin{bmatrix} 1 & 3 & 2 & -5 \\ -2 & 3 & 6 & 7 \end{bmatrix}$$

Also, set v =[3, 12]T and w = [−4, 15, 22, 11]. Is v in the column space of F? Is w in the row space of F?

SOLUTION We see that the vector v is in the column space of F because

|

So we have −3[1, −2]T + 2[3, 3]T = [3, 12]T.

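To determine whether the vector w is in the row space of F, we take the transpose of the matrix and vector.

$$[F^T \mid w^T] = \begin{bmatrix} 1 & -2 & -4 \\ 3 & 3 & 15 \\ 2 & 6 & 22 \\ -5 & 7 & 11 \end{bmatrix} \sim \begin{bmatrix} 1 & 0 & 2 \\ 0 & 1 & 3 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$$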

The row reduction shows that column three is a linear combination of columns one and two. Checking, we find the relationship 2[1, 3, 2, −5] + 3[−2, 3, 6, 7] = [−4, 15, 22, 11].

Here is another way to answer the question in Example 19 about a row space. The two rows of the matrix F form a linearly independent pair because neither row is a scalar multiple of the other. If we insert the vector w as a third row in this matrix, we can call the resulting matrix G. In going from F to G, the row space will either grow or remain unchanged. In the first case, the vector w is not in the row space of F, but in the second case it is. We leave the details as General Exercise 38.

Caution

If A ~ B and the matrix B is in reduced row echelon form, then Col(A) is the span of the set of pivot columns in A, whereas Row(A) is the span of the set of pivot rows (the nonzero rows) in B.
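The Caution can be illustrated with a small Python sketch in exact arithmetic: take the pivot columns from the original matrix for Col(A), and the nonzero rows of the reduced matrix for Row(A). It uses the matrix F of Example 19, whose rows are [1, 3, 2, −5] and [−2, 3, 6, 7], and then a 2 × 2 matrix of our own choosing for which using the reduced matrix's columns would give the wrong column space. The helpers `rref_with_pivots` and `col_and_row_bases` are hypothetical names introduced for illustration.

```python
from fractions import Fraction


def rref_with_pivots(M):
    """Reduced row echelon form of M (exact arithmetic) and its pivot columns."""
    A = [[Fraction(a) for a in row] for row in M]
    rows, cols = len(A), len(A[0])
    pivots = []
    r = 0
    for c in range(cols):
        if r == rows:
            break
        p = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if p is None:
            continue
        A[r], A[p] = A[p], A[r]
        lead = A[r][c]
        A[r] = [a / lead for a in A[r]]
        for i in range(rows):
            if i != r and A[i][c] != 0:
                factor = A[i][c]
                A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        pivots.append(c)
        r += 1
    return A, pivots


def col_and_row_bases(M):
    """Col(M): pivot columns of M itself. Row(M): nonzero rows of rref(M)."""
    R, pivots = rref_with_pivots(M)
    col_basis = [[row[j] for row in M] for j in pivots]
    row_basis = [row for row in R if any(a != 0 for a in row)]
    return col_basis, row_basis


F = [[1, 3, 2, -5],
     [-2, 3, 6, 7]]
cols_F, rows_F = col_and_row_bases(F)
print(cols_F)   # [[1, -2], [3, 3]]

# The reduced rows have their pivots in columns 1 and 2, so the only possible
# coefficients for w are its first two entries, -4 and 15.
w = [-4, 15, 22, 11]
combo_w = [w[0] * a + w[1] * b for a, b in zip(rows_F[0], rows_F[1])]
print(combo_w == w)  # True

# A small case (ours, not from the text) where the Caution matters:
A = [[1, 2],
     [2, 4]]
print(col_and_row_bases(A)[0])   # [[1, 2]]
# rref(A) = [[1, 2], [0, 0]]; its pivot column [1, 0] is not in Col(A) = Span{[1, 2]}.
```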

SUMMARY 5.1

•A subset of a vector space V is a subspace of V if it is nonempty and closed under the operations of vector addition and scalar multiplication.

•Let U be a subset of a vector space V. Suppose that the zero element of V is in U, that U is closed under vector addition, and that U is closed under multiplication by scalars. Then U is a vector space; indeed, it is a subspace of V.

•The span of a nonempty set in a vector space is a subspace of that vector space.

•The kernel of a linear transformation is a subspace of the domain of that transformation.

•The vector sum of two subspaces in a vector space is also a subspace.

•The intersection of two subspaces in a vector space is also a subspace of that vector space.

•For any mapping f : X → Y, we define f[Q] = {f(x) : x ∈ Q} for any Q ⊆ X. We define f−1[S] = {x ∈ X : f(x) ∈ S} for any S ⊆ Y.

•The range of a linear transformation is a subspace of its co-domain.

•The null space of a matrix A is defined to be Null(A) = {x : Ax = 0}.

•The row space of a matrix A is defined to be the span of the set of rows in A. This is denoted by Row(A). The column space of A, denoted by Col(A), is the span of the set of columns in A.

•If two matrices are row equivalent to each other, then they have the same row space.

•The column space of an m × n matrix A is the same as the set $\{Ax : x \in \mathbb{R}^n\}$.

•Different ways in which a subspace U can be created:

•As the span of a given set of vectors: U = Span(S)

•As the kernel of a linear transformation: U = Ker(T)

•As the range of a linear transformation: U = Range(T)

•As the vector sum of two subspaces: U = V + W

•As the intersection of two subspaces: U = V ∩ W

•As the image of a subspace via a linear transformation: U = T[V]

•As the inverse image of a linear subspace by a linear transformation: U = T−1[V]

KEY CONCEPTS 5.1

Subspaces, kernel, vector sum, vector intersection, images, inverse image, range, row space, column space, null space, creating subspaces, row equivalent

GENERAL EXERCISES 5.1

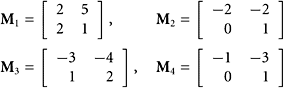

1. Determine whether the set of matrices having the form ![]() is a subspace of $\mathbb{R}^{2\times 2}$.

2. All invertible n × n matrices have the same row space. What is it?

3. Verify that the set $\{p \in \mathbb{P} : p'(3) = 2p(5)\}$ is a subspace of the space $\mathbb{P}$ of all polynomials.

4. In $\mathbb{R}^5$, consider the set of all vectors that have the form (3α − 2β, α + β + 2, −2β + α, 5α − πβ, α + β). Is this set a subspace of $\mathbb{R}^5$?

5. Is the set of all vectors of the form $(s^3 - t,\ 5s^3 + 2t,\ 0)$ a subspace of $\mathbb{R}^3$? Here s and t run over $\mathbb{R}$.

6. Determine whether the set of all 2 × 2 matrices of the form ![]() is a subspace of $\mathbb{R}^{2\times 2}$.

7. In Example 1, we noted that $\mathbb{P}_3$ is a subspace of $\mathbb{P}$. Explain why we do not say that ![]() is a subspace of ![]() .

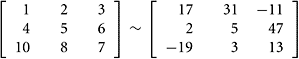

8. Do these two matrices have the same row space?

|

|

9. Define T : $\mathbb{R}^3 \to \mathbb{R}^2$ by T(x1, x2, x3) = (3x1 − 2x2 + 5x3, 2x1 + x2 − x3). Define U = $\{x \in \mathbb{R}^3 : x_1 + x_2 - 2x_3 = 0\}$. Find a simple description of T[U].

10. Describe in simple terms the row and column spaces of

11. What is the simplest example of two matrices that are row equivalent to each other yet have different column spaces?

12. The trace of a square matrix is the sum of its diagonal elements. Thus, if A is n × n, then $\operatorname{tr}(A) = \sum_{i=1}^n a_{ii}$. In the vector space $\mathbb{R}^{n\times n}$, consisting of all n × n matrices, is the set of matrices with zero trace a subspace?

13. Give an example and a geometric interpretation of two subspaces U and W of the vector space ![]() . Note that their sum is also a subspace of ![]() . The sum is defined by U + W = {u + w : u ∈ U and w ∈ W}. This illustrates Theorem 3.

14. Establish that if U and W are subspaces of a vector space V, then their intersection is also a subspace of V. The intersection is defined by U ∩ W = {x : x ∈ U and x ∈ W}. This is Theorem 4.

15. Is the union of two subspaces in a vector space always a subspace? Explain why or find a counterexample. The union is defined by U ∪ W = {x : x ∈ U or x ∈ W}. (In mathematics, we always use the inclusive or. Thus, p or q is true when either p is true or q is true or both are true.)

16. (Continuation.) Find the exact conditions on subspaces U and W in a vector space V in order that their union be a subspace.

17. Let $\mathbb{P}$ be the vector space of all polynomials and let $\mathbb{P}_n$ be the vector space of all polynomials of degree at most n. Explain why $\mathbb{P}_n$ may be viewed as a subspace of $\mathbb{P}$. Let ![]() be the collection of polynomials with only even powers of t. Is ![]() a subspace of $\mathbb{P}$? (Assume that a constant polynomial $c = ct^0$ is an even power of t.)

18. Fix a set of vectors {u1, u2, …, un} in some vector space. Explain why the set of n-tuples (c1, c2, …, cn) such that $\sum_{i=1}^n c_i u_i = 0$ is a subspace of $\mathbb{R}^n$.

19. Argue that if A ~ B, then each row of B is a linear combination of rows in A.

20. Give a proof of Theorem 1.

21. Show why the set of all noninvertible 2 × 2 matrices (with the usual operations) is not a vector space.

22. Explain why if T : U → V is a linear transformation, the inverse image of a subspace W in V via the transformation T is a subspace of U. The inverse image is defined by T−1[W] = {x ∈ U : T(x) ∈ W}.

Caution: The notation T−1[W] does not imply that T has an inverse. Every map (invertible or not) has inverse images as defined in the preceding equation.

23. Establish that if T is a linear transformation, then the image of a subspace in the domain of T is a subspace in the co-domain of T.

24. Establish that the span of a set in a vector space is the smallest subspace containing that set.

25. In a vector space, suppose that w = u + v and z = u − v. Explain why the spans of {u, v} and {w, z} are the same.

26. What conclusion can be drawn about a set S in a vector space if it satisfies the equation Span(S) = S?

27. Use the standard polynomials p0, p1, …, where $p_i(t) = t^i$. Then we write $\mathbb{P}_n = \operatorname{Span}\{p_0, p_1, \ldots, p_n\}$. Let D denote the differentiation operator. Establish that ![]() . Also, show that Span{p1, p2, …, pn} is the same as the set $\{p \in \mathbb{P}_n : p(0) = 0\}$.

28. Explain why two matrices cannot have the same column space if one matrix has a row of zeros and the other does not.

29. If A and B are row equivalent to each other, does it follow that A and B have the same column space? (A proof or counterexample is needed.)

30. Explain why the ith row of AB is xTB, where xT is the ith row of A.

31. Establish that if ui is the ith column of A and if ![]() is the ith row of B, then AB = ![]() . (Notice that if A is m × n and if B is n × p, then each ui is m × 1 and each ![]() is 1 × p. Hence each ![]() is m × p.)

32. If f : X → Y and if U ⊆ X, then we can create a new function g by the equation g(x) = f(x) for all x in U. If U is a proper subset of X, then g ≠ f because they have different domains. The function g is called the restriction of f to U. The notation g = f|U is often used. Suppose now that X and Y are linear spaces and L is a linear map from X to Y. Let U be a subspace of X. Is L|U linear? What is its domain? Do L and L|U have the same range? (Arguments and examples are needed.)

33. Can this be used as the definition of a subspace? A subspace in a vector space is a subset that is closed under the operations of vector addition and multiplication of a vector by an arbitrary scalar.

34. Let A and B be arbitrary matrices subject only to the condition that the product AB exist. Use the notation Col to indicate the column space of a matrix. Consider these two inclusion relations: Col(AB) ⊆ Col(A) and Col(AB) ⊆ Col(B). Select the one of these that is always correct and prove it.

35. Give an example in which Col(AB) = Col(A). Give another example in which Col(AB) ≠ Col(A). Finally, give an example in which Col(AB) = Col(B).

36. Establish that each row of AB is a linear combination of the rows of B, and thus establish that Row(AB) ⊆ Row(B).

37. Let $\mathbb{R}^\infty$ be the space of all infinite sequences of the form [x1, x2, …], where the xi are arbitrary real numbers. Let X be the subset of $\mathbb{R}^\infty$ consisting of all sequences that have only finitely many nonzero terms. Is X a subspace of $\mathbb{R}^\infty$?

38. In the last part of Example 19, use row reduction on the matrix G to show that w is in the row space of F.

39. Use ![]() in this exercise.

a. Find a set of vectors that spans the column space.

b. Is [−1, 1]T in the column space?

c. Find a set of vectors that spans the row space.

d. Is [−1, 1, 1, 4] in the row space?

e. Find a set of vectors that spans the null space.

f. Is [−2, 1, −6, 1]T in the null space?

40. Is the set of all vectors x = [x1, x2, x3, x4]T that are linear combinations of the vectors [4, 2, 0, 1]T and [6, 3, −1, 2]T and, in addition, satisfy the equation x1 = 2x2 a subspace of $\mathbb{R}^4$? Explain why or why not.

41. Write out the details in the solution to Example 2.

42. Write out the details in the alternative solution to Example 19.

43. Explain why f[Span(W)] = Span(f[W]) when f is linear. Hint: This is needed in Example 6.

44. Let p0, p1, p2, …, pn be polynomials such that the degree of pk is k, for 0 ≤ k ≤ n. Explain why {p0, p1, p2, …, pn} is a basis for $\mathbb{P}_n$.

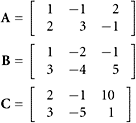

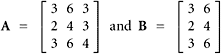

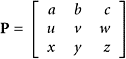

45. Let ![]() . Find the null space of each of these matrices, as well as the null spaces of AT and BT.

46. Let U consist of all vectors in ![]() whose entries are equal. Explain why U is a subspace of ![]() and describe U geometrically.

47. Let U be the span of all 2 × 2 matrices whose second row is zero, and let W be the span of those whose second column is zero. Explain why U + W and U ∩ W are vector subspaces of ![]()2×2, the vector space of all 2 × 2 matrices.

48. Let W be the subspace of $\mathbb{R}^5$ spanned by these vectors: u1 = (1, 2, 1, 3, 2), u2 = (1, 3, 3, 5, 3), u3 = (3, 8, 7, 13, 8), u4 = (1, 4, 6, 9, 7), and u5 = (5, 13, 13, 25, 19). Find a basis for W.

49. Consider

|

|

Find bases for the row space and the column space of A.

50. Explain and discuss the following.

a. Span{v1, v2, v3, v4} is a subspace of ![]() , where in the vector vi the first i entries are one and all others are zero.

b. Span{1, p1(x), p2(x), …, pn(x)} is a subspace of $\mathbb{P}_n$, where pi(x) = $(x - 1)^i$. Here $\mathbb{P}_n$ is the vector space of all polynomials of degree less than or equal to n.

c. ![]() is a subspace of ![]()2×3, where ![]() are 2 × 3 matrices with one in entry (i, j) and zero elsewhere. Here ![]()m×n is the vector space of all m × n matrices for fixed m and n.

COMPUTER EXERCISES 5.1

a. null space

b. column space

c. row space

a. kernel of T

b. range of T

a. null space

b. column space

c. row space

5.2 BASES AND DIMENSION

A thing is obvious mathematically after you see it.

—IN ROSE (ED.), MATHEMATICAL MAXIMS AND MINIMS [1988]

“Obvious” is the most dangerous word in mathematics.

—ERIC TEMPLE BELL (1883–1960)

Mathematics consists in proving the most obvious thing in the least obvious way.

—GEORGE PÓLYA (1887–1985)

Basis for a Vector Space

In this section, we explore the different ways in which the elements of a vector space can be represented by using various coordinate systems. The unifying concept is that of a basis for a vector space.

DEFINITION

In a vector space V, a linearly independent set that spans V is called a basis for V.

EXAMPLE 1

In $\mathbb{R}^n$, the standard unit vectors e1, e2, …, en have components given by (ei)j = δij. (Recall the Kronecker delta δij, which is 1 if i = j and 0 otherwise.) These vectors ej are the columns of the n × n identity matrix In. Is this set of vectors a basis for $\mathbb{R}^n$?

SOLUTION Yes! This set spans $\mathbb{R}^n$, because every x = (x1, x2, …, xn) equals x1e1 + x2e2 + ⋯ + xnen, and it is linearly independent, because that combination is 0 only when every xj = 0. Thus, it is a basis. It is often referred to as the standard basis for $\mathbb{R}^n$.

EXAMPLE 2

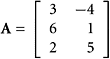

Consider

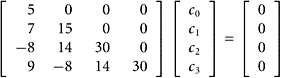

Is the set of columns in this matrix a basis for $\mathbb{R}^4$?

SOLUTION We ask: “Can we solve any equation Ax = b, when b is an arbitrary vector in $\mathbb{R}^4$?” Yes; we start with the fourth equation, which gives x1 = b4 (and there is no other choice!). Then go to the third equation. It can be solved for x2 because x1 is known. Again, there is no other possible value for x2. Continue upward through the set of four equations, noting that there are no choices to be made. The solution is uniquely determined by the vector b. Consequently, if b = 0, then x = 0 necessarily follows. We have proved that the columns form a linearly independent set of vectors that spans $\mathbb{R}^4$.

EXAMPLE 3

Let u = (3, 2, −4), v = (6, 7, 3), w = (2, 1, 0).

Do these vectors constitute a basis for $\mathbb{R}^3$?

SOLUTION Put the vectors into a matrix A as columns. We ask first whether the equation Ax = 0 has any nontrivial solutions. Row reduction leads to

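$$A = \begin{bmatrix} 3 & 6 & 2 \\ 2 & 7 & 1 \\ -4 & 3 & 0 \end{bmatrix} \sim \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$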

(Here we omit the right-hand-side zero vector.) This shows that the equation Ax = 0 can be true only if x = 0. Hence, the set of columns is linearly independent. The row reduction also shows that the equation Ax = b can be solved for any b in $\mathbb{R}^3$, because every row of the reduced matrix contains a pivot. Therefore, the set of columns spans $\mathbb{R}^3$ and provides a basis for $\mathbb{R}^3$.
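The row reduction in Example 3 can be reproduced with exact rational arithmetic. The sketch below is a minimal Gauss-Jordan routine written only for this illustration; the function name `rref` and its return convention (the reduced matrix together with the 0-based indices of the pivot columns) are our own choices, not part of any library. It relies on Python's standard `fractions` module, so no rounding occurs.

```python
from fractions import Fraction

def rref(rows):
    """Reduced echelon form of a matrix given as a list of rows.
    Returns (R, pivots), where pivots lists the pivot column indices (0-based)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0  # r = row that receives the next pivot
    for c in range(n):
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # column c has no pivot
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]  # scale the pivot to 1
        for i in range(m):
            if i != r and R[i][c] != 0:  # clear the rest of column c
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

# Example 3: the vectors u, v, w become the columns of A.
u, v, w = (3, 2, -4), (6, 7, 3), (2, 1, 0)
A = [list(row) for row in zip(u, v, w)]
R, pivots = rref(A)
print(R == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])  # True: A reduces to I3
print(pivots)                                  # [0, 1, 2]
```

A pivot in every column means Ax = 0 has only the trivial solution, and a pivot in every row means Ax = b is solvable for every b; together they give a basis.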

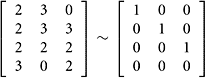

EXAMPLE 4

Let

Do the columns of this matrix form a basis for $\mathbb{R}^3$?

SOLUTION We happen to notice that column three in this matrix is equal to column two minus column one. The set of columns is, therefore, a linearly dependent set. Hence, it is not a basis for anything. Of course, this set of columns spans a two-dimensional subspace in $\mathbb{R}^3$, but it is not a basis for that subspace because of its linear dependence. Columns one and two in this matrix, taken together, provide a basis for the column space of A. That is a two-dimensional subspace in $\mathbb{R}^3$; in other words, a plane in three-space passing through 0. (In this discussion, the term dimension is being used in an informal way. Precise definitions are forthcoming.)

The preceding four examples suggest that a general theorem can be proved.

THEOREM 1

The set of columns of any n × n invertible matrix is a basis for $\mathbb{R}^n$.

PROOF Let A be such a matrix. Do its columns span $\mathbb{R}^n$? Can we solve the system of equations Ax = b for any b in $\mathbb{R}^n$? Yes, because A−1b solves the system. Thus, the columns of A span $\mathbb{R}^n$. What about the linear independence of the set of columns? Is there a nonzero solution to the equation Ax = 0? No, because if Ax = 0, then x = 0, as is seen by multiplying by A−1. So the columns constitute a linearly independent set that spans $\mathbb{R}^n$.
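Theorem 1 turns the basis question for n vectors in $\mathbb{R}^n$ into a question of invertibility. The sketch below swaps in a test that the proof does not use: the standard fact that a square matrix is invertible exactly when its determinant is nonzero. The helper `det` is our own; it expands along the first row, which is adequate for 3 × 3 examples such as Example 3 but far too slow for large matrices.

```python
def det(M):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j, a in enumerate(M[0]):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]  # delete row 0 and column j
        total += (-1) ** j * a * det(minor)
    return total

# Columns are u, v, w from Example 3.
A = [[3, 6, 2],
     [2, 7, 1],
     [-4, 3, 0]]
print(det(A))  # 35: nonzero, so A is invertible and its columns form a basis for R^3

# A matrix whose second column is twice its first is singular.
print(det([[1, 2], [3, 6]]))  # 0
```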

SOLUTION Let us investigate the spanning property first. Given any polynomial q in $\mathbb{P}_3$, say q(t) = a0 + a1t + a2t² + a3t³, can we express it in terms of Legendre polynomials? In other words, can we solve this equation for c0, c1, c2, c3?

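$$c_0 \cdot 1 + c_1 t + c_2 \cdot \tfrac{1}{2}(3t^2 - 1) + c_3 \cdot \tfrac{1}{2}(5t^3 - 3t) = q(t)$$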

Putting in more detail, we have

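$$c_0 + c_1 t + \tfrac{1}{2} c_2 (3t^2 - 1) + \tfrac{1}{2} c_3 (5t^3 - 3t) = a_0 + a_1 t + a_2 t^2 + a_3 t^3$$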

Collecting similar terms on the left brings us to

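$$\left(c_0 - \tfrac{1}{2} c_2\right) + \left(c_1 - \tfrac{3}{2} c_3\right) t + \tfrac{3}{2} c_2 t^2 + \tfrac{5}{2} c_3 t^3 = a_0 + a_1 t + a_2 t^2 + a_3 t^3$$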

From elementary algebra, we recall that two polynomials are the same if and only if the coefficients of each power of t are the same. Thus, we must have 2c0 − c2 = 2a0, 2c1 − 3c3 = 2a1, 3c2 = 2a2, and 5c3 = 2a3. We can indeed solve for the coefficients ci using these equations in reverse order. We arrive at c3 = 2a3/5, c2 = 2a2/3, c1 = a1 + 3a3/5, and c0 = a0 + a2/3. This proves that the span of the first four Legendre polynomials is $\mathbb{P}_3$. For the linear independence question, just substitute q = 0 in the preceding calculation to see that all cj must be zero (because a0 = a1 = a2 = a3 = 0).
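The reverse-order solution can be written out directly. In the sketch below, polynomials are stored as coefficient lists with the constant term first, and the first four Legendre polynomials are taken to be 1, t, (3t² − 1)/2, and (5t³ − 3t)/2, which is what the four coefficient equations above encode. The names `legendre_coeffs`, `to_monomial`, and `LEGENDRE` are hypothetical helpers introduced here; the round trip checks that the computed c0, …, c3 reproduce q.

```python
from fractions import Fraction

# Monomial coefficients (constant term first) of 1, t, (3t^2 - 1)/2, (5t^3 - 3t)/2.
LEGENDRE = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [Fraction(-1, 2), 0, Fraction(3, 2), 0],
    [0, Fraction(-3, 2), 0, Fraction(5, 2)],
]

def legendre_coeffs(a0, a1, a2, a3):
    """Solve 5c3 = 2a3, 3c2 = 2a2, 2c1 - 3c3 = 2a1, 2c0 - c2 = 2a0 in reverse order."""
    c3 = Fraction(2, 5) * a3
    c2 = Fraction(2, 3) * a2
    c1 = a1 + Fraction(3, 2) * c3
    c0 = a0 + Fraction(1, 2) * c2
    return [c0, c1, c2, c3]

def to_monomial(c):
    """Expand c0*P0 + c1*P1 + c2*P2 + c3*P3 back into monomial coefficients."""
    return [sum(c[k] * LEGENDRE[k][j] for k in range(4)) for j in range(4)]

a = [1, 2, 3, 4]  # q(t) = 1 + 2t + 3t^2 + 4t^3
c = legendre_coeffs(*a)
print(c)                    # values: c0 = 2, c1 = 22/5, c2 = 2, c3 = 8/5
print(to_monomial(c) == a)  # True
```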

THEOREM 2

If a basis is given for a vector space, then each vector in the space has a unique expression as a linear combination of elements in that basis.

PROOF This is established by Theorem 6 in Section 2.4. The reader can probably supply a proof without referring to Section 2.4. The proof relies on the concepts of basis, spanning, and linear independence.

Let’s look at a more provocative example, where the vectors involved are again polynomials.

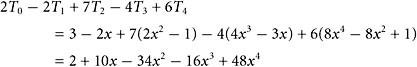

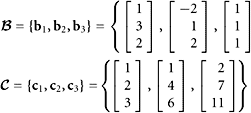

SOLUTION The correspondence between these polynomials and their terms can be displayed as follows:

The four column vectors headed by p1, p2, p3, and p4 in this table need to be analyzed to uncover any linear dependencies. A row reduction is called for:

|

|

(There is no need to keep the zero row.) The row-reduced form shows that column four is a linear combination of the first three columns. This is true of the beginning matrix as well as the final matrix. (The row-reduction process does not disturb linear dependencies among the columns of a matrix.) Hence, a basis is {p1, p2, p3}. (We do not need p4.)

Another question for the same example: What nontrivial linear relationship exists among p1, p2, p3, p4? We continue the reduction process on the preceding matrix, ending at the reduced echelon form:

|

|

We obtain x1 = 1.6x4, x2 = 1.2x4, and x3 = −3.2x4. Letting x4 = 5, we have x = (8, 6, −16, 5). Therefore, we have 8p1 + 6p2 − 16p3 + 5p4 = 0. This verifies that p4 can be expressed in terms of the basis: p4 = (−8p1 − 6p2 + 16p3)/5.

Coordinate Vector

We almost always restrict our attention to ordered bases, so that we can refer to their elements by subscript notation. For example, we might write

$$\mathcal{B} = \{u_1, u_2, \ldots, u_n\}$$

If this is a basis for a vector space V, then each vector x in V can be written in one and only one way as $x = \sum_{i=1}^{n} c_i u_i$. The n-tuple (c1, c2, …, cn) that arises in this way is called the coordinate vector of x associated with the (ordered) basis $\mathcal{B}$. It is denoted by $[x]_{\mathcal{B}}$.

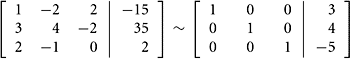

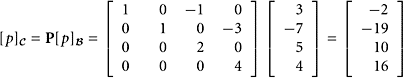

EXAMPLE 7

What is the coordinate vector of x if x = (−15, 35, 2) and if the basis used is $\mathcal{B}$ = {u1, u2, u3}, where u1 = (1, 3, 2), u2 = (−2, 4, −1), and u3 = (2, −2, 0)?

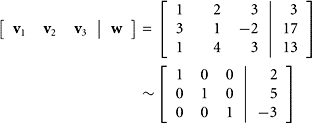

SOLUTION This problem can be turned into a system of linear equations that must be solved:

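$$c_1 u_1 + c_2 u_2 + c_3 u_3 = x, \quad\text{that is,}\quad \begin{bmatrix} 1 & -2 & 2 \\ 3 & 4 & -2 \\ 2 & -1 & 0 \end{bmatrix} \begin{bmatrix} c_1 \\ c_2 \\ c_3 \end{bmatrix} = \begin{bmatrix} -15 \\ 35 \\ 2 \end{bmatrix}$$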

Row reduction of the augmented matrix leads to c = (3, 4, −5), as shown next:

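$$\left[\begin{array}{rrr|r} 1 & -2 & 2 & -15 \\ 3 & 4 & -2 & 35 \\ 2 & -1 & 0 & 2 \end{array}\right] \sim \left[\begin{array}{rrr|r} 1 & 0 & 0 & 3 \\ 0 & 1 & 0 & 4 \\ 0 & 0 & 1 & -5 \end{array}\right]$$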

An independent verification consists in computing

$$3u_1 + 4u_2 - 5u_3 = 3(1, 3, 2) + 4(-2, 4, -1) - 5(2, -2, 0) = (-15, 35, 2) = x$$
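The same computation in code, as a minimal sketch: `solve` is a hypothetical helper that performs Gauss-Jordan elimination on the augmented matrix [A | x] with exact fractions. It assumes A is square and invertible, which holds here because u1, u2, u3 form a basis; it does not handle singular matrices.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A c = b by Gauss-Jordan elimination on [A | b].
    Assumes A is square and invertible."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    for c in range(n):
        p = next(i for i in range(c, n) if M[i][c] != 0)  # choose a pivot row
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for i in range(n):
            if i != c:
                f = M[i][c]
                M[i] = [s - f * t for s, t in zip(M[i], M[c])]
    return [row[-1] for row in M]  # the last column now holds the solution

u1, u2, u3 = (1, 3, 2), (-2, 4, -1), (2, -2, 0)
x = (-15, 35, 2)
A = [list(row) for row in zip(u1, u2, u3)]  # basis vectors as columns
c = solve(A, x)
print([int(ci) for ci in c])  # [3, 4, -5]

# Independent verification: rebuild x from its coordinates.
check = [sum(ci * uj[k] for ci, uj in zip(c, (u1, u2, u3))) for k in range(3)]
print(check == list(x))  # True
```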

THEOREM 3

If $\mathcal{B}$ is a basis, then the mapping of x to its coordinate vector $[x]_{\mathcal{B}}$ is linear.

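PROOF There are two aspects of linearity, which we can combine and address as follows:

$$[\alpha x + \beta y]_{\mathcal{B}} = \alpha [x]_{\mathcal{B}} + \beta [y]_{\mathcal{B}}$$

where α and β are scalars. To see that this equation is true, write $\mathcal{B} = \{u_1, u_2, \ldots, u_n\}$, and let $[x]_{\mathcal{B}} = (c_1, \ldots, c_n)$ and $[y]_{\mathcal{B}} = (d_1, \ldots, d_n)$. Then these three equations follow:

$$x = \sum_{i=1}^{n} c_i u_i, \qquad y = \sum_{i=1}^{n} d_i u_i, \qquad \alpha x + \beta y = \sum_{i=1}^{n} (\alpha c_i + \beta d_i) u_i$$

Hence, by the uniqueness of coordinates (Theorem 2), we obtain

$$[\alpha x + \beta y]_{\mathcal{B}} = (\alpha c_1 + \beta d_1, \ldots, \alpha c_n + \beta d_n) = \alpha [x]_{\mathcal{B}} + \beta [y]_{\mathcal{B}}$$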
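A quick numerical check of Theorem 3, offered as a sketch rather than a proof: the helper `coords` (our own) computes coordinate vectors relative to the basis of Example 7 by Cramer's rule, a technique swapped in here in place of row reduction, and the script compares the coordinates of αx + βy with α times those of x plus β times those of y for one choice of x, y, α, β.

```python
from fractions import Fraction

def det3(M):
    """Determinant of a 3 x 3 matrix by the rule of Sarrus."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a*e*i + b*f*g + c*d*h - c*e*g - b*d*i - a*f*h

def coords(basis, x):
    """Coordinate vector of x relative to an ordered basis of R^3 (Cramer's rule)."""
    A = [list(row) for row in zip(*basis)]  # basis vectors as columns
    D = det3(A)  # nonzero because the vectors form a basis
    result = []
    for j in range(3):
        Aj = [row[:j] + [xk] + row[j + 1:] for row, xk in zip(A, x)]  # column j replaced by x
        result.append(Fraction(det3(Aj), D))
    return result

B = [(1, 3, 2), (-2, 4, -1), (2, -2, 0)]  # the basis of Example 7
x, y = (-15, 35, 2), (1, 0, 0)
alpha, beta = 2, -3
z = [alpha * xi + beta * yi for xi, yi in zip(x, y)]

lhs = coords(B, z)
rhs = [alpha * cx + beta * cy for cx, cy in zip(coords(B, x), coords(B, y))]
print(coords(B, x) == [3, 4, -5])  # True, as in Example 7
print(lhs == rhs)                  # True
```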

THEOREM 4

The mapping of a vector x to its coordinate vector $[x]_{\mathcal{B}}$ (with respect to a given basis $\mathcal{B}$) is surjective (onto) and injective (one-to-one).

PROOF Is this map surjective (onto)? Is every vector in $\mathbb{R}^n$ an image of some x in V? Let c be any vector in $\mathbb{R}^n$, and write c = (c1, c2, …, cn). Is c equal to $[x]_{\mathcal{B}}$ for some x in V? Of course: writing $\mathcal{B} = \{u_1, u_2, \ldots, u_n\}$, we see that c is the image of the vector $x = \sum_{i=1}^{n} c_i u_i$. (Refer to Section 2.3, Theorem 4.)

Is our mapping injective (one-to-one)? Because we know it to be linear, it suffices to verify that only the element 0 in V maps into the vector 0 of $\mathbb{R}^n$. But this is easy, because if $[x]_{\mathcal{B}} = 0$, then $x = \sum_{i=1}^{n} 0 \cdot u_i = 0$. (Refer to Section 2.3, Theorem 5.)

Isomorphism and Equivalence Relations

In Theorem 4, we have observed a pair of vector spaces, V and $\mathbb{R}^n$, that are related by a linear, injective, and surjective map. Such a map is called an isomorphism, and the spaces are said to be isomorphic to each other. The relationship of two spaces being isomorphic to each other is an example of an equivalence relation.

DEFINITION

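A relation (written as ≡) is an equivalence relation on a set X if it has these three properties for all x, y, and z in X:

1. x ≡ x. (Reflexivity)

2. If x ≡ y, then y ≡ x. (Symmetry)

3. If x ≡ y and y ≡ z, then x ≡ z. (Transitivity)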

THEOREM 5

The relation of isomorphism between two vector spaces is an equivalence relation.

PROOF There are three properties to verify.

1. Is a vector space, V, isomorphic to itself? Yes, because the identity map I : V → V can serve as the isomorphism.

2. If V is isomorphic to W, does it follow that W is isomorphic to V? Yes, because if T : V → W is an isomorphism, then so is T−1 : W → V.

3. If V is isomorphic to W and if W is isomorphic to U, is V isomorphic to U? Yes: Suppose that we are given the isomorphisms T : V → W and S : W → U. To get from V to U, we use the composite mapping S ∘ T, which has the desired properties. The reader should recall that S ∘ T is linear, injective, and surjective.

We discuss equivalence relations briefly in Section 1.1 and will return to them in Section 5.3.

EXAMPLE 8

Are the spaces $\mathbb{P}_n$ and $\mathbb{R}^{n+1}$ isomorphic to each other?

SOLUTION If we think that these spaces are isomorphic to each other, we must construct an isomorphism and prove that it is one. Given a polynomial in $\mathbb{P}_n$, write it in detail, for example, in the standard form

$$p(t) = a_0 + a_1 t + a_2 t^2 + \cdots + a_n t^n$$

With the polynomial p, we can now associate the vector in $\mathbb{R}^{n+1}$

$$(a_0, a_1, a_2, \ldots, a_n)$$

This is another example of selecting a basis for a space and then identifying each element in the space with its coordinate vector relative to the basis. In this example, we associate the coordinate vector (a0, a1, …, an) with p, and the isomorphism is the map p ↦ (a0, a1, …, an). Theorems 3 and 4 indicate that we have an isomorphism. What basis for $\mathbb{P}_n$ are we using? It is the (ordered) set of functions t ↦ 1, t, t², …, tⁿ.

EXAMPLE 9

Is ![]() isomorphic to ![]() ?

SOLUTION Consider the map ![]() .

We get all of ![]() , and L is surjective. If ![]() , then ![]() . Hence, L is injective. Thus, L is one-to-one and maps ![]() onto ![]() . The mapping L is linear. Hence, it is an isomorphism.

EXAMPLE 10

Is $\mathbb{P}_5$ isomorphic to ![]() ?

SOLUTION Let p(t) = a0 + a1t + a2t² + a3t³ + a4t⁴ + a5t⁵, so that p is a generic element of $\mathbb{P}_5$. The vector of coefficients [a0, a1, a2, a3, a4, a5]T is in $\mathbb{R}^6$, and the matrix ![]() is in ![]() . The mapping from p to the 2 × 3 matrix is linear, injective, and surjective. Hence, the two spaces are isomorphic.

THEOREM 6

If a vector space has a finite basis, then all of its bases have the same number of elements.

PROOF I. Let $\mathcal{U}$ be a finite basis for the vector space being considered. Let $\mathcal{V}$ be another basis for the same space. Since $\mathcal{V}$ is linearly independent and $\mathcal{U}$ spans the space, the number of elements in $\mathcal{V}$ is not greater than the number of elements in $\mathcal{U}$, by Theorem 10 in Section 2.4. Conversely, since $\mathcal{U}$ is linearly independent and $\mathcal{V}$ spans the space, the number of elements in $\mathcal{U}$ is no greater than the number of elements in $\mathcal{V}$. Hence, the two bases have the same number of elements.

We give another, slightly different, proof. This is by the method of contradiction, and it uses coordinate vectors.

PROOF II. Let n denote the number of elements in the basis $\mathcal{U}$, and suppose that the other basis, $\mathcal{V}$, has more than n elements. Select v1, v2, …, vm in $\mathcal{V}$, where m > n. We intend to show that $\mathcal{V}$ is linearly dependent, hence not a basis. Consider the equation

$$x_1 [v_1]_{\mathcal{U}} + x_2 [v_2]_{\mathcal{U}} + \cdots + x_m [v_m]_{\mathcal{U}} = 0$$

Each $[v_i]_{\mathcal{U}}$ is a column vector having n components, because n is the number of elements in $\mathcal{U}$. Thus, the preceding vector equation has n equations and m unknowns. By Corollary 2 in Section 1.3, this system has a nontrivial solution. By the linearity of the map $w \mapsto [w]_{\mathcal{U}}$, we have

$$[x_1 v_1 + x_2 v_2 + \cdots + x_m v_m]_{\mathcal{U}} = 0, \quad\text{and hence}\quad x_1 v_1 + x_2 v_2 + \cdots + x_m v_m = 0$$

nontrivially. (The second equation follows because the coordinate map is injective, by Theorem 4.) Thus, $\mathcal{V}$ is linearly dependent.
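Proof II is constructive: a nontrivial solution of n equations in m > n unknowns can be read off from the reduced echelon form by setting one free variable to 1 and the others to 0. The sketch below does this for vectors in $\mathbb{R}^n$ (standing in for the coordinate vectors of the proof). It includes its own small exact-arithmetic `rref` helper so that it runs on its own; `dependency` is likewise a hypothetical name. As test data it uses the four vectors u1, u2, u3, x of Example 7, which must be dependent in $\mathbb{R}^3$.

```python
from fractions import Fraction

def rref(rows):
    """Reduced echelon form with exact fractions; returns (R, pivot column indices)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def dependency(vectors):
    """A nontrivial x with x1*v1 + ... + xm*vm = 0, or None if the vectors are independent."""
    A = [list(row) for row in zip(*vectors)]  # the vectors become columns
    R, pivots = rref(A)
    free = [j for j in range(len(vectors)) if j not in pivots]
    if not free:
        return None
    f = free[0]                       # set this free variable to 1 ...
    x = [Fraction(0)] * len(vectors)  # ... and every other free variable to 0
    x[f] = Fraction(1)
    for row, pc in enumerate(pivots):
        x[pc] = -R[row][f]            # solve each pivot equation for its pivot variable
    return x

vectors = [(1, 3, 2), (-2, 4, -1), (2, -2, 0), (-15, 35, 2)]  # m = 4 vectors in R^3
dep = dependency(vectors)
print([int(d) for d in dep])  # [-3, -4, 5, 1]
combo = [sum(d * v[k] for d, v in zip(dep, vectors)) for k in range(3)]
print(all(s == 0 for s in combo))  # True
```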

Finite-Dimensional and Infinite-Dimensional Vector Spaces

A vector space having a finite basis is said to be finite dimensional. The number of elements in any basis for that space is called the dimension of the space. Thus, we can now say that $\mathbb{R}^n$ is n-dimensional, and $\mathbb{P}_n$ has dimension n + 1. In both cases, it suffices to exhibit a basis of the space and count the number of elements in it. In the same way, we find that the dimension of the space of all m × n matrices is mn. If V is a finite-dimensional vector space, we use Dim(V) for its dimension. Thus, for example, we have

|

The space $\mathbb{R}^\infty$, defined in Section 2.4, Example 3, is not finite dimensional. This fact is most easily established by noting that the vectors en defined by (en)i = δni are infinite in number yet form a linearly independent set. By Theorem 6 in Section 2.4, this vector space cannot have a finite spanning set. Naturally, we call such vector spaces infinite dimensional. Many such spaces are needed in applied mathematics, and they have been assiduously studied for more than a century. Hilbert spaces and Banach spaces are examples. So are Sobolev spaces. (These topics are beyond the scope of this book.)

The linear space of all continuous real-valued functions on the interval [0, 1] is infinite dimensional. To be convinced of this, it suffices to exhibit an infinite linearly independent family of continuous functions on [0, 1]. The set of monomials, pn(t) = tn, where n = 0, 1, 2, …, serves this purpose.

DEFINITION

A vector space is finite dimensional if it has a finite basis; in that event, its dimension is the number of elements in any basis.

THEOREM 7

Two finite-dimensional vector spaces are isomorphic to each other if and only if their dimensions are the same.

PROOF First, suppose that the two spaces U and V are finite dimensional and have the same dimension. Select bases {u1, u2, …, un} for U and {v1, v2, …, vn} for V. Define a linear transformation T by writing T(ui) = vi, for i = 1, 2, …, n. Thus, the effect of T on any point

$$u = \sum_{i=1}^{n} a_i u_i$$

in U is like this:

$$T(u) = \sum_{i=1}^{n} a_i T(u_i) = \sum_{i=1}^{n} a_i v_i$$

Is T injective? Suppose T(u) = 0. Thus, we have

$$\sum_{i=1}^{n} a_i v_i = 0$$

Yes, T is one-to-one by Theorem 5 in Section 2.3, because the linear independence of {v1, v2, …, vn} forces a1 = a2 = ⋯ = an = 0, and hence u = 0. Is T surjective? For any v in V, write

$$v = \sum_{i=1}^{n} b_i v_i = T\Big(\sum_{i=1}^{n} b_i u_i\Big)$$

Yes, T is onto by Theorem 4 in Section 2.3.

For the other half of the proof, let T be an isomorphism from U onto V. Let {u1, u2, …, un} be a basis for U. Then {T(ui) : 1 ≤ i ≤ n} is a basis for V. This assertion has two parts, each requiring proof. First, is that set linearly independent? If

$$\sum_{i=1}^{n} c_i T(u_i) = 0$$

then

$$T\Big(\sum_{i=1}^{n} c_i u_i\Big) = 0$$

But, since T is an isomorphism, it is injective, and so $\sum_{i=1}^{n} c_i u_i = 0$ and $c_1 = c_2 = \cdots = c_n = 0$ by the linear independence of {ui : 1 ≤ i ≤ n}. Second, does the set in question span V? If v ∈ V, then v = T(u) for some u ∈ U, because T is surjective. Writing $u = \sum_{i=1}^{n} a_i u_i$, it follows that

$$v = T(u) = \sum_{i=1}^{n} a_i T(u_i)$$

Hence, {T(ui) : 1 ≤ i ≤ n} is a basis for V, and Dim(V) = n = Dim(U).

EXAMPLE 11

Are the spaces of matrices ![]() and ![]() isomorphic to one another? Are the spaces ![]() and ![]() isomorphic to each other?

SOLUTION The two matrix spaces have dimension mn, and those two spaces are therefore isomorphic to each other by Theorem 7. The other pair of spaces is isomorphic for the same reason: they have the same dimension, namely 21.

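SOLUTION Notice that v = 2u − 3w; namely, (13, 3, 4) = 2(2, 3, −1) − 3(−3, 1, −2). Thus, if we have a generic element of the span, we can do some simplifying, like this:

$$au + bv + cw = au + b(2u - 3w) + cw = (a + 2b)u + (c - 3b)w$$

This equation shows that

$$\mathrm{Span}\{u, v, w\} = \mathrm{Span}\{u, w\}$$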

The vector v, which was a linear combination of u and w, is not needed in describing the span. This calculation can be done in greater generality, as shown in the next result.
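For vectors in $\mathbb{R}^3$ the conclusion can be double-checked without row reduction. The sketch below swaps in the cross product, a shortcut valid only in $\mathbb{R}^3$: since u × w is nonzero, u and w are linearly independent, so Span{u, w} is a plane through 0 with normal vector u × w, and the vanishing dot product of v with u × w confirms that v lies in that plane. The helper names `cross` and `dot` are ours.

```python
def cross(a, b):
    """Cross product of two vectors in R^3."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

u, v, w = (2, 3, -1), (13, 3, 4), (-3, 1, -2)
combo = tuple(2 * ui - 3 * wi for ui, wi in zip(u, w))
n = cross(u, w)
print(combo == v)  # True: v = 2u - 3w
print(n)           # (-5, 7, 11): nonzero, so u and w are independent
print(dot(v, n))   # 0: v lies in the plane Span{u, w}
```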

THEOREM 8

The span of a set is not affected by removing from the set one element that is a linear combination of other elements of that set.

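PROOF Let S be the set, and suppose that

$$v_0 = \sum_{i=1}^{n} c_i v_i$$

where the vectors v0, v1, …, vn are distinct members of S. Let w be any vector in the span of S. Write

$$w = \sum_{i=0}^{m} a_i v_i$$

where vn+1, …, vm are further distinct members of S. We may require that m ≥ n, and we permit some coefficients ai to be zero. Then rewrite

$$v_0 = \sum_{i=1}^{m} c_i v_i$$

where, if necessary, we have inserted some zero coefficients. Now we have

$$w = a_0 v_0 + \sum_{i=1}^{m} a_i v_i = \sum_{i=1}^{m} (a_0 c_i + a_i) v_i$$

This shows that w is representable as a linear combination of elements in S, but without using v0.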

THEOREM 9

If a set of n vectors spans an n-dimensional vector space, then the set is a basis for that vector space.

PROOF If S is the set and its span is V, we have only to prove that S is linearly independent. Suppose S is linearly dependent. Then some element of S is a linear combination of the other elements of S. Removing that element does not affect the span of the set, by Theorem 8, so V is spanned by n − 1 vectors. By Theorem 6 in Section 2.4, every linearly independent set in V can then have at most n − 1 elements; in particular, a basis for V has at most n − 1 elements. Hence, we have Dim(V) ≤ n − 1, a contradiction.

THEOREM 10

In an n-dimensional vector space, every linearly independent set of n vectors is a basis.

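PROOF If S is the given set and fails to be a basis, it must fail to span the vector space. Therefore, some point x in the vector space is not in the span of S. Because S is linearly independent and x is not a linear combination of elements of S, the set S ∪ {x} is linearly independent and has n + 1 elements. By Theorem 6 in Section 2.4, no set of n vectors can then span the space, so the space has no basis with n elements. This contradicts the assumption that its dimension is n.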

SOLUTION Again, we use the principle that row-equivalent matrices have the same row space. The row-reduction process yields this reduced echelon matrix:

|

|

The dimension of the row space is three, and the first three rows of the matrix B provide a basis for the row space of A. It may or may not be true that the first three rows in A give a basis for the row space. Do not assume that this is true. (The next example illustrates this.)

SOLUTION A row reduction leads to

|

|

The first two rows of B give a simple basis for the row space of A, but the first two rows of A do not span the row space. (Why?)

THEOREM 11

If a set of n vectors spans a vector space, then the dimension of that space is at most n.

PROOF According to Theorem 6 in Section 2.4, a linearly independent set in a vector space can have no more elements than a spanning set. Hence, a basis can have no more elements than a spanning set.

THEOREM 12

If a set of n vectors is linearly independent in a vector space, then the dimension of the space is at least n.

PROOF Let {v1, v2, …, vn} be a linearly independent set in a vector space V. By Theorem 6 in Section 2.4, any spanning set in V must have at least n elements. Hence, any basis for V must have at least n elements, and Dim(V) ≥ n.

THEOREM 13

The pivot columns of a matrix form a basis for its column space.

PROOF Let A be any matrix, and denote by B its reduced echelon form. The pivot columns in B form a linearly independent set because no pivot column in B is a linear combination of columns that precede it. (Look at Example 15, which shows a typical case.) Because A is row equivalent to B, the vectors x that satisfy the equation Ax = 0 are the same as the vectors that satisfy Bx = 0; hence, the columns of A satisfy exactly the same linear dependence relations as the columns of B. Consequently, the pivot columns of A form a linearly independent set. Moreover, each nonpivot column in B is a linear combination of the pivot columns of B, so each nonpivot column in A is the same linear combination of the pivot columns of A. Thus, the set of pivot columns in A spans Col(A) and is linearly independent. Hence, it is a basis for the column space of A.
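Theorem 13 translates directly into code: row reduce, record the pivot positions, and then take those columns from the original matrix, not from its reduced form. The sketch below includes its own small exact-arithmetic `rref` helper; the 3 × 4 matrix is a hypothetical example chosen so that column two is twice column one and column four is the sum of columns one and three.

```python
from fractions import Fraction

def rref(rows):
    """Reduced echelon form with exact fractions; returns (R, pivot column indices)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

A = [[1, 2, 3, 4],
     [2, 4, 7, 9],
     [1, 2, 4, 5]]
R, pivots = rref(A)
basis = [[row[j] for row in A] for j in pivots]  # pivot columns of A itself
print(pivots)  # [0, 2]
print(basis)   # [[1, 2, 1], [3, 7, 4]]
```

Taking the pivot columns from R instead would give (1, 0, 0) and (0, 1, 0), neither of which lies in Col(A) for this matrix, which is the same warning made in Example 15.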

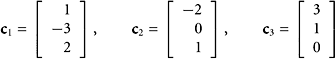

SOLUTION The row-reduction process on A yields a matrix B:

|

|

Notice that in B, columns three and four are linear combinations of columns one and two. Hence, the same is true for the columns of A. We conclude that columns one, two, and five in A constitute a basis for Col(A). Observe that columns one, two, and five in B certainly do not constitute a basis for Col(A). (Why?)

EXAMPLE 16

Let f(t) = 5t3 + 7t2 − 8t + 9.

Is the set {f, f′, f″, f‴} a basis for $\mathbb{P}_3$?

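SOLUTION From f(t) = 5t³ + 7t² − 8t + 9, we find

$$f'(t) = 15t^2 + 14t - 8, \qquad f''(t) = 30t + 14, \qquad f'''(t) = 30$$

Suppose $c_0 f + c_1 f' + c_2 f'' + c_3 f''' = 0$; that is,

$$c_0(5t^3 + 7t^2 - 8t + 9) + c_1(15t^2 + 14t - 8) + c_2(30t + 14) + 30c_3 = 0$$

or

$$5c_0 t^3 + (7c_0 + 15c_1)t^2 + (-8c_0 + 14c_1 + 30c_2)t + (9c_0 - 8c_1 + 14c_2 + 30c_3) = 0$$

Using the coefficients in these equations, we obtain this linear system

$$\begin{bmatrix} 5 & 0 & 0 & 0 \\ 7 & 15 & 0 & 0 \\ -8 & 14 & 30 & 0 \\ 9 & -8 & 14 & 30 \end{bmatrix} \begin{bmatrix} c_0 \\ c_1 \\ c_2 \\ c_3 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}$$

From forward substitution, we find that c0 = 0, c1 = 0, c2 = 0, and c3 = 0. The set is linearly independent. Since it consists of four vectors in the four-dimensional space $\mathbb{P}_3$, it is a basis for $\mathbb{P}_3$ by Theorem 10.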
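The coefficient matrix of Example 16 can be generated mechanically. In this sketch (helper names ours), polynomials are coefficient lists with the constant term first, `derivative` differentiates such a list, and the rows of the matrix correspond to the powers t³, t², t, 1, as in the linear system of Example 16. Because the matrix is lower triangular, its determinant is the product of the diagonal entries, 5 · 15 · 30 · 30 = 67500; checking that it is nonzero is an alternative to the forward substitution used in the text.

```python
def derivative(p):
    """Derivative of a polynomial with coefficients p[k] of t**k; padded with a
    trailing 0 so the length stays the same."""
    return [k * p[k] for k in range(1, len(p))] + [0]

def det(M):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * a * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j, a in enumerate(M[0]))

f = [9, -8, 7, 5]  # f(t) = 5t^3 + 7t^2 - 8t + 9, constant term first
funcs = [f]
for _ in range(3):
    funcs.append(derivative(funcs[-1]))  # f', f'', f'''

# One row per power t^3, t^2, t, 1; one column per unknown c0, c1, c2, c3.
M = [[g[p] for g in funcs] for p in (3, 2, 1, 0)]
print(M)       # [[5, 0, 0, 0], [7, 15, 0, 0], [-8, 14, 30, 0], [9, -8, 14, 30]]
print(det(M))  # 67500, nonzero: only the trivial solution exists
```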

SOLUTION We create this matrix and transform it to reduced echelon form

|

|

Use columns one, two, four, and five in A as a basis. Notice that a linear combination of the first two columns gives the third column in A. The columns of B do not solve the stated problem because B does not contain the two given vectors.

Linear Transformation of a Set

The next theorem asserts that the application of a linear transformation to a set cannot increase its dimension. Stated in other terms, the dimension of the range of a linear transformation cannot be greater than the dimension of its domain.

THEOREM 14

For any finite-dimensional vector space U, for any vector space V, and for any linear transformation L from U to V, we have

$$\mathrm{Dim}(L[U]) \le \mathrm{Dim}(U)$$

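PROOF Select a basis {u1, u2, …, un} for U. If x ∈ U, then $x = \sum_{i=1}^{n} c_i u_i$ for suitable ci. Consequently, we have

$$L(x) = \sum_{i=1}^{n} c_i L(u_i)$$

Since x was arbitrary in U, and since every linear combination of the L(ui) is the image under L of the same combination of the ui, we have

$$L[U] = \mathrm{Span}\{L(u_1), L(u_2), \ldots, L(u_n)\}$$

Hence, by Theorem 11, we obtain Dim(L[U]) ≤ n = Dim(U).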

THEOREM 15

Let {u1, u2, …, un} be a basis for a vector space U. Let V be any vector space. A linear transformation T : U → V is completely determined by specifying the values of vi = T(ui) for i = 1, 2, …, n. These values, in turn, can be any vectors whatsoever in V.

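PROOF If x is any point in U, its coordinates (a1, a2, …, an) with respect to the basis {u1, u2, …, un} are uniquely determined. Then

$$x = \sum_{i=1}^{n} a_i u_i$$

Since T is linear,

$$T(x) = \sum_{i=1}^{n} a_i T(u_i) = \sum_{i=1}^{n} a_i v_i$$

Thus, T is determined by v1, v2, …, vn. Conversely, for any choice of v1, v2, …, vn in V, the last formula defines a map T : U → V with T(ui) = vi, and this map is linear because the coordinate vector of x depends linearly on x (Theorem 3).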
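Theorem 15 says that choosing images for the basis vectors is all it takes to define a linear map. The sketch below uses a hypothetical basis u1 = (1, 1), u2 = (1, −1) of $\mathbb{R}^2$ and arbitrarily chosen images v1, v2 in $\mathbb{R}^3$; the function `T` (our name) computes the coordinates of x, here by Cramer's rule for a 2 × 2 system, and returns a1v1 + a2v2, exactly as in the proof.

```python
from fractions import Fraction

# A hypothetical basis of R^2 and arbitrarily chosen images in R^3.
u1, u2 = (1, 1), (1, -1)
v1, v2 = (1, 0, 2), (0, 3, 1)

def coords(x):
    """Coordinates (a1, a2) of x in the basis {u1, u2}, by Cramer's rule."""
    D = u1[0] * u2[1] - u2[0] * u1[1]
    a1 = Fraction(x[0] * u2[1] - u2[0] * x[1], D)
    a2 = Fraction(u1[0] * x[1] - x[0] * u1[1], D)
    return a1, a2

def T(x):
    """The unique linear map with T(u1) = v1 and T(u2) = v2:
    T(a1*u1 + a2*u2) = a1*v1 + a2*v2."""
    a1, a2 = coords(x)
    return tuple(a1 * p + a2 * q for p, q in zip(v1, v2))

print(T(u1) == v1, T(u2) == v2)  # True True
print(T((3, 1)) == (2, 3, 5))    # True: (3, 1) = 2*u1 + u2, so T = 2*v1 + v2
```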

A common pitfall is to assume that a linear transformation can be defined by specifying T(wi) = yi for i = 1, 2, …, n for any vectors wi and yi. Theorem 15 does not say that, does it?

SOLUTION We are tempted to use Theorem 15. But the set {u1, u2, u3} is linearly dependent, inasmuch as

|

If T is a linear transformation, then

|

Putting in the details, we eventually get a contradiction:

|

|

Thus there is no such linear transformation.

THEOREM 16

In a finite-dimensional vector space, any linearly independent set can be expanded to create a basis.

PROOF Let {u1, u2, …, uk} be linearly independent. If {u1, u2, …, uk} spans the given vector space V, then it is a basis (because it is linearly independent and spans V). If {u1, u2, …, uk} does not span V, find uk+1 that is in V but not in Span{u1, u2, …, uk}. Then {u1, u2, …, uk, uk+1} is linearly independent. Repeat this process. It must stop after at most n − k steps, where n = Dim(V), because a linearly independent set in V has at most n elements (Theorem 12). See Theorem 11 in Section 2.4.
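The proof of Theorem 16 is an algorithm. For subspaces of $\mathbb{R}^n$, one concrete way to find a vector outside the current span is to try the standard unit vectors e1, …, en in turn: since they span $\mathbb{R}^n$, at least one of them lies outside any proper subspace. The sketch below makes that choice (the proof allows any vector not in the span), detects membership in the span by checking whether the rank goes up, and includes its own small exact `rref` helper; `rank` and `extend_to_basis` are hypothetical names.

```python
from fractions import Fraction

def rref(rows):
    """Reduced echelon form with exact fractions; returns (R, pivot column indices)."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def rank(vectors):
    """Number of pivots when the vectors are stacked as rows."""
    return len(rref([list(v) for v in vectors])[1])

def extend_to_basis(independent, n):
    """Append standard unit vectors of R^n that lie outside the current span."""
    basis = [tuple(v) for v in independent]
    for j in range(n):
        e = tuple(1 if i == j else 0 for i in range(n))
        if rank(basis + [e]) > len(basis):  # e is not in the span, so keep it
            basis.append(e)
        if len(basis) == n:
            break
    return basis

print(extend_to_basis([(1, 1, 0)], 3))  # [(1, 1, 0), (1, 0, 0), (0, 0, 1)]
```

Here e2 = (0, 1, 0) is skipped because it already equals (1, 1, 0) − (1, 0, 0).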

Dimensions of Various Subspaces

If T is a linear transformation defined on one vector space U and taking values in another vector space V, we already have two spaces related to T: its domain (U) and its co-domain (V). Then we can go on to define the kernel of T by writing

$$\operatorname{Ker}(T) = \{x \in U : T(x) = 0\}$$

This is also called the null space of T and is denoted by Null(T). The nullity of a linear transformation T is the dimension of its null space. The kernel of T consists of all the vectors in U that are mapped by T into 0. Next, we can define the range of T,

$$\operatorname{Range}(T) = \{T(x) : x \in U\}$$

This is the set of all images created by T when it acts on all the vectors in U. The kernel of T is a subspace of U, and the range of T is a subspace of V; these assertions were proved in Section 5.1 (see Theorems 7 and 8 there). The preceding definitions are valid without restricting U or V to be finite dimensional. However, if U is finite dimensional, we can establish some relations among the dimensions of these spaces. (All of these dimensions are nonnegative integers.) Begin by observing that if U is of dimension n, then the range of T cannot be of dimension greater than n. (Recall Theorem 14, which asserts that one cannot increase the dimension of a space by applying a linear transformation to it.)

The theorem we will prove can be illustrated in a simple case where the dimensions are easily discerned.

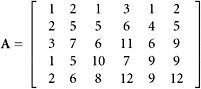

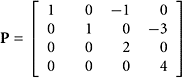

EXAMPLE 19

Consider a linear transformation T, with domain $\mathbb{R}^5$, defined by the following matrix A. Recall that this terminology means T(x) = Ax. We show also the reduced row echelon form of A as the matrix Ã.

|

|

What are the dimensions of the various subspaces associated with A?

SOLUTION The dimension of the kernel of A is two, because there are two free variables in Ã. Columns one, three, and five of Ã form a linearly independent set because they are pivot columns. Since row operations preserve the linear dependence relations among the columns, columns one, three, and five of A also form a linearly independent set, and every other column of A is a linear combination of them. Therefore, these columns of A (not of Ã) give a basis for the range of T. Hence, the dimension of the range of T is 3. The sum of the dimensions of the range and kernel is 5, which is also the dimension of the domain of T.
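The reasoning of Example 19 can be mechanized with Gauss–Jordan elimination. The argument does not depend on the particular entries of A, so the Python sketch below uses a hypothetical 3 × 5 matrix (not the A of Example 19) whose pivots, as in the example, fall in columns one, three, and five. The helper name `rref` is an illustrative assumption; Python indices are 0-based, so they are shifted by one when printed.

```python
from fractions import Fraction


def rref(rows):
    """Return (R, pivots): the reduced row echelon form and its 0-based pivot columns."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # column c has no pivot: it corresponds to a free variable
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]      # make the pivot equal to 1
        for i in range(m):                    # clear column c above and below
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots


# Hypothetical 3 x 5 matrix with pivots in columns 1, 3, 5.
A = [[1, 2, 1, 4, 1],
     [2, 4, 3, 11, 2],
     [1, 2, 1, 4, 3]]
R, pivots = rref(A)
n = len(A[0])
print([c + 1 for c in pivots])           # [1, 3, 5]
print("Dim kernel =", n - len(pivots))   # 2 free variables
print("Dim range  =", len(pivots))       # 3 pivot columns
range_basis = [[row[c] for row in A] for c in pivots]  # columns of A, not of R
print(range_basis)                       # [[1, 2, 1], [1, 3, 1], [1, 2, 3]]
```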

The preceding calculation should generalize to any matrix. Here is the general case, for an arbitrary matrix.

EXAMPLE 20

Let A be an m × n matrix, and let Ã be its reduced echelon form. Suppose that Ã has k pivots (equivalently, A has k pivot positions). How are the dimensions of the column space and null space related?

SOLUTION There will be k columns having pivots and n − k columns without pivots. Each column without a pivot adds to the dimension of the null space because those columns correspond to free variables. Hence, we have

$$\operatorname{Dim}(\operatorname{Null}(A)) = n - k$$

The columns of A that have pivot positions form a basis for the range of A. Hence, the equation (n − k) + k = n allows us to conclude that

$$\operatorname{Dim}(\operatorname{Null}(A)) + \operatorname{Dim}(\operatorname{Range}(A)) = n$$
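The count in Example 20 can be made concrete: each column f without a pivot yields one null-space vector, obtained by setting the free variable xf to 1, the other free variables to 0, and solving each pivot equation for its pivot variable. The Python sketch below includes its own exact-arithmetic Gauss–Jordan helper; the function names and the 2 × 4 matrix are illustrative assumptions.

```python
from fractions import Fraction


def rref(rows):
    """Return (R, pivots): the reduced row echelon form and its 0-based pivot columns."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots


def null_space_basis(A):
    """One basis vector of Null(A) per free variable, read off from the rref."""
    R, pivots = rref(A)
    n = len(A[0])
    free = [c for c in range(n) if c not in pivots]
    basis = []
    for f in free:
        x = [Fraction(0)] * n
        x[f] = Fraction(1)            # this free variable is 1, the others are 0
        for row, p in enumerate(pivots):
            x[p] = -R[row][f]         # solve each pivot equation for its pivot variable
        basis.append(x)
    return basis, pivots


A = [[1, 2, 3, 1],
     [2, 4, 6, 3]]   # hypothetical 2 x 4 example
basis, pivots = null_space_basis(A)
k, n = len(pivots), len(A[0])
# Each basis vector solves Ax = 0.
assert all(sum(a * b for a, b in zip(row, x)) == 0 for row in A for x in basis)
print(len(basis), "+", k, "=", n)   # 2 + 2 = 4
```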

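This theoretical result can be formulated without referring to matrices as follows.

THEOREM 17

If T is a linear transformation whose domain is an n-dimensional vector space, then

$$\operatorname{Dim}(\operatorname{Ker}(T)) + \operatorname{Dim}(\operatorname{Range}(T)) = n$$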

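PROOF Select a basis {y1, y2, …, ym} for the range of T. Select a basis {x1, x2, …, xk} for the kernel of T. Select vectors ui so that T(ui) = yi for i = 1, 2, …, m. Define

$$S = \{u_1, u_2, \ldots, u_m, x_1, x_2, \ldots, x_k\}$$

It is to be proved that S is a basis for the domain of T. Let the domain of T be the space U. First, we will prove that S spans U. Let v ∈ U. Then T(v) is in the range of T. Therefore, we obtain

$$T(v) = \sum_{j=1}^{m} c_j y_j = \sum_{j=1}^{m} c_j T(u_j) = T\Big(\sum_{j=1}^{m} c_j u_j\Big)$$

for suitable scalars cj. This shows that

$$T\Big(v - \sum_{j=1}^{m} c_j u_j\Big) = 0$$

and that $v - \sum_{j=1}^{m} c_j u_j$ is in the kernel of T. From this it follows that $v - \sum_{j=1}^{m} c_j u_j = \sum_{i=1}^{k} d_i x_i$ for suitable scalars di, whence $v = \sum_{j=1}^{m} c_j u_j + \sum_{i=1}^{k} d_i x_i \in \operatorname{Span}(S)$. Next, we prove that S is linearly independent. Suppose that $\sum_{i=1}^{k} a_i x_i + \sum_{j=1}^{m} b_j u_j = 0$. Then, because T(xi) = 0 for each i,

$$0 = T\Big(\sum_{i=1}^{k} a_i x_i + \sum_{j=1}^{m} b_j u_j\Big) = \sum_{i=1}^{k} a_i T(x_i) + \sum_{j=1}^{m} b_j T(u_j) = \sum_{j=1}^{m} b_j y_j$$

Since {y1, y2, …, ym} is linearly independent, all bj are zero. Then $\sum_{i=1}^{k} a_i x_i = 0$, and since {x1, x2, …, xk} is linearly independent, all ai are zero. Conclusion: S is a basis for U, and

$$\operatorname{Dim}(U) = m + k = \operatorname{Dim}(\operatorname{Range}(T)) + \operatorname{Dim}(\operatorname{Ker}(T))$$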

The purely matrix form of Theorem 17 can be stated as follows.

THEOREM 18 Rank–Nullity Theorem

For any matrix, the number of columns equals the dimension of the column space plus the dimension of the null space.

Think of this theorem as stating that each column of a matrix contributes either to the dimension of the null space or to the dimension of the column space. Ultimately, this depends on the very simple observation that each column either has a pivot position or it does not. The columns with pivots add to the dimension of the column space, whereas the columns without pivots add to the dimension of the null space (because they correspond to free variables in solving the homogeneous problem Ax = 0). In other words, for an m × n matrix A, we have

$$\operatorname{Dim}(\operatorname{Col}(A)) + \operatorname{Dim}(\operatorname{Null}(A)) = n$$

The next theorem is an important one in linear algebra. It may even be surprising, because it draws a connection between the row space and the column space of a matrix. In general, these are subspaces of completely different vector spaces, $\mathbb{R}^n$ and $\mathbb{R}^m$, if the matrix in question is m × n.

THEOREM 19

The row space and column space of a matrix have the same dimension:

$$\operatorname{Dim}(\operatorname{Row}(A)) = \operatorname{Dim}(\operatorname{Col}(A))$$

PROOF Let A be an m × n matrix of rank r, and let Ã denote its reduced row echelon form. Then r is the number of nonzero rows in Ã, by the definition of the term rank. Each nonzero row in Ã has a pivot element, and these rows form a linearly independent set. Because row operations do not change the row space, these r rows also span the row space of A; hence, they constitute a basis for it. Thus, r is the dimension of the row space of A. Because r rows have pivots, r columns have pivots. The remaining n − r columns do not have pivots. Each of these columns gives rise to a free variable, so the dimension of the null space of A equals n − r. The column space of A has dimension r, because the pivot columns (of A) constitute a basis for the column space.

Another way to see that the column space has dimension r is to use Theorem 17. The dimension of the domain of A (interpreted as a linear transformation in the usual fashion x ↦ Ax) is n. The kernel of A has dimension n − r. Therefore, the range of A has dimension r. The range of A is its column space.

In some books and technical papers, you will encounter the terminology row rank and column rank of a matrix. These are the dimension of the row space and the dimension of the column space, respectively. Thus, Theorem 19 can be stated in the form ‘‘The row rank and column rank of a matrix are equal.’’
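Row rank is the number of pivots found by eliminating on the rows of A; column rank is the same count for the transpose, whose rows are the columns of A. The Python sketch below compares the two on one hypothetical matrix and then on random small integer matrices. The random trials are only a spot check, not a proof; the helper names and the sampling scheme are illustrative assumptions.

```python
import random
from fractions import Fraction


def rank(rows):
    """Number of pivots after exact Gaussian elimination on the rows."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    r = 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, m):
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
        if r == m:
            break
    return r


def transpose(M):
    """Rows of the result are the columns of M."""
    return [list(col) for col in zip(*M)]


A = [[1, 2, 0, 3],
     [2, 4, 1, 7],
     [3, 6, 1, 10]]      # row three = row one + row two
print(rank(A), rank(transpose(A)))   # 2 2

# Spot check (not a proof) on random small integer matrices.
random.seed(0)
for _ in range(200):
    m, n = random.randint(1, 5), random.randint(1, 5)
    M = [[random.randint(-2, 2) for _ in range(n)] for _ in range(m)]
    assert rank(M) == rank(transpose(M))
print("row rank == column rank on all samples")
```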

EXAMPLE 21

Is there an example of a linear transformation T with domain $\mathbb{R}^{17}$ whose range has dimension seven and whose kernel has dimension nine?

SOLUTION Before attempting to construct such an example, we test the equation of Theorem 17:

$$\operatorname{Dim}(\operatorname{Ker}(T)) + \operatorname{Dim}(\operatorname{Range}(T)) = \operatorname{Dim}(\mathbb{R}^{17}) = 17$$

The information given would require 9 + 7 = 17, which is false, since 9 + 7 = 16. No such linear transformation exists.

EXAMPLE 22

Use Theorem 17 to prove that Dim (T[U]) ≤ Dim(U). Here T is a linear transformation defined on a vector space U.

SOLUTION In this inequality, let U be the domain of T, assumed finite dimensional. The notation T[U] represents the image of the vector space U under the linear transformation T; hence, it is the range of T. By Theorem 17, Dim(T[U]) = Dim(U) − Dim(Ker(T)), and the inequality follows at once, because the kernel of T cannot have a negative dimension.

EXAMPLE 23

Let

Find bases for each of the spaces Null(A), Col(A), and Row(A).

SOLUTION We find this row equivalence:

|

|

The reduced echelon form reveals that in solving the equation Ax = 0 we obtain x1 + 2x2 + 3x3 = 0 and ![]() , or

|

|

A basis for the null space is {u, v, w}. Therefore, we obtain Dim(Null(A)) = 3. A basis for the column space of A is given by columns one and four of A or

|

Consequently, we obtain Dim(Col(A)) = 2. A basis for the row space of A is given by rows one and two of B or

|

Finally, as a check, we have Dim(Col(A)) + Dim(Null(A)) = 2 + 3 = 5 = n, where A is an m × n matrix with m = 4 and n = 5.

Caution

If A ~ B, where B is the reduced echelon form of A, then select pivot columns from A for the basis of Col(A), but select pivot rows from B for Row(A). Remember that row operations preserve the linear dependence relations among the columns (but not the column space itself), whereas they preserve the row space (but not the dependence relations among the rows)!
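The Caution can be seen on a deliberately small example, chosen here for illustration and not taken from the text: for the matrix A with rows (0, 0) and (1, 2), reduction requires a row swap. The Python sketch below tests membership in a span by comparing ranks; the helper names `rank`, `in_span`, and `column` are illustrative assumptions.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of a list of vectors (used as rows), by exact Gaussian elimination."""
    R = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for c in range(len(R[0])):
        p = next((i for i in range(r, len(R)) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, len(R)):
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
    return r


def in_span(v, vectors):
    """True if v is a linear combination of the given vectors."""
    return rank(vectors + [v]) == rank(vectors)


def column(M, j):
    return [row[j] for row in M]


A = [[0, 0],
     [1, 2]]
B = [[1, 2],     # reduced echelon form of A (obtained by swapping the rows)
     [0, 0]]
cols_A = [column(A, 0), column(A, 1)]   # Col(A) = Span{(0, 1)}

# Col(A): column one of A (a pivot column) works; column one of B does not.
print(in_span(column(A, 0), cols_A))    # True
print(in_span(column(B, 0), cols_A))    # False: (1, 0) is not in Col(A)

# Row(A): row one of B is a basis; row one of A is the zero vector.
print(in_span(B[0], A) and rank([B[0]]) == rank(A))   # True
print(rank([A[0]]))                     # 0
```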

SUMMARY 5.2

• In a vector space V, a linearly independent set that spans V is a basis for V.

• The set of columns of any n × n invertible matrix is a basis for $\mathbb{R}^n$.

• If a basis is given for a vector space, then each vector in the space has a unique expression as a linear combination of elements in that basis.

• The mapping of x to its coordinate vector (as described previously) is linear.

• The coordinate mapping defined previously is injective (one-to-one) and surjective (onto) from V to $\mathbb{R}^n$.

• The relation of isomorphism between two vector spaces is an equivalence relation.

• If a vector space has a finite basis, then all of its bases have the same number of elements.

• A vector space is finite dimensional if it has a finite basis; in that event, its dimension is the number of elements in any basis.

• Two finite-dimensional vector spaces are isomorphic to each other if and only if their dimensions are the same.

• The span of a set is not affected by removing from the set one element that is a linear combination of other elements of that set.

• If a set of n vectors spans an n-dimensional vector space, then the set is a basis for that vector space.

• In an n-dimensional vector space every linearly independent set of n vectors is a basis.

• If a set of n vectors spans a vector space, then the dimension of that space is at most n.

• If a set of n vectors is linearly independent in a vector space V, then the dimension of V is at least n.

• The pivot columns of a matrix form a basis for its column space.

• For any finite-dimensional vector space U and any linear transformation L : U → V, we have Dim (L[U]) ≤ Dim(U).

• Let {u1, u2, …, un} be a basis for a vector space U. Let V be any vector space. A linear transformation T : U → V is completely determined by specifying the values of T(ui) for i = 1, 2, …, n. These values, in turn, can be any vectors whatsoever in V.

• In a finite-dimensional vector space, any linearly independent set can be expanded to get a basis.

• If T is a linear transformation whose domain is an n-dimensional vector space, then Dim (Ker(T)) + Dim (Range(T)) = n

• (Rank–Nullity Theorem.) For any matrix, the number of columns, n, equals the dimension of the column space plus the dimension of the null space:

Dim (Col(A)) + Dim (Null(A)) = n

• The row space and column space of a matrix have the same dimension:

Dim (Row(A)) = Dim (Col(A))

• Caution: If A ~ B (the latter being the reduced echelon form), then select pivot columns from A as the basis vectors of Col(A), but select pivot rows from B for Row(A).

KEY CONCEPTS 5.2

Basis for a vector space, standard basis, coordinate vector of an element in a vector space, isomorphism, isomorphic, equivalence relations, dimension of a vector space, finite dimensional and infinite dimensional, kernel, domain, range, co-domain, dimensions of various subspaces, column space, row space, null space, rank, nullity

GENERAL EXERCISES 5.2

1. Consider

Do the rows of this matrix form a basis for a subspace in ![]() ?


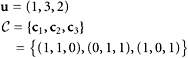

2. Let ![]() and x = (3, 2, −1). What is ![]() ?

3. Express the polynomial p defined by $p(t) = 3(t - 2) + 4(3 - 5t)^2 - t^3$ in terms of Legendre polynomials.

4. Express the polynomial defined by p = 3P0 − 4P1 + 2P2 − P3 in standard form. (Here, Pk is the kth degree Legendre polynomial defined in Example 5.)

5. Find the coordinates of x = (−5, 1, 2) with respect to the basis consisting of u1 = (1, 3, 2), u2 = (2, 1, 4), and u3 = (1, 0, 6).