Appendix C. Lucene/contrib benchmark

The contrib/benchmark module is a useful framework for running repeatable performance tests. By creating a short script, called an algorithm (file.alg), you tell the benchmark module what test to run and how to report its results. In chapter 11 we saw how to use benchmark for measuring Lucene’s indexing performance. In this appendix we delve into more detail. Benchmark is quite new and will improve over time, so always check the Javadocs. The package-level Javadocs in the byTask sub-package have a good overview.

You might be tempted to create your own testing framework instead of learning how to use benchmark. Likely you’ve done so already many times in the past. But there are some important reasons to make the up-front investment and use benchmark instead:

- Because an algorithm file is a simple text file, it’s easily and quickly shared with others so they can reproduce your results. This is vitally important in cases where you’re trying to track down a performance anomaly and you’d like to isolate the source. Whereas for your own testing framework often there are numerous software dependencies and perhaps resources like local files or databases, that would have to be somehow transferred for someone else to run the test.

- The benchmark framework already has built-in support for common standard sources of documents (such as Reuters, Wikipedia, or TREC).

- With your own test, it’s easy to accidentally create performance overhead in the test code itself (or even sneaky bugs!), which skews results. The benchmark package—because it’s open source—is well debugged and well tuned, so it’s less likely to suffer from this issue. And it only gets better over time!

- Thanks to a great many built-in tasks, you can create rich algorithms without writing any Java code. By writing a few lines (the algorithm file) you can craft nearly any test you’d like. You only have to change your script and rerun if you want to test something else. No compilation required!

- Benchmark has multiple extension points, to easily customize the source of documents, source of queries, and how the metrics are reported in the end. For advanced cases, you can also create and plug in your own tasks, as we did in section 11.2.1.

- Benchmark already gathers important metrics, like runtime, documents per second, and memory usage, saving you from having to instrument these in your custom test code.

Let’s get started with a simple algorithm.

C.1. Running an algorithm

Save the following lines to a file, test.alg:

# The analyzer to use

analyzer=org.apache.lucene.analysis.standard.StandardAnalyzer

# Content source

content.source=org.apache.lucene.benchmark.byTask.feeds.ReutersContentSource

# Directory

directory=FSDirectory

# Turn on stored fields

doc.stored = true

# Turn on term vectors

doc.term.vectors = true

# Don't use compound-file format

compound = false

# Make only one pass through the documents

content.source.forever = false

# Repeat 3 times

{"Rounds"

# Clear the index

ResetSystemErase

# Name the contained tasks "BuildIndex"

{"BuildIndex"

# Create a new IndexWriter

-CreateIndex

# Add all docs

{ "AddDocs" AddDoc > : *

# Close the index

-CloseIndex

}

# Start a new round

NewRound

} : 3

# Report on the BuildIndex task

RepSumByPrefRound BuildIndex

As you can probably guess, this algorithm indexes the entire Reuters corpus, three times, and reports the performance of the BuildIndex step separately for each round. Those steps include creating a new index (opening an IndexWriter), adding all Reuters documents, and closing the index. Remember, when testing indexing performance it’s important to include the time to close the index because necessary time-consuming tasks happen during close(). For example, Lucene waits for any still-running background merges to finish, then syncs all newly written files in the index. To run your algorithm, use this:

ant run-task -Dtask-alg=<file.alg> -Dtask.mem=512M

Note that if you’ve implemented any custom tasks, you’ll have to include the classpath to your compiled sources by also adding this to the Ant command line:

-Dbenchmark.ext.classpath=/path/to/classes

Ant first runs a series of dependency targets—for example, making sure all sources are compiled and downloading, and unpacking the Reuters corpus. Finally, it runs your task and produces something like this under the run-task output:

Working Directory: /lucene/clean/contrib/benchmark/work Running algorithm from: /lucene/clean/contrib/benchmark/eg1.alg ------------> config properties: analyzer = org.apache.lucene.analysis.standard.StandardAnalyzer compound = false content.source =org.apache.lucene.benchmark.byTask.feeds.ReutersContentSource content.source.forever = false directory = FSDirectory doc.stored = true doc.term.vectors = true work.dir = work ------------------------------- ------------> algorithm: Seq { Rounds_3 { ResetSystemErase BuildIndex { -CreateIndex AddDocs_Exhaust { AddDoc > * EXHAUST -CloseIndex } NewRound } * 3 RepSumByPrefRound BuildIndex } ------------> starting task: Seq 1.88 sec --> main added 1000 docs 4.04 sec --> main added 2000 docs 4.48 sec --> main added 3000 docs ...yada yada yada... 12.18 sec --> main added 21000 docs --> Round 0-->1 ------------> DocMaker statistics (0): total bytes of unique texts: 17,550,748 0.2 sec --> main added 22000 docs 0.56 sec --> main added 23000 docs 0.92 sec --> main added 24000 docs ...yada yada yada... 8.02 sec --> main added 43000 docs --> Round 1-->2 0.29 sec --> main added 44000 docs 0.63 sec --> main added 45000 docs 1.04 sec --> main added 46000 docs ...yada yada yada... 9.43 sec --> main added 64000 docs --> Round 2-->3 -->Report sum by Prefix (BuildIndex) and Round (3 about 3 out of 14) Operation round runCnt recsPerRun rec/s elapsedSec avgUsedMem avgTotalMem BuildIndex 0 1 21578 1,682 12.83 26,303,608 81,788,928 BuildIndex - 1 - 1 - 21578 2,521 - 8.56 44,557,144 81,985,536 BuildIndex 2 1 21578 2,126 10.15 37,706,752 80,740,352 #################### ### D O N E !!! ### ####################

The benchmark module first prints all the settings you’re running with, under config properties. It’s best to look this over and verify the settings are what you intended. Next it “pretty-prints” the steps of the algorithm. You should also verify this algorithm is what you expected. If you put a closing } in the wrong place, this is where you will spot it. Finally, benchmark runs the algorithm and prints the status output, which usually consists of

- The content source periodically printing how many documents it has produced

- The Rounds task printing whenever it finishes a new round

When this finishes, and assuming you have reporting tasks in your algorithm, the report is generated, detailing the metrics from each round.

The final report shows one line per round, because we’re using a report task (RepSumByPrefRound) that breaks out results by round. For each round, it includes the number of records (added documents in this case), records per second, elapsed seconds, and memory usage. The average total memory is obtained by calling java.lang.Runtime.getRuntime().totalMemory(). The average used memory is computed by subtracting freeMemory() from totalMemory().

What exactly is a record? In general, most tasks count as +1 on the record count. For example, every call to AddDoc adds 1. Task sequences aggregate all records counts of their children. To prevent the record count from incrementing, you prefix the task with a hyphen (-) as we did earlier for CreateIndex and CloseIndex. This allows you to include the cost (time and memory) of creating and closing the index yet correctly amortize that total cost across all added documents.

So that was pretty simple, right? From this you could probably poke around and make your own algorithms. But to shine, you’ll need to know the full list of settings and tasks that are available.

C.2. Parts of an algorithm file

Let’s dig into the various parts of an algorithm file. This is a simple text file. Comments begin with the # character, and whitespace is generally not significant. Usually the settings, which bind global names to their values, appear at the top. Next comes the heart of the algorithm, which expresses the series of tasks to run, and in what order. Finally, there’s usually one or more reporting tasks at the very end to generate the final summary. Let’s look first at the settings.

Settings are lines that match this form:

name = value

where name is a known setting (the full list of settings is shown in tables C.1, C.2, and C.3). For example, compound = false tells the CreateIndex or OpenIndex task to create the IndexWriter with setUseCompoundFile set to false.

Table C.1. General settings

|

Name Default value |

Description |

|---|---|

|

work.dir System property benchmark.work.dir or work. |

Specifies the root directory for data and indexes. |

|

analyzer StandardAnalyzer |

Contains the fully qualified class name to instantiate as the analyzer for indexing and parsing queries. |

|

content.source SingleDocSource |

Specifies the class that provides the raw content. |

|

doc.maker DocMaker |

Specifies the class used to create documents from the content provided by the content source. |

|

content.source.forever true |

Boolean. If true, the content.source will reset itself upon running out of content and keep producing the same content forever. Otherwise, it will stop when it has made one pass through its source. |

|

content.source.verbose false |

Specifies whether messages from the content source should be printed. |

|

content.source.encoding null |

Specifies the character encoding of the content source. |

|

html.parser Not set |

Contains the class name to filter HTML to text. The default is null (no HTML parsing is invoked). You can specify org.apache.lucene.benchmark.byTask.feeds.DemoHTMLP arser to use the simple HTML parser included in Lucene’s demo package. |

|

doc.stored false |

Boolean. If true, fields added to the document by the doc.maker are created with Field.Store.YES. |

|

doc.tokenized true |

Boolean. If true, fields added to the document by the doc.maker are created with Field.Index.ANALYZED or Field.Index.ANALYZED_NO_NORMS. |

|

doc.tokenized.norms false |

Specifies whether non-body fields in the document should be indexed with norms. |

|

doc.body.tokenized.norms true |

Specifies whether the body field should be indexed with norms. |

|

doc.term.vector false |

Boolean. If true, fields are indexed with term vectors enabled. |

|

doc.term.vector.positions false |

Boolean. If true, then term vectors positions are indexed. |

|

doc.term.vector.offsets false |

Boolean. If true, term vector offsets are indexed. |

|

doc.store.body.bytes false |

Boolean. If true, the document’s fields are indexed with Field.Store.YES into the field docbytes. |

|

doc.random.id.limit -1 |

Integer. If not equal to -1 the LineDocMaker tasks will randomly pick IDs within this bound. This is useful with the UpdateDoc task for testing IndexWriter’s updateDocument performance |

|

docs.dir Depends on document source |

Contains the string directory name. Used by certain document sources as the root directory for finding document files in the file system. |

|

docs.file Not set |

Contains the string directory name. Used by certain document sources as the root filename. Used by LineDocSource, WriteLineFile, and EnwikiContentSource as the file for singleline documents. |

|

doc.index.props false |

If true, the properties set by the content source for each document will be indexed as separate fields. Presently only SortableSingleDocMaker and any HTML content processed by the HTML parser set properties. |

|

doc.reuse.fields true |

Boolean. If true, a single shared instance of Document and a single shared instance of Field for each field in the document are reused. This gains performance by avoiding allocation and GC costs. But if you create a custom task that adds documents to an index using private threads, you’ll need to turn this off. The normal parallel task sequence, which also uses threads, may leave this at true because the single instance is per thread. |

|

query.maker SimpleQueryMaker |

Contains the string class name for the source of queries. See section C.2.2 for details. |

|

file.query.maker.file Not set |

Specifies the string path to the filename used by FileBasedQueryMaker. This is the file that contains one text query per line |

|

file.query.maker.default.field body |

Specifies the field that FileBasedQueryMaker will issue its queries against. |

|

doc.delete.step 8 |

When deleting documents in steps, this is the step that’s added in between deletions. See the DeleteDoc task for more detail. |

Table C.2. Settings that affect logging

|

Name Default value |

Description |

|---|---|

|

log.step 1000 |

Integer. Specifies how often to print the progress line for non-contentsource tasks. You can also specify log.step.<TASK> (for example, log.step.AddDoc) to set a separate step per task. A value of -1 turns off logging for that task. |

|

content.source.log.step 0 |

Integer. Specifies how often to print the progress line, as measured by the number of docs created by the content source. |

|

log.queries false |

Boolean. If true, the queries returned by the query maker are printed. |

|

task.max.depth.log 0 |

Integer. Controls which nested tasks should do any logging. Set this to a lower number to limit how many tasks log. 0 means to log only the top-level tasks. |

|

writer.info.stream Not set |

Enables IndexWriter’s infoStream logging. Use SystemOut for System.out; SystemErr for System.err; or a filename to direct the output to the specified file. |

Table C.3. Settings that affect IndexWriter

|

Setting Default |

Description |

|---|---|

|

compound true |

Boolean. True if the compound file format should be used. |

|

merge.factor 10 |

Merge factor |

|

max.buffered -1 (don't flush by doc count) |

Max buffered docs |

|

max.field.length 10000 |

Maximum field length |

|

directory RAMDirectory |

Directory |

|

ram.flush.mb 16.0 |

RAM buffer size |

|

merge.scheduler org.apache.lucene.index.ConcurrentMergeScheduler |

Merge scheduler |

|

merge.policy org.apache.lucene.index.LogByteSizeMergePolicy |

Merge policy |

|

deletion.policy org.apache.lucene.index.KeepOnlyLastCommitDeletionPolicy |

Deletion policy |

Often you want to run a series of rounds where each round uses different combinations of settings. Imagine you’d like to measure the performance impact of changing RAM buffer sizes during indexing. You can do this like so:

name = header:value1:value2:value3

For example, ram.flush.mb = MB:2:4:8:16 would use a 2.0 MB, 4.0 MB, 8.0 MB, and 16.0 MB RAM buffer size in IndexWriter for each round of the test, and label the corresponding column in the report as “MB.” Table C.1 shows the general settings, table C.2 shows settings that affect logging, and table C.3 shows settings that affect IndexWriter. Be sure to consult the online documentation for an up-to-date list. Also, your own custom tasks can define their own settings.

C.2.1. Content source and document maker

When running algorithms that index documents, you’ll need to specify a source that creates documents. There are two settings:

- content.source specifies a class that provides the raw content to create documents from.

- doc.maker specifies a class that takes the raw content and produces Lucene documents.

The default doc.maker, org.apache.lucene.benchmark.byTask.feeds.DocMaker, is often sufficient. It will pull content from the content source, and based on the doc.* settings (for example, doc.stored) create the appropriate Document. The list of built-in ContentSources is shown in table C.4. In general, all content sources can decompress compressed bzip files on the fly and accept arbitrary encoding as specified by the content.source.encoding setting.

Table C.4. Built-in ContentSources

|

Name |

Description |

|---|---|

| SingleDocSource | Provides a short (~150 words) fixed English text, for simple testing. |

| SortableSingleDocSource | Like SingleDocSource, but also includes an integer field, sort_field; a country field, country; and a random short string field, random_string. Their values are randomly selected per document to enable testing sort performance on the resulting index. |

| DirContentSource | Recursively visits all files and directories under a root directory (specified with the docs.dir setting), opening any file ending with the extension.txt, and yielding the file’s contents. The first line of each file should contain the date, the second line should contain the title, and the rest of the document is the body. |

| LineDocSource | Opens a single file, specified with the docs.file setting, and reads one document per line. Each line should have title, date, and body, separated by the tab character. Generally this source has far less overhead than the others because it minimizes the I/O cost by working with only a single file. |

| EnwikiContentSource | Generates documents directly from the large XML export provided by http://wikipedia.org. The setting keep.image.only.docs, a Boolean setting that defaults to true, decides whether image-only (no text) documents are kept. Use docs.file to specify the XML file. |

| ReutersContentSource | Generates documents unpacked from the Reuters corpus. The Ant task getfiles retrieves and unpacks the Reuters corpus. Documents are created as *.txt files under the output directory work/reuters-out. The setting docs.dir, defaulting to work/reuters-out, specifies the root location of the unpacked corpus. |

| TrecContentSource | Generates documents from the TREC corpus. This assumes you have already unpacked the TREC into the directory set by docs.dir. |

Each of these classes is instantiated once, globally, and then all tasks will pull documents from this source. Table C.4 describes the built-in ContentSource classes.

You can also create your own content source or doc maker by subclassing ContentSource or DocMaker. But take care to make your class thread-safe because multiple threads will share a single instance.

C.2.2. Query maker

The query.maker setting determines which class to use for generating queries. Table C.5 describes the built-in query makers.

Table C.5. Built-in query makers

|

Name |

Description |

|---|---|

| FileBasedQueryMaker | Reads queries from a text file one per line. Set file.query.maker.default.field (defaults to body) to specify which index field the parsed queries should be issued against. Set file.query.maker.file to specify the file containing the queries. |

| ReutersQueryMaker | Generates a small fixed set of 10 queries that roughly match the Reuters corpus. |

| EnwikiQueryMaker | Generates a fixed set of 90 common and uncommon actual Wikipedia queries. |

| SimpleQueryMaker | Used only for testing. Generates a fixed set of 10 synthetic queries. |

| SimpleSloppyPhraseQueryMaker | Takes the fixed document text from SimpleDocMaker and programmatically generates a number of queries with varying degrees of slop (from 0 to 7) that would match the single document from SimpleDocMaker. |

C.3. Control structures

We’ve finished talking about settings, content sources, doc makers, and query makers. Now we’ll talk about the available control structures in an algorithm, which is the all-important “glue” that allows you to take built-in tasks and combine them in interesting ways. Here are the building blocks:

- Serial sequences are created with { ... }. The enclosed tasks are run one after another, by a single thread. For example:

{CreateIndex AddDoc CloseIndex} creates a new index, adds a single document pulled from the doc maker, then closes the index. - Run a task in the background by appending &. For example:

OpenReader

{ Search > : * &

{ Search > : * &

Wait(30)

CloseReader opens a reader, runs two search threads in the background, waits for 30 seconds, asks the background threads to stop, and closes the reader. - Parallel sequences are created with [ ... ]. A parallel sequence runs the enclosed tasks with as many threads as there are tasks, with each task running in its own thread.

For example:

[AddDoc AddDoc AddDoc AddDoc] creates four threads, each of which adds a single document, then stops. - Repeating a task multiple times is achieved by appending :N to the end. For example:

{AddDoc}: 1000 adds the next 1,000 documents from the document source. Use * to pull all documents from the doc maker. For example:

{AddDoc}: * adds all documents from the doc maker. When you use this, you must also set content.source.forever to false. - Repeating a task for a specified amount of time is achieved by appending :Xs to the end. For example:

{AddDoc}: 10.0s runs the AddDoc task for 10 seconds. - Name a sequence like this:

{"My Name" AddDoc} : 1000 This defines a single AddDoc called “My Name,” and runs that task 1,000 times. The double quotes surrounding the name are required even if the name doesn’t have spaces. Your name will then be used in the reports. - Some tasks optionally take a parameter string, in parentheses, after the task. For example, AddDoc(1024) will create documents whose body field consists of approximately 1,024 characters (an effort is made not to split words).

If you try to pass a parameter to a task that doesn’t take one, or the type isn’t correct, you’ll hit a “Cannot understand

algorithm” error. Tables C.6 and C.7 detail the parameters accepted by each task.

Table C.6. Administration tasks

Task Name

Description

ClearStats Clears all statistics. Report tasks run after this point will only include statistics from tasks run after this task. NewRound Begins a new round of a test. This command makes the most sense at the end of an outermost sequence. This increments a global “round counter.” All tasks that start will record this new round count and their statistics would be aggregated under that new round count. For example, see the RepSumByNameRound reporting task. In addition, NewRound moves to the next setting if the setting specified different settings per round. For example, with setting merge.factor=mrg:10:100:10:100, merge.factor would change to the next value after each round. Note that if you have more rounds than number of settings, it simply wraps around to the first setting again. ResetInputs Reinitializes the document and query sources back to the start. For example, it’s a good idea to insert this call after NewRound to make sure your document source feeds the exact same documents for each round. This is only necessary when you aren’t running your content source to exhaustion. ResetSystemErase Resets all index and input data, and calls System.gc(). This doesn’t reset statistics. It also calls ResetInputs. All writers and readers are closed, nulled, and deleted. The index and directory are erased. You must call CreateIndex to create a new index after this call if you intend to add documents to an index. ResetSystemSoft Just like ResetSystemErase, except the index and work directory aren’t erased. This is useful for testing performance of opening an existing index for searching or updating. You can use the OpenIndex task after this reset. Table C.7. Built-in tasks for indexing and searching

Task Name

- Description

Parameter

CreateIndex - Creates a new index with IndexWriter. You can then use the AddDoc tand UpdateDoc tasks to make changes to the index.

OpenIndex - Opens an existing index with IndexWriter. You can then use the AddDoc and UpdateDoc tasks to change the index.

commitName A string label specifying which commit should be opened. This must match the commitName passed to a previous CommitIndex call. OptimizeIndex - Optimizes the index. This task optionally takes an integer parameter, which is the maximum number of segments to optimize. This calls the IndexWriter.optimize(int maxNumSegments) method. If there’s no parameter, it defaults to 1. This requires that an IndexWriter be opened with either CreateIndex or OpenIndex.

maxNumSegments This is an integer, allowing you to perform a partial optimize if it’s greater than 1. CommitIndex - Calls commit on the currently open IndexWriter. This requires that an IndexWriter be opened with either CreateIndex or OpenIndex.

commitName A string label that’s recorded into the commit and can later be used by OpenIndex to open a specific commit. RollbackIndex - Calls IndexWriter.rollback to undo all changes done by the current IndexWriter since the last commit. This is useful for repeatable tests where you want each test to perform indexing but not commit any of the changes to the index so that each test always starts from the same index.

CloseIndex - Closes the open index.

doWait true or false, passed to IndexWriter.close. If false, the IndexWriter will abort any running merges and forcefully close. This parameter is optional and defaults to true. OpenReader - Creates an IndexReader and IndexSearcher, available for the search tasks. If a Read task is invoked, it will use the currently open reader. If no reader is open, it will open its own reader, perform its task, and then close the reader. This enables testing of various scenarios: sharing a reader, searching with a cold reader, searching with a warm reader, etc.

readOnly, commitName readOnly is true or false. commitName is a string name of the specific commit point that should be opened. NearRealtimeReader - Creates a separate thread that periodically calls getReader() on the current IndexWriter to obtain a near-real-time reader, printing to System.out how long the reopen took. This task also runs a fixed query body:1, sorting by docdate, and reports how long the query took to run.

pauseSec A float that specifies how long to wait before opening each near-real-time reader. FlushReader - Flushes but doesn’t close the currently open IndexReader. This is only meaningful if you’ve used one of the Delete tasks to perform deletions.

commitName A string name of the specific commit point that should be written. CloseReader - Closes the previously opened reader.

NewAnalyzer - Switches to a new analyzer. This task takes a single parameter, which is a comma-separated list of class names. Each class name can be shortened to just the class name, if it falls under the org.apache.lucene.analysis package; otherwise, it must be fully qualified. Each time this task is executed, it will switch to the next analyzer in its list, rotating back to the start if it hits the end.

Search - Searches an index. If the reader is already opened (with the OpenReader task), it’s searched. Otherwise, a new reader is opened, searched, and closed. This task simply issues the search but doesn’t traverse the results.

SearchWithSort - Searches an index with a specified sort.

sortDesc A comma-separated list of field:type values. For example "country:string,sort_field:int". doc means to sort by Lucene’s docID; noscore means to not compute scores; nomaxscore means to not compute the maximum score. SearchTrav - Searches an index and traverses the results. Like Search, except the top ScoreDocs are visited. This task takes an optional integer parameter, which is the number of ScoreDocs to visit. If no parameter is specified, the full result set is visited. This task returns as its count the number of documents visited.

traversalSize Integer count of how many ScoreDocs to visit. SearchTravRet - Searches an index and traverses and retrieves the results. Like SearchTrav, except for each ScoreDoc visited, the corresponding document is also retrieved from the index.

traversalSize Integer count of how many ScoreDocs to visit. SearchTravRetLoadFieldSelector - Search an index and traverse and retrieve only specific fields in the results, using FieldSelector. Like SearchTrav, except this task takes an optional comma-separated string parameter, specifying which fields of the document should be retrieved.

fieldsToLoad A comma-separated list of fields to load. SearchTravRetHighlight - Searches an index; traverses, retrieves, and highlights certain fields from the results using contrib/highlighter.

highlightDesc This task takes a comma-separated parameter list to control details of the highlighting. Please consult its Javadocs for the details. SearchTravRetVectorHighlight - Searches an index; traverses, retrieves, and highlights certain fields from the results using contrib/fast-vector-highlighter.

highlightDesc This task takes a comma-separated parameter list to control details of the highlighting. Please consult its Javadocs for the details. SetProp - Changes a property’s value. Normally a property’s value is set once when the algorithm is first loaded. This task lets you change a value midstream. All tasks executed after this one will see the new value.

propertyName, value Name and new value for the property. Warm - Warms up a previously opened searcher by retrieving all documents in the index. Note that in a real application, this isn’t sufficient as you’d also want to prepopulate the FieldCache if you’re using it, and possibly issue initial searches for commonly searched for terms. Alternatively, you could create steps in your algorithm that simply run your own sequence of queries, as your custom warm-up.

DeleteDoc - Deletes a document by document ID, or by incrementing step size to compute the document ID to be deleted. Note that this task performs its deletions using IndexReader, so you must first open one using OpenReader.

docID An integer. If the parameter is negative, deletions are done by the doc.delete.step setting. For example, if the step size is 10, then each time this task is executed it will delete the document IDs in the sequence 0, 10, 20, 30, etc. If the parameter is non-negative, then this is a fixed document ID to delete. DeleteByPercent - Removes the specified percentage of all documents (maxDoc()). If the index has already removed more than this percentage, then first undeleteAll is called, and then the target percentage is deleted. Note that this task performs its deletions using IndexReader, so you must first open one using OpenReader.

Percent Double value (from 0 to 100) specifying what percentage of all docs should be deleted. AddDoc - Adds the next document to the index. IndexWriter must already be opened.

docSize A numeric parameter indicating the size of the added document, in characters. The body of each document from the content source will be truncated to this size, with the leftover being prepended to the next document. This requires that the doc maker support changing the document size. UpdateDoc - Calls IndexWriter.updateDocument to replace documents in the index. The docid field of the incoming document is passed as the Term to specify which document should be updated. The doc.random.id.limit setting, which randomly assigns docIDs, is useful when testing updateDocument.

docSize Same meaning as AddDoc. ReadTokens - This task tests the performance of just the analyzer’s tokenizer. It simply reads the next document from the doc maker and fully tokenizes all its fields. As the count, this task returns the number of tokens encountered. This is a useful task to measure the cost of document retrieval and tokenization. By subtracting this cost from the time spent building an index, you can get a rough measure of what the actual indexing cost is.

WriteLineDocTask - Creates a line file that can then be used by LineDocMaker. See section C.4.1 for details.

docSize Same meaning as addDoc. Wait - Simply waits for the specified amount of time. This is useful when a number of prior tasks are running in the background (simply append &).

Time to wait. Append s for seconds, m for minutes, and h for hours. - Turning off statistics of the child task requires the > character instead of } or ]. This is useful for avoiding the overhead of gathering statistics when you don’t require that level of detail. For example:

{ "ManyAdds" AddDoc > : 10000 adds 10,000 docs and won’t individually track statistics of each AddDoc call (but the 10,000 added docs are tracked by the outer sequence containing "ManyAdds"). - In addition to specifying how many times a task or task sequence should be repeated, you can specify the target rate in count

per second (default) or count per minute. Do this by adding : N : R after the task. For example:

{ AddDoc } : 10000 : 100/sec adds 10,000 documents at a rate of 100 documents per second. Or

[ AddDoc ]: 10000: 3 adds 10,000 docs in parallel, spawning one thread for each added document, at a rate of three new threads per second. - Each task contributes to the records count that’s used for reporting at the end. For example, AddDoc returns 1. Most tasks return count 1, some return count 0, and some return a count greater than 1. Sometimes you don’t want

to include the count of a task in your final report. To do that, simply prepend a hyphen before your task. For example, if

you use this:

{"BuildIndex"

-CreateIndex

{AddDoc}:10000

-CloseIndex

} the report will record exactly 10,000 records, but if you leave the - out, it reports 10,002.

C.4. Built-in tasks

We’ve discussed the settings and the control structures, or glue, that allow you to combine tasks into larger sequences of tasks. Now, finally, let’s review the built-in tasks. Table C.6 describes the built-in administration tasks, and table C.7 describes the tasks for indexing and searching

If the commands available for use in the algorithm don’t meet your needs, you can add commands by adding a new task under the org.apache.lucene.benchmark.byTask.tasks package. You should extend the PerfTask abstract class. Make sure that your new task class name is suffixed by Task. For example, once you compile the class SliceBreadTask.java and ensure it’s on the classpath that you specify to Ant, then you can invoke this task by using SliceBread in your algorithm.

C.4.1. Creating and using line files

Line files are simple text files that contain one document per line. Indexing documents from a line file incurs quite a bit less overhead than other approaches, such as opening and closing one file per document, pulling files from a database, or parsing an XML file. Minimizing such overhead is important if you’re trying to measure performance of just the core indexing. If instead you’re trying to measure indexing performance from a particular content source, then you should not use a line file.

The benchmark framework provides a simple task, WriteLineDoc, to create line files from any content source. Using this task, you can translate any source into a line file. The one limitation is that each document only has a date, title, and body field. The line.file.out setting specifies the file that’s created. For example, use this algorithm to translate the Reuters corpus into a single-line file:

# Where to get documents from:

content.source=org.apache.lucene.benchmark.byTask.feeds.ReutersContentSource

# Stop after processing the document feed once:

content.source.forever=false

# Where to write the line file output:

line.file.out=work/reuters.lines.txt

# Process all documents, appending each one to the line file:

{WriteLineDoc}: *

Once you’ve done this, you can then use reuters.lines.txt and LineDocSource like this:

# Feed that knows how to process the line file format:

content.source=org.apache.lucene.benchmark.byTask.feeds.LineDocSource

# File that contains one document per line:

docs.file=work/reuters.lines.txt

# Process documents only once:

content.source.forever=false

# Create a new index, index all docs from the line file, close the

# index, produce a report.

CreateIndex

{AddDoc}: *

CloseIndex

RepSumByPref AddDoc

C.4.2. Built-in reporting tasks

Reporting tasks generate a summary report at the end of the algorithm, showing how many records per second were achieved, how much memory was used, showing one line per task or task sequence that gathered statistics. The reporting tasks themselves aren’t measured or reported. Table C.8 describes the built-in reporting tasks. If needed, additional reports can be added by extending the abstract class ReportTask and by manipulating the statistics data in Points and TaskStats.

Table C.8. Reporting tasks

|

Task name |

Description |

|---|---|

| RepAll | All (completed) tasks run. |

| RepSumByName | All statistics, aggregated by name. So, if AddDoc was executed 2,000 times, only one report line would be created for it, aggregating all those 2,000 statistic records. |

| RepSelectByPref prefix | All records for tasks whose name start with prefix. |

| RepSumByPref prefix | All records for tasks whose name start with prefix aggregated by their full task name. |

| RepSumByNameRound | All statistics, aggregated by name and by round. So, if AddDoc was executed 2,000 times in each of three rounds, three report lines would be created for it, aggregating all those 2,000 statistic records in each round. See more about rounds in the NewRound task description in table C.6. |

| RepSumByPrefRound prefix | Similar to RepSumByNameRound, except only tasks whose name starts with prefix are included. |

C.5. Evaluating search quality

How do you test the relevance or quality of your search application? Relevance testing is crucial because, at the end of the day, your users won’t be satisfied if they don’t get relevant results. Many small changes to how you use Lucene, from the analyzer chain, to which fields you index, to how you build up a Query, to how you customize scoring, can have large impacts on relevance. Being able to properly measure such effects allows you to make changes that improve your relevance.

Yet, despite being the most important aspect of a search application, quality is devilishly difficult to pin down. There are certainly many subjective approaches. You can run a controlled user trial, or you can play with the application yourself. What do you look for? Besides checking if the returned documents are relevant, there are many other things to check: Are the excerpts accurate? Is the right metadata presented? Is the UI easily consumed on quick glance? No wonder so few applications are tuned for their relevance!

That said, if you’d like to objectively measure the relevance of returned documents, you’re in luck: the quality package, under benchmark, allow you to do so. These classes provide concrete implementations based on the formats from the TREC corpus, but you can also implement your own. You’ll need a “ground truth” transcribed set of queries, where each query lists the documents that are relevant to it. This approach is entirely binary: a given document from the index is deemed either relevant or not. From these we can compute precision and recall, which are the standard metrics in the information retrieval community for objectively measuring relevance of search results. Precision measures what subset of the documents returned for each query were relevant. For example, if a query has 20 hits and only one is relevant, precision is 0.05. If only one hit was returned and it was relevant, precision is 1.0. Recall measures what percentage of the relevant documents for that query was returned. So if the query listed eight documents as being relevant but six were in the result set, that’s a recall of 0.75.

In a properly configured search application, these two measures are naturally at odds with each other. Let’s say, on one extreme, you only show the user the very best (top 1) document matching his query. With such an approach, your precision will typically be high, because the first result has a good chance of being relevant, whereas your recall would be very low, because if there are many relevant documents for a given query you have only returned one of them. If we increase top 1 to top 10, then suddenly we’ll be returning many documents for each query. The precision will necessarily drop because most likely you’re now allowing some nonrelevant documents into the result set. But recall should increase because each query should return a larger subset of its relevant documents.

Still, you’d like the relevant documents to be higher up in the ranking. To account for this, average precision is computed. This measure computes precision at each of the N cutoffs, where N ranges from 1 to a maximum value, and then takes the average. So this measure is higher if your search application generally returns relevant documents earlier in the result set. Mean average precision (MAP) then measures the mean of average precision across a set of queries. A related measure, mean reciprocal rank (MRR), measures 1/M, where M is the first rank that had a relevant document. You want both of these numbers to be as high as possible.

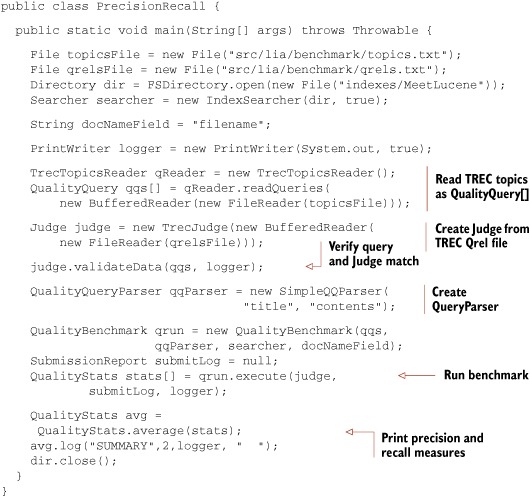

Listing C.1 shows how to use the quality package to compute precision and recall. Currently, in order to measure search quality, you must write your own Java code (there are no built-in tasks for doing so that would allow you to solely use an algorithm file). The queries to be tested are represented as an array of QualityQuery instances. The TrecTopicsReader knows how to read the TREC topic format into QualityQuery instances, but you could also implement your own. Next, the ground truth is represented with the simple Judge interface. The TrecJudge class loads TRECs Qrel format and implements Judge.QualityQueryParser translates each QualityQuery into a real Lucene query. Finally, QualityBenchmark tests the queries by running them against a provided IndexSearcher. It returns an array of QualityStats, one each for each of the queries. The QualityStats.average method computes and reports precision and recall.

Listing C.1. Computing precision and recall statistics for your IndexSearcher

When you run the code in listing C.1 by entering ant PrecisionRecall at the command line within the book’s source code directory, it will produce something like this:

SUMMARY Search Seconds: 0.015 DocName Seconds: 0.006 Num Points: 15.000 Num Good Points: 3.000 Max Good Points: 3.000 Average Precision: 1.000 MRR: 1.000 Recall: 1.000 Precision At 1: 1.000 Precision At 2: 1.000 Precision At 3: 1.000 Precision At 4: 0.750 Precision At 5: 0.600 Precision At 6: 0.500 Precision At 7: 0.429 Precision At 8: 0.375 Precision At 9: 0.333 Precision At 10: 0.300 Precision At 11: 0.273 Precision At 12: 0.250 Precision At 13: 0.231 Precision At 14: 0.214

Note that this test uses the MeetLucene index, so you’ll need to run ant Indexer if you skipped over that in chapter 1. This was a trivial test, because we ran on a single query that has exactly three correct documents (see the source files src/lia/benchmark/topics.txt for the queries and src/lia/benchmark/qrels.txt for the correct documents). You can see that the precision was perfect (1.0) for the top three results, meaning the top three results were in fact the correct answer to this query. Precision then gets worse beyond the top three results because any further document is incorrect. Recall is perfect (1.0) because all three correct documents were returned. In a real test you won’t see perfect scores.

C.6. Errors

If you make a mistake in writing your algorithm, which is in fact very easy to do, you’ll see a somewhat cryptic exception like this:

java.lang.Exception: Error: cannot understand algorithm! at org.apache.lucene.benchmark.byTask.Benchmark.<init>(Benchmark.java:63) at org.apache.lucene.benchmark.byTask.Benchmark.main(Benchmark.java:98) Caused by: java.lang.Exception: colon unexpexted: - Token[':'], line 6 at org.apache.lucene.benchmark.byTask.utils.Algorithm.<init>(Algorithm.java:120) at org.apache.lucene.benchmark.byTask.Benchmark.<init>(Benchmark.java:61)

When this happens, simply scrutinize your algorithm. One common error is a misbalanced { or }. Try iteratively simplifying your algorithm to a smaller part and run that to isolate the error.

C.7. Summary

As you’ve seen, the benchmark package is a powerful framework for quickly creating indexing and searching performance tests and for evaluating your search application for precision and recall. It saves you tons of time because all the normal overhead in creating a performance test is handled for you. Combine this with the large library of built-in tasks for common indexing and searching operations, plus extensibility to add your own report, task, document, or query source, and you’ve got one very useful tool under your belt.