The purpose of backpropagation is to update each of the weights in the network so that they cause the actual output to be closer to the target output, thereby minimizing the error for each output neuron and the network as a whole.

Let's focus on the output layer first. We want to find out how a change in w5 affects the total error.

This is given by $\frac{\partial E_{total}}{\partial w_5}$, the partial derivative of $E_{total}$ with respect to $w_5$.

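Let's apply the chain rule here:

$$\frac{\partial E_{total}}{\partial w_5} = \frac{\partial E_{total}}{\partial \text{Output}_{OL1}} \cdot \frac{\partial \text{Output}_{OL1}}{\partial \text{Input}_{OL1}} \cdot \frac{\partial \text{Input}_{OL1}}{\partial w_5}$$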

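$$\frac{\partial E_{total}}{\partial \text{Output}_{OL1}} = \text{Output}_{OL1} - \text{Target}_{OL1} = 0.690966 - 0.9 = -0.209034$$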

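$$\frac{\partial \text{Output}_{OL1}}{\partial \text{Input}_{OL1}} = \text{Output}_{OL1} \cdot (1 - \text{Output}_{OL1}) = 0.690966 \cdot (1 - 0.690966) = 0.213532$$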

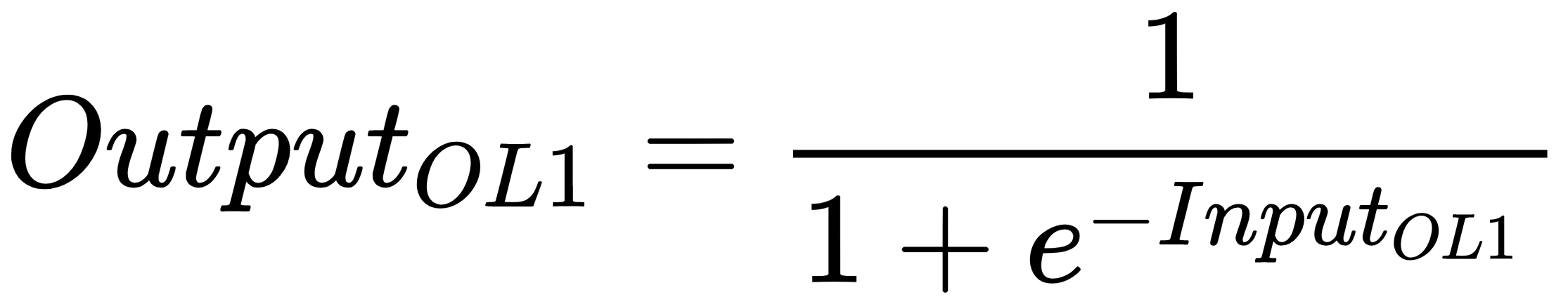

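$$\text{Input}_{OL1} = w_5 \cdot \text{Output}_{HL1} + w_7 \cdot \text{Output}_{HL2} + B_2$$

$$\frac{\partial \text{Input}_{OL1}}{\partial w_5} = \text{Output}_{HL1} = 0.650219$$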

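Now, let's get back to the chain-rule equation and substitute the three values:

$$\frac{\partial E_{total}}{\partial w_5} = -0.209034 \cdot 0.213532 \cdot 0.650219 \approx -0.029023$$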
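The three chain-rule factors can be checked with a few lines of Python. This is only a sketch: the variable names are made up for illustration, and it assumes a squared-error loss of the form 1/2 (target - output)^2 and a sigmoid activation, which is what the numbers in this section are consistent with.

```python
# Values taken from the worked example in this section.
output_ol1 = 0.690966   # actual output of the first output neuron
target_ol1 = 0.9        # target output of the first output neuron
output_hl1 = 0.650219   # output of the first hidden neuron

# dE_total/dOutput_OL1, assuming E = 1/2 * (target - output)^2
d_error_d_output = output_ol1 - target_ol1

# dOutput_OL1/dInput_OL1, assuming a sigmoid activation
d_output_d_input = output_ol1 * (1.0 - output_ol1)

# dInput_OL1/dw5 is simply the hidden output that w5 multiplies
d_input_d_w5 = output_hl1

# Chain rule: multiply the three factors to get dE_total/dw5
grad_w5 = d_error_d_output * d_output_d_input * d_input_d_w5
print(grad_w5)
```

The gradient is negative, so increasing w5 slightly would reduce the total error.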

To update the weight, we use the following formula, with the learning rate set to α = 0.1:

$$w_5^{new} = w_5 - \alpha \cdot \frac{\partial E_{total}}{\partial w_5}$$
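The update step can be sketched as a plain gradient-descent step. The helper name `update_weight` and the starting value of w5 below are hypothetical placeholders, not values from the example network; the gradient is the approximate product of the three chain-rule factors computed in this section.

```python
def update_weight(weight, gradient, learning_rate=0.1):
    """Return the weight after one gradient-descent step."""
    return weight - learning_rate * gradient


w5_old = 0.3          # hypothetical placeholder, NOT the value used in the example
grad_w5 = -0.029023   # approximate dE_total/dw5 from the chain-rule factors
w5_new = update_weight(w5_old, grad_w5)
print(w5_new)
```

Because the gradient is negative, the step moves w5 upward; a positive gradient would move it downward.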

Similarly, the partial derivatives of $E_{total}$ with respect to the remaining output-layer weights have to be calculated. The approach remains the same. We leave these for you to compute, as working through them will help you understand the concepts better.

When it comes to the hidden layer, the approach remains the same, but the formula changes a little. I will give you the formula; the rest of the computation is left to you.

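We will take w1 here.

Let's apply the chain rule here:

$$\frac{\partial E_{total}}{\partial w_1} = \frac{\partial E_{total}}{\partial \text{Output}_{HL1}} \cdot \frac{\partial \text{Output}_{HL1}}{\partial \text{Input}_{HL1}} \cdot \frac{\partial \text{Input}_{HL1}}{\partial w_1}$$

Because the output of the first hidden neuron feeds every output neuron, the first factor is a sum over the output neurons, where $E_{OLk}$ is the error of output neuron $k$:

$$\frac{\partial E_{total}}{\partial \text{Output}_{HL1}} = \sum_k \frac{\partial E_{OLk}}{\partial \text{Output}_{HL1}}$$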

The same formula applies to w2, w3, and w4. Make sure that you take the partial derivative of $E_{total}$ with respect to each of these weights and, in the end, use the learning rate formula to get the updated weight.
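To check your hand computation for a hidden-layer weight, it can help to compare the chain-rule result against a numerical (finite-difference) gradient. The sketch below does this for w1 on a small 2-2-2 network. Everything in it is an assumption made for illustration: the input, weight, bias, and target values are hypothetical, the wiring of w2, w3, w4, w6, and w8 is guessed by analogy with the formula for InputOL1, and the network is assumed to use sigmoid activations with a squared-error loss.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(w, x, b1, b2):
    """Forward pass; returns hidden outputs and output-layer outputs."""
    h1 = sigmoid(w["w1"] * x[0] + w["w3"] * x[1] + b1)  # assumed wiring
    h2 = sigmoid(w["w2"] * x[0] + w["w4"] * x[1] + b1)  # assumed wiring
    o1 = sigmoid(w["w5"] * h1 + w["w7"] * h2 + b2)
    o2 = sigmoid(w["w6"] * h1 + w["w8"] * h2 + b2)      # assumed wiring
    return (h1, h2), (o1, o2)


def total_error(w, x, t, b1, b2):
    """Squared-error loss summed over both output neurons."""
    _, (o1, o2) = forward(w, x, b1, b2)
    return 0.5 * (t[0] - o1) ** 2 + 0.5 * (t[1] - o2) ** 2


def grad_w1(w, x, t, b1, b2):
    """dE_total/dw1 via the chain rule."""
    (h1, _), (o1, o2) = forward(w, x, b1, b2)
    # Per output neuron: dE/dOutput * dOutput/dInput
    delta_o1 = (o1 - t[0]) * o1 * (1.0 - o1)
    delta_o2 = (o2 - t[1]) * o2 * (1.0 - o2)
    # dE_total/dOutput_HL1: sum of the contributions through both outputs
    d_error_d_h1 = delta_o1 * w["w5"] + delta_o2 * w["w6"]
    # dOutput_HL1/dInput_HL1 = h1 * (1 - h1); dInput_HL1/dw1 = x[0]
    return d_error_d_h1 * h1 * (1.0 - h1) * x[0]


# Hypothetical values, chosen only for illustration.
weights = {"w1": 0.15, "w2": 0.25, "w3": 0.2, "w4": 0.3,
           "w5": 0.4, "w6": 0.5, "w7": 0.45, "w8": 0.55}
inputs, targets = (0.05, 0.1), (0.9, 0.1)
bias1, bias2 = 0.35, 0.6

analytic = grad_w1(weights, inputs, targets, bias1, bias2)

# Central finite difference on w1 as an independent check
eps = 1e-6
plus = dict(weights, w1=weights["w1"] + eps)
minus = dict(weights, w1=weights["w1"] - eps)
numeric = (total_error(plus, inputs, targets, bias1, bias2)
           - total_error(minus, inputs, targets, bias1, bias2)) / (2 * eps)

# Learning-rate formula with alpha = 0.1
w1_new = weights["w1"] - 0.1 * analytic
print(analytic, numeric, w1_new)
```

If the two gradients agree to several decimal places, the chain-rule derivation is very likely correct; the same check works for w2, w3, and w4 by perturbing the corresponding weight.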