3

Deep Reinforcement Learning for Wireless Network

Bharti Sharma1*, R.K Saini1, Akansha Singh2 and Krishna Kant Singh3

1 Department of Computer Application, DIT University, Dehradun, Uttarakhand, India

2 Department of Computer Science Engineering, ASET, Amity University Uttar Pradesh, Noida, India

3 Department of ECE, KIET Group of Institutions, Ghaziabad, India

Abstract

The rapid introduction of mobile devices and the growing popularity of mobile applications and services create unprecedented infrastructure requirements for mobile and wireless networks. Future 5G systems are evolving to support growing mobile traffic, accurate real-time analytics, and flexible network resource management to maximize user experience. These tasks are challenging as mobile environments become increasingly complex, heterogeneous and dynamic. One possible solution is to use advanced machine learning techniques to help cope with the growth of data and algorithm-based applications. The recent success of deep learning provides new and powerful tools for solving problems in this domain. This chapter presents how deep reinforcement learning can be integrated into the architecture of future wireless communication networks.

Keywords: Big data, cellular network, deep learning, machine learning, neural network, reinforcement learning, wireless network, IoT

3.1 Introduction

The wireless networking landscape is undergoing a major revolution. The smartphone-oriented networks of past years are gradually turning into a huge Internet of things (IoT) ecosystem that integrates a heterogeneous mix of wireless devices, from smartphones to unmanned aerial vehicles, connected vehicles, wearable devices, sensors and virtual reality devices. This transformation will not only lead to an exponential increase in wireless traffic in the foreseeable future, but also to the emergence of new and untested use cases for wireless services that differ significantly from conventional multimedia or voice services. For instance, beyond the need for high data rates, which has been the main driver of wireless network evolution in the past decade, next-generation wireless networks must be able to deliver ultra-reliable, low-latency communication [1–4] that adapts in real time to a rich and dynamic IoT environment.

In future wireless networks, large volumes of data must be collected, periodically and in real time, across a massive number of sensing and wearable devices. Such massive short-packet transmissions will add up to substantial traffic over the wireless uplink, which has traditionally been much less congested than the downlink. The same wireless network must also support cloud-based gaming [5], immersive virtual reality services, real-time HD streaming, and conventional multimedia services. This ultimately creates a radically different networking environment whose novel applications and their diverse quality-of-service (QoS) and reliability requirements mandate a fundamental change in the way in which wireless networks are modeled, analyzed, designed, and optimized.

As an important enabling technology for artificial intelligence, machine learning has been successfully applied in many areas, including computer vision, medical diagnosis, search engines and speech recognition [4]. Machine learning is a field of study that gives computers the ability to learn without being explicitly programmed. Machine learning techniques can generally be classified as supervised learning, unsupervised learning and reinforcement learning. In supervised learning, the aim of the learning agent is to learn a general rule that maps inputs to outputs from example inputs and their desired outputs, which constitute the labeled data set. In unsupervised learning, no labeled data is needed, and the agent tries to find structure in its input. In reinforcement learning, the agent continuously interacts with an environment and tries to generate a good policy according to the immediate reward or cost fed back by the environment. In recent years, the development of fast and massively parallel graphics processing units and the significant growth of data have contributed to the progress in deep learning, which can achieve more powerful representation capabilities. Machine learning has the following advantages over traditional resource management, networking, mobility management and localization algorithms.

The first advantage is that machine learning can learn useful information from input data, which can help improve network performance. For example, convolutional neural networks and recurrent neural networks can extract spatial and sequential features from a time-varying received signal strength indicator (RSSI), which can mitigate the ping-pong effect in mobility management [6], and more accurate indoor localization in three-dimensional space can be achieved by using an auto-encoder to extract robust fingerprint patterns from noisy RSSI measurements [7]. Second, machine learning based resource management, networking and mobility management algorithms can adapt well to a dynamic environment. For instance, because a deep neural network has been proven to be a universal function approximator, traditional high-complexity algorithms can be closely approximated, and similar performance can be achieved with much lower complexity [8], which makes it possible to respond quickly to environmental changes. In addition, reinforcement learning can achieve fast network control based on learned policies. Third, machine learning helps to realize the goal of network self-organization. For example, using multi-agent reinforcement learning, each node in the network can self-optimize its transmission power, sub-channel allocation and so on. Finally, by involving transfer learning, machine learning can quickly solve a new problem. It is known that there are temporal and spatial correlations in wireless systems, such as between the traffic loads of neighboring regions [9]. Hence, it is possible to transfer the knowledge acquired in one task to another relevant task, which can speed up the learning process for the new task. In traditional algorithm design, by contrast, such prior knowledge is often not utilized.

This chapter reviews the increasing use of machine learning methods in different subfields of wireless networking, such as resource management, networking, mobility management, localization and computation resource management. It also covers the use of deep reinforcement learning in wireless networking as a cutting-edge technology for mobile network analysis and management, reviewed alongside other machine learning algorithms.

The remainder of this chapter is organized as follows: Section 3.2 discusses related work and Section 3.3 describes the progression from machine learning to deep learning. Section 3.3.1 gives a brief introduction to advanced machine learning techniques. Applications of machine learning, including deep reinforcement learning, in wireless networks are discussed in Section 3.4. The advantages of deep reinforcement learning are addressed in Section 3.4.4, followed by the conclusion.

3.2 Related Work

Wireless networking and deep learning have mostly been researched independently, and crossovers between the two areas have emerged only recently. Several notable works paint a comprehensive picture of the deep learning and/or wireless networking research landscape. LeCun et al. discussed the goal of deep learning, introduced many frequently used models, and focused on the potential of deep neural networks [10]. Schmidhuber gave a detailed survey of deep learning, including its evolution, methods, applications, and open research problems [11]. Liu et al. reviewed the fundamental principles of deep learning models and discussed deep learning developments in selected applications [12]. Arulkumaran et al. presented fundamental algorithms and many architectures for deep reinforcement learning, covering trust region policy optimization, deep Q-networks and asynchronous advantage actor–critic [13]; the authors also highlighted the performance of deep neural networks in different control problems. The applications of deep reinforcement learning have also been surveyed in [14]. Zhang et al. discussed the application of deep learning in recommender systems [15] and also focused on its potential in mobile advertising. As deep learning becomes increasingly popular, Goodfellow et al. provided a broad tutorial on deep learning in a book that covers prerequisite knowledge, underlying principles, and frequently used applications [16]. Intelligent wireless networking is currently a popular research topic, and related works in this direction have been reviewed in the literature [17–21, 24].

Jiang et al. discussed the capabilities of machine learning in 5G network applications, covering massive MIMO and smart grids [25]. The authors also identified research gaps between ML and 5G. Li et al. discussed the potential and challenges of integrating artificial intelligence (AI) into future wireless network architectures and the importance of AI in the 5G era [26]. Klaine et al. presented several successful ML algorithms in self-organizing networks (SONs), discussed the pros and cons of the different algorithms, and identified future research directions [27]. The potential of artificial intelligence and big data for energy efficiency was discussed in [28]. Chen et al. presented traffic offloading approaches in wireless networks and proposed a novel reinforcement learning based solution [29], which opened a new research field in applying machine learning to greening cellular networks.

More recently, Fadlullah et al. presented a broad survey on the development of deep learning in different areas, including the application of deep learning in network traffic control systems [30]. The authors also highlighted several unsolved research issues for future study. Ahad et al. introduced various algorithms, applications, and rules for using neural networks in wireless networking problems [31]. This study discussed traditional neural network models in depth, along with their applications in current mobile networks. Lane et al. investigated the strengths and advantages of deep learning in mobile sensing and focused on its potential for accurate inference on wireless devices [32]. Ota et al. presented deep learning applications in mobile multimedia. Their survey covered state-of-the-art deep learning algorithms in mobile health, mobile security and speech recognition. Mohammadi et al. surveyed recent deep learning methods for Internet of things (IoT) based data analytics [33]. They reviewed in detail existing works that incorporated deep learning into the IoT domain. Mao et al. reviewed deep learning in wireless networking [34]. Their work surveyed the state of the art of deep learning in wireless networks and discussed research challenges to be solved in the future.

3.3 Machine Learning to Deep Learning

Machine learning refers to computer programs that are trained from data rather than following pre-programmed instructions (as in traditional programming). Machine learning concepts are usually categorized into supervised learning, unsupervised learning, and reinforcement learning. Recently, many researchers have shown increasing interest in combining supervised and unsupervised approaches to form semi-supervised learning. In supervised learning, the model learns a mapping from input to output using a given dataset. Classification is a problem frequently solved using supervised learning. In classification, every sample in the dataset belongs to one of M classes. The class of each sample is given by a label that takes a discrete value in {0,…,M−1}. When the labels are real (continuous) values instead, the task is known as a regression problem. In unsupervised learning, the dataset is a collection of samples without labels. Typically, the aim of unsupervised learning is to find patterns that have some form of regularity. Usually, a model is fit to the data with the goal of modeling the input distribution. This is known as density estimation in statistics. Unsupervised learning can also be used to extract features for supervised learning. In semi-supervised learning, the number of labeled samples is small, while there are usually a large number of unlabeled samples. This is a typical case in real-world situations. The goal of semi-supervised learning is to find a mapping from input to output while also making the unlabeled information useful for the mapping task. At the least, the semi-supervised model should perform better than a supervised model trained on the labeled samples alone.

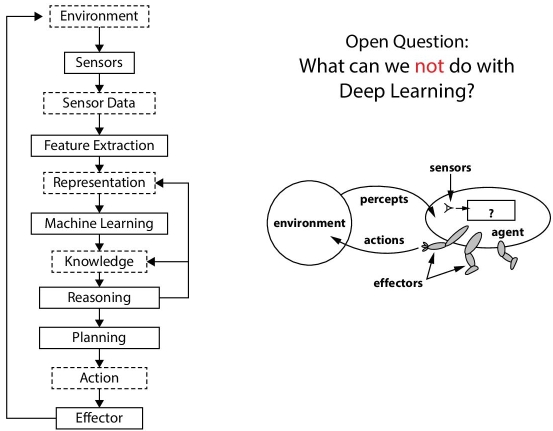

In recent times, deep learning has received a lot of attention compared with other machine learning algorithms because of its performance in many applications. Deep learning outperforms traditional machine learning approaches thanks to automatic feature extraction and to the greater computation power made available by the development of GPUs. Deep learning addresses a well-known problem in machine learning, namely the problem of representation. The inspiration for deep learning is to model how the human brain works. Figure 3.1 shows the fundamental difference between machine learning and deep learning. Deep learning has achieved major breakthroughs in speech recognition, object recognition, image segmentation, and machine translation. The scientific contributions and developments of the deep learning method provided the groundwork for the advances in such a large variety of applications [35]. In the same way, cellular networks can benefit from deep learning research, especially in the era of 5G [36, 37].

3.3.1 Advance Machine Learning Techniques

3.3.1.1 Deep Learning

The fundamental concepts of deep learning are the same as those of machine learning. The recent success of deep learning depends mainly on artificial neural networks (ANNs). Research on artificial neural networks dates back to the 1940s [35]. Initially, ANNs were not very popular, which is why their current recognition appears new. Broadly, there have been three eras of research in deep learning: the 1940s–1960s, when the field was known as cybernetics; the 1980s–1990s, when it was called connectionism; and the current surge that started in 2006, which is called deep learning. Early work on deep learning algorithms was motivated by computational models of how the brain learns, which is why research in deep learning went by the name of artificial neural networks. The first neuron model was presented in [39]. Later, the first perceptron learning algorithm was presented in [40]. Since then, many different models and learning algorithms have been introduced, such as Hopfield networks [41], self-organizing maps [42], Boltzmann machines [43], multi-layer perceptrons [44], radial-basis function networks [45], autoencoders [46], and sigmoid belief networks [47]. Deep learning solves the problem of representation learning by enabling computers to build complex concepts out of simpler concepts.

Figure 3.1 The machine and deep learning pictorial representation [38].

3.3.2 Deep Reinforcement Learning (DRL)

Reinforcement learning, a machine learning technique inspired by behavioral psychology, has emerged as an advanced technology and a new subcategory of machine learning. Figure 3.2 shows the learning method of reinforcement learning. DRL is concerned with an agent's reward or utility; the agent is connected to its surroundings via perception and action and adapts its behavior accordingly. In reinforcement learning (RL), the agent aims to optimize a long-term objective by interacting with the environment through a trial-and-error process.

Figure 3.2 Deep reinforcement learning [48].

Specifically, the following reinforcement learning algorithms have been applied by different researchers.

3.3.2.1 Q-Learning

One of the most commonly adopted reinforcement learning algorithms is Q-learning. The RL agent interacts with the environment to learn the Q values, based on which it takes an action. The Q value is defined as the discounted cumulative reward obtained by starting from a given state-action pair and then following a certain policy. Once the Q values have been learned after a sufficient amount of time, the agent can make a quick decision in the current state by taking the action with the largest Q value. More details about Q-learning can be found in [49]. In addition, fuzzy Q-learning can be used to handle continuous state spaces [50].
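
The Q-value update described above can be illustrated with a minimal tabular sketch. Everything specific here is an assumption made for illustration and does not come from [49]: the five-state chain environment `chain_step`, the fixed start state 0, the epsilon-greedy exploration with random tie-breaking, and the values of `alpha`, `gamma` and `epsilon`.

```python
import random


def q_learning(n_states, n_actions, step, episodes=500, alpha=0.1,
               gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning; `step(state, action)` returns (next_state, reward, done)."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(episodes):
        state = 0  # assumption: every episode starts in state 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                # Exploration: pick a random action.
                action = rng.randrange(n_actions)
            else:
                # Exploitation: pick a largest-Q action, breaking ties at random.
                best = max(q[state])
                action = rng.choice(
                    [a for a in range(n_actions) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted best Q value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def chain_step(state, action, goal=4):
    """Hypothetical 5-state chain: action 1 moves right, action 0 moves left."""
    next_state = min(state + 1, goal) if action == 1 else max(state - 1, 0)
    done = next_state == goal
    return next_state, (1.0 if done else 0.0), done


q_table = q_learning(n_states=5, n_actions=2, step=chain_step)
# Quick decision after learning: take the action with the largest Q value.
greedy_policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(4)]
```

In this toy setting the only reward is at the right end of the chain, so the learned greedy policy moves right in every non-terminal state.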

3.3.2.2 Multi-Armed Bandit Learning (MABL)

In an MAB model with a single agent, the agent sequentially takes an action and then receives a random reward generated by a corresponding distribution, with the aim of maximizing the aggregate reward. In this model, there is a tradeoff between taking the currently best action (exploitation) and gathering information to achieve a larger reward in the future (exploration). In an MAB model with multiple agents, the reward an agent receives after playing an action depends not only on this action but also on the other agents taking the same action. In this case, the model is expected to reach some steady state or equilibrium [51]. More details about MAB can be found in [52].
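
The exploration/exploitation tradeoff in the single-agent case can be sketched with an epsilon-greedy rule. This is only one of many bandit strategies and is chosen here for brevity; the Bernoulli reward model, the arm success probabilities and the value of `epsilon` are illustrative assumptions rather than details from [51] or [52].

```python
import random


def epsilon_greedy_bandit(success_probs, rounds=5000, epsilon=0.1, seed=1):
    """Single-agent bandit with Bernoulli arms and an epsilon-greedy rule."""
    rng = random.Random(seed)
    n_arms = len(success_probs)
    counts = [0] * n_arms        # how often each arm has been played
    estimates = [0.0] * n_arms   # running mean reward of each arm
    total_reward = 0.0
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # exploration
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploitation
        # Random reward drawn from the distribution attached to the chosen arm.
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


# Hypothetical arms, e.g. three channels with different success probabilities.
estimates, counts, total_reward = epsilon_greedy_bandit([0.2, 0.5, 0.8])
```

With a larger `epsilon` the agent gathers more information about all arms but spends more rounds on arms it already believes to be worse.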

3.3.2.3 Actor–Critic Learning (ACL)

The actor–critic learning algorithm is composed of an actor, a critic and an environment with which the actor interacts. In this algorithm, the actor first selects an action according to the current strategy and receives an immediate cost. Then, the critic updates the state value function based on a temporal difference (TD) error, and next, the actor updates the policy. The strategy can be updated from the learned policy using a Boltzmann distribution. When each action is revisited infinitely often for each state, the algorithm converges to the optimal state values [53].
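
One actor–critic iteration can be sketched as follows. This is a minimal tabular illustration under stated assumptions, not the exact algorithm of [53]: the feedback is written as a reward (a cost would enter with a negative sign), the actor stores one preference per state-action pair, and the step sizes, discount factor and temperature are arbitrary.

```python
import math


def boltzmann_policy(preferences, temperature=1.0):
    """Turn action preferences into selection probabilities (Boltzmann distribution)."""
    top = max(preferences)  # subtract the maximum for numerical stability
    weights = [math.exp((p - top) / temperature) for p in preferences]
    total = sum(weights)
    return [w / total for w in weights]


def actor_critic_step(values, preferences, state, action, reward, next_state,
                      alpha_critic=0.1, alpha_actor=0.1, gamma=0.9):
    """Apply one critic update and one actor update for an observed transition."""
    # Critic: temporal difference error of the state value function.
    td_error = reward + gamma * values[next_state] - values[state]
    values[state] += alpha_critic * td_error
    # Actor: reinforce the chosen action if it did better than expected.
    preferences[state][action] += alpha_actor * td_error
    return td_error


# Hypothetical two-state, two-action example.
values = [0.0, 1.0]
preferences = [[0.0, 0.0], [0.0, 0.0]]
td = actor_critic_step(values, preferences, state=0, action=1,
                       reward=1.0, next_state=1)
probabilities = boltzmann_policy(preferences[0])
```

After a positive TD error, the Boltzmann strategy assigns a higher probability to the action that was just taken in that state.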

3.3.2.4 Joint Utility and Strategy Estimation-Based Learning

In this algorithm, each agent holds an estimate of the expected utility, which is updated based on the immediate reward, and the probability of selecting each action, called the strategy, is updated in the same iteration based on the utility estimate [54]. The main benefit of this algorithm is that it is fully distributed when the reward can be calculated locally, as is the case, for example, for the data rate between a transmitter and its paired receiver. Based on this algorithm, one can further estimate the regret of each action from the utility estimates and the received immediate reward, and then update the strategy using the regret estimates. This algorithm is often connected with equilibrium concepts in game theory, such as logit equilibrium and coarse correlated equilibrium.
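
A single iteration of the coupled utility and strategy updates might look like the sketch below. The specific update rules (a running average for the utility of the played action and a step toward a Boltzmann distribution over the utility estimates), as well as `utility_rate`, `strategy_rate` and `temperature`, are assumptions chosen for illustration and are not taken from [54].

```python
import math


def juste_update(utilities, strategy, action, reward,
                 utility_rate=0.1, strategy_rate=0.05, temperature=0.5):
    """One local iteration for a single agent: utility estimate, then strategy."""
    # Utility estimate: only the action that was played is updated.
    new_utilities = list(utilities)
    new_utilities[action] += utility_rate * (reward - utilities[action])
    # Target strategy: Boltzmann distribution over the utility estimates.
    top = max(new_utilities)
    weights = [math.exp((u - top) / temperature) for u in new_utilities]
    total = sum(weights)
    target = [w / total for w in weights]
    # Strategy: move the selection probabilities a small step toward the target.
    new_strategy = [p + strategy_rate * (t - p) for p, t in zip(strategy, target)]
    return new_utilities, new_strategy


# Hypothetical agent with two actions (e.g. two transmit power levels).
utilities, strategy = juste_update([0.0, 0.0], [0.5, 0.5], action=1, reward=1.0)
```

Both updates use only the agent's own action and reward, which is what makes the scheme fully distributed when the reward is locally observable.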

3.4 Applications of Machine Learning Models in Wireless Communication

The next generation of wireless communication will offer high speeds that support innovative applications. In particular, next-generation wireless communication systems have to learn the different characteristics of users and their behavior in order to determine the optimal system configuration for each of them. Smart mobile terminals therefore have to rely on sophisticated learning and decision-making algorithms. There are many machine learning techniques, including regression algorithms, instance-based algorithms, regularization algorithms, decision tree algorithms, Bayesian algorithms, clustering algorithms, association rule-based learning algorithms, artificial neural networks, deep learning algorithms, dimension reduction algorithms, and ensemble algorithms, all of which differ in structure and functionality.

This section covers how machine learning models can help next-generation wireless communication systems improve network performance. The current generation of wireless networks has several technical problems that can be addressed by applying different machine learning algorithms. The 5G cellular network requires new technologies to provide predefined services to the intelligent wireless communication network. 5G cellular network operators face a difficult task in meeting different service requirements and handling complex configurations, because both users and the network are dynamic. Such requirements can be met by empowering machines and systems with intelligence. It is therefore vital to understand how artificial intelligence can be applied to the management of 5G communication networks [55–57].

3.4.1 Regression, KNN and SVM Models for Wireless

Regression models use statistical analysis to estimate the relationships among variables. The aim of a regression model is to predict output values for a set of input variables with one or more dimensions. A linear regression model fits a linear function, whereas a logistic regression model fits a sigmoid curve. The support vector machine (SVM) and K-nearest neighbors (KNN) algorithms are used for object classification. In KNN, an object is classified according to the classes of its k nearest neighbors, whereas SVM relies on a nonlinear mapping that transforms the data into a space where the classes are separable and then separates one class from another with an optimal linear separating hyperplane [56].
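
The KNN classification rule can be sketched in a few lines. The training data below is entirely hypothetical: pairs of RSSI readings (in dBm) from a serving and a neighboring base station, labeled with a handover decision, and the Euclidean distance metric and `k=3` are likewise illustrative choices.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort training samples by Euclidean distance to the query point.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical samples: (serving-cell RSSI, neighbor-cell RSSI) in dBm.
train_points = [(-60, -90), (-62, -88), (-65, -85),
                (-85, -65), (-88, -62), (-90, -60)]
train_labels = ["stay", "stay", "stay", "handover", "handover", "handover"]

decision = knn_predict(train_points, train_labels, query=(-87, -64))
```

KNN needs no training phase beyond storing the samples, but each prediction compares the query against the whole training set.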

KNN and SVM models can be used to predict and estimate the radio parameters of a particular mobile user. Because both models are well suited to search problems, they have been used to implement detection and channel estimation in massive MIMO systems, and a hierarchical support vector machine (H-SVM) has been used for the same purpose in MIMO-aided wireless systems [58]. The same approach was also used to estimate the noise level of the Gaussian channel between transmit and receive antennas. SVM and KNN have also been applied to find optimal solutions to handover problems. Parameters of next-generation mobile user terminals, such as usage patterns, can be used to train SVM and KNN models. KNN algorithms are also used to predict energy demand: user location and energy consumption rates can be used to train the machine learning models for this prediction [59]. Supervised machine learning models can interpret patterns and learn from user presence and usage in order to classify observations into system states efficiently, which saves energy and improves user management.

3.4.2 Bayesian Learning for Cognitive Radio

Bayesian learning computes the a posteriori probability distribution of the input signals. The special features of next-generation wireless networks can be acquired and estimated by Bayesian learning. A main issue in massive MIMO systems is pilot contamination, which can be addressed by estimating the channel parameters of both the desired links in a target cell and the interfering links of adjacent cells; Bayesian learning techniques can be applied to perform this estimation. In this approach, a Gaussian mixture model, i.e. a weighted sum of Gaussian distributions, is defined over the received signal and channel parameters, and the estimation is carried out using expectation maximization [60].
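
To make the expectation maximization step concrete, the sketch below fits a two-component Gaussian mixture to one-dimensional data. It is a toy illustration of EM for a weighted sum of Gaussians, not the channel estimator of [60]; the synthetic samples, the initialization at the data extremes and the number of iterations are all assumptions.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(data, n_iter=50):
    """Fit a two-component 1-D Gaussian mixture with expectation maximization."""
    means = [min(data), max(data)]  # assumption: initialize at the data extremes
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior probability of each component for each sample.
        resp = []
        for x in data:
            joint = [w * normal_pdf(x, m, v)
                     for w, m, v in zip(weights, means, variances)]
            total = sum(joint)
            resp.append([j / total for j in joint])
        # M-step: re-estimate weight, mean and variance of each component.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, 1e-6)  # guard against a collapsing component
    return weights, means, variances


# Synthetic samples from two well-separated Gaussians (illustrative only).
rng = random.Random(0)
samples = ([rng.gauss(-2.0, 0.5) for _ in range(200)]
           + [rng.gauss(3.0, 0.5) for _ in range(200)])
weights, means, variances = em_gmm_1d(samples)
```

On well-separated data such as this, the estimated means settle close to the two generating means after a few iterations.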

A two-state hidden Markov model was applied to estimate the presence and absence of primary users. The expectation maximization algorithm was used to estimate the amount of time for which the available channels perform at an optimized level [61]. The tomography model in Bayesian learning was used to estimate the prevalent parameters and interference patterns of the data link and network layers in cognitive radio. The path delay and the proportion of successful packet receptions in the link layer and network layer of cognitive radio are estimated by the tomography model [62].
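
How a two-state hidden Markov model tracks primary-user presence can be sketched with forward filtering. The state and observation encodings and all probabilities below are hypothetical placeholders, and the sketch assumes the model parameters are already known rather than learned with expectation maximization as in [61].

```python
def forward_filter(observations, transition, emission, prior):
    """Return the filtered state beliefs of a two-state HMM after each observation."""
    belief = list(prior)
    history = []
    for obs in observations:
        # Predict: propagate the belief through the state transition model.
        predicted = [sum(belief[i] * transition[i][j] for i in range(2))
                     for j in range(2)]
        # Update: weight by the likelihood of the new observation, then normalize.
        unnormalized = [predicted[j] * emission[j][obs] for j in range(2)]
        total = sum(unnormalized)
        belief = [u / total for u in unnormalized]
        history.append(belief)
    return history


# Hypothetical parameters: state 0 = channel idle, state 1 = primary user present.
transition = [[0.9, 0.1], [0.2, 0.8]]  # transition[i][j] = P(next = j | current = i)
emission = [[0.8, 0.2], [0.1, 0.9]]    # emission[j][o] = P(observation = o | state = j)
prior = [0.5, 0.5]

# Observation 1 = energy detected, 0 = no energy detected.
beliefs = forward_filter([1, 1, 1], transition, emission, prior)
```

Each additional "energy detected" observation increases the belief that the primary user is present, which a secondary user could use when deciding whether to transmit.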

3.4.3 Deep Learning in Wireless Network

It is widely acknowledged that the performance of traditional ML algorithms depends heavily on feature engineering [63]. The main advantage of deep learning is that it can automatically extract high-level features from data that has complex structure and inner correlations. The learning process is tremendously simplified because prior feature handcrafting is no longer needed [64]. This is important in the context of mobile networks, as mobile data is usually generated by heterogeneous sources, is often noisy, and exhibits non-trivial spatial/temporal patterns [65], whose labeling would otherwise require outstanding human effort. Deep learning is also capable of handling large amounts of data. Mobile networks generate high volumes of different types of data at a fast pace. Training traditional ML algorithms (e.g. support vector machine (SVM) [66] and Gaussian process (GP) [67]) sometimes requires storing all the data in memory, which is computationally infeasible in big data scenarios. Furthermore, the performance of traditional ML does not grow significantly with large volumes of data and plateaus relatively fast [68]. Deep neural networks benefit further because training with big data helps prevent model over-fitting. Traditional supervised learning is only effective when sufficient labeled data is available. However, most current mobile systems generate unlabeled or semi-labeled data [65]. Deep learning provides a variety of methods that exploit unlabeled data to learn useful patterns in an unsupervised manner, e.g., the restricted Boltzmann machine (RBM) [69] and the generative adversarial network (GAN) [70]. Applications include clustering [68], data distribution approximation [71], un/semi-supervised learning [72, 73], and one/zero-shot learning [74, 75], among others. Compressive representations learned by deep neural networks can be shared across different tasks, while this is limited or difficult to achieve in other ML paradigms (e.g., linear regression, random forest, etc.) [76].

Deep learning is effective in handling geometric mobile data [77], while this is a conundrum for other ML approaches. Geometric data refers to multivariate data represented by coordinates, topology, metrics and order [78]. Mobile data, such as mobile user location and network connectivity, can be naturally represented by point clouds and graphs, which have important geometric properties. These data can be effectively modeled by dedicated deep learning architectures, such as PointNet++ [79] and Graph CNN [80]. Employing these architectures has great potential to revolutionize geometric mobile data analysis [81].

3.4.4 Deep Reinforcement Learning in Wireless Network

Artificial intelligence (AI), including deep learning (DL) and deep reinforcement learning (DRL) approaches well known from computer science (CS) disciplines, is beginning to emerge in wireless communications. These AI approaches were first widely applied to the upper layers of wireless communication systems for purposes such as routing establishment and the optimization and deployment of cognitive radio and communication networks. System models and algorithms designed with DL technology greatly improve on the performance of communication systems based on traditional methods. New features of future communications, such as complex scenarios with unknown channel models and requirements for high-speed and accurate processing, make traditional methods no longer suitable and open up many more potential applications of DL. DL technology has become a new hotspot in the research of physical-layer wireless communications and challenges conventional communication theories. Currently, DL-based "black-box" methods show promising performance improvements but have certain limitations, such as the lack of solid analytical tools and of architectures specifically designed for communication and implementation research. With the development of DL technology, in addition to the traditional neural network-based data-driven model, the model-driven deep network model and the DRL model (e.g., DQN), which combines DL with reinforcement learning, are more suitable for dealing with future complex communication systems. In most cases of wireless resource allocation, there are no definite samples with which to train the model; hence DRL, which trains the model by maximizing the reward associated with different actions, can be adopted. Deep reinforcement learning is used to solve several issues in communications and networking, including traffic engineering and routing, resource sharing and scheduling, and data collection.

Figure 3.3 shows how deep reinforcement learning has been used in different areas of communications and networking.

Figure 3.3 Taxonomy of the applications of deep reinforcement learning for communications and networking [82].

3.4.5 Traffic Engineering and Routing

Traffic engineering (TE) in communication networks refers to network utility maximization (NUM) by optimizing a path to forward the data traffic, given a set of network flows from source to destination nodes. Traditional NUM problems are mostly model-based. However, with the advances of wireless communication technologies, the network environment becomes more complicated and dynamic, which makes it hard to model, predict, and control. The recent development of deep Q-learning (DQL) methods provides a feasible and efficient way to design experience-driven and model-free schemes that can learn and adapt to the dynamic wireless network from past observations. Routing optimization is one of the major control problems in traffic engineering. The authors in [83] present the first attempt to use DQL for routing optimization. Through interaction with the network environment, the DQL agent at the network controller determines the paths for all source–destination pairs. The system state is represented by the bandwidth request between each source–destination pair, and the reward is a function of the mean network delay. The DQL agent leverages the actor–critic method to solve the routing problem that minimizes the network delay, by adapting routing configurations automatically to current traffic conditions. The DQL agent is trained using traffic information generated by a gravity model [84]. The routing solution is then evaluated with the OMNeT++ discrete event simulator [85]. The well-trained DQL agent can produce a near-optimal routing configuration in a single step, and thus the agent is agile enough for real-time network control. The proposed approach is attractive because traditional optimization-based techniques require a large number of steps to produce a new configuration.
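
As an illustration of how synthetic bandwidth requests between source–destination pairs can be produced, the sketch below uses one common, simple form of gravity model, in which the demand from node i to node j is proportional to the total traffic leaving i and the total traffic entering j. This form and the node totals are assumptions; the exact model used in [84] may differ.

```python
def gravity_traffic_matrix(outgoing, incoming):
    """Build a traffic matrix with demand[i][j] = outgoing[i] * incoming[j] / total."""
    total = sum(incoming)
    return [[out_i * in_j / total for in_j in incoming] for out_i in outgoing]


# Hypothetical per-node totals (e.g. in Mbit/s); both lists sum to the same value.
outgoing = [10.0, 20.0, 30.0]
incoming = [30.0, 20.0, 10.0]
demand = gravity_traffic_matrix(outgoing, incoming)
# demand[i][j] can serve as the bandwidth request from node i to node j;
# the diagonal entries (i == j) would normally be ignored for routing.
```

When the outgoing and incoming totals sum to the same value, each row of the matrix adds up to that node's outgoing traffic and each column to that node's incoming traffic.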

3.4.6 Resource Sharing and Scheduling

System capacity is one of the most important performance metrics in wireless communication networks. System capacity enhancements can be based on the optimization of resource sharing and scheduling among multiple wireless nodes. The integration of DRL into 5G systems would revolutionize resource sharing and scheduling schemes, moving them from model-based to model-free approaches, and would meet various application demands by learning from the network environment. The authors in [86] study user scheduling in a multi-user massive MIMO system. User scheduling is responsible for allocating resource blocks to BSs and mobile users, taking into account the channel conditions and QoS requirements. Based on this user scheduling strategy, a DRL-based coverage and capacity optimization is proposed to dynamically obtain the scheduling parameters and a unified threshold for the QoS metric. The performance indicators are calculated as the average spectrum efficiency of all the users. The system state is an indicator of the average spectrum efficiency. The action of the scheduler is a set of scheduling parameters chosen to maximize the reward, which is a function of the average spectrum efficiency. The DRL scheme uses a policy gradient method to learn a policy function (instead of a Q-function) directly from trajectories generated by the current policy. The policy network is trained with a variant of the REINFORCE algorithm [87]. The simulation results in [86] show that, compared with optimization-based algorithms that suffer from incomplete network information, the policy gradient method achieves much better performance in terms of network coverage and capacity.
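
The idea of learning a policy directly, instead of a Q-function, can be shown with a stripped-down REINFORCE-style update. The sketch makes strong simplifying assumptions that are not taken from [86] or [87]: there is a single state, each episode consists of one action, the policy is a softmax over per-action logits rather than a neural network, and the three candidate actions with their rewards are hypothetical.

```python
import math
import random


def softmax(logits):
    """Convert logits into action probabilities."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(reward_fn, n_actions, episodes=2000, lr=0.1, seed=0):
    """Policy gradient on a single-state problem with a running-mean baseline."""
    rng = random.Random(seed)
    logits = [0.0] * n_actions
    baseline = 0.0
    for t in range(1, episodes + 1):
        probs = softmax(logits)
        # Sample an action from the current policy (on-policy learning).
        action = rng.choices(range(n_actions), weights=probs)[0]
        reward = reward_fn(action)
        advantage = reward - baseline
        # Gradient of log pi(action) w.r.t. logit k is 1{k == action} - pi(k).
        for k in range(n_actions):
            grad = (1.0 if k == action else 0.0) - probs[k]
            logits[k] += lr * advantage * grad
        baseline += (reward - baseline) / t  # running mean of observed rewards
    return softmax(logits)


# Hypothetical rewards, e.g. normalized spectrum efficiency of three parameter sets.
final_policy = reinforce_bandit(lambda a: [0.2, 0.5, 1.0][a], n_actions=3)
```

In a full DRL scheduler the logits would be produced by a policy network from the system state, and the same log-probability gradient would be back-propagated through that network.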

3.4.7 Power Control and Data Collection

With the prevalence of IoT and smart mobile devices, mobile crowd sensing has become a cost-effective solution for collecting network information to support more intelligent operation of wireless systems. The authors in [88] apply the DQL framework to sensing and control problems in a wireless sensor and actor network (WSAN), which is a group of wireless devices able to sense events and to perform actions based on the sensed data shared by all sensors. The system state includes the processing power, mobility abilities, and functionalities of the actors and sensors. The mobile actor can choose its moving direction, networking, sensing, and actuation policies to maximize the number of connected actor nodes and the number of sensing events. The authors in [89] focus on the mobile crowd sensing paradigm, in which data inference is incorporated to reduce sensing costs while maintaining the quality of sensing. The target sensing area is split into a set of cells. The objective of a sensing task is to collect data (e.g., temperature, air quality) in all the cells. A DQL-based cell selection mechanism is proposed so that the mobile sensors can decide which cell is the better choice for performing sensing tasks. The system state includes the selection matrices for a few past decision epochs. The reward function is determined by the sensing quality and cost in the chosen cells. To extract temporal correlations in learning, the authors propose a deep recurrent Q-network (DRQN) that uses LSTM layers in DQL to capture the hidden patterns in state transitions. Considering inter-data correlations, the authors use transfer learning to reduce the amount of training data. That is, the cell selection strategy learned for one task can benefit another correlated task. Hence, the parameters of one DRQN can be initialized from another DRQN that was trained with rich data. Simulations are conducted on two real-life datasets collected from sensor networks.
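The state used for cell selection in [89], the selection matrices of the last few decision epochs, can be pictured as a sliding window over binary selection vectors. The sketch below shows one way to maintain such a window. The class name, the method names, and the reward formula (quality gain minus cost) are assumptions for illustration only, since the text above says no more than that the reward depends on sensing quality and cost.

```python
from collections import deque


class SelectionHistory:
    """Keeps the cell-selection vectors of the most recent decision epochs.
    Entry [e][c] is 1 if cell c was selected for sensing in epoch e."""

    def __init__(self, num_cells, num_epochs):
        self.num_cells = num_cells
        # Start with all-zero history; the deque drops the oldest epoch.
        self.epochs = deque(
            ([0] * num_cells for _ in range(num_epochs)), maxlen=num_epochs
        )

    def start_epoch(self):
        """Open a new decision epoch with no cells selected yet."""
        self.epochs.append([0] * self.num_cells)

    def select(self, cell):
        """Mark a cell as selected in the current epoch."""
        self.epochs[-1][cell] = 1

    def state(self):
        """Return a copy of the window, oldest epoch first."""
        return [row[:] for row in self.epochs]


def sensing_reward(quality_gain, cost):
    """Hypothetical reward: benefit from sensing quality minus sensing cost."""
    return quality_gain - cost


history = SelectionHistory(num_cells=3, num_epochs=2)
history.select(1)
history.start_epoch()
history.select(2)
current_state = history.state()
```

A recurrent Q-network such as the DRQN would consume this window one epoch at a time, which is how temporal correlations between successive selections can be exploited.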

3.5 Conclusion

Machine learning is playing an increasingly important role in the mobile and wireless networking domain. In this chapter, the basic and advanced concepts of machine learning models were discussed, together with their applications to mobile networks across different scenarios. We also discussed how deep reinforcement learning (DRL) is gaining attention over traditional machine learning models in wireless networks. DRL can solve sophisticated network optimization problems on behalf of network controllers. It also allows network entities to learn and build knowledge about the communication and networking environment and to make decisions autonomously.

This chapter also discussed how DRL takes advantage of deep neural networks (DNNs) in the learning process, thereby improving the learning speed and the performance of reinforcement learning algorithms. As a result, DRL has been adopted in numerous practical applications of reinforcement learning. In communications and networking, DRL has recently emerged as a tool for effectively addressing various problems and challenges. In particular, modern networks such as the Internet of things (IoT), heterogeneous networks (HetNets), and unmanned aerial vehicle (UAV) networks are becoming more decentralized, ad hoc, and autonomous in nature. Network entities such as IoT devices, mobile users, and UAVs need to make local and autonomous decisions, e.g., on spectrum access, data rate selection, transmit power control, and base station association, to achieve network goals such as throughput maximization and energy consumption minimization.

References

- 1. Boccardi, F., Heath, R.W., Lozano, A., Marzetta, T.L., Popovski, P., Five disruptive technology directions for 5G. IEEE Commun. Mag., 52, 2, 74–80, Feb. 2014.

- 2. Popovski, P., Ultra-reliable communication in 5G wireless systems. International Conference on 5G for Ubiquitous Connectivity (5GU), Akaslompolo, Finland, Feb. 2014.

- 3. Johansson, N.A., Wang, Y.P.E., Eriksson, E., Hessler, M., Radio access for ultra-reliable and low-latency 5G communications. IEEE International Conference on Communication Workshop (ICCW), London, UK, Sept. 2015.

- 4. Yilmaz, O.N.C., Wang, Y.P.E., Johansson, N.A., Brahmi, N., Ashraf, S.A., Sachs, J., Analysis of ultra-reliable and low-latency 5G communication for a factory automation use case. Proc. of IEEE International Conference on Communication Workshop (ICCW), London, UK, Sept. 2015, pp. 1190–1195.

- 5. Gopal, D.G. and Kaushik, S., Emerging technologies and applications for cloud-based gaming: Review on cloud gaming. Emerg. Technol. Appl. Cloud-Based Gaming, 41, 07, 79–89, 2016.

- 6. Motlagh, N.H., Bagaa, M., Taleb, T., UAV selection for a UAV-based integrative IoT platform. IEEE Global Communications Conference (GLOBECOM), Washington DC, USA, Dec. 2016.

- 7. Kawamoto, Y., Nishiyama, H., Kato, N., Yoshimura, N., Yamamoto, S., Internet of things (IoT): Present state and future prospects. IEICE Trans. Inf. Syst., 97, 10, 2568–2575, Oct. 2014.

- 8. Motlagh, N.H., Bagaa, M., Taleb, T., UAV-based IoT platform: A crowd surveillance use case. IEEE Commun. Mag., 55, 2, 128–134, Feb. 2017.

- 9. Zhou, J., Cao, X., Dong, Z., Vasilakos, A.V., Security and privacy for cloud-based IoT: Challenges. IEEE Commun. Mag., 55, 1, 26–33, Jan. 2017.

- 10. LeCun, Y., Bengio, Y., Hinton, G., Deep learning. Nature, 521, 7553, 436–444, 2015.

- 11. Schmidhuber, J., Deep learning in neural networks: An overview. Neural Netw., 61, 85–117, 2015.

- 12. Liu, W., Wang, Z., Liu, X., Zeng, N., Liu, Y., Alsaadi, F.E., A survey of deep neural network architectures and their applications. Neurocomputing, 234, 11–26, 2017.

- 13. Arulkumaran, K., Deisenroth, M.P., Brundage, M., Bharath, A.A., Deep reinforcement learning: A brief survey. IEEE Signal Processing Mag., 34, 6, 26–38, 2017.

- 14. Li, Y., Deep reinforcement learning: An overview, http://arxiv.org/abs/1701.07274, 2017.

- 15. Zhang, S., Yao, L., Sun, A., Deep learning based recommender system: A survey and new perspectives, http://arxiv.org/abs/1707.07435, 2017.

- 16. Goodfellow, I., Bengio, Y., Courville, A., Deep learning, MIT Press, Cambridge, MA, USA, 2016.

- 17. Jiang, C., Zhang, H., Ren, Y., Han, Z., Chen, K.C., Hanzo, L., Machine learning paradigms for next-generation wireless networks. IEEE Wirel. Commun., 24, 2, 98–105, 2017.

- 18. Alsheikh, M.A., Lin, S., Niyato, D., Tan, H.P., Machine learning in wireless sensor networks: Algorithms, strategies, and applications. IEEE Commun. Surv. Tut., 16, 4, 1996–2018, 2014.

- 19. Bkassiny, M., Li, Y., Jayaweera, S.K., A survey on machine-learning techniques in cognitive radios. IEEE Commun. Surv. Tut., 15, 3, 1136–1159, 2013.

- 20. Buda, T.S., Assem, H., Xu, L., Raz, D., Margolin, U., Rosensweig, E., Lopez, D.R. et al., Can machine learning aid in delivering new use cases and scenarios in 5G?, in: IEEE/IFIP Network Operations and Management Symposium (NOMS), pp. 1279–1284, 2016.

- 21. Keshavamurthy, B. and Ashraf, M., Conceptual design of proactive SONs based on the big data framework for 5G cellular networks: A novel machine learning perspective facilitating a shift in the son paradigm. IEEE International Conference on System Modeling & Advancement in Research Trends (SMART), pp. 298–304, 2016.

- 22. Klaine, P.V., Imran, M.A., Onireti, O., Souza, R.D., A survey of machine learning techniques applied to self organizing cellular networks. IEEE Commun. Surv. Tut., 19, 4, 2392–2431, 2017.

- 23. Li, R., Zhao, Z., Zhou, X., Ding, G., Chen, Y., Wang, Z., Zhang, H., Intelligent 5G: When cellular networks meet artificial intelligence. IEEE Wirel. Commun., 24, 5, 175–183, 2017.

- 24. Bui, N., Cesana, M., Hosseini, S.A., Liao, Q., Malanchini, I., Widmer, J., A survey of anticipatory mobile networking: Context-based classification, prediction methodologies, and optimization techniques. IEEE Commun. Surv. Tut., 19, 3, 1790–1821, 2017.

- 25. Jiang, C., Zhang, H., Ren, Y., Han, Z., Chen, K.C., Hanzo, L., Machine learning paradigms for next generation wireless networks. IEEE Wirel. Commun., 24, 2, 98–105, 2017.

- 26. Li, R., Zhao, Z., Zhou, X., Ding, G., Chen, Y., Wang, Z., Zhang, H., Intelligent 5G: When cellular networks meet artificial intelligence. IEEE Wirel. Commun., 24, 5, 175–183, 2017.

- 27. Klaine, P.V., Imran, M.A., Onireti, O., Souza, R.D., A survey of machine learning techniques applied to self organizing cellular networks. IEEE Commun. Surv. Tut., 19, 4, 2392–2431, 2017.

- 28. Wu, J., Guo, S., Li, J., Zeng, D., Big data meet green challenges: Big data toward green applications. IEEE Syst. J., 10, 3, 888–900, 2016.

- 29. Chen, X., Wu, J., Cai, Y., Zhang, H., Chen, T., Energy-efficiency oriented traffic offloading in wireless networks: A brief survey and a learning approach for heterogeneous cellular networks. IEEE J. Sel. Areas. Commun., 33, 4, 627–640, 2015.

- 30. Fadlullah, Z., Tang, F., Mao, B., Kato, N., Akashi, O., Inoue, T., Mizutani, K., State-of-the-art deep learning: Evolving machine intelligence toward tomorrow’s intelligent network traffic control systems. IEEE Commun. Surv. Tut., 19, 4, 2432–2455, 2017.

- 31. Ahad, N., Qadir, J., Ahsan, N., Neural networks in wireless networks: Techniques, applications and guidelines. J. Netw. Comput. Appl., 68, 1–27, 2016.

- 32. Lane, N.D. and Georgiev, P., Can deep learning revolutionize mobile sensing?, in: Proc. 16th ACM International Workshop on Mobile Computing Systems and Applications, pp. 117–122, 2015.

- 33. Mohammadi, M., Al-Fuqaha, A., Sorour, S., Guizani, M., Deep learning for IoT big data and streaming analytics: A survey. IEEE Commun. Surv. Tut., 20, 4, 2923–2960, 2018.

- 34. Mao, Q., Hu, F., Hao, Q., Deep learning for intelligent wireless networks: A comprehensive survey. IEEE Commun. Surv. Tut., 20, 4, 2595–2621, 2018.

- 35. Goodfellow, I., Bengio, Y., Courville, A., Deep Learning, MIT Press, Cambridge, MA, USA, 2016.

- 36. Moysen, J., Giupponi, L., From 4G to 5G: Self-organized Network Management meets Machine Learning, http://arxiv.org/abs/1707.09300, 2017.

- 37. Li, R., Zhao, Z., Zhou, X., Ding, G., Chen, Y., Wang, Z., Zhang, H., Intelligent 5G: When cellular networks meet artificial intelligence. IEEE Wirel. Commun., 24, 175–183, 2017.

- 38. https://www.guru99.com/machine-learning-vs-deep-learning.html

- 39. McCulloch, W.S. and Pitts, W., A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys., 5, 115–133, 1943.

- 40. Rosenblatt, F., The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev., 65, 386, 1958.

- 41. Hopfield, J.J., Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. U.S.A., 79, 2554–2558, 1982.

- 42. Kohonen, T., Self-organized formation of topologically correct feature maps. Biol. Cybern., 43, 59–69, 1982.

- 43. Ackley, D.H., Hinton, G.E., Sejnowski, T.J., A learning algorithm for Boltzmann machines, in: Readings in Computer Vision, pp. 522–533, Elsevier, Amsterdam, the Netherlands, 1987.

- 44. Rumelhart, D.E., Hinton, G.E., Williams, R.J., Learning representations by back-propagating errors. Nature, 323, 533, 1986.

- 45. Broomhead, D.S. and Lowe, D., Radial Basis Functions, Multi-Variable Functional Interpolation and Adaptive Networks, in: Technical Report, Royal Signals and Radar Establishment, Malvern, UK, 1988.

- 46. Baldi, P. and Hornik, K., Neural networks and principal component analysis: Learning from examples without local minima. Neural Netw., 2, 53–58, 1989.

- 47. Neal, R.M., Connectionist learning of belief networks. Artif. Intell., 56, 71–113, 1992.

- 48. https://ailephant.com/overview-deep-reinforcement-learning/

- 49. Sutton, R. and Barto, A., Reinforcement learning: An introduction, MIT Press, Cambridge, MA, 1998.

- 50. Glorennec, P.Y., Fuzzy Q-learning and dynamical fuzzy Q-learning. Proceedings of 1994 IEEE 3rd International Fuzzy Systems Conference, Orlando, FL, USA, Jun. 1994, pp. 474–479.

- 51. Maghsudi, S. and Hossain, E., Distributed user association in energy harvesting dense small cell networks: A mean-field multi-armed bandit approach. IEEE Access, 5, 3513–3523, Mar. 2017.

- 52. Bubeck, S. and Cesa-Bianchi, N., Regret analysis of stochastic and nonstochastic multi-armed bandit problems. Found. Trends Mach. Learn., 5, 1, 1–122, 2012.

- 53. Singh, S., Jaakkola, T., Littman, M., Szepesvári, C., Convergence results for single-step on-policy reinforcement-learning algorithms. Mach. Learn., 38, 3, 287–308, Mar. 2000.

- 54. Perlaza, S.M., Tembine, H., Lasaulce, S., How can ignorant but patient cognitive terminals learn their strategy and utility? Proceedings of SPAWC, Marrakech, Morocco, pp. 1–5, Jun. 2010.

- 55. Li, R. et al., Intelligent 5G: When Cellular Networks Meet Artificial Intelligence. IEEE Wirel. Commun., 24, 5, 175–183, 2017.

- 56. Jiang, C., Zhang, H., Ren, Y., Han, Z., Chen, K.C., Hanzo, L., Machine Learning Paradigms for Next-Generation Wireless Networks. IEEE Wirel. Commun., 24, 2, 98–105, Apr. 2017.

- 57. Alarcon, M.E. and Cabellos, A., A machine learning-based approach for virtual network function modeling, in: 2018 IEEE Wireless Communications and Networking Conference Workshops (WCNCW), pp. 237–242, 2018.

- 58. Feng, V. and Chang, S.Y., Determination of Wireless Networks Parameters through Parallel Hierarchical Support Vector Machines. IEEE Trans. Parallel Distrib. Syst., 23, 3, 505–512, Mar. 2012.

- 59. Donohoo, K., Ohlsen, C., Pasricha, S., Xiang, Y., Anderson, C., Context-Aware Energy Enhancements for Smart Mobile Devices. IEEE Trans. Mob. Comput., 13, 8, 1720–1732, Aug. 2014.

- 60. Choi, K.W. and Hossain, E., Estimation of Primary User Parameters in Cognitive Radio Systems via Hidden Markov Model. IEEE Trans. Signal Process, 61, 3, 782–795, Feb. 2013.

- 61. Assra, A., Yang, J., Champagne, B., An EM Approach for Cooperative Spectrum Sensing in Multiantenna CR Networks. IEEE Trans. Veh. Technol., 65, 3, 1229–1243, Mar. 2016.

- 62. Yu, C.K., Chen, K.C., Cheng, S.M., Cognitive Radio Network Tomography. IEEE Trans. Veh. Technol., 59, 4, 1980–1997, May 2010.

- 63. Domingos, P., A few useful things to know about machine learning. Commun. ACM, 55, 10, 78–87, 2012.

- 64. LeCun, Y., Bengio, Y., Hinton, G., Deep learning. Nature, 521, 7553, 436–444, 2015.

- 65. Alsheikh, M.A., Niyato, D., Lin, S., Tan, H.P., Han, Z., Mobile big data analytics using deep learning and Apache Spark. IEEE Netw., 30, 3, 22–29, 2016.

- 67. Tsang, I.W., Kwok, J.T., Cheung, P.M., Core vector machines: Fast SVM training on very large data sets. J. Mach. Learn. Res., 6, 363–392, 2005.

- 68. Rasmussen, C.E. and Williams, C.K., Gaussian processes for machine learning, vol. 1, MIT press, Cambridge, 2006.

- 69. Goodfellow, I., Bengio, Y., Courville, A., Deep learning, MIT Press, Cambridge, MA, USA, 2016.

- 70. Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y., Generative adversarial nets, in: Advances in neural information processing systems, pp. 2672–2680, 2014.

- 71. Schroff, F., Kalenichenko, D., Philbin, J., FaceNet: A unified embedding for face recognition and clustering, in: Proc. IEEE Conference on Computer Vision and Pattern Recognition, pp. 815–823, 2015.

- 72. Kingma, D.P., Mohamed, S., Rezende, D.J., Welling, M., Semi-supervised learning with deep generative models, in: Advances in Neural Information Processing Systems, pp. 3581–3589, 2014.

- 73. Stewart, R. and Ermon, S., Label-free supervision of neural networks with physics and domain knowledge, in: Proc. National Conference on Artificial Intelligence (AAAI), pp. 2576–2582, 2017.

- 74. Rezende, D., Danihelka, I., Gregor, K., Wierstra, D. et al., One-shot generalization in deep generative models, in: Proc. International Conference on Machine Learning (ICML), pp. 1521–1529, 2016.

- 75. Socher, R., Ganjoo, M., Manning, C.D., Ng, A., Zero-shot learning through cross-modal transfer, in: Advances in neural information processing systems, pp. 935–943, 2013.

- 76. Georgiev, P., Bhattacharya, S., Lane, N.D., Mascolo, C., Low-resource multitask audio sensing for mobile and embedded devices via shared deep neural network representations. Proc. ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), vol. 1, p. 50, 2017.

- 78. Monti, F., Boscaini, D., Masci, J., Rodola, E., Svoboda, J., Bronstein, M.M., Geometric deep learning on graphs and manifolds using mixture model CNNs, in: Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, p. 3, 2017.

- 79. Roux, B.L. and Rouanet, H., Geometric data analysis: from correspondence analysis to structured data analysis, Springer Science & Business Media, New York, 2004.

- 80. Qi, C.R., Yi, L., Su, H., Guibas, L.J., PointNet++: Deep hierarchical feature learning on point sets in a metric space, in: Advances in Neural Information Processing Systems, pp. 5099–5108, 2017.

- 81. Kipf, T.N. and Welling, M., Semi-supervised classification with graph convolutional networks, in: Proc. International Conference on Learning Representations (ICLR), 2017.

- 82. Luong, N.C., Hoang, D.T., Gong, S., Niyato, D., Wang, P., Liang, Y.C., Kim, D., Applications of Deep Reinforcement Learning in Communications and Networking: A Survey, 2018. https://ieeexplore.ieee.org/document/8714026.

- 83. Stampa, G., Arias, M., Sanchez-Charles, D., Muntes-Mulero, V., Cabellos, A., A deep-reinforcement learning approach for software-defined networking routing optimization, http://arxiv.org/abs/1709.07080, 2017.

- 84. Roughan, M., Simplifying the synthesis of internet traffic matrices. ACM SIGCOMM Computer Communication Review, 35, 5, 93–96, 2005. Online Available: http://arxiv.org/abs/1710.02913.

- 85. Varga, A. and Hornig, R., An overview of the OMNeT++ simulation environment, in: Proc. Int’l Conf. Simulation Tools and Techniques for Communications, Networks and Systems & Workshops, 2008.

- 86. Yang, Y., Li, Y., Li, K., Zhao, S., Chen, R., Wang, J., Ci, S., DECCO: Deep-learning enabled coverage and capacity optimization for massive MIMO systems, IEEE, 2018, https://ieeexplore.ieee.org/document/8344405.

- 87. Sutton, R.S., McAllester, D., Singh, S., Mansour, Y., Policy gradient methods for reinforcement learning with function approximation. 12th International Neural Inform. Process. Syst., pp. 1057–1063, 1999.

- 88. Oda, T., Obukata, R., Ikeda, M., Barolli, L., Takizawa, M., Design and implementation of a simulation system based on deep Q-network for mobile actor node control in wireless sensor and actor networks. International Conference on Advanced Information Networking and Applications Workshops (WAINA), pp. 195–200, 2017.

- 89. Wang, L., Liu, W., Zhang, D., Wang, Y., Wang, E., Yang, Y., Cell selection with deep reinforcement learning in sparse mobile crowd sensing, http://arxiv.org/abs/1804.07047, 2018.

Note

- * Corresponding author: [email protected]