Microsoft Cognitive Services are a collection of APIs and services that help developers create smarter applications and reports. By using Cognitive Services, developers can add intelligent features such as face recognition, emotion recognition, and text analytics to their applications. This chapter first presents an overview of Cognitive Services and then explains how to use them for text analytics in a Power BI report. Finally, it briefly explores how to use Cognitive Services in a Windows application.

Overview of Cognitive Services

Main Cognitive Services categories

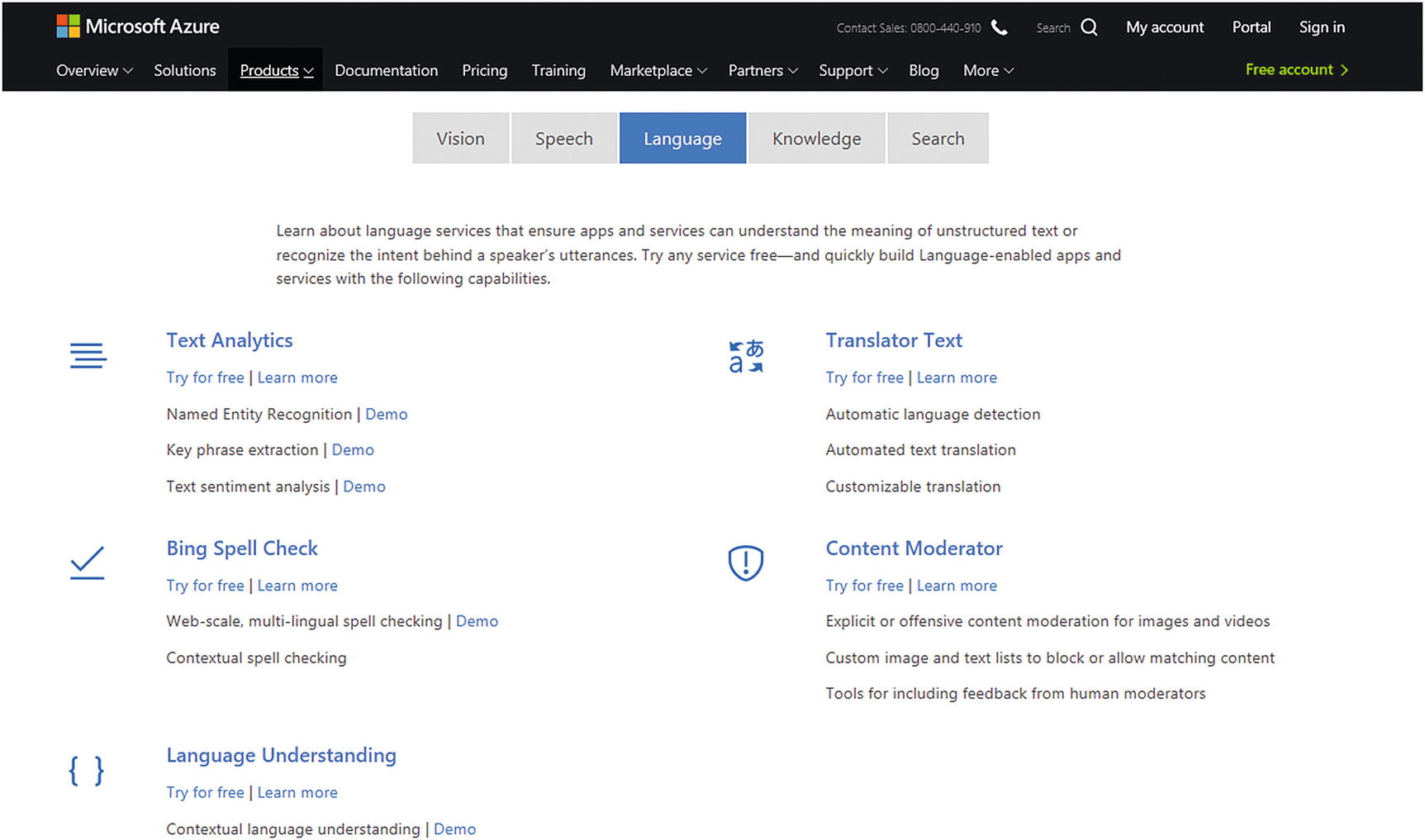

Language category in Cognitive Services

Besides Text Analytics, the Language category includes services for translating text and for identifying the primary intent and objectives in conversations and text.

These features can be combined. For example, the Language Understanding service can be integrated with the Speech service for instant speech-to-intent processing, and with a bot application (Chapter 19) to build a more sophisticated application.

In this chapter, you will see how to use the Text Analytics service in Power BI to detect the main keywords in customer feedback, measure how much of the feedback is positive, and detect its language.
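Behind the scenes, each of these three tasks is a separate operation of the Text Analytics REST API, selected by the last segment of the URL. The following Python sketch assumes the v2.1 REST layout that was current when this chapter was written (`keyPhrases`, `sentiment`, and `languages`). The region endpoint is a placeholder, and the task names used as dictionary keys are labels chosen only for this illustration.

```python
# Minimal sketch, assuming the Text Analytics v2.1 REST layout:
#   {endpoint}/text/analytics/v2.1/{operation}
# The endpoint used below is a placeholder, not a real resource.

OPERATIONS = {
    "keywords": "keyPhrases",  # main keywords (key phrases) in each text
    "sentiment": "sentiment",  # score from 0 (negative) to 1 (positive)
    "language": "languages",   # detected language of each text
}


def operation_url(endpoint: str, task: str) -> str:
    """Build the URL of the Text Analytics operation for one task."""
    return f"{endpoint.rstrip('/')}/text/analytics/v2.1/{OPERATIONS[task]}"


if __name__ == "__main__":
    base = "https://westus.api.cognitive.microsoft.com"  # hypothetical region endpoint
    for task in OPERATIONS:
        print(task, "->", operation_url(base, task))
```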

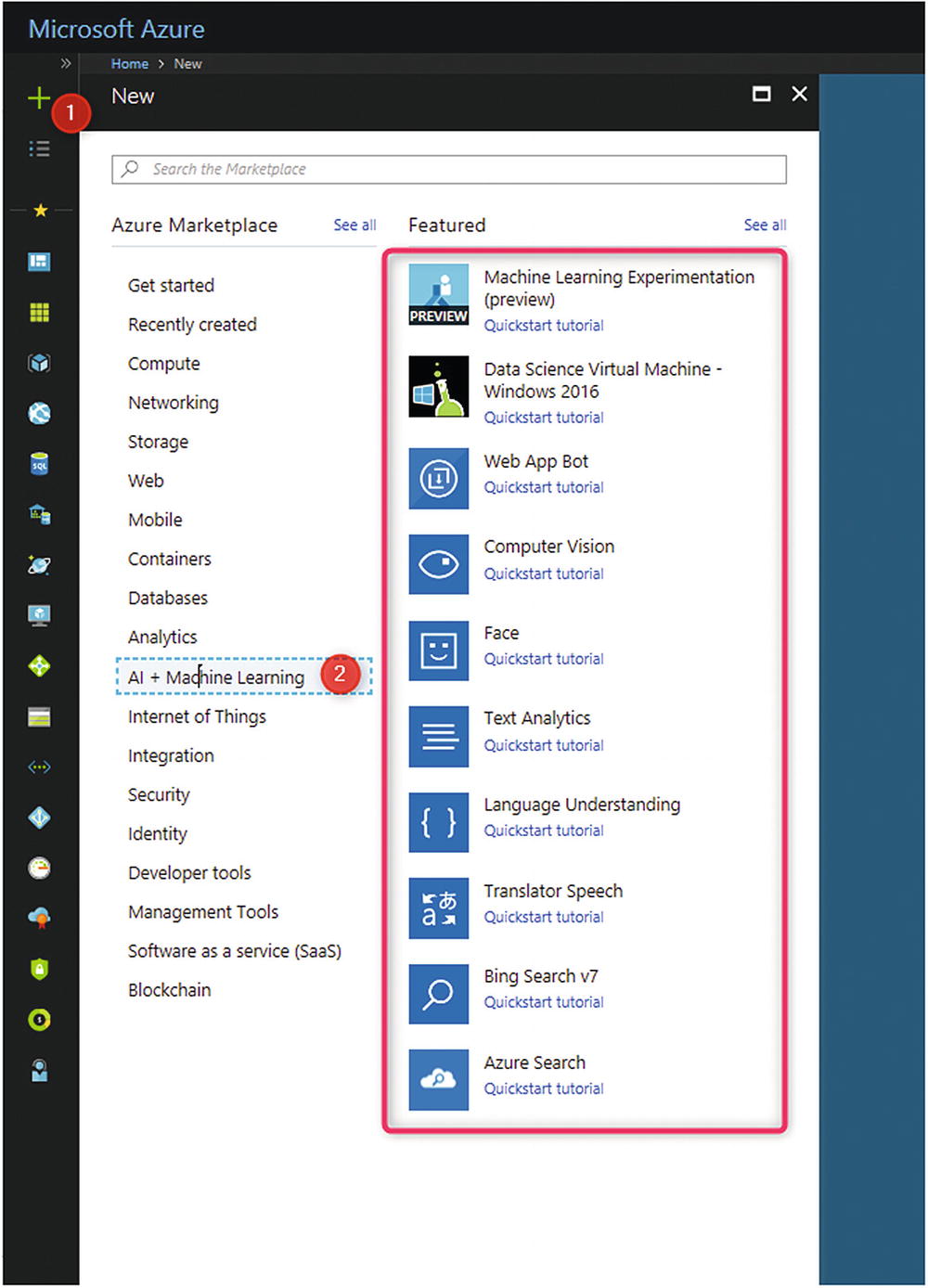

Different Cognitive Services accounts

Different Cognitive Services features

Text Analytics Services

Not all collected data consists of numbers and structured records. To gain a more holistic view of customers, products, and so forth, companies can collect and analyze text to improve their performance. Text Analytics is the process of converting unstructured text data into meaningful data that helps a company understand customer needs and feedback [2].

This section presents a case study that applies the Text Analytics API in Power BI to customer feedback.

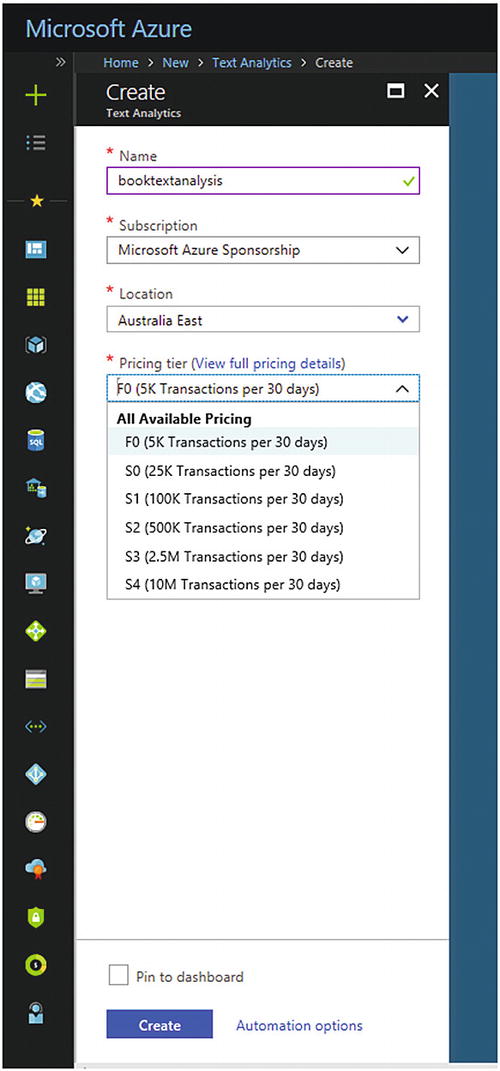

Setting Up Text Analytics in the Azure portal

Pricing tiers for Text Analytics

Accessing the URL and key

After creating the service in the Azure portal, we call it from Power BI Desktop and apply it to the data loaded there.
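Power BI makes the call for us, but it helps to see what a call carries. The key from the portal goes in the `Ocp-Apim-Subscription-Key` header, and the texts go in a JSON `documents` array that is posted to the endpoint URL. The following minimal Python sketch assumes the v2.1 sentiment operation and English text. `build_sentiment_request` is a hypothetical helper, and it only prepares the request without sending it.

```python
import json
import urllib.request


def build_sentiment_request(endpoint: str, key: str, texts: list[str]) -> urllib.request.Request:
    """Prepare (but do not send) a v2.1 sentiment request for several texts."""
    body = {
        "documents": [
            # Each document needs a unique id so results can be matched back.
            {"id": str(i), "language": "en", "text": t}
            for i, t in enumerate(texts, start=1)
        ]
    }
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/text/analytics/v2.1/sentiment",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,  # key copied from the Azure portal
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it, and the service would answer with JSON.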

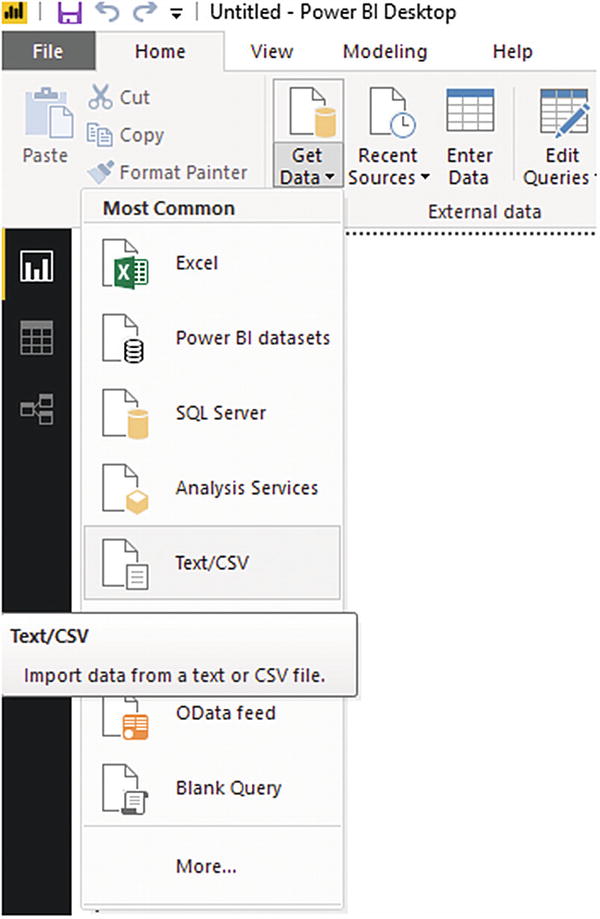

Data Set

Fabrikam is a mock production company. It has received e-mails from customers regarding shipping, tech support, and other concerns. The business intelligence (BI) manager wants to perform some analytics on the e-mails the company receives from customers, to better understand their main points and to determine whether customers are satisfied with the company’s services. You can download the data set from https://github.com/Kaiqb/KaiqbRepo0731190208/blob/master/CognitiveServices/TextAnalytics/FabrikamComments.csv [3].

Getting a CSV file from Power BI

Editing data in Power Query

Merging Subject and Comment columns

After creating a new column, we must rename it to Customer Feedback.
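The Merge Columns step has a direct equivalent outside Power BI. The Python sketch below uses two made-up rows and assumes the columns are named `subject` and `comment`, so check the header row of the downloaded file. It uses `": "` as an illustrative separator and adds the merged text as a `Customer Feedback` column.

```python
import csv
import io

# Two made-up rows; the real file's column names are assumed here.
SAMPLE_CSV = """id,subject,comment
1,Shipping,The package arrived two days late.
2,Tech support,The agent solved my problem quickly.
"""


def add_customer_feedback(rows, separator=": "):
    """Add a 'Customer Feedback' column that joins subject and comment."""
    for row in rows:
        parts = [row["subject"].strip(), row["comment"].strip()]
        # Skip empty parts so a missing subject does not leave a dangling separator.
        row["Customer Feedback"] = separator.join(p for p in parts if p)
    return rows


rows = add_customer_feedback(list(csv.DictReader(io.StringIO(SAMPLE_CSV))))
for row in rows:
    print(row["Customer Feedback"])
```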

Create a Text Analytics Function

Creating a blank query

Power BI Query Editor

As you can see in the preceding code, the first line declares the function input, which is text. In line 2, paste the API key collected from the Azure service. Line 3 provides the URL for connecting to the API. The end of that URL names the Cognitive Services operation to use, which in this example is sentiment analysis. Lines 4 to 11 contain the code for connecting to Cognitive Services and getting the result in JSON format. The last line returns the sentiment score, which fills the result column for each comment.
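The same steps can be mirrored outside Power Query to show what the function sends and reads back. This Python sketch is not the chapter's function. It assumes the v2.1 sentiment request and response shapes: the request carries `documents` with `id`, `language`, and `text`, and the response returns `documents` with a `score` plus an `errors` list. It uses a canned response in place of a live call, and the helper names are hypothetical.

```python
import json


def sentiment_body(text: str) -> bytes:
    """JSON body for a single text, like the one-input Power Query function."""
    doc = {"id": "0", "language": "en", "text": text.strip()}
    return json.dumps({"documents": [doc]}).encode("utf-8")


def score_from_response(raw: bytes) -> float:
    """Pull the 0-1 sentiment score out of a v2.1-style JSON response."""
    payload = json.loads(raw)
    if payload.get("errors"):
        raise ValueError(f"service reported errors: {payload['errors']}")
    return payload["documents"][0]["score"]


# Canned response in the assumed v2.1 shape, used instead of a live call.
fake = b'{"documents": [{"id": "0", "score": 0.93}], "errors": []}'
print(score_from_response(fake))
```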

Now, replace the blank query's default code with the Text Analytics function code and click OK. The blank query changes to a function, and a page with a text box appears. You can test the function by entering a sentence, such as “the weather is so nice today,” and clicking the Invoke button to see the result.

Text Analytics function test in Power Query

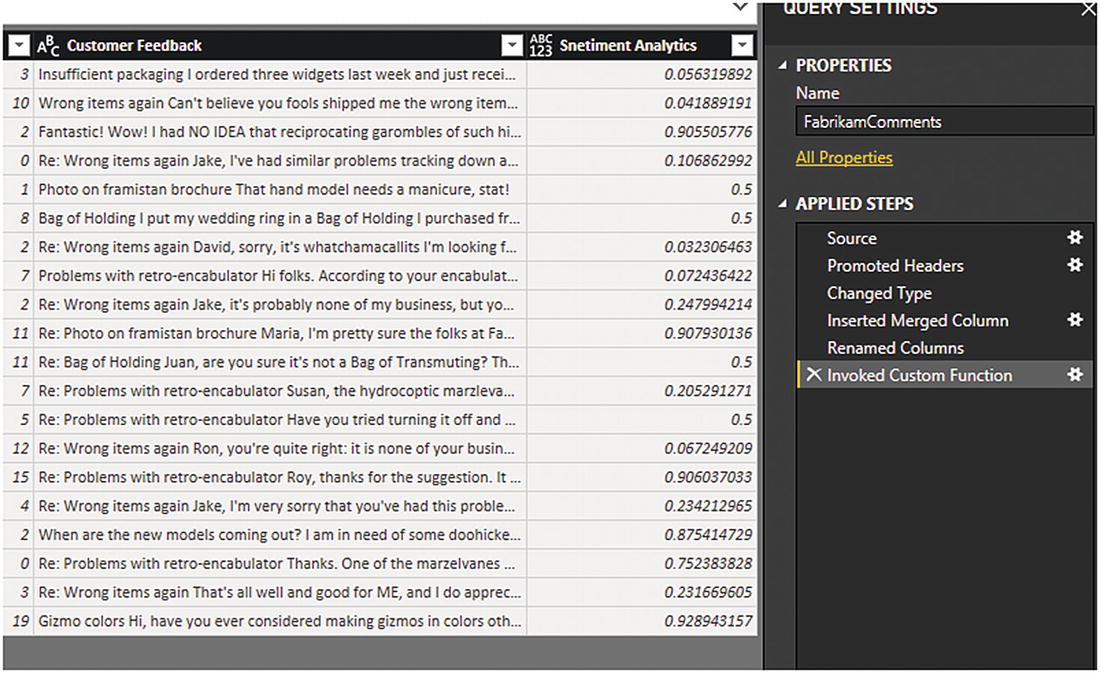

The output of the sentiment analysis is a number from 0 to 1. A score closer to 1 means the comment is positive, and a score closer to 0 means it is negative.
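A score is often easier to report as a label. The thresholds in this Python sketch (0.4 and 0.6) are illustrative assumptions, not values defined by the service, and you can adjust them to suit the data.

```python
def sentiment_label(score: float, low: float = 0.4, high: float = 0.6) -> str:
    """Map a 0-1 sentiment score to a label; the cut-offs are illustrative only."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= high:
        return "positive"
    if score <= low:
        return "negative"
    return "neutral"


for s in (0.95, 0.5, 0.1):
    print(s, sentiment_label(s))
```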

Invoke a custom function for sentiment analysis

Sentiment analysis result in Power BI Desktop
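Invoke Custom Function calls the function once per row and stores each result in a new column. From that column, the share of positive feedback follows directly. The Python sketch below imitates both steps with a stub in place of the service. The column name `Sentiment`, the 0.6 threshold, and the stub scores are hypothetical.

```python
from typing import Callable


def invoke_per_row(rows: list[dict], score_fn: Callable[[str], float],
                   source: str = "Customer Feedback", target: str = "Sentiment") -> list[dict]:
    """Return new rows with an extra column computed by score_fn, one call per row."""
    return [{**row, target: score_fn(row[source])} for row in rows]


def share_positive(rows: list[dict], column: str = "Sentiment", threshold: float = 0.6) -> float:
    """Fraction of rows whose score reaches the (illustrative) positive threshold."""
    if not rows:
        return 0.0
    return sum(row[column] >= threshold for row in rows) / len(rows)


# Stub scores stand in for the real Text Analytics call.
stub_scores = {"Late delivery": 0.1, "Great support": 0.9, "Works fine": 0.7, "Okay": 0.5}
rows = [{"Customer Feedback": text} for text in stub_scores]
scored = invoke_per_row(rows, stub_scores.get)
print(share_positive(scored))  # 0.5
```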

The preceding example was about using Cognitive Services in Power BI. In the next section, I will show you how to use the Face (that is, face recognition) service in a Windows application.

Intelligent Application: Face Recognition Services

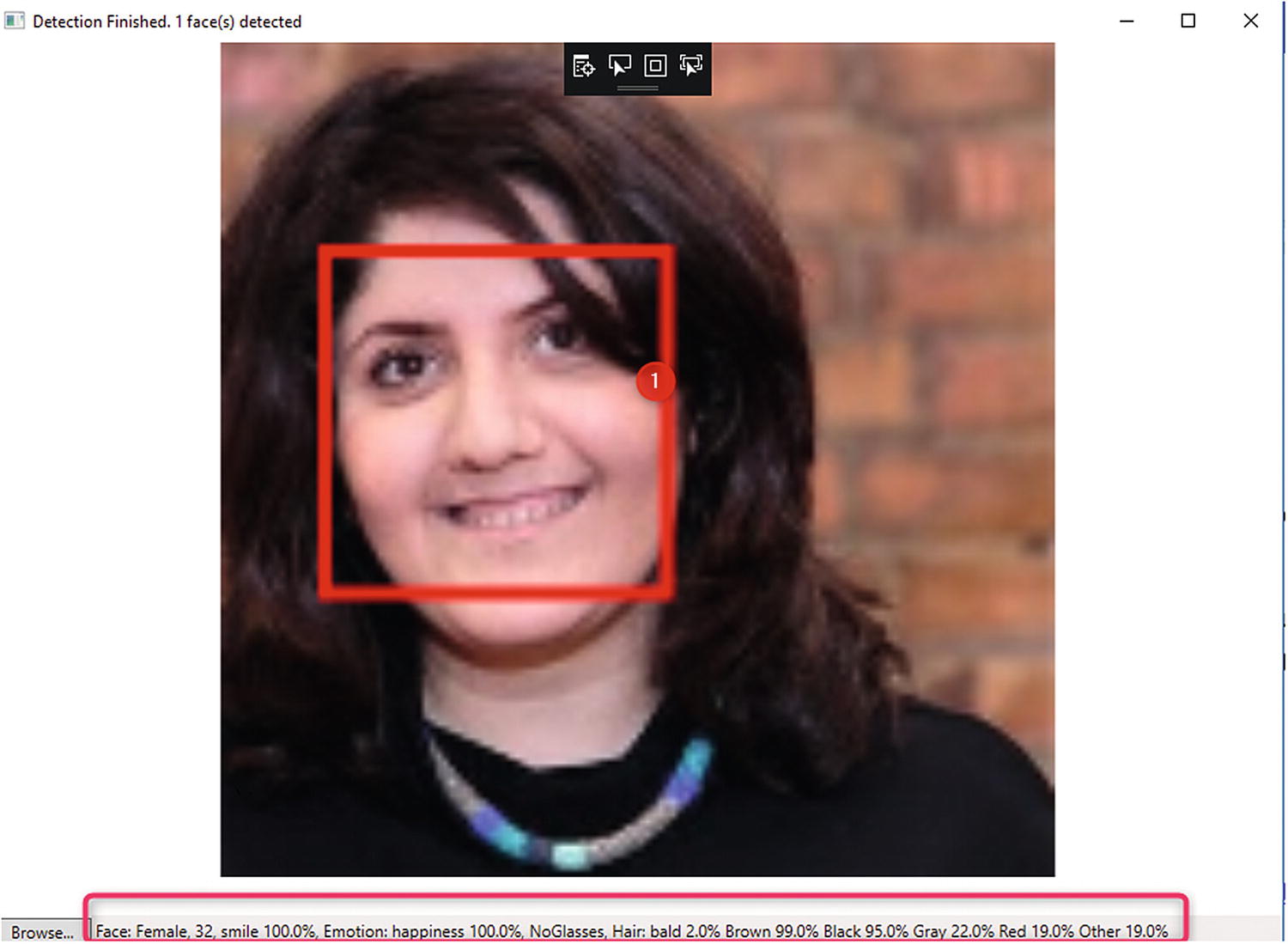

Another feature in Cognitive Services is the Face (facial recognition) API. This API detects faces in an image. It can also detect emotions and compare faces from two images to find similar ones.
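Under the hood, the Face client library calls a REST `detect` operation. The following minimal Python sketch only prepares such a call and assumes the v1.0 Face API as documented around the time of writing. The image is posted as raw bytes, and the `returnFaceAttributes=emotion` query parameter requests emotion scores. Attribute availability has changed since then, so check the current Face documentation before relying on it. `build_detect_request` is a hypothetical helper.

```python
import urllib.parse
import urllib.request


def build_detect_request(endpoint: str, key: str, image_bytes: bytes) -> urllib.request.Request:
    """Prepare (not send) a v1.0-style detect call that asks for emotion attributes."""
    query = urllib.parse.urlencode({"returnFaceId": "true", "returnFaceAttributes": "emotion"})
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/face/v1.0/detect?{query}",
        data=image_bytes,  # raw contents of the image file
        headers={
            "Ocp-Apim-Subscription-Key": key,  # Face API key from the Azure portal
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
```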

Cognitive Service Face Recognition

Image emotion detection demo

We are going to create a Windows application that uses the Face API in Cognitive Services to detect faces in an image and recognize their emotions.

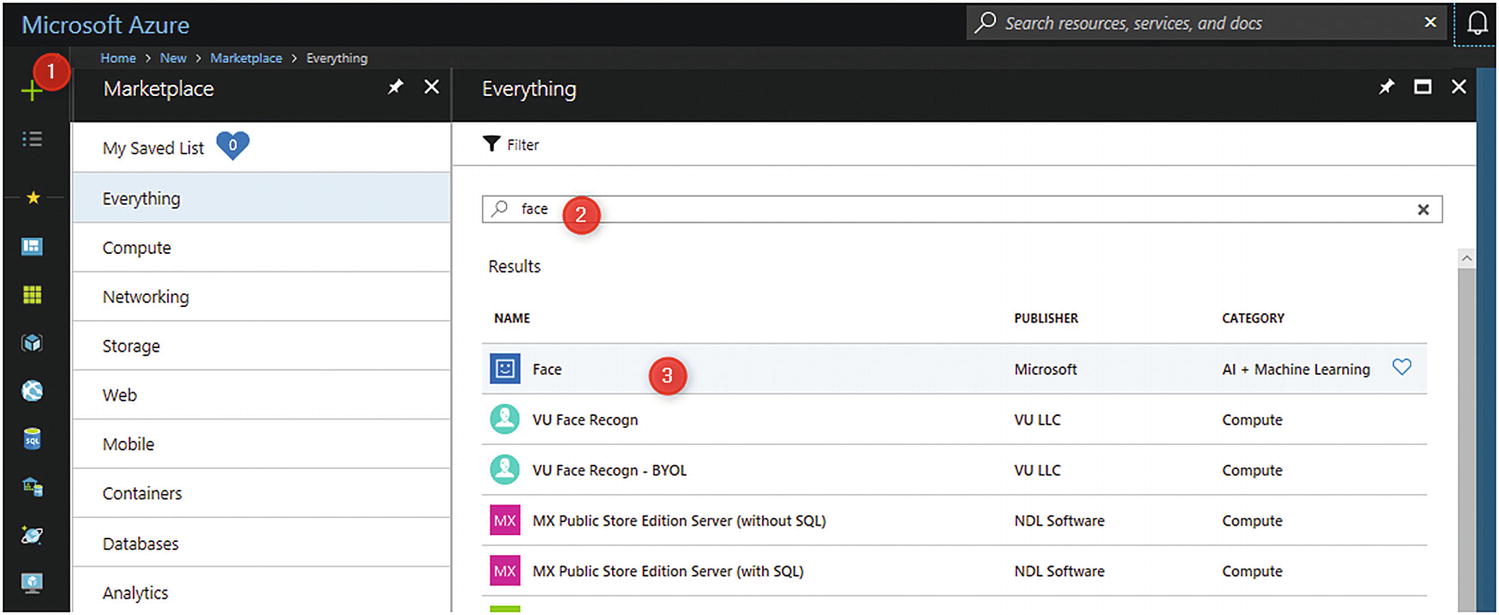

Face recognition feature in Azure

Collecting the Face API key

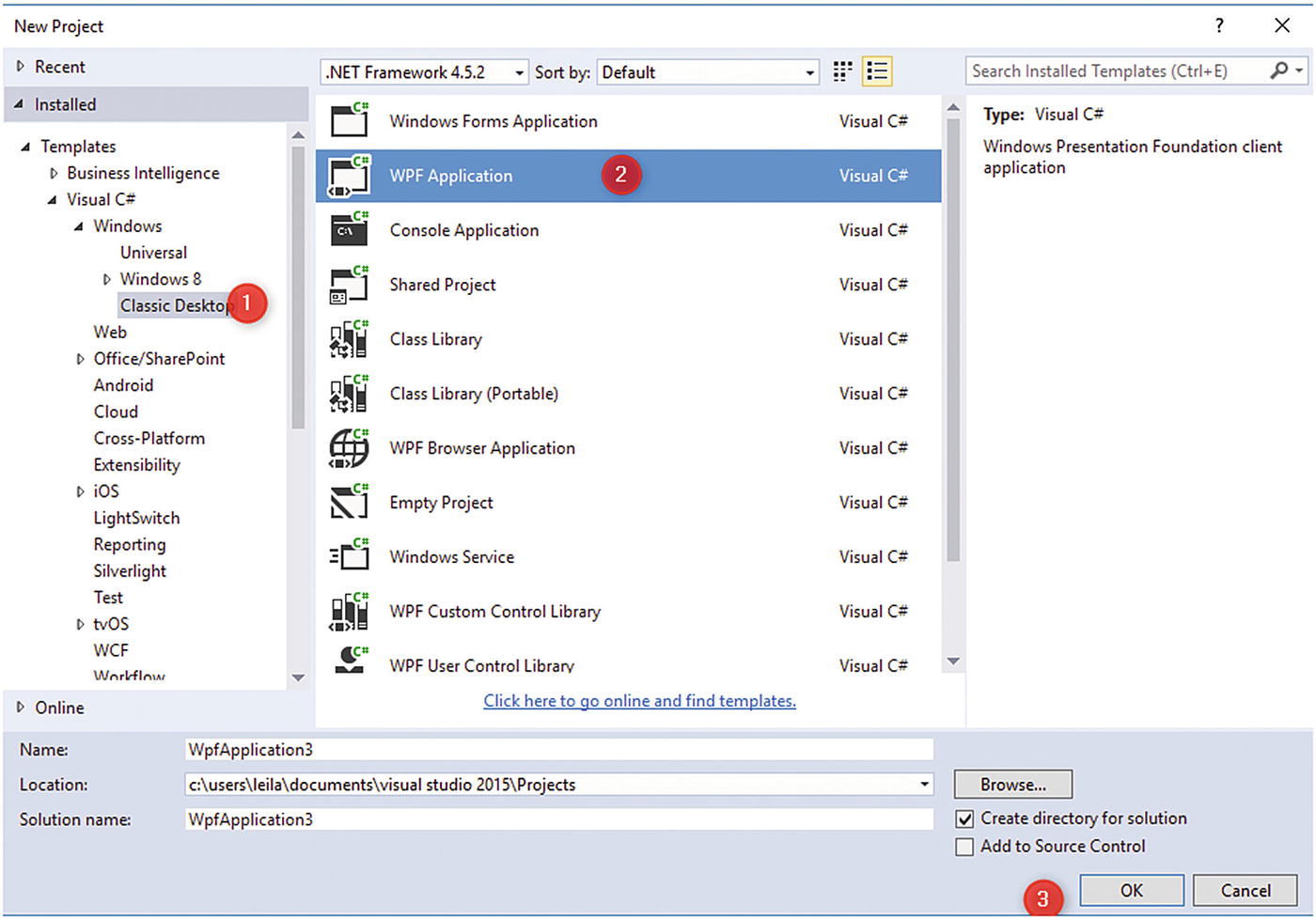

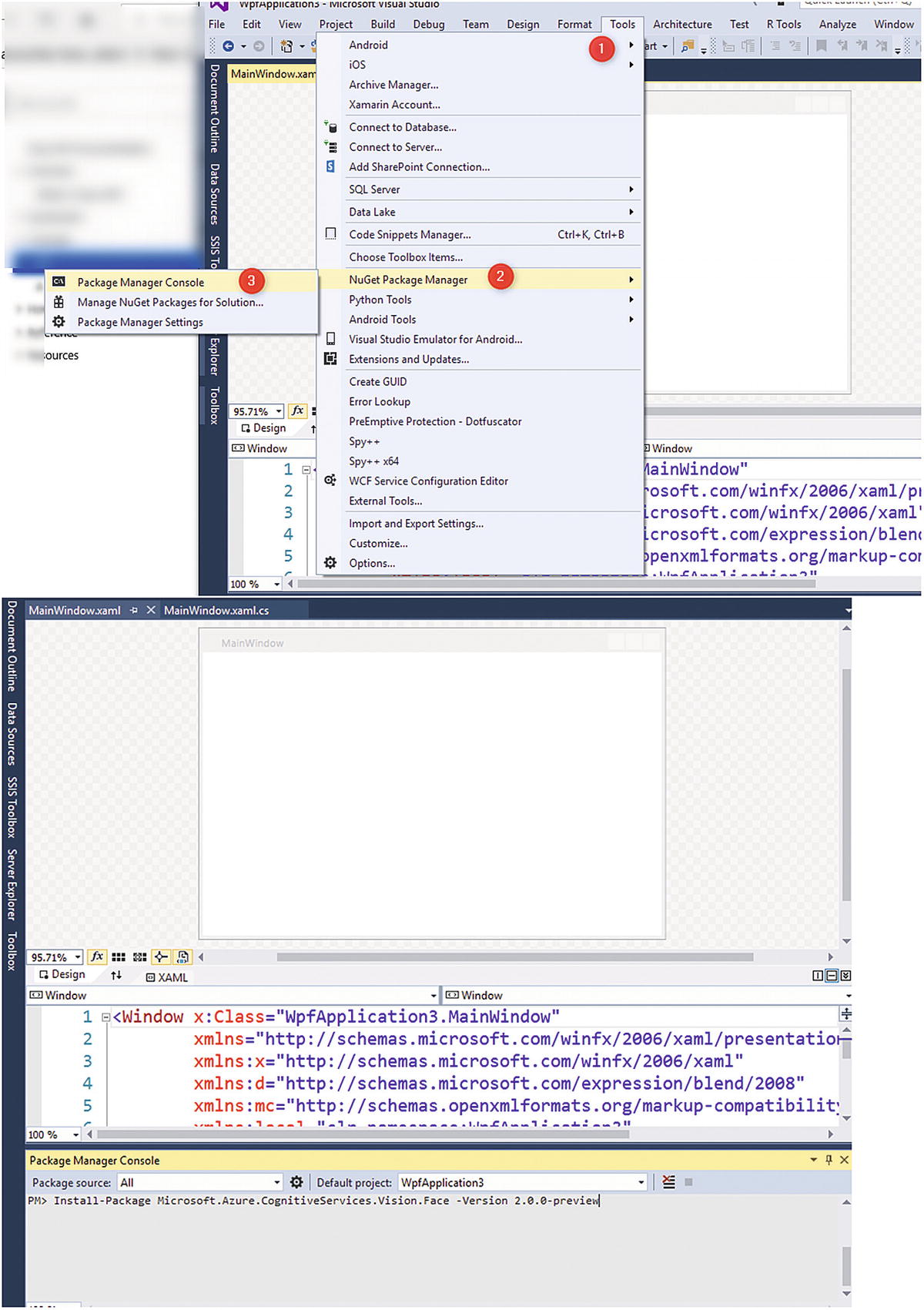

Create a Windows Application

Installing the Face API in Windows

Changing the code

Add Library for Face Recognition

Once all libraries and references are in place, the .NET application can access the Azure Cognitive Services library.

Face emotion detection
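The detect call returns a JSON array with one entry per face. Each entry holds a face rectangle and, when requested, a set of emotion scores. The Python sketch below assumes the v1.0 response shape and picks the strongest emotion for each face. The sample response is made up for illustration, and `dominant_emotions` is a hypothetical helper, not part of the chapter's .NET application.

```python
import json


def dominant_emotions(raw: bytes) -> list[tuple[dict, str, float]]:
    """For each detected face, return its rectangle, strongest emotion, and score."""
    results = []
    for face in json.loads(raw):
        emotions = face["faceAttributes"]["emotion"]
        name = max(emotions, key=emotions.get)  # emotion with the highest score
        results.append((face["faceRectangle"], name, emotions[name]))
    return results


# Made-up response in the assumed v1.0 detect shape.
sample = b'''[{"faceId": "f1",
  "faceRectangle": {"top": 40, "left": 60, "width": 120, "height": 120},
  "faceAttributes": {"emotion": {"anger": 0.0, "contempt": 0.0, "disgust": 0.0,
    "fear": 0.0, "happiness": 0.97, "neutral": 0.03, "sadness": 0.0, "surprise": 0.0}}}]'''

for rect, emotion, confidence in dominant_emotions(sample):
    print(f"face at ({rect['left']}, {rect['top']}): {emotion} ({confidence:.0%})")
```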

Summary

This chapter presented a brief introduction to the easy-to-use AI tools available in Microsoft Cognitive Services. It briefly described Cognitive Services and how they can be accessed. It then explained how to use the Cognitive Services Text Analytics API in other Microsoft tools, such as Power BI. Next, it discussed the process of using Cognitive Services APIs in a Windows application and showed all the related code. In the next chapter, Bot Framework, another tool for creating smart applications, will be explored.

References

- [1] Microsoft Azure, “Cognitive Services,” https://azure.microsoft.com/en-us/services/cognitive-services/ , 2019.
- [2] PAT Research, “What is Text Analytics,” www.predictiveanalyticstoday.com/text-analytics/ .
- [3] Kamran Iqbal, Fabrikam data set, GitHub, https://github.com/Kaiqb/KaiqbRepo0731190208/blob/master/CognitiveServices/TextAnalytics/FabrikamComments.csv , September 6, 2017.
- [4] Patrick Farley et al., “Tutorial: Create a WPF app to display face data in an image,” Microsoft Azure, https://docs.microsoft.com/en-us/azure/cognitive-services/face/tutorials/faceapiincsharptutorial , February 5, 2019.
- [5] Microsoft Visual Studio, “Visual Studio 2019,” Downloads, https://visualstudio.microsoft.com/downloads/ , 2019.