Virtualization isn't just about running Windows applications on Mac hardware. It's about maximizing your computing investment by running multiple operating systems and applications simultaneously. Running virtualized operating systems also helps protect your host computing environment by isolating the virtual machines from the host machine and each other, which is great for testing new versions of software and isolating programming efforts (rather than risk testing on the host machine). In addition, virtualization takes full advantage of multicore environments.

A multicore processor is like having multiple computers in one PC. Running Revit on VMs means that when one processor-intensive task starts, you'll still be able to manage other tasks. For example, while rendering your project in one VM, you can create a family component in a second VM and save your project to the central file in a third, all at the same time.

Until recently, Autodesk didn't officially support running its applications in virtualized environments. That changed in the fall of 2009, when official support was announced. Although most components of a virtualized system run at nearly full speed, emulated graphics for 3D-intensive applications remain a challenge for virtual environments. However, some recent and exciting developments in this area are removing even the most stringent barriers to adoption. In this chapter, you'll learn to:

- Understand the benefits of virtualization
- Take advantage of virtualization

Virtualization was originally pioneered more than 40 years ago by IBM to divide costly mainframe resources and run multiple applications at once. At the time, computers were far too expensive to be tied up computing single tasks. Virtualization allowed the host computer to run many "virtual" computers at the same time, each a duplicate of the host computer. Pretty cool!

Eventually, the need to share expensive mainframe computing resources decreased, and virtualization was no longer necessary. The decline of mainframe computing coincided with advances in personal computers (PCs). Personal computing meant that end users could finally run both their operating system and their applications locally, right at their desk, on their own computer. So, in some respects, computing was becoming more and more "decentralized." Another viewpoint is that computing has actually become "hyper-centralized," because nearly everyone has a computer right at their desk!

By today's standards, early PCs were quite primitive. "Top-of-the-line" personal computers came with a 60MHz processor and a 250MB hard drive. Only 15 years later, the phone in your pocket probably has a 400MHz processor (more than a sixfold increase) and 8GB of storage (more than a thirtyfold increase in capacity).

As for today's personal computing, processor speeds once measured in megahertz (MHz) are now measured in gigahertz (GHz), and single-core processors have given way to processors that contain multiple "cores." This means that a single processor may contain two or more independent cores. And it's not uncommon for computers to have multiple processors. That's a lot of computing power. And in many cases, it's a lot of underused computing power.

Did you know that you might actually have a computer with multiple processors, each of which contains multiple cores? That means you effectively have many potential computing environments. For example, if your computer contains two quad-core processors, you potentially have eight simultaneous computing environments!
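To make the arithmetic concrete, here is a minimal Python sketch (the function name `total_cores` is hypothetical, chosen for illustration) that multiplies processors by cores per processor, plus a call to the standard library's `os.cpu_count()` so you can see what your own machine reports. Keep in mind that `os.cpu_count()` counts logical processors as the operating system sees them, which on systems with hyper-threading can be higher than the physical core count.

```python
import os


def total_cores(processors: int, cores_per_processor: int) -> int:
    """Physical cores = number of processors x cores in each processor."""
    return processors * cores_per_processor


# The example from the text: two quad-core processors.
print(total_cores(2, 4))  # 8

# What this machine reports: logical CPUs as seen by the OS (may be None
# if the count can't be determined).
print(os.cpu_count())
```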

You're probably beginning to understand why virtualization is making a comeback. Those 40-year-old computing principles are being applied to personal computing. A multicore CPU will allow applications to run simultaneously and faster (within a single operating system). Virtualization allows multiple operating systems to run concurrently on the same computer.

This works because each VM is allocated a share of the physical resources of the multiprocessor/multicore computer while "virtualizing" the other hardware resources an operating system requires. So each virtualized session contains its very own operating system (Windows, Linux, and so on), and within each virtualized operating system, multiple applications can run.

A lot of great applications run in Windows, but some very industry-specific applications run in Linux. Others may only run in Mac OS X (which is Unix based). Virtualization allows you to create a fantastic best-of-breed solution, where you get to choose not only the application but also the operating system for that application.

Just imagine: not too long ago, radios were AM only, then later FM, and eventually both AM and FM. Even the first TVs were VHF only (channels 2–13); UHF (channels 14–83) was added later. If you wanted AM and FM, or VHF and UHF, you had to buy two radios and two televisions! Well, that certainly seems unimaginable now. The same now goes for personal computing. You no longer have to buy multiple computers to run multiple operating systems, because you effectively already have multiple computers in your "one" computer. You're just not taking advantage of them!

Figure 23.1 illustrates this with four concurrent "spaces" in Mac OS X (which is being used as the host computer). In turn, this host computer is running two virtual machines. One VM runs Windows XP with Revit 2010 (upper right). The other runs Vista 64 with a beta of Revit 2011 (lower right). At the same time, the upper and lower spaces on the left contain instant messaging sessions, email, a web browser, and word processing applications (running on the host computer).

To get started with virtualization, you'll need a computer with multiple processors (or a single processor with multiple cores). You'll also need enough memory to allocate to your host and your guest (or virtual) computers: 4GB of RAM will do nicely. The number of real and virtual processors you have will help you determine how many computing environments can run simultaneously. For example, if you have four processing cores and 8GB of RAM, and you want to allocate each computer (real or virtual) at least 2GB of RAM, then you'll be able to run up to four computers at the same time (one host computer and three guest computers).
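The sizing logic above amounts to taking the smaller of two limits: one computer per core, and one computer per allocation of RAM. The following Python sketch assumes exactly that rule of thumb (one core and a fixed amount of RAM for each computer, real or virtual); the function and parameter names are hypothetical, and real virtualization products let you allocate resources more flexibly than this.

```python
def max_concurrent_computers(cores: int, ram_gb: float, ram_per_computer_gb: float) -> int:
    """Rough upper bound on how many computers (host plus guests) can run at once.

    Assumes each computer, real or virtual, needs one core and at least
    ram_per_computer_gb of memory; whichever resource runs out first is the limit.
    """
    limit_by_cores = cores
    limit_by_memory = int(ram_gb // ram_per_computer_gb)
    return min(limit_by_cores, limit_by_memory)


# The example from the text: four cores, 8GB of RAM, 2GB per computer.
total = max_concurrent_computers(cores=4, ram_gb=8, ram_per_computer_gb=2)
guests = total - 1  # the host computer always takes one of the slots
print(total, guests)  # 4 3
```

With 16GB of RAM but still four cores, the core count would become the limit and the answer would stay at four computers.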

The host computer is the actual physical machine along with its operating system (OS). Windows, Linux, and Mac OS X can all serve as the host operating system. The host computer simply hosts the virtual OSs, and those virtual operating systems in turn run their virtualized applications (this is the guest computer). Standard x86 hardware with Intel or AMD processors can host virtual machines.

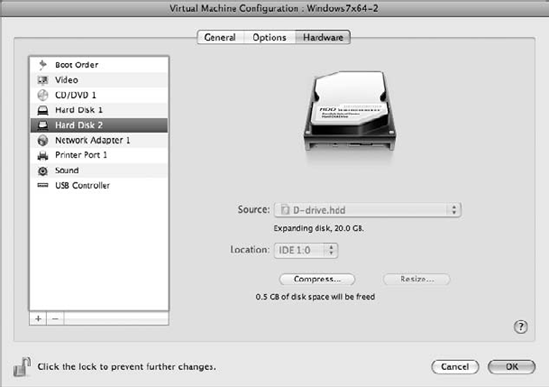

The guest computer is simply a virtual machine. The VM contains the virtual OS, applications, and even user files (like a document, spreadsheet, and so on) all contained in a single file.

The guest may reside on the same hard drive as the host machine. But the guest machine may also be stored (and backed up) on another internal or external hard drive or on other portable media.

Essentially, each VM exists as a separate, virtual disk image. Yet files can still be shared in both directions: files on the host machine may be accessed by the guest machine, and vice versa. For example, a Microsoft Word document residing on a host machine (running OS X) may be accessed, opened, and saved by a guest VM running Windows XP and Microsoft Office. Likewise, the guest VM may contain files accessed by the host machine. It's really quite flexible.

As a result, it's important to keep in mind that the files that you need to access within a VM (or guest machine) need not reside "inside" the VM. Your files may reside on the host machine or even at a location that can be accessed by either the host or the guest, such as a LAN or WAN network drive or even an external hard drive.

Basically, it's all about flexibility and being able to select a best-of-breed solution that suits your business and working style. Having to choose one "right" personal computer for work (or your family, for that matter) is a thing of the past. Instead, you can choose one right host, likely the one that suits a majority of your needs. Then you're free to virtualize the other operating systems and applications that you'll run from your host of choice.

From the standpoint of IT and end-user support, there are numerous advantages to allowing end users to work in virtual environments. First, the rollout of approved disk images (containing both OS and applications, and even user files and templates if necessary) is greatly simplified. Everything is self-contained in a single file. Rolling out prebuilt VM images can make new installations and upgrades easier, more predictable, and far less time-consuming. Even installing operating systems in VMs takes far less time because you're writing the data to RAM.

Another advantage is that the entire OS and all required applications may be quickly restored. In the event of a corrupted hard drive (where the VM resides), a duplicate VM can take its place in a matter of minutes rather than hours or days. Just copy a backup of the VM to a new hard drive as you would any other file. There's no reinstalling the OS, applications, user settings, preferences, and so on; it's all already there in the VM.

If you have to support legacy operating systems and applications, then virtualization is a no-brainer. Rather than maintaining aging hardware, out-of-date operating systems, and legacy applications, you can simply virtualize everything in a single file. This keeps your teams and project managers happy, and you don't have to worry about maintaining or replacing aging equipment.

And even if you've been in IT for only a short time, you've probably experienced firsthand the unintended consequences of a rogue OS or software update or patch that completely ruined an otherwise useful computer! In these cases, at least two people are stuck: the person who was being quite productive until a few moments ago, and the person who has to troubleshoot the computer back into usefulness (in other words, you). With virtualization, new software and operating system updates can be tested first in a VM without the risk of impacting the host system.

Finally, networking and hardware connectivity and emulation may be completely isolated from the host machine. This lets you contain a virus within the VM without risking the host machine or other virtual machines on the host. Just delete the suspect VM and restore it from a backup (or roll the VM back to a previous state).

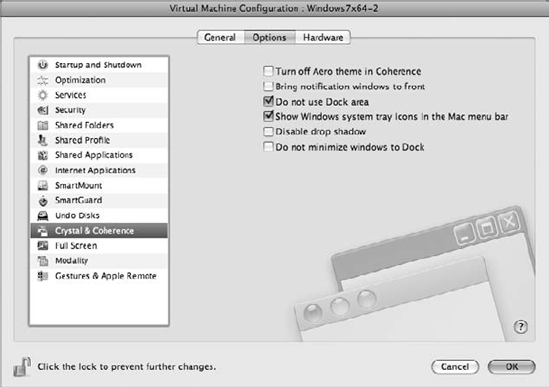

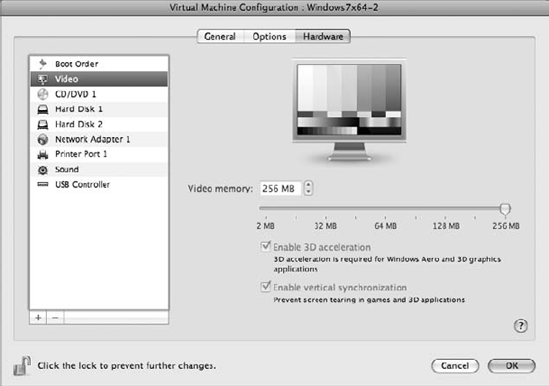

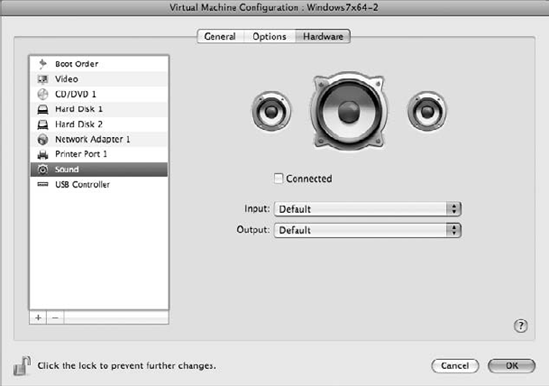

There are also numerous, compelling advantages for the end users running virtual machines. First, computing resources may be easily allocated to the guest OS and applications depending on specific requirements. Of course, 3D-centric applications will need more resources. But word processing and spreadsheets will require far less. Less hardware-demanding applications won't compete with operating systems and applications, which require more resources, and all of this can be defined within the settings of each VM.

Second, customized VMs and applications can be created for individual users quickly and easily. Of course, economies of scale often prevail in large organizations, where choosing the "right" hardware, OS, and applications is paramount. But with virtualization, you can define best-of-breed solutions for your associates and deploy them quickly and easily. This "mass customization" can go a long way toward employee satisfaction. Portability is often a key concern as well, and a modest external hard drive can hold dozens of virtual machines. Let end users choose the "computer" that's right for them. And if that computer fails, they can simply take the portable hard drive to another computer for minimal downtime.

End users may operate multiple VMs simultaneously, which takes full advantage of multicore computing resources. Of course, licensing operating systems and applications is an important business concern. However, network licensing of operating systems and applications allows users to run multiple operating systems and applications at the same time while remaining fully compliant. The virtual machines simply need to be able to access licenses from the network, which is not much different from how the host computer already operates.

Of course, every computer crashes, even virtual ones! But if an application or OS within a VM crashes, the host OS and remaining VMs are isolated. As a result, your host computer is typically not affected by a crash of the guest computer. The end user simply restarts the virtual machine and its applications.

But even when computers don't crash, it's still pretty easy to tell when they're "struggling" to complete one task before they let you start another. This is referred to as the "white screen of death," since your computer simply hangs with a white screen until it's done with whatever it was working on and ready to let you do something else.

When running processor- and memory-intensive applications and processes in virtualization, end users can quickly switch to another VM and continue working in another application, avoiding the dreaded "white screen of death." In other words, while Revit is saving to Central or completing a rendering in one virtual machine, you're free to work in another virtual machine or on the host computer until the task in the VM completes. Multitasking indeed!

And finally, not only will the end users be able to choose the best-of-breed operating system and applications, but as we've illustrated earlier, they'll also be able to run them concurrently! There's no rebooting between operating systems or other sessions. Nor will your end users need to keep multiple computers within arm's reach to multitask. It's all there in one single computer!

Just like any computer, there are practical limitations to running virtual computers. Depending on the application that you're running in virtualization, response times are going to be nearly as fast as running it natively—or significantly slower. Real-world experience indicates that bottlenecks in speed tend to occur in applications that are graphically intensive: video, video games, and 3D graphics.

From the standpoint of your host computer, it's important that each of your virtual machines have one (or more) processors preallocated to the virtual machine. This means that every time you start your VM, the host computer will dedicate a processor of your host computer to the VM.

Keep in mind that when your VM is opened, the entire computer (operating system, applications, files) will try to operate in RAM rather than writing back and forth to your hard drive. So, how much memory you allocate to a VM is really important. But there is a point of diminishing returns: the more memory you allocate to the guest machine, the less is available to the host machine. And since the host machine is responsible for running your virtualization software, starving the host of memory will reduce how efficiently your guest machine runs.

The size of the file opened by your virtualized application (on your virtual machine) will also affect how efficiently your guest machine runs. Keep an eye on your virtualized Task Manager. As a rule of thumb, we suggest that you allocate at least 20 times the size of your open Revit file in memory. So if you have a file that is 100MB in size, you'll want to allocate at least 2GB of memory to that Revit file alone. But keep in mind that you also have the operating system and the application to consider.
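Here is the 20x rule of thumb expressed as a short Python sketch. The multiplier comes from the text; the function name and the `os_and_app_mb` parameter are hypothetical, the latter a placeholder for whatever the guest OS and Revit themselves need. The text gives no figure for that overhead, so you'd measure it on your own guest and pass it in.

```python
REVIT_MEMORY_MULTIPLIER = 20  # rule of thumb from the text: at least 20x the open file size


def min_vm_memory_mb(revit_file_mb: float, os_and_app_mb: float = 0.0) -> float:
    """Minimum guest memory in MB for one open Revit file, per the 20x rule.

    os_and_app_mb is a hypothetical placeholder for what the guest OS and
    Revit itself need; the text gives no number, so measure your own.
    """
    return revit_file_mb * REVIT_MEMORY_MULTIPLIER + os_and_app_mb


# The example from the text: a 100MB file needs about 2GB for the file alone.
print(min_vm_memory_mb(100))  # 2000.0
```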

Interestingly enough, virtual machines will run nearly as fast as if they were running natively as the host operating system. In some cases that require intensive reading and writing to the physical hard drive, your virtual machine may actually perform better than running on a host machine because in many cases it is faster to write data to RAM. So, where is the bottleneck we all keep reading about? Graphics.

Virtual machines do their best to emulate graphics cards, but unlike the processors a VM preallocates from real hardware, emulated graphics are no substitute for the "real" thing. Graphics emulation will get you close for most business applications (word processing, spreadsheets, and so on). But graphics-intensive applications will stress your virtual machine.

What is needed is dedicated graphics. In other words, just like the VM relies on a dedicated, physical processor, a virtual machine that is tasked with running graphically intensive applications could take advantage of dedicated graphics hardware. We'll talk about this later in the chapter when we discuss some exciting recent developments in virtualization.

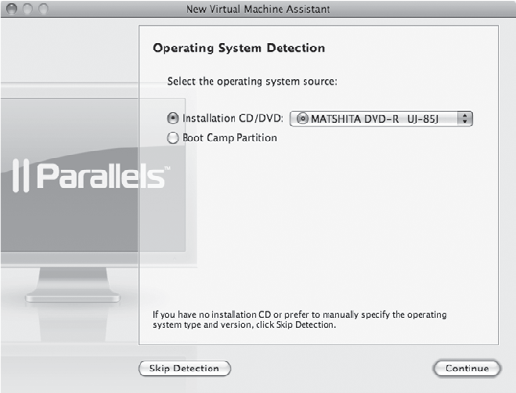

There are two primary methods of creating a virtual machine. You can start from scratch and create a clean installation of your desired operating system (Figure 23.2), or if you already have a computer that you know you want to use, you can convert your physical computer to a virtual one.

If you want to start from scratch and create a clean installation of an operating system and applications, simply start your VM software and select File

Depending on the operating system, expect the VM to restart a few times during the process. But overall it should take significantly less time to create a VM than to install an operating system on a host computer. When the installation is complete, remember to check for recent updates to the operating system. We've found that downloading and installing these updates (which usually require the guest computer to reboot after install) can take as long to complete as the installation of the whole operating system.

What about network and Internet access on the VM? Unless you intend to completely isolate your guest computer from the network and Internet, it's a good idea to install virus protection software just as you would on any host computer. Fortunately, both VMware and Parallels offer subscriptions to virus protection software as part of the purchase of either virtual solution.

Keep in mind that after you've created your clean install (and installed any suggested critical updates), you may not want to start installing any applications right away. Why? Because you've just spent a fair amount of time creating a brand-new virtual computer! So, before you start spending a lot of time installing applications and moving files to your guest computer, why not create a copy for safekeeping? The original clean install will be updated from time to time through system updates. But the copy will be a starting point for installing your applications.

By creating a copy of your new guest computer, you'll have a great starting point for testing new applications without risking the rest of your virtual environments. And when you're done testing a new application, simply delete the VM and make a fresh copy of the clean one. In some cases, installing an application on a clean, duplicate guest computer is faster than uninstalling the application and reinstalling it in the same VM.
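If your VMs live on disk as ordinary files or folders, making that safety copy can be a plain file copy. The Python sketch below assumes the VM is shut down first (so nothing is writing to its disk image) and that it is stored either as a single file or as a folder, depending on the product. The function name and paths are hypothetical, and your VM software's own clone or duplicate command, if it has one, may be the better choice.

```python
import shutil
from pathlib import Path


def clone_clean_vm(template: Path, clone: Path) -> Path:
    """Copy a shut-down "clean install" VM so you can experiment on the copy.

    Works whether the VM is stored as a single file or as a folder.
    Refuses to overwrite anything already at the clone path.
    """
    if clone.exists():
        raise FileExistsError(f"{clone} already exists; not overwriting it")
    if template.is_dir():
        # Folder-style VMs: copy the whole directory tree.
        shutil.copytree(template, clone)
    else:
        # Single-file VMs: copy the file along with its timestamps.
        shutil.copy2(template, clone)
    return clone


# Example (hypothetical paths; substitute the location of your own VMs):
# clone_clean_vm(Path("/VMs/WinXP-clean"), Path("/VMs/WinXP-revit-test"))
```

When you're finished testing, deleting the copy and running the function again gives you a fresh starting point without touching the clean original.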

In some cases, you don't want to spend the time to create a VM that duplicates a physical machine, particularly if it means re-creating a physical machine whose operating system, numerous applications, and dozens of user-created files have grown and evolved over time. Trying to exactly re-create such a physical computer would be nearly impossible, especially if it's a work-issued desktop or laptop that contains applications and settings that you can't install or duplicate yourself.

Fortunately, both Parallels and VMware have applications that allow you to turn your physical computer into a virtual computer, in effect migrating the entire computer into a VM. This is commonly referred to as physical-to-virtual (P2V) technology. The applications to complete the P2V migration are provided on a complimentary basis by the software vendors. Obviously it's in their interest to get you to give virtualization a try! Not only that, but both Parallels and VMware offer 30-day trials of their virtual solutions so you can create or convert a trial virtual computer.

To download Parallels Transporter, go here:

www.parallels.com/products/pvc45/technology/transporter/

To download VMware Converter, go here:

www.vmware.com/products/converter/

Once you've downloaded either (or both) P2V conversion solutions, simply install the application, start it, and follow the on-screen directions. It's pretty straightforward, but it may take some time (up to a few hours), and while the conversion is taking place, it's best not to work on the host computer.

When the conversion is complete, you'll have a single file that is very close to the size of the used capacity of your physical computer. For example, if your physical computer's operating system, applications, files, settings, and so on take up 60GB of space on your hard drive, you'll have a 60GB file when the P2V conversion is complete. Therefore, it's important to have more free space on your hard drive than you're presently using before you start the conversion process. Or better yet, you can write the conversion (the creation of the virtual computer) to another hard drive, even an external hard drive.
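Before starting a conversion, you can check that rule with Python's standard `shutil.disk_usage()`, which reports total, used, and free bytes for the drive containing a given path. This sketch compares the used space on the source drive with the free space on the destination drive; the function name and drive paths are hypothetical. Because the converted file is only close to the used capacity, leaving some extra headroom beyond what this check requires is prudent.

```python
import shutil


def has_room_for_p2v(source_drive: str, destination_drive: str) -> bool:
    """Return True if the destination drive has more free space than the
    source drive currently uses.

    Follows the rule in the text: the P2V result is a file roughly the size
    of the physical computer's used capacity.
    """
    used_on_source = shutil.disk_usage(source_drive).used
    free_on_destination = shutil.disk_usage(destination_drive).free
    return free_on_destination > used_on_source


# Example (hypothetical drive paths on Windows):
# print(has_room_for_p2v("C:\\", "E:\\"))
```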

Prepare yourself when you open this VM for the first time: expect everything to look and feel the same as your physical computer, and expect to be at least a little "giddy" when everything is just there. All the settings, software, and files, even the desktop, will look just like your physical computer, except that now your computer may reside on an external hard drive and is no longer tied to a specific location. Any computer that has the virtualization software installed is capable of running your virtual computer. So if your workstation or laptop is unavailable or in need of repair, you can still be up and running!

- Understand the benefits of virtualization.

Virtualization offers advantages for both IT support and end users.

- Master It

What are the advantages of virtualization?

- Take advantage of virtualization.

Having to maintain multiple operating systems is hardly uncommon. But rather than maintain obsolete hardware and applications, why not utilize virtualization and maintain best-of-breed solutions?

- Master It

What's the easiest way to try out virtualization?