4

Model‐Based Processors

4.1 Introduction

In this chapter, we introduce the fundamental concepts of model‐based signal processing using state‐space models 1,2. We first develop the paradigm using the linear, (time‐varying) Gauss–Markov model of the previous chapter and then investigate the required conditional expectations leading to the well‐known linear Kalman filter (LKF) processor 3. Based on this fundamental theme, we progress to the idea of the linearization of a nonlinear, state‐space model also discussed in the previous chapter, where we investigate a linearized processor – the linearized Kalman filter (LZKF). It is shown that the resulting processor can provide a solution (time‐varying) to the nonlinear state estimation. We then develop the extended Kalman filter (EKF) as a special case of the LZKF, linearizing about the most available state estimate, rather than a reference trajectory. Next we take it one step further to briefly discuss the iterated–extended Kalman filter (IEKF) demonstrating improved performance.

We introduce an entirely different approach to Kalman filtering – the unscented Kalman filter (UKF) that evolves from a statistical linearization, rather than a dynamic model linearization of the previous suite of nonlinear approaches. The theory is based on the concept of “sigma‐point” transformations that enable a much better matching of first‐ and second‐order statistics of the assumed posterior distribution with even higher orders achievable.

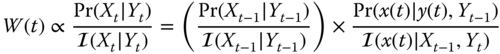

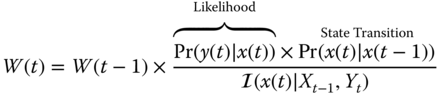

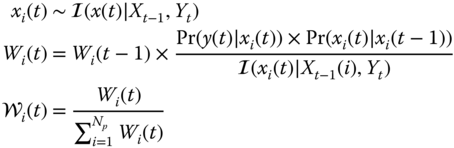

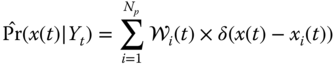

Finally, we return to the basic Bayesian approach of estimating the posterior state distribution directly leading to the particle filter (PF). Here following the Bayesian paradigm, the processor, which incorporates the nonlinear state‐space model, produces a nonparametric estimate of the desired posterior at each time‐step. Computationally more intense, but it is capable of operating in both non‐Gaussian and multimodal distribution environments. From this perspective, it is important to realize that the LKF is essentially an efficient recursive technique that estimates the conditional mean and covariance of the posterior Gaussian distribution, but is incapable of dealing with multimodal, non‐Gaussian problems effectively.

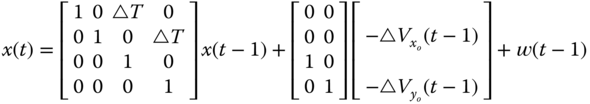

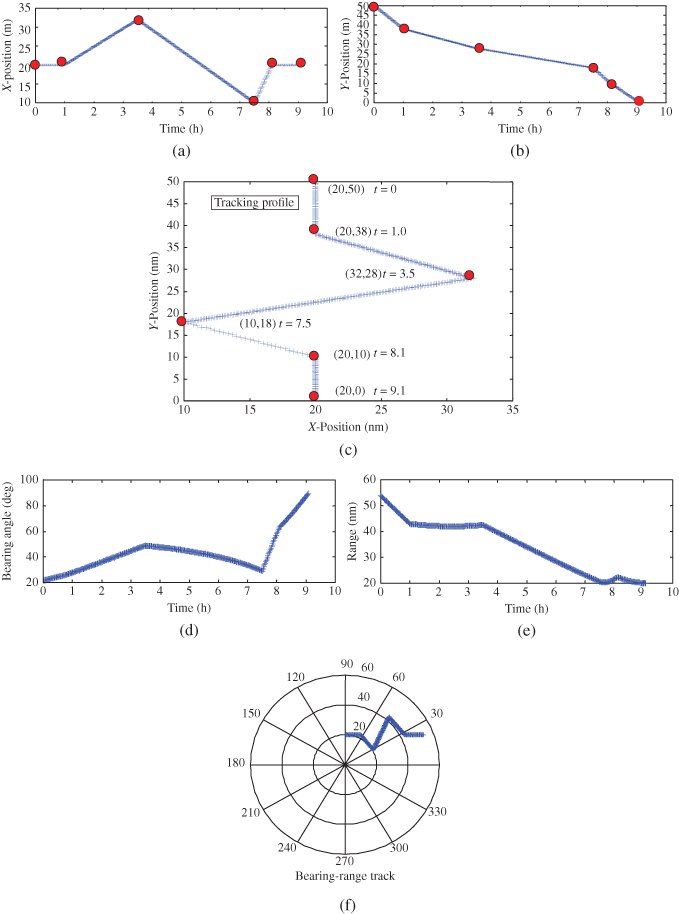

We summarize the results with a case study implementing a 2D‐tracking filter.

4.2 Linear Model‐Based Processor: Kalman Filter

We develop the model‐based processors (MBP) for the dynamic estimation problem, that is, the estimation of state processes that vary with time. Here the state‐space representation is employed as the basic model embedded in the algorithm. To start with, we present the Kalman filter algorithm in the predictor–corrector form. We use this form because it provides insight into the operation of this state‐space (MBP) as well as the other recursive processors to follow.

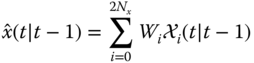

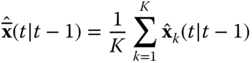

Table 4.1 Linear Kalman filter algorithm (predictor–corrector form).

| Prediction | |

|

|

(State Prediction) |

|

|

(Measurement Prediction) |

|

|

(Covariance Prediction) |

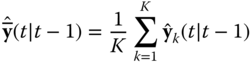

| Innovations | |

|

|

(Innovations) |

|

|

(Innovations Covariance) |

| Gain | |

|

|

(Gain or Weight) |

| Correction | |

|

|

(State Correction) |

|

|

(Covariance Correction) |

| Initial conditions | |

|

|

|

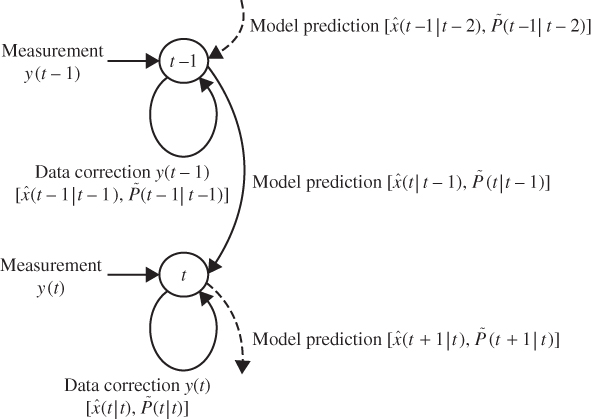

Figure 4.1 Predictor–corrector form of the Kalman filter: Timing diagram.

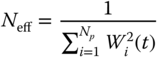

The operation of the MBP algorithm can be viewed as a predictor–corrector algorithm as in standard numerical integration. Referring to the algorithm in Table 4.1 and Figure 4.1, we see its inherent timing in the algorithm. First, suppose we are currently at time ![]() and have not received a measurement,

and have not received a measurement, ![]() as yet. We have the previous filtered estimate

as yet. We have the previous filtered estimate ![]() and error covariance

and error covariance ![]() and would like to obtain the best estimate of the state based on

and would like to obtain the best estimate of the state based on ![]() data samples. We are in the “prediction phase” of the algorithm. We use the state‐space model to predict the state estimate

data samples. We are in the “prediction phase” of the algorithm. We use the state‐space model to predict the state estimate ![]() and its associated error covariance

and its associated error covariance ![]() . Once the prediction based on the model is completed, we then calculate the innovations covariance

. Once the prediction based on the model is completed, we then calculate the innovations covariance ![]() and gain

and gain ![]() . As soon as the measurement at time

. As soon as the measurement at time ![]() , that is,

, that is, ![]() , becomes available, then we determine the innovations

, becomes available, then we determine the innovations ![]() . Now we enter the “correction phase” of the algorithm. Here we correct or update the state based on the new information in the measurement – the innovations. The old, or predicted, state estimate

. Now we enter the “correction phase” of the algorithm. Here we correct or update the state based on the new information in the measurement – the innovations. The old, or predicted, state estimate ![]() is used to form the filtered, or corrected, state estimate

is used to form the filtered, or corrected, state estimate ![]() and

and ![]() . Here we see that the error, or innovations, is the difference between the actual measurement and the predicted measurement

. Here we see that the error, or innovations, is the difference between the actual measurement and the predicted measurement ![]() . The innovations is weighted by the gain matrix

. The innovations is weighted by the gain matrix ![]() to correct the old state estimate (predicted)

to correct the old state estimate (predicted) ![]() . The associated error covariance is corrected as well. The algorithm then awaits the next measurement at time

. The associated error covariance is corrected as well. The algorithm then awaits the next measurement at time ![]() . Observe that in the absence of a measurement, the state‐space model is used to perform the prediction, since it provides the best estimate of the state.

. Observe that in the absence of a measurement, the state‐space model is used to perform the prediction, since it provides the best estimate of the state.

The covariance equations can be interpreted in terms of the various signal (state) and noise models (see Table 4.1

). The first term of the predicted covariance ![]() relates to the uncertainty in predicting the state using the model

relates to the uncertainty in predicting the state using the model ![]() . The second term indicates the increase in error variance due to the contribution of the process noise

. The second term indicates the increase in error variance due to the contribution of the process noise ![]() or model uncertainty. The corrected covariance equation indicates the predicted error covariance or uncertainty due to the prediction, decreased by the effect of the update (KC), thereby producing the corrected error covariance

or model uncertainty. The corrected covariance equation indicates the predicted error covariance or uncertainty due to the prediction, decreased by the effect of the update (KC), thereby producing the corrected error covariance ![]() . The application of the LKF to the

. The application of the LKF to the ![]() circuit was shown in Figure 1.8, where we see the noisy data, the “true” (mean) output voltage, and the Kalman filter predicted measurement voltage. As illustrated, the filter performs reasonably compared to the noisy data and tracks the true signal quite well. We derive the Kalman filter algorithm from the innovations viewpoint to follow, since it will provide the foundation for the nonlinear algorithms to follow.

circuit was shown in Figure 1.8, where we see the noisy data, the “true” (mean) output voltage, and the Kalman filter predicted measurement voltage. As illustrated, the filter performs reasonably compared to the noisy data and tracks the true signal quite well. We derive the Kalman filter algorithm from the innovations viewpoint to follow, since it will provide the foundation for the nonlinear algorithms to follow.

4.2.1 Innovations Approach

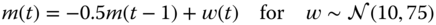

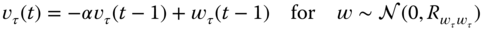

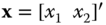

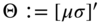

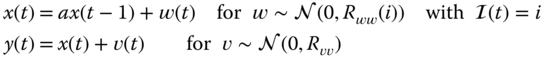

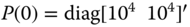

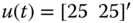

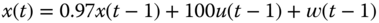

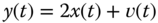

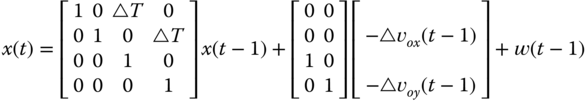

In this section, we briefly develop the (linear) Kalman filter algorithm from the innovations perspective following the approach by Kailath 4–6. First, recall from Chapter 2 that a model of a stochastic process can be characterized by the Gauss–Markov model using the state‐space representation

where ![]() is assumed zero‐mean and white with covariance

is assumed zero‐mean and white with covariance ![]() and

and ![]() and

and ![]() are uncorrelated. The measurement model is given by

are uncorrelated. The measurement model is given by

where ![]() is a zero‐mean, white sequence with covariance

is a zero‐mean, white sequence with covariance ![]() and

and ![]() is (assumed) uncorrelated with

is (assumed) uncorrelated with ![]() and

and ![]() .

.

The linear state estimation problem can be stated in terms of the preceding state‐space model as

GIVEN a set of noisy measurements ![]() , for

, for ![]() characterized by the measurement model of Eq. (4.2), FIND the linear minimum (error) variance estimate of the state characterized by the state‐space model of Eq. (4.1). That is, find the best estimate of

characterized by the measurement model of Eq. (4.2), FIND the linear minimum (error) variance estimate of the state characterized by the state‐space model of Eq. (4.1). That is, find the best estimate of ![]() given the measurement data up to time

given the measurement data up to time ![]() ,

, ![]() .

.

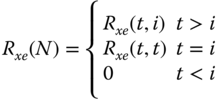

First, we investigate the batch minimum variance estimator for this problem, and then we develop an alternative solution using the innovations sequence. The recursive solution follows almost immediately from the innovations. Next, we outline the resulting equations for the predicted state, gain, and innovations. The corrected, or filtered, state equation then follows. Details of the derivation can be found in 2 .

Constraining the estimator to be linear, we see that for a batch of ![]() ‐data, the minimum variance estimator is given by

1

‐data, the minimum variance estimator is given by

1

where ![]() and

and ![]() , and

, and ![]() . Similarly, the linear estimator can be expressed in terms of the

. Similarly, the linear estimator can be expressed in terms of the ![]() data samples as

data samples as

where ![]() .

.

Here we are investigating a “batch” solution to the state estimation problem, since all the ![]() ‐vector data

‐vector data ![]() are processed in one batch. However, we require a recursive solution (see Chapter 1) to this problem of the form

are processed in one batch. However, we require a recursive solution (see Chapter 1) to this problem of the form

In order to achieve the recursive solution, it is necessary to transform the covariance matrix ![]() to be block diagonal.

to be block diagonal. ![]() block diagonal implies that all the off‐diagonal block matrices

block diagonal implies that all the off‐diagonal block matrices ![]() , for

, for ![]() , which in turn implies that the

, which in turn implies that the ![]() must be uncorrelated or equivalently orthogonal. Therefore, we must construct a sequence of independent

must be uncorrelated or equivalently orthogonal. Therefore, we must construct a sequence of independent ![]() ‐vectors, say

‐vectors, say ![]() , such that

, such that

The sequence ![]() can be constructed using the orthogonality property of the minimum variance estimator such that

can be constructed using the orthogonality property of the minimum variance estimator such that

We define the innovations or new information 4 as

with the orthogonality property that

Since ![]() is a time‐uncorrelated

is a time‐uncorrelated ![]() ‐vector sequence by construction, we have that the block diagonal innovations covariance matrix is

‐vector sequence by construction, we have that the block diagonal innovations covariance matrix is ![]() with each

with each ![]() .

.

The correlated measurement vector can be transformed to an uncorrelated innovations vector through a linear transformation, say ![]() , given by

, given by

where ![]() is a nonsingular transformation matrix and

is a nonsingular transformation matrix and ![]() . Multiplying Eq. (4.8) by its transpose and taking expected values, we obtain

. Multiplying Eq. (4.8) by its transpose and taking expected values, we obtain

Similarly, we obtain

Substituting these results into Eq. (4.4) gives

Since the ![]() are time‐uncorrelated by construction,

are time‐uncorrelated by construction, ![]() is block diagonal. From the orthogonality properties of

is block diagonal. From the orthogonality properties of ![]() ,

, ![]() is lower‐block triangular, that is,

is lower‐block triangular, that is,

where ![]() , and

, and ![]() .

.

The recursive filtered solution now follows easily, if we realize that we want the best estimate of ![]() given

given ![]() ; therefore, any block row can be written (for

; therefore, any block row can be written (for ![]() ) as

) as

If we extract the last (![]() th) term out of the sum (recall from Chapter 1), we obtain

th) term out of the sum (recall from Chapter 1), we obtain

or

where ![]() and

and ![]() .

.

So we see that the recursive solution using the innovations sequence instead of the measurement sequence has reduced the computations to inverting a ![]() matrix

matrix ![]() instead of a

instead of a ![]() matrix,

matrix, ![]() . Before we develop the expression for the filtered estimate of Eq. (4.11), let us investigate the innovations sequence more closely. Recall that the minimum variance estimate of

. Before we develop the expression for the filtered estimate of Eq. (4.11), let us investigate the innovations sequence more closely. Recall that the minimum variance estimate of ![]() is just a linear transformation of the minimum variance estimate of

is just a linear transformation of the minimum variance estimate of ![]() , that is,

, that is,

Thus, the innovations can be decomposed using Eqs. ( 4.2 ) and (4.12) as

for ![]() – the predicted state estimation error. Consider the innovations covariance

– the predicted state estimation error. Consider the innovations covariance ![]() using this equation:

using this equation:

This expression yields the following, since ![]() and

and ![]() are uncorrelated:

are uncorrelated:

The cross‐covariance ![]() is obtained as

is obtained as

Using the definition of the estimation error ![]() , substituting for

, substituting for ![]() and from the orthogonality property of the estimation error for dynamic variables 3

, that is,

and from the orthogonality property of the estimation error for dynamic variables 3

, that is,

we obtain

Thus, we see that the weight or gain matrix is given by

Before we can calculate the corrected state estimate, we require the predicted, or old estimate, that is,

If we employ the state‐space model of Eq. ( 4.1 ), then we have from the linearity properties of the conditional expectation

or

However, from the orthogonality property (whiteness) of ![]() , we have

, we have

which is not surprising, since the best estimate of zero‐mean, white noise is zero (unpredictable). Thus, the prediction is given by

To complete the algorithm, the expressions for the predicted and corrected error covariances must be determined. From the definition of predicted estimation error covariance, we see that ![]() satisfies

satisfies

Since ![]() and

and ![]() are uncorrelated, the predicted error covariance

are uncorrelated, the predicted error covariance ![]() can be determined from this relation 2

to give

can be determined from this relation 2

to give

The corrected error covariance ![]() is calculated using the corrected state estimation error and the corresponding state estimate of Eq. ( 4.11

) as

is calculated using the corrected state estimation error and the corresponding state estimate of Eq. ( 4.11

) as

Using this expression, we can calculate the required error covariance from the orthogonality property, ![]() , and Eq. (4.15) 2

yielding the final expression for the corrected error covariance as

, and Eq. (4.15) 2

yielding the final expression for the corrected error covariance as

This completes the brief derivation of the LKF based on the innovations sequence (see 2 for more details). It is clear to see that the innovations sequence holds the “key” to unlocking the mystery of Kalman filter design. In Section 4.2.3, we investigate the statistical properties of the innovations sequence that will enable us to develop a simple procedure to “tune” the MBP.

4.2.2 Bayesian Approach

The Bayesian approach to Kalman filtering follows in this brief development leading to the maximum a posteriori (MAP) estimate of the state vector under the Gauss–Markov model assumptions (see Chapter 3). Detailed derivations can be found in 2 or 7.

We would like to obtain the MAP estimator for the state estimation problem where the underlying Gauss–Markov model with ![]() ,

, ![]() , and

, and ![]() Gaussian distributed. We know that the corresponding state estimate will also be Gaussian, since it is a linear transformation of Gaussian variables.

Gaussian distributed. We know that the corresponding state estimate will also be Gaussian, since it is a linear transformation of Gaussian variables.

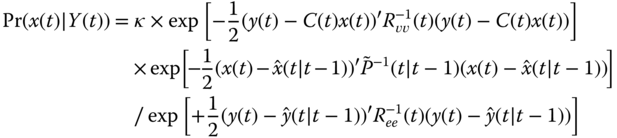

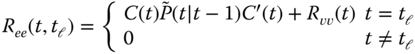

It can be shown using Bayes' rule that the a posteriori probability 2 , 7 can be expressed as

Under the Gauss–Markov model assumptions, we know that each of the conditional distributions can be expressed in terms of the Gaussian distribution as follows:

Substituting these probabilities into Eq. (4.22) and combining all constants into a single constant ![]() , we obtain

, we obtain

Recognizing the measurement noise, state estimation error, innovations, and taking natural logarithms, we obtain the log a posteriori probability in terms of the Gauss–Markov model as

The MAP estimate is then obtained by differentiating this expression, setting it to zero and solving to obtain

This relation can be simplified by using a form of the matrix inversion lemma 3 , enabling us to eliminate the first bracketed term in Eq. (4.24) to give

Solving for ![]() and substituting gives

and substituting gives

Multiplying out, regrouping terms, and factoring, this relation can be rewritten as

or finally

Further manipulations lead to equivalent expressions for the Kalman gain as

which completes the Bayes' approach to the Kalman filter. A complete detailed derivation is available in 2 or 7 for the interested reader.

4.2.3 Innovations Sequence

In this subsection, we investigate the properties of the innovations sequence, which have been used to develop the Kalman filter 2 . It is interesting to note that since the innovations sequence depends directly on the measurement and is linearly related to it, then it spans the measurement space in which all of our data reside. In contrast, the states or internal variables are not measured directly and are usually not available; therefore, our designs are accomplished using the innovations sequence along with its statistical properties to assure optimality. We call this design procedure minimal (error) variance design.

Recall that the innovations or equivalently the one‐step prediction error is given by

where we define ![]() for

for ![]() – the state estimation error prediction. Using these expressions, we can now analyze the statistical properties of the innovations sequence based on its orthogonality to the measured data. We will state each property first and refer to 2

for proof.

– the state estimation error prediction. Using these expressions, we can now analyze the statistical properties of the innovations sequence based on its orthogonality to the measured data. We will state each property first and refer to 2

for proof.

The innovations sequence is zero‐mean:

The second term is null by definition and the first term is null because ![]() is an unbiased estimator.

is an unbiased estimator.

The innovations sequence is white since

or

The innovations sequence is determined recursively using the Kalman filter and acts as a “Gram–Schmidt orthogonal decomposer” or equivalently whitening filter.

The innovations sequence is also uncorrelated with the deterministic input ![]() since

since

Assuming that the measurement evolves from a Gauss–Markov process as well, then the innovations sequence is merely a linear transformation of Gaussian vectors and is therefore Gaussian with ![]() .

.

Finally, the innovations sequence is related to the measurement by an invertible linear transformation; therefore, it is an equivalent sequence under linear transformations, since either sequence can be constructed from knowledge of the second‐order statistics of the other 2 .

We summarize these properties of the innovations sequence as follows:

- Innovations sequence

is zero‐mean.

is zero‐mean. - Innovations sequence

is white.

is white. - Innovations sequence

is uncorrelated in time and with input

is uncorrelated in time and with input  .

. - Innovations sequence

is Gaussian with statistics,

is Gaussian with statistics,  , under the Gauss–Markov assumptions.

, under the Gauss–Markov assumptions. - Innovations

and measurement

and measurement  sequences are equivalent under linear invertible transformations.

sequences are equivalent under linear invertible transformations.

The innovations sequence spans the measurement or data space, but in the Kalman filter design problem, we are concerned with the state‐space. Analogous to the innovations sequence in the output space is the predicted state estimation error ![]() in the state‐space. It is easy to show that from the orthogonality condition of the innovations sequence that the corresponding state estimation error is also orthogonal to

in the state‐space. It is easy to show that from the orthogonality condition of the innovations sequence that the corresponding state estimation error is also orthogonal to ![]() , leading to the state orthogonality condition (see 2

for more details).

, leading to the state orthogonality condition (see 2

for more details).

This completes the discussion of the orthogonality properties of the innovations sequence. Next we consider more practical aspects of processor design and how these properties can be exploited to produce a minimum variance processor.

4.2.4 Practical Linear Kalman Filter Design: Performance Analysis

In this section, we heuristically provide an intuitive feel for the operation of the Kalman filter using the state‐space model and Gauss–Markov assumptions. These results coupled with the theoretical points developed in 2 lead to the proper adjustment or “tuning” of the processor. Tuning the processor is considered an art, but with proper statistical tests, the performance can readily be evaluated and adjusted. As mentioned previously, this approach is called the minimum (error) variance design. In contrast to standard filter design procedures in signal processing, the minimum variance design adjusts the statistical parameters (e.g. covariances) of the processor and examines the innovations sequence to determine if the LKF is properly tuned. Once tuned, then all of the statistics (conditional means and variances) are valid and may be used as reasonable estimates. Here we discuss how the parameters can be adjusted and what statistical tests can be performed to evaluate the filter performance.

Heuristically, the Kalman filter can be viewed simply by its update equation:

where ![]() and

and ![]() .

.

Using this representation of the KF, we see that we can view the old or predicted estimate ![]() as a function of the state‐space model

as a function of the state‐space model ![]() and the prediction error or innovations

and the prediction error or innovations ![]() as a function primarily of the new measurement, as indicated in Table 4.1

. Consider the new estimate under the following cases:

as a function primarily of the new measurement, as indicated in Table 4.1

. Consider the new estimate under the following cases:

So we can see that the operation of the processor is pivoted about the values of the gain or weighting matrix ![]() . For small

. For small ![]() , the processor “believes” the model, while for large

, the processor “believes” the model, while for large ![]() , it believes the measurement.

, it believes the measurement.

Let us investigate the gain matrix and see if its variations are consistent with these heuristic notions. First, it was shown in Eq. (4.28) that the alternative form of the gain equation is given by

Thus, the condition where ![]() is small can occur in two cases: (i)

is small can occur in two cases: (i) ![]() is small (fixed

is small (fixed ![]() ), which is consistent because small

), which is consistent because small ![]() implies that the model is adequate; and (ii)

implies that the model is adequate; and (ii) ![]() is large (

is large (![]() fixed), which is also consistent because large

fixed), which is also consistent because large ![]() implies that the measurement is noisy, so again believe the model.

implies that the measurement is noisy, so again believe the model.

For the condition where ![]() is large, two cases can also occur: (i)

is large, two cases can also occur: (i) ![]() is large when

is large when ![]() is large (fixed

is large (fixed ![]() ), implying that the model is inadequate, so believe the measurement; and (ii)

), implying that the model is inadequate, so believe the measurement; and (ii) ![]() is small (

is small (![]() fixed), implying the measurement is good (high signal‐to‐noise ratio [SNR]). So we see that our heuristic notions are based on specific theoretical relationships between the parameters in the KF algorithm of Table 4.2.

fixed), implying the measurement is good (high signal‐to‐noise ratio [SNR]). So we see that our heuristic notions are based on specific theoretical relationships between the parameters in the KF algorithm of Table 4.2.

Table 4.2 Heuristic notions for Kalman filter tuning.

| Kalman filter heuristics | ||

| Condition | Gain | Parameter |

| Believe model | Small |

|

|

|

||

| Believe measurement | Large |

|

|

|

||

Summarizing, a Kalman filter is not functioning properly when the gain becomes small and the measurements still contain information necessary for the estimates. The filter is said to diverge under these conditions. In this case, it is necessary to detect how the filter is functioning and how to adjust it if necessary, but first we consider the tuned LKF.

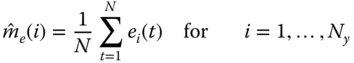

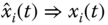

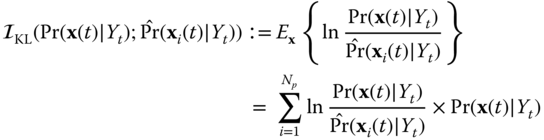

When the processor is “tuned,” it provides an optimal or minimum (error) variance estimate of the state. The innovations sequence, which was instrumental in deriving the processor, also provides the starting point to check the KF operation. A necessary and sufficient condition for a Kalman filter to be optimal is that the innovations sequence is zero‐mean and white (see Ref. 8 for the proof). These are the first properties that must be evaluated to ensure that the processor is operating properly. If we assume that the innovations sequence is ergodic and Gaussian, then we can use the sample mean as the test statistic to estimate, ![]() , the population mean. The sample mean for the

, the population mean. The sample mean for the ![]() th component of

th component of ![]() is given by

is given by

where ![]() and

and ![]() is the number of data samples. We perform a statistical hypothesis test to “decide” if the innovations mean is zero 2

. We test that the mean of the

is the number of data samples. We perform a statistical hypothesis test to “decide” if the innovations mean is zero 2

. We test that the mean of the ![]() th component of the innovations vector

th component of the innovations vector ![]() is

is

As our test statistic, we use the sample mean. At the ![]() ‐significance level, the probability of rejecting the null hypothesis

‐significance level, the probability of rejecting the null hypothesis ![]() is given by

is given by

Therefore, the zero‐mean test 2

on each component innovations ![]() is given by

is given by

Under the null hypothesis ![]() , each

, each ![]() is zero. Therefore, at the 5% significance level (

is zero. Therefore, at the 5% significance level (![]() ), we have that the threshold is

), we have that the threshold is

where ![]() is the sample variance (assuming ergodicity) estimated by

is the sample variance (assuming ergodicity) estimated by

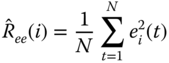

Under the same assumptions, we can perform a whiteness test 2

, that is, check statistically that the innovations covariance corresponds to that of an uncorrelated (white) sequence. Again assuming ergodicity of the innovations sequence, we use the sample covariance function as our test statistic with the ![]() th‐component covariance given by

th‐component covariance given by

We actually use the normalized covariance test statistic

Asymptotically for large ![]() , it can be shown that (see Refs. 2

,9)

, it can be shown that (see Refs. 2

,9)

Therefore, the ![]() confidence interval estimate is

confidence interval estimate is

Hence, under the null hypothesis, ![]() of the

of the ![]() values must lie within this confidence interval, that is, for each component innovations sequence to be considered statistically white. Similar tests can be constructed for the cross‐covariance properties of the innovations 10 as well, that is,

values must lie within this confidence interval, that is, for each component innovations sequence to be considered statistically white. Similar tests can be constructed for the cross‐covariance properties of the innovations 10 as well, that is,

The whiteness test of Eq. (4.38) is very useful for detecting model inaccuracies from individual component innovations. However, for complex systems with a large number of measurement channels, it becomes computationally burdensome to investigate each innovation component‐wise. A statistic capturing all of the innovations information is the weighted sum‐squared residual (WSSR) 9

–11. It aggregates all of the innovations vector information over some finite window of length ![]() . It can be shown that the WSSR is related to a maximum‐likelihood estimate of the normalized innovations variance 2

, 9

. The WSSR test statistic is given by

. It can be shown that the WSSR is related to a maximum‐likelihood estimate of the normalized innovations variance 2

, 9

. The WSSR test statistic is given by

and is based on the hypothesis test

with the WSSR test statistic

Under the null hypothesis, the WSSR is chi‐squared distributed, ![]() . However, for

. However, for ![]() ,

, ![]() is approximately Gaussian

is approximately Gaussian ![]() (see 12 for more details). At the

(see 12 for more details). At the ![]() ‐significance level, the probability of rejecting the null hypothesis is given by

‐significance level, the probability of rejecting the null hypothesis is given by

For a level of significance of ![]() , we have

, we have

Thus, the WSSR can be considered a “whiteness test” of the innovations vector over a finite window of length ![]() . Note that since

. Note that since ![]() are obtained from the state‐space MBP algorithm directly, they can be used for both stationary and nonstationary processes. In fact, in practice, for a large number of measurement components, the WSSR is used to “tune” the filter, and then the component innovations are individually analyzed to detect model mismatches. Also note that the adjustable parameter of the WSSR statistic is the window length

are obtained from the state‐space MBP algorithm directly, they can be used for both stationary and nonstationary processes. In fact, in practice, for a large number of measurement components, the WSSR is used to “tune” the filter, and then the component innovations are individually analyzed to detect model mismatches. Also note that the adjustable parameter of the WSSR statistic is the window length ![]() , which essentially controls the width of the window sliding through the innovations sequence.

, which essentially controls the width of the window sliding through the innovations sequence.

Other sets of “reasonableness” tests can be performed using the covariances estimated by the LKF algorithm and sample variances estimated using Eq. (4.35). The LKF provides estimates of the respective processor covariances ![]() and

and ![]() from the relations given in Table 4.2

. Using sample variance estimators, when the filter reaches steady state, (process is stationary), that is,

from the relations given in Table 4.2

. Using sample variance estimators, when the filter reaches steady state, (process is stationary), that is, ![]() is constant, the estimates can be compared to ensure that they are reasonable. Thus, we have

is constant, the estimates can be compared to ensure that they are reasonable. Thus, we have

Plotting the ![]() and

and ![]() about the component innovations

about the component innovations ![]() and component state estimation errors

and component state estimation errors ![]() , when the true state is known, provides an accurate estimate of the Kalman filter performance especially when simulation is used. If the covariance estimates of the processor are reasonable, then

, when the true state is known, provides an accurate estimate of the Kalman filter performance especially when simulation is used. If the covariance estimates of the processor are reasonable, then ![]() of the sequence samples should lie within the constructed bounds. Violation of these bounds clearly indicates inadequacies in modeling the processor statistics. We summarize these results in Table 4.3 and examine the RC‐circuit design problem in the following example to demonstrate the approach in more detail.

of the sequence samples should lie within the constructed bounds. Violation of these bounds clearly indicates inadequacies in modeling the processor statistics. We summarize these results in Table 4.3 and examine the RC‐circuit design problem in the following example to demonstrate the approach in more detail.

Table 4.3 Statistical tuning tests for Kalman filter.

| Kalman filter design/validation | ||||

| Data | Property | Statistic | Test | Assumptions |

| Innovation |

|

Sample mean | Zero mean | Ergodic, Gaussian |

|

|

Sample covariance | Whiteness | Ergodic, Gaussian | |

|

|

WSSR | Whiteness | Gaussian | |

|

|

Sample cross‐covariance | Cross‐covariance | Ergodic, Gaussian | |

|

|

Sample cross‐covariance | Cross‐covariance | Ergodic, Gaussian | |

| Covariances | Innovation |

Sample variance ( |

|

Ergodic |

| Innovation |

Variance ( |

Confidence interval about |

Ergodic | |

| Estimation error |

Sample variance ( |

|

Ergodic, |

|

| Estimation error |

Variance ( |

Confidence interval about |

|

|

Next we discuss a special case of the KF – a steady‐state Kalman filter that will prove a significant component of the subspace identification algorithms in subsequent chapters.

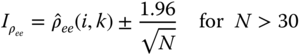

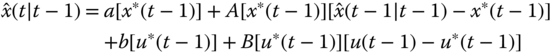

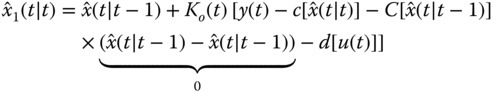

Figure 4.2 LKF design for  ‐circuit problem. (a) Estimated state (voltage) and error. (b) Filtered voltage measurement and error (innovations). (c) WSSR and zero‐mean/whiteness tests.

‐circuit problem. (a) Estimated state (voltage) and error. (b) Filtered voltage measurement and error (innovations). (c) WSSR and zero‐mean/whiteness tests.

4.2.5 Steady‐State Kalman Filter

In this section, we discuss a special case of the state‐space LKF – the steady‐state design. Here the data are assumed stationary, leading to a time‐invariant state‐space model, and under certain conditions a constant error covariance and corresponding gain. We first develop the processor and then show how it is precisely equivalent to the classical Wiener filter design. In filtering jargon, this processor is called the steady‐state Kalman filter 13,14.

We briefly develop the steady‐state KF technique in contrast to the usual recursive time‐step algorithms. By steady state, we mean that the processor has embedded time invariant, state‐space model parameters, that is, the underlying model is defined by a set of parameters, ![]() . We state without proof the fundamental theorem (see 3

, 8

, 14

for details).

. We state without proof the fundamental theorem (see 3

, 8

, 14

for details).

If we have a stationary process implying the time‐invariant system, ![]() , and additionally the system is completely controllable and observable and stable (eigenvalues of A lie within the unit circle) with

, and additionally the system is completely controllable and observable and stable (eigenvalues of A lie within the unit circle) with ![]() , then the KF is asymptotically stable. What this means from a pragmatic point of view is that as time increases (to infinity in the limit), the initial error covariance is forgotten as more and more data are processed and that the computation of

, then the KF is asymptotically stable. What this means from a pragmatic point of view is that as time increases (to infinity in the limit), the initial error covariance is forgotten as more and more data are processed and that the computation of ![]() is computationally stable. Furthermore, these conditions imply that

is computationally stable. Furthermore, these conditions imply that

Therefore, this relation implies that we can define a steady‐state gain associated with this covariance as

Let us construct the corresponding steady‐state KF using the correction equation of the state estimator and the steady‐state gain with

therefore, substituting we obtain

but since

we have by substituting into Eq. (4.47) that

with the corresponding steady‐state gain given by

Examining Eq. (4.49) more closely, we see that using the state‐space model, ![]() , and known input sequence,

, and known input sequence, ![]() , we can process the data and extract the corresponding state estimates. The key to the steady‐state KF is calculating

, we can process the data and extract the corresponding state estimates. The key to the steady‐state KF is calculating ![]() , which, in turn, implies that we must calculate the corresponding steady‐state error covariance. This calculation can be accomplished efficiently by combining the prediction and correction relations of Table 4.1

. We have that

, which, in turn, implies that we must calculate the corresponding steady‐state error covariance. This calculation can be accomplished efficiently by combining the prediction and correction relations of Table 4.1

. We have that

which in steady state becomes

There are a variety of efficient methods to calculate the steady‐state error covariance and gain 8

, 14

; however, a brute‐force technique is simply to run the standard predictor–corrector algorithm implemented in UD‐sequential form 15 until the ![]() and therefore

and therefore ![]() converge to constant matrix. Once they converge, it is necessary to only run the algorithm again to process the data using

converge to constant matrix. Once they converge, it is necessary to only run the algorithm again to process the data using ![]() , and the corresponding steady‐state gain will be calculated directly. This is not the most efficient method to solve the problem, but it clearly does not require the development of a new algorithm.

, and the corresponding steady‐state gain will be calculated directly. This is not the most efficient method to solve the problem, but it clearly does not require the development of a new algorithm.

We summarize the steady‐state KF in Table 4.4. We note from the table that the steady‐state covariance/gain calculations depend on the model parameters, ![]() , not the data, implying that they can be precalculated prior to processing the actual data. In fact, the steady‐state processor can be thought of as a simple (multi‐input/multi‐output) digital filter, which is clear if we abuse the notation slightly to write

, not the data, implying that they can be precalculated prior to processing the actual data. In fact, the steady‐state processor can be thought of as a simple (multi‐input/multi‐output) digital filter, which is clear if we abuse the notation slightly to write

For its inherent simplicity compared to the full time‐varying processor, the steady‐state KF is desirable and adequate in many applications, but realize that it will be suboptimal in some cases during the initial transients of the data. However, if we developed the model from first principles, then the underlying physics has been incorporated in the KF, yielding a big advantage over non‐physics‐based designs. This completes the discussion of the steady‐state KF. Next let us reexamine our ![]() ‐circuit problem.

‐circuit problem.

Table 4.4 Steady‐state Kalman filter algorithm.

| Covariance | |

|

|

(steady‐state covariance) |

| Gain | |

|

|

(steady‐state gain) |

| Correction | |

|

|

|

| (state estimate) | |

| Initial Conditions | |

|

|

|

This completes the example. Next we investigate the steady‐state KF and its relation to the Wiener filter.

4.2.6 Kalman Filter/Wiener Filter Equivalence

In this subsection, we show the relationship between the Wiener filter and its state‐space counterpart, the Kalman filter. Detailed proofs of these relations are available for both the continuous and discrete cases 13 . Our approach is to state the Wiener solution and then show that the steady‐state Kalman filter provides a solution with all the necessary properties. We use frequency‐domain techniques to show the equivalence. We choose the frequency domain for historical reasons since the classical Wiener solution has more intuitive appeal. The time‐domain approach will use the batch innovations solution discussed earlier.

The Wiener filter solution in the frequency domain can be solved by spectral factorization 8 since

where ![]() has all its poles and zeros within the unit circle. The classical approach to Wiener filtering can be accomplished in the frequency domain by factoring the power spectral density (PSD) of the measurement sequence; that is,

has all its poles and zeros within the unit circle. The classical approach to Wiener filtering can be accomplished in the frequency domain by factoring the power spectral density (PSD) of the measurement sequence; that is,

The factorization is unique, stable, and minimum phase (see 8 for proof).

Next we show that the steady‐state Kalman filter or the innovations model (ignoring the deterministic input) given by

where ![]() is the zero‐mean, white innovations with covariance

is the zero‐mean, white innovations with covariance ![]() , is stable and minimum phase and, therefore, in fact, the Wiener solution. The “transfer function” of the innovations model is defined as

, is stable and minimum phase and, therefore, in fact, the Wiener solution. The “transfer function” of the innovations model is defined as

and the corresponding measurement PSD is

Using the linear system relations of Chapter 2, we see that

Thus, the measurement PSD is given in terms of the innovations model as

Since ![]() and

and ![]() , then the following (Cholesky) factorization always exists as

, then the following (Cholesky) factorization always exists as

Substituting these factors into Eq. (4.60) gives

Combining like terms enables ![]() to be written in terms of its spectral factors as

to be written in terms of its spectral factors as

or simply

which shows that the innovations model indeed admits a spectral factorization of the type desired. To show that ![]() is the unique, stable, minimum‐phase spectral factor, it is necessary to show that

is the unique, stable, minimum‐phase spectral factor, it is necessary to show that ![]() has all its poles within the unit circle (stable). It has been shown (e.g. 4

, 8

,16) that

has all its poles within the unit circle (stable). It has been shown (e.g. 4

, 8

,16) that ![]() does satisfy these constraints.

does satisfy these constraints.

This completes the discussion on the equivalence of the steady‐state Kalman filter and the Wiener filter. Next we consider the development of nonlinear processors.

4.3 Nonlinear State‐Space Model‐Based Processors

In this section, we develop a suite of recursive, nonlinear state‐space MBPs. We start by using the linearized perturbation model of Section 3.8 and the development of the linear MBP of Section 4.2 to motivate the development of the LZKF. This processor is important in its own right, if a solid reference trajectory is available (e.g. roadmap for a self‐driving vehicle). From this processor, it is possible to easily understand the motivation for the “ad hoc” EKF that follows. Here we see that the reference trajectory is replaced by the most available state estimate. It is interesting to see how the extended filter evolves directly from this relationship. Next we discuss what has become a very popular and robust solution to this nonlinear state estimation problem – the so‐called UKF. Here a statistical linearization replaces the state‐space model linearization approach. Finally, we briefly develop the purely Bayesian approach to solve the nonlinear state estimation problem for both non‐Gaussian and multimodal posterior distribution estimation problems followed by a 2D‐tracking case study completing this chapter.

4.3.1 Nonlinear Model‐Based Processor: Linearized Kalman Filter

In this section, we develop an approximate solution to the nonlinear processing problem involving the linearization of the nonlinear process model about a “known” reference trajectory followed by the development of a MBP based on the underlying linearized state‐space model. Many processes in practice are nonlinear rather than linear. Coupling the nonlinearities with noisy data makes the signal processing problem a challenging one. Here we limit our discussion to discrete nonlinear systems. Continuous‐time solutions to this problem are developed in 3 – 6 , 8 13– 16 .

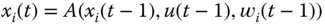

Recall from Chapter 3 that our process is characterized by a set of nonlinear stochastic vector difference equations in state‐space form as

with the corresponding measurement model

where ![]() ,

, ![]() ,

, ![]() ,

, ![]() are nonlinear vector functions of

are nonlinear vector functions of ![]() ,

, ![]() , and

, and ![]() with

with ![]() ,

, ![]() and

and ![]() ,

, ![]() .

.

In Section 3.8, we linearized a deterministic nonlinear model using a first‐order Taylor series expansion for the functions, ![]() ,

, ![]() , and

, and ![]() and developed a linearized Gauss–Markov perturbation model valid for small deviations given by

and developed a linearized Gauss–Markov perturbation model valid for small deviations given by

with ![]() ,

, ![]() , and

, and ![]() the corresponding Jacobian matrices and

the corresponding Jacobian matrices and ![]() ,

, ![]() zero‐mean, Gaussian.

zero‐mean, Gaussian.

We used linearization techniques to approximate the statistics of Eqs. (4.65) and (4.66) and summarized these results in an “approximate” Gauss–Markov model of Table 3.3. Using this perturbation model, we will now incorporate it to construct a processor that embeds the (![]() ) Jacobian linearized about the reference trajectory [

) Jacobian linearized about the reference trajectory [![]() ]. Each of the Jacobians is deterministic and time‐varying, since they are updated at each time‐step. Replacing the

]. Each of the Jacobians is deterministic and time‐varying, since they are updated at each time‐step. Replacing the ![]() matrices and

matrices and ![]() in Table 4.1

, respectively, by the Jacobians and

in Table 4.1

, respectively, by the Jacobians and ![]() , we obtain the estimated state perturbation

, we obtain the estimated state perturbation

For the Bayesian estimation problem, we are interested in the state estimate ![]() not in its deviation

not in its deviation ![]() . From the definition of the perturbation defined in Section 4.8, we have

. From the definition of the perturbation defined in Section 4.8, we have

where the reference trajectory ![]() was defined as

was defined as

Substituting this relation along with Eq. (4.68) into Eq. (4.69) gives

The corresponding perturbed innovations can also be found directly

where reference measurement ![]() is defined as

is defined as

Using the linear KF with deterministic Jacobian matrices results in

and using this relation and Eq. 4.73 for the reference measurement, we have

Therefore, it follows that the innovations is

The updated estimate is easily found by substituting Eq. ( 4.69 ) to obtain

which yields the identical update equation of Table 4.1

. Since the state perturbation estimation error is identical to the state estimation error, the corresponding error covariance is given by ![]() and, therefore,

and, therefore,

The gain is just a function of the measurement linearization, ![]() completing the algorithm. We summarize the discrete LZKF in Table 4.5.

completing the algorithm. We summarize the discrete LZKF in Table 4.5.

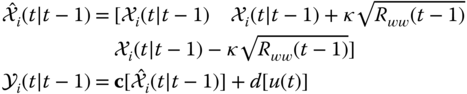

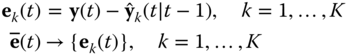

Table 4.5 Linearized Kalman filter (LZKF) algorithm.

| Prediction | |

|

|

|

|

|

|

| (State Prediction) | |

|

|

|

| (Covariance Prediction) | |

| Innovation | |

|

|

|

| (Innovations) | |

|

|

(Innovations Covariance) |

| Gain | |

|

|

(Gain or Weight) |

| Update | |

|

|

(State Update) |

|

|

(Covariance Update) |

| Initial conditions | |

|

|

|

| Jacobians | |

|

|

|

|

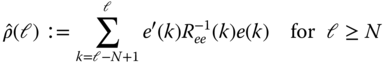

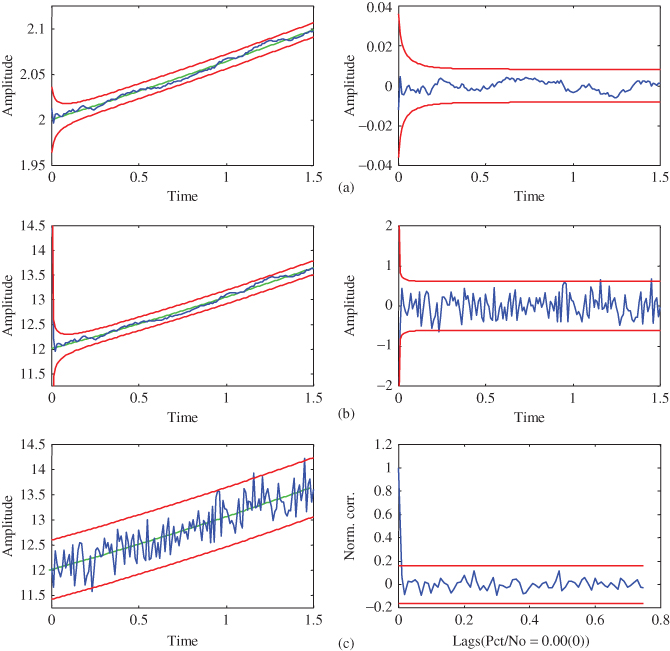

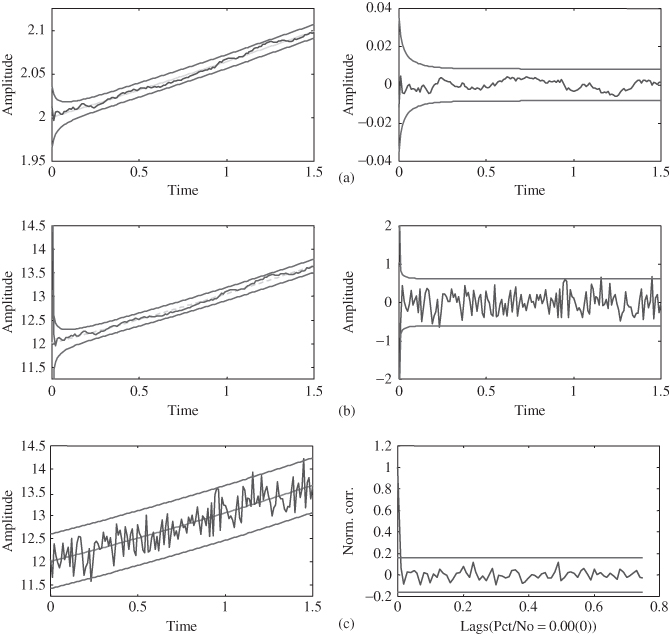

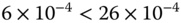

Figure 4.3 Nonlinear trajectory estimation: linearized Kalman filter (LZKF). (a) Estimated state ( out) and error (

out) and error ( out). (b) Filtered measurement (

out). (b) Filtered measurement ( out) and error (innovations) (

out) and error (innovations) ( out). (c) Simulated measurement and zero‐mean/whiteness test (

out). (c) Simulated measurement and zero‐mean/whiteness test ( and

and  out.

out.

4.3.2 Nonlinear Model‐Based Processor: Extended Kalman Filter

In this section, we develop the EKF. The EKF is ad hoc in nature, but has become one of the workhorses of (approximate) nonlinear filtering 3

– 6

, 8

13

–17 more recently being replaced by the UKF 18. It has found applicability in a wide variety of applications such as tracking 19, navigation 3

, 13

, chemical processing 20, ocean acoustics 21, seismology 22 (for further applications see 23). The EKF evolves directly from the linearized processor of Section 4.3.1 in which the reference state, ![]() , used in the linearization process is replaced with the most recently available state estimate,

, used in the linearization process is replaced with the most recently available state estimate, ![]() – this is the step that makes the processor ad hoc. However, we must realize that the Jacobians used in the linearization process are deterministic (but time‐varying) when a reference or perturbation trajectory is used. However, using the current state estimate is an approximation to the conditional mean, which is random, making these associated Jacobians and subsequent relations random. Therefore, although popularly ignored, most EKF designs should be based on ensemble operations to obtain reasonable estimates of the underlying statistics.

– this is the step that makes the processor ad hoc. However, we must realize that the Jacobians used in the linearization process are deterministic (but time‐varying) when a reference or perturbation trajectory is used. However, using the current state estimate is an approximation to the conditional mean, which is random, making these associated Jacobians and subsequent relations random. Therefore, although popularly ignored, most EKF designs should be based on ensemble operations to obtain reasonable estimates of the underlying statistics.

With this in mind, we develop the processor directly from the LZKF. If, instead of using the reference trajectory, we choose to linearize about each new state estimate as soon as it becomes available, then the EKF algorithm results. The reason for choosing to linearize about this estimate is that it represents the best information we have about the state and therefore most likely results in a better reference trajectory (state estimate). As a consequence, large initial estimation errors do not propagate; therefore, linearity assumptions are less likely to be violated. Thus, if we choose to use the current estimate ![]() , where

, where ![]() is

is ![]() or

or ![]() , to linearize about instead of the reference trajectory

, to linearize about instead of the reference trajectory ![]() , then the EKF evolves. That is, let

, then the EKF evolves. That is, let

Then, for instance, when ![]() , the predicted perturbation is

, the predicted perturbation is

Thus, it follows immediately that when ![]() , then

, then ![]() as well.

as well.

Substituting the current estimate, either prediction or update into the LZKF algorithm, it is easy to see that each of the difference terms ![]() is null, resulting in the EKF algorithm. That is, examining the prediction phase of the linearized algorithm, substituting the current available updated estimate,

is null, resulting in the EKF algorithm. That is, examining the prediction phase of the linearized algorithm, substituting the current available updated estimate, ![]() , for the reference and using the fact that (

, for the reference and using the fact that (![]() ), we have

), we have

yielding the prediction of the EKF

Now with the predicted estimate available, substituting it for the reference in Eq. (4.76) gives the innovations sequence as

where we have the new predicted or filtered measurement expression

The updated state estimate is easily obtained by substituting the predicted estimate for the reference (![]() ) in Eq. (4.77)

) in Eq. (4.77)

The covariance and gain equations are identical to those in Table 4.5

, but with the Jacobian matrices ![]() ,

, ![]() ,

, ![]() , and

, and ![]() linearized about the predicted state estimate,

linearized about the predicted state estimate, ![]() . Thus, we obtain the discrete EKF algorithm summarized in Table 4.6. Note that the covariance matrices,

. Thus, we obtain the discrete EKF algorithm summarized in Table 4.6. Note that the covariance matrices, ![]() , and the gain,

, and the gain, ![]() , are now functions of the current state estimate, which is the approximate conditional mean estimate and therefore is a single realization of a stochastic process. Thus, ensemble Monte Carlo

(MC) techniques should be used to evaluate estimator performance. That is, for new initial conditions selected by a Gaussian random number generator (either

, are now functions of the current state estimate, which is the approximate conditional mean estimate and therefore is a single realization of a stochastic process. Thus, ensemble Monte Carlo

(MC) techniques should be used to evaluate estimator performance. That is, for new initial conditions selected by a Gaussian random number generator (either ![]() or

or ![]() ), the algorithm is executed generating a set of estimates, which should be averaged over the entire ensemble using this approach to get an “expected” state, etc. Note also in practice that this algorithm is usually implemented using sequential processing and UD (upper diagonal/square root) factorization techniques (see 15

for details).

), the algorithm is executed generating a set of estimates, which should be averaged over the entire ensemble using this approach to get an “expected” state, etc. Note also in practice that this algorithm is usually implemented using sequential processing and UD (upper diagonal/square root) factorization techniques (see 15

for details).

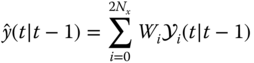

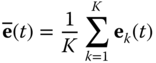

Table 4.6 Extended Kalman filter (EKF) algorithm.

| Prediction | |

|

|

(State Prediction) |

|

|

|

| (Covariance Prediction) | |

| Innovation | |

|

|

(Innovations) |

|

|

(Innovations Covariance) |

| Gain | |

|

|

(Gain or Weight) |

| Update | |

|

|

(State Update) |

|

|

(Covariance Update) |

| Initial conditions | |

|

|

|

| Jacobians | |

|

|

|

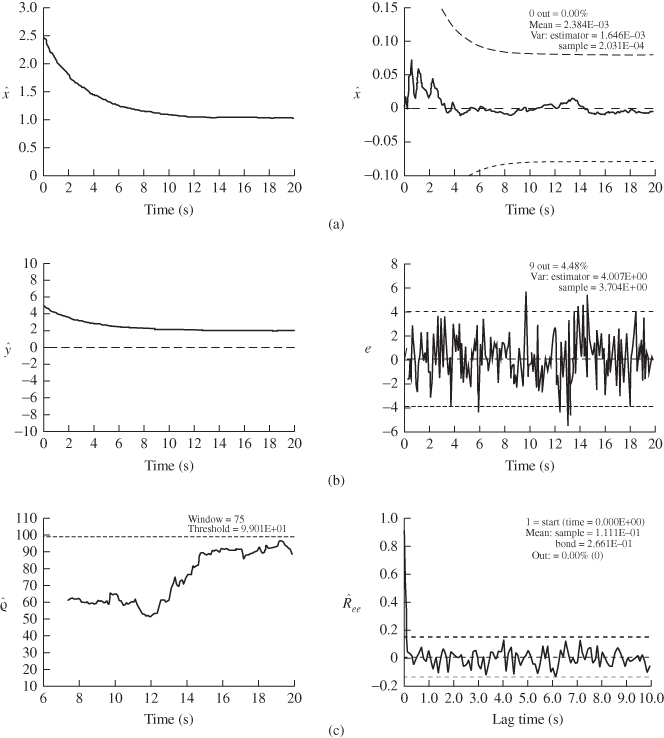

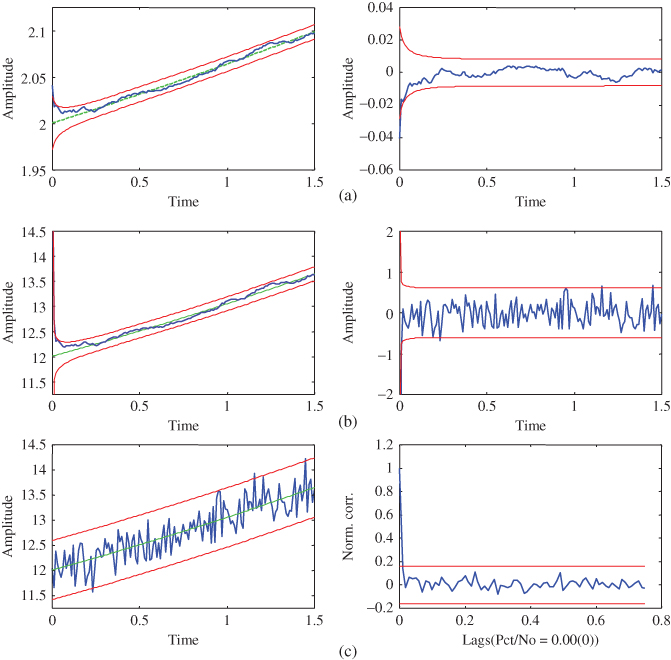

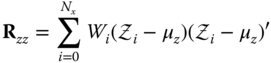

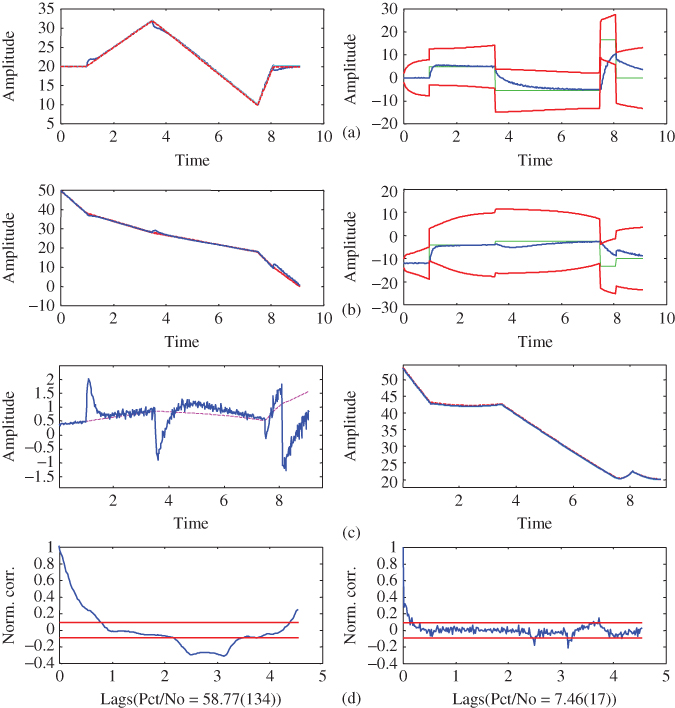

Figure 4.4 Nonlinear trajectory estimation: extended Kalman filter (EKF). (a) Estimated state ( out) and error (

out) and error ( out). (b) Filtered measurement (

out). (b) Filtered measurement ( out) and error (innovations) (

out) and error (innovations) ( out). (c) Simulated measurement and zero‐mean/whiteness test (

out). (c) Simulated measurement and zero‐mean/whiteness test ( and

and  out).

out).

4.3.3 Nonlinear Model‐Based Processor: Iterated–Extended Kalman Filter

In this section, we discuss an extension of the EKF to the IEKF. This development from the Bayesian perspective coupled to numerical optimization is complex and can be found in 2

. Here we first heuristically motivate the technique and then apply it. This algorithm is based on performing “local” iterations (not global) at a point in time, ![]() to improve the reference trajectory and therefore the underlying estimate in the presence of significant measurement nonlinearities 3

. A local iteration implies that the inherent recursive structure of the processor is retained providing updated estimates as the new measurements are made available.

to improve the reference trajectory and therefore the underlying estimate in the presence of significant measurement nonlinearities 3

. A local iteration implies that the inherent recursive structure of the processor is retained providing updated estimates as the new measurements are made available.

To develop the iterated–extended processor, we start with the linearized processor update relation substituting the “linearized” innovations of Eq. ( 4.76 ) of the LZKF, that is,

where we have explicitly shown the dependence of the gain on the reference trajectory, ![]() through the measurement Jacobian. The EKF algorithm linearizes about the most currently available estimate,

through the measurement Jacobian. The EKF algorithm linearizes about the most currently available estimate, ![]() and

and ![]() in this case. Theoretically, the updated estimate,

in this case. Theoretically, the updated estimate, ![]() , is a better estimate and closer to the true trajectory. Suppose we continue and relinearize about

, is a better estimate and closer to the true trajectory. Suppose we continue and relinearize about ![]() when it becomes available and then recompute the corrected estimate and so on. That is, define the

when it becomes available and then recompute the corrected estimate and so on. That is, define the ![]() th iterated estimate as

th iterated estimate as ![]() , then the corrected or updated iterator equation becomes

, then the corrected or updated iterator equation becomes

Now if we start with the 0th iterate as the predicted estimate, that is, ![]() , then the EKF results for

, then the EKF results for ![]() . Clearly, the corrected estimate in this iteration is given by

. Clearly, the corrected estimate in this iteration is given by

where the last term in this expression is null leaving the usual innovations. Also note that the gain is reevaluated on each iteration as are the measurement function and Jacobian. The iterations continue until there is little difference in consecutive iterates. The last iterate is taken as the updated estimate. The complete (updated) iterative loop is given by

A typical stopping rule is

The IEKF algorithm is summarized in Table 4.7. It is useful in reducing the measurement function nonlinearity approximation errors, improving processor performance. It is designed for measurement nonlinearities and does not improve the previous reference trajectory, but it will improve the subsequent one.

Table 4.7 Iterated–extended Kalman filter (IEKF) algorithm.

| Prediction | |

|

|

(State Prediction) |

|

|

|

| (Covariance Prediction) | |

|

LOOP: |

|

| Innovation | |

|

|

(Innovations) |

|

|

(Innovations Covariance) |

| Gain | |

|

|

(Gain or Weight) |

| Update | |

|

|

(State Update) |

|

|

(Covariance Update) |

| Initial conditions | |

|

|

|

| Jacobians | |

|

|

|

| Stopping rule | |

|

|

|

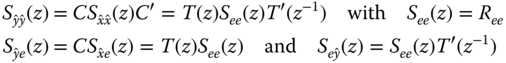

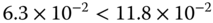

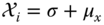

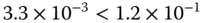

Figure 4.5 Nonlinear trajectory estimation: Iterated–extended Kalman filter (IEKF). (a) Estimated state ( out) and error (

out) and error ( out). (b) Filtered measurement (

out). (b) Filtered measurement ( out) and error (innovations) (

out) and error (innovations) ( out). (c) Simulated measurement and zero‐mean/whiteness test (

out). (c) Simulated measurement and zero‐mean/whiteness test ( and

and  out).

out).

Next we consider a different approach to nonlinear estimation.

4.3.4 Nonlinear Model‐Based Processor: Unscented Kalman Filter

In this section, we discuss an extension of the approximate nonlinear Kalman filter suite of processors that takes a distinctly different approach to the nonlinear Gaussian problem. Instead of attempting to improve on the linearized model approximation in the nonlinear EKF scheme discussed in Section 4.3.1 or increasing the order of the Taylor series approximations 16 , a statistical transformation approach is developed. It is founded on the basic idea that “it is easier to approximate a probability distribution, than to approximate an arbitrary nonlinear transformation function” 18 ,24,25. The nonlinear processors discussed so far are based on linearizing nonlinear (model) functions of the state and input to provide estimates of the underlying statistics (using Jacobians), while the transformation approach is based upon selecting a set of sample points that capture certain properties of the underlying probability distribution. This set of samples is then nonlinearly transformed or propagated to a new space. The statistics of the new samples are then calculated to provide the required estimates. Once this transformation is performed, the resulting processor, the UKF evolves. The UKF is a recursive processor that resolves some of the approximation issues 24 and deficiencies of the EKF of the previous sections. In fact, it has been called “unscented” because the “EKF stinks.” We first develop the idea of nonlinearly transforming the probability distribution. Then apply it to our Gaussian problem, leading to the UKF algorithm. We apply the processor to the previous nonlinear state estimation problem and investigate its performance.

The unscented transformation (UT) is a technique for calculating the statistics of a random variable that has been transformed, establishing the foundation of the processor. A set of samples or sigma‐points are chosen so that they capture the specific properties of the underlying distribution. Each of the ![]() ‐points is nonlinearly transformed to create a set of samples in the new space. The statistics of the transformed samples are then calculated to provide the desired estimates.

‐points is nonlinearly transformed to create a set of samples in the new space. The statistics of the transformed samples are then calculated to provide the desired estimates.

Consider propagating an ![]() ‐dimensional random vector,

‐dimensional random vector, ![]() , through an arbitrary nonlinear transformation

, through an arbitrary nonlinear transformation ![]() to generate a new random vector 24

to generate a new random vector 24

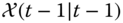

The set of ![]() ‐points,

‐points, ![]() , consists of

, consists of ![]() vectors with appropriate weights,

vectors with appropriate weights, ![]() , given by

, given by ![]() . The weights can be positive or negative, but must satisfy the normalization constraint that

. The weights can be positive or negative, but must satisfy the normalization constraint that

The problem then becomes

GIVEN these ![]() ‐points and the nonlinear transformation

‐points and the nonlinear transformation ![]() , FIND the statistics of the transformed samples,

, FIND the statistics of the transformed samples, ![]()

The UT approach is to

- Nonlinearly transform each point to obtain the set of new

‐points:

‐points:

- Estimate the posterior mean by its weighted average:

- Estimate the posterior covariance by its weighted outer product:

The critical issues to decide are as follows: (i) ![]() , the number of

, the number of ![]() ‐points; (ii)

‐points; (ii) ![]() , the weights assigned to each

, the weights assigned to each ![]() ‐point; and (iii) where the

‐point; and (iii) where the ![]() ‐points are to be located. The

‐points are to be located. The ![]() ‐points should be selected to capture the “most important” properties of the random vector,

‐points should be selected to capture the “most important” properties of the random vector, ![]() . This parameterization captures the mean and covariance information and permits the direct propagation of this information through the arbitrary set of nonlinear functions. Here we accomplish this (approximately) by generating the discrete distribution having the same first and second (and potentially higher) moments where each point is directly transformed. The mean and covariance of the transformed ensemble can then be computed as the estimate of the nonlinear transformation of the original distribution (see 7

for more details).

. This parameterization captures the mean and covariance information and permits the direct propagation of this information through the arbitrary set of nonlinear functions. Here we accomplish this (approximately) by generating the discrete distribution having the same first and second (and potentially higher) moments where each point is directly transformed. The mean and covariance of the transformed ensemble can then be computed as the estimate of the nonlinear transformation of the original distribution (see 7

for more details).

The UKF is a recursive processor developed to eliminate some of the deficiencies (see 7

for more details) created by the failure of the linearization process to first‐order (Taylor series) in solving the state estimation problem. Different from the EKF, the UKF does not approximate the nonlinear process and measurement models, it employs the true nonlinear models and approximates the underlying Gaussian distribution function of the state variable using the UT of the ![]() ‐points. In the UKF, the state is still represented as Gaussian, but it is specified using the minimal set of deterministically selected samples or

‐points. In the UKF, the state is still represented as Gaussian, but it is specified using the minimal set of deterministically selected samples or ![]() ‐points. These points completely capture the true mean and covariance of the Gaussian distribution. When they are propagated through the true nonlinear process, the posterior mean and covariance are accurately captured to the second order for any nonlinearity with errors only introduced in the third‐order and higher order moments as above.

‐points. These points completely capture the true mean and covariance of the Gaussian distribution. When they are propagated through the true nonlinear process, the posterior mean and covariance are accurately captured to the second order for any nonlinearity with errors only introduced in the third‐order and higher order moments as above.

Suppose we are given an ![]() ‐dimensional Gaussian distribution having covariance,

‐dimensional Gaussian distribution having covariance, ![]() , then we can generate a set of

, then we can generate a set of ![]() ‐points having the same sample covariance from the columns (or rows) of the matrices

‐points having the same sample covariance from the columns (or rows) of the matrices ![]() . Here

. Here ![]() is a scaling factor. This set is zero‐mean, but if the original distribution has mean

is a scaling factor. This set is zero‐mean, but if the original distribution has mean ![]() , then simply adding

, then simply adding ![]() to each of the

to each of the ![]() ‐points yields a symmetric set of

‐points yields a symmetric set of ![]() samples having the desired mean and covariance. Since the set is symmetric, its odd central moments are null, so its first three moments are identical to those of the original Gaussian distribution. This is the minimal number of

samples having the desired mean and covariance. Since the set is symmetric, its odd central moments are null, so its first three moments are identical to those of the original Gaussian distribution. This is the minimal number of ![]() ‐points capable of capturing the essential statistical information. The basic UT technique for a multivariate Gaussian distribution 24

is

‐points capable of capturing the essential statistical information. The basic UT technique for a multivariate Gaussian distribution 24

is

- Compute the set of

‐points from the rows or columns of

‐points from the rows or columns of  . Compute

. Compute  .

where

.

where

is a scalar,

is a scalar,  is the

is the  th row or column of the matrix square root of

th row or column of the matrix square root of  and

and  is the weight associated with the

is the weight associated with the  th

th  ‐point.

‐point. - Nonlinearly transform each point to obtain the set of

‐points:

‐points:  .

. - Estimate the posterior mean of the new samples by its weighted average

- Estimate the posterior covariance of the new samples by its weighted outer product

The discrete nonlinear process model is given by

with the corresponding measurement model

for ![]() and

and ![]() . The critical conditional Gaussian distribution for the state variable statistics is the prior 7

. The critical conditional Gaussian distribution for the state variable statistics is the prior 7

and with the eventual measurement statistics specified by

where ![]() and

and ![]() are the respective corrected state and error covariance based on the data up to time

are the respective corrected state and error covariance based on the data up to time ![]() and

and ![]() ,

, ![]() are the predicted measurement and residual covariance. The idea then is to use the “prior” statistics and perform the UT (under Gaussian assumptions) using both the process and nonlinear transformations (models) as specified above to obtain a set of transformed

are the predicted measurement and residual covariance. The idea then is to use the “prior” statistics and perform the UT (under Gaussian assumptions) using both the process and nonlinear transformations (models) as specified above to obtain a set of transformed ![]() ‐points. Then, the selected

‐points. Then, the selected ![]() ‐points for the Gaussian are transformed using the process and measurement models yielding the corresponding set of

‐points for the Gaussian are transformed using the process and measurement models yielding the corresponding set of ![]() ‐points in the new space. The predicted means are weighted sums of the transformed

‐points in the new space. The predicted means are weighted sums of the transformed ![]() ‐points and covariances are merely weighted sums of their mean‐corrected, outer products.

‐points and covariances are merely weighted sums of their mean‐corrected, outer products.

To develop the UKF we must:

- PREDICT the next state and error covariance,

, by UT transforming the prior,

, by UT transforming the prior,  , including the process noise using the

, including the process noise using the  ‐points

‐points  and

and  .

. - PREDICT the measurement and residual covariance,

by using the UT transformed

by using the UT transformed  ‐points

‐points  and performing the weighted sums.

and performing the weighted sums. - PREDICT the cross‐covariance,

in order to calculate the corresponding gain for the subsequent correction step.

in order to calculate the corresponding gain for the subsequent correction step.

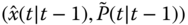

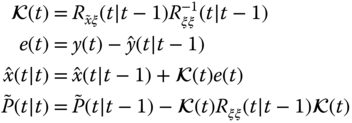

We use these steps as our road map to develop the UKF. The ![]() ‐points for the UT transformation of the “prior” state information is specified with

‐points for the UT transformation of the “prior” state information is specified with ![]() and

and ![]() ; therefore, we have the UKF algorithm given by

; therefore, we have the UKF algorithm given by

- Select the

‐points as:

‐points as:

- Transform (UT) process model:

- Estimate the posterior predicted (state) mean by:

- Estimate the posterior predicted (state) residual and error covariance by:

- Transform (UT) measurement (model) with augmented

‐points to account for process noise as:

‐points to account for process noise as:

- Estimate the predicted measurement as:

- Estimate the predicted residual and covariance as:

- Estimate the predicted cross‐covariance as:

Clearly with these vectors and matrices available the corresponding gain and update equations follow immediately as

We note that there are no Jacobians calculated and the nonlinear models are employed directly to transform the ![]() ‐points to the new space. Also in the original problem definition, both process and noise sources

‐points to the new space. Also in the original problem definition, both process and noise sources ![]() were assumed additive, but not necessary. For more details of the general process, see the following Refs. 26–28. We summarize the UKF algorithm in Table 4.8.

were assumed additive, but not necessary. For more details of the general process, see the following Refs. 26–28. We summarize the UKF algorithm in Table 4.8.

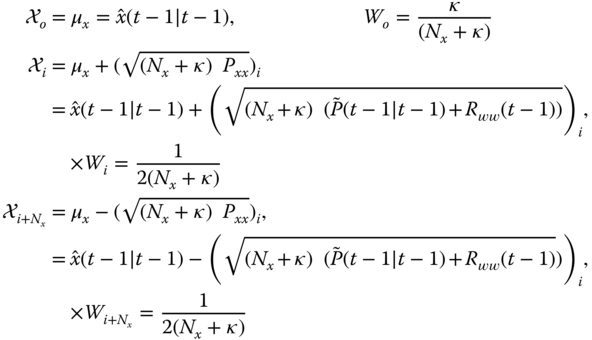

Table 4.8 Discrete unscented Kalman filter (UKF) algorithm.

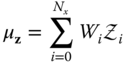

| State: σ‐points and weights |

|

|

|

|

|

|

| State prediction |

|

|

|

|

| State error prediction |

|

|

| Measurement: |

| Measurement prediction |

|

|

| Residual prediction |

|

|

|

|

| Gain |

|

|

|

|

| State update |

|

|

|

|

| Initial conditions |

|

|

Before we conclude this discussion, let us apply the UKF to the nonlinear trajectory estimation problem and compare its performance to the other nonlinear processors discussed previously.

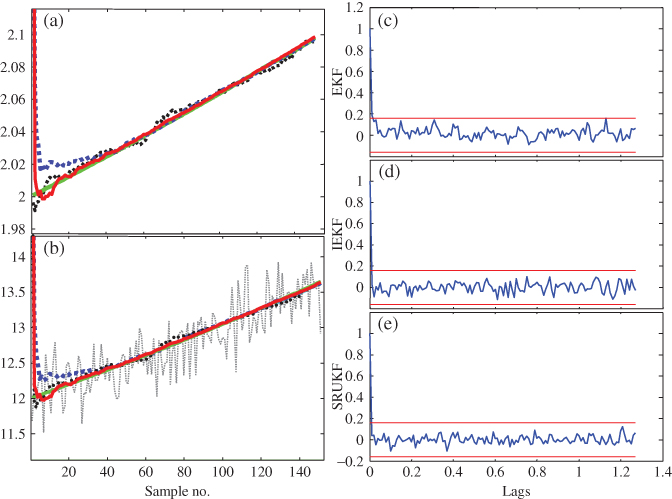

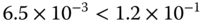

Figure 4.6 Nonlinear trajectory estimation: unscented Kalman filter (UKF). (a) Trajectory (state) estimates using the EKF (thick dotted), IEKF (thin dotted), and UKF (thick solid). (b) Filtered measurement estimates using the EKF (thick dotted), IEKF (thin dotted), and UKF (thick solid). (c) Zero‐mean/whiteness tests for EKF: ( out). (d) Zero‐mean/whiteness tests for IEKF: (

out). (d) Zero‐mean/whiteness tests for IEKF: ( out). (e) Zero‐mean/whiteness tests for UKF: (

out). (e) Zero‐mean/whiteness tests for UKF: ( out).

out).

4.3.5 Practical Nonlinear Model‐Based Processor Design: Performance Analysis

The suite of nonlinear processor designs was primarily developed based on Gaussian assumptions. In the linear Kalman filter case, a necessary and sufficient condition for optimality of the filter is that the corresponding innovations or residual sequence must be zero‐mean and white (see Section 4.2 for details). In lieu of this constraint, a variety of statistical tests (whiteness, uncorrelated inputs, etc.) were developed evolving from this known property. When the linear Kalman filter was “extended” to the nonlinear case, the same tests can be performed based on approximate Gaussian assumptions. Clearly, when noise is additive Gaussian, these arguments can still be applied for improved design and performance evaluation.