Chapter Two

A Fire Upon the Deep1

In his much acclaimed book, Sapiens,2 Yuval Harari points out that humans are a social species by design as much as by choice; in other words, social behavior is actually written into our biology. Harari suggests that the taming of fire was the trigger that would eventually lead to the development of language, cognition and social skills. Fire allowed early humans to survive in unfriendly environments. Being able to cook food instead of eating it raw meant fewer infections and less time spent eating and digesting. Fire gave us control over our surroundings. Armed with fire, even the most fragile members of the tribe could burn down a whole forest in no time and, once the fire had died down, could harvest large amounts of everything from torched animals to nuts and kernels.

Being able to eat more in less time and gaining access to more opportunity is believed to have slowly changed our biology over a period of about one hundred thousand years. We no longer needed a Herculean muscle mass and sharp teeth just to get through an ordinary day in the jungle or forest. This freed up nutritional resources that were better invested in the power of cognition. Gradually, we became more agile and flexible creatures with bigger brains.

Michael Tomasello, Esther Herrmann et al. proposed the cultural intelligence hypothesis in 2005,3 a theory that elaborates on how the human brain developed intelligence a lot faster and more effectively than that of any other primate on the planet through a couple of mediating processes that were partially based on culture and the social nature of mankind.

Whereas primates in general have evolved sophisticated social-cognitive skills for better competition and cooperation purposes, humans—being ultra-social—evolved additional skills, which enabled us to create specific cultural groups, each operating with a distinctive set of artifacts, symbols, social practices and institutions. To function effectively within the cultural context, human children must learn to use these artifacts and tools through more or less formalized learning processes.

This requires special social-cognitive skills of learning, communication and bonding, and this especially powerful and early use of social-cultural cognition is proposed to serve as a kind of “bootstrap” or mediating process for the distinctively complex development of human cognition in general. This is yet another reason why the human brain is highly susceptible to anything that triggers our socially wired brain patterns.

Developing Bigger Brains Was an Evolutionary Gamble

There was a trade-off to getting a bigger brain. On one hand, it made us better at hunting, gathering, figuring things out, planning ahead, communicating and organizing ourselves into groups, clans, tribes and eventually societies. But it also meant danger and added risk. Bigger brains meant bigger heads and thus an increased risk of mother and/or child dying during birth. Furthermore, getting that big brain to work properly meant that children needed many years of nurturing before reaching self-sufficiency. In turn, the need arose to organize ways in which children and parents could remain together and safe over long periods of time. Quite a few anthropologists (in particular in the field of biological anthropology) believe that this dependency created “social brains.”4

Our understanding of human evolution is to a large extent based on looking at what our ancestors left behind (including their skulls!). Mapping the modern, conscious human brain is a puzzle game for anthropologists, archaeologists and sociologists trying to understand the thought processes behind the artifacts left behind.5 One thing that most researchers agree on, however, is that symbolic thinking—the ability to imagine things—is crucial to the development of conscious thought and the development of modern humanity. What we do know is that Homo sapiens apparently first appeared in East Africa around 150 000 years ago. Back then, we were a fairly inconsequential species, but around 70 000 years ago an extraordinary cognitive revolution erupted.

It's hard to pinpoint exactly when the modern human brain actually evolved. Is our ability to think and reason a function of possessing language or are there other factors involved? After all, many species of animals have some sort of language. Current anthropological and paleoanthropological6 thinking has identified different factors that when brought together may have been the basis for the emergence of human consciousness and cognition:

- The need to warn or orient other members of your group. For example, green monkeys can warn each other of an approaching lion.7

- The need for sharing information about the internal workings of groups (hierarchies, ranking of groups, social strata) as a means of giving birth to more complex language.

- The ability to imagine things that do not exist. The truly unique feature of human language is not its ability to transmit information about men and lions but to imagine things that do not exist at all; in other words, symbolic thinking.8

In a relatively brief span of time—by most estimates as little as 30 000 to 40 000 years—we Homo sapiens became cognizant, developed language and social skills, increased our life span, started travelling, and made a myriad of inventions (clothing, tools, weapons). We even developed a range of new intellectual concepts such as art, religion and trading. All of this took place because something just as stupendous was going on inside our skulls—the human brain was growing and adapting, getting larger, smarter and much more capable of solving problems, laying plans and using tools.

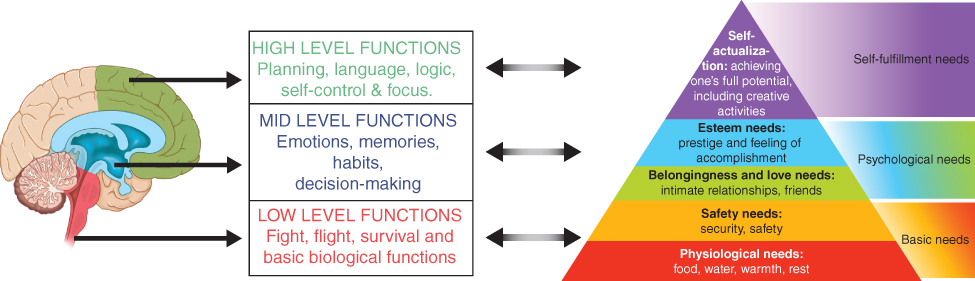

The Three Layers of Your Brain9

To fully appreciate the invasiveness of current brain-hacking techniques, it's helpful to learn a few brain basics. A good place to start is the triune brain model, formulated in the 1960s by neuroscientist Paul D. MacLean.10 Although the model is highly simplified and in some details inaccurate and therefore a bit outdated, it still provides an easy-to-understand approximation of the hierarchy of brain functions. The triune model describes the brain as an inverted pyramid with three tiers, each of which formed as a response to a major evolutionary challenge requiring the brain to cope with increasingly complex demands.

The brain's three main components (the triune) developed independently of each other, starting with the most primitive layer that controls basic, involuntary functions and progressing to the most evolved layer, the one containing most of the functions that distinguish humans from other animals. Although in terms of practical function the relationships among the three tiers of the brain are more complex and nuanced, with areas of overlap, the model gives the essence of brain mechanics.

The nervous system, the brain stem, the deep nuclei and cerebellum came first. These parts of your brain run basic functions such as breathing and heart rate. They also manage features such as balance, motor skills and coordination.

Next came your limbic brain, also known as the mammal brain, which manages emotions and feelings (including anxiety and the fight–flight–freeze response).

While all of this came early, your neocortical brain with its frontal lobes (sometimes also known as your cerebrum) probably came somewhat later in the history of human evolution. This is the part of your brain that enables you to speak, think, solve problems, remember phone numbers and so forth.

In essence, as you move through life, making decisions and checking your mail, driving your car or walking down steps, different neural operating systems are functioning simultaneously. Because these systems are mostly running on autopilot, you normally don't need to worry about them. Beneath the level of consciousness, your lower brain keeps you breathing while your higher brain keeps you in your lane.

The brain is brilliant at spontaneously rearranging its division of labor between layers. Learning is handled by the “newest” part of your brain, the neocortical brain and frontal lobes. But once the task is routine, its required actions are passed on to the cerebellum and deep nuclei where they can run on “autopilot.”

This is why you can have a conversation while driving or practice the guitar while watching a movie. This is obviously a very practical feature of your brain. However, it is also a feature that can be “hacked” by the addictive design techniques embedded in your smartphone or the notification stream of your social media. More about that later in this book.11

So, it's clear that the mammalian brain evolved to such an extent that it (happened to) meet an ever-shifting array of mammalian needs. But what are these needs? Although not without its critics, Maslow's hierarchy of needs is widely accepted as a useful baseline for most discussions about fundamental human necessities.12

Recent research supports Maslow's core premise that we experience a fulfilling and satisfying life when certain needs are met. In 2011, for example, researchers from the University of Illinois led a study13 that put the hierarchy of needs to the test worldwide. The researchers found that humans have specific universal needs and that we feel we have achieved fulfillment when these needs are met. Lead investigator Ed Diener concluded:

Our findings suggest that Maslow's theory is largely correct. In cultures all over the world the fulfilment of his proposed needs correlates with happiness. However, an important departure from Maslow's theory is that we found that a person can report having good social relationships and self-actualization even if their basic needs and safety needs are not completely fulfilled.

In other words, close social ties and intimacy are needs, not accessories.

Interestingly, when the triune brain model and Maslow's hierarchy of needs are put side by side as shown on the opposite page, there seems to be a near-perfect match between the brain's three-tiered functionality and our three categories of needs. The brain's three tiers—the primal brain stem, the psycho-emotional limbic system and the super-egoic neocortex—neatly reflect Maslow's three categories of need.

Your experience of basic needs being met is felt through impulses, reflexes and hormones and regulated mostly below conscious control. The emotional needs, met by the “emotional brain” experiencing a rise in certain hormonal levels, make us feel that we are loved or cared about. Finally, the need for self-fulfillment is in many ways the product of thought processes coming from the high-level functions that allow us to plan ahead and undertake complex analytical thought processes.

One reason that social media has the power to absorb and engage us is that it mimics the fulfillment of many social needs. However, mimicking fulfillment of social needs is not the same as actually fulfilling those needs: digital “fake socialization” fails to deliver a sufficient rise in the brain's level of hormones, needed to create the actual feeling of belonging or being loved. Especially in younger people whose social skills are still being formed, social media mimicking can supplant the necessity of taking the real-life emotional risks necessary to reach intimacy with others, making it even harder to develop the necessary social skills to be used in the physical world.

Moreover, as anyone with about five minutes' experience with digital communications knows, heavy use of texting to communicate feelings in a relationship can go off track quickly and badly. In fact, researchers have found that if you text too much with your partner, there might be less satisfaction in the relationship, especially if you are male. This texting study also concluded that:

There is a large body of research indicating that negative communication can have an absorbing effect in a relationship and ultimately lead to destabilization and eventual dissolution, so setting up a ratio of positives to negatives is a way to counteract those effects. And since verbal and facial cues are an incremental part of communication, and these cues are missing in non-vocal types of communication, such as texting and emailing, partners may misconstrue messages and attribute emotional meaning that is absent, because emotional cues are often intuited from vocal inflection. Clinicians can use this information to encourage clients to be mindful and purposeful about the content of messages sent in romantic relationships, and to be slow to interpret meaning in technology communications.14

In other words: make sure that you don't let heart emojis get in the way of showing real human affection because it simply doesn't fill that need.

As with other potentially addictive or compulsive activities, the context in which you engage with technology can make all the difference. To wit, a 2017 Duke University study published in the journal Current Directions in Psychological Science15 found that college students' experience of technology, be it positive or negative, had less to do with how much they used it than it did with how they used it.

The researchers found that college freshmen were mainly using Facebook to keep in touch with their friends from high school, and the more time they spent online, the less time they had for building new friendships on campus. This inevitably led to increased feelings of loneliness. In contrast, college seniors were using Facebook mainly to communicate with friends on campus. So, the more time they spent online, the more connected they felt. And while there are clear drawbacks to allowing the digital world to become one's primary point of contact with humanity, we are not completely defenseless. One of our best weapons against this mental army of occupation is awareness of our intention.

The fact is, our analog brain isn't wired for the concept of “digital friendships.” Being online does not allow us to read social cues and bond the way we evolved to do in the physical presence of others. It is true that when you engage in social media, the brain ticks off a box in the social relations category. But it's a little like someone whose health relies on a certain medication being given sugar pills by mistake. The placebo effect may carry them along for a while, but if the medication is essential, the body will eventually start to break down. The same is true of what you actually get out of social media interactions.

In essence you are being tricked into thinking you've gotten something you urgently need—but without having actually obtained it. The “it” in this case being nothing less than human connection. It's like trying to water a plant with a picture of water or paying a bill by jangling change.

Digital communication typically doesn't allow you to see, feel or even sense the other person you just interacted with, yet your brain has marked the need to do this down as “accomplished.”

Obviously if you have few or no friends at all, then surely having friends online is better than no friends at all. And of course, it's also true that the kind of social relations that people form online surely can fill some of the need for contact with other humans—however, it's important that we don't forget the obvious: humans need humans. Not by choice, but by evolutionary design. Our powerful psychological and emotional needs are an inherent part of our humanity.

Our hierarchy of needs starts with the elemental substances like water and food. Moving up the pyramid we ascend to the psycho-emotional realm with our need for love and affection. And finally, we wind up with the more sublime concept of “self-actualization,” meaning that we can now set our sights beyond our own immediate need for survival and project our energies into creative, altruistic or more universal considerations.

We can agree on a certain baseline of needs merely to sustain a beating heart: water, a certain number of calories, protection from heat and cold. But step one yard outside the iron circle of strict biological sustenance, and the word “need” becomes very subjective. Whether or not one's needs are being met can be a matter of perception: does one “need” a certain kind of car, a certain amount of space to live in, a gym membership, or for that matter a better smartphone?

Because our sense of “need” is largely conditioned, it can be reconditioned. On the Internet, we will always be convinced of needs we didn't know we had. Et voilà! A new item (on sale!) will suddenly appear to match this need. There is also the encouragement to “share” your purchase online, which means that if your peer group engages in such exhibitionism, you may be persuaded that your lack of the Bird Man Hybrid Jetpack recently acquired by three of your friends (whom you've never met personally) is a tragic injustice.

Facebook has been shown to strongly trigger and reinforce social comparison, a psychological trait that biases you toward perceiving superior traits in others. This naturally evokes negative feelings of inferiority, envy and depression, according to a 2017 review of research on social media usage quoted by the Harvard Business Review.16 Of course, media in general and social media in particular are powerful reinforcers of popular notions about attractiveness and other aspects of the desire realm.

But today's digital user gets it from all sides: glimpses into the “lives” of others that may look more attractive than one's own, followed by a left hook in the form of banner ads and biased search returns that continually shrink our horizons by anticipating and reinforcing our desires.

Other research cited by the Association for Psychological Science has found:

- The more time you spend on Facebook and the more actual strangers you have as Facebook friends, the more likely you are to feel that others have better lives than you do.17

- In daily diary research, more time spent on Facebook was associated with more social comparison, which was in turn associated with higher levels of depression; reversed models that attempted to treat depression as the mediator between Facebook use and social comparison did not fit the data.18

- People who are more likely overall to compare themselves with others are both more likely to use Facebook and more likely to suffer from lower self-esteem after Facebook use.19

- Experimental work also confirms that comparing oneself with superior others' profiles on social network sites can result in greater dissatisfaction with one's achievements.20

While it is true that “self-esteem” is an intangible and that any measurement of it is based on subjective reporting, low self-esteem as well as real or perceived threats to one's self-image are correlated with the amount of cortisol (the stress hormone) present in your body. This brings us to a little talked about part of the brain known as the hypothalamic-pituitary-adrenal, or HPA, axis, which regulates the body's stress response and release of cortisol and other stress hormones.

Researchers have only recently begun to look into the interplay between digital behavior and the HPA axis, but in one study they were able to show that “body shaming” and negative physical social comparisons measurably triggered cortisol response and HPA axis activity in 44 healthy students.21

What's more, it seems likely to emerge that giving young children a free hand with smartphones and tablets could set them up for stress problems later in life. A 2014 study in the journal Psychoneuroendocrinology found that young children who suffer social isolation—which overuse of digital devices can certainly engender—may sustain permanent damage to their HPA axis and experience difficulties coping with stress later in life.22

As noted, research into social media and stress reveals some opposing and sometimes contradictory threads. It's hard to know in what proportions one's relationship to digitalia is a projection of the user or a function of the medium manipulating the user. But it's easy to see how constant engagement, especially for younger people, could reinforce isolation in those who already have a tendency toward it.

Children whose self-image is still being formed and who may suffer from normal transitional insecurities are vulnerable for obvious reasons; Facebook use has indeed been shown both to enhance healthy social habits and to exaggerate bad ones. But it's one thing to solidify one's sense of self on a school playground and quite another to do it amidst an onslaught of wishfully constructed personality profiles in a never-ending pageant of faux self-satisfaction.

According to social self-preservation theory, the HPA axis is a key part of the individual's social self-preservation system, the job of which is to scan the environment for threats to social status or social esteem. Threats trigger both cortisol production and negative thought-streams and emotions.

“Given that Facebook use has been associated with social status and social esteem,” speculates the journal Frontiers in Psychology, “it is possible that Facebook use could be viewed as a threat to self-preservation and may induce similar physiological effects.”23

This comment was in relation to a study in which participants were asked to engage with their own Facebook profile immediately after being dealt an acute social stressor. These people experienced “significantly higher levels of objective stress compared to control participants (i.e. their recovery from stress was delayed).” The study surmised that the stress was in response to the perception that one's self-preservation was under attack.

It is clear that living with a smartphone as a quasi-extension of self is like having a huge bundle of exposed nerves growing out of your palm; the additional stimuli that you absorb are going to keep your fight or flight response perpetually triggered. The constant self-generated pressure to check calls, notifications and alerts, texts, social media tags and email keeps the adrenal glands in a constant state of agitation. This, along with spikes in cortisol, is a recipe for heart disease and numerous other mortal pathologies. And we're only beginning to contemplate the long-term implications. It is quite possible that we'll see a continuing rise in heart-related problems as these cortisol spikes lead to an avalanche of issues including high blood pressure, increased heart rate and anxiety.

None of this, of course, is going to improve anyone's overall mental performance or sense of well-being.

Prefiltering and Neuroplasticity: Your Adaptable Brain

Every second of the day, your brain is bombarded by a staggering amount of information, a process also known as sensory input. This information stream is so massive that your brain's processing power cannot cope with all of it. To deal with this overflow, the brain has developed two different coping mechanisms: prefiltering and neuroplasticity.

The first term, prefiltering, is an autonomous process that has a couple of important functions. Not only does it filter most of your sensory input without any conscious interaction, it also allows you to group your sensory inputs into larger, meaningful parts to make it easier to process information about the world around you. It's a bit like your own mental overload protection. But it was not designed to protect you against the kind of mind hacking that we'll be discussing later.

The second term, neuroplasticity,24 covers the biological processes that keep your brain centers renewed, allowing some new nerve cells to be created but mostly keeping existing nerve cells active and fully functional25 by continuously revising the connections between nerve cells. Neuroplasticity also enables your brain to create new connections and networks—in essence to rewire itself to an almost unbelievable degree. And as we'll see in later chapters of this book, the businesses that target and resell your attention have a vested interest in exploiting this rewiring capability to get you hooked on their products.

Is Your Consciousness Time-Shifted?

In his book, The User Illusion—Cutting Consciousness Down to Size,27 Tor Nørretranders explains just how little of our own supposed “consciousness” we actually control.28 Our sensory devices (eyes, ears, smell, taste, tactility) generate huge amounts of data. This data first gains entry through the subconscious parts of the brain where the already-mentioned prefiltering takes place and data is processed and sorted, after which only one part in a million is forwarded to your “consciousness.” This separating of the relevant from the irrelevant takes place entirely without any conscious interaction.29

Subconscious processes also play a dominant role in what you would normally consider conscious physical actions. In one famous study by Benjamin Libet,30 subjects were wired with electrodes recording the electrical activity in their brain and then asked to look at a light moving up and down a pole with markers. They were told to bend their finger whenever they wished but to remember where on the pole the light was when they made the decision to bend their finger. Surprisingly, the experiment showed that electrical activity in the cortical areas of the brain began approximately half a second before the subjects watching the timer believed they had decided to trigger the movement.

This half-second delay, the “hesitation of consciousness,” gave rise to fierce discussion among researchers focusing on consciousness and neuroscience. After all, if a touch on the skin is felt with virtually no delay, the claim that a time lapse occurs when you bend your finger didn't seem logical. However, this delay has since been further supported by electrical brain stimulation experiments during open skull operations and it is now clear that something is going on “under the hood,” before we realize it “ourselves.” Subconscious parts of your brain may already be in the process of acting before “you” become aware of it consciously.

According to Nørretranders, the explanation for this strange discrepancy is as spectacular as it is counter-intuitive: he, together with other researchers such as Libet, claims that everything we experience with our consciousness is an illusion. It's an illusion that has arisen because our subconscious brain doesn't just summarize our sensory input but also time-shifts it to make experienced sensation fit our conscious experience. The subconscious part of the brain censors and sequences reality for us, and what seems to our consciousness to be going on in “real time” is in reality time-shifted by about half a second by subconscious processes in order to keep your consciousness running in sync with your sensory input. Another, less deterministic way to view our consciousness would be to claim that every conscious thought starts as a subconscious signal, and that the generation of consciousness is the endpoint of cogitation that enables us to communicate our experiences to others and to learn from them ourselves.

Not everyone agrees with the idea of the “hesitation of consciousness” and the claim has been made that the subjects in the Libet study were not unaware of the task, even though they were free to choose a time to move. The task is foremost in their consciousness and constantly activates a series of possible neuronal actions, only one of which is recorded when they finally choose to act.

However, regardless of whether the “hesitation of consciousness” theory is right or not, there is little doubt that the subconscious processing and filtering function of our brains provides an explanation as to why our consciousness is so relatively easily won over by technologies that constantly seek our attention.31

To Be or Not to Be Conscious

The American philosopher and psychologist William James suggested32 that people generally possess two types of “thinking”: associative and reason-based. Associative thinking is reactive and based on past experiences while reason-based thinking is suited to situations where you lack experience and must reason your way to a decision. In his book Thinking, Fast and Slow, Nobel Memorial Prize-winning psychologist and economist Daniel Kahneman33 describes, drawing on his work with his associate Amos Tversky, how we use these two different types of thought processes or systems when making decisions.34 Kahneman researched these two systems extensively and has been able to show that your autopilot (what William James called “associative thinking”) makes “shortcuts” into areas where consciousness does not follow.35 Your autopilot is constantly active. It sorts impressions and renders verdicts, but its judgments are based not on reason and analysis but on instincts and associations, and it often “shoots from the hip.”36

For example, imagine that you are given a description of Simone and Bob. Simone is intelligent, diligent, impulsive, critical, stubborn and envious. Bob is envious, stubborn, critical, impulsive, diligent and intelligent. If you are like most people, you will immediately consider Simone more sympathetic than Bob. But the reality is that the two people were described in exactly the same words, just in a different order. This example demonstrates that the order of adjectives colors your overall experience because your fast-moving autopilot bases its judgments on associations and first impressions. The first words are recorded as either positive or negative and then color the rest of the experience.37

According to Kahneman, in contrast to your “autopilot,” the conscious part of your decision-making system is slow and lazy. It requires a lot of energy and discipline to operate. It keeps an eye on the autopilot and reflects on whether your automatic reactions or “gut feelings” are inappropriate or problematic. Its tasks are all characterized by the need for attention and resources, such as analyzing the data on an Excel sheet, making business decisions or value-based choices.

In modern cognition and consciousness research it is noteworthy that the two different thought processes for practical reasons are named the reflective and pre-reflective self-consciousness, indicating that you are always conscious to some extent, but the difference between the two states is whether or not you reflect on what you're conscious about.38

Out of Control

Recently the American professor of psychology Ezequiel Morsella proposed a theory of consciousness in line with Kahneman and Nørretranders but describing consciousness as being far more automated and far less purposeful than previously assumed. Morsella's passive frame theory, based on 10 years of research and testing, suggests that the conscious mind is like an interpreter helping speakers of different languages communicate. “The interpreter presents the information but is not the one making any arguments or acting upon the knowledge that is shared,” Morsella said. “Similarly, the information we perceive in our consciousness is not created by conscious processes, nor is it reacted to by conscious processes. Consciousness is the middle-man, and it doesn't do as much work as you think.”39

According to Morsella, the “free will” that people typically attribute to their conscious mind—the idea that our consciousness acts as “decider” and guides us to a course of action—does not exist. Instead, consciousness only relays information to control “voluntary” action or goal-oriented movement involving the skeletal muscle system.

Another way to understand Morsella's theory is to use the Internet as an analogy. Here you can buy books, book hotel rooms and do thousands of other things. But in reality, the Internet does not do anything on its own. It is the user behind the screen or smartphone who decides what to do. The Internet simply performs the actions it was originally designed and programmed to perform. The concept of “free will” is based on the idea that your “consciousness” determines what it wants to happen and then tries to make it happen. But the truth is actually the reverse, according to Morsella. All of the “planning” preceding a physical action is in fact taken care of by parts of your brain that don't allow any conscious control or input into this process! Because the human mind experiences its own consciousness as a process of sifting through urges, thoughts, feelings and physical actions, people believe that their consciousness is in control of these myriad impulses. But in reality, Morsella argues, consciousness does the same simple task over and over, giving the impression that it is doing more than it actually is. “We have long thought consciousness solved problems and had many moving parts, but it's much more basic and static,” Morsella said. “This theory is very counterintuitive. It goes against our everyday way of thinking.”40

According to Morsella, the reason for this is straightforward. As you know, the newest part of the human brain is just a few hundred thousand years old. As with all other animals, the more primitive part of the brain manages the reflexes and instincts that keep us breathing, tell us to run when we're threatened, tell us that we're hungry or thirsty, or that it's time for a nap. These primal functions are constant and need not burn energy by burdening the higher brain with analysis.

However, as humans became more complex and social beings, as we began to speak, feel, use tools and, in general, interact in a more reasoned way with our world, new brain functions were required, and these manifested as overlays on top of existing brain parts, each improving the species' chances for survival by making us more adaptable to our environments. In other words, the development of what we call “higher brain functions” is in reality just an adaptation. They are simple extensions that the primitive brain has developed in order to carry out required thoughts or actions faster or more easily. However, the heaviest part of the work is done long before “consciousness” and the “higher brain functions” kick in. One of Morsella's explanations for our difficulty in understanding the function of consciousness is that we simply are not conscious enough to understand the explanation or its implications for our behavior.

Is Consciousness a Question of Being in the Spotlight?

Bernard J. Baars, a senior fellow at the Neurosciences Institute in La Jolla, California, offers a different model to help us understand how consciousness acts to match conscious and subconscious processes. His model, global workspace theory,41 can be explained in terms of a “theater metaphor.”

In the “theater of consciousness” a “spotlight of selective attention” shines a bright spot on stage. The bright spot reveals the contents of consciousness, actors moving in and out, making speeches or interacting with each other. The audience is not lit up—it is in the dark (i.e. subconscious) watching the play. Behind the scenes and also in the dark, are the director (executive processes), stage hands, script writers, scene designers and the like. They shape the visible activities in the bright spot but are themselves invisible.42

In other words, consciousness is a constant flow of presence and information but is only available to us as self-understood consciousness when directed onto a particular item or task. Interestingly, both the Morsella and the Baars models are capable of accounting for how information flows between consciousness and subconscious processes: between our new neocortex and our older cerebellum and limbic systems, or, in Kahneman's terms, between “slow” and “fast” thinking.

Models Are Not Reality

The models presented by Nørretranders, Kahneman, Morsella and Baars all deal with the issues of how consciousness manifests and how cognitive processes rely on being able to shift back and forth between different processing modes in the brain. But these models do not represent “truth” as much as they are models conceived by our own limited human brains, and they are designed to explain phenomena we can observe and measure. The best models are “good” because they deliver a high degree of predictability and help explain our observations in a way that supports further investigation.

It's easy to see that frames of reference and models are extremely valuable tools. They help us understand the world around us. Imagine sitting at a wooden table and letting your hands glide over the surface, feeling the smoothness of the grain. But what are you really “feeling” and how are you “interpreting” what you feel or sense? A physicist could argue that the table is just a cloud of loosely connected atoms. Or a quantum physicist could argue that the table you “see” is just a stream of photons that might have resided on the Moon a nanosecond earlier. The point is that the “models” are simply tools for describing phenomena, but they are not the phenomena themselves. The model can be scaled to match a particular point of view. This also applies to the cognitive models presented by Nørretranders, Kahneman, Morsella and Baars. They are merely explanatory models—they represent a way of describing reality, but they are not “reality” as such.

Still, we find them useful for shedding light on what researchers believe is going on inside our heads. What these models show is that there is still much that we do not understand about how we think and what consciousness really is. This understanding should perhaps make us a little more careful about how we use technology and to what extent we allow it to engage cognitive processes that are beyond the reach of our consciousness.

Complicated? It's about to get even worse. The models on cognitive processes and consciousness that we have discussed in this chapter are complex but at least they deal with what we can observe and share and hence discuss and form opinions about. But we are about to dive even deeper into the rabbit hole—into that which we know exists but cannot prove …

The Hard Question of Consciousness

David Chalmers is a philosopher and cognitive scientist and a professor of philosophy at the Australian National University. Known for his definition of the “hard problem of consciousness,” Chalmers claims that the various models of consciousness all deal with “easy questions” but fail to answer the equally crucial but much more difficult question of why and how “phenomenal experiences” are created by our awareness of sensory information. Or as Chalmers phrases it:

Why is it that when our cognitive systems engage in visual and auditory information-processing, we have a visual or auditory experience: the quality of deep blue, the sensation of middle C? How can we explain why there is something it is like to entertain a mental image, or to experience an emotion? It is widely agreed that experience arises from a physical basis, but we have no good explanation of why and how it so arises. Why should physical processing give rise to a rich inner life at all? It seems objectively unreasonable that it should, and yet it does.43

Chalmers claims that consciousness is something more than the biological or sociological rails that provide it, and that the nature of consciousness may not be open to inspection, understanding or modeling. He has characterized his view as “naturalistic dualism”: naturalistic because he believes mental states are caused by physical systems (such as brains); dualist because he believes that the created mental state (e.g. consciousness) is distinct from and not reducible to the physical systems that create it.44 For readers interested in this distinction (and it is fascinating stuff) looking up the term “qualia” is recommended. The Stanford Encyclopedia of Philosophy has a good review of the concept.45

The Social Brain

We humans are extremely social creatures who thrive when surrounded by others. This desire for social inclusion and interpersonal exchange is what made us adapt to social media so avidly and rapidly. Now, barely into our second decade since the advent of social media, evidence is emerging that makes it clearer than ever that our inborn sociability is deeply wired into our brains and this makes us vulnerable to certain appeals based on our sociability. Traveling salesmen have known this since long before the days of the Silk Road, but the man selling vacuum cleaners had neither the science nor the technology to literally hack into your brain.

In the past several years, even more evidence has emerged to show how our brain's architecture supports human evolutionary development, particularly in the form of our complex social behaviors.

The human brain as a whole is much larger than in any other mammal or primate of equivalent size. The brain's outermost layer, the neocortex, is particularly outsized, and that's the dashboard for our sociability. That evolution has allowed us to become social animals tells us that it is a survival trait, as was the development of brains that could reason, solve problems, develop tools, learn math and eventually figure out how to build airplanes, submarines, smartphones, satellites, laptops and so on.46

Although we cannot know for sure, the most likely explanation for the development of the social brain is that it evolved into a bigger brain with more flexibility. Bigger brains mean giving birth to children who need many years of rearing before they are able to survive on their own. The development of socially monogamous pair bonds and the inclusion of paternal care (in contrast to many other species) provide the foundation for a society capable of rearing children over longer periods of time. Developing a “social brain” makes this easier and allows for society to become more complex, more multifaceted, more dynamic and flexible. When parents stay together to raise kids, the risk of predation and infanticide drops, which in turn lifts the whole species' chance for survival. And when parents began long-term parenting, this required the development of new and more complex social skills than are needed by species that are not sociable.

It is almost certain that our collective capacity for social cohesion arose out of the social tools that evolved to support cooperative parenting. Being able to set up and live in complex social environments facilitated the development of larger social groups, extended families, clans and tribes, all of which promoted higher rates of survival and reproduction. And there is a feedback loop here: the more that heightened social interaction helps the species survive and prosper, the more our brains will develop along lines that deliver more and better social capabilities.

As Harari points out in his book Sapiens, our brain's ability to engage in complex social behaviors is the seed of our much larger group affiliations, from having a sense of neighborhood to being citizens of a nation. Our brains are wired to make us feel rewarded when we engage in social interaction and to feel sensations similar to physical pain when socially rejected. In a sense, evolution has provided us with the perfect brain that lets us live in an ever more crowded world—as well as the perfect brain for getting absorbed by smartphones and social media. Its obvious benefits aside, complex social cooperation comes at a price. First, there's the requirement of a long-term parental investment, which for humans continues far beyond childhood. Second, although many social skills can only be learned through peer activities during adolescence, parents still have crucial roles to play, protecting, sheltering and nurturing.

Or as Dr. Pascal Vrticka at the Max Planck Institute for Human Cognitive and Brain Sciences puts it:

An individual's attachment style is a measure for the quality of his/her social bonds with others. It is crucially shaped through interactions with caregivers in early life, such as a child's parents. If others close to a child are responsive and caring, the child develops a secure attachment style. If they are unresponsive or inconsistent in their behaviour towards the child, however, the child develops an insecure, either avoidant or anxious attachment style. Once acquired, the attachment style of a person is believed to remain rather stable throughout the lifespan, and to even be transmitted from one generation to the next. It is therefore likely to circularly influence many of the steps involved in social brain development and skill acquisition during childhood, adolescence, and even adulthood.47