Cinder provides block storage, the equivalent of a LUN, to a virtual machine, which can then format it as it sees fit. We can create logical volumes on these disks or use the block storage in any other way we need.

The Cinder service has a few components that run on the management node and a few that run on the storage node. The following components are part of the Cinder service:

- API

- Scheduler

- Volume

Of these, the first two are installed on the controller node and the volume service is installed on the storage node. The following diagram shows the architecture and the communication pattern of the Cinder components:

The API receives the request from the client (either the Cinder client or an external API call) and passes it on to the scheduler, which then passes it to one of the Cinder volume services.

Each Cinder volume service takes the physical or virtual storage presented to it as an LVM volume group and provisions a part of it as a block storage device, which can be attached to a guest VM on Nova using iSCSI.

We will need the following information to complete the install on the controller node, so let us fill in the following checklist:

| Name | Info |
|---|---|
| Access to the Internet | Yes |
| Proxy needed | No |
| Proxy IP and port | Not applicable |
| Node name | OSControllerNode |
| Node IP address | 172.22.6.95 |
| Node OS | Ubuntu 14.04.1 LTS |
| Cinder DB password | |
| Cinder Keystone password | |

We will create a blank database after logging in to the MySQL (MariaDB) server:

create database cinder;

This will create an empty database called cinder. Let us now set up the Cinder database user credentials:

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'c1nd3rpwd';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'c1nd3rpwd';

This allows the user called cinder, authenticating with our password, to access the cinder database both locally and from other hosts.

We will install three packages on the controller node: the scheduler, the API, and the client package.

We will use the APT package manager to do this by running the following command:

sudo apt-get install cinder-scheduler python-cinderclient cinder-api

Once the installation is complete, we can move on to the next step.

Let us proceed to the configuration tasks. We will need to export the same variables that we exported at the beginning of the chapter. You could use the tip given there to save them in a file and source it.

We will create the user using the keystone command as shown in the following screenshot. The output will show the ID of the user created. Note that the ID generated for you will be different:

keystone user-create --name cinder --pass c1nd3rkeypwd

As the next step, we will add the user to the admin role in the service tenant that we created while installing Glance:

keystone user-role-add --user cinder --tenant service --role admin

This command doesn't provide any output, so if you see nothing, it is indeed good news!

We will create the service in Keystone so that Keystone can publish it to all the services that need it. However, in Cinder, we need to create two services, one each for version 1 and version 2.

keystone service-create --name cinder --type volume --description "OpenStack Block Storage"

keystone service-create --name cinderv2 --type volumev2 --description "OpenStack Block Storage"

You should see the following result:

Note down the IDs of both services, as we will use them to create the endpoints.
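Instead of copying the IDs by hand, they can be picked out of the table that the keystone client prints. The following is a minimal sketch that assumes the usual four-column layout (id, name, type, description) of the service listing; the helper names service_id_for_type and sample_table are hypothetical, and the sample table is hand-written for illustration using the two IDs that appear in this chapter, not captured output.

```shell
#!/bin/sh
# Print the ID of the service whose "type" column equals $1.
# Reads a keystone-style table on stdin. With whitespace splitting the
# fields of a data row are: $1="|", $2=id, $3="|", $4=name, $5="|", $6=type.
service_id_for_type() {
    awk -v wanted="$1" '$6 == wanted { print $2 }'
}

# Hand-written sample in the assumed shape of the service listing.
sample_table() {
    cat <<'EOF'
+----------------------------------+----------+----------+-------------------------+
|                id                |   name   |   type   |       description       |
+----------------------------------+----------+----------+-------------------------+
| a3e71c643105452cb4c8239d98b85245 |  cinder  |  volume  | OpenStack Block Storage |
| d9f4e0983eda4e8ab7a540441d3f5f87 | cinderv2 | volumev2 | OpenStack Block Storage |
+----------------------------------+----------+----------+-------------------------+
EOF
}

sample_table | service_id_for_type volume
sample_table | service_id_for_type volumev2
```

On a live controller the same function could be fed from the client, for example keystone service-list | service_id_for_type volumev2. Comparing the type column exactly, rather than searching for the word volume, keeps the version 1 and version 2 services apart.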

We will need to create two endpoints as well, one per service. At this point, you must know that Cinder uses 8776 as the default port.

keystone endpoint-create --service-id a3e71c643105452cb4c8239d98b85245 --publicurl 'http://OSControllerNode:8776/v1/%(tenant_id)s' --internalurl 'http://OSControllerNode:8776/v1/%(tenant_id)s' --adminurl 'http://OSControllerNode:8776/v1/%(tenant_id)s' --region dataCenterOne

keystone endpoint-create --service-id d9f4e0983eda4e8ab7a540441d3f5f87 --publicurl 'http://OSControllerNode:8776/v2/%(tenant_id)s' --internalurl 'http://OSControllerNode:8776/v2/%(tenant_id)s' --adminurl 'http://OSControllerNode:8776/v2/%(tenant_id)s' --region dataCenterOne

The URLs are enclosed in single quotes because the shell would otherwise try to interpret the parentheses in %(tenant_id)s.

You will notice that the URLs for this are different from the other endpoints so far. This is because it has the tenant ID as a variable, and the URL will be modified during runtime by the client using the endpoint.
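To make the templating concrete, here is a small sketch of how such a URL is built and later resolved. It is only an illustration of the idea: the function names endpoint_template and resolve_endpoint and the tenant ID used are hypothetical, and the real substitution is performed by the client, not by a shell script.

```shell
#!/bin/sh
CONTROLLER=OSControllerNode
PORT=8776          # Cinder's default API port

# Print the endpoint URL template for an API version (v1 or v2).
# "%%" in the printf format produces the literal "%" of "%(tenant_id)s".
endpoint_template() {
    printf 'http://%s:%s/%s/%%(tenant_id)s\n' "$CONTROLLER" "$PORT" "$1"
}

# Mimic what a client does at runtime: replace the placeholder with a
# concrete tenant ID. In sed's basic regex syntax "(" and ")" are literal.
resolve_endpoint() {
    printf '%s\n' "$1" | sed "s/%(tenant_id)s/$2/"
}

for version in v1 v2; do
    endpoint_template "$version"
done

# Hypothetical tenant ID, used only to show the substitution.
resolve_endpoint "$(endpoint_template v2)" 0123456789abcdef
```

The templates are always handled inside quotes here, which is also why the URLs passed to keystone endpoint-create need quoting or escaping on the command line.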

We modify the configuration file located at /etc/cinder/cinder.conf. We will modify three sections:

- [DEFAULT] section

- [database] section

- [keystone_authtoken] section

Note that some sections themselves don't exist in the default configuration shipped with the distro. You should create them and add the options as follows:

- In the [database] section, add the MySQL user credentials:

    connection = mysql://cinder:c1nd3rpwd@OSControllerNode/cinder

- In the [DEFAULT] section, modify the RabbitMQ user credentials and my_ip. The my_ip option is used to allow Cinder to listen on the IP address of the controller node:

    rpc_backend = rabbit
    rabbit_host = OSControllerNode
    rabbit_password = rabb1tmqpass
    auth_strategy = keystone
    my_ip = 172.22.6.95
    verbose = true

- The [keystone_authtoken] section will have the following information:

    auth_uri = http://OSControllerNode:5000/v2.0
    identity_uri = http://OSControllerNode:35357
    admin_tenant_name = service
    admin_user = cinder
    admin_password = c1nd3rkeypwd
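After editing, it is worth confirming that each option ended up in the section it belongs to. The sketch below assembles the three sections in a scratch file and reads individual options back with awk. The helper name get_option is hypothetical, the scratch file stands in for /etc/cinder/cinder.conf, and the first section header is written as [DEFAULT], the spelling used in the packaged file.

```shell
#!/bin/sh
# Scratch copy for illustration; the real file is /etc/cinder/cinder.conf.
conf=$(mktemp)

cat > "$conf" <<'EOF'
[DEFAULT]
rpc_backend = rabbit
rabbit_host = OSControllerNode
rabbit_password = rabb1tmqpass
auth_strategy = keystone
my_ip = 172.22.6.95
verbose = true

[database]
connection = mysql://cinder:c1nd3rpwd@OSControllerNode/cinder

[keystone_authtoken]
auth_uri = http://OSControllerNode:5000/v2.0
identity_uri = http://OSControllerNode:35357
admin_tenant_name = service
admin_user = cinder
admin_password = c1nd3rkeypwd
EOF

# get_option FILE SECTION KEY: print the value of KEY inside [SECTION].
get_option() {
    awk -v section="[$2]" -v key="$3" '
        /^\[/ { in_section = ($0 == section); next }   # track current section
        in_section {
            pos = index($0, "=")
            if (pos == 0) next                         # not an option line
            name  = substr($0, 1, pos - 1)
            value = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", name)          # trim whitespace
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == key) { print value; exit }
        }
    ' "$1"
}

get_option "$conf" DEFAULT my_ip
get_option "$conf" database connection
get_option "$conf" keystone_authtoken admin_user
```

An empty result from get_option means the option is missing or was placed under the wrong section header, which is an easy mistake to make when sections have to be created by hand.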

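The Cinder configuration on the controller node is now complete. If the tables in the cinder database have not been created yet, populate them by running cinder-manage db sync as the cinder user (for example, su -s /bin/sh -c "cinder-manage db sync" cinder). We will then delete the SQLite database that is installed with the Ubuntu packages, as we will not use it: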

rm -f /var/lib/cinder/cinder.sqlite

Let us restart the API and scheduler service to complete this part of the install:

service cinder-scheduler restart

service cinder-api restart

This concludes the first part of the Cinder installation.

In the second part of the installation, we will install the cinder-volume component on the storage node.

As a prerequisite, we will need to ensure the following:

- The storage node's IP address is added to the hosts file of the controller node or to the DNS server

- The storage node has a second hard disk attached to it

As the first step, we will install the LVM tools so that we can create the volume groups:

apt-get install lvm2

The next step in the process is to create a volume group on the second hard disk. If we are doing the install in a production environment, the second hard drive or the other drives will be part of a disk array and will be connected using FC or iSCSI. If we are using a VM for the production deployment, the storage node can be connected to the disks using virtual disks or even raw device mappings.

We should check that the disks are present and can be detected by the storage node. This can be done by executing the following command:

fdisk -l

In our case, you can see that we have sdb and sdc. We are going to use sdb for the Cinder service. We will create a partition on the sdb drive:

fdisk /dev/sdb

Create a new partition by using the menu and entering n for a new partition and p for a primary partition. Choose the defaults for the other options. Finally, enter w to write the changes to the disk and exit fdisk.

The following screenshot demonstrates the result:

As the next step, we will create an LVM physical volume:

pvcreate /dev/sdb1

On that, we will create a volume group called cinder-volumes:

vgcreate cinder-volumes /dev/sdb1

Note

As a best practice, we will add sda and sdb to the filter configuration in the /etc/lvm/lvm.conf file.

The sdb is the LVM physical volume for the volumes used by Cinder and sda is the operating system partition, which is also LVM in our case. However, if you don't have LVM in the operating system volume, then only sdb needs to be added:

filter = [ "a/sda/","a/sdb/","r/.*/" ]

The preceding filter therefore tells LVM to accept devices whose names match sda or sdb and to reject everything else.
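The order of the entries matters, because LVM tries the patterns one after another and the first one that matches a device decides its fate. The following toy re-implementation illustrates that rule. It is a sketch, not LVM's own code: the function name lvm_filter_decision is hypothetical, it only understands patterns delimited by /, and it uses grep's extended regular expressions as a stand-in for LVM's matcher.

```shell
#!/bin/sh
# lvm_filter_decision DEVICE PATTERN...
# Prints "accept" or "reject" for DEVICE under the given filter patterns.
lvm_filter_decision() {
    device=$1
    shift
    for pattern in "$@"; do
        action=${pattern%%/*}     # leading letter: "a" (accept) or "r" (reject)
        regex=${pattern#?/}       # drop the action letter and opening "/"
        regex=${regex%/}          # drop the closing "/"
        if printf '%s\n' "$device" | grep -Eq -- "$regex"; then
            # First matching pattern wins; later patterns are not consulted.
            if [ "$action" = a ]; then echo accept; else echo reject; fi
            return
        fi
    done
    echo accept                   # a device matching no pattern is accepted
}

for dev in /dev/sda1 /dev/sdb1 /dev/sdc; do
    printf '%s: %s\n' "$dev" \
        "$(lvm_filter_decision "$dev" 'a/sda/' 'a/sdb/' 'r/.*/')"
done
```

If the catch-all r/.*/ entry were listed first, every device would be rejected before the accept entries were ever considered, which is why it is placed last.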

Next, we will add host entries to the /etc/hosts file so that OSControllerNode and OSStorageNode know each other's IP addresses.

- On the storage node:

    echo "172.22.6.95 OSControllerNode" >> /etc/hosts

- On the controller node:

    echo "172.22.6.96 OSStorageNode" >> /etc/hosts

After this, verify that both the servers are able to ping each other by name.
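A plain echo with >> appends a new line every time it is run, so repeating the step leaves duplicate entries behind. One way around this, sketched below, is to append only when the name is not yet present. The function name add_host_entry is hypothetical, and the sketch works on a scratch file rather than on /etc/hosts.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears in it as a whole word.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    if ! grep -q -w -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Scratch file for illustration; use /etc/hosts (as root) on a real node.
hosts=$(mktemp)

add_host_entry "$hosts" 172.22.6.95 OSControllerNode
add_host_entry "$hosts" 172.22.6.96 OSStorageNode
add_host_entry "$hosts" 172.22.6.95 OSControllerNode   # repeated call adds nothing

cat "$hosts"
```

The check looks only at the name, so an existing entry with a different IP address is left untouched and would need to be corrected by hand.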

Now, let us look at the checklist for the configuration, presented as follows:

| Name | Info |
|---|---|
| Access to the Internet | Yes |
| Proxy needed | No |
| Proxy IP and port | Not applicable |
| Node name | |
| Node IP address | 172.22.6.96 |
| Node OS | Ubuntu 14.04.1 LTS |
| Cinder DB password | |
| Cinder Keystone password | |
| Rabbit MQ password | |

Before working on this node, ensure that you have added the Juno repository shown in Chapter 2, Authentication and Authorization Using Keystone.

We have already created the Cinder database when we were performing actions on the controller node, so we can directly start with installation of the packages.

We will install the cinder-volume package together with the Python driver for MySQL, as this node will need to access the database on the controller node:

apt-get install cinder-volume python-mysqldb

Ensure that the installation is complete.

We will need to modify the /etc/cinder/cinder.conf file as we did on the controller node. We will modify the following sections:

- [database] section

- [DEFAULT] section

- [keystone_authtoken] section

The configuration is exactly the same as on the controller node, except for the my_ip directive, which in this case holds the IP address of the storage node rather than that of the controller node. The modified configuration will look like this:

    [DEFAULT]
    rpc_backend = rabbit
    rabbit_host = OSControllerNode
    rabbit_password = rabb1tmqpass
    auth_strategy = keystone
    my_ip = 172.22.6.96
    verbose = true

    [database]
    connection = mysql://cinder:c1nd3rpwd@OSControllerNode/cinder

    [keystone_authtoken]
    auth_uri = http://OSControllerNode:5000/v2.0
    identity_uri = http://OSControllerNode:35357
    admin_tenant_name = service
    admin_user = cinder
    admin_password = c1nd3rkeypwd
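Since only one line differs between the two nodes, the storage node's settings can be derived from the controller's rather than typed again. The sketch below shows the idea on an abbreviated stand-in file in a scratch directory; the file names controller.conf and storage.conf are hypothetical and nothing under /etc is touched.

```shell
#!/bin/sh
# Scratch directory so that the real configuration is left alone.
workdir=$(mktemp -d)

# Abbreviated stand-in for the controller's cinder.conf.
cat > "$workdir/controller.conf" <<'EOF'
[DEFAULT]
rabbit_host = OSControllerNode
my_ip = 172.22.6.95

[database]
connection = mysql://cinder:c1nd3rpwd@OSControllerNode/cinder
EOF

# Rewrite only the my_ip line; every other line is copied unchanged.
sed 's/^my_ip *=.*/my_ip = 172.22.6.96/' \
    "$workdir/controller.conf" > "$workdir/storage.conf"

# Show what differs. diff exits non-zero when the files differ, which is
# the expected outcome here, so the exit status is deliberately ignored.
diff "$workdir/controller.conf" "$workdir/storage.conf" || true
```

The diff output should list the my_ip line and nothing else, which doubles as a quick check that no other setting drifted between the nodes.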

To finalize the installation, we will remove the SQLite db that came with the Ubuntu packages, as we will not use it:

rm -f /var/lib/cinder/cinder.sqlite

We will then restart the Linux target framework that controls iSCSI connections and then finally the cinder-volume itself:

service tgt restart

service cinder-volume restart

We have now completed the installation of Cinder.

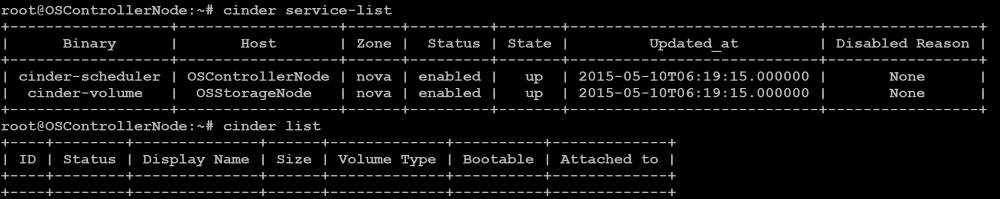

We will perform a simple validation by executing the following commands on the controller node:

- cinder service-list

- cinder list

You should see something like the following:

The cinder service-list command shows all the nodes that take part in the Cinder service. As you can see, our two nodes show up, which is as expected. The cinder list command shows the virtual volumes that have been created (to be connected to the Nova instances), but since we don't have any yet, we don't expect to see any volumes listed there.