![]()

Compression

Oracle Release 11.2 offers Advanced Compression, a way to reduce the overall size of your data by compressing commonly occurring values and strings with tokens, mnemonics that can represent much larger strings and values. Two types of compression are offered on systems not using Exadata-type storage: Basic and OLTP . Basic compression is available without the Advanced Compression licensing. A third compression option, Hybrid Columnar Compression (HCC) , is available on Exadata systems and non-Exadata systems using Exadata-type storage. This is offered in two basic levels: QUERY and ARCHIVE.

These compression options provide space savings, reducing table sizes to almost half the uncompressed size. Of course, such features do not come without a price, and may not behave as you would expect them to. When used with caution and care, storage requirements can be reduced, sometimes significantly. It’s knowing when to use compression and which compression level to implement that makes this a valuable, usable feature.

How Can I Compress Thee

There are three general levels of compression: Basic, OLTP, and Hybrid Columnar Compression (which we will refer to as HCC), available with Exadata. By far, the most aggressive of these is HCC, but it can throw in some surprises for the uninitiated. Also, be aware that only one Advanced Compression option can be in use for a nonpartitioned table; it can be OLTP or HCC but not both. Partitioned tables differ in that each partition can use different compression methods. For example, the current partition, partitioned by date, can use OLTP compression to make updates and inserts less time-consuming; partitions for the next six months can use QUERY LOW compression; and archive partitions (those older than six months) can use ARCHIVE HIGH compression to allow space savings for partitions that are no longer updated.

Basic compression is aptly named; it compresses table data simply and fairly quickly, resulting in considerable space savings:

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

DEPT 65536

SALGRADE 65536

DUMMY 65536

EMP 713031680

SQL> alter table emp move compress;

Table altered.

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

DEPT 65536

SALGRADE 65536

DUMMY 65536

EMP 184549376

To affect the current data, as well as future inserts and updates, we have to move the table and compress it. A simple alter table emp compress ; would have set the table for compression, but the current data would have remained unaffected, providing no immediate space savings. The move allowed Oracle to compress the existing data in the table.

OLTP

OLTP compression is the first of the Advanced Compression options, which require separate licensing to use. Unlike Basic compression, OLTP compression does provide for automatic compression after updates; however, it’s performed in a batch-type operation on a block-by-block basis. It, too, can provide substantial savings on the storage tier:

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

EMP 721420288

DEPT 65536

SALGRADE 65536

DUMMY 65536

SQL> alter table emp move compress for oltp;

Table altered.

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

EMP 201326592

DEPT 65536

SALGRADE 65536

DUMMY 65536

SQL>

The compressed size using OLTP compression is a bit larger than that for Basic compression, but you get the added benefit of eventual recompression after updated blocks are filled. As updated blocks hit the compression threshold, they are compressed using the OLTP algorithm, so that it is not necessary to manually re-compress the table. This does, of course, leave the updated table in a mixed state of compressed and uncompressed blocks, but the uncompressed blocks will return to the compressed state, once the conditions for compression are reached.

Hybrid Columnar Compression (HCC)

Hybrid Columnar Compression is a compression option available only for tables residing on Exadata storage. (As well as Exadata, this includes Axiom, the SPARC SuperCluster, and the Sun ZFS Storage Appliance.) If you try this on any other system, you will receive the following informative message:

SQL> alter table emp move compress for query high;

alter table emp move compress for query high

*

ERROR at line 1:

ORA-64307: hybrid columnar compression is not supported for tablespaces on this storage type

SQL>

The phrase this storage type means any storage other than those listed at the beginning of this section. With that in mind, we want to mention that HCC can be a showstopper for Data Guard, when the destination database is not on Exadata or using Exadata storage. Tables in the affected Data Guard instance will need to be uncompressed before transactions can be applied to them, so all of the space savings will be lost. This is also true for database backups when HCC is enabled. Restoring a backup where HCC compression is used to a non-Exadata system will necessitate uncompressing those tables. This can easily cause you to run out of storage before all the tables are uncompressed, if the storage requirements were determined from the Exadata side.

HCC works differently than the two options mentioned earlier in this chapter, as it reorganizes data into Compression Units (CU). How it works will be discussed in the next section. For the moment, we will concentrate on implementing HCC in its four forms and on how those forms differ. There are two basic types under the HCC banner, QUERY and ARCHIVE, each having the options of HIGH and LOW. The first pair of HCC options we will discuss falls under the QUERY type. We will then discuss the ARCHIVE type.

QUERY

This type is less aggressive than ARCHIVE, but it still initially compresses data more than OLTP compression. We first compress our EMP table forQUERY HIGH:

SQL>

SQL> --

SQL> -- Current storage for the EMP table

SQL> -- (this is simply a test table for this example)

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 478150656

SALGRADE 65536

Elapsed: 00:00:00.82

SQL>

SQL> --

SQL> -- Compress the table for query high (use HCC)

SQL> --

SQL> -- Note elapsed time to compress

SQL> --

SQL> alter table emp move compress for query high;

Table altered.

Elapsed: 00:00:35.65

SQL>

SQL> --

SQL> -- Index is now invalid

SQL> --

SQL> -- Must rebuild to make it usable

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:01:13.70

SQL>

SQL> --

SQL> -- Current compression type, storage for table/index

SQL> -- initially after compression is enabled

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY HIGH

Elapsed: 00:00:00.20

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 8388608

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.03

SQL>

The resulting size is much smaller than either of the Basic or OLTP compression options. Even at the QUERY LOW compression rate, the size is still less than OLTP compression can provide:

SQL>

SQL> --

SQL> -- Initial storage

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 478150656

SALGRADE 65536

Elapsed: 00:00:00.25

SQL>

SQL> --

SQL> -- Compress for QUERY LOW

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter table emp move compress for query low;

Table altered.

Elapsed: 00:00:16.16

SQL>

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:00:43.08

SQL>

SQL> --

SQL> -- These figures are the same as those generated

SQL> -- AFTER the HCC compressed data was updated the first time

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY LOW

Elapsed: 00:00:00.02

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 14680064

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

ARCHIVE

The HCC compression type ARCHIVE is definitely the most aggressive in terms of space savings, but it’s also intended for data that is designated read-only and is or will be archived for occasional use. It certainly isn’t intended for actively updated tables; the space savings would be lost due to uncompressing the data, to allow the updates and recompressing at the lower OLTP level. We’ll compress our standard EMP table for ARCHIVE HIGH and see what space savings are generated:

SQL> --

SQL> -- Current storage for the EMP table

SQL> -- (this is simply a test table for this example)

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 478150656

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Compress the table for archive high

SQL> --

SQL> -- Note elapsed time to compress

SQL> --

SQL> alter table emp move compress for archive high;

Table altered.

Elapsed: 00:00:38.55

SQL>

SQL> --

SQL> -- Index is now invalid

SQL> --

SQL> -- Must rebuild to make it usable

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:00:39.45

SQL>

SQL> --

SQL> -- Current compression type, storage for table/index

SQL> -- initially after compression is enabled

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE HIGH

Elapsed: 00:00:00.02

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 4194304

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.01

SQL>

The space savings are substantial, taking the table from its original size of 680 megabytes down to 4 megabytes, a savings of 99.41 percent. Using ARCHIVE LOW instead of ARCHIVE HIGH still produces impressive results:

SQL> --

SQL> -- Initial storage

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 478150656

SALGRADE 65536

Elapsed: 00:00:01.31

SQL>

SQL> --

SQL> -- Compress for ARCHIVE LOW

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter table emp move compress for archive low;

Table altered.

Elapsed: 00:00:34.16

SQL>

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:00:48.44

SQL>

SQL> --

SQL> -- These figures are the same as those generated

SQL> -- AFTER the HCC compressed data was updated the first time

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE LOW

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 8388608

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

Using ARCHIVE LOW, the table ends up twice as large as it did using ARCHIVE HIGH, but the table is 98.82 percent smaller than it was originally, before compression. This is the same level of compression afforded by QUERY HIGH.

Oracle provides a function in the DBMS_COMPRESSION package named GET_COMPRESSION_TYPE. This function returns a number representing the compression type and level in use for a given table. We have a query to report the owner, table_name, numeric compression id, and the type name for a given table. We pass in the owner and table name as inputs.

set verify off

select distinct

'&&owner' owner,

'&&tabname' table_name,

dbms_compression.get_compression_type('&&owner','&&tabname', rowid) comp_typ,

decode(dbms_compression.get_compression_type('&&owner','&&tabname', rowid),

1, 'NONE',

2, 'OLTP',

4, 'QUERY HIGH',

8, 'QUERY LOW',

16, 'ARCHIVE HIGH',

32, 'ARCHIVE LOW') type_name

from

&&owner..&&tabname

/

Undefine owner

Undefine tabname

The output follows:

SQL> @get_compression_type.sql

Enter value for owner: BING

Enter value for tabname: EMP

OWNE TABL COMP_TYP TYPE_NAME

---- ---- ---------- ------------

BING EMP 8 QUERY LOW

SQL>

The Nuts and Bolts of HCC

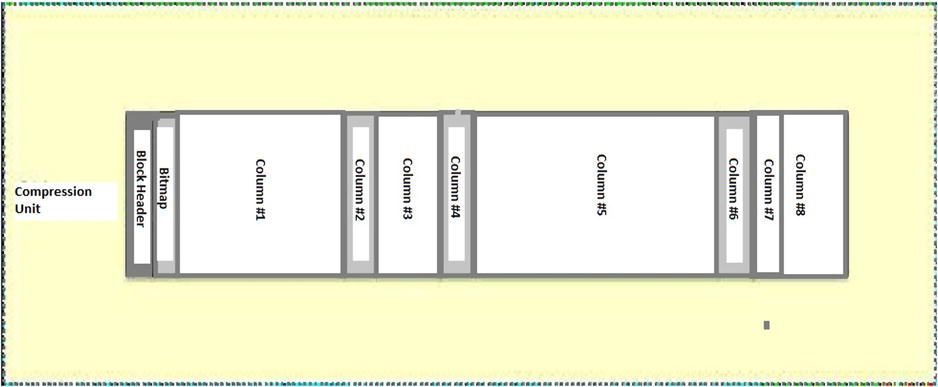

Using HCC causes some changes in how Oracle stores table data, altering the storage format from the usual heap-style block population to a column-centric format, still using data blocks, called Compression Units, or CUs. This is a logical change, as Compression Units consist of multiple Oracle blocks with a unique layout. First, Oracle collects a number of similar rows for a Compression Unit and organizes them in a columnar format, similar to Figure 6-1.

Figure 6-1. Initial data organization for HCC Compression Unit

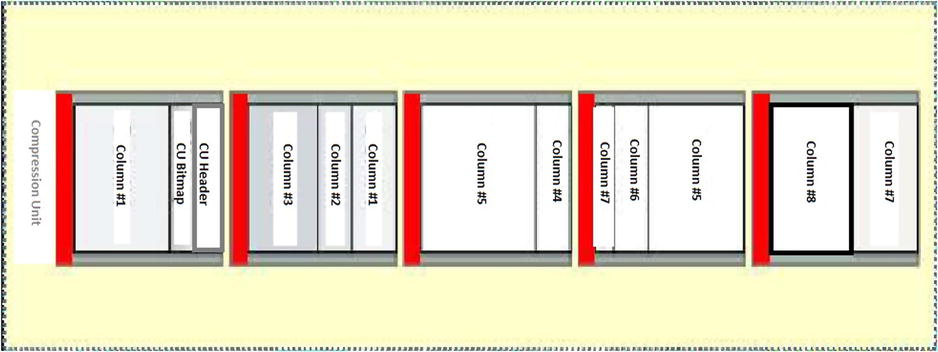

Collecting rows of similar data makes it easier to compress the data using HCC, as common elements that can be replaced with tokens are colocated in the same Compression Unit. Oracle, by design, is a row-centric DBMS, so this interim structure is converted back to a row format, similar to Figure 6-2.

Figure 6-2. HCC Compression Unit layout

Five data blocks are used to store the data from this example, with each column being a row in the block. Some columns won’t fit into a single block, so they are chained across multiple blocks, as illustrated. The block header is shown in red; the CU header is in the first block of the Compression Unit, as is the Compression Unit bitmap, showing where the rows are located. The data columns follow the CU Header and CU Bitmap rows in block 1, with Column 1 data starting at the third row of the block.

As mentioned previously, if a column won’t fit entirely in a single block, it is chained across to the next block in the Compression Unit. This strategy continues until all the columns are stored. Because like data is stored together, HCC can provide exceptionally high compression ratios, as compared to either Basic or OLTP compression. Also, creating Compression Units in this fashion prevents rows from being split across CUs, so that an entire row can be read from a single CU.

Performance

Depending on whether you are inserting, updating, or querying data, the performance of compressed tables may not be what you would expect. Querying data is usually a fast operation. Inserting for all but OLTP compression requires parallel or direct load inserts, so that the newly inserted rows are compressed. Updates take longer, as the data has to be uncompressed before it can be updated. Carefully analyze how a table is used before deciding on compression; heavily updated tables are better served with OLTP compression than either of the HCC compression types. Remember that engineering is often described as the art of making tradeoffs. You can’t have your cake and eat it too.

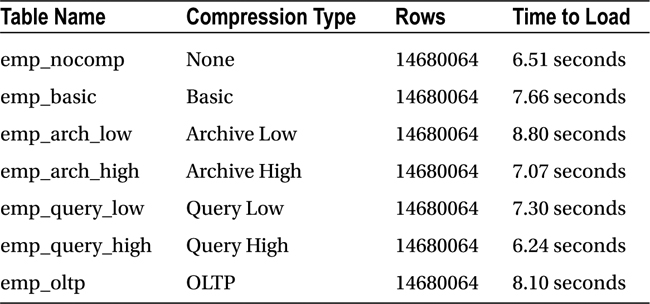

When You Load

Loading data into compressed tables, with the lone exception of OLTP compression, requires parallel or direct-path inserts. Of course, loading into compressed tables can take longer than the same process for noncompressed tables. We created empty tables using the various compression methods and loaded them from the EMP table we populated in earlier examples. The results of those loads, and the times required for those loads, are shown in the following example:

SQL>

SQL> --

SQL> -- Build compressed tables, keep them empty

SQL> --

SQL>

SQL> create table emp_arch_low as select * from emp where 0=1;

Table created.

Elapsed: 00:00:01.42

SQL> create table emp_arch_high as select * from emp where 0=1;

Table created.

Elapsed: 00:00:00.01

SQL> create table emp_query_low as select * from emp where 0=1;

Table created.

Elapsed: 00:00:00.01

SQL> create table emp_query_high as select * from emp where 0=1;

Table created.

Elapsed: 00:00:00.01

SQL> create table emp_oltp as select * from emp where 0=1;

Table created.

Elapsed: 00:00:00.01

SQL> create table emp_basic as select * from emp where 0=1;

Table created.

Elapsed: 00:00:00.01

SQL>

SQL> --

SQL> -- Create a copy of emp, uncompressed

SQL> --

SQL>

SQL> create table emp_nocomp as select * From emp where 0=1;

Table created.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> --

SQL> -- We load the tables and see how long each

SQL> -- compression level takes

SQL> --

SQL> --

SQL>

SQL> insert /*+ append */ into emp_nocomp select * from emp;

14680064 rows created.

Elapsed: 00:00:06.51

SQL> insert /*+ append */ into emp_arch_low select * from emp;

14680064 rows created.

Elapsed: 00:00:08.80

SQL> insert /*+ append */ into emp_arch_high select * from emp;

14680064 rows created.

Elapsed: 00:00:07.07

SQL> insert /*+ append */ into emp_query_low select * from emp;

14680064 rows created.

Elapsed: 00:00:07.30

SQL> insert /*+ append */ into emp_query_high select * from emp;

14680064 rows created.

Elapsed: 00:00:06.24

SQL> insert /*+ append */ into emp_oltp select * from emp;

14680064 rows created.

Elapsed: 00:00:08.10

SQL> insert /*+ append */ into emp_basic select * from emp;

14680064 rows created.

Elapsed: 00:00:07.66

SQL>

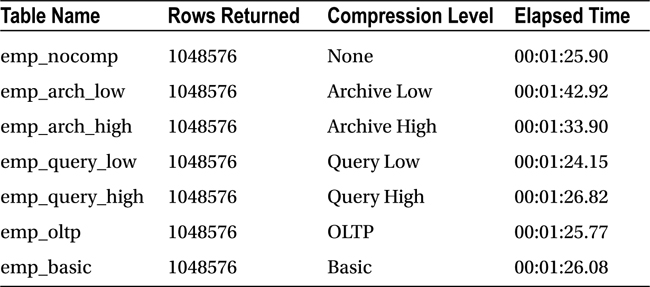

We have collected the data and presented it in Table 6-1, so that it’s easier to understand.

Table 6-1. Load Times and Data Volumes for Compressed Tables

For all but the QUERY HIGH compression, the load times exceed the time to load the uncompressed table. Your execution times may vary from those we generated, because they depend on the load the system is experiencing when you perform your test. If Smart Scans are used, some of the processing will be offloaded to the storage cells, allowing them to reduce the workload on the database servers. This can result in load times that are less than the time to load uncompressed data.

When You Query

Queries can also take longer to process when the data is compressed. Smart Scans also play a part here, as some of the work (such as uncompressing the data) falls to the storage cells, rather than the database servers. We have run the same query against each of the tables representing the various compression types; the results of those tests follow:

SQL>

SQL> --

SQL> -- Let's select from each table and see how long it takes

SQL> --

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_nocomp

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:25.90

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_arch_low

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:42.92

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_arch_high

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:33.90

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_query_low

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:24.15

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_query_high

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:26.82

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_oltp

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:25.77

SQL>

SQL> select /*+ parallel 4 */ *

2 from emp_basic

3 where empno > 7930;

EMPNO ENAME JOB MGR HIREDATE SAL COMM DEPTNO

---------- ---------- --------- ---------- --------- ---------- ---------- ----------

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

...

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

7934 MILLER CLERK 7782 23-JAN-82 1300 10

1048576 rows selected.

Elapsed: 00:01:26.08

SQL>

The summarized data is shown in Table 6-2.

Table 6-2. Query Execution Times for Compressed Tables

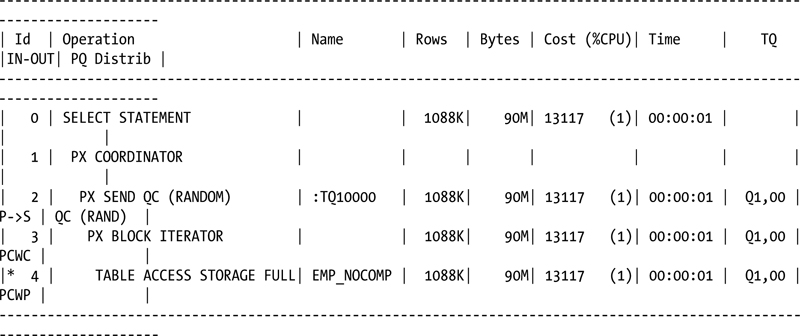

Notice that all but the tables compressed for ARCHIVE HIGH and ARCHIVE LOW were very close in execution time to the uncompressed table. The execution plan is the same for all queries, as follows:

Execution Plan

----------------------------------------------------------

Plan hash value: 398743904

Predicate Information (identified by operation id):

---------------------------------------------------

4 - storage("EMPNO">7930)

filter("EMPNO">7930)

Note

-----

- dynamic sampling used for this statement (level=2)

- automatic DOP: Computed Degree of Parallelism is 2

Auto DOP and a Smart Scan made the query times essentially no worse than for the uncompressed data, a testament to both features of Exadata. The Smart Scan also provided extra CPU cycles from the storage cells, as the data was uncompressed at the storage cells, rather than at the database servers.

When You Update

Updates pose a problem to Basic-level compression, as the data has to be uncompressed and remains so after the updates.

SQL> update emp set sal=sal*1.10 where empno = 7566;

1048576 rows updated.

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

DEPT 65536

SALGRADE 65536

DUMMY 65536

EMP 243269632

SQL>

Notice that the overall bytes that EMP consumes increased after the updates were completed. Subsequent updates will cause the table to increase in size, as blocks are processed. The table will have to be compressed again to regain the initial savings, as follows:

SQL> alter table emp move compress;

Table altered.

SQL> select segment_name, bytes

2 from user_segments;

SEGMENT_NAME BYTES

----------------------------------- ----------

DEPT 65536

SALGRADE 65536

DUMMY 65536

EMP 184549376

SQL>

There is no provision for automatic compression after updates occur. Even though Basic compression is available without additional licensing, its use could be limited to tables that aren’t updated or are updated infrequently.

Updates to compressed tables also take longer than they would for uncompressed tables, because the blocks affected by the update have to be uncompressed before the updates can occur. The elapsed time for a bulk update of a table compressed using Basic compression is as follows:

SQL> update emp set sal=sal*1.10 where empno = 7566;

1048576 rows updated.

Elapsed: 00:01:05.85

SQL>

This was executed on a system with no load and only one connected user. On more heavily loaded systems, the elapsed time can be greater and, interestingly, the table size can increase over the original size, if the bulk update affects a large portion of the table data.

SQL>

SQL> --

SQL> -- Current storage for the EMP table

SQL> -- (this is simply a test table for this example)

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 469762048

SALGRADE 65536

Elapsed: 00:00:01.46

SQL>

SQL> --

SQL> -- Compress the table basic

SQL> --

SQL> -- Note elapsed time to compress

SQL> --

SQL> alter table emp move compress;

Table altered.

Elapsed: 00:00:15.57

SQL>

SQL> --

SQL> -- Index is now invalid

SQL> --

SQL> -- Must rebuild to make it usable

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:00:53.01

SQL>

SQL> --

SQL> -- Current compression type, storage for table/index

SQL> -- initially after compression is enabled

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED BASIC

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 184549376

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Perform an update on the compressed data

SQL> --

SQL> -- Note elapsed time to complete

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:14:25.18

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.00

SQL>

SQL> --

SQL> -- Compression type has not changed in the

SQL> -- data dictionary but the consumed space

SQL> -- has increased beyond the original size

SQL> -- of the table

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED BASIC

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 956301312

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.01

SQL>

SQL> --

SQL> -- Perform a second update

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:03:48.95

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.01

SQL>

SQL> --

SQL> -- Current storage consumed after second update

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED BASIC

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 956301312

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.01

SQL>

The table has gained size, due to the updates to compressed data using the Basic compression algorithm. Such behavior makes Basic compression an invalid option for actively updated tables.

OLTP compression makes it easier to keep a good portion of the space savings generated by the initial compression, although it may not be immediately realized, because not all of the updated blocks are filled. The following example illustrates this:

SQL>

SQL> --

SQL> -- Initial storage

SQL> --

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 713031680

EMP_IDX 478150656

SALGRADE 65536

Elapsed: 00:00:00.03

SQL>

SQL> --

SQL> -- Compress for OLTP rather than QUERY HIGH

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> alter table emp move compress for oltp;

Table altered.

Elapsed: 00:00:19.36

SQL>

SQL> alter index emp_idx rebuild;

Index altered.

Elapsed: 00:00:52.12

SQL>

SQL> --

SQL> -- These figures are the same as those generated

SQL> -- AFTER the HCC compressed data was updated the first time

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED OLTP

Elapsed: 00:00:00.04

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 201326592

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.01

SQL>

SQL> --

SQL> -- Perform an update

SQL> --

SQL> -- Note elapsed time (less than the previous compressed update)

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:20:40.64

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.01

SQL>

SQL> --

SQL> -- Current compression level and storage

SQL> --

SQL> -- Looks like the storage after the second

SQL> -- update to the HCC compressed data

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED OLTP

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 511705088

EMP_IDX 260046848

SALGRADE 65536

Elapsed: 00:00:00.01

SQL>

The overall table size is still less than it was originally. The partially filled data blocks are still in an uncompressed state, contributing to the size increase. As these blocks fill up, they will be automatically compressed in a batch-style operation, reducing the table size further.

Updates to data compressed using HCC reveal behavior you should expect, after reading the examples provided in this chapter. However, in this scenario, the compression algorithm changes, due to the updates. Originally compressed for QUERY HIGH after updates, the compression becomes OLTP and remains there until modified by you. The data dictionary still reports that HCC is in effect, and it is, but because of the updates, Oracle now treats this data as though it were compressed using the OLTP compression scheme. We will update data using the EMP table, compressed for QUERY HIGH, then compressed for QUERY LOW, to illustrate this behavior.

SQL> --

SQL> -- Perform an update on the compressed data

SQL> --

SQL> -- Note elapsed time to complete

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:42:34.40

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.03

SQL>

SQL> --

SQL> -- Compression type has not changed in the

SQL> -- data dictionary but the consumed space

SQL> -- has increased to the level of a table

SQL> -- initially compressed for OLTP

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY HIGH

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 226492416

EMP_IDX 721420288

SALGRADE 65536

Elapsed: 00:00:00.33

SQL>

SQL> --

SQL> -- Perform a second update

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:03:15.00

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Current storage consumed after second update

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY HIGH

Elapsed: 00:00:00.02

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 452984832

EMP_IDX 721420288

SALGRADE 65536

Elapsed: 00:00:00.21

SQL>

Note that the index associated with the table also increases in size after the first update and remains at that size during subsequent updates to the table. We will now perform the same updates to the EMP table compressed for QUERY LOW, as follows:

SQL> --

SQL> -- Perform an update

SQL> --

SQL> -- Note elapsed time (less than the previous compressed update)

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:47:52.75

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Current compression level and storage

SQL> --

SQL> -- Looks like the storage after the second

SQL> -- update to the HCC compressed data

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY LOW

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 243269632

EMP_IDX 612368384

SALGRADE 65536

Elapsed: 00:00:00.49

SQL>

SQL> --

SQL> -- Perform a second update

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:02:20.24

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Current storage consumed after second update

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED QUERY LOW

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 511705088

EMP_IDX 612368384

SALGRADE 65536

Elapsed: 00:00:02.80

SQL>

Note the slight size difference between the results from the updates to QUERY HIGH and QUERY LOW compression. The real differences are shown in the overall elapsed times to complete the desired updates. The first update to the table takes the longest time, as the fully compressed data is being uncompressed to complete the transaction. The second update takes longer than for an uncompressed table, but it still takes considerably less time than the initial update. This is one of the issues with using compression on an active table.

Using either of the ARCHIVE compression types, the situation is no different. Because the ARCHIVE levels provide the best initial compression ratios, it should be expected that updates to tables compressed in either of these two ways should take greater time to complete, especially the first update after compression has completed. We perform the same updates to these two compression levels, as we did to the other levels, and report the results. First, for ARCHIVE HIGH:

SQL> --

SQL> -- Perform an update on the compressed data

SQL> --

SQL> -- Note elapsed time to complete

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:50:36.26

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Compression type has not changed in the

SQL> -- data dictionary but the consumed space

SQL> -- has increased to the level of a table

SQL> -- initially compressed for OLTP

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE HIGH

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 234881024

EMP_IDX 679477248

SALGRADE 65536

Elapsed: 00:00:00.11

SQL>

SQL> --

SQL> -- Perform a second update

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:02:23.52

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Current storage consumed after second update

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE HIGH

Elapsed: 00:00:00.06

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 452984832

EMP_IDX 679477248

SALGRADE 65536

Elapsed: 00:00:03.00

SQL>

All the space savings from using ARCHIVE HIGH were lost, putting the table back into the range of OLTP compression after the same updates. Notice that the first update consumed about five more minutes of elapsed time, as compared to the QUERY-compressed tables. To finish out the space changes that can be expected from using HCC on active tables, we perform the same updates on our EMP table compressed for ARCHIVE LOW, as follows:

SQL>

SQL> --

SQL> -- Perform an update

SQL> --

SQL> -- Note elapsed time (less than the previous compressed update)

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:07:29.25

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Current compression level and storage

SQL> --

SQL> -- Looks like the storage after the second

SQL> -- update to the HCC compressed data

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE LOW

Elapsed: 00:00:00.03

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 218103808

EMP_IDX 637534208

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

SQL> --

SQL> -- Perform a second update

SQL> --

SQL> -- Note elapsed time

SQL> --

SQL> update emp set sal=sal*1.08 where empno > 7350;

14680064 rows updated.

Elapsed: 00:02:12.42

SQL>

SQL> commit;

Commit complete.

Elapsed: 00:00:00.03

SQL>

SQL> --

SQL> -- Current storage consumed after second update

SQL> --

SQL> select table_name, compression, compress_for

2 from user_tables;

TABLE_NAME COMPRESS COMPRESS_FOR

------------------------------ -------- ------------

DEPT DISABLED

BONUS DISABLED

SALGRADE DISABLED

DUMMY DISABLED

EMP ENABLED ARCHIVE LOW

Elapsed: 00:00:00.06

SQL>

SQL> select segment_name, sum(bytes) total_space

2 from user_segments

3 group by segment_name;

SEGMENT_NAME TOTAL_SPACE

----------------------------------- -----------

DEPT 65536

DUMMY 65536

EMP 452984832

EMP_IDX 637534208

SALGRADE 65536

Elapsed: 00:00:00.02

SQL>

Again, the total space consumed by the EMP table returns to the level of OLTP compression after two updates, so the space saved after the table was initially compressed has been lost.

Ratios—What to Expect

We have shown that when the data is initially compressed, HCC can provide a substantial reduction in table size. Reasonably accurate estimates can be obtained from Oracle.

What Oracle Thinks

To return a reasonably accurate compression ratio, the DBMS_COMPRESSION.GET_COMPRESSION_RATIO procedure can be used.

SQL>

SQL> --

SQL> -- Compute compression estimates

SQL> --

SQL>

SQL> set serveroutput on size 1000000

SQL>

SQL> declare

2 blockct_comp number;

3 blockct_uncomp number;

4 rows_comp number;

5 rows_uncomp number;

6 comp_rat number;

7 comp_type varchar2(40);

8 begin

9 dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_oltp, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp,

comp_rat, comp_type);

10 dbms_output.put_line('Compression type: '||comp_type||' Compression ratio (est):

'||comp_rat);

11 dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_query_low, blockct_comp, blockct_uncomp,rows_comp,

rows_uncomp, comp_rat, comp_type);

12 dbms_output.put_line('Compression type: '||comp_type||' Compression ratio (est):

'||comp_rat);

13 dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_query_high, blockct_comp, blockct_uncomp,rows_comp,

rows_uncomp, comp_rat, comp_type);

14 dbms_output.put_line('Compression type: '||comp_type||' Compression ratio (est):

'||comp_rat);

15 dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_archive_low, blockct_comp, blockct_uncomp,rows_comp,

rows_uncomp, comp_rat, comp_type);

16 dbms_output.put_line('Compression type: '||comp_type||' Compression ratio (est):

'||comp_rat);

17 dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_archive_high, blockct_comp, blockct_uncomp,rows_comp,

rows_uncomp, comp_rat, comp_type);

18 dbms_output.put_line('Compression type: '||comp_type||' Compression ratio (est):

'||comp_rat);

19 end;

20 /

Enter value for tblspc: USERS

Enter value for ownr: BING

Enter value for tblname: EMP

old 9: dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_oltp, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp,

comp_rat, comp_type);

new 9: dbms_compression.get_compression_ratio('USERS','BING','EMP',null,dbms_compression.

comp_for_oltp, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

old 11: dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null,

dbms_compression.comp_for_query_low, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp,

comp_rat, comp_type);

new 11: dbms_compression.get_compression_ratio('USERS','BING','EMP',null, dbms_compression.

comp_for_query_low, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

old 13: dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null, dbms_compression.comp_

for_query_high, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

new 13: dbms_compression.get_compression_ratio('USERS','BING','EMP',null, dbms_compression.comp_

for_query_high, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

old 15: dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null, dbms_compression.comp

_for_archive_low, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

new 15: dbms_compression.get_compression_ratio('USERS','BING','EMP',null, dbms_compression.comp_

for_archive_low, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

old 17: dbms_compression.get_compression_ratio('&&tblspc','&&ownr','&&tblname',null, dbms_compression.comp_

for_archive_high, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

new 17: dbms_compression.get_compression_ratio('USERS','BING','EMP',null, dbms_compression.comp_

for_archive_high, blockct_comp, blockct_uncomp,rows_comp, rows_uncomp, comp_rat, comp_type);

Compression type: "Compress For OLTP" Compression ratio (est): 3.5

Compression Advisor self-check validation successful. select count(*) on both Uncompressed and EHCC

Compressed format = 1000001 rows

Compression type: "Compress For Query Low" Compression ratio (est): 51.1

Compression Advisor self-check validation successful. select count(*) on both Uncompressed and EHCC

Compressed format = 1000001 rows

Compression type: "Compress For Query High" Compression ratio (est): 183.9

Compression Advisor self-check validation successful. select count(*) on both Uncompressed and EHCC

Compressed format = 1000001 rows

Compression type: "Compress For Archive Low" Compression ratio (est): 183.9

Compression Advisor self-check validation successful. select count(*) on both Uncompressed and EHCC

Compressed format = 1000001 rows

Compression type: "Compress For Archive High" Compression ratio (est): 189.8

PL/SQL procedure successfully completed.

Elapsed: 00:04:30.02

SQL>

Table 6-3 summarizes the estimated compression ratios for the various compression types.

Table 6-3. Estimated Compression Ratios

Compression Type |

Estimated Ratio |

|---|---|

| OLTP | 3.5 |

| Query Low | 51.1 |

| Query High | 183.9 |

| Archive Low | 183.9 |

| Archive High | 189.8 |

What You Get

The data in our EMP table was very compressible (simply, the same 14 rows loaded again and again, to reach our target size and row count), so the compression ratios should be impressive. Your data may not be as compressible (it likely isn’t), so your estimates and actual ratios will probably differ. The actual ratios for our data agreed fairly well with the estimates; Table 6-4 summarizes those results.

Table 6-4. Actual Observed Compression Ratios

Compression Type |

Actual Ratio |

|---|---|

| OLTP | 3.5 |

| Query Low | 48.6 |

| Query High | 85.0 |

| Archive Low | 85.0 |

| Archive High | 170.0 |

Your results may differ, but for three out of the five listed compression types, Oracle did a very good job of estimating the compression ratios. Be aware that what Oracle reports may not be exactly what you get in actual use.

Things to Know

There are three general levels of compression, two of which require additional licensing: Basic, OLTP, and Hybrid Columnar Compression (HCC).

HCC has two types (QUERY and ARCHIVE), and each of those has two subtypes (HIGH and LOW), to provide four distinct levels of HCC. In general, QUERY-type compression is intended for somewhat active data, whereas ARCHIVE compression is intended for data that will be queried fairly infrequently and will not be updated.

HCC does its magic by collecting rows with similar data and compressing them into a Compression Unit (CU). Each unit is composed of multiple data blocks that contain entire rows of data across the CU. This makes it easier and more efficient for Oracle to return the data, because only one CU has to be accessed to return entire rows.

Compression levels vary with the compression type. Basic provides the least compression, and ARCHIVE HIGH provides the greatest space savings at the time of compression.

Loading data into compressed tables usually requires parallel processing and/or direct path loads (the latter supplied by SQL*Loader). The /*+ append */ hint parallelizes the inserts, allowing them to be compressed on insert. It can take longer to load compressed tables than it can uncompressed tables.

Queries are a mixed bag when it comes to execution times, as Smart Scans can make a query of compressed data run faster by offloading the uncompression activities to the storage cells. Queries of compressed data that don’t use Smart Scans can run longer (and most likely will) than the same query run against uncompressed data.

Updates to compressed tables will cause the table to increase in size. Basic compression has no provision for automatic re-compression, so tables using this method will have to be periodically re-compressed if they are actively updated. OLTP compression will automatically re-compress data blocks that are full, making room for more uncompressed data. HCC compression silently reverts to OLTP after data is updated, so the original level of compression is lost, but the OLTP level of compression remains, including the automatic compression of full data blocks.

Compression ratios can be estimated by the DBMS_COMPRESSION.GET_COMPRESSION_RATIO procedure, and in many cases, will agree with the observed compression ratios on user data. Highly compressible data can show large discrepancies between the estimated and actual compression ratios, so do not be alarmed if you don’t get exactly what Oracle predicts.