Chapter 6. Web server and web application testing

Information in this chapter:

• Objective

• Approach

• Core Technologies

• Open Source Tools

• Case Study: The Tools in Action

• Hands-On Challenge

It's a fact of life today: Vulnerabilities exist on Web servers and within Web applications. And attackers can take advantage of these vulnerabilities to compromise a remote system or software. This could mean gaining a shell on the remote server, or exposing the information in an application database through SQL injection or other techniques. The goal of a penetration tester in this scenario is to gain access to information which is not intended to be exposed. The goal of this chapter is to discuss Web server and Web application vulnerabilities so that readers can find them in the systems they're testing and compromise them. Topics covered include objectives in compromising Web applications, and the use of stack overflows to compromise the Web server daemon, default pages left open on the Web server, and vulnerabilities within the Web application itself. A topically relevant case study and hands-on challenge complete the chapter.

This chapter covers vulnerabilities associated with port 80. A responsive port 80 (or 443) raises several questions for attackers and penetration testers:

• Can I compromise the web server due to vulnerabilities in the server daemon itself?

• Can I compromise the web server due to its unhardened state?

• Can I compromise the application running on the web server due to vulnerabilities within the application?

• Can I compromise the web server due to vulnerabilities within the application?

Throughout this chapter, we will go through the approach and techniques used to answer these questions. We'll also discuss the core technologies and associated tools which we will be utilizing to accomplish our penetration testing. Finally, we'll go over a real-life scenario in a case study to see how to actually accomplish the testing that we discuss.

This chapter will arm the penetration tester with enough knowledge to be able to assess web servers and web applications. The topics covered in this chapter are broad; therefore, we will not cover every tool or technique available. Instead, this chapter aims to arm readers with enough knowledge of the underlying technology to enable them to perform field testing.

6.1. Objective

Attacking or assessing companies over the Internet has changed over the past few years, from assessing a multitude of services to assessing just a handful. It is rare today to find an exposed world-readable Network File System (NFS) share on a host or an exposed vulnerable service (such as fingerd). Network administrators have long known the joys of “default deny rule bases,” and, in most cases, vendors no longer leave publicly disclosed bugs unpatched on public networks for months. Chances are good that when you are connected to a server on the Internet you are using the Hypertext Transfer Protocol (HTTP) rather than Gopher or File Transfer Protocol (FTP).

Our objective is to take advantage of the vulnerabilities which may exist on hosts or in hosted applications through which we can compromise the remote system or software. This could mean gaining a shell on the remote server or exposing the information stored in an application database through SQL injection or other techniques. Our primary goal as a penetration tester in this scenario is to gain access to information which is not intended to be exposed by our client.

The tools and techniques that we will discuss should give you a good understanding of what types of vulnerabilities exist on web servers and within web applications. Using that knowledge, you will then be able to find vulnerabilities in the systems you are testing and compromise them. It would be impossible to cover penetration techniques for every known web application, but by understanding the basic vulnerabilities which can be exploited and the methods for doing so, you can leverage that knowledge to compromise any unsecure web host or application.

6.1.1. Web server vulnerabilities: a short history

For as long as there have been web servers, there have been security vulnerabilities. As superfluous services have been shut down, security vulnerabilities in web servers have become the focal point of attacks. The once fragmented web server market, which boasted multiple players, has filtered down to two major players: Apache's Hyper Text Transfer Protocol Daemon (HTTPD) and Microsoft's Internet Information Services (IIS). According to www.netcraft.com, these two servers account for over 80 percent of the market share [1].

Both of these servers have a long history of abuse due to remote root exploits that were discovered in almost every version of their daemons. Both companies have reinforced their security, but they are still huge targets. As you are reading this, somewhere in the world researchers are trying to find the next remote HTTP server vulnerability. The game of cat and mouse between web server developer and security researcher is played constantly.

As far back as 1995, security notices were being posted and users warned about a security flaw being exploited in NCSA servers. A year later, the Apache PHF bug gave attackers a point-and-click method of attacking Web servers. Patches were developed and fixes put in place only to be compromised through different methods. About six years later, while many positive changes in security had been made, vulnerabilities still existed in web server software. The target this time was Microsoft's IIS servers with the use of the Code-Red and Nimda worms which resulted in millions of servers worldwide being compromised and billions of dollars in costs for cleanup, system inspection, patching, and lost productivity. These worms were followed swiftly by the less prolific Slapper worm, which targeted Apache.

Both vendors made determined steps to reduce the vulnerabilities in their respective code bases. This, of course, led to security researchers digging deeper and finding other vulnerabilities. As the web server itself became more difficult to compromise, research began on the applications hosted on the servers and new techniques and methods of compromising systems were developed.

6.1.2. Web applications: the new challenge

As the web made its way into the mainstream, publishing corporate information with minimal technical know-how became increasingly alluring. This information rapidly changed from simple static content, to database-driven content, to full-featured corporate web sites. A staggering number of vendors quickly responded with web publishing solutions, thus giving non-technical personnel the ability to publish applications with database back-ends to the Internet in a few simple clicks. Although this fueled World Wide Web hype, it also gave birth to a generation of “developers” that considered the Hypertext Markup Language (HTML) to be a programming language.

This influx of fairly immature developers, coupled with the fact that HTTP was not designed to be an application framework, set the scene for the web application testing field of today. A large company may have dozens of web-driven applications strewn around that are not subjected to the same testing and QA processes that regular development projects undergo. This is truly an attacker's dream.

Prior to the proliferation of web applications, an attacker may have been able to break into the network of a major airline, may have rooted all of its UNIX servers and added him or herself as a domain administrator, and may have had “superuser” access to the airline mainframe; but unless the attacker had a lot of airline experience, it was unlikely that he or she was granted first class tickets to Cancun. The same applied to attacking banks. Breaking into a bank's corporate network was relatively easy; however, learning the SWIFT codes and procedures to steal the money was more involved. Then came web applications, where all of those possibilities opened up to attackers in (sometimes) point-and-click fashion.

6.2. Approach

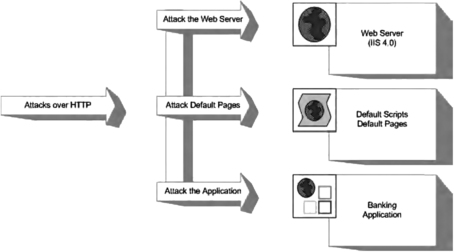

Before delving into the actual testing processes and the core technologies used, we must clarify the distinction between testing web servers, default pages, and web applications. Imagine that a bank has decided to deploy its new Internet banking service on an ancient NT4 server. The application is thrown on top of the unhardened IIS4 web server (the NT4 default web server) and is exposed to the Internet. Obviously, there is a high likelihood of a large number of vulnerabilities, which can be roughly grouped into three families, as listed here and shown in Fig. 6.1:

• Vulnerabilities in the server

• Vulnerabilities due to exposed Common Gateway Interface (CGI) scripts, default pages, or default applications

• Vulnerabilities within the banking application itself

This then leads into a three-target approach for penetration testing of the overall system: web server, default pages, and web application.

6.2.1. Web server testing

Essentially, you can test a web server for vulnerabilities in two distinct scenarios:

• Testing the web server for the existence of a known vulnerability

• Discovering a previously unknown vulnerability in the web server

Testing the server for the existence of a known vulnerability is a task often left to automatic scanners due to the very basic nature of the task. Essentially, the scanner is given a stimulus and response pair along with a mini-description of the problem. The scanner submits the stimulus to the server and then decides whether the problem exists, based on the server's response. This “test” can be a simple request to obtain the server's running version or it can be as complex as going through several handshaking steps before actually obtaining the results it needs. Based on the server's reply, the scanner may suggest a list of vulnerabilities to which the server might be vulnerable. The test may also be slightly more involved, whereby the specific vulnerable component of the server is prodded to determine the server's response, with the final step being an actual attempt to exploit the vulnerable service.

For example, say a vulnerability exists in the .printer handler on the imaginary SuperServer2010 web server (for versions 1.x–2.2). This vulnerability allows for the remote execution of code by an attacker who submits a malformed request to the .printer subsystem. In this scenario, you could use the following checks during testing:

1. You issue a HEAD request to the web server. If the server returns a server header containing the word “SuperServer2010” and has a version number between 1 and 2.2, it is reported as vulnerable.

2. You take the findings from step 1 and additionally issue a request to the .printer subsystem (GET /mooblah.printer HTTP/1.1). If the server responds with a “Server Error,” the .printer subsystem is installed. If the server responds with a generic “Page not Found: 404” error, this subsystem has been removed. You rely on the fact that you can consistently spot sufficient differences between hosts that are vulnerable to a particular problem and hosts that are not.

3. You use an exploit/exploit framework to attempt to exploit the vulnerability. The objective here is to compromise the server by leveraging the vulnerability, making use of an exploit.
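The first two checks can be sketched in Python. This is only an illustration: SuperServer2010 is imaginary, so the banner format `SuperServer2010/<major>.<minor>`, the mapping of “Server Error” to status code 500, and both function names are assumptions made for the example.

```python
import re

# Hypothetical banner format: "SuperServer2010/<major>.<minor>".
BANNER_RE = re.compile(r"SuperServer2010/(\d+)\.(\d+)")


def banner_is_vulnerable(server_header):
    """Check 1: flag banners in the vulnerable range 1.x through 2.2."""
    match = BANNER_RE.search(server_header)
    if match is None:
        return False
    version = (int(match.group(1)), int(match.group(2)))
    return (1, 0) <= version <= (2, 2)


def printer_handler_state(status_code):
    """Check 2: interpret the reply to the .printer request."""
    if status_code == 500:
        return "installed"   # a server error suggests the handler processed the request
    if status_code == 404:
        return "removed"     # a plain 404 suggests the handler is not mapped
    return "unknown"         # anything else needs manual review
```

A banner check alone is the weakest of the three tests, since administrators can change or hide the Server header; that is why the second check prods the component itself.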

Discovering new or previously unpublished vulnerabilities in a web server has long been considered a “black” art. However, the past few years have seen an abundance of quality documentation in this area. During this component of an assessment, analysts try to discover programmatic vulnerabilities within a target HTTP server using some variation or combination of code analysis or application stress testing/fuzzing.

Code analysis requires that you search through the code for possible vulnerabilities. You can do this with access to the source code or by examining the binary through a disassembler (and related tools). Although tools such as Flawfinder (http://www.dwheeler.com/flawfinder), Rough Auditing Tool for Security (RATS—https://www.fortify.com/ssa-elements/threat-intelligence/rats.html), and “It's The Software Stupid! Security Scanner” (ITS4—http://www.cigital.com/its4/) have been around for a long time, they were not heavily used in the mainstream until fairly recently.

Fuzzing and application stress testing is another relatively old concept that has recently become both fashionable and mainstream, with a number of companies adding hefty price tags to their commercial fuzzers. These techniques are used to find unexpected behaviors in applications when they are hit with unexpected inputs.

6.2.2. CGI and default pages testing

Testing for the existence of vulnerable CGIs and default pages is a simple process. You have a database of known default pages and known insecure CGIs that are submitted to the web server; if they return with a positive response, a flag is raised. Like most things, however, the devil is in the details.

Let's assume that our database contains three entries:

1. /login.cgi

2. /backup.cgi

3. /vulnerable.cgi

A simple scanner then submits these three requests to the victim web server to observe the results:

1. Scanner submits GET /login.cgi HTTP/1.0:

a. Server responds with 404 File not Found.

b. Scanner concludes that the file is not there.

2. Scanner submits GET /backup.cgi HTTP/1.0:

a. Server responds with 404 File not Found.

b. Scanner concludes that the file is not there.

3. Scanner submits GET /vulnerable.cgi HTTP/1.0:

a. Server responds with 200 OK.

b. Scanner decides that the file is there.

However, there are a few problems with this method. What happens when the server returns a friendly error message (e.g., the web server is configured to return a “200 OK” along with a page saying “Sorry … not found”) instead of the standard 404? What should the scanner conclude if the return result is a 500 Server Error? The automation provided by scanners can be helpful and certainly speeds up testing, but keep in mind challenges such as these, which reduce the reliability of automated testing.
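One common way to cope with friendly error pages is to first request a path that almost certainly does not exist and use the reply as a baseline. The sketch below assumes that approach; the function name and the three result labels are hypothetical, and exact body comparison is a simplification (a real scanner would likely need a fuzzier match).

```python
def classify_probe(status, body, baseline_status, baseline_body):
    """Classify a probe using the reply to a random, nonexistent path as baseline."""
    if status == 404:
        return "absent"
    if status >= 500:
        return "inconclusive"   # a server error proves nothing either way
    if status == baseline_status and body == baseline_body:
        return "absent"         # same friendly error page the server gives for junk paths
    if status == 200:
        return "present"
    return "inconclusive"       # redirects, auth prompts, and so on need a human
```

With a baseline of `200` and the body “Sorry … not found”, a probe that returns the same page is treated as absent, while a `200` with different content is flagged as present.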

6.2.3. Web application testing

Web application testing is a current hotbed of activity, with new companies offering tools to both attack and defend applications. Most testing tools today employ the following method of operation:

• Enumerate the application's entry points.

• Fuzz each entry point.

• Determine whether the server responds with an error.

This form of testing is prone to errors and misses a large proportion of the possible bugs in an application.
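The three-step method above can be sketched as a small loop. The `send` callable is a hypothetical stand-in for whatever actually issues the HTTP request and returns a status code; it is not part of any real tool.

```python
def fuzz_entry_points(entry_points, payloads, send):
    """Return (entry_point, payload, status) for every request that caused a server error."""
    findings = []
    for entry in entry_points:
        for payload in payloads:
            status = send(entry, payload)   # send() is supplied by the caller
            if status >= 500:
                findings.append((entry, payload, status))
    return findings
```

The sketch also shows why the method misses bugs: anything that does not surface as an error response, such as an authorization flaw that returns a normal page, passes through unnoticed.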

6.3. Core technologies

In this section, we will discuss the underlying technology and systems that we will assess in the chapter. Although a good toolkit can make a lot of tasks easier and greatly increases the productivity of a proficient tester, skillful penetration testers are always those individuals with a strong understanding of the fundamentals.

6.3.1. Web server exploit basics

Exploiting the actual servers hosting web applications is a complex process. Typically, it requires many hours of research and testing to find new vulnerabilities. Of course, when knowledge of these vulnerabilities is publicly published, exploits which take advantage of the vulnerability quickly follow. This section aims at clarifying the concepts regarding these sorts of attacks.

The first buffer overflow attack to hit the headlines was used in the infamous “Morris” worm in 1988. Robert Morris Jr. released the Morris worm by mistake. This worm exploited known vulnerabilities (as well as weak passwords) in a number of processes including UNIX sendmail, Finger, and rsh/rexec. The core of the worm infected Digital Equipment Corporation's VAX machines running BSD and Sun 3 systems. Years later, in June of 2001, the Code-Red worm used the same attack vector (a buffer overflow) to attack hosts around the world.

A buffer is simply a defined contiguous piece of memory. Buffer overflow attacks aim to manipulate the amount of data stored in memory to alter execution flow. This chapter briefly covers the following attacks:

• Stack-based buffer overflows

• Heap-based buffer overflows

6.3.1.1. Stack-based overflows

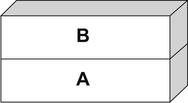

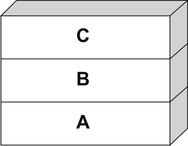

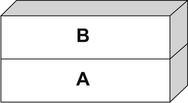

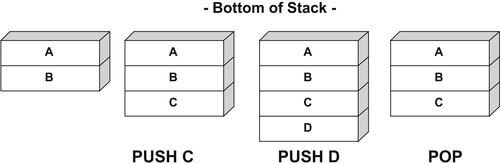

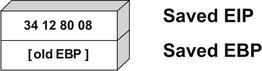

A stack is simply a last in, first out (LIFO) abstract data type. Data is pushed onto a stack or popped off it (see Fig. 6.2).

The simple stack shown in Fig. 6.2 has [A] at the bottom and [B] at the top. Now, let's push something onto the stack using a PUSH C command (see Fig. 6.3).

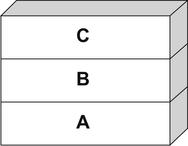

Let's push another for good measure: PUSH D (see Fig. 6.4).

Now let's see the effects of a POP command. POP effectively removes an element from the stack (see Fig. 6.5).

Notice that [D] has been removed from the stack. Let's do it again for good measure (see Fig. 6.6).

Notice that [C] has been removed from the stack.
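The same sequence of operations can be replayed with a Python list standing in for the stack, where the end of the list is the top. This is only an illustration of LIFO behavior, not of how a processor implements its stack.

```python
stack = ["A", "B"]   # [A] at the bottom, [B] at the top

stack.append("C")    # PUSH C
stack.append("D")    # PUSH D
after_pushes = list(stack)

first_pop = stack.pop()    # POP removes the most recently pushed element
second_pop = stack.pop()   # POP again removes the next one down
```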

Stacks are used in modern computing as a method for passing arguments to a function and they are used to reference local function variables. On x86 processors, the stack is said to be inverted, meaning that the stack grows downward (see Fig. 6.7).

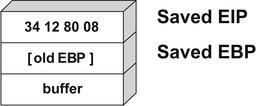

When a function is called, its arguments are pushed onto the stack. The return address, that is, the address of the instruction following the call in the calling function, is also pushed onto the stack so that execution can resume at the correct location once the called function is complete. This is referred to as the saved Extended Instruction Pointer (EIP) or simply the Instruction Pointer (IP). The calling function's base pointer value is then saved onto the stack as well.

Look at the following snippet of code:

    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>

    int foo()
    {
        char buffer[8]; /* Point 2 */
        /* Deliberately unsafe: copies 10 As into an 8-byte buffer */
        strcpy(buffer, "AAAAAAAAAA");
        /* Point 3 */
        return 0;
    }

    int main(int argc, char **argv)
    {
        foo();
        /* Point 1 */
        return 1; /* address 0x08801234 */
    }

During execution, the stack frame is set up at Point 1. The address of the next instruction after Point 1 is noted and saved on the stack with the previous value of the 32-bit Base Pointer (EBP). This is illustrated in Fig. 6.8.

Next, space is reserved on the stack for the buffer char array (eight characters) as shown in Fig. 6.9.

Now, let's examine what happens when the strcpy function copies the 10 As defined in the actual string rather than staying within the eight characters reserved for our buffer (see Fig. 6.10).

On the left of Fig. 6.10 is an illustration of what the stack would have looked like had we performed a strcpy of six As into the buffer. The example on the right shows the start of a problem. In this instance, the extra As have overrun the space reserved for buffer[8], and have begun to overwrite the previously stored [EBP]. Let's see what happens if we copy 13 As and 20 As, respectively. This is illustrated in Fig. 6.11.

In Fig. 6.11, we can see that the old EIP value was completely overwritten when 20 characters were sent to the eight-character buffer. Technically, sixteen characters would have done the trick in this case. This means that once the foo() function was finished, the processor tried to resume execution at the address AAAA (0x41414141). Therefore, a classic stack overflow attack aims at overflowing a buffer on the stack to replace the saved EIP value with an address of the attacker's choosing. The goal would be to have the attacker's code available somewhere in memory and, using a stack overflow, cause that memory location to be the next instruction executed.
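Because a real overflow is undefined behavior and hard to observe safely, the layout described above can be simulated with a byte array. The sketch assumes a frame of buffer[8], then a 4-byte saved EBP, then a 4-byte saved EIP in increasing address order; the saved EBP value is hypothetical, and the terminating NUL byte that strcpy also writes is ignored for simplicity.

```python
import struct

BUFFER_SIZE = 8
SAVED_EBP = 0xBFFFF7A8   # hypothetical saved base pointer
SAVED_EIP = 0x08801234   # return address used in the chapter's listing


def new_frame():
    """Lay out buffer[8], saved EBP, and saved EIP in increasing address order."""
    frame = bytearray(BUFFER_SIZE)
    frame += struct.pack("<I", SAVED_EBP)   # little-endian, as on x86
    frame += struct.pack("<I", SAVED_EIP)
    frame += bytearray(8)                   # spare room above the frame
    return frame


def unchecked_copy(frame, data):
    """Copy data to the start of the buffer with no length check, like strcpy."""
    frame[0:len(data)] = data


def saved_ebp(frame):
    return struct.unpack_from("<I", frame, BUFFER_SIZE)[0]


def saved_eip(frame):
    return struct.unpack_from("<I", frame, BUFFER_SIZE + 4)[0]


def eip_after_copy(count):
    """Return the saved EIP after copying `count` As into the buffer."""
    frame = new_frame()
    unchecked_copy(frame, b"A" * count)
    return saved_eip(frame)
```

In this model, `eip_after_copy(10)` still yields the original return address (only the saved EBP is damaged), while `eip_after_copy(16)` and `eip_after_copy(20)` both yield 0x41414141, matching the observation that sixteen characters are enough.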

Note

A lot of this information may seem to be things that the average penetration tester doesn't need to know. Why would you need to understand how a stack overflow actually works when you can just download the latest Metasploit update?

In many cases, a company will have patches in place for the most common vulnerabilities and you may need to uncover uncommon or previously unknown exploits to perform your testing. In addition, sometimes the exploit will be coded for a specific software version on a specific operating system and need to be tweaked a little to work in your specific scenario. Having a solid understanding of these basics is very important.

6.3.1.2. Heap-based overflows

Variables that are dynamically allocated (usually using malloc at runtime) are stored on the heap. The operating system in turn manages the amount of space allocated to the heap. In its simplest form, a heap-based overflow can be used to overwrite or corrupt other values on the heap (see Fig. 6.12).

In Fig. 6.12, we can see that the buffer currently holding “AAAA” can be overflowed in a manner similar to a stack overflow and that the potential exists for the PASSWORD variable to be overwritten. Heap-based exploitation was long considered unlikely to produce remote code execution because it did not allow an attacker to directly manipulate the value of EIP. However, developments over the past few years have changed this dramatically. Function pointers that are stored on the heap become likely targets for being overwritten, allowing the attacker to replace a function's address with the address of malicious code. Once that function is called, the attacker gains control of the execution path.
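The function-pointer case can be modeled the same way as the stack example. In this sketch the heap is a byte array holding an 8-byte name buffer followed directly by a 4-byte pointer, and a dictionary stands in for code memory; the addresses, names, and layout are all assumptions chosen for illustration.

```python
import struct

NAME_SIZE = 8
LEGIT_ADDR = 0x1000      # hypothetical address of the intended function
ATTACKER_ADDR = 0x2000   # hypothetical address of attacker-supplied code

# Stand-in for code memory: maps an "address" to the function stored there.
CODE = {
    LEGIT_ADDR: lambda: "legitimate handler",
    ATTACKER_ADDR: lambda: "attacker code",
}


def new_heap():
    """A name buffer immediately followed by a 4-byte function pointer."""
    return bytearray(NAME_SIZE) + struct.pack("<I", LEGIT_ADDR)


def store_name(heap, data):
    """Copy without a bounds check, so long input spills into the pointer."""
    heap[0:len(data)] = data


def call_handler(heap):
    """Read the pointer from the heap and call whatever it points to."""
    address = struct.unpack_from("<I", heap, NAME_SIZE)[0]
    return CODE[address]()
```

Storing `b"AAAA"` leaves the pointer alone, whereas storing eight filler bytes followed by the packed attacker address redirects the next `call_handler()` to the attacker's function.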

6.3.2. CGI and default page exploitation

In the past, web servers often shipped with a host of sample scripts and pages to demonstrate either the functionality of the server or the power of the scripting languages it supported. Many of these pages were vulnerable to abuse, and databases were soon cobbled together with lists of these pages. By simply running a basic scanner, it was fairly simple to see which CGI scripts a web server had available and exploit them.

In 1999, RFP (Rain Forest Puppy) released Whisker, a Perl-based CGI scanner that had the following design goals:

• Intelligent. Conditional scanning, reduction of false positives, directory checking

• Flexible. Easily adapted to custom configurations

• Scriptable. Easily updated by just about anyone

• Bonus feature. Intrusion detection system (IDS) evasion, virtual hosts, authentication brute forcing

Whisker was the first scanner that checked for the existence of a subdirectory before firing off thousands of requests to files within it. It also introduced RFP's sendraw() function, which was then put into a vast array of similar tools because its only dependency was the socket module that is part of the base Perl install. RFP eventually rereleased Whisker as libWhisker, an API to be used by other scanners. According to its README, libWhisker:

• Can communicate over HTTP 0.9, 1.0, and 1.1

• Can use persistent connections (keepalives)

• Has proxy support

• Has anti-IDS support

• Has Secure Sockets Layer (SSL) support

• Can receive chunked encoding

• Has nonblock/timeout support built in (platform dependent)

• Has basic and NT LAN Manager (NTLM) authentication support (both server and proxy)

libWhisker has since become the foundation for a number of tools and the basic technique for CGI scanning has remained unchanged although the methods have improved over time. We'll talk more about specific tools in the Open source tools section of this chapter.
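The directory check that Whisker introduced can be sketched as follows. This is not Whisker's code (which is Perl); `plan_scan` and the `directory_exists` callable are hypothetical names, and the callable stands in for a real HTTP probe of the directory.

```python
import posixpath


def plan_scan(paths, directory_exists):
    """Return only the paths whose parent directory was confirmed to exist."""
    checked = {}     # directory -> bool, so each directory is probed once
    to_request = []
    for path in paths:
        directory = posixpath.dirname(path) or "/"
        if directory not in checked:
            checked[directory] = directory_exists(directory)
        if checked[directory]:
            to_request.append(path)
    return to_request
```

If a database holds thousands of entries under a directory that the server does not have, a single probe of that directory replaces all of those requests.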

6.3.3. Web application assessment

Custom-built web applications quickly shot to the top of the list as targets for exploitation. They are targeted so frequently because the likelihood of a vulnerability existing in a web application is very high. Before we examine how to test for web application errors, we must gain a basic understanding of what they are and why they exist.

HTTP is essentially a stateless medium, which means that for a stateful application to be built on top of HTTP, the responsibility lies in the hands of the developers to manage the session state. Couple this with the fact that very few developers traditionally sanitize the input they receive from their users, and you can account for the majority of the bugs.

Typically, web application bugs allow one or more attacks which can be organized into one of the following classes:

• Information gathering attacks

• File system and directory traversal attacks

• Command execution attacks

• Database query injection attacks

• Cross-site scripting attacks

• Impersonation attacks (authentication and authorization)

• Parameter passing attacks

6.3.3.1. Information gathering attacks

These attacks attempt to glean information from the application that the attacker will find useful in compromising the server/service. These range from simple comments in the HTML document to verbose error messages that reveal information to the alert attacker. These sorts of flaws can be extremely difficult to detect with automated tools which, by their nature, are unable to determine the difference between useful and innocuous data. This data can be harvested by prompting error messages or by observing the server's responses.

6.3.3.2. File system and directory traversal attacks

These sorts of attacks are used when the web application is seen accessing the file system based on user-submitted input. A CGI that displayed the contents of a file called foo.txt with the URL http://victim/cgi-bin/displayFile?name=foo is clearly making a file system call based on our input. Traversal attacks would simply attempt to replace foo with another filename, possibly elsewhere on the machine. Testing for this sort of error is often done by making a request for a file that is likely to exist such as /etc/passwd and comparing the results to a request for a file that most likely will not exist such as /jkhweruihcn or similar random text.
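A minimal sketch of the flaw and one possible check follows. The base directory and function names are hypothetical, the name is used as a complete filename (the implicit .txt suffix from the example is left out), and posixpath is used so the behavior is the same on any platform.

```python
import posixpath

BASE_DIR = "/var/www/docs"   # hypothetical document directory


def naive_path(name):
    """What a vulnerable script effectively does with the name parameter."""
    return posixpath.normpath(posixpath.join(BASE_DIR, name))


def safe_path(name):
    """Resolve the name and refuse anything that escapes BASE_DIR."""
    candidate = naive_path(name)
    if not candidate.startswith(BASE_DIR + "/"):
        raise ValueError("path escapes the document directory")
    return candidate
```

Here `naive_path("../../../etc/passwd")` resolves to `/etc/passwd`, which is exactly what a traversal test hopes to see, while `safe_path` rejects the same input. Code that touches a real file system would also need to account for symbolic links, for example with `os.path.realpath`.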

6.3.3.3. Command execution attacks

These sorts of attacks can be leveraged when the web server uses user input as part of a command that is executed. If an application runs a command that includes parameters “tainted” by the user without first sanitizing them, the possibility exists for the user to leverage this sort of attack. An application that allows you to ping a host using the CGI http://victim/cgi-bin/ping?ip=10.1.1.1 is clearly running the ping command in the back-end using our input as an argument. The idea as an attacker would be to attempt to chain two commands together. A reasonable test would be to try http://victim/cgi-bin/ping?ip=10.1.1.1;whoami.

If successful, this will run the ping command and then the whoami command on the victim server. This is another simple case of a developer's failure to sanitize the input.
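The difference between the flawed and a more careful back-end can be sketched without running anything. Both function names are hypothetical, and the `-c 1` option assumes a Unix-style ping; the sketch only builds the command so that it is safe to experiment with.

```python
import ipaddress


def vulnerable_command(ip):
    """Build a shell command by pasting user input straight into it."""
    return "ping -c 1 " + ip


def safe_ping_argv(ip):
    """Validate the input as an IP address and return an argument list."""
    address = ipaddress.ip_address(ip)   # raises ValueError for anything else
    return ["ping", "-c", "1", str(address)]
```

Given `10.1.1.1;whoami`, the first function produces `ping -c 1 10.1.1.1;whoami`, which a shell would treat as two commands, while the second raises `ValueError`. An argument list like the one returned can be passed to `subprocess.run` without a shell, so no shell parsing of the input takes place.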

6.3.3.4. Database query injection attacks

Most custom web applications operate by interfacing with some sort of database behind the scenes. These applications make calls to the database using a query language such as the Structured Query Language (SQL) and a database connection. This sort of application becomes vulnerable to attack once the user is able to control the structure of the SQL query that is sent to the database server. This is another direct result of a programmer's failure to sanitize the data submitted by the end-user.

SQL introduces an additional level of vulnerability with its capability to execute multiple statements through a single command. Modern database systems introduce even more capability due to the additional functionality built into these systems in the form of stored procedures and batch commands. As we discussed in Chapter 5, database servers have the ability to perform very complex operations using locally stored scripts.

These stored procedures can be used to execute commands on the host server. SQL insertion/injection attacks attempt to add valid SQL statements to the SQL queries designed by the application developer in order to alter the application's behavior.

Imagine an application that simply selected all of the records from the database that matched a specific QUERYSTRING. This application could have a URL such as http://victim/cgi-bin/query.cgi?searchstring=BOATS which relates to a snippet of code such as the following:

SELECT * from TABLE WHERE name = searchstring

In this case, the resulting query would be:

SELECT * from TABLE WHERE name = 'BOATS'

Once more we find that an application which fails to sanitize the user's input could fall prone to having input that extends an SQL query, such as http://victim/cgi-bin/query.cgi?searchstring=BOATS'DROP TABLE. The quote supplied in the input closes the string literal early, so the text that follows it is parsed as SQL rather than as data. This would change the query sent to the database to the following:

SELECT * from TABLE WHERE name = 'BOATS'DROP TABLE'
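The effect is easy to reproduce against an in-memory SQLite database. The table name, data, and function names below are hypothetical, and the sketch uses an `OR '1'='1` payload rather than the DROP TABLE example, because Python's sqlite3 module executes only one statement per `execute` call.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (name TEXT)")
    conn.executemany("INSERT INTO items VALUES (?)", [("BOATS",), ("CARS",)])
    return conn


def search_vulnerable(conn, searchstring):
    """Paste the input into the SQL text, as the flawed application does."""
    query = "SELECT name FROM items WHERE name = '" + searchstring + "'"
    return [row[0] for row in conn.execute(query)]


def search_parameterized(conn, searchstring):
    """Pass the input as a bound parameter so it is always treated as data."""
    query = "SELECT name FROM items WHERE name = ?"
    return [row[0] for row in conn.execute(query, (searchstring,))]
```

Searching for `BOATS' OR '1'='1` through `search_vulnerable` returns every row in the table, because the injected text becomes part of the WHERE clause. The same input through `search_parameterized` returns nothing, since no item has that literal name.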

6.3.3.5. Cross-site scripting attacks

Cross-site scripting vulnerabilities have been the death of many a security mailing list, with literally thousands of these bugs found in web applications. They are also often misunderstood. During a cross-site scripting attack, an attacker uses a vulnerable application to send a piece of malicious code (usually JavaScript) to a user of the application. Because this code runs in the context of the application, it has access to objects such as the user's cookie for that site. For this reason, most cross-site scripting (XSS) attacks result in some form of cookie theft.

Testing for XSS is reasonably easy to automate, which in part explains the high number of such bugs found on a daily basis. A scanner only has to detect that a piece of script submitted to the server was returned sufficiently un-mangled by the server to raise a red flag.
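The detection step can be sketched in a few lines. The probe string and the two render functions are hypothetical stand-ins for a scanner's payload and an application's page template; a real scanner would send the probe over HTTP and inspect the actual response.

```python
import html

MARKER = "<script>alert('xss-probe')</script>"   # hypothetical probe string


def reflected_unescaped(response_body, marker=MARKER):
    """Return True when the probe comes back exactly as it was sent."""
    return marker in response_body


def render_vulnerable(user_input):
    """Echo the input into the page without any encoding."""
    return "<p>You searched for: " + user_input + "</p>"


def render_escaped(user_input):
    """Echo the input after HTML-encoding it."""
    return "<p>You searched for: " + html.escape(user_input) + "</p>"
```

The check flags the output of `render_vulnerable(MARKER)` but not that of `render_escaped(MARKER)`, where the angle brackets arrive as `&lt;` and `&gt;` and the browser displays the text instead of running it.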

6.3.3.6. Impersonation attacks

Authentication and authorization attacks aim at gaining access to resources without the correct credentials. Authentication specifically refers to how an application determines who you are, and authorization refers to the application limiting your access to only that which you should see.

Due to their exposure, web-based applications are prime candidates for authentication brute-force attempts, whether they make use of NTLM, basic authentication, or forms-based authentication. This can be easily scripted and many open source tools offer this functionality.
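To show how little scripting a basic-authentication brute force needs, here is a minimal sketch. The `try_login` callable is a hypothetical stand-in for sending a request with the given Authorization header and reporting whether the server accepted it.

```python
import base64


def basic_auth_header(username, password):
    """Build the Authorization header value used by HTTP basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return "Basic " + token.decode("ascii")


def brute_force(username, candidates, try_login):
    """Return the first password for which try_login(header) reports success."""
    for password in candidates:
        if try_login(basic_auth_header(username, password)):
            return password
    return None
```

Because the header is only a Base64 encoding of `username:password`, each guess costs a single request, which is part of why exposed login prompts attract this kind of attack.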

Authorization attacks, however, are somewhat harder to test automatically because programs find it nearly impossible to detect whether an application has made a subtle authorization error. For example, a human who logged into Internet banking and saw a million dollars in their bank account would quickly realize that some mistake had been made; an automated program can rarely make that judgment consistently across different applications.

6.3.3.7. Parameter passing attacks

A problem that consistently appears in dealing with forms and user input is that of exactly how information is passed to the system. Most web applications use HTTP forms to capture and pass this information to the system. Forms use several methods for accepting user input, from freeform text areas to radio buttons and checkboxes. It is pretty common knowledge that users have the ability to edit these form fields (even the hidden ones) prior to form submission. The trick lies not in the submission of malicious requests, but rather in how we can determine whether our altered form had any impact on the web application.

6.4. Open source tools

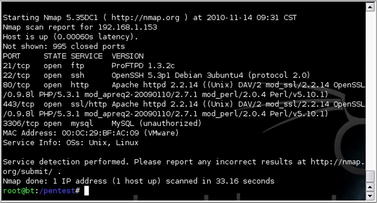

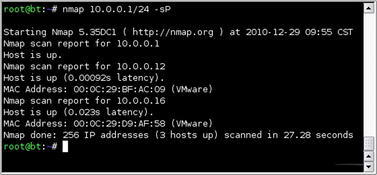

In Chapter 3, we discussed a number of tools which can be used for scanning and enumeration. The output of these tools forms the first step of penetration testing of web servers and web applications. For example, using the nmap tool can give us a great deal of information such as open ports and software versions that we can make use of when testing a target system. Fig. 6.13 shows the nmap results from scanning a target running the Damn Vulnerable Web Application (DVWA) live CD available from www.dvwa.co.uk.

Based on that scan, we have identified that the target in question is running Apache httpd 2.2.14 with a number of extensions installed. There also appears to be an FTP server, an SSH daemon, and a MySQL database server on this system. Since our focus for this chapter is web servers and web applications, our next step would be to look at what is on that web server a little more closely.

6.4.1. WAFW00F

First, let's see if there is a Web Application Firewall (WAF) in the way. A WAF is a specific type of firewall which is tailored to work with web applications. It intercepts HTTP or HTTPS traffic and imposes a set of rules that are specific to the functionality of the web application. These rules include features such as preventing SQL injection attacks or cross-site scripting. In our case, we need to know if there is a WAF that will interfere with our penetration testing.
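As a rough illustration of how the presence of a WAF might be inferred (this is not a description of how any specific detection tool works), we can compare the response to a normal request with the response to a request carrying an obvious attack string. The Response class, the list of "blocking" status codes, and the header comparison below are all assumptions made for the sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Response:
    """Minimal stand-in for an HTTP response (hypothetical helper type)."""
    status: int
    headers: dict = field(default_factory=dict)
    body: str = ""


def waf_suspected(normal, probe):
    """Return a list of reasons the probe response suggests filtering."""
    reasons = []
    # Assumed heuristic: a clean 200 turning into a refusal is suspicious.
    if normal.status == 200 and probe.status in (403, 406, 501):
        reasons.append(f"status changed from 200 to {probe.status}")
    # Assumed heuristic: headers that only appear on the attack request.
    extra = set(probe.headers) - set(normal.headers)
    if extra:
        reasons.append("new headers on probe: " + ", ".join(sorted(extra)))
    return reasons
```

An empty list means neither heuristic fired; it does not prove that no WAF is present.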

A great tool for testing for WAFs is WAFW00F, the Web Application Firewall Detection Tool. This Python script, available at http://code.google.com/p/waffit/, accepts one or more URLs as arguments and runs a series of tests to determine whether or not a WAF is running between your host and the target. To execute the tool, simply run the command:

python wafw00f.py [URL]

You can see an example of this in Fig. 6.14.

wafw00f.py USAGE

How to use:

Input fields:

[URLx] is a valid HTTP or HTTPS prefixed URL (e.g. http://faircloth.is-a-geek.com).

[Options] is one or more of the following options:

• -h – Help message

• -v – Verbose mode

• -a – Find all WAFs (versus stopping scanning at the first detected WAF)

• -r – Disable redirect requests (3xx responses)

• -t TEST – Test for a specific WAF

• -l – List all detectable WAFs

• --xmlrpc – Switch on XML–RPC interface

• --xmlrpcport=XMLRPCPORT – Specify alternate listening port

• -V – Version

Output:

Scans target URL(s) for WAFs and reports results.

6.4.2. Nikto

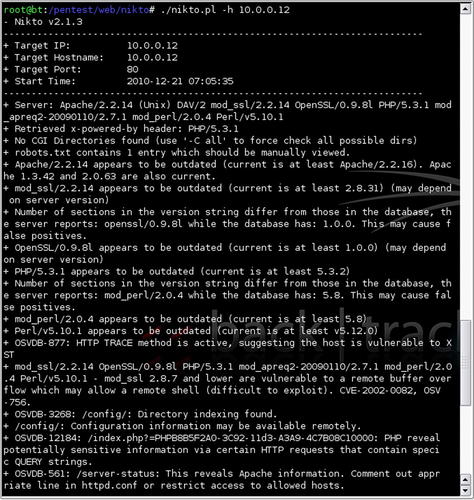

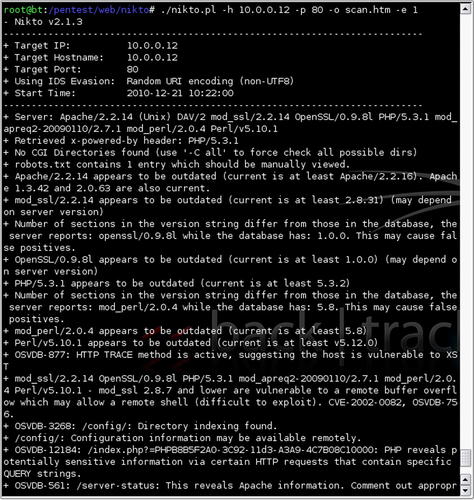

Nikto, from www.cirt.net, runs on top of LibWhisker2 and is an excellent web application scanner. The people at cirt.net maintain plugin databases, which are released under the GPL and are available on their site. Nikto has evolved over the years and has grown to have a large number of options for customizing your scans and even evading detection by an IDS. By default, Nikto scans are very “noisy,” but this behavior can be modified to perform stealthier scans.

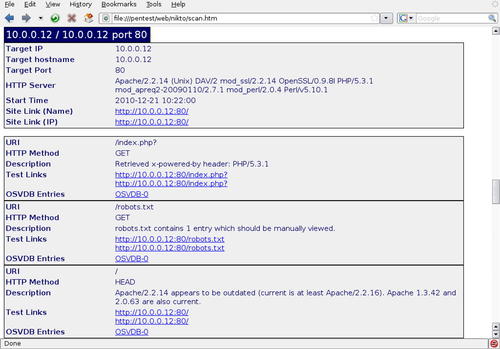

The most basic scan can be performed by using the default options along with a host IP or DNS address. The command line for this would be:

nikto.pl -h [host]

Fig. 6.15 shows the results of a sample scan.

The scan shown in Fig. 6.15 reveals a number of details about the scanned host. First, Nikto detects the server version information and does a basic scan for CGI directories and robots.txt. The version details of the web server and associated plug-ins can be used to identify whether vulnerable versions of those pieces of software exist on the web server. Additionally, Nikto scans for and identifies some default directories such as “/config/” or “/admin/” as well as default files such as “test-cgi.”
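To show how version details from a server banner can be put to use, here is a small sketch that splits a Server header into product/version pairs and compares a version against a minimum. The banner string and the (2, 2, 15) cut-off are made up for illustration and do not refer to a specific advisory.

```python
import re

# Matches tokens of the form product/version, e.g. "mod_ssl/2.2.14".
TOKEN = re.compile(r"([A-Za-z][\w.\-]*)/([\w.\-]+)")


def parse_banner(banner):
    """Split a Server header into a {product: version} dictionary."""
    return dict(TOKEN.findall(banner))


def version_tuple(version):
    """Turn '2.2.14' into (2, 2, 14); letters such as the 'l' in '0.9.8l' are dropped."""
    return tuple(int(n) for n in re.findall(r"\d+", version))


# Illustrative banner, not captured from a real scan.
banner = "Apache/2.2.14 (Unix) DAV/2 mod_ssl/2.2.14 OpenSSL/0.9.8l PHP/5.3.1"
components = parse_banner(banner)

# Hypothetical check against an assumed minimum patched release.
outdated = version_tuple(components["Apache"]) < (2, 2, 15)
```

Keep in mind that banners can be altered or suppressed by the administrator, so a version match is a lead to verify rather than proof of a vulnerability.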

Many additional options exist to tailor our scan with Nikto. For example, we can use the -p option to choose specific ports to scan or include a protocol prefix (such as https://) in the host name. A listing of all valid options can be found at http://cirt.net/nikto2-docs/options.html. Some common options are shown in the Nikto Usage sidebar of this chapter. An example of a Nikto scan using some of these options can be seen in Fig. 6.16 with the results shown in Fig. 6.17.

Nikto USAGE

How to use:

nikto.pl [Options]

Input fields:

[Options] includes one or more of the following common options:

• -H – Help

• -D V – Verbose mode

• -e [1-8,A,B] – Chooses IDS evasion techniques

• 1 – Random URI encoding (non-UTF8)

• 2 – Directory self-reference (/./)

• 3 – Premature URL ending

• 4 – Prepend long random string

• 5 – Fake parameter

• 6 – TAB as request spacer

• 7 – Change the case of the URL

• 8 – Use Windows directory separator (\)

• A – Use a carriage return (0x0d) as a request spacer

• B – Use binary value 0x0b as a request spacer

• -h [host] – Host (IP, host name, text filename)

• -o [filename] – Output results to specified filename using format appropriate to specified extension

• -P [plug-ins] – Specifies which plug-ins should be executed against the target

• -p [port] – Specify ports for scanning

• -root [directory] – Prepends this value to all tests; used when you want to scan against a specific directory on the server

• -V – version

• -update – Updates Nikto plugins and databases from cirt.net

Output:

Scans target host(s) for a variety of basic web application vulnerabilities.

6.4.3. Grendel-Scan

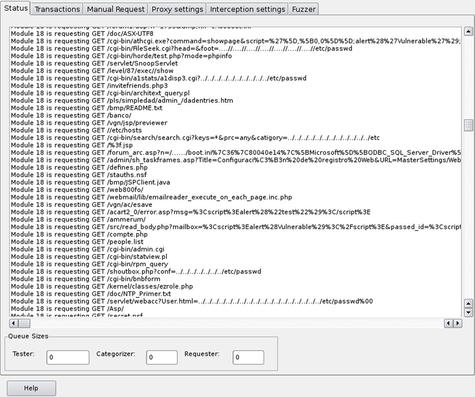

Grendel-Scan is another tool, similar to Nikto, which does automated scanning for web application vulnerabilities. It's available at http://grendel-scan.com/ and is designed as a cross-platform Java application which allows it to run on a variety of operating systems.

Running the tool presents you with a GUI allowing for a number of configuration options including URLs to scan, number of threads, report details, authentication options, and test modules. With Grendel-Scan, all of the tests are modularized so that you can pick and choose exactly which types of vulnerabilities you wish to scan for. Some examples of included modules are file enumeration, XSS, and SQL injection. While none of these are designed to actually exploit a vulnerability, they do give you a good idea of what attacks the host may be vulnerable to.

Epic Fail

With the introduction of name-based virtual hosting, it became possible for people to run multiple web sites on the same Internet Protocol (IP) address. This is facilitated by an additional Host header that is sent along with the request. This is an important factor to keep track of during an assessment, because different virtual sites on the same IP address may have completely different security postures. For example, a vulnerable CGI may sit on www.victim.com/cgi-bin/hackme.cgi. An analyst who scans http://10.10.10.10 (its IP address) or www.secure.com (the same IP address) will not discover the vulnerability. You should keep this in mind when specifying targets with scanners; otherwise, you may completely miss important vulnerabilities!
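The effect of the Host header can be sketched with Python's standard http.client module. The helper names below are hypothetical; the host names and IP address are the ones used in the example above. The idea is simply to request the same path from the same IP address once per known host name.

```python
import http.client


def vhost_targets(ip, hostnames, path="/"):
    """Pair one IP address with every host name known to live on it."""
    return [(ip, path, {"Host": name}) for name in hostnames]


def fetch(ip, path, headers, port=80, timeout=10):
    """Send a GET to the IP address using the supplied Host header."""
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        # Supplying our own Host header replaces the one http.client
        # would otherwise derive from the connection address.
        conn.request("GET", path, headers=headers)
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()


targets = vhost_targets(
    "10.10.10.10",
    ["www.victim.com", "www.secure.com", "10.10.10.10"],
    path="/cgi-bin/hackme.cgi",
)
```

Calling fetch(*target) for each entry would show whether the path exists under one virtual host but not the others.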

Another interesting feature of Grendel-Scan is its use of a built-in proxy server. By proxying all web requests, you are able to intercept specific requests and instruct the tool to make changes to the request or response. There are also options to generate manual requests or run a built-in fuzzing utility as part of your scan.

Tip

Using a proxy server when performing penetration testing is very important. It allows you to capture requests in-line and modify them if needed. Even if you're not using the proxy to modify data, you can use it to snag information on variables being passed via cookies or POST variables. Another option besides running a proxy server is to use a browser plugin to perform the same function of capturing actual data sent to and received from the web site.
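If you script any of your own requests, they can be routed through the same intercepting proxy so that they show up alongside your browser traffic. The sketch below uses Python's urllib.request; the proxy address 127.0.0.1:8080 is an assumption and should be replaced with wherever your proxy is actually listening.

```python
import urllib.request


def proxied_opener(proxy_url="http://127.0.0.1:8080"):
    """Build an opener that sends HTTP and HTTPS requests via the proxy."""
    handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(handler)


opener = proxied_opener()
# opener.open("http://target.example/") would now pass through the proxy,
# where the request can be inspected or modified (hypothetical target URL).
```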

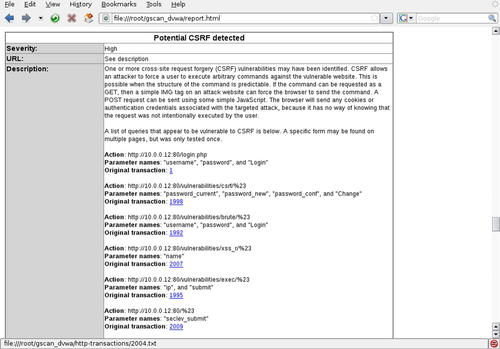

For example, if we wanted to scan a web application for a variety of vulnerabilities, we would configure Grendel-Scan with the appropriate URL(s), credentials (if known), and reporting options, and then select the appropriate modules for our scan. The scan itself can be seen in Fig. 6.18 with the results in Fig. 6.19.

As you can see from the results shown in Fig. 6.19, this particular web application appears to be vulnerable to cross-site request forgery (CSRF) attacks. Having identified this vulnerability with Grendel-Scan, we can move on to either manually exploiting the discovered vulnerability or using another tool to perform the exploitation.

Tip

One important thing to remember about Grendel-Scan is that it, like many other automated scanners, executes every script and sends every request that it can find. This creates a lot of noise in log files, similar to Nikto, but can have some other unexpected side effects as well. If there is a request/page that could potentially damage the web site, you will want to add a regex matching it to the URL blacklist before scanning. For example, when using the Damn Vulnerable Web Application (DVWA) ISO for testing, it is a good idea to blacklist pages which allow for the DVWA DB to be reinitialized.
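The blacklist idea can be sketched in a few lines of Python. The patterns below are assumptions about which pages are dangerous (a database setup page and a logout page); check the actual application for the real paths, and note that a scanner's own blacklist syntax may differ from Python's regex dialect.

```python
import re

BLACKLIST = [
    re.compile(r"/setup\.php(\?|$)"),   # assumed: page that reinitializes the DB
    re.compile(r"/logout\.php(\?|$)"),  # assumed: would end the scanner's session
]


def allowed(url):
    """Return True if no blacklist pattern matches the URL."""
    return not any(pattern.search(url) for pattern in BLACKLIST)


# Hypothetical crawl results to be filtered before scanning.
discovered = [
    "http://10.0.0.12/index.php",
    "http://10.0.0.12/setup.php",
    "http://10.0.0.12/logout.php?token=abc",
    "http://10.0.0.12/vulnerabilities/sqli/?id=1",
]
to_scan = [url for url in discovered if allowed(url)]
```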

6.4.4. fimap

fimap, available at http://code.google.com/p/fimap/, is an automated tool which scans web applications for local and remote file inclusion (LFI/RFI) bugs. It allows you to scan a URL or list of URLs for exploitable vulnerabilities and even includes the ability to mine Google for URLs to scan. It includes a variety of options which include the ability to tailor the scan, route your scan through a proxy, install plugins to the tool, or automatically exploit a discovered vulnerability.

fimap USAGE

How to use:

fimap.py [Options]

Input fields:

[Options] includes one or more of the following common options:

• -h – Help

• -u [URL] – URL to scan

• -m – Mass scan

• -l [filename] – List of URLs for mass scan

• -g – Perform Google search to find URLs

• -q – Google search query

• -H – Harvests a URL recursively for additional URLs to scan

• -w [filename] – Write URL list for mass scan

• -b – Enables blind testing where errors are not reported by the web application

• -x – Exploit vulnerabilities

• --update-def – Updates definition files

Output:

Scans target URL(s) for RFI/LFI bugs and, optionally, allows you to exploit any discovered vulnerabilities.

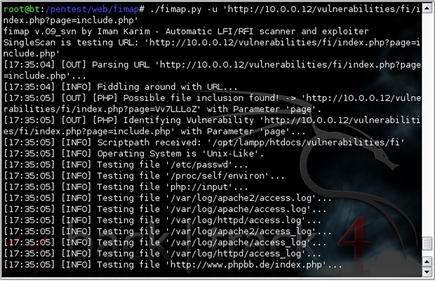

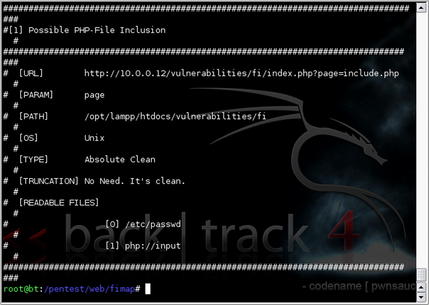

In this example, we initiated the scan shown in Fig. 6.20 and it was able to successfully identify a file inclusion bug in the web application. Fig. 6.21 shows the data which resulted from the scan. This information can be used to further exploit the vulnerable system either manually or with another tool. On the other hand, we can also use fimap's internal attack features by adding a “-x” parameter to the command line. Doing so provides us an interactive attack console which can be used to gain a remote shell on the vulnerable host. Fig. 6.22 shows an example of this attack in action.
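To make the idea of a file inclusion test concrete, the sketch below generates candidate URLs in which one query parameter is replaced by a directory traversal string. This is only an illustration of the general technique and is not fimap's algorithm; the target URL, the parameter name, and the etc/passwd target file are assumptions.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def lfi_candidates(url, param, target="etc/passwd", max_depth=4):
    """Return URLs with `param` replaced by ../ sequences of growing depth."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    candidates = []
    for depth in range(1, max_depth + 1):
        payload = "../" * depth + target
        new_query = [(k, payload if k == param else v) for k, v in query]
        # safe="/." keeps the traversal string readable instead of %2F-encoded.
        encoded = urlencode(new_query, safe="/.")
        candidates.append(urlunsplit(parts._replace(query=encoded)))
    return candidates


# Hypothetical page that includes a file named by the "page" parameter.
urls = lfi_candidates("http://target.example/index.php?page=home.php", "page")
```

A response containing recognizable content from the target file would indicate that the parameter is passed to an include without validation.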

6.4.5. SQLiX

SQLiX, available at http://www.owasp.org/index.php/Category:OWASP_SQLiX_Project, is an SQL injection scanner which can be used to test for and exploit SQL injection vulnerabilities in web applications. To use the tool, you'll need to know the URL to scan and either include the parameter(s) to attempt to exploit or use the tool's internal crawler capability to scan the target from the root URL.

SQLiX also allows you to specify injection vectors to use such as the HTTP referrer, HTTP user agent, or even a cookie. In addition, you can choose from a variety of injection methods or simply use all of the available methods in your scan. Depending on the scan results, an attack module can then be used to exploit the vulnerable application and run specific functions against it. This includes the ability to run system commands against the host in some cases.
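The different injection vectors amount to placing the same test string in different parts of the HTTP request. As a hedged sketch (the function name and the cookie name "session" are hypothetical, and this is not SQLiX's implementation), the header sets for each vector could be built like this:

```python
def injection_headers(payload, cookie_name="session"):
    """Build one set of request headers per injection vector."""
    return {
        "referer": {"Referer": payload},
        "agent": {"User-Agent": payload},
        "cookie": {"Cookie": f"{cookie_name}={payload}"},
    }


# A lone single quote is a common first probe for SQL errors.
vectors = injection_headers("'")
```

Each header set would be sent with an otherwise normal request, and the responses compared for SQL error messages or other changes in behavior.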

Fig. 6.23 shows what this tool looks like when running against a vulnerable host.

SQLiX USAGE

How to use:

SQLiX.pl [Options]

Input fields:

[Options] includes one or more of the following common options:

• -h – Help

• -url [URL] – URL to scan

• -post_content [content] – Add content to the URL and use POST instead of GET

• -file [filename] – Scan a list of URIs

• -crawl [URL] – Crawl a web site from the provided root

• -referer – Use HTTP Referrer injection vector

• -agent – Use HTTP user agent injection vector

• -cookie [cookie] – Use cookie injection vector

• -all – Uses all injection methods

• -exploit – Exploits the web application to gather DB version information

• -function [function] – Exploits the web application to run the specified function

• -v=X – Changes verbosity level where X is 0, 2, or 5 depending on the level of verbosity.

Output:

Scans target URL(s) for SQL injection bugs and, optionally, allows you to exploit any discovered vulnerabilities.


6.4.6. sqlmap

Another excellent tool for scanning for SQL injection vulnerabilities is sqlmap. sqlmap, available from http://sqlmap.sourceforge.net/, has many of the same features as SQLiX as well as some additional scanning and exploitation capabilities. The options for sqlmap are very extensive, but a basic scan can be run using the command line:

sqlmap.py -u [URL]

This will run a scan against the defined URL and determine if any SQL injection vulnerabilities can be detected.

If the web application is found to be vulnerable, sqlmap has a large array of available exploits including enumerating the database, dumping data from the database, running SQL commands of your choice, running remote commands, or even opening up a remote shell. It also has the ability to link in to Metasploit and open up a Meterpreter shell.

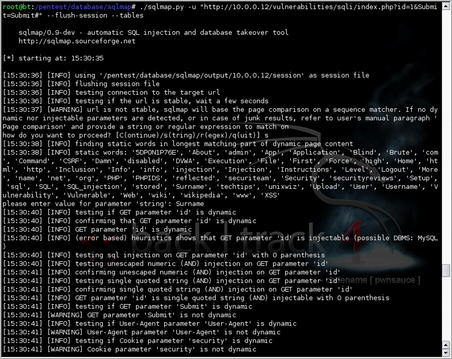

This very powerful tool can be used against most major databases and can quickly identify and exploit vulnerabilities. An example of the tool in action can be seen in Figs. 6.24 and 6.25.

6.4.7. DirBuster

DirBuster, available at http://www.owasp.org/index.php/Category:OWASP_DirBuster_Project, is a brute-force web directory scanner which can help you to index a web site. In many cases, spidering the site using a tool which follows links will be sufficient to find vulnerabilities in the site. However, what about those “hidden” directories which have no links to them? This is where tools such as DirBuster come into play.

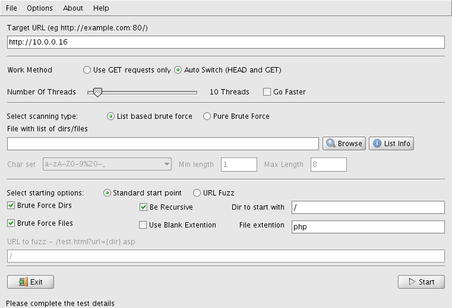

After executing the jar file, DirBuster presents you with an intuitive interface allowing you to put in details related to the site, the number of threads to use for the scan, a file containing directory names, as well as a few other details to tweak the scan. The configuration screen is shown in Fig. 6.26.

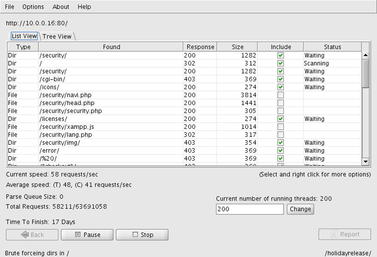

Most important is the file containing the directory names as this will directly impact the accuracy and duration of your scan. DirBuster comes with a number of files pre-populated with common directory names. These range from their “small” file with over 87,000 entries to a large list with 1,273,819 entries. With these, a majority of common “hidden” directories can be quickly located on a web site. Fig. 6.27 shows the scanning tool in operation.
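The brute-force approach itself is simple enough to sketch. The code below is an illustration of the technique and not DirBuster's implementation; the function names are hypothetical, and treating every non-404 answer as "found" is a simplifying assumption (some servers return 200 for missing pages, and urllib follows redirects automatically).

```python
from concurrent.futures import ThreadPoolExecutor
import urllib.error
import urllib.request


def http_status(url, timeout=5):
    """Return the HTTP status code for a HEAD request, or None on failure."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code          # 403, 404, 500, ... still carry information
    except (urllib.error.URLError, OSError):
        return None              # connection problem, nothing learned


def brute_force(base_url, words, probe=http_status, threads=10):
    """Try each word as a directory under base_url; keep non-404 answers."""
    urls = [f"{base_url.rstrip('/')}/{w.strip('/')}/" for w in words]
    with ThreadPoolExecutor(max_workers=threads) as pool:
        statuses = list(pool.map(probe, urls))
    return [(u, s) for u, s in zip(urls, statuses) if s is not None and s != 404]
```

The probe parameter lets the status check be swapped out, for example with a canned lookup when trying the logic without touching a network. A 403 result is often as interesting as a 200, since it confirms that a directory exists even though browsing it is denied.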

6.5. Case study: the tools in action

We've looked at a pretty wide variety of tools and techniques which can be used for performing a penetration test on a web server or web application. Let's practice using some of that knowledge against a real-world scenario.

In this case, we have a scenario where we've been asked to perform some basic penetration testing of a client's internal web servers. The client suspects that the quality of code that they've received from an offshore contracting firm may be questionable. They have provided us with a Class C-sized subnet (10.0.0.0/24) where all of their web servers are located, so we'll start from there.

First, let's scan the client network within the provided subnet and see which hosts are alive. We'll do this using Nmap as shown in Fig. 6.28.

Based on this, it appears that there are three hosts active. The first, 10.0.0.1, is our scanning machine which leaves us 10.0.0.12 and 10.0.0.16 as available targets. Let's get a little more info on those machines using Nmap. Our Nmap scan is shown in Fig. 6.29.
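For readers who want to see what a very crude version of host discovery looks like in code, the sketch below enumerates the addresses in the client's subnet and checks whether a TCP port accepts connections. This is only a stand-in for illustration; Nmap uses far more capable discovery and fingerprinting techniques, and the choice of port 80 and a 0.5 second timeout are assumptions.

```python
import ipaddress
import socket


def candidate_hosts(cidr):
    """List every usable host address in the given network."""
    return [str(host) for host in ipaddress.ip_network(cidr).hosts()]


def port_open(host, port=80, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


hosts = candidate_hosts("10.0.0.0/24")
# web_servers = [h for h in hosts if port_open(h)]  # run only against in-scope systems
```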

So it looks like these would be the web servers that we're looking for. Both are running Apache and MySQL as well as some FTP services. It also looks like one system is Windows (10.0.0.16) and one is Linux (10.0.0.12). This should give us enough information to get started.

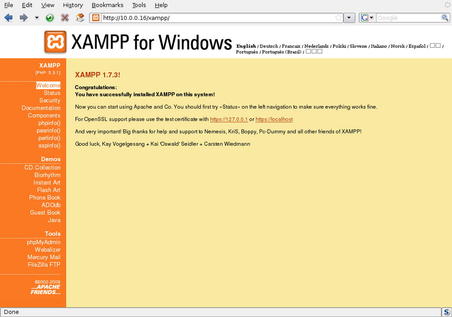

Generally the best starting point for any web application is knocking at the front door. We'll start with bringing up the web site for one of the hosts, 10.0.0.16. This is shown in Fig. 6.30.

Next, even though we're working on an internal network, it never hurts to confirm whether or not a WAF is between us and the web server. WAFW00F is the right tool for this task. The results of the scan are shown in Fig. 6.31 and it indicates that we're good to go with no WAF in place.

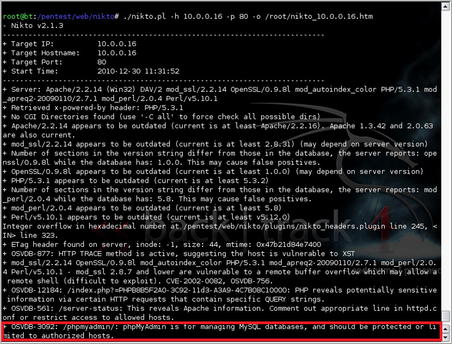

Let's go ahead and run a Nikto scan against the server also and see if it comes up with any results. The scan is shown in Fig. 6.32.

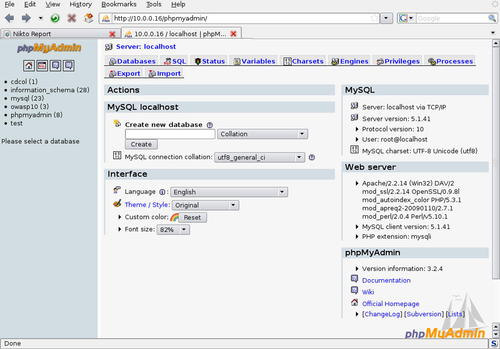

Pay special attention to the last line of the scan shown in Fig. 6.32 (the boxed section). This indicates that phpMyAdmin may be unprotected. Let's take a look at the phpmyadmin directory of the site and see what it looks like. The resulting web page is shown in Fig. 6.33.

Okay, that seems pretty vulnerable and we should absolutely talk to our clients about this issue and include it in our report. However, our client seemed concerned about code quality as well. When performing penetration testing, it's important to ensure that our focus isn't just on compromising the system, but also helping the client achieve their goals. That means we have a little more work to do.

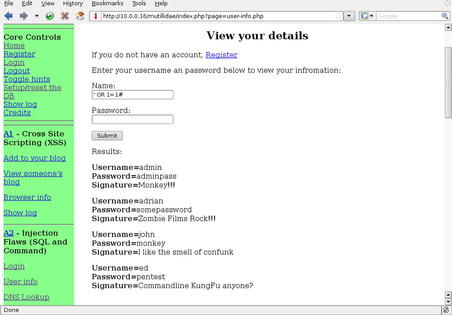

One of the directories found was “http://10.0.0.16/mutillidae/index.php?page=user-info.php” (for more information on this application, please see Chapter 10). Taking a quick look at this page shows us the form in Fig. 6.34.

This looks pretty straightforward for a login form. First, let's try a manual SQL injection check by just putting a single quote (') into the form and see what we get. The results are shown in Fig. 6.35.

In Fig. 6.35, you can see an SQL Error being presented when we submitted a single quote (') in the form. This means that the developers not only aren't validating input, they're not even handling error messages. The client was right to be worried. We can do a pretty basic test here manually without even using our tools just to further prove the point. For example, let's try putting the following string into the Name field:

' OR 1=1#

As you can see from the results in Fig. 6.36, this site is vulnerable to very basic SQL injection and is coded so poorly that it doesn't even stop at displaying one row of data. It appears to loop through all returned results from the query which makes it even more useful to us for penetration testing. We could go through a few more manual tests to determine the number of columns coming back, perform function calls to get the DB version or password hashes, etc., but we have tools for speeding that up, so let's use them.
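To see why such a simple string returns every row, it helps to reproduce the flaw in miniature. The sketch below uses Python's built-in sqlite3 module with a made-up accounts table and sample rows; it is an assumption about how the vulnerable query is built, not the application's actual code. Because SQLite does not treat # as a comment marker the way MySQL does, the payload here ends in the "-- " comment syntax instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (username TEXT, password TEXT)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?)",
    [("admin", "adminpass"), ("alice", "wonderland")],  # invented sample data
)


def lookup_vulnerable(name):
    """Vulnerable on purpose: user input is pasted straight into the SQL."""
    query = f"SELECT username, password FROM accounts WHERE username = '{name}'"
    return conn.execute(query).fetchall()


def lookup_safe(name):
    """Parameterized version: the input is treated as data, never as SQL."""
    return conn.execute(
        "SELECT username, password FROM accounts WHERE username = ?", (name,)
    ).fetchall()


payload = "' OR 1=1-- "
leaked = lookup_vulnerable(payload)   # WHERE username = '' OR 1=1 ...
nothing = lookup_safe(payload)        # no user has that literal name
```

The injected quote closes the string early, OR 1=1 makes the condition true for every row, and the comment marker discards the leftover quote. The parameterized query returns no rows for the same input.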

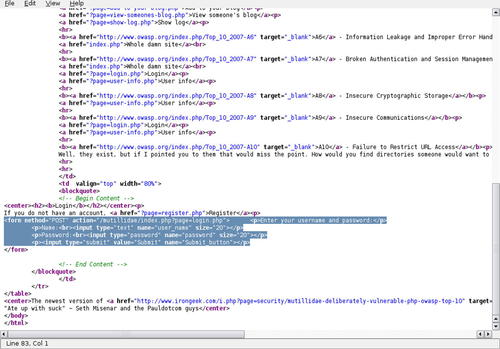

Let's look at the actual login page now since we have some credentials to use. Looking at the source code for the page as shown in Fig. 6.37, we can determine the way the authentication form is submitted. It looks like it uses POST with fields of “user_name,” “password,” and “Submit_button.” So a normal request would be a POST statement with a query of:

user_name=[name]&password=[password]&Submit_button=Submit

Let's plug that info into sqlmap and use it to enumerate the databases available to us through the site. The command line for this would be:

./sqlmap.py -u "http://10.0.0.16/mutillidae/index.php?page=login.php" --method "POST" --data "user_name=admin&password=adminpass&Submit_button=Submit" --dbs

After running through a series of tests, sqlmap successfully compromised the site using SQL injection. As you can see in Fig. 6.38, we now have a list of databases on the remote system. This should be what our client was looking for to prove the vulnerability of their outsourced code. And if they need more details, we can always start dumping data out of those databases for them.
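The POST data passed to sqlmap is simply the URL-encoded form body that a browser would send. As a minimal sketch, the same body can be assembled with Python's standard library using the field names and values from the case study; the Request object is only constructed here, not sent.

```python
from urllib.parse import urlencode
import urllib.request

# Field names as observed in the login form's source.
fields = {
    "user_name": "admin",
    "password": "adminpass",
    "Submit_button": "Submit",
}
body = urlencode(fields)

request = urllib.request.Request(
    "http://10.0.0.16/mutillidae/index.php?page=login.php",
    data=body.encode("ascii"),
    method="POST",
)
```

Building the body this way makes it easy to confirm that the string given to a tool matches what the form actually submits.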

6.6. Hands-on challenge

At this point you should have a good understanding of how penetration testing is performed for web applications. For this hands-on challenge, you'll need a system to use as a target. A great application to test is available as an ISO image at http://www.badstore.net. Badstore is a web application running under Trinux that is very poorly designed and vulnerable to a number of attacks.

Warning

Before you begin, remember to always perform testing like this in an isolated test lab! Making systems running vulnerable applications such as this available on your personal LAN risks the possibility of an intruder leveraging them to compromise your own systems. Always be very, very careful when testing using images such as this.

For this challenge, set up the Badstore system as well as your penetration testing system. Use your skills and the tools we've discussed to identify vulnerabilities within the target and exploit them. Your goal should be to access customer information from the “store.”

Summary

We covered a lot of material in this chapter associated with vulnerabilities within web applications. We started by going over the basic objectives in compromising web applications. Asking the questions of whether we can compromise the web server through daemon vulnerabilities, web server misconfigurations, or through the web application itself provides the basic premise behind our testing.

Some basic techniques that we discussed were the use of technologies such as stack overflows to compromise the web server daemon, the use of default pages left open on the web server, and the use of vulnerabilities within the web application itself. Among those, one of the most powerful is SQL injection, but others such as XSS can provide other details which can be used to compromise the remote system.

The sheer number of tools available for web application testing is growing tremendously and we only touched on a few of the most common tools available. Many more open source tools are out there to experiment with and use for your penetration testing purposes. However, those that we did discuss comprise a core toolset which can be used for most penetration testing of web applications. By utilizing your understanding of the technologies being exploited by the tools, you can use them to speed up and assist you in compromising the target.

As always, remember that a tool is only as good as the person wielding it. You must have a solid understanding of what you're using the tool to accomplish in order to be successful. While “point-and-click” testing tools exist, they are never going to be as capable or successful as a penetration tester with knowledge, experience, and the tools to leverage them.

To further reinforce the proper use of the tools, we went through a basic case study of compromising a web application. Using a number of different tools and techniques, we were able to identify the remote systems, scan them for vulnerabilities, and compromise the system using the discovered vulnerabilities. As part of the case study, we were also able to help our client achieve their goal of proving that the code they had contracted out had serious vulnerabilities.

Lastly, you were given a challenge to accomplish on your own. You are highly encouraged to try out our hands-on challenge in your test lab and play with the tools that we've talked about in this chapter. In addition, you can find many, many new tools out there to test out. Knowing the results of testing with tools that we've gone over can help you gauge the effectiveness and usefulness of any new tools that you discover on your own.

Endnote

[1] Netcraft, November 2010 web server survey (2010). <http://news.netcraft.com/archives/2010/11/05/november-2010-web-server-survey.html> [accessed 28.12.10].
