CHAPTER 8: ATTACKING WEB APPLICATIONS

The Web provides business opportunities that would have been unimaginable only a decade or so ago. Every organization can reach customers globally and provide service, support and communications for clients, employees and business partners, no matter where in the world they are or what time zone they are in. However, the Web is also open to attack 24 hours a day, seven days a week. Attackers can threaten an organization from any country, without ever setting foot in the country where the organization operates.

For the most part, hackers are lazy and gravitate towards the easiest types of attack. For that reason, we see organizations that have had a common vulnerability for several years yet remained unharmed. The attackers have not found the vulnerability, and the organization may even believe that it is secure, since no one is successfully attacking it. The reality, however, is simply that the hackers have not yet noticed the weakness. When the first security breach is finally found, then, like wolves circling wounded prey, the hackers converge on the newly discovered victim and will often expose dozens of further security vulnerabilities within a few weeks.

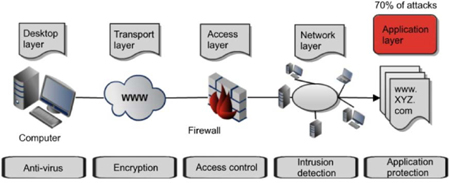

The route to an attack is often challenging for the attacker, who must overcome layers of defense and multiple obstacles intended both to make an attack difficult and to capture information about any attack in progress. As seen in the diagram below, the attacker must often know how to circumvent multiple technologies before reaching the ultimate goal. Firewalls, network partitions, anti-virus software, intrusion prevention systems (IPS) and access controls all attempt to deter the attack or prevent it from succeeding.

Figure 32: Overcoming layered defense

When an organization, such as a bank, operated only in the physical (bricks-and-mortar) world, the only way to steal money from it was through physical access. A thief had to be physically present at the location to make an unauthorized withdrawal, or had to gain access to the transportation network carrying money to or from the bank. It would have been extremely difficult for a thief in one country to rob a bank in another country without travelling to the country where the victim bank was located. Then came the Web. Banks (as just one example) realized the opportunities that online services could provide. Now, people can access their accounts at any time and from anywhere. The problem is that everyone else can reach the bank’s online systems as well, so any vulnerability in the configuration of the bank’s networks or applications, or in the security of its clients’ browsers, becomes an avenue of opportunity for an attacker. A thief in one country can now probe, evaluate and exploit vulnerabilities in a banking system anywhere in the world. Furthermore, it is questionable whether current legal frameworks and law enforcement personnel are adequate to investigate and prosecute such attacks. After all, if an attacker in country “A” routes through several networks in countries “B” and “C” to attack a bank in country “D,” where was the crime committed: at the location of the victim, or of the attacker? And will law enforcement be able to obtain the information it needs from the intermediary countries to investigate and trace the crime? These problems are some of the reasons why web applications have become one of the most frequent targets of attack. In fact, it has been estimated that as many as 70% of all attacks today are aimed at web applications.

An application is open 24 hours a day, seven days a week, and the protection in front of it is often inadequate: the firewall may be open on ports 80 and 443 to allow traffic to reach the web server; the attack may be encrypted, so that the IPS and firewall cannot read it; or the client’s system may be compromised. Moreover, an application is the conduit to all the data and business information held behind it in databases, files and servers.

The steps in attacking a web application

An attack against a web application starts in much the same way as any other attack on an organization: scanning and reconnaissance; information gathering; testing; planning the attack; and launching the attack. Starting with scanning for open ports and services, the attacker gathers as much information as possible about the web service (the operating system, the structure of its uniform resource locators (URLs), the file structure, and the business functions it allows) and then tests for obvious vulnerabilities. Once a potential vulnerability has been found, the attacker plans and executes the attack.
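Information gathering about the web service often begins with simply asking the server what it is. The sketch below, which assumes Python 3 and uses only the standard `http.client` module, sends a HEAD request and returns the `Server` response header, where many servers volunteer their product name and version. The function name `grab_server_banner` is hypothetical, and a hardened server may omit or falsify this header.

```python
import http.client
from typing import Optional


def grab_server_banner(host: str, port: int = 80, timeout: float = 5.0) -> Optional[str]:
    """Send an HTTP HEAD request for "/" and return the Server header, if any."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("HEAD", "/")
        response = conn.getresponse()
        # Many servers report product and version here, e.g. "Microsoft-IIS/7.5".
        return response.getheader("Server")
    finally:
        conn.close()
```

A tester would call it as `grab_server_banner("target.example", 80)`; a `None` result means the header was suppressed, which is itself a sign of some hardening.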

A key resource for web developers and architects is the Open Web Application Security Project (OWASP). OWASP periodically publishes a list of the ten most critical web application security risks on its website. It also provides advice and tools, such as WebScarab, to help organizations avoid those vulnerabilities.

OWASP Top 10 2010

The OWASP top 10 web application security risks for 2010 are:

1. Injection

2. Cross-site scripting (XSS)

3. Broken authentication and session management

4. Insecure direct object references

5. Cross-site request forgery (CSRF)

6. Security misconfiguration

7. Insecure cryptographic storage

8. Failure to restrict URL access

9. Insufficient transport layer protection

10. Unvalidated redirects and forwards.

www.owasp.org/index.php/Top_10_2010

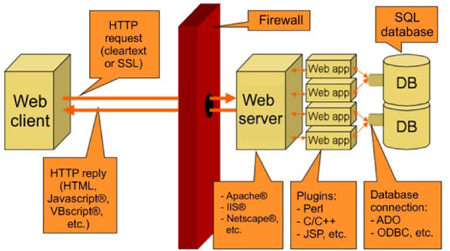

When attacking a web application, there are many attack vectors that a pen tester or attacker can use. The challenge for the administrator is to ensure that each component of the system is protected, hardened and monitored.

Figure 34: Web application components

Information gathering and discovery

The pen tester begins by learning as much as possible about the configuration and layout of the target system. This includes discovering IP addresses, open ports, operating system fingerprints and files or processes being used by the system.

As seen earlier, this may be accomplished through port scanning techniques such as TCP connect scans, half-open (SYN) scans and NULL scans. Information may also be gathered through social engineering attacks.
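Of the scan types above, the TCP connect scan is the simplest to illustrate because it uses the operating system’s normal `connect()` call: a port counts as open when the full three-way handshake completes. The following is a minimal sketch using Python’s standard `socket` module; the function name `tcp_connect_scan` is hypothetical. Half-open (SYN) and NULL scans need raw packet crafting, which is why testers normally use a dedicated tool such as Nmap for them.

```python
import socket


def tcp_connect_scan(host: str, ports, timeout: float = 0.5) -> list:
    """Return the ports on which a full TCP handshake succeeds."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success and an error number otherwise,
            # so refused or filtered ports do not raise an exception.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

Because every probe completes a real connection, a connect scan is easy for the target to log, which is one reason attackers prefer the stealthier half-open variants.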

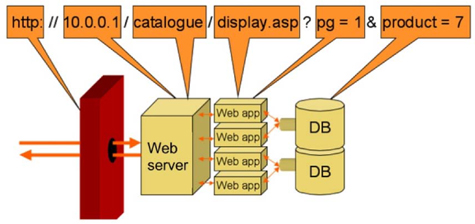

Exploiting URL mapping

The web application is reached via the site’s URL, which tells the server which resource to invoke and with what input. A URL can include the server address, the path, the application, and the parameters for a database query.
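To see the parts an attacker will examine, it helps to take a URL apart. The sketch below uses the standard `urllib.parse` functions `urlsplit` and `parse_qs`; the helper name `describe_url` and the shop URL are hypothetical examples, not taken from a real site.

```python
from urllib.parse import parse_qs, urlsplit


def describe_url(url: str) -> dict:
    """Break a URL into the parts an attacker might try to manipulate."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,          # http or https
        "host": parts.hostname,          # server address
        "path": parts.path,              # path and application/script name
        "params": parse_qs(parts.query), # parameters, possibly fed to a database query
    }


info = describe_url("https://shop.example.com/app/catalog.php?category=7&sort=price")
```

Every value in `params` arrives from the client and can be edited freely in the browser’s address bar, which is the basis of the URL manipulation attacks described next.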

Many attackers have been able to reach hidden files or other unauthorized data by manipulating the URL. For example, a company recently e-mailed out a survey, offering a prize to respondents and giving each one a URL to click to fill it in; however, the surveys were numbered sequentially and the survey number was in the URL. This allowed anyone to substitute the numbers of other people’s surveys to read or alter their survey data, and to create multiple entries in the survey for themselves.
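The survey flaw combines a guessable identifier with a missing authorization check. One common remedy, sketched here under the assumption of a simple in-memory store, is to issue random tokens with Python’s `secrets` module and still verify ownership on every request. The names `SURVEYS`, `create_survey` and `get_survey` are hypothetical.

```python
import secrets

# Hypothetical in-memory store: survey token -> survey record.
SURVEYS = {}


def create_survey(owner: str) -> str:
    # A random, unguessable token replaces the sequential survey number.
    token = secrets.token_urlsafe(16)
    SURVEYS[token] = {"owner": owner, "answers": {}}
    return token


def get_survey(token: str, current_user: str) -> dict:
    survey = SURVEYS.get(token)
    # The token alone is not enough: the survey must also belong to
    # the authenticated user making the request.
    if survey is None or survey["owner"] != current_user:
        raise PermissionError("survey not found")
    return survey
```

Random tokens make enumeration impractical, while the ownership check protects the data even if a token leaks; relying on either measure alone is weaker.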

URL login hacking

When a person logs in to a remote site, the log-in data is often included in the URL sent to the remote server. By manipulating the data in the URL, an attacker may be able to log in as another user at the same level (horizontal privilege escalation) or even raise their own access to a higher level (vertical privilege escalation).

| User | URL path |
| --- | --- |
| Lee | https://site/index.php?id=lee&isadmin=false&menu=basic |
| Jason | https://site/index.php?id=jason&isadmin=false&menu=basic |
| Wayne | https://site/index.php?id=wayne&isadmin=true&menu=full |

Log in as Lee, then change the URL to:

If the request is successful, then the application is vulnerable to horizontal privilege escalation.
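A tester can make these URL edits repeatable with a small script. The sketch below uses only Python’s standard `urllib.parse` and rewrites selected query parameters of Lee’s URL from the table: changing only `id` models a horizontal test, while changing `isadmin` and `menu` models a vertical test. The helper name `tamper` is hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tamper(url: str, **overrides: str) -> str:
    """Return a copy of url with the named query parameters replaced."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    params.update(overrides)  # existing keys keep their original order
    return urlunsplit(parts._replace(query=urlencode(params)))


lee = "https://site/index.php?id=lee&isadmin=false&menu=basic"
horizontal = tamper(lee, id="jason")                 # same level, other user
vertical = tamper(lee, isadmin="true", menu="full")  # higher privilege
```

If either request is honored, the application is trusting client-supplied parameters instead of the identity and role stored in the server-side session.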

Cross-site scripting (XSS)

Cross-site scripting is a common vulnerability of many web applications. It allows an attacker to run script in a victim’s browser as though it came from the vulnerable site. By planting a script on a web server, which then executes when the victim visits that site, the attacker can gain access to the user’s session, retrieve data, and monitor the pages the user views.
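A standard defense is to encode user-supplied data before writing it into a page, so the browser displays it as text instead of executing it. The sketch below uses Python’s standard `html.escape`; the function `render_comment` is a hypothetical example of a page fragment built from user input. HTML escaping covers element content and quoted attributes; data placed inside JavaScript or URLs needs encoding suited to that context.

```python
import html


def render_comment(comment: str) -> str:
    # Escape &, <, >, " and ' so user input cannot open a <script> tag.
    return "<p>" + html.escape(comment) + "</p>"


planted = render_comment("<script>alert(1)</script>")
```

The planted script is stored and served as the harmless text `&lt;script&gt;alert(1)&lt;/script&gt;`.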

Unicode and other injection attacks

One method attackers use is to submit data in alternate forms to bypass firewall rules or monitoring software. There are many ways to submit the same command to a system, including Unicode encodings and other non-canonical forms. For example, `%c0%af` and `%c1%9c` are overlong (invalid) UTF-8 encodings of `/` and `\`, which some older decoders nevertheless accepted. Likewise, there are many ways to write a filename or command; for example, the path `C:\inetpub\wwwroot\cgibin\..\..\..\Windows\System32\cmd.exe` canonicalizes to the real command `C:\Windows\System32\cmd.exe`, because each `..\` moves up one directory level.
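The defensive lesson is to decode input once, strictly, and to canonicalize it before applying any security check. The sketch below, assuming Python 3, shows both steps with standard-library calls: a strict UTF-8 decode rejects the overlong sequences, and `ntpath.normpath` (usable on any operating system) collapses the Windows path from the example. The function names are hypothetical.

```python
import ntpath
from urllib.parse import unquote_to_bytes


def decode_path_strict(encoded: str) -> str:
    """Percent-decode, then UTF-8 decode strictly; overlong forms raise."""
    # Python's UTF-8 decoder rejects overlong sequences such as C0 AF.
    return unquote_to_bytes(encoded).decode("utf-8")


def canonical_windows_path(path: str) -> str:
    """Collapse ".." components so checks see the real target."""
    return ntpath.normpath(path)


target = canonical_windows_path(
    r"C:\inetpub\wwwroot\cgibin\..\..\..\Windows\System32\cmd.exe"
)
```

A filter that looked for `..` or `cmd.exe` in the raw request would miss the encoded forms; checking the decoded, canonical value closes that gap.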

Internet Information Services® (IIS®)

Fortunately for attackers, there has always been a wealth of attack vectors and vulnerabilities available. A common platform for web services has been Internet Information Services (IIS) and, like all other platforms, it has been subject to many types of attack over the years. Each version improves on the security of the previous versions, and many of the vulnerabilities exploited by well-known attacks, such as the Code Red worm, have long since been patched. The attacking community usually targets the most popular systems, because that is where an attack will have the most effect. After all, where is the prestige in attacking a system that has only two users around the world?

Most of the attacks against IIS have centered on unvalidated and improper input, such as missing bounds checks that allow buffer overflows. The primary way to prevent an attack on any platform is to apply security patches as quickly as possible and to harden systems by turning off unnecessary services.

Case study: Telco

The client organization had a mix of Windows® and UNIX-based systems that they wanted to test. They wanted an in-depth and wide-ranging test – spanning the entire 15,000-employee organization and all its departments and operations. The testing team was creative, determined and not easily discouraged. The first thing they discovered was that the organization had taken extraordinary steps to filter all incoming traffic from the Internet, with thousands of rules on the firewalls and vigorous monitoring of all network traffic. The only problem was that the organization allowed Secure Shell® (SSH®) sessions and, since those were encrypted, the firewalls were ineffective at monitoring and controlling SSH traffic.

The testing team found that the administrators of the Windows® systems were very careful and always kept patches up to date and systems hardened. The Windows® administrators were paranoid about any breach or problem and locked their systems down well.

The problem, however, lay with the UNIX systems. The administrators there were not as diligent. They believed that their systems were less vulnerable than the Windows® systems, and they had become slack in their duties. In a matter of moments, the pen testers had uncovered flaws in the UNIX systems that granted them root access and unlimited control. They discovered the UNIX administrators’ passwords and “owned” those systems. Sometimes, it is good to be a little afraid and, therefore, a lot more careful. The greatest irony of all was that one of the UNIX administrators used the same password for his Windows® system, so when the UNIX systems were broken into, the Windows® systems were compromised as well.

Protecting web applications

The most basic rule for protecting a web application is to start with input validation. All input to a web server must be treated as untrusted and subject to review. Too often, an attacker is able to modify hidden fields in a form and change data they should not be able to modify, such as the price of a product in an online purchase. The application may check the quantity and other fields that a user of an e-commerce website is expected to change, but if it accepts all the other fields without verifying whether they have been altered, the organization may ship the product to the customer at a fraction of the real price. All input should be validated against a list of allowable values.
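The sketch below illustrates both points from the paragraph above: the product and quantity are checked against allow-lists, and the price is looked up on the server, so a tampered hidden `price` field has no effect. It assumes Python 3 and form values arriving as strings; `CATALOG`, `MAX_QTY` and `build_order` are hypothetical names, and `Decimal` is used to avoid floating-point rounding in money amounts.

```python
import re
from decimal import Decimal

# Hypothetical server-side catalog; prices never come from the client.
CATALOG = {"SKU-1001": Decimal("49.99"), "SKU-2002": Decimal("5.00")}
MAX_QTY = 10


def build_order(form: dict) -> dict:
    sku = form.get("sku")
    if sku not in CATALOG:  # allow-list of known products
        raise ValueError("unknown product")
    qty_raw = form.get("quantity", "")
    # Accept only one or two ASCII digits, then check the range.
    if not re.fullmatch(r"[0-9]{1,2}", qty_raw) or not 1 <= int(qty_raw) <= MAX_QTY:
        raise ValueError("invalid quantity")
    qty = int(qty_raw)
    # Any client-supplied "price" field is deliberately ignored.
    return {"sku": sku, "quantity": qty, "total": CATALOG[sku] * qty}


order = build_order({"sku": "SKU-1001", "quantity": "2", "price": "0.01"})
```

Even though the submitted form claims a price of 0.01, the order total is computed from the catalog.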

The next step in protecting a web application is to use a sound architecture. Web servers and other Internet-facing services should be located behind a firewall in an isolated DMZ (demilitarized zone) or extranet.

Error handling is also a problem in many web applications. The error messages returned when a user makes a mistake are often too verbose and can give an attacker enough information to refine and launch an attack.
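One common pattern is to log full details on the server and return only a generic message, with a reference number that support staff can match against the log. The sketch below assumes Python 3 and its standard `logging` and `uuid` modules; `handle_request`, `handler` and `request` are hypothetical stand-ins for whatever framework the application uses.

```python
import logging
import uuid

logger = logging.getLogger("webapp")


def handle_request(handler, request):
    """Run handler(request); on failure, hide internal details from the client."""
    try:
        return 200, handler(request)
    except Exception:
        incident = uuid.uuid4().hex
        # Stack trace and exception text go only to the server-side log.
        logger.exception("request failed, incident %s", incident)
        # The client sees nothing about tables, paths or software versions.
        return 500, f"An internal error occurred. Reference: {incident}"
```

A database error such as a missing-table message would then reach the log but never the attacker’s browser.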

Directory traversal

Directory traversal allows a person to move up through the directory tree and thereby access files above the directory they are meant to be confined to. An administrator may need this ability; however, users accessing a system remotely should not be able to escalate their privileges this way. The attack is usually carried out by sending the parent-directory sequence (“../”) to the system in a format, such as a Unicode encoding, that evades the firewall’s protection.
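A typical server-side check resolves the requested path fully and then confirms it still lies inside the web root. The sketch below assumes Python 3 and that the request has already been decoded once; `safe_join` is a hypothetical helper built on the standard `os.path.realpath` and `os.path.commonpath`.

```python
import os


def safe_join(root: str, user_path: str) -> str:
    """Resolve user_path under root and refuse anything that escapes it."""
    root_real = os.path.realpath(root)
    # realpath collapses ".." components and follows symbolic links.
    candidate = os.path.realpath(os.path.join(root_real, user_path))
    if os.path.commonpath([root_real, candidate]) != root_real:
        raise PermissionError(f"path escapes web root: {user_path!r}")
    return candidate
```

Checking the resolved path, not the raw string, also catches absolute paths such as `/etc/passwd`, which `os.path.join` would otherwise substitute for the root entirely.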

Cookies

Cookies are useful tools that make visiting a website more convenient for the user. A cookie stores information about the user and the session that eases the interaction between the user and the web server; however, it may also contain sensitive information that is useful for session hijacking or leads to information disclosure. An attacker may also manipulate cookies using tools such as CookieSpy.
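To limit what a stolen or inspected cookie reveals, a session cookie can hold only an opaque random identifier, with attributes that restrict where it is sent. The sketch below assumes Python 3.8 or later (for `SameSite` support) and uses the standard `http.cookies.SimpleCookie` and `secrets` modules; the function name `session_cookie_header` is hypothetical.

```python
import secrets
from http.cookies import SimpleCookie


def session_cookie_header() -> str:
    cookie = SimpleCookie()
    # Opaque random ID: no user data is stored in the cookie itself.
    cookie["session"] = secrets.token_urlsafe(32)
    morsel = cookie["session"]
    morsel["httponly"] = True       # hidden from JavaScript, limiting XSS theft
    morsel["secure"] = True         # sent only over HTTPS
    morsel["samesite"] = "Strict"   # not sent on cross-site requests
    morsel["path"] = "/"
    return cookie.output()          # a complete "Set-Cookie: ..." header line
```

Because the value is only a lookup key for server-side session data, editing it with a cookie tool yields an invalid session rather than elevated access.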

Questions

1. Attacks against e-commerce sites are usually achieved by stealing data in:

a) Storage and processing

b) Transport and input fields

c) Output or reports

d) Storage and transport.

Answer: D

2. One of the greatest challenges to the investigation of computer crime is:

a) Lack of good forensics tools

b) Obtaining evidence and gaining co-operation from international sources

c) Decrease in serious criminal activity

d) Law enforcement not being willing to pursue incident reports.

Answer: B

3. Improper data can be sneaked through a firewall by:

a) Encrypting the packets carrying malicious code

b) Disabling the firewall and altering the rule set

c) Flooding the firewall with UDP or ICMP packets

d) Poisoning the ARP table to divert malicious traffic.

Answer: A

4. One open source tool that can be used to test the security of a web application is:

a) Nmap

b) hping2

c) WebScarab

d) SAINT.

Answer: C

5. A user manipulating their user permissions to appear as another legitimate peer on the system is an example of:

a) Vertical escalation

b) Unicode-based URL manipulation

c) Horizontal escalation

d) SQL injection.

Answer: C

6. A common resource used by an attacker to gain knowledge about a target system is:

a) DNS poisoning

b) Error messages

c) Deletion of ARP tables

d) Misuse case modeling.

Answer: B