Cryptography

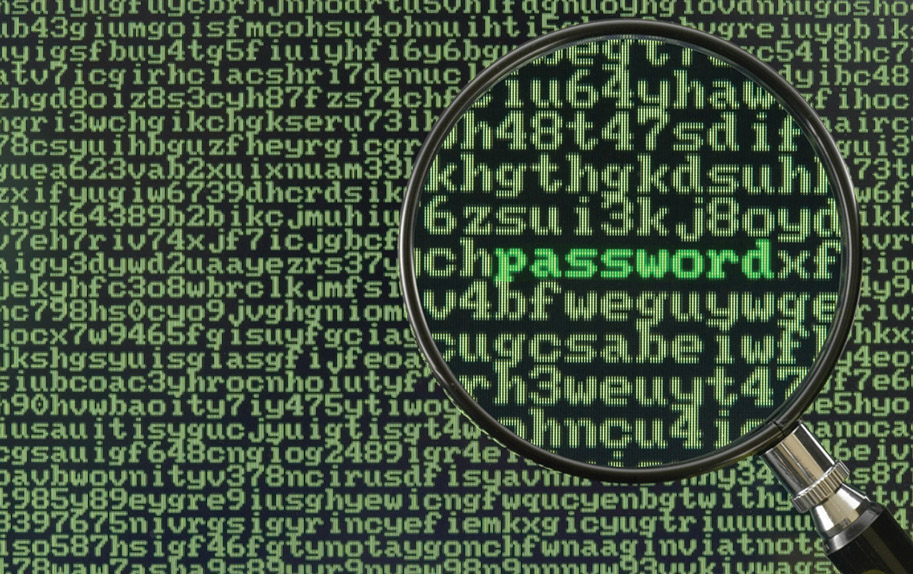

Cryptography is the science of encrypting, or hiding, information, something people have sought to do since they began using language. Although language allowed people to communicate with one another, those in power attempted to hide information by controlling who was taught to read and write. Eventually, people developed more complicated methods of concealing information, such as shifting letters around to make the text unreadable. These complicated methods are cryptographic algorithms, also known as ciphers. The word cipher comes from the Arabic word sifr, meaning empty or zero.

When material, called plaintext, needs to be protected from unauthorized interception or alteration, it is encrypted into ciphertext. This is done using an algorithm and a key, and the rise of digital computers has provided a wide array of algorithms and increasingly complex keys. The choice of a specific algorithm depends on several factors, which will be examined in this chapter.

Cryptanalysis, the process of analyzing available information in an attempt to return the encrypted message to its original form, required advances in computer technology to keep pace with complex encryption methods. The birth of the computer made it possible to easily execute the calculations required by more complex encryption algorithms. Today, computers perform almost all encryption. Computer technology has also aided cryptanalysis, allowing new methods to be developed, such as linear and differential cryptanalysis. Differential cryptanalysis compares pairs of plaintexts that differ in chosen ways with the corresponding pairs of ciphertexts, studying how the input differences propagate through the cipher to try to determine the key. Linear cryptanalysis also uses both plaintext and ciphertext, but it builds a simplified, linear approximation of the cipher and uses it to deduce what the key is likely to be in the full version of the cipher.

Cryptography in Practice

Although cryptography may be a science, it performs critical functions in enabling trust across computer networks, both in business and at home. Before we dig deep into the technical nature of cryptographic practices, an overview of current capabilities is useful. Examining cryptography from a high level reveals several relevant points.

Cryptography has been a long-running contest between advances in cryptography and advances in breaking it through analysis. With the advent of digital cryptography, the advantage has clearly swung to the side of cryptography. Modern computers have also increased the need for, and lowered the cost of, employing cryptography to secure information. In the past, the effectiveness of a cipher rested on the secrecy of the algorithm, but with modern digital cryptography, the strength is based on sheer complexity. The power of networks and modern algorithms has also been applied to automate key management.

Cryptography is much more than encryption. Cryptographic methods enable data protection, data hiding, integrity checks, nonrepudiation services, policy enforcement, key management and exchange, and many more elements used in modern computing. If you used the Web today, odds are you used cryptography without even knowing it.

Cryptography has many uses besides just enabling confidentiality in communication channels. Cryptographic functions are used in a wide range of applications, including, but not limited to, hiding data, resisting forgery, resisting unauthorized change, resisting repudiation, enforcing policy, and exchanging keys. In spite of the strengths of modern cryptography, it still fails due to other issues; known plaintext attacks, poorly protected keys, and repeated passphrases are examples of how strong cryptography is rendered weak via implementation mistakes.

Modern cryptographic algorithms are far stronger than needed given the state of cryptanalysis. The weaknesses in cryptosystems come from the system surrounding the algorithm, implementation, and operationalization details. Adi Shamir—the S in RSA—states it clearly: “Attackers do not break crypto; they bypass it.”

Over time, weaknesses and errors, as well as shortcuts, are found in algorithms. When an algorithm is reported as “broken,” the term can have many meanings. It could mean that the algorithm is of no further use, or it could mean that it has weaknesses that may someday be employed to break it, or anything between these extremes. As all methods can be broken with brute force, one question is how much effort is required, and at what cost, when compared to the value of the asset under protection.

When you’re examining the strength of a cryptosystem, it is worth examining the following levels of compromise:

1. The mechanism is no longer useful for any purpose.

2. The cost of recovering the plaintext without benefit of the key has fallen to a low level.

3. The cost has fallen to equal to or less than the value of the data or the next least-cost attack.

4. The cost has fallen to within several orders of magnitude of the cost of encryption or the value of the data.

5. The elapsed time of attack has fallen to within orders of magnitude of the life of the data, regardless of the cost thereof.

6. The cost has fallen to less than the cost of a brute force attack against the key.

7. Someone has recovered one key or one message.

This list of conditions runs from most to least serious; conditions 6 and 7 are regular occurrences in cryptographic systems and are generally not worth worrying about at all. In fact, it is not until the fourth condition that one has to have real concerns. With all this said, most organizations consider replacement between conditions 5 and 6. If any of the first three apply, the organization seriously needs to consider changing its cryptographic methods.

Fundamental Methods

Modern cryptographic operations are performed using both an algorithm and a key. The choice of algorithm depends on the type of cryptographic operation that is desired. The subsequent choice of key is then tied to the specific algorithm. Cryptographic operations include encryption (for the protection of confidentiality), hashing (for the protection of integrity), digital signatures (to manage nonrepudiation), and a bevy of specialty operations such as key exchanges.

The methods used to encrypt information are based on two separate operations: substitution and transposition. Substitution is the replacement of an item with a different item. Transposition is the changing of the order of items. Pig Latin, a child’s cipher, employs both operations in simplistic form and is thus easy to decipher. These operations can be done on words, characters, and, in the digital world, bits. What makes a system secure is the complexity of the changes employed. To make a system reversible (so you can reliably decrypt it), there needs to be a basis for the pattern of changes. Historical ciphers used relatively simple patterns, yet breaking them still required significant knowledge at the time.

Modern cryptography is built around complex mathematical functions. These functions have specific properties that make them resistant to reversing or solving by means other than the application of the algorithm and key.

Assurance is a specific term in security that means that something is not only true but can be proven to be so to some specific level of certainty.

While the mathematical specifics of these operations can be very complex and are beyond the scope of this level of material, the knowledge to properly employ them is not. Cryptographic operations are characterized by the quantity and type of data as well as the level and type of protection sought. Integrity protection operations are characterized by the level of assurance desired. Data can be characterized by its state: data in transit, data at rest, or data in use. It is also characterized by how it is used, either in block form or stream form.

Comparative Strengths and Performance of Algorithms

Several factors play a role in determining the strength of a cryptographic algorithm. First and most obvious is the size of the key and the resulting keyspace. The keyspace is defined as the set of possible key values. One method of attack is to simply try all the possible keys in a brute force attack. The second factor is work factor, a subjective measurement of the time and effort needed to perform operations. If the work factor is low, then the rate at which keys can be tested is high, meaning that larger keyspaces are needed. Work factor also plays a role in protecting systems such as password hashes, where having a higher work factor can be part of the security mechanism.

Tech Tip

Keyspace Comparisons

Because the keyspace is a numeric value, it is very important to ensure that comparisons are done using similar key types. Comparing a key made of 1 bit (two possible values) and a key made of 1 letter (26 possible values) would not yield accurate results. Fortunately, the widespread use of computers has led almost all algorithms to state their keyspace values in terms of bits.

A larger keyspace allows the use of keys of greater complexity, and thus more security, assuming the algorithm is well designed. It is easy to see how key complexity affects an algorithm when you look at some of the encryption algorithms that have been broken. The Data Encryption Standard (DES) uses a 56-bit key, allowing 2^56 (about 72,000,000,000,000,000) possible values, but it can now be broken by brute force with modern computers. Its strengthened successor, Triple DES (3DES), uses three 56-bit keys, for a total key length of 168 bits, or 2^168 (about 3.7 × 10^50) possible values. For technical reasons (the meet-in-the-middle attack), its effective key length is 112 bits, or about 5.2 × 10^33 possible values.
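The arithmetic behind these keyspace figures can be sketched in Python. The attacker speed of one trillion keys per second is a hypothetical figure chosen only to make the comparison concrete, and the helper names are illustrative.

```python
def keyspace(bits: int) -> int:
    """Number of distinct keys for an n-bit key: 2**n."""
    return 2 ** bits

def average_brute_force_years(bits: int, keys_per_second: float) -> float:
    """On average, an attacker finds the key after searching half the keyspace."""
    seconds = keyspace(bits) / 2 / keys_per_second
    return seconds / (365.25 * 24 * 3600)

RATE = 1e12  # hypothetical attacker speed: one trillion keys per second

for label, bits in [("DES", 56), ("3DES (effective)", 112), ("3DES (nominal)", 168)]:
    print(f"{label:17} 2^{bits} = {keyspace(bits):.2e} keys, "
          f"~{average_brute_force_years(bits, RATE):.2e} years at {RATE:.0e} keys/s")
```

Each added bit doubles the keyspace, which is why the jump from 56 to 112 bits moves the average search time from a fraction of a year to tens of trillions of years at this assumed rate.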

When an algorithm lists a certain number of bits as a key, it is defining the keyspace. Some algorithms have key lengths of 8192 bits or more, resulting in very large keyspaces, even by digital computer standards.

Modern computers have also challenged work factor elements, as algorithms can be computed very quickly by specialized hardware such as high-end graphics processors (GPUs). To counter this, many algorithms use repeated cycles that add work and reduce the ability to parallelize operations inside processor chips. This deliberately makes each calculation less efficient, but in a manner that still gives suitable performance to a user who has the key while greatly increasing the cost of a brute force attack that must try every key.
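The idea of repeated cycles can be illustrated with a minimal key-stretching sketch in Python. The `stretched_hash` helper is hypothetical and shown only to make the concept visible; production systems should rely on vetted constructions such as PBKDF2, bcrypt, scrypt, or Argon2.

```python
import hashlib
import time

def stretched_hash(password: bytes, salt: bytes, rounds: int) -> bytes:
    """Hypothetical key-stretching helper: `rounds` chained SHA-256 computations.

    Each round consumes the previous output, so the rounds for a single guess
    must run one after another. Real systems should use PBKDF2, bcrypt, scrypt, or Argon2.
    """
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    digest = hashlib.sha256(salt + password).digest()
    for _ in range(rounds - 1):
        digest = hashlib.sha256(digest).digest()
    return digest

# Compare the cost of one guess at a low and a high work factor.
for rounds in (1, 100_000):
    start = time.perf_counter()
    stretched_hash(b"correct horse", b"example-salt", rounds)
    print(f"{rounds:>7} rounds: {time.perf_counter() - start:.4f} s per guess")
```

A legitimate user pays the extra cost once per login, while an attacker pays it for every candidate password tried.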

Key Length

The strength of a cryptographic function typically depends on the strength of a key—a larger key has more entropy and adds more strength to an encryption. Because different algorithms use different methods with a key, direct comparison of key strength between different algorithms is not easily done. Some cryptographic systems have fixed key lengths, such as 3DES, while others, such as AES, have multiple lengths (for example, AES-128, AES-192, and AES-256).

Some algorithms offer a choice of key lengths; as a general rule, longer keys are more secure but also take longer to compute. Balancing security against usability, the following minimum key lengths are commonly recommended:

- Symmetric key lengths of at least 80–112 bits.
- Elliptic curve key lengths of at least 160–224 bits.
- RSA key lengths of at least 2048 bits. In particular, the CA/Browser Forum Extended Validation (EV) Guidelines require a minimum key length of 2048 bits.
- DSA key lengths of at least 2048 bits.

Cryptographic Objectives

Cryptographic methods exist for a purpose: to protect the integrity and confidentiality of data. Many associated elements contribute to this protection to enable a system-wide solution. Elements such as perfect forward secrecy, nonrepudiation, and others enable successful cryptographic implementations.

Diffusion

Diffusion is the principle that the statistical relationship between plaintext and ciphertext should be dispersed so thoroughly that the structure of one reveals nothing about the other. In plain terms, a change in one character of plaintext should result in multiple changes in the ciphertext, in such a way that changes in the ciphertext do not reveal information about the structure of the plaintext.
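The effect of diffusion, often called the avalanche effect, can be observed directly. Because Python's standard library has no block cipher, this sketch uses SHA-256 from `hashlib` as a stand-in; hash functions are designed with the same goal that a one-character input change should flip roughly half of the output bits.

```python
import hashlib

def bit_difference(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length byte strings differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

h1 = hashlib.sha256(b"attack at dawn").digest()
h2 = hashlib.sha256(b"attack at dawm").digest()  # only the last character changed
print(bit_difference(h1, h2), "of 256 output bits changed")
```

A result near 128 means the change spread across the whole output instead of staying local to one position.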

Confusion

Confusion is a principle that affects the randomness of an output. The concept is operationalized by ensuring that each character of ciphertext depends on several parts of the key. Confusion places a constraint on the relationship between the ciphertext and the key employed, forcing an effect that increases entropy.

Obfuscation

Obfuscation is the masking of an item to render it unreadable, yet still usable. Take a source code example: if the source code is written in a manner that it is easily understood, then its functions can be easily recognized and copied. Code obfuscation is the process of making code unreadable by deliberately adding complexity when the code is created. This “mangling” of code makes it difficult to understand, copy, fix, or maintain. Using cryptographic functions to obfuscate materials is more secure in that it is not reversible without the secret element, but this also renders the code unusable until it is decoded.

Program obfuscation can be achieved in many forms, from tangled C functions with recursion and other indirect references that make reverse engineering difficult, to proper encryption of secret elements. Storing secret elements directly in source code does not really obfuscate them because numerous methods can be used to find hard-coded secrets in code. Proper obfuscation requires applying a cryptographic function that produces a nonreversible result. An example is the storing of password hashes: if the original password is hashed with the addition of a salt, reversing the stored hash is practically not feasible, making the key information, the password, obfuscated.

Perfect Forward Secrecy

Perfect forward secrecy (PFS) is a property of a public key system in which a key derived from another key is not compromised even if the originating key is compromised in the future. This is especially important in session key generation: if perfect forward secrecy were not in place, a later compromise of the long-term key would allow past sessions that had been recorded to be decrypted.

Perfect forward secrecy gives assurance that session keys remain secure even if the long-term key is later compromised.
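A common way to achieve perfect forward secrecy is ephemeral Diffie-Hellman key agreement, in which each session uses freshly generated key pairs that are discarded afterward (TLS, for example, uses ephemeral DHE/ECDHE, with the long-term key used only to authenticate the exchange). The Python sketch below is a toy: the prime and base are assumptions chosen for readability, not vetted parameters, and must not be used for real security.

```python
import secrets

# Toy group for illustration only: real protocols use vetted groups or elliptic curves.
P = 2**127 - 1   # a Mersenne prime
G = 3            # assumed base; not checked to be a generator

def new_ephemeral_keypair() -> tuple[int, int]:
    """Fresh private exponent and matching public value, generated per session."""
    private = secrets.randbelow(P - 3) + 2  # 2 .. P-2
    return private, pow(G, private, P)

def run_session() -> int:
    """Both parties derive the same session secret from fresh ephemeral keys."""
    a_priv, a_pub = new_ephemeral_keypair()
    b_priv, b_pub = new_ephemeral_keypair()
    alice_secret = pow(b_pub, a_priv, P)
    bob_secret = pow(a_pub, b_priv, P)
    if alice_secret != bob_secret:
        raise RuntimeError("key agreement failed")
    # a_priv and b_priv are discarded when this function returns; nothing long-term
    # remains that could later re-derive this session's secret.
    return alice_secret

first, second = run_session(), run_session()
print(first != second)  # each session gets an independent secret
```

Because no long-lived private value feeds into the session secret, stealing a server's long-term key later does not unlock recorded sessions.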

Security Through Obscurity

Security via obscurity alone has never been a valid method of protecting secrets. This has been known for centuries. However, this does not mean obscurity has no role in security. Naming servers after a progressive set of objects, like Greek gods, planets, and so on, provides an attacker an easier path once they start obtaining names. Obscurity has a role, making it hard for an attacker to easily guess critical pieces of information, but it should not be relied upon as a singular method of protection.

Historical Perspectives

Cryptography is as old as secrets. Humans have been designing secret communication systems for as long as they’ve needed to keep communication private. The Spartans of ancient Greece would write on a ribbon wrapped around a cylinder with a specific diameter (called a scytale). When the ribbon was unwrapped, it revealed a strange string of letters. The message could be read only when the ribbon was wrapped around a cylinder of the same diameter. This is an example of a transposition cipher, where the same letters are used but the order is changed. In all these cipher systems, the unencrypted input text is known as plaintext and the encrypted output is known as ciphertext.
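The scytale's transposition can be modeled as writing the message in rows and reading it off by columns. This Python sketch assumes the rod is described by how many letters fit around it once (`circumference`), which stands in for the diameter as the key; the padding character `X` is also an assumption.

```python
def scytale_encrypt(plaintext: str, circumference: int, pad: str = "X") -> str:
    """Write the message in rows along the rod, then read it off the unwound strip.

    `circumference` is the number of letters that fit around the rod once; it is
    fixed by the rod's diameter and acts as the key.
    """
    turns = -(-len(plaintext) // circumference)       # ceiling division: letters per row
    padded = plaintext.ljust(circumference * turns, pad)
    # Each turn of the strip carries one letter from every row, i.e., one column.
    return "".join(padded[i::turns] for i in range(turns))

def scytale_decrypt(ciphertext: str, circumference: int) -> str:
    """Rewrap the strip on a rod of the same circumference and read the rows."""
    return "".join(ciphertext[r::circumference] for r in range(circumference))

print(scytale_encrypt("HELLOWORLD", 2))   # HWEOLRLLOD
print(scytale_decrypt("HWEOLRLLOD", 2))   # HELLOWORLD
```

The letters are unchanged; only their order moves, which is exactly what makes this a transposition rather than a substitution.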

Algorithms

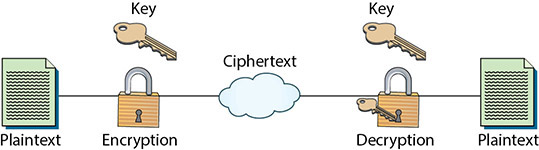

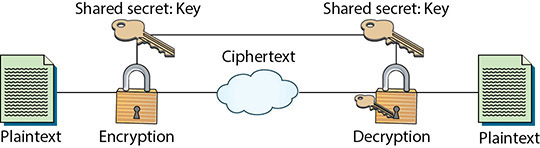

Every current encryption scheme is based on an algorithm, a step-by-step computational procedure for solving a problem in a finite number of steps. A cryptographic algorithm, what is commonly called an encryption algorithm or cipher, is made up of mathematical steps for encrypting and decrypting information. The accompanying illustration shows a diagram of the encryption and decryption process and its parts. Three types of encryption algorithms are commonly used: hashing, symmetric, and asymmetric. Hashing is a very special type of encryption algorithm that takes an input and mathematically reduces it to a fixed-length value known as a hash, which is designed to be unique to that input and is not reversible. Symmetric algorithms are also known as shared secret algorithms, as the same key is used for encryption and decryption. Finally, asymmetric algorithms use a very different process by employing two keys, a public key and a private key, making up what is known as a key pair.

Tech Tip

XOR

A popular function in cryptography is eXclusive OR (XOR), a bitwise function applied to data. XOR is the key element of most stream ciphers: it is the operation that combines the keystream with the data to produce the ciphertext. When you apply a key to data using XOR, a second application of the same key undoes the first operation. This makes for speedy encryption and decryption but also makes the system totally dependent on the secrecy of the key.
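The self-inverting property of XOR is easy to demonstrate in Python. The key bytes below are arbitrary values chosen for illustration, standing in for a keystream.

```python
def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the corresponding key byte (key must be at least as long)."""
    if len(key) < len(data):
        raise ValueError("key must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"HELLO"
key = bytes([0x1F, 0xA2, 0x33, 0x07, 0xC4])   # hypothetical keystream bytes
ciphertext = xor_bytes(message, key)
print(ciphertext.hex())
print(xor_bytes(ciphertext, key))              # b'HELLO': the second XOR undoes the first
```

The same function encrypts and decrypts, which is why anyone holding the key, and only the key, can recover the data.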

A key is a special piece of data used in both the encryption and decryption processes. The algorithms stay the same in every implementation, but a different key is used for each, which ensures that even if someone knows the algorithm you use to protect your data, they cannot break your security. The key in cryptography is analogous to a key in a common door lock, as shown in Figure 5.1.

• Figure 5.1 While everyone knows how to use a knob to open a door, without the key to unlock the deadbolt, that knowledge is useless.

Comparing the strength of two different algorithms can be mathematically very challenging; fortunately for the layperson, there is a rough guide. Most current algorithms are listed with their key size in bits, e.g., AES-256. Unless a specific algorithm has been shown to be flawed, in general, the greater number of bits will yield a more secure system. This works well for a given algorithm but is meaningless for comparing different algorithms. The good news is that most modern cryptography is more than strong enough for all but specialized uses, and for those uses experts can determine appropriate algorithms and key lengths to provide the necessary protections.

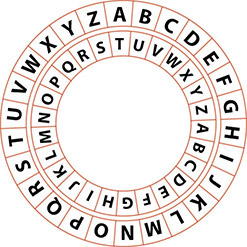

Substitution Ciphers

The Romans typically used a different method known as a shift cipher, in which each letter of the alphabet is replaced by the letter a set number of places further along. A common modern-day example of this is the ROT13 cipher, in which every letter is rotated 13 positions in the alphabet: n is written instead of a, o instead of b, and so on. These types of ciphers are commonly encoded on an alphabet wheel, as shown in Figure 5.2.

• Figure 5.2 Any shift cipher can easily be encoded and decoded on a wheel of two pieces of paper with the alphabet set as a ring; by moving one circle the specified number in the shift, you can translate the characters.

These ciphers were simple to use and also simple to break. Because hiding information was still important, more advanced transposition and substitution ciphers were required. As systems and technology became more complex, ciphers were frequently automated by some mechanical or electromechanical device. A famous example of a relatively modern encryption machine is the German Enigma machine from World War II (see Figure 5.3). This machine used a complex series of substitutions to perform encryption, and, interestingly enough, it gave rise to extensive research in computers.

• Figure 5.3 One of the surviving German Enigma machines

Caesar’s cipher uses an algorithm and a key: the algorithm specifies that you offset the alphabet either to the right (forward) or to the left (backward), and the key specifies how many letters the offset should be. For example, if the algorithm specifies offsetting the alphabet to the right, and the key is 3, the cipher substitutes an alphabetic letter three to the right for the real letter, so d is used to represent a, f represents c, and so on. In this example, both the algorithm and key are simple, allowing for easy cryptanalysis of the cipher and easy recovery of the plaintext message.
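The Caesar cipher's split between algorithm (shift right) and key (how far) can be sketched in Python; the function names are illustrative.

```python
import string

def shift_encrypt(plaintext: str, key: int) -> str:
    """Shift each letter `key` places to the right, wrapping from z back to a."""
    result = []
    for ch in plaintext:
        if ch in string.ascii_lowercase:
            result.append(chr((ord(ch) - ord("a") + key) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            result.append(chr((ord(ch) - ord("A") + key) % 26 + ord("A")))
        else:
            result.append(ch)  # leave spaces and punctuation unchanged
    return "".join(result)

def shift_decrypt(ciphertext: str, key: int) -> str:
    """Decrypt by shifting the same distance in the opposite direction."""
    return shift_encrypt(ciphertext, -key)

print(shift_encrypt("attack at dawn", 3))   # dwwdfn dw gdzq
print(shift_decrypt("dwwdfn dw gdzq", 3))   # attack at dawn
```

With only 25 useful keys, an attacker can simply call `shift_decrypt` with every key from 1 to 25 and read off the one candidate that makes sense.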

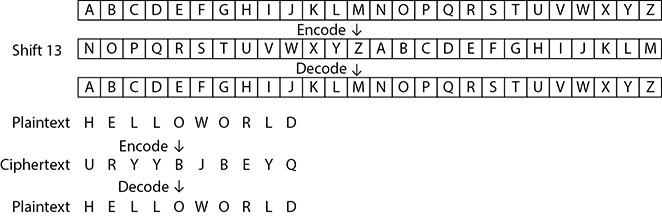

Try This!

ROT13

ROT13 is a special case of a Caesar substitution cipher where each character is replaced by a character 13 places later in the alphabet. Because the basic Latin alphabet has 26 letters, ROT13 has the property of undoing itself when applied twice. The illustration demonstrates ROT13 encoding of “HelloWorld.” The top two rows show encoding, while the bottom two show decoding replacement.
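Python's standard library happens to ship a `rot13` text codec, which makes the self-undoing property easy to check.

```python
import codecs

def rot13(text: str) -> str:
    """ROT13 via the standard library's built-in 'rot13' text codec."""
    return codecs.encode(text, "rot13")

encoded = rot13("HelloWorld")
print(encoded)          # UryybJbeyq
print(rot13(encoded))   # HelloWorld: applying ROT13 twice restores the input
```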

The ease with which shift ciphers were broken led to the development of substitution ciphers, which were popular in Elizabethan England (roughly the second half of the 16th century) and more complex than shift ciphers. Substitution ciphers work on the principle of substituting a different letter for every letter: a becomes g, b becomes d, and so on. Because any letter can stand for any other, the key is an arbitrary rearrangement of the alphabet, giving 26! (about 4 × 10^26) possible keys instead of the 25 useful shifts of a standard shift cipher. Simple analysis of the cipher could be performed to retrieve the key, however. By looking for common letters such as e and patterns found in words such as ing, you can determine which cipher letter corresponds to which plaintext letter. The examination of ciphertext for frequent letters is known as frequency analysis. Making educated guesses about words will eventually allow you to determine the system’s key value (see Figure 5.4).

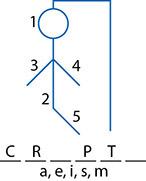

• Figure 5.4 Making educated guesses is much like playing hangman—correct guesses can lead to more or all of the key being revealed.
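The first step of frequency analysis, counting cipher letters, can be sketched in Python. The `first_guess` helper is hypothetical: it only encodes the starting hypothesis that the most common cipher letter stands for e, which a human (or further code) would then refine using word patterns.

```python
from collections import Counter

def letter_frequencies(ciphertext: str) -> list[tuple[str, int]]:
    """Count letters (case-insensitive) and list them from most to least common."""
    letters = [ch.lower() for ch in ciphertext if ch.isalpha()]
    return Counter(letters).most_common()

def first_guess(ciphertext: str) -> dict[str, str]:
    """Hypothetical starting point: assume the commonest cipher letter stands for 'e'."""
    ranked = letter_frequencies(ciphertext)
    return {ranked[0][0]: "e"} if ranked else {}
```

On short messages the counts are noisy, so the top letter is only a lead; the longer the ciphertext, the more closely its frequencies track those of the underlying language.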

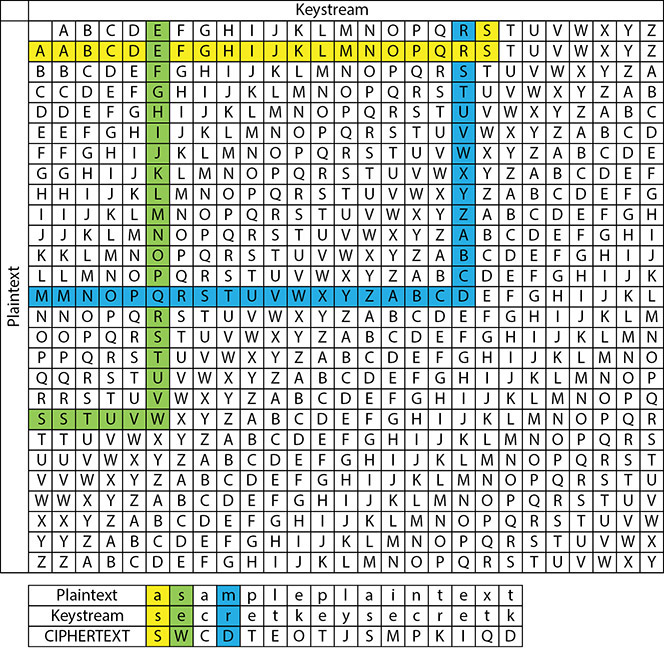

To correct this problem, more complexity had to be added to the system. The Vigenère cipher works as a polyalphabetic substitution cipher that depends on a password. This is done by setting up a substitution table like the one in Figure 5.5.

• Figure 5.5 Polyalphabetic substitution cipher

Then the password is matched up to the text it is meant to encipher. If the password is not long enough, the password is repeated until one character of the password is matched up with each character of the plaintext. For example, if the plaintext is A Sample plaintext and the password is secretkey, Figure 5.5 illustrates the encryption and decryption process.

The cipher letter is determined by use of the grid, matching the plaintext character’s row with the password character’s column, resulting in a single ciphertext character where the two meet. Consider the first letters, A (from plaintext—rows) and S (from keystream—columns): when plugged into the grid they output a ciphertext character of S. This is shown in yellow on Figure 5.5. The second letter is highlighted in green, and the fourth letter in blue. This process is repeated for every letter of the message. Once the rest of the letters are processed, the output is SWCDTEOTJSMPKIQD.

In this example, the key in the encryption system is the password. The example also illustrates that a simple algorithm can still provide meaningful security: someone who knows about the table can determine how the encryption was performed, but without the key they still cannot decrypt the message. The example also shows what happens with a bad password, one containing many occurrences of a letter such as A: because A shifts a letter by zero places, every plaintext letter under it passes through unchanged, revealing a lot of the message. Try using the grid and the keystream “AB” and see what happens.
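The table lookup described above is equivalent to adding letter positions modulo 26, which this Python sketch uses. It assumes, as the worked example does, that spaces and other non-letters are dropped before encryption.

```python
import string

def vigenere(text: str, password: str, decrypt: bool = False) -> str:
    """Vigenère over the letters A-Z. Non-letters are dropped, as in the example,
    and the password is repeated until it covers the whole message."""
    letters = [c for c in text.upper() if c in string.ascii_uppercase]
    shifts = [ord(c) - ord("A") for c in password.upper() if c in string.ascii_uppercase]
    if not shifts:
        raise ValueError("password must contain at least one letter A-Z")
    sign = -1 if decrypt else 1
    out = []
    for i, c in enumerate(letters):
        # Encrypting adds the password letter's position; decrypting subtracts it.
        shift = sign * shifts[i % len(shifts)]
        out.append(chr((ord(c) - ord("A") + shift) % 26 + ord("A")))
    return "".join(out)

ciphertext = vigenere("A Sample plaintext", "secretkey")
print(ciphertext)                                       # SWCDTEOTJSMPKIQD
print(vigenere(ciphertext, "secretkey", decrypt=True))  # ASAMPLEPLAINTEXT
print(vigenere("HELLO", "AB"))                          # HFLMO: letters under 'A' are unchanged
```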

Try This!

Vigenère Cipher

Make a simple message that’s about two sentences long and then choose two passwords: one that’s short and one that’s long. Then, using the substitution table presented in this section, perform simple encryption on the message. Compare the two ciphertexts; since you have the plaintext and the ciphertext, you should be able to see a pattern of matching characters. Knowing the algorithm used, see if you can determine the key used to encrypt the message.

The more complex the key, the greater the security of the system. The Vigenère cipher system and systems like it make the algorithms rather simple but the key rather complex, with the best keys comprising very long and very random data. Key complexity is achieved by giving the key a large number of possible values.

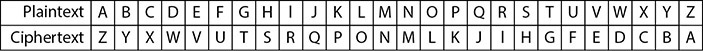

Atbash Cipher

The Atbash cipher is a specific form of a monoalphabetic substitution cipher. The cipher is formed by taking the characters of the alphabet and mapping to them in reverse order. The first letter becomes the last letter, the second letter becomes the second-to-last letter, and so on. Historically, the Atbash cipher traces back to the time of the Bible and the Hebrew language. Because of its simple form, it can be used with any language or character set. Figure 5.6 shows an Atbash cipher for the standard ASCII character set of letters.

• Figure 5.6 Atbash cipher
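Because Atbash is a fixed reversal of the alphabet, Python's `str.maketrans` can express the whole cipher as one translation table. This sketch covers only the English letters A-Z and a-z and passes everything else through.

```python
import string

# Map a->z, b->y, ... (and likewise for uppercase); other characters pass through.
_ATBASH = str.maketrans(
    string.ascii_lowercase + string.ascii_uppercase,
    string.ascii_lowercase[::-1] + string.ascii_uppercase[::-1],
)

def atbash(text: str) -> str:
    """Apply the Atbash cipher; the same function encrypts and decrypts."""
    return text.translate(_ATBASH)

print(atbash("Hello"))          # Svool
print(atbash(atbash("Hello")))  # Hello
```

There is no key at all, so anyone who recognizes the cipher can reverse it; its security rests entirely on obscurity.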

One-Time Pads

One-time pads are an interesting form of encryption in that they are, in theory, perfect and unbreakable. The key is at least as long as the material being encrypted, and the plaintext is XORed against the key to produce the ciphertext. What makes the one-time pad “perfect” is the size of the key: if you try every key in the keyspace, you will decrypt every possible message of the same length as the original, with no way to discriminate which one is correct. This makes a one-time pad unbreakable even by brute force methods, provided that the key is truly random and never reused. However, the need to generate, distribute, and protect a unique key as long as every message makes a one-time pad impractical for any mass use.

One-time pads are examples of perfect ciphers from a mathematical point of view. But when put into practice, the implementation creates weaknesses that result in less-than-perfect security. This is an important reminder that perfect ciphers from a mathematical point of view do not create perfect security in practice because of the limitations associated with the implementation.
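The one-time pad, and the reason brute force cannot break it, can be sketched in Python using the `secrets` module as the source of random pad bytes. The messages are arbitrary examples.

```python
import secrets

def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """Draw a fresh random pad exactly as long as the message and XOR it in."""
    pad = secrets.token_bytes(len(plaintext))
    return bytes(p ^ k for p, k in zip(plaintext, pad)), pad

def otp_decrypt(ciphertext: bytes, pad: bytes) -> bytes:
    """XOR with the same pad reverses the encryption."""
    return bytes(c ^ k for c, k in zip(ciphertext, pad))

ciphertext, pad = otp_encrypt(b"ATTACK AT DAWN")
print(otp_decrypt(ciphertext, pad))        # b'ATTACK AT DAWN'

# Why brute force fails: for any same-length candidate there is a pad that
# "decrypts" this ciphertext to it, so every guess looks equally valid.
decoy = b"RETREAT AT TEN"
decoy_pad = bytes(c ^ d for c, d in zip(ciphertext, decoy))
print(otp_decrypt(ciphertext, decoy_pad))  # b'RETREAT AT TEN'
```

The practical weaknesses live outside this code: the pad must be shared securely in advance, be as long as all traffic, and never be used twice.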

Key Management

Because the security of the algorithms relies on the key, key management is of critical concern. Key management includes anything having to do with the exchange, storage, safeguarding, and revocation of keys. It is most commonly associated with asymmetric encryption because asymmetric encryption uses both public and private keys. To be used properly for authentication, a key must be current and verified. If you have an old or compromised key, you need a way to check to see that the key has been revoked.

Key management is also important for symmetric encryption, because symmetric encryption relies on both parties having the same key for the algorithm to work. Since these parties are usually physically separate, key management is critical to ensure keys are shared and exchanged securely. They must also be securely stored to provide appropriate confidentiality of the encrypted information. There are many different approaches to the secure storage of keys, such as putting them on a USB flash drive or smart card. While keys can be stored in many different ways, new PC hardware often includes the Trusted Platform Module (TPM), which provides a hardware-based key storage location that is used by many applications. (More specific information about the management of keys is provided later in this chapter and in Chapters 6 and 7.)

Random Numbers

Many digital cryptographic algorithms have a need for a random number to act as a seed and provide true randomness. One of the strengths of computers is that they can do a task over and over again in the exact same manner—no noise or randomness. This is great for most tasks, but in generating a random sequence of values, it presents challenges. Software libraries have pseudo-random number generators—functions that produce a series of numbers that statistically appear random. But these random number generators are deterministic in that, given the sequence, you can calculate future values. This makes them inappropriate for use in cryptographic situations.
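The difference between a general-purpose pseudo-random generator and a cryptographic one can be shown in Python: the `random` module (a Mersenne Twister) replays the same sequence from the same seed, while the `secrets` module draws from the operating system's cryptographically secure source.

```python
import random
import secrets

# A seeded general-purpose PRNG is deterministic: the same seed replays the same sequence,
# so anyone who learns the seed or internal state can predict every "random" value.
gen1 = random.Random(2024)
gen2 = random.Random(2024)
print([gen1.randrange(256) for _ in range(5)] == [gen2.randrange(256) for _ in range(5)])  # True

# For keys, salts, IVs, and nonces, use the operating system's CSPRNG via `secrets`.
key = secrets.token_bytes(32)   # 256 bits
nonce = secrets.token_hex(12)   # 12 random bytes as 24 hex characters
print(len(key), len(nonce))     # 32 24
```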

Tech Tip

Randomness Issues

The importance of proper random number generation in cryptosystems cannot be overstated. Reports by the Guardian and the New York Times assert that the U.S. National Security Agency (NSA) put a backdoor into one of the cryptographically secure pseudorandom number generator (CSPRNG) algorithms described in NIST SP 800-90A, specifically the Dual_EC_DRBG algorithm. Further allegations are that the NSA paid RSA $10 million to use the resulting standard in its product line.

The level or amount of randomness is referred to as entropy. Entropy is the measure of uncertainty associated with a series of values. Perfect entropy equates to complete randomness, such that given any string of bits, there is no computation to improve guessing the next bit in the sequence. A simple “measure” of entropy is in bits, where the bits are the power of 2 that represents the number of choices. So if there are 2048 options, then this would represent 11 bits of entropy. In this fashion, one can calculate the entropy of passwords and measure how “hard they are to guess.”
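The entropy arithmetic in this paragraph is a base-2 logarithm, sketched below in Python. The password estimate assumes every character is chosen uniformly at random, so it is an upper bound; human-chosen passwords usually have far less entropy.

```python
import math

def entropy_bits(choices: int) -> float:
    """Entropy, in bits, of one choice among `choices` equally likely options."""
    return math.log2(choices)

def password_entropy(length: int, alphabet_size: int) -> float:
    """Upper bound for a password whose characters are chosen uniformly at random."""
    return length * entropy_bits(alphabet_size)

print(entropy_bits(2048))                 # 11.0, matching the 2048-option example
print(round(password_entropy(8, 26), 1))  # 37.6 bits for 8 random lowercase letters
```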

To resolve the problem of appropriate randomness, there are systems to create cryptographic random numbers. The level of complexity of the system is dependent on the level of pure randomness needed. For some functions, such as master keys, the only true solution is a hardware-based random number generator that can use physical properties to derive entropy. In other, less demanding cases, a cryptographic library call can provide the necessary entropy. While the theoretical strength of the cryptosystem depends on the algorithm, the strength of the implementation in practice can depend on issues such as how the key is generated. This is a very important issue, and mistakes made in implementation can invalidate even the strongest algorithms in practice.

Salting

To provide sufficient entropy for low-entropy inputs to hash functions, a high-entropy piece of data can be concatenated with the material being hashed. The term salt refers to this additional piece of data. Salts are particularly useful when the material being hashed is short and low in entropy. The addition of a high-entropy (say, a 30-character) salt to a 3-character password greatly increases the entropy of the stored hash.

Another term used in this regard is initialization vector, or IV, and this is used in several ciphers, particularly in the wireless space, to achieve randomness even with normally deterministic inputs. IVs can add randomness and are used in block ciphers to initiate modes of operation.

Tech Tip

Salts and Password Hashes

Passwords are stored in the form of a hash, making them unrecoverable, but this exposes a separate problem: if multiple users have the same hash, then their passwords are the same. A salt adds entropy, or randomness, to the input of the hash function, specifically providing separation between equal inputs such as identical passwords on different accounts.
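Salted password storage can be sketched with Python's `hashlib.pbkdf2_hmac`, which combines a per-user salt with a configurable work factor. The iteration count and the 16-byte salt size are assumptions for illustration; current guidance should be consulted when choosing real values.

```python
import hashlib
import hmac
import secrets
from typing import Optional

ITERATIONS = 200_000  # assumed work factor; choose per current guidance and hardware

def hash_password(password: str, salt: Optional[bytes] = None) -> tuple[bytes, bytes]:
    """Return (salt, hash); a fresh random 16-byte salt is generated unless one is given."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, stored_hash: bytes) -> bool:
    """Recompute with the stored salt and compare in constant time."""
    _, candidate = hash_password(password, salt)
    return hmac.compare_digest(candidate, stored_hash)

# Two accounts with the same password end up with different stored hashes.
salt_a, hash_a = hash_password("Summer2024")
salt_b, hash_b = hash_password("Summer2024")
print(hash_a != hash_b)                               # True (the salts differ)
print(verify_password("Summer2024", salt_a, hash_a))  # True
```

The salt is stored alongside the hash; it is not secret, but it ensures identical passwords no longer produce identical entries.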

A nonce is a number used only once. It is similar to a salt or an IV, but it is never reused; if another value is needed, a different one is generated. Nonces add uniqueness to cryptographic operations and are commonly used in stream ciphers so that reusing a key does not reproduce the same keystream.

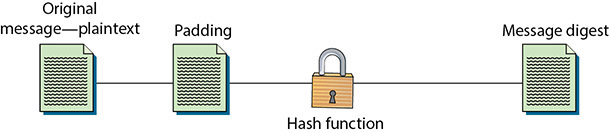

Hashing Functions

Hashing functions are commonly used cryptographic methods, although they do not encrypt data in the usual sense. A hashing function or hash function is a special mathematical function that is one-way, which means that once the algorithm is processed, there is no feasible way to use the hash value to retrieve the plaintext that was used to generate it. Also, ideally, there is no feasible way to generate two different plaintexts that compute to the same hash value. The hash value is the output of the hashing algorithm for a specific input. The following illustration shows the one-way nature of these functions:

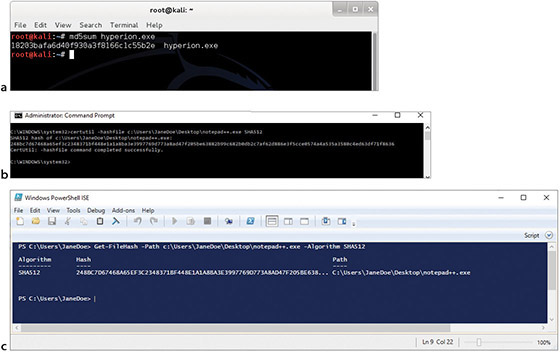

Common uses of hashing algorithms are to store computer passwords and to ensure message integrity. The idea is that hashing produces a value that, in practice, uniquely corresponds to the data entered, but the hash value is also reproducible by anyone else running the same algorithm against the same data. So you could hash a message to get a message digest, and a recipient who recomputes the digest and obtains the same value knows that no intermediary has modified the message, provided the digest itself came from a trusted source. This process works because hashing algorithms are typically public, and anyone can hash data using the specified algorithm. It is computationally simple to generate the hash, so it is simple to check the validity or integrity of something by matching the given hash to one that is locally generated. Several programs can compute hash values for an input file, as shown in Figure 5.7.

Hash-based message authentication code (HMAC) is a special subset of hashing technology. It is a hash algorithm applied to a message to make a message authentication code (MAC), but it is done with a previously shared secret. So the HMAC can provide integrity simultaneously with authentication. HMAC-MD5 is used in the NT LAN Manager version 2 challenge/response protocol.

• Figure 5.7 Several programs are available that accept an input and produce a hash value, letting you independently verify the integrity of downloaded content.
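The difference between a plain digest and an HMAC can be sketched with Python's standard `hashlib` and `hmac` modules. SHA-256 stands in for whichever hash an application actually uses (the example above is HMAC-MD5 in NTLMv2), and the message, key, and `verify` helper are made up for illustration.

```python
import hashlib
import hmac
import secrets

message = b"Transfer 100 to account 12345"

# A plain digest: anyone can recompute it, including an attacker who alters
# the message and replaces the digest along with it.
digest = hashlib.sha256(message).hexdigest()

# An HMAC: only holders of the previously shared secret can produce it.
shared_key = secrets.token_bytes(32)
tag = hmac.new(shared_key, message, hashlib.sha256).digest()


def verify(key: bytes, received_message: bytes, received_tag: bytes) -> bool:
    """Recompute the HMAC over what was received and compare in constant time."""
    expected = hmac.new(key, received_message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, received_tag)


print(digest)
print(verify(shared_key, message, tag))
print(verify(shared_key, b"Transfer 900 to account 12345", tag))
```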

A hash algorithm can be compromised with what is called a collision attack, in which an attacker finds two different messages that hash to the same value. This type of attack is very difficult: it requires a separate algorithm that finds such a pair faster than simply editing characters until two inputs hash to the same value, which is a brute force type of attack. The consequence of a hash function that suffers from collisions is a loss of integrity. If an attacker can make two different inputs purposefully hash to the same value, they might trick people into running malicious code and cause other problems. Popular hash algorithms are the Secure Hash Algorithm (SHA) series, the RIPEMD algorithms, and the Message Digest (MD) hash of varying versions (MD2, MD4, and MD5). Because of weaknesses and collision attack vulnerabilities, many hash functions are now considered to be insecure, including MD2, MD4, MD5, and SHA-1; some guidance, such as the NSA's Commercial National Security Algorithm (CNSA) Suite, goes further and calls for SHA-384 or longer.
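To see why the length of a hash matters against brute-force collision search, the sketch below truncates SHA-256 to 24 bits (a deliberately weak toy hash, not a real algorithm) and hashes distinct messages until any two share a value. By the birthday bound, a collision is expected after a few thousand attempts, on the order of 2^12 rather than 2^24, which is why an n-bit hash offers only about n/2 bits of collision resistance. The message format and helper names are hypothetical.

```python
import hashlib
from itertools import count
from typing import Dict, Tuple


def toy_hash(data: bytes, bits: int = 24) -> int:
    """SHA-256 truncated to its first `bits` bits: a deliberately weak toy hash."""
    full = int.from_bytes(hashlib.sha256(data).digest(), "big")
    return full >> (256 - bits)


def find_collision(bits: int = 24) -> Tuple[bytes, bytes, int]:
    """Hash distinct messages until two share a toy hash value (birthday search)."""
    seen: Dict[int, bytes] = {}
    for attempts in count(1):
        message = f"message #{attempts}".encode()
        value = toy_hash(message, bits)
        if value in seen:
            return seen[value], message, attempts
        seen[value] = message
    raise AssertionError("unreachable")


first, second, attempts = find_collision()
print(first, second, "found after", attempts, "attempts")
```

Each extra bit of output doubles the work for this generic attack only every two bits, so moving from a 160-bit to a 384-bit hash raises the brute-force collision cost from about 2^80 to about 2^192 operations.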

Tech Tip

Why Hashes and Not CRC-32?

CRC-32 is a 32-bit error detection algorithm. You can use it to detect small, accidental errors during transmission of small items. However, cyclic redundancy checks (CRCs) can be tricked; by modifying any four consecutive bytes in a file, you can change the file’s CRC to any value you choose. This technique demonstrates that the CRC-32 function is extremely malleable and unsuitable for protecting a file from intentional modifications.
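The four-byte trick can be sketched in Python against `zlib.crc32`, assuming the four bytes the attacker controls are the last four of the tampered file. The sketch walks the standard CRC-32 lookup table backward from the desired checksum to find which table entries the last four steps must use, then picks bytes that make the real computation use them. The messages and the `forge_suffix` helper are hypothetical.

```python
import zlib

POLY = 0xEDB88320  # reflected CRC-32 polynomial used by zlib
MASK32 = 0xFFFFFFFF

# Standard byte-wise lookup table for the reflected CRC-32.
TABLE = []
for n in range(256):
    c = n
    for _ in range(8):
        c = (c >> 1) ^ POLY if c & 1 else c >> 1
    TABLE.append(c)

# The top byte of every table entry is distinct, so it identifies the entry.
TOP_BYTE_TO_INDEX = {entry >> 24: n for n, entry in enumerate(TABLE)}
assert len(TOP_BYTE_TO_INDEX) == 256


def forge_suffix(prefix: bytes, target_crc: int) -> bytes:
    """Return 4 bytes that make zlib.crc32(prefix + result) == target_crc."""
    register = zlib.crc32(prefix) ^ MASK32   # internal CRC state after the prefix
    wanted = target_crc ^ MASK32             # internal state we must end in

    # Work backward: the top byte at each step reveals which table entry was used.
    indices = []
    state = wanted
    for _ in range(4):
        n = TOP_BYTE_TO_INDEX[state >> 24]
        indices.append(n)
        state = ((state ^ TABLE[n]) << 8) & MASK32
    indices.reverse()

    # Work forward: choose each byte so the real computation hits those entries.
    suffix = bytearray()
    for n in indices:
        suffix.append((register ^ n) & 0xFF)
        register = (register >> 8) ^ TABLE[n]
    return bytes(suffix)


original = b"Pay Alice $100.00 on Friday."
tampered = b"Pay Mallory $99,999 today!"
forged = tampered + forge_suffix(tampered, zlib.crc32(original))
print(hex(zlib.crc32(original)), hex(zlib.crc32(forged)))
```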

Tech Tip

Warning on Deprecated Hashing Algorithms

The hashing algorithms in common use are MD2, MD4, and MD5 as well as SHA-1, SHA-256, SHA-384, SHA-512, and SHA-3. Because of known collision and other attacks, MD2, MD4, MD5, and SHA-1 have been deprecated by many groups; although not considered secure, they are still found in use, a testament to the slow adoption of better security. SHA-256 has no known practical collision attack and remains approved by NIST, but some guidance, such as the NSA's CNSA Suite, requires SHA-384 or longer. Going forward, SHA-384, SHA-512, and SHA-3 are the safest choices.

Message Digest

Message Digest (MD) is the generic version of one of several algorithms designed to create a message digest or hash from data input into the algorithm. MD algorithms work in the same manner as SHA in that they use a secure method to compress the file and generate a computed output of a specified number of bits. The MD algorithms were all developed by Ronald L. Rivest of MIT.

MD2

MD2 was developed in 1989 and is in some ways an early version of the later MD5 algorithm. It takes a data input of any length and produces a hash output of 128 bits. It is different from MD4 and MD5 in that MD2 is optimized for 8-bit machines, whereas the other two are optimized for 32-bit machines.

MD4

MD4 was developed in 1990 and is optimized for 32-bit computers. It is a fast algorithm, but it is subject to more attacks than more secure algorithms such as MD5. An extended version of MD4 computes the message in parallel and produces two 128-bit outputs—effectively a 256-bit hash.

MD5

MD5 was developed in 1991 and is structured after MD4, but with additional security to overcome the problems in MD4. Therefore, it is very similar to the MD4 algorithm, only slightly slower and more secure.

MD5 creates a 128-bit hash of a message of any length.

Czech cryptographer Vlastimil Klíma published work showing that MD5 collisions can be computed in about eight hours on a standard home PC. In November 2007, researchers published results showing the ability to have two entirely different Win32 executables with different functionality but the same MD5 hash. This discovery has obvious implications for the development of malware. The combination of these problems with MD5 has pushed people to adopt a strong SHA version for security reasons.

SHA

Secure Hash Algorithm (SHA) refers to a set of hash algorithms designed and published by the National Institute of Standards and Technology (NIST) and the National Security Agency (NSA). These algorithms are included in the SHA standard Federal Information Processing Standards (FIPS) 180-2 and 180-3. The individual standards are named SHA-1, SHA-224, SHA-256, SHA-384, and SHA-512. The latter four variants are referred to collectively as SHA-2. The newest version is known as SHA-3, which is specified in FIPS 202.

SHA-1

SHA-1, published in 1995 as a revision of the original 1993 SHA (now usually called SHA-0), was designed as the algorithm to be used for secure hashing in the U.S. Digital Signature Standard (DSS). It is modeled on the MD4 algorithm and implements fixes in that algorithm discovered by the NSA. It creates message digests 160 bits long that can be used by the Digital Signature Algorithm (DSA), which can then compute the signature of the message. This is computationally simpler, as the message digest is typically much smaller than the actual message: smaller message, less work.

Tech Tip

Block Mode in Hashing

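Most hash algorithms use block mode to process; that is, they process all input in set blocks of data such as 512-bit blocks. Each block is run through a compression function together with the running hash state, and the result is added into that state. The state left after the last block is the final hash, such as the 160-bit output of SHA-1 or the 512-bit output of SHA-512.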

SHA-1 works, as do all hashing functions, by applying a compression function to the data input. It accepts an input of less than 2^64 bits and compresses it down to a hash of 160 bits. SHA-1 works in block mode, separating the data into words first, and then grouping the words into blocks. The words are 32-bit strings; grouped together as 16 words, they make up a 512-bit block. Before processing, the message is padded with a single 1 bit, then with zeros, and finally with a 64-bit integer describing the original length of the message, so that the padded length is a multiple of 512 bits. (This padding is applied even when the original message is already a multiple of 512 bits.) Once the message has been formatted for processing, the actual hash can be generated. The 512-bit blocks are taken in order until the entire message has been processed.
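The padding and block-by-block processing described above can be traced in a compact, educational SHA-1 written from the published FIPS 180 description and checked against Python's `hashlib`. It is a sketch for understanding only: it is slow, and SHA-1 itself should not be used in new security designs.

```python
import hashlib
import struct
from typing import List


def _rotl(x: int, n: int) -> int:
    """Rotate a 32-bit word left by n bits."""
    return ((x << n) | (x >> (32 - n))) & 0xFFFFFFFF


def sha1_pad(message: bytes) -> bytes:
    """Append a 1 bit (0x80), zero bytes, and the 64-bit big-endian bit length."""
    bit_length = len(message) * 8
    padded = message + b"\x80"
    padded += b"\x00" * ((56 - len(padded) % 64) % 64)
    return padded + struct.pack(">Q", bit_length)


def sha1(message: bytes) -> str:
    h: List[int] = [0x67452301, 0xEFCDAB89, 0x98BADCFE, 0x10325476, 0xC3D2E1F0]
    data = sha1_pad(message)
    for offset in range(0, len(data), 64):  # one 512-bit block at a time
        w = list(struct.unpack(">16I", data[offset:offset + 64]))  # sixteen 32-bit words
        for t in range(16, 80):  # expand the block to 80 words
            w.append(_rotl(w[t - 3] ^ w[t - 8] ^ w[t - 14] ^ w[t - 16], 1))
        a, b, c, d, e = h
        for t in range(80):
            if t < 20:
                f, k = (b & c) | (~b & d), 0x5A827999
            elif t < 40:
                f, k = b ^ c ^ d, 0x6ED9EBA1
            elif t < 60:
                f, k = (b & c) | (b & d) | (c & d), 0x8F1BBCDC
            else:
                f, k = b ^ c ^ d, 0xCA62C1D6
            temp = (_rotl(a, 5) + f + e + k + w[t]) & 0xFFFFFFFF
            e, d, c, b, a = d, c, _rotl(b, 30), a, temp
        # Add this block's result into the running 160-bit state.
        h = [(x + y) & 0xFFFFFFFF for x, y in zip(h, (a, b, c, d, e))]
    return "".join(f"{x:08x}" for x in h)


print(sha1(b"abc") == hashlib.sha1(b"abc").hexdigest())
```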

Try to keep attacks on cryptosystems in perspective. While the theory of attacking hashing through collisions is solid, finding a collision still takes enormous amounts of effort. In the case of SHA-1, collisions can be found faster than with a pure brute force method; the first practical SHA-1 collision, two different PDF files with the same hash, was published in 2017 by researchers from CWI Amsterdam and Google, and it still required roughly 2^63 SHA-1 computations.

At one time, SHA-1 was one of the more secure hash functions, but it has been found to be vulnerable to a collision attack. The longer versions (SHA-256, SHA-384, and SHA-512) all have longer hash results, making them more difficult to attack successfully. The added security and resistance to attack in SHA-2 does require more processing power to compute the hash.

SHA-2

SHA-2 is a collective name for SHA-224, SHA-256, SHA-384, and SHA-512. SHA-256 is similar to SHA-1 in that it also accepts input of less than 2^64 bits and reduces that input to a hash. This algorithm reduces to 256 bits instead of SHA-1’s 160. Defined in FIPS 180-2 in 2002, SHA-256 is listed as an update to the original FIPS 180 that defined SHA. Similar to SHA-1, SHA-256 uses 32-bit words and 512-bit blocks. Padding is added until the entire message is a multiple of 512 bits. SHA-256 uses sixty-four 32-bit words, eight working variables, and results in a hash value of eight 32-bit words, hence 256 bits. SHA-224 is a truncated version of the SHA-256 algorithm that results in a 224-bit hash value. There are no known collision attacks against SHA-256; however, an attack on reduced-round SHA-256 is possible.

SHA-512 is also similar to SHA-1, but it handles larger sets of data. SHA-512 accepts input of less than 2^128 bits, which it pads and processes in 1024-bit blocks. SHA-512 also uses 64-bit words instead of SHA-1’s 32-bit words. It uses eight 64-bit words to produce the 512-bit hash value. SHA-384 is a truncated version of SHA-512 that uses six 64-bit words to produce a 384-bit hash.

SHA-1 was once the more common algorithm, but since it was shown to be vulnerable to a collision attack, SHA-2 has largely replaced it in applications.

SHA-3

SHA-3 is the name for the newest member of the SHA family, intended as an alternative to SHA-2. In 2012, the Keccak hash function won the NIST competition and was chosen as the basis for the SHA-3 method. Because the algorithm, a sponge construction, is completely different from the previous SHA series, attacks that work against those functions, such as length extension attacks, do not apply to it. SHA-3 is not commonly used, but is approved in U.S. FIPS Pub 202.

The SHA-2 and SHA-3 series are currently approved for use. SHA-1 has been deprecated and its use discontinued in many strong cipher suites.
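Python's `hashlib` exposes most of the algorithms discussed in this section, which makes it easy to confirm their output sizes. Whether `md5` is available can depend on how the underlying OpenSSL library is configured (for example, in FIPS mode), so treat that entry as an assumption about the local platform.

```python
import hashlib

ALGORITHMS = ["md5", "sha1", "sha224", "sha256", "sha384", "sha512", "sha3_256", "sha3_512"]

# digest_size is reported in bytes; multiply by 8 for bits.
DIGEST_BITS = {name: hashlib.new(name).digest_size * 8 for name in ALGORITHMS}

for name, bits in DIGEST_BITS.items():
    sample = hashlib.new(name, b"abc").hexdigest()
    print(f"{name:>9}: {bits:3d} bits  {sample[:16]}...")
```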

RIPEMD

RACE Integrity Primitives Evaluation Message Digest (RIPEMD) is a hashing function developed by the RACE Integrity Primitives Evaluation (RIPE) consortium. It originally provided a 128-bit hash and was later shown to have problems with collisions. RIPEMD was strengthened to a 160-bit hash known as RIPEMD-160 by Hans Dobbertin, Antoon Bosselaers, and Bart Preneel. There are also 256- and 320-bit versions of the algorithm known as RIPEMD-256 and RIPEMD-320.

RIPEMD-160

RIPEMD-160 is an algorithm based on MD4, but it uses two parallel channels with five rounds. The output consists of five 32-bit words to make a 160-bit hash. There are also larger output extensions of the RIPEMD-160 algorithm. These extensions, RIPEMD-256 and RIPEMD-320, offer outputs of 256 bits and 320 bits, respectively. While these offer larger output sizes, this does not make the hash function inherently stronger.

Hashing Summary

Hashing functions are very common, and they play an important role in the way information, such as passwords, is stored securely and the way in which messages can be signed. By computing a digest of the message, less data needs to be signed by the more complex asymmetric encryption, and this still maintains assurances about message integrity. This is the primary purpose for which the protocols were designed, and their success will allow greater trust in electronic protocols and digital signatures. The following illustration shows hash calculations in (a) Linux, (b) Windows 10, and (c) Microsoft PowerShell:

Symmetric Encryption

Symmetric encryption is the older and simpler method of encrypting information. The basis of symmetric encryption is that both the sender and the receiver of the message have previously obtained the same key. This is, in fact, the basis for even the oldest ciphers—the Spartans needed the exact same size cylinder, making the cylinder the “key” to the message, and in shift ciphers both parties need to know the direction and amount of shift being performed. All symmetric algorithms are based on this shared secret principle, including the unbreakable one-time pad method.

Figure 5.8 is a simple diagram showing the process that a symmetric algorithm goes through to provide encryption from plaintext to ciphertext. This ciphertext message is, presumably, transmitted to the message recipient, who goes through the process to decrypt the message using the same key that was used to encrypt the message. Figure 5.8 shows the keys to the algorithm, which are the same value in the case of symmetric encryption.

• Figure 5.8 Layout of a symmetric algorithm
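The shared-secret principle can be shown with the simplest symmetric scheme mentioned above, the one-time pad: XOR the message with a random key of the same length, then XOR again with the same key to decrypt. The message is illustrative, and the scheme is only as strong as its rules: the pad must be truly random, as long as the message, kept secret, and never reused.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a key of exactly the same length (one-time pad)."""
    if len(key) != len(data):
        raise ValueError("a one-time pad must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))


plaintext = b"MEET AT THE USUAL PLACE"
shared_key = secrets.token_bytes(len(plaintext))  # both parties hold this in advance

ciphertext = xor_bytes(plaintext, shared_key)   # sender encrypts
recovered = xor_bytes(ciphertext, shared_key)   # receiver decrypts with the same key
print(recovered.decode())
```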

Unlike with hash functions, a cryptographic key is involved in symmetric encryption, so there must be a mechanism for key management (discussed earlier in the chapter). Managing the cryptographic keys is critically important in symmetric algorithms because the key unlocks the data that is being protected. However, the key also needs to be known by, or transmitted in a confidential way to, the party with which you wish to communicate. A key must be managed at all stages, which requires securing it on the local computer, securing it on the remote one, protecting it from data corruption, protecting it from loss, and, probably the most important step, protecting it while it is transmitted between the two parties. Later in the chapter we will look at public key cryptography, which greatly eases the key management issue, but for symmetric algorithms the most important lesson is to store and send the key only by known secure means.

Some of the more popular symmetric encryption algorithms in use today are AES, ChaCha20, CAST, Twofish, and IDEA.

Tech Tip

How Many Keys Do You Need?

Since the same key is used for encryption and decryption in a symmetric scheme, the number of keys needed for a group to communicate secretly depends on whether or not individual messages are to be kept secret from members of the group. If you have K members in your group, and your only desire is to communicate secretly with respect to people outside of the K members, then one key is all that is needed. But then all K members of the group can read every message. If you want every pair of members to be able to communicate privately within the group, then K * (K – 1)/2 keys are needed to manage all pairwise communications. If the group has 10 members, this is 45 keys. If the group has 100 members, it is 4950 keys, and for 1000 members it is 499,500! Clearly there is a scale issue. With asymmetric encryption, each member needs only one key pair, so the number grows only as K, a huge scale advantage.
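The arithmetic in the tip can be reproduced directly. The function names are illustrative; the symmetric count is the number of distinct member pairs, K * (K – 1)/2, and the asymmetric count is one key pair per member.

```python
def symmetric_keys(members: int) -> int:
    """Pairwise secret keys so that every pair of members can talk privately."""
    return members * (members - 1) // 2


def asymmetric_key_pairs(members: int) -> int:
    """One public/private key pair per member."""
    return members


for k in (10, 100, 1000):
    print(f"{k:>5} members: {symmetric_keys(k):>7,} symmetric keys vs "
          f"{asymmetric_key_pairs(k):>5} key pairs")
```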

DES

DES, the Data Encryption Standard, was developed in response to a request from the National Bureau of Standards (NBS), now known as the National Institute of Standards and Technology (NIST), and was adopted as a federal standard in 1976. DES is what is known as a block cipher; it segments the input data into blocks of a specified size, typically padding the last block to make it a multiple of the block size required. This is in contrast to a stream cipher, which encrypts the data bit by bit. In the case of DES, the block size is 64 bits, which means DES takes a 64-bit input and outputs 64 bits of ciphertext. This process is repeated for all 64-bit blocks in the message. DES uses a key length of 56 bits, and all security rests within the key. The same algorithm and key are used for both encryption and decryption.

At the most basic level, DES performs a substitution and then a permutation (a form of transposition) on the input, based on the key. This action is called a round, and DES performs this 16 times on every 64-bit block. The algorithm goes step by step, producing 64-bit blocks of ciphertext for each plaintext block. This is carried on until the entire message has been encrypted with DES. As mentioned, the same algorithm and key are used to decrypt and encrypt with DES. The only difference is that the sequence of key permutations is used in reverse order.
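The fact that DES decrypts with the same algorithm, only with the subkeys in reverse order, comes from its Feistel structure. The sketch below is a toy 64-bit Feistel network with 16 rounds, not DES: its round function and key schedule (both built from SHA-256 here) are hypothetical stand-ins for the DES S-box-based f-function and key schedule.

```python
import hashlib
import secrets
from typing import List

MASK32 = 0xFFFFFFFF
ROUNDS = 16  # DES also uses 16 rounds


def round_function(half: int, subkey: int) -> int:
    """Hypothetical round function (not DES's f): hash the half with the subkey."""
    data = half.to_bytes(4, "big") + subkey.to_bytes(4, "big")
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")


def make_subkeys(key: bytes) -> List[int]:
    """Hypothetical key schedule: derive sixteen 32-bit subkeys from the key."""
    return [int.from_bytes(hashlib.sha256(key + bytes([i])).digest()[:4], "big")
            for i in range(ROUNDS)]


def feistel(block: int, subkeys: List[int]) -> int:
    """Run a 64-bit block through the Feistel rounds."""
    left, right = block >> 32, block & MASK32
    for k in subkeys:
        left, right = right, left ^ round_function(right, k)
    return (right << 32) | left  # undo the last swap, as DES does


key = secrets.token_bytes(8)
subkeys = make_subkeys(key)
plaintext = 0x0123456789ABCDEF
ciphertext = feistel(plaintext, subkeys)
recovered = feistel(ciphertext, subkeys[::-1])  # same routine, reversed subkeys
print(hex(ciphertext), recovered == plaintext)
```

Note that `round_function` never has to be inverted: the XOR structure alone makes each round reversible, which is why a Feistel cipher can use one-way substitutions inside its rounds.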

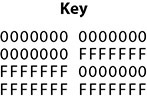

Over the years that DES has been a cryptographic standard, a lot of cryptanalysis has occurred, and while the algorithm has held up very well, some problems have been encountered. Weak keys are keys that are less secure than the majority of keys allowed in the keyspace of the algorithm. In the case of DES, because of the way the initial key is modified to get the subkey, certain keys are weak keys. The weak keys equate in binary to having all 1’s or all 0’s, like those shown in Figure 5.9, or to having half the key all 1’s and the other half all 0’s.

• Figure 5.9 Weak DES keys

Semi-weak keys, where two keys will encrypt plaintext to identical ciphertext, also exist, meaning that either key will decrypt the ciphertext. The total number of possibly weak keys is 64, which is very small relative to the 2^56 possible keys in DES. With 16 rounds and not using a weak key, DES was found to be reasonably secure and, amazingly, has been for more than two decades. In 1999, a distributed effort consisting of a supercomputer and 100,000 PCs over the Internet was made to break a 56-bit DES key. By attempting more than 240 billion keys per second, the effort was able to retrieve the key in less than a day. This demonstrates an incredible resistance to cracking the then 20-year-old algorithm, but it also demonstrates that more stringent algorithms are needed to protect data today.
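The 1999 numbers can be sanity-checked with simple arithmetic using only figures from the text (taking the rate as exactly 240 billion keys per second). Searching the entire 2^56 keyspace at that rate takes about 3.5 days, and on average the key turns up halfway through, at about 1.7 days, so finishing in less than a day meant the key was found relatively early in the search.

```python
KEYSPACE = 2 ** 56      # possible DES keys
WEAK_KEYS = 64          # weak and semi-weak keys cited above
RATE = 240e9            # keys per second, from the 1999 effort
SECONDS_PER_DAY = 86_400

full_days = KEYSPACE / RATE / SECONDS_PER_DAY
average_days = full_days / 2  # on average the key is found halfway through

print(f"keyspace: {KEYSPACE:,} keys")
print(f"fraction of weak keys: {WEAK_KEYS / KEYSPACE:.1e}")
print(f"full search: {full_days:.2f} days, average: {average_days:.2f} days")
```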

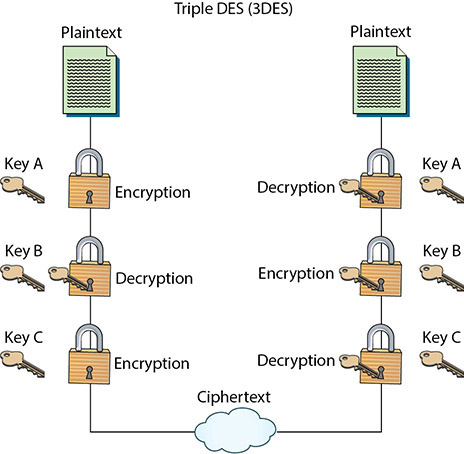

3DES

Triple DES (3DES) is a variant of DES. Depending on the specific variant, it uses either two or three keys instead of the single key that DES uses. It also spins through the DES algorithm three times via what’s called multiple encryption. This significantly improves the strength.

Multiple encryption can be performed in several different ways. The simplest method of multiple encryption is just to stack algorithms on top of each other: taking plaintext, encrypting it with DES, then encrypting the first ciphertext with a different key, and then encrypting the second ciphertext with a third key. 3DES most commonly uses a variation of this idea. One of the modes of 3DES (EDE mode) is to encrypt with one key, then decrypt with a second, and then encrypt with a third, as shown in Figure 5.10. Using decryption as the middle step means that if all three keys are the same, 3DES produces the same result as single DES, which keeps it backward compatible with DES.
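The EDE ordering can be sketched with a toy 64-bit cipher standing in for DES. The toy cipher (add the key, rotate, XOR the key) is hypothetical and offers no security; it is used only because it is short and exactly invertible. The sketch checks the round trip with three independent keys and the equal-keys case.

```python
import secrets

MASK64 = (1 << 64) - 1


def _rotl(x: int, n: int) -> int:
    return ((x << n) | (x >> (64 - n))) & MASK64


def _rotr(x: int, n: int) -> int:
    return ((x >> n) | (x << (64 - n))) & MASK64


def toy_encrypt(block: int, key: int) -> int:
    """Hypothetical, insecure stand-in for DES encryption of a 64-bit block."""
    return _rotl((block + key) & MASK64, 13) ^ key


def toy_decrypt(block: int, key: int) -> int:
    """Exact inverse of toy_encrypt."""
    return (_rotr(block ^ key, 13) - key) & MASK64


def ede_encrypt(block: int, k1: int, k2: int, k3: int) -> int:
    # Encrypt with K1, decrypt with K2, encrypt with K3.
    return toy_encrypt(toy_decrypt(toy_encrypt(block, k1), k2), k3)


def ede_decrypt(block: int, k1: int, k2: int, k3: int) -> int:
    # Undo the steps in reverse order: decrypt with K3, encrypt with K2, decrypt with K1.
    return toy_decrypt(toy_encrypt(toy_decrypt(block, k3), k2), k1)


k1, k2, k3 = (secrets.randbits(64) for _ in range(3))
block = 0x0123456789ABCDEF
assert ede_decrypt(ede_encrypt(block, k1, k2, k3), k1, k2, k3) == block
# With K1 = K2 = K3, the middle decryption cancels the first encryption.
assert ede_encrypt(block, k1, k1, k1) == toy_encrypt(block, k1)
```

The two-key variant of 3DES follows the same pattern with K3 set equal to K1.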

• Figure 5.10 Diagram of 3DES

This greatly increases the number of attempts needed to retrieve the key and is a significant enhancement of security. The additional security comes at a price, however. It can take up to three times longer to compute 3DES than to compute DES. However, the advances in memory and processing power in today’s electronics make this problem irrelevant in all devices except for very small low-power handhelds.

The only weaknesses of 3DES are those that already exist in DES. However, because it uses different keys in the same algorithm, which gives a longer total key length (112 or 168 bits instead of 56), and because of its greater resistance to brute forcing, 3DES has less actual weakness. While 3DES continues to be popular and is still widely supported, AES has taken over as the symmetric encryption standard.

AES

The current gold standard for symmetric encryption is the AES algorithm. In response to a worldwide call in the late 1990s for a new symmetric cipher, two Belgian cryptographers, Joan Daemen and Vincent Rijmen, submitted a method called Rijndael (pronounced rain doll).

Tech Tip

AES in Depth

AES is a 128-bit block cipher, and its blocks are represented as 4×4 arrays of bytes that are called a “state.” The AES key size determines the number of “rounds” that the plaintext will be put through as it’s encrypted:

128-bit key = 10 rounds

192-bit key = 12 rounds

256-bit key = 14 rounds

Each round that the “state” undergoes includes substitutions from a lookup table, rows shifted cyclically, a linear mixing operation that combines the 4 bytes in each column, and the XOR of a round key derived from the cipher key (the final round omits the column mixing). For decryption, a set of reverse rounds is performed. For a more in-depth description of AES, see the NIST document http://csrc.nist.gov/publications/fips/fips197/fips-197.pdf.
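Two details from the tip can be sketched in a few lines: the round count follows the rule Nr = Nk + 6, where Nk is the key length in 32-bit words (matching the table in FIPS 197), and the row-shifting step operates on the 4×4 state stored column by column. Only the ShiftRows step and its inverse are shown; SubBytes, MixColumns, and the round-key XOR are omitted, so this is not an AES implementation.

```python
from typing import List


def aes_rounds(key_bits: int) -> int:
    """Number of AES rounds: Nr = Nk + 6, with Nk the key length in 32-bit words."""
    if key_bits not in (128, 192, 256):
        raise ValueError("AES keys are 128, 192, or 256 bits")
    return key_bits // 32 + 6


def shift_rows(state: List[int]) -> List[int]:
    """Cyclically shift row r of the state left by r positions.

    The 16-byte state is stored column by column, as in FIPS 197:
    state[r + 4 * c] is the byte in row r, column c.
    """
    return [state[r + 4 * ((c + r) % 4)] for c in range(4) for r in range(4)]


def inv_shift_rows(state: List[int]) -> List[int]:
    """Inverse step used during decryption: shift row r right by r positions."""
    return [state[r + 4 * ((c - r) % 4)] for c in range(4) for r in range(4)]


for bits in (128, 192, 256):
    print(bits, "bit key ->", aes_rounds(bits), "rounds")
print(shift_rows(list(range(16))))
```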

In the fall of 2000, NIST picked Rijndael to be the new AES. It was chosen for its overall security as well as its good performance on limited-capacity devices. The Rijndael cipher can also be configured to use blocks of 192 or 256 bits, but AES has standardized on 128-bit blocks. AES can have key sizes of 128, 192, and 256 bits, with the size of the key affecting the number of rounds used in the algorithm. The longer-key versions are known as AES-192 and AES-256, respectively.

The Rijndael/AES algorithm has been well tested and has a suitable key length to provide security for many years to come. Currently, no practical attacks are known against full AES; the best published attacks are only marginally faster than brute force. AES as a block cipher is operated using modes, and using Galois Counter Mode (GCM) it can support Authenticated Encryption with Associated Data (AEAD); AES-GCM is the most widely used cipher in TLS 1.2 and 1.3. When run in GCM or CCM (Counter with CBC-MAC) mode, AES functions as a stream cipher with built-in message authentication, which is how TLS 1.3 uses it, making it an AEAD rather than a plain block cipher.

CAST

CAST is an encryption algorithm that is similar to DES in its structure. It was designed by Carlisle Adams and Stafford Tavares. CAST uses a 64-bit block size for 64- and 128-bit key versions, and a 128-bit block size for the 256-bit key version. Like DES, it divides the plaintext block into a left half and a right half. The right half is then put through function f and then is XORed with the left half. This value becomes the new right half, and the original right half becomes the new left half. This is repeated for eight rounds for a 64-bit key, and the left and right outputs are concatenated to form the ciphertext block. The algorithm in CAST-256 form was submitted for the AES standard but was not chosen. CAST has undergone thorough analysis, with only minor weaknesses discovered that are dependent on low numbers of rounds. Currently, no better way is known to break high-round CAST than by brute forcing the key, meaning that with a sufficient key length, CAST should be placed with other trusted algorithms.

RC

RC is a general term for several ciphers all designed by Ron Rivest—RC officially stands for Rivest Cipher. RC1, RC2, RC3, RC4, RC5, and RC6 are all ciphers in the series. RC1 and RC3 never made it to release, but RC2, RC4, RC5, and RC6 are all working algorithms.

RC2

RC2 was designed as a DES replacement, and it is a variable-key-size block-mode cipher. The key size can be from 8 bits to 1024 bits, with the block size being fixed at 64 bits. RC2 breaks up the input blocks into four 16-bit words and then puts them through 18 rounds of either mix or mash operations, outputting 64 bits of ciphertext for 64 bits of plaintext.

According to RSA, RC2 is up to three times faster than DES. RSA maintained RC2 as a trade secret for a long time, with the source code eventually being illegally posted on the Internet. The ability of RC2 to accept different key lengths is one of the larger vulnerabilities in the algorithm. Any key length below 64 bits can be easily retrieved by modern computational power. Additionally, there is a related key attack that needs 2^34 chosen plaintexts to work. Considering these weaknesses, RC2 is not recommended as a strong cipher.

RC5

RC5 is a block cipher written in 1994. It has several variable parameters: the number of rounds, the key size, and the block size. This algorithm is relatively unproven, but if configured to run enough rounds, RC5 seems to provide adequate security against current brute forcing technology. Rivest recommends using at least 12 rounds. With 12 rounds in the algorithm, linear cryptanalysis proves less effective than brute force against RC5, and differential analysis fails for 15 or more rounds. A newer algorithm is RC6.

RC6

RC6 is based on the design of RC5. It uses a 128-bit block size, separated into four words of 32 bits each. It uses a round count of 20 to provide security, and it has three possible key sizes: 128, 192, and 256 bits. RC6 is a modern algorithm that runs well on 32-bit computers. With a sufficient number of rounds, the algorithm makes both linear and differential cryptanalysis infeasible. The available key lengths make brute force attacks extremely time-consuming. RC6 should provide adequate security for some time to come.

RC4

RC4 was created before RC5 and RC6, and it differs in operation. RC4 is a stream cipher, whereas all the symmetric ciphers we have looked at so far have been block ciphers. A stream cipher works by enciphering the plaintext in a stream, usually bit by bit (RC4 itself works byte by byte). This can make stream ciphers faster than block-mode ciphers. Stream ciphers accomplish this by performing a bitwise XOR of the plaintext stream with a generated keystream.

RC4 operates in this manner. It was developed in 1987 and remained a trade secret of RSA until it was posted to the Internet in 1994. RC4 can use a key length of 8 to 2048 bits, though the most common versions use 128-bit keys. The key is used to initialize a 256-byte state table. This table is used to generate the pseudorandom keystream that is XORed with the plaintext to generate the ciphertext. To decrypt, the same keystream is XORed with the ciphertext to recover the plaintext.
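The key-scheduling step (initializing the 256-byte state table from the key) and the keystream step are short enough to show in full. This sketch follows the widely published RC4 description and is for study only; RC4 is deprecated and must not be used to protect real data. The function names are illustrative.

```python
from typing import Iterator


def rc4_keystream(key: bytes) -> Iterator[int]:
    """Yield RC4 keystream bytes for the given key."""
    # Key-scheduling: permute the 256-byte state table using the key.
    s = list(range(256))
    j = 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    # Keystream generation: keep swapping entries and emit one byte per step.
    i = j = 0
    while True:
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        yield s[(s[i] + s[j]) % 256]


def rc4_crypt(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt (the same operation): XOR data with the keystream."""
    return bytes(b ^ k for b, k in zip(data, rc4_keystream(key)))


ciphertext = rc4_crypt(b"Key", b"Plaintext")
print(ciphertext.hex())
print(rc4_crypt(b"Key", ciphertext))
```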

RC4 finally fell to various vulnerabilities and was removed from supported versions of TLS by most trusted browsers by 2019.

Tech Tip

RC4 Deprecated

RC4 is considered to be no longer secure and has been deprecated by all the major players in the security industry. It is replaced by using AES with GCM and CCM in a pseudo-stream-generation mode or by newer stream ciphers like ChaCha20.

Blowfish

Blowfish was designed in 1994 by Bruce Schneier. It is a block-mode cipher using 64-bit blocks and a variable key length from 32 to 448 bits. It was designed to run quickly on 32-bit microprocessors and is optimized for situations with few key changes. Encryption is done by separating the 64-bit input block into two 32-bit words, and then a function is executed every round. Blowfish has 16 rounds; once the rounds are completed, the two words are then recombined to form the 64-bit output ciphertext. The only successful cryptanalysis to date against Blowfish has been against variants that used a reduced number of rounds. There does not seem to be a weakness in the full 16-round version.

Tech Tip

S-Boxes

S-boxes, or substitution boxes, are a method used to provide confusion, a separation of the relationship between the key bits and the ciphertext bits. Used in most symmetric schemes, they perform a form of substitution and can provide significant strengthening of an algorithm against certain forms of attack. They can be in the form of lookup tables, either static like DES or dynamic (based on the key) in other forms such as Twofish.

Twofish

Twofish was developed by Bruce Schneier, David Wagner, Chris Hall, Niels Ferguson, John Kelsey, and Doug Whiting. Twofish was one of the five finalists for the AES competition. Like other AES entrants, it is a block cipher, utilizing 128-bit blocks with a variable-length key of up to 256 bits. It uses 16 rounds and splits the key material into two sets: one to perform the actual encryption and the other to load into the algorithm’s S-boxes. This algorithm is available for public use, and no attack faster than brute force is known against the full 16-round cipher.

IDEA

IDEA (International Data Encryption Algorithm) started out as PES, or Proposed Encryption Standard, in 1990. It was modified to improve its resistance to differential cryptanalysis, and its name was changed to IDEA in 1992. It is a block-mode cipher using a 64-bit block size and a 128-bit key. The input plaintext is split into four 16-bit segments: A, B, C, and D. The process uses eight rounds, with a final four-step process. The output of the last four steps is then concatenated to form the ciphertext.

All current cryptanalysis on full, eight-round IDEA shows that the most efficient attack would be to brute force the key. The 128-bit key makes this attack infeasible with current computer technology. The only known issue is that IDEA has weak keys, such as a key made of all 0’s. This weak key condition is easy to check for, and the weakness is simple to mitigate.

ChaCha20

ChaCha20 is a relatively new stream cipher, developed by Daniel Bernstein as a follow-on to Salsa20. ChaCha20 uses a 256-bit key and a 96-bit nonce and uses 20 rounds. It is considered to be highly efficient in software implementations and can be significantly faster than AES on processors that lack hardware AES instructions. ChaCha20 was adopted as an option for TLS 1.3 because when it is used with the Poly1305 authenticator, it can replace RC4 and act as a crucial component of Authenticated Encryption with Associated Data (AEAD) implementations.
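ChaCha20's efficiency in software comes from using only 32-bit additions, XORs, and rotations. Its core operation, the quarter round, is specified in RFC 8439 (which replaced RFC 7539); the sketch below implements that one operation, not the full cipher, and checks it against the RFC's quarter-round test vector.

```python
from typing import Tuple

MASK32 = 0xFFFFFFFF


def rotl32(x: int, n: int) -> int:
    """Rotate a 32-bit word left by n bits."""
    return ((x << n) | (x >> (32 - n))) & MASK32


def quarter_round(a: int, b: int, c: int, d: int) -> Tuple[int, int, int, int]:
    """ChaCha quarter round: four add, XOR, rotate steps on 32-bit words."""
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 16)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 12)
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 8)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 7)
    return a, b, c, d


# Test vector from RFC 8439, Section 2.1.1
result = quarter_round(0x11111111, 0x01020304, 0x9B8D6F43, 0x01234567)
print([f"{w:08x}" for w in result])
```

The full cipher applies this operation to a 4×4 matrix of words built from constants, the key, a block counter, and the nonce, alternating column and diagonal rounds for a total of 20 rounds.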

ChaCha20 is also typically deployed in the current recommended construction for combining encryption and authentication: paired with Poly1305, it forms an Authenticated Encryption with Associated Data (AEAD) construction. AEAD is a way of combining a cipher and an authenticator together to get the combined properties of encryption and authentication. AEAD is covered in more detail later in the chapter.

Cipher Modes

In symmetric block algorithms, identical blocks of plaintext must be prevented from producing identical blocks of ciphertext, which would reveal that the underlying input blocks are identical. The methods of dealing with this are called modes of operation. Descriptions of the common modes ECB, CBC, CTM, and GCM are provided in the following sections.

ECB

Electronic Codebook (ECB) is the simplest mode of operation of all. The message to be encrypted is divided into blocks, and each block is encrypted separately. This has several major issues; the most notable is that identical plaintext blocks yield identical encrypted blocks, telling the attacker that the blocks are identical.

ECB is not recommended for use in any cryptographic protocol because it does not provide protection against input patterns or known blocks.

CBC

Cipher Block Chaining (CBC) is a block mode where each plaintext block is XORed with the previous ciphertext block before being encrypted. AES-CBC, for example, is CBC mode used with the AES algorithm. To obfuscate the first block, an initialization vector (IV) is XORed with the first block before encryption. CBC is one of the most common modes used, but it has two major weaknesses. First, because each block depends on the previous one, encryption cannot be parallelized for speed and efficiency. Second, because of the nature of the chaining, a plaintext block can be recovered from two adjacent blocks of ciphertext, which an attacker can exploit when the receiver reveals whether the padding of a decrypted message is valid. An example of this is the POODLE (Padding Oracle On Downgraded Legacy Encryption) attack. This type of padding attack works because a 1-bit change to the ciphertext causes complete corruption of the corresponding block of plaintext as well as inverts the corresponding bit in the following block of plaintext, but the rest of the blocks remain intact.
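The pattern leak in ECB and the chaining in CBC can both be seen with a toy 64-bit block cipher (add the key, rotate, XOR the key). The cipher is hypothetical and insecure, standing in for a real one such as AES only because it is short and exactly invertible. The input is already a multiple of the 8-byte block size, so the padding that real CBC needs is left out.

```python
import secrets
from typing import List

MASK64 = (1 << 64) - 1
BLOCK_BYTES = 8


def _rotl(x: int, n: int) -> int:
    return ((x << n) | (x >> (64 - n))) & MASK64


def _rotr(x: int, n: int) -> int:
    return ((x >> n) | (x << (64 - n))) & MASK64


def toy_encrypt_block(block: int, key: int) -> int:
    """Hypothetical, insecure 64-bit block cipher standing in for a real one."""
    return _rotl((block + key) & MASK64, 13) ^ key


def toy_decrypt_block(block: int, key: int) -> int:
    return (_rotr(block ^ key, 13) - key) & MASK64


def to_blocks(data: bytes) -> List[int]:
    if len(data) % BLOCK_BYTES:
        raise ValueError("input must already be padded to a multiple of 8 bytes")
    return [int.from_bytes(data[i:i + BLOCK_BYTES], "big")
            for i in range(0, len(data), BLOCK_BYTES)]


def ecb_encrypt(data: bytes, key: int) -> List[int]:
    # Every block is encrypted independently with the same key.
    return [toy_encrypt_block(p, key) for p in to_blocks(data)]


def cbc_encrypt(data: bytes, key: int, iv: int) -> List[int]:
    out, previous = [], iv
    for p in to_blocks(data):
        previous = toy_encrypt_block(p ^ previous, key)  # chain on prior ciphertext
        out.append(previous)
    return out


def cbc_decrypt(blocks: List[int], key: int, iv: int) -> bytes:
    out, previous = [], iv
    for c in blocks:
        out.append(toy_decrypt_block(c, key) ^ previous)
        previous = c
    return b"".join(p.to_bytes(BLOCK_BYTES, "big") for p in out)


key, iv = secrets.randbits(64), secrets.randbits(64)
message = b"SAMEDATA" * 3  # three identical plaintext blocks
print("ECB:", [hex(c) for c in ecb_encrypt(message, key)])       # identical blocks repeat
print("CBC:", [hex(c) for c in cbc_encrypt(message, key, iv)])   # chaining hides the repeat
```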

Counter

Counter Mode (CTM) uses a “counter” function to generate a nonce that is used for each block encryption. Different blocks have different nonces, enabling parallelization of processing and substantial speed improvements. The sequence of operations is to take the counter function value (nonce), encrypt using the key, and then XOR with plaintext. Each block can be done independently, resulting in the ability to multithread the processing. CTM is also abbreviated CTR in some circles.
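The sequence of operations described above fits in a few lines of Python. Counter mode only ever runs the cipher in the forward direction, so this sketch assumes HMAC-SHA256 (truncated to 16 bytes) as a stand-in for the block cipher. A real implementation would use AES. The names `keystream_block`, `ctr_block`, and `ctr_crypt` are hypothetical.

```python
import hashlib
import hmac
import os

BLOCK = 16


def keystream_block(key: bytes, nonce: bytes, counter: int) -> bytes:
    # "Encrypt" nonce || counter under the key; HMAC-SHA256 stands in for AES here
    return hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()[:BLOCK]


def ctr_block(key: bytes, nonce: bytes, data: bytes, index: int) -> bytes:
    # XOR one block of data with its own keystream block; no other block is needed
    chunk = data[index * BLOCK:(index + 1) * BLOCK]
    return bytes(c ^ k for c, k in zip(chunk, keystream_block(key, nonce, index)))


def ctr_crypt(key: bytes, nonce: bytes, data: bytes) -> bytes:
    # Blocks are independent, so these calls could run on separate threads
    n_blocks = (len(data) + BLOCK - 1) // BLOCK
    return b"".join(ctr_block(key, nonce, data, i) for i in range(n_blocks))


key, nonce = os.urandom(32), os.urandom(8)
msg = b"Counter mode needs no padding."
ct = ctr_crypt(key, nonce, msg)
print(ctr_crypt(key, nonce, ct) == msg)        # True: decryption is the same operation
print(ctr_block(key, nonce, ct, 1) == msg[16:])  # True: block 1 decrypted on its own
```

Because the last keystream block is simply truncated, no padding is needed. A nonce must never be reused with the same key. Doing so repeats the keystream, and XORing the two ciphertexts then reveals the XOR of the two plaintexts.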

CCM (Counter with CBC-MAC) is a mode of operation that combines Counter mode encryption with CBC-MAC, a MAC built from CBC (Cipher Block Chaining, described previously), for authentication. This method was designed for block ciphers with a block length of 128 bits, and the length of the message and any associated data must be known in advance. This means it is not an “online” form of AEAD, which can begin processing input before its total length is known.
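The authentication half of CCM, CBC-MAC, can be sketched in Python. The sketch shows why the length must be known up front: the total length is encoded at the very start, before any data block is chained in. CCM's first block similarly encodes the message length. This is a simplified illustration, not the CCM format. It assumes HMAC-SHA256 truncated to 16 bytes as a stand-in for the block cipher, and `prf` and `cbc_mac` are hypothetical names.

```python
import hashlib
import hmac

BLOCK = 16


def prf(key: bytes, block: bytes) -> bytes:
    # Stand-in for the block cipher: HMAC-SHA256 truncated to one block
    return hmac.new(key, block, hashlib.sha256).digest()[:BLOCK]


def cbc_mac(key: bytes, message: bytes) -> bytes:
    # The total length goes first, so it must be known before processing starts
    data = len(message).to_bytes(BLOCK, "big") + message
    data += b"\x00" * (-len(data) % BLOCK)      # zero-pad to whole blocks
    state = bytes(BLOCK)                         # all-zero starting chaining value
    for i in range(0, len(data), BLOCK):
        # CBC chaining: XOR the next block into the state, then "encrypt"
        state = prf(key, bytes(a ^ b for a, b in zip(state, data[i:i + BLOCK])))
    return state                                 # final chaining value is the tag


tag = cbc_mac(b"mac key", b"transfer 100")
print(tag.hex())
```

Without the length prefix, `b"abc"` and `b"abc\x00"` would pad to the same blocks and share a tag.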

Galois/Counter Mode (GCM) is an extension of CTM via the addition of a Galois mode of authentication. This adds an authentication function to the cipher mode, and the Galois field multiplications used in the process can be parallelized, providing efficient operations. GCM is employed in many international standards, including IEEE 802.11ad and 802.1AE. NIST specifies GCM, and its authentication-only variant GMAC, in Special Publication 800-38D. AES-GCM cipher suites for TLS are described in IETF RFC 5288.
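The Galois field part of GCM is a hash called GHASH. It chains 16-byte blocks through multiplication in GF(2^128), using a hash key H. The Python sketch below follows the multiplication algorithm and bit order of NIST SP 800-38D. It assumes H is simply given; in real GCM, H is the block cipher's encryption of an all-zero block. It also omits the associated data, the length block, and the final tag encryption. The sketch then shows why GHASH parallelizes: the chained result equals a sum of independent terms X_i · H^(n-i+1).

```python
R = 0xE1 << 120  # reduction constant for x^128 + x^7 + x^2 + x + 1 in GCM's bit order


def gf128_mul(x: int, y: int) -> int:
    """Multiply two GF(2^128) elements, following NIST SP 800-38D's bit order."""
    z, v = 0, x
    for i in range(127, -1, -1):                 # y's bits, leftmost first
        if (y >> i) & 1:
            z ^= v                               # add (XOR) the current multiple
        v = (v >> 1) ^ R if v & 1 else v >> 1   # multiply v by "x" and reduce
    return z


def blocks_of(data: bytes) -> list:
    assert len(data) % 16 == 0, "whole 16-byte blocks only in this sketch"
    return [int.from_bytes(data[i:i + 16], "big") for i in range(0, len(data), 16)]


def ghash_chained(h: int, data: bytes) -> int:
    """Sequential form: Y_i = (Y_{i-1} XOR X_i) * H."""
    y = 0
    for x in blocks_of(data):
        y = gf128_mul(y ^ x, h)
    return y


def ghash_parallel(h: int, data: bytes) -> int:
    """Same value as XOR of independent products X_i * H^(n-i+1)."""
    xs = blocks_of(data)
    powers, p = [], h
    for _ in xs:                                 # H^1, H^2, ..., H^n
        powers.append(p)
        p = gf128_mul(p, h)
    acc = 0
    for x, hp in zip(xs, reversed(powers)):      # X_1 with H^n, ..., X_n with H^1
        acc ^= gf128_mul(x, hp)
    return acc


h = 0x0123456789ABCDEF0011223344556677   # arbitrary example hash key
data = b"sixteen byte blk" * 3
print(ghash_chained(h, data) == ghash_parallel(h, data))   # True
```

Each product in `ghash_parallel` depends only on its own block and a precomputed power of H, so the products can be computed concurrently.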

Authenticated Encryption with Associated Data (AEAD)

Authenticated Encryption with Associated Data (AEAD) is a form of encryption designed to provide both confidentiality and authenticity services. A wide range of authenticated modes are available for developers, including GCM, OCB, and EAX.

Tech Tip

Authenticated Encryption

Why do you need authenticated encryption? To protect block ciphers against a wide range of chosen ciphertext attacks, such as POODLE, one needs a second layer of protection, using a MAC implementation such as HMAC-SHA. This is done as follows:

1. Compute the MAC on the ciphertext, not the plaintext.

2. Use different keys: one for encryption and a different one for the MAC.

This specific, yet generic, prescription adds steps and complications for developers. To resolve this, special modes for block ciphers called Authenticated Encryption (AE) or Authenticated Encryption with Associated Data (AEAD) were devised. These provide the same protection as the block cipher–MAC combination, but in a single function with a single key. AE(AD) modes were developed to make implementation easier, but adoption has been slow. Use of AEAD is one of the significant improvements in TLS 1.3, which allows only AEAD cipher suites.
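The two-step prescription above (MAC the ciphertext, use separate keys) is often called encrypt-then-MAC. The Python sketch below is a minimal version. The cipher is a hypothetical toy stream cipher (HMAC-SHA256 in counter mode) standing in for something like AES-CTR. `seal` and `open_sealed` are illustrative names, not a library API.

```python
import hashlib
import hmac
import os


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy stream cipher: HMAC-SHA256 over nonce || counter (stand-in for AES-CTR)
    out, counter = b"", 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return out[:length]


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    nonce = os.urandom(12)
    ct = bytes(p ^ k for p, k in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    # Step 1: the MAC covers the ciphertext (and nonce), not the plaintext
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag


def open_sealed(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    nonce, ct, tag = blob[:12], blob[12:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    # Verify before decrypting, using a constant-time comparison
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed")
    return bytes(c ^ k for c, k in zip(ct, _keystream(enc_key, nonce, len(ct))))


# Step 2: two independent keys, one for encryption and one for the MAC
enc_key, mac_key = os.urandom(32), os.urandom(32)
blob = seal(enc_key, mac_key, b"wire $100 to Bob")
print(open_sealed(enc_key, mac_key, blob))   # b'wire $100 to Bob'
```

Any change to the nonce, ciphertext, or tag makes `open_sealed` raise before a single byte is decrypted. An AEAD mode such as GCM provides this same guarantee in one call with one key.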

OCB is Offset Codebook Mode, a patented implementation that offers the highest performance, but because of patents, it is not included in any international standards. EAX solves the patent problem, but likewise has not been adopted by any international standards. This leaves GCM, which was described in the previous section.

Block vs. Stream

When encryption operations are performed on data, there are two primary modes of operation: block and stream. Block operations are performed on blocks of data, enabling both transposition and substitution operations. This is possible when large pieces of data are present for the operations. Stream data has become more common with audio and video across the Web. The primary characteristic of stream data is that it is not available in large chunks, but either bit by bit or byte by byte—pieces too small for block operations. Stream ciphers operate using substitution only and therefore offer less robust protection than block ciphers. Table 5.1 compares and contrasts block and stream ciphers.

Table 5.1 Comparison of Block and Stream Ciphers

Symmetric Encryption Summary

Symmetric algorithms are important because they are comparatively fast and have few computational requirements. Their main weakness is that two geographically distant parties both need to have a key that matches the other key exactly (see Figure 5.11).

• Figure 5.11 Symmetric keys must match exactly to encrypt and decrypt the message.

Asymmetric Encryption

Asymmetric encryption is more commonly known as public key cryptography. Asymmetric encryption is in many ways completely different from symmetric encryption. While both are used to keep data from being seen by unauthorized users, asymmetric cryptography uses two keys instead of one. It was invented by Whitfield Diffie and Martin Hellman in 1975. The system uses a pair of keys: a private key that is kept secret and a public key that can be sent to anyone. The system’s security relies on resistance to deducing one key, given the other, and thus retrieving the plaintext from the ciphertext.

Asymmetric encryption creates the possibility of digital signatures and also addresses the main weakness of symmetric cryptography: the need for both parties to hold a matching key in advance. The ability to send messages securely without senders and receivers having had prior contact has become one of the basic concerns with secure communication. Digital signatures enable faster and more efficient exchange of all kinds of documents, including legal documents. With strong algorithms and adequate key lengths, these systems can provide strong security.

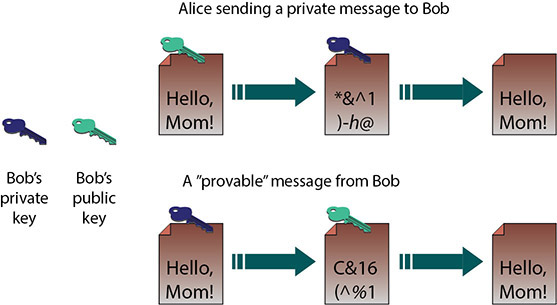

Asymmetric encryption involves two separate but mathematically related keys. The keys are used in an opposing fashion: one key undoes the actions of the other, and vice versa. So, as shown in Figure 5.12, if you encrypt a message with one key, the other key is used to decrypt the message. In the top example, Alice wishes to send a private message to Bob, so she uses Bob’s public key to encrypt the message. Then, because only Bob’s private key can decrypt the message, only Bob can read it. In the lower example, Bob wishes to send a message with proof that it is from him. Because he encrypts it with his private key, anyone who decrypts it with his public key knows the message came from Bob.

• Figure 5.12 Using an asymmetric algorithm

Tech Tip

Key Pairs

Public key cryptography always involves two keys—a public key and a private key—that together are known as a key pair. The public key is made widely available to anyone who may need it, while the private key is closely safeguarded and shared with no one.

Public keys are distributed using certificates. A digital certificate binds a public key to an entity and carries additional information that can be used to verify the current validity of the certificate and the key. When keys are exchanged between machines, such as during an SSL/TLS handshake, the exchange is done by passing certificates.

Asymmetric methods are significantly slower than symmetric methods and therefore are typically not suitable for bulk encryption.

Public key systems typically work by using hard math problems. One of the more common methods relies on the difficulty of factoring large numbers. These functions are often called trapdoor functions, as they are difficult to process without the key but easy to process when you have the key—the trapdoor through the function. For example, given a prime number (say, 293) and another prime (such as 307), it is an easy function to multiply them together to get 89,951. Given 89,951, it is not simple to find the factors 293 and 307 unless you know one of them already. Computers can easily multiply very large primes with hundreds or thousands of digits but cannot easily factor the product.
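The asymmetry of the trapdoor can be shown with the numbers from the paragraph above. Multiplying takes one operation. Undoing it by naive trial division means testing candidate divisors up to the square root of the product. This is a minimal Python sketch; `smallest_factor` is an illustrative helper.

```python
import math

p, q = 293, 307
n = p * q            # the easy direction: a single multiplication
print(n)             # 89951


def smallest_factor(n: int) -> int:
    """Trial division: the naive way to undo the multiplication."""
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return d
    return n         # no divisor found, so n is prime


f = smallest_factor(n)
print(f, n // f)     # 293 307
```

Trial division needs up to √n attempts, which is trivial here. For a modulus of thousands of bits it is hopeless, and even the best known factoring algorithms remain impractical at those sizes.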

The strength of these functions is very important. Because an attacker is likely to have access to the public key, they can encrypt any plaintext they choose and produce the matching ciphertext. This allows instant checking of guesses that are made about the keys of the algorithm. Public key systems, because of their design, also form the basis for digital signatures, a cryptographic method for securely identifying people. RSA, Diffie-Hellman, elliptic curve cryptography (ECC), and ElGamal are all popular asymmetric protocols. We will look at all of them and their suitability for different functions.

Cross Check

Digital Certificates

In Chapter 7 you will learn more about digital certificates and how encryption is important to a public key infrastructure. Why is an asymmetric algorithm so important to digital signatures?

Diffie-Hellman

The Diffie-Hellman (DH) algorithm was created in 1976 by Whitfield Diffie and Martin Hellman. This protocol is one of the most common key exchange protocols in use today. Diffie-Hellman is important because it enables the sharing of a secret key between two parties who have not contacted each other before. It plays a role in the electronic key exchange method of Transport Layer Security (TLS), Secure Shell (SSH), and IP Security (IPsec) protocols.

The protocol, like RSA, uses large prime numbers to work. Two users agree on two numbers, P and G, with P being a sufficiently large prime number and G being the generator. Each user picks a secret number: user 1 picks a, and user 2 picks b. Then both users compute their public numbers:

User 1: $X = G^a \bmod P$, with X being user 1’s public number

User 2: $Y = G^b \bmod P$, with Y being user 2’s public number

The users then exchange public numbers. User 1 now knows P, G, a, X, and Y, and user 2 knows P, G, b, X, and Y.

User 1 computes $K_a = Y^a \bmod P$

User 2 computes $K_b = X^b \bmod P$

Because $Y^a = (G^b)^a = G^{ab} = (G^a)^b = X^b \pmod{P}$, we have $K_a = K_b = K$, and both users now know the new shared secret, K.
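The exchange can be traced with deliberately tiny numbers in Python. The parameters P = 23 and G = 5 and the secrets a = 6 and b = 15 are illustrative choices, far too small for real use; real deployments use standardized groups of 2048 bits or more. The helper names `public_number` and `shared_secret` are hypothetical.

```python
import secrets

# Toy public parameters: far too small for real use
P, G = 23, 5


def public_number(secret: int) -> int:
    return pow(G, secret, P)              # X = G^a mod P  or  Y = G^b mod P


def shared_secret(other_public: int, secret: int) -> int:
    return pow(other_public, secret, P)   # K = Y^a mod P  or  K = X^b mod P


# Fixed secrets for a reproducible walk-through
a, b = 6, 15
X, Y = public_number(a), public_number(b)
Ka, Kb = shared_secret(Y, a), shared_secret(X, b)
print(X, Y, Ka, Kb)                       # 8 19 2 2

# Fresh random secrets per session, in the spirit of ephemeral use
a2 = secrets.randbelow(P - 2) + 1         # 1 .. P-2
b2 = secrets.randbelow(P - 2) + 1
print(shared_secret(public_number(b2), a2) == shared_secret(public_number(a2), b2))  # True
```

Only X and Y cross the network. An eavesdropper who sees 8 and 19 must solve a discrete logarithm to recover a or b.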

This is the basic algorithm, and although methods have been created to strengthen it, Diffie-Hellman is still in wide use. It remains very effective because of the nature of what it is protecting—a temporary, automatically generated secret key that is good only for a single communication session.

Variations of Diffie-Hellman include Diffie-Hellman Ephemeral (DHE), Elliptic Curve Diffie-Hellman (ECDH), and Elliptic Curve Diffie-Hellman Ephemeral (ECDHE).

Diffie-Hellman is the gold standard for key exchange, and for the CompTIA Security+ exam, you should understand the subtle differences between the different forms: DH, DHE, ECDH, and ECDHE.

Groups

Diffie-Hellman (DH) groups determine the strength of the key used in the key exchange process. Higher group numbers are more secure, but require additional time to compute the key. DH group 1 consists of a 768-bit key, group 2 consists of a 1024-bit key, and group 5 comes with a 1536-bit key. Higher number groups are also supported, with correspondingly longer keys.

DHE

There are several variants of the Diffie-Hellman key exchange. Diffie-Hellman Ephemeral (DHE) is a variant where a temporary key is used in the key exchange rather than the same key being reused over and over. An ephemeral key is a key that is not reused, but rather is only used once, thus improving security by reducing the amount of material that can be analyzed via cryptanalysis to break the cipher.

Ephemeral keys improve security. They are cryptographic keys that are used only once after generation.

ECDHE

Elliptic Curve Diffie-Hellman (ECDH) is a variant of the Diffie-Hellman protocol that uses elliptic curve cryptography. ECDH can also be used with ephemeral keys, becoming Elliptic Curve Diffie-Hellman Ephemeral (ECDHE), to provide perfect forward secrecy.

RSA Algorithm

The RSA algorithm is one of the first public key cryptosystems ever invented. It can be used for both encryption and digital signatures. RSA is named after its inventors, Ron Rivest, Adi Shamir, and Leonard Adleman, and was first published in 1977.

This algorithm uses the product of two very large prime numbers and works on the principle of difficulty in factoring such large numbers. It’s best to choose large prime numbers that are from 100 to 200 digits in length and are equal in length. These two primes will be P and Q. Randomly choose an encryption key, E, so that E is greater than 1, is less than P * Q, and is odd. E must also be relatively prime to (P – 1) and (Q – 1). Then compute the decryption key D:

$D = E^{-1} \bmod ((P - 1)(Q - 1))$

Now that the encryption key and decryption key have been generated, the two prime numbers can be discarded, but they should not be revealed.

To encrypt a message, divide it into blocks whose numerical values are each less than the product of P and Q. Then

$C_i = M_i^E \bmod (P \cdot Q)$

C is the output block of ciphertext matching the block length of the input message, M. To decrypt a message, take ciphertext, C, and use this function:

$M_i = C_i^D \bmod (P \cdot Q)$

The use of the second key retrieves the plaintext of the message.
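The key generation and block equations above can be run in Python with textbook-sized toy primes (P = 61, Q = 53, E = 17). These values are illustrative. The sketch is "textbook" RSA with no padding, which is insecure in practice; real systems add padding schemes such as OAEP for encryption and PSS for signatures. `rsa_keygen` and `apply_key` are hypothetical helper names, and `pow(e, -1, phi)` needs Python 3.8 or later.

```python
import math


def rsa_keygen(p: int, q: int, e: int):
    phi = (p - 1) * (q - 1)
    # E must be odd, between 1 and P*Q, and relatively prime to (P-1)(Q-1)
    if e % 2 == 0 or not (1 < e < p * q) or math.gcd(e, phi) != 1:
        raise ValueError("unsuitable encryption key E")
    d = pow(e, -1, phi)                  # D = E^-1 mod ((P-1)(Q-1))
    return (e, p * q), (d, p * q)        # (public key, private key)


def apply_key(key, m: int) -> int:
    exp, n = key
    return pow(m, exp, n)                # C = M^E mod PQ, or M = C^D mod PQ


public, private = rsa_keygen(61, 53, 17)
print(public, private)                   # (17, 3233) (2753, 3233)

c = apply_key(public, 65)                # encrypt with the public key
print(c, apply_key(private, c))          # 2790 65

sig = apply_key(private, 65)             # "sign" with the private key
print(apply_key(public, sig) == 65)      # True: anyone can verify with the public key
```

The last two steps show the opposing use of the key pair. Whichever key is applied first, the other one undoes it.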

This is a simple function, but its security has withstood the test of more than 20 years of analysis. Considering the effectiveness of RSA’s security and the ability to have two keys, why are symmetric encryption algorithms needed at all? The answer is speed. RSA in software can be 100 times slower than DES, and in hardware it can be even slower.

RSA can be used to perform both regular encryption and digital signatures. Digital signatures try to duplicate the functionality of a physical signature on a document using encryption. Typically, RSA and the other public key systems are used in conjunction with symmetric key cryptography. Public key, the slower protocol, is used to exchange the symmetric key (or shared secret), and then the communication uses the faster symmetric key protocol. This process is known as electronic key exchange.

Because the security of RSA is based on the supposed difficulty of factoring large numbers, the main weaknesses are in the implementations of the protocol. RSA was patented in the United States until 2000, but it was a de facto standard for many years.

ElGamal

ElGamal can be used for both encryption and digital signatures. Taher Elgamal designed the system in the early 1980s. This system was never patented and is free for use. A variant of its signature scheme is the basis of the Digital Signature Algorithm (DSA), the U.S. government standard for digital signatures.

The system is based on the difficulty of calculating discrete logarithms in a finite field. Three numbers are needed to generate a key pair. User 1 chooses a prime, P, and two random numbers, F and D. F and D should both be less than P. Then user 1 can calculate the public key A like so:

$A = D^F \bmod P$

Then A, D, and P are shared with the second user, with F being the private key. To encrypt a message, M, a random key, k, is chosen that is relatively prime to P – 1. Then

$C_1 = D^k \bmod P$

$C_2 = A^k M \bmod P$

C1 and C2 make up the ciphertext. Decryption is done by

$M = C_2 \cdot (C_1^F)^{-1} \bmod P$
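The encryption equations can be checked in Python using the same symbols as the text. P = 467, D = 2, and F = 127 are illustrative toy values, not recommendations; real systems use primes of 2048 bits or more. The helpers `random_k`, `elgamal_encrypt`, and `elgamal_decrypt` are hypothetical names. Division mod P is done by multiplying with a modular inverse (`pow(x, -1, P)`, Python 3.8+).

```python
import math
import secrets

P = 467            # toy prime
D = 2              # public base
F = 127            # user 1's private key (F < P)
A = pow(D, F, P)   # public key: A = D^F mod P


def random_k(p: int) -> int:
    # Pick k relatively prime to P - 1, as the text describes
    while True:
        k = secrets.randbelow(p - 2) + 1
        if math.gcd(k, p - 1) == 1:
            return k


def elgamal_encrypt(m: int):
    k = random_k(P)
    return pow(D, k, P), (pow(A, k, P) * m) % P    # C1 = D^k, C2 = A^k * M (mod P)


def elgamal_decrypt(c1: int, c2: int) -> int:
    s = pow(c1, F, P)                   # C1^F = D^(kF) = A^k
    return (c2 * pow(s, -1, P)) % P     # M = C2 / C1^F (mod P)


c1, c2 = elgamal_encrypt(42)
print(elgamal_decrypt(c1, c2))          # 42
```

Because k is fresh for every message, encrypting the same M twice almost always gives a different (C1, C2) pair.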

ElGamal uses a different function for digital signatures. To sign a message, M, once again choose a random value, k, that is relatively prime to P – 1. Then

$C_1 = D^k \bmod P$

$C_2 = (M - C_1 F) \cdot k^{-1} \bmod (P - 1)$

C1 concatenated to C2 is the digital signature.
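A signature built this way can be checked with the public key alone. The standard ElGamal verification test, which the text does not state, is that $A^{C_1} \cdot C_1^{C_2} \equiv D^M \pmod{P}$. It holds because the exponent $F C_1 + k C_2$ reduces to M modulo P – 1. The Python sketch below uses illustrative toy values (P = 467, D = 2, F = 127) and treats M as a small integer; in practice M would be a hash of the message. `elgamal_sign` and `elgamal_verify` are hypothetical names.

```python
import math
import secrets

P, D, F = 467, 2, 127      # toy prime, base, and private key
A = pow(D, F, P)           # public key


def elgamal_sign(m: int):
    while True:            # k must be relatively prime to P - 1
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            break
    c1 = pow(D, k, P)                                   # C1 = D^k mod P
    c2 = ((m - c1 * F) * pow(k, -1, P - 1)) % (P - 1)   # C2 = (M - C1*F) / k mod (P-1)
    return c1, c2


def elgamal_verify(m: int, c1: int, c2: int) -> bool:
    # Valid iff A^C1 * C1^C2 = D^M (mod P)
    return (pow(A, c1, P) * pow(c1, c2, P)) % P == pow(D, m, P)


c1, c2 = elgamal_sign(100)
print(elgamal_verify(100, c1, c2))   # True
print(elgamal_verify(101, c1, c2))   # False: the signature does not match another message
```

Only the holder of F can produce a valid (C1, C2) pair, but anyone with A, D, and P can check it.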

ElGamal is an effective algorithm and has been in use for some time. It is used primarily for digital signatures. Like all asymmetric cryptography, it is slower than symmetric cryptography.