Infrastructure Security

Infrastructure security begins with the design of the infrastructure itself. The proper use of components improves not only performance but security as well. Network components are not isolated from the computing environment and are an essential aspect of a total computing environment. From the routers, switches, and cables that connect the devices, to the firewalls and gateways that manage communication, from the network design, to the protocols that are employed—all these items play essential roles in both performance and security.

Devices

A complete network computer solution in today’s business environment consists of more than just client computers and servers. Devices are needed to connect the clients and servers and to regulate the traffic between them. Devices are also needed to expand this network beyond simple client computers and servers to include yet other devices, such as wireless and handheld systems. Devices come in many forms and with many functions—from hubs and switches, to routers, wireless access points, to special-purpose devices such as virtual private network (VPN) devices. Each device has a specific network function and plays a role in maintaining network infrastructure security.

Cross Check

The Importance of Availability

In Chapter 2 we examined the CIA of security: confidentiality, integrity, and availability. Unfortunately, the availability component is often overlooked, even though availability is what has moved computing into the modern networked framework and plays a significant role in security.

Security failures can occur in two ways. First, a failure can allow unauthorized users access to resources and data they are not authorized to use, thus compromising information security. Second, a failure can prevent a user from accessing resources and data the user is authorized to use. This second failure is often overlooked, but it can be as serious as the first. The primary goal of network infrastructure security is to allow all authorized use and deny all unauthorized use of resources.

Workstations

Most users are familiar with workstations, the client computers used in the client/server model. The workstation is the machine that sits on the desktop and is used every day for sending and reading e-mail, creating spreadsheets, writing reports in a word processing program, and playing games. If a workstation is connected to a network, it is an important part of the security solution for the network. Many threats to information security can start at a workstation, but much can be done in a few simple steps to provide protection from many of these threats.

Cross Check

Workstations and Servers

Servers and workstations are key nodes on networks. The specifics for securing these devices are covered in Chapter 14.

Servers

Servers are the computers in a network that host applications and data for everyone to share. Servers come in many sizes—from small single-CPU boxes that may be less powerful than a workstation, to multiple-CPU monsters, up to and including mainframes. The operating systems used by servers range from Windows Server, to Linux, to Multiple Virtual Storage (MVS) and other mainframe operating systems. The OS on a server tends to be more robust than the OS on a workstation system and is designed to service multiple users over a network at the same time. Servers can host a variety of applications, including web servers, databases, e-mail servers, file servers, print servers, and servers for middleware applications.

Mobile Devices

Mobile devices such as laptops, tablets, and mobile phones are the latest devices to join the corporate network. Mobile devices can create a major security gap, as a user may access separate e-mail accounts—one personal, without anti-malware protection, and the other corporate. Mobile devices are covered in detail in Chapter 12.

Device Security, Common Concerns

As more and more interactive devices (that is, devices you can interact with programmatically) are being designed, a new threat source has appeared. In an attempt to build security into devices, typically, a default account and password must be entered to enable the user to access and configure the device remotely. These default accounts and passwords are well known in the hacker community, so one of the first steps you must take to secure such devices is to change the default credentials. Anyone who has purchased a home office router knows the default configuration settings and can check to see if another user has changed theirs. If they have not, this is a huge security hole, allowing outsiders to “reconfigure” their network devices.

Tech Tip

Default Accounts

Always reconfigure all default accounts on all devices before exposing them to external traffic. This is to prevent others from reconfiguring your devices based on known access settings.
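
The check described in this tip can be sketched in a few lines of Python. This is only an illustration: the `KNOWN_DEFAULTS` list, the device names, and the inventory format are all hypothetical, and a real audit would rely on a maintained list of vendor defaults and would never store passwords in plaintext.

```python
# Hypothetical set of well-known default (username, password) pairs.
KNOWN_DEFAULTS = {("admin", "admin"), ("admin", "password"), ("root", "root")}


def devices_with_default_credentials(inventory):
    """Return the names of devices still using a known default login."""
    return [
        name
        for name, (username, password) in inventory.items()
        if (username, password) in KNOWN_DEFAULTS
    ]


# Hypothetical inventory: device name -> (username, password)
inventory = {
    "office-router": ("admin", "admin"),
    "nas-01": ("storage_admin", "x9!Lq#27vTz"),
    "ip-camera": ("root", "root"),
}

flagged = devices_with_default_credentials(inventory)
print(flagged)
```

Any device that appears in `flagged` should be reconfigured before it is exposed to external traffic.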

Network-Attached Storage

Because of the speed of today’s Ethernet networks, it is possible to manage data storage across the network. This has led to a type of storage known as network-attached storage (NAS). The combination of inexpensive hard drives, fast networks, and simple application-based servers has made NAS devices in the terabyte range affordable for even home users. Because of the large size of video files, this has become popular for some users as a method of storing TV and video libraries. Because NAS is a network device, it is susceptible to various attacks, including sniffing of credentials and a variety of brute force attacks to obtain access to the data.

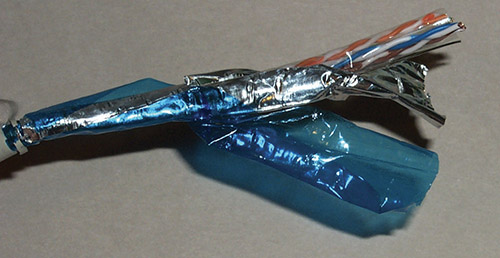

Removable Storage

Because removable devices can move data outside of the corporate-controlled environment, their security needs must be addressed. Removable devices can bring unprotected or corrupted data into the corporate environment. All removable devices should be scanned by antivirus software upon connection to the corporate environment. Corporate policies should address the copying of data to removable devices. Many mobile devices can be connected via USB to a system and used to store data—and in some cases vast quantities of data. This capability can be used to avoid some implementations of data loss prevention (DLP) mechanisms.

Virtualization

Virtualization technology is used to allow a computer to have more than one OS present and, in many cases, operating at the same time. Virtualization is an abstraction of the OS layer, creating the ability to host multiple OSs on a single piece of hardware. One of the major advantages of virtualization is the separation of the software and the hardware, creating a barrier that can improve many system functions, including security. The underlying hardware is referred to as the host machine, and on it is a host OS. Either the host OS has built-in hypervisor capability or an application is needed to provide the hypervisor function to manage the virtual machines (VMs). The virtual machines are typically referred to as the guest OSs.

Newer OSs are designed to natively incorporate virtualization hooks, enabling virtual machines to be employed with greater ease. There are several common virtualization solutions, including Microsoft Hyper-V, VMware, Oracle VM VirtualBox, Parallels, and Citrix Xen. It is important to distinguish between virtualization and boot loaders that allow different OSs to boot on hardware. Apple’s Boot Camp allows you to boot into Microsoft Windows on Apple hardware. This is different from Parallels, a product with complete virtualization capability for Apple hardware.

Virtualization offers much in terms of host-based management of a system. From snapshots that allow easy rollback to previous states, faster system deployment via preconfigured images, ease of backup, and the ability to test systems, virtualization offers many advantages to system owners. The separation of the operational software layer from the hardware layer can offer many improvements in the management of systems.

Hypervisor

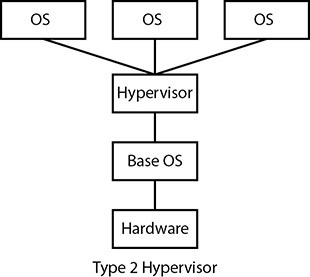

To enable virtualization, a hypervisor is employed. A hypervisor is a low-level program that allows multiple operating systems to run concurrently on a single host computer. Hypervisors use a thin layer of code to allocate resources in real time. The hypervisor acts as the traffic cop that controls I/O and memory management. Two types of hypervisors exist: Type 1 and Type 2.

A hypervisor is the interface between a virtual machine and the host machine hardware. Hypervisors are the layer that enables virtualization.

Type 1

Type 1 hypervisors run directly on the system hardware. They are referred to as native, bare-metal, or embedded hypervisors in typical vendor literature. Type 1 hypervisors are designed for speed and efficiency, as they do not have to operate through another OS layer. Examples of Type 1 hypervisors include KVM (Kernel-based Virtual Machine, a Linux implementation), Xen (Citrix Linux implementation), Microsoft Windows Server Hyper-V (a headless version of the Windows OS core), and VMware’s vSphere/ESXi platforms. All of these are designed for the high-end server market in enterprises and allow multiple VMs on a single set of server hardware. These platforms come with management toolsets to facilitate VM management in the enterprise.

Type 2

Type 2 hypervisors run on top of a host operating system. In the beginning of the virtualization movement, Type 2 hypervisors were the most popular. Administrators could buy the VM software and install it on a server they already had running. Typical Type 2 hypervisors include Oracle’s VirtualBox and VMware’s VMware Workstation Player. These are designed for limited numbers of VMs, typically in a desktop or small server environment.

Application Cells/Containers

A hypervisor-based virtualization system enables multiple OS instances to coexist on a single hardware platform. Application cells/containers are the same idea, but rather than having multiple independent OSs, a container holds the portions of an OS that it needs separate from the kernel. In essence, multiple containers can share an OS, yet have separate memory, CPU, and storage threads, thus guaranteeing that they will not interact with other containers. This allows multiple instances of an application or different applications to share a host OS with virtually no overhead. This also allows portability of the application to a degree separate from the OS stack. There are multiple major container platforms in existence, and the industry has coalesced around a standard form called the Open Container Initiative, designed to enable standardization and the market stability of the container marketplace.

One can think of containers as the evolution of the VM concept into the application space. A container consists of an entire runtime environment: an application, plus all the dependencies, libraries and other binaries, and configuration files needed to run it, all bundled into one package. This eliminates the differences between the development, test, and production environments because everything the application depends on travels inside the container as a standard unit. Because the application platform, including its dependencies, is containerized, any differences in OS distributions, libraries, and underlying infrastructure are abstracted away and rendered moot.

VM Sprawl Avoidance

VM sprawl is the uncontrolled spreading of disorganization caused by a lack of an organizational structure when many similar elements require management. Just as you can lose a file or an e-mail and have to go hunt for it, virtual machines can suffer from being misplaced. When you only have a few files, sprawl isn’t a problem, but when you have hundreds of files, developed over a long period of time, and not necessarily in an organized manner, sprawl does become a problem. The same is happening to virtual machines in the enterprise. In the end, a virtual machine is a file that contains a copy of a working machine’s disk and memory structures. If an enterprise only has a couple of virtual machines, then keeping track of them is relatively easy. But as the number grows, sprawl can set in. VM sprawl is a symptom of a disorganized structure. If the servers in a server farm could move between racks at random, there would be an issue finding the correct machine when you needed to go physically find it. The same effect occurs with VM sprawl. As virtual machines are moved around, finding the one you want in a timely manner can be an issue. VM sprawl avoidance is a real thing and needs to be implemented via policy. You can fight VM sprawl by using naming conventions and proper storage architectures so that the files are in the correct directories, thus making finding a specific VM easy and efficient. But like any filing system, it is only good if it is followed; therefore, policies and procedures need to ensure that proper VM naming and filing are done on a regular basis.
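
A naming policy is easier to follow when it can be checked automatically. The sketch below assumes a purely hypothetical convention of `<site>-<role>-<environment>-<number>`; the pattern and the VM names are invented for illustration, and an organization would substitute its own rules.

```python
import re

# Hypothetical convention: three-letter site, role, environment, two-digit number
VM_NAME = re.compile(r"[a-z]{3}-[a-z]+-(dev|test|prod)-\d{2}")


def nonconforming(vm_names):
    """Return the VM names that do not follow the naming convention."""
    return [name for name in vm_names if not VM_NAME.fullmatch(name)]


vm_names = ["nyc-web-prod-01", "Copy of test VM", "lon-db-dev-12", "temp2"]
strays = nonconforming(vm_names)
print(strays)
```

Running a check like this on a schedule is one way to make sure the filing system is actually being followed.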

VM Escape Protection

When multiple VMs are operating on a single hardware platform, one concern is VM escape. This occurs when software (typically malware) or an attacker escapes from one VM to the underlying OS and then resurfaces in a different VM. When you examine the problem from a logical point of view, you see that both VMs use the same RAM, the same processors, and so on; therefore, the difference is one of timing and specific combinations of elements within the VM environment. The VM system is designed to provide protection, but as with all things of larger scale, the devil is in the details. Large-scale VM environments have specific modules designed to detect escape and provide VM escape protection to other modules.

Virtual environments have several specific topics that may be asked on the exam. Understand the difference between Type 1 and Type 2 hypervisors, and where you would use each. Understand the differences between VM sprawl and VM escape as well as the effects of each.

Snapshots

A snapshot is a point-in-time saving of the state of a virtual machine. Snapshots have great utility because they are like a savepoint for an entire system. Snapshots can be used to roll a system back to a previous point in time, undo operations, or provide a quick means of recovery from a complex, system-altering change that has gone awry. Snapshots act as a form of backup and are typically much faster than normal system backup and recovery operations.

Patch Compatibility

Having an OS operate in a virtual environment does not change the need for security associated with the OS. Patches are still needed and should be applied, independent of the virtualization status. Virtualization should have no effect on the utility of patching, because the patch applies to the guest OS.

Host Availability/Elasticity

When you set up a virtualization environment, protecting the host OS and hypervisor level is critical for system stability. The best practice is to avoid the installation of any applications on the host-level machine. All apps should be housed and run in a virtual environment. This aids in the system stability by providing separation between the application and the host OS. The term elasticity refers to the ability of a system to expand/contract as system requirements dictate. One of the advantages of virtualization is that a virtual machine can be moved to a larger or smaller environment based on need. If a VM needs more processing power, then migrating the VM to a new hardware system with greater CPU capacity allows the system to expand without you having to rebuild it.

Security Control Testing

When applying security controls to a system to manage security operations, you need to test the controls to ensure they are providing the desired results. Putting a system into a VM does not change this requirement. In fact, it may complicate it because of the nature of the relationship between the guest OS and the hypervisor. It is essential to specifically test all security controls inside the virtual environment to ensure their behavior is still effective.

Sandboxing

Sandboxing refers to the quarantining or isolation of a system from its surroundings. Virtualization can be used as a form of sandboxing with respect to an entire system. You can build a VM, test something inside the VM, and, based on the results, make a decision with regard to stability or whatever concern was present.

Networking

Networks are used to connect devices together. Networks are composed of components that perform networking functions to move data between devices. Networks begin with network interface cards and then continue in layers of switches and routers. Specialized networking devices are used for specific purposes, such as security and traffic management.

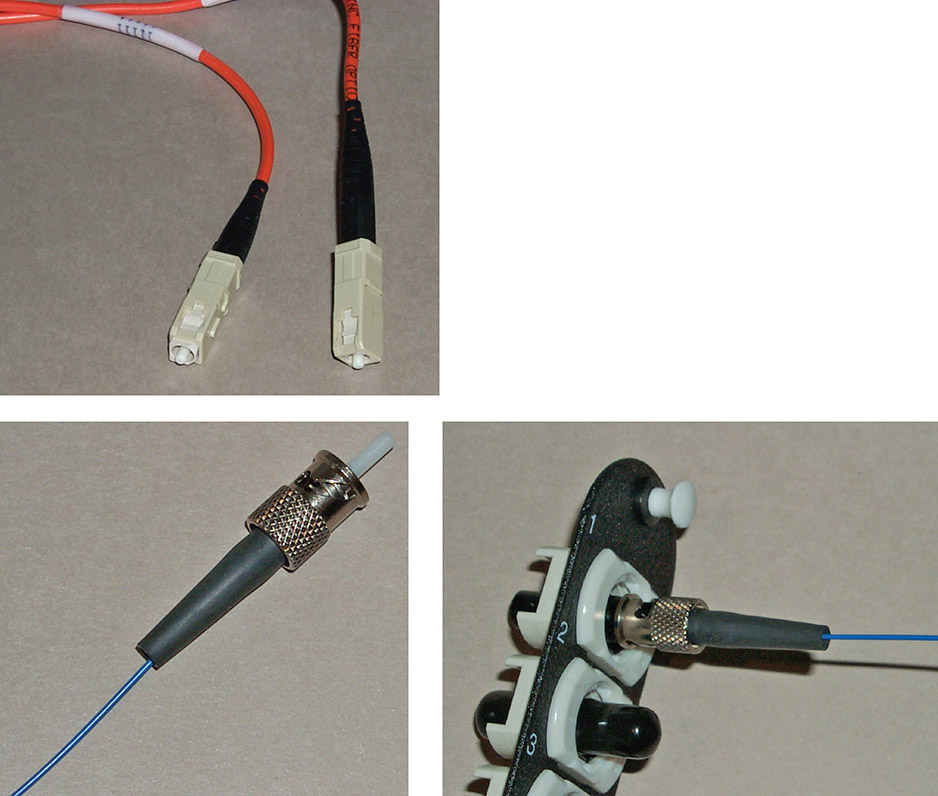

Network Interface Cards

To connect a server or workstation to a network, a device known as a network interface card (NIC) is used. A NIC is a card with a connector port for a particular type of network connection, either Ethernet or Token Ring. The most common network type in use for LANs is the Ethernet protocol, and the most common connector is the RJ-45 connector. While the term card is retained in the name, most network interfaces are built into the motherboard of a system. Only in servers with the need for multiple network connections is one likely to find an actual card for network connectivity.

A NIC is the physical connection between a computer and the network. The purpose of a NIC is to provide lower-level protocol functionality from the OSI (Open Systems Interconnection) model. Because the NIC defines the type of physical layer connection, different NICs are used for different physical protocols. NICs come in single-port and multiport varieties, and most workstations use only a single-port NIC, as only a single network connection is needed. For servers, multiport NICs are used to increase the number of network connections, thus increasing the data throughput to and from the network.

Each NIC port is serialized with a unique code, 48 bits long, referred to as a Media Access Control (MAC) address. These are created by the manufacturer, with 24 bits representing the manufacturer and 24 bits being a serial number, guaranteeing uniqueness. MAC addresses are used in the addressing and delivery of network packets to the correct machine and in a variety of security situations. Unfortunately, these addresses can be changed, or “spoofed,” rather easily. In fact, it is common for personal routers to clone a MAC address to allow users to use multiple devices over a network connection that expects a single MAC.
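
The 24/24-bit split described above can be shown with a short Python sketch. The function name `split_mac` and the sample address are made up for illustration.

```python
def split_mac(mac):
    """Split a 48-bit MAC address into its manufacturer and serial halves.

    Accepts colon- or hyphen-separated hexadecimal and returns two
    6-digit hex strings (24 bits each).
    """
    digits = mac.replace(":", "").replace("-", "").lower()
    if len(digits) != 12 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return digits[:6], digits[6:]


manufacturer, serial = split_mac("00:1A:2B:3C:4D:5E")
print(manufacturer, serial)
```

Because the address is just a value reported by the NIC, nothing in this structure prevents a device from presenting a different one, which is why MAC-based controls can be spoofed.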

Tech Tip

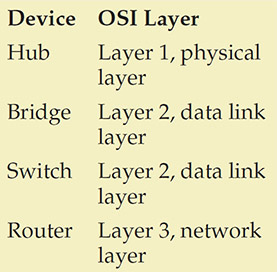

Device/OSI Level Interaction

Different network devices operate using different levels of the OSI networking model to move packets from device to device, as detailed in the following table:

Hubs

A hub is networking equipment that connects devices that use the same protocol at the physical layer of the OSI model. A hub allows multiple machines in an area to be connected together in a star configuration, with the hub as the center. This configuration can save significant amounts of cable and is an efficient method of configuring an Ethernet backbone. All connections on a hub share a single collision domain, a small cluster in a network where collisions occur. As network traffic increases, it can become limited by collisions. The collision issue has made hubs obsolete in newer, higher-performance networks, with inexpensive switches and switched Ethernet keeping costs low and usable bandwidth high. Hubs also create a security weakness in that all connected devices see all traffic, enabling sniffing and eavesdropping to occur. In today’s networks, hubs have all but disappeared, being replaced by low-cost switches.

Bridges

Bridges are networking equipment that connect devices using the same protocol at the data link layer of the OSI model. A bridge operates at the data link layer, filtering traffic based on MAC addresses. Bridges can reduce collisions by separating pieces of a network into two separate collision domains, but this only cuts the collision problem in half. Although bridges are useful, a better solution is to use switches for network connections.

Switches

A switch forms the basis for connections in most Ethernet-based LANs. Although hubs and bridges still exist, in today’s high-performance network environment, switches have replaced both. A switch has separate collision domains for each port. This means that for each port, two collision domains exist: one from the port to the client on the downstream side, and one from the switch to the network upstream. When full duplex is employed, collisions are virtually eliminated from the two nodes: host and client.

Switches operate at the data link layer, while routers act at the network layer. For intranets, switches have become what routers are on the Internet—the device of choice for connecting machines. As switches have become the primary network connectivity device, additional functionality has been added to them. A switch is usually a Layer 2 device, but Layer 3 switches incorporate routing functionality.

Hubs have been replaced by switches because switches provide a number of features that hubs cannot. For example, the switch improves network performance by filtering traffic. It filters traffic by only sending the data to the port on the switch where the destination system resides. The switch knows what port each system is connected to and sends the data only to that port. The switch also provides security features, such as the option to disable a port so that it cannot be used without authorization. The switch also supports a feature called port security, which allows the administrator to control which systems can send data to each of the ports. The switch uses the MAC address of the systems to incorporate traffic-filtering and port security features, which is why it is considered a Layer 2 device.
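
The filtering behavior described above can be modeled as a table that maps MAC addresses to ports. The following is a simplified sketch, not a description of any vendor's implementation; the class name and addresses are invented, and real switches also age out entries and handle broadcast addresses.

```python
class LearningSwitch:
    """Toy model of a Layer 2 switch that learns which port each MAC is on."""

    def __init__(self, port_count):
        self.ports = list(range(1, port_count + 1))
        self.mac_table = {}  # MAC address -> port number

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the sender's port, then return the ports to forward on."""
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: send out every port except the source port
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the same port; nothing to forward
        return [out_port]


switch = LearningSwitch(port_count=4)
first = switch.receive(1, "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02")
reply = switch.receive(2, "aa:aa:aa:aa:aa:02", "aa:aa:aa:aa:aa:01")
later = switch.receive(1, "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02")
print(first, reply, later)
```

The first frame goes to every other port because the destination is still unknown; once both stations have sent traffic, each frame is delivered to a single port, which is what keeps other stations from seeing it.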

MAC filtering can be employed on switches, permitting only specified MACs to connect to them. This can be bypassed if an attacker can learn an allowed MAC because they can clone the permitted MAC onto their own NIC and spoof the switch. To filter edge connections, IEEE 802.1X is more secure (it’s covered in Chapter 11). MAC filtering can also be referred to as MAC limiting. Be careful to pay attention to context on the exam, however, because MAC limiting also can refer to preventing flooding attacks on switches by limiting the number of MAC addresses that can be “learned” by a switch.

Port Security

Switches can perform a variety of security functions. Switches work by moving packets from inbound connections to outbound connections. While moving the packets, it is possible for switches to inspect the packet headers and enforce security policies. Port security is a capability provided by switches that enables you to control which devices and how many of them are allowed to connect via each port on a switch. Port security operates through the use of MAC addresses. Although not perfect—MAC addresses can be spoofed—port security can provide useful network security functionality.

Port address security based on Media Access Control (MAC) addresses can determine whether a packet is allowed or blocked from a connection. This is the very function that a firewall uses for its determination, and this same functionality is what allows an 802.1X device to act as an “edge device.”

Port security has three variants:

Static learning: A specific MAC address is assigned to a port. This is useful for fixed, dedicated hardware connections. The disadvantage is that the MAC addresses need to be known and programmed in advance, making this good for defined connections but not good for visiting connections.

Dynamic learning: Allows the switch to learn MAC addresses when they connect. Dynamic learning is useful when you expect a small, limited number of machines to connect to a port.

Sticky learning: Also allows multiple devices on a port, and stores the learned information in memory that persists through reboots. This prevents an attacker from changing settings by power cycling the switch.
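
The three variants differ mainly in how the allowed list is populated and whether it survives a restart. The sketch below is a simplified model under those assumptions; the class `SecurePort`, its parameters, and the `reboot` method are hypothetical and do not correspond to any switch's configuration syntax.

```python
class SecurePort:
    """Toy model of one switch port with MAC-based port security."""

    def __init__(self, mode, max_macs=1, allowed=()):
        if mode not in ("static", "dynamic", "sticky"):
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode
        self.max_macs = max_macs
        self.allowed = set(allowed)  # configured or learned addresses

    def admit(self, mac):
        """Return True if a frame from this MAC may use the port."""
        if mac in self.allowed:
            return True
        if self.mode == "static":
            return False  # only preconfigured addresses are accepted
        if len(self.allowed) < self.max_macs:
            self.allowed.add(mac)  # learn the new address
            return True
        return False  # learning limit reached

    def reboot(self):
        """Dynamically learned entries are lost; static and sticky persist."""
        if self.mode == "dynamic":
            self.allowed.clear()


sticky = SecurePort("sticky", max_macs=1)
sticky.admit("aa:aa:aa:aa:aa:01")
sticky.reboot()
print(sticky.admit("aa:aa:aa:aa:aa:99"))  # the attacker's address after a power cycle
```

In this model, power cycling helps an attacker only against the dynamic variant, which matches the motivation given for sticky learning.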

Broadcast Storm Prevention

One form of attack is a flood. There are numerous types of flooding attacks: ping floods, SYN floods, ICMP floods (Smurf attacks), and traffic flooding. Flooding attacks are used as a form of denial of service (DoS) to a network or system. Detecting flooding attacks is relatively easy, but there is a difference between detecting the attack and mitigating the attack. Flooding can be actively managed through dropping connections or managing traffic. Flood guards act by managing traffic flows. By monitoring the traffic rate and percentage of bandwidth occupied by broadcast, multicast, and unicast traffic, a flood guard can detect when to block traffic to manage flooding.

Flood guards are commonly implemented in firewalls and IDS/IPS solutions to prevent DoS and DDoS attacks.
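
The rate-monitoring idea behind a flood guard can be sketched as a threshold check on the share of bandwidth taken by broadcast traffic. The function name, the 20 percent threshold, and the sample figures are assumptions chosen for illustration; real products use their own counters and thresholds.

```python
def flood_guard(broadcast_samples_bps, link_capacity_bps, threshold=0.2):
    """Return one decision per sample: True means block broadcast traffic.

    Each sample is the broadcast traffic rate, in bits per second,
    measured over one monitoring interval.
    """
    decisions = []
    for broadcast_bps in broadcast_samples_bps:
        share = broadcast_bps / link_capacity_bps
        decisions.append(share > threshold)
    return decisions


# Three intervals on a 1 Gbps link: 1%, 15%, and 40% broadcast traffic
decisions = flood_guard([10_000_000, 150_000_000, 400_000_000], 1_000_000_000)
print(decisions)
```

Detection here is a single comparison; the harder design question, as noted above, is what to do once the threshold is crossed.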

Bridge Protocol Data Unit (BPDU) Guard

To manage the Spanning Tree Protocol (STP), devices and switches can use Bridge Protocol Data Unit (BPDU) packets. These are specially crafted messages with frames that contain information about the Spanning Tree Protocol. The issue with BPDU packets is, while necessary in some circumstances, their use results in a recalculation of the STP, and this consumes resources. An attacker can issue multiple BPDU packets to a system to force multiple recalculations that serve as a network denial-of-service attack. To prevent this form of attack, edge devices can be configured with Bridge Protocol Data Unit (BPDU) guards that detect and drop these packets. While this eliminates the use of this functionality from some locations, the resultant protection is worth the minor loss of functionality.
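
As a rough illustration of the detect-and-drop behavior described above, the following sketch models an edge port that discards BPDU frames when the guard is enabled. The class and port names are hypothetical, and frames are reduced to a type label; some real implementations disable the port entirely rather than only dropping the frame.

```python
class EdgePort:
    """Toy model of a switch port with an optional BPDU guard."""

    def __init__(self, name, bpdu_guard=True):
        self.name = name
        self.bpdu_guard = bpdu_guard
        self.dropped_bpdus = 0

    def accept(self, frame_type):
        """Return True if the frame is passed on, False if it is dropped."""
        if frame_type == "bpdu" and self.bpdu_guard:
            self.dropped_bpdus += 1  # count it so the event can be reported
            return False
        return True


desk_port = EdgePort("desk-12")
results = [desk_port.accept(t) for t in ("data", "bpdu", "bpdu", "data")]
print(results, desk_port.dropped_bpdus)
```

Ports that connect to other switches would leave the guard off, since they need BPDUs to participate in the spanning tree.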

Loop Prevention

Switches operate at layer 2 of the OSI reference model, and at this level there is no countdown mechanism to kill packets that get caught in loops or on paths that will never resolve. This means that a mechanism is needed for loop prevention. On layer 3, a time-to-live (TTL) counter is used, but there is no equivalent on layer 2. The layer 2 space acts as a mesh, where potentially the addition of a new device can create loops in the existing device interconnections. Open Shortest Path First (OSPF) is a link-state routing protocol that is commonly used between gateways in a single autonomous system. To prevent loops, a technology called spanning trees is employed by virtually all switches. STP allows for multiple, redundant paths, while breaking loops to ensure a proper broadcast pattern. STP is a data link layer protocol and is approved in IEEE standards 802.1D, 802.1w, 802.1s, and 802.1Q. It acts by trimming connections that are not part of the spanning tree connecting all of the nodes. STP messages are carried in BPDU frames described in the previous section.
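
The effect of trimming connections can be illustrated with a graph search. The sketch below is not the STP algorithm itself, which elects a root using bridge IDs and compares path costs; it uses a plain breadth-first search from a chosen root to show how a loop-free tree leaves some redundant links blocked. Switch names are invented.

```python
from collections import deque


def spanning_tree(links, root):
    """Return (active, blocked) link sets; active links form a loop-free tree."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)

    visited = {root}
    active = set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for peer in sorted(neighbors[node]):
            if peer not in visited:
                visited.add(peer)
                active.add(frozenset((node, peer)))
                queue.append(peer)

    all_links = {frozenset(link) for link in links}
    return active, all_links - active


# Three switches cabled in a triangle, which contains a loop
links = [("S1", "S2"), ("S2", "S3"), ("S3", "S1")]
active, blocked = spanning_tree(links, root="S1")
print(sorted(sorted(link) for link in blocked))
```

The blocked link stays physically connected, so it remains available as a redundant path if one of the active links fails and the tree is recalculated.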

BPDU guards, MAC filtering, and loop detection are all mechanisms used to provide port security. Understand the differences in their actions. MAC filtering verifies MAC addresses before allowing a connection, BPDU guards prevent tampering with BPDU packets, and loop detection finds loops in local networks.

Dynamic Host Configuration Protocol (DHCP) Snooping

When an administrator sets up a network, they usually assign IP addresses to systems in one of two ways: statically or dynamically through DHCP. A static IP address assignment is fairly simple: the administrator decides what IP address to assign to a server or PC, and that IP address stays assigned to that system until the administrator decides to change it. The other popular method is through the Dynamic Host Configuration Protocol (DHCP). Under DHCP, when a system boots up or is connected to the network, it sends out a broadcast query looking for a DHCP server. All available DHCP servers reply to this request. Should there be more than one active DHCP server within the network, the client uses the one whose answer reaches them first. From this DHCP server, the client then receives the address assignment. DHCP is very popular in large user environments where the cost of assigning and tracking IP addresses among hundreds or thousands of user systems is extremely high.

The weakness of using the first response received allows a rogue DHCP server to reconfigure the network. A rogue DHCP server can route the client to a different gateway, an attack known as DHCP spoofing. Attackers can use a fake gateway to record data transfers, obtaining sensitive information, before sending data on to its intended destination, which is known as a man-in-the-middle attack. Incorrect addresses can lead to a DoS attack blocking key network services. Dynamic Host Configuration Protocol (DHCP) snooping is a defensive measure against an attacker who attempts to use a rogue DHCP device. DHCP snooping prevents malicious DHCP servers from establishing contact by examining DHCP responses at the switch level and not sending those from unauthorized DHCP servers. This method is detailed in RFC 7513, co-authored by Cisco and adopted by many switch vendors.
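
The switch-level check can be sketched as a filter on which ports are allowed to carry server-originated DHCP messages. This is a minimal model under the assumption that an administrator has marked certain ports as trusted; the function name and port numbers are hypothetical.

```python
# DHCP message types that only a server should send
SERVER_MESSAGES = {"OFFER", "ACK", "NAK"}


def snoop(trusted_ports, in_port, message_type):
    """Return True to forward a DHCP message, False to drop it."""
    if message_type in SERVER_MESSAGES and in_port not in trusted_ports:
        return False  # server reply arriving from an untrusted port
    return True


trusted = {24}  # hypothetical uplink port toward the legitimate DHCP server

print(snoop(trusted, in_port=3, message_type="DISCOVER"))
print(snoop(trusted, in_port=24, message_type="OFFER"))
print(snoop(trusted, in_port=7, message_type="OFFER"))
```

Client requests pass from any port, but an offer arriving on an ordinary access port is discarded before the client can see it, so the race to answer first no longer matters.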

Media Access Control (MAC) Filtering

MAC filtering is the selective admission of packets based on a list of approved Media Access Control (MAC) addresses. Employed on switches, this method is used to provide a means of machine authentication. In wired networks, this enjoys the protection afforded by the wires, making interception of signals to determine their MAC addresses difficult. In wireless networks, this same mechanism suffers from the fact that an attacker can see the MAC addresses of all traffic to and from the access point and then can spoof the MAC addresses that are permitted to communicate via the access point.

MAC filtering can be employed on wireless access points but can be bypassed by attackers observing allowed MAC addresses and spoofing the allowed MAC address for the wireless card.

Network traffic segregation by switches can also act as a security mechanism, preventing access to some devices from other devices. This can prevent someone from accessing critical data servers from a machine in a public area.

One of the security concerns with switches is that, like routers, they are intelligent network devices and are therefore subject to hijacking by hackers. Should a hacker break into a switch and change its parameters, they might be able to eavesdrop on specific or all communications, virtually undetected. Switches are commonly administered using the Simple Network Management Protocol (SNMP) and Telnet protocol, both of which have a serious weakness in that they send passwords across the network in cleartext. A hacker armed with a sniffer that observes maintenance on a switch can capture the administrative password. This allows the hacker to come back to the switch later and configure it as an administrator. An additional problem is that switches are shipped with default passwords, and if these are not changed when the switch is set up, they offer an unlocked door to a hacker.

To secure a switch, you should disable all access protocols other than a secure serial line or a secure protocol such as Secure Shell (SSH). Using only secure methods to access a switch will limit the exposure to hackers and malicious users. Maintaining secure network switches is even more important than securing individual boxes because the span of control to intercept data is much wider on a switch, especially if it’s reprogrammed by a hacker.

Switches are also subject to electronic attacks, such as ARP poisoning and MAC flooding. ARP poisoning is when a device sends spoofed ARP traffic that associates its own MAC address with another device's IP address, using the ARP table-update mechanism to change the ARP tables of other hosts. MAC flooding is when a switch is bombarded with packets from different MAC addresses, filling the switch table and forcing the device to respond by sending traffic out all ports, acting as a hub. This enables an attacker connected to one port to sniff traffic intended for devices on other ports.
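MAC flooding can be sketched with a toy learning switch. This is a simplified model under stated assumptions: the `ToySwitch` class, its table size, and the addresses are hypothetical, and real switches also age out entries and may offer port-security limits that this sketch omits.

```python
class ToySwitch:
    """Learning switch with a bounded MAC table (capacity is hypothetical)."""

    def __init__(self, ports, table_size):
        self.ports = list(ports)
        self.table_size = table_size
        self.mac_table = {}  # source MAC -> port it was learned on

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the source if there is room, then return the output ports."""
        if src_mac in self.mac_table or len(self.mac_table) < self.table_size:
            self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is not None:
            return [out_port]
        # Unknown destination: flood to every port except the ingress port.
        return [p for p in self.ports if p != in_port]


HOST_A = "aa:aa:aa:aa:aa:01"   # on port 1
HOST_B = "bb:bb:bb:bb:bb:02"   # on port 2
BROADCAST = "ff:ff:ff:ff:ff:ff"

switch = ToySwitch(ports=[1, 2, 3, 4], table_size=4)
switch.receive(1, HOST_A, BROADCAST)            # host A is learned

# The attacker on port 3 sends frames from many bogus source addresses,
# filling the remaining table entries.
for i in range(10):
    switch.receive(3, f"de:ad:be:ef:00:{i:02x}", BROADCAST)

reply = switch.receive(2, HOST_B, HOST_A)       # B cannot be learned: table full
flooded = switch.receive(1, HOST_A, HOST_B)     # so traffic to B is flooded
```

Because host B never makes it into the full table, frames addressed to B go out every other port, including port 3, where the attacker is listening.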

Routers

A router is a network traffic management device used to connect different network segments together. Routers operate at the network layer (Layer 3) of the OSI model, using the network address (typically an IP address) to route traffic and using routing protocols to determine optimal routing paths across a network. Routers form the backbone of the Internet, moving traffic from network to network, inspecting packets from every communication as they move traffic in optimal paths.

Routers operate by examining each packet, looking at the destination address, and using algorithms and tables to determine where to send the packet next. Because only the header must be examined to determine the next hop, this process can be done quickly.
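The table lookup can be sketched as follows. The text does not name a specific algorithm, so this sketch assumes longest-prefix matching, the usual rule for IP forwarding; the routing table entries and next-hop addresses are hypothetical.

```python
import ipaddress

# Hypothetical routing table: (destination network, next-hop address).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "203.0.113.1"),    # default route
    (ipaddress.ip_network("10.0.0.0/8"), "10.255.0.1"),
    (ipaddress.ip_network("10.20.0.0/16"), "10.20.0.1"),
]


def next_hop(destination: str) -> str:
    """Return the next hop for the most specific route containing the address."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if addr in net]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # The route with the longest prefix is the most specific match.
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return hop
```

For example, `next_hop("10.20.5.9")` selects the /16 route even though the /8 and default routes also match. Production routers perform this lookup in specialized hardware rather than by scanning a list.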

Access control lists (ACLs) can require significant effort to establish and maintain. Creating an individual ACL is a straightforward task; the effort lies in keeping the lists accurate as they grow. Used judiciously, ACLs yield security benefits with a limited amount of maintenance.

Routers use access control lists (ACLs) as a method of deciding whether a packet is allowed to enter the network. With ACLs, it is also possible to examine the source address and determine whether or not to allow a packet to pass. This allows routers equipped with ACLs to drop packets according to rules built into the ACLs. This can be a cumbersome process to set up and maintain, and as the ACL grows in size, routing efficiency can be decreased. It is also possible to configure some routers to act as quasi–application gateways, performing stateful packet inspection and using contents as well as IP addresses to determine whether or not to permit a packet to pass. This can tremendously increase the time for a router to pass traffic and can significantly decrease router throughput. Configuring ACLs and other aspects of setting up routers for this type of use are beyond the scope of this book.

One serious security concern regarding router operation is limiting who has access to the router and control of its internal functions. Like a switch, a router can be accessed using SNMP and Telnet and programmed remotely. Because of the geographic separation of routers, this can become a necessity because many routers in the world of the Internet can be hundreds of miles apart, in separate locked structures. Physical control over a router is absolutely necessary because if any device—be it a server, switch, or router—is physically accessed by a hacker, it should be considered compromised. Therefore, such access must be prevented. As with switches, it is important to ensure that the administrator password is never passed in the clear, that only secure mechanisms are used to access the router, and that all of the default passwords are reset to strong passwords.

As with switches, the most assured point of access for router management control is via the serial control interface port. This allows access to the control aspects of the router without having to deal with traffic-related issues. For internal company networks, where the geographic dispersion of routers may be limited, third-party solutions to allow out-of-band remote management exist. This allows complete control over the router in a secure fashion, even from a remote location, although additional hardware is required.

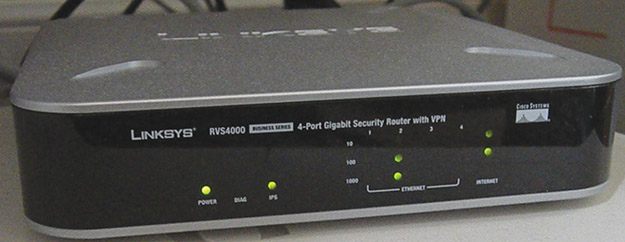

Routers are available from numerous vendors and come in sizes big and small. A typical small home office router for use with cable modem/DSL service is shown in Figure 10.1. Larger routers can handle traffic of up to tens of gigabytes per second per channel, using fiber-optic inputs and moving tens of thousands of concurrent Internet connections across the network. These routers, which can cost hundreds of thousands of dollars, form an essential part of e-commerce infrastructure, enabling large enterprises such as Amazon and eBay to serve many customers concurrently.

• Figure 10.1 A small home office router for cable modem/DSL

Security Devices

A range of devices can be employed at the network layer to instantiate security functionality. Devices can be used for intrusion detection, network access control, and a wide range of other security functions. Each device has a specific network function and plays a role in maintaining network infrastructure security.

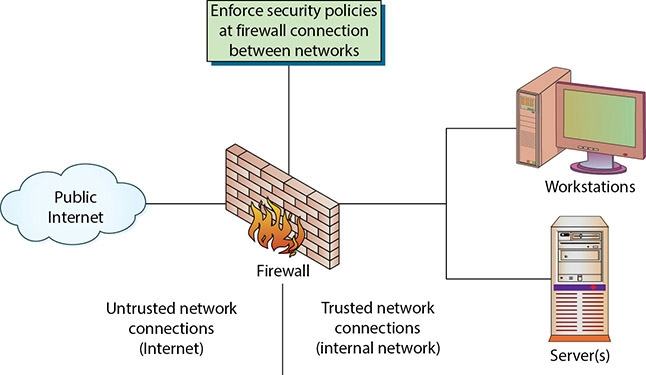

Firewalls

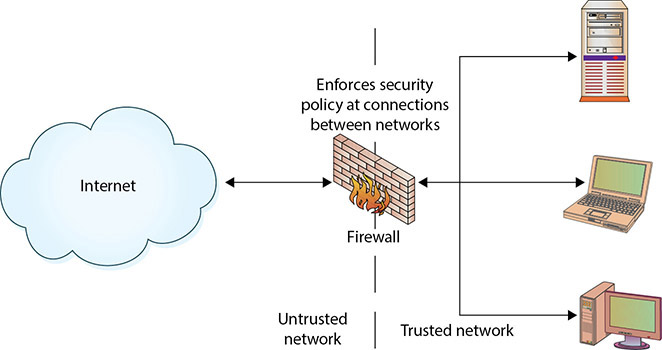

A firewall is a network device—hardware, software, or a combination thereof—whose purpose is to enforce a security policy across its connections by allowing or denying traffic to pass into or out of the network. A firewall is a lot like a gate guard at a secure facility. The guard examines all the traffic trying to enter the facility—cars with the correct sticker or delivery trucks with the appropriate paperwork are allowed in; everyone else is turned away (see Figure 10.2).

• Figure 10.2 How a firewall works

A firewall is a network device (hardware, software, or combination of the two) that enforces a security policy. All network traffic passing through the firewall is examined; traffic that does not meet the specified security criteria or violates the firewall policy is blocked.

The heart of a firewall is the set of security policies that it enforces. Management determines what is allowed in the form of network traffic between devices, and these policies are used to build rule sets for the firewall devices used to filter traffic across the network.

Tech Tip

Firewall Rules

Firewalls are in reality policy enforcement devices. Each rule in a firewall should have a policy behind it, as this is the only manner of managing firewall rule sets over time. The steps for successful firewall management begin and end with maintaining a policy list by firewall of the traffic restrictions to be imposed. Managing this list via a configuration-management process is important to prevent network instabilities from faulty rule sets or unknown “left-over” rules.

Firewall security policies are a series of rules that define what traffic is permissible and what traffic is to be blocked or denied. These are not universal rules, and there are many different sets of rules for a single company with multiple connections. A web server connected to the Internet may be configured only to allow traffic on ports 80 (HTTP) and 443 (HTTPS) and have all other ports blocked. An e-mail server may have only necessary ports for e-mail open, with others blocked. A key to security policies for firewalls is the same as has been seen for other security policies: the principle of least access. Only allow the necessary access for a function; block or deny all unneeded functionality. How an organization deploys its firewalls determines what is needed for security policies for each firewall. You may even have a small office/home office (SOHO) firewall at your house, such as the RVS4000 shown in Figure 10.3. This device from Linksys provides both routing and firewall functions.

• Figure 10.3 Linksys RVS4000 SOHO firewall

Orphan or left-over rules are rules that were created for a special purpose (testing, emergency, visitor or vendor, and so on) and then forgotten about and not removed after their use ended. These rules can clutter up a firewall and result in unintended challenges to the network security team.
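One way to catch orphan rules is to keep a record for each rule that names its supporting policy and, for temporary rules, a planned removal date. The sketch below assumes such a record exists; the `RuleRecord` fields and the policy identifiers are hypothetical and do not correspond to any particular firewall product.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class RuleRecord:
    rule_id: str
    policy_ref: Optional[str]   # policy item that justifies the rule, if any
    expires: Optional[date]     # planned removal date for temporary rules


def find_orphans(records, today):
    """Return IDs of rules with no policy behind them or past their end date."""
    orphans = []
    for record in records:
        no_policy = record.policy_ref is None
        expired = record.expires is not None and record.expires < today
        if no_policy or expired:
            orphans.append(record.rule_id)
    return orphans


records = [
    RuleRecord("r1", "POL-7", None),               # permanent, policy-backed
    RuleRecord("r2", None, None),                  # no policy on file
    RuleRecord("r3", "POL-9", date(2024, 1, 31)),  # vendor visit, now over
    RuleRecord("r4", "POL-9", date(2030, 1, 1)),   # temporary, still valid
]
orphans = find_orphans(records, today=date(2024, 6, 1))
```

Running a check like this as part of the configuration-management process gives the security team a short list of rules to review and remove.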

The security topology determines what network devices are employed at what points in a network. At a minimum, the corporate connection to the Internet should pass through a firewall, as shown in Figure 10.4. This firewall should block all network traffic except that specifically authorized by the security policy. This is actually easy to do: blocking communications on a port is simply a matter of telling the firewall to close the port. The issue comes in deciding what services are needed and by whom, and thus which ports should be open and which should be closed. This is what makes a security policy useful but, in some cases, difficult to maintain.

• Figure 10.4 Logical depiction of a firewall protecting an organization from the Internet

The perfect firewall policy is one that the end user never sees and one that never allows even a single unauthorized packet to enter the network. As with any other perfect item, it is rare to find the perfect security policy for a firewall.

To develop a complete and comprehensive security policy, it is first necessary to have a complete and comprehensive understanding of your network resources and their uses. Once you know what your network will be used for, you will have an idea of what to permit. Also, once you understand what you need to protect, you will have an idea of what to block. Firewalls are designed to block attacks before they get to a target machine. Common targets are web servers, e-mail servers, DNS servers, FTP services, and databases. Each of these has separate functionality, and each of these has separate vulnerabilities. Once you have decided who should receive what type of traffic and what types should be blocked, you can administer this through the firewall.

Stateless vs. Stateful

The typical network firewall operates on IP addresses and ports, in essence a stateless interaction with the traffic. The most basic firewalls simply shut off either ports or IP addresses, dropping those packets upon arrival. While useful, they are limited in their abilities, as many services can have differing IP addresses, and maintaining the list of allowed IP addresses is time consuming and, in many cases, not practical. But for internal systems (say, a database server) that have no need to connect to a myriad of other servers, having a simple IP-based firewall in front can limit access to the desired set of machines.

A stateful packet inspection firewall can act upon the state condition of a conversation—is this a new conversation or a continuation of a conversation, and did it originate inside or outside the firewall? This provides greater capability, but at a processing cost that has scalability implications. To look at all packets and determine the need for each and its data requires stateful packet filtering. Stateful means that the firewall maintains, or knows, the context of a conversation. In many cases, rules depend on the context of a specific communications connection. For instance, traffic from an outside server to an inside server may be allowed if it is requested but blocked if it is not. A common example is a request for a web page. This request is actually a series of requests to multiple servers, each of which can be allowed or blocked. As many communications will be transferred to high ports (above 1023), stateful monitoring will enable the system to determine which sets of high-port communications are permissible and which should be blocked. A disadvantage of stateful monitoring is that it takes significant resources and processing to perform this type of monitoring, and this reduces efficiency and requires more robust and expensive hardware.
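The idea of keeping conversation context can be sketched as a small connection table. This is a minimal illustration, not how any specific product works: the `StatefulFirewall` class is hypothetical, and real implementations also track protocol state (such as TCP flags) and expire idle entries.

```python
class StatefulFirewall:
    """Track conversations started from inside; admit only matching replies."""

    def __init__(self):
        # Each entry: (inside_ip, inside_port, outside_ip, outside_port)
        self.connections = set()

    def outbound(self, src_ip, src_port, dst_ip, dst_port):
        """Record a conversation initiated from inside and let it out."""
        self.connections.add((src_ip, src_port, dst_ip, dst_port))
        return True

    def inbound(self, src_ip, src_port, dst_ip, dst_port):
        """Admit an inbound packet only if it continues a known conversation."""
        return (dst_ip, dst_port, src_ip, src_port) in self.connections


fw = StatefulFirewall()
# An inside client opens a web connection from a high port.
fw.outbound("192.168.1.10", 49152, "198.51.100.7", 443)

# The server's reply to that high port matches the recorded conversation.
reply_ok = fw.inbound("198.51.100.7", 443, "192.168.1.10", 49152)
# A packet to a different high port was never requested, so it is dropped.
unsolicited = fw.inbound("198.51.100.7", 443, "192.168.1.10", 50000)
```

The table is what lets the firewall permit one set of high-port communications while blocking another, and maintaining it for every connection is the source of the processing cost noted above.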

How Do Firewalls Work?

Firewalls enforce the established security policies. They can do this through a variety of mechanisms, including the following:

Network Address Translation (NAT) As you may remember from Chapter 9, NAT translates private (nonroutable) IP addresses into public (routable) IP addresses.

Basic packet filtering Basic packet filtering looks at each packet entering or leaving the network and then either accepts the packet or rejects the packet based on user-defined rules. Each packet is examined separately.

Stateful packet filtering Stateful packet filtering also looks at each packet, but it can examine the packet in its relation to other packets. Stateful firewalls keep track of network connections and can apply slightly different rule sets based on whether or not the packet is part of an established session.

Access control lists (ACLs) ACLs are simple rule sets applied to port numbers and IP addresses. They can be configured for inbound and outbound traffic and are most commonly used on routers and switches.

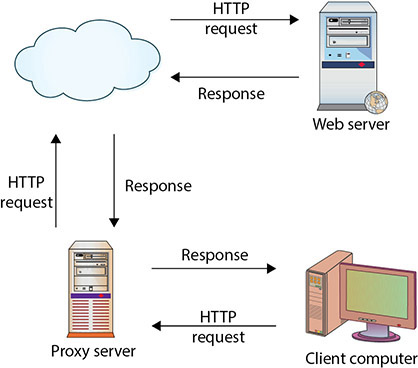

Application layer proxies An application layer proxy can examine the content of the traffic as well as the ports and IP addresses. For example, an application layer proxy has the ability to look inside a user’s web traffic, detect a malicious website attempting to download malware to the user’s system, and block the malware.

NAT is the process of modifying network address information in datagram packet headers while in transit across a traffic-routing device, such as a router or firewall, for the purpose of remapping a given address space into another. See Chapter 9 for a more detailed discussion on NAT.

One of the most basic security functions provided by a firewall is NAT. This service allows you to mask significant amounts of information from outside of the network. This allows an outside entity to communicate with an entity inside the firewall without truly knowing its address.
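The masking effect can be sketched with a small translation table. This sketch assumes the port-based form of NAT, in which many private hosts share one public address; the `NatGateway` class, the addresses, and the starting port number are hypothetical.

```python
class NatGateway:
    """Map private (address, port) pairs onto ports of one public address."""

    def __init__(self, public_ip, first_port=40000):
        self.public_ip = public_ip
        self.next_port = first_port
        self.out_map = {}  # (private_ip, private_port) -> public_port
        self.in_map = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip, private_port):
        """Return the public (address, port) an outside host will see."""
        key = (private_ip, private_port)
        if key not in self.out_map:
            self.out_map[key] = self.next_port
            self.in_map[self.next_port] = key
            self.next_port += 1
        return self.public_ip, self.out_map[key]

    def translate_inbound(self, public_port):
        """Return the private endpoint for a reply, or None if unmapped."""
        return self.in_map.get(public_port)


nat = NatGateway("203.0.113.5")
seen_by_outside = nat.translate_outbound("192.168.1.10", 5000)
second_host = nat.translate_outbound("192.168.1.11", 5000)
delivered_to = nat.translate_inbound(seen_by_outside[1])
no_mapping = nat.translate_inbound(45000)
```

The outside entity only ever sees the public address and a gateway-chosen port; the private address stays inside the table on the gateway.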

Basic packet filtering, also known as stateless packet inspection, involves looking at packets, their protocols and destinations, and checking that information against the security policy. Telnet and FTP connections may be prohibited from being established to a mail or database server, but they may be allowed for the respective service servers. This is a fairly simple method of filtering based on information in each packet header, like IP addresses and TCP/UDP ports. This will not detect and catch all undesired packets, but it is fast and efficient.

To look at all packets, determining the need for each and its data, requires stateful packet filtering. Advanced firewalls employ stateful packet filtering to prevent several types of undesired communications. Should a packet come from outside the network, in an attempt to pretend that it is a response to a message from inside the network, the firewall will have no record of it being requested and can discard it, blocking access. As many communications will be transferred to high ports (above 1023), stateful monitoring will enable the system to determine which sets of high-port communications are permissible and which should be blocked. The disadvantage to stateful monitoring is that it takes significant resources and processing to do this type of monitoring, and this reduces efficiency and requires more robust and expensive hardware. However, this type of monitoring is essential in today’s comprehensive networks, particularly given the variety of remotely accessible services.

Tech Tip

Firewalls and Access Control Lists

Many firewalls read firewall and ACL rules from top to bottom and apply the rules in sequential order to the packets they are inspecting. Typically they will stop processing rules when they find a rule that matches the packet they are examining. If the first line in your rule set reads “allow all traffic,” then the firewall will pass any network traffic coming into or leaving the firewall—ignoring the rest of your rules below that line. Many firewalls have an implied “deny all” line as part of their rule sets. This means that any traffic that is not specifically allowed by a rule will get blocked by default.

As they are in routers, switches, servers, and other network devices, ACLs are a cornerstone of security in firewalls. Just as you must protect the device from physical access, ACLs do the same task for electronic access. Firewalls can extend the concept of ACLs by enforcing them at a packet level when packet-level stateful filtering is performed. This can add an extra layer of protection, making it more difficult for an outside hacker to breach a firewall.

Many firewalls contain, by default, an implicit deny at the end of every ACL or firewall rule set. This simply means that any traffic not specifically permitted by a previous rule in the rule set is denied.
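Top-down, first-match processing with an implicit deny can be sketched in a few lines. The `Rule` structure and the example rule sets are hypothetical and far simpler than a real firewall's rule language, but they show why rule order matters.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                # "allow" or "deny"
    source: str                # CIDR block the source address must fall in
    dest_port: Optional[int]   # None matches any destination port


def evaluate(rules, src_ip, dest_port):
    """Apply rules top to bottom; the first match decides the outcome."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        source_matches = addr in ipaddress.ip_network(rule.source)
        port_matches = rule.dest_port is None or rule.dest_port == dest_port
        if source_matches and port_matches:
            return rule.action
    return "deny"  # implicit deny: nothing above permitted this traffic


web_rules = [
    Rule("deny", "198.51.100.0/24", None),   # block a known-bad network
    Rule("allow", "0.0.0.0/0", 80),          # HTTP from anywhere
    Rule("allow", "0.0.0.0/0", 443),         # HTTPS from anywhere
]

# Placing "allow all traffic" first shadows every rule below it.
shadowed_rules = [Rule("allow", "0.0.0.0/0", None)] + web_rules
```

With `web_rules`, Telnet (port 23) from any source falls through to the implicit deny. With `shadowed_rules`, the same packet is allowed because processing stops at the first line.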

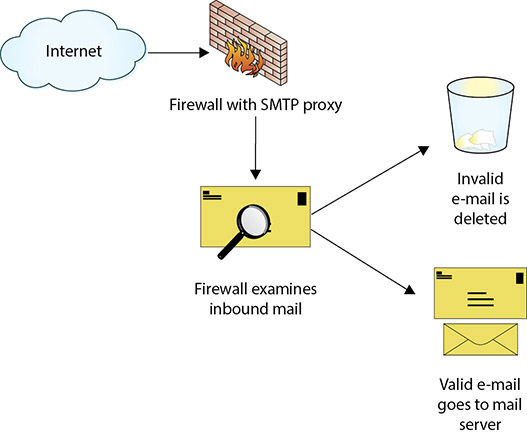

Some high-security firewalls also employ application layer proxies. As the name implies, packets are not allowed to traverse the firewall, but data instead flows up to an application that in turn decides what to do with it. For example, an SMTP proxy may accept inbound mail from the Internet and forward it to the internal corporate mail server, as depicted in Figure 10.5. While proxies provide a high level of security by making it very difficult for an attacker to manipulate the actual packets arriving at the destination, and while they provide the opportunity for an application to interpret the data prior to forwarding it to the destination, they generally are not capable of the same throughput as stateful packet-inspection firewalls. The tradeoff between security and performance is a common one and must be evaluated with respect to security needs and performance requirements.

• Figure 10.5 Firewall with SMTP application layer proxy

Tech Tip

Firewall Operations

Application layer firewalls such as proxy servers can analyze information in the header and data portion of the packet, whereas packet-filtering firewalls can analyze only the header of a packet.

Firewalls can also act as network traffic regulators in that they can be configured to mitigate specific types of network-based attacks. In denial-of-service (DoS) and distributed denial-of-service (DDoS) attacks, an attacker can attempt to flood a network with traffic. Firewalls can be tuned to detect these types of attacks and act as flood guards, mitigating the effect on the network.

Firewalls can act as flood guards, detecting and mitigating specific types of DoS/DDoS attacks.
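A flood guard can be approximated by a per-source rate limit. The sketch below uses a sliding time window; the `FloodGuard` class and its thresholds are hypothetical, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class FloodGuard:
    """Admit at most max_packets per source within any window of time."""

    def __init__(self, max_packets, window_seconds):
        self.max_packets = max_packets
        self.window = window_seconds
        self.recent = defaultdict(deque)  # source -> times of admitted packets

    def admit(self, source, now):
        stamps = self.recent[source]
        # Forget admissions that have aged out of the window.
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()
        if len(stamps) >= self.max_packets:
            return False  # this source is over its limit: drop the packet
        stamps.append(now)
        return True


guard = FloodGuard(max_packets=3, window_seconds=1.0)
burst = [guard.admit("198.51.100.7", t) for t in (0.0, 0.1, 0.2, 0.3)]
other_source = guard.admit("203.0.113.9", 0.3)
after_window = guard.admit("198.51.100.7", 1.05)
```

A per-source limit of this kind helps against a single noisy sender. A distributed attack spreads traffic across many sources, so practical flood guards also apply aggregate limits and other heuristics.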

Next-Generation Firewall (NGFW)

Firewalls operate by inspecting packets and by using rules associated with IP addresses and ports. A next-generation firewall (NGFW) has significantly more capability and is characterized by these features:

Deep packet inspection

A move beyond port/protocol inspection and blocking

Added application-level inspection

Added intrusion prevention

Bringing intelligence from outside the firewall

A next-generation firewall is more than just a firewall and IDS coupled together; it offers a deeper look at what the network traffic represents. In a legacy firewall, with port 80 open, all web traffic is allowed to pass. Using a next-generation firewall, traffic over port 80 can be separated by website, or even activity on a website (for example, allow Facebook, but not games on Facebook). Because of the deeper packet inspection and the ability to create rules based on content, traffic can be managed based on content, not merely site or URL.
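The difference between a port rule and a content-aware rule can be sketched as follows. The site name `social.example`, the rule table, and the default action are hypothetical; a real NGFW identifies applications with much richer inspection than a URL prefix.

```python
from urllib.parse import urlsplit

# Hypothetical application rules: (action, host, path prefix), first match wins.
APP_RULES = [
    ("deny", "social.example", "/games"),   # block games on the site
    ("allow", "social.example", "/"),       # allow the rest of the site
]


def legacy_decision(dest_port):
    """Port-only rule: with port 80 open, all web traffic passes."""
    return "allow" if dest_port == 80 else "deny"


def ngfw_decision(url, default="deny"):
    """Decide on host and path, not merely on the destination port."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    path = parts.path or "/"
    for action, rule_host, prefix in APP_RULES:
        under_prefix = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if host == rule_host and under_prefix:
            return action
    return default


profile = ngfw_decision("http://social.example/profile/42")
games = ngfw_decision("http://social.example/games/poker")
```

Both requests arrive on port 80, so `legacy_decision(80)` treats them identically, while the content-aware rule separates activity within the same site.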

Firewall Placement

Firewalls at their base level are policy-enforcement engines that determine whether or not traffic can pass, based on a set of rules. Regardless of the type of firewall, the placement is easy—firewalls must be inline with the traffic they are regulating. If there are two paths for data to get to a server farm, then either the firewall must have both paths go through it or two firewalls are necessary. One place for firewalls is on the edge of the network—a perimeter defense. The challenge to this is simple: an enterprise network can have many different types of traffic, and trying to sort/filter at the edge is not efficient.

Firewalls are commonly placed between network segments, thereby examining traffic that enters or leaves a segment. This allows them the ability to isolate a segment while avoiding the cost or overhead of doing this on each and every system. A firewall placed directly in front of a set of database servers only has to manage database connections, not all the different traffic types found on the network as a whole. Firewalls are governed by the rules they enforce, and the more limited the traffic types, the simpler the rule set. Simple rule sets are harder to bypass; in the example of the database servers previously mentioned, there is no need for web traffic, so all web traffic can be excluded. The result in these instances is a rule set that is easier to maintain and security that is stronger.

Web Application Firewalls (WAFs) vs. Network Firewalls

Increasingly, the term firewall is getting attached to any device or software package that is used to control the flow of packets or data into or out of an organization. For example, web application firewall (WAF) is the term given to any software package, appliance, or filter that applies a rule set to HTTP/HTTPS traffic. Web application firewalls shape web traffic and can be used to filter out SQL injection attacks, malware, cross-site scripting (XSS), and so on. By contrast, a network firewall is a hardware or software package that controls the flow of packets into and out of a network. Web application firewalls operate on traffic at a much higher level than network firewalls, as web application firewalls must be able to decode the web traffic to determine whether or not it is malicious. Network firewalls operate on much simpler aspects of network traffic such as source/destination port and source/destination address.
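To show what decoding web traffic means in practice, the sketch below URL-decodes a query string and checks it against two toy signatures. The patterns are hypothetical and deliberately simplistic; production web application firewalls rely on far more extensive rule sets and parsing.

```python
import re
from urllib.parse import unquote_plus

# Hypothetical signatures, for illustration only.
SIGNATURES = {
    "sql_injection": re.compile(
        r"('|\")\s*(or|and)\s+\d+\s*=\s*\d+|union\s+select", re.IGNORECASE
    ),
    "xss": re.compile(r"<\s*script\b", re.IGNORECASE),
}


def inspect(query_string):
    """Return the names of signatures matched by the decoded query string."""
    decoded = unquote_plus(query_string)  # undo %XX and '+' encoding first
    return [name for name, pattern in SIGNATURES.items() if pattern.search(decoded)]


sqli = inspect("id=1%27+OR+1%3D1--")
xss = inspect("q=%3Cscript%3Ealert(1)%3C/script%3E")
clean = inspect("q=firewall+basics")
```

A network firewall looking only at addresses and ports would treat all three requests the same, since each is ordinary HTTP traffic to the same server.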

Open Source vs. Proprietary

Firewalls come in many forms and types, and one method of differentiating them is to separate them into open source and proprietary (commercial) solutions. Open source firewalls are exemplified by iptables, a built-in functionality in Linux systems. Iptables and other open source solutions have the cost advantage of being free, but the initial cost of a firewall solution is not the only factor. Ease of maintenance and rule management are the key drivers for long-term use, and many proprietary solutions have worked to increase the utility of their offerings through improving these interfaces.

One of the most common firewalls employed is Microsoft Windows Defender Firewall, a proprietary firewall built into the Windows OS.

Hardware vs. Software

Firewalls can be physical hardware devices or a set of software services running on a system. For use on a host, a software solution like Microsoft Windows Defender Firewall or iptables on a Linux host may well fit the bill. For use in an enterprise setting at a network level, with a need to separate different security zones, a dedicated hardware device is more efficient and economical.

Appliance vs. Host Based vs. Virtual

Firewalls can be located on a host, either as a separate application or part of the operating system itself. In software-defined networking (SDN) networks, firewalls can be instantiated as virtual network functions, providing all of the features under a virtual software solution. Firewalls can also be instantiated via an appliance, acting as a network segregation device, separating portions of a network based on firewall rules.

VPN Concentrator

Network devices called concentrators act as traffic-management devices, managing flows from multiple points into single streams. Concentrators typically act as endpoints for a particular protocol, such as SSL/TLS or VPN. The use of specialized hardware can enable hardware-based encryption and provide a higher level of specific service than a general-purpose server. This provides both architectural and functional efficiencies.

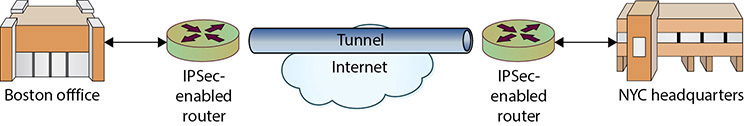

A virtual private network (VPN) is a construct used to provide a secure communication channel between users across public networks such as the Internet. A VPN concentrator is a special endpoint inside a network designed to accept multiple VPN connections and integrate these independent connections into the network in a scalable fashion. The most common implementation of VPN is via IPSec, a protocol for IP security. IPSec support was originally mandated for IPv6 implementations (later specifications relaxed this to a recommendation) and is optional in IPv4. IPSec can be implemented in hardware, software, or a combination of both, and it’s used to encrypt all IP traffic. In Chapter 11, a variety of techniques are described that can be employed to instantiate a VPN connection. The use of encryption technologies allows either the data in a packet to be encrypted or the entire packet to be encrypted. If the data is encrypted, the packet header can still be sniffed and observed between source and destination, but the encryption protects the contents of the packet from inspection. If the entire packet is encrypted, it is then placed into another packet and sent via a tunnel across the public network. Tunneling can protect even the identity of the communicating parties.

A VPN concentrator is a hardware device designed to act as a VPN endpoint, managing VPN connections to an enterprise.

A VPN concentrator takes multiple individual VPN connections and terminates them into a single network point. This single endpoint is what should define where the VPN concentrator is located in the network. The VPN side of the concentrator is typically outward facing, exposed to the Internet. The inward-facing side of the device should terminate in a network segment where you would allow all of the VPN users to connect their machines directly. If you have multiple different types of VPN users with different security profiles and different connection needs, then you might have multiple concentrators with different endpoints that correspond to appropriate locations inside the network.

Wireless Devices

Wireless devices bring additional security concerns. There is, by definition, no physical connection to a wireless device; radio waves or infrared carries the data, allowing anyone within range access to the data. This means that unless you take specific precautions, you have no control over who can see your data. Placing a wireless device behind a firewall does not do any good, because the firewall stops only physically connected traffic from reaching the device. Outside traffic can come literally from the parking lot directly to the wireless device and into the network.

To prevent unauthorized wireless access to the network, configuration of remote access protocols to a wireless access point is common. Forcing authentication and verifying authorization comprise a seamless method for performing basic network security for connections in this fashion. These access protocols are covered in Chapter 11.

The point of entry from a wireless device to a wired network is a device called a wireless access point (WAP). Wireless access points can support multiple concurrent devices accessing network resources through the network node they create. A typical wireless access point, a Google mesh Wi-Fi access point, is shown here.

Most PCs, laptops, and tablets sold today have built-in wireless, so the addition of wireless is not necessary. In most cases, these devices will determine the type of wireless that one employs in their home, because most people are not likely to update their devices just to use faster wireless as it becomes available.

Modems

Modems were once a slow method of remote connection that was used to connect client workstations to remote services over standard telephone lines. Modem is a shortened form of modulator/demodulator, converting analog signals to digital, and vice versa. Connecting a digital computer signal to the analog telephone line required one of these devices. Today, the use of the term has expanded to cover devices connected to special digital telephone lines (DSL modems), cable television lines (cable modems), and fiber modems. Although these devices are not actually modems in the true sense of the word, the term has stuck through marketing efforts directed at consumers. Fiber and cable modems offer broadband high-speed connections and the opportunity for continuous connections to the Internet. In most cases, residential connections are terminated with a provider device (modem) that converts the signal into both a wired connection via RJ-45 connections and wireless signal.

Cable modems were designed to share a party line in the terminal signal area, and the cable modem standard, Data Over Cable Service Interface Specification (DOCSIS), was designed to accommodate this concept. DOCSIS includes built-in support for security protocols, including authentication and packet filtering. Although this does not guarantee privacy, it prevents ordinary subscribers from seeing others’ traffic without using specialized hardware.

Figure 10.6 shows a modern cable modem. It has an embedded wireless access point, a Voice over IP (VoIP) connection, a local router, and a DHCP server. The size of the device is fairly large, but it has a built-in lead-acid battery to provide VoIP service when power is out.

• Figure 10.6 Modern cable modem

Both cable and fiber services are designed for a continuous connection, which brings up the question of IP address life for a client. Although some services originally used a static IP arrangement, virtually all have now adopted the Dynamic Host Configuration Protocol (DHCP) to manage their address space. A static IP address has the advantage of remaining the same and enabling convenient DNS connections for outside users. Because cable and DSL services are primarily designed for client services, as opposed to host services, this is not a relevant issue. The security issue with a static IP address is that it is a stationary target for hackers. The move to DHCP has not significantly lessened this threat, however, because the typical IP lease on a cable modem DHCP server is for days. This is still relatively stationary, and some form of firewall protection needs to be employed by the user.

Tech Tip

Cable/Fiber Security

The modem equipment provided by the subscription service converts the cable or fiber signal into a standard Ethernet signal that can then be connected to a NIC on the client device. This is still just a direct network connection, with no security device separating the two. The most common security device used in cable/fiber connections is a router that acts as a hardware firewall. With continuous connection to the Internet, changing the device password and other protections are still needed.

Telephony

A private branch exchange (PBX) is an extension of the public telephone network into a business. Although typically considered separate entities from data systems, PBXs are frequently interconnected and have security requirements as part of this interconnection, as well as security requirements of their own. PBXs are computer-based switching equipment designed to connect telephones into the local phone system. Basically digital switching systems, they can be compromised from the outside and used by phone hackers (known as phreakers) to make phone calls at the business’s expense. Although this type of hacking has decreased as the cost of long-distance calling has decreased, it has not gone away, and as several firms learn every year, voicemail boxes and PBXs can be compromised and the long-distance bills can get very high, very fast.

Tech Tip

Coexisting Communications

Data and voice communications have coexisted in enterprises for decades. Recent connections inside the enterprise of VoIP and traditional private branch exchange solutions increase both functionality and security risks. Specific firewalls to protect against unauthorized traffic over telephony connections are available to counter the increased risk.

Another problem with PBXs arises when they are interconnected to the data systems, either by corporate connection or by rogue modems in the hands of users. In either case, a path exists for connection to outside data networks and the Internet. Just as a firewall is needed for security on data connections, one is needed for these connections as well. Telecommunications firewalls are a distinct type of firewall designed to protect both the PBX and the data connections. The functionality of a telecommunications firewall is the same as that of a data firewall: it is there to enforce security policies. Telecommunication security policies can even govern the hours of phone use, for example by preventing unauthorized long-distance usage through access codes and/or restricted service hours.
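As a rough illustration of this kind of policy enforcement, the sketch below checks a call against restricted service hours and an access-code requirement for long-distance calls. The names (`CallPolicy`, `is_call_allowed`), the hours, and the codes are all hypothetical; commercial telecommunications firewalls expose their own policy mechanisms.

```python
from dataclasses import dataclass, field
from datetime import time


@dataclass
class CallPolicy:
    """Hypothetical policy: service hours plus access codes for long distance."""
    open_at: time = time(7, 0)     # assumed start of permitted phone use
    close_at: time = time(19, 0)   # assumed end of permitted phone use
    valid_codes: set = field(default_factory=lambda: {"4417", "9023"})


def is_call_allowed(policy, call_time, long_distance, access_code=None):
    """Return True if the call satisfies the policy."""
    # Restricted service hours: reject calls outside the allowed window.
    if not (policy.open_at <= call_time < policy.close_at):
        return False
    # Long-distance calls additionally require a valid access code.
    if long_distance and access_code not in policy.valid_codes:
        return False
    return True
```

With these assumed settings, a local call at 9:30 is allowed, while a long-distance call at the same time is rejected unless a valid access code accompanies it.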

Intrusion Detection Systems

Intrusion detection systems (IDSs) are an important element of infrastructure security. IDSs are designed to detect, log, and respond to unauthorized network or host use, both in real time and after the fact. IDSs are available from a wide selection of vendors and are an essential part of a comprehensive network security program. These systems are implemented using software, but in large networks or systems with significant traffic levels, dedicated hardware is typically required as well. IDSs can be divided into two categories: network-based systems and host-based systems.

Cross Check

Intrusion Detection

From a network infrastructure point of view, network-based IDSs can be considered part of infrastructure, whereas host-based IDSs are typically considered part of a comprehensive security program and not necessarily infrastructure. Two primary methods of detection are used: signature-based and anomaly-based. IDSs are covered in detail in Chapter 13.

Network Access Control

Networks comprise connected workstations and servers. Managing security on a network involves managing a wide range of issues, from the various connected hardware devices to the software operating them. Even assuming that the network is secure, each additional connection involves risk. Managing the endpoints on a case-by-case basis as they connect is a security methodology known as network access control (NAC). NAC is built around the idea that the network should be able to enforce a specific level of endpoint security before it accepts a new connection. The initial vendors were Microsoft and Cisco, but NAC now has a myriad of vendors, with many different solutions providing different levels of “health” checks before allowing a device to join a network.
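The idea of a “health” check before admission can be sketched as follows. This is only an illustration: the `Endpoint` fields, the 30-day patch threshold, and the "quarantine" outcome are assumptions chosen for the example, not the behavior of any particular NAC product.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    """Posture facts reported for a device requesting a connection (hypothetical fields)."""
    name: str
    antivirus_running: bool
    firewall_enabled: bool
    days_since_patch: int


MAX_PATCH_AGE_DAYS = 30  # assumed threshold, not taken from any standard


def health_failures(ep):
    """Return the list of failed checks; an empty list means the endpoint is healthy."""
    failures = []
    if not ep.antivirus_running:
        failures.append("antivirus not running")
    if not ep.firewall_enabled:
        failures.append("host firewall disabled")
    if ep.days_since_patch > MAX_PATCH_AGE_DAYS:
        failures.append("patches out of date")
    return failures


def admit(ep):
    """Allow only endpoints that pass every check; quarantine the rest."""
    return "allow" if not health_failures(ep) else "quarantine"
```

The point of the sketch is the ordering: the decision is made before the device joins the network, and a failed check yields a reason that can be reported back to the user or administrator.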

Agent and Agentless

In agent-based solutions, code is stored on the host machine for activation and use at time of connection. Having agents deployed to endpoints can provide very fine-grained levels of scrutiny to the security posture of the endpoint. In recognition that deploying agents to machines can be problematic in some instances, vendors have also developed agentless solutions for NAC. Rather than have the agent wait on the host for activation and use, the agent can operate from within the network itself, rendering the host in effect agentless. In agentless solutions, the code resides on the network and is deployed to memory for use in a machine requesting connections, but since it never persists on the host machine, it is referred to as agentless. In most instances, there is no real difference in the performance of agent versus agentless solutions when properly deployed. The real difference comes in the issues of having agents on boxes versus persistent network connections for agentless.

Agentless NAC is often implemented in a Microsoft domain through an Active Directory (AD) controller. For example, NAC code verifies that devices comply with access policies when a user joins a domain, logs in, or logs out. Agentless NAC is also often implemented through the use of intrusion prevention systems.

NAC agents are installed on devices that connect to networks in order to produce secure network environments. With agentless NAC, the NAC code resides not on the connecting devices, but on the network, and it’s deployed to memory for use in a machine requesting connection to the network.

Network Monitoring/Diagnostic

A computer network itself can be considered a large computer system, with performance and operating issues. Just as a computer needs management, monitoring, and fault resolution, so too do networks. The Simple Network Management Protocol (SNMP) was developed to perform this function across networks. The idea is to enable a central monitoring and control center to maintain, configure, and repair network devices, such as switches and routers, as well as other network services, such as firewalls, IDSs, and remote access servers. SNMP has some security limitations, and many vendors have developed software solutions that sit on top of SNMP to provide better security and better management tool suites.

SNMP, the Simple Network Management Protocol, is part of the Internet protocol suite. It is an open standard, designed for transmission of management functions between devices. Do not confuse this with SMTP, the Simple Mail Transfer Protocol, which is used to transfer mail between machines.

The concept of a network operations center (NOC) comes from the old phone company network days, when central monitoring centers supervised the health of the telephone network and provided interfaces for maintenance and management. This same concept works well with computer networks, and companies with midsize and larger networks employ the same philosophy. The NOC allows operators to observe and interact with the network, using the self-reporting and, in some cases, self-healing nature of network devices to ensure efficient network operation. Although generally a boring operation under normal conditions, when things start to go wrong, as in the case of a virus or worm attack, the NOC can become a busy and stressful place, as operators attempt to return the system to full efficiency while not interrupting existing traffic.

Because networks can be spread out literally around the world, it is not feasible to have a person visit each device for control functions. Software enables controllers at NOCs to measure the actual performance of network devices and make changes to the configuration and operation of devices remotely. The ability to make remote connections with this level of functionality is both a blessing and a security issue. Although this allows for efficient network operations management, it also provides an opportunity for unauthorized entry into a network. For this reason, a variety of security controls are used, from secondary networks to VPNs and advanced authentication methods with respect to network control connections.

Tech Tip

Virtual IPs

In a load-balanced environment, the IP addresses for the target servers of a load balancer will not necessarily match the address associated with the router sending the traffic. Load balancers handle this through the concept of virtual IP addresses, or virtual IPs, which allow for multiple systems to be reflected back as a single IP address.

Network monitoring is an ongoing concern for any significant network. In addition to monitoring traffic flow and efficiency, monitoring of security-related events is necessary. IDSs act merely as alarms, indicating the possibility of a breach associated with a specific set of activities. These indications still need to be investigated and an appropriate response needs to be initiated by security personnel. Simple items such as port scans may be ignored by policy, but an actual unauthorized entry into a network router, for instance, would require NOC personnel to take specific actions to limit the potential damage to the system. In any significant network, coordinating system changes, dynamic network traffic levels, potential security incidents, and maintenance activities are daunting tasks requiring numerous personnel working together. Software has been developed to help manage the information flow required to support these tasks. Such software can enable remote administration of devices in a standard fashion so that the control systems can be devised in a hardware vendor–neutral configuration.

SNMP is the main standard embraced by vendors to permit interoperability. Although SNMP has received a lot of security-related attention of late due to various security holes in its implementation, it is still an important part of a security solution associated with network infrastructure. Many useful tools have security issues; the key is to understand the limitations and to use the tools within correct boundaries to limit the risk associated with the vulnerabilities. Blind use of any technology will result in increased risk, and SNMP is no exception. Proper planning, setup, and deployment can limit exposure to vulnerabilities. Continuous auditing and maintenance of systems with the latest patches is a necessary part of operations and is essential to maintaining a secure posture.

Out-of-Band Management

Management of a system across the network can be either in-band or out-of-band. In in-band management systems, the management channel is the same channel as the data channel. This has the advantage of a simpler physical connection and the disadvantage that, if a problem occurs due to data flows, management commands may not be able to reach the device. For important network devices and services, an out-of-band management channel is recommended. Out-of-band management channels are physically separate connections, made through separate interfaces, that permit the active management of a device even when the data channel is blocked for some reason.

Load Balancers

Certain systems, such as servers, are more critical to business operations and should therefore be the object of fault-tolerance measures. A common technique that is used in fault tolerance is load balancing through the use of a load balancer. Load balancing involves the use of devices that move loads across a set of resources in an effort not to overload individual servers. This technique is designed to distribute the processing load over two or more systems. It is used to help improve resource utilization and throughput but also has the added advantage of increasing the fault tolerance of the overall system since a critical process may be split across several systems. Should any one system fail, the others can pick up the processing it was handling. While there may be an impact to overall throughput, the operation does not go down entirely. Load balancing is often utilized for systems handling websites, high-bandwidth file transfers, and large Internet Relay Chat (IRC) networks. Load balancing works by a series of health checks that tell the load balancer which machines are operating and by a scheduling mechanism to spread the work evenly. Load balancing is best for stateless systems, as subsequent requests can be handled by any server, not just the one that processed the previous request.
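The combination of health checks and scheduling described above can be sketched in a few lines. This is a minimal illustration, not how any particular product works: the `LoadBalancer` class and the `probe` callback are hypothetical, and a real device would probe servers over the network on a timer.

```python
import itertools


class LoadBalancer:
    """Toy load balancer: rotate over servers, skipping those that fail health checks."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)          # assume all servers start healthy
        self._rotation = itertools.cycle(self.servers)

    def run_health_checks(self, probe):
        """Refresh the healthy set; probe(server) returns True if the server responds."""
        self.healthy = {s for s in self.servers if probe(s)}

    def pick(self):
        """Return the next healthy server in rotation."""
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        # At least one server is healthy, so this loop always returns.
        for server in self._rotation:
            if server in self.healthy:
                return server
```

When one server fails its health check, requests simply continue to the remaining servers, which is the fault-tolerance benefit the text describes: throughput may drop, but the service stays up.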

Load balancers take incoming traffic from one network location and distribute it across multiple servers. The role of the load balancer is to manage the workloads on multiple systems by distributing the traffic to and from them. To do this, it must reside in the traffic path between the requestors of a service and the servers that are providing the service. For reasons of efficiency, load balancers are typically located close to the systems for which they are managing the traffic.

Scheduling

When a load balancer moves loads across a set of resources, it decides which machine gets a request via a scheduling algorithm. Two commonly used scheduling algorithms are affinity-based scheduling and round-robin scheduling.

Affinity

Affinity-based scheduling is designed to keep a host connected to the same server across a session. Some applications, such as web applications, can benefit from affinity-based scheduling. The method used by affinity-based scheduling is to have the load balancer keep track of where it last balanced a particular session and direct all continuing session traffic to the same server. If it is a new connection, the load balancer establishes a new affinity entry and assigns the session to the next server in the available rotation.
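A minimal sketch of this bookkeeping follows. The `AffinityScheduler` class is hypothetical, and the session identifier is an assumption: in practice it might be derived from a cookie or the client address, which the text does not specify.

```python
import itertools


class AffinityScheduler:
    """Toy affinity-based scheduler: a session stays on the server it was first given."""

    def __init__(self, servers):
        self._rotation = itertools.cycle(servers)
        self._affinity = {}  # session id -> assigned server

    def route(self, session_id):
        # New session: create an affinity entry using the next server in rotation.
        if session_id not in self._affinity:
            self._affinity[session_id] = next(self._rotation)
        # Existing session: keep sending traffic to the same server.
        return self._affinity[session_id]
```

Repeated calls with the same session identifier return the same server, while each new session advances the rotation by one.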

Round-Robin

Round-robin scheduling involves sending each new request to the next server in rotation. All requests are sent to servers in equal amounts, regardless of the server load. Round-robin schemes are frequently modified with a weighting factor, known as weighted round-robin, to take the server load or other criteria into account when assigning the next server.
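The two variants can be compared with a short sketch. The function names and the integer weights are illustrative assumptions, and the weighted version uses the simplest possible approach (repeating each server in the rotation); production load balancers typically interleave servers more smoothly and may adjust weights from measured load.

```python
import itertools


def round_robin(servers):
    """Yield servers in strict rotation, ignoring server load."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Yield servers in rotation, repeating each one according to its integer weight.

    `weights` maps server name -> weight; a server with weight 2 receives
    twice as many requests per cycle as a server with weight 1.
    """
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(expanded)
```

For example, `itertools.islice(weighted_round_robin({"big": 2, "small": 1}), 6)` sends four of every six requests to `big` and two to `small`, whereas plain round-robin would split them evenly.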

Round-robin and weighted round-robin are scheduling algorithms used for load-balancing strategies.

Active/Active