Security Tools and Techniques


Having a solid security program is more than just building the right set of defensive elements. In today’s environment, a program also needs testing and investigative capabilities that support incident response and threat hunting, as well as testing of the environment itself. Security tools are an essential part of an enterprise security team’s strategy.

Network Reconnaissance and Discovery Tools


A network is like most infrastructure: you never see or care about it until it isn’t working. And when you do want to look, how do you do it? A wide range of tools let you see the inner workings of a network, and they are covered in the sections that follow.

tracert/traceroute

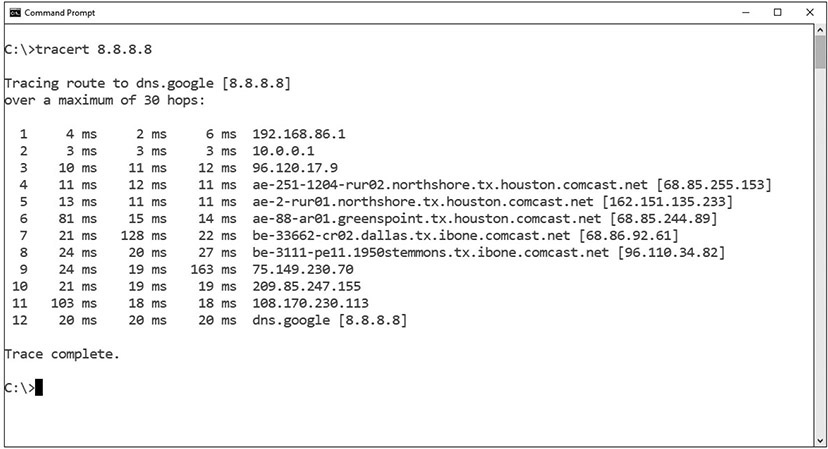

The tracert command is a Windows command for tracing the route that packets take over the network. It lists, in order, the routers (hops) a packet passes through, providing a trace of the network route from source to target. Because tracert uses Internet Control Message Protocol (ICMP) echo requests, it will fail to provide information if ICMP is blocked along the path. On Linux and macOS systems, the command with similar functionality is traceroute, which sends UDP probes by default. Figure 16.1 shows the tracert command tracing the route from a Windows system on a private network to a Google DNS server.


The tracert and traceroute commands display the route a packet takes to a destination, recording the number of hops along the way. These are excellent tools to use to see where a packet may get hung up during transmission.

• Figure 16.1 tracert example
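Both commands rely on the IP time-to-live (TTL) field: each router decrements the TTL, and a router that decrements it to zero drops the packet and returns an ICMP Time Exceeded message, which reveals that router’s address. The following Python sketch simulates that logic offline. It sends no packets, and the router addresses in the demo are hypothetical.

```python
from typing import List, Tuple


def send_probe(ttl: int, routers: List[str], destination: str) -> Tuple[str, str]:
    """Model one probe with the given starting TTL along a fixed path."""
    for router in routers:
        ttl -= 1  # every router decrements the TTL by one
        if ttl == 0:
            # The router drops the probe and reports back to the sender.
            return router, "ICMP Time Exceeded"
    return destination, "reply"  # the probe survived every router


def simulate_traceroute(routers: List[str], destination: str,
                        max_hops: int = 30) -> List[Tuple[int, str, str]]:
    """Send probes with TTL 1, 2, 3, ... until the destination answers."""
    hops = []
    for ttl in range(1, max_hops + 1):
        responder, message = send_probe(ttl, routers, destination)
        hops.append((ttl, responder, message))
        if message == "reply":
            break
    return hops


if __name__ == "__main__":
    # Hypothetical path: home router, ISP router, then a Google DNS server.
    for hop in simulate_traceroute(["192.168.1.1", "203.0.113.1"], "8.8.8.8"):
        print(*hop)
```

Each tuple corresponds to one line of tracert output; the real tools typically send three probes per hop and report the round-trip time of each.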

nslookup/dig

The Domain Name System (DNS) is used to convert a human-readable domain name into an IP address. This is not a single system but rather a hierarchy of DNS servers, from root servers on the backbone of the Internet, to copies at your Internet service provider (ISP), your home router, and your local machine, each in the form of a DNS cache. To examine a DNS query for a specific address, you can use the nslookup command. Figure 16.2 shows a series of DNS queries executed on a Windows machine. In the first request, the DNS server was with an ISP, while in the second request, the DNS server was provided by a virtual private network (VPN) connection. Between the two requests, the network connections were changed, resulting in different DNS lookups.

• Figure 16.2 nslookup of a DNS query

At times, nslookup will return a nonauthoritative answer, as shown in Figure 16.3. This typically means the result came from a cache rather than from a server that holds an authoritative (that is, known to be current) answer, such as the DNS server responsible for that domain.

• Figure 16.3 Cache response to a DNS query

While nslookup works on Windows systems, the command dig, which stands for Domain Information Groper, works on Linux systems. One difference is that dig is designed to return answers in a format that is easy to parse and include in scripts, which is a common trait of Linux command-line utilities.
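Under the hood, nslookup and dig send a small binary query defined in RFC 1035 (usually over UDP port 53), and the response header carries an Authoritative Answer (AA) flag that separates authoritative answers from cached ones. The following Python sketch builds such a query and reads the AA flag. The query ID, the resolver address in the demo, and the sample flag values are arbitrary choices for illustration.

```python
import socket
import struct


def build_dns_query(name: str, query_id: int = 0x1234, qtype: int = 1) -> bytes:
    """Build a standard DNS query (RFC 1035). qtype 1 = A record, class 1 = IN."""
    # Header: ID, flags (0x0100 = recursion desired), 1 question, 0 other records
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length; a zero byte ends the name
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def is_authoritative(response: bytes) -> bool:
    """Return True if the AA (Authoritative Answer) bit is set in a response header."""
    (flags,) = struct.unpack("!H", response[2:4])
    return bool(flags & 0x0400)


if __name__ == "__main__":
    query = build_dns_query("www.example.com")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(3)
        sock.sendto(query, ("8.8.8.8", 53))  # a public recursive resolver
        reply, _ = sock.recvfrom(512)
    print("authoritative" if is_authoritative(reply) else "non-authoritative")
```

A public recursive resolver normally answers with the AA bit clear, which is what nslookup reports as a nonauthoritative answer.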

ipconfig/ifconfig

Both ipconfig (for Windows) and ifconfig (for Linux) are command-line tools to manipulate the network interfaces on a system. They can list the interfaces and connection parameters, alter parameters, and release/renew connections. If you are having network connection issues, this is one of the first tools to use to verify the network setup of the operating system and its interfaces.

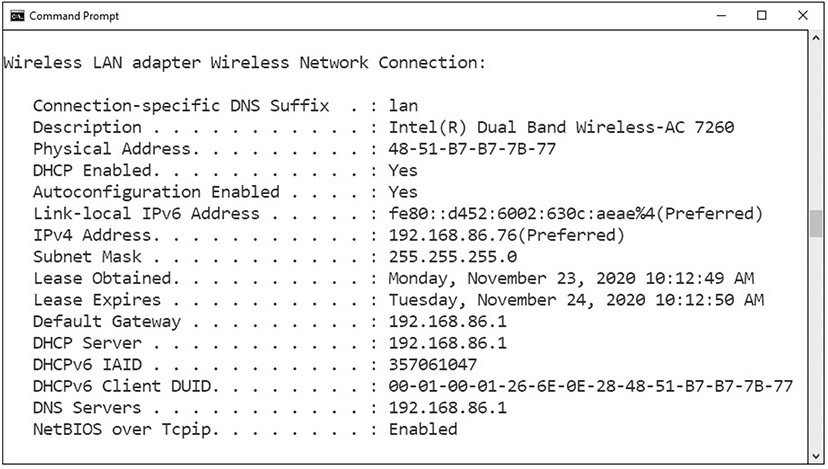

On Linux, the ip command, which largely replaces the older ifconfig, is used to show and manipulate routing, devices, policy routing, and tunnels. On Windows, ipconfig is an important troubleshooting command because it displays the current TCP/IP configuration of the local system, including IP addresses (both IPv4 and IPv6), subnet mask, and default gateway; with the /all switch, it adds adapter details such as the MAC address, DNS servers, and whether DHCP is enabled. Figure 16.4 shows some of the information available from ipconfig on a Windows machine. When you can’t connect to something, this is the first place to start exploring network connections, because it shows all of your settings.

• Figure 16.4 ipconfig example
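The values ipconfig or ifconfig report can be checked for consistency. For example, the default gateway must sit inside the subnet defined by the IP address and subnet mask, or traffic leaving the local network has nowhere to go. A minimal Python sketch using the standard ipaddress module follows; the addresses are hypothetical examples.

```python
import ipaddress


def check_interface(ip: str, netmask: str, gateway: str) -> dict:
    """Derive subnet facts from ipconfig/ifconfig-style settings."""
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")  # accepts a dotted-decimal mask
    network = iface.network
    return {
        "network": str(network),                         # e.g. 192.168.1.0/24
        "broadcast": str(network.broadcast_address),
        "gateway_on_subnet": ipaddress.IPv4Address(gateway) in network,
    }


if __name__ == "__main__":
    print(check_interface("192.168.1.23", "255.255.255.0", "192.168.1.1"))
    # A mistyped gateway outside the subnet is flagged:
    print(check_interface("192.168.1.23", "255.255.255.0", "192.168.2.1"))
```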

nmap

Nmap is a free, open source port scanning tool developed by Gordon Lyon. First released in 1997, it has long been the standard network mapping utility for Windows and Linux. The nmap command launches the utility. Nmap is used to discover which systems are on a network and the open ports and services on those systems. It also has many additional functions, such as OS fingerprinting, finding rogue devices, and identifying services and even application versions. It operates via the command line, so it’s very scriptable, and it also has a GUI called Zenmap. Nmap works on a wide range of operating systems, including Microsoft Windows, Linux, and macOS, and it is one of the tools system administrators use most often. Nmap includes a scripting engine, the Nmap Scripting Engine (NSE), that uses the Lua programming language to write, save, and share scripts that automate different types of scans. All sorts of tasks can be automated, including regular checks for well-known network infrastructure vulnerabilities.
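The most basic port-scanning technique is a TCP connect scan, which completes the full three-way handshake with each port. Nmap performs this as its connect scan (-sT), while its default for privileged users is a SYN scan that never completes the handshake. The following Python sketch shows only the connect-scan idea, assumes you are authorized to scan the target, and omits everything else nmap does (timing control, service detection, and so on).

```python
import socket
from typing import Iterable, List


def tcp_connect_scan(host: str, ports: Iterable[int], timeout: float = 0.5) -> List[int]:
    """Return the ports on `host` that complete a TCP three-way handshake."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising an exception
                if s.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # timeouts and similar failures count as "not open"
    return open_ports


if __name__ == "__main__":
    # Scan only systems you own or have written permission to test.
    print(tcp_connect_scan("127.0.0.1", range(20, 1025)))
```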

ping/pathping

The ping command sends echo requests to a designated machine to determine if communication is possible. The syntax is ping [options] targetname/address. The options include items such as name resolution, how many pings, data size, TTL counts, and more. Figure 16.5 shows a ping command on a Windows machine.

• Figure 16.5 ping command
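Each echo request that ping sends is a small ICMP message: type 8, code 0, a checksum, an identifier, a sequence number, and a payload; the target answers with an echo reply (type 0). The Python sketch below builds such a packet, including the RFC 1071 checksum. Actually sending it requires a raw socket and administrator privileges, so the sketch only constructs and verifies the bytes; the identifier, sequence, and payload values are arbitrary.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement checksum, as used by ICMP."""
    if len(data) % 2:
        data += b"\x00"                          # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:                           # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_echo_request(identifier: int, sequence: int, payload: bytes = b"ping") -> bytes:
    """Build an ICMP Echo Request: type 8, code 0, checksum, identifier, sequence."""
    header = struct.pack("!BBHHH", 8, 0, 0, identifier, sequence)  # checksum field zeroed
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, identifier, sequence) + payload


if __name__ == "__main__":
    packet = build_echo_request(identifier=0x1234, sequence=1)
    print(packet.hex())
    # A receiver recomputing the checksum over the whole message gets 0.
    print(internet_checksum(packet))
```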

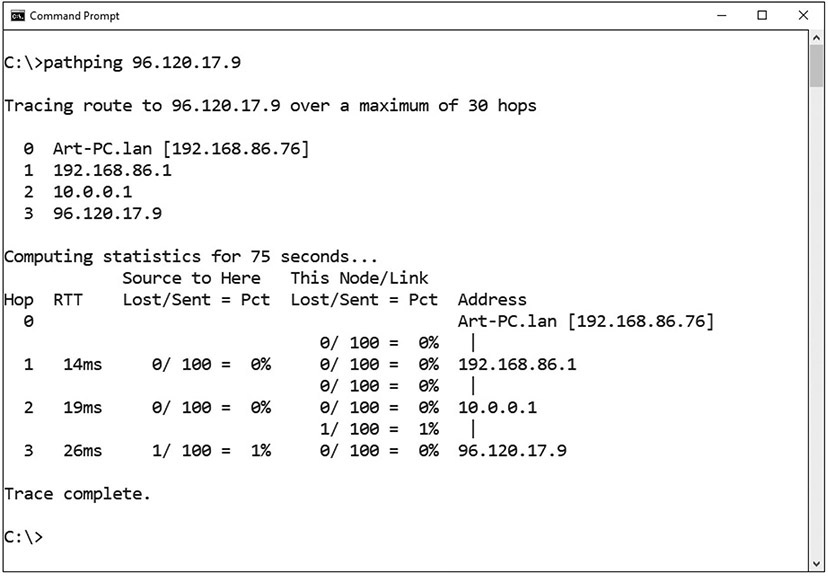

Pathping is a Windows TCP/IP utility that provides additional data beyond that of a ping command. Pathping first displays the path, as tracert or traceroute would, and then sends repeated probes to each hop over a period of time to calculate per-hop packet loss, as shown in Figure 16.6.

The ping command is used to test connectivity between systems.

• Figure 16.6 pathping example

hping

Hping is a TCP/IP packet creation tool that allows a user to craft raw IP, TCP, UDP, and ICMP packets from scratch. This tool provides a means of performing a wide range of network operations; anything that you can do with those protocols can be crafted into a packet. This includes port scanning, crafting ICMP packets, host discovery, and more. The current version is hping3, and it is available on most operating systems, including Windows and Linux.

Like other Linux command-line tools, hping can be used in Bash scripts to achieve greater functionality, and its output can be piped to other commands. Hping also has embedded Tcl scripting functionality, which further extends its usefulness for system administrators. Between the range of options and the native scripting capability, hping offers a wide range of functions, including creating password-protected backdoors that are piped to other services. Its power comes from its programmability, its options, and the creativity of the administrators who use it.
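To see what crafting a packet involves, the Python sketch below packs a bare 20-byte TCP header with chosen flags, the kind of SYN probe hping can generate from its command-line options. It is an illustration only: the port, sequence number, and window values are arbitrary, and the checksum is left at zero because a real sender must compute it over an IP pseudo-header plus the segment.

```python
import struct

# TCP flag bits as defined in RFC 793
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20


def build_tcp_header(src_port: int, dst_port: int, seq: int, flags: int,
                     window: int = 64240) -> bytes:
    """Pack a 20-byte TCP header with no options and a zero checksum."""
    data_offset = 5 << 4  # header length of 5 32-bit words, in the high nibble
    return struct.pack(
        "!HHIIBBHHH",
        src_port, dst_port,
        seq, 0,               # sequence number; acknowledgment number unused here
        data_offset, flags,
        window,
        0,                    # checksum placeholder (pseudo-header not computed)
        0,                    # urgent pointer
    )


if __name__ == "__main__":
    syn = build_tcp_header(40000, 80, 1000, SYN)
    print(syn.hex())
```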

netstat

The netstat command is used to monitor network connections to and from a system. The following are some examples of how you can use netstat:

netstat -a Lists all active connections and listening ports

netstat -at Lists all active TCP connections

netstat -au Lists all active UDP connections

netstat -an Lists all active connections and listening ports, showing numeric addresses and port numbers instead of resolved names

Many more options are available and useful. The netstat command is available on Windows and Linux, but the availability of certain netstat command switches and other netstat command syntax may differ from operating system to operating system.

The netstat command is useful for viewing all listening ports on a computer and determining which connections are active.
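On Linux, the classic net-tools netstat builds its TCP listing from the kernel table in /proc/net/tcp, where addresses and states appear in hexadecimal. The Python sketch below decodes lines of that table into the address:port and state form netstat prints. It assumes a little-endian machine (such as x86), because the kernel writes each IPv4 address in host byte order, and it handles only IPv4 (IPv6 sockets are listed separately in /proc/net/tcp6).

```python
import socket
import struct
from typing import List, Tuple

# Kernel TCP state codes as they appear in /proc/net/tcp
TCP_STATES = {"01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
              "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
              "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
              "0A": "LISTEN", "0B": "CLOSING"}


def decode_addr(hex_addr: str) -> str:
    """Turn '0100007F:0016' into '127.0.0.1:22'."""
    ip_hex, port_hex = hex_addr.split(":")
    # Little-endian host assumed: reverse the bytes before formatting.
    ip = socket.inet_ntoa(struct.pack("<I", int(ip_hex, 16)))
    return f"{ip}:{int(port_hex, 16)}"


def parse_proc_net_tcp(text: str) -> List[Tuple[str, str, str]]:
    """Return (local, remote, state) for each socket line in the table."""
    rows = []
    for line in text.splitlines()[1:]:  # skip the column-header line
        fields = line.split()
        if len(fields) < 4:
            continue
        rows.append((decode_addr(fields[1]), decode_addr(fields[2]),
                     TCP_STATES.get(fields[3], fields[3])))
    return rows


if __name__ == "__main__":
    with open("/proc/net/tcp", encoding="ascii") as f:  # Linux only
        for local, remote, state in parse_proc_net_tcp(f.read()):
            print(f"{local:<22} {remote:<22} {state}")
```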

netcat (nc)

Netcat is a network utility originally written for UNIX-like systems and widely used in Linux environments. It is the tool of choice in Linux for reading from and writing to network connections using TCP or UDP. Like most Linux command-line utilities, it is designed for scripts and automation. Netcat has a wide range of functions: it can act as a transmitter or a receiver, and with redirection it can turn virtually any running process into a server. It can listen on a port and pipe the input it receives to a specified process. Netcat has been ported to Windows but is not regularly used in Windows environments.

The command to invoke netcat is nc. To connect to a remote port, the general form is nc [options] host port.
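The core of netcat’s listen-and-pipe behavior can be sketched with Python sockets: one side listens on a port and hands whatever arrives to some process, and the other side connects and writes data, as nc host port does. In the sketch below, the handler (uppercasing the input) is a hypothetical stand-in for the process netcat would pipe to, and the single recv() calls are a simplification that works for short messages on a local connection.

```python
import socket
import threading
from typing import Callable


def listen_once(server: socket.socket, handler: Callable[[bytes], bytes]) -> None:
    """Accept one connection, pass the received data to `handler`, and reply."""
    conn, _addr = server.accept()
    with conn:
        data = conn.recv(4096)
        conn.sendall(handler(data))


def send(host: str, port: int, payload: bytes) -> bytes:
    """Connect like `nc host port`, write the payload, and return the reply."""
    with socket.create_connection((host, port), timeout=5) as s:
        s.sendall(payload)
        s.shutdown(socket.SHUT_WR)  # tell the listener no more data is coming
        return s.recv(4096)


if __name__ == "__main__":
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    listener = threading.Thread(target=listen_once, args=(server, bytes.upper))
    listener.start()
    print(send("127.0.0.1", port, b"hello"))
    listener.join()
    server.close()
```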

IP Scanners

IP scanners do just what the name implies: they scan IP networks and can report on the status of IP addresses. There is a wide range of free and commercial scanning tools, and most offer significantly more functionality than just reporting on address usage. If all you want are addresses, a variety of simple command-line network discovery tools can provide those answers. For instance, on your local LAN, arp -a lists the IP and MAC addresses of hosts your machine has recently communicated with (its ARP cache). If you want more functionality, you can use the nmap program covered earlier in the chapter. Another solution is Nessus, a commercial offering covered later in the chapter.
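At its core, an IP scanner walks every usable address in a range and probes each one. The Python sketch below shows that loop with the standard ipaddress module. The probe is a caller-supplied function (for example, a ping or a TCP connection attempt) that is left abstract here rather than tied to any particular tool; the demo probe is a placeholder.

```python
import ipaddress
from typing import Callable, List


def sweep(cidr: str, probe: Callable[[str], bool]) -> List[str]:
    """Return the usable host addresses in `cidr` for which `probe` returns True."""
    network = ipaddress.ip_network(cidr, strict=False)
    # hosts() skips the network and broadcast addresses of an IPv4 subnet
    return [str(host) for host in network.hosts() if probe(str(host))]


if __name__ == "__main__":
    # Placeholder probe for illustration: pretend only .1 answers.
    print(sweep("192.168.1.0/29", lambda ip: ip.endswith(".1")))
```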

arp

The arp command is designed to interface with the operating system’s Address Resolution Protocol (ARP) caches on a system. In moving packets between machines, a device sometimes needs to know where to send a packet using the MAC or layer 2 address. ARP handles this problem through four basic message types:

ARP request “Who has this IP address?”

ARP reply “I have that IP address; my MAC address is…”

Reverse ARP (RARP) request “Who has this MAC address?”

RARP reply “I have that MAC address; my IP address is…”

These messages are used in conjunction with a device’s ARP table, a form of short-term memory (a cache) that holds these address mappings and serves as a simple lookup. Because ARP has no authentication, devices accept replies, including unsolicited (gratuitous) ones, and update their caches from them. This makes address lookups efficient but also makes the system subject to attacks such as ARP spoofing (cache poisoning).

The arp command allows a system administrator to view and manipulate the ARP cache on a system. This way, the administrator can see whether entries have been spoofed or whether other problems, such as errors, have occurred.
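One simple check for spoofed entries: in a typical ARP cache poisoning attack, the attacker’s MAC address shows up against the gateway’s IP address as well as the attacker’s own, so one MAC mapped to several IP addresses is a warning sign (though not proof, since a host with multiple addresses can legitimately do the same). The Python sketch below assumes the cache has already been parsed into an IP-to-MAC dictionary, because arp -a output differs between operating systems; the addresses are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def find_shared_macs(arp_table: Dict[str, str]) -> Dict[str, List[str]]:
    """Return each MAC address that the cache maps to more than one IP address."""
    ips_by_mac: Dict[str, List[str]] = defaultdict(list)
    for ip, mac in arp_table.items():
        # Normalize case and Windows-style dashes before comparing
        ips_by_mac[mac.lower().replace("-", ":")].append(ip)
    return {mac: sorted(ips) for mac, ips in ips_by_mac.items() if len(ips) > 1}


if __name__ == "__main__":
    cache = {
        "192.168.1.1": "aa:bb:cc:dd:ee:01",   # gateway
        "192.168.1.50": "AA-BB-CC-DD-EE-01",  # same MAC: possible poisoning
        "192.168.1.20": "aa:bb:cc:dd:ee:02",
    }
    print(find_shared_macs(cache))
```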

route

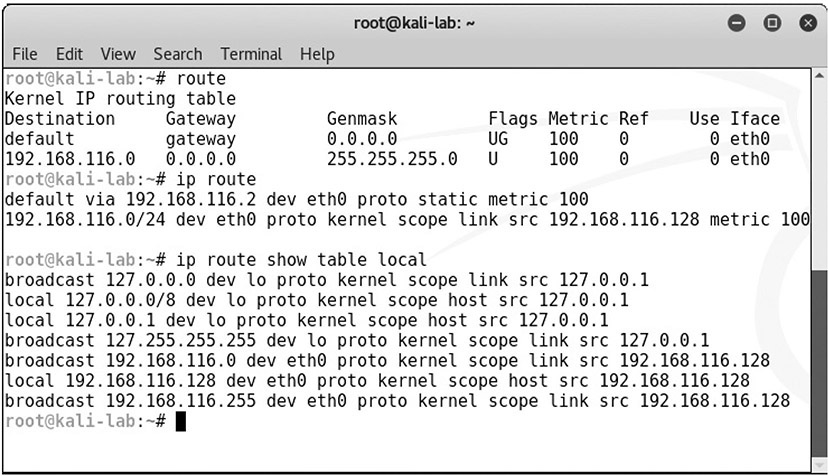

The route command works in Linux and Windows systems to provide information on current routing parameters and to manipulate these parameters. In addition to listing the current routing table, it has the ability to modify the table. Figure 16.7 shows three examples of the route command on a Linux system. The first is a simple display of the kernel IP routing table. The second shows a similar result using the ip command. The last is the use of the ip command to get the details of the local table with destination addresses that are assigned to localhost.

• Figure 16.7 The route and ip commands in Linux
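When the kernel consults the routing table, the most specific matching route wins: a packet for 10.1.2.3 follows a 10.1.0.0/16 route in preference to 10.0.0.0/8, and it falls back to the default route (0.0.0.0/0) only when nothing more specific matches. A minimal Python sketch of this longest-prefix match follows, using a hypothetical table and ignoring route metrics.

```python
import ipaddress
from typing import List, Tuple


def lookup_route(routes: List[Tuple[str, str]], destination: str) -> str:
    """Return the next hop for `destination` by longest-prefix match.

    `routes` holds (network, next_hop) pairs; 0.0.0.0/0 is the default route.
    Route metrics, which real routing tables also consider, are ignored.
    """
    dest = ipaddress.ip_address(destination)
    candidates = [(ipaddress.ip_network(net), hop) for net, hop in routes]
    matches = [(net, hop) for net, hop in candidates if dest in net]
    if not matches:
        raise LookupError(f"no route to {destination}")
    # The most specific route (largest prefix length) wins.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    table = [
        ("0.0.0.0/0", "192.168.1.1"),      # default gateway
        ("10.0.0.0/8", "192.168.1.254"),
        ("10.1.0.0/16", "192.168.1.253"),
    ]
    for dst in ("10.1.2.3", "10.9.9.9", "8.8.8.8"):
        print(dst, "->", lookup_route(table, dst))
```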

curl

Curl is a tool designed to transfer data to or from a server without user interaction. It supports a long list of protocols (DICT, FILE, FTP, FTPS, Gopher, HTTP, HTTPS, IMAP, IMAPS, LDAP, LDAPS, MQTT, POP3, POP3S, RTMP, RTMPS, RTSP, SCP, SFTP, SMB, SMBS, SMTP, SMTPS, Telnet, and TFTP) and acts like a Swiss army knife for interacting with a server. Originally designed to work with URLs, curl has expanded to support all of these protocols. It works on both Linux and Windows systems, although the command options are slightly different.

Here’s a simple example of using curl to send a GET request to a website URL and display the response:

curl https://www.example.com
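For scripts that need the same request without shelling out to curl, Python’s standard urllib can issue the GET. The sketch below is an illustration rather than a curl replacement. So that it runs without Internet access, the demo starts a tiny local HTTP server; the handler and its "hello" body are made up for the example.

```python
import http.server
import threading
import urllib.request
from typing import Tuple


def http_get(url: str, timeout: float = 10.0) -> Tuple[int, bytes]:
    """Send a GET request, as `curl URL` does, and return (status, body)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status, resp.read()


class HelloHandler(http.server.BaseHTTPRequestHandler):
    """Minimal local handler so the demo needs no network access."""

    def do_GET(self) -> None:
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args) -> None:
        pass  # silence the default per-request logging


if __name__ == "__main__":
    server = http.server.HTTPServer(("127.0.0.1", 0), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(http_get(f"http://127.0.0.1:{server.server_address[1]}/"))
    server.shutdown()
```

Against a real site, http_get("https://www.example.com") performs the same request as the curl command above.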

theHarvester

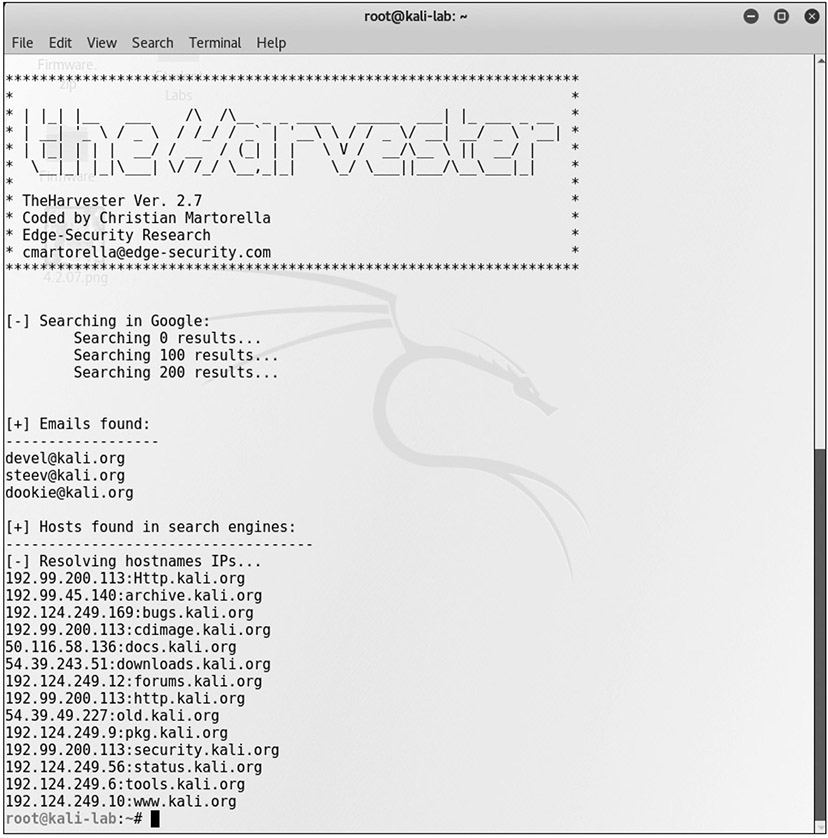

theHarvester is a Python-based program designed to assist penetration testers in the gathering of information during the reconnaissance portion of a penetration test. This is a useful tool for exploring what is publicly available about your organization on the Web, and it can provide information on employees, e-mails, and subdomains using different public sources such as search engines, PGP key servers, and Shodan databases. Designed for Linux and included as part of Kali and other penetration testing distributions, theHarvester is shown in Figure 16.8 searching for the first 500 e-mails from the domain kali.org using Google.

• Figure 16.8 theHarvester

sn1per

Sn1per is a Linux-based tool used by penetration testers. Sn1per is an automated scanner designed to collect a large amount of information while scanning for vulnerabilities. It runs a series of automated scripts to enumerate servers, open ports, and vulnerabilities, and it’s designed to integrate with the penetration testing tool Metasploit. Sn1per goes further than just scanning; it can also brute-force open ports, brute-force subdomains and DNS systems, scan web applications for common vulnerabilities, and run targeted nmap scripts against open ports as well as targeted Metasploit scans and exploit modules. This tool suite comes as a free community edition, with limited scope, as well as an unlimited professional version for corporations and penetration testers.

scanless

Scanless is a command-line utility to interface with websites that can perform port scans as part of a penetration test. When you use this tool, the source IP address for the scan is the website, not your testing machine. Written in Python, with a simple interface, scanless anonymizes your port scans.

dnsenum

Dnsenum is a Perl script designed to enumerate DNS information. Dnsenum will enumerate DNS entries, including subdomains, MX records, and IP addresses. It can interface with Whois, a public record that identifies domain owners, to gather additional information. Dnsenum works on Linux distros that support Perl.


DNS enumeration can be used to collect information such as usernames and IP addresses of targeted systems.

Nessus

Nessus is one of the leading vulnerability scanners in the marketplace. It comes in a free version, with limited IP address capability, and fully functional commercial versions. Nessus is designed to perform a wide range of testing on a system, including the use of user credentials, patch level testing, common misconfigurations, password attacks, and more. Designed as a full suite of vulnerability and configuration testing tools, Nessus is commonly used to audit systems for compliance with various security standards such as PCI DSS, SOX, and other compliance schemes. The last open source release of Nessus was the original source of the OpenVAS fork, which is a popular free vulnerability scanner.

Cuckoo

Cuckoo is a sandbox used for malware analysis. It provides a means of running a suspicious file in an isolated environment to determine what it does. It is open source, free software that can run on Linux and Windows. Cuckoo is a common security tool for investigating suspicious files, as it can provide reports on system calls, API calls, network activity, and memory analysis.

File Manipulation Tools


In computer systems, most information can be represented as a file: ordinary files are files, but so are directories and even entire storage devices. The concept of a file is the basic interface to information. Because of this, file manipulation tools can manage a great many tasks. As many operations are scripted, the ability to manipulate a file, returning specific elements or records, has great utility. This section looks at several tools used to manipulate files in Linux systems.

head

Head is a utility designed to return the first lines of a file. A common option is the number of lines one wishes to return. For example, head -5 returns the first five lines of a file.

tail

Tail is a utility designed to return the last lines of a file. A common option is the number of lines one wishes to return. For example, tail -5 returns the last five lines of a file.
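Both commands are easy to mirror in Python, which also shows why tail needs a little more care: it cannot know which lines are last until it has read to the end, so a fixed-size buffer keeps only the most recent n lines. A minimal sketch that works on any iterable of lines, such as an open file (the file path comes from the command line in the demo):

```python
import sys
from collections import deque
from itertools import islice
from typing import Iterable, List


def head(lines: Iterable[str], n: int = 10) -> List[str]:
    """First n lines, like `head -n N` (head shows 10 lines by default)."""
    return list(islice(lines, n))


def tail(lines: Iterable[str], n: int = 10) -> List[str]:
    """Last n lines, like `tail -n N`; the bounded deque discards older lines."""
    return list(deque(lines, maxlen=n))


if __name__ == "__main__":
    with open(sys.argv[1], encoding="utf-8") as f:
        print("".join(tail(f, 5)), end="")
```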

cat

Cat is a Linux command, short for concatenate, that can be used to create and manipulate files. It can display the contents of a file, handle multiple files, and, with output redirection (cat > newfile.txt), write data from standard input (stdin) into a new file. Here is an example of displaying a file:

# cat textfile.txt

The cat command can be piped through more or less to limit scrolling of long files:

# cat textfile.txt | more

If you need line numbers in the output, you can add the -n option. The output can be piped through various other Linux commands, providing significant manipulation capability. For instance, you can combine four files and sort the output into a fifth file, like so:

# cat textfile1.txt textfile2.txt textfile3.txt textfile4.txt | sort > textfile5.txt

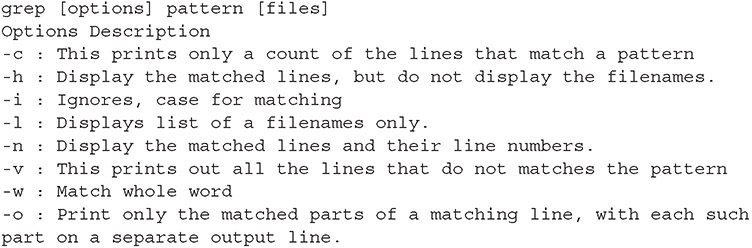

grep

Grep is a Linux utility that can perform pattern-matching searches on file contents. The name grep comes from “Globally search for Regular Expression and Print the matching lines.” Grep dates back to the beginning of the UNIX OS and was written by Ken Thompson. Today, the uses of grep are many. It can count the number of matches, and it can find lines with matching expressions, either case sensitive or case insensitive. It can use anchors (matching at the beginning or end of a line or word), wildcards, and negative searches (finding lines that do not contain a specified element), and it works with other tools through the redirection of inputs and outputs.

Grep has many options, including the use of regular expressions to perform matching. Here’s a sampling of the more common options:

There are many other options, including the display of lines before and after matches. To get a full feel of the breadth of options, consult the man page for grep.
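The core of grep’s behavior maps closely onto Python’s re module. The sketch below mirrors three common switches: -i (ignore case), -v (invert the match), and -c (count, here simply the length of the result). Python’s regular expression syntax is close to, but not identical to, grep’s basic and extended syntaxes, and the log lines are made-up examples.

```python
import re
from typing import Iterable, List


def grep(pattern: str, lines: Iterable[str], ignore_case: bool = False,
         invert: bool = False) -> List[str]:
    """Return lines matching `pattern` (like grep), or not matching if invert (-v)."""
    regex = re.compile(pattern, re.IGNORECASE if ignore_case else 0)
    # A line is kept when "has a match" differs from "invert".
    return [line for line in lines if bool(regex.search(line)) != invert]


if __name__ == "__main__":
    log = ["Failed password for root", "Accepted password for alice",
           "failed password for bob"]
    print(grep("failed", log, ignore_case=True))       # like grep -i failed
    print(len(grep("failed", log, ignore_case=True)))  # like grep -ic failed
    print(grep("^Failed", log))                        # anchored at line start
```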

chmod

Chmod is the Linux command used to change access permissions of a file. The general form of the command is

chmod <options> <permissions> <filename>

Permissions can be entered either in symbols or octal numbers. Let’s assume we want to set the following permissions: The user can read, write, and execute the file. Members of the group can read and execute it, and all others may only read it. In this case, we can use either of the following two commands, which are identical in function:

chmod u=rwx,g=rx,o=r <filename>

chmod 754 <filename>

The octal notation works as follows: 4 stands for “read,” 2 stands for “write,” 1 stands for “execute,” and 0 stands for “no permission.” Thus, for the user, 7 is the combination of permissions 4+2+1 (read, write, and execute). For the group, 5 is 4+0+1 (read, no write, and execute), and for all others, 4 is 4+0+0 (read, no write, and no execute).
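The octal arithmetic is easy to check in code. The Python sketch below converts the simple who=perms symbolic form into the octal mode and compares it with the same mode built from the standard stat constants. It deliberately handles only that absolute form (no +, -, or special bits), and unlike chmod, any class not mentioned in the spec ends up with no permissions rather than being left unchanged.

```python
import os
import stat

PERM_BITS = {"r": 4, "w": 2, "x": 1}
CLASS_SHIFT = {"u": 6, "g": 3, "o": 0}  # user, group, and other digit positions


def symbolic_to_octal(spec: str) -> int:
    """Convert an absolute spec such as "u=rwx,g=rx,o=r" to a mode like 0o754."""
    mode = 0
    for clause in spec.split(","):
        who, perms = clause.split("=")
        value = sum(PERM_BITS[p] for p in perms)  # e.g. r+x = 4+1 = 5
        for cls in who:
            mode |= value << CLASS_SHIFT[cls]
    return mode


def apply_mode(path: str, spec: str) -> None:
    """Set a file's permissions, equivalent to `chmod <octal> <path>`."""
    os.chmod(path, symbolic_to_octal(spec))


# The same 754 mode expressed with the standard library's named constants
MODE_754 = stat.S_IRWXU | stat.S_IRGRP | stat.S_IXGRP | stat.S_IROTH

if __name__ == "__main__":
    print(oct(symbolic_to_octal("u=rwx,g=rx,o=r")))
```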

logger

The Linux command logger adds entries to the system log, which on Debian-based distributions such as Ubuntu is /var/log/syslog. The logger command works from the command line, from scripts, or with input from other files, providing a versatile means of making log entries. The syntax is simple:

logger <message to put in the log>

This command writes the message text into the system log.
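Python scripts can make the same kind of entries through the standard logging module’s SysLogHandler. The sketch below sends to a syslog server over UDP; each message goes out prefixed with a priority value equal to the facility times 8 plus the severity, so the user facility (1) at informational severity (6) gives <14>. Pointing the handler at address="/dev/log" instead targets the local syslog socket, which is how logger typically reaches the local syslog daemon. The host, port, and logger name are assumptions for the example, and the demo uses a local UDP receiver in place of a real syslog server.

```python
import logging
import logging.handlers
import socket


def make_syslog_logger(host: str = "localhost", port: int = 514) -> logging.Logger:
    """Return a logger whose INFO-and-above records go to syslog over UDP."""
    log = logging.getLogger(f"syslog-demo-{host}-{port}")  # hypothetical name
    log.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(
        address=(host, port),
        facility=logging.handlers.SysLogHandler.LOG_USER,
    )
    log.addHandler(handler)
    return log


if __name__ == "__main__":
    # Local UDP receiver standing in for a syslog server.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))
    log = make_syslog_logger("127.0.0.1", receiver.getsockname()[1])
    log.info("backup job finished")
    print(receiver.recv(1024))  # the raw syslog datagram, starting with <14>
    receiver.close()
```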

Shell and Script Environments


One of the more powerful aspects of the Linux environment is the ability to create shell scripts. By combining a series of functions and redirecting inputs and outputs, you can perform significant data manipulation. Take a PCAP file, for instance. Suppose you need to extract specific data elements: you want only ping (echo) replies to a specific IP address, and for those records, you want only 1 byte of the data section. Using a series of commands in a shell script, you can create a dissector that reads the PCAP with tcpdump, extracts the fields, and then writes the desired elements to a file. You could do this with Python as well, and some tools can get you partway there. Bottom line: there is a lot you can do using the OS shell and scripts.
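As the paragraph notes, the same dissector can be written in Python. The sketch below parses a capture file directly with the struct module and keeps the first data byte of each ICMP echo reply (type 0) sent to a chosen address. It assumes a classic little-endian pcap file containing Ethernet/IPv4 traffic (pcapng, VLAN tags, and IPv6 are not handled). For a self-contained demo it builds a tiny synthetic capture; the helper names and addresses are hypothetical.

```python
import io
import ipaddress
import struct
from typing import BinaryIO, List

PCAP_MAGIC = 0xA1B2C3D4  # classic libpcap format, microsecond timestamps


def echo_reply_bytes(pcap: BinaryIO, dst_ip: str) -> List[int]:
    """Return the first data byte of each ICMP echo reply sent to dst_ip."""
    target = ipaddress.IPv4Address(dst_ip).packed
    (magic,) = struct.unpack("<I", pcap.read(24)[:4])  # 24-byte global header
    if magic != PCAP_MAGIC:
        raise ValueError("not a little-endian classic pcap file")
    results = []
    while len(record := pcap.read(16)) == 16:          # per-packet record header
        incl_len = struct.unpack("<IIII", record)[2]    # bytes captured for this packet
        frame = pcap.read(incl_len)
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":  # need Ethernet + IPv4
            continue
        ip = frame[14:]
        ihl = (ip[0] & 0x0F) * 4                        # IPv4 header length in bytes
        if ip[9] != 1 or ip[16:20] != target:           # protocol 1 = ICMP; match destination
            continue
        icmp = ip[ihl:]
        if len(icmp) > 8 and icmp[0] == 0:              # echo reply; data follows 8-byte header
            results.append(icmp[8])
    return results


def make_frame(dst_ip: str, icmp_type: int, data: bytes) -> bytes:
    """Build a synthetic Ethernet/IPv4/ICMP frame (test data only)."""
    eth = bytes(12) + b"\x08\x00"
    ip = (bytes([0x45, 0, 0, 0, 0, 0, 0, 0, 64, 1, 0, 0])
          + ipaddress.IPv4Address("10.0.0.1").packed
          + ipaddress.IPv4Address(dst_ip).packed)
    return eth + ip + bytes([icmp_type, 0, 0, 0, 0, 1, 0, 1]) + data


def synthetic_pcap(frames: List[bytes]) -> io.BytesIO:
    """Wrap frames in a minimal pcap file (linktype 1 = Ethernet)."""
    out = io.BytesIO()
    out.write(struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, 65535, 1))
    for frame in frames:
        out.write(struct.pack("<IIII", 0, 0, len(frame), len(frame)) + frame)
    out.seek(0)
    return out


if __name__ == "__main__":
    capture = synthetic_pcap([
        make_frame("10.0.0.5", 0, b"Ahello"),  # echo reply to target: kept
        make_frame("10.0.0.5", 8, b"B"),       # echo request: skipped
        make_frame("10.0.0.9", 0, b"C"),       # reply to another host: skipped
    ])
    print(echo_reply_bytes(capture, "10.0.0.5"))
```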

SSH

SSH (Secure Shell) is a cryptographically secured means of communicating with and managing systems on a network over an unsecured connection. It was originally designed as a replacement for the plaintext protocols of Telnet and other tools. When remotely accessing a system, it is important not to use a plaintext communication channel, as that would expose information such as passwords and other sensitive items to interception.


SSH is a cryptographically secured means of communicating and managing a network. SSH uses port 22 and is the secure replacement for Telnet.

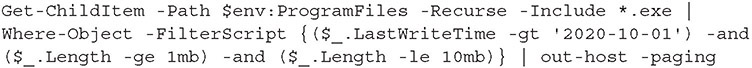

PowerShell

PowerShell is a Microsoft Windows–based task automation and configuration management framework, consisting of a command-line shell and scripting language. PowerShell is built on top of the .NET Common Language Runtime (CLR) and accepts and returns .NET objects. The commands used in PowerShell are called cmdlets, and they can be combined to process complex tasks. PowerShell can be run from a PowerShell Console prompt, or through the Windows PowerShell Integrated Scripting Environment (ISE), which is a host application for Windows PowerShell. The following example finds all executables within the Program Files folder that were last modified after October 1, 2020, and that are neither smaller than 1 MB nor larger than 10 MB:

Because it includes the Microsoft Windows object model, as well as numerous cmdlets designed to perform specific data access operations, PowerShell is an extremely powerful tool for managing Windows systems in an enterprise. Beginning with PowerShell Core 6, PowerShell runs on multiple platforms, including Windows, macOS, and Linux.


PowerShell is a powerful command-line scripting interface. PowerShell files use the .ps1 file extension.

Python

Python is a computer language commonly used for scripting and data analysis tasks facing system administrators and security personnel. Python is a full-fledged computer language. It supports objects, functional programming, and garbage collection, and most importantly has a very large range of libraries that can be used to bring functionality to a program. The downside is that it is interpreted, so speed is not a strong attribute. However, usability is high, and coupled with the library support, Python is a must-learn language for most security professionals.


Python is a general-purpose computer programming language that uses the file extension .py.

OpenSSL

OpenSSL is a general-purpose cryptography library that offers a wide range of cryptographic functions on Windows and Linux systems. Designed as a full-featured toolkit for the Transport Layer Security (TLS) and Secure Sockets Layer (SSL) protocols, it also handles many everyday cryptographic tasks. OpenSSL can perform the following tasks in either scripts or programs, offering access to cryptographic functions without having to develop the code:

Work with RSA and ECDSA keys

Create certificate signing requests (CSRs)

Verify CSRs

Create certificates

Generate self-signed certificates

Convert between encoding formats (PEM, DER) and container formats (PKCS12, PKCS7)

Check certificate revocation status

And more

One can view OpenSSL as a Swiss army knife for all things involving cryptography functions.
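The PEM/DER conversion in the list above shows how simple some of these format operations are: DER is the binary encoding, and PEM is the same bytes in Base64, wrapped at 64 characters between BEGIN and END marker lines. With OpenSSL, a certificate conversion looks like openssl x509 -in cert.pem -outform DER -out cert.der (file names hypothetical). The Python sketch below performs the same re-encoding by hand. It does not parse or validate the certificate structure, and the sample bytes are arbitrary.

```python
import base64


def der_to_pem(der: bytes, label: str = "CERTIFICATE") -> str:
    """Wrap DER bytes as PEM: Base64 in 64-character lines between markers."""
    b64 = base64.b64encode(der).decode("ascii")
    lines = [b64[i:i + 64] for i in range(0, len(b64), 64)]
    return f"-----BEGIN {label}-----\n" + "\n".join(lines) + f"\n-----END {label}-----\n"


def pem_to_der(pem: str) -> bytes:
    """Drop the BEGIN/END marker lines and Base64-decode the body back to DER."""
    body = "".join(line for line in pem.strip().splitlines()
                   if not line.startswith("-----"))
    return base64.b64decode(body)


if __name__ == "__main__":
    sample = bytes(range(100))  # arbitrary bytes standing in for a DER certificate
    pem = der_to_pem(sample)
    print(pem)
    print(pem_to_der(pem) == sample)
```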

Packet Capture and Replay Tools


Computers communicate and exchange data via network connections by way of packets. Software tools that enable the capturing, editing, and replaying of the packet streams can be very useful for a security professional. Whether you’re testing a system or diagnosing a problem, having the ability to observe exactly what is flowing between machines and being able to edit the flows is of great utility. The tools in this section provide this capability. They can operate either on live network traffic or recorded traffic in the form of packet capture (PCAP) files.

tcpreplay

Tcpreplay is the name for both a tool and a suite of tools. As a suite, tcpreplay is a group of free, open source utilities for editing and replaying previously captured network traffic. As a tool, it specifically replays a PCAP file on a network. Originally designed as an incident response tool, tcpreplay has utility in a wide range of circumstances where network packets are used. It can be used to test all manner of security systems through the use of crafted PCAP files to trip certain controls. It is also used to test online services such as web servers. If you need to send network packets to another machine, the tcpreplay suite has your answer.

tcpdump

The tcpdump utility is designed to analyze network packets either from a network connection or a recorded file. You also can use tcpdump to create files of packet captures, called PCAP files, and perform filtering between input and output, making it a valuable tool to lessen data loads on other tools. For example, if you have a complete packet capture file that has hundreds of millions of records, but you are only interested in one server’s connections, you can make a copy of the PCAP file containing only the packets associated with the server of interest. This file will be smaller and easier to analyze with other tools.

Wireshark

Wireshark is the gold standard for graphical analysis of network protocols. With dissectors that allow the analysis of virtually any network protocol, this tool can allow you to examine individual packets, monitor conversations, carve out files, and more. When it comes to examining packets, Wireshark is the tool. When it comes to using this functionality in a scripting environment, TShark provides the same processing in a scriptable form, producing a wide range of outputs, depending on the options set. Wireshark has the ability to capture live traffic, or it can use recorded packets from other sources.


When you’re examining packets, the differentiator is what you need to do. Wireshark allows easy exploration, tcpdump captures packets into PCAP files and filters them, and tcpreplay has a suite of editing and replay tools.

Forensic Tools


Digital forensics is the use of specific methods to determine who did what on a system at a specific time, or some variant of this question. Computers have a wide range of artifacts that can be analyzed to make these determinations. There are tools to collect these artifacts as well as tools used to analyze the data collected. In this section, we examine some of the primary tools used in these efforts. Digital forensic processes and procedures are covered in detail in Chapter 23. This is just an examination of some of the tools used.

dd

Data dump (dd) is a Linux command-line utility used to convert and copy files. On Linux systems, virtually everything is represented in storage as a file, and dd can read and/or write from/to these files, provided that function is implemented in the respective drivers. As a result, dd can be used for tasks such as backing up the boot sector of a hard drive, obtaining a fixed amount of random data, or copying (backing up) entire disks. The dd program can also perform conversions on the data as it is copied, including byte order swapping and conversion to and from the ASCII and EBCDIC text encodings. dd has the ability to copy everything, back up/restore a partition, and create/restore an image of an entire disk. Some common examples follow.

Here’s how to back up an entire hard disk:

# dd if=/dev/sda of=/dev/sdb

Here, if represents the input file and of represents the output file, so an exact copy of /dev/sda will be written to /dev/sdb. If any read errors occur, the preceding command will fail. Adding the parameter conv=noerror tells dd to continue copying past read errors; combining it as conv=noerror,sync also pads unreadable blocks with zeros, so data in the copy stays at the same offsets as in the original. Check the input and output files very carefully, because a mistake can overwrite the wrong device and cause you to lose all your data.

Here’s how to create an image of a hard disk:

# dd if=/dev/hda of=~/hdadisk.img

When doing a forensic data capture, rather than backing up the hard disk to another disk, you should create an image file of the hard disk and save it on another storage device. Disk images have many advantages, one being ease of use. Image files contain all the information on the associated source, including unused and previously used space.
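Forensic imaging pairs the copy with a hash comparison, so that you can later demonstrate that the image matches the source. The Python sketch below copies a source in fixed-size blocks, much as dd does with its bs= setting, then re-reads the image and compares SHA-256 digests. It works under simplifying assumptions: it does not handle read errors the way conv=noerror,sync does, reading a raw device such as /dev/sda requires root privileges, and the file names in the demo are hypothetical.

```python
import hashlib
from typing import Tuple

BLOCK_SIZE = 1024 * 1024  # 1 MiB per read, comparable to dd bs=1M


def sha256_of(path: str) -> str:
    """Hash a file or device in blocks so large images need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(BLOCK_SIZE):
            digest.update(chunk)
    return digest.hexdigest()


def image_and_verify(source: str, image: str) -> Tuple[str, str]:
    """Copy source to image block by block, then return both SHA-256 digests."""
    source_hash = hashlib.sha256()
    with open(source, "rb") as src, open(image, "wb") as dst:
        while chunk := src.read(BLOCK_SIZE):
            source_hash.update(chunk)  # hash exactly what was read
            dst.write(chunk)
    return source_hash.hexdigest(), sha256_of(image)  # re-read the image independently


if __name__ == "__main__":
    with open("evidence.bin", "wb") as f:  # hypothetical stand-in for a disk
        f.write(bytes(range(256)) * 4096)
    src_digest, img_digest = image_and_verify("evidence.bin", "evidence.img")
    print("match" if src_digest == img_digest else "MISMATCH")
```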

memdump

Linux has a utility program called memory dumper, or memdump. This program dumps system memory to the standard output stream, skipping over any holes in memory maps. By default, the program dumps the contents of physical memory (/dev/mem). The output from memdump is in the form of a raw dump. Because running memdump uses memory, it is important to send the output to a location that is off the host machine being copied, using a tool such as nc.

WinHex

WinHex is a hexadecimal file editor. This tool is very useful in forensically investigating files, and it provides a whole host of forensic functions such as the ability to read almost any file, display contents of the file, convert between character sets and encoding, perform hash verification functions, and compare files. As a native file reader/hex editor, it can examine specific application files without invoking the application and changing the data. WinHex is a commercial program that is part of the X-Ways forensic suite, which is a comprehensive set of digital forensic tools.
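The core view of any hex editor is an offset column, the bytes in hexadecimal, and a printable-ASCII column. A minimal Python sketch of that read-only display follows; it does no editing, and its layout is chosen for illustration rather than copied from WinHex.

```python
import sys


def hexdump(data: bytes, width: int = 16) -> str:
    """Render bytes as offset, hex bytes, and printable ASCII, one row per `width` bytes."""
    rows = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in chunk)
        text = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        rows.append(f"{offset:08x}  {hex_part:<{width * 3 - 1}}  {text}")
    return "\n".join(rows)


if __name__ == "__main__":
    with open(sys.argv[1], "rb") as f:  # opened read-only; the file is never modified
        print(hexdump(f.read(256)))
```

For a Windows executable, the first row begins 4d 5a ("MZ"), the kind of signature an examiner can check without launching the program.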

FTK Imager

FTK Imager is the company AccessData’s answer to dd. FTK Imager is a proprietary program that is free to use, and it is designed to capture an image of a hard drive (or other device) in a forensic fashion. Forensic duplications are bit-by-bit copies, supported by hashes to demonstrate that the copy and the original are exact duplicates in all ways. As with all forensically sound collection tools, FTK Imager retains the filesystem metadata (and the file path) and creates a log of the files copied. This process does not change file access attributes. FTK Imager is part of the larger, and commercial, FTK suite of forensic tools.

Autopsy

Autopsy is the open source answer for digital forensic tool suites. This suite, developed by Brian Carrier, has evolved over the past couple of decades into a community-supported open source project that can perform virtually all digital forensic functions. It runs on Windows and offers a comprehensive set of tools that can enable network-based collaboration and automated, intuitive workflows. It has tools to support hard drives, removable devices, and smartphones. It supports MD5 hash creation and lookup, deleted file carving, EXIF data extraction from JPEG images, indexed keyword searches, extension mismatch detection, e-mail message extraction, and artifact extraction from web browsers.

It has case management tools to support the functions of case analysis and reporting, including managing timelines.
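Extension mismatch detection compares a file’s name with its content: most file formats begin with a fixed signature (a "magic number"), so a file named invoice.pdf that starts with the MZ bytes of a Windows executable deserves a closer look. The Python sketch below illustrates the idea with a handful of well-known signatures. It is not Autopsy’s implementation, whose signature database is far larger, and the file names are hypothetical.

```python
import sys
from pathlib import Path

# A few well-known file signatures; real tools use far larger tables.
SIGNATURES = {
    b"\xff\xd8\xff": {".jpg", ".jpeg"},
    b"\x89PNG\r\n\x1a\n": {".png"},
    b"%PDF-": {".pdf"},
    b"PK\x03\x04": {".zip", ".docx", ".xlsx", ".pptx", ".jar"},  # ZIP-based formats
    b"MZ": {".exe", ".dll", ".sys"},                              # Windows PE files
}


def extension_mismatch(path: str) -> bool:
    """True if the file starts with a known signature that its extension does not fit."""
    with open(path, "rb") as f:
        header = f.read(16)
    ext = Path(path).suffix.lower()
    for magic, extensions in SIGNATURES.items():
        if header.startswith(magic):
            return ext not in extensions
    return False  # unknown signature: no opinion


if __name__ == "__main__":
    for name in sys.argv[1:]:
        print(name, "MISMATCH" if extension_mismatch(name) else "ok")
```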

Tool Suites


Security professionals use a variety of toolsets that can also be used for malicious purposes. Penetration testers use these toolsets to test the security posture of a system, but the same tools in the hands of an adversary become instruments of attack.

Metasploit

Metasploit is a framework that enables attackers to exploit systems (bypass controls) and inject payloads (attack code). Metasploit is widely distributed, powerful, and one of the most popular tools used by attackers. When new vulnerabilities are discovered in systems, Metasploit exploit modules are quickly created in the community, making this the go-to tool for most professionals.

Kali

Kali is a Linux distribution that is preloaded with many security tools primarily designed for penetration testing. At the time of writing, the current version was Kali Linux 2021.1. It’s regularly updated by the development team and includes a whole host of preconfigured, preloaded tools, including Metasploit, Social-Engineering Toolkit, and others. It can be found at https://kali.org.

Parrot OS

Parrot OS is a GNU/Linux distribution based on Debian and designed with tools for penetration testing and incident response operations. It has tools to support a wide range of cyber security operations, from penetration testing to digital forensics and reverse engineering of malware. This is a free distribution and is available at https://parrotsec.org.

Security Onion

Security Onion is a Linux distribution that is preloaded with many security tools primarily designed for use during incident response, threat hunting, enterprise security monitoring, and log management. It includes TheHive, Playbook and Sigma, Fleet and osquery, CyberChef, Elasticsearch, Logstash, Kibana, Suricata, Zeek, Wazuh, and many other security tools. It can also be configured as a collector and used in an enterprise to automate the collection and processing of information.

Social-Engineering Toolkit

The Social-Engineering Toolkit (SET) is a set of tools that can be used to target attacks at the people using systems. It has applets that can be used to create phishing e-mails, Java attack code, and other social engineering–type attacks. The SET is included in BackTrack/Kali and other distributions.

Cobalt Strike

Cobalt Strike is a powerful application that can replicate advanced threats and assist in the execution of targeted attacks on systems. Cobalt Strike expands the Armitage tool’s capabilities, adding advanced attack methods.

Core Impact

Core Impact is an expensive commercial suite of penetration test tools. It has a wide spectrum of tools and proven attack capabilities across an enterprise. Although it’s expensive, the level of automation and integration makes this a powerful suite of tools.

Burp Suite

Burp Suite is a set of tools for web application security testing, built around an intercepting proxy and extended with web application scanning and other tools for examining web-based content. Burp Suite is a commercial tool, but it is reasonably priced, well liked, and highly utilized in the pen-testing marketplace.

Penetration Testing

Understanding a system’s risk exposure is not, in actuality, a simple task. Using a series of tests, one can estimate the risk that a system poses to the enterprise. Vulnerability tests detail the known vulnerabilities and the degree to which they are exposed. Note that zero-day vulnerabilities are, by definition, not yet known, so the risk from them remains unknown. A second form of testing, penetration testing, is used to simulate an adversary to see whether the controls in place perform to the desired level.

A penetration test (or pen test) simulates an attack from a malicious outsider, probing your network and systems for a way in (often any way in). Pen tests are often the most aggressive form of security testing and can take on many forms, depending on what is considered “in” or “out” of scope. For example, some pen tests simply seek to find a way into the network—any way in. This can range from an attack across network links to social engineering to having a tester physically break into the building. Other pen tests are limited—only attacks across network links are allowed, with no physical attacks.

Regardless of the scope and allowed methods, the goal of a pen test is the same: to determine whether an attacker can bypass your security and access your systems. Unlike a vulnerability assessment, which typically just catalogs vulnerabilities, a pen test attempts to exploit vulnerabilities to see how much access a vulnerability allows. Penetration tests are useful in the following ways:

- They can show relationships between a series of “low-risk” items that can be sequentially exploited to gain access (making them a “high-risk” item in the aggregate).
- They can be used to test the training of employees, the effectiveness of your security measures, and the ability of your staff to detect and respond to potential attackers.
- They can often identify and test vulnerabilities that are difficult or even impossible to detect with traditional scanning tools.

An effective penetration test offers two critical elements. First, it focuses on the threat vectors most commonly employed in the current threat environment. Using zero-day exploits that no one else has does not help an organization understand its security defenses against the existing threat environment. If the company wants to test its defenses against real-world attackers, the test must mimic them. The second critical element is a focus on real-world attacker objectives, such as reaching and stealing intellectual property. Bypassing defenses without achieving the attacker’s objectives, again, does not fully exercise the organization’s security capabilities. The objective is to measure actual risk under real-world conditions.

Penetration Testing Authorization

Penetration tests are used by organizations that want a real-world test of their security. Unlike actual attacks, penetration tests are conducted with the knowledge of the organization, although some types of penetration tests occur without the knowledge of the employees and departments being tested.

Obtaining penetration testing authorization is the first step in penetration testing. In this step, the testing team obtains permission from the system owner, in advance, to perform the penetration test. The penetration test authorization document is a key element in the communication plan for the test. Penetration tests are typically used to verify threats or to test security controls. They do this by bypassing security controls and exploiting vulnerabilities using a variety of tools and techniques, including the attack methods discussed earlier in this book. Social engineering, malware, and vulnerability exploit tools are all fair game when it comes to penetration testing. Because penetration tests actively exploit vulnerabilities rather than merely cataloging them, they help verify that a risk actually exists.
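An authorization document typically defines what may be tested and when. Testing tools can enforce those limits before every action. The sketch below assumes the scope has been reduced to a list of authorized networks, a set of excluded hosts, and a testing window. All of the addresses and dates are hypothetical placeholders, not values from any real engagement.

```python
import ipaddress
from datetime import datetime

# Hypothetical scope taken from a signed authorization document.
AUTHORIZED_NETWORKS = [ipaddress.ip_network("10.10.0.0/16"),
                       ipaddress.ip_network("192.168.50.0/24")]
EXCLUDED_HOSTS = {ipaddress.ip_address("10.10.1.5")}  # e.g., a fragile production server
WINDOW_START = datetime(2024, 6, 1, 22, 0)
WINDOW_END = datetime(2024, 6, 2, 6, 0)


def in_scope(target, when):
    """Return True only if the target IP is inside an authorized network,
    is not explicitly excluded, and the time falls inside the test window."""
    addr = ipaddress.ip_address(target)
    if addr in EXCLUDED_HOSTS:
        return False
    # Membership is False (not an error) when IPv4/IPv6 versions differ.
    if not any(addr in net for net in AUTHORIZED_NETWORKS):
        return False
    return WINDOW_START <= when <= WINDOW_END
```

Checking scope in code is a safeguard, not a substitute for the written authorization. The document remains the source of truth.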

Reconnaissance

After the penetration test is planned, reconnaissance is the first step in performing a penetration test. The objective of reconnaissance is to obtain an understanding of the system and its components that someone wants to attack. Multiple methods can be employed to achieve this objective, and in most cases, multiple methods will be employed to ensure good coverage of the systems and to find the potential vulnerabilities that may be present. There are two classifications for reconnaissance activities: active and passive. Active reconnaissance testing involves tools that actually interact with the network and systems in a manner that their use can be observed. Active reconnaissance can provide a lot of useful information; you just need to be aware that this may alert defenders to the impending attack. Passive reconnaissance is the use of tools that do not provide information to the network or systems under investigation. Google hacking is a prime example; Google and other third parties such as Shodan allow you to gather information without sending packets to a system where they could be observed.
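To see why active reconnaissance is observable, consider the simplest form of port scan: a full TCP connect to each port. The sketch below is a simplified illustration and is far less capable than a real scanner such as nmap. Every connection attempt it makes is a packet exchange that the target can log. Run it only against systems you are authorized to test.

```python
import socket


def tcp_connect_scan(host, ports, timeout=0.5):
    """Attempt a full TCP connection to each port and return the ports that
    accepted. Each attempt sends packets to the target, so the target's
    logs, firewall, or IDS can record it (an active technique)."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising
                if s.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # timeouts or resolution errors: treat as not open
    return open_ports
```

By contrast, a passive approach such as querying a search engine's cached data sends nothing to the target at all.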

Passive vs. Active Tools

Tools can be classified as active or passive. Active tools interact with a target system in a fashion where their use can be detected. Scanning a network with nmap (Network Mapper) is an active act that can be detected. In the case of nmap, the tool may not be specifically detectable, but its use, the sending of packets, can be detected. When you need to map out your network or look for open services on one or more hosts, a port scanner is probably the most efficient tool for the job. Passive tools are those that do not interact with the system in a manner that would permit detection through sending packets or altering traffic. An example of a passive tool is Tripwire, which can detect changes to a file based on hash values. Another passive example is OS mapping by analyzing TCP/IP traces with a tool such as Wireshark. Passive sensors can use existing traffic to provide data for analysis.
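The passive, hash-based change detection described above for Tripwire can be sketched as a baseline-and-compare routine. This is only an illustration of the technique, not Tripwire's implementation or file format. It reads each file whole for brevity and assumes the monitored tree is readable.

```python
import hashlib
import os


def snapshot(root):
    """Map each file path under root to the SHA-256 digest of its contents."""
    digests = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                digests[path] = hashlib.sha256(f.read()).hexdigest()
    return digests


def compare(baseline, current):
    """Report files added, removed, or modified since the baseline was taken."""
    added = sorted(current.keys() - baseline.keys())
    removed = sorted(baseline.keys() - current.keys())
    modified = sorted(p for p in baseline.keys() & current.keys()
                      if baseline[p] != current[p])
    return {"added": added, "removed": removed, "modified": modified}
```

Nothing here touches the network or alters the monitored files, which is what makes the approach passive. In practice, the baseline itself must be stored where an attacker cannot rewrite it.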

Pivoting

Pivoting is a key method used by a pen tester or attacker to move across a network. The first step is the attacker obtaining a presence on a machine; let’s call it machine A. The attacker then remotely, through this machine, examines the network again, using machine A’s IP address. This enables an attacker to see sections of networks that were not observable from the previous position. Performing a pivot is not easy because the attacker not only must establish access to machine A but also must move their tools to machine A and control those tools remotely from another machine, all while not being detected. This activity, also referred to as traversing a network, is one place where defenders can observe the attacker’s activity. When an attacker traverses the network, network security monitoring tools will detect the activity as unusual with respect to both the account being utilized and the actual traversing activity.
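From the defender's side, one common way to spot traversal is to flag accounts that log on to hosts they have never used before. The sketch below assumes authentication events have already been reduced to hypothetical `(account, destination_host)` tuples. It is one simple heuristic, and it will also fire on legitimate new activity.

```python
from collections import defaultdict


def new_access_alerts(history, new_events):
    """Flag (account, host) pairs in new_events never seen in history.

    Both arguments are iterables of (account, destination_host) tuples,
    for example extracted from authentication logs.
    """
    seen = defaultdict(set)
    for account, host in history:
        seen[account].add(host)
    alerts = []
    for account, host in new_events:
        if host not in seen[account]:
            alerts.append((account, host))
            seen[account].add(host)  # alert only once per new pair
    return alerts
```

A service account that suddenly authenticates to a domain controller, for example, would stand out even if its credentials were valid.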

Initial Exploitation

A key element of a penetration test is the actual exploitation of a vulnerability. Exploiting the vulnerabilities encountered serves two purposes. First, it demonstrates the level of risk that is actually present. Second, it demonstrates the viability of the attack vector. During a penetration test, the exploitation activity stops short of destructive activity. Initial exploitation is only the first step, because demonstrating that a vulnerability is present and exploitable does not by itself demonstrate that the objective of the penetration test is achievable. In many cases, multiple methods, including pivoting (network traversal) and escalation of privilege to perform activities with administrator privileges, are used to achieve the final desired effect.

Persistence

Persistence is one of the key elements of a whole class of attacks referred to as advanced persistent threats (APTs). APTs place two elements at the forefront of all activity: invisibility from defenders and persistence. APT actors tend to be patient and use techniques that make it difficult to remove them once they have gained a foothold. Persistence can be achieved via a wide range of mechanisms, from agents that beacon back out to the attacker, to malicious accounts, to vulnerabilities deliberately introduced to enable reinfection.
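Beaconing agents often call home on a fixed schedule, and defenders can look for that regularity. The sketch below is one heuristic under stated assumptions. It takes a list of connection timestamps, in seconds, from one host to one external address and flags them when the gaps between connections are nearly constant. The thresholds are hypothetical, and real implants often add random jitter to defeat exactly this check.

```python
from statistics import mean, pstdev


def looks_like_beacon(timestamps, min_events=6, max_jitter_ratio=0.1):
    """Heuristic: True if connection times are highly regular.

    Uses the coefficient of variation (standard deviation / mean) of the
    gaps between consecutive connections; low values mean a steady rhythm.
    """
    if len(timestamps) < min_events:
        return False
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    avg = mean(gaps)
    if avg <= 0:
        return False
    return pstdev(gaps) / avg <= max_jitter_ratio
```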

Escalation of Privilege

Escalation of privilege is the movement to an account that enables root or higher-level privilege. Typically this occurs when a normal user account exploits a vulnerability in a process that is operating with root privilege, and as a result of the specific exploit, the attacker assumes the root-level privileges of the exploited process. Once this level of privilege is achieved, additional steps are taken to provide persistent access back to the privileged level. With root access, the attacker can alter logs and make other system changes, expanding their ability to achieve their objective and to remove information, such as log entries, that could lead to detection of the attack.
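On Unix-like systems, a classic example of a process "operating with root privilege" is a program owned by root with the setuid bit set. Such a program runs as root no matter who launches it. Testers and auditors routinely enumerate these programs. The sketch below assumes Unix file-mode semantics and simply lists such files under a directory. It does not test them for vulnerabilities.

```python
import os
import stat


def is_setuid_root(mode, uid):
    """True if the mode has the setuid bit set and the owner is root (UID 0)."""
    return bool(mode & stat.S_ISUID) and uid == 0


def find_setuid_root(root):
    """Walk a directory tree and list regular files that are setuid root."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # lstat: do not follow symlinks
            except OSError:
                continue  # unreadable or vanished file
            if stat.S_ISREG(st.st_mode) and is_setuid_root(st.st_mode, st.st_uid):
                hits.append(path)
    return sorted(hits)
```

Any unexpected entry in this list deserves scrutiny, because a flaw in it would hand root privilege to an ordinary user.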

Vulnerability Testing

Vulnerability Testing

Vulnerability tests are used to scan for specific vulnerabilities or weaknesses. These weaknesses, if left unguarded, can result in loss. Obtaining vulnerability testing authorization from management before commencing the test is the step designed to prevent avoidable accidents. Just as it is important to obtain authorization for penetration tests, it is important to obtain permission before running vulnerability tests against active machines. This permission is usually a multiperson process and involves explaining the risk of these tests and their purpose to the people running the system. The results of the vulnerability tests are then analyzed to determine how the security controls responded, and management is informed of the adequacy of the defenses in place.

Vulnerability Scanning Concepts

One valuable method that can help administrators secure their systems is vulnerability scanning, which is the process of examining your systems and network devices for holes, weaknesses, and issues and then finding them before a potential attacker does. Specialized tools called vulnerability scanners are designed to help administrators discover and address vulnerabilities. But there is much more to vulnerability scanning than simply running tools and examining the results—administrators must be able to analyze any discovered vulnerabilities and determine their severity, how to address them if needed, and whether any business processes will be affected by potential fixes. Vulnerability scanning can also help administrators identify common misconfigurations in account setup, patch level, applications, and operating systems. Most organizations look at vulnerability scanning as an ongoing process because it is not enough to scan systems once and assume they will be secure from that point on.

Vulnerability scanning is also used to examine services on computer systems for known vulnerabilities in software. At its core, this is a simple process: determine the specific version of a software program and then look up the known vulnerabilities for that version. The Common Vulnerabilities and Exposures (CVE) database (https://cve.mitre.org/cve/) can be used as a repository; at the time of writing, it had recorded over 145,000 specific vulnerabilities. At that scale, the lookup is impractical to do by hand, and numerous software programs can be used to perform this function.
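The version-to-vulnerability lookup at the heart of this process can be shown with a tiny, illustrative table. The only entry is a real one: Heartbleed (CVE-2014-0160), which affected OpenSSL 1.0.1 through 1.0.1f. The table structure and the `lookup` helper are hypothetical. Real scanners draw on full vulnerability feeds and use more careful version-range matching than the exact match used here.

```python
# Illustrative lookup table mapping (product, version) to CVE IDs.
# Heartbleed (CVE-2014-0160) affected OpenSSL 1.0.1 through 1.0.1f.
KNOWN_VULNERABLE = {
    ("openssl", v): ["CVE-2014-0160"]
    for v in ["1.0.1", "1.0.1a", "1.0.1b", "1.0.1c", "1.0.1d", "1.0.1e", "1.0.1f"]
}


def lookup(product, version):
    """Return known CVE IDs for an exact product/version match, else []."""
    return KNOWN_VULNERABLE.get((product.lower(), version), [])
```

For example, a service reporting OpenSSL 1.0.1e would return the Heartbleed entry, while 1.0.1g (the fixed release) would return nothing.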

False Positives

Any system that uses a measurement of some attribute to detect some other condition can be subject to errors. When a measurement is used as part of a decision process, external factors can introduce errors into the measurement, and those errors can carry through to an incorrect final result. The possibility of errors and their influence must therefore be part of the decision process. For example, when a restaurant cooks a steak to medium, the easiest way to determine whether it is cooked correctly would be to cut it open and look. But this can’t be done in the kitchen, so other measures are used, such as time and temperature. The moment of truth comes when the customer cuts into the steak, because that is when the actual condition is revealed.

False positives and false negatives depend on the results of the test and the true outcome. If you test for something, get a positive indication, but the indication is wrong, that is a false positive. If you test for something, do not get an indication, but the results should have been true, this is a false negative.

A false positive occurs when expected or normal behavior is wrongly identified as malicious. The detection of a failed login followed by a successful login being labeled as malicious, when the activity was caused by a user making a mistake after recently changing their password, is an example of a false positive.

False Negatives

False negative results are the opposite of false positive results. If you test something and it comes back negative, but it was in fact positive, then the result is a false negative. For example, if you scan ports to find any open ones and you miss a port that is open because the scanner could not detect it being open, and you do not run a test because of this false result, you are suffering from a false negative error.

When an intrusion detection system (IDS) does not generate an alert from a malware attack, this is a false negative.
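The four possible outcomes of a detection test can be tallied directly once the true outcome is known. The sketch below assumes each result is a hypothetical `(alerted, actually_malicious)` pair of booleans, such as alerts reviewed after an incident or a test against labeled traffic.

```python
def confusion_counts(results):
    """Count outcomes from (alerted, actually_malicious) boolean pairs."""
    counts = {"true_positive": 0, "false_positive": 0,
              "true_negative": 0, "false_negative": 0}
    for alerted, malicious in results:
        if alerted and malicious:
            counts["true_positive"] += 1
        elif alerted and not malicious:
            counts["false_positive"] += 1   # normal activity flagged
        elif not alerted and malicious:
            counts["false_negative"] += 1   # attack missed
        else:
            counts["true_negative"] += 1
    return counts
```

Tracking these counts over time shows whether tuning a sensor is trading missed attacks for fewer noisy alerts, or the reverse.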

Log Reviews

A properly configured log system can provide tremendous insight into what has happened on a computer system. The key is in proper configuration so that you capture the events you want without adding extraneous data. That being said, a log system is a potential treasure trove of useful information to someone attacking a system. It will have information on systems, account names, what has worked for access, and what hasn’t. Log reviews can provide information as to security incidents, policy violations (or attempted policy violations), and other abnormal conditions that require further analysis.
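A simple log review often starts by counting failed logins. The sketch below assumes OpenSSH server messages collected through syslog (for example, in an authentication log). It matches the "Failed password" lines that sshd writes for both existing and invalid users. The sample lines in the test use documentation IP ranges and are fabricated for illustration.

```python
import re
from collections import Counter

# Matches OpenSSH messages such as:
#   Failed password for root from 203.0.113.7 port 52144 ssh2
#   Failed password for invalid user admin from 203.0.113.7 port 52144 ssh2
FAILED = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def failed_logins(lines):
    """Count failed password attempts per (user, source address)."""
    counts = Counter()
    for line in lines:
        m = FAILED.search(line)
        if m:
            counts[(m.group(1), m.group(2))] += 1
    return counts
```

A burst of failures against many user names from one address suggests password guessing. A single failure followed by a success is more often a user mistyping.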

Credentialed vs. Non-Credentialed

Vulnerability scans can be performed with and without credentials. Performing a scan without credentials can provide some information as to the state of a service and whether or not it might be vulnerable. This is the view of a true outsider on the network. It can be done quickly, in an automated fashion, across large segments of a network. However, without credentials, it is not possible to see the detail that a login provides. Credentialed vulnerability scans can look deeper into a host and return more accurate and critical risk information. Frequently these scans are used together. First, a non-credentialed scan is performed across large network segments using automated tools. Then, based on these preliminary results, more detailed credentialed scans are run on machines with the most promise for vulnerabilities.

Credentialed scans are more involved, requiring credentials and extra steps to log in to a system, whereas non-credentialed scans can be done more quickly across multiple machines using automation. Credentialed scans can reveal additional information over non-credentialed scans.

Intrusive vs. Non-Intrusive

Vulnerability scans can be intrusive or non-intrusive to the system being scanned. A non-intrusive scan is typically a simple scan of open ports and services, whereas an intrusive scan attempts to leverage potential vulnerabilities through an exploit to demonstrate the vulnerabilities. This intrusion can result in system crashes and is therefore referred to as intrusive.

Applications

Applications are the software programs that perform data processing on the information in a system. Being the operational element with respect to the data, as well as the typical means of interfacing between users and the data, applications are common targets of attackers. Vulnerability scans assess the strength of a deployed application against the desired performance of the system when being attacked. Application vulnerabilities represent some of the riskier problems in the enterprise because the applications are necessary, and there are fewer methods to handle miscommunications of data the higher up the stack one goes.

Web Applications

Web applications are just applications that are accessible across the Web. This method of accessibility brings convenience and greater potential exposure to unauthorized activity. All the details of standard applications still apply, but the placing of the system on the Web adds additional burdens on the system to prevent unauthorized access and keep web-based risks under control. From a vulnerability scan perspective, a web application is like an invitation to explore how well it is secured. At greatest risk are homegrown web applications because they seldom have the level of input protections needed for a hostile web environment.

Network

The network is the element that connects all the computing systems together, carrying data between the systems and users. The network can also be used in vulnerability scanning to access connected systems. The most common vulnerability scans are performed across the network in a sweep, where all systems are scanned, mapped, and enumerated per the ports and services. This information can then be used to further target specific scans of individual systems in a more detailed fashion, using credentials and potentially intrusive operations.

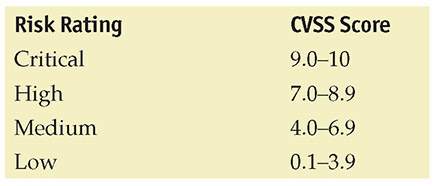

Common Vulnerabilities and Exposures (CVE)/Common Vulnerability Scoring System (CVSS)

The Common Vulnerabilities and Exposures (CVE) enumeration is a list of known vulnerabilities in software systems. Each vulnerability in the list has an identification number, a description, and a reference. This list is the basis for most vulnerability scanner systems, as the scanners determine the software version and look up known or reported vulnerabilities. The Common Vulnerability Scoring System (CVSS) is a scoring system to determine how risky a vulnerability can be to a system. The CVSS score ranges from 0 to 10. As the CVSS score increases, so does the severity of risk from the vulnerability. Although the CVSS can’t take into account where the vulnerability is in an enterprise, it can help determine severity using metrics such as how easy the vulnerability is to exploit, whether it requires user interaction, what level of privilege is required, and so on. Together, these two sets of information can provide a lot of data on the potential risk associated with a specific software system.

CVSS scores and their associated risk severity are as follows:

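| CVSS Score | Severity |
|---|---|
| 0.0 | None |
| 0.1-3.9 | Low |
| 4.0-6.9 | Medium |
| 7.0-8.9 | High |
| 9.0-10.0 | Critical |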

The Common Vulnerabilities and Exposures (CVE) is a list of known vulnerabilities, each with an identification number, description, and reference. The Common Vulnerability Scoring System (CVSS) determines how risky a vulnerability can be to a system. The CVSS score ranges from 0 to 10. As it increases, so does the severity of risk from the vulnerability.
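The qualitative severity bands defined in the CVSS v3.x specification can be expressed as a small lookup. This is useful, for example, when sorting scanner findings for remediation. The function name is illustrative. The band boundaries assume scores reported to one decimal place, as CVSS scores are.

```python
def cvss_severity(score):
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1-3.9
    if score < 7.0:
        return "Medium"    # 4.0-6.9
    if score < 9.0:
        return "High"      # 7.0-8.9
    return "Critical"      # 9.0-10.0
```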

Configuration Review

System configurations play a significant role in system security. Misconfigurations leave a system in a more vulnerable state, sometimes even causing security controls to be bypassed completely. Verification of system configurations is an important vulnerability check item; if you find a misconfiguration, the chances are high that it exposes a vulnerability. Configuration reviews are important enough that they should be automated and performed on a regular basis. There are protocols and standards for measuring and validating configurations. The Common Configuration Enumeration (CCE) and Common Platform Enumeration (CPE) guides, as part of the National Vulnerability Database (NVD) maintained by NIST, are places to start for details.
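An automated configuration review compares actual settings against a required baseline. The sketch below does this for an OpenSSH server configuration file. The baseline values are a hypothetical policy, not a CCE or CPE entry. Following sshd's rule that the first value obtained for a keyword is the one used, later duplicates are ignored. For simplicity, parsing stops at the first `Match` block, and only whitespace-separated `Keyword value` lines are handled.

```python
# Hypothetical baseline: directive (lowercase) -> required value (lowercase).
BASELINE = {
    "permitrootlogin": "no",
    "passwordauthentication": "no",
    "permitemptypasswords": "no",
}


def review_sshd_config(text):
    """Return (directive, found, expected) for each baseline violation.

    A directive that is absent is reported with found=None, because the
    compiled-in default may not meet the baseline.
    """
    found = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        key = parts[0].lower()
        if key == "match":
            break  # conditional blocks are out of scope for this sketch
        if len(parts) == 2:
            found.setdefault(key, parts[1].strip().lower())  # first value wins
    return [(key, found.get(key), expected)
            for key, expected in BASELINE.items()
            if found.get(key) != expected]
```

Run on a schedule across many servers, a check like this turns configuration review into a routine, repeatable task rather than an occasional manual inspection.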

Passively Test Security Controls

When an automated vulnerability scanner is used to examine a system for vulnerabilities, one of the side effects is the passive testing of the security controls. This is referred to as passive testing because the target of the vulnerability scanner is the system, not the controls. If the security controls are effective, then the vulnerability scan may not properly identify the vulnerability. If the security control prevents a vulnerability from being attacked, then it may not be exploitable.

Identify Vulnerability

Vulnerabilities are known entities; otherwise, the scanners would not have the ability to scan for them. When a scanner finds a vulnerability present in a system, it makes a log of the fact. In the end, an enumeration of the vulnerabilities that were discovered is part of the vulnerability analysis report.

Identify Lack of Security Controls

If a vulnerability is exposed to the vulnerability scanner, then a security control is needed to prevent the vulnerability from being exploited. As vulnerabilities are discovered, the specific environment of each vulnerability is documented. As the security vulnerabilities are all known in advance, the system should have controls in place to protect against exploitation. Part of the function of the vulnerability scan is to learn where controls are missing or are ineffective.

Identify Common Misconfigurations

One source of failure with respect to vulnerabilities is in the misconfiguration of a system. Common misconfigurations include access control failures and failure to protect configuration parameters. Vulnerability scanners can be programmed to test for these specific conditions and report on them.

Tech Tip

Misconfiguration Testing

One of the key objectives of testing and penetration testing is to discover misconfigurations or weak configurations. Misconfigurations and/or weak configurations represent vulnerabilities in systems that can increase risk to the system. Discovering them so that appropriate mitigations can be employed is an essential security process.

False Results

Tools are not perfect. Sometimes they will erroneously report things as an issue when they really are not a problem, and other times they won’t report an issue at all. As previously discussed, a false positive is an incorrect finding—something that is incorrectly reported as a vulnerability. The scanner tells you there is a problem when in reality nothing is wrong. A false negative is when the scanner fails to report a vulnerability that actually does exist; the scanner simply missed the problem or didn’t report it as a problem.

System Testing

Systems can be tested in a variety of manners. One method of describing the test capabilities relates to the information given to the tester. Testers can have varying levels of detail, from complete knowledge of a system and how it works to zero knowledge. These differing levels of testing are referred to as white box, gray box, and black box testing.

Black Box Testing

Black box testing is a testing technique where testers have no knowledge of the internal workings of the software they are testing. They treat the entire software package as a “black box”—they put input in and look at the output. They have no visibility into how the data is processed inside the application, only the output that comes back to them. Test cases for black box testing are typically constructed around intended functionality (what the software is supposed to do) and focus on providing both valid and invalid inputs. Black box software testing techniques are useful for examining any web-based application. Web-based applications are typically subjected to a barrage of valid, invalid, malformed, and malicious input from the moment they are exposed to public traffic.
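The black box approach can be illustrated by testing a function purely against its intended behavior. The sketch below treats Python's standard `ipaddress.ip_address` as the black box. Its intended behavior is to accept valid IP address strings and reject everything else with a `ValueError`. The test cases are chosen without looking at the implementation. The harness and the case lists are illustrative, not a complete test suite.

```python
import ipaddress

# Cases built only from intended behavior: valid inputs must be accepted;
# invalid, malformed, or hostile inputs must be rejected with ValueError.
VALID = ["192.0.2.1", "0.0.0.0", "255.255.255.255", "2001:db8::1", "::1"]
INVALID = ["256.1.1.1", "192.0.2", "192.0.2.1.5", "", "abc",
           "192.0.2.1; rm -rf /", "1" * 10_000, "2001:db8:::1"]


def run_black_box_tests(func):
    """Return a list of failures; an empty list means every case passed."""
    failures = []
    for value in VALID:
        try:
            func(value)
        except Exception as exc:
            failures.append(("rejected valid input", value, repr(exc)))
    for value in INVALID:
        try:
            func(value)
            failures.append(("accepted invalid input", value, None))
        except ValueError:
            pass  # expected: invalid input rejected cleanly
        except Exception as exc:
            failures.append(("unexpected error", value, repr(exc)))
    return failures


if __name__ == "__main__":
    print(run_black_box_tests(ipaddress.ip_address))
```

The same harness can be pointed at any validator with the same contract. A naive check that only counts dots, for instance, would be caught accepting `256.1.1.1`.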

White Box Testing

White box testing is almost the polar opposite of black box testing. Sometimes called clear-box testing, white box techniques test the internal structures and processing within an application for bugs, vulnerabilities, and so on. A white box tester will have detailed knowledge of the application they are examining—they’ll develop test cases designed to exercise each path, decision tree, input field, and processing routine of the application. White box testing is often used to test paths within an application (if X, then go do this; if Y, then go do that), data flows, decision trees, and so on.

Gray Box Testing

What happens when you mix a bit of black box testing and a bit of white box testing? You get gray box testing. In a gray box test, the testers typically have some knowledge of the software, network, or systems they are testing. For this reason, gray box testing can be efficient and effective because testers can often quickly eliminate entire testing paths, test cases, and toolsets and can rule out things that simply won’t work and are not worth trying.

Pen Testing vs. Vulnerability Scanning

Penetration testing is the examination of a system for vulnerabilities that can be exploited. The key is exploitation. There may be vulnerabilities in a system. In fact, one of the early steps in penetration testing is the examination for vulnerabilities, but the differentiation comes in the follow-on steps, which examine the system in terms of exploitability.

Auditing

Auditing, in the financial community, is done to verify the accuracy and integrity of financial records. Many standards have been established in the financial community about how to record and report a company’s financial status correctly. In the computer security world, auditing serves a similar function. It is a process of assessing the security state of an organization compared against an established standard.

The important elements here are the standards. Organizations from different communities may have widely different standards, and any audit will need to consider the appropriate elements for the specific community. Audits differ from security or vulnerability assessments in that assessments measure the security posture of the organization but may do so without any mandated standards against which to compare them. In a security assessment, general security “best practices” can be used, but they may lack the regulatory teeth that standards often provide. Penetration tests can also be encountered—these tests are conducted against an organization to determine whether any holes in the organization’s security can be found. The goal of the penetration test is to penetrate the security rather than measure it against some standard. Penetration tests are often viewed as white hat hacking in that the methods used often mirror those that attackers (often called black hats) might use.

CompTIA updated a number of terms in the most recent exam objectives for CompTIA Security+ and has moved away from terms like white hat and black hat for hackers, using authorized and unauthorized instead. These terms originally came from the cowboy movies of the late 1950s, where the good guy wore a white hat and the bad guy wore a black hat, which in the era of monochrome (black-and-white) TV made them immediately recognizable.

You should conduct some form of security audit or assessment on a regular basis. Your organization might spend quite a bit on security, and it is important to measure how effective the efforts have been. In certain communities, audits can be regulated on a periodic basis with very specific standards that must be measured against. Even if your organization is not part of such a community, periodic assessments are important.

Many particulars can be evaluated during an assessment, but at a minimum, the security perimeter (with all of its components, including host-based security) should be examined, as well as the organization’s policies, procedures, and guidelines governing security. Employee training is another aspect that should be studied, since employees are the targets of social engineering and password-guessing attacks.

Security audits, assessments, and penetration tests are a big business, and a number of organizations can perform them for you. The costs of these vary widely depending on the extent of the tests you want, the background of the company you are contracting with, and the size of the organization to be tested.

A powerful mechanism for detecting security incidents is the use of security logs. For logs to be effective, however, they must be monitored. Monitoring event logs can reveal security-relevant activity, but only for events that were logged in the first place, so decisions about which items to log must be made in advance. Logging too many items uses a lot of space and increases the workload for personnel assigned to read those logs. The same is true for security, access, audit, and application-specific logs. The bottom line is that, although logs are valuable, preparation is needed to determine the correct items to log and the mechanisms by which logs are reviewed. Security information and event management (SIEM) software can assist in log file analysis.

One of the key management principles involves the measurement of a process. When referring to security, until it is measured, one should take answers with a grain of salt. Logging information is only good if you examine the logs and analyze them. Security controls work, but auditing their use provides assurance of their protection.

Performing Routine Audits

As part of any good security program, administrators must perform periodic audits to ensure things are “as they should be” with regard to users, systems, policies, and procedures. Installing and configuring security mechanisms is important, but they must be reviewed on a regularly scheduled basis to ensure they are effective, up to date, and serving their intended function. Here are some examples of items that should be audited on a regular basis; this is by no means a complete list:

User access: Administrators should review which users are accessing the systems, when they are doing so, what resources they are using, and so on. Administrators should look closely for users accessing resources improperly or accessing legitimate resources at unusual times.

User rights: When a user changes jobs or responsibilities, they will likely need to be assigned different access permissions; they may gain access to new resources and lose access to others. To ensure that users have access only to the resources and capabilities they need for their current positions, all user rights should be audited periodically.

Storage: Many organizations have policies governing what can be stored on “company” resources and how much space can be used by a given user or group. Periodic audits help to ensure that no undesirable or illegal materials exist on organizational resources.

Retention: In some organizations, how long a particular document or record is stored can be as important as what is being stored. A records retention policy defines what is stored, how it is stored, how long it is stored, and how it is disposed of when the time comes. Periodic audits help to ensure that records or documents are removed when they are no longer needed.

Firewall rules: Periodic audits of firewall rules are important to ensure the firewall is filtering traffic as desired and to help ensure that “temporary” rules do not end up as permanent additions to the ruleset.
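The user rights audit can be sketched as a comparison between what each user currently holds and what their current role should grant. The role names, entitlement names, and the `ROLE_BASELINE` table below are hypothetical; in practice this data would come from a directory service or identity management system.

```python
# Hypothetical role baseline: the entitlements each job role should have.
ROLE_BASELINE = {
    "accounts_payable": {"erp_read", "erp_invoice_entry"},
    "help_desk": {"ticketing", "password_reset"},
}

def audit_user_rights(users):
    """Return, per user, entitlements not justified by the user's current role."""
    findings = {}
    for name, record in users.items():
        allowed = ROLE_BASELINE.get(record["role"], set())  # unknown role: nothing allowed
        excess = set(record["entitlements"]) - allowed
        if excess:
            findings[name] = sorted(excess)
    return findings

users = {
    # bob moved from accounts payable to the help desk but kept his old ERP access
    "bob": {"role": "help_desk",
            "entitlements": ["ticketing", "password_reset", "erp_invoice_entry"]},
    "carol": {"role": "accounts_payable",
              "entitlements": ["erp_read", "erp_invoice_entry"]},
}
print(audit_user_rights(users))  # {'bob': ['erp_invoice_entry']}
```

Anything left over after subtracting the role baseline, such as Bob's invoice-entry access after his move, is a candidate for removal.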

Vulnerabilities

Vulnerabilities are weaknesses in systems that can be exploited by attackers. Tools are the pathway to discovering the vulnerabilities in your systems. Finding them and patching them before an adversary finds them is important. This section lists some of the common vulnerabilities that attackers try to exploit.

Cloud-based vs. On-premises Vulnerabilities

Cloud computing has been described by pundits as computing on someone else’s computer, and to a degree there is truth in that statement. Because vulnerabilities exist in all systems, a system will always have potential vulnerabilities regardless of whether it is on premises or cloud based. With on-premises systems, the enterprise has unfettered access to the infrastructure elements, so discovering and remediating vulnerabilities is a problem defined by scope and resources. In the cloud, economies of scale and standardized environments give cloud providers an advantage on the scope and resource side of the equation. What the enterprise lacks in cloud vulnerability management is visibility into the infrastructure elements themselves, because these are under the purview of the cloud provider.

Data can be stored locally on premises or remotely in the cloud. It is important to remember that no matter where data is stored, there will always be potential vulnerabilities that exist.

Zero Day

Zero day is a term used to describe vulnerabilities that are newly discovered and not yet addressed by a patch. Most vulnerabilities exist in an unknown state until discovered by a researcher or developer. If a researcher or developer discovers a vulnerability but does not share the information, then this vulnerability can be exploited without the vendor being able to fix it, because for all practical purposes the issue is unknown to everyone except the person who found it. From the time of discovery until a fix or patch is made available, the vulnerability goes by the name “zero day,” indicating that it has not been addressed yet. The most frightening thing about a zero-day threat is the unknown factor: because the vulnerability itself is unknown, its capabilities and its effect on risk are unknown as well. Although there are no patches for zero-day vulnerabilities, you can use compensating controls to mitigate the risk.

Zero-day threats have become a common topic in the news and are a likely target for exam questions. Keep in mind that defenses exist, such as compensating controls, which are controls that mitigate the risk indirectly; for example, a compensating control may block the path to the vulnerability rather than directly address the vulnerability.

Weak Configurations

Most systems have significant configuration options that administrators can adjust to enable or disable functionality based on usage. When a system suffers from misconfiguration or weak configuration, it may not achieve all of the desired performance or security objectives. Configuring a database server to build a complete replica of all actions as a backup system can result in a system that is bogged down and not capable of proper responses when usage is high. Similarly, old options, such as support for legacy protocols, can lead to vulnerabilities. Misconfiguration can result from omissions as well, such as when the administrator does not change default credentials, which is equivalent to having no credentials at all, thus leaving the system vulnerable. This form of vulnerability provides a means for an attacker to gain entry or advance their level of privilege, and because this can happen on components with a wide span of control, such as routers and switches, in some cases an attacker can effectively gain total ownership of an enterprise.
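To illustrate, the following sketch checks a device record for two of the weaknesses described above: vendor-default credentials and enabled legacy options. The credential pairs, option names, and record layout are all assumptions made for the example.

```python
# Illustrative vendor-default credential pairs; a real audit would use a maintained
# list for the specific products deployed.
KNOWN_DEFAULTS = {("admin", "admin"), ("admin", "password"), ("root", "root")}
# Illustrative legacy options that should normally be disabled.
LEGACY_OPTIONS = {"telnet", "sslv3", "snmpv1"}

def weak_config_findings(device):
    """Return findings for default credentials and enabled legacy options."""
    findings = []
    # Assumes the auditor already knows the configured credentials (for example,
    # from a login test with known defaults); never store real passwords like this.
    if (device["username"], device["password"]) in KNOWN_DEFAULTS:
        findings.append("default credentials in use")
    for option in sorted(LEGACY_OPTIONS & set(device["enabled"])):
        findings.append(f"legacy option enabled: {option}")
    return findings

# Hypothetical device record.
switch = {"name": "core-sw1", "username": "admin", "password": "admin",
          "enabled": ["ssh", "telnet", "snmpv1"]}
print(weak_config_findings(switch))
# ['default credentials in use', 'legacy option enabled: snmpv1', 'legacy option enabled: telnet']
```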

Open Permissions

Permissions is the term used to describe the range of activities permitted on an object by an actor in a system. Having properly configured permissions is one of the defenses that can be employed in the enterprise. Managing permissions can be tedious, and as the size of the enterprise grows, the scale of permissions requires automation to manage. When permissions are not properly set, the condition of open permissions exists. The risk associated with an open permission is context dependent, as for some items, unauthorized access leads to little or no risk, whereas in other systems it can be catastrophic. The vulnerability of open permissions is equivalent to no access control for an item, and this needs to be monitored in accordance with the relative risk of the element in the enterprise.
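On POSIX systems, one concrete form of open permissions is a file or directory that any user can modify. A minimal sketch using only the Python standard library walks a directory tree and flags entries with the world-write bit set; it assumes Linux-style permission semantics and is not a substitute for a full permissions review.

```python
import os
import stat
import tempfile

def find_world_writable(root):
    """Return paths under root whose permission bits let any user write to them."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode   # lstat: do not follow symlinks
            except OSError:
                continue                        # vanished or unreadable; skip it
            if stat.S_ISLNK(mode):
                continue                        # symlink mode bits are not enforced on Linux
            if mode & stat.S_IWOTH:             # "other" (world) write bit is set
                hits.append(path)
    return sorted(hits)

# Demonstration on a throwaway directory (POSIX permission semantics assumed).
with tempfile.TemporaryDirectory() as d:
    for name, mode in (("open.txt", 0o666), ("safe.txt", 0o600)):
        path = os.path.join(d, name)
        with open(path, "w") as f:
            f.write("data")
        os.chmod(path, mode)
    found = [os.path.basename(p) for p in find_world_writable(d)]
print(found)  # ['open.txt']
```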

Unsecure Root Accounts

Unsecure root accounts are like leaving master keys to the enterprise outside on the curb. Root accounts have access to everything and the ability to do virtually any activity on a network. All root accounts should be monitored, and all accesses should be verified as correct. One method of protecting high-value accounts such as root accounts is through access control vaults, where credentials are checked out before use. This prevents unauthorized activity using these accounts.
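On Linux, any account with user ID 0 has root privileges regardless of its name, so an unexpected UID 0 entry is a classic sign of a backdoor. The sketch below parses text in `/etc/passwd` format and lists such accounts; the sample entries, including `backup2`, are made up for illustration.

```python
def uid_zero_accounts(passwd_text):
    """Return account names with UID 0 (root-equivalent) from /etc/passwd-format text."""
    accounts = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # passwd format: name:password:UID:GID:GECOS:home:shell
        fields = line.split(":")
        if len(fields) >= 3 and fields[2] == "0":
            accounts.append(fields[0])
    return accounts

sample = """root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
backup2:x:0:0::/home/backup2:/bin/bash
alice:x:1000:1000:Alice:/home/alice:/bin/bash"""
print(uid_zero_accounts(sample))  # ['root', 'backup2']
```

On a live host, the input could be read with `open("/etc/passwd").read()`; any root-equivalent account that is not expected warrants investigation.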

Strong configurations include secure root (Linux) and Administrator (Windows) accounts. Without securing these accounts, anything they are connected to, including processes and services, is exposed to vulnerabilities.

Errors

Errors are conditions where something has gone wrong. Every system will experience errors, and the key to managing them is establishing error trapping and responses. How a system handles errors is critical, because unhandled errors are eventually handled at some level, and the farther up through a system an error travels, the less likely it is to be handled correctly. One of the biggest weaknesses exploited by attackers is improper input validation. Whether the target is a program input, an API, or any other interface, inserting bad information that causes an error and forces a program into an abnormal operating state can result in an exploitable vulnerability. Trapping and handling errors can reduce the possibility of an error becoming exploitable.
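A minimal sketch of validating input and trapping errors at the boundary: untrusted input is checked against what the program expects, and any failure is logged in detail while the caller receives only a generic response, so the error neither propagates upward unhandled nor leaks internal details. The quantity field, the `MAX_QUANTITY` limit, and the response shape are hypothetical.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("orders")

MAX_QUANTITY = 100  # hypothetical business limit

def parse_quantity(raw):
    """Validate untrusted input; raise ValueError for anything unexpected."""
    # isascii() plus isdigit() rejects signs, spaces, decimals, empty strings,
    # and non-ASCII digit characters such as superscripts.
    if not (raw.isascii() and raw.isdigit()):
        raise ValueError("quantity must be a whole number")
    value = int(raw)
    if not 1 <= value <= MAX_QUANTITY:
        raise ValueError("quantity out of range")
    return value

def handle_request(raw_quantity):
    """Trap validation errors here: log the detail, return a generic response."""
    try:
        qty = parse_quantity(raw_quantity)
    except ValueError as exc:
        log.warning("rejected quantity %r: %s", raw_quantity, exc)
        return {"status": 400, "error": "invalid request"}
    return {"status": 200, "quantity": qty}

print(handle_request("5"))                      # {'status': 200, 'quantity': 5}
print(handle_request("-1; DROP TABLE orders"))  # {'status': 400, 'error': 'invalid request'}
```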

Errors should be trapped by the program and appropriate log files generated. For example, web server logs include common error logs, customized logs, and W3C logs. W3C logs are web server logs that focus on recording specific web-related events. The Windows System log records events from operating system components, including error messages. Windows can be configured to record the success and failure of login attempts and other audited events in the Security log. The Windows Application log records events related to local system applications.
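In the W3C extended log format, a `#Fields:` directive names the columns that follow, so a parser can adapt to whatever fields a server was configured to record. The sketch below uses that directive to count 4xx and 5xx responses per URI; the sample lines and chosen fields are illustrative, and real logs often record many more columns.

```python
from collections import Counter

def error_counts_w3c(lines):
    """Count 4xx and 5xx responses per (URI, status) in a W3C extended-format log."""
    fields = []
    counts = Counter()
    for line in lines:
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()   # column names for later lines
            continue
        if line.startswith("#") or not line.strip():
            continue  # other directives (#Software, #Date, ...) and blank lines
        record = dict(zip(fields, line.split()))
        status = record.get("sc-status", "")
        if status[:1] in ("4", "5"):
            counts[(record.get("cs-uri-stem"), status)] += 1
    return counts

# Illustrative log excerpt.
sample = [
    "#Software: Microsoft Internet Information Services 10.0",
    "#Fields: date time c-ip cs-method cs-uri-stem sc-status",
    "2024-01-15 10:00:01 198.51.100.4 GET /index.html 200",
    "2024-01-15 10:00:02 198.51.100.4 GET /admin 403",
    "2024-01-15 10:00:03 203.0.113.9 POST /login 500",
    "2024-01-15 10:00:04 203.0.113.9 GET /admin 403",
]
counts = error_counts_w3c(sample)
print(dict(counts))
```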

Weak Encryption

Cryptographic errors come from several common causes. One typical mistake is choosing to develop your own cryptographic algorithm. Development of a secure cryptographic algorithm is far from an easy task, and even when it’s attempted by experts, weaknesses can be discovered that make the algorithm unusable. Cryptographic algorithms become trusted only after years of scrutiny and repelling attacks, so any new algorithms would take years to join the trusted set. If you instead decide to rely on secret algorithms, be warned that secret or proprietary algorithms have never provided the desired level of protection. A similar mistake to attempting to develop your own cryptographic algorithm is to attempt to write your own implementation of a known cryptographic algorithm. Errors in coding implementations are common and lead to weak implementations of secure algorithms that are vulnerable to bypass. Do not fall prey to creating a weak implementation; instead, use a proven, vetted cryptographic library.
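As an example of relying on vetted code rather than a homemade scheme, the sketch below stores and checks passwords using primitives from Python's standard library: PBKDF2 via `hashlib.pbkdf2_hmac`, random salts from `secrets`, and a constant-time comparison from `hmac.compare_digest`. The iteration count is an illustrative work factor, not a recommendation; check current guidance before choosing one.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

ITERATIONS = 600_000  # illustrative work factor; tune to current guidance and hardware

def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Derive a key from the password with a fresh random salt; store both."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest

def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute with the stored salt and compare in constant time."""
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(digest, expected)

salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```

Nothing here is new cryptography: every primitive comes from a maintained library, and the code only wires them together.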

The second major cause of cryptographic weakness, or weak encryption, is the use of deprecated or weak cryptographic algorithms. Weak cipher suites are those that were once considered secure but no longer are. As ever-faster hardware has enabled attackers to defeat some cryptographic methods, the older, weaker methods have been replaced by newer, stronger ones, and failing to adopt the newer methods leaves a weakness. A common example is SSL: all versions of SSL are now deprecated and should not be used. Systems should be moved to TLS-based solutions, and to current versions of TLS, since TLS 1.0 and 1.1 have also been deprecated.
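For Python clients, a sketch of refusing deprecated protocol versions: `ssl.create_default_context()` enables certificate and hostname verification, and setting `minimum_version` to TLS 1.2 makes the context reject anything older during the handshake. Setting the floor explicitly documents the intent regardless of the interpreter's defaults; the function name is illustrative.

```python
import ssl

def make_client_context() -> ssl.SSLContext:
    """Client TLS context that refuses SSL and TLS versions older than 1.2."""
    ctx = ssl.create_default_context()            # verifies certificates and hostnames
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # handshakes below TLS 1.2 fail
    return ctx

ctx = make_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The context would then be passed to whatever makes the connection, for example `ctx.wrap_socket(sock, server_hostname=...)`.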

The impact of cryptographic failures is fairly easy to understand: whatever protection was provided is lost, even if it is essential for the security of the system.

Unsecure Protocols

Another important weak configuration to guard against in the enterprise is unsecure protocols. One of the most common protocols used, HTTP, is by its own nature unsecure. Adding TLS to HTTP, using HTTPS, is a simple configuration change that should be enforced everywhere. But what about all the other protocol stacks that come prebuilt in OSs and are just waiting to become a vulnerability, such as FTP, Telnet, and SNMP? Improperly secured communication protocols and services and unsecure credentials increase the risk of unauthorized access to the enterprise. Network infrastructure devices can include routers, switches, access points, gateways, proxies, and firewalls. When infrastructure systems are deployed, these devices remain online for years, and many of them are rarely rebooted, patched, or upgraded.
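The sketch below flags listening ports that conventionally carry the cleartext protocols named above and suggests a common secure replacement. Port numbers are only defaults, so a service on a nonstandard port would be missed, and a port number alone cannot reveal which SNMP version is in use; the port table and the sample inventory are illustrative.

```python
# Default ports for the cleartext protocols discussed above, mapped to common
# secure replacements. Services can run on other ports, so this is a first pass only.
CLEARTEXT = {
    21: ("FTP", "SFTP or FTPS"),
    23: ("Telnet", "SSH"),
    80: ("HTTP", "HTTPS"),
    161: ("SNMP (v1/v2c are cleartext)", "SNMPv3 with authentication and encryption"),
}

def flag_unsecure(listening_ports):
    """Return a finding for each listening port that normally carries a cleartext protocol."""
    return [
        f"port {port}: {CLEARTEXT[port][0]} -> replace with {CLEARTEXT[port][1]}"
        for port in sorted(set(listening_ports))
        if port in CLEARTEXT
    ]

# Hypothetical inventory of a network switch's listening ports.
findings = flag_unsecure([22, 23, 80, 161, 443])
for finding in findings:
    print(finding)
```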

Default Settings

Default settings can be a security risk unless they were created with security in mind. Older operating systems used to have everything enabled by default. Old versions of some systems had hidden administrator accounts, and Microsoft’s SQL Server used to have a blank system administrator password by default. Today, most vendors have cleaned these issues up, setting default values with security in mind. But when you instantiate something in your enterprise, it is then yours. Therefore, you should make the settings what you need and only what you need, and you should create these settings as the default configuration baseline. This way, the settings and their security implications are understood. Not taking these steps leaves too many unknowns within an enterprise.
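Treating the chosen settings as a baseline makes deviations detectable. In this hypothetical sketch, `BASELINE` holds the enterprise's decided values, and `config_drift` reports settings that differ from it as well as settings the baseline does not cover at all, which are exactly the unknowns described above. The setting names and values are made up for illustration.

```python
# Hypothetical configuration baseline: the settings the enterprise has decided on.
BASELINE = {
    "guest_account": "disabled",
    "remote_desktop": "disabled",
    "password_min_length": 14,
    "smbv1": "disabled",
}

def config_drift(current):
    """Compare a system's settings to the baseline; report differences and unknowns."""
    drift = {}
    for key, expected in BASELINE.items():
        actual = current.get(key, "<missing>")
        if actual != expected:
            drift[key] = (expected, actual)  # (baseline value, observed value)
    # Settings present on the system but absent from the baseline are unknowns.
    unknown = sorted(set(current) - set(BASELINE))
    return drift, unknown

host = {"guest_account": "enabled", "remote_desktop": "disabled",
        "password_min_length": 8, "smbv1": "disabled", "telnet_client": "installed"}
drift, unknown = config_drift(host)
print(drift)    # {'guest_account': ('disabled', 'enabled'), 'password_min_length': (14, 8)}
print(unknown)  # ['telnet_client']
```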

Open Ports and Services

For a service to respond to a request, its port must be open for communication. Having open ports is like having doors in a building. Even a bank vault has a door. Having excess open services only leads to pathways into your systems that must be protected. Disabling unnecessary services, closing ports, and using firewalls to prevent communications except on approved channels creates a barrier to entry by unauthorized users. Many services run with elevated privileges by default, and malware takes advantage of this. Security professionals should make every effort to audit services and disable any that aren’t required.
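Auditing which ports actually accept connections is the starting point for closing unneeded ones. A minimal TCP check using Python's `socket` module follows; it only tests whether a connection succeeds and does not identify the service behind the port. Scan only systems you are authorized to test. The demonstration opens a temporary listener on the local machine so that no other host is contacted; the function name is illustrative.

```python
import socket

def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds (something is listening)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, timed out, or host unreachable
        return False

# Demonstration against a temporary local listener.
with socket.socket() as listener:
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    demo_port = listener.getsockname()[1]
    demo_open = is_port_open("127.0.0.1", demo_port)
print(demo_port, "open" if demo_open else "closed")
```

Dedicated scanners such as Nmap add UDP scanning, service detection, and support for large address ranges; this sketch only shows the underlying idea.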

Improper or Weak Patch Management