PyTorch has been evolving into a larger framework for writing dynamic models. Because of that, it is very popular among data scientists and data engineers who deploy large-scale deep learning models. This book gives practitioners a structure for the activities involved in working on a practical data science problem. As is evident from the applications that we use in our day-to-day lives, layers of intelligence are embedded in product features. Those features are enabled to provide a better experience and better services to the user.

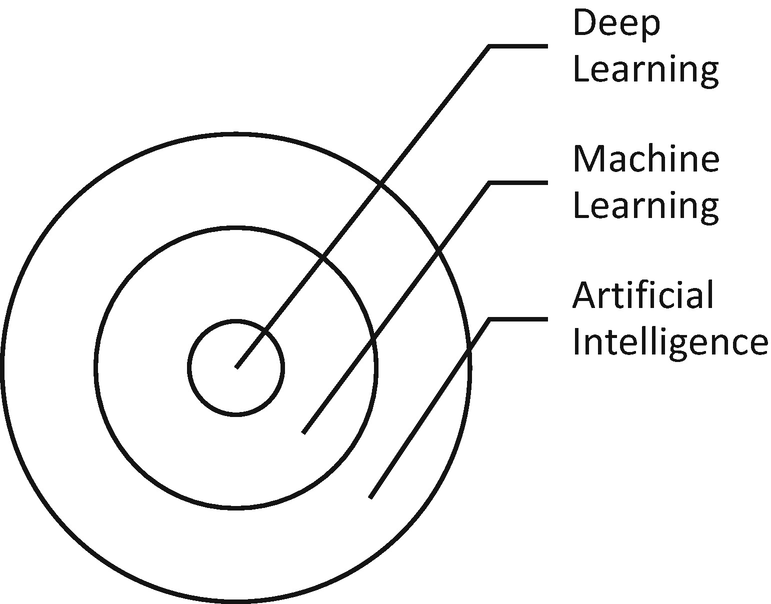

The world is moving toward artificial intelligence. It has two main components: deep learning and machine learning. Without deep learning and machine learning, it is impossible to envision artificial intelligence.

PyTorch is an optimized, high-performance tensor library for deep learning computations on GPUs (graphics processing units) and CPUs (central processing units). The main purpose of PyTorch is to enhance the performance of algorithms in large-scale computing environments. PyTorch is a library for scientific computing based on Python and the Torch tool, provided by Facebook’s Artificial Intelligence Research group.

NumPy operations run on the CPU and cannot use a GPU, so they are not efficient enough to process heavy computations. Static deep learning libraries are a bottleneck for both flexibility and speed. From a practitioner’s point of view, PyTorch tensors are very similar to the N-dimensional arrays of the Python-based NumPy library. The PyTorch library provides bridge options for converting a NumPy array to a tensor, and vice versa, in order to make the library flexible across different computing environments.
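The bridge between NumPy arrays and tensors can be sketched as follows. This is a minimal illustration, not a listing from the book; the array values are arbitrary, and it assumes that both `numpy` and `torch` are installed.

```python
import numpy as np
import torch

# A small NumPy array (values are arbitrary, for illustration only)
arr = np.array([[1.0, 2.0], [3.0, 4.0]])

# NumPy -> tensor: the tensor shares memory with the array
t = torch.from_numpy(arr)

# Tensor -> NumPy: for CPU tensors, no copy is made either
back = t.numpy()

# Because the memory is shared, an in-place change shows up on both sides
t.mul_(2)
print(arr)
print(back)
```

Note that the conversion keeps the NumPy data type, so a float64 array becomes a float64 tensor.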

Autograd. This module provides functionality for automatic differentiation of tensors. A recorder class in the program remembers the operations and replays them, when triggered by a call to backward, to compute the gradients. This is immensely helpful in the implementation of neural network models.

Optim. This module provides optimization techniques that can be used to minimize the error function for a specific model. Currently, PyTorch supports various advanced optimization methods, including Adam, stochastic gradient descent (SGD), and more.

NN. NN stands for neural network model. Manually defining the functions, layers, and further computations using only tensor operations is difficult to remember and execute. We need functions that automate the layers, activation functions, loss functions, and optimization functions, and that provide user-defined layers, so that manual intervention can be reduced. The NN module has a set of built-in functions that automate the manual process of running tensor operations.
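To see how the three modules fit together, consider the following minimal sketch. The toy data, the layer sizes, and the learning rate are assumptions chosen only for illustration; the sketch fits a single linear layer with SGD and lets autograd compute the gradients.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)

# Toy data: 8 samples, 3 features, 1 target (random, for illustration only)
inputs = torch.randn(8, 3)
targets = torch.randn(8, 1)

model = nn.Linear(3, 1)                             # NN: a built-in layer
loss_fn = nn.MSELoss()                              # NN: a built-in loss function
optimizer = optim.SGD(model.parameters(), lr=0.1)   # Optim: SGD optimizer

losses = []
for _ in range(20):
    optimizer.zero_grad()                   # clear gradients from the last step
    loss = loss_fn(model(inputs), targets)
    loss.backward()                         # Autograd: compute the gradients
    optimizer.step()                        # Optim: update the parameters
    losses.append(loss.item())

print(losses[0], losses[-1])
```

The error at the last step should be lower than at the first step, because each update moves the parameters against the gradient.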

Industries in which artificial intelligence is applied include banking, financial services, insurance, health care, manufacturing, retail, clinical trials, and drug testing. Artificial intelligence tasks range from classifying and recognizing objects to detecting fraud, and so forth. Every learning system requires three things: input data, processing, and an output layer. Figure 1-1 explains the relationship between these three topics. If the performance of any learning system improves over time by learning from new examples or data, it is called a machine learning system. When a problem becomes too complex for a machine learning system to reflect reality, a deep learning system is required.

In a deep learning system, more than one layer of a learning algorithm is deployed. In machine learning, we think of supervised, unsupervised, semisupervised, and reinforcement learning systems. A supervised machine-learning algorithm is one where the data is labeled with classes or tagged with outcomes. We show the machine the input data with corresponding tags or labels. The machine identifies the relationship with a function. Please note that this function connects the input to the labels or tags.

In unsupervised learning, we show the machine only the input data and ask the machine to group the inputs based on association, similarities or dissimilarities, and so forth.

In semisupervised learning, we show the machine input features together with a partial set of labels or tags, and we ask the machine to predict the untagged outcomes or labels.

In reinforcement learning, we introduce a reward and penalty mechanism, where every correct action is rewarded and every incorrect action is penalized.

In all of these examples of machine learning algorithms, we assume that the dataset is small, because getting massive amounts of tagged data is a challenge, and it takes a lot of time for machine learning algorithms to process large-scale matrix computations. Since machine learning algorithms are not scalable for massive datasets, we need deep learning algorithms.

Relationships among ML, DL, and AI

After preprocessing and feature creation, you can observe hundreds of thousands of features that need to be computed to produce output. If we trained a supervised machine learning model on them, it would take months to run and to produce output. To achieve scalability in this task, we need deep learning algorithms, such as a recurrent neural network. This is how artificial intelligence is connected to deep learning and machine learning.

There are various challenges in deploying deep learning models that require large volumes of labeled data, faster computing machines, and intelligent algorithms. The success of any deep learning system requires good labeled data and better computing machines because the smart algorithms are already available.

Deep learning systems are commonly applied to tasks such as the following:

- Speech recognition
- Video analysis
- Anomaly detection from videos
- Natural language processing
- Machine translation
- Speech-to-text conversion

The development of NVIDIA GPU computing for processing large-scale data is another path-breaking innovation. Running programs in a GPU environment requires a different programming framework. Two major frameworks are very popular for implementing GPU computing: TensorFlow and PyTorch. In this book, we discuss PyTorch as a framework to implement data science algorithms and make inferences.

In TensorFlow, we have to define the tensors, initialize the session, and keep placeholders for the tensor objects; however, we do not have to do these operations in PyTorch.

In TensorFlow, let’s consider sentiment analysis as an example. Input sentences are tagged with positive or negative tags. If the input sentence’s length is not equal, then we set the maximum sentence length and add zero to make the length of other sentences equal, so that the recurrent neural network can function; however, this is a built-in functionality in PyTorch, so we do not have to define the length of the sentences.

In PyTorch, the debugging is much easier and simpler, but it is a difficult task in TensorFlow.

In terms of data visualization and model deployment, TensorFlow is definitely better; however, PyTorch is evolving, and we expect to see the same functionality in the future.

TensorFlow has definitely undergone many changes to reach a stable state. PyTorch is just entering the game, so it will take some time to realize the full potential of this tool.
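The first two points in this comparison can be illustrated with a short sketch. It is not taken from the book: the values are arbitrary, and the use of the `pad_sequence` helper from `torch.nn.utils.rnn` is our assumption about one way to equalize sentence lengths in PyTorch.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

# Tensors are evaluated immediately: no session or placeholder is needed
a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])
c = a * b + 1
print(c)

# Two "sentences" of unequal length, encoded as hypothetical token ids
sentences = [torch.tensor([1, 2, 3]), torch.tensor([4, 5])]

# pad_sequence appends zeros so that all sequences have the same length
padded = pad_sequence(sentences, batch_first=True)
print(padded)
```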

What Is PyTorch?

PyTorch is a machine learning and deep learning tool developed by Facebook’s artificial intelligence division to process large-scale image analysis, including object detection, segmentation, and classification. It is not limited to these tasks, however. It can be used with other frameworks to implement complex algorithms. It is written in Python and C++. To process large-scale computations in a GPU environment, code usually has to be adapted accordingly. PyTorch provides a great framework for writing functions that automatically run in a GPU environment.
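As a minimal sketch of running the same code on a GPU or a CPU, the following example selects a device at runtime. It assumes nothing about your hardware: if no CUDA-capable GPU is available, it falls back to the CPU.

```python
import torch

# Use the GPU when one is available; otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# The same tensor code runs on either device
x = torch.ones(2, 3, device=device)
y = (x * 2).sum()

print(device, y.item())
```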

PyTorch Installation

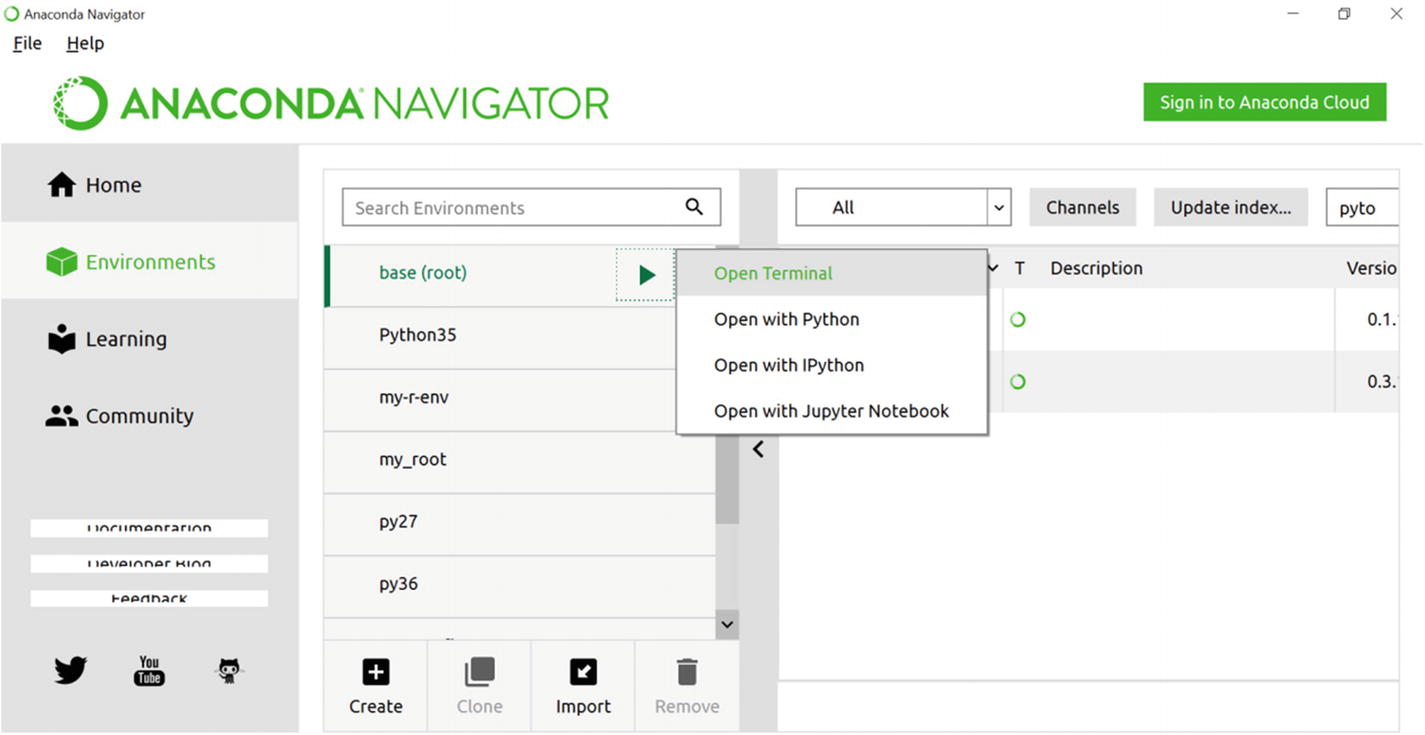

- 1.Open the Anaconda navigator and go to the environment page, as displayed in the screenshot shown in Figure 1-2.

Figure 1-2

Figure 1-2Relationships among ML, DL, and AI

- 2.

Open the terminal and terminal and type the following:

conda install -c peterjc123 pytorch - 3.

Launch Jupyter and open the IPython Notebook.

- 4.

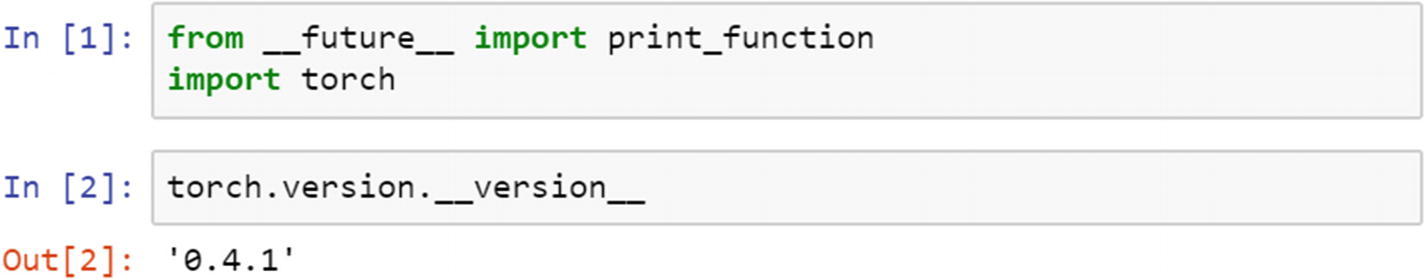

Type the following command to check whether the PyTorch is installed or not.

- 5.

Check the version of the PyTorch.
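The last two steps can be sketched as follows. If the import succeeds, PyTorch is installed; the version string that is printed depends on your installation.

```python
# Step 4: if this import succeeds, PyTorch is installed
import torch

# Step 5: check the installed version
version = torch.__version__
print(version)
```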

This installation process was done using a Microsoft Windows machine. The process may vary by operating system, so please use the following URLs for any issue regarding installation and errors.

There are two ways to install it: Conda (Anaconda) library management or the Pip3 package management framework. Also, installations for a local system (such as macOS, Windows, or Linux) and a cloud machine (such as Microsoft Azure, AWS, and GCP) are different. To set up according to your platform, please follow the official PyTorch installation documents at https://PyTorch.org/get-started/cloud-partners/ .

Torch has functionalities similar to NumPy with GPU support.

Autograd’s torch.autograd provides classes, methods, and functions for implementing automatic differentiation of arbitrary scalar-valued functions. It requires minimal changes to the existing code. You only need to declare the tensors for which gradients should be computed with the requires_grad=True keyword.

NN is a neural network library in PyTorch.

Optim provides optimization algorithms that are used for the minimization and maximization of functions.

Multiprocessing is a useful library for sharing tensor memory between multiple processes.

Utils has utility functions to load data; it also has other functions.
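To make the requires_grad=True keyword concrete, here is a minimal sketch. The function y = sum(x^2) and the input values are our own choices for illustration; the gradient of this function with respect to x is 2x.

```python
import torch

# Declare a tensor for which gradients should be computed
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# A scalar-valued function of x
y = (x ** 2).sum()

# backward() triggers automatic differentiation
y.backward()

# dy/dx = 2 * x
print(x.grad)
```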

Now we are ready to proceed with the chapter.

Recipe 1-1. Using Tensors

Problem

The data structure used in PyTorch is graph based and tensor based; therefore, it is important to understand how to define tensors and perform basic operations on them.

Solution

The solution to this problem is to practice with tensors and their operations, using many examples that cover various operations. Though it is assumed that the user is familiar with PyTorch and Python basics, a refresher on PyTorch is essential to create interest among new users.

How It Works

Let’s have a look at the following examples of tensors and tensor operation basics, including mathematical operations.

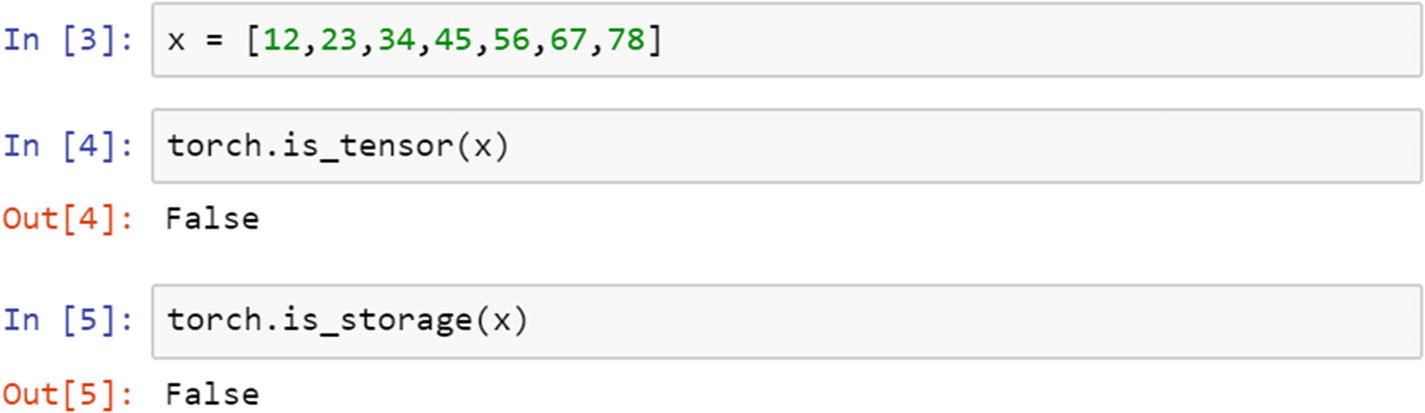

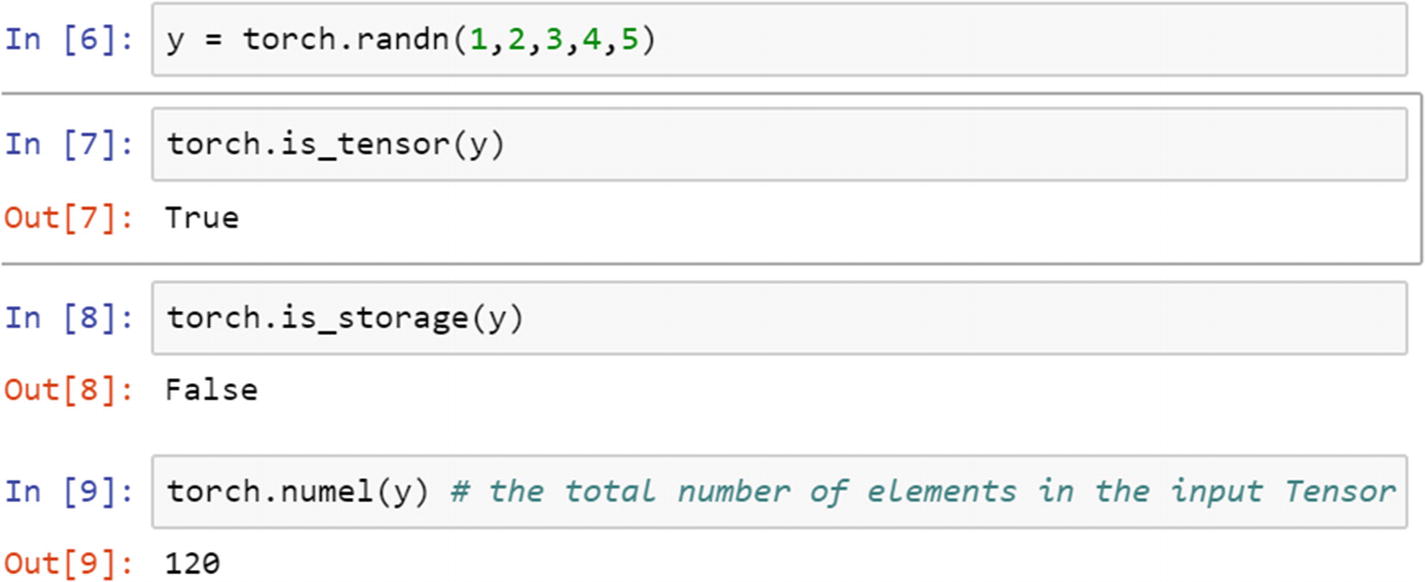

The x object is a list. We can check whether an object in Python is a tensor object by using the following syntax. Typically, the is_tensor function checks whether the object is a tensor, and the is_storage function checks whether the object is a PyTorch storage object.
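A minimal sketch of these two checks follows. The list values are arbitrary and serve only as an illustration.

```python
import torch

# A plain Python list (values are arbitrary)
x = [12, 23, 34, 45, 56, 67, 78]

# A list is neither a tensor nor a storage object
is_t = torch.is_tensor(x)
is_s = torch.is_storage(x)
print(is_t, is_s)
```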

Now, let’s create an object that contains random numbers from Torch, similar to NumPy library. We can check the tensor and storage type.

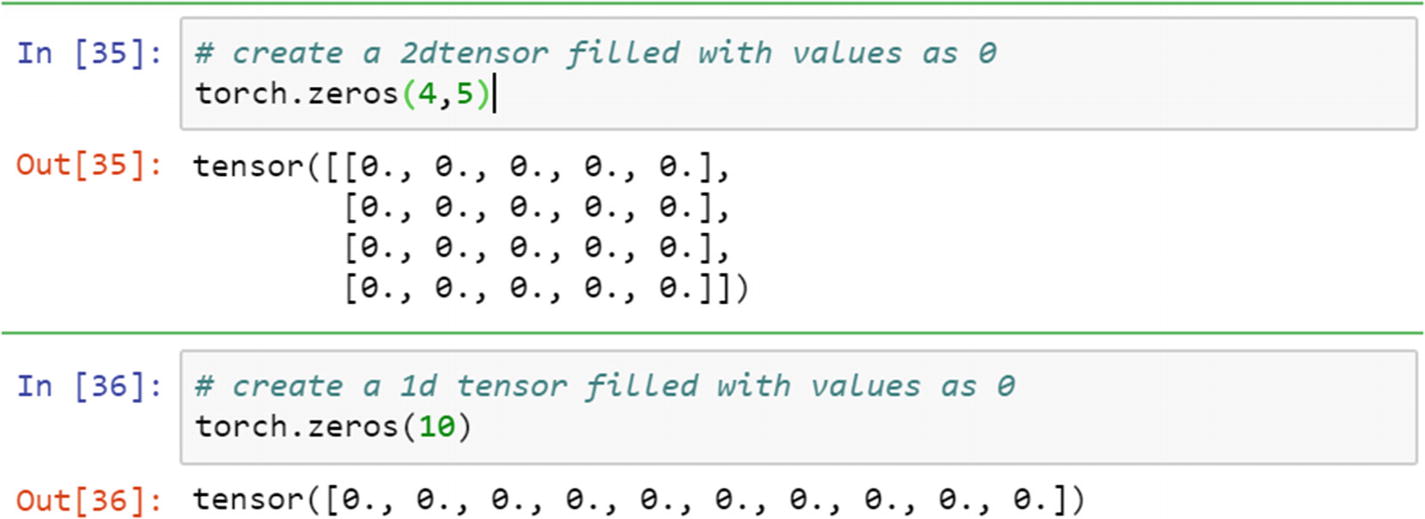

The y object is a tensor; however, it is not a storage object. To check the total number of elements in the input tensor object, the numel function can be used. The following script is another example of creating zero values in a 2D tensor and counting the elements in it.
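These checks can be sketched as follows. The tensor shapes are assumptions chosen for illustration.

```python
import torch

# A tensor of random numbers with shape (1, 2, 3, 4, 5)
y = torch.randn(1, 2, 3, 4, 5)

y_is_tensor = torch.is_tensor(y)    # True: y is a tensor
y_is_storage = torch.is_storage(y)  # False: y is not a storage object
y_count = torch.numel(y)            # 1 * 2 * 3 * 4 * 5 = 120 elements

# A 2D tensor filled with zeros
z = torch.zeros(4, 4)
z_count = torch.numel(z)            # 4 * 4 = 16 elements

print(y_is_tensor, y_is_storage, y_count, z_count)
```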

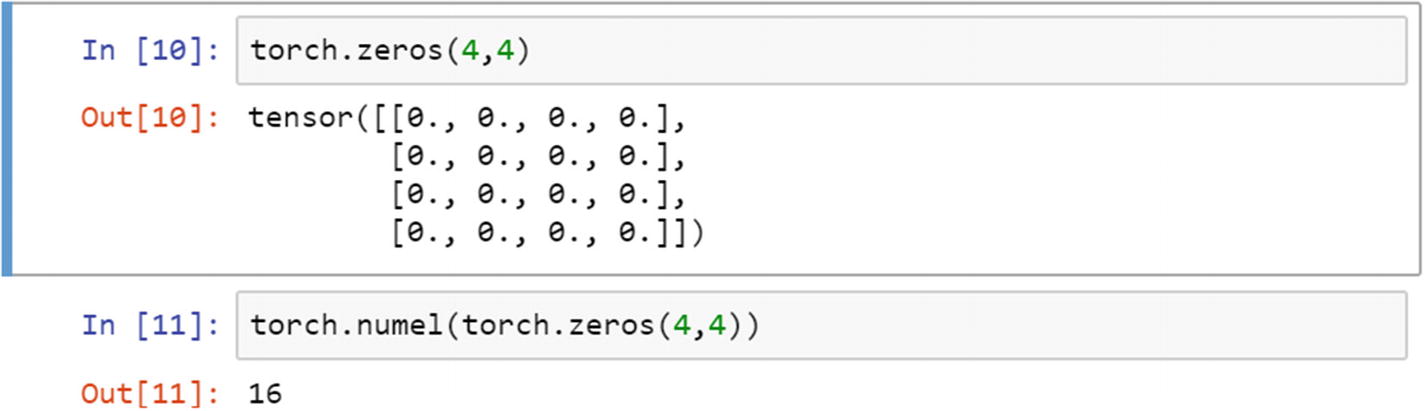

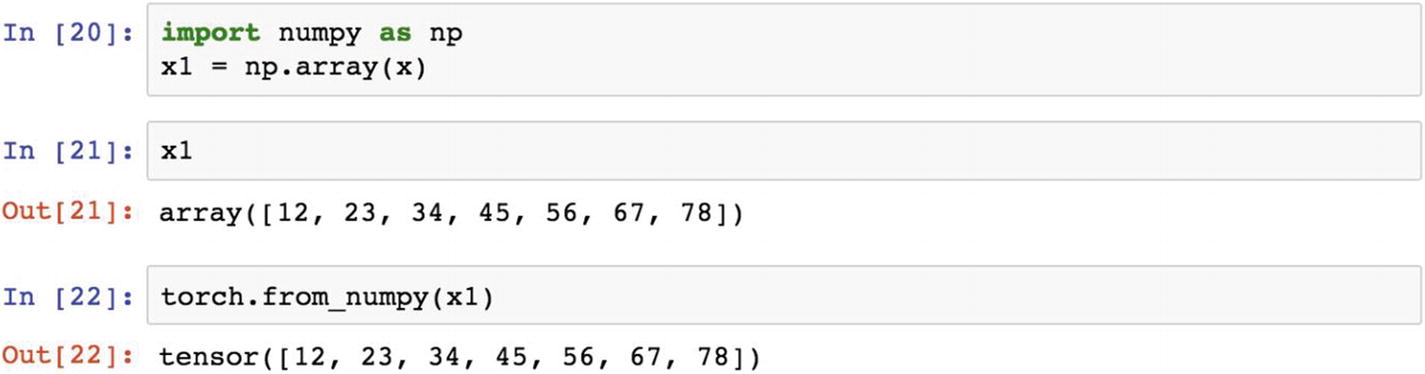

Like its NumPy counterpart, the eye function creates a matrix in which the diagonal elements are ones and the off-diagonal elements are zeros. The shape of the result can be controlled by passing the number of rows and columns. The following example shows how to provide the shape parameter.
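A minimal sketch of the eye function, with shapes chosen only for illustration:

```python
import torch

# A 3 x 3 matrix with ones on the diagonal
square = torch.eye(3)

# Passing rows and columns gives a rectangular result (3 rows, 4 columns)
rect = torch.eye(3, 4)

print(square)
print(rect)
```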

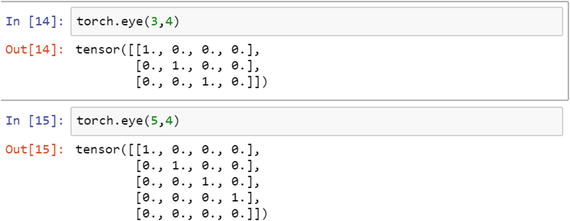

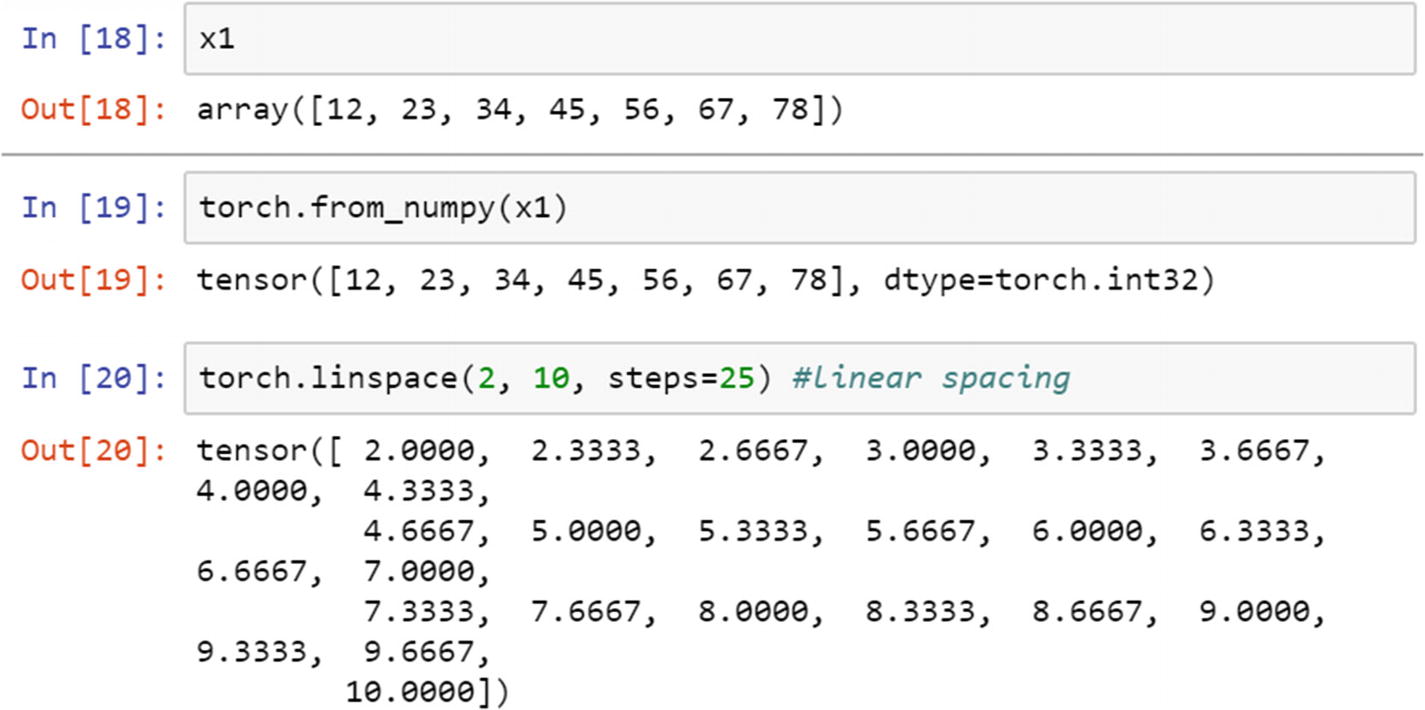

Linear space and points between the linear space can be created using tensor operations. Let’s use an example of creating 25 points in a linear space starting from value 2 and ending with 10. Torch can read from a NumPy array format.
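The 25-point example can be sketched with the linspace function:

```python
import torch

# 25 evenly spaced points, starting at 2 and ending at 10
points = torch.linspace(2, 10, steps=25)

print(points)
```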

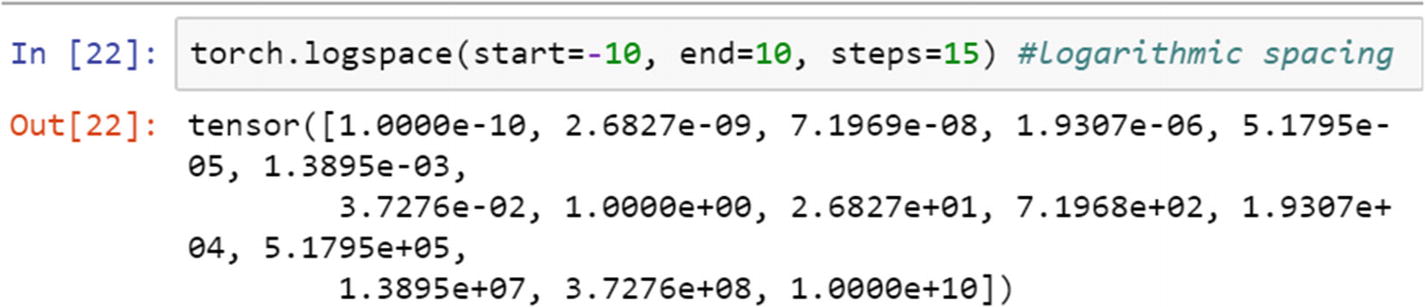

Like linear spacing, logarithmic spacing can be created.
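A minimal sketch using the logspace function follows. The start and end exponents here are our own choice for illustration; the points are powers of 10.

```python
import torch

# 4 points from 10^0 to 10^3, evenly spaced on a log scale
log_points = torch.logspace(start=0, end=3, steps=4)

print(log_points)
```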

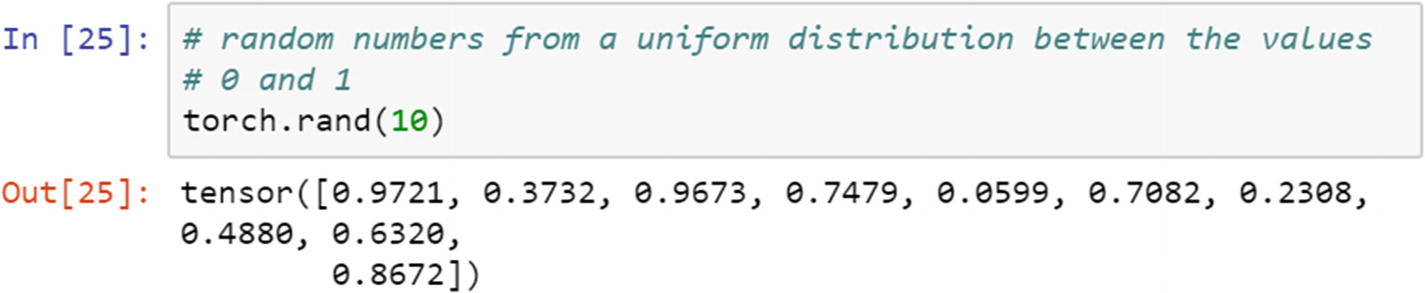

Random number generation is a common process in data science to generate or gather sample data points in a space to simulate structure in the data. Random numbers can be generated from a statistical distribution, from a range between any two values, or from a predefined distribution. As with NumPy functions, random numbers can be generated using the following example. A uniform distribution is one where each outcome has an equal probability of happening; hence, the event probabilities are constant.
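A minimal sketch of drawing from a uniform distribution follows. The seed value is arbitrary and is set only to make the run repeatable.

```python
import torch

torch.manual_seed(1234)  # arbitrary seed, for repeatability

# 10 random numbers from a uniform distribution on [0, 1)
u = torch.rand(10)

print(u)
```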

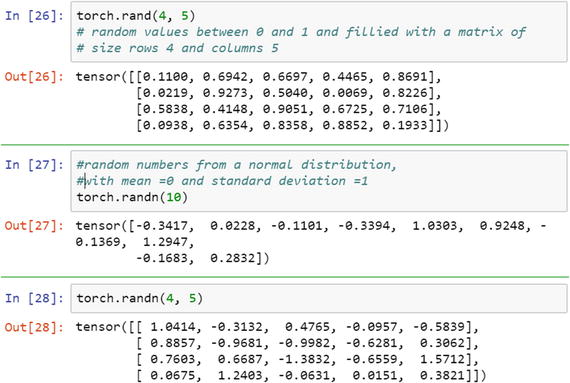

The following script shows how random numbers between two values, 0 and 1, are drawn. The resulting tensor can be reshaped to create a (4,5) matrix. Random numbers from a normal distribution with arithmetic mean 0 and standard deviation 1 can also be created, as follows.
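These steps can be sketched as follows. The sizes are chosen to match the (4,5) matrix mentioned above.

```python
import torch

# 20 uniform random numbers between 0 and 1, reshaped to a (4, 5) matrix
uniform_matrix = torch.rand(20).reshape(4, 5)

# Random numbers from a normal distribution with mean 0 and std 1
normal_vector = torch.randn(10)
normal_matrix = torch.randn(4, 5)

print(uniform_matrix)
print(normal_matrix)
```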

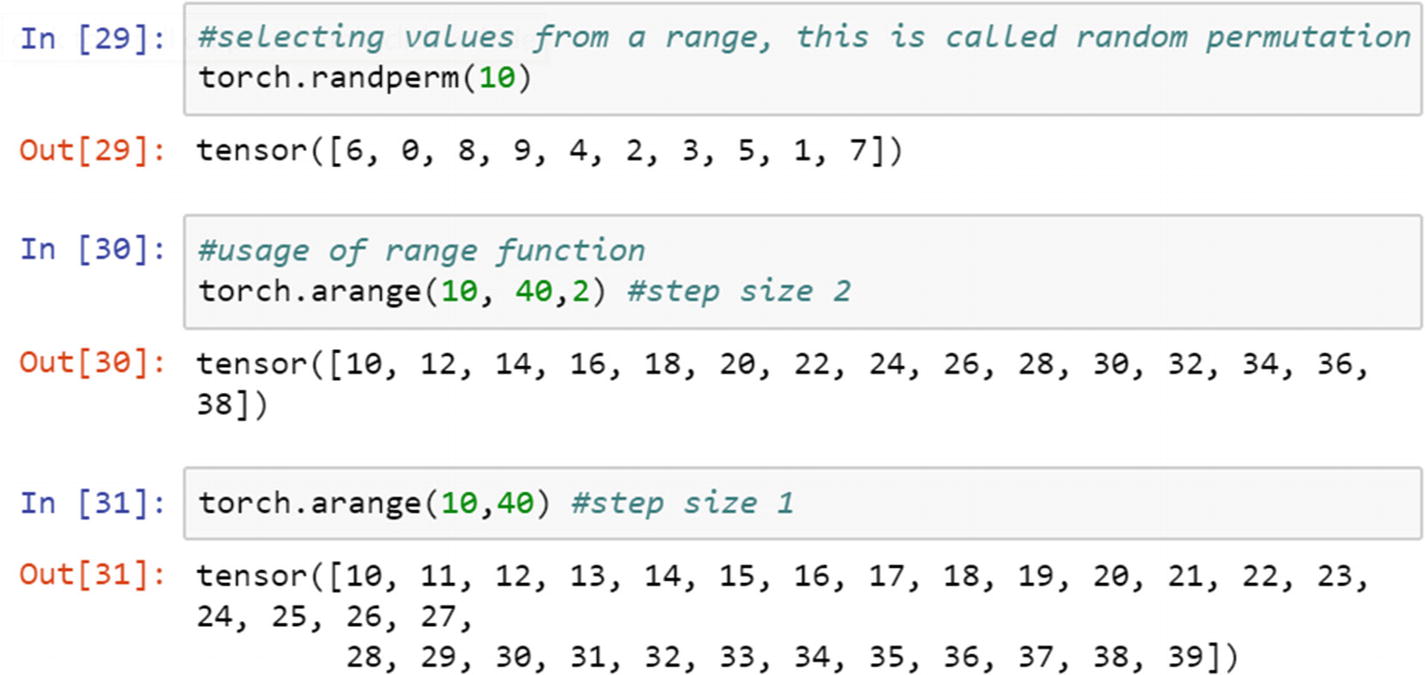

Selecting random values from a range of values by using random permutation requires defining the range first. This range can be created by using the arange function. When using the arange function, you can define the step size, which places all the values at an equal distance from each other. By default, the step size is 1.
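A minimal sketch of random permutation and the arange function follows; the range limits are arbitrary.

```python
import torch

# A random permutation of the integers 0 to 9
perm = torch.randperm(10)

# Values from 10 up to (but not including) 40, with a step size of 2
stepped = torch.arange(10, 40, 2)

# Without a step argument, the step size defaults to 1
default_step = torch.arange(10, 40)

print(perm)
print(stepped)
```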

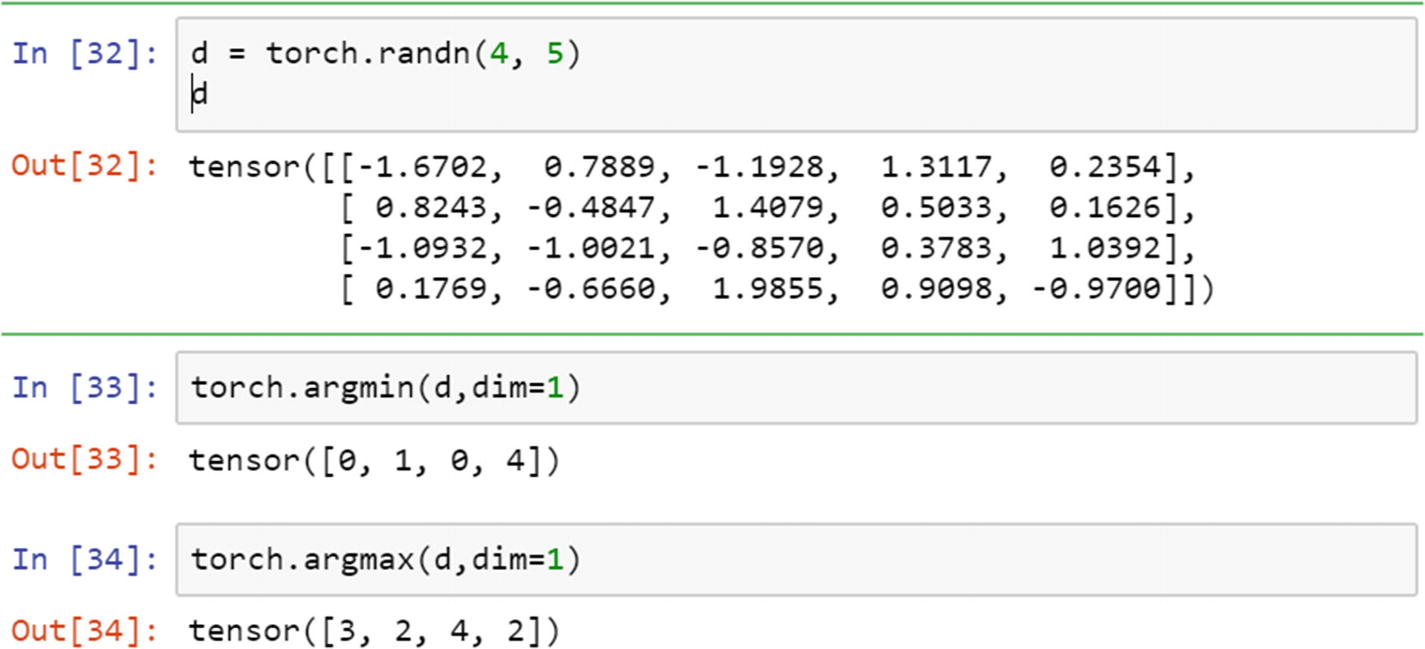

To find the positions (indices) of the minimum and maximum values in a 1D tensor, argmin and argmax can be used. If the input is a matrix, the dimension needs to be specified in order to search along rows or columns.

If it is either a row or column, it is a single dimension and is called a 1D tensor . If the input is a matrix, in which rows and columns are present, it is called a 2D tensor . If it is more than two-dimensional, it is called a multidimensional tensor .
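The following sketch applies argmin and argmax to a 1D and a 2D tensor. The values are arbitrary.

```python
import torch

# 1D tensor: argmin and argmax return the position of the extreme value
v = torch.tensor([3.0, -1.0, 7.0, 2.0])
v_min_idx = torch.argmin(v).item()  # position of -1.0
v_max_idx = torch.argmax(v).item()  # position of 7.0

# 2D tensor: the dim argument selects the direction of the search
m = torch.tensor([[1.0, 9.0, 4.0],
                  [8.0, 2.0, 6.0]])
row_min_idx = torch.argmin(m, dim=1)  # one index per row
col_max_idx = torch.argmax(m, dim=0)  # one index per column

print(v_min_idx, v_max_idx, row_min_idx, col_max_idx)
```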

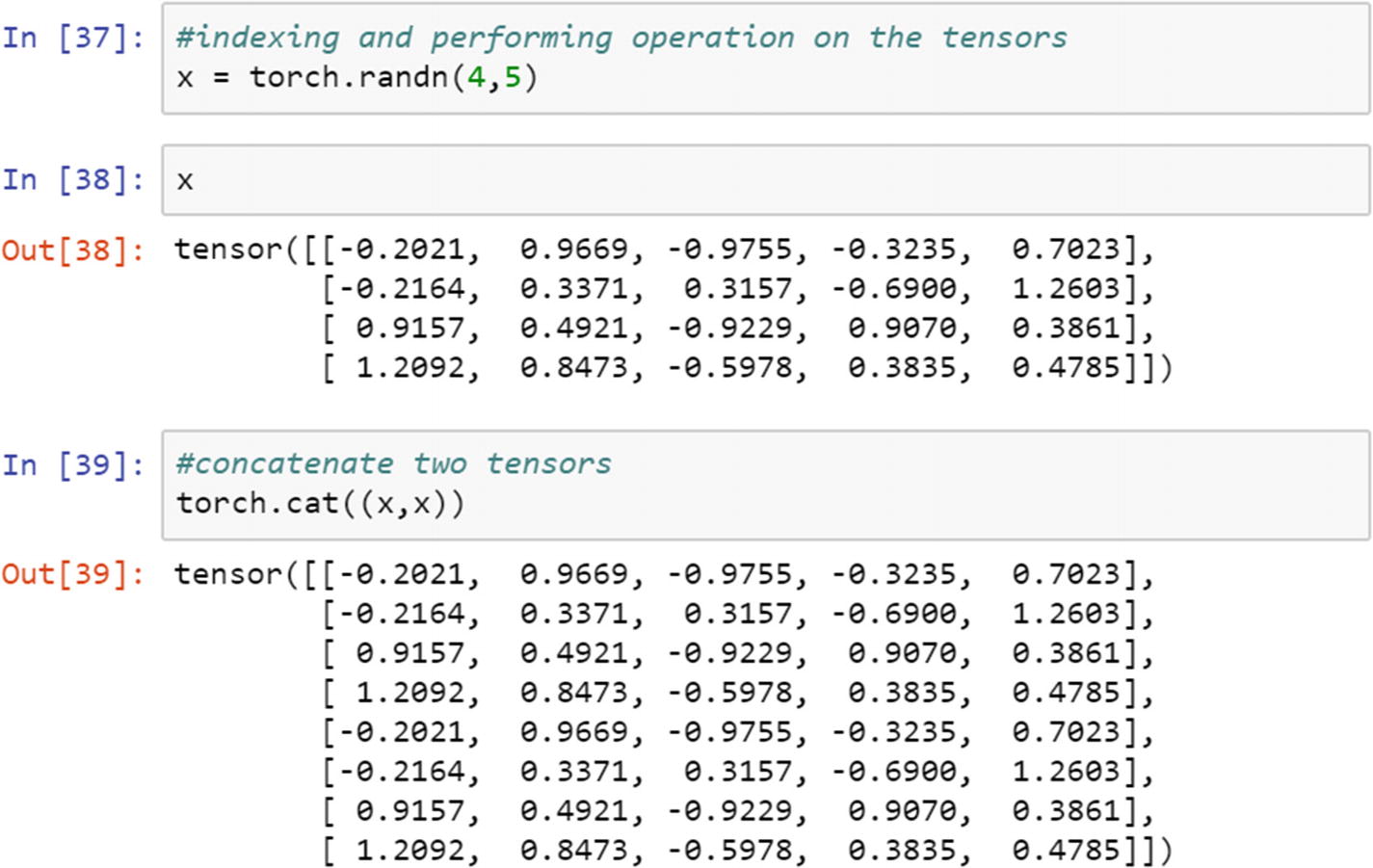

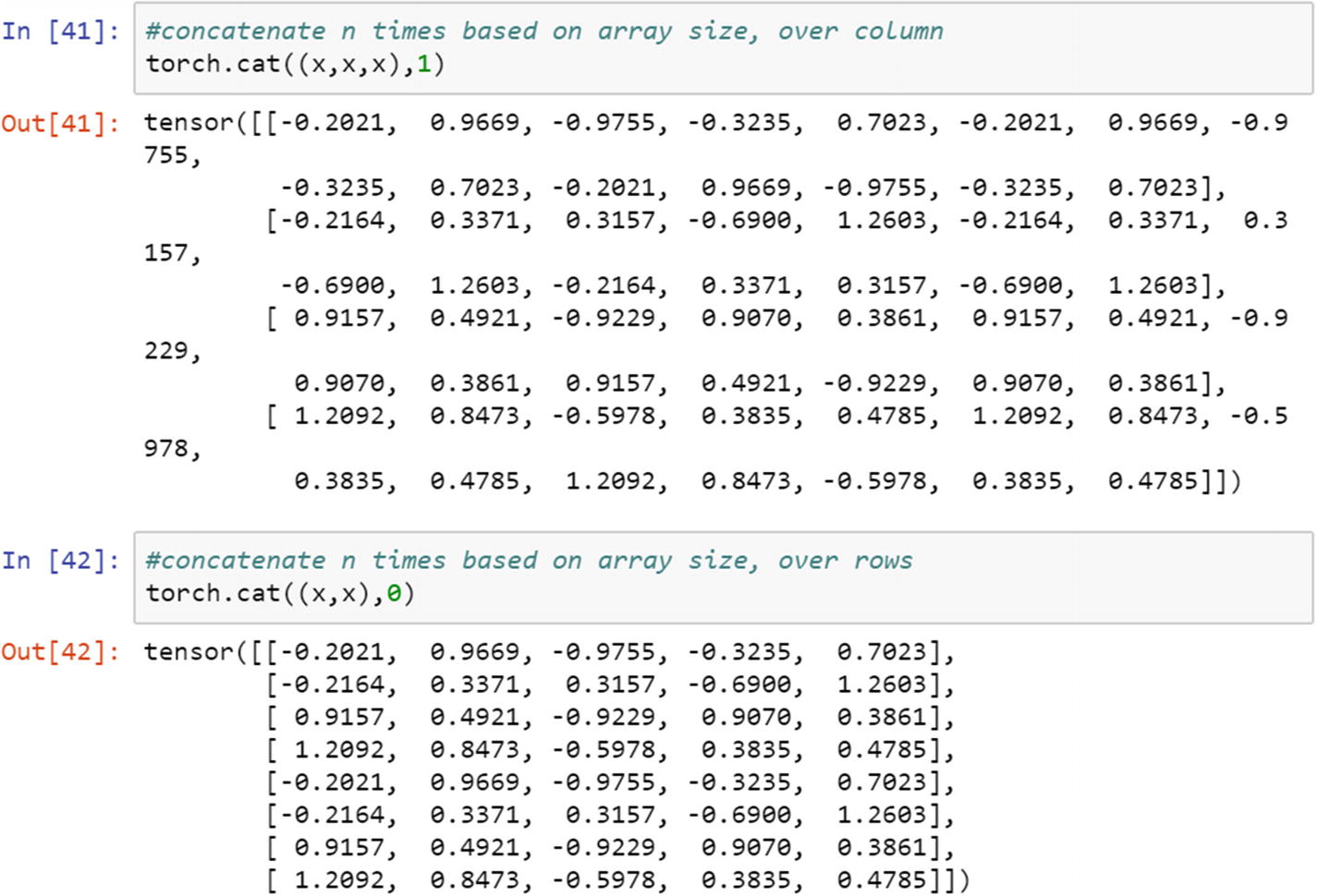

Now, let’s create a sample 2D tensor and perform indexing and concatenation by using the cat operation on the tensors.

The sample x tensor can be used in 3D as well. Again, there are two different options to create three-dimensional tensors; the third dimension can be extended over rows or columns.
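Indexing and concatenation on a 2D tensor can be sketched as follows. The (4,5) shape is an assumption chosen for illustration.

```python
import torch

x = torch.randn(4, 5)

# Indexing: the first row, and the second column
first_row = x[0]
second_col = x[:, 1]

# Concatenation along rows (dim 0) and along columns (dim 1)
by_rows = torch.cat((x, x), dim=0)
by_cols = torch.cat((x, x), dim=1)

print(by_rows.shape, by_cols.shape)
```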

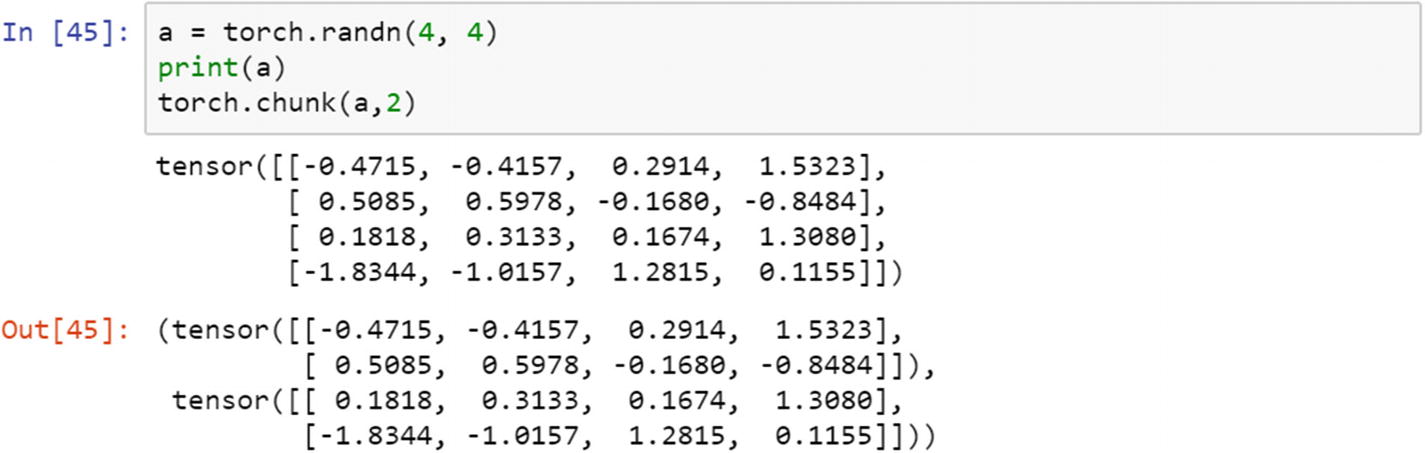

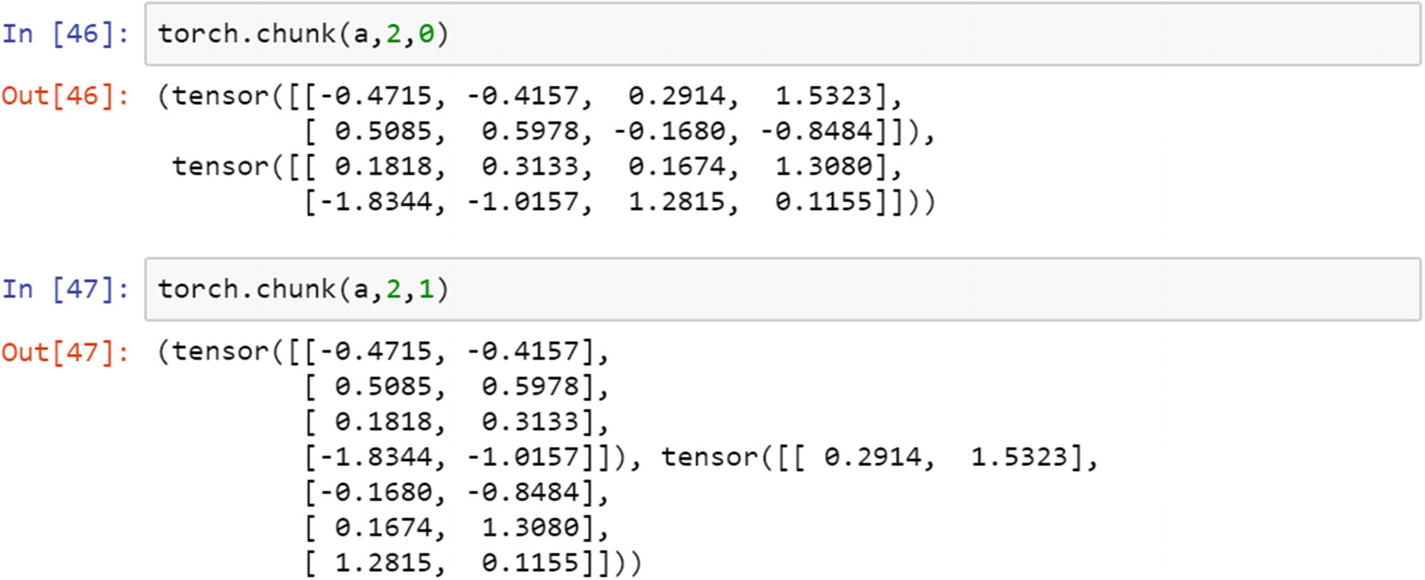

A tensor can be split into multiple chunks. Those small chunks can be created along the rows or along the columns. The following example shows a sample tensor of size (4,4). The third argument of the function, 0 or 1, selects the dimension along which the chunks are created.
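A minimal sketch of the chunk function on a (4,4) tensor follows. The values are the numbers 0 to 15, chosen so that the result is easy to inspect.

```python
import torch

a = torch.arange(16.0).reshape(4, 4)

# Two chunks along the rows (third argument 0)
top, bottom = torch.chunk(a, 2, 0)

# Two chunks along the columns (third argument 1)
left, right = torch.chunk(a, 2, 1)

print(top.shape, left.shape)
```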

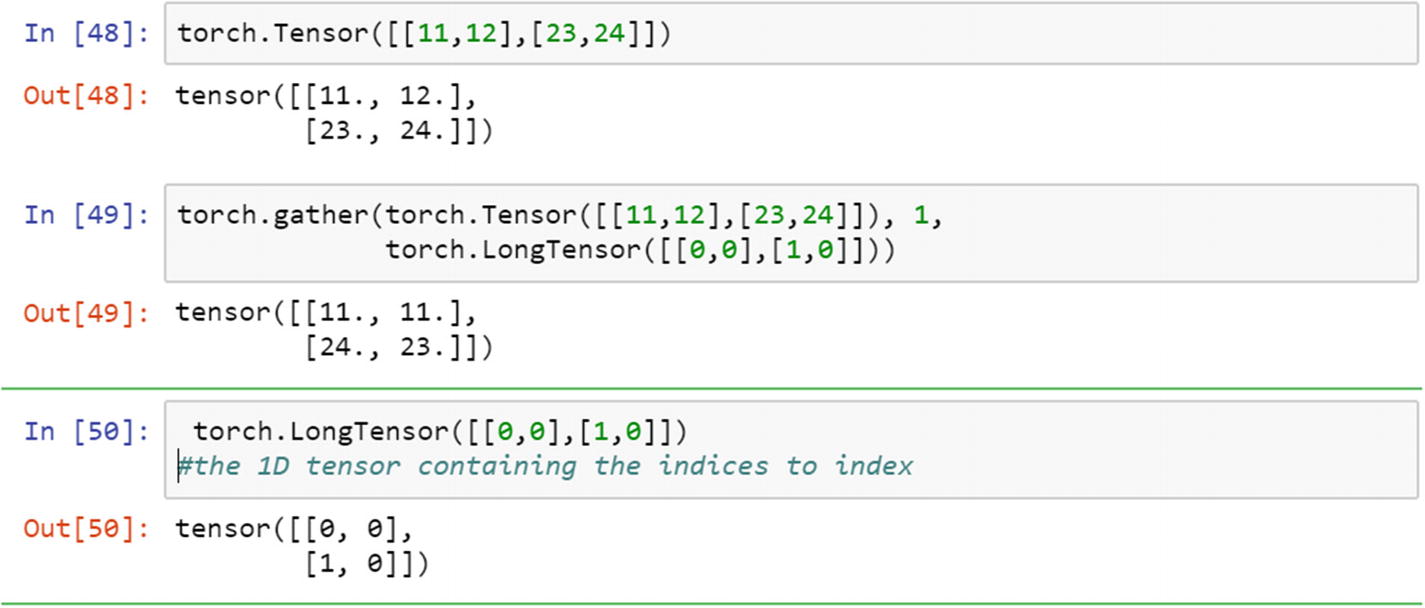

The gather function collects elements from a tensor and places them in another tensor using an index argument. The index positions are supplied as a LongTensor in PyTorch.
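The gather function can be sketched as follows. The values are arbitrary. With dimension 1, each output element is taken from the same row of the source, at the column given by the index tensor.

```python
import torch

src = torch.tensor([[11, 12],
                    [23, 24]])

# For each output position, the column to read from in the same row
idx = torch.LongTensor([[0, 0],
                        [1, 0]])

out = torch.gather(src, 1, idx)
print(out)
```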

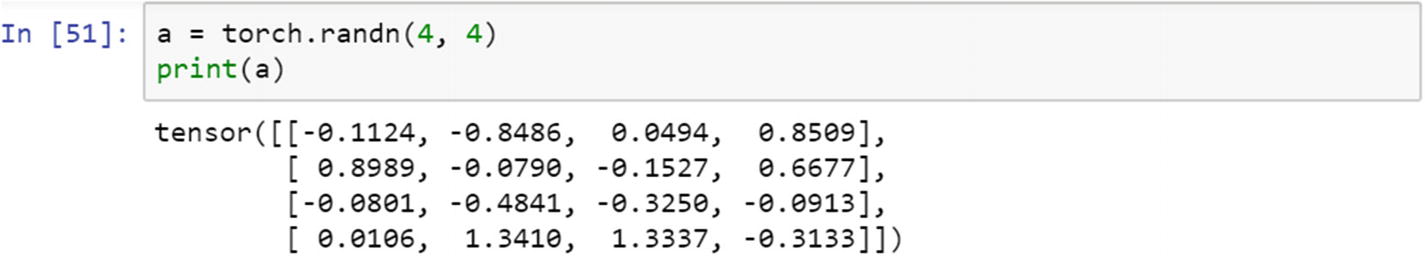

The index_select function, together with a LongTensor of indices, can be used to fetch relevant values from a tensor. The following sample code shows two options: selection along rows and selection along columns. If the second argument is 0, the selection is along the rows. If it is 1, the selection is along the columns.
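A minimal sketch of both options follows, using a (4,4) tensor that holds the numbers 0 to 15.

```python
import torch

a = torch.arange(16).reshape(4, 4)
indices = torch.LongTensor([0, 2])

# Second argument 0: pick rows 0 and 2
rows = torch.index_select(a, 0, indices)

# Second argument 1: pick columns 0 and 2
cols = torch.index_select(a, 1, indices)

print(rows)
print(cols)
```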

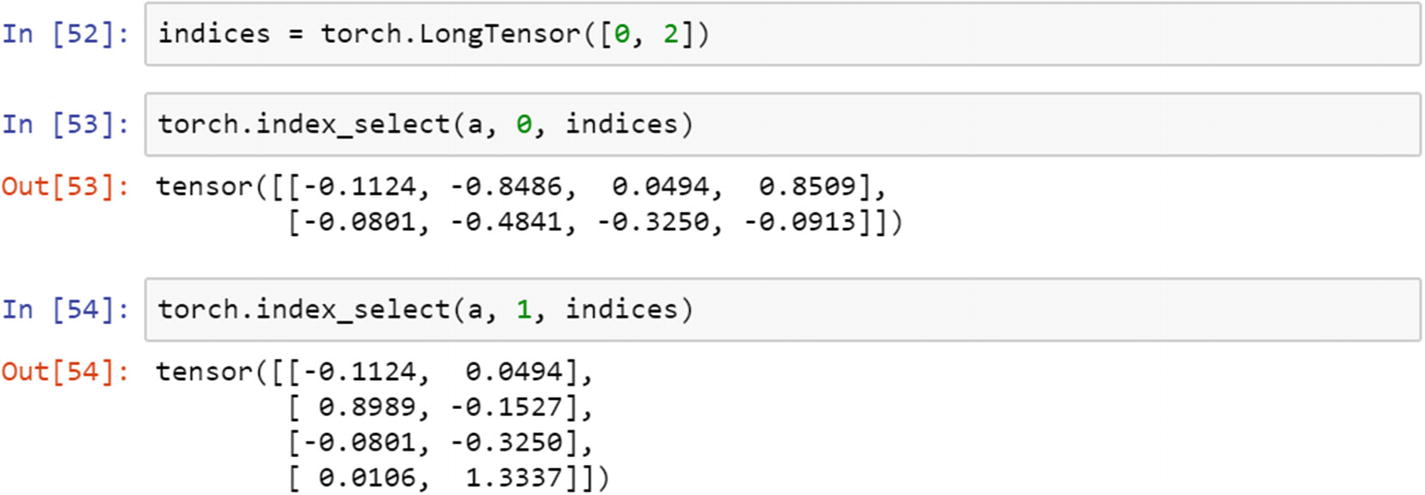

It is a common practice to check for non-missing values in a tensor. The objective is to identify the non-zero elements in a large tensor.
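The nonzero function returns the positions of the non-zero elements, as the following sketch with arbitrary values shows.

```python
import torch

t = torch.tensor([10.0, 0.0, 23.0, 0.0, 0.0])

# One row per non-zero element, holding its index
nz = torch.nonzero(t)
print(nz)
```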

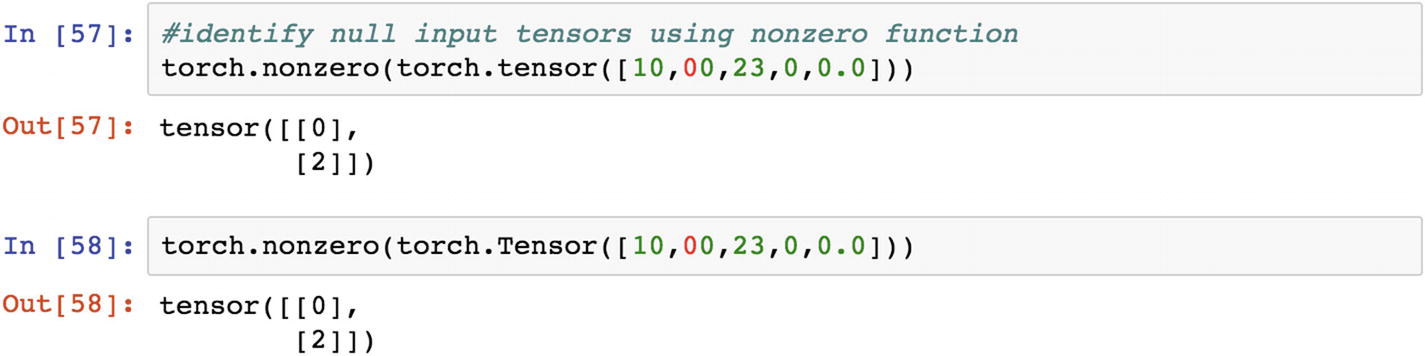

Restructuring the input tensors into smaller tensors not only speeds up the calculation process, but also helps in distributed computing. The split function splits a long tensor into smaller tensors.
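A minimal sketch of the split function follows. The values are arbitrary; the second argument is the size of each piece.

```python
import torch

t = torch.tensor([12, 21, 34, 32, 45, 54, 56, 65])

# Pieces of size 2: four tensors
pairs = torch.split(t, 2)

# Pieces of size 3: the last piece holds the remaining 2 elements
triples = torch.split(t, 3)

print(pairs)
print(triples)
```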

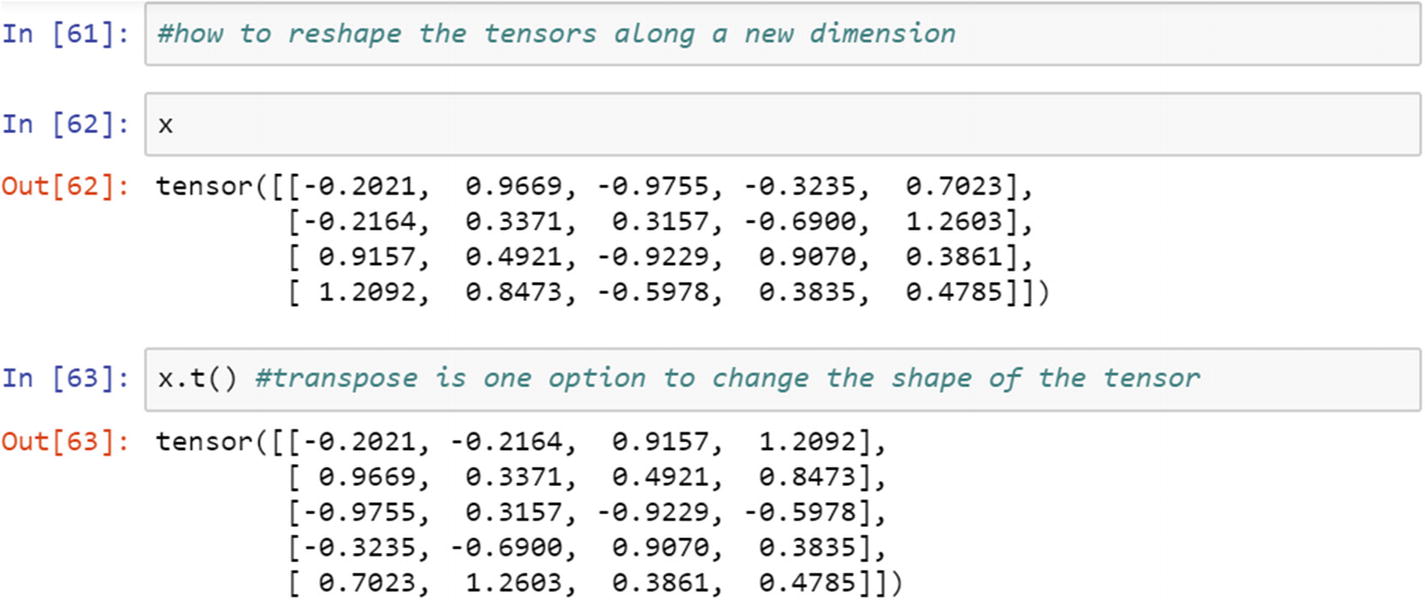

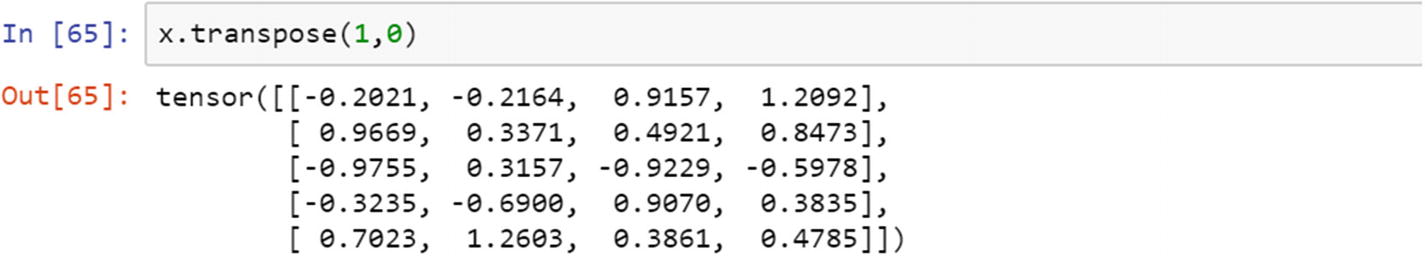

Now, let’s have a look at examples of how the input tensor can be resized given the computational difficulty. The transpose function is primarily used to reshape tensors. There are two ways of writing the transpose function: .t and .transpose.
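Both ways of writing the transpose can be sketched as follows, on a small (2,3) tensor chosen for illustration.

```python
import torch

x = torch.arange(6).reshape(2, 3)

# .t() transposes a 2D tensor
xt = x.t()

# .transpose() swaps the two dimensions that are passed to it
xt2 = x.transpose(1, 0)

print(xt)
```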

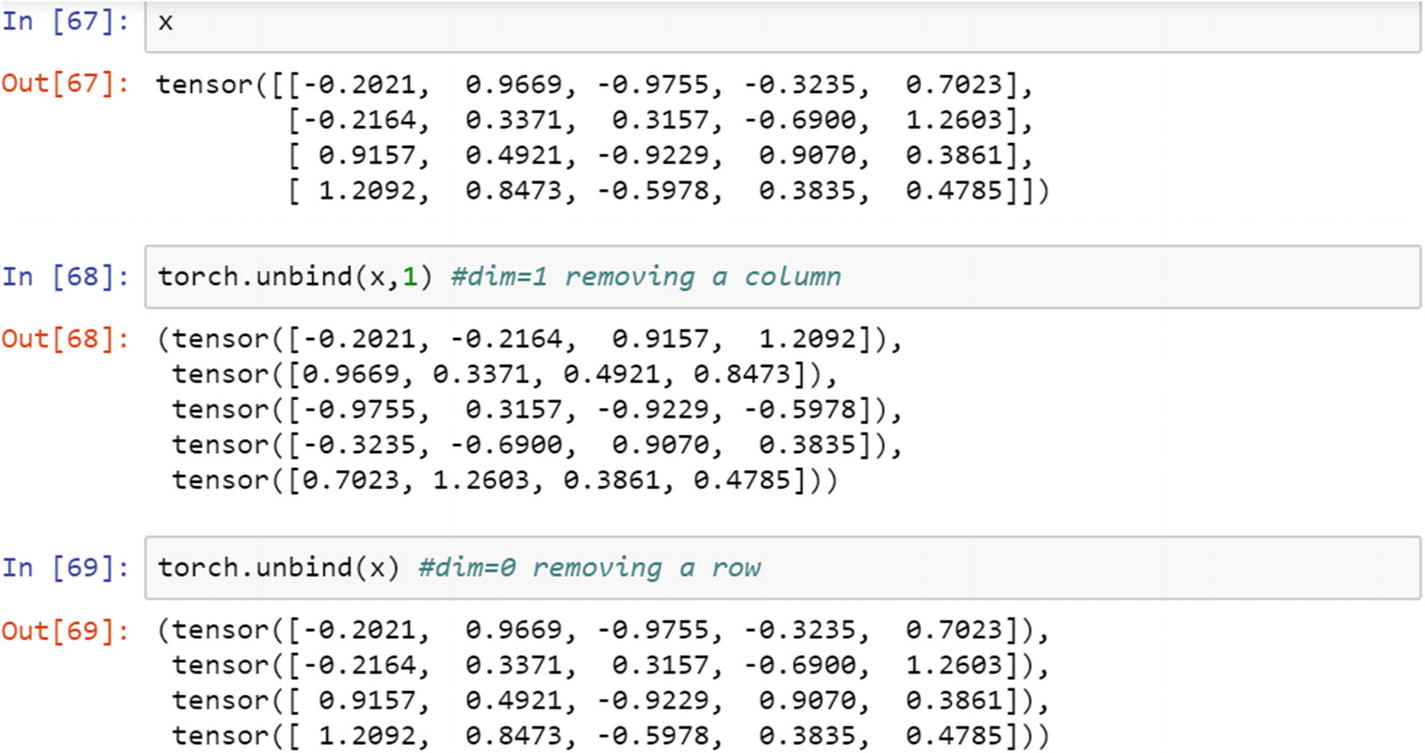

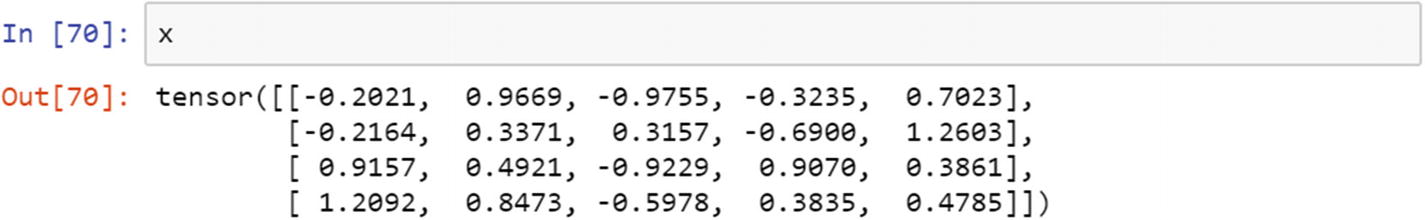

The unbind function removes a dimension from a tensor and returns the slices along that dimension. To remove the row dimension, the 0 value needs to be passed. To remove the column dimension, the 1 value needs to be passed.
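A minimal sketch of the unbind function on a (2,3) tensor follows. The result is a tuple of 1D tensors.

```python
import torch

x = torch.arange(6).reshape(2, 3)

# Passing 0 removes the row dimension: one 1D tensor per row
rows = torch.unbind(x, 0)

# Passing 1 removes the column dimension: one 1D tensor per column
cols = torch.unbind(x, 1)

print(rows)
print(cols)
```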

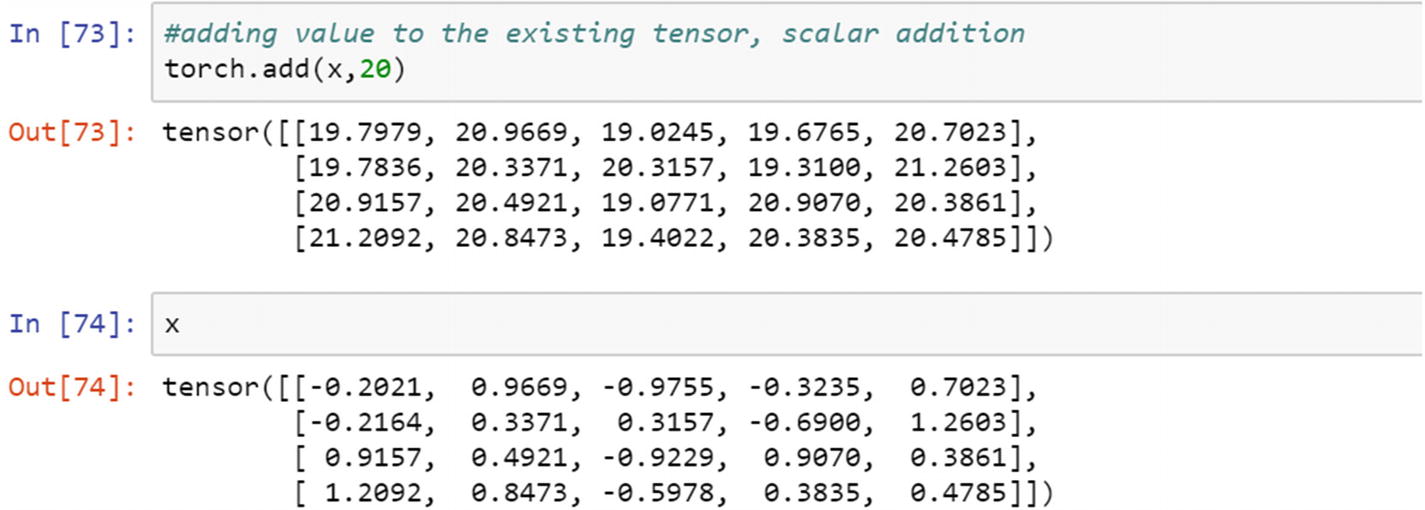

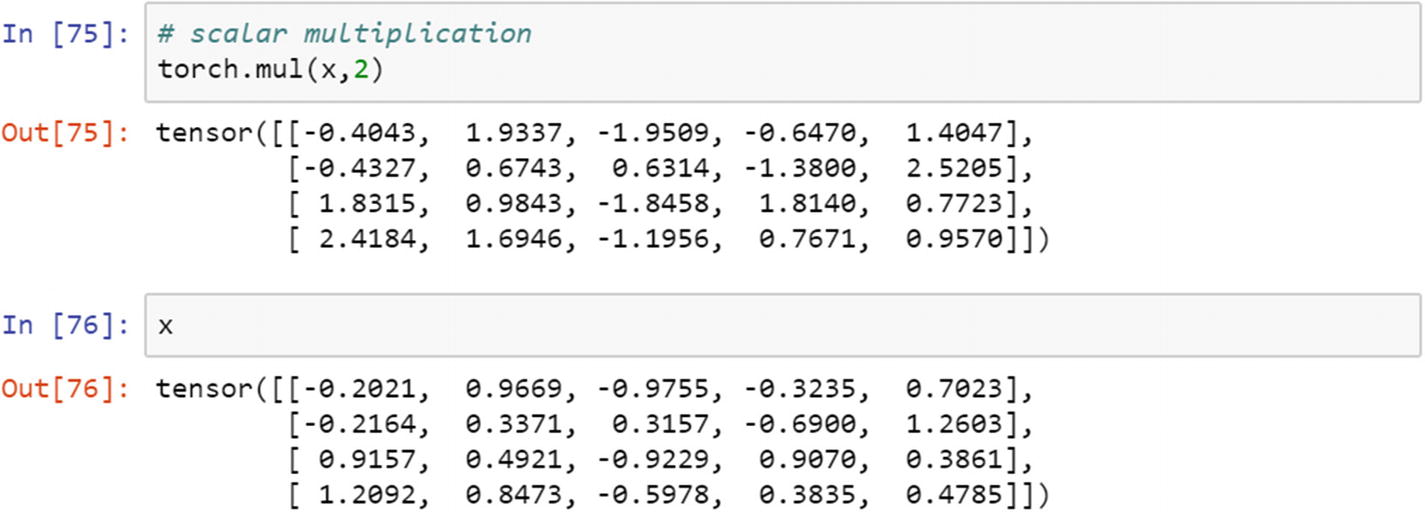

Mathematical functions are the backbone of implementing any algorithm in PyTorch; therefore, it is necessary to go through the functions that help perform arithmetic-based operations. A scalar is a single value, and a 1D tensor is a row, as in NumPy. Scalar addition and multiplication with a 1D tensor are done using the add and mul functions.

The following script shows scalar addition and multiplication with a tensor.
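A minimal sketch with arbitrary values:

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0])

# Scalar addition: add 20 to every element
added = torch.add(x, 20)

# Scalar multiplication: multiply every element by 2
scaled = torch.mul(x, 2)

print(added, scaled)
```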

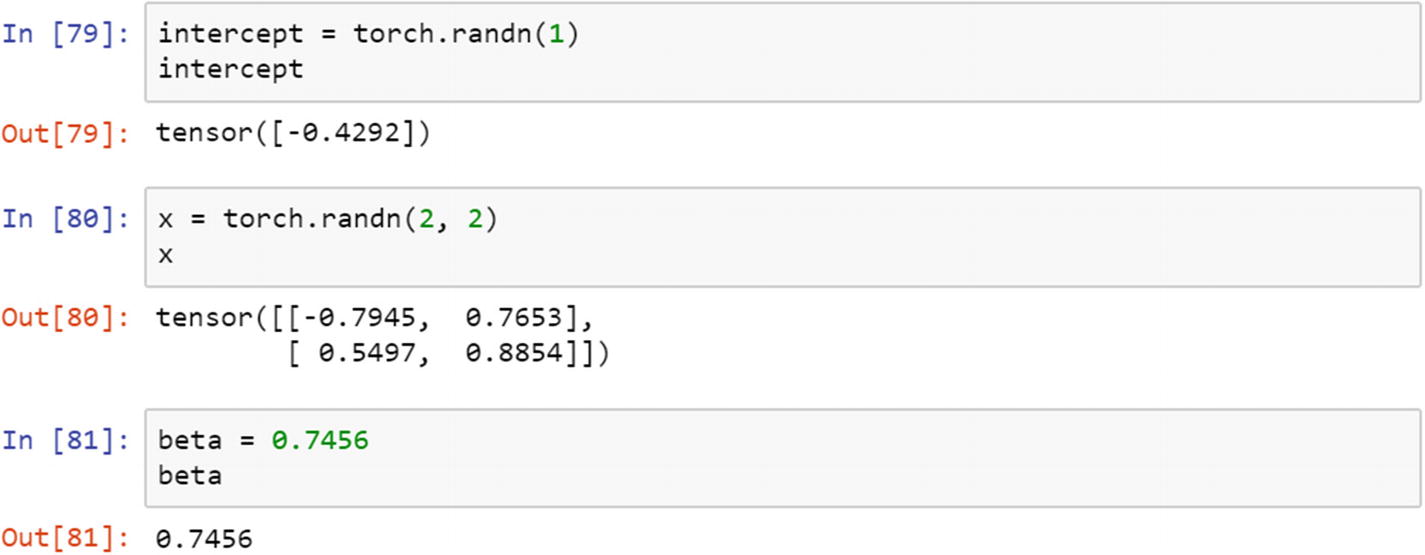

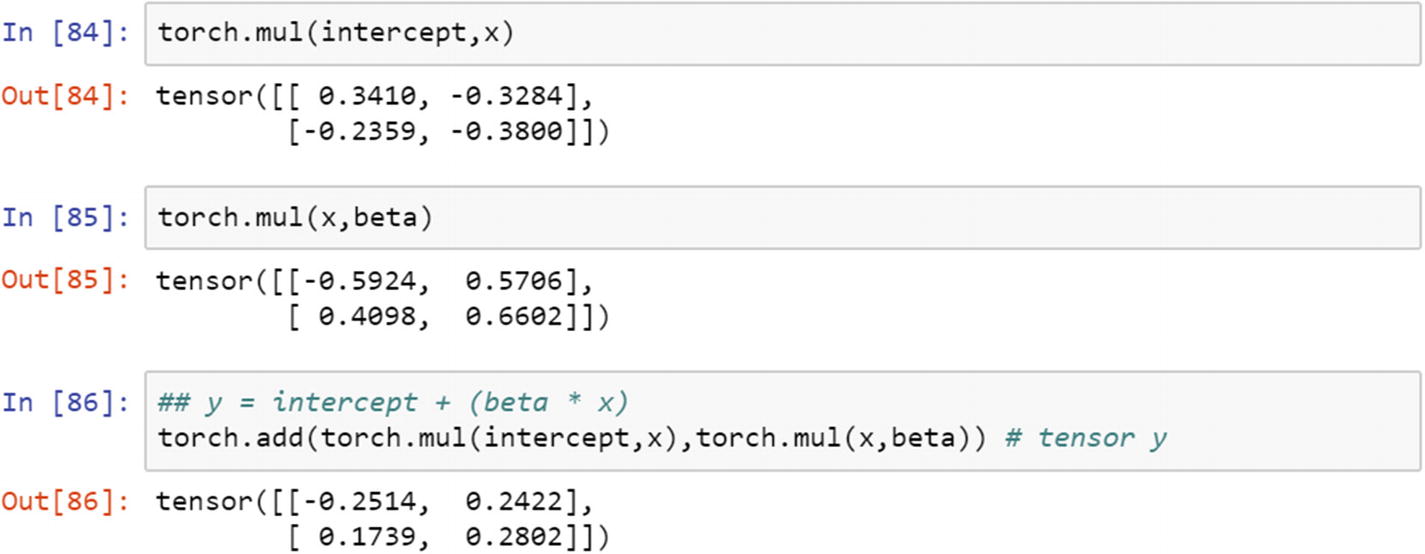

Combined mathematical operations, such as expressing linear equations as tensor operations, can be done using the following sample script. Here we express the outcome y object as a linear combination of beta values times the independent x object, plus the constant term.

Output = Constant + (beta * Independent)
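The equation can be sketched with the add and mul functions. The beta value, the tensor shape, and the seed are assumptions chosen only for illustration.

```python
import torch

torch.manual_seed(0)

intercept = torch.randn(1)   # the constant term
x = torch.randn(2, 2)        # the independent values
beta = 0.7456                # an arbitrary coefficient

# Output = Constant + (beta * Independent)
output = torch.add(intercept, torch.mul(x, beta))

print(output)
```

The one-element intercept is broadcast across the (2,2) result, so the same constant is added to every element.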

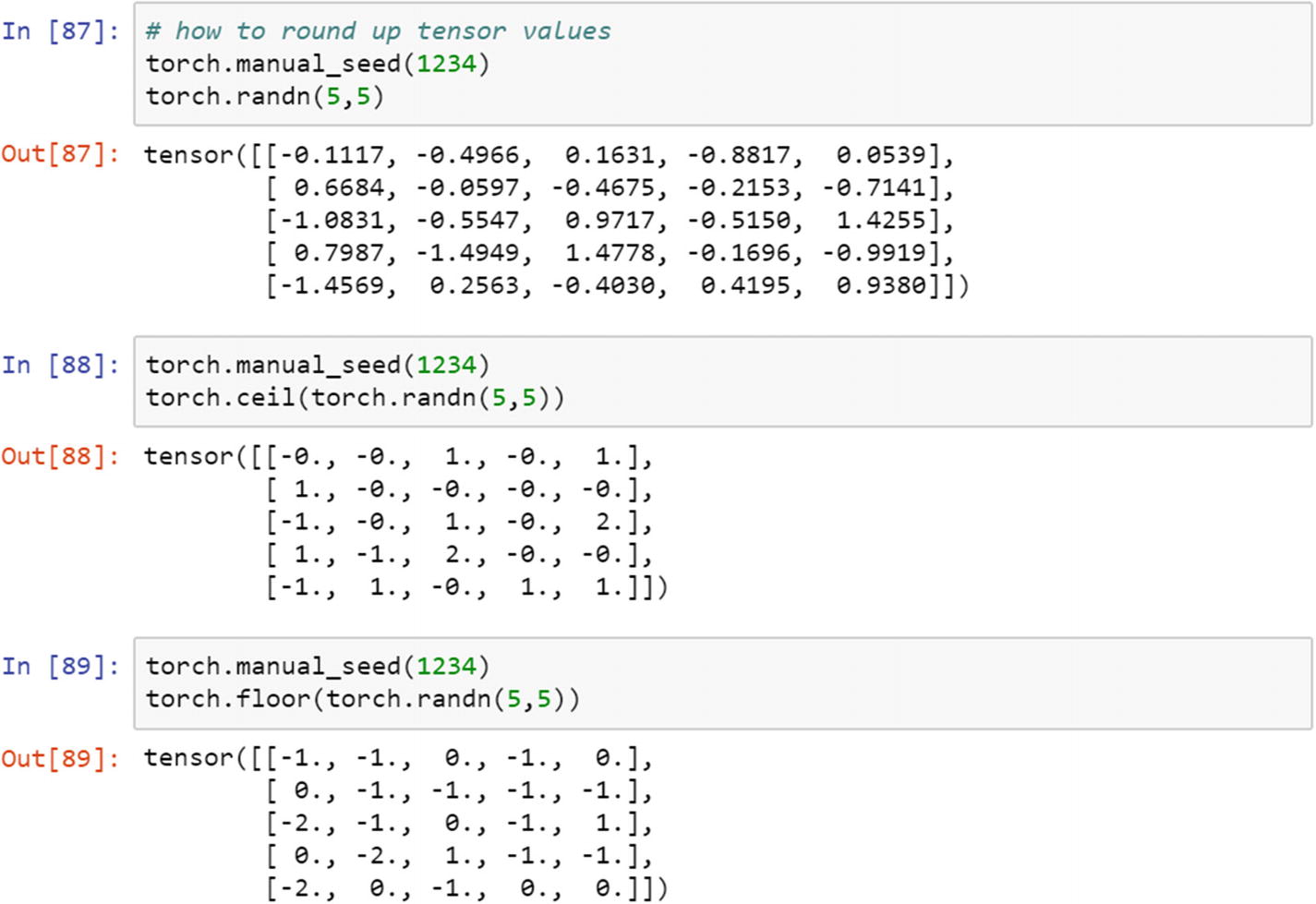

As with NumPy operations, tensor values can be rounded up or down by using the ceiling or the floor function, respectively, which is done using the following syntax.
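A minimal sketch of both functions, with arbitrary values:

```python
import torch

t = torch.tensor([1.2, -1.2, 2.5, 3.0])

# ceil rounds each value up to the nearest integer
up = torch.ceil(t)

# floor rounds each value down to the nearest integer
down = torch.floor(t)

print(up, down)
```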

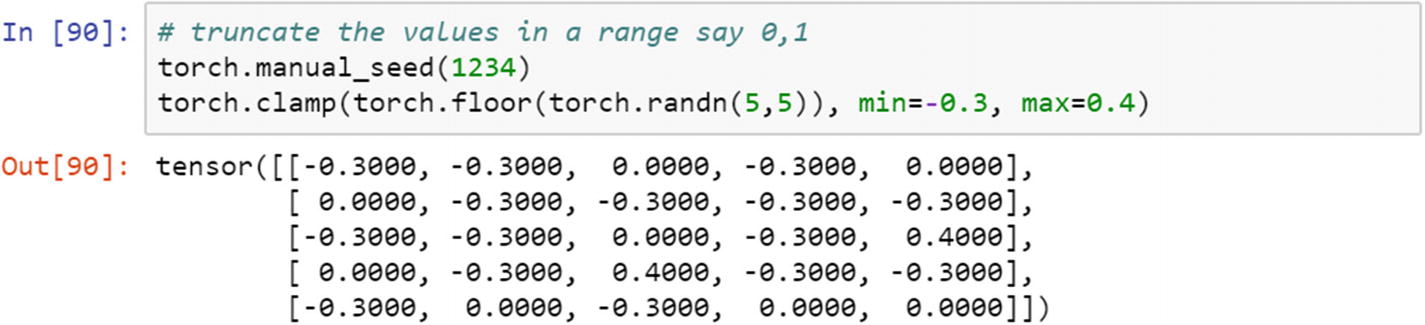

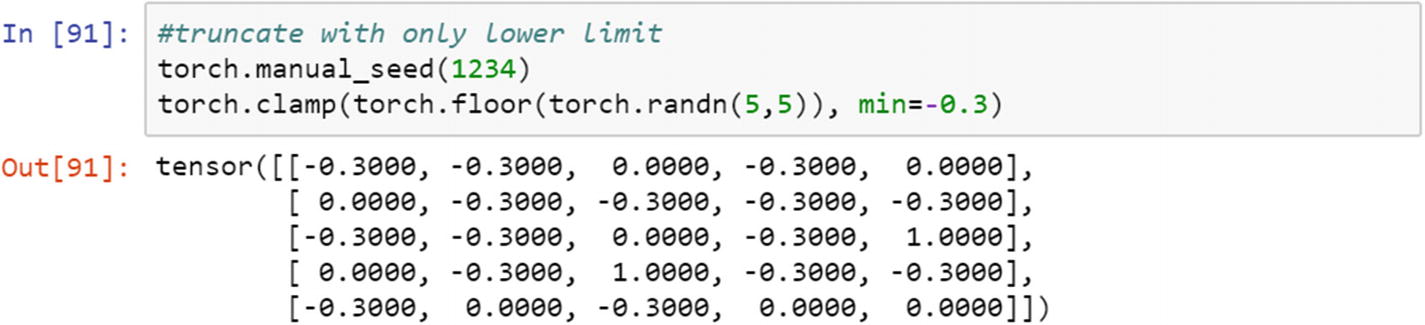

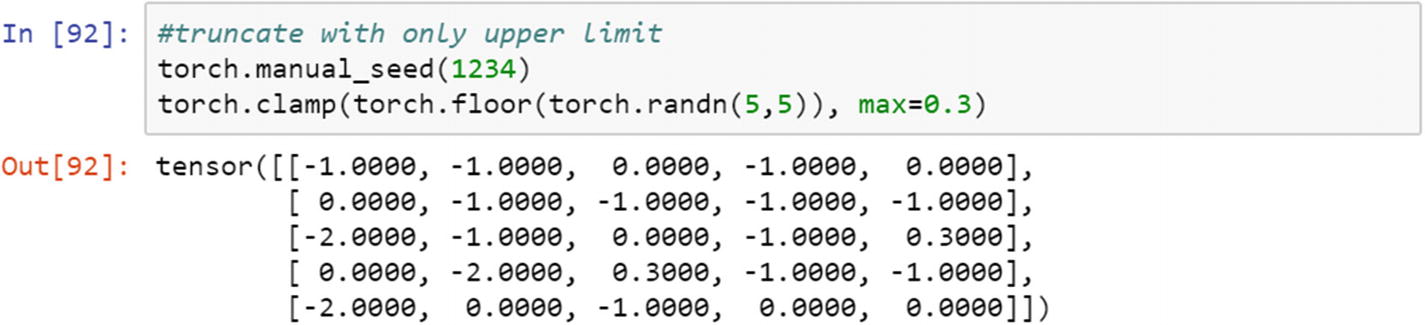

Limiting the values of any tensor within a certain range can be done by passing the minimum and maximum arguments to the clamp function. The same function can apply the minimum and maximum together, or only one of them, to any tensor, be it 1D or 2D; 1D is the far simpler version. The following example shows the implementation in a 2D scenario.
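The clamp function in a 2D scenario can be sketched as follows. The values and the limits are arbitrary.

```python
import torch

t = torch.tensor([[0.0, 0.375],
                  [0.75, 1.5]])

# Apply both limits at once
both = torch.clamp(t, min=0.25, max=0.5)

# Apply only a lower limit, or only an upper limit
lower_only = torch.clamp(t, min=0.25)
upper_only = torch.clamp(t, max=0.5)

print(both)
```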

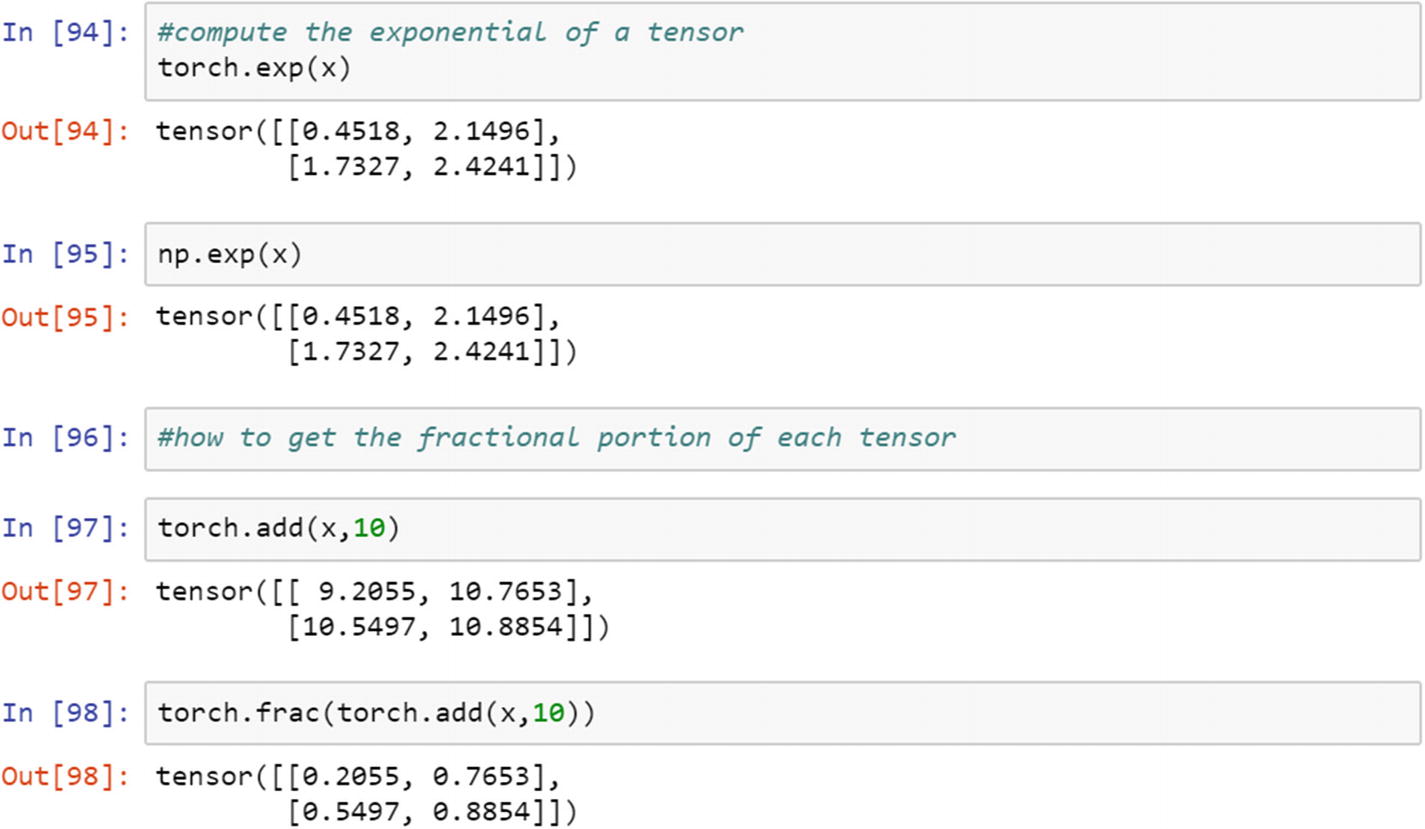

How do we get the exponential of a tensor? How do we get the fractional portion of the tensor if it has decimal places and is defined as a floating data type?
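Both questions can be answered with the exp and frac functions, as in this minimal sketch with arbitrary values:

```python
import torch

t = torch.tensor([0.0, 1.5, -2.25])

# Exponential of each element
exponentials = torch.exp(t)

# Fractional portion of each element (the sign is kept)
fractions = torch.frac(t)

print(exponentials, fractions)
```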

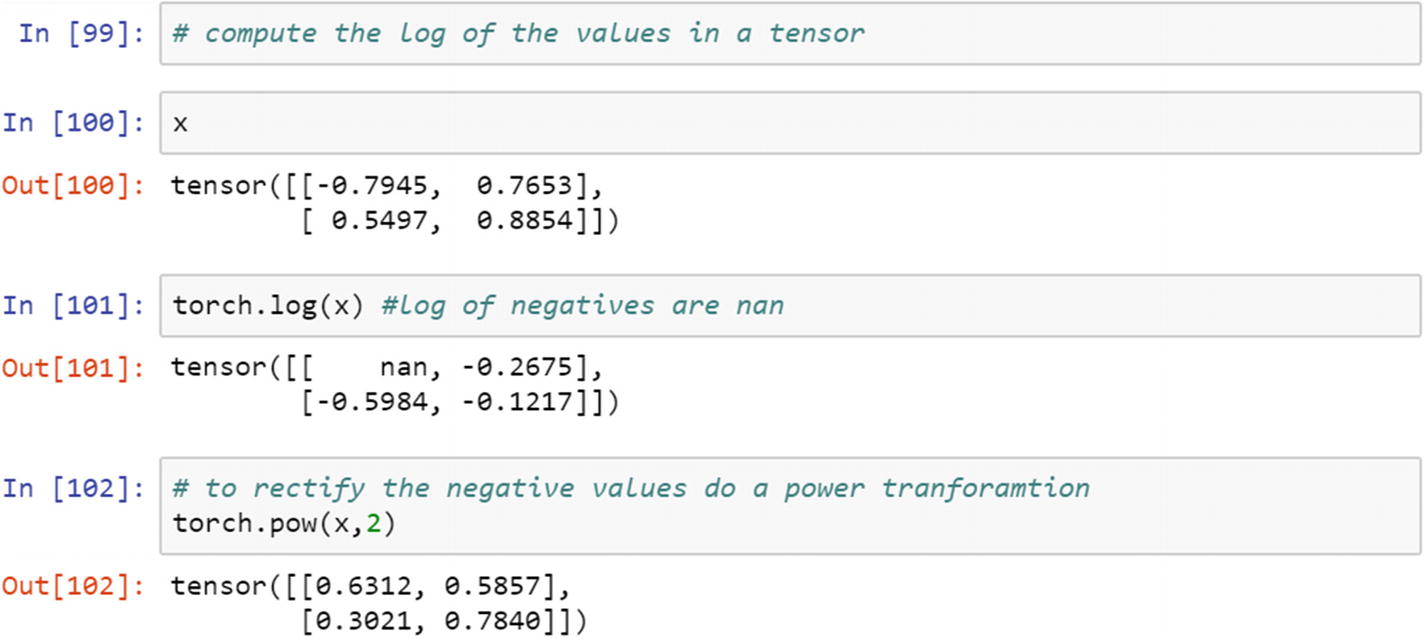

The following syntax computes the logarithmic values of a tensor. The logarithm of a negative value is returned as nan. The pow function raises every value in a tensor to a given power.
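A minimal sketch of the log and pow functions, with arbitrary values:

```python
import torch

t = torch.tensor([1.0, 4.0, -3.0])

# Natural logarithm; the negative input gives nan
logs = torch.log(t)

# Raise each element to the power of 2
squares = torch.pow(t, 2)

print(logs, squares)
```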

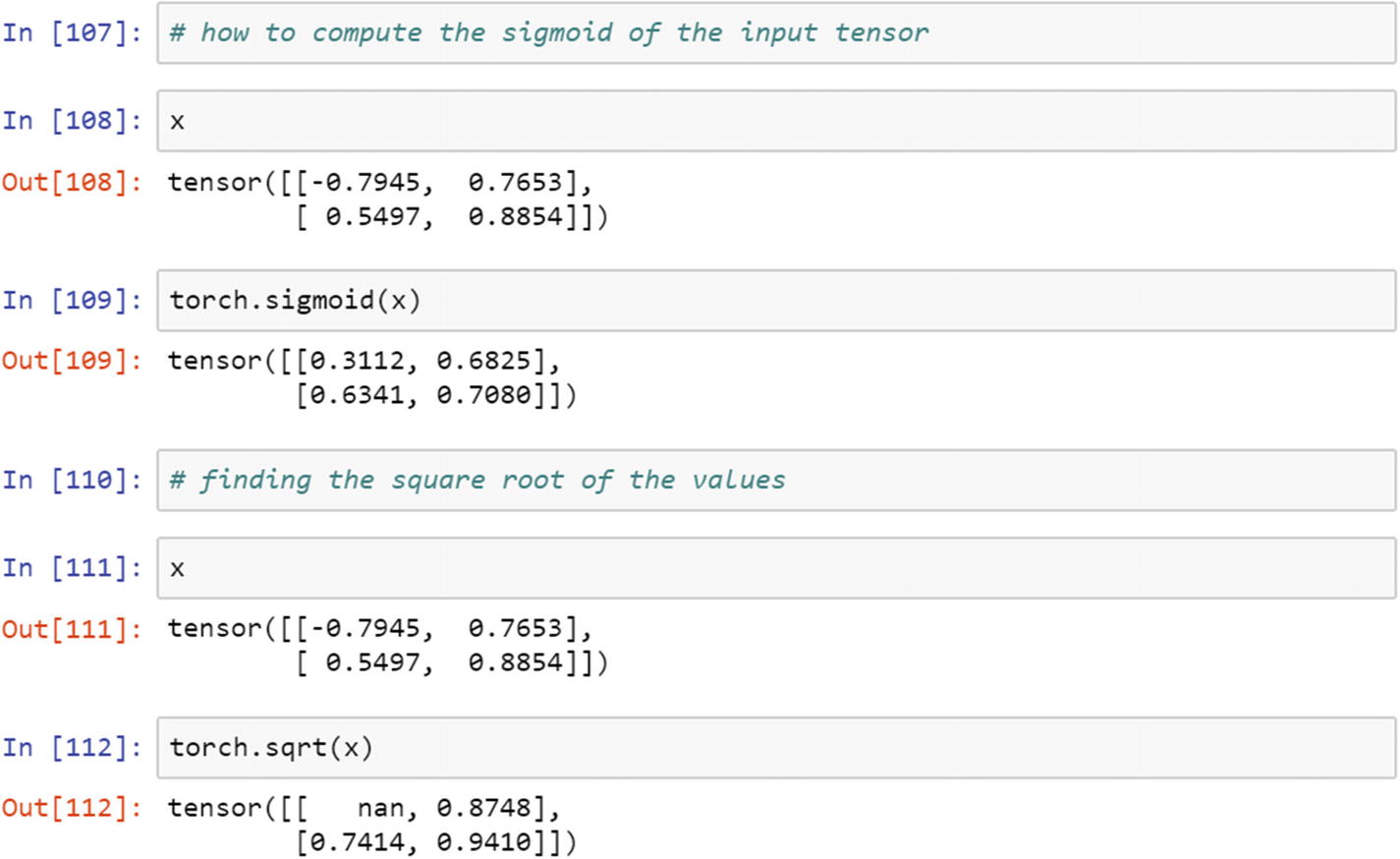

To compute the transformation functions (i.e., sigmoid, hyperbolic tangent, radial basis function, and so forth, which are the most commonly used transfer functions in deep learning), you must construct the tensors. The following sample script shows how to create a sigmoid function and apply it on a tensor.
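A minimal sketch of applying a sigmoid to a tensor follows. The input values are arbitrary. The built-in function is compared with the formula 1 / (1 + exp(-x)), and the hyperbolic tangent is included for comparison.

```python
import torch

t = torch.tensor([-2.0, 0.0, 2.0])

# Built-in sigmoid and hyperbolic tangent
s = torch.sigmoid(t)
h = torch.tanh(t)

# The sigmoid written out with basic tensor operations
manual = 1 / (1 + torch.exp(-t))

print(s, h)
```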

Conclusion

This chapter is a refresher for people who have prior experience in PyTorch and Python. It is a basic building block for people who are new to the PyTorch framework. Before starting the advanced topics, it is important to become familiar with the terminology and basic syntax. The next chapter is on using PyTorch to implement probabilistic models, which includes the creation of random variables, the application of statistical distributions, and making statistical inferences.